Github user sujith71955 commented on a diff in the pull request:

https://github.com/apache/spark/pull/20611#discussion_r181543985

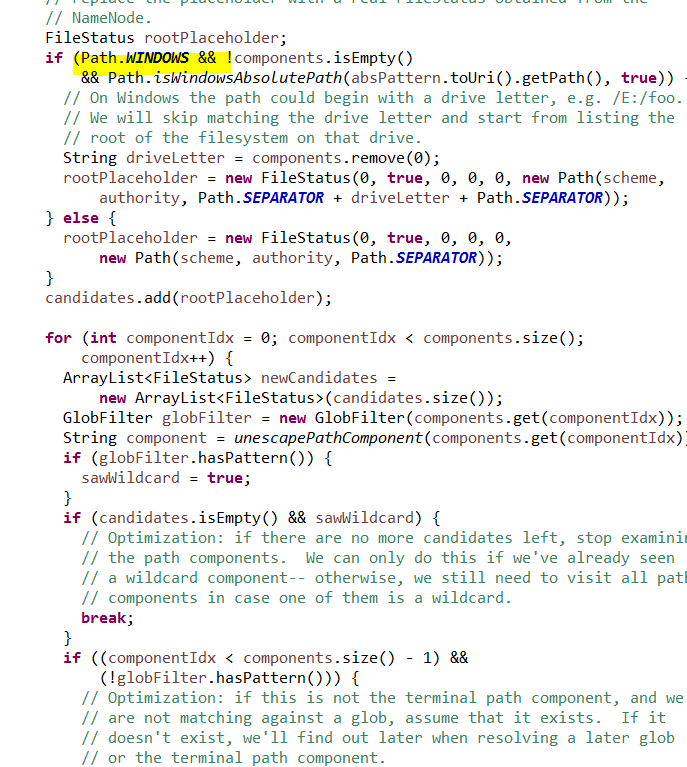

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/command/tables.scala ---

@@ -304,45 +304,14 @@ case class LoadDataCommand(

}

}

- val loadPath =

+ val loadPath = {

if (isLocal) {

val uri = Utils.resolveURI(path)

- val file = new File(uri.getPath)

- val exists = if (file.getAbsolutePath.contains("*")) {

- val fileSystem = FileSystems.getDefault

- val dir = file.getParentFile.getAbsolutePath

- if (dir.contains("*")) {

- throw new AnalysisException(

- s"LOAD DATA input path allows only filename wildcard: $path")

- }

-

- // Note that special characters such as "*" on Windows are not

allowed as a path.

--- End diff --

@wzhfy All test-cases related to windows query suite are passing i think in

previous code, for reading the files based on wildcard char, we are trying to

read the files parent directory first, and we were listing all files inside

that folder and then we are trying to match the pattern for each file inside

directory, so i think for getting the parent path we need to explicitly check

that it should not have any unsupported characters like '*' ,But now we are

directly passing the path with wildchar to globStatus() API of hdfs and this

should able to pattern match irrespective of directory/files, in globStatus

API i could see they have special handling for windows path, i will look into

more details regarding this.

Thanks all for the valuable feedbacks .

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org