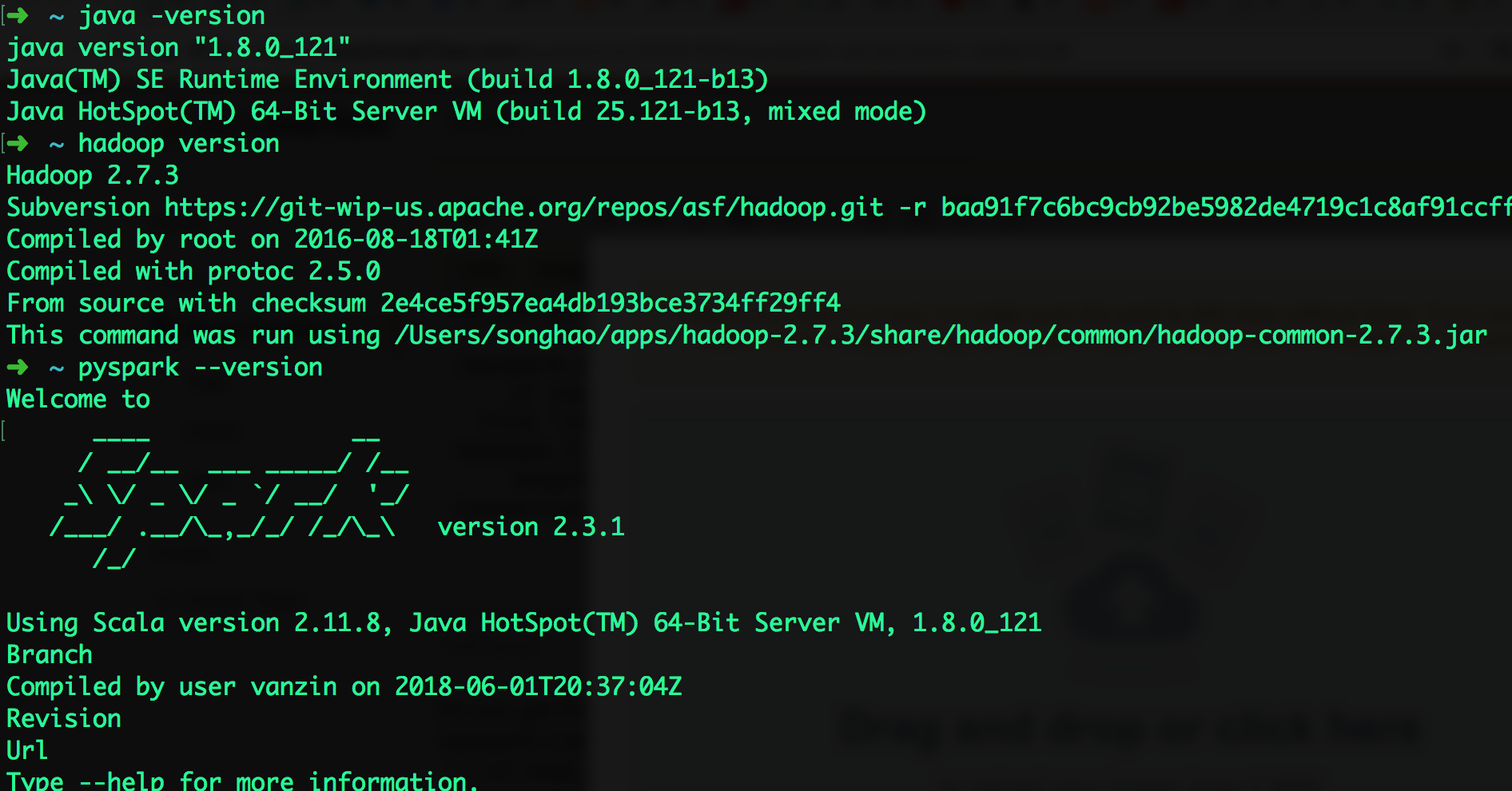

GitHub user lovezeropython opened a pull request:

https://github.com/apache/spark/pull/21870

Branch 2.3

EOFError

# ConnectionResetError: [Errno 54] Connection reset by peer

(Please fill in changes proposed in this fix)

```

/pyspark.zip/pyspark/worker.py", line 255, in main

if read_int(infile) == SpecialLengths.END_OF_STREAM:

File

"/Users/songhao/apps/spark-2.3.1-bin-hadoop2.7/python/lib/pyspark.zip/pyspark/serializers.py",

line 683, in read_int

length = stream.read(4)

ConnectionResetError: [Errno 54] Connection reset by peerenter code here

```

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/apache/spark branch-2.3

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/21870.patch

To close this pull request, make a commit to your master/trunk branch

with (at least) the following in the commit message:

This closes #21870

----

commit 3737c3d32bb92e73cadaf3b1b9759d9be00b288d

Author: gatorsmile <gatorsmile@...>

Date: 2018-02-13T06:05:13Z

[SPARK-20090][FOLLOW-UP] Revert the deprecation of `names` in PySpark

## What changes were proposed in this pull request?

Deprecating the field `name` in PySpark is not expected. This PR is to

revert the change.

## How was this patch tested?

N/A

Author: gatorsmile <gatorsm...@gmail.com>

Closes #20595 from gatorsmile/removeDeprecate.

(cherry picked from commit 407f67249639709c40c46917700ed6dd736daa7d)

Signed-off-by: hyukjinkwon <gurwls...@gmail.com>

commit 1c81c0c626f115fbfe121ad6f6367b695e9f3b5f

Author: guoxiaolong <guo.xiaolong1@...>

Date: 2018-02-13T12:23:10Z

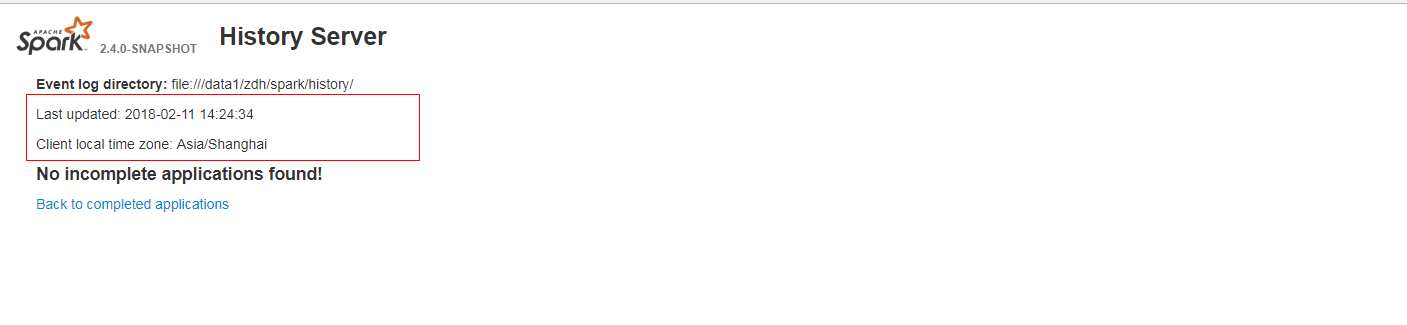

[SPARK-23384][WEB-UI] When it has no incomplete(completed) applications

found, the last updated time is not formatted and client local time zone is not

show in history server web ui.

## What changes were proposed in this pull request?

When it has no incomplete(completed) applications found, the last updated

time is not formatted and client local time zone is not show in history server

web ui. It is a bug.

fix before:

fix after:

## How was this patch tested?

(Please explain how this patch was tested. E.g. unit tests, integration

tests, manual tests)

(If this patch involves UI changes, please attach a screenshot; otherwise,

remove this)

Please review http://spark.apache.org/contributing.html before opening a

pull request.

Author: guoxiaolong <guo.xiaolo...@zte.com.cn>

Closes #20573 from guoxiaolongzte/SPARK-23384.

(cherry picked from commit 300c40f50ab4258d697f06a814d1491dc875c847)

Signed-off-by: Sean Owen <so...@cloudera.com>

commit dbb1b399b6cf8372a3659c472f380142146b1248

Author: huangtengfei <huangtengfei@...>

Date: 2018-02-13T15:59:21Z

[SPARK-23053][CORE] taskBinarySerialization and task partitions calculate

in DagScheduler.submitMissingTasks should keep the same RDD checkpoint status

## What changes were proposed in this pull request?

When we run concurrent jobs using the same rdd which is marked to do

checkpoint. If one job has finished running the job, and start the process of

RDD.doCheckpoint, while another job is submitted, then submitStage and

submitMissingTasks will be called. In

[submitMissingTasks](https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/scheduler/DAGScheduler.scala#L961),

will serialize taskBinaryBytes and calculate task partitions which are both

affected by the status of checkpoint, if the former is calculated before

doCheckpoint finished, while the latter is calculated after doCheckpoint

finished, when run task, rdd.compute will be called, for some rdds with

particular partition type such as

[UnionRDD](https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/rdd/UnionRDD.scala)

who will do partition type cast, will get a ClassCastException because the

part params is actually a CheckpointRDDPartition.

This error occurs because rdd.doCheckpoint occurs in the same thread that

called sc.runJob, while the task serialization occurs in the DAGSchedulers

event loop.

## How was this patch tested?

the exist uts and also add a test case in DAGScheduerSuite to show the

exception case.

Author: huangtengfei <huangtengfei@huangtengfeideMacBook-Pro.local>

Closes #20244 from ivoson/branch-taskpart-mistype.

(cherry picked from commit 091a000d27f324de8c5c527880854ecfcf5de9a4)

Signed-off-by: Imran Rashid <iras...@cloudera.com>

commit ab01ba718c7752b564e801a1ea546aedc2055dc0

Author: Bogdan Raducanu <bogdan@...>

Date: 2018-02-13T17:49:52Z

[SPARK-23316][SQL] AnalysisException after max iteration reached for IN

query

## What changes were proposed in this pull request?

Added flag ignoreNullability to DataType.equalsStructurally.

The previous semantic is for ignoreNullability=false.

When ignoreNullability=true equalsStructurally ignores nullability of

contained types (map key types, value types, array element types, structure

field types).

In.checkInputTypes calls equalsStructurally to check if the children types

match. They should match regardless of nullability (which is just a hint), so

it is now called with ignoreNullability=true.

## How was this patch tested?

New test in SubquerySuite

Author: Bogdan Raducanu <bog...@databricks.com>

Closes #20548 from bogdanrdc/SPARK-23316.

(cherry picked from commit 05d051293fe46938e9cb012342fea6e8a3715cd4)

Signed-off-by: gatorsmile <gatorsm...@gmail.com>

commit 320ffb1309571faedb271f2c769b4ab1ee1cd267

Author: Joseph K. Bradley <joseph@...>

Date: 2018-02-13T19:18:45Z

[SPARK-23154][ML][DOC] Document backwards compatibility guarantees for ML

persistence

## What changes were proposed in this pull request?

Added documentation about what MLlib guarantees in terms of loading ML

models and Pipelines from old Spark versions. Discussed & confirmed on linked

JIRA.

Author: Joseph K. Bradley <jos...@databricks.com>

Closes #20592 from jkbradley/SPARK-23154-backwards-compat-doc.

(cherry picked from commit d58fe28836639e68e262812d911f167cb071007b)

Signed-off-by: Joseph K. Bradley <jos...@databricks.com>

commit 4f6a457d464096d791e13e57c55bcf23c01c418f

Author: gatorsmile <gatorsmile@...>

Date: 2018-02-13T19:56:49Z

[SPARK-23400][SQL] Add a constructors for ScalaUDF

## What changes were proposed in this pull request?

In this upcoming 2.3 release, we changed the interface of `ScalaUDF`.

Unfortunately, some Spark packages (e.g., spark-deep-learning) are using our

internal class `ScalaUDF`. In the release 2.3, we added new parameters into

this class. The users hit the binary compatibility issues and got the exception:

```

> java.lang.NoSuchMethodError:

org.apache.spark.sql.catalyst.expressions.ScalaUDF.<init>(Ljava/lang/Object;Lorg/apache/spark/sql/types/DataType;Lscala/collection/Seq;Lscala/collection/Seq;Lscala/Option;)V

```

This PR is to improve the backward compatibility. However, we definitely

should not encourage the external packages to use our internal classes. This

might make us hard to maintain/develop the codes in Spark.

## How was this patch tested?

N/A

Author: gatorsmile <gatorsm...@gmail.com>

Closes #20591 from gatorsmile/scalaUDF.

(cherry picked from commit 2ee76c22b6e48e643694c9475e5f0d37124215e7)

Signed-off-by: Shixiong Zhu <zsxw...@gmail.com>

commit bb26bdb55fdf84c4e36fd66af9a15e325a3982d6

Author: Dongjoon Hyun <dongjoon@...>

Date: 2018-02-14T02:55:24Z

[SPARK-23399][SQL] Register a task completion listener first for

OrcColumnarBatchReader

This PR aims to resolve an open file leakage issue reported at

[SPARK-23390](https://issues.apache.org/jira/browse/SPARK-23390) by moving the

listener registration position. Currently, the sequence is like the following.

1. Create `batchReader`

2. `batchReader.initialize` opens a ORC file.

3. `batchReader.initBatch` may take a long time to alloc memory in some

environment and cause errors.

4. `Option(TaskContext.get()).foreach(_.addTaskCompletionListener(_ =>

iter.close()))`

This PR moves 4 before 2 and 3. To sum up, the new sequence is 1 -> 4 -> 2

-> 3.

Manual. The following test case makes OOM intentionally to cause leaked

filesystem connection in the current code base. With this patch, leakage

doesn't occurs.

```scala

// This should be tested manually because it raises OOM intentionally

// in order to cause `Leaked filesystem connection`.

test("SPARK-23399 Register a task completion listener first for

OrcColumnarBatchReader") {

withSQLConf(SQLConf.ORC_VECTORIZED_READER_BATCH_SIZE.key ->

s"${Int.MaxValue}") {

withTempDir { dir =>

val basePath = dir.getCanonicalPath

Seq(0).toDF("a").write.format("orc").save(new Path(basePath,

"first").toString)

Seq(1).toDF("a").write.format("orc").save(new Path(basePath,

"second").toString)

val df = spark.read.orc(

new Path(basePath, "first").toString,

new Path(basePath, "second").toString)

val e = intercept[SparkException] {

df.collect()

}

assert(e.getCause.isInstanceOf[OutOfMemoryError])

}

}

}

```

Author: Dongjoon Hyun <dongj...@apache.org>

Closes #20590 from dongjoon-hyun/SPARK-23399.

(cherry picked from commit 357babde5a8eb9710de7016d7ae82dee21fa4ef3)

Signed-off-by: Wenchen Fan <wenc...@databricks.com>

commit fd66a3b7b151514a9f626444ef8710f64dab6813

Author: âattilapirosâ <piros.attila.zsolt@...>

Date: 2018-02-14T14:45:54Z

[SPARK-23394][UI] In RDD storage page show the executor addresses instead

of the IDs

## What changes were proposed in this pull request?

Extending RDD storage page to show executor addresses in the block table.

## How was this patch tested?

Manually:

Author: âattilapirosâ <piros.attila.zs...@gmail.com>

Closes #20589 from attilapiros/SPARK-23394.

(cherry picked from commit 140f87533a468b1046504fc3ff01fbe1637e41cd)

Signed-off-by: Marcelo Vanzin <van...@cloudera.com>

commit a5a8a86e213c34d6fb32f0ae52db24d8f1ef0905

Author: gatorsmile <gatorsmile@...>

Date: 2018-02-14T18:59:36Z

Revert "[SPARK-23249][SQL] Improved block merging logic for partitions"

This reverts commit f5f21e8c4261c0dfe8e3e788a30b38b188a18f67.

commit bd83f7ba097d9bca9a0e8c072f7566a645887a96

Author: gatorsmile <gatorsmile@...>

Date: 2018-02-15T07:52:59Z

[SPARK-23421][SPARK-22356][SQL] Document the behavior change in

## What changes were proposed in this pull request?

https://github.com/apache/spark/pull/19579 introduces a behavior change. We

need to document it in the migration guide.

## How was this patch tested?

Also update the HiveExternalCatalogVersionsSuite to verify it.

Author: gatorsmile <gatorsm...@gmail.com>

Closes #20606 from gatorsmile/addMigrationGuide.

(cherry picked from commit a77ebb0921e390cf4fc6279a8c0a92868ad7e69b)

Signed-off-by: gatorsmile <gatorsm...@gmail.com>

commit 129fd45efb418c6afa95aa26e5b96f03a39dcdd0

Author: gatorsmile <gatorsmile@...>

Date: 2018-02-15T07:56:02Z

[SPARK-23094] Revert [] Fix invalid character handling in JsonDataSource

## What changes were proposed in this pull request?

This PR is to revert the PR https://github.com/apache/spark/pull/20302,

because it causes a regression.

## How was this patch tested?

N/A

Author: gatorsmile <gatorsm...@gmail.com>

Closes #20614 from gatorsmile/revertJsonFix.

(cherry picked from commit 95e4b4916065e66a4f8dba57e98e725796f75e04)

Signed-off-by: gatorsmile <gatorsm...@gmail.com>

commit f2c0585652a8262801e92a02b56c56f16b8926e5

Author: Wenchen Fan <wenchen@...>

Date: 2018-02-15T08:59:44Z

[SPARK-23419][SPARK-23416][SS] data source v2 write path should re-throw

interruption exceptions directly

## What changes were proposed in this pull request?

Streaming execution has a list of exceptions that means interruption, and

handle them specially. `WriteToDataSourceV2Exec` should also respect this list

and not wrap them with `SparkException`.

## How was this patch tested?

existing test.

Author: Wenchen Fan <wenc...@databricks.com>

Closes #20605 from cloud-fan/write.

(cherry picked from commit f38c760638063f1fb45e9ee2c772090fb203a4a0)

Signed-off-by: Wenchen Fan <wenc...@databricks.com>

commit d24d13179f0e9d125eaaebfcc225e1ec30c5cb83

Author: Gabor Somogyi <gabor.g.somogyi@...>

Date: 2018-02-15T11:52:40Z

[SPARK-23422][CORE] YarnShuffleIntegrationSuite fix when SPARK_PREPENâ¦

â¦D_CLASSES set to 1

## What changes were proposed in this pull request?

YarnShuffleIntegrationSuite fails when SPARK_PREPEND_CLASSES set to 1.

Normally mllib built before yarn module. When SPARK_PREPEND_CLASSES used

mllib classes are on yarn test classpath.

Before 2.3 that did not cause issues. But 2.3 has SPARK-22450, which

registered some mllib classes with the kryo serializer. Now it dies with the

following error:

`

18/02/13 07:33:29 INFO SparkContext: Starting job: collect at

YarnShuffleIntegrationSuite.scala:143

Exception in thread "dag-scheduler-event-loop"

java.lang.NoClassDefFoundError: breeze/linalg/DenseMatrix

`

In this PR NoClassDefFoundError caught only in case of testing and then do

nothing.

## How was this patch tested?

Automated: Pass the Jenkins.

Author: Gabor Somogyi <gabor.g.somo...@gmail.com>

Closes #20608 from gaborgsomogyi/SPARK-23422.

(cherry picked from commit 44e20c42254bc6591b594f54cd94ced5fcfadae3)

Signed-off-by: Marcelo Vanzin <van...@cloudera.com>

commit bae4449ad836a64db853e297d33bfd1a725faa0b

Author: Dongjoon Hyun <dongjoon@...>

Date: 2018-02-15T16:55:39Z

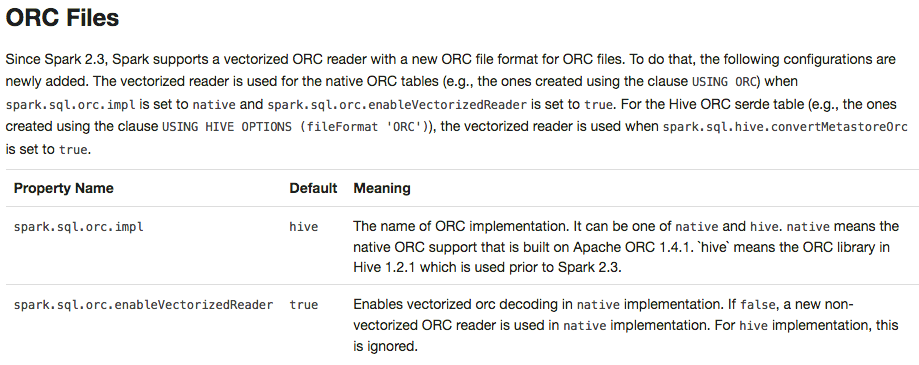

[SPARK-23426][SQL] Use `hive` ORC impl and disable PPD for Spark 2.3.0

## What changes were proposed in this pull request?

To prevent any regressions, this PR changes ORC implementation to `hive` by

default like Spark 2.2.X.

Users can enable `native` ORC. Also, ORC PPD is also restored to `false`

like Spark 2.2.X.

## How was this patch tested?

Pass all test cases.

Author: Dongjoon Hyun <dongj...@apache.org>

Closes #20610 from dongjoon-hyun/SPARK-ORC-DISABLE.

(cherry picked from commit 2f0498d1e85a53b60da6a47d20bbdf56b42b7dcb)

Signed-off-by: gatorsmile <gatorsm...@gmail.com>

commit 03960faa621710388c0de91d16a71dda749d173f

Author: Dongjoon Hyun <dongjoon@...>

Date: 2018-02-15T17:40:08Z

[MINOR][SQL] Fix an error message about inserting into bucketed tables

## What changes were proposed in this pull request?

This replaces `Sparkcurrently` to `Spark currently` in the following error

message.

```scala

scala> sql("insert into t2 select * from v1")

org.apache.spark.sql.AnalysisException: Output Hive table `default`.`t2`

is bucketed but Sparkcurrently does NOT populate bucketed ...

```

## How was this patch tested?

Manual.

Author: Dongjoon Hyun <dongj...@apache.org>

Closes #20617 from dongjoon-hyun/SPARK-ERROR-MSG.

(cherry picked from commit 6968c3cfd70961c4e86daffd6a156d0a9c1d7a2a)

Signed-off-by: gatorsmile <gatorsm...@gmail.com>

commit 0bd7765cd9832bee348af87663f3d424b61e92fc

Author: Liang-Chi Hsieh <viirya@...>

Date: 2018-02-15T17:54:39Z

[SPARK-23377][ML] Fixes Bucketizer with multiple columns persistence bug

## What changes were proposed in this pull request?

#### Problem:

Since 2.3, `Bucketizer` supports multiple input/output columns. We will

check if exclusive params are set during transformation. E.g., if `inputCols`

and `outputCol` are both set, an error will be thrown.

However, when we write `Bucketizer`, looks like the default params and

user-supplied params are merged during writing. All saved params are loaded

back and set to created model instance. So the default `outputCol` param in

`HasOutputCol` trait will be set in `paramMap` and become an user-supplied

param. That makes the check of exclusive params failed.

#### Fix:

This changes the saving logic of Bucketizer to handle this case. This is a

quick fix to catch the time of 2.3. We should consider modify the persistence

mechanism later.

Please see the discussion in the JIRA.

Note: The multi-column `QuantileDiscretizer` also has the same issue.

## How was this patch tested?

Modified tests.

Author: Liang-Chi Hsieh <vii...@gmail.com>

Closes #20594 from viirya/SPARK-23377-2.

(cherry picked from commit db45daab90ede4c03c1abc9096f4eac584e9db17)

Signed-off-by: Joseph K. Bradley <jos...@databricks.com>

commit 75bb19a018f9260eab3ea0ba3ea46e84b87eabf2

Author: âattilapirosâ <piros.attila.zsolt@...>

Date: 2018-02-15T20:03:41Z

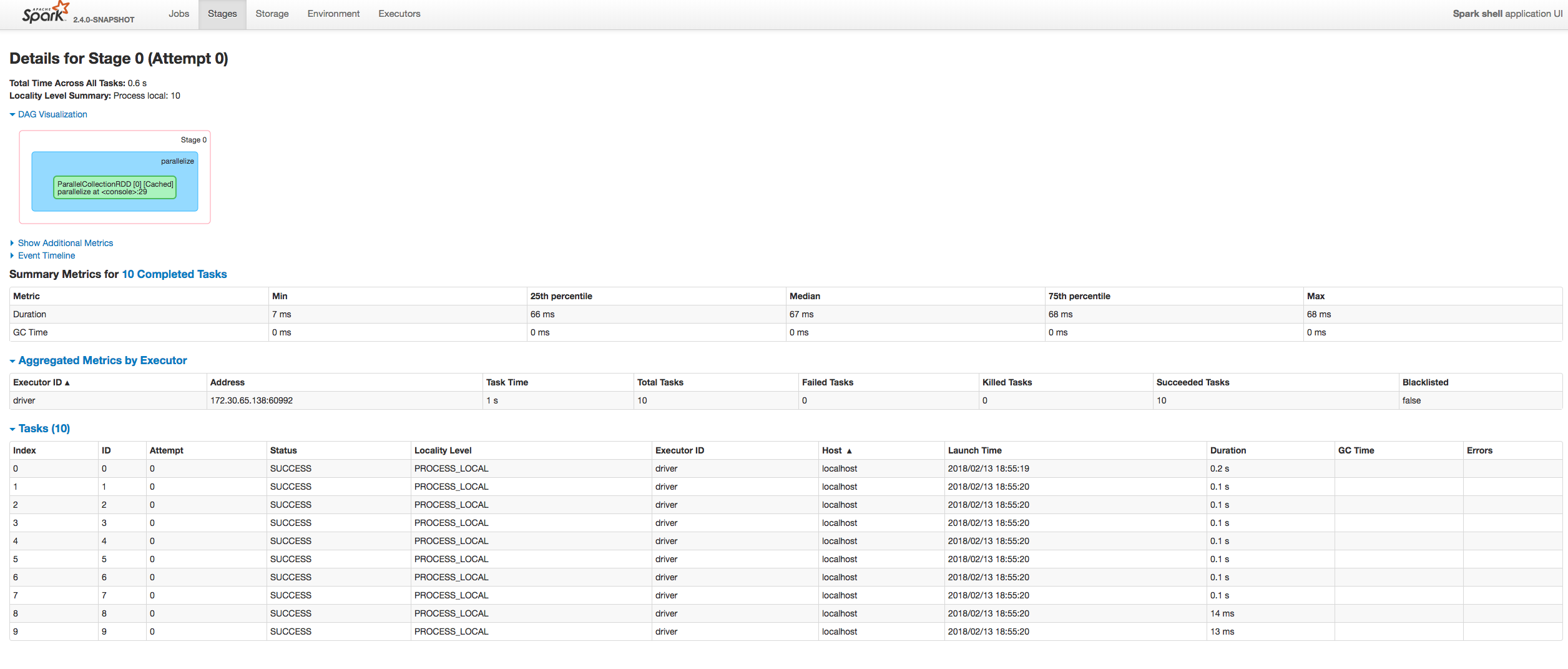

[SPARK-23413][UI] Fix sorting tasks by Host / Executor ID at the Stagâ¦

â¦e page

## What changes were proposed in this pull request?

Fixing exception got at sorting tasks by Host / Executor ID:

```

java.lang.IllegalArgumentException: Invalid sort column: Host

at org.apache.spark.ui.jobs.ApiHelper$.indexName(StagePage.scala:1017)

at

org.apache.spark.ui.jobs.TaskDataSource.sliceData(StagePage.scala:694)

at org.apache.spark.ui.PagedDataSource.pageData(PagedTable.scala:61)

at org.apache.spark.ui.PagedTable$class.table(PagedTable.scala:96)

at org.apache.spark.ui.jobs.TaskPagedTable.table(StagePage.scala:708)

at org.apache.spark.ui.jobs.StagePage.liftedTree1$1(StagePage.scala:293)

at org.apache.spark.ui.jobs.StagePage.render(StagePage.scala:282)

at org.apache.spark.ui.WebUI$$anonfun$2.apply(WebUI.scala:82)

at org.apache.spark.ui.WebUI$$anonfun$2.apply(WebUI.scala:82)

at org.apache.spark.ui.JettyUtils$$anon$3.doGet(JettyUtils.scala:90)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:687)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:790)

at

org.spark_project.jetty.servlet.ServletHolder.handle(ServletHolder.java:848)

at

org.spark_project.jetty.servlet.ServletHandler.doHandle(ServletHandler.java:584)

```

Moreover some refactoring to avoid similar problems by introducing

constants for each header name and reusing them at the identification of the

corresponding sorting index.

## How was this patch tested?

Manually:

(cherry picked from commit 1dc2c1d5e85c5f404f470aeb44c1f3c22786bdea)

Author: âattilapirosâ <piros.attila.zs...@gmail.com>

Closes #20623 from squito/fix_backport.

commit ccb0a59d7383db451b86aee67423eb6e28f1f982

Author: hyukjinkwon <gurwls223@...>

Date: 2018-02-16T17:41:17Z

[SPARK-23446][PYTHON] Explicitly check supported types in toPandas

## What changes were proposed in this pull request?

This PR explicitly specifies and checks the types we supported in

`toPandas`. This was a hole. For example, we haven't finished the binary type

support in Python side yet but now it allows as below:

```python

spark.conf.set("spark.sql.execution.arrow.enabled", "false")

df = spark.createDataFrame([[bytearray("a")]])

df.toPandas()

spark.conf.set("spark.sql.execution.arrow.enabled", "true")

df.toPandas()

```

```

_1

0 [97]

_1

0 a

```

This should be disallowed. I think the same things also apply to nested

timestamps too.

I also added some nicer message about `spark.sql.execution.arrow.enabled`

in the error message.

## How was this patch tested?

Manually tested and tests added in `python/pyspark/sql/tests.py`.

Author: hyukjinkwon <gurwls...@gmail.com>

Closes #20625 from HyukjinKwon/pandas_convertion_supported_type.

(cherry picked from commit c5857e496ff0d170ed0339f14afc7d36b192da6d)

Signed-off-by: gatorsmile <gatorsm...@gmail.com>

commit 8360da07110d847a01b243e6d786922a5057ad9f

Author: Shintaro Murakami <mrkm4ntr@...>

Date: 2018-02-17T01:17:55Z

[SPARK-23381][CORE] Murmur3 hash generates a different value from other

implementations

## What changes were proposed in this pull request?

Murmur3 hash generates a different value from the original and other

implementations (like Scala standard library and Guava or so) when the length

of a bytes array is not multiple of 4.

## How was this patch tested?

Added a unit test.

**Note: When we merge this PR, please give all the credits to Shintaro

Murakami.**

Author: Shintaro Murakami <mrkm4ntrgmail.com>

Author: gatorsmile <gatorsm...@gmail.com>

Author: Shintaro Murakami <mrkm4...@gmail.com>

Closes #20630 from gatorsmile/pr-20568.

(cherry picked from commit d5ed2108d32e1d95b26ee7fed39e8a733e935e2c)

Signed-off-by: gatorsmile <gatorsm...@gmail.com>

commit 44095cb65500739695b0324c177c19dfa1471472

Author: Sameer Agarwal <sameerag@...>

Date: 2018-02-17T01:29:46Z

Preparing Spark release v2.3.0-rc4

commit c7a0dea46a251a27b304ac2ec9f07f97aca4b1d0

Author: Sameer Agarwal <sameerag@...>

Date: 2018-02-17T01:29:51Z

Preparing development version 2.3.1-SNAPSHOT

commit a1ee6f1fc543120763f1b373bb31bc6d84004318

Author: Marcelo Vanzin <vanzin@...>

Date: 2018-02-21T01:54:06Z

[SPARK-23470][UI] Use first attempt of last stage to define job description.

This is much faster than finding out what the last attempt is, and the

data should be the same.

There's room for improvement in this page (like only loading data for

the jobs being shown, instead of loading all available jobs and sorting

them), but this should bring performance on par with the 2.2 version.

Author: Marcelo Vanzin <van...@cloudera.com>

Closes #20644 from vanzin/SPARK-23470.

(cherry picked from commit 2ba77ed9e51922303e3c3533e368b95788bd7de5)

Signed-off-by: Sameer Agarwal <samee...@apache.org>

commit 1d78f03ae3037f1ddbe6533a6733b7805a6705bf

Author: Marcelo Vanzin <vanzin@...>

Date: 2018-02-21T02:06:21Z

[SPARK-23468][CORE] Stringify auth secret before storing it in credentials.

The secret is used as a string in many parts of the code, so it has

to be turned into a hex string to avoid issues such as the random

byte sequence not containing a valid UTF8 sequence.

Author: Marcelo Vanzin <van...@cloudera.com>

Closes #20643 from vanzin/SPARK-23468.

(cherry picked from commit 6d398c05cbad69aa9093429e04ae44c73b81cd5a)

Signed-off-by: Marcelo Vanzin <van...@cloudera.com>

commit 3e7269eb904b591883300d7433e5c99be0b3b5b3

Author: Tathagata Das <tathagata.das1565@...>

Date: 2018-02-21T02:16:10Z

[SPARK-23454][SS][DOCS] Added trigger information to the Structured

Streaming programming guide

## What changes were proposed in this pull request?

- Added clear information about triggers

- Made the semantics guarantees of watermarks more clear for streaming

aggregations and stream-stream joins.

## How was this patch tested?

(Please explain how this patch was tested. E.g. unit tests, integration

tests, manual tests)

(If this patch involves UI changes, please attach a screenshot; otherwise,

remove this)

Please review http://spark.apache.org/contributing.html before opening a

pull request.

Author: Tathagata Das <tathagata.das1...@gmail.com>

Closes #20631 from tdas/SPARK-23454.

(cherry picked from commit 601d653bff9160db8477f86d961e609fc2190237)

Signed-off-by: Tathagata Das <tathagata.das1...@gmail.com>

commit 373ac642fc145527bdfccae046d0e98f105ee7b3

Author: Tathagata Das <tathagata.das1565@...>

Date: 2018-02-21T22:56:13Z

[SPARK-23484][SS] Fix possible race condition in KafkaContinuousReader

## What changes were proposed in this pull request?

var `KafkaContinuousReader.knownPartitions` should be threadsafe as it is

accessed from multiple threads - the query thread at the time of reader factory

creation, and the epoch tracking thread at the time of `needsReconfiguration`.

## How was this patch tested?

Existing tests.

Author: Tathagata Das <tathagata.das1...@gmail.com>

Closes #20655 from tdas/SPARK-23484.

(cherry picked from commit 3fd0ccb13fea44727d970479af1682ef00592147)

Signed-off-by: Tathagata Das <tathagata.das1...@gmail.com>

commit 23ba4416e1bbbaa818876d7a837f7a5e260aa048

Author: Shixiong Zhu <zsxwing@...>

Date: 2018-02-21T23:37:28Z

[SPARK-23481][WEBUI] lastStageAttempt should fail when a stage doesn't exist

## What changes were proposed in this pull request?

The issue here is `AppStatusStore.lastStageAttempt` will return the next

available stage in the store when a stage doesn't exist.

This PR adds `last(stageId)` to ensure it returns a correct `StageData`

## How was this patch tested?

The new unit test.

Author: Shixiong Zhu <zsxw...@gmail.com>

Closes #20654 from zsxwing/SPARK-23481.

(cherry picked from commit 744d5af652ee8cece361cbca31e5201134e0fb42)

Signed-off-by: Shixiong Zhu <zsxw...@gmail.com>

commit a0d7949896e70f427e7f3942ff340c9484ff0aab

Author: Shixiong Zhu <zsxwing@...>

Date: 2018-02-22T03:43:11Z

[SPARK-23475][WEBUI] Skipped stages should be evicted before completed

stages

## What changes were proposed in this pull request?

The root cause of missing completed stages is because `cleanupStages` will

never remove skipped stages.

This PR changes the logic to always remove skipped stage first. This is

safe since the job itself contains enough information to render skipped stages

in the UI.

## How was this patch tested?

The new unit tests.

Author: Shixiong Zhu <zsxw...@gmail.com>

Closes #20656 from zsxwing/SPARK-23475.

(cherry picked from commit 45cf714ee6d4eead2fe00794a0d754fa6d33d4a6)

Signed-off-by: gatorsmile <gatorsm...@gmail.com>

commit 992447fb30ee9ebb3cf794f2d06f4d63a2d792db

Author: Sameer Agarwal <sameerag@...>

Date: 2018-02-22T17:56:57Z

Preparing Spark release v2.3.0-rc5

commit 285b841ffbfb21c0af3f83800f7815fb0bfe3627

Author: Sameer Agarwal <sameerag@...>

Date: 2018-02-22T17:57:03Z

Preparing development version 2.3.1-SNAPSHOT

commit 578607b30a65d101380acbe9c9740dc267a5d55c

Author: Marco Gaido <marcogaido91@...>

Date: 2018-02-24T02:27:33Z

[SPARK-23475][UI][BACKPORT-2.3] Show also skipped stages

## What changes were proposed in this pull request?

SPARK-20648 introduced the status `SKIPPED` for the stages. On the UI,

previously, skipped stages were shown as `PENDING`; after this change, they are

not shown on the UI.

The PR introduce a new section in order to show also `SKIPPED` stages in a

proper table.

Manual backport from to branch-2.3.

## How was this patch tested?

added UT

Author: Marco Gaido <marcogaid...@gmail.com>

Closes #20662 from mgaido91/SPARK-23475_2.3.

----

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org