svn commit: r30175 - in /dev/spark/2.4.1-SNAPSHOT-2018_10_19_22_02-e3a60b0-docs: ./ _site/ _site/api/ _site/api/R/ _site/api/java/ _site/api/java/lib/ _site/api/java/org/ _site/api/java/org/apache/ _s

Author: pwendell Date: Sat Oct 20 05:16:47 2018 New Revision: 30175 Log: Apache Spark 2.4.1-SNAPSHOT-2018_10_19_22_02-e3a60b0 docs [This commit notification would consist of 1477 parts, which exceeds the limit of 50 ones, so it was shortened to the summary.] - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

svn commit: r30174 - in /dev/spark/3.0.0-SNAPSHOT-2018_10_19_20_02-3370865-docs: ./ _site/ _site/api/ _site/api/R/ _site/api/java/ _site/api/java/lib/ _site/api/java/org/ _site/api/java/org/apache/ _s

Author: pwendell Date: Sat Oct 20 03:16:56 2018 New Revision: 30174 Log: Apache Spark 3.0.0-SNAPSHOT-2018_10_19_20_02-3370865 docs [This commit notification would consist of 1483 parts, which exceeds the limit of 50 ones, so it was shortened to the summary.]

spark git commit: [SPARK-25785][SQL] Add prettyNames for from_json, to_json, from_csv, and schema_of_json

Repository: spark

Updated Branches:

refs/heads/master 4acbda4a9 -> 3370865b0

[SPARK-25785][SQL] Add prettyNames for from_json, to_json, from_csv, and schema_of_json

## What changes were proposed in this pull request?

This PR adds `prettyName` overrides for `from_json`, `to_json`, `from_csv`, and `schema_of_json` so that the SQL function names appear in generated column names and schemas.
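The effect of such an override can be illustrated with a small, Spark-free model. This is only a sketch: the `Expression` base class, its `node_name`/`pretty_name` properties, and the `sql` helper below are hypothetical stand-ins, and it assumes the default display name is the lowercased class name. `StructsToJson` is deliberately left without an override here, purely to show the default.

```python
class Expression:
    """Toy stand-in for an expression node (hypothetical, not Spark's API)."""

    @property
    def node_name(self) -> str:
        # The node name is simply the class name.
        return type(self).__name__

    @property
    def pretty_name(self) -> str:
        # Assumed default: the lowercased class name.
        return self.node_name.lower()

    def sql(self, *args: str) -> str:
        # Render the expression the way it would show up in a column name.
        return f"{self.pretty_name}({', '.join(args)})"


class StructsToJson(Expression):
    """No override in this sketch, so the default name is used."""


class JsonToStructs(Expression):
    @property
    def pretty_name(self) -> str:
        # Override so the user-facing function name is shown instead.
        return "from_json"


print(StructsToJson().sql("col"))  # structstojson(col)
print(JsonToStructs().sql("col"))  # from_json(col)
```

Under that assumption, adding the override changes only how the expression is displayed, not how it is evaluated.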

## How was this patch tested?

Unit tests

Closes #22773 from HyukjinKwon/minor-prettyNames.

Authored-by: hyukjinkwon

Signed-off-by: hyukjinkwon

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/3370865b

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/3370865b

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/3370865b

Branch: refs/heads/master

Commit: 3370865b0ebe9b04c6671631aee5917b41ceba9c

Parents: 4acbda4

Author: hyukjinkwon

Authored: Sat Oct 20 10:15:53 2018 +0800

Committer: hyukjinkwon

Committed: Sat Oct 20 10:15:53 2018 +0800

--

.../catalyst/expressions/csvExpressions.scala | 2 +

.../catalyst/expressions/jsonExpressions.scala | 6 +++

.../sql-tests/results/csv-functions.sql.out | 4 +-

.../sql-tests/results/json-functions.sql.out| 50 ++--

.../native/stringCastAndExpressions.sql.out | 2 +-

5 files changed, 36 insertions(+), 28 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/3370865b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/csvExpressions.scala

--

diff --git

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/csvExpressions.scala

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/csvExpressions.scala

index a63b624..853b1ea 100644

---

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/csvExpressions.scala

+++

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/csvExpressions.scala

@@ -117,4 +117,6 @@ case class CsvToStructs(

}

override def inputTypes: Seq[AbstractDataType] = StringType :: Nil

+

+ override def prettyName: String = "from_csv"

}

http://git-wip-us.apache.org/repos/asf/spark/blob/3370865b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/jsonExpressions.scala

--

diff --git

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/jsonExpressions.scala

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/jsonExpressions.scala

index 9f28483..b4815b4 100644

---

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/jsonExpressions.scala

+++

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/jsonExpressions.scala

@@ -610,6 +610,8 @@ case class JsonToStructs(

case _: MapType => "entries"

case _ => super.sql

}

+

+ override def prettyName: String = "from_json"

}

/**

@@ -730,6 +732,8 @@ case class StructsToJson(

override def nullSafeEval(value: Any): Any = converter(value)

override def inputTypes: Seq[AbstractDataType] = TypeCollection(ArrayType,

StructType) :: Nil

+

+ override def prettyName: String = "to_json"

}

/**

@@ -774,6 +778,8 @@ case class SchemaOfJson(

UTF8String.fromString(dt.catalogString)

}

+

+ override def prettyName: String = "schema_of_json"

}

object JsonExprUtils {

http://git-wip-us.apache.org/repos/asf/spark/blob/3370865b/sql/core/src/test/resources/sql-tests/results/csv-functions.sql.out

--

diff --git

a/sql/core/src/test/resources/sql-tests/results/csv-functions.sql.out

b/sql/core/src/test/resources/sql-tests/results/csv-functions.sql.out

index 15dbe36..f19f34a 100644

--- a/sql/core/src/test/resources/sql-tests/results/csv-functions.sql.out

+++ b/sql/core/src/test/resources/sql-tests/results/csv-functions.sql.out

@@ -5,7 +5,7 @@

-- !query 0

select from_csv('1, 3.14', 'a INT, f FLOAT')

-- !query 0 schema

-struct>

+struct>

-- !query 0 output

{"a":1,"f":3.14}

@@ -13,7 +13,7 @@ struct>

-- !query 1

select from_csv('26/08/2015', 'time Timestamp', map('timestampFormat',

'dd/MM/'))

-- !query 1 schema

-struct>

+struct>

-- !query 1 output

{"time":2015-08-26 00:00:00.0}

http://git-wip-us.apache.org/repos/asf/spark/blob/3370865b/sql/core/src/test/resources/sql-tests/results/json-functions.sql.out

--

diff --git

a/sql/core/src/test/resources/sql-tests/results/json-functions.sql.out

b/sql/core/src/test/resources/sql-tests/results/json-functions.sql.out

index 77e9000..868eee8 100644

--- a/sql/core/src/test/resources/sql-tests/results/json-functions.sql.out

+++

spark git commit: Revert "[SPARK-25764][ML][EXAMPLES] Update BisectingKMeans example to use ClusteringEvaluator"

Repository: spark

Updated Branches:

refs/heads/branch-2.4 432697c7b -> e3a60b0d6

Revert "[SPARK-25764][ML][EXAMPLES] Update BisectingKMeans example to use ClusteringEvaluator"

This reverts commit 36307b1e4b42ce22b07e7a3fc2679c4b5e7c34c8.

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/e3a60b0d

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/e3a60b0d

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/e3a60b0d

Branch: refs/heads/branch-2.4

Commit: e3a60b0d63eec58adbb1aabb9640b049e40be3bf

Parents: 432697c

Author: Wenchen Fan

Authored: Sat Oct 20 09:30:12 2018 +0800

Committer: Wenchen Fan

Committed: Sat Oct 20 09:30:12 2018 +0800

--

.../spark/examples/ml/JavaBisectingKMeansExample.java | 12 +++-

.../src/main/python/ml/bisecting_k_means_example.py | 12 +++-

.../spark/examples/ml/BisectingKMeansExample.scala | 12 +++-

3 files changed, 9 insertions(+), 27 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/e3a60b0d/examples/src/main/java/org/apache/spark/examples/ml/JavaBisectingKMeansExample.java

--

diff --git

a/examples/src/main/java/org/apache/spark/examples/ml/JavaBisectingKMeansExample.java

b/examples/src/main/java/org/apache/spark/examples/ml/JavaBisectingKMeansExample.java

index f517dc3..8c82aaa 100644

---

a/examples/src/main/java/org/apache/spark/examples/ml/JavaBisectingKMeansExample.java

+++

b/examples/src/main/java/org/apache/spark/examples/ml/JavaBisectingKMeansExample.java

@@ -20,7 +20,6 @@ package org.apache.spark.examples.ml;

// $example on$

import org.apache.spark.ml.clustering.BisectingKMeans;

import org.apache.spark.ml.clustering.BisectingKMeansModel;

-import org.apache.spark.ml.evaluation.ClusteringEvaluator;

import org.apache.spark.ml.linalg.Vector;

import org.apache.spark.sql.Dataset;

import org.apache.spark.sql.Row;

@@ -51,14 +50,9 @@ public class JavaBisectingKMeansExample {

BisectingKMeans bkm = new BisectingKMeans().setK(2).setSeed(1);

BisectingKMeansModel model = bkm.fit(dataset);

-// Make predictions

-Dataset predictions = model.transform(dataset);

-

-// Evaluate clustering by computing Silhouette score

-ClusteringEvaluator evaluator = new ClusteringEvaluator();

-

-double silhouette = evaluator.evaluate(predictions);

-System.out.println("Silhouette with squared euclidean distance = " +

silhouette);

+// Evaluate clustering.

+double cost = model.computeCost(dataset);

+System.out.println("Within Set Sum of Squared Errors = " + cost);

// Shows the result.

System.out.println("Cluster Centers: ");

http://git-wip-us.apache.org/repos/asf/spark/blob/e3a60b0d/examples/src/main/python/ml/bisecting_k_means_example.py

--

diff --git a/examples/src/main/python/ml/bisecting_k_means_example.py

b/examples/src/main/python/ml/bisecting_k_means_example.py

index 82adb33..7842d20 100644

--- a/examples/src/main/python/ml/bisecting_k_means_example.py

+++ b/examples/src/main/python/ml/bisecting_k_means_example.py

@@ -24,7 +24,6 @@ from __future__ import print_function

# $example on$

from pyspark.ml.clustering import BisectingKMeans

-from pyspark.ml.evaluation import ClusteringEvaluator

# $example off$

from pyspark.sql import SparkSession

@@ -42,14 +41,9 @@ if __name__ == "__main__":

bkm = BisectingKMeans().setK(2).setSeed(1)

model = bkm.fit(dataset)

-# Make predictions

-predictions = model.transform(dataset)

-

-# Evaluate clustering by computing Silhouette score

-evaluator = ClusteringEvaluator()

-

-silhouette = evaluator.evaluate(predictions)

-print("Silhouette with squared euclidean distance = " + str(silhouette))

+# Evaluate clustering.

+cost = model.computeCost(dataset)

+print("Within Set Sum of Squared Errors = " + str(cost))

# Shows the result.

print("Cluster Centers: ")

http://git-wip-us.apache.org/repos/asf/spark/blob/e3a60b0d/examples/src/main/scala/org/apache/spark/examples/ml/BisectingKMeansExample.scala

--

diff --git

a/examples/src/main/scala/org/apache/spark/examples/ml/BisectingKMeansExample.scala

b/examples/src/main/scala/org/apache/spark/examples/ml/BisectingKMeansExample.scala

index 14e13df..5f8f2c9 100644

---

a/examples/src/main/scala/org/apache/spark/examples/ml/BisectingKMeansExample.scala

+++

b/examples/src/main/scala/org/apache/spark/examples/ml/BisectingKMeansExample.scala

@@ -21,7 +21,6 @@ package org.apache.spark.examples.ml

// $example on$

import org.apache.spark.ml.clustering.BisectingKMeans

-import org.apache.spark.ml.evaluation.ClusteringEvaluator

//

spark git commit: Revert "[SPARK-25764][ML][EXAMPLES] Update BisectingKMeans example to use ClusteringEvaluator"

Repository: spark

Updated Branches:

refs/heads/master ec96d34e7 -> 4acbda4a9

Revert "[SPARK-25764][ML][EXAMPLES] Update BisectingKMeans example to use ClusteringEvaluator"

This reverts commit d0ecff28545ac81f5ba7ac06957ced65b6e3ebcd.

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/4acbda4a

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/4acbda4a

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/4acbda4a

Branch: refs/heads/master

Commit: 4acbda4a96a5d6ef9065544631a3457e8d7b1748

Parents: ec96d34

Author: Wenchen Fan

Authored: Sat Oct 20 09:28:53 2018 +0800

Committer: Wenchen Fan

Committed: Sat Oct 20 09:28:53 2018 +0800

--

.../spark/examples/ml/JavaBisectingKMeansExample.java | 12 +++-

.../src/main/python/ml/bisecting_k_means_example.py | 12 +++-

.../spark/examples/ml/BisectingKMeansExample.scala | 12 +++-

3 files changed, 9 insertions(+), 27 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/4acbda4a/examples/src/main/java/org/apache/spark/examples/ml/JavaBisectingKMeansExample.java

--

diff --git

a/examples/src/main/java/org/apache/spark/examples/ml/JavaBisectingKMeansExample.java

b/examples/src/main/java/org/apache/spark/examples/ml/JavaBisectingKMeansExample.java

index f517dc3..8c82aaa 100644

---

a/examples/src/main/java/org/apache/spark/examples/ml/JavaBisectingKMeansExample.java

+++

b/examples/src/main/java/org/apache/spark/examples/ml/JavaBisectingKMeansExample.java

@@ -20,7 +20,6 @@ package org.apache.spark.examples.ml;

// $example on$

import org.apache.spark.ml.clustering.BisectingKMeans;

import org.apache.spark.ml.clustering.BisectingKMeansModel;

-import org.apache.spark.ml.evaluation.ClusteringEvaluator;

import org.apache.spark.ml.linalg.Vector;

import org.apache.spark.sql.Dataset;

import org.apache.spark.sql.Row;

@@ -51,14 +50,9 @@ public class JavaBisectingKMeansExample {

BisectingKMeans bkm = new BisectingKMeans().setK(2).setSeed(1);

BisectingKMeansModel model = bkm.fit(dataset);

-// Make predictions

-Dataset predictions = model.transform(dataset);

-

-// Evaluate clustering by computing Silhouette score

-ClusteringEvaluator evaluator = new ClusteringEvaluator();

-

-double silhouette = evaluator.evaluate(predictions);

-System.out.println("Silhouette with squared euclidean distance = " +

silhouette);

+// Evaluate clustering.

+double cost = model.computeCost(dataset);

+System.out.println("Within Set Sum of Squared Errors = " + cost);

// Shows the result.

System.out.println("Cluster Centers: ");

http://git-wip-us.apache.org/repos/asf/spark/blob/4acbda4a/examples/src/main/python/ml/bisecting_k_means_example.py

--

diff --git a/examples/src/main/python/ml/bisecting_k_means_example.py

b/examples/src/main/python/ml/bisecting_k_means_example.py

index 82adb33..7842d20 100644

--- a/examples/src/main/python/ml/bisecting_k_means_example.py

+++ b/examples/src/main/python/ml/bisecting_k_means_example.py

@@ -24,7 +24,6 @@ from __future__ import print_function

# $example on$

from pyspark.ml.clustering import BisectingKMeans

-from pyspark.ml.evaluation import ClusteringEvaluator

# $example off$

from pyspark.sql import SparkSession

@@ -42,14 +41,9 @@ if __name__ == "__main__":

bkm = BisectingKMeans().setK(2).setSeed(1)

model = bkm.fit(dataset)

-# Make predictions

-predictions = model.transform(dataset)

-

-# Evaluate clustering by computing Silhouette score

-evaluator = ClusteringEvaluator()

-

-silhouette = evaluator.evaluate(predictions)

-print("Silhouette with squared euclidean distance = " + str(silhouette))

+# Evaluate clustering.

+cost = model.computeCost(dataset)

+print("Within Set Sum of Squared Errors = " + str(cost))

# Shows the result.

print("Cluster Centers: ")

http://git-wip-us.apache.org/repos/asf/spark/blob/4acbda4a/examples/src/main/scala/org/apache/spark/examples/ml/BisectingKMeansExample.scala

--

diff --git

a/examples/src/main/scala/org/apache/spark/examples/ml/BisectingKMeansExample.scala

b/examples/src/main/scala/org/apache/spark/examples/ml/BisectingKMeansExample.scala

index 14e13df..5f8f2c9 100644

---

a/examples/src/main/scala/org/apache/spark/examples/ml/BisectingKMeansExample.scala

+++

b/examples/src/main/scala/org/apache/spark/examples/ml/BisectingKMeansExample.scala

@@ -21,7 +21,6 @@ package org.apache.spark.examples.ml

// $example on$

import org.apache.spark.ml.clustering.BisectingKMeans

-import org.apache.spark.ml.evaluation.ClusteringEvaluator

// $example

svn commit: r30172 - in /dev/spark/3.0.0-SNAPSHOT-2018_10_19_16_03-ec96d34-docs: ./ _site/ _site/api/ _site/api/R/ _site/api/java/ _site/api/java/lib/ _site/api/java/org/ _site/api/java/org/apache/ _s

Author: pwendell Date: Fri Oct 19 23:17:26 2018 New Revision: 30172 Log: Apache Spark 3.0.0-SNAPSHOT-2018_10_19_16_03-ec96d34 docs [This commit notification would consist of 1483 parts, which exceeds the limit of 50 ones, so it was shortened to the summary.]

spark git commit: [SPARK-25745][K8S] Improve docker-image-tool.sh script

Repository: spark

Updated Branches:

refs/heads/master 43717dee5 -> ec96d34e7

[SPARK-25745][K8S] Improve docker-image-tool.sh script

## What changes were proposed in this pull request?

Adds error checking and handling to `docker` invocations so that the script terminates early if any of them fails. This avoids subtle errors: for example, if the base image fails to build, the Python/R images can end up being built from outdated base images. It also makes it more explicit to the user that something went wrong.

Additionally, the provided `Dockerfiles` assume that Spark was first built locally or is a runnable distribution, but the script didn't previously enforce this. The script now checks the JARs folder to ensure that Spark JARs actually exist and, if not, aborts early with a reminder that the user needs to build locally first.
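The JAR check described above can be sketched outside the shell script as follows. This is an illustration in Python, not the script's own code: the function names `count_spark_jars` and `verify_spark_built` are hypothetical, and the sketch assumes the check simply counts entries matching `spark-*` in the JARs directory and fails when there are none.

```python
import glob
import os
import tempfile


def count_spark_jars(jars_dir: str) -> int:
    """Count entries in jars_dir whose names start with 'spark-'."""
    return len(glob.glob(os.path.join(jars_dir, "spark-*")))


def verify_spark_built(jars_dir: str) -> None:
    """Fail early when no Spark JARs are present (hypothetical helper)."""
    if count_spark_jars(jars_dir) == 0:
        raise RuntimeError(
            "Cannot find Spark JARs. This script assumes that Apache Spark "
            "has first been built locally or this is a runnable distribution."
        )


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as jars:
        try:
            verify_spark_built(jars)  # empty directory: the check fails
        except RuntimeError as exc:
            print(f"aborting: {exc}")

        # Simulate a build by creating a placeholder JAR, then re-check.
        open(os.path.join(jars, "spark-core.jar"), "w").close()
        verify_spark_built(jars)
        print("Spark JARs found, continuing with the image build")
```

Failing before any `docker build` runs keeps the error message close to its cause instead of surfacing later as a confusing image build failure.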

## How was this patch tested?

- Tested with a `mvn clean` working copy and verified that the script now terminates early

- Tested with bad `Dockerfiles` that fail to build to see that early termination occurred

Closes #22748 from rvesse/SPARK-25745.

Authored-by: Rob Vesse

Signed-off-by: Marcelo Vanzin

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/ec96d34e

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/ec96d34e

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/ec96d34e

Branch: refs/heads/master

Commit: ec96d34e74148803190db8dcf9fda527eeea9255

Parents: 43717de

Author: Rob Vesse

Authored: Fri Oct 19 15:03:53 2018 -0700

Committer: Marcelo Vanzin

Committed: Fri Oct 19 15:03:53 2018 -0700

--

bin/docker-image-tool.sh | 41 +++--

1 file changed, 31 insertions(+), 10 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/ec96d34e/bin/docker-image-tool.sh

--

diff --git a/bin/docker-image-tool.sh b/bin/docker-image-tool.sh

index f17791a..001590a 100755

--- a/bin/docker-image-tool.sh

+++ b/bin/docker-image-tool.sh

@@ -44,6 +44,7 @@ function image_ref {

function build {

local BUILD_ARGS

local IMG_PATH

+ local JARS

if [ ! -f "$SPARK_HOME/RELEASE" ]; then

# Set image build arguments accordingly if this is a source repo and not a

distribution archive.

@@ -53,26 +54,38 @@ function build {

# the examples directory is cleaned up before generating the distribution

tarball, so this

# issue does not occur.

IMG_PATH=resource-managers/kubernetes/docker/src/main/dockerfiles

+JARS=assembly/target/scala-$SPARK_SCALA_VERSION/jars

BUILD_ARGS=(

${BUILD_PARAMS}

--build-arg

img_path=$IMG_PATH

--build-arg

- spark_jars=assembly/target/scala-$SPARK_SCALA_VERSION/jars

+ spark_jars=$JARS

--build-arg

example_jars=examples/target/scala-$SPARK_SCALA_VERSION/jars

--build-arg

k8s_tests=resource-managers/kubernetes/integration-tests/tests

)

else

-# Not passed as an argument to docker, but used to validate the Spark

directory.

+# Not passed as arguments to docker, but used to validate the Spark

directory.

IMG_PATH="kubernetes/dockerfiles"

+JARS=jars

BUILD_ARGS=(${BUILD_PARAMS})

fi

+ # Verify that the Docker image content directory is present

if [ ! -d "$IMG_PATH" ]; then

error "Cannot find docker image. This script must be run from a runnable

distribution of Apache Spark."

fi

+

+ # Verify that Spark has actually been built/is a runnable distribution

+ # i.e. the Spark JARs that the Docker files will place into the image are

present

+ local TOTAL_JARS=$(ls $JARS/spark-* | wc -l)

+ TOTAL_JARS=$(( $TOTAL_JARS ))

+ if [ "${TOTAL_JARS}" -eq 0 ]; then

+error "Cannot find Spark JARs. This script assumes that Apache Spark has

first been built locally or this is a runnable distribution."

+ fi

+

local BINDING_BUILD_ARGS=(

${BUILD_PARAMS}

--build-arg

@@ -85,29 +98,37 @@ function build {

docker build $NOCACHEARG "${BUILD_ARGS[@]}" \

-t $(image_ref spark) \

-f "$BASEDOCKERFILE" .

- if [[ $? != 0 ]]; then

-error "Failed to build Spark docker image."

+ if [ $? -ne 0 ]; then

+error "Failed to build Spark JVM Docker image, please refer to Docker

build output for details."

fi

docker build $NOCACHEARG "${BINDING_BUILD_ARGS[@]}" \

-t $(image_ref spark-py) \

-f "$PYDOCKERFILE" .

- if [[ $? != 0 ]]; then

-error "Failed to build PySpark docker image."

- fi

-

+if [ $? -ne 0 ]; then

+ error "Failed to build PySpark Docker image, please refer to Docker

build output for details."

+fi

docker build $NOCACHEARG "${BINDING_BUILD_ARGS[@]}" \

-t $(image_ref spark-r) \

-f "$RDOCKERFILE" .

- if [[ $? != 0 ]]; then

-error "Failed to build

spark git commit: Revert "[SPARK-25758][ML] Deprecate computeCost on BisectingKMeans"

Repository: spark

Updated Branches:

refs/heads/branch-2.4 1001d2314 -> 432697c7b

Revert "[SPARK-25758][ML] Deprecate computeCost on BisectingKMeans"

This reverts commit c2962546d9a5900a5628a31b83d2c4b22c3a7936.

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/432697c7

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/432697c7

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/432697c7

Branch: refs/heads/branch-2.4

Commit: 432697c7b58785ca8439717fa748a72224cf0859

Parents: 1001d23

Author: gatorsmile

Authored: Fri Oct 19 14:57:52 2018 -0700

Committer: gatorsmile

Committed: Fri Oct 19 14:57:52 2018 -0700

--

.../scala/org/apache/spark/ml/clustering/BisectingKMeans.scala | 5 -

python/pyspark/ml/clustering.py| 6 --

2 files changed, 11 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/432697c7/mllib/src/main/scala/org/apache/spark/ml/clustering/BisectingKMeans.scala

--

diff --git

a/mllib/src/main/scala/org/apache/spark/ml/clustering/BisectingKMeans.scala

b/mllib/src/main/scala/org/apache/spark/ml/clustering/BisectingKMeans.scala

index 2243d99..5cb16cc 100644

--- a/mllib/src/main/scala/org/apache/spark/ml/clustering/BisectingKMeans.scala

+++ b/mllib/src/main/scala/org/apache/spark/ml/clustering/BisectingKMeans.scala

@@ -125,13 +125,8 @@ class BisectingKMeansModel private[ml] (

/**

* Computes the sum of squared distances between the input points and their

corresponding cluster

* centers.

- *

- * @deprecated This method is deprecated and will be removed in 3.0.0. Use

ClusteringEvaluator

- * instead. You can also get the cost on the training dataset in

the summary.

*/

@Since("2.0.0")

- @deprecated("This method is deprecated and will be removed in 3.0.0. Use

ClusteringEvaluator " +

-"instead. You can also get the cost on the training dataset in the

summary.", "2.4.0")

def computeCost(dataset: Dataset[_]): Double = {

SchemaUtils.validateVectorCompatibleColumn(dataset.schema, getFeaturesCol)

val data = DatasetUtils.columnToOldVector(dataset, getFeaturesCol)

http://git-wip-us.apache.org/repos/asf/spark/blob/432697c7/python/pyspark/ml/clustering.py

--

diff --git a/python/pyspark/ml/clustering.py b/python/pyspark/ml/clustering.py

index 11eb124..5ef4e76 100644

--- a/python/pyspark/ml/clustering.py

+++ b/python/pyspark/ml/clustering.py

@@ -540,13 +540,7 @@ class BisectingKMeansModel(JavaModel, JavaMLWritable,

JavaMLReadable):

"""

Computes the sum of squared distances between the input points

and their corresponding cluster centers.

-

-..note:: Deprecated in 2.4.0. It will be removed in 3.0.0. Use

ClusteringEvaluator instead.

- You can also get the cost on the training dataset in the summary.

"""

-warnings.warn("Deprecated in 2.4.0. It will be removed in 3.0.0. Use

ClusteringEvaluator "

- "instead. You can also get the cost on the training

dataset in the summary.",

- DeprecationWarning)

return self._call_java("computeCost", dataset)

@property


svn commit: r30171 - in /dev/spark/2.4.1-SNAPSHOT-2018_10_19_14_03-1001d23-docs: ./ _site/ _site/api/ _site/api/R/ _site/api/java/ _site/api/java/lib/ _site/api/java/org/ _site/api/java/org/apache/ _s

Author: pwendell Date: Fri Oct 19 21:17:15 2018 New Revision: 30171 Log: Apache Spark 2.4.1-SNAPSHOT-2018_10_19_14_03-1001d23 docs [This commit notification would consist of 1477 parts, which exceeds the limit of 50 ones, so it was shortened to the summary.]

svn commit: r30168 - in /dev/spark/3.0.0-SNAPSHOT-2018_10_19_12_02-43717de-docs: ./ _site/ _site/api/ _site/api/R/ _site/api/java/ _site/api/java/lib/ _site/api/java/org/ _site/api/java/org/apache/ _s

Author: pwendell Date: Fri Oct 19 19:16:51 2018 New Revision: 30168 Log: Apache Spark 3.0.0-SNAPSHOT-2018_10_19_12_02-43717de docs [This commit notification would consist of 1483 parts, which exceeds the limit of 50 ones, so it was shortened to the summary.]

spark git commit: [SPARK-25704][CORE] Allocate a bit less than Int.MaxValue

Repository: spark

Updated Branches:

refs/heads/branch-2.4 9c0c6d4d5 -> 1001d2314

[SPARK-25704][CORE] Allocate a bit less than Int.MaxValue

JVMs don't let you allocate arrays of length exactly Int.MaxValue, so leave

a little extra room. This is necessary when reading blocks >2GB off

the network (for remote reads or for cache replication).
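As a rough illustration of the idea, the sketch below splits a block length into chunk sizes that each stay slightly under the 32-bit signed maximum. It is a Python model, not Spark's code: the function name `chunk_lengths` is hypothetical, and the headroom of 15 bytes is an assumed value chosen only for the example.

```python
from typing import List

INT_MAX = 2**31 - 1          # value of Int.MaxValue on the JVM
MAX_CHUNK = INT_MAX - 15     # assumed headroom; illustrative only


def chunk_lengths(total: int, max_chunk: int = MAX_CHUNK) -> List[int]:
    """Split `total` bytes into chunk sizes that never exceed `max_chunk`."""
    if total < 0 or max_chunk <= 0:
        raise ValueError("total must be >= 0 and max_chunk must be > 0")
    lengths = []
    remaining = total
    while remaining > 0:
        size = min(remaining, max_chunk)
        lengths.append(size)
        remaining -= size
    return lengths


if __name__ == "__main__":
    three_gib = 3 * 2**30
    sizes = chunk_lengths(three_gib)
    # Every chunk fits in an array that the JVM is willing to allocate.
    print(sizes, all(s < INT_MAX for s in sizes))
```

A block larger than 2 GB therefore ends up in several chunks, none of which asks for an array of length exactly Int.MaxValue.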

Unit tests via Jenkins; also ran a test with blocks over 2 GB on a cluster.

Closes #22705 from squito/SPARK-25704.

Authored-by: Imran Rashid

Signed-off-by: Imran Rashid

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/1001d231

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/1001d231

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/1001d231

Branch: refs/heads/branch-2.4

Commit: 1001d2314275c902da519725da266a23b537e33a

Parents: 9c0c6d4

Author: Imran Rashid

Authored: Fri Oct 19 12:52:41 2018 -0500

Committer: Imran Rashid

Committed: Fri Oct 19 12:54:08 2018 -0500

--

.../org/apache/spark/storage/BlockManager.scala | 6 ++

.../apache/spark/util/io/ChunkedByteBuffer.scala| 16 +---

2 files changed, 11 insertions(+), 11 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/1001d231/core/src/main/scala/org/apache/spark/storage/BlockManager.scala

--

diff --git a/core/src/main/scala/org/apache/spark/storage/BlockManager.scala

b/core/src/main/scala/org/apache/spark/storage/BlockManager.scala

index 0fe82ac..c01a453 100644

--- a/core/src/main/scala/org/apache/spark/storage/BlockManager.scala

+++ b/core/src/main/scala/org/apache/spark/storage/BlockManager.scala

@@ -133,8 +133,6 @@ private[spark] class BlockManager(

private[spark] val externalShuffleServiceEnabled =

conf.get(config.SHUFFLE_SERVICE_ENABLED)

- private val chunkSize =

-conf.getSizeAsBytes("spark.storage.memoryMapLimitForTests",

Int.MaxValue.toString).toInt

private val remoteReadNioBufferConversion =

conf.getBoolean("spark.network.remoteReadNioBufferConversion", false)

@@ -451,7 +449,7 @@ private[spark] class BlockManager(

new EncryptedBlockData(tmpFile, blockSize, conf,

key).toChunkedByteBuffer(allocator)

case None =>

-ChunkedByteBuffer.fromFile(tmpFile,

conf.get(config.MEMORY_MAP_LIMIT_FOR_TESTS).toInt)

+ChunkedByteBuffer.fromFile(tmpFile)

}

putBytes(blockId, buffer, level)(classTag)

tmpFile.delete()

@@ -797,7 +795,7 @@ private[spark] class BlockManager(

if (remoteReadNioBufferConversion) {

return Some(new ChunkedByteBuffer(data.nioByteBuffer()))

} else {

- return Some(ChunkedByteBuffer.fromManagedBuffer(data, chunkSize))

+ return Some(ChunkedByteBuffer.fromManagedBuffer(data))

}

}

logDebug(s"The value of block $blockId is null")

http://git-wip-us.apache.org/repos/asf/spark/blob/1001d231/core/src/main/scala/org/apache/spark/util/io/ChunkedByteBuffer.scala

--

diff --git

a/core/src/main/scala/org/apache/spark/util/io/ChunkedByteBuffer.scala

b/core/src/main/scala/org/apache/spark/util/io/ChunkedByteBuffer.scala

index 4aa8d45..9547cb4 100644

--- a/core/src/main/scala/org/apache/spark/util/io/ChunkedByteBuffer.scala

+++ b/core/src/main/scala/org/apache/spark/util/io/ChunkedByteBuffer.scala

@@ -30,6 +30,7 @@ import org.apache.spark.internal.config

import org.apache.spark.network.buffer.{FileSegmentManagedBuffer,

ManagedBuffer}

import org.apache.spark.network.util.{ByteArrayWritableChannel,

LimitedInputStream}

import org.apache.spark.storage.StorageUtils

+import org.apache.spark.unsafe.array.ByteArrayMethods

import org.apache.spark.util.Utils

/**

@@ -169,24 +170,25 @@ private[spark] class ChunkedByteBuffer(var chunks:

Array[ByteBuffer]) {

}

-object ChunkedByteBuffer {

+private[spark] object ChunkedByteBuffer {

+

+

// TODO eliminate this method if we switch BlockManager to getting

InputStreams

- def fromManagedBuffer(data: ManagedBuffer, maxChunkSize: Int):

ChunkedByteBuffer = {

+ def fromManagedBuffer(data: ManagedBuffer): ChunkedByteBuffer = {

data match {

case f: FileSegmentManagedBuffer =>

-fromFile(f.getFile, maxChunkSize, f.getOffset, f.getLength)

+fromFile(f.getFile, f.getOffset, f.getLength)

case other =>

new ChunkedByteBuffer(other.nioByteBuffer())

}

}

- def fromFile(file: File, maxChunkSize: Int): ChunkedByteBuffer = {

-fromFile(file, maxChunkSize, 0, file.length())

+ def fromFile(file: File): ChunkedByteBuffer = {

+fromFile(file, 0, file.length())

}

private def fromFile(

file: File,

- maxChunkSize: Int,

offset: Long,

length: Long):

spark git commit: [SPARK-25704][CORE] Allocate a bit less than Int.MaxValue

Repository: spark

Updated Branches:

refs/heads/master 130121711 -> 43717dee5

[SPARK-25704][CORE] Allocate a bit less than Int.MaxValue

JVMs don't let you allocate arrays of length exactly Int.MaxValue, so leave

a little extra room. This is necessary when reading blocks >2GB off

the network (for remote reads or for cache replication).

Unit tests via Jenkins; also ran a test with blocks over 2 GB on a cluster.

Closes #22705 from squito/SPARK-25704.

Authored-by: Imran Rashid

Signed-off-by: Imran Rashid

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/43717dee

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/43717dee

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/43717dee

Branch: refs/heads/master

Commit: 43717dee570dc41d71f0b27b8939f6297a029a02

Parents: 1301217

Author: Imran Rashid

Authored: Fri Oct 19 12:52:41 2018 -0500

Committer: Imran Rashid

Committed: Fri Oct 19 12:52:41 2018 -0500

--

.../org/apache/spark/storage/BlockManager.scala | 6 ++

.../apache/spark/util/io/ChunkedByteBuffer.scala| 16 +---

2 files changed, 11 insertions(+), 11 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/43717dee/core/src/main/scala/org/apache/spark/storage/BlockManager.scala

--

diff --git a/core/src/main/scala/org/apache/spark/storage/BlockManager.scala

b/core/src/main/scala/org/apache/spark/storage/BlockManager.scala

index 0fe82ac..c01a453 100644

--- a/core/src/main/scala/org/apache/spark/storage/BlockManager.scala

+++ b/core/src/main/scala/org/apache/spark/storage/BlockManager.scala

@@ -133,8 +133,6 @@ private[spark] class BlockManager(

private[spark] val externalShuffleServiceEnabled =

conf.get(config.SHUFFLE_SERVICE_ENABLED)

- private val chunkSize =

-conf.getSizeAsBytes("spark.storage.memoryMapLimitForTests",

Int.MaxValue.toString).toInt

private val remoteReadNioBufferConversion =

conf.getBoolean("spark.network.remoteReadNioBufferConversion", false)

@@ -451,7 +449,7 @@ private[spark] class BlockManager(

new EncryptedBlockData(tmpFile, blockSize, conf,

key).toChunkedByteBuffer(allocator)

case None =>

-ChunkedByteBuffer.fromFile(tmpFile,

conf.get(config.MEMORY_MAP_LIMIT_FOR_TESTS).toInt)

+ChunkedByteBuffer.fromFile(tmpFile)

}

putBytes(blockId, buffer, level)(classTag)

tmpFile.delete()

@@ -797,7 +795,7 @@ private[spark] class BlockManager(

if (remoteReadNioBufferConversion) {

return Some(new ChunkedByteBuffer(data.nioByteBuffer()))

} else {

- return Some(ChunkedByteBuffer.fromManagedBuffer(data, chunkSize))

+ return Some(ChunkedByteBuffer.fromManagedBuffer(data))

}

}

logDebug(s"The value of block $blockId is null")

http://git-wip-us.apache.org/repos/asf/spark/blob/43717dee/core/src/main/scala/org/apache/spark/util/io/ChunkedByteBuffer.scala

--

diff --git

a/core/src/main/scala/org/apache/spark/util/io/ChunkedByteBuffer.scala

b/core/src/main/scala/org/apache/spark/util/io/ChunkedByteBuffer.scala

index 4aa8d45..9547cb4 100644

--- a/core/src/main/scala/org/apache/spark/util/io/ChunkedByteBuffer.scala

+++ b/core/src/main/scala/org/apache/spark/util/io/ChunkedByteBuffer.scala

@@ -30,6 +30,7 @@ import org.apache.spark.internal.config

import org.apache.spark.network.buffer.{FileSegmentManagedBuffer,

ManagedBuffer}

import org.apache.spark.network.util.{ByteArrayWritableChannel,

LimitedInputStream}

import org.apache.spark.storage.StorageUtils

+import org.apache.spark.unsafe.array.ByteArrayMethods

import org.apache.spark.util.Utils

/**

@@ -169,24 +170,25 @@ private[spark] class ChunkedByteBuffer(var chunks:

Array[ByteBuffer]) {

}

-object ChunkedByteBuffer {

+private[spark] object ChunkedByteBuffer {

+

+

// TODO eliminate this method if we switch BlockManager to getting

InputStreams

- def fromManagedBuffer(data: ManagedBuffer, maxChunkSize: Int):

ChunkedByteBuffer = {

+ def fromManagedBuffer(data: ManagedBuffer): ChunkedByteBuffer = {

data match {

case f: FileSegmentManagedBuffer =>

-fromFile(f.getFile, maxChunkSize, f.getOffset, f.getLength)

+fromFile(f.getFile, f.getOffset, f.getLength)

case other =>

new ChunkedByteBuffer(other.nioByteBuffer())

}

}

- def fromFile(file: File, maxChunkSize: Int): ChunkedByteBuffer = {

-fromFile(file, maxChunkSize, 0, file.length())

+ def fromFile(file: File): ChunkedByteBuffer = {

+fromFile(file, 0, file.length())

}

private def fromFile(

file: File,

- maxChunkSize: Int,

offset: Long,

length: Long):

svn commit: r30165 - in /dev/spark/2.3.3-SNAPSHOT-2018_10_19_10_03-5cef11a-docs: ./ _site/ _site/api/ _site/api/R/ _site/api/java/ _site/api/java/lib/ _site/api/java/org/ _site/api/java/org/apache/ _s

Author: pwendell Date: Fri Oct 19 17:19:05 2018 New Revision: 30165 Log: Apache Spark 2.3.3-SNAPSHOT-2018_10_19_10_03-5cef11a docs [This commit notification would consist of 1443 parts, which exceeds the limit of 50 ones, so it was shortened to the summary.]

svn commit: r30164 - in /dev/spark/2.4.1-SNAPSHOT-2018_10_19_10_03-9c0c6d4-docs: ./ _site/ _site/api/ _site/api/R/ _site/api/java/ _site/api/java/lib/ _site/api/java/org/ _site/api/java/org/apache/ _s

Author: pwendell Date: Fri Oct 19 17:18:18 2018 New Revision: 30164 Log: Apache Spark 2.4.1-SNAPSHOT-2018_10_19_10_03-9c0c6d4 docs [This commit notification would consist of 1478 parts, which exceeds the limit of 50 ones, so it was shortened to the summary.]

spark git commit: fix security issue of zinc(update run-tests.py)

Repository: spark

Updated Branches:

refs/heads/master 9ad0f6ea8 -> 130121711

fix security issue of zinc(update run-tests.py)

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/13012171

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/13012171

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/13012171

Branch: refs/heads/master

Commit: 130121711c3258cc5cb6123379f2b4b419851c6e

Parents: 9ad0f6e

Author: Wenchen Fan

Authored: Sat Oct 20 00:21:22 2018 +0800

Committer: Wenchen Fan

Committed: Sat Oct 20 00:23:16 2018 +0800

--

dev/run-tests.py | 10 --

1 file changed, 10 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/13012171/dev/run-tests.py

--

diff --git a/dev/run-tests.py b/dev/run-tests.py

index 26045ee..7ec7334 100755

--- a/dev/run-tests.py

+++ b/dev/run-tests.py

@@ -249,15 +249,6 @@ def get_zinc_port():

return random.randrange(3030, 4030)

-def kill_zinc_on_port(zinc_port):

-"""

-Kill the Zinc process running on the given port, if one exists.

-"""

-cmd = "%s -P |grep %s | grep LISTEN | awk '{ print $2; }' | xargs kill"

-lsof_exe = which("lsof")

-subprocess.check_call(cmd % (lsof_exe if lsof_exe else "/usr/sbin/lsof", zinc_port), shell=True)

-

-

def exec_maven(mvn_args=()):

"""Will call Maven in the current directory with the list of mvn_args

passed

in and returns the subprocess for any further processing"""

@@ -267,7 +258,6 @@ def exec_maven(mvn_args=()):

zinc_flag = "-DzincPort=%s" % zinc_port

flags = [os.path.join(SPARK_HOME, "build", "mvn"), "--force", zinc_flag]

run_cmd(flags + mvn_args)

-kill_zinc_on_port(zinc_port)

def exec_sbt(sbt_args=()):


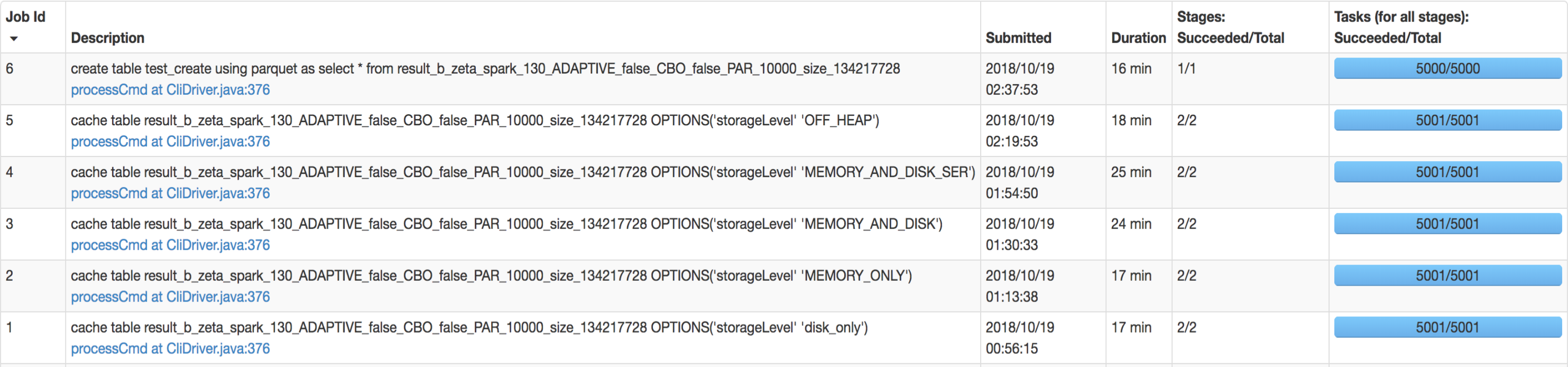

spark git commit: [SPARK-25269][SQL] SQL interface support specify StorageLevel when cache table

Repository: spark

Updated Branches:

refs/heads/master ac586bbb0 -> 9ad0f6ea8

[SPARK-25269][SQL] SQL interface support specify StorageLevel when cache table

## What changes were proposed in this pull request?

The SQL interface now supports specifying a `StorageLevel` when caching a table. The syntax is:

```sql

CACHE TABLE tableName OPTIONS('storageLevel' 'DISK_ONLY');

```

All supported `StorageLevel` values are listed here:

https://github.com/apache/spark/blob/eefdf9f9dd8afde49ad7d4e230e2735eb817ab0a/core/src/main/scala/org/apache/spark/storage/StorageLevel.scala#L172-L183
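
As a rough illustration of how the `storageLevel` option might be resolved, the following Python sketch normalizes the option key and validates the value. The function `resolve_storage_level`, the case-insensitive key matching, and the `MEMORY_AND_DISK` fallback are assumptions made for this example, not code from the patch. The level names are those accepted by `StorageLevel.fromString` in Spark 2.x; the linked source remains the authoritative list.

```python
# Level names accepted by StorageLevel.fromString in Spark 2.x.
SUPPORTED_LEVELS = frozenset({
    "NONE",
    "DISK_ONLY", "DISK_ONLY_2",
    "MEMORY_ONLY", "MEMORY_ONLY_2",
    "MEMORY_ONLY_SER", "MEMORY_ONLY_SER_2",
    "MEMORY_AND_DISK", "MEMORY_AND_DISK_2",
    "MEMORY_AND_DISK_SER", "MEMORY_AND_DISK_SER_2",
    "OFF_HEAP",
})

# Assumption: when no option is given, fall back to the usual cache default.
DEFAULT_LEVEL = "MEMORY_AND_DISK"


def resolve_storage_level(options):
    """Return the upper-cased storage level named in an OPTIONS map.

    Hypothetical helper: keys are matched case-insensitively and the
    value is upper-cased before validation.
    """
    normalized = {key.lower(): value for key, value in options.items()}
    raw = normalized.get("storagelevel")
    if raw is None:
        return DEFAULT_LEVEL
    level = raw.upper()
    if level not in SUPPORTED_LEVELS:
        raise ValueError(f"Invalid StorageLevel: {raw!r}")
    return level
```

With this sketch, `OPTIONS('storageLevel' 'disk_only')` would resolve to `DISK_ONLY`, and an unknown name would be rejected before any caching is attempted.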

## How was this patch tested?

Unit tests and manual tests.

Manual test configuration:

```

--executor-memory 15G --executor-cores 5 --num-executors 50

```

Data:

Input Size / Records: 1037.7 GB / 11732805788

Result:

Closes #22263 from wangyum/SPARK-25269.

Authored-by: Yuming Wang

Signed-off-by: Dongjoon Hyun

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/9ad0f6ea

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/9ad0f6ea

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/9ad0f6ea

Branch: refs/heads/master

Commit: 9ad0f6ea89435391ec16e436bc4c4d5bf6b68493

Parents: ac586bb

Author: Yuming Wang

Authored: Fri Oct 19 09:15:55 2018 -0700

Committer: Dongjoon Hyun

Committed: Fri Oct 19 09:15:55 2018 -0700

--

.../apache/spark/sql/catalyst/parser/SqlBase.g4 | 3 +-

.../spark/sql/execution/SparkSqlParser.scala| 3 +-

.../spark/sql/execution/command/cache.scala | 23 +++-

.../org/apache/spark/sql/CachedTableSuite.scala | 60

.../apache/spark/sql/hive/test/TestHive.scala | 2 +-

5 files changed, 86 insertions(+), 5 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/9ad0f6ea/sql/catalyst/src/main/antlr4/org/apache/spark/sql/catalyst/parser/SqlBase.g4

--

diff --git

a/sql/catalyst/src/main/antlr4/org/apache/spark/sql/catalyst/parser/SqlBase.g4

b/sql/catalyst/src/main/antlr4/org/apache/spark/sql/catalyst/parser/SqlBase.g4

index 0569986..e2d34d1 100644

---

a/sql/catalyst/src/main/antlr4/org/apache/spark/sql/catalyst/parser/SqlBase.g4

+++

b/sql/catalyst/src/main/antlr4/org/apache/spark/sql/catalyst/parser/SqlBase.g4

@@ -162,7 +162,8 @@ statement

tableIdentifier partitionSpec? describeColName?

#describeTable

| REFRESH TABLE tableIdentifier

#refreshTable

| REFRESH (STRING | .*?)

#refreshResource

-| CACHE LAZY? TABLE tableIdentifier (AS? query)?

#cacheTable

+| CACHE LAZY? TABLE tableIdentifier

+(OPTIONS options=tablePropertyList)? (AS? query)?

#cacheTable

| UNCACHE TABLE (IF EXISTS)? tableIdentifier

#uncacheTable

| CLEAR CACHE

#clearCache

| LOAD DATA LOCAL? INPATH path=STRING OVERWRITE? INTO TABLE

http://git-wip-us.apache.org/repos/asf/spark/blob/9ad0f6ea/sql/core/src/main/scala/org/apache/spark/sql/execution/SparkSqlParser.scala

--

diff --git

a/sql/core/src/main/scala/org/apache/spark/sql/execution/SparkSqlParser.scala

b/sql/core/src/main/scala/org/apache/spark/sql/execution/SparkSqlParser.scala

index 4ed14d3..364efea 100644

---

a/sql/core/src/main/scala/org/apache/spark/sql/execution/SparkSqlParser.scala

+++

b/sql/core/src/main/scala/org/apache/spark/sql/execution/SparkSqlParser.scala

@@ -282,7 +282,8 @@ class SparkSqlAstBuilder(conf: SQLConf) extends

AstBuilder(conf) {

throw new ParseException(s"It is not allowed to add database prefix

`$database` to " +

s"the table name in CACHE TABLE AS SELECT", ctx)

}

-CacheTableCommand(tableIdent, query, ctx.LAZY != null)

+val options =

Option(ctx.options).map(visitPropertyKeyValues).getOrElse(Map.empty)

+CacheTableCommand(tableIdent, query, ctx.LAZY != null, options)

}

/**

http://git-wip-us.apache.org/repos/asf/spark/blob/9ad0f6ea/sql/core/src/main/scala/org/apache/spark/sql/execution/command/cache.scala

--

diff --git

a/sql/core/src/main/scala/org/apache/spark/sql/execution/command/cache.scala

b/sql/core/src/main/scala/org/apache/spark/sql/execution/command/cache.scala

index 6b00426..728604a 100644

--- a/sql/core/src/main/scala/org/apache/spark/sql/execution/command/cache.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/execution/command/cache.scala

@@ -17,16 +17,21 @@

package org.apache.spark.sql.execution.command

+import java.util.Locale

svn commit: r30163 - in /dev/spark/3.0.0-SNAPSHOT-2018_10_19_08_03-ec1fafe-docs: ./ _site/ _site/api/ _site/api/R/ _site/api/java/ _site/api/java/lib/ _site/api/java/org/ _site/api/java/org/apache/ _s

Author: pwendell Date: Fri Oct 19 15:17:35 2018 New Revision: 30163 Log: Apache Spark 3.0.0-SNAPSHOT-2018_10_19_08_03-ec1fafe docs [This commit notification would consist of 1484 parts, which exceeds the limit of 50 ones, so it was shortened to the summary.]

[spark] Git Push Summary

Repository: spark Updated Tags: refs/tags/v2.4.0-rc4 [deleted] 1ff8dd424

[2/2] spark git commit: Preparing development version 2.4.1-SNAPSHOT

Preparing development version 2.4.1-SNAPSHOT

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/9c0c6d4d

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/9c0c6d4d

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/9c0c6d4d

Branch: refs/heads/branch-2.4

Commit: 9c0c6d4d5039267be35e564d8d6712318d557317

Parents: 1ff8dd4

Author: Wenchen Fan

Authored: Fri Oct 19 14:22:04 2018 +

Committer: Wenchen Fan

Committed: Fri Oct 19 14:22:04 2018 +

--

R/pkg/DESCRIPTION | 2 +-

assembly/pom.xml | 2 +-

common/kvstore/pom.xml | 2 +-

common/network-common/pom.xml | 2 +-

common/network-shuffle/pom.xml | 2 +-

common/network-yarn/pom.xml| 2 +-

common/sketch/pom.xml | 2 +-

common/tags/pom.xml| 2 +-

common/unsafe/pom.xml | 2 +-

core/pom.xml | 2 +-

docs/_config.yml | 4 ++--

examples/pom.xml | 2 +-

external/avro/pom.xml | 2 +-

external/docker-integration-tests/pom.xml | 2 +-

external/flume-assembly/pom.xml| 2 +-

external/flume-sink/pom.xml| 2 +-

external/flume/pom.xml | 2 +-

external/kafka-0-10-assembly/pom.xml | 2 +-

external/kafka-0-10-sql/pom.xml| 2 +-

external/kafka-0-10/pom.xml| 2 +-

external/kafka-0-8-assembly/pom.xml| 2 +-

external/kafka-0-8/pom.xml | 2 +-

external/kinesis-asl-assembly/pom.xml | 2 +-

external/kinesis-asl/pom.xml | 2 +-

external/spark-ganglia-lgpl/pom.xml| 2 +-

graphx/pom.xml | 2 +-

hadoop-cloud/pom.xml | 2 +-

launcher/pom.xml | 2 +-

mllib-local/pom.xml| 2 +-

mllib/pom.xml | 2 +-

pom.xml| 2 +-

python/pyspark/version.py | 2 +-

repl/pom.xml | 2 +-

resource-managers/kubernetes/core/pom.xml | 2 +-

resource-managers/kubernetes/integration-tests/pom.xml | 2 +-

resource-managers/mesos/pom.xml| 2 +-

resource-managers/yarn/pom.xml | 2 +-

sql/catalyst/pom.xml | 2 +-

sql/core/pom.xml | 2 +-

sql/hive-thriftserver/pom.xml | 2 +-

sql/hive/pom.xml | 2 +-

streaming/pom.xml | 2 +-

tools/pom.xml | 2 +-

43 files changed, 44 insertions(+), 44 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/9c0c6d4d/R/pkg/DESCRIPTION

--

diff --git a/R/pkg/DESCRIPTION b/R/pkg/DESCRIPTION

index f52d785..714b6f1 100644

--- a/R/pkg/DESCRIPTION

+++ b/R/pkg/DESCRIPTION

@@ -1,6 +1,6 @@

Package: SparkR

Type: Package

-Version: 2.4.0

+Version: 2.4.1

Title: R Frontend for Apache Spark

Description: Provides an R Frontend for Apache Spark.

Authors@R: c(person("Shivaram", "Venkataraman", role = c("aut", "cre"),

http://git-wip-us.apache.org/repos/asf/spark/blob/9c0c6d4d/assembly/pom.xml

--

diff --git a/assembly/pom.xml b/assembly/pom.xml

index 63ab510..ee0de73 100644

--- a/assembly/pom.xml

+++ b/assembly/pom.xml

@@ -21,7 +21,7 @@

org.apache.spark

spark-parent_2.11

-2.4.0

+2.4.1-SNAPSHOT

../pom.xml

http://git-wip-us.apache.org/repos/asf/spark/blob/9c0c6d4d/common/kvstore/pom.xml

--

diff --git a/common/kvstore/pom.xml b/common/kvstore/pom.xml

index b10e118..b89e0fe 100644

--- a/common/kvstore/pom.xml

+++ b/common/kvstore/pom.xml

@@ -22,7 +22,7 @@

org.apache.spark

spark-parent_2.11

-2.4.0

+2.4.1-SNAPSHOT

../../pom.xml

http://git-wip-us.apache.org/repos/asf/spark/blob/9c0c6d4d/common/network-common/pom.xml

--

diff --git a/common/network-common/pom.xml

[spark] Git Push Summary

Repository: spark Updated Tags: refs/tags/v2.4.0-rc4 [created] 1ff8dd424

spark git commit: fix security issue of zinc

Repository: spark

Updated Branches:

refs/heads/branch-2.2 2e3b923e0 -> d6542fa3f

fix security issue of zinc

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/d6542fa3

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/d6542fa3

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/d6542fa3

Branch: refs/heads/branch-2.2

Commit: d6542fa3f02587712d26e4e191353362a4031794

Parents: 2e3b923

Author: Wenchen Fan

Authored: Fri Oct 19 21:39:58 2018 +0800

Committer: Wenchen Fan

Committed: Fri Oct 19 21:40:40 2018 +0800

--

build/mvn | 31 ---

1 file changed, 24 insertions(+), 7 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/d6542fa3/build/mvn

--

diff --git a/build/mvn b/build/mvn

index 1e393c3..926027e 100755

--- a/build/mvn

+++ b/build/mvn

@@ -130,8 +130,17 @@ if [ "$1" == "--force" ]; then

shift

fi

+if [ "$1" == "--zinc" ]; then

+ echo "Using zinc for incremental compilation. Be sure you are aware of the implications of "

+ echo "running this server process on your machine"

+ USE_ZINC=1

+ shift

+fi

+

# Install the proper version of Scala, Zinc and Maven for the build

-install_zinc

+if [ -n "${USE_ZINC}" ]; then

+ install_zinc

+fi

install_scala

install_mvn

@@ -140,12 +149,15 @@ cd "${_CALLING_DIR}"

# Now that zinc is ensured to be installed, check its status and, if its

# not running or just installed, start it

-if [ -n "${ZINC_INSTALL_FLAG}" -o -z "`"${ZINC_BIN}" -status -port ${ZINC_PORT}`" ]; then

- export ZINC_OPTS=${ZINC_OPTS:-"$_COMPILE_JVM_OPTS"}

- "${ZINC_BIN}" -shutdown -port ${ZINC_PORT}

- "${ZINC_BIN}" -start -port ${ZINC_PORT} \

--scala-compiler "${SCALA_COMPILER}" \

--scala-library "${SCALA_LIBRARY}" &>/dev/null

+if [ -n "${USE_ZINC}" ]; then

+ if [ -n "${ZINC_INSTALL_FLAG}" -o -z "`"${ZINC_BIN}" -status -port ${ZINC_PORT}`" ]; then

+export ZINC_OPTS=${ZINC_OPTS:-"$_COMPILE_JVM_OPTS"}

+"${ZINC_BIN}" -shutdown -port ${ZINC_PORT}

+"${ZINC_BIN}" -start -port ${ZINC_PORT} -server 127.0.0.1 \

+ -idle-timeout 30m \

+ -scala-compiler "${SCALA_COMPILER}" \

+ -scala-library "${SCALA_LIBRARY}" &>/dev/null

+ fi

fi

# Set any `mvn` options if not already present

@@ -155,3 +167,8 @@ echo "Using \`mvn\` from path: $MVN_BIN" 1>&2

# Last, call the `mvn` command as usual

${MVN_BIN} -DzincPort=${ZINC_PORT} "$@"

+

+if [ -n "${USE_ZINC}" ]; then

+ # Try to shut down zinc explicitly

+ "${ZINC_BIN}" -shutdown -port ${ZINC_PORT}

+fi
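
The behavior introduced by this patch can be summarized in a short Python sketch: zinc is started only when `--zinc` is passed as the leading argument, and the server is bound to `127.0.0.1` with a 30-minute idle timeout. The helper names `split_zinc_flag` and `zinc_start_command` are hypothetical and exist only for this illustration; the zinc options are copied from the diff above.

```python
def split_zinc_flag(args):
    """Consume a leading --zinc flag.

    Returns (use_zinc, remaining_args), mirroring the opt-in check
    that the patch adds to build/mvn.
    """
    if args and args[0] == "--zinc":
        return True, list(args[1:])
    return False, list(args)


def zinc_start_command(zinc_bin, port, scala_compiler, scala_library):
    """Build the argument list used to start zinc on the loopback interface."""
    return [
        zinc_bin,
        "-start",
        "-port", str(port),
        "-server", "127.0.0.1",    # listen on loopback only
        "-idle-timeout", "30m",    # shut down after 30 idle minutes
        "-scala-compiler", scala_compiler,
        "-scala-library", scala_library,
    ]
```

Keeping the server opt-in and restricted to loopback means that a plain `build/mvn` invocation no longer leaves a compile server listening on the machine.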


spark git commit: fix security issue of zinc

Repository: spark

Updated Branches:

refs/heads/branch-2.3 353d32804 -> 5cef11acc

fix security issue of zinc

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/5cef11ac

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/5cef11ac

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/5cef11ac

Branch: refs/heads/branch-2.3

Commit: 5cef11acc0770ca49a0487d6543eb81022b7415d

Parents: 353d328

Author: Wenchen Fan

Authored: Fri Oct 19 21:39:58 2018 +0800

Committer: Wenchen Fan

Committed: Fri Oct 19 21:39:58 2018 +0800

--

build/mvn | 31 ---

1 file changed, 24 insertions(+), 7 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/5cef11ac/build/mvn

--

diff --git a/build/mvn b/build/mvn

index efa4f93..9c0d1a7 100755

--- a/build/mvn

+++ b/build/mvn

@@ -130,8 +130,17 @@ if [ "$1" == "--force" ]; then

shift

fi

+if [ "$1" == "--zinc" ]; then

+ echo "Using zinc for incremental compilation. Be sure you are aware of the implications of "

+ echo "running this server process on your machine"

+ USE_ZINC=1

+ shift

+fi

+

# Install the proper version of Scala, Zinc and Maven for the build

-install_zinc

+if [ -n "${USE_ZINC}" ]; then

+ install_zinc

+fi

install_scala

install_mvn

@@ -140,12 +149,15 @@ cd "${_CALLING_DIR}"

# Now that zinc is ensured to be installed, check its status and, if its

# not running or just installed, start it

-if [ -n "${ZINC_INSTALL_FLAG}" -o -z "`"${ZINC_BIN}" -status -port ${ZINC_PORT}`" ]; then

- export ZINC_OPTS=${ZINC_OPTS:-"$_COMPILE_JVM_OPTS"}

- "${ZINC_BIN}" -shutdown -port ${ZINC_PORT}

- "${ZINC_BIN}" -start -port ${ZINC_PORT} \

--scala-compiler "${SCALA_COMPILER}" \

--scala-library "${SCALA_LIBRARY}" &>/dev/null

+if [ -n "${USE_ZINC}" ]; then

+ if [ -n "${ZINC_INSTALL_FLAG}" -o -z "`"${ZINC_BIN}" -status -port ${ZINC_PORT}`" ]; then

+export ZINC_OPTS=${ZINC_OPTS:-"$_COMPILE_JVM_OPTS"}

+"${ZINC_BIN}" -shutdown -port ${ZINC_PORT}

+"${ZINC_BIN}" -start -port ${ZINC_PORT} -server 127.0.0.1 \

+ -idle-timeout 30m \

+ -scala-compiler "${SCALA_COMPILER}" \

+ -scala-library "${SCALA_LIBRARY}" &>/dev/null

+ fi

fi

# Set any `mvn` options if not already present

@@ -155,3 +167,8 @@ echo "Using \`mvn\` from path: $MVN_BIN" 1>&2

# Last, call the `mvn` command as usual

${MVN_BIN} -DzincPort=${ZINC_PORT} "$@"

+

+if [ -n "${USE_ZINC}" ]; then

+ # Try to shut down zinc explicitly

+ "${ZINC_BIN}" -shutdown -port ${ZINC_PORT}

+fi


spark git commit: fix security issue of zinc

Repository: spark

Updated Branches:

refs/heads/branch-2.4 6a06b8cce -> 8926c4a62

fix security issue of zinc

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/8926c4a6

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/8926c4a6

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/8926c4a6

Branch: refs/heads/branch-2.4

Commit: 8926c4a6237ab059875aa7502d6417317d58381a

Parents: 6a06b8c

Author: Wenchen Fan

Authored: Fri Oct 19 21:34:35 2018 +0800

Committer: Wenchen Fan

Committed: Fri Oct 19 21:34:35 2018 +0800

--

build/mvn | 31 ---

1 file changed, 24 insertions(+), 7 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/8926c4a6/build/mvn

--

diff --git a/build/mvn b/build/mvn

index 2487b81..0289ef3 100755

--- a/build/mvn

+++ b/build/mvn

@@ -139,8 +139,17 @@ if [ "$1" == "--force" ]; then

shift

fi

+if [ "$1" == "--zinc" ]; then

+ echo "Using zinc for incremental compilation. Be sure you are aware of the implications of "

+ echo "running this server process on your machine"

+ USE_ZINC=1

+ shift

+fi

+

# Install the proper version of Scala, Zinc and Maven for the build

-install_zinc

+if [ -n "${USE_ZINC}" ]; then

+ install_zinc

+fi

install_scala

install_mvn

@@ -149,12 +158,15 @@ cd "${_CALLING_DIR}"

# Now that zinc is ensured to be installed, check its status and, if its

# not running or just installed, start it

-if [ -n "${ZINC_INSTALL_FLAG}" -o -z "`"${ZINC_BIN}" -status -port ${ZINC_PORT}`" ]; then

- export ZINC_OPTS=${ZINC_OPTS:-"$_COMPILE_JVM_OPTS"}

- "${ZINC_BIN}" -shutdown -port ${ZINC_PORT}

- "${ZINC_BIN}" -start -port ${ZINC_PORT} \

--scala-compiler "${SCALA_COMPILER}" \

--scala-library "${SCALA_LIBRARY}" &>/dev/null

+if [ -n "${USE_ZINC}" ]; then

+ if [ -n "${ZINC_INSTALL_FLAG}" -o -z "`"${ZINC_BIN}" -status -port ${ZINC_PORT}`" ]; then

+export ZINC_OPTS=${ZINC_OPTS:-"$_COMPILE_JVM_OPTS"}

+"${ZINC_BIN}" -shutdown -port ${ZINC_PORT}

+"${ZINC_BIN}" -start -port ${ZINC_PORT} -server 127.0.0.1 \

+ -idle-timeout 30m \

+ -scala-compiler "${SCALA_COMPILER}" \

+ -scala-library "${SCALA_LIBRARY}" &>/dev/null

+ fi

fi

# Set any `mvn` options if not already present

@@ -164,3 +176,8 @@ echo "Using \`mvn\` from path: $MVN_BIN" 1>&2

# Last, call the `mvn` command as usual

"${MVN_BIN}" -DzincPort=${ZINC_PORT} "$@"

+

+if [ -n "${USE_ZINC}" ]; then

+ # Try to shut down zinc explicitly

+ "${ZINC_BIN}" -shutdown -port ${ZINC_PORT}

+fi


spark git commit: fix security issue of zinc

Repository: spark

Updated Branches:

refs/heads/master f38594fc5 -> ec1fafe3e

fix security issue of zinc

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/ec1fafe3

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/ec1fafe3

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/ec1fafe3

Branch: refs/heads/master

Commit: ec1fafe3e78a40372975dfacb2516fe24bdfa2d2

Parents: f38594f

Author: Wenchen Fan

Authored: Fri Oct 19 21:33:11 2018 +0800

Committer: Wenchen Fan

Committed: Fri Oct 19 21:33:11 2018 +0800

--

build/mvn | 31 ---

1 file changed, 24 insertions(+), 7 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/ec1fafe3/build/mvn

--

diff --git a/build/mvn b/build/mvn

index 2487b81..0289ef3 100755

--- a/build/mvn

+++ b/build/mvn

@@ -139,8 +139,17 @@ if [ "$1" == "--force" ]; then

shift

fi

+if [ "$1" == "--zinc" ]; then

+ echo "Using zinc for incremental compilation. Be sure you are aware of the implications of "

+ echo "running this server process on your machine"

+ USE_ZINC=1

+ shift

+fi

+

# Install the proper version of Scala, Zinc and Maven for the build

-install_zinc

+if [ -n "${USE_ZINC}" ]; then

+ install_zinc

+fi

install_scala

install_mvn

@@ -149,12 +158,15 @@ cd "${_CALLING_DIR}"

# Now that zinc is ensured to be installed, check its status and, if its

# not running or just installed, start it

-if [ -n "${ZINC_INSTALL_FLAG}" -o -z "`"${ZINC_BIN}" -status -port ${ZINC_PORT}`" ]; then

- export ZINC_OPTS=${ZINC_OPTS:-"$_COMPILE_JVM_OPTS"}

- "${ZINC_BIN}" -shutdown -port ${ZINC_PORT}

- "${ZINC_BIN}" -start -port ${ZINC_PORT} \

--scala-compiler "${SCALA_COMPILER}" \

--scala-library "${SCALA_LIBRARY}" &>/dev/null

+if [ -n "${USE_ZINC}" ]; then

+ if [ -n "${ZINC_INSTALL_FLAG}" -o -z "`"${ZINC_BIN}" -status -port ${ZINC_PORT}`" ]; then

+export ZINC_OPTS=${ZINC_OPTS:-"$_COMPILE_JVM_OPTS"}

+"${ZINC_BIN}" -shutdown -port ${ZINC_PORT}

+"${ZINC_BIN}" -start -port ${ZINC_PORT} -server 127.0.0.1 \

+ -idle-timeout 30m \

+ -scala-compiler "${SCALA_COMPILER}" \

+ -scala-library "${SCALA_LIBRARY}" &>/dev/null

+ fi

fi

# Set any `mvn` options if not already present

@@ -164,3 +176,8 @@ echo "Using \`mvn\` from path: $MVN_BIN" 1>&2

# Last, call the `mvn` command as usual

"${MVN_BIN}" -DzincPort=${ZINC_PORT} "$@"

+

+if [ -n "${USE_ZINC}" ]; then

+ # Try to shut down zinc explicitly

+ "${ZINC_BIN}" -shutdown -port ${ZINC_PORT}

+fi


spark git commit: [SPARK-25768][SQL] fix constant argument expecting UDAFs

Repository: spark

Updated Branches:

refs/heads/branch-2.3 61b301cc7 -> 353d32804

[SPARK-25768][SQL] fix constant argument expecting UDAFs

## What changes were proposed in this pull request?

Without this PR, some UDAFs such as `GenericUDAFPercentileApprox` can throw an exception because they expect a constant parameter (object inspector) for a particular argument.

The exception is thrown because the `toPrettySQL` call in the `ResolveAliases` analyzer rule transforms a `Literal` parameter into a `PrettyAttribute`, which is then converted to an `ObjectInspector` instead of a `ConstantObjectInspector`.

The exception comes from the `getEvaluator` method of `GenericUDAFPercentileApprox`, which should not be called at all during the `toPrettySQL` transformation. It is called because of the non-lazy fields in `HiveUDAFFunction`.

This PR makes all fields of `HiveUDAFFunction` lazy.
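
The effect of making the fields lazy can be illustrated with a small Python sketch. The classes and the `init_evaluator` helper below are hypothetical stand-ins, not Spark or Hive APIs. `functools.cached_property` (Python 3.8+) plays the role of Scala's `lazy val`.

```python
from functools import cached_property


def init_evaluator(argument_is_constant):
    """Hypothetical stand-in for evaluator initialization.

    It fails when the argument is not a constant, as a UDAF that
    requires a constant parameter would.
    """
    if not argument_is_constant:
        raise ValueError("expected a constant argument")
    return "inspector"


class EagerFunction:
    """Eager field: initialization runs as soon as the object is built."""

    def __init__(self, argument_is_constant):
        self.argument_is_constant = argument_is_constant
        self.inspector = init_evaluator(argument_is_constant)


class LazyFunction:
    """Lazy field: initialization runs only on first access."""

    def __init__(self, argument_is_constant):
        self.argument_is_constant = argument_is_constant

    @cached_property
    def inspector(self):
        return init_evaluator(self.argument_is_constant)
```

Constructing `EagerFunction(False)` raises immediately, whereas `LazyFunction(False)` can be built and copied freely. The error surfaces only if `inspector` is actually read, which a purely cosmetic transformation never needs to do.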

## How was this patch tested?

Added a new unit test.

Closes #22766 from peter-toth/SPARK-25768.

Authored-by: Peter Toth

Signed-off-by: Wenchen Fan

(cherry picked from commit f38594fc561208e17af80d17acf8da362b91fca4)

Signed-off-by: Wenchen Fan

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/353d3280

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/353d3280

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/353d3280

Branch: refs/heads/branch-2.3

Commit: 353d328041397762e12acf915967cafab5dcdade

Parents: 61b301c

Author: Peter Toth

Authored: Fri Oct 19 21:17:14 2018 +0800

Committer: Wenchen Fan

Committed: Fri Oct 19 21:18:36 2018 +0800

--

.../org/apache/spark/sql/hive/hiveUDFs.scala| 53 +++-

.../spark/sql/hive/execution/HiveUDFSuite.scala | 14 ++

2 files changed, 42 insertions(+), 25 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/353d3280/sql/hive/src/main/scala/org/apache/spark/sql/hive/hiveUDFs.scala

--

diff --git a/sql/hive/src/main/scala/org/apache/spark/sql/hive/hiveUDFs.scala

b/sql/hive/src/main/scala/org/apache/spark/sql/hive/hiveUDFs.scala

index 68af99e..4a84509 100644

--- a/sql/hive/src/main/scala/org/apache/spark/sql/hive/hiveUDFs.scala

+++ b/sql/hive/src/main/scala/org/apache/spark/sql/hive/hiveUDFs.scala

@@ -340,39 +340,40 @@ private[hive] case class HiveUDAFFunction(

resolver.getEvaluator(parameterInfo)

}

- // The UDAF evaluator used to consume raw input rows and produce partial

aggregation results.

- @transient

- private lazy val partial1ModeEvaluator = newEvaluator()

+ private case class HiveEvaluator(

+ evaluator: GenericUDAFEvaluator,

+ objectInspector: ObjectInspector)

+ // The UDAF evaluator used to consume raw input rows and produce partial

aggregation results.

// Hive `ObjectInspector` used to inspect partial aggregation results.

@transient

- private val partialResultInspector = partial1ModeEvaluator.init(

-GenericUDAFEvaluator.Mode.PARTIAL1,

-inputInspectors

- )

+ private lazy val partial1HiveEvaluator = {

+val evaluator = newEvaluator()

+HiveEvaluator(evaluator,

evaluator.init(GenericUDAFEvaluator.Mode.PARTIAL1, inputInspectors))

+ }

// The UDAF evaluator used to merge partial aggregation results.

@transient

private lazy val partial2ModeEvaluator = {

val evaluator = newEvaluator()

-evaluator.init(GenericUDAFEvaluator.Mode.PARTIAL2,

Array(partialResultInspector))

+evaluator.init(GenericUDAFEvaluator.Mode.PARTIAL2,

Array(partial1HiveEvaluator.objectInspector))

evaluator

}

// Spark SQL data type of partial aggregation results

@transient

- private lazy val partialResultDataType =

inspectorToDataType(partialResultInspector)

+ private lazy val partialResultDataType =

+inspectorToDataType(partial1HiveEvaluator.objectInspector)

// The UDAF evaluator used to compute the final result from a partial

aggregation result objects.

- @transient

- private lazy val finalModeEvaluator = newEvaluator()

-

// Hive `ObjectInspector` used to inspect the final aggregation result

object.

@transient

- private val returnInspector = finalModeEvaluator.init(

-GenericUDAFEvaluator.Mode.FINAL,

-Array(partialResultInspector)

- )

+ private lazy val finalHiveEvaluator = {

+val evaluator = newEvaluator()

+HiveEvaluator(

+ evaluator,

+ evaluator.init(GenericUDAFEvaluator.Mode.FINAL,

Array(partial1HiveEvaluator.objectInspector)))

+ }

// Wrapper functions used to wrap Spark SQL input arguments into Hive

specific format.

@transient

@@ -381,7 +382,7 @@ private[hive] case class HiveUDAFFunction(

// Unwrapper function used to unwrap final aggregation result objects

returned by Hive UDAFs into

// Spark SQL specific format.

@transient

- private lazy val

spark git commit: [SPARK-25768][SQL] fix constant argument expecting UDAFs

Repository: spark

Updated Branches:

refs/heads/branch-2.4 df60d9f34 -> 6a06b8cce

[SPARK-25768][SQL] fix constant argument expecting UDAFs

## What changes were proposed in this pull request?

Without this PR, some UDAFs such as `GenericUDAFPercentileApprox` can throw an exception because they expect a constant parameter (object inspector) for a particular argument.

The exception is thrown because the `toPrettySQL` call in the `ResolveAliases` analyzer rule transforms a `Literal` parameter into a `PrettyAttribute`, which is then converted to an `ObjectInspector` instead of a `ConstantObjectInspector`.

The exception comes from the `getEvaluator` method of `GenericUDAFPercentileApprox`, which should not be called at all during the `toPrettySQL` transformation. It is called because of the non-lazy fields in `HiveUDAFFunction`.

This PR makes all fields of `HiveUDAFFunction` lazy.

## How was this patch tested?

Added a new unit test.

Closes #22766 from peter-toth/SPARK-25768.

Authored-by: Peter Toth

Signed-off-by: Wenchen Fan

(cherry picked from commit f38594fc561208e17af80d17acf8da362b91fca4)

Signed-off-by: Wenchen Fan

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/6a06b8cc

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/6a06b8cc

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/6a06b8cc

Branch: refs/heads/branch-2.4

Commit: 6a06b8ccef57017172666cd004d5eb6be994d19e

Parents: df60d9f

Author: Peter Toth

Authored: Fri Oct 19 21:17:14 2018 +0800

Committer: Wenchen Fan

Committed: Fri Oct 19 21:17:49 2018 +0800

--

.../org/apache/spark/sql/hive/hiveUDFs.scala| 53 +++-

.../spark/sql/hive/execution/HiveUDFSuite.scala | 14 ++

2 files changed, 42 insertions(+), 25 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/6a06b8cc/sql/hive/src/main/scala/org/apache/spark/sql/hive/hiveUDFs.scala

--

diff --git a/sql/hive/src/main/scala/org/apache/spark/sql/hive/hiveUDFs.scala

b/sql/hive/src/main/scala/org/apache/spark/sql/hive/hiveUDFs.scala

index 68af99e..4a84509 100644

--- a/sql/hive/src/main/scala/org/apache/spark/sql/hive/hiveUDFs.scala

+++ b/sql/hive/src/main/scala/org/apache/spark/sql/hive/hiveUDFs.scala

@@ -340,39 +340,40 @@ private[hive] case class HiveUDAFFunction(

resolver.getEvaluator(parameterInfo)

}

- // The UDAF evaluator used to consume raw input rows and produce partial

aggregation results.

- @transient

- private lazy val partial1ModeEvaluator = newEvaluator()

+ private case class HiveEvaluator(

+ evaluator: GenericUDAFEvaluator,

+ objectInspector: ObjectInspector)

+ // The UDAF evaluator used to consume raw input rows and produce partial aggregation results.

// Hive `ObjectInspector` used to inspect partial aggregation results.

@transient

- private val partialResultInspector = partial1ModeEvaluator.init(

-GenericUDAFEvaluator.Mode.PARTIAL1,

-inputInspectors

- )

+ private lazy val partial1HiveEvaluator = {

+val evaluator = newEvaluator()

+HiveEvaluator(evaluator, evaluator.init(GenericUDAFEvaluator.Mode.PARTIAL1, inputInspectors))

+ }

// The UDAF evaluator used to merge partial aggregation results.

@transient

private lazy val partial2ModeEvaluator = {

val evaluator = newEvaluator()

-evaluator.init(GenericUDAFEvaluator.Mode.PARTIAL2, Array(partialResultInspector))

+evaluator.init(GenericUDAFEvaluator.Mode.PARTIAL2, Array(partial1HiveEvaluator.objectInspector))

evaluator

}

// Spark SQL data type of partial aggregation results

@transient

- private lazy val partialResultDataType = inspectorToDataType(partialResultInspector)

+ private lazy val partialResultDataType =

+inspectorToDataType(partial1HiveEvaluator.objectInspector)

// The UDAF evaluator used to compute the final result from a partial aggregation result objects.

- @transient

- private lazy val finalModeEvaluator = newEvaluator()

-

// Hive `ObjectInspector` used to inspect the final aggregation result object.

@transient

- private val returnInspector = finalModeEvaluator.init(

-GenericUDAFEvaluator.Mode.FINAL,

-Array(partialResultInspector)

- )

+ private lazy val finalHiveEvaluator = {

+val evaluator = newEvaluator()

+HiveEvaluator(

+ evaluator,

+ evaluator.init(GenericUDAFEvaluator.Mode.FINAL, Array(partial1HiveEvaluator.objectInspector)))

+ }

// Wrapper functions used to wrap Spark SQL input arguments into Hive specific format.

@transient

@@ -381,7 +382,7 @@ private[hive] case class HiveUDAFFunction(

// Unwrapper function used to unwrap final aggregation result objects returned by Hive UDAFs into

// Spark SQL specific format.

@transient

- private lazy val

spark git commit: [SPARK-25768][SQL] fix constant argument expecting UDAFs

Repository: spark

Updated Branches:

refs/heads/master e8167768c -> f38594fc5

[SPARK-25768][SQL] fix constant argument expecting UDAFs

## What changes were proposed in this pull request?

Without this PR, some UDAFs such as `GenericUDAFPercentileApprox` can throw an

exception because they expect a constant parameter (object inspector) as a

particular argument.

The exception is thrown because the `toPrettySQL` call in the `ResolveAliases`

analyzer rule transforms a `Literal` parameter into a `PrettyAttribute`, which is

then transformed into an `ObjectInspector` instead of a `ConstantObjectInspector`.

The exception comes from the `getEvaluator` method of `GenericUDAFPercentileApprox`,

which shouldn't actually be called during the `toPrettySQL` transformation. It is

called because of the non-lazy fields in `HiveUDAFFunction`.

This PR makes all fields of `HiveUDAFFunction` lazy.
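
The diff for this commit bundles each evaluator with the `ObjectInspector` returned by its `init` call in a `HiveEvaluator` case class held in a single lazy field. A minimal sketch of that pattern follows, using hypothetical stand-ins (`Evaluator`, `EvaluatorWithInspector`, `AggregateFunction`, and string-valued inspectors) rather than the real Hive classes:

```scala
object PairedLazyInitSketch {
  // Hypothetical stand-in for an evaluator whose init() returns an inspector.
  class Evaluator {
    var initCalls = 0
    def init(mode: String): String = {
      initCalls += 1
      s"inspector($mode)"
    }
  }

  // Bundles an evaluator with the inspector that its init() call produced.
  case class EvaluatorWithInspector(evaluator: Evaluator, inspector: String)

  class AggregateFunction {
    var evaluatorsCreated = 0

    private def newEvaluator(): Evaluator = {
      evaluatorsCreated += 1
      new Evaluator
    }

    // One lazy field creates the evaluator and initializes it in the same step,
    // so nothing happens until the pair is first needed.
    lazy val partial1: EvaluatorWithInspector = {
      val evaluator = newEvaluator()
      EvaluatorWithInspector(evaluator, evaluator.init("PARTIAL1"))
    }

    // Depends on partial1's inspector, so it is lazy as well.
    lazy val partialResultType: String = s"type-of-${partial1.inspector}"
  }

  def main(args: Array[String]): Unit = {
    val f = new AggregateFunction
    println(f.evaluatorsCreated)   // no evaluator created at construction time
    println(f.partialResultType)   // first access forces partial1
    println(f.evaluatorsCreated)
  }
}
```

Keeping the evaluator and its inspector in one value means the two can never be observed in a half-initialized state, and every dependent field can stay lazy.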

## How was this patch tested?

Added a new unit test.

Closes #22766 from peter-toth/SPARK-25768.

Authored-by: Peter Toth

Signed-off-by: Wenchen Fan

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/f38594fc

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/f38594fc

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/f38594fc

Branch: refs/heads/master

Commit: f38594fc561208e17af80d17acf8da362b91fca4

Parents: e816776

Author: Peter Toth

Authored: Fri Oct 19 21:17:14 2018 +0800

Committer: Wenchen Fan

Committed: Fri Oct 19 21:17:14 2018 +0800

--

.../org/apache/spark/sql/hive/hiveUDFs.scala| 53 +++-

.../spark/sql/hive/execution/HiveUDFSuite.scala | 14 ++

2 files changed, 42 insertions(+), 25 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/f38594fc/sql/hive/src/main/scala/org/apache/spark/sql/hive/hiveUDFs.scala

--

diff --git a/sql/hive/src/main/scala/org/apache/spark/sql/hive/hiveUDFs.scala b/sql/hive/src/main/scala/org/apache/spark/sql/hive/hiveUDFs.scala

index 68af99e..4a84509 100644

--- a/sql/hive/src/main/scala/org/apache/spark/sql/hive/hiveUDFs.scala

+++ b/sql/hive/src/main/scala/org/apache/spark/sql/hive/hiveUDFs.scala

@@ -340,39 +340,40 @@ private[hive] case class HiveUDAFFunction(

resolver.getEvaluator(parameterInfo)

}

- // The UDAF evaluator used to consume raw input rows and produce partial aggregation results.

- @transient

- private lazy val partial1ModeEvaluator = newEvaluator()

+ private case class HiveEvaluator(

+ evaluator: GenericUDAFEvaluator,

+ objectInspector: ObjectInspector)

+ // The UDAF evaluator used to consume raw input rows and produce partial aggregation results.

// Hive `ObjectInspector` used to inspect partial aggregation results.

@transient

- private val partialResultInspector = partial1ModeEvaluator.init(

-GenericUDAFEvaluator.Mode.PARTIAL1,

-inputInspectors

- )

+ private lazy val partial1HiveEvaluator = {

+val evaluator = newEvaluator()

+HiveEvaluator(evaluator, evaluator.init(GenericUDAFEvaluator.Mode.PARTIAL1, inputInspectors))

+ }

// The UDAF evaluator used to merge partial aggregation results.

@transient

private lazy val partial2ModeEvaluator = {

val evaluator = newEvaluator()

-evaluator.init(GenericUDAFEvaluator.Mode.PARTIAL2, Array(partialResultInspector))

+evaluator.init(GenericUDAFEvaluator.Mode.PARTIAL2, Array(partial1HiveEvaluator.objectInspector))

evaluator

}

// Spark SQL data type of partial aggregation results

@transient

- private lazy val partialResultDataType = inspectorToDataType(partialResultInspector)

+ private lazy val partialResultDataType =

+inspectorToDataType(partial1HiveEvaluator.objectInspector)

// The UDAF evaluator used to compute the final result from a partial aggregation result objects.

- @transient

- private lazy val finalModeEvaluator = newEvaluator()

-

// Hive `ObjectInspector` used to inspect the final aggregation result object.

@transient

- private val returnInspector = finalModeEvaluator.init(

-GenericUDAFEvaluator.Mode.FINAL,

-Array(partialResultInspector)

- )

+ private lazy val finalHiveEvaluator = {

+val evaluator = newEvaluator()

+HiveEvaluator(

+ evaluator,

+ evaluator.init(GenericUDAFEvaluator.Mode.FINAL, Array(partial1HiveEvaluator.objectInspector)))

+ }

// Wrapper functions used to wrap Spark SQL input arguments into Hive specific format.

@transient

@@ -381,7 +382,7 @@ private[hive] case class HiveUDAFFunction(

// Unwrapper function used to unwrap final aggregation result objects returned by Hive UDAFs into

// Spark SQL specific format.

@transient

- private lazy val resultUnwrapper = unwrapperFor(returnInspector)

+ private lazy val resultUnwrapper =

[1/2] spark git commit: [SPARK-25044][FOLLOW-UP] Change ScalaUDF constructor signature

Repository: spark

Updated Branches:

refs/heads/branch-2.4 9ed2e4204 -> df60d9f34

http://git-wip-us.apache.org/repos/asf/spark/blob/df60d9f3/sql/core/src/main/scala/org/apache/spark/sql/expressions/UserDefinedFunction.scala

--

diff --git