[GitHub] [spark] HeartSaVioR commented on a diff in pull request #38503: [SPARK-40940] Remove Multi-stateful operator checkers for streaming queries.

HeartSaVioR commented on code in PR #38503:

URL: https://github.com/apache/spark/pull/38503#discussion_r1023563189

##

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/UnsupportedOperationChecker.scala:

##

@@ -42,40 +43,101 @@ object UnsupportedOperationChecker extends Logging {

}

/**

- * Checks for possible correctness issue in chained stateful operators. The

behavior is

- * controlled by SQL config

`spark.sql.streaming.statefulOperator.checkCorrectness.enabled`.

- * Once it is enabled, an analysis exception will be thrown. Otherwise,

Spark will just

- * print a warning message.

+ * Checks if the expression has a event time column

+ * @param exp the expression to be checked

+ * @return true if it is a event time column.

*/

- def checkStreamingQueryGlobalWatermarkLimit(

- plan: LogicalPlan,

- outputMode: OutputMode): Unit = {

-def isStatefulOperationPossiblyEmitLateRows(p: LogicalPlan): Boolean = p

match {

- case s: Aggregate

-if s.isStreaming && outputMode == InternalOutputModes.Append => true

- case Join(left, right, joinType, _, _)

-if left.isStreaming && right.isStreaming && joinType != Inner => true

- case f: FlatMapGroupsWithState

-if f.isStreaming && f.outputMode == OutputMode.Append() => true

- case _ => false

+ private def hasEventTimeCol(exp: Expression): Boolean = exp.exists {

+case a: AttributeReference =>

a.metadata.contains(EventTimeWatermark.delayKey)

+case _ => false

+ }

+

+ /**

+ * Checks if the expression contains a range comparison, in which

+ * either side of the comparison is an event-time column. This is used for

checking

+ * stream-stream time interval join.

+ * @param e the expression to be checked

+ * @return true if there is a time-interval join.

+ */

+ private def hasRangeExprAgainstEventTimeCol(e: Expression): Boolean = {

+def hasEventTimeColBinaryComp(neq: Expression): Boolean = {

+ val exp = neq.asInstanceOf[BinaryComparison]

+ hasEventTimeCol(exp.left) || hasEventTimeCol(exp.right)

}

-def isStatefulOperation(p: LogicalPlan): Boolean = p match {

- case s: Aggregate if s.isStreaming => true

- case _ @ Join(left, right, _, _, _) if left.isStreaming &&

right.isStreaming => true

- case f: FlatMapGroupsWithState if f.isStreaming => true

- case f: FlatMapGroupsInPandasWithState if f.isStreaming => true

- case d: Deduplicate if d.isStreaming => true

+e.exists {

+ case neq @ (_: LessThanOrEqual | _: LessThan | _: GreaterThanOrEqual |

_: GreaterThan) =>

+hasEventTimeColBinaryComp(neq)

case _ => false

}

+ }

-val failWhenDetected = SQLConf.get.statefulOperatorCorrectnessCheckEnabled

+ /**

+ * This method, combined with isStatefulOperationPossiblyEmitLateRows,

determines all disallowed

+ * behaviors in multiple stateful operators.

+ * Concretely, All conditions defined below cannot be followed by any

streaming stateful

+ * operator as defined in isStatefulOperationPossiblyEmitLateRows.

+ * @param p logical plan to be checked

+ * @param outputMode query output mode

+ * @return true if it is not allowed when followed by any streaming stateful

+ * operator as defined in isStatefulOperationPossiblyEmitLateRows.

+ */

+ private def ifCannotBeFollowedByStatefulOperation(

+ p: LogicalPlan, outputMode: OutputMode): Boolean = p match {

+case ExtractEquiJoinKeys(_, _, _, otherCondition, _, left, right, _) =>

+ left.isStreaming && right.isStreaming &&

+otherCondition.isDefined &&

hasRangeExprAgainstEventTimeCol(otherCondition.get)

+// FlatMapGroupsWithState configured with event time

+case f @ FlatMapGroupsWithState(_, _, _, _, _, _, _, _, _, timeout, _, _,

_, _, _, _)

+ if f.isStreaming && timeout == GroupStateTimeout.EventTimeTimeout => true

+case p @ FlatMapGroupsInPandasWithState(_, _, _, _, _, timeout, _)

+ if p.isStreaming && timeout == GroupStateTimeout.EventTimeTimeout => true

+case a: Aggregate if a.isStreaming && outputMode !=

InternalOutputModes.Append => true

+// Since the Distinct node will be replaced to Aggregate in the optimizer

rule

+// [[ReplaceDistinctWithAggregate]], here we also need to check all

Distinct node by

+// assuming it as Aggregate.

+case d @ Distinct(_: LogicalPlan) if d.isStreaming

+ && outputMode != InternalOutputModes.Append => true

+case _ => false

+ }

+ /**

+ * This method is only used with ifCannotBeFollowedByStatefulOperation.

+ * As can tell from the name, it doesn't contain ALL streaming stateful

operations,

+ * only the stateful operations that are possible to emit late rows.

+ * for example, a Deduplicate without a event time column is still a

stateful operation

+ * but of less interested because it won't emit late records because of

watermark.

+ * @param p the logical plan to be checked

+ * @return

[GitHub] [spark] dongjoon-hyun commented on pull request #38352: [SPARK-40801][BUILD][3.2] Upgrade `Apache commons-text` to 1.10

dongjoon-hyun commented on PR #38352: URL: https://github.com/apache/spark/pull/38352#issuecomment-1316546164 +1 for @sunchao 's comment. To @bsikander , it would be great if you can participate [[VOTE] Release Spark 3.2.3 (RC1)](https://lists.apache.org/thread/gh2oktrndxopqnyxbsvp2p0k6jk1n9fs). -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HeartSaVioR commented on a diff in pull request #38503: [SPARK-40940] Remove Multi-stateful operator checkers for streaming queries.

HeartSaVioR commented on code in PR #38503:

URL: https://github.com/apache/spark/pull/38503#discussion_r1023563189

##

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/UnsupportedOperationChecker.scala:

##

@@ -42,40 +43,101 @@ object UnsupportedOperationChecker extends Logging {

}

/**

- * Checks for possible correctness issue in chained stateful operators. The

behavior is

- * controlled by SQL config

`spark.sql.streaming.statefulOperator.checkCorrectness.enabled`.

- * Once it is enabled, an analysis exception will be thrown. Otherwise,

Spark will just

- * print a warning message.

+ * Checks if the expression has a event time column

+ * @param exp the expression to be checked

+ * @return true if it is a event time column.

*/

- def checkStreamingQueryGlobalWatermarkLimit(

- plan: LogicalPlan,

- outputMode: OutputMode): Unit = {

-def isStatefulOperationPossiblyEmitLateRows(p: LogicalPlan): Boolean = p

match {

- case s: Aggregate

-if s.isStreaming && outputMode == InternalOutputModes.Append => true

- case Join(left, right, joinType, _, _)

-if left.isStreaming && right.isStreaming && joinType != Inner => true

- case f: FlatMapGroupsWithState

-if f.isStreaming && f.outputMode == OutputMode.Append() => true

- case _ => false

+ private def hasEventTimeCol(exp: Expression): Boolean = exp.exists {

+case a: AttributeReference =>

a.metadata.contains(EventTimeWatermark.delayKey)

+case _ => false

+ }

+

+ /**

+ * Checks if the expression contains a range comparison, in which

+ * either side of the comparison is an event-time column. This is used for

checking

+ * stream-stream time interval join.

+ * @param e the expression to be checked

+ * @return true if there is a time-interval join.

+ */

+ private def hasRangeExprAgainstEventTimeCol(e: Expression): Boolean = {

+def hasEventTimeColBinaryComp(neq: Expression): Boolean = {

+ val exp = neq.asInstanceOf[BinaryComparison]

+ hasEventTimeCol(exp.left) || hasEventTimeCol(exp.right)

}

-def isStatefulOperation(p: LogicalPlan): Boolean = p match {

- case s: Aggregate if s.isStreaming => true

- case _ @ Join(left, right, _, _, _) if left.isStreaming &&

right.isStreaming => true

- case f: FlatMapGroupsWithState if f.isStreaming => true

- case f: FlatMapGroupsInPandasWithState if f.isStreaming => true

- case d: Deduplicate if d.isStreaming => true

+e.exists {

+ case neq @ (_: LessThanOrEqual | _: LessThan | _: GreaterThanOrEqual |

_: GreaterThan) =>

+hasEventTimeColBinaryComp(neq)

case _ => false

}

+ }

-val failWhenDetected = SQLConf.get.statefulOperatorCorrectnessCheckEnabled

+ /**

+ * This method, combined with isStatefulOperationPossiblyEmitLateRows,

determines all disallowed

+ * behaviors in multiple stateful operators.

+ * Concretely, All conditions defined below cannot be followed by any

streaming stateful

+ * operator as defined in isStatefulOperationPossiblyEmitLateRows.

+ * @param p logical plan to be checked

+ * @param outputMode query output mode

+ * @return true if it is not allowed when followed by any streaming stateful

+ * operator as defined in isStatefulOperationPossiblyEmitLateRows.

+ */

+ private def ifCannotBeFollowedByStatefulOperation(

+ p: LogicalPlan, outputMode: OutputMode): Boolean = p match {

+case ExtractEquiJoinKeys(_, _, _, otherCondition, _, left, right, _) =>

+ left.isStreaming && right.isStreaming &&

+otherCondition.isDefined &&

hasRangeExprAgainstEventTimeCol(otherCondition.get)

+// FlatMapGroupsWithState configured with event time

+case f @ FlatMapGroupsWithState(_, _, _, _, _, _, _, _, _, timeout, _, _,

_, _, _, _)

+ if f.isStreaming && timeout == GroupStateTimeout.EventTimeTimeout => true

+case p @ FlatMapGroupsInPandasWithState(_, _, _, _, _, timeout, _)

+ if p.isStreaming && timeout == GroupStateTimeout.EventTimeTimeout => true

+case a: Aggregate if a.isStreaming && outputMode !=

InternalOutputModes.Append => true

+// Since the Distinct node will be replaced to Aggregate in the optimizer

rule

+// [[ReplaceDistinctWithAggregate]], here we also need to check all

Distinct node by

+// assuming it as Aggregate.

+case d @ Distinct(_: LogicalPlan) if d.isStreaming

+ && outputMode != InternalOutputModes.Append => true

+case _ => false

+ }

+ /**

+ * This method is only used with ifCannotBeFollowedByStatefulOperation.

+ * As can tell from the name, it doesn't contain ALL streaming stateful

operations,

+ * only the stateful operations that are possible to emit late rows.

+ * for example, a Deduplicate without a event time column is still a

stateful operation

+ * but of less interested because it won't emit late records because of

watermark.

+ * @param p the logical plan to be checked

+ * @return

[GitHub] [spark] dongjoon-hyun commented on a diff in pull request #38651: [SPARK-41136][K8S] Shorten graceful shutdown time of ExecutorPodsSnapshotsStoreImpl to prevent blocking shutdown process

dongjoon-hyun commented on code in PR #38651: URL: https://github.com/apache/spark/pull/38651#discussion_r1023621416 ## resource-managers/kubernetes/core/src/main/scala/org/apache/spark/scheduler/cluster/k8s/KubernetesClusterManager.scala: ## @@ -103,7 +103,7 @@ private[spark] class KubernetesClusterManager extends ExternalClusterManager wit val subscribersExecutor = ThreadUtils .newDaemonThreadPoolScheduledExecutor( "kubernetes-executor-snapshots-subscribers", 2) -val snapshotsStore = new ExecutorPodsSnapshotsStoreImpl(subscribersExecutor) +val snapshotsStore = new ExecutorPodsSnapshotsStoreImpl(sc.conf, subscribersExecutor) Review Comment: BTW, it seems that we don't need to hand over the whole `SparkConf` here. What we need is only `sc.conf.get(KUBERNETES_EXECUTOR_SNAPSHOTS_SUBSCRIBERS_GRACE_PERIOD)`, isnt' it? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on a diff in pull request #38651: [SPARK-41136][K8S] Shorten graceful shutdown time of ExecutorPodsSnapshotsStoreImpl to prevent blocking shutdown process

dongjoon-hyun commented on code in PR #38651: URL: https://github.com/apache/spark/pull/38651#discussion_r1023621416 ## resource-managers/kubernetes/core/src/main/scala/org/apache/spark/scheduler/cluster/k8s/KubernetesClusterManager.scala: ## @@ -103,7 +103,7 @@ private[spark] class KubernetesClusterManager extends ExternalClusterManager wit val subscribersExecutor = ThreadUtils .newDaemonThreadPoolScheduledExecutor( "kubernetes-executor-snapshots-subscribers", 2) -val snapshotsStore = new ExecutorPodsSnapshotsStoreImpl(subscribersExecutor) +val snapshotsStore = new ExecutorPodsSnapshotsStoreImpl(sc.conf, subscribersExecutor) Review Comment: BTW, it seems that we don't need to hand over the whole `SparkConf` here. What we need is only `conf.get(KUBERNETES_EXECUTOR_SNAPSHOTS_SUBSCRIBERS_GRACE_PERIOD)`, isnt' it? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on a diff in pull request #38651: [SPARK-41136][K8S] Shorten graceful shutdown time of ExecutorPodsSnapshotsStoreImpl to prevent blocking shutdown process

dongjoon-hyun commented on code in PR #38651:

URL: https://github.com/apache/spark/pull/38651#discussion_r1023614721

##

resource-managers/kubernetes/core/src/main/scala/org/apache/spark/scheduler/cluster/k8s/ExecutorPodsSnapshotsStoreImpl.scala:

##

@@ -57,10 +60,22 @@ import org.apache.spark.util.ThreadUtils

* The subscriber notification callback is guaranteed to be called from a

single thread at a time.

*/

private[spark] class ExecutorPodsSnapshotsStoreImpl(

+conf: SparkConf,

subscribersExecutor: ScheduledExecutorService,

clock: Clock = new SystemClock)

extends ExecutorPodsSnapshotsStore with Logging {

+ private[spark] def this(

+ subscribersExecutor: ScheduledExecutorService) = {

+this(new SparkConf, subscribersExecutor, new SystemClock)

+ }

+

+ private[spark] def this(

+ subscribersExecutor: ScheduledExecutorService,

+ clock: Clock) = {

+this(new SparkConf, subscribersExecutor, clock)

+ }

Review Comment:

Oh, interesting.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on a diff in pull request #38669: [SPARK-41155][SQL] Add error message to SchemaColumnConvertNotSupportedException

dongjoon-hyun commented on code in PR #38669:

URL: https://github.com/apache/spark/pull/38669#discussion_r1023607778

##

sql/core/src/main/java/org/apache/spark/sql/execution/datasources/SchemaColumnConvertNotSupportedException.java:

##

@@ -54,7 +54,8 @@ public SchemaColumnConvertNotSupportedException(

String column,

String physicalType,

String logicalType) {

-super();

+super("column: " + column + ", physicalType: " + physicalType +

+", logicalType: " + logicalType);

Review Comment:

Is the indentation correct, @viirya ?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on a diff in pull request #38668: [SPARK-41153][CORE] Log migrated shuffle data size and migration time

LuciferYang commented on code in PR #38668: URL: https://github.com/apache/spark/pull/38668#discussion_r1023595740 ## core/src/main/scala/org/apache/spark/storage/BlockManagerDecommissioner.scala: ## @@ -125,7 +127,11 @@ private[storage] class BlockManagerDecommissioner( logDebug(s"Migrated sub-block $blockId") } } - logInfo(s"Migrated $shuffleBlockInfo to $peer") + val endTime = System.nanoTime() Review Comment: Why do we need take `nanoTime()` then convert it `toMillis`? What is the problem of directly getting `System.currentTimeMillis()` for calculation? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] itholic commented on a diff in pull request #38644: [SPARK-41130][SQL] Rename `OUT_OF_DECIMAL_TYPE_RANGE` to `NUMERIC_OUT_OF_SUPPORTED_RANGE`

itholic commented on code in PR #38644:

URL: https://github.com/apache/spark/pull/38644#discussion_r1021424319

##

sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/CastWithAnsiOnSuite.scala:

##

@@ -242,9 +242,13 @@ class CastWithAnsiOnSuite extends CastSuiteBase with

QueryErrorsBase {

test("Fast fail for cast string type to decimal type in ansi mode") {

checkEvaluation(cast("12345678901234567890123456789012345678",

DecimalType(38, 0)),

Decimal("12345678901234567890123456789012345678"))

-checkExceptionInExpression[ArithmeticException](

- cast("123456789012345678901234567890123456789", DecimalType(38, 0)),

- "Out of decimal type range")

+checkError(

+ exception = intercept[SparkArithmeticException] {

+evaluateWithoutCodegen(cast("123456789012345678901234567890123456789",

DecimalType(38, 0)))

+ },

+ errorClass = "NUMERIC_OUT_OF_SUPPORTED_RANGE",

+ parameters = Map("value" -> "123456789012345678901234567890123456789")

+)

Review Comment:

CI complains `org.scalatest.exceptions.TestFailedException: Expected

exception org.apache.spark.SparkArithmeticException to be thrown, but no

exception was thrown

`, but this is passed in my local test env both with/without ANSI mode.

Any suggestion for this fix? or we should just use

`checkExceptionInExpression` for now ?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a diff in pull request #38495: [SPARK-35531][SQL] Update hive table stats without unnecessary convert

cloud-fan commented on code in PR #38495:

URL: https://github.com/apache/spark/pull/38495#discussion_r1023592944

##

sql/hive/src/main/scala/org/apache/spark/sql/hive/client/HiveClientImpl.scala:

##

@@ -609,6 +609,20 @@ private[hive] class HiveClientImpl(

shim.alterTable(client, qualifiedTableName, hiveTable)

}

+ override def alterTableStats(

+ dbName: String,

+ tableName: String,

+ stats: Map[String, String]): Unit = withHiveState {

+val hiveTable = getRawHiveTable(dbName,

tableName).rawTable.asInstanceOf[HiveTable]

+val newParameters = new JHashMap[String, String]()

+

hiveTable.getParameters.asScala.toMap.filterNot(_._1.startsWith(STATISTICS_PREFIX))

Review Comment:

It's a bit tricky to make `HiveClient` handle this `STATISTICS_PREFIX`. It

should be the responsibility of `HiveExternalCatalog`. `HiveClient` should only

take care of the communication with HMS.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on a diff in pull request #38668: [SPARK-41153][CORE] Log migrated shuffle data size and migration time

LuciferYang commented on code in PR #38668: URL: https://github.com/apache/spark/pull/38668#discussion_r1023590958 ## core/src/main/scala/org/apache/spark/storage/BlockManagerDecommissioner.scala: ## @@ -125,7 +127,11 @@ private[storage] class BlockManagerDecommissioner( logDebug(s"Migrated sub-block $blockId") } } - logInfo(s"Migrated $shuffleBlockInfo to $peer") + val endTime = System.nanoTime() + val duration = Duration(endTime - startTime, NANOSECONDS) + val totalBlockSize = Utils.bytesToString(blocks.map(b => b._2.size()).sum) Review Comment: hmm... if `duration` and `totalBlockSize` are defined as `val`, they will be calculated even if `log.isInfoEnabled` is `false` -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on pull request #38665: [SPARK-41156][SQL] Remove the class `TypeCheckFailure`

cloud-fan commented on PR #38665: URL: https://github.com/apache/spark/pull/38665#issuecomment-1316496874 I'm OK to reuse the usage of `TypeCheckFailure`, but many advanced users use catalyst plans/expressions directly. It's frustrating to remove it and break third party Spark extensions. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on pull request #38262: [SPARK-40801][BUILD] Upgrade `Apache commons-text` to 1.10

dongjoon-hyun commented on PR #38262: URL: https://github.com/apache/spark/pull/38262#issuecomment-1316472970 @Stycos SPARK-40801 is arrived after 3.3.1 release.  -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] zhengruifeng opened a new pull request, #38670: [SPARK-41157][CONNECT][PYTHON][TEST] Show detailed differences in test

zhengruifeng opened a new pull request, #38670: URL: https://github.com/apache/spark/pull/38670 ### What changes were proposed in this pull request? use `assert_eq` in `PandasOnSparkTestCase` to compare dataframes ### Why are the changes needed? show detailed error message before: ``` Traceback (most recent call last): File "/home/jenkins/python/pyspark/sql/tests/connect/test_connect_basic.py", line 244, in test_fill_na self.assertTrue( AssertionError: False is not true ``` after: ``` AssertionError: DataFrame.iloc[:, 0] (column name="id") are different DataFrame.iloc[:, 0] (column name="id") values are different (100.0 %) [index]: [0, 1, 2, 3, 4, 5, 6, 7, 8, 9] [left]: [0, 1, 2, 3, 4, 5, 6, 7, 8, 9] [right]: [1, 2, 3, 4, 5, 6, 7, 8, 9, 10] Left: id idint64 dtype: object Right: id idint64 dtype: object ``` ### Does this PR introduce _any_ user-facing change? No, test only ### How was this patch tested? existing UT -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on a diff in pull request #38635: [SPARK-41118][SQL] `to_number`/`try_to_number` should return `null` when format is `null`

LuciferYang commented on code in PR #38635:

URL: https://github.com/apache/spark/pull/38635#discussion_r1023569619

##

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/numberFormatExpressions.scala:

##

@@ -26,6 +26,62 @@ import org.apache.spark.sql.catalyst.util.ToNumberParser

import org.apache.spark.sql.types.{AbstractDataType, DataType, Decimal,

DecimalType, StringType}

import org.apache.spark.unsafe.types.UTF8String

+abstract class ToNumberBase(left: Expression, right: Expression, errorOnFail:

Boolean)

+ extends BinaryExpression with Serializable with ImplicitCastInputTypes with

NullIntolerant {

+

+ private lazy val numberFormatter = {

+val value = right.eval()

+if (value != null) {

+ new ToNumberParser(value.toString.toUpperCase(Locale.ROOT), errorOnFail)

+} else {

+ null

+}

+ }

+

+ override def dataType: DataType = if (numberFormatter != null) {

+numberFormatter.parsedDecimalType

+ } else {

+DecimalType.USER_DEFAULT

+ }

+

+ override def inputTypes: Seq[DataType] = Seq(StringType, StringType)

+

+ override def checkInputDataTypes(): TypeCheckResult = {

+val inputTypeCheck = super.checkInputDataTypes()

+if (inputTypeCheck.isSuccess) {

+ if (numberFormatter == null) {

+TypeCheckResult.TypeCheckSuccess

+ } else if (right.foldable) {

+numberFormatter.check()

+ } else {

+TypeCheckResult.TypeCheckFailure(s"Format expression must be foldable,

but got $right")

Review Comment:

https://github.com/apache/spark/pull/38531 is merged

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] beliefer commented on pull request #34367: [SPARK-37099][SQL] Introduce a rank-based filter to optimize top-k computation

beliefer commented on PR #34367: URL: https://github.com/apache/spark/pull/34367#issuecomment-1316464454 > It is a long time since I initially sent this PR, and I don't have time to work on it, if any guys are interested in this optimization, feel free to take over it. cc @beliefer OK. Let me see. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] wangyum commented on a diff in pull request #38649: [SPARK-41132][SQL] Convert LikeAny and NotLikeAny to InSet if no pattern contains wildcards

wangyum commented on code in PR #38649:

URL: https://github.com/apache/spark/pull/38649#discussion_r1023546094

##

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/expressions.scala:

##

@@ -780,6 +780,13 @@ object LikeSimplification extends Rule[LogicalPlan] {

} else {

simplifyLike(input, pattern.toString, escapeChar).getOrElse(l)

}

+case LikeAny(child, patterns)

+ if patterns.map(_.toString).forall { case equalTo(_) => true case _ =>

false } =>

+ InSet(child, patterns.toSet)

Review Comment:

Could we also support this case?

```sql

SELECT * FROM tab WHERE trim(addr) LIKE ANY ('5000', '5001', '5002%',

'5003%', '5004')

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] MaxGekk commented on pull request #38665: [SPARK-41156][SQL] Remove the class `TypeCheckFailure`

MaxGekk commented on PR #38665: URL: https://github.com/apache/spark/pull/38665#issuecomment-1316427566 @LuciferYang @panbingkun @itholic @cloud-fan @srielau @anchovYu Could you review this PR, please. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] sunchao commented on pull request #38352: [SPARK-40801][BUILD][3.2] Upgrade `Apache commons-text` to 1.10

sunchao commented on PR #38352: URL: https://github.com/apache/spark/pull/38352#issuecomment-1316416703 @bsikander again, pls check [d...@spark.apache.org](mailto:d...@spark.apache.org) - it's being voted. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] bsikander commented on pull request #38352: [SPARK-40801][BUILD][3.2] Upgrade `Apache commons-text` to 1.10

bsikander commented on PR #38352: URL: https://github.com/apache/spark/pull/38352#issuecomment-1316415618 @sunchao @bjornjorgensen any update on this release? As internal alarms are going off continuously, i am desperately looking for the release. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] itholic commented on a diff in pull request #38576: [SPARK-41062][SQL] Rename `UNSUPPORTED_CORRELATED_REFERENCE` to `CORRELATED_REFERENCE`

itholic commented on code in PR #38576:

URL: https://github.com/apache/spark/pull/38576#discussion_r1023536354

##

core/src/main/resources/error/error-classes.json:

##

@@ -1277,6 +1277,11 @@

"A correlated outer name reference within a subquery expression body

was not found in the enclosing query: "

]

},

+ "CORRELATED_REFERENCE" : {

+"message" : [

+ "Expressions referencing the outer query are not supported outside

of WHERE/HAVING clauses"

Review Comment:

Yes, just fixed it to show SQL expression!

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] viirya commented on pull request #38669: [SPARK-41155][SQL] Add error message to SchemaColumnConvertNotSupportedException

viirya commented on PR #38669: URL: https://github.com/apache/spark/pull/38669#issuecomment-1316408322 Thank you @sunchao ! -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] yabola commented on pull request #38560: [WIP][SPARK-38005][core] Support cleaning up merged shuffle files and state from external shuffle service

yabola commented on PR #38560: URL: https://github.com/apache/spark/pull/38560#issuecomment-1316389795 @mridulm as your comment said https://github.com/apache/spark/pull/37922#discussion_r990763769 , I want to Improve this part of the deletion logic -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] zhengruifeng commented on pull request #34367: [SPARK-37099][SQL] Introduce a rank-based filter to optimize top-k computation

zhengruifeng commented on PR #34367: URL: https://github.com/apache/spark/pull/34367#issuecomment-1316354088 It is a long time since I initially sent this PR, and I don't have time to work on it, if any guys are interested in this optimization, feel free to take over it. cc @beliefer -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] viirya commented on pull request #38669: [SPARK-41155][SQL] Add error message to SchemaColumnConvertNotSupportedException

viirya commented on PR #38669: URL: https://github.com/apache/spark/pull/38669#issuecomment-1316351255 Thank you @dongjoon-hyun ! -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] viirya commented on pull request #38669: [SPARK-41155][SQL] Add error message to SchemaColumnConvertNotSupportedException

viirya commented on PR #38669: URL: https://github.com/apache/spark/pull/38669#issuecomment-1316348790 cc @dongjoon-hyun @sunchao -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] zhengruifeng commented on a diff in pull request #38666: [CONENCT][PYTHON][DOC] Document how to run the module of tests for Spark Connect Python tests

zhengruifeng commented on code in PR #38666: URL: https://github.com/apache/spark/pull/38666#discussion_r1023503919 ## connector/connect/README.md: ## @@ -52,9 +52,15 @@ To use the release version of Spark Connect: ### Run Tests ```bash +# Run a single Python test. ./python/run-tests --testnames 'pyspark.sql.tests.connect.test_connect_basic' Review Comment: ```suggestion # Run a single Python class. ./python/run-tests --testnames 'pyspark.sql.tests.connect.test_connect_basic' # Run a single test case in a specific class: ./python/run-tests --testnames 'pyspark.sql.tests.connect.test_connect_basic SparkConnectTests.test_schema' ``` -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] viirya opened a new pull request, #38669: [SPARK-41155][SQL] Add error message to SchemaColumnConvertNotSupportedException

viirya opened a new pull request, #38669: URL: https://github.com/apache/spark/pull/38669 ### What changes were proposed in this pull request? This patch adds error message to `SchemaColumnConvertNotSupportedException`. ### Why are the changes needed? Just found the fact that `SchemaColumnConvertNotSupportedException` doesn't have any error message is annoying for debugging. In stack trace, we only see `SchemaColumnConvertNotSupportedException` but don't know what column is wrong. After this change, we should be able to see it, e.g., ``` org.apache.spark.sql.execution.datasources.SchemaColumnConvertNotSupportedException: column: [_1], physicalType: INT32, logicalType: bigint ``` ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Existing tests. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] zhengruifeng commented on a diff in pull request #38666: [CONENCT][PYTHON][DOC] Document how to run the module of tests for Spark Connect Python tests

zhengruifeng commented on code in PR #38666: URL: https://github.com/apache/spark/pull/38666#discussion_r1023503919 ## connector/connect/README.md: ## @@ -52,9 +52,15 @@ To use the release version of Spark Connect: ### Run Tests ```bash +# Run a single Python test. ./python/run-tests --testnames 'pyspark.sql.tests.connect.test_connect_basic' Review Comment: ```suggestion # Run a single Python class. ./python/run-tests --testnames 'pyspark.sql.tests.connect.test_connect_basic' # Run a single test case in a specific class: ./python/run-tests --testnames 'pyspark.sql.tests.connect.test_connect_basic SparkConnectTests.test_schema' ``` -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon closed pull request #38667: [SPARK-40798][DOCS][FOLLOW-UP] Fix a typo in the configuration name at migration guide

HyukjinKwon closed pull request #38667: [SPARK-40798][DOCS][FOLLOW-UP] Fix a typo in the configuration name at migration guide URL: https://github.com/apache/spark/pull/38667 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on pull request #38667: [SPARK-40798][DOCS][FOLLOW-UP] Fix a typo in the configuration name at migration guide

HyukjinKwon commented on PR #38667: URL: https://github.com/apache/spark/pull/38667#issuecomment-1316341751 Merged to master. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] grundprinzip commented on pull request #38630: [SPARK-41115][CONNECT] Add ClientType to proto to indicate which client sends a request

grundprinzip commented on PR #38630: URL: https://github.com/apache/spark/pull/38630#issuecomment-1316339424 @amaliujia can you please update the pr description to remove the enum part. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] wankunde commented on a diff in pull request #38495: [SPARK-35531][SQL] Update hive table stats without unnecessary convert

wankunde commented on code in PR #38495:

URL: https://github.com/apache/spark/pull/38495#discussion_r1023487514

##

sql/hive/src/main/scala/org/apache/spark/sql/hive/HiveExternalCatalog.scala:

##

@@ -722,18 +722,15 @@ private[spark] class HiveExternalCatalog(conf: SparkConf,

hadoopConf: Configurat

stats: Option[CatalogStatistics]): Unit = withClient {

requireTableExists(db, table)

val rawTable = getRawTable(db, table)

Review Comment:

If we can call client.getRawHiveTable here, will throw exception

`java.lang.LinkageError: loader constraint violation: loader (instance of

sun/misc/Launcher$AppClassLoader) previously initiated loading for a different

type with name "org/apache/hadoop/hive/ql/metadata/Table"`

Detail stack:

```

[info]

org.apache.spark.sql.hive.execution.command.AlterTableDropPartitionSuite ***

ABORTED *** (18 seconds, 552 milliseconds)

[info] java.lang.LinkageError: loader constraint violation: loader

(instance of sun/misc/Launcher$AppClassLoader) previously initiated loading for

a different type with name "org/apache/hadoop/hive/ql/metadata/Table"

[info] at java.lang.ClassLoader.defineClass1(Native Method)

[info] at java.lang.ClassLoader.defineClass(ClassLoader.java:756)

[info] at

java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)

[info] at java.net.URLClassLoader.defineClass(URLClassLoader.java:468)

[info] at java.net.URLClassLoader.access$100(URLClassLoader.java:74)

[info] at java.net.URLClassLoader$1.run(URLClassLoader.java:369)

[info] at java.net.URLClassLoader$1.run(URLClassLoader.java:363)

[info] at java.security.AccessController.doPrivileged(Native Method)

[info] at java.net.URLClassLoader.findClass(URLClassLoader.java:362)

[info] at java.lang.ClassLoader.loadClass(ClassLoader.java:418)

[info] at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:355)

[info] at java.lang.ClassLoader.loadClass(ClassLoader.java:351)

[info] at

org.apache.spark.sql.hive.client.HiveClientImpl$.toHiveTable(HiveClientImpl.scala:1115)

[info] at

org.apache.spark.sql.hive.execution.V1WritesHiveUtils.getDynamicPartitionColumns(V1WritesHiveUtils.scala:51)

[info] at

org.apache.spark.sql.hive.execution.V1WritesHiveUtils.getDynamicPartitionColumns$(V1WritesHiveUtils.scala:43)

[info] at

org.apache.spark.sql.hive.execution.InsertIntoHiveTable.getDynamicPartitionColumns(InsertIntoHiveTable.scala:70)

[info] at

org.apache.spark.sql.hive.execution.InsertIntoHiveTable.partitionColumns$lzycompute(InsertIntoHiveTable.scala:80)

[info] at

org.apache.spark.sql.hive.execution.InsertIntoHiveTable.partitionColumns(InsertIntoHiveTable.scala:79)

[info] at

org.apache.spark.sql.execution.datasources.V1Writes$.org$apache$spark$sql$execution$datasources$V1Writes$$prepareQuery(V1Writes.scala:75)

[info] at

org.apache.spark.sql.execution.datasources.V1Writes$$anonfun$apply$1.applyOrElse(V1Writes.scala:57)

[info] at

org.apache.spark.sql.execution.datasources.V1Writes$$anonfun$apply$1.applyOrElse(V1Writes.scala:55)

[info] at

org.apache.spark.sql.catalyst.trees.TreeNode.$anonfun$transformDownWithPruning$1(TreeNode.scala:512)

[info] at

org.apache.spark.sql.catalyst.trees.CurrentOrigin$.withOrigin(TreeNode.scala:104)

[info] at

org.apache.spark.sql.catalyst.trees.TreeNode.transformDownWithPruning(TreeNode.scala:512)

[info] at

org.apache.spark.sql.catalyst.plans.logical.LogicalPlan.org$apache$spark$sql$catalyst$plans$logical$AnalysisHelper$$super$transformDownWithPruning(LogicalPlan.scala:30)

[info] at

org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper.transformDownWithPruning(AnalysisHelper.scala:267)

[info] at

org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper.transformDownWithPruning$(AnalysisHelper.scala:263)

[info] at

org.apache.spark.sql.catalyst.plans.logical.LogicalPlan.transformDownWithPruning(LogicalPlan.scala:30)

[info] at

org.apache.spark.sql.catalyst.plans.logical.LogicalPlan.transformDownWithPruning(LogicalPlan.scala:30)

[info] at

org.apache.spark.sql.catalyst.trees.TreeNode.transformDown(TreeNode.scala:488)

[info] at

org.apache.spark.sql.execution.datasources.V1Writes$.apply(V1Writes.scala:55)

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] warrenzhu25 opened a new pull request, #38668: [SPARK-41153][CORE] Log migrated shuffle data size and migration time

warrenzhu25 opened a new pull request, #38668: URL: https://github.com/apache/spark/pull/38668 ### What changes were proposed in this pull request? Log migrated shuffle data size and migration time ### Why are the changes needed? Get info about migrated shuffle data size and migration time ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Manually tested -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] pan3793 commented on a diff in pull request #38651: [SPARK-41136][K8S] Shorten graceful shutdown time of ExecutorPodsSnapshotsStoreImpl to prevent blocking shutdown process

pan3793 commented on code in PR #38651:

URL: https://github.com/apache/spark/pull/38651#discussion_r1023478697

##

resource-managers/kubernetes/core/src/main/scala/org/apache/spark/scheduler/cluster/k8s/ExecutorPodsSnapshotsStoreImpl.scala:

##

@@ -57,6 +60,7 @@ import org.apache.spark.util.ThreadUtils

* The subscriber notification callback is guaranteed to be called from a

single thread at a time.

*/

private[spark] class ExecutorPodsSnapshotsStoreImpl(

+conf: SparkConf,

Review Comment:

the backward-compatible constructors were added

##

resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/Config.scala:

##

@@ -723,6 +723,18 @@ private[spark] object Config extends Logging {

.checkValue(value => value > 0, "Maximum number of pending pods should

be a positive integer")

.createWithDefault(Int.MaxValue)

+ val KUBERNETES_EXECUTOR_SNAPSHOTS_SUBSCRIBERS_GRACE_PERIOD =

+

ConfigBuilder("spark.kubernetes.executorSnapshotsSubscribersShutdownGracePeriod")

+ .doc("Time to wait for graceful shutdown

kubernetes-executor-snapshots-subscribers " +

+"thread pool. Since it may be called by ShutdownHookManager, where

timeout is " +

+"controlled by hadoop configuration `hadoop.service.shutdown.timeout`

" +

+"(default is 30s). As the whole Spark shutdown procedure shares the

above timeout, " +

+"this value should be short than that to prevent blocking the

following shutdown " +

+"procedures.")

+ .version("3.4.0")

+ .timeConf(TimeUnit.SECONDS)

Review Comment:

added

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] pan3793 commented on pull request #38651: [SPARK-41136][K8S] Shorten graceful shutdown time of ExecutorPodsSnapshotsStoreImpl to prevent blocking shutdown process

pan3793 commented on PR #38651: URL: https://github.com/apache/spark/pull/38651#issuecomment-1316286965 @dongjoon-hyun thanks for review, I addressed your comments. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] pan3793 commented on a diff in pull request #38651: [SPARK-41136][K8S] Shorten graceful shutdown time of ExecutorPodsSnapshotsStoreImpl to prevent blocking shutdown process

pan3793 commented on code in PR #38651:

URL: https://github.com/apache/spark/pull/38651#discussion_r1023477562

##

resource-managers/kubernetes/core/src/main/scala/org/apache/spark/scheduler/cluster/k8s/ExecutorPodsSnapshotsStoreImpl.scala:

##

@@ -57,10 +60,22 @@ import org.apache.spark.util.ThreadUtils

* The subscriber notification callback is guaranteed to be called from a

single thread at a time.

*/

private[spark] class ExecutorPodsSnapshotsStoreImpl(

+conf: SparkConf,

subscribersExecutor: ScheduledExecutorService,

clock: Clock = new SystemClock)

extends ExecutorPodsSnapshotsStore with Logging {

+ private[spark] def this(

+ subscribersExecutor: ScheduledExecutorService) = {

+this(new SparkConf, subscribersExecutor, new SystemClock)

+ }

+

+ private[spark] def this(

+ subscribersExecutor: ScheduledExecutorService,

+ clock: Clock) = {

+this(new SparkConf, subscribersExecutor, clock)

+ }

Review Comment:

I can not merge these two constructers into one,

```

private[spark] def this(

subscribersExecutor: ScheduledExecutorService,

clock: Clock = new SystemClock) = {

this(new SparkConf, subscribersExecutor, clock)

}

```

it fails compilation

```

[error]

/Users/chengpan/Projects/apache-spark/resource-managers/kubernetes/core/src/main/scala/org/apache/spark/scheduler/cluster/k8s/KubernetesClusterManager.scala:106:64:

type mismatch;

[error] found : org.apache.spark.SparkConf

[error] required: java.util.concurrent.ScheduledExecutorService

[error] val snapshotsStore = new ExecutorPodsSnapshotsStoreImpl(sc.conf,

subscribersExecutor)

[error]

``

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] ulysses-you commented on pull request #38667: [SPARK-40798][DOCS][FOLLOW-UP] Fix a typo in the configuration name at migration guide

ulysses-you commented on PR #38667: URL: https://github.com/apache/spark/pull/38667#issuecomment-1316283965 thank you @HyukjinKwon @anchovYu -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on pull request #38620: [SPARK-41113][BUILD] Upgrade sbt to 1.8.0

LuciferYang commented on PR #38620: URL: https://github.com/apache/spark/pull/38620#issuecomment-1316264566 Thanks @dongjoon-hyun @HyukjinKwon -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] wankunde commented on pull request #38495: [SPARK-35531][SQL] Update hive table stats without unnecessary convert

wankunde commented on PR #38495: URL: https://github.com/apache/spark/pull/38495#issuecomment-1316254348 Retest this please -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

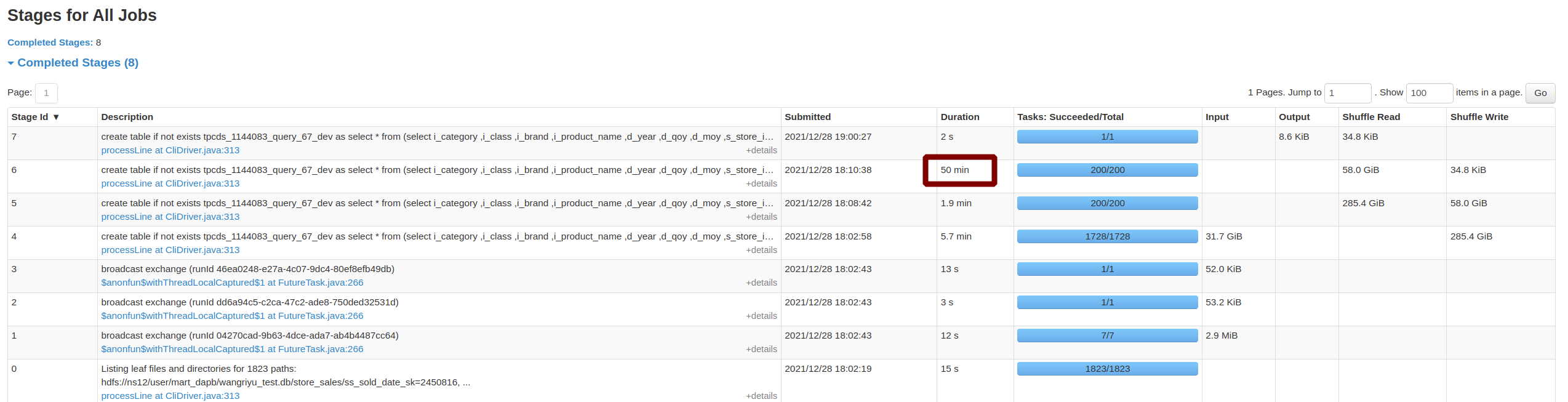

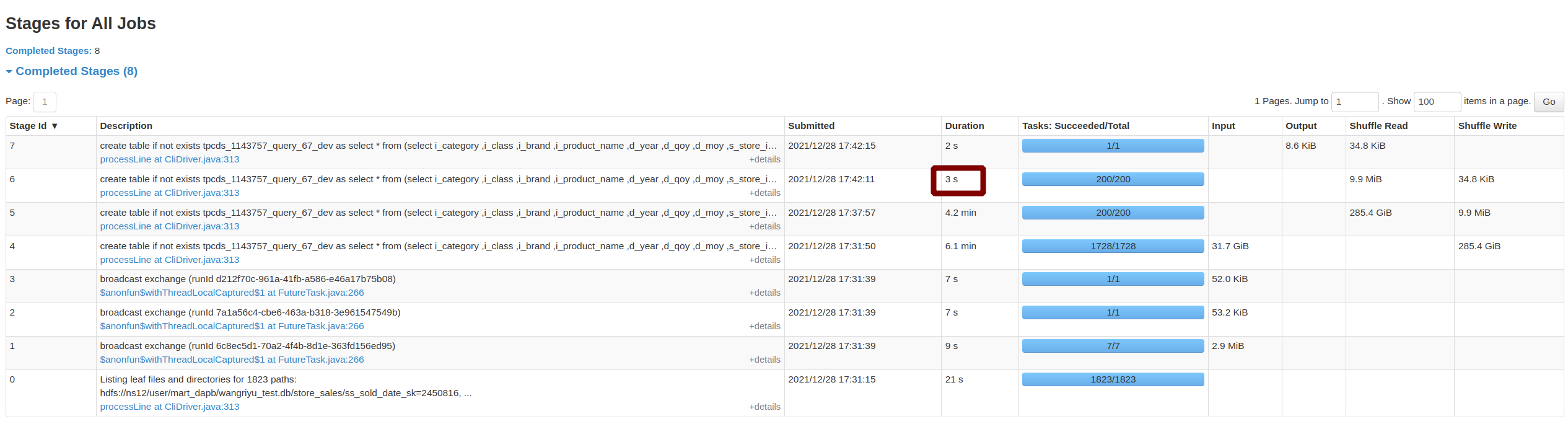

[GitHub] [spark] zhengruifeng opened a new pull request, #34367: [SPARK-37099][SQL] Introduce a rank-based filter to optimize top-k computation

zhengruifeng opened a new pull request, #34367: URL: https://github.com/apache/spark/pull/34367 ### What changes were proposed in this pull request? introduce a new node `RankLimit` to filter out uncessary rows based on rank computed on partial dataset. it supports following pattern: ``` select (... (row_number|rank|dense_rank)() over ( [partition by ...] order by ... ) as rn) where rn (==|<|<=) k and other conditions ``` For these three rank functions (row_number|rank|dense_rank), the rank of a key computed on partitial dataset always <= its final rank computed on the whole dataset,so we can safely discard rows with partitial rank > `k`, anywhere. ### Why are the changes needed? 1, reduce the shuffle write; 2, solve skewed-window problem, a practical case was optimized from 2.5h to 26min ### Does this PR introduce _any_ user-facing change? a new config is added ### How was this patch tested? 1, added testsuits, practical cases on our production system 2, 10TiB TPC-DS - q67: Before this PR | After this PR --- | --- Job Duration=58min|Job Duration=11min Stage Duration=50min|Stage Duration=3sec Stage Shuffle=58.0 GiB|Stage Shuffle=9.9 MiB | | 3, added benchmark: ``` [info] Java HotSpot(TM) 64-Bit Server VM 1.8.0_301-b09 on Linux 5.11.0-41-generic [info] Intel(R) Core(TM) i7-8850H CPU @ 2.60GHz [info] Benchmark Top-K: Best Time(ms) Avg Time(ms) Stdev(ms)Rate(M/s) Per Row(ns) Relative [info] [info] ROW_NUMBER WITHOUT PARTITION 10688 11377 664 2.0 509.6 1.0X [info] ROW_NUMBER WITHOUT PARTITION (RANKLIMIT Sorting) 2678 2962 137 7.8 127.7 4.0X [info] ROW_NUMBER WITHOUT PARTITION (RANKLIMIT TakeOrdered) 1585 1611 19 13.2 75.6 6.7X [info] RANK WITHOUT PARTITION11504 12056 406 1.8 548.6 0.9X [info] RANK WITHOUT PARTITION (RANKLIMIT) 3020 3148 89 6.9 144.0 3.5X [info] DENSE_RANK WITHOUT PARTITION 11728 11915 216 1.8 559.3 0.9X [info] DENSE_RANK WITHOUT PARTITION (RANKLIMIT) 2632 2906 182 8.0 125.5 4.1X [info] ROW_NUMBER WITH PARTITION 23139 24025 500 0.91103.4 0.5X [info] ROW_NUMBER WITH PARTITION (RANKLIMIT Sorting) 7034 7575 361 3.0 335.4 1.5X [info] ROW_NUMBER WITH PARTITION (RANKLIMIT TakeOrdered) 5958 6391 311 3.5 284.1 1.8X [info] RANK WITH PARTITION 24942 26005 795 0.81189.4 0.4X [info] RANK WITH PARTITION (RANKLIMIT)7217 7517 219 2.9 344.1 1.5X [info] DENSE_RANK WITH PARTITION 24843 26726 221 0.81184.6 0.4X [info] DENSE_RANK WITH PARTITION (RANKLIMIT) 7455 7978 560 2.8 355.5 1.4X ``` -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on a diff in pull request #38257: [SPARK-40798][SQL] Alter partition should verify value follow storeAssignmentPolicy

HyukjinKwon commented on code in PR #38257: URL: https://github.com/apache/spark/pull/38257#discussion_r1023455115 ## docs/sql-migration-guide.md: ## @@ -34,6 +34,7 @@ license: | - Valid hexadecimal strings should include only allowed symbols (0-9A-Fa-f). - Valid values for `fmt` are case-insensitive `hex`, `base64`, `utf-8`, `utf8`. - Since Spark 3.4, Spark throws only `PartitionsAlreadyExistException` when it creates partitions but some of them exist already. In Spark 3.3 or earlier, Spark can throw either `PartitionsAlreadyExistException` or `PartitionAlreadyExistsException`. + - Since Spark 3.4, Spark will do validation for partition spec in ALTER PARTITION to follow the behavior of `spark.sql.storeAssignmentPolicy` which may cause an exception if type conversion fails, e.g. `ALTER TABLE .. ADD PARTITION(p='a')` if column `p` is int type. To restore the legacy behavior, set `spark.sql.legacy.skipPartitionSpecTypeValidation` to `true`. Review Comment: just made a PR :-) https://github.com/apache/spark/pull/38667 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon opened a new pull request, #38667: [SPARK-40798][DOCS] Fix a typo in the configuration name at migration guide

HyukjinKwon opened a new pull request, #38667: URL: https://github.com/apache/spark/pull/38667 ### What changes were proposed in this pull request? This PR is a followup of https://github.com/apache/spark/pull/38257 to fix a typo from `spark.sql.legacy.skipPartitionSpecTypeValidation` to `spark.sql.legacy.skipTypeValidationOnAlterPartition`. ### Why are the changes needed? To show users the correct configuration name for legacy behaviours. ### Does this PR introduce _any_ user-facing change? No, doc-only. ### How was this patch tested? N/A -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] mridulm commented on pull request #38064: [SPARK-40622][SQL][CORE]Remove the limitation that single task result must fit in 2GB

mridulm commented on PR #38064: URL: https://github.com/apache/spark/pull/38064#issuecomment-1316229029 Merged to master. Thanks for fixing this @liuzqt ! Thanks for the reviews @Ngone51, @sadikovi, @jiangxb1987 :-) And thanks for help with GA @HyukjinKwon and @Yikun ! -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on a diff in pull request #38257: [SPARK-40798][SQL] Alter partition should verify value follow storeAssignmentPolicy

HyukjinKwon commented on code in PR #38257: URL: https://github.com/apache/spark/pull/38257#discussion_r1023453997 ## docs/sql-migration-guide.md: ## @@ -34,6 +34,7 @@ license: | - Valid hexadecimal strings should include only allowed symbols (0-9A-Fa-f). - Valid values for `fmt` are case-insensitive `hex`, `base64`, `utf-8`, `utf8`. - Since Spark 3.4, Spark throws only `PartitionsAlreadyExistException` when it creates partitions but some of them exist already. In Spark 3.3 or earlier, Spark can throw either `PartitionsAlreadyExistException` or `PartitionAlreadyExistsException`. + - Since Spark 3.4, Spark will do validation for partition spec in ALTER PARTITION to follow the behavior of `spark.sql.storeAssignmentPolicy` which may cause an exception if type conversion fails, e.g. `ALTER TABLE .. ADD PARTITION(p='a')` if column `p` is int type. To restore the legacy behavior, set `spark.sql.legacy.skipPartitionSpecTypeValidation` to `true`. Review Comment: nice catch -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] asfgit closed pull request #38064: [SPARK-40622][SQL][CORE]Remove the limitation that single task result must fit in 2GB

asfgit closed pull request #38064: [SPARK-40622][SQL][CORE]Remove the limitation that single task result must fit in 2GB URL: https://github.com/apache/spark/pull/38064 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on a diff in pull request #38609: [SPARK-40593][BUILD][CONNECT] Make user can build and test `connect` module by specifying the user-defined `protoc` and `protoc

LuciferYang commented on code in PR #38609:

URL: https://github.com/apache/spark/pull/38609#discussion_r1023446576

##

project/SparkBuild.scala:

##

@@ -109,6 +109,14 @@ object SparkBuild extends PomBuild {

if (profiles.contains("jdwp-test-debug")) {

sys.props.put("test.jdwp.enabled", "true")

}

+if (profiles.contains("user-defined-pb")) {

Review Comment:

done

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on a diff in pull request #38609: [SPARK-40593][BUILD][CONNECT] Make user can build and test `connect` module by specifying the user-defined `protoc` and `protoc

LuciferYang commented on code in PR #38609:

URL: https://github.com/apache/spark/pull/38609#discussion_r1023446458

##

connector/connect/pom.xml:

##

@@ -371,4 +350,68 @@

+

+

+ official-pb

Review Comment:

done

##

connector/connect/pom.xml:

##

@@ -371,4 +350,68 @@

+

+

+ official-pb

+

+true

+

+

+

+

+

+org.xolstice.maven.plugins

+protobuf-maven-plugin

+0.6.1

+

+

com.google.protobuf:protoc:${protobuf.version}:exe:${os.detected.classifier}

+ grpc-java

+

io.grpc:protoc-gen-grpc-java:${io.grpc.version}:exe:${os.detected.classifier}

+ src/main/protobuf

+

+

+

+

+ compile

+ compile-custom

+ test-compile

+

+

+

+

+

+

+

+

+ user-defined-pb

Review Comment:

done

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on a diff in pull request #38609: [SPARK-40593][BUILD][CONNECT] Make user can build and test `connect` module by specifying the user-defined `protoc` and `protoc

LuciferYang commented on code in PR #38609: URL: https://github.com/apache/spark/pull/38609#discussion_r1023446300 ## connector/connect/README.md: ## @@ -24,7 +24,31 @@ or ```bash ./build/sbt -Phive clean package ``` - + +### Build with user-defined `protoc` and `protoc-gen-grpc-java` + +When the user cannot use the official `protoc` and `protoc-gen-grpc-java` binary files to build the `connect` module in the compilation environment, +for example, compiling `connect` module on CentOS 6 or CentOS 7 which the default `glibc` version is less than 2.14, we can try to compile and test by +specifying the user-defined `protoc` and `protoc-gen-grpc-java` binary files as follows: + +```bash +export CONNECT_PROTOC_EXEC_PATH=/path-to-protoc-exe +export CONNECT_PLUGIN_EXEC_PATH=/path-to-protoc-gen-grpc-java-exe +./build/mvn -Phive -Puser-defined-pb clean package Review Comment: done ## connector/connect/README.md: ## @@ -24,7 +24,31 @@ or ```bash ./build/sbt -Phive clean package ``` - + +### Build with user-defined `protoc` and `protoc-gen-grpc-java` + +When the user cannot use the official `protoc` and `protoc-gen-grpc-java` binary files to build the `connect` module in the compilation environment, +for example, compiling `connect` module on CentOS 6 or CentOS 7 which the default `glibc` version is less than 2.14, we can try to compile and test by +specifying the user-defined `protoc` and `protoc-gen-grpc-java` binary files as follows: + +```bash +export CONNECT_PROTOC_EXEC_PATH=/path-to-protoc-exe +export CONNECT_PLUGIN_EXEC_PATH=/path-to-protoc-gen-grpc-java-exe +./build/mvn -Phive -Puser-defined-pb clean package +``` + +or + +```bash +export CONNECT_PROTOC_EXEC_PATH=/path-to-protoc-exe +export CONNECT_PLUGIN_EXEC_PATH=/path-to-protoc-gen-grpc-java-exe +./build/sbt -Puser-defined-pb clean package Review Comment: done -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] Yaohua628 commented on a diff in pull request #38663: [SPARK-41143][SQL] Add named argument function syntax support

Yaohua628 commented on code in PR #38663:

URL: https://github.com/apache/spark/pull/38663#discussion_r1023434857

##

sql/catalyst/src/main/scala/org/apache/spark/sql/errors/QueryCompilationErrors.scala:

##

@@ -3380,4 +3380,20 @@ private[sql] object QueryCompilationErrors extends

QueryErrorsBase {

"unsupported" -> unsupported.toString,

"class" -> unsupported.getClass.toString))

}

+

+ def tableFunctionDuplicateNamedArguments(name: String, pos: Int): Throwable

= {

+new AnalysisException(

Review Comment:

Thanks for the feedback!

Honestly, I think the specific error is more like a compilation error

instead of a parsing error?

We can parse it correctly, there is no syntax error, it is more like failing

to resolve/compile the given `UnresolvedTableValuedFunction` into an actual

resolved function.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] Yaohua628 commented on a diff in pull request #38663: [SPARK-41143][SQL] Add named argument function syntax support

Yaohua628 commented on code in PR #38663:

URL: https://github.com/apache/spark/pull/38663#discussion_r1023431618

##

sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/parser/PlanParserSuite.scala:

##

@@ -852,6 +852,31 @@ class PlanParserSuite extends AnalysisTest {

stop = 43))

}

+ test("table valued function with named arguments") {

+// All named arguments

+assertEqual(

+ "select * from my_tvf(arg1 => 'value1', arg2 => true)",

+ UnresolvedTableValuedFunction("my_tvf",

+NamedArgumentExpression("arg1", Literal("value1")) ::

+ NamedArgumentExpression("arg2", Literal(true)) :: Nil,

Seq.empty).select(star()))

+

+// Unnamed and named arguments

+assertEqual(

+ "select * from my_tvf(2, arg1 => 'value1', arg2 => true)",

+ UnresolvedTableValuedFunction("my_tvf",

+Literal(2) ::

+ NamedArgumentExpression("arg1", Literal("value1")) ::

+ NamedArgumentExpression("arg2", Literal(true)) :: Nil,

Seq.empty).select(star()))

+

+// Mixed arguments

+assertEqual(

+ "select * from my_tvf(arg1 => 'value1', 2, arg2 => true)",

Review Comment:

Got it! Good point, added a test, it will throw:

```

org.apache.spark.sql.AnalysisException: could not resolve `my_func` to a

table-valued function; line 1 pos 14;

'Project [*]

+- 'UnresolvedTableValuedFunction [my_func], [jack, age => 18]

```

And the next step, we need to resolve this `UnresolvedTableValuedFunction`

plan to actual function.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] Yaohua628 commented on a diff in pull request #38663: [SPARK-41143][SQL] Add named argument function syntax support

Yaohua628 commented on code in PR #38663:

URL: https://github.com/apache/spark/pull/38663#discussion_r1023428966

##

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/plans/logical/NamedArgumentFunction.scala:

##

@@ -0,0 +1,92 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.catalyst.plans.logical

+

+import java.util.Locale

+

+import scala.collection.mutable

+

+import org.apache.spark.sql.catalyst.expressions.{Expression,

NamedArgumentExpression}

+import

org.apache.spark.sql.errors.QueryCompilationErrors.{tableFunctionDuplicateNamedArguments,

tableFunctionUnexpectedArgument}

+import org.apache.spark.sql.types._

+

+/**

+ * A trait to define a named argument function:

+ * Usage: _FUNC_(arg0, arg1, arg2, arg5 => value5, arg8 => value8)

+ *

+ * - Arguments can be passed positionally or by name

+ * - Positional arguments cannot come after a named argument

+ */

+trait NamedArgumentFunction {

+ /**

+ * A trait [[Param]] that is used to define function parameter

+ * - name: case insensitive name

Review Comment:

Thanks for your feedback!