This is an automated email from the ASF dual-hosted git repository.

srowen pushed a commit to branch branch-3.3

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.3 by this push:

new fdc51c73fb0 [SPARK-40705][SQL] Handle case of using mutable array when converting Row to JSON for Scala 2.13

fdc51c73fb0 is described below

commit fdc51c73fb08eb2cd234cdaf1032a4e54ff0b1a4

Author: Ait Zeouay Amrane <a.zeouayam...@gmail.com>

AuthorDate: Mon Oct 10 10:18:51 2022 -0500

[SPARK-40705][SQL] Handle case of using mutable array when converting Row to JSON for Scala 2.13

### What changes were proposed in this pull request?

I ran into an issue when reading JSON files with a schema in Spark: every time, it throws an exception related to type conversion.

>Note: This issue can be reproduced only with Scala `2.13`; I do not see it with `2.12`.

````

Failed to convert value ArraySeq(1, 2, 3) (class of class scala.collection.mutable.ArraySeq$ofRef}) with the type of ArrayType(StringType,true) to JSON.

java.lang.IllegalArgumentException: Failed to convert value ArraySeq(1, 2, 3) (class of class scala.collection.mutable.ArraySeq$ofRef}) with the type of ArrayType(StringType,true) to JSON.

````

If I add `mutable.ArraySeq` to the matching cases, the test that I added passes.

With the current source code, the test fails with the error shown above.
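
A likely explanation, which the description above does not spell out: in Scala 2.13 the default `Seq` alias refers to `scala.collection.immutable.Seq`, whereas in 2.12 it refers to `scala.collection.Seq`. A `mutable.ArraySeq` is therefore no longer caught by a `case s: Seq[_]` pattern on 2.13. The sketch below reduces the match to plain strings to show the effect; `SeqMatchDemo`, `describe`, and `describeFixed` are hypothetical names for illustration, not part of Spark.

```scala
import scala.collection.mutable

object SeqMatchDemo {
  // Same case order as the match before the patch, reduced to labels.
  def describe(value: Any): String = value match {
    case _: Array[_] => "Array"
    case _: Seq[_]   => "Seq"        // immutable.Seq on 2.13, collection.Seq on 2.12
    case _           => "unmatched"  // stands in for the "Failed to convert" error
  }

  // Same match with the extra case this patch adds ahead of Seq.
  def describeFixed(value: Any): String = value match {
    case _: Array[_]            => "Array"
    case _: mutable.ArraySeq[_] => "mutable.ArraySeq"
    case _: Seq[_]              => "Seq"
    case _                      => "unmatched"
  }
}
```

On Scala 2.13, `SeqMatchDemo.describe(mutable.ArraySeq("1", "2", "3"))` falls through to `"unmatched"`, while on 2.12 it returns `"Seq"`. `describeFixed` returns `"mutable.ArraySeq"` on both versions.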

### Why are the changes needed?

Users on Scala 2.13 can't parse an array, which means they need to fall back to 2.12 to keep their project functioning.

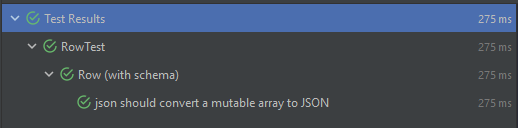

### How was this patch tested?

I added a sample unit test for the case, but I can add more if you want to.

Closes #38154 from Amraneze/fix/spark_40705.

Authored-by: Ait Zeouay Amrane <a.zeouayam...@gmail.com>

Signed-off-by: Sean Owen <sro...@gmail.com>

(cherry picked from commit 9a97f8c62bcd1ad9f34c6318792ae443af46ea85)

Signed-off-by: Sean Owen <sro...@gmail.com>

---

sql/catalyst/src/main/scala/org/apache/spark/sql/Row.scala | 2 ++

.../src/test/scala/org/apache/spark/sql/RowTest.scala | 11 +++++++++++

2 files changed, 13 insertions(+)

diff --git a/sql/catalyst/src/main/scala/org/apache/spark/sql/Row.scala b/sql/catalyst/src/main/scala/org/apache/spark/sql/Row.scala

index 4f6c9a8c703..72e1dd94c94 100644

--- a/sql/catalyst/src/main/scala/org/apache/spark/sql/Row.scala

+++ b/sql/catalyst/src/main/scala/org/apache/spark/sql/Row.scala

@@ -584,6 +584,8 @@ trait Row extends Serializable {

case (i: CalendarInterval, _) => JString(i.toString)

case (a: Array[_], ArrayType(elementType, _)) =>

iteratorToJsonArray(a.iterator, elementType)

+ case (a: mutable.ArraySeq[_], ArrayType(elementType, _)) =>

+ iteratorToJsonArray(a.iterator, elementType)

case (s: Seq[_], ArrayType(elementType, _)) =>

iteratorToJsonArray(s.iterator, elementType)

case (m: Map[String @unchecked, _], MapType(StringType, valueType, _)) =>

diff --git a/sql/catalyst/src/test/scala/org/apache/spark/sql/RowTest.scala b/sql/catalyst/src/test/scala/org/apache/spark/sql/RowTest.scala

index 385f7497368..82731cdb220 100644

--- a/sql/catalyst/src/test/scala/org/apache/spark/sql/RowTest.scala

+++ b/sql/catalyst/src/test/scala/org/apache/spark/sql/RowTest.scala

@@ -17,6 +17,9 @@

package org.apache.spark.sql

+import scala.collection.mutable.ArraySeq

+

+import org.json4s.JsonAST.{JArray, JObject, JString}

import org.scalatest.funspec.AnyFunSpec

import org.scalatest.matchers.must.Matchers

import org.scalatest.matchers.should.Matchers._

@@ -91,6 +94,14 @@ class RowTest extends AnyFunSpec with Matchers {

it("getAs() on type extending AnyVal does not throw exception when value is null") {

sampleRowWithoutCol3.getAs[String](sampleRowWithoutCol3.fieldIndex("col1")) shouldBe null

}

+

+ it("json should convert a mutable array to JSON") {

+ val schema = new StructType().add(StructField("list", ArrayType(StringType)))

+ val values = ArraySeq("1", "2", "3")

+ val row = new GenericRowWithSchema(Array(values), schema)

+ val expectedList = JArray(JString("1") :: JString("2") :: JString("3") :: Nil)

+ row.jsonValue shouldBe new JObject(("list", expectedList) :: Nil)

+ }

}

describe("row equals") {
