Repository: spark

Updated Branches:

refs/heads/branch-1.5 8a7956283 - 8b00c0690

[SPARK-8978] [STREAMING] Implements the DirectKafkaRateController

Author: Dean Wampler d...@concurrentthought.com

Author: Nilanjan Raychaudhuri nraychaudh...@gmail.com

Author: François Garillot

Repository: spark

Updated Branches:

refs/heads/master 0d7aac99d - a1bbf1bc5

[SPARK-8978] [STREAMING] Implements the DirectKafkaRateController

Author: Dean Wampler d...@concurrentthought.com

Author: Nilanjan Raychaudhuri nraychaudh...@gmail.com

Author: François Garillot franc...@garillot.net

Repository: spark

Updated Branches:

refs/heads/branch-1.5 3997dd3fd - 8ecfb05e3

[DOCS] [STREAMING] make the existing parameter docs for OffsetRange acâ¦

â¦tually visible

Author: cody koeninger c...@koeninger.org

Closes #7995 from koeninger/doc-fixes and squashes the following commits:

Repository: spark

Updated Branches:

refs/heads/master 0a078303d - 1723e3489

[DOCS] [STREAMING] make the existing parameter docs for OffsetRange acâ¦

â¦tually visible

Author: cody koeninger c...@koeninger.org

Closes #7995 from koeninger/doc-fixes and squashes the following commits:

Repository: spark

Updated Branches:

refs/heads/master 1f62f104c - 54c0789a0

[SPARK-9493] [ML] add featureIndex to handle vector features in

IsotonicRegression

This PR contains the following changes:

* add `featureIndex` to handle vector features (in order to chain isotonic

regression easily

Repository: spark

Updated Branches:

refs/heads/branch-1.5 92e8acc98 - ee43d355b

[SPARK-9493] [ML] add featureIndex to handle vector features in

IsotonicRegression

This PR contains the following changes:

* add `featureIndex` to handle vector features (in order to chain isotonic

regression

Repository: spark

Updated Branches:

refs/heads/master 54c0789a0 - abfedb9cd

[SPARK-9211] [SQL] [TEST] normalize line separators before generating MD5 hash

The golden answer file names for the existing Hive comparison tests were

generated using a MD5 hash of the query text which uses

Repository: spark

Updated Branches:

refs/heads/branch-1.5 3137628bc - 3997dd3fd

[SPARK-9556] [SPARK-9619] [SPARK-9624] [STREAMING] Make BlockGenerator more

robust and make all BlockGenerators subscribe to rate limit updates

In some receivers, instead of using the default `BlockGenerator` in

[SPARK-9630] [SQL] Clean up new aggregate operators (SPARK-9240 follow up)

This is the followup of https://github.com/apache/spark/pull/7813. It renames

`HybridUnsafeAggregationIterator` to `TungstenAggregationIterator` and makes it

only work with `UnsafeRow`. Also, I add a `TungstenAggregate`

[SPARK-9630] [SQL] Clean up new aggregate operators (SPARK-9240 follow up)

This is the followup of https://github.com/apache/spark/pull/7813. It renames

`HybridUnsafeAggregationIterator` to `TungstenAggregationIterator` and makes it

only work with `UnsafeRow`. Also, I add a `TungstenAggregate`

Repository: spark

Updated Branches:

refs/heads/branch-1.5 980687206 - 272e88342

http://git-wip-us.apache.org/repos/asf/spark/blob/272e8834/sql/core/src/main/scala/org/apache/spark/sql/execution/aggregate/utils.scala

--

diff

Repository: spark

Updated Branches:

refs/heads/master 346209097 - 3504bf3aa

http://git-wip-us.apache.org/repos/asf/spark/blob/3504bf3a/sql/core/src/main/scala/org/apache/spark/sql/execution/aggregate/utils.scala

--

diff --git

Repository: spark

Updated Branches:

refs/heads/branch-1.5 272e88342 - d0a648ca1

[SPARK-9645] [YARN] [CORE] Allow shuffle service to read shuffle files.

Spark should not mess with the permissions of directories created

by the cluster manager. Here, by setting the block manager dir

permissions

Repository: spark

Updated Branches:

refs/heads/master 3504bf3aa - e234ea1b4

[SPARK-9645] [YARN] [CORE] Allow shuffle service to read shuffle files.

Spark should not mess with the permissions of directories created

by the cluster manager. Here, by setting the block manager dir

permissions to

Repository: spark

Updated Branches:

refs/heads/branch-1.5 8b00c0690 - d5f788121

[SPARK-9618] [SQL] Use the specified schema when reading Parquet files

The user specified schema is currently ignored when loading Parquet files.

One workaround is to use the `format` and `load` methods instead

Repository: spark

Updated Branches:

refs/heads/master 21fdfd7d6 - 0a078303d

[SPARK-9556] [SPARK-9619] [SPARK-9624] [STREAMING] Make BlockGenerator more

robust and make all BlockGenerators subscribe to rate limit updates

In some receivers, instead of using the default `BlockGenerator` in

Repository: spark

Updated Branches:

refs/heads/branch-1.5 d5f788121 - 3d247672b

[SPARK-9381] [SQL] Migrate JSON data source to the new partitioning data source

Support partitioning for the JSON data source.

Still 2 open issues for the `HadoopFsRelation`

- `refresh()` will invoke the

Repository: spark

Updated Branches:

refs/heads/branch-1.5 3d247672b - 92e8acc98

[SPARK-6923] [SPARK-7550] [SQL] Persists data source relations in Hive

compatible format when possible

This PR is a fork of PR #5733 authored by chenghao-intel. For committers who's

going to merge this PR,

Repository: spark

Updated Branches:

refs/heads/master abfedb9cd - 21fdfd7d6

[SPARK-9548][SQL] Add a destructive iterator for BytesToBytesMap

This pull request adds a destructive iterator to BytesToBytesMap. When used,

the iterator frees pages as it traverses them. This is part of the effort

Repository: spark

Updated Branches:

refs/heads/master a1bbf1bc5 - 1f62f104c

[SPARK-9632][SQL] update InternalRow.toSeq to make it accept data type info

This re-applies #7955, which was reverted due to a race condition to fix build

breaking.

Author: Wenchen Fan cloud0...@outlook.com

Author:

Repository: spark

Updated Branches:

refs/heads/branch-1.5 990b4bf7c - 3137628bc

[SPARK-9548][SQL] Add a destructive iterator for BytesToBytesMap

This pull request adds a destructive iterator to BytesToBytesMap. When used,

the iterator frees pages as it traverses them. This is part of the

Repository: spark

Updated Branches:

refs/heads/branch-1.5 8ecfb05e3 - 980687206

[SPARK-9639] [STREAMING] Fix a potential NPE in Streaming JobScheduler

Because `JobScheduler.stop(false)` may set `eventLoop` to null when

`JobHandler` is running, then it's possible that when `post` is called,

Repository: spark

Updated Branches:

refs/heads/master 672f46766 - f0cda587f

[SPARK-7550] [SQL] [MINOR] Fixes logs when persisting DataFrames

Author: Cheng Lian l...@databricks.com

Closes #8021 from liancheng/spark-7550/fix-logs and squashes the following

commits:

b7bd0ed [Cheng Lian] Fixes

Repository: spark

Updated Branches:

refs/heads/master fe12277b4 - 672f46766

[SPARK-8057][Core]Call TaskAttemptContext.getTaskAttemptID using Reflection

Someone may use the Spark core jar in the maven repo with hadoop 1. SPARK-2075

has already resolved the compatibility issue to support it.

Repository: spark

Updated Branches:

refs/heads/branch-1.5 5491dfb9a - e902c4f26

[SPARK-8057][Core]Call TaskAttemptContext.getTaskAttemptID using Reflection

Someone may use the Spark core jar in the maven repo with hadoop 1. SPARK-2075

has already resolved the compatibility issue to support

Repository: spark

Updated Branches:

refs/heads/master 17284db31 - fe12277b4

Fix doc typo

Straightforward fix on doc typo

Author: Jeff Zhang zjf...@apache.org

Closes #8019 from zjffdu/master and squashes the following commits:

aed6e64 [Jeff Zhang] Fix doc typo

Project:

Repository: spark

Updated Branches:

refs/heads/branch-1.5 472f0dc34 - 5491dfb9a

Fix doc typo

Straightforward fix on doc typo

Author: Jeff Zhang zjf...@apache.org

Closes #8019 from zjffdu/master and squashes the following commits:

aed6e64 [Jeff Zhang] Fix doc typo

(cherry picked from

Repository: spark

Updated Branches:

refs/heads/branch-1.5 e902c4f26 - aedc8f3c3

[SPARK-7550] [SQL] [MINOR] Fixes logs when persisting DataFrames

Author: Cheng Lian l...@databricks.com

Closes #8021 from liancheng/spark-7550/fix-logs and squashes the following

commits:

b7bd0ed [Cheng Lian]

Repository: spark

Updated Branches:

refs/heads/master f0cda587f - 7aaed1b11

[SPARK-8862][SQL]Support multiple SQLContexts in Web UI

This is a follow-up PR to solve the UI issue when there are multiple

SQLContexts. Each SQLContext has a separate tab and contains queries which are

executed by

Repository: spark

Updated Branches:

refs/heads/branch-1.5 aedc8f3c3 - c34fdaf55

[SPARK-8862][SQL]Support multiple SQLContexts in Web UI

This is a follow-up PR to solve the UI issue when there are multiple

SQLContexts. Each SQLContext has a separate tab and contains queries which are

Repository: spark

Updated Branches:

refs/heads/branch-1.4 10ea6fb4d - 116f61187

[SPARK-9633] [BUILD] SBT download locations outdated; need an update

Remove 2 defunct SBT download URLs and replace with the 1 known download URL.

Also, use https.

Follow up on

Repository: spark

Updated Branches:

refs/heads/branch-1.3 384793dff - b104501d3

[SPARK-9633] [BUILD] SBT download locations outdated; need an update

Remove 2 defunct SBT download URLs and replace with the 1 known download URL.

Also, use https.

Follow up on

Repository: spark

Updated Branches:

refs/heads/branch-1.5 d0a648ca1 - 985e454cb

[SPARK-9633] [BUILD] SBT download locations outdated; need an update

Remove 2 defunct SBT download URLs and replace with the 1 known download URL.

Also, use https.

Follow up on

Repository: spark

Updated Branches:

refs/heads/master e234ea1b4 - 681e3024b

[SPARK-9633] [BUILD] SBT download locations outdated; need an update

Remove 2 defunct SBT download URLs and replace with the 1 known download URL.

Also, use https.

Follow up on

Repository: spark

Updated Branches:

refs/heads/master 4e70e8256 - 0867b23c7

[SPARK-9650][SQL] Fix quoting behavior on interpolated column names

Make sure that `$column` is consistent with other methods with respect to

backticks. Adds a bunch of tests for various ways of constructing

Repository: spark

Updated Branches:

refs/heads/branch-1.5 985e454cb - 75b4e5ab3

[SPARK-9691] [SQL] PySpark SQL rand function treats seed 0 as no seed

https://issues.apache.org/jira/browse/SPARK-9691

jkbradley rxin

Author: Yin Huai yh...@databricks.com

Closes #7999 from yhuai/pythonRand and

Repository: spark

Updated Branches:

refs/heads/master 681e3024b - baf4587a5

[SPARK-9691] [SQL] PySpark SQL rand function treats seed 0 as no seed

https://issues.apache.org/jira/browse/SPARK-9691

jkbradley rxin

Author: Yin Huai yh...@databricks.com

Closes #7999 from yhuai/pythonRand and

Repository: spark

Updated Branches:

refs/heads/branch-1.5 b4feccf6c - 9be9d3842

[SPARK-9650][SQL] Fix quoting behavior on interpolated column names

Make sure that `$column` is consistent with other methods with respect to

backticks. Adds a bunch of tests for various ways of constructing

Repository: spark

Updated Branches:

refs/heads/branch-1.5 75b4e5ab3 - b4feccf6c

[SPARK-9228] [SQL] use tungsten.enabled in public for both of codegen/unsafe

spark.sql.tungsten.enabled will be the default value for both codegen and

unsafe, they are kept internally for debug/testing.

cc

Repository: spark

Updated Branches:

refs/heads/master baf4587a5 - 4e70e8256

[SPARK-9228] [SQL] use tungsten.enabled in public for both of codegen/unsafe

spark.sql.tungsten.enabled will be the default value for both codegen and

unsafe, they are kept internally for debug/testing.

cc marmbrus

Repository: spark

Updated Branches:

refs/heads/branch-1.4 116f61187 - e5a994f21

[SPARK-9691] [SQL] PySpark SQL rand function treats seed 0 as no seed

https://issues.apache.org/jira/browse/SPARK-9691

jkbradley rxin

Author: Yin Huai yh...@databricks.com

Closes #7999 from yhuai/pythonRand and

Repository: spark

Updated Branches:

refs/heads/master b87825310 - 014a9f9d8

[SPARK-9709] [SQL] Avoid starving unsafe operators that use sort

The issue is that a task may run multiple sorts, and the sorts run by the child

operator (i.e. parent RDD) may acquire all available memory such that

Repository: spark

Updated Branches:

refs/heads/master 0867b23c7 - 49b1504fe

Revert [SPARK-9228] [SQL] use tungsten.enabled in public for both of

codegen/unsafe

This reverts commit 4e70e8256ce2f45b438642372329eac7b1e9e8cf.

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit:

Repository: spark

Updated Branches:

refs/heads/branch-1.5 9be9d3842 - 37b6403cb

[SPARK-9692] Remove SqlNewHadoopRDD's generated Tuple2 and

InterruptibleIterator.

A small performance optimization â we don't need to generate a Tuple2 and

then immediately discard the key. We also don't need

Repository: spark

Updated Branches:

refs/heads/master 49b1504fe - b87825310

[SPARK-9692] Remove SqlNewHadoopRDD's generated Tuple2 and

InterruptibleIterator.

A small performance optimization â we don't need to generate a Tuple2 and

then immediately discard the key. We also don't need an

Repository: spark

Updated Branches:

refs/heads/branch-1.5 37b6403cb - 472f0dc34

[SPARK-9709] [SQL] Avoid starving unsafe operators that use sort

The issue is that a task may run multiple sorts, and the sorts run by the child

operator (i.e. parent RDD) may acquire all available memory such

Repository: spark

Updated Branches:

refs/heads/master 98e69467d - 5e1b0ef07

[SPARK-9659][SQL] Rename inSet to isin to match Pandas function.

Inspiration drawn from this blog post:

https://lab.getbase.com/pandarize-spark-dataframes/

Author: Reynold Xin r...@databricks.com

Closes #7977 from

Repository: spark

Updated Branches:

refs/heads/branch-1.5 78f168e97 - 6b8d2d7ed

[SPARK-9659][SQL] Rename inSet to isin to match Pandas function.

Inspiration drawn from this blog post:

https://lab.getbase.com/pandarize-spark-dataframes/

Author: Reynold Xin r...@databricks.com

Closes #7977

Repository: spark

Updated Branches:

refs/heads/branch-1.5 b51159def - 8a7956283

[SPARK-9641] [DOCS] spark.shuffle.service.port is not documented

Document spark.shuffle.service.{enabled,port}

CC sryza tgravescs

This is pretty minimal; is there more to say here about the service?

Author: Sean

Repository: spark

Updated Branches:

refs/heads/master 93085c992 - 9f94c85ff

[SPARK-9593] [SQL] [HOTFIX] Makes the Hadoop shims loading fix more robust

This is a follow-up of #7929.

We found that Jenkins SBT master build still fails because of the Hadoop shims

loading issue. But the failure

Repository: spark

Updated Branches:

refs/heads/branch-1.5 c39d5d144 - 11c28a568

[SPARK-9593] [SQL] Fixes Hadoop shims loading

This PR is used to workaround CDH Hadoop versions like 2.0.0-mr1-cdh4.1.1.

Internally, Hive `ShimLoader` tries to load different versions of Hadoop shims

by checking

Repository: spark

Updated Branches:

refs/heads/branch-1.5 e24b97650 - 78f168e97

[SPARK-9615] [SPARK-9616] [SQL] [MLLIB] Bugs related to FrequentItems when

merging and with Tungsten

In short:

1- FrequentItems should not use the InternalRow representation, because the

keys in the map get

Repository: spark

Updated Branches:

refs/heads/master c5c6aded6 - 076ec0568

[SPARK-9533] [PYSPARK] [ML] Add missing methods in Word2Vec ML

After https://github.com/apache/spark/pull/7263 it is pretty straightforward to

Python wrappers.

Author: MechCoder manojkumarsivaraj...@gmail.com

Repository: spark

Updated Branches:

refs/heads/branch-1.5 70b9ed11d - e24b97650

[SPARK-9533] [PYSPARK] [ML] Add missing methods in Word2Vec ML

After https://github.com/apache/spark/pull/7263 it is pretty straightforward to

Python wrappers.

Author: MechCoder manojkumarsivaraj...@gmail.com

Repository: spark

Updated Branches:

refs/heads/master 5e1b0ef07 - 6e009cb9c

[SPARK-9632][SQL] update InternalRow.toSeq to make it accept data type info

Author: Wenchen Fan cloud0...@outlook.com

Closes #7955 from cloud-fan/toSeq and squashes the following commits:

21665e2 [Wenchen Fan] fix

Repository: spark

Updated Branches:

refs/heads/branch-1.5 6b8d2d7ed - 2382b483a

[SPARK-9632][SQL] update InternalRow.toSeq to make it accept data type info

Author: Wenchen Fan cloud0...@outlook.com

Closes #7955 from cloud-fan/toSeq and squashes the following commits:

21665e2 [Wenchen Fan]

Repository: spark

Updated Branches:

refs/heads/master 6e009cb9c - 2eca46a17

Revert [SPARK-9632][SQL] update InternalRow.toSeq to make it accept data type

info

This reverts commit 6e009cb9c4d7a395991e10dab427f37019283758.

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit:

Repository: spark

Updated Branches:

refs/heads/branch-1.5 2382b483a - b51159def

[SPARK-9632] [SQL] [HOT-FIX] Fix build.

seems https://github.com/apache/spark/pull/7955 breaks the build.

Author: Yin Huai yh...@databricks.com

Closes #8001 from yhuai/SPARK-9632-fixBuild and squashes the

Repository: spark

Updated Branches:

refs/heads/master 2eca46a17 - cdd53b762

[SPARK-9632] [SQL] [HOT-FIX] Fix build.

seems https://github.com/apache/spark/pull/7955 breaks the build.

Author: Yin Huai yh...@databricks.com

Closes #8001 from yhuai/SPARK-9632-fixBuild and squashes the following

Repository: spark

Updated Branches:

refs/heads/branch-1.5 11c28a568 - cc4c569a8

[SPARK-9593] [SQL] [HOTFIX] Makes the Hadoop shims loading fix more robust

This is a follow-up of #7929.

We found that Jenkins SBT master build still fails because of the Hadoop shims

loading issue. But the

Repository: spark

Updated Branches:

refs/heads/master 9f94c85ff - c5c6aded6

[SPARK-9112] [ML] Implement Stats for LogisticRegression

I have added support for stats in LogisticRegression. The API is similar to

that of LinearRegression with LogisticRegressionTrainingSummary and

Repository: spark

Updated Branches:

refs/heads/branch-1.5 cc4c569a8 - 70b9ed11d

[SPARK-9112] [ML] Implement Stats for LogisticRegression

I have added support for stats in LogisticRegression. The API is similar to

that of LinearRegression with LogisticRegressionTrainingSummary and

Repository: spark

Updated Branches:

refs/heads/master 076ec0568 - 98e69467d

[SPARK-9615] [SPARK-9616] [SQL] [MLLIB] Bugs related to FrequentItems when

merging and with Tungsten

In short:

1- FrequentItems should not use the InternalRow representation, because the

keys in the map get messed

Repository: spark

Updated Branches:

refs/heads/master aead18ffc - 5b965d64e

[SPARK-9644] [SQL] Support update DecimalType with precision 18 in UnsafeRow

In order to support update a varlength (actually fixed length) object, the

space should be preserved even it's null. And, we can't call

Repository: spark

Updated Branches:

refs/heads/branch-1.5 43b30bc6c - c39d5d144

[SPARK-9482] [SQL] Fix thread-safey issue of using UnsafeProjection in join

This PR also change to use `def` instead of `lazy val` for UnsafeProjection,

because it's not thread safe.

TODO: cleanup the debug code

Repository: spark

Updated Branches:

refs/heads/master 5b965d64e - 93085c992

[SPARK-9482] [SQL] Fix thread-safey issue of using UnsafeProjection in join

This PR also change to use `def` instead of `lazy val` for UnsafeProjection,

because it's not thread safe.

TODO: cleanup the debug code

Repository: spark

Updated Branches:

refs/heads/branch-1.5 29ace3bbf - cab86c4b7

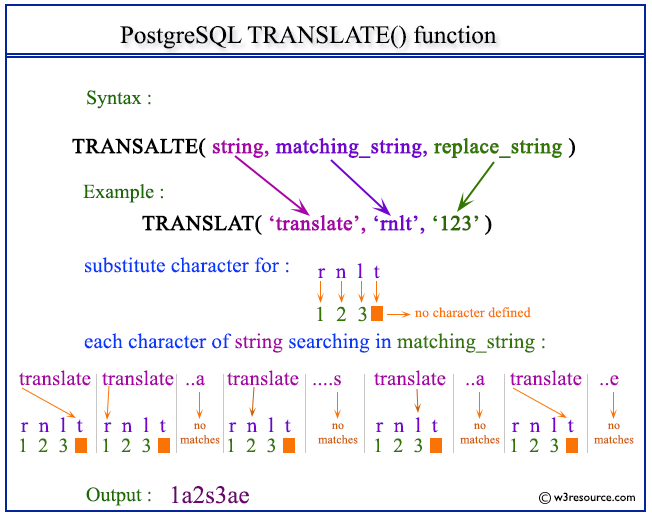

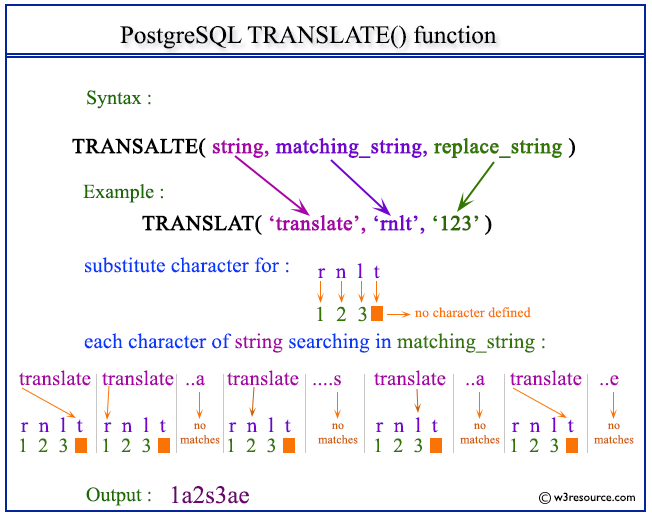

[SPARK-8266] [SQL] add function translate

Author: zhichao.li zhichao...@intel.com

Closes #7709 from zhichao-li/translate and

Repository: spark

Updated Branches:

refs/heads/master d5a9af323 - aead18ffc

[SPARK-8266] [SQL] add function translate

Author: zhichao.li zhichao...@intel.com

Closes #7709 from zhichao-li/translate and

68 matches

Mail list logo