GitHub user lovezeropython opened a pull request:

https://github.com/apache/spark/pull/21870

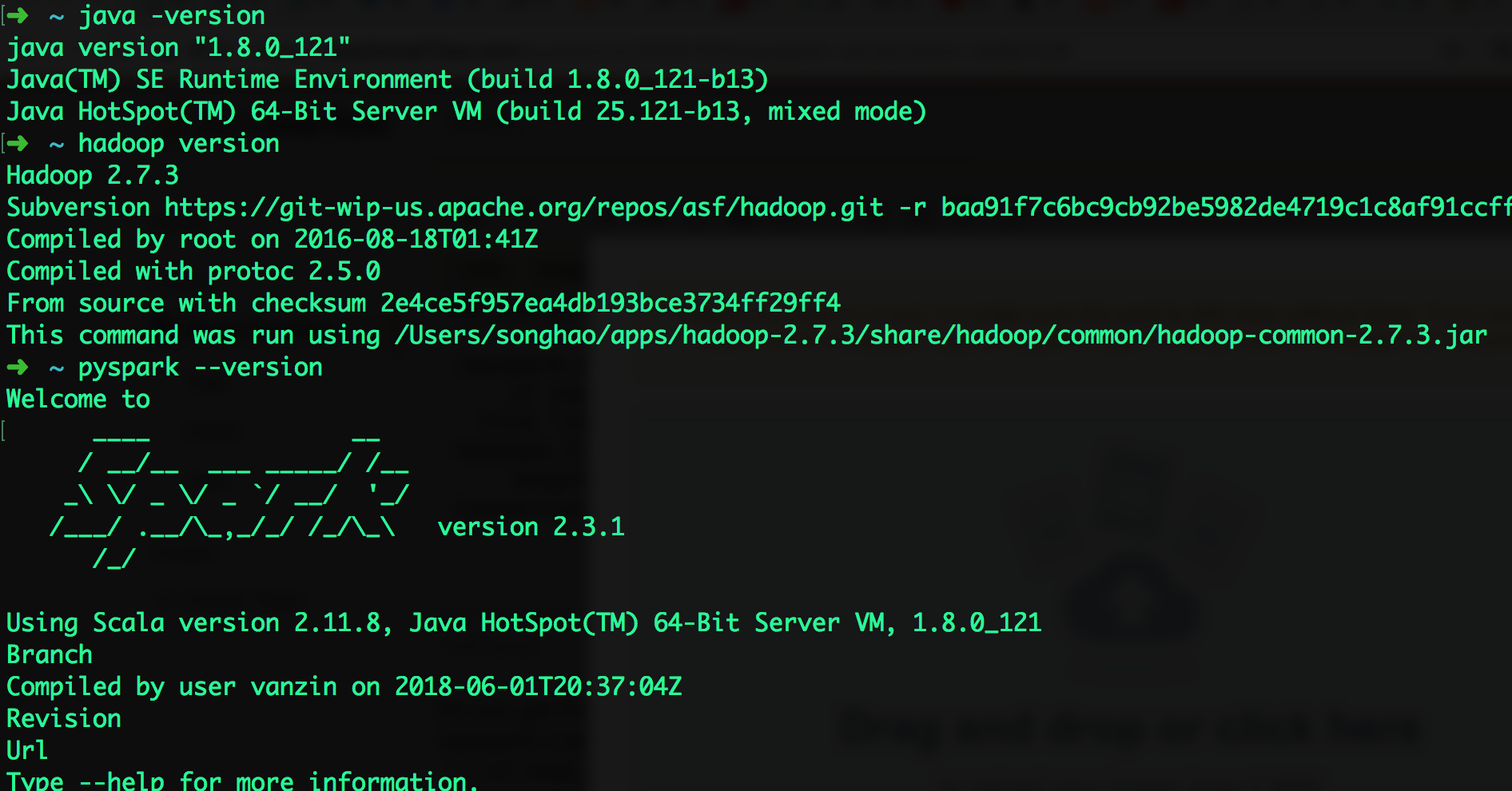

Branch 2.3

EOFError

# ConnectionResetError: [Errno 54] Connection reset by peer

(Please fill in changes proposed in this fix)

```

/pyspark.zip/pyspark/worker.py", line 255, in main

if read_int(infile) == SpecialLengths.END_OF_STREAM:

File

"/Users/songhao/apps/spark-2.3.1-bin-hadoop2.7/python/lib/pyspark.zip/pyspark/serializers.py",

line 683, in read_int

length = stream.read(4)

ConnectionResetError: [Errno 54] Connection reset by peerenter code here

```

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/apache/spark branch-2.3

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/21870.patch

To close this pull request, make a commit to your master/trunk branch

with (at least) the following in the commit message:

This closes #21870

commit 3737c3d32bb92e73cadaf3b1b9759d9be00b288d

Author: gatorsmile

Date: 2018-02-13T06:05:13Z

[SPARK-20090][FOLLOW-UP] Revert the deprecation of `names` in PySpark

## What changes were proposed in this pull request?

Deprecating the field `name` in PySpark is not expected. This PR is to

revert the change.

## How was this patch tested?

N/A

Author: gatorsmile

Closes #20595 from gatorsmile/removeDeprecate.

(cherry picked from commit 407f67249639709c40c46917700ed6dd736daa7d)

Signed-off-by: hyukjinkwon

commit 1c81c0c626f115fbfe121ad6f6367b695e9f3b5f

Author: guoxiaolong

Date: 2018-02-13T12:23:10Z

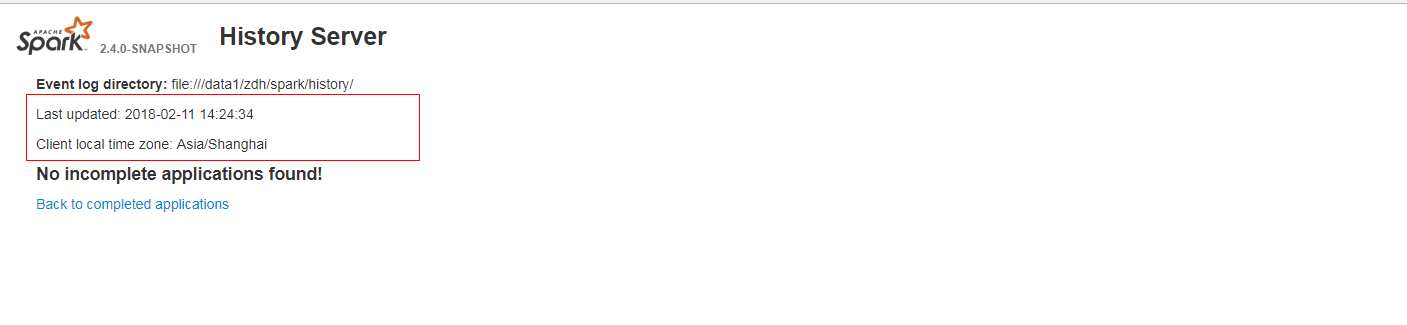

[SPARK-23384][WEB-UI] When it has no incomplete(completed) applications

found, the last updated time is not formatted and client local time zone is not

show in history server web ui.

## What changes were proposed in this pull request?

When it has no incomplete(completed) applications found, the last updated

time is not formatted and client local time zone is not show in history server

web ui. It is a bug.

fix before:

fix after:

## How was this patch tested?

(Please explain how this patch was tested. E.g. unit tests, integration

tests, manual tests)

(If this patch involves UI changes, please attach a screenshot; otherwise,

remove this)

Please review http://spark.apache.org/contributing.html before opening a

pull request.

Author: guoxiaolong

Closes #20573 from guoxiaolongzte/SPARK-23384.

(cherry picked from commit 300c40f50ab4258d697f06a814d1491dc875c847)

Signed-off-by: Sean Owen

commit dbb1b399b6cf8372a3659c472f380142146b1248

Author: huangtengfei

Date: 2018-02-13T15:59:21Z

[SPARK-23053][CORE] taskBinarySerialization and task partitions calculate

in DagScheduler.submitMissingTasks should keep the same RDD checkpoint status

## What changes were proposed in this pull request?

When we run concurrent jobs using the same rdd which is marked to do

checkpoint. If one job has finished running the job, and start the process of

RDD.doCheckpoint, while another job is submitted, then submitStage and

submitMissingTasks will be called. In

[submitMissingTasks](https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/scheduler/DAGScheduler.scala#L961),

will serialize taskBinaryBytes and calculate task partitions which are both

affected by the status of checkpoint, if the former is calculated before

doCheckpoint finished, while the latter is calculated after doCheckpoint

finished, when run task, rdd.compute will be called, for some rdds with

particular partition type such as

[UnionRDD](https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/rdd/UnionRDD.scala)

who will do partition type cast, will get a ClassCastException because the

part params is actually a CheckpointRDDPartition.

This error occurs because rdd.doCheckpoint occurs in the same thread that

called sc.runJob, while the task serialization occurs in the DAGSchedulers

event loop.

## How was this patch tested?

the exist uts and also add a test case in DAGScheduerSuite to show the

exception case.

Author: huangtengfei

Closes #20244 from ivoson/branch-taskpart-mistype.

(cherry picked from commit 091a000d27f324de8c5c527880854ecfcf5de9a4)

Signed-off-by: Imran Rashid

commit ab01ba718c7752b564e801a1ea546aedc2055dc0

Author: Bogdan Raducanu

Date: 2018-02-13T17:49:52Z

[SPARK-23316][SQL]