[GitHub] spark issue #21644: [SPARK-24660][SHS] Show correct error pages when downloa...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21644 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21644: [SPARK-24660][SHS] Show correct error pages when downloa...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21644 **[Test build #92384 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/92384/testReport)** for PR 21644 at commit [`0be2525`](https://github.com/apache/spark/commit/0be25254a3f045babd36011c81733acc23fe3cfc).

[GitHub] spark pull request #21611: [SPARK-24569][SQL] Aggregator with output type Op...

Github user viirya commented on a diff in the pull request:

https://github.com/apache/spark/pull/21611#discussion_r198509965

--- Diff: sql/core/src/test/scala/org/apache/spark/sql/DatasetAggregatorSuite.scala ---

```diff
@@ -333,4 +406,28 @@ class DatasetAggregatorSuite extends QueryTest with SharedSQLContext {
       df.groupBy($"i").agg(VeryComplexResultAgg.toColumn),
       Row(1, Row(Row(1, "a"), Row(1, "a"))) :: Row(2, Row(Row(2, "bc"), Row(2, "bc"))) :: Nil)
   }
+
+  test("SPARK-24569: Aggregator with output type Option[Boolean] creates column of type Row") {
+    val df = Seq(
+      OptionBooleanData("bob", Some(true)),
+      OptionBooleanData("bob", Some(false)),
+      OptionBooleanData("bob", None)).toDF()
+    val group = df
+      .groupBy("name")
```

--- End diff --

Yes, if you use a similar `Aggregator` with `groupByKey`, you get a struct too:

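```scala
// OptionBooleanData and OptionBooleanAggregator are test helpers from this PR;
// `import spark.implicits._` is assumed to be in scope, as in the test suite.
import org.apache.spark.sql.Row

val df = Seq(
  OptionBooleanData("bob", Some(true)),
  OptionBooleanData("bob", Some(false)),
  OptionBooleanData("bob", None)).toDF()

val df2 = df.groupByKey((r: Row) => r.getString(0))
  .agg(OptionBooleanAggregator("isGood").toColumn)

df2.printSchema
```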

```
root
 |-- value: string (nullable = true)
 |-- OptionBooleanAggregator(org.apache.spark.sql.Row): struct (nullable = true)
 |    |-- value: boolean (nullable = true)
```


[GitHub] spark issue #21533: [SPARK-24195][Core] Bug fix for local:/ path in SparkCon...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21533 Merged build finished. Test PASSed.

[GitHub] spark issue #21553: [SPARK-24215][PySpark][Follow Up] Implement eager evalua...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21553 **[Test build #92377 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/92377/testReport)** for PR 21553 at commit [`00ae164`](https://github.com/apache/spark/commit/00ae164b535f5e4be6bfa2b496124760d0cdafdd). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark issue #21553: [SPARK-24215][PySpark][Follow Up] Implement eager evalua...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21553 Merged build finished. Test PASSed.

[GitHub] spark issue #21553: [SPARK-24215][PySpark][Follow Up] Implement eager evalua...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21553 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/92377/ Test PASSed.

[GitHub] spark issue #18900: [SPARK-21687][SQL] Spark SQL should set createTime for H...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/18900 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/92376/ Test PASSed.

[GitHub] spark issue #18900: [SPARK-21687][SQL] Spark SQL should set createTime for H...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/18900 Merged build finished. Test PASSed.

[GitHub] spark issue #18900: [SPARK-21687][SQL] Spark SQL should set createTime for H...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/18900 **[Test build #92376 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/92376/testReport)** for PR 18900 at commit [`18c85b6`](https://github.com/apache/spark/commit/18c85b61139e2b9d434214b9082b43a46e1c8787). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark issue #21389: [SPARK-24204][SQL] Verify a schema in Json/Orc/ParquetFi...

Github user maropu commented on the issue: https://github.com/apache/spark/pull/21389 retest this please

[GitHub] spark issue #21635: [SPARK-24594][YARN] Introducing metrics for YARN

Github user tgravescs commented on the issue: https://github.com/apache/spark/pull/21635 Do we have a follow-on JIRA or epic to actually publish certain metrics?

[GitHub] spark issue #21644: [SPARK-24660][SHS] Show correct error pages when downloa...

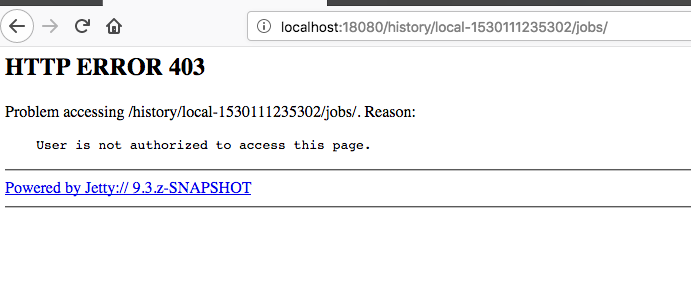

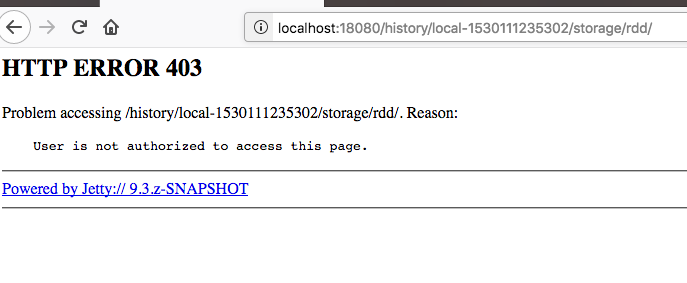

Github user mgaido91 commented on the issue: https://github.com/apache/spark/pull/21644 @jerryshao yes, I checked other too, here it is a couple of examples:   --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21495: [SPARK-24418][Build] Upgrade Scala to 2.11.12 and 2.12.6

Github user dbtsai commented on the issue: https://github.com/apache/spark/pull/21495 I was on family leave for a couple of weeks. Thank you all for helping out and merging it. The only change with this PR is that the welcome message will be printed first, and then the Spark URL will be shown later. It's a minor difference. I had an offline discussion with @adriaanm in the Scala community. To overcome this issue, he suggested we could override the entire `def process(settings: Settings): Boolean` to put our initialization code in. I have a working implementation of this, but it copies a lot of code from Scala just to get the printing order right. If we decide to have a consistent printing order, I can submit a separate PR for this. He is open to working with us to add a proper hook in Scala so we don't need to use hacks to initialize our code. Thanks.

[GitHub] spark issue #21389: [SPARK-24204][SQL] Verify a schema in Json/Orc/ParquetFi...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21389 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/514/ Test PASSed.

[GitHub] spark issue #21389: [SPARK-24204][SQL] Verify a schema in Json/Orc/ParquetFi...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21389 Merged build finished. Test PASSed.

[GitHub] spark issue #16677: [SPARK-19355][SQL] Use map output statistics to improve ...

Github user viirya commented on the issue: https://github.com/apache/spark/pull/16677 retest this please.

[GitHub] spark issue #21644: [SPARK-24660][SHS] Show correct error pages when downloa...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21644 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/516/ Test PASSed.

[GitHub] spark issue #20451: [SPARK-23146][WIP] Support client mode for Kubernetes cl...

Github user rayburgemeestre commented on the issue: https://github.com/apache/spark/pull/20451 Just wanted to say this PR is really great; with it, it's possible, for example, to use Jupyter with Spark on K8s:

[GitHub] spark issue #21061: [SPARK-23914][SQL] Add array_union function

Github user kiszk commented on the issue: https://github.com/apache/spark/pull/21061 retest this please

[GitHub] spark issue #21061: [SPARK-23914][SQL] Add array_union function

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21061 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/513/ Test PASSed.

[GitHub] spark issue #21061: [SPARK-23914][SQL] Add array_union function

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21061 Merged build finished. Test PASSed.

[GitHub] spark issue #21061: [SPARK-23914][SQL] Add array_union function

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21061 **[Test build #92381 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/92381/testReport)** for PR 21061 at commit [`6f721f0`](https://github.com/apache/spark/commit/6f721f0f53361743e86980c8a45807d14a303666).

[GitHub] spark issue #21644: [SPARK-24660][SHS] Show correct error pages when downloa...

Github user jerryshao commented on the issue: https://github.com/apache/spark/pull/21644 @mgaido91, would you please check all the other responses to see if they return as expected, not only the download link?

[GitHub] spark issue #19410: [SPARK-22184][CORE][GRAPHX] GraphX fails in case of insu...

Github user szhem commented on the issue: https://github.com/apache/spark/pull/19410

Hi @mallman! With

```
StorageLevel.MEMORY_AND_DISK
StorageLevel.MEMORY_AND_DISK_SER_2
```

... tests pass. They still fail with

```
StorageLevel.MEMORY_ONLY
StorageLevel.MEMORY_ONLY_SER
```

Although it works, I'm not sure that changing the caching level of the graph is really a good option to go with, as Spark starts complaining [here](https://github.com/apache/spark/blob/f830bb9170f6b853565d9dd30ca7418b93a54fe3/graphx/src/main/scala/org/apache/spark/graphx/impl/VertexPartitionBaseOps.scala#L111) and [here](https://github.com/apache/spark/blob/f830bb9170f6b853565d9dd30ca7418b93a54fe3/graphx/src/main/scala/org/apache/spark/graphx/impl/VertexPartitionBaseOps.scala#L131):

```
18/06/27 16:08:46.802 Executor task launch worker for task 3 WARN ShippableVertexPartitionOps: Diffing two VertexPartitions with different indexes is slow.
18/06/27 16:08:47.000 Executor task launch worker for task 4 WARN ShippableVertexPartitionOps: Diffing two VertexPartitions with different indexes is slow.
18/06/27 16:08:47.164 Executor task launch worker for task 5 WARN ShippableVertexPartitionOps: Diffing two VertexPartitions with different indexes is slow.
18/06/27 16:08:48.724 Executor task launch worker for task 18 WARN ShippableVertexPartitionOps: Joining two VertexPartitions with different indexes is slow.
18/06/27 16:08:48.749 Executor task launch worker for task 18 WARN ShippableVertexPartitionOps: Diffing two VertexPartitions with different indexes is slow.
18/06/27 16:08:48.868 Executor task launch worker for task 19 WARN ShippableVertexPartitionOps: Joining two VertexPartitions with different indexes is slow.
18/06/27 16:08:48.899 Executor task launch worker for task 19 WARN ShippableVertexPartitionOps: Diffing two VertexPartitions with different indexes is slow.
18/06/27 16:08:49.008 Executor task launch worker for task 20 WARN ShippableVertexPartitionOps: Joining two VertexPartitions with different indexes is slow.
18/06/27 16:08:49.028 Executor task launch worker for task 20 WARN ShippableVertexPartitionOps: Diffing two VertexPartitions with different indexes is slow.
```

P.S. To emulate a lack of memory resources, I just set the following options, as [here](https://github.com/apache/spark/pull/19410/files?utf8=%E2%9C%93=unified#diff-c2823ca69af75fc6cdfd1ebbf25c2aefR85):

```
spark.testing.reservedMemory
spark.testing.memory
```

[GitHub] spark issue #15120: [SPARK-4563][core] Allow driver to advertise a different...

Github user sangramga commented on the issue: https://github.com/apache/spark/pull/15120 @SparkQA Is this feature available only for the YARN cluster manager? Can the Spark standalone manager also use this?

[GitHub] spark issue #21644: [SPARK-24660][SHS] Show correct error pages when downloa...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21644 Merged build finished. Test FAILed.

[GitHub] spark issue #21644: [SPARK-24660][SHS] Show correct error pages when downloa...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21644 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/92380/ Test FAILed.

[GitHub] spark issue #21533: [SPARK-24195][Core] Bug fix for local:/ path in SparkCon...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21533 **[Test build #92379 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/92379/testReport)** for PR 21533 at commit [`ac12568`](https://github.com/apache/spark/commit/ac12568f90e27a1748c73de57f3d63190c823278). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark issue #21533: [SPARK-24195][Core] Bug fix for local:/ path in SparkCon...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21533 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/92379/ Test PASSed.

[GitHub] spark issue #21635: [SPARK-24594][YARN] Introducing metrics for YARN

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21635 **[Test build #92385 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/92385/testReport)** for PR 21635 at commit [`9735525`](https://github.com/apache/spark/commit/9735525157b63248cba699abbd1ecba20c5d1904).

[GitHub] spark issue #21635: [SPARK-24594][YARN] Introducing metrics for YARN

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21635 Merged build finished. Test PASSed.

[GitHub] spark issue #21635: [SPARK-24594][YARN] Introducing metrics for YARN

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21635 **[Test build #92385 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/92385/testReport)** for PR 21635 at commit [`9735525`](https://github.com/apache/spark/commit/9735525157b63248cba699abbd1ecba20c5d1904). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark issue #20451: [SPARK-23146][WIP] Support client mode for Kubernetes cl...

Github user echarles commented on the issue: https://github.com/apache/spark/pull/20451 @rayburgemeestre This is cool! Apache Toree configuration for K8s is on my to-do list, but I would be happy to copycat your conf... Any gist? IMHO both client and cluster mode should be fine with Toree. Did you try cluster mode?

[GitHub] spark issue #21644: [SPARK-24660][SHS] Show correct error pages when downloa...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21644 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/517/ Test PASSed.

[GitHub] spark pull request #21598: [SPARK-24605][SQL] size(null) returns null instea...

Github user mgaido91 commented on a diff in the pull request:

https://github.com/apache/spark/pull/21598#discussion_r198562854

--- Diff: sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/collectionOperations.scala ---

```diff
@@ -75,28 +75,44 @@ trait BinaryArrayExpressionWithImplicitCast extends BinaryExpression
       > SELECT _FUNC_(array('b', 'd', 'c', 'a'));
        4
   """)
-case class Size(child: Expression) extends UnaryExpression with ExpectsInputTypes {
+case class Size(
+    child: Expression,
+    legacySizeOfNull: Boolean)
+  extends UnaryExpression with ExpectsInputTypes {
+
+  def this(child: Expression) =
+    this(
+      child,
+      legacySizeOfNull = SQLConf.get.getConf(SQLConf.LEGACY_SIZE_OF_NULL))
```

--- End diff --

it was made possible in https://github.com/apache/spark/pull/21376
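The `legacySizeOfNull` flag in the diff switches between two null-handling behaviours. Spark documents `size` as returning `-1` for a null input when `spark.sql.legacy.sizeOfNull` is enabled, and null otherwise. Below is a minimal plain-Scala model of that switch, with no Spark dependency. `SizeSemantics.sizeOf` is a hypothetical helper, not Catalyst's `Size` expression, and a null array is modelled as `None`:

```scala
object SizeSemantics {
  // Models Size(child, legacySizeOfNull). A null array/map input is represented as None.
  // Legacy mode maps a null input to -1. Non-legacy mode propagates null (None).
  def sizeOf(input: Option[Seq[Any]], legacySizeOfNull: Boolean): Option[Int] =
    input match {
      case Some(values)             => Some(values.size)
      case None if legacySizeOfNull => Some(-1)
      case None                     => None
    }
}
```

For a non-null input, both modes agree with the docstring example: `sizeOf(Some(Seq("b", "d", "c", "a")), ...)` gives `Some(4)`. The extra `this(child)` constructor in the diff reads the flag from `SQLConf`, so code that builds the expression from a single child picks up the configured behaviour.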


[GitHub] spark issue #21560: [SPARK-24386][SS] coalesce(1) aggregates in continuous p...

Github user jose-torres commented on the issue: https://github.com/apache/spark/pull/21560 Sorry, that wasn't meant to be a complete push. Added the tests now.

[GitHub] spark pull request #21560: [SPARK-24386][SS] coalesce(1) aggregates in conti...

Github user jose-torres commented on a diff in the pull request:

https://github.com/apache/spark/pull/21560#discussion_r198571496

--- Diff: sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/UnsupportedOperationChecker.scala ---

```diff
@@ -349,6 +349,17 @@ object UnsupportedOperationChecker {
             _: DeserializeToObject | _: SerializeFromObject | _: SubqueryAlias |
             _: TypedFilter) =>
         case node if node.nodeName == "StreamingRelationV2" =>
+        case Repartition(1, false, _) =>
+        case node: Aggregate =>
+          val aboveSinglePartitionCoalesce = node.find {
+            case Repartition(1, false, _) => true
+            case _ => false
+          }.isDefined
+
+          if (!aboveSinglePartitionCoalesce) {
```

--- End diff --

(same comment as above applies here - we don't have partitioning information in analysis)
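The check in the diff relies on `find`. In Catalyst's `TreeNode`, `find` tests the node itself and then its descendants. An `Aggregate` is therefore accepted only when a `Repartition(1, false, _)`, which is what `coalesce(1)` produces, appears somewhere beneath it. As noted above, this is purely a structural check on the logical plan, since partitioning information is not available during analysis. Below is a plain-Scala sketch of that traversal. `Plan`, `Source`, `Repartition` and `Aggregate` here are simplified, hypothetical stand-ins for Catalyst nodes:

```scala
object CoalesceCheck {
  // Hypothetical, simplified stand-ins for Catalyst logical plan nodes.
  sealed trait Plan {
    def children: Seq[Plan]
    // Pre-order search over this node and its descendants, like TreeNode.find.
    def find(f: Plan => Boolean): Option[Plan] =
      if (f(this)) Some(this)
      else children.iterator.map(_.find(f)).collectFirst { case Some(p) => p }
  }
  final case class Source(name: String) extends Plan {
    val children: Seq[Plan] = Nil
  }
  final case class Repartition(numPartitions: Int, shuffle: Boolean, child: Plan) extends Plan {
    val children: Seq[Plan] = Seq(child)
  }
  final case class Aggregate(child: Plan) extends Plan {
    val children: Seq[Plan] = Seq(child)
  }

  // Mirrors the diff: true when a non-shuffling single-partition Repartition is below the node.
  def aboveSinglePartitionCoalesce(node: Aggregate): Boolean =
    node.find {
      case Repartition(1, false, _) => true
      case _                        => false
    }.isDefined
}
```

`repartition(1)` produces `Repartition(1, true, _)` and therefore does not satisfy this pattern. Only the non-shuffling `coalesce(1)` form does.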


[GitHub] spark pull request #21644: [SPARK-24660][SHS] Show correct error pages when ...

Github user vanzin commented on a diff in the pull request:

https://github.com/apache/spark/pull/21644#discussion_r198574641

--- Diff: core/src/main/scala/org/apache/spark/status/api/v1/OneApplicationResource.scala ---

```diff
@@ -140,11 +140,9 @@ private[v1] class AbstractApplicationResource extends BaseAppResource {
           .header("Content-Type", MediaType.APPLICATION_OCTET_STREAM)
           .build()
       } catch {
-        case NonFatal(e) =>
-          Response.serverError()
-            .entity(s"Event logs are not available for app: $appId.")
-            .status(Response.Status.SERVICE_UNAVAILABLE)
-            .build()
+        case NonFatal(_) =>
+          UIUtils.buildErrorResponse(Response.Status.SERVICE_UNAVAILABLE,
```

--- End diff --

This could just `throw ServiceUnavailable`, no?


[GitHub] spark issue #21476: [SPARK-24446][yarn] Properly quote library path for YARN...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21476 **[Test build #92388 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/92388/testReport)** for PR 21476 at commit [`87e207c`](https://github.com/apache/spark/commit/87e207c768c4aa958fb1b8e3b2899d1b7f99c41e).

[GitHub] spark issue #21476: [SPARK-24446][yarn] Properly quote library path for YARN...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21476 Merged build finished. Test PASSed.

[GitHub] spark issue #21635: [SPARK-24594][YARN] Introducing metrics for YARN

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21635 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/92385/ Test PASSed.

[GitHub] spark pull request #21644: [SPARK-24660][SHS] Show correct error pages when ...

Github user vanzin commented on a diff in the pull request:

https://github.com/apache/spark/pull/21644#discussion_r198548600

--- Diff: core/src/main/scala/org/apache/spark/status/api/v1/OneApplicationResource.scala ---

```diff
@@ -140,11 +140,9 @@ private[v1] class AbstractApplicationResource extends BaseAppResource {
           .header("Content-Type", MediaType.APPLICATION_OCTET_STREAM)
           .build()
       } catch {
-        case NonFatal(e) =>
-          Response.serverError()
-            .entity(s"Event logs are not available for app: $appId.")
-            .status(Response.Status.SERVICE_UNAVAILABLE)
-            .build()
+        case NonFatal(_) =>
+          UIUtils.buildErrorResponse(Response.Status.SERVICE_UNAVAILABLE,
```

--- End diff --

If doing the cleanup, it's probably ok to just throw the exception here.


[GitHub] spark issue #21389: [SPARK-24204][SQL] Verify a schema in Json/Orc/ParquetFi...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21389 **[Test build #92382 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/92382/testReport)** for PR 21389 at commit [`1cfc7b0`](https://github.com/apache/spark/commit/1cfc7b02089401eca2f17db55b113e6620f398be). * This patch **fails Spark unit tests**. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark issue #21389: [SPARK-24204][SQL] Verify a schema in Json/Orc/ParquetFi...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21389 Merged build finished. Test FAILed.

[GitHub] spark issue #21061: [SPARK-23914][SQL] Add array_union function

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21061 **[Test build #92381 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/92381/testReport)** for PR 21061 at commit [`6f721f0`](https://github.com/apache/spark/commit/6f721f0f53361743e86980c8a45807d14a303666). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark pull request #21644: [SPARK-24660][SHS] Show correct error pages when ...

Github user vanzin commented on a diff in the pull request:

https://github.com/apache/spark/pull/21644#discussion_r198561212

--- Diff: core/src/main/scala/org/apache/spark/status/api/v1/ApiRootResource.scala ---

```diff
@@ -148,38 +148,21 @@ private[v1] trait BaseAppResource extends ApiRequestContext {
 }
 private[v1] class ForbiddenException(msg: String) extends WebApplicationException(
-  Response.status(Response.Status.FORBIDDEN).entity(msg).build())
+    UIUtils.buildErrorResponse(Response.Status.FORBIDDEN, msg))
 private[v1] class NotFoundException(msg: String) extends WebApplicationException(
-  new NoSuchElementException(msg),
-    Response
-      .status(Response.Status.NOT_FOUND)
-      .entity(ErrorWrapper(msg))
-      .build()
-)
+    new NoSuchElementException(msg),
+    UIUtils.buildErrorResponse(Response.Status.NOT_FOUND, msg))
 private[v1] class ServiceUnavailable(msg: String) extends WebApplicationException(
-  new ServiceUnavailableException(msg),
-  Response
-    .status(Response.Status.SERVICE_UNAVAILABLE)
-    .entity(ErrorWrapper(msg))
-    .build()
-)
+    new ServiceUnavailableException(msg),
```

--- End diff --

I think it's ok to leave (almost) as is. It avoids duplicating the status code in every call, which would be annoying.

But we can remove the "cause" exceptions from all these wrappers since they're redundant.
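Below is a plain-Scala sketch of the review suggestions in this thread. Each wrapper fixes its own status code, so call sites pass only a message. The wrappers no longer carry a separate "cause" exception. The event-log download path simply throws `ServiceUnavailable` instead of hand-building a response. `ErrorResponse`, `HttpError` and `downloadEventLogs` are hypothetical stand-ins; the real classes extend JAX-RS `WebApplicationException` and use `UIUtils.buildErrorResponse` from this PR:

```scala
import scala.util.control.NonFatal

object ErrorWrappers {
  // Hypothetical stand-in for a JAX-RS Response: an HTTP status plus a body.
  final case class ErrorResponse(status: Int, body: String)

  // Each subclass fixes its own status code, so callers only pass a message.
  // No separate "cause" exception is attached; the response already describes the error.
  abstract class HttpError(status: Int, msg: String) extends RuntimeException(msg) {
    val response: ErrorResponse = ErrorResponse(status, msg)
  }
  final class ForbiddenException(msg: String) extends HttpError(403, msg)
  final class NotFoundException(msg: String) extends HttpError(404, msg)
  final class ServiceUnavailable(msg: String) extends HttpError(503, msg)

  // Hypothetical download endpoint. On a non-fatal failure it simply throws
  // ServiceUnavailable rather than building the 503 response by hand.
  def downloadEventLogs(appId: String, readLogs: String => Array[Byte]): Array[Byte] =
    try readLogs(appId)
    catch {
      case NonFatal(_) =>
        throw new ServiceUnavailable(s"Event logs are not available for app: $appId.")
    }
}
```

In this model, the single place that turns an `HttpError` into an HTTP reply would read `e.response`. That is roughly the role JAX-RS plays for `WebApplicationException`.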


[GitHub] spark issue #21061: [SPARK-23914][SQL] Add array_union function

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21061 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/92381/ Test PASSed.

[GitHub] spark issue #21061: [SPARK-23914][SQL] Add array_union function

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21061 Merged build finished. Test PASSed.

[GitHub] spark issue #21560: [SPARK-24386][SS] coalesce(1) aggregates in continuous p...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21560 **[Test build #92387 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/92387/testReport)** for PR 21560 at commit [`f77b12b`](https://github.com/apache/spark/commit/f77b12ba92a868274ecdfea331786addb2d9ca83).

[GitHub] spark pull request #21575: [SPARK-24566][CORE] spark.storage.blockManagerSla...

Github user zsxwing commented on a diff in the pull request:

https://github.com/apache/spark/pull/21575#discussion_r198576712

--- Diff: core/src/test/scala/org/apache/spark/SparkConfSuite.scala ---

```diff
@@ -371,6 +371,23 @@ class SparkConfSuite extends SparkFunSuite with LocalSparkContext with ResetSyst
       assert(thrown.getMessage.contains(key))
     }
   }
+
+  test("SPARK-24566") {
```

--- End diff --

This test is useless. It tests copy-pasted code, and if someone changes the original code, this will still pass. I'd prefer not to add this one.


[GitHub] spark pull request #21575: [SPARK-24566][CORE] spark.storage.blockManagerSla...

Github user zsxwing commented on a diff in the pull request:

https://github.com/apache/spark/pull/21575#discussion_r198578198

--- Diff: core/src/main/scala/org/apache/spark/HeartbeatReceiver.scala ---

```diff
@@ -74,17 +75,17 @@ private[spark] class HeartbeatReceiver(sc: SparkContext, clock: Clock)
   // "spark.network.timeout" uses "seconds", while `spark.storage.blockManagerSlaveTimeoutMs` uses
   // "milliseconds"
-  private val slaveTimeoutMs =
-    sc.conf.getTimeAsMs("spark.storage.blockManagerSlaveTimeoutMs", "120s")
   private val executorTimeoutMs =
-    sc.conf.getTimeAsSeconds("spark.network.timeout", s"${slaveTimeoutMs}ms") * 1000
+    sc.conf.getTimeAsSeconds("spark.network.timeout",
```

--- End diff --

I meant something like this to match the docs:

```

private val executorTimeoutMs =

sc.conf.getTimeAsMs(

"spark.storage.blockManagerSlaveTimeoutMs",

s"${sc.conf.getTimeAsSeconds("spark.network.timeout", "120s")}s")

```

Could you also change

https://github.com/apache/spark/blob/53c06ddabbdf689f8823807445849ad63173676f/resource-managers/mesos/src/main/scala/org/apache/spark/scheduler/cluster/mesos/MesosCoarseGrainedSchedulerBackend.scala#L637

to use the above pattern?
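A minimal sketch of the lookup order in the suggestion above, written without Spark so it can run standalone. `TimeoutConfSketch`, `parseMs`, and the plain `Map` config are hypothetical stand-ins for `SparkConf.getTimeAsMs`/`getTimeAsSeconds`, and only a few unit suffixes are handled. As with `SparkConf`, a bare number is read as milliseconds by the millisecond lookup and as seconds by the seconds lookup.

```scala
// Hypothetical, simplified stand-in for SparkConf's time lookups (not Spark code).
object TimeoutConfSketch {
  // Parses "120s", "500ms", "2min", "1h"; a bare number is interpreted in `defaultUnitMs`.
  def parseMs(value: String, defaultUnitMs: Long): Long = {
    val (digits, suffix) = value.trim.toLowerCase.span(_.isDigit)
    val unitMs = suffix match {
      case ""    => defaultUnitMs
      case "ms"  => 1L
      case "s"   => 1000L
      case "min" => 60L * 1000
      case "h"   => 60L * 60 * 1000
      case other => throw new IllegalArgumentException(s"Unknown time unit: $other")
    }
    digits.toLong * unitMs
  }

  // Same order as the suggestion: the old millisecond key wins if set, otherwise
  // spark.network.timeout (read as seconds), otherwise the 120s default.
  def executorTimeoutMs(conf: Map[String, String]): Long = {
    val networkTimeoutSec =
      parseMs(conf.getOrElse("spark.network.timeout", "120s"), defaultUnitMs = 1000L) / 1000
    parseMs(
      conf.getOrElse("spark.storage.blockManagerSlaveTimeoutMs", s"${networkTimeoutSec}s"),
      defaultUnitMs = 1L)
  }
}
```

Keeping the resolution in one function also speaks to the earlier concern about the test: a test can call the real lookup instead of a copy-pasted version of it.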


[GitHub] spark issue #17086: [SPARK-24101][ML][MLLIB] ML Evaluators should use weight...

Github user imatiach-msft commented on the issue: https://github.com/apache/spark/pull/17086 @jkbradley @mengxr ping... would you be able to take a look at this PR? I received an email recently from someone who asked when this feature (weight columns in evaluators) is expected to be added; it sounds like other devs would like this feature as well. Thank you!

[GitHub] spark pull request #21623: [SPARK-24638][SQL] StringStartsWith support push ...

Github user rdblue commented on a diff in the pull request:

https://github.com/apache/spark/pull/21623#discussion_r198551889

--- Diff:

sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetFilterSuite.scala

---

@@ -660,6 +661,56 @@ class ParquetFilterSuite extends QueryTest with ParquetTest with SharedSQLContex

assert(df.where("col > 0").count() === 2)

}

}

+

+ test("filter pushdown - StringStartsWith") {

+withParquetDataFrame((1 to 4).map(i => Tuple1(i + "str" + i))) { implicit df =>

--- End diff --

I think all of these tests go through the `keep` method instead of `canDrop` and `inverseCanDrop`, and those methods need to be tested too. You could do that by constructing a Parquet file with row groups that have predictable statistics, but that would be difficult. An easier way is to define the predicate class elsewhere and create a unit test for it that passes in different statistics values.
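A minimal sketch of the kind of unit test suggested above: the `StartsWith` drop logic expressed as plain functions over min/max statistics, so `canDrop` and `inverseCanDrop` can be exercised directly without writing Parquet files. `StartsWithStatsSketch` and `Stats` are hypothetical names. Real Parquet statistics are `Binary` values compared as unsigned bytes, while this sketch uses `String.compareTo`, which agrees with that ordering only for ASCII data.

```scala
// Hypothetical sketch of StartsWith row-group pruning over min/max statistics.
object StartsWithStatsSketch {
  // Min/max of a column chunk; real Parquet statistics are Binary values.
  final case class Stats(min: String, max: String)

  // True when no value in [min, max] can start with `prefix`, so the row group can be skipped.
  def canDrop(prefix: String, stats: Stats): Boolean = {
    val n = prefix.length
    stats.max.take(n).compareTo(prefix) < 0 || stats.min.take(n).compareTo(prefix) > 0
  }

  // For NOT startsWith: skip only when min and max (and so every value between them)
  // start with `prefix`.
  def inverseCanDrop(prefix: String, stats: Stats): Boolean = {
    val n = prefix.length
    stats.min.take(n) == prefix && stats.max.take(n) == prefix
  }
}
```

Truncating `min` and `max` to the prefix length is safe because truncation preserves lexicographic order: if `min <= v <= max`, the first `n` characters of `v` lie between those of `min` and `max`.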


[GitHub] spark pull request #21623: [SPARK-24638][SQL] StringStartsWith support push ...

Github user rdblue commented on a diff in the pull request:

https://github.com/apache/spark/pull/21623#discussion_r198553569

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetFilters.scala

---

@@ -22,16 +22,23 @@ import java.sql.Date

import org.apache.parquet.filter2.predicate._

import org.apache.parquet.filter2.predicate.FilterApi._

import org.apache.parquet.io.api.Binary

+import org.apache.parquet.schema.PrimitiveComparator

import org.apache.spark.sql.catalyst.util.DateTimeUtils

import org.apache.spark.sql.catalyst.util.DateTimeUtils.SQLDate

+import org.apache.spark.sql.internal.SQLConf

import org.apache.spark.sql.sources

import org.apache.spark.sql.types._

+import org.apache.spark.unsafe.types.UTF8String

/**

* Some utility function to convert Spark data source filters to Parquet filters.

*/

-private[parquet] class ParquetFilters(pushDownDate: Boolean) {

+private[parquet] class ParquetFilters() {

+

+ val sqlConf: SQLConf = SQLConf.get

--- End diff --

This should pass in `pushDownDate` and `pushDownStartWith` like the previous version did with just the date setting.

The SQLConf is already available in ParquetFileFormat and it *would* be better to pass it in. The problem is that this class is instantiated in the function (`(file: PartitionedFile) => { ... }`) that gets serialized and sent to executors. That means we don't want SQLConf and its references in the function's closure. The way we got around this before was to put boolean config vals in the closure instead. I think you should go with that approach.

I'm not sure what `SQLConf.get` is for or what a correct use would be.

@gatorsmile, can you comment on use of `SQLConf.get`?
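A minimal sketch of the difference between capturing a conf object and capturing plain boolean flags, using Java serialization as a stand-in for shipping a closure to executors. `DriverConf`, `FiltersSketch`, and `ClosureSketch` are hypothetical names, not Spark classes. `DriverConf` is made non-serializable only so the difference is visible; the concern in the comment above is more generally about pulling the conf and everything it references into the closure.

```scala
import java.io.{ByteArrayOutputStream, NotSerializableException, ObjectOutputStream}

// Hypothetical driver-side config object; deliberately NOT Serializable.
class DriverConf(val pushDownDate: Boolean, val pushDownStartWith: Boolean)

// Hypothetical stand-in for ParquetFilters, built from plain booleans only.
class FiltersSketch(pushDownDate: Boolean, pushDownStartWith: Boolean) {
  def enabled: Seq[String] =
    Seq("date" -> pushDownDate, "startsWith" -> pushDownStartWith).collect { case (n, true) => n }
}

object ClosureSketch extends Serializable {
  // Preferred: copy the flags into local vals so the closure captures only booleans.
  def readerWithFlags(conf: DriverConf): String => Seq[String] = {
    val pushDownDate = conf.pushDownDate
    val pushDownStartWith = conf.pushDownStartWith
    (file: String) => new FiltersSketch(pushDownDate, pushDownStartWith).enabled
  }

  // Problematic: the closure captures `conf` itself, so it must be serialized too.
  def readerWithConf(conf: DriverConf): String => Seq[String] =
    (file: String) => new FiltersSketch(conf.pushDownDate, conf.pushDownStartWith).enabled

  // Returns false if Java serialization rejects something the closure references.
  def serializes(f: AnyRef): Boolean =
    try {
      new ObjectOutputStream(new ByteArrayOutputStream()).writeObject(f)
      true
    } catch {
      case _: NotSerializableException => false
    }
}
```

Both readers build the same filters; only what ends up in the closure differs.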


[GitHub] spark pull request #21644: [SPARK-24660][SHS] Show correct error pages when ...

Github user mgaido91 commented on a diff in the pull request:

https://github.com/apache/spark/pull/21644#discussion_r198562109

--- Diff:

core/src/main/scala/org/apache/spark/status/api/v1/ApiRootResource.scala ---

@@ -148,38 +148,21 @@ private[v1] trait BaseAppResource extends ApiRequestContext {

}

private[v1] class ForbiddenException(msg: String) extends WebApplicationException(

- Response.status(Response.Status.FORBIDDEN).entity(msg).build())

+UIUtils.buildErrorResponse(Response.Status.FORBIDDEN, msg))

private[v1] class NotFoundException(msg: String) extends WebApplicationException(

- new NoSuchElementException(msg),

-Response

- .status(Response.Status.NOT_FOUND)

- .entity(ErrorWrapper(msg))

- .build()

-)

+new NoSuchElementException(msg),

+UIUtils.buildErrorResponse(Response.Status.NOT_FOUND, msg))

private[v1] class ServiceUnavailable(msg: String) extends WebApplicationException(

- new ServiceUnavailableException(msg),

- Response

-.status(Response.Status.SERVICE_UNAVAILABLE)

-.entity(ErrorWrapper(msg))

-.build()

-)

+new ServiceUnavailableException(msg),

--- End diff --

I agree, I updated with your suggestion, thanks


[GitHub] spark issue #21644: [SPARK-24660][SHS] Show correct error pages when downloa...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21644 Merged build finished. Test PASSed.

[GitHub] spark issue #21476: [SPARK-24446][yarn] Properly quote library path for YARN...

Github user vanzin commented on the issue: https://github.com/apache/spark/pull/21476 retest this please

[GitHub] spark issue #21476: [SPARK-24446][yarn] Properly quote library path for YARN...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21476 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/518/ Test PASSed.

[GitHub] spark issue #18900: [SPARK-21687][SQL] Spark SQL should set createTime for H...

Github user debugger87 commented on the issue: https://github.com/apache/spark/pull/18900 @cloud-fan Any suggestions?

[GitHub] spark pull request #21635: [SPARK-24594][YARN] Introducing metrics for YARN

Github user attilapiros commented on a diff in the pull request:

https://github.com/apache/spark/pull/21635#discussion_r198538655

--- Diff:

resource-managers/yarn/src/main/scala/org/apache/spark/deploy/yarn/ApplicationMaster.scala

---

@@ -309,6 +312,9 @@ private[spark] class ApplicationMaster(args: ApplicationMasterArguments) extends

finish(FinalApplicationStatus.FAILED,

ApplicationMaster.EXIT_UNCAUGHT_EXCEPTION,

"Uncaught exception: " + StringUtils.stringifyException(e))

+} finally {

+ metricsSystem.report()

--- End diff --

Thanks Tom, documentation is added.


[GitHub] spark issue #21644: [SPARK-24660][SHS] Show correct error pages when downloa...

Github user mgaido91 commented on the issue: https://github.com/apache/spark/pull/21644 thanks @attilapiros for taking a look at this!

[GitHub] spark issue #21495: [SPARK-24418][Build] Upgrade Scala to 2.11.12 and 2.12.6

Github user felixcheung commented on the issue: https://github.com/apache/spark/pull/21495 I think it'd be great not to change the order

[GitHub] spark pull request #21644: [SPARK-24660][SHS] Show correct error pages when ...

Github user mgaido91 commented on a diff in the pull request:

https://github.com/apache/spark/pull/21644#discussion_r198554094

--- Diff:

core/src/main/scala/org/apache/spark/status/api/v1/ApiRootResource.scala ---

@@ -148,38 +148,21 @@ private[v1] trait BaseAppResource extends ApiRequestContext {

}

private[v1] class ForbiddenException(msg: String) extends WebApplicationException(

- Response.status(Response.Status.FORBIDDEN).entity(msg).build())

+UIUtils.buildErrorResponse(Response.Status.FORBIDDEN, msg))

private[v1] class NotFoundException(msg: String) extends WebApplicationException(

- new NoSuchElementException(msg),

-Response

- .status(Response.Status.NOT_FOUND)

- .entity(ErrorWrapper(msg))

- .build()

-)

+new NoSuchElementException(msg),

+UIUtils.buildErrorResponse(Response.Status.NOT_FOUND, msg))

private[v1] class ServiceUnavailable(msg: String) extends WebApplicationException(

- new ServiceUnavailableException(msg),

- Response

-.status(Response.Status.SERVICE_UNAVAILABLE)

-.entity(ErrorWrapper(msg))

-.build()

-)

+new ServiceUnavailableException(msg),

--- End diff --

Thanks for your review. Sure, I can do the refactor, but the helper method would be needed anyway. The problem that existed before this PR is that we don't specify the type of the response, so it takes the type that the endpoint produces. The default exceptions you mentioned also build the `Response` without setting the type, so we would still need to pass them the `Response` built by the helper method.

I will follow your suggestion (cleaning up our exceptions in favor of the JAX-RS ones), but keep the helper method for this reason. Thanks.
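A toy model of the problem described above, assuming a simplified response type rather than the real JAX-RS `Response`: when no media type is set, the type the endpoint produces is used; a helper that always sets one avoids that. `ErrorResponseSketch`, `SimpleResponse`, `effectiveType`, and the choice of `text/plain` are assumptions for illustration, not the actual `UIUtils` code.

```scala
// Toy model of the content-type problem; not the JAX-RS API.
object ErrorResponseSketch {
  // mediaType is None when the response was built without an explicit type.
  final case class SimpleResponse(status: Int, body: String, mediaType: Option[String])

  // An untyped response falls back to whatever the endpoint produces (e.g. JSON).
  def effectiveType(r: SimpleResponse, endpointProduces: String): String =
    r.mediaType.getOrElse(endpointProduces)

  // Hypothetical helper in the spirit of UIUtils.buildErrorResponse: always sets a type.
  def buildErrorResponse(status: Int, msg: String): SimpleResponse =
    SimpleResponse(status, msg, Some("text/plain"))
}
```

Because the type is fixed in one place, the same `Response` can be handed to either the custom wrappers or the JAX-RS exceptions, which is the reason given for keeping the helper.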


[GitHub] spark issue #21623: [SPARK-24638][SQL] StringStartsWith support push down

Github user rdblue commented on the issue: https://github.com/apache/spark/pull/21623 Overall, I think this is close. The tests need to cover the row group stats case and we should update how configuration is passed to the filters. Thanks for working on this, @wangyum!

[GitHub] spark issue #21389: [SPARK-24204][SQL] Verify a schema in Json/Orc/ParquetFi...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21389 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/92382/ Test FAILed.

[GitHub] spark pull request #21644: [SPARK-24660][SHS] Show correct error pages when ...

Github user mgaido91 commented on a diff in the pull request:

https://github.com/apache/spark/pull/21644#discussion_r198560296

--- Diff:

core/src/main/scala/org/apache/spark/status/api/v1/ApiRootResource.scala ---

@@ -148,38 +148,21 @@ private[v1] trait BaseAppResource extends ApiRequestContext {

}

private[v1] class ForbiddenException(msg: String) extends WebApplicationException(

- Response.status(Response.Status.FORBIDDEN).entity(msg).build())

+UIUtils.buildErrorResponse(Response.Status.FORBIDDEN, msg))

private[v1] class NotFoundException(msg: String) extends WebApplicationException(

- new NoSuchElementException(msg),

-Response

- .status(Response.Status.NOT_FOUND)

- .entity(ErrorWrapper(msg))

- .build()

-)

+new NoSuchElementException(msg),

+UIUtils.buildErrorResponse(Response.Status.NOT_FOUND, msg))

private[v1] class ServiceUnavailable(msg: String) extends WebApplicationException(

- new ServiceUnavailableException(msg),

- Response

-.status(Response.Status.SERVICE_UNAVAILABLE)

-.entity(ErrorWrapper(msg))

-.build()

-)

+new ServiceUnavailableException(msg),

--- End diff --

Yes, indeed they do. It's not much code, but

```scala
throw new ForbiddenException(raw"""user "$user" is not authorized""")
```

would become

```scala
throw new ForbiddenException(UIUtils.buildErrorResponse(
  Response.Status.FORBIDDEN, raw"""user "$user" is not authorized"""))
```

what do you think? Shall we do the refactor?


[GitHub] spark issue #21644: [SPARK-24660][SHS] Show correct error pages when downloa...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21644 **[Test build #92386 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/92386/testReport)** for PR 21644 at commit [`a9baa7c`](https://github.com/apache/spark/commit/a9baa7cc3bd894cf06f98a5b58a4c2754b11aef1).

[GitHub] spark pull request #21560: [SPARK-24386][SS] coalesce(1) aggregates in conti...

Github user jose-torres commented on a diff in the pull request:

https://github.com/apache/spark/pull/21560#discussion_r198571824

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/continuous/ContinuousCoalesceRDD.scala

---

@@ -0,0 +1,108 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.execution.streaming.continuous

+

+import org.apache.spark._

+import org.apache.spark.rdd.{CoalescedRDDPartition, RDD}

+import org.apache.spark.sql.catalyst.InternalRow

+import org.apache.spark.sql.catalyst.expressions.UnsafeRow

+import org.apache.spark.sql.execution.streaming.continuous.shuffle._

+import org.apache.spark.util.ThreadUtils

+

+case class ContinuousCoalesceRDDPartition(index: Int) extends Partition {

+ // This flag will be flipped on the executors to indicate that the threads processing

+ // partitions of the write-side RDD have been started. These will run indefinitely

+ // asynchronously as epochs of the coalesce RDD complete on the read side.

+ private[continuous] var writersInitialized: Boolean = false

+}

+

+/**

+ * RDD for continuous coalescing. Asynchronously writes all partitions of `prev` into a local

+ * continuous shuffle, and then reads them in the task thread using `reader`.

+ */

+class ContinuousCoalesceRDD(

+context: SparkContext,

+numPartitions: Int,

+readerQueueSize: Int,

+epochIntervalMs: Long,

+readerEndpointName: String,

+prev: RDD[InternalRow])

+ extends RDD[InternalRow](context, Nil) {

+

+ override def getPartitions: Array[Partition] = Array(ContinuousCoalesceRDDPartition(0))

+

+ val readerRDD = new ContinuousShuffleReadRDD(

+sparkContext,

+numPartitions,

+readerQueueSize,

+prev.getNumPartitions,

+epochIntervalMs,

+Seq(readerEndpointName))

+

+ private lazy val threadPool = ThreadUtils.newDaemonFixedThreadPool(

+prev.getNumPartitions,

+this.name)

+

+ override def compute(split: Partition, context: TaskContext): Iterator[InternalRow] = {

+assert(split.index == 0)

+// lazy initialize endpoint so writer can send to it

+readerRDD.partitions(0).asInstanceOf[ContinuousShuffleReadPartition].endpoint

+

+if (!split.asInstanceOf[ContinuousCoalesceRDDPartition].writersInitialized) {

+ val rpcEnv = SparkEnv.get.rpcEnv

+ val outputPartitioner = new HashPartitioner(1)

+ val endpointRefs = readerRDD.endpointNames.map { endpointName =>

+ rpcEnv.setupEndpointRef(rpcEnv.address, endpointName)

+ }

+

+ val runnables = prev.partitions.map { prevSplit =>

+new Runnable() {

+ override def run(): Unit = {

+TaskContext.setTaskContext(context)

+

+val writer: ContinuousShuffleWriter = new RPCContinuousShuffleWriter(

+ prevSplit.index, outputPartitioner, endpointRefs.toArray)

+

+EpochTracker.initializeCurrentEpoch(

+context.getLocalProperty(ContinuousExecution.START_EPOCH_KEY).toLong)

+while (!context.isInterrupted() && !context.isCompleted()) {

+ writer.write(prev.compute(prevSplit, context).asInstanceOf[Iterator[UnsafeRow]])

+ // Note that current epoch is a non-inheritable thread local, so each writer thread

+ // can properly increment its own epoch without affecting the main task thread.

+ EpochTracker.incrementCurrentEpoch()

+}

+ }

+}

+ }

+

+ context.addTaskCompletionListener { ctx =>

+threadPool.shutdownNow()

+ }

+

+split.asInstanceOf[ContinuousCoalesceRDDPartition].writersInitialized = true

+

+ runnables.foreach(threadPool.execute)

+}

+

+readerRDD.compute(readerRDD.partitions(split.index), context)

--- End diff --

Yeah, it could be
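The writer/reader split in the `ContinuousCoalesceRDD` diff above depends on each writer thread tracking its own epoch in a non-inheritable thread local while the task thread drains the shuffle. A minimal sketch of that pattern with plain `java.util.concurrent` follows; `CoalesceSketch`, `Record`, and the in-memory queue are hypothetical stand-ins for `EpochTracker`, `RPCContinuousShuffleWriter`, and the RPC endpoint, not Spark APIs.

```scala
import java.util.concurrent.{Executors, LinkedBlockingQueue, TimeUnit}

object CoalesceSketch {
  // Stand-in for EpochTracker's epoch: a plain ThreadLocal is not inherited by other
  // threads, so each writer thread advances its own copy independently.
  private val currentEpoch = new ThreadLocal[Long] {
    override def initialValue(): Long = 0L
  }

  final case class Record(writer: Int, epoch: Long, value: Int)

  /** Starts `numWriters` writer threads, each emitting one record per epoch for `epochs`
    * epochs, and drains all records on the calling ("task") thread. */
  def run(numWriters: Int, epochs: Int, startEpoch: Long): Seq[Record] = {
    val queue = new LinkedBlockingQueue[Record]()
    val pool = Executors.newFixedThreadPool(numWriters)
    try {
      (0 until numWriters).foreach { w =>
        pool.execute(new Runnable {
          override def run(): Unit = {
            currentEpoch.set(startEpoch) // like EpochTracker.initializeCurrentEpoch
            (0 until epochs).foreach { i =>
              queue.put(Record(w, currentEpoch.get(), i))
              currentEpoch.set(currentEpoch.get() + 1) // like incrementCurrentEpoch
            }
          }
        })
      }
      // Reader side: block until every expected record arrives (null on timeout).
      List.fill(numWriters * epochs)(queue.poll(10, TimeUnit.SECONDS))
    } finally {
      pool.shutdownNow() // mirrors the task-completion listener in the diff
    }
  }

  /** The calling thread's epoch, which the writer threads never touch. */
  def callerEpoch: Long = currentEpoch.get()
}
```

Each writer sees its epochs in order, and the caller's epoch is unaffected, which is the property the diff's comment relies on.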

[GitHub] spark pull request #20351: [SPARK-23014][SS] Fully remove V1 memory sink.

Github user jose-torres closed the pull request at: https://github.com/apache/spark/pull/20351

[GitHub] spark issue #21476: [SPARK-24446][yarn] Properly quote library path for YARN...

Github user vanzin commented on the issue: https://github.com/apache/spark/pull/21476 Merging to master.

[GitHub] spark pull request #21575: [SPARK-24566][CORE] spark.storage.blockManagerSla...

Github user zsxwing commented on a diff in the pull request:

https://github.com/apache/spark/pull/21575#discussion_r198579275

--- Diff: core/src/main/scala/org/apache/spark/HeartbeatReceiver.scala ---

@@ -74,17 +75,17 @@ private[spark] class HeartbeatReceiver(sc: SparkContext, clock: Clock)

// "spark.network.timeout" uses "seconds", while `spark.storage.blockManagerSlaveTimeoutMs` uses

// "milliseconds"

- private val slaveTimeoutMs =

-sc.conf.getTimeAsMs("spark.storage.blockManagerSlaveTimeoutMs", "120s")

private val executorTimeoutMs =

-sc.conf.getTimeAsSeconds("spark.network.timeout", s"${slaveTimeoutMs}ms") * 1000

+sc.conf.getTimeAsSeconds("spark.network.timeout",

+ s"${sc.conf.getTimeAsMs("spark.storage.blockManagerSlaveTimeoutMs",

+"120s")}ms").seconds.toMillis

// "spark.network.timeoutInterval" uses "seconds", while

// "spark.storage.blockManagerTimeoutIntervalMs" uses "milliseconds"

- private val timeoutIntervalMs =

-sc.conf.getTimeAsMs("spark.storage.blockManagerTimeoutIntervalMs", "60s")

private val checkTimeoutIntervalMs =

-sc.conf.getTimeAsSeconds("spark.network.timeoutInterval", s"${timeoutIntervalMs}ms") * 1000

+sc.conf.getTimeAsSeconds("spark.network.timeoutInterval",

--- End diff --

please revert this since it's unrelated.


[GitHub] spark pull request #21575: [SPARK-24566][CORE] spark.storage.blockManagerSla...

Github user zsxwing commented on a diff in the pull request:

https://github.com/apache/spark/pull/21575#discussion_r198579348

--- Diff: core/src/main/scala/org/apache/spark/HeartbeatReceiver.scala ---

@@ -21,6 +21,7 @@ import java.util.concurrent.{ScheduledFuture, TimeUnit}

import scala.collection.mutable

import scala.concurrent.Future

+import scala.concurrent.duration._

--- End diff --

this will be unused after you address my comments


[GitHub] spark pull request #21644: [SPARK-24660][SHS] Show correct error pages when ...

Github user vanzin commented on a diff in the pull request:

https://github.com/apache/spark/pull/21644#discussion_r198548363

--- Diff:

core/src/main/scala/org/apache/spark/status/api/v1/ApiRootResource.scala ---

@@ -148,38 +148,21 @@ private[v1] trait BaseAppResource extends ApiRequestContext {

}

private[v1] class ForbiddenException(msg: String) extends WebApplicationException(

- Response.status(Response.Status.FORBIDDEN).entity(msg).build())

+UIUtils.buildErrorResponse(Response.Status.FORBIDDEN, msg))

private[v1] class NotFoundException(msg: String) extends WebApplicationException(

- new NoSuchElementException(msg),

-Response

- .status(Response.Status.NOT_FOUND)

- .entity(ErrorWrapper(msg))

- .build()

-)

+new NoSuchElementException(msg),

+UIUtils.buildErrorResponse(Response.Status.NOT_FOUND, msg))

private[v1] class ServiceUnavailable(msg: String) extends WebApplicationException(

- new ServiceUnavailableException(msg),

- Response

-.status(Response.Status.SERVICE_UNAVAILABLE)

-.entity(ErrorWrapper(msg))

-.build()

-)

+new ServiceUnavailableException(msg),

--- End diff --

Hmm... none of this is your fault, but it seems these exceptions are not needed anymore. JAX-RS 2.1 has exceptions for all of these, and they could simply replace these wrappers.

E.g., if you just throw `ServiceUnavailableException` directly instead of this wrapper, the result would be the same.

You can find all exceptions at:

https://jax-rs.github.io/apidocs/2.1/javax/ws/rs/package-summary.html

I think it's better to clean this up instead of adding another helper API.


[GitHub] spark pull request #21644: [SPARK-24660][SHS] Show correct error pages when ...

Github user vanzin commented on a diff in the pull request:

https://github.com/apache/spark/pull/21644#discussion_r198558986

--- Diff:

core/src/main/scala/org/apache/spark/status/api/v1/ApiRootResource.scala ---

@@ -148,38 +148,21 @@ private[v1] trait BaseAppResource extends ApiRequestContext {

}

private[v1] class ForbiddenException(msg: String) extends WebApplicationException(

- Response.status(Response.Status.FORBIDDEN).entity(msg).build())

+UIUtils.buildErrorResponse(Response.Status.FORBIDDEN, msg))

private[v1] class NotFoundException(msg: String) extends WebApplicationException(

- new NoSuchElementException(msg),

-Response

- .status(Response.Status.NOT_FOUND)

- .entity(ErrorWrapper(msg))

- .build()

-)

+new NoSuchElementException(msg),

+UIUtils.buildErrorResponse(Response.Status.NOT_FOUND, msg))

private[v1] class ServiceUnavailable(msg: String) extends WebApplicationException(

- new ServiceUnavailableException(msg),

- Response

-.status(Response.Status.SERVICE_UNAVAILABLE)

-.entity(ErrorWrapper(msg))

-.build()

-)

+new ServiceUnavailableException(msg),

--- End diff --

Hmm, if that's the case then probably the wrappers can save some code (since they abstract the building of the response)...


[GitHub] spark issue #21638: [SPARK-22357][CORE] SparkContext.binaryFiles ignore minP...

Github user bomeng commented on the issue: https://github.com/apache/spark/pull/21638 @HyukjinKwon please review. thanks.

[GitHub] spark issue #21476: [SPARK-24446][yarn] Properly quote library path for YARN...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21476 **[Test build #92388 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/92388/testReport)** for PR 21476 at commit [`87e207c`](https://github.com/apache/spark/commit/87e207c768c4aa958fb1b8e3b2899d1b7f99c41e). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark pull request #21553: [SPARK-24215][PySpark][Follow Up] Implement eager...

Github user asfgit closed the pull request at: https://github.com/apache/spark/pull/21553


[GitHub] spark issue #21389: [SPARK-24204][SQL] Verify a schema in Json/Orc/ParquetFi...

Github user gatorsmile commented on the issue: https://github.com/apache/spark/pull/21389 LGTM

[GitHub] spark pull request #21511: [SPARK-24491][Kubernetes] Configuration support f...

Github user alexmilowski commented on a diff in the pull request:

https://github.com/apache/spark/pull/21511#discussion_r198591146

--- Diff:

resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/Config.scala

---

@@ -104,6 +104,20 @@ private[spark] object Config extends Logging {

.stringConf

.createOptional

+ val KUBERNETES_EXECUTOR_LIMIT_GPUS =

--- End diff --

Would drivers need GPU acceleration? My assumption was that the executor code is where any possible acceleration would be needed.


[GitHub] spark pull request #21644: [SPARK-24660][SHS] Show correct error pages when ...

Github user mgaido91 commented on a diff in the pull request:

https://github.com/apache/spark/pull/21644#discussion_r198598085

--- Diff:

core/src/main/scala/org/apache/spark/status/api/v1/OneApplicationResource.scala

---

@@ -140,11 +140,9 @@ private[v1] class AbstractApplicationResource extends BaseAppResource {

.header("Content-Type", MediaType.APPLICATION_OCTET_STREAM)

.build()

} catch {

- case NonFatal(e) =>

-Response.serverError()

- .entity(s"Event logs are not available for app: $appId.")

- .status(Response.Status.SERVICE_UNAVAILABLE)

- .build()

+ case NonFatal(_) =>

+UIUtils.buildErrorResponse(Response.Status.SERVICE_UNAVAILABLE,

--- End diff --

Oh, now I see what you mean; sorry, I didn't get it at first. I am doing that, thanks.


[GitHub] spark issue #21589: [SPARK-24591][CORE] Number of cores and executors in the...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21589 **[Test build #92393 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/92393/testReport)** for PR 21589 at commit [`c280b6c`](https://github.com/apache/spark/commit/c280b6c6471f2699fa971a48bab958a2e0b40f5a).

[GitHub] spark pull request #21649: SPARK[23648][R][SQL]Adds more types for hint in S...

GitHub user huaxingao opened a pull request:

https://github.com/apache/spark/pull/21649

SPARK[23648][R][SQL]Adds more types for hint in SparkR

## What changes were proposed in this pull request?

Addition of numeric and list hints for SparkR.

## How was this patch tested?

Add test in test_sparkSQL.R

Please review http://spark.apache.org/contributing.html before opening a pull request.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/huaxingao/spark spark-23648

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/21649.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #21649

commit 009f82c984c559b68169bb1944d2921b0c218e9e

Author: Huaxin Gao

Date: 2018-06-27T19:48:44Z

SPARK[23648][R][SQL]Adds more types for hint in SparkR

[GitHub] spark issue #21649: [SPARK-23648][R][SQL]Adds more types for hint in SparkR

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21649 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/522/ Test PASSed.

[GitHub] spark issue #21649: [SPARK-23648][R][SQL]Adds more types for hint in SparkR

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21649 Merged build finished. Test PASSed.

[GitHub] spark pull request #21644: [SPARK-24660][SHS] Show correct error pages when ...

Github user vanzin commented on a diff in the pull request:

https://github.com/apache/spark/pull/21644#discussion_r198586841

--- Diff:

core/src/main/scala/org/apache/spark/status/api/v1/OneApplicationResource.scala

---

@@ -140,11 +140,9 @@ private[v1] class AbstractApplicationResource extends BaseAppResource {

.header("Content-Type", MediaType.APPLICATION_OCTET_STREAM)

.build()

} catch {

- case NonFatal(e) =>

-Response.serverError()

- .entity(s"Event logs are not available for app: $appId.")

- .status(Response.Status.SERVICE_UNAVAILABLE)

- .build()

+ case NonFatal(_) =>

+UIUtils.buildErrorResponse(Response.Status.SERVICE_UNAVAILABLE,

--- End diff --

But isn't the type being set in the exception now? It seems like the same thing that happens in other cases, where the response is set to JSON but the exception overrides it when thrown.

But if it doesn't really work, then ok.


[GitHub] spark pull request #21511: [SPARK-24491][Kubernetes] Configuration support f...

Github user alexmilowski commented on a diff in the pull request:

https://github.com/apache/spark/pull/21511#discussion_r198591324

--- Diff:

resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/features/BasicExecutorFeatureStep.scala

---

@@ -172,7 +184,7 @@ private[spark] class BasicExecutorFeatureStep(

.addToImagePullSecrets(kubernetesConf.imagePullSecrets(): _*)

.endSpec()

.build()

-SparkPod(executorPod, containerWithLimitCores)

+SparkPod(executorPod, containerWithLimitGpus)

--- End diff ---

Yes ... not in love with the way this is currently structured.

[GitHub] spark issue #16677: [SPARK-19355][SQL] Use map output statistics to improve ...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/16677 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/92383/ Test PASSed.

[GitHub] spark issue #16677: [SPARK-19355][SQL] Use map output statistics to improve ...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/16677 Merged build finished. Test PASSed.

[GitHub] spark issue #21589: [SPARK-24591][CORE] Number of cores and executors in the...

Github user MaxGekk commented on the issue: https://github.com/apache/spark/pull/21589

> what's the convention here, I thought SparkContext has get* methods instead

`SparkContext` has a few methods without such a prefix, for example `defaultParallelism` and `defaultMinPartitions`. The new methods fall into the same category.

[GitHub] spark issue #21644: [SPARK-24660][SHS] Show correct error pages when downloa...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21644 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/521/ Test PASSed.

[GitHub] spark issue #21649: SPARK[23648][R][SQL]Adds more types for hint in SparkR

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21649 **[Test build #92394 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/92394/testReport)** for PR 21649 at commit [`009f82c`](https://github.com/apache/spark/commit/009f82c984c559b68169bb1944d2921b0c218e9e).

[GitHub] spark pull request #21644: [SPARK-24660][SHS] Show correct error pages when ...

Github user mgaido91 commented on a diff in the pull request:

https://github.com/apache/spark/pull/21644#discussion_r198585141

--- Diff: core/src/main/scala/org/apache/spark/status/api/v1/OneApplicationResource.scala ---
@@ -140,11 +140,9 @@ private[v1] class AbstractApplicationResource extends BaseAppResource {
.header("Content-Type", MediaType.APPLICATION_OCTET_STREAM)
.build()
} catch {
- case NonFatal(e) =>
-Response.serverError()
- .entity(s"Event logs are not available for app: $appId.")
- .status(Response.Status.SERVICE_UNAVAILABLE)
- .build()
+ case NonFatal(_) =>
+UIUtils.buildErrorResponse(Response.Status.SERVICE_UNAVAILABLE,
--- End diff --

No, we need to set the type; otherwise it returns the response as octet-stream, which is what causes the issue.

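A minimal sketch of the point made above: if the error path does not set its own Content-Type, the client sees the endpoint's default type (octet-stream for the event-log download), so the browser treats the error message as a file to download. `SimpleResponse` is a hypothetical, simplified stand-in for the JAX-RS `Response` class, and `buildErrorResponse` here is a hypothetical stand-in for `UIUtils.buildErrorResponse`, whose real implementation is not shown in this thread.

```scala
object ErrorResponseSketch {
  // Hypothetical, simplified stand-in for a JAX-RS Response; NOT the javax.ws.rs API.
  final case class SimpleResponse(status: Int, contentType: Option[String], entity: String)

  val OctetStream = "application/octet-stream"
  val TextPlain = "text/plain"
  val ServiceUnavailable = 503

  // The Content-Type the client actually sees: an explicit type on the response wins;
  // otherwise the endpoint's declared default (octet-stream for a download) applies.
  def effectiveType(r: SimpleResponse, endpointDefault: String): String =
    r.contentType.getOrElse(endpointDefault)

  // Hypothetical helper in the spirit of UIUtils.buildErrorResponse: it always sets text/plain.
  def buildErrorResponse(status: Int, msg: String): SimpleResponse =
    SimpleResponse(status, Some(TextPlain), msg)

  def main(args: Array[String]): Unit = {
    val msg = "Event logs are not available for app: app-1."
    val withoutType = SimpleResponse(ServiceUnavailable, None, msg)
    val withType = buildErrorResponse(ServiceUnavailable, msg)
    println(effectiveType(withoutType, OctetStream)) // application/octet-stream
    println(effectiveType(withType, OctetStream))    // text/plain
  }
}
```

Centralizing the type in one helper means every error path on a download endpoint gets a renderable text response instead of inheriting the success path's media type.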

[GitHub] spark issue #21589: [SPARK-24591][CORE] Number of cores and executors in the...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21589 **[Test build #92389 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/92389/testReport)** for PR 21589 at commit [`2e6dce4`](https://github.com/apache/spark/commit/2e6dce489ea2e2cae36732d6a834302b2076bcb2).

[GitHub] spark pull request #21589: [SPARK-24591][CORE] Number of cores and executors...

Github user MaxGekk commented on a diff in the pull request:

https://github.com/apache/spark/pull/21589#discussion_r198590931

--- Diff: R/pkg/R/context.R ---
@@ -25,6 +25,22 @@ getMinPartitions <- function(sc, minPartitions) {
as.integer(minPartitions)
}
+#' Total number of CPU cores of all executors registered in the cluster at the moment.
+#'
+#' @param sc SparkContext to use
+#' @return current number of cores in the cluster.
+numCores <- function(sc) {
+ callJMethod(sc, "numCores")
+}
+
+#' Total number of executors registered in the cluster at the moment.
+#'
+#' @param sc SparkContext to use
+#' @return current number of executors in the cluster.
+numExecutors <- function(sc) {
+ callJMethod(sc, "numExecutors")
+}
+
--- End diff --

Thank you for pointing me to the example of `spark.addFile`. I changed `spark.numCores` and `spark.numExecutors` the same way.

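To illustrate the naming convention discussed for PR 21589, here is a small sketch with parameterless accessors named after the quantity (the way `SparkContext.defaultParallelism` is named) and the semantics described in the R docs above: total cores summed over the registered executors, plus the executor count. `ExecutorInfo` and `ClusterSnapshot` are hypothetical; this is not how Spark's JVM-side `numCores`/`numExecutors` are implemented, which the thread does not show.

```scala
// Hypothetical model of one executor registered with the cluster; not Spark's API.
final case class ExecutorInfo(id: String, cores: Int)

// Hypothetical snapshot class: accessors carry no `get` prefix, matching the
// style of SparkContext.defaultParallelism and defaultMinPartitions.
final class ClusterSnapshot(executors: Seq[ExecutorInfo]) {
  // Total number of CPU cores across all registered executors.
  def numCores: Int = executors.map(_.cores).sum

  // Number of registered executors.
  def numExecutors: Int = executors.size
}

object ClusterSnapshotDemo {
  def main(args: Array[String]): Unit = {
    val snapshot = new ClusterSnapshot(
      Seq(ExecutorInfo("1", 4), ExecutorInfo("2", 4), ExecutorInfo("3", 2)))
    println(snapshot.numCores)     // 10
    println(snapshot.numExecutors) // 3
  }
}
```

Declaring side-effect-free accessors as `def` without parentheses lets call sites read like properties (`snapshot.numCores`), the same way `sc.defaultParallelism` is used.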

[GitHub] spark issue #21644: [SPARK-24660][SHS] Show correct error pages when downloa...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21644 Merged build finished. Test PASSed.

[GitHub] spark issue #21644: [SPARK-24660][SHS] Show correct error pages when downloa...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21644 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/92384/ Test PASSed.

[GitHub] spark issue #18900: [SPARK-21687][SQL] Spark SQL should set createTime for H...

Github user gatorsmile commented on the issue: https://github.com/apache/spark/pull/18900 LGTM Thanks! Merged to master.

[GitHub] spark issue #21542: [SPARK-24529][Build][test-maven] Add spotbugs into maven...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21542 **[Test build #92391 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/92391/testReport)** for PR 21542 at commit [`1330fa6`](https://github.com/apache/spark/commit/1330fa6e3ad5ffd38e2f2c10c1561951a6ef221f).