xuanyuanaosheng opened a new issue, #6614:

URL: https://github.com/apache/cloudstack/issues/6614

##### ISSUE TYPE

* Bug Report

##### CLOUDSTACK VERSION

1. CloudStack 4.15.2.0
2. Ceph version 15.2.16
3. CloudStack agent OS version: Oracle Linux Server 8.3

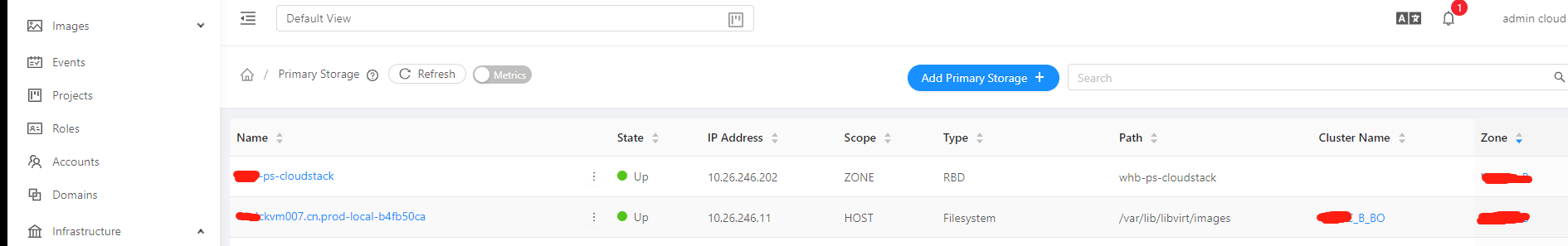

The CloudStack environment uses Ceph RBD and local storage as cluster primary storage.

CloudStack agent host information:

```
# rpm -qa | grep libvirt
libvirt-daemon-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-daemon-driver-storage-iscsi-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-daemon-driver-nwfilter-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-daemon-driver-storage-mpath-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-daemon-kvm-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-daemon-config-network-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-dbus-1.3.0-2.module+el8.3.0+7860+a7792d29.x86_64
libvirt-daemon-driver-interface-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-daemon-config-nwfilter-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-daemon-driver-storage-rbd-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-nss-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-gobject-3.0.0-1.el8.x86_64
libvirt-gconfig-3.0.0-1.el8.x86_64
libvirt-libs-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-daemon-driver-secret-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-daemon-driver-storage-gluster-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-docs-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
python3-libvirt-6.0.0-1.module+el8.3.0+7860+a7792d29.x86_64
libvirt-daemon-driver-qemu-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-daemon-driver-storage-disk-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-daemon-driver-storage-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-devel-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-daemon-driver-storage-core-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-daemon-driver-storage-iscsi-direct-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-bash-completion-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-lock-sanlock-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-glib-3.0.0-1.el8.x86_64
libvirt-client-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-admin-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-daemon-driver-network-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-daemon-driver-storage-logical-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-daemon-driver-nodedev-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64
libvirt-daemon-driver-storage-scsi-6.0.0-37.1.0.1.module+el8.5.0+20490+52363fdb.x86_64

# rpm -qa | grep cloudstack
cloudstack-agent-4.15.2.0-1.el8.x86_64
cloudstack-common-4.15.2.0-1.el8.x86_64

# rpm -qa | grep ceph
python3-ceph-argparse-15.2.16-0.el8.x86_64
python3-ceph-common-15.2.16-0.el8.x86_64
python3-cephfs-15.2.16-0.el8.x86_64
libcephfs2-15.2.16-0.el8.x86_64
ceph-common-15.2.16-0.el8.x86_64

# virsh list
 Id   Name         State
----------------------------
 1    v-25921-VM   running
 2    s-25922-VM   running
 3    r-25817-VM   running
```

The libvirt storage pools appear to be fine:

```
# virsh pool-list
 Name                                   State    Autostart
------------------------------------------------------------
 0edb0e58-6b90-3bee-8e7b-13007b0f7bf9   active   no
 b4fb50ca-79f2-46ba-bd1b-092f9bd14d4e   active   no
```
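In the error log in this report, the agent cannot find volume `e7eed1fb-e20d-4e9e-be93-7db00003de11` in pool `0edb0e58-6b90-3bee-8e7b-13007b0f7bf9`, even after refreshing that pool. The same check can be repeated by hand with the python3-libvirt bindings installed on the host, which also shows whether that pool is the Ceph RBD pool or the local one. This is a minimal diagnostic sketch, not CloudStack's own code path; `pool_type`, `volume_present` and `check_on_host` are hypothetical helper names, and it assumes the pools are registered in libvirt under the UUID names shown by `virsh pool-list`.

```python
"""Hypothetical diagnostic helpers: check a libvirt pool's type and whether a volume is listed in it."""
import xml.etree.ElementTree as ET


def pool_type(pool_xml: str) -> str:
    """Return the `type` attribute of a libvirt <pool> XML document, e.g. 'rbd' or 'dir'."""
    return ET.fromstring(pool_xml).get("type", "")


def volume_present(pool, vol_name: str) -> bool:
    """Refresh the pool, then report whether vol_name is among its volume names.

    `pool` is expected to behave like libvirt.virStoragePool
    (it must provide refresh(flags) and listVolumes()).
    """
    pool.refresh(0)
    return vol_name in pool.listVolumes()


def check_on_host(pool_name: str, vol_name: str) -> None:
    """Run on the KVM host; requires the python3-libvirt bindings and sufficient privileges."""
    import libvirt  # imported lazily so the helpers above can be used without it

    conn = libvirt.open("qemu:///system")
    try:
        pool = conn.storagePoolLookupByName(pool_name)
        print("pool type:", pool_type(pool.XMLDesc(0)))
        print("volume present:", volume_present(pool, vol_name))
    finally:
        conn.close()
```

On the agent host, `check_on_host("0edb0e58-6b90-3bee-8e7b-13007b0f7bf9", "e7eed1fb-e20d-4e9e-be93-7db00003de11")` prints the pool type and whether the volume is listed; `virsh pool-dumpxml`, `virsh pool-refresh` and `virsh vol-list` give the same information from the shell.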

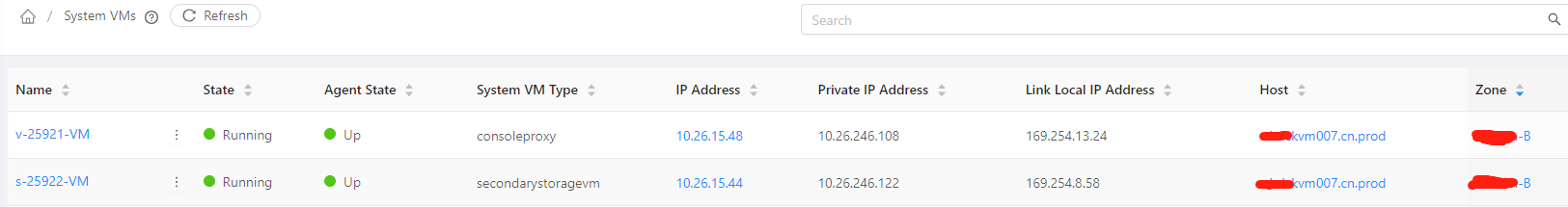

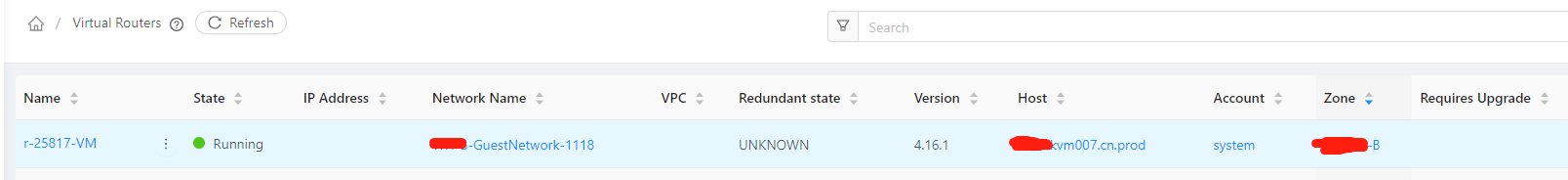

The system VMs and the virtual router also appear to be running normally:

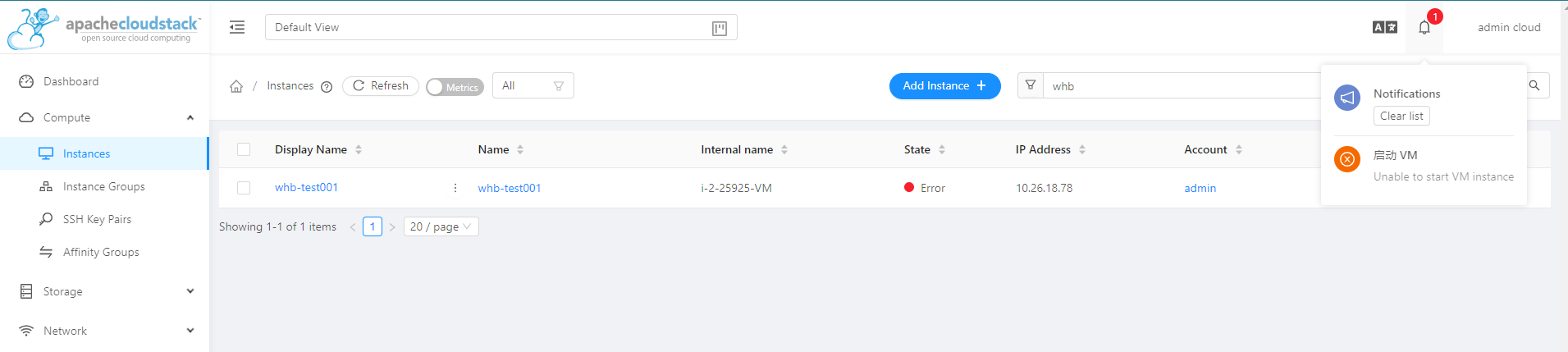

However, I cannot create a VM. The detailed error:

```
2022-08-04 18:06:37,920 DEBUG [cloud.agent.Agent] (Agent-Handler-4:null) (logid:) Received response: Seq 32-27: { Ans: , MgmtId: 345052215515, via: 32, Ver: v1, Flags: 100010, [{"com.cloud.agent.api.PingAnswer":{"_command":{"hostType":"Routing","hostId":"32","wait":"0","bypassHostMaintenance":"false"},"result":"true","wait":"0","bypassHostMaintenance":"false"}}] }
2022-08-04 18:07:04,391 INFO [direct.download.DirectTemplateDownloaderImpl] (agentRequest-Handler-4:null) (logid:ebc3bf86) No checksum provided, skipping checksum validation
2022-08-04 18:07:04,393 DEBUG [kvm.storage.LibvirtStorageAdaptor] (agentRequest-Handler-4:null) (logid:ebc3bf86) Could not find volume e7eed1fb-e20d-4e9e-be93-7db00003de11: Storage volume not found: no storage vol with matching name 'e7eed1fb-e20d-4e9e-be93-7db00003de11'
2022-08-04 18:07:04,394 DEBUG [kvm.storage.LibvirtStorageAdaptor] (agentRequest-Handler-4:null) (logid:ebc3bf86) Refreshing storage pool 0edb0e58-6b90-3bee-8e7b-13007b0f7bf9
2022-08-04 18:07:04,414 WARN [kvm.storage.KVMStorageProcessor] (agentRequest-Handler-4:null) (logid:ebc3bf86) Error downloading template 227 due to: Could not find volume e7eed1fb-e20d-4e9e-be93-7db00003de11: Storage volume not found: no storage vol with matching name 'e7eed1fb-e20d-4e9e-be93-7db00003de11'
2022-08-04 18:07:04,415 DEBUG [cloud.agent.Agent] (agentRequest-Handler-4:null) (logid:ebc3bf86) Seq 32-5530983292364390524: { Ans: , MgmtId: 345052215515, via: 32, Ver: v1, Flags: 10, [{"org.apache.cloudstack.agent.directdownload.DirectDownloadAnswer":{"retryOnOtherHosts":"true","result":"false","details":"Unable to download template: Could not find volume e7eed1fb-e20d-4e9e-be93-7db00003de11: Storage volume not found: no storage vol with matching name 'e7eed1fb-e20d-4e9e-be93-7db00003de11'","wait":"0","bypassHostMaintenance":"false"}}] }
2022-08-04 18:07:04,444 DEBUG [cloud.agent.Agent] (agentRequest-Handler-3:null) (logid:ebc3bf86) Request:Seq 32-5530983292364390525: { Cmd , MgmtId: 345052215515, via: 32, Ver: v1, Flags: 100011, [{"com.cloud.agent.api.StopCommand":{"isProxy":"false","checkBeforeCleanup":"false","forceStop":"false","volumesToDisconnect":[],"vmName":"i-2-25925-VM","executeInSequence":"false","wait":"0","bypassHostMaintenance":"false"}}] }
2022-08-04 18:07:04,444 DEBUG [cloud.agent.Agent] (agentRequest-Handler-3:null) (logid:ebc3bf86) Processing command: com.cloud.agent.api.StopCommand
2022-08-04 18:07:04,444 DEBUG [kvm.resource.LibvirtConnection] (agentRequest-Handler-3:null) (logid:ebc3bf86) Looking for libvirtd connection at: qemu:///system
2022-08-04 18:07:04,459 DEBUG [kvm.resource.LibvirtConnection] (agentRequest-Handler-3:null) (logid:ebc3bf86) Can not find KVM connection for Instance: i-2-25925-VM, continuing.
2022-08-04 18:07:04,459 DEBUG [kvm.resource.LibvirtConnection] (agentRequest-Handler-3:null) (logid:ebc3bf86) Looking for libvirtd connection at: lxc:///
2022-08-04 18:07:04,459 INFO [kvm.resource.LibvirtConnection] (agentRequest-Handler-3:null) (logid:ebc3bf86) No existing libvirtd connection found. Opening a new one
2022-08-04 18:07:04,462 DEBUG [kvm.resource.LibvirtConnection] (agentRequest-Handler-3:null) (logid:ebc3bf86) Can not find LXC connection for Instance: i-2-25925-VM, continuing.
2022-08-04 18:07:04,462 WARN [kvm.resource.LibvirtConnection] (agentRequest-Handler-3:null) (logid:ebc3bf86) Can not find a connection for Instance i-2-25925-VM. Assuming the default connection.
2022-08-04 18:07:04,462 DEBUG [kvm.resource.LibvirtConnection] (agentRequest-Handler-3:null) (logid:ebc3bf86) Looking for libvirtd connection at: qemu:///system
2022-08-04 18:07:04,465 DEBUG [kvm.resource.LibvirtComputingResource] (agentRequest-Handler-3:null) (logid:ebc3bf86) Failed to get dom xml: org.libvirt.LibvirtException: Domain not found: no domain with matching name 'i-2-25925-VM'
2022-08-04 18:07:04,465 DEBUG [kvm.resource.LibvirtComputingResource] (agentRequest-Handler-3:null) (logid:ebc3bf86) Failed to get dom xml: org.libvirt.LibvirtException: Domain not found: no domain with matching name 'i-2-25925-VM'
2022-08-04 18:07:04,466 DEBUG [kvm.resource.LibvirtComputingResource] (agentRequest-Handler-3:null) (logid:ebc3bf86) Failed to get dom xml: org.libvirt.LibvirtException: Domain not found: no domain with matching name 'i-2-25925-VM'
2022-08-04 18:07:04,466 DEBUG [kvm.resource.LibvirtComputingResource] (agentRequest-Handler-3:null) (logid:ebc3bf86) Executing: /usr/share/cloudstack-common/scripts/vm/network/security_group.py destroy_network_rules_for_vm --vmname i-2-25925-VM
2022-08-04 18:07:04,467 DEBUG [kvm.resource.LibvirtComputingResource] (agentRequest-Handler-3:null) (logid:ebc3bf86) Executing while with timeout : 1800000
2022-08-04 18:07:04,625 DEBUG [kvm.resource.LibvirtComputingResource] (agentRequest-Handler-3:null) (logid:ebc3bf86) Execution is successful.
2022-08-04 18:07:04,626 DEBUG [kvm.resource.LibvirtComputingResource] (agentRequest-Handler-3:null) (logid:ebc3bf86) ebtables: No chain/target/match by that name
ebtables: No chain/target/match by that name
ebtables: No chain/target/match by that name
ebtables: No chain/target/match by that name
ebtables: No chain/target/match by that name
ebtables: No chain/target/match by that name
iptables: No chain/target/match by that name.
iptables: No chain/target/match by that name.
iptables: No chain/target/match by that name.
iptables: No chain/target/match by that name.
iptables: No chain/target/match by that name.
iptables: No chain/target/match by that name.
ipset v7.1: The set with the given name does not exist
2022-08-04 18:07:04,628 DEBUG [kvm.resource.LibvirtComputingResource] (agentRequest-Handler-3:null) (logid:ebc3bf86) Failed to get vm :Domain not found: no domain with matching name 'i-2-25925-VM'
2022-08-04 18:07:04,628 DEBUG [kvm.resource.LibvirtComputingResource] (agentRequest-Handler-3:null) (logid:ebc3bf86) Try to stop the vm at first
2022-08-04 18:07:04,628 DEBUG [kvm.resource.LibvirtComputingResource] (agentRequest-Handler-3:null) (logid:ebc3bf86) VM i-2-25925-VM doesn't exist, no need to stop it
2022-08-04 18:07:04,628 WARN [kvm.resource.LibvirtKvmAgentHook] (agentRequest-Handler-3:null) (logid:ebc3bf86) Groovy script '/etc/cloudstack/agent/hooks/libvirt-vm-state-change.groovy' is not available. Transformations will not be applied.
2022-08-04 18:07:04,628 WARN [kvm.resource.LibvirtKvmAgentHook] (agentRequest-Handler-3:null) (logid:ebc3bf86) Groovy scripting engine is not initialized. Data transformation skipped.
2022-08-04 18:07:04,629 DEBUG [cloud.agent.Agent] (agentRequest-Handler-3:null) (logid:ebc3bf86) Seq 32-5530983292364390525: { Ans: , MgmtId: 345052215515, via: 32, Ver: v1, Flags: 10, [{"com.cloud.agent.api.StopAnswer":{"result":"true","wait":"0","bypassHostMaintenance":"false"}}] }
```
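To pull the relevant entries out of the full attached agent.log, a small parser can pair each "Error downloading template" warning with the storage pool the agent refreshed just before it under the same `logid`. This is a sketch that assumes one log entry per line and the entry layout seen in this log (timestamp, level, `[logger]`, `(thread)`, `(logid:...)`, message); the regular expressions, `DownloadFailure` and `find_download_failures` are hypothetical names, not part of CloudStack.

```python
import re
from typing import Dict, Iterable, List, NamedTuple, Optional

# Hypothetical pattern for one agent log entry, e.g.
# "2022-08-04 18:07:04,414 WARN [kvm.storage.KVMStorageProcessor] (agentRequest-Handler-4:null) (logid:ebc3bf86) ..."
LOG_LINE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3})\s+"
    r"(?P<level>[A-Z]+)\s+\[(?P<logger>[^\]]+)\]\s+"
    r"\((?P<thread>[^)]*)\)\s+\(logid:(?P<logid>[^)]*)\)\s?(?P<msg>.*)$"
)
UUID = r"[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}"
REFRESH = re.compile(rf"Refreshing storage pool (?P<pool>{UUID})")
FAILURE = re.compile(
    rf"Error downloading template (?P<template>\d+) due to: "
    rf"Could not find volume (?P<volume>{UUID})"
)


class DownloadFailure(NamedTuple):
    timestamp: str
    logid: str
    template_id: int
    volume: str
    pool: Optional[str]  # pool refreshed earlier under the same logid, if any


def find_download_failures(lines: Iterable[str]) -> List[DownloadFailure]:
    """Collect 'Error downloading template' entries and attach the pool refreshed for the same logid."""
    last_pool: Dict[str, str] = {}  # logid -> most recently refreshed pool UUID
    failures: List[DownloadFailure] = []
    for raw in lines:
        entry = LOG_LINE.match(raw.rstrip("\n"))
        if entry is None:
            continue  # not an entry header, e.g. ebtables/iptables output lines
        logid, msg = entry.group("logid"), entry.group("msg")
        refresh = REFRESH.search(msg)
        if refresh:
            last_pool[logid] = refresh.group("pool")
            continue
        failure = FAILURE.search(msg)
        if failure:
            failures.append(
                DownloadFailure(
                    timestamp=entry.group("ts"),
                    logid=logid,
                    template_id=int(failure.group("template")),
                    volume=failure.group("volume"),
                    pool=last_pool.get(logid),
                )
            )
    return failures
```

For example, `find_download_failures(open("agent.log"))` applied to the entries shown above reports template 227, volume `e7eed1fb-e20d-4e9e-be93-7db00003de11`, and pool `0edb0e58-6b90-3bee-8e7b-13007b0f7bf9`, which narrows the problem to that one pool.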

[agent.log](https://github.com/apache/cloudstack/files/9259863/agent.log)

I don't know why the VM cannot be created. Could you please give some advice?
