thetumbled opened a new pull request, #18390: URL: https://github.com/apache/pulsar/pull/18390

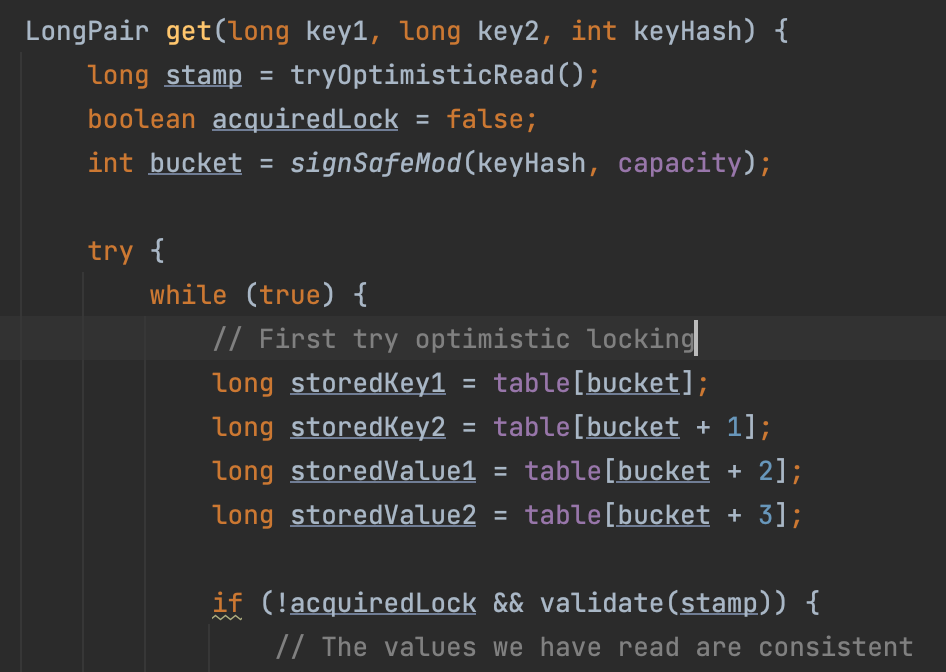

<!-- Either this PR fixes an issue, -->

Fixes #18388

### Motivation

`ConcurrentLongLongPairHashMap` uses optimistic locking to read data in a Section. If another thread is removing data from the same Section and triggers the shrink process, the index calculated from the dirty capacity can exceed the array size. An `ArrayIndexOutOfBoundsException` is then thrown, which is currently not handled. Eventually the connection with the client is closed.

### Modifications

Just catch the exception. If a thread reads a dirty capacity, it falls back to pessimistic reading.

### Verifying this change

- [ ] Make sure that the change passes the CI checks.

This change is already covered by existing tests, such as *(please describe tests)*.

### Does this pull request potentially affect one of the following parts:

*If the box was checked, please highlight the changes*

- [ ] Dependencies (add or upgrade a dependency)
- [ ] The public API
- [ ] The schema
- [ ] The default values of configurations
- [ ] The threading model
- [ ] The binary protocol
- [ ] The REST endpoints
- [ ] The admin CLI options
- [ ] Anything that affects deployment

### Documentation

<!-- DO NOT REMOVE THIS SECTION. CHECK THE PROPER BOX ONLY. -->

- [ ] `doc` <!-- Your PR contains doc changes. Please attach the local preview screenshots (run `sh start.sh` at `pulsar/site2/website`) to your PR description, or else your PR might not get merged. -->
- [ ] `doc-required` <!-- Your PR changes impact docs and you will update later -->
- [ ] `doc-not-needed` <!-- Your PR changes do not impact docs -->
- [ ] `doc-complete` <!-- Docs have been already added -->

### Matching PR in forked repository

PR in forked repository: <!-- ENTER URL HERE -->
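
The fallback described under Modifications can be sketched roughly as follows. This is a minimal illustration, not the code in this PR: it assumes the Section is guarded by `java.util.concurrent.locks.StampedLock`, and the class `OptimisticSection`, its fields, and its `put`/`get`/`shrink` methods are hypothetical simplifications (non-negative keys, no growth, no removal).

```java
import java.util.Arrays;
import java.util.concurrent.locks.StampedLock;

// Hypothetical, simplified stand-in for one Section of the map; not the Pulsar code.
final class OptimisticSection {
    static final long NOT_FOUND = -1L;
    private static final long EMPTY = -1L; // sketch assumption: keys are non-negative

    private final StampedLock lock = new StampedLock();
    private volatile long[] table; // layout: [key0, value0, key1, value1, ...]
    private volatile int capacity; // number of slots, always a power of two

    OptimisticSection(int capacity) {
        this.capacity = capacity;
        this.table = emptyTable(capacity);
    }

    long get(long key) {
        long stamp = lock.tryOptimisticRead();
        try {
            long value = probe(table, capacity, key);
            if (lock.validate(stamp)) {
                return value; // no writer interfered, so the optimistic result stands
            }
        } catch (ArrayIndexOutOfBoundsException e) {
            // A concurrent shrink left us with a stale capacity and a smaller array.
            // Ignore the exception and retry under the read lock below.
        }
        stamp = lock.readLock(); // pessimistic fallback
        try {
            return probe(table, capacity, key);
        } finally {
            lock.unlockRead(stamp);
        }
    }

    void put(long key, long value) {
        long stamp = lock.writeLock();
        try {
            insert(table, capacity, key, value);
        } finally {
            lock.unlockWrite(stamp);
        }
    }

    // Rehashes all entries into a smaller array; newCapacity must be a power of two.
    void shrink(int newCapacity) {
        long stamp = lock.writeLock();
        try {
            long[] old = table;
            long[] fresh = emptyTable(newCapacity);
            for (int i = 0; i < old.length; i += 2) {
                if (old[i] != EMPTY) {
                    insert(fresh, newCapacity, old[i], old[i + 1]);
                }
            }
            table = fresh;
            capacity = newCapacity;
        } finally {
            lock.unlockWrite(stamp);
        }
    }

    private static long[] emptyTable(int capacity) {
        long[] t = new long[2 * capacity];
        Arrays.fill(t, EMPTY);
        return t;
    }

    // Linear probing, bounded by cap so it terminates even on inconsistent data.
    private static long probe(long[] t, int cap, long key) {
        for (int i = 0; i < cap; i++) {
            int idx = 2 * (int) ((key + i) & (cap - 1));
            long k = t[idx]; // may throw if cap is stale relative to t
            if (k == key) {
                return t[idx + 1];
            }
            if (k == EMPTY) {
                return NOT_FOUND;
            }
        }
        return NOT_FOUND;
    }

    private static void insert(long[] t, int cap, long key, long value) {
        for (int i = 0; i < cap; i++) {
            int idx = 2 * (int) ((key + i) & (cap - 1));
            if (t[idx] == EMPTY || t[idx] == key) {
                t[idx] = key;
                t[idx + 1] = value;
                return;
            }
        }
        throw new IllegalStateException("table is full");
    }
}
```

In this sketch the exception is treated the same way as a failed `validate`: the optimistic result is discarded and the lookup is repeated under the read lock, where capacity and array are guaranteed to be consistent.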