[ https://issues.apache.org/jira/browse/HDFS-16575?focusedWorklogId=768305&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-768305 ]

ASF GitHub Bot logged work on HDFS-16575:

-----------------------------------------

Author: ASF GitHub Bot

Created on: 10/May/22 04:09

Start Date: 10/May/22 04:09

Worklog Time Spent: 10m

Work Description: liubingxing opened a new pull request, #4295:

URL: https://github.com/apache/hadoop/pull/4295

The SPS may misjudge a block's placement in the following scenario:

1. Create a file with one block whose 3 replicas are all of **DISK** type: [DISK, DISK, DISK].

2. Set the **ALL_SSD** storage policy on this file.

3. While one of the replica nodes is being **decommissioned**, the block's replicas may become [DISK, DISK, **SSD**, DISK].

4. Set the **HOT** storage policy on this file and run satisfy storage policy on it.

5. After the decommissioned node goes offline, the replicas end up as [DISK, DISK, SSD] instead of [DISK, DISK, DISK].

The reason is that the SPS gets the block's replication count from FileStatus.getReplication(), which is not the real number of replicas of the block.

So this block is ignored, because it already has 3 replicas of DISK type (one of them on a decommissioning node).

I think we can use blockInfo.getLocations().length to count the block's replicas instead of FileStatus.getReplication().
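To make the misjudgment concrete, here is a minimal Java sketch under simplifying assumptions. It is not the actual SPS code: the HOT policy is reduced to "every replica on DISK", and the names `SpsReplicaCountSketch`, `hotPolicyTypes`, `decide` and the local `StorageType` enum are hypothetical stand-ins. It matches expected storage types against the existing ones one-for-one and treats the block as already satisfied when no expected type is left unmet.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

public class SpsReplicaCountSketch {

    // Hypothetical stand-in for HDFS storage types (only the two used here).
    enum StorageType { DISK, SSD }

    // Hypothetical simplification of the HOT policy: every replica on DISK.
    static List<StorageType> hotPolicyTypes(int replicaCount) {
        return new ArrayList<>(Collections.nCopies(replicaCount, StorageType.DISK));
    }

    // Removes the overlap between expected and existing types one-for-one.
    // If no expected type is left unmet, the block is treated as satisfied
    // and skipped; otherwise unmatched existing replicas are move candidates.
    static String decide(int replicaCount, List<StorageType> existing) {
        List<StorageType> unmet = hotPolicyTypes(replicaCount);
        List<StorageType> movable = new ArrayList<>();
        for (StorageType t : existing) {
            // List.remove(Object) drops one matching entry and reports success.
            if (!unmet.remove(t)) {
                movable.add(t);
            }
        }
        if (unmet.isEmpty()) {
            return "skip";
        }
        return "move " + movable + " -> " + unmet;
    }

    public static void main(String[] args) {
        // Replica storage types while one DISK replica is on a decommissioning node.
        List<StorageType> locations = List.of(
                StorageType.DISK, StorageType.DISK, StorageType.SSD, StorageType.DISK);
        int fileReplication = 3;             // what FileStatus.getReplication() reports
        int realReplicas = locations.size(); // analogous to blockInfo.getLocations().length
        System.out.println("getReplication(): " + decide(fileReplication, locations));
        System.out.println("locations length: " + decide(realReplicas, locations));
    }
}
```

With the count taken from getReplication() (3), the three DISK replicas, one of them on the decommissioning node, cover every expected type, so the sketch returns `skip`. With the count taken from the block's actual locations (4), one DISK slot stays unmet and the SSD replica becomes the move candidate (`move [SSD] -> [DISK]`).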

Issue Time Tracking

-------------------

Worklog Id: (was: 768305)

Remaining Estimate: 0h

Time Spent: 10m

> [SPS]: Should use real replication num instead getReplication from namenode

> ---------------------------------------------------------------------------

>

> Key: HDFS-16575

> URL: https://issues.apache.org/jira/browse/HDFS-16575

> Project: Hadoop HDFS

> Issue Type: Bug

> Reporter: qinyuren

> Priority: Major

> Attachments: image-2022-05-10-11-21-13-627.png,

> image-2022-05-10-11-21-31-987.png

>

> Time Spent: 10m

> Remaining Estimate: 0h

>

> The SPS may misjudge a block's placement in the following scenario:

> # Create a file with one block whose 3 replicas are all of {color:#de350b}DISK{color} type: [DISK, DISK, DISK].

> # Set the {color:#de350b}ALL_SSD{color} storage policy on this file.

> # While one of the replica nodes is being {color:#de350b}decommissioned{color}, the block's replicas may become [DISK, DISK, {color:#de350b}SSD{color}, DISK].

> # Set the {color:#de350b}HOT{color} storage policy on this file and run satisfy storage policy on it.

> # After the decommissioned node goes offline, the replicas end up as [DISK, DISK, SSD] instead of [DISK, DISK, DISK].

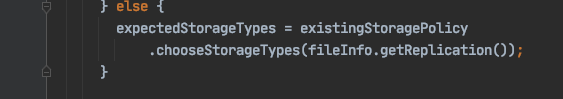

> The reason is that the SPS gets the block's replication count from FileStatus.getReplication(), which is not the real number of replicas of the block.

> !image-2022-05-10-11-21-13-627.png|width=432,height=76!

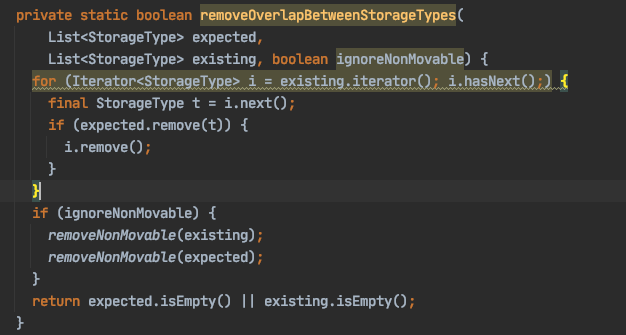

> So this block is ignored, because it already has 3 replicas of DISK type (one of them on a decommissioning node).

> !image-2022-05-10-11-21-31-987.png|width=334,height=179!

> I think we can use blockInfo.getLocations().length to count the block's replicas instead of FileStatus.getReplication().

--

This message was sent by Atlassian Jira

(v8.20.7#820007)
