Silecne666 opened a new issue, #25163: URL: https://github.com/apache/shardingsphere/issues/25163

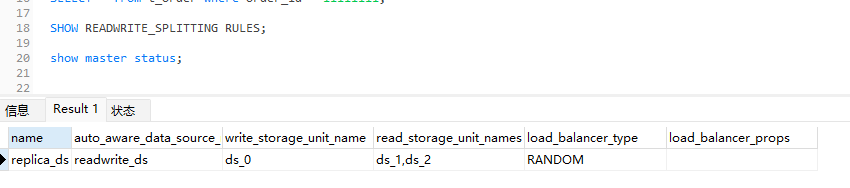

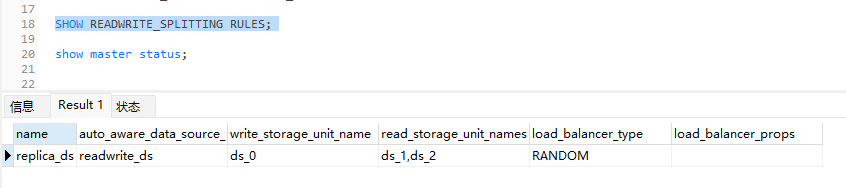

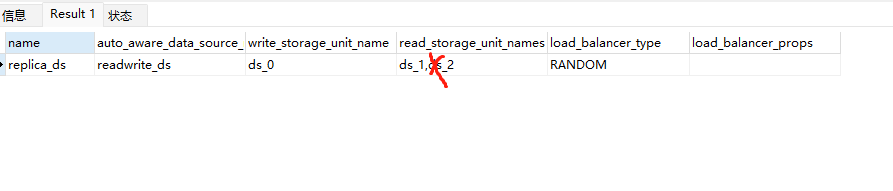

## Bug Report

I am using ShardingSphere-Proxy with MySQL MGR, with one master and two slaves. When I simulate a slave node going down, the proxy does not detect that the slave node is unavailable and still routes queries to the down node.

**For English only**, other languages will not accept.

Before report a bug, make sure you have:

- Searched open and closed [GitHub issues](https://github.com/apache/shardingsphere/issues).
- Read documentation: [ShardingSphere Doc](https://shardingsphere.apache.org/document/current/en/overview).

Please pay attention on issues you submitted, because we maybe need more details.
If no response anymore and we cannot reproduce it on current information, we will **close it**.

Please answer these questions before submitting your issue. Thanks!

### Which version of ShardingSphere did you use?

5.3.2

### Which project did you use? ShardingSphere-JDBC or ShardingSphere-Proxy?

ShardingSphere-Proxy

### Expected behavior

After a node goes down, queries are no longer routed to that node.

### Actual behavior

Queries are still routed according to the routing strategy, including to the node that is down.

### Reason analyze (If you can)

### Steps to reproduce the behavior, such as: SQL to execute, sharding rule configuration, when exception occur etc.

server.yaml

```
authority:
  users:
    - user: root
      password: root
    - user: sharding
      password: sharding
  privilege:
    type: ALL_PERMITTED

props:
  max-connections-size-per-query: 1
  kernel-executor-size: 16  # Infinite by default.
  proxy-frontend-flush-threshold: 128  # The default value is 128.
  sql-show: true
  proxy.transaction.type: LOCAL  # Defaults to LOCAL transactions.
  proxy.opentracing.enabled: false  # Whether to enable tracing; disabled by default.
  query.with.cipher.column: true
  check.table.metadata.enabled: true  # Whether to check sharded-table metadata consistency at startup; default: false.
  overwrite: true
  proxy-mysql-default-version: 8.0.26
  allow.range.query.with.inline.sharding: true
```

config-database-discovery.yaml

```
databaseName: database_discovery_db

dataSources:
  ds_0:
    url: jdbc:mysql://server02:3306/demo_primary_ds?serverTimezone=UTC&useSSL=false
    username: root
    password: 123456
    connectionTimeoutMilliseconds: 3000
    idleTimeoutMilliseconds: 60000
    maxLifetimeMilliseconds: 1800000
    maxPoolSize: 50
    minPoolSize: 1
  ds_1:
    url: jdbc:mysql://10.254.1.225:3306/demo_primary_ds?serverTimezone=UTC&useSSL=false
    username: root
    password: 123456
    connectionTimeoutMilliseconds: 3000
    idleTimeoutMilliseconds: 60000
    maxLifetimeMilliseconds: 1800000
    maxPoolSize: 50
    minPoolSize: 1
  ds_2:
    url: jdbc:mysql://server01:3306/demo_primary_ds?serverTimezone=UTC&useSSL=false
    username: root
    password: 123456
    connectionTimeoutMilliseconds: 3000
    idleTimeoutMilliseconds: 60000
    maxLifetimeMilliseconds: 1800000
    maxPoolSize: 50
    minPoolSize: 1

rules:
- !READWRITE_SPLITTING
  dataSources:
    replica_ds:
      dynamicStrategy:
        autoAwareDataSourceName: readwrite_ds
        writeDataSourceQueryEnabled: true
      loadBalancerName: random
  loadBalancers:
    random:
      type: RANDOM
- !DB_DISCOVERY
  dataSources:
    readwrite_ds:
      dataSourceNames:
        - ds_0
        - ds_1
        - ds_2
      discoveryHeartbeatName: mgr_heartbeat
      discoveryTypeName: mgr
  discoveryHeartbeats:
    mgr_heartbeat:
      props:
        keep-alive-cron: '0/5 * * * * ?'
  discoveryTypes:
    mgr:
      type: MySQL.MGR
      props:
        group-name: aadaaaaa-adda-adda-aaaa-aaaaaaddaaaa
```

Normal situation

```
mysql> select * from performance_schema.replication_group_members;
+---------------------------+--------------------------------------+--------------+-------------+--------------+-------------+----------------+
| CHANNEL_NAME              | MEMBER_ID                            | MEMBER_HOST  | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |
+---------------------------+--------------------------------------+--------------+-------------+--------------+-------------+----------------+
| group_replication_applier | a79ecc9f-d41f-11ed-b0f9-00163e126f06 | server01     |        3306 | ONLINE       | SECONDARY   | 8.0.26         |
| group_replication_applier | ba91cbfd-d420-11ed-a466-00163e0abb33 | 10.254.1.225 |        3306 | ONLINE       | SECONDARY   | 8.0.26         |
| group_replication_applier | efb22439-d41d-11ed-89bd-00163e0a040f | server02     |        3306 | ONLINE       | PRIMARY     | 8.0.26         |
+---------------------------+--------------------------------------+--------------+-------------+--------------+-------------+----------------+
3 rows in set (0.00 sec)
```

Simulate slave node downtime

```
mysql> select * from performance_schema.replication_group_members;
+---------------------------+--------------------------------------+--------------+-------------+--------------+-------------+----------------+
| CHANNEL_NAME              | MEMBER_ID                            | MEMBER_HOST  | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |
+---------------------------+--------------------------------------+--------------+-------------+--------------+-------------+----------------+
| group_replication_applier | ba91cbfd-d420-11ed-a466-00163e0abb33 | 10.254.1.225 |        3306 | ONLINE       | SECONDARY   | 8.0.26         |
| group_replication_applier | efb22439-d41d-11ed-89bd-00163e0a040f | server02     |        3306 | ONLINE       | PRIMARY     | 8.0.26         |
+---------------------------+--------------------------------------+--------------+-------------+--------------+-------------+----------------+
2 rows in set (0.00 sec)
```

Expected

### Example codes for reproduce this issue (such as a github link).
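
No reproduction project is linked. As an illustration only, the expected behavior can be sketched in Python. This is not ShardingSphere code: the function `routable_replicas` and the constants below are hypothetical, the host-to-data-source mapping is copied from config-database-discovery.yaml, and the member rows are copied from the `replication_group_members` output after the simulated failure. The sketch ignores `writeDataSourceQueryEnabled` and only considers read replicas.

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical helper, not part of ShardingSphere. It only illustrates the
# filtering this report expects from MGR-based database discovery.
def routable_replicas(
    data_sources: Dict[str, Tuple[str, int]],
    group_members: Iterable[Tuple[str, int, str, str]],
) -> List[str]:
    """Return the names of data sources that are ONLINE SECONDARY group members."""
    online_secondaries = {
        (host, port)
        for host, port, state, role in group_members
        if state == "ONLINE" and role == "SECONDARY"
    }
    return sorted(
        name for name, addr in data_sources.items() if addr in online_secondaries
    )

# Data sources as configured in config-database-discovery.yaml (host, port).
DATA_SOURCES = {
    "ds_0": ("server02", 3306),
    "ds_1": ("10.254.1.225", 3306),
    "ds_2": ("server01", 3306),
}

# (MEMBER_HOST, MEMBER_PORT, MEMBER_STATE, MEMBER_ROLE) with all nodes up.
MEMBERS_NORMAL = [
    ("server01", 3306, "ONLINE", "SECONDARY"),
    ("10.254.1.225", 3306, "ONLINE", "SECONDARY"),
    ("server02", 3306, "ONLINE", "PRIMARY"),
]

# Same view after server01 goes down: its row is no longer reported.
MEMBERS_AFTER_FAILURE = [
    ("10.254.1.225", 3306, "ONLINE", "SECONDARY"),
    ("server02", 3306, "ONLINE", "PRIMARY"),
]

print(routable_replicas(DATA_SOURCES, MEMBERS_NORMAL))         # ['ds_1', 'ds_2']
print(routable_replicas(DATA_SOURCES, MEMBERS_AFTER_FAILURE))  # ['ds_1']
```

Under these assumptions, `ds_2` (server01) should drop out of the read candidates once its row disappears from the group membership view, which is the behavior this report expects but does not observe.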