sandeep-katta commented on a change in pull request #29649:

URL: https://github.com/apache/spark/pull/29649#discussion_r484018660

##########

File path:

sql/hive/src/main/scala/org/apache/spark/sql/hive/client/HiveShim.scala

##########

@@ -1329,8 +1329,7 @@ private[client] class Shim_v3_0 extends Shim_v2_3 {

isSrcLocal: Boolean): Unit = {

val session = SparkSession.getActiveSession

assert(session.nonEmpty)

- val database = session.get.sessionState.catalog.getCurrentDatabase

- val table = hive.getTable(database, tableName)

+ val table = hive.getTable(tableName)

Review comment:

This should not be a problem, for the reason below.

Spark prefixes the database name to the table name, so Hive can resolve the database from the table name.

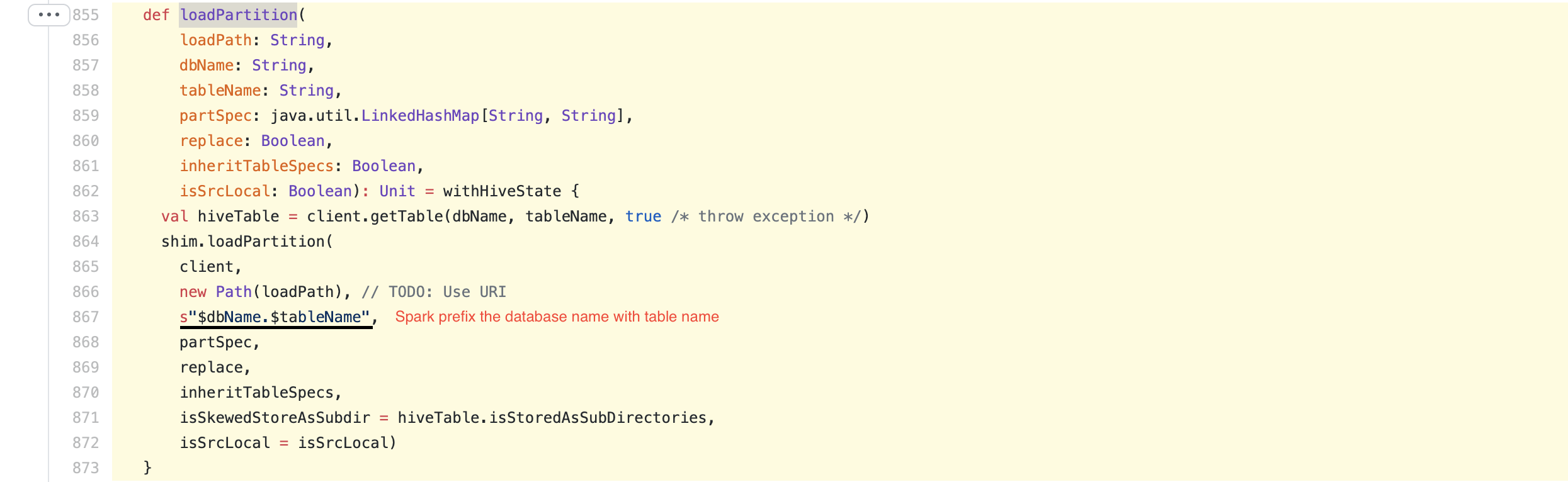

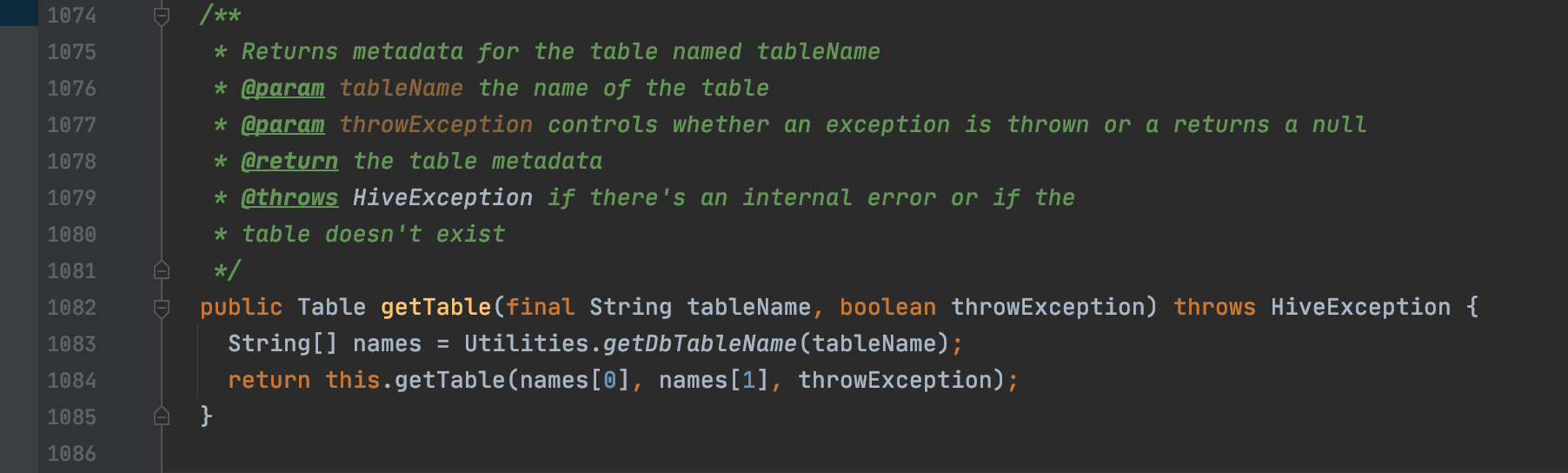

**Spark Code**

https://github.com/apache/spark/blob/master/sql/hive/src/main/scala/org/apache/spark/sql/hive/client/HiveClientImpl.scala#L855-L873

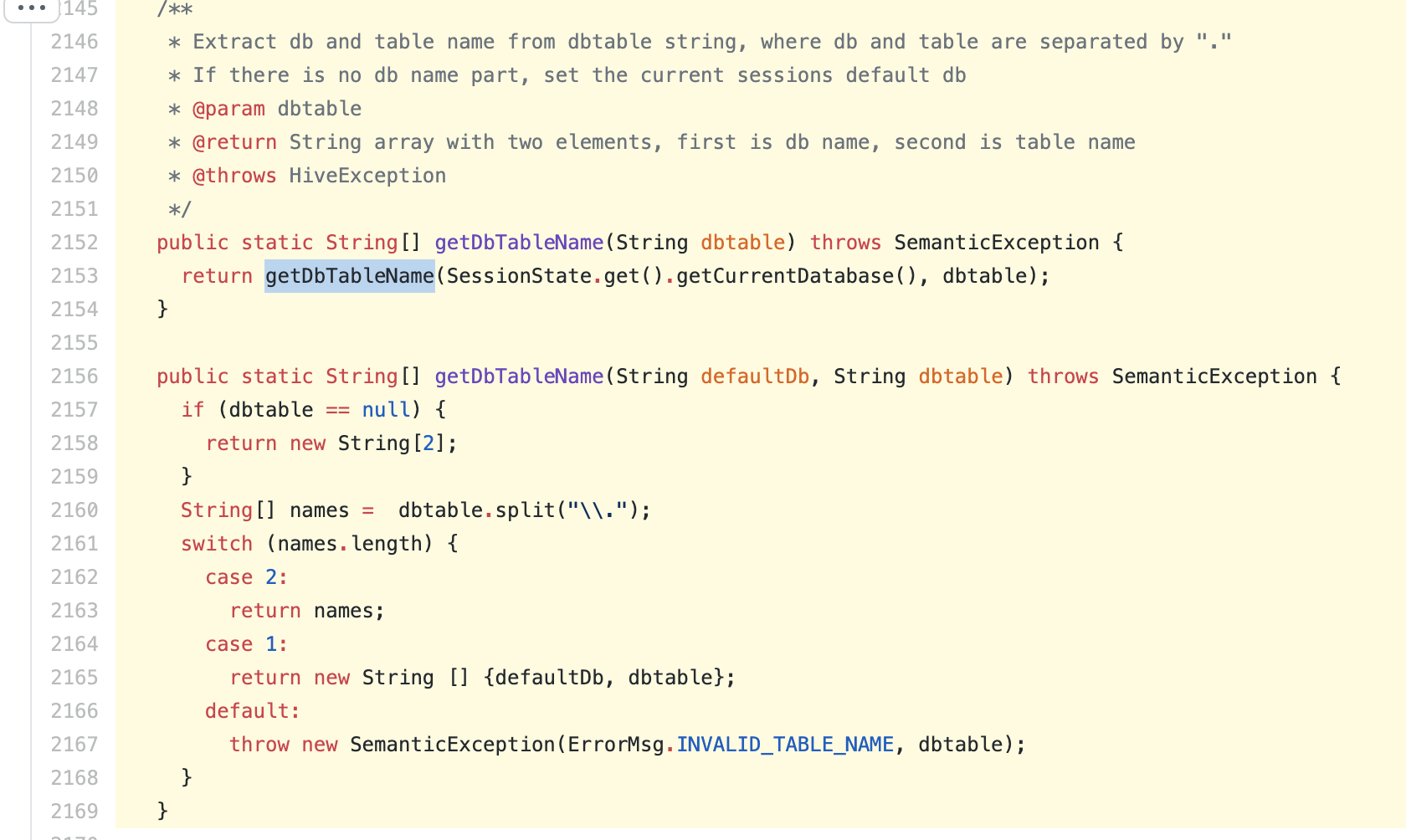

**Hive Code**

https://github.com/apache/hive/blob/rel/release-3.1.0/ql/src/java/org/apache/hadoop/hive/ql/exec/Utilities.java#L2145-L2169
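
To make the resolution step concrete, here is a minimal sketch of how a database-qualified table name can be split back into its parts. It is only an illustration of the idea, not the actual Hive or Spark code: `QualifiedNameSketch`, `splitDbTable` and the `currentDb` parameter are hypothetical names, and the fallback to the current database for an unqualified name is an assumption about the intended behaviour.

```scala
// Hypothetical helper, not a Spark or Hive API: it only illustrates how a
// "db.table" string carries enough information to recover the database.
object QualifiedNameSketch {

  // Splits an optionally database-qualified table name into (database, table).
  // An unqualified name falls back to `currentDb` (assumed behaviour).
  def splitDbTable(name: String, currentDb: String): (String, String) =
    name.split("\\.") match {
      case Array(table)     => (currentDb, table) // no prefix: use the current database
      case Array(db, table) => (db, table)        // prefix present: use it directly
      case _ =>
        throw new IllegalArgumentException(s"Invalid table name: $name")
    }

  def main(args: Array[String]): Unit = {
    // Qualified name, as Spark is said to pass it in this code path.
    println(splitDbTable("sales.orders", "default"))
    // Unqualified name, resolved against the current database.
    println(splitDbTable("orders", "default"))
  }
}
```

Because the name Spark passes already carries the database prefix, the first case is the one that matters here, which is why the explicit `database` argument can be dropped.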

P.S.: The logic above already works with the Hive 2.1 support.
