gengliangwang commented on a change in pull request #33615:

URL: https://github.com/apache/spark/pull/33615#discussion_r683232709

##########

File path: docs/configuration.md

##########

@@ -3134,3 +3134,111 @@ The stage level scheduling feature allows users to specify task and executor res

This is only available for the RDD API in Scala, Java, and Python. It is
available on YARN and Kubernetes when dynamic allocation is enabled. See the
[YARN](running-on-yarn.html#stage-level-scheduling-overview) page or
[Kubernetes](running-on-kubernetes.html#stage-level-scheduling-overview) page
for more implementation details.

See the `RDD.withResources` and `ResourceProfileBuilder` APIs for using this
feature. The current implementation acquires new executors for each
`ResourceProfile` created, and the match currently has to be exact: Spark does
not try to fit tasks that require a different ResourceProfile into an executor
that was created with another one. Executors that are not in use will time out
as idle under the dynamic allocation logic. The default configuration for this
feature is to only allow one ResourceProfile per stage. If the user associates
more than one ResourceProfile with an RDD, Spark will throw an exception by
default. See config `spark.scheduler.resource.profileMergeConflicts` to control
that behavior. The current merge strategy Spark implements when
`spark.scheduler.resource.profileMergeConflicts` is enabled is a simple max of
each resource within the conflicting ResourceProfiles. Spark will create a new
ResourceProfile with the max of each of the resources.
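The merge rule described above can be modeled in a few lines. This is a simplified sketch, not Spark's implementation: profiles are plain dicts keyed by hypothetical resource names (`executor.cores`, `executor.memoryMb`, `task.gpu`), and `merge_profiles_by_max` / `resolve_stage_profile` are illustrative helpers. It shows the default error on conflicting profiles and the per-resource max when merging is enabled.

```python
# Toy model of the "simple max" merge used when
# spark.scheduler.resource.profileMergeConflicts is enabled.
# Hypothetical: profiles are plain dicts, not Spark ResourceProfile objects.
from typing import Dict, List

Profile = Dict[str, float]  # hypothetical: resource name -> requested amount


def merge_profiles_by_max(profiles: List[Profile]) -> Profile:
    """Return a new profile holding, for each resource, the max amount requested."""
    merged: Profile = {}
    for profile in profiles:
        for resource, amount in profile.items():
            # Keep the larger request when two profiles ask for the same resource.
            merged[resource] = max(merged.get(resource, amount), amount)
    return merged


def resolve_stage_profile(profiles: List[Profile], merge_conflicts: bool) -> Profile:
    """Mimic the default: one profile per stage unless merging is enabled."""
    distinct: List[Profile] = []
    for p in profiles:
        if p not in distinct:
            distinct.append(p)
    if len(distinct) <= 1:
        return distinct[0] if distinct else {}
    if not merge_conflicts:
        # Default behavior: conflicting profiles in one stage are an error.
        raise ValueError("multiple ResourceProfiles found for one stage")
    return merge_profiles_by_max(distinct)


if __name__ == "__main__":
    a = {"executor.cores": 4, "executor.memoryMb": 6144, "task.gpu": 1}
    b = {"executor.cores": 2, "executor.memoryMb": 8192}
    print(resolve_stage_profile([a, b], merge_conflicts=True))
    # {'executor.cores': 4, 'executor.memoryMb': 8192, 'task.gpu': 1}
```

Taking the max per resource guarantees that the merged executor can run tasks from either original profile, at the cost of possibly over-provisioning.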

+

+# Push-based shuffle overview

+

+Push-based shuffle helps improve the reliability and performance of Spark shuffle. It takes a best-effort approach to pushing the shuffle blocks generated by the map tasks to remote shuffle services, where they are merged per shuffle partition. Reduce tasks fetch a combination of merged shuffle partitions and original shuffle blocks as their input data, which converts small random disk reads by the shuffle services into large sequential reads. The possibility of better data locality for reduce tasks additionally helps minimize network IO.

+

+<p><b>Currently, push-based shuffle is only supported for Spark on YARN with the external shuffle service.</b></p>
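To make the push/merge/fetch flow concrete, here is a toy in-memory model, assuming every map task produces one block per reduce partition and that some pushes fail. It is not Spark's implementation: the helper names (`push_and_merge`, `reducer_reads`) and the read labels are hypothetical, and how Spark actually tracks merged blocks is not modeled.

```python
# Toy, in-memory model of push-based shuffle (not Spark's implementation).
# Map tasks push blocks to a per-partition merger on a best-effort basis;
# reducers read the merged block plus any original blocks whose push failed.
from collections import defaultdict
from typing import Dict, List, Set, Tuple

BlockId = Tuple[int, int]  # hypothetical: (map_id, reduce_partition)


def push_and_merge(num_maps: int, num_partitions: int, failed_pushes: Set[BlockId]):
    """Return (merged, unmerged): map ids merged or left unmerged per partition."""
    merged: Dict[int, List[int]] = defaultdict(list)
    unmerged: Dict[int, List[int]] = defaultdict(list)
    for map_id in range(num_maps):
        for partition in range(num_partitions):
            if (map_id, partition) in failed_pushes:
                # Best effort: a failed push leaves only the original block.
                unmerged[partition].append(map_id)
            else:
                # The block is appended to the merged data for this partition.
                merged[partition].append(map_id)
    return merged, unmerged


def reducer_reads(partition: int, merged, unmerged) -> List[str]:
    """List the reads a reducer issues: one merged read plus small fallbacks."""
    reads = []
    if merged.get(partition):
        reads.append(f"merged:{partition}")
    reads.extend(f"block:{m}:{partition}" for m in unmerged.get(partition, []))
    return reads


if __name__ == "__main__":
    merged, unmerged = push_and_merge(num_maps=100, num_partitions=2, failed_pushes={(7, 0)})
    print(reducer_reads(0, merged, unmerged))  # ['merged:0', 'block:7:0']
```

With 100 map tasks and one failed push for partition 0, the reducer for that partition issues two reads (one merged block plus the single unmerged original) instead of 100 small ones, and every map output is still read exactly once.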

+

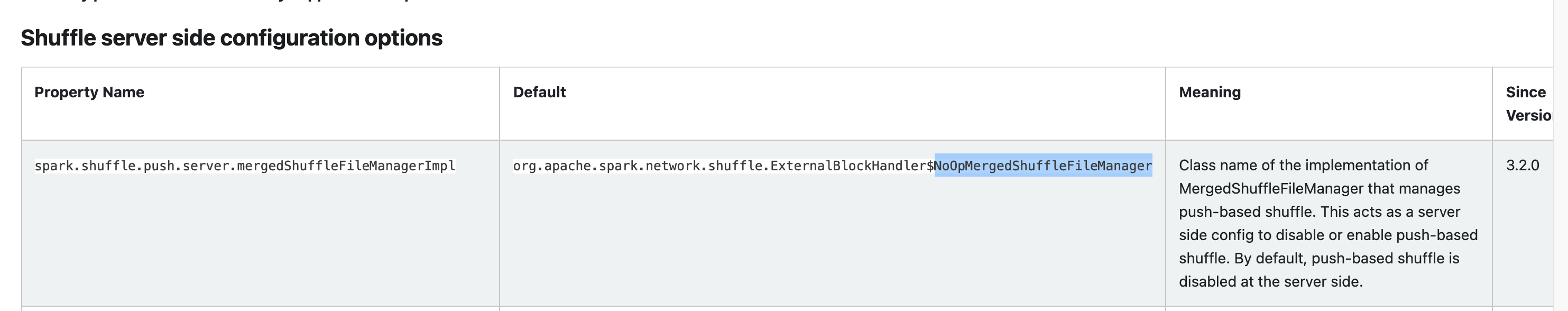

+### Shuffle server-side configuration options

+

+<table class="table">

+<tr><th>Property Name</th><th>Default</th><th>Meaning</th><th>Since Version</th></tr>

+<tr>

+ <td><code>spark.shuffle.push.server.mergedShuffleFileManagerImpl</code></td>

+ <td>

+    <code>org.apache.spark.network.shuffle.ExternalBlockHandler$NoOpMergedShuffleFileManager</code>

Review comment:

It can be tricky to include `$` in a configuration value. Also, the config value is so long that it affects the readability of the doc:
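An illustration of the `$` concern: when this default value is passed on a shell command line (for example via `spark-submit --conf`), an unquoted or double-quoted `$NoOpMergedShuffleFileManager` is treated as a shell variable and silently expands to an empty string. The sketch below assumes a POSIX `sh` is available; `echo_through_shell` is a hypothetical helper that echoes the argument through the shell, with and without `shlex.quote`.

```python
# Shows how an unquoted `$` in a config value is expanded by the shell.
# Assumption: a POSIX `sh` is on PATH; echo stands in for spark-submit.
import shlex
import subprocess

CONF_KEY = "spark.shuffle.push.server.mergedShuffleFileManagerImpl"
CONF_VALUE = ("org.apache.spark.network.shuffle."
              "ExternalBlockHandler$NoOpMergedShuffleFileManager")


def echo_through_shell(arg_text: str) -> str:
    """Run `echo <arg_text>` in sh and return what the shell actually passed."""
    result = subprocess.run(["sh", "-c", f"echo {arg_text}"],
                            capture_output=True, text=True, check=True)
    return result.stdout.strip()


naive = f"{CONF_KEY}={CONF_VALUE}"                # `$NoOp...` is expanded away
quoted = shlex.quote(f"{CONF_KEY}={CONF_VALUE}")  # single-quoted, passed literally

if __name__ == "__main__":
    print(echo_through_shell(naive))   # the class name is truncated at `$`
    print(echo_through_shell(quoted))  # the full class name survives
```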

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org
