[spark] branch master updated (4d4c00c -> 0ce5519)

This is an automated email from the ASF dual-hosted git repository. gurwls223 pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git. from 4d4c00c [SPARK-31151][SQL][DOC] Reorganize the migration guide of SQL add 0ce5519 [SPARK-31153][BUILD] Cleanup several failures in lint-python No new revisions were added by this update. Summary of changes: dev/lint-python | 35 +++ 1 file changed, 19 insertions(+), 16 deletions(-) - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[GitHub] [spark-website] gatorsmile commented on issue #263: Add "Amend Spark's Semantic Versioning Policy"

gatorsmile commented on issue #263: Add "Amend Spark's Semantic Versioning Policy" URL: https://github.com/apache/spark-website/pull/263#issuecomment-599152558 Thanks! Merged This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[GitHub] [spark-website] gatorsmile merged pull request #263: Add "Amend Spark's Semantic Versioning Policy"

gatorsmile merged pull request #263: Add "Amend Spark's Semantic Versioning Policy" URL: https://github.com/apache/spark-website/pull/263 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark-website] branch asf-site updated: Add "Amend Spark's Semantic Versioning Policy" #263

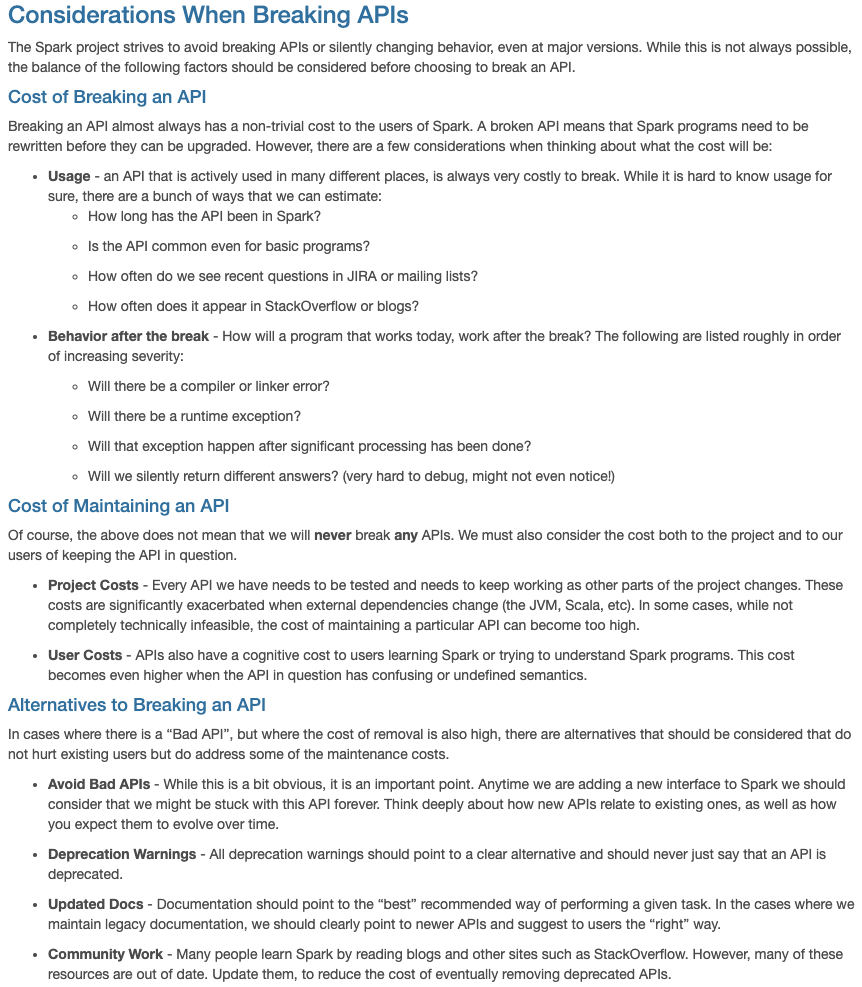

This is an automated email from the ASF dual-hosted git repository. lixiao pushed a commit to branch asf-site in repository https://gitbox.apache.org/repos/asf/spark-website.git The following commit(s) were added to refs/heads/asf-site by this push: new 6f1e0de Add "Amend Spark's Semantic Versioning Policy" #263 6f1e0de is described below commit 6f1e0deb6632f75ad0492ffba372f1ebb828ddfb Author: Xiao Li AuthorDate: Sat Mar 14 17:40:30 2020 -0700 Add "Amend Spark's Semantic Versioning Policy" #263 The vote of "Amend Spark's Semantic Versioning Policy" passed in the dev mailing list http://apache-spark-developers-list.1001551.n3.nabble.com/VOTE-Amend-Spark-s-Semantic-Versioning-Policy-td28988.html This PR is to add it to the versioning-policy page.  --- site/versioning-policy.html | 77 + versioning-policy.md| 47 +++ 2 files changed, 124 insertions(+) diff --git a/site/versioning-policy.html b/site/versioning-policy.html index 34547e8..679e9b2 100644 --- a/site/versioning-policy.html +++ b/site/versioning-policy.html @@ -245,6 +245,83 @@ maximum compatibility. Code should not be merged into the project as expe a plan to change the API later, because users expect the maximum compatibility from all available APIs. +Considerations When Breaking APIs + +The Spark project strives to avoid breaking APIs or silently changing behavior, even at major versions. While this is not always possible, the balance of the following factors should be considered before choosing to break an API. + +Cost of Breaking an API + +Breaking an API almost always has a non-trivial cost to the users of Spark. A broken API means that Spark programs need to be rewritten before they can be upgraded. However, there are a few considerations when thinking about what the cost will be: + + + Usage - an API that is actively used in many different places, is always very costly to break. While it is hard to know usage for sure, there are a bunch of ways that we can estimate: + + +How long has the API been in Spark? + + +Is the API common even for basic programs? + + +How often do we see recent questions in JIRA or mailing lists? + + +How often does it appear in StackOverflow or blogs? + + + + +Behavior after the break - How will a program that works today, work after the break? The following are listed roughly in order of increasing severity: + + + +Will there be a compiler or linker error? + + +Will there be a runtime exception? + + +Will that exception happen after significant processing has been done? + + +Will we silently return different answers? (very hard to debug, might not even notice!) + + + + + +Cost of Maintaining an API + +Of course, the above does not mean that we will never break any APIs. We must also consider the cost both to the project and to our users of keeping the API in question. + + + +Project Costs - Every API we have needs to be tested and needs to keep working as other parts of the project changes. These costs are significantly exacerbated when external dependencies change (the JVM, Scala, etc). In some cases, while not completely technically infeasible, the cost of maintaining a particular API can become too high. + + +User Costs - APIs also have a cognitive cost to users learning Spark or trying to understand Spark programs. This cost becomes even higher when the API in question has confusing or undefined semantics. + + + +Alternatives to Breaking an API + +In cases where there is a Bad API, but where the cost of removal is also high, there are alternatives that should be considered that do not hurt existing users but do address some of the maintenance costs. + + + +Avoid Bad APIs - While this is a bit obvious, it is an important point. Anytime we are adding a new interface to Spark we should consider that we might be stuck with this API forever. Think deeply about how new APIs relate to existing ones, as well as how you expect them to evolve over time. + + +Deprecation Warnings - All deprecation warnings should point to a clear alternative and should never just say that an API is deprecated. + + +Updated Docs - Documentation should point to the best recommended way of performing a given task. In the cases where we maintain legacy documentation, we should clearly point to newer APIs and suggest to users the right way. + + +Community Work - Many people learn Spark by reading blogs and other sites such as StackOverflow. However, many of these resources are out of date. Update them, to reduce the cost of eventually removing

[spark] branch branch-3.0 updated: [SPARK-31151][SQL][DOC] Reorganize the migration guide of SQL

This is an automated email from the ASF dual-hosted git repository.

yamamuro pushed a commit to branch branch-3.0

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.0 by this push:

new f83ef7d [SPARK-31151][SQL][DOC] Reorganize the migration guide of SQL

f83ef7d is described below

commit f83ef7d143aafbbdd1bb322567481f68db72195a

Author: gatorsmile

AuthorDate: Sun Mar 15 07:35:20 2020 +0900

[SPARK-31151][SQL][DOC] Reorganize the migration guide of SQL

### What changes were proposed in this pull request?

The current migration guide of SQL is too long for most readers to find the

needed info. This PR is to group the items in the migration guide of Spark SQL

based on the corresponding components.

Note. This PR does not change the contents of the migration guides.

Attached figure is the screenshot after the change.

### Why are the changes needed?

The current migration guide of SQL is too long for most readers to find the

needed info.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A

Closes #27909 from gatorsmile/migrationGuideReorg.

Authored-by: gatorsmile

Signed-off-by: Takeshi Yamamuro

(cherry picked from commit 4d4c00c1b564b57d3016ce8c3bfcffaa6e58f012)

Signed-off-by: Takeshi Yamamuro

---

docs/sql-migration-guide.md | 287 +++-

1 file changed, 150 insertions(+), 137 deletions(-)

diff --git a/docs/sql-migration-guide.md b/docs/sql-migration-guide.md

index 19c744c..31d5c68 100644

--- a/docs/sql-migration-guide.md

+++ b/docs/sql-migration-guide.md

@@ -23,92 +23,119 @@ license: |

{:toc}

## Upgrading from Spark SQL 2.4 to 3.0

- - Since Spark 3.0, when inserting a value into a table column with a

different data type, the type coercion is performed as per ANSI SQL standard.

Certain unreasonable type conversions such as converting `string` to `int` and

`double` to `boolean` are disallowed. A runtime exception will be thrown if the

value is out-of-range for the data type of the column. In Spark version 2.4 and

earlier, type conversions during table insertion are allowed as long as they

are valid `Cast`. When inse [...]

- - In Spark 3.0, the deprecated methods `SQLContext.createExternalTable` and

`SparkSession.createExternalTable` have been removed in favor of its

replacement, `createTable`.

-

- - In Spark 3.0, the deprecated `HiveContext` class has been removed. Use

`SparkSession.builder.enableHiveSupport()` instead.

-

- - Since Spark 3.0, configuration `spark.sql.crossJoin.enabled` become

internal configuration, and is true by default, so by default spark won't raise

exception on sql with implicit cross join.

-

- - In Spark version 2.4 and earlier, SQL queries such as `FROM ` or

`FROM UNION ALL FROM ` are supported by accident. In hive-style

`FROM SELECT `, the `SELECT` clause is not negligible. Neither

Hive nor Presto support this syntax. Therefore we will treat these queries as

invalid since Spark 3.0.

+### Dataset/DataFrame APIs

- Since Spark 3.0, the Dataset and DataFrame API `unionAll` is not

deprecated any more. It is an alias for `union`.

- - In Spark version 2.4 and earlier, the parser of JSON data source treats

empty strings as null for some data types such as `IntegerType`. For

`FloatType`, `DoubleType`, `DateType` and `TimestampType`, it fails on empty

strings and throws exceptions. Since Spark 3.0, we disallow empty strings and

will throw exceptions for data types except for `StringType` and `BinaryType`.

The previous behaviour of allowing empty string can be restored by setting

`spark.sql.legacy.json.allowEmptyStrin [...]

-

- - Since Spark 3.0, the `from_json` functions supports two modes -

`PERMISSIVE` and `FAILFAST`. The modes can be set via the `mode` option. The

default mode became `PERMISSIVE`. In previous versions, behavior of `from_json`

did not conform to either `PERMISSIVE` nor `FAILFAST`, especially in processing

of malformed JSON records. For example, the JSON string `{"a" 1}` with the

schema `a INT` is converted to `null` by previous versions but Spark 3.0

converts it to `Row(null)`.

-

- - The `ADD JAR` command previously returned a result set with the single

value 0. It now returns an empty result set.

-

- - In Spark version 2.4 and earlier, users can create map values with map

type key via built-in function such as `CreateMap`, `MapFromArrays`, etc. Since

Spark 3.0, it's not allowed to create map values with map type key with these

built-in functions. Users can use `map_entries` function to convert map to

array> as a workaround. In addition, users can still read

map values with map type key

[spark] branch branch-3.0 updated: [SPARK-31151][SQL][DOC] Reorganize the migration guide of SQL

This is an automated email from the ASF dual-hosted git repository.

yamamuro pushed a commit to branch branch-3.0

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.0 by this push:

new f83ef7d [SPARK-31151][SQL][DOC] Reorganize the migration guide of SQL

f83ef7d is described below

commit f83ef7d143aafbbdd1bb322567481f68db72195a

Author: gatorsmile

AuthorDate: Sun Mar 15 07:35:20 2020 +0900

[SPARK-31151][SQL][DOC] Reorganize the migration guide of SQL

### What changes were proposed in this pull request?

The current migration guide of SQL is too long for most readers to find the

needed info. This PR is to group the items in the migration guide of Spark SQL

based on the corresponding components.

Note. This PR does not change the contents of the migration guides.

Attached figure is the screenshot after the change.

### Why are the changes needed?

The current migration guide of SQL is too long for most readers to find the

needed info.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A

Closes #27909 from gatorsmile/migrationGuideReorg.

Authored-by: gatorsmile

Signed-off-by: Takeshi Yamamuro

(cherry picked from commit 4d4c00c1b564b57d3016ce8c3bfcffaa6e58f012)

Signed-off-by: Takeshi Yamamuro

---

docs/sql-migration-guide.md | 287 +++-

1 file changed, 150 insertions(+), 137 deletions(-)

diff --git a/docs/sql-migration-guide.md b/docs/sql-migration-guide.md

index 19c744c..31d5c68 100644

--- a/docs/sql-migration-guide.md

+++ b/docs/sql-migration-guide.md

@@ -23,92 +23,119 @@ license: |

{:toc}

## Upgrading from Spark SQL 2.4 to 3.0

- - Since Spark 3.0, when inserting a value into a table column with a

different data type, the type coercion is performed as per ANSI SQL standard.

Certain unreasonable type conversions such as converting `string` to `int` and

`double` to `boolean` are disallowed. A runtime exception will be thrown if the

value is out-of-range for the data type of the column. In Spark version 2.4 and

earlier, type conversions during table insertion are allowed as long as they

are valid `Cast`. When inse [...]

- - In Spark 3.0, the deprecated methods `SQLContext.createExternalTable` and

`SparkSession.createExternalTable` have been removed in favor of its

replacement, `createTable`.

-

- - In Spark 3.0, the deprecated `HiveContext` class has been removed. Use

`SparkSession.builder.enableHiveSupport()` instead.

-

- - Since Spark 3.0, configuration `spark.sql.crossJoin.enabled` become

internal configuration, and is true by default, so by default spark won't raise

exception on sql with implicit cross join.

-

- - In Spark version 2.4 and earlier, SQL queries such as `FROM ` or

`FROM UNION ALL FROM ` are supported by accident. In hive-style

`FROM SELECT `, the `SELECT` clause is not negligible. Neither

Hive nor Presto support this syntax. Therefore we will treat these queries as

invalid since Spark 3.0.

+### Dataset/DataFrame APIs

- Since Spark 3.0, the Dataset and DataFrame API `unionAll` is not

deprecated any more. It is an alias for `union`.

- - In Spark version 2.4 and earlier, the parser of JSON data source treats

empty strings as null for some data types such as `IntegerType`. For

`FloatType`, `DoubleType`, `DateType` and `TimestampType`, it fails on empty

strings and throws exceptions. Since Spark 3.0, we disallow empty strings and

will throw exceptions for data types except for `StringType` and `BinaryType`.

The previous behaviour of allowing empty string can be restored by setting

`spark.sql.legacy.json.allowEmptyStrin [...]

-

- - Since Spark 3.0, the `from_json` functions supports two modes -

`PERMISSIVE` and `FAILFAST`. The modes can be set via the `mode` option. The

default mode became `PERMISSIVE`. In previous versions, behavior of `from_json`

did not conform to either `PERMISSIVE` nor `FAILFAST`, especially in processing

of malformed JSON records. For example, the JSON string `{"a" 1}` with the

schema `a INT` is converted to `null` by previous versions but Spark 3.0

converts it to `Row(null)`.

-

- - The `ADD JAR` command previously returned a result set with the single

value 0. It now returns an empty result set.

-

- - In Spark version 2.4 and earlier, users can create map values with map

type key via built-in function such as `CreateMap`, `MapFromArrays`, etc. Since

Spark 3.0, it's not allowed to create map values with map type key with these

built-in functions. Users can use `map_entries` function to convert map to

array> as a workaround. In addition, users can still read

map values with map type key

[spark] branch master updated (9628aca -> 4d4c00c)

This is an automated email from the ASF dual-hosted git repository. yamamuro pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git. from 9628aca [MINOR][DOCS] Fix [[...]] to `...` and ... in documentation add 4d4c00c [SPARK-31151][SQL][DOC] Reorganize the migration guide of SQL No new revisions were added by this update. Summary of changes: docs/sql-migration-guide.md | 287 +++- 1 file changed, 150 insertions(+), 137 deletions(-) - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated: [SPARK-31151][SQL][DOC] Reorganize the migration guide of SQL

This is an automated email from the ASF dual-hosted git repository.

yamamuro pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 4d4c00c [SPARK-31151][SQL][DOC] Reorganize the migration guide of SQL

4d4c00c is described below

commit 4d4c00c1b564b57d3016ce8c3bfcffaa6e58f012

Author: gatorsmile

AuthorDate: Sun Mar 15 07:35:20 2020 +0900

[SPARK-31151][SQL][DOC] Reorganize the migration guide of SQL

### What changes were proposed in this pull request?

The current migration guide of SQL is too long for most readers to find the

needed info. This PR is to group the items in the migration guide of Spark SQL

based on the corresponding components.

Note. This PR does not change the contents of the migration guides.

Attached figure is the screenshot after the change.

### Why are the changes needed?

The current migration guide of SQL is too long for most readers to find the

needed info.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A

Closes #27909 from gatorsmile/migrationGuideReorg.

Authored-by: gatorsmile

Signed-off-by: Takeshi Yamamuro

---

docs/sql-migration-guide.md | 287 +++-

1 file changed, 150 insertions(+), 137 deletions(-)

diff --git a/docs/sql-migration-guide.md b/docs/sql-migration-guide.md

index 7cca43e..d6b663d 100644

--- a/docs/sql-migration-guide.md

+++ b/docs/sql-migration-guide.md

@@ -26,92 +26,119 @@ license: |

- Since Spark 3.1, grouping_id() returns long values. In Spark version 3.0

and earlier, this function returns int values. To restore the behavior before

Spark 3.0, you can set `spark.sql.legacy.integerGroupingId` to `true`.

## Upgrading from Spark SQL 2.4 to 3.0

- - Since Spark 3.0, when inserting a value into a table column with a

different data type, the type coercion is performed as per ANSI SQL standard.

Certain unreasonable type conversions such as converting `string` to `int` and

`double` to `boolean` are disallowed. A runtime exception will be thrown if the

value is out-of-range for the data type of the column. In Spark version 2.4 and

earlier, type conversions during table insertion are allowed as long as they

are valid `Cast`. When inse [...]

- - In Spark 3.0, the deprecated methods `SQLContext.createExternalTable` and

`SparkSession.createExternalTable` have been removed in favor of its

replacement, `createTable`.

-

- - In Spark 3.0, the deprecated `HiveContext` class has been removed. Use

`SparkSession.builder.enableHiveSupport()` instead.

-

- - Since Spark 3.0, configuration `spark.sql.crossJoin.enabled` become

internal configuration, and is true by default, so by default spark won't raise

exception on sql with implicit cross join.

-

- - In Spark version 2.4 and earlier, SQL queries such as `FROM ` or

`FROM UNION ALL FROM ` are supported by accident. In hive-style

`FROM SELECT `, the `SELECT` clause is not negligible. Neither

Hive nor Presto support this syntax. Therefore we will treat these queries as

invalid since Spark 3.0.

+### Dataset/DataFrame APIs

- Since Spark 3.0, the Dataset and DataFrame API `unionAll` is not

deprecated any more. It is an alias for `union`.

- - In Spark version 2.4 and earlier, the parser of JSON data source treats

empty strings as null for some data types such as `IntegerType`. For

`FloatType`, `DoubleType`, `DateType` and `TimestampType`, it fails on empty

strings and throws exceptions. Since Spark 3.0, we disallow empty strings and

will throw exceptions for data types except for `StringType` and `BinaryType`.

The previous behaviour of allowing empty string can be restored by setting

`spark.sql.legacy.json.allowEmptyStrin [...]

-

- - Since Spark 3.0, the `from_json` functions supports two modes -

`PERMISSIVE` and `FAILFAST`. The modes can be set via the `mode` option. The

default mode became `PERMISSIVE`. In previous versions, behavior of `from_json`

did not conform to either `PERMISSIVE` nor `FAILFAST`, especially in processing

of malformed JSON records. For example, the JSON string `{"a" 1}` with the

schema `a INT` is converted to `null` by previous versions but Spark 3.0

converts it to `Row(null)`.

-

- - The `ADD JAR` command previously returned a result set with the single

value 0. It now returns an empty result set.

-

- - In Spark version 2.4 and earlier, users can create map values with map

type key via built-in function such as `CreateMap`, `MapFromArrays`, etc. Since

Spark 3.0, it's not allowed to create map values with map type key with these

built-in functions. Users can use `map_entries` function to

[spark] branch branch-3.0 updated: [SPARK-31151][SQL][DOC] Reorganize the migration guide of SQL

This is an automated email from the ASF dual-hosted git repository.

yamamuro pushed a commit to branch branch-3.0

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.0 by this push:

new f83ef7d [SPARK-31151][SQL][DOC] Reorganize the migration guide of SQL

f83ef7d is described below

commit f83ef7d143aafbbdd1bb322567481f68db72195a

Author: gatorsmile

AuthorDate: Sun Mar 15 07:35:20 2020 +0900

[SPARK-31151][SQL][DOC] Reorganize the migration guide of SQL

### What changes were proposed in this pull request?

The current migration guide of SQL is too long for most readers to find the

needed info. This PR is to group the items in the migration guide of Spark SQL

based on the corresponding components.

Note. This PR does not change the contents of the migration guides.

Attached figure is the screenshot after the change.

### Why are the changes needed?

The current migration guide of SQL is too long for most readers to find the

needed info.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A

Closes #27909 from gatorsmile/migrationGuideReorg.

Authored-by: gatorsmile

Signed-off-by: Takeshi Yamamuro

(cherry picked from commit 4d4c00c1b564b57d3016ce8c3bfcffaa6e58f012)

Signed-off-by: Takeshi Yamamuro

---

docs/sql-migration-guide.md | 287 +++-

1 file changed, 150 insertions(+), 137 deletions(-)

diff --git a/docs/sql-migration-guide.md b/docs/sql-migration-guide.md

index 19c744c..31d5c68 100644

--- a/docs/sql-migration-guide.md

+++ b/docs/sql-migration-guide.md

@@ -23,92 +23,119 @@ license: |

{:toc}

## Upgrading from Spark SQL 2.4 to 3.0

- - Since Spark 3.0, when inserting a value into a table column with a

different data type, the type coercion is performed as per ANSI SQL standard.

Certain unreasonable type conversions such as converting `string` to `int` and

`double` to `boolean` are disallowed. A runtime exception will be thrown if the

value is out-of-range for the data type of the column. In Spark version 2.4 and

earlier, type conversions during table insertion are allowed as long as they

are valid `Cast`. When inse [...]

- - In Spark 3.0, the deprecated methods `SQLContext.createExternalTable` and

`SparkSession.createExternalTable` have been removed in favor of its

replacement, `createTable`.

-

- - In Spark 3.0, the deprecated `HiveContext` class has been removed. Use

`SparkSession.builder.enableHiveSupport()` instead.

-

- - Since Spark 3.0, configuration `spark.sql.crossJoin.enabled` become

internal configuration, and is true by default, so by default spark won't raise

exception on sql with implicit cross join.

-

- - In Spark version 2.4 and earlier, SQL queries such as `FROM ` or

`FROM UNION ALL FROM ` are supported by accident. In hive-style

`FROM SELECT `, the `SELECT` clause is not negligible. Neither

Hive nor Presto support this syntax. Therefore we will treat these queries as

invalid since Spark 3.0.

+### Dataset/DataFrame APIs

- Since Spark 3.0, the Dataset and DataFrame API `unionAll` is not

deprecated any more. It is an alias for `union`.

- - In Spark version 2.4 and earlier, the parser of JSON data source treats

empty strings as null for some data types such as `IntegerType`. For

`FloatType`, `DoubleType`, `DateType` and `TimestampType`, it fails on empty

strings and throws exceptions. Since Spark 3.0, we disallow empty strings and

will throw exceptions for data types except for `StringType` and `BinaryType`.

The previous behaviour of allowing empty string can be restored by setting

`spark.sql.legacy.json.allowEmptyStrin [...]

-

- - Since Spark 3.0, the `from_json` functions supports two modes -

`PERMISSIVE` and `FAILFAST`. The modes can be set via the `mode` option. The

default mode became `PERMISSIVE`. In previous versions, behavior of `from_json`

did not conform to either `PERMISSIVE` nor `FAILFAST`, especially in processing

of malformed JSON records. For example, the JSON string `{"a" 1}` with the

schema `a INT` is converted to `null` by previous versions but Spark 3.0

converts it to `Row(null)`.

-

- - The `ADD JAR` command previously returned a result set with the single

value 0. It now returns an empty result set.

-

- - In Spark version 2.4 and earlier, users can create map values with map

type key via built-in function such as `CreateMap`, `MapFromArrays`, etc. Since

Spark 3.0, it's not allowed to create map values with map type key with these

built-in functions. Users can use `map_entries` function to convert map to

array> as a workaround. In addition, users can still read

map values with map type key