thomasg19930417 commented on issue #8882:

URL: https://github.com/apache/hudi/issues/8882#issuecomment-1583807262

I took a rough look at the Hive branch 2.0 and 2.1 code, and it should be the same as 2.2.

--

This is an automated message from the Apache Git Service.

To respond to the message,

thomasg19930417 commented on issue #8882:

URL: https://github.com/apache/hudi/issues/8882#issuecomment-1583792529

@danny0405 I submitted a PR for Hive 2.2 compatibility. It copies part of the Hive 2.3 code into Hudi and converts the Hive 2.2 data structure into the 2.3 form for

thomasg19930417 commented on issue #8882:

URL: https://github.com/apache/hudi/issues/8882#issuecomment-1582168044

Look at this test: the logic of this split should not be careless.

thomasg19930417 commented on issue #8882:

URL: https://github.com/apache/hudi/issues/8882#issuecomment-1581778050

> On YARN

The cause of the failure here should be

thomasg19930417 commented on issue #8882:

URL: https://github.com/apache/hudi/issues/8882#issuecomment-1580676828

>

thomasg19930417 commented on issue #8882:

URL: https://github.com/apache/hudi/issues/8882#issuecomment-1580633139

> > NoClassDefFoundError: org/apache/hadoop/hive/common/StringInternUtils

>

> We may borrow `StringInternUtils` from Hive to support Hive versions < 2.3

Some minor

thomasg19930417 commented on issue #8882:

URL: https://github.com/apache/hudi/issues/8882#issuecomment-1580630562

> > NoClassDefFoundError: org/apache/hadoop/hive/common/StringInternUtils

>

> We can borrow `StringInternUtils` from Hive to support Hive versions < 2.3

Some minor changes on the Hive

thomasg19930417 commented on issue #8882:

URL: https://github.com/apache/hudi/issues/8882#issuecomment-1578533981

On YARN

thomasg19930417 commented on issue #8882:

URL: https://github.com/apache/hudi/issues/8882#issuecomment-1578529904

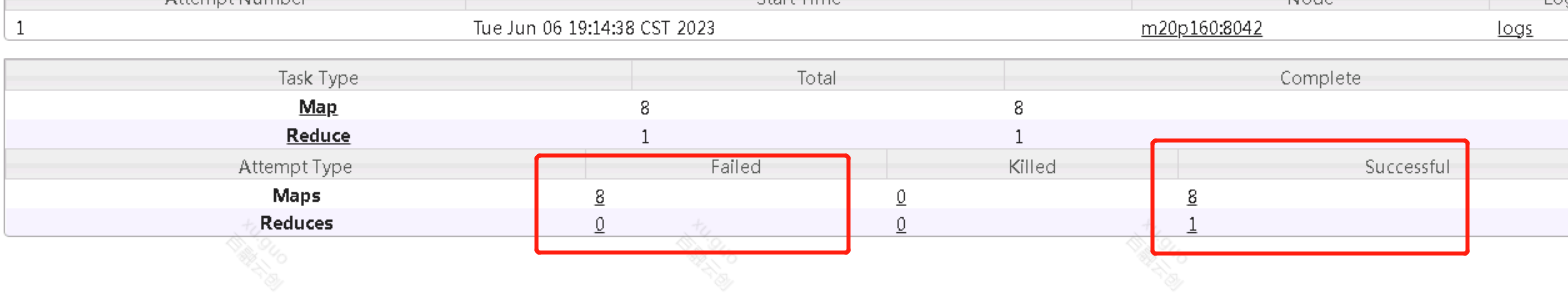

I also found another problem. When I set `mapreduce.input.fileinputformat.split.maxsize=1`, the number of map tasks is greater than 1, but a task still fails on YARN and then
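For reference, here is a minimal sketch of the per-file split arithmetic in Hadoop's new-API `FileInputFormat`. The split size is `max(minSize, min(maxSize, blockSize))`, and a tail up to 10% larger than the split size (the `SPLIT_SLOP` factor of 1.1) stays in the last split. It assumes a single splittable file. Hive's combine input formats, and Hudi's variant of them, group splits differently, so the actual task count on YARN may not match. `SplitMath` and `countSplits` are hypothetical names.

```java
public final class SplitMath {
    // FileInputFormat lets the last split be up to 10% larger than splitSize.
    private static final double SPLIT_SLOP = 1.1;

    private SplitMath() {}

    // Same formula as FileInputFormat.computeSplitSize in the mapreduce API.
    public static long computeSplitSize(long blockSize, long minSize, long maxSize) {
        return Math.max(minSize, Math.min(maxSize, blockSize));
    }

    // Counts the splits produced for one splittable file of the given length.
    public static long countSplits(long fileLength, long blockSize, long minSize, long maxSize) {
        long splitSize = computeSplitSize(blockSize, minSize, maxSize);
        long splits = 0;
        long bytesRemaining = fileLength;
        while (((double) bytesRemaining) / splitSize > SPLIT_SLOP) {
            splits++;
            bytesRemaining -= splitSize;
        }
        if (bytesRemaining != 0) {
            splits++; // the tail (at most 1.1 * splitSize) becomes the last split
        }
        return splits;
    }

    public static void main(String[] args) {
        long blockSize = 128L * 1024 * 1024; // 128 MiB block
        // Unbounded max split size (min split size defaults to 1): one split per block.
        System.out.println(countSplits(300L * 1024 * 1024, blockSize, 1, Long.MAX_VALUE));
        // split.maxsize=1: the split size collapses to 1 byte.
        System.out.println(countSplits(1000, blockSize, 1, 1));
    }
}
```

The real `FileInputFormat` also records block locations for each split to preserve data locality. This sketch only counts splits.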

thomasg19930417 commented on issue #8882:

URL: https://github.com/apache/hudi/issues/8882#issuecomment-1578525134


thomasg19930417 commented on issue #8882:

URL: https://github.com/apache/hudi/issues/8882#issuecomment-1578523112

Why is this implemented here? `mapreduce.input.fileinputformat.split.maxsize`

thomasg19930417 commented on issue #8882:

URL: https://github.com/apache/hudi/issues/8882#issuecomment-1578020377

I tried modifying it directly, but the Hive query produces only one map split, so the task exceeds the YARN memory limit and fails. When the query limit is 10, the data in

thomasg19930417 commented on issue #8882:

URL: https://github.com/apache/hudi/issues/8882#issuecomment-1578017076

In Hive 2.2

In Hive 2.3

thomasg19930417 commented on issue #8882:

URL: https://github.com/apache/hudi/issues/8882#issuecomment-1578015039

The main difference is that the structure returned when obtaining the Path -> Alias mapping from `MapWork` differs between the two versions. At present, if the Hive version is changed directly, then the part
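Here is a minimal sketch of this kind of conversion. It assumes that Hive 2.2's `MapWork.getPathToAliases()` is keyed by `String`, while Hive 2.3's is keyed by `org.apache.hadoop.fs.Path`. The sketch is generic over the key type so it runs without Hadoop on the classpath. In Hudi, one would presumably pass `Path::new` as the key factory. `PathToAliasesCompat` and `rekey` are hypothetical names, and merging aliases on key collisions is a choice made here, not something taken from Hive.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

public final class PathToAliasesCompat {
    private PathToAliasesCompat() {}

    // Re-keys a String-keyed path -> aliases map (assumed Hive 2.2 shape) into a map
    // keyed by K (for example Hadoop's Path, the assumed Hive 2.3 shape).
    // LinkedHashMap keeps the original path order; if two strings map to the
    // same key, their alias lists are merged.
    public static <K> LinkedHashMap<K, ArrayList<String>> rekey(
            Map<String, ? extends List<String>> pathToAliases,
            Function<String, K> keyFactory) {
        LinkedHashMap<K, ArrayList<String>> result = new LinkedHashMap<>();
        for (Map.Entry<String, ? extends List<String>> e : pathToAliases.entrySet()) {
            K key = keyFactory.apply(e.getKey());
            // Copy aliases into a fresh list so the source map is never mutated.
            result.computeIfAbsent(key, k -> new ArrayList<>()).addAll(e.getValue());
        }
        return result;
    }

    public static void main(String[] args) {
        LinkedHashMap<String, ArrayList<String>> hive22 = new LinkedHashMap<>();
        hive22.put("hdfs://nn/warehouse/t1", new ArrayList<>(Arrays.asList("t1")));
        hive22.put("hdfs://nn/warehouse/t2", new ArrayList<>(Arrays.asList("t2", "t2_alias")));
        // java.net.URI stands in for Hadoop's Path so the example runs standalone.
        LinkedHashMap<java.net.URI, ArrayList<String>> hive23Like =
            rekey(hive22, java.net.URI::create);
        System.out.println(hive23Like);
    }
}
```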

thomasg19930417 commented on issue #8882:

URL: https://github.com/apache/hudi/issues/8882#issuecomment-1576517627

I tried making the modifications and found that it is not just a matter of copying the related class and renaming it. The API differs between Hive 2.2 and Hive 2.3, and there are also

thomasg19930417 commented on issue #8882:

URL: https://github.com/apache/hudi/issues/8882#issuecomment-1576140270

Thank you for your reply. Once the test passes, I will submit a PR.

thomasg19930417 commented on issue #8882:

URL: https://github.com/apache/hudi/issues/8882#issuecomment-1576108820

> Yeah, are you interested in contributing a fix for this? We can write our own impl for `StringInternUtils` because it does not have good version compatibility.
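Here is a minimal sketch of what a Hudi-owned replacement could look like, assuming plain `String.intern()` is enough. The names `internIfNotNull` and `internStringsInList` follow helpers in Hive's `StringInternUtils` as recalled, but the signatures here are illustrative. The `Path`-related helper is omitted to keep the sketch dependency-free. `HoodieStringInternUtils` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.ListIterator;

public final class HoodieStringInternUtils {
    private HoodieStringInternUtils() {}

    // Returns the canonical (interned) instance of s, or null if s is null.
    public static String internIfNotNull(String s) {
        return s == null ? null : s.intern();
    }

    // Interns every element in place and returns the same list for chaining.
    // The list must support set() (ArrayList and Arrays.asList both do).
    public static List<String> internStringsInList(List<String> list) {
        if (list != null) {
            ListIterator<String> it = list.listIterator();
            while (it.hasNext()) {
                it.set(internIfNotNull(it.next()));
            }
        }
        return list;
    }

    public static void main(String[] args) {
        // new String(...) creates distinct objects with equal contents.
        List<String> aliases = new ArrayList<>(Arrays.asList(new String("t1"), new String("t1")));
        internStringsInList(aliases);
        System.out.println(aliases.get(0) == aliases.get(1)); // true: both share one instance
    }
}
```

Owning such a small class avoids the `NoClassDefFoundError` on Hive versions that lack `org.apache.hadoop.hive.common.StringInternUtils`.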

thomasg19930417 commented on issue #8882:

URL: https://github.com/apache/hudi/issues/8882#issuecomment-1575949058

Can these methods be extended once so that they are more compatible with Hive?

thomasg19930417 commented on issue #8882:

URL: https://github.com/apache/hudi/issues/8882#issuecomment-1575945668

I confirmed that this class does not exist in the Hive version we currently use, so only some Hive 2.x versions are supported.
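You can confirm this at runtime by probing for the class by name, which avoids failing later with `NoClassDefFoundError`. Here is a minimal sketch. `HiveClassProbe` is a hypothetical helper; only the class name `org.apache.hadoop.hive.common.StringInternUtils` comes from the error quoted in this thread. Passing `false` as the `initialize` argument of `Class.forName` avoids running the class's static initializer.

```java
public final class HiveClassProbe {
    private HiveClassProbe() {}

    // Returns true if the named class can be loaded by the given class loader.
    public static boolean isClassPresent(String className, ClassLoader loader) {
        try {
            Class.forName(className, false, loader); // false: do not run static initializers
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            // LinkageError also covers NoClassDefFoundError from a missing dependency.
            return false;
        }
    }

    public static void main(String[] args) {
        ClassLoader loader = HiveClassProbe.class.getClassLoader();
        boolean hiveHasIntern =
            isClassPresent("org.apache.hadoop.hive.common.StringInternUtils", loader);
        System.out.println("StringInternUtils available: " + hiveHasIntern);
    }
}
```

A caller could run this check once and then pick either Hive's class or a Hudi-owned fallback.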

thomasg19930417 commented on issue #8882:

URL: https://github.com/apache/hudi/issues/8882#issuecomment-1575944762

I found a similar issue https://github.com/apache/hudi/issues/3795
