[GitHub] [airflow] mik-laj commented on a change in pull request #6792: [AIRFLOW-5930] Use cached-SQL query building for hot-path queries

mik-laj commented on a change in pull request #6792: [AIRFLOW-5930] Use

cached-SQL query building for hot-path queries

URL: https://github.com/apache/airflow/pull/6792#discussion_r357000170

##

File path: airflow/jobs/scheduler_job.py

##

@@ -1006,8 +978,7 @@ def _change_state_for_tis_without_dagrun(self,

)

Stats.gauge('scheduler.tasks.without_dagrun', tis_changed)

-@provide_session

-def __get_concurrency_maps(self, states, session=None):

+def __get_concurrency_maps(self, states, session):

Review comment:

This method has an invalid rtype. Returns two dictionaries in a tuple, not

just one dictionary. Can you correct that?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

[GitHub] [airflow] mik-laj commented on a change in pull request #6792: [AIRFLOW-5930] Use cached-SQL query building for hot-path queries

mik-laj commented on a change in pull request #6792: [AIRFLOW-5930] Use

cached-SQL query building for hot-path queries

URL: https://github.com/apache/airflow/pull/6792#discussion_r357000170

##

File path: airflow/jobs/scheduler_job.py

##

@@ -1006,8 +978,7 @@ def _change_state_for_tis_without_dagrun(self,

)

Stats.gauge('scheduler.tasks.without_dagrun', tis_changed)

-@provide_session

-def __get_concurrency_maps(self, states, session=None):

+def __get_concurrency_maps(self, states, session):

Review comment:

This method has an invalid rtype. Returns two dictionaries in a tuple, not

just one. Can you correct that?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

[GitHub] [airflow] mik-laj commented on a change in pull request #6792: [AIRFLOW-5930] Use cached-SQL query building for hot-path queries

mik-laj commented on a change in pull request #6792: [AIRFLOW-5930] Use

cached-SQL query building for hot-path queries

URL: https://github.com/apache/airflow/pull/6792#discussion_r357000170

##

File path: airflow/jobs/scheduler_job.py

##

@@ -1006,8 +978,7 @@ def _change_state_for_tis_without_dagrun(self,

)

Stats.gauge('scheduler.tasks.without_dagrun', tis_changed)

-@provide_session

-def __get_concurrency_maps(self, states, session=None):

+def __get_concurrency_maps(self, states, session):

Review comment:

This method has an invalid rtype. Returns two dictionaries in a tuple, not

just one.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

[GitHub] [airflow] mik-laj commented on a change in pull request #6792: [AIRFLOW-5930] Use cached-SQL query building for hot-path queries

mik-laj commented on a change in pull request #6792: [AIRFLOW-5930] Use cached-SQL query building for hot-path queries URL: https://github.com/apache/airflow/pull/6792#discussion_r356997115 ## File path: airflow/models/dagrun.py ## @@ -286,25 +321,27 @@ def update_state(self, session=None): session=session Review comment: Can you check if double calling get_task_instances is faster than filtering the list in Python? Line 319 and 306 contains calls to the get_task_instances method, and this method invokes a database query. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] RosterIn commented on issue #2460: [AIRFLOW-1424] make the next execution date of DAGs visible

RosterIn commented on issue #2460: [AIRFLOW-1424] make the next execution date of DAGs visible URL: https://github.com/apache/airflow/pull/2460#issuecomment-564890113 Would it be possible for this column to be configured from airflow.cfg with default `False`? Something like: `show_next_execution_column_in_ui = False` I do think this feature is valuable but not all users may require it. This new column doesn't bring new information to the UI (as it can be understood from the last run + interval) so I think it should be hidden by default and shown for users who needs it. **OR (and maybe even better)** If not from airflow.cfg then maybe the UI itself can have hide/show feature something similar to the hide paused DAGs button? This will allow every user to decide for himself if he wants to see it. This way no need to define standard that effects all users but it's more a personalised UI. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] mik-laj commented on a change in pull request #6792: [AIRFLOW-5930] Use cached-SQL query building for hot-path queries

mik-laj commented on a change in pull request #6792: [AIRFLOW-5930] Use

cached-SQL query building for hot-path queries

URL: https://github.com/apache/airflow/pull/6792#discussion_r356995764

##

File path: airflow/models/dagrun.py

##

@@ -263,10 +294,14 @@ def update_state(self, session=None):

Determines the overall state of the DagRun based on the state

of its TaskInstances.

-:return: State

+:return: state, schedulable_task_instances

+:rtype: (State, list[TaskInstance])

"""

+from airflow.ti_deps.deps.ready_to_reschedule import

ReadyToRescheduleDep

+from airflow.ti_deps.deps.not_in_retry_period_dep import

NotInRetryPeriodDep

dag = self.get_dag()

+tis_to_schedule = []

tis = self.get_task_instances(session=session)

self.log.debug("Updating state for %s considering %s task(s)", self,

len(tis))

Review comment:

Do you think it is worth dividing the loop from line 272 into two loops? One

loop will filters the elements and the second loop will set tasks on task

instances. This does not affect performance, but will make it easier to

understand the code.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

[GitHub] [airflow] mik-laj commented on a change in pull request #6792: [AIRFLOW-5930] Use cached-SQL query building for hot-path queries

mik-laj commented on a change in pull request #6792: [AIRFLOW-5930] Use cached-SQL query building for hot-path queries URL: https://github.com/apache/airflow/pull/6792#discussion_r356994088 ## File path: airflow/models/dagrun.py ## @@ -263,10 +294,14 @@ def update_state(self, session=None): Determines the overall state of the DagRun based on the state Review comment: Is it not necessary to change the method name? Now does not contain information about tasks. This may not be clear in the future. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] albertusk95 commented on a change in pull request #6795: Adjust the MASTER_URL of spark-submit in SparkSubmitHook

albertusk95 commented on a change in pull request #6795: Adjust the MASTER_URL

of spark-submit in SparkSubmitHook

URL: https://github.com/apache/airflow/pull/6795#discussion_r356992460

##

File path: airflow/contrib/hooks/spark_submit_hook.py

##

@@ -185,6 +185,8 @@ def _resolve_connection(self):

conn_data['master'] = "{}:{}".format(conn.host, conn.port)

else:

conn_data['master'] = conn.host

+if conn.uri:

+conn_data['master'] = conn.uri

Review comment:

since the specified URI might consist of other attributes other than scheme,

host, and port (ex: query & schema), I think we couldn't directly assign the

master address to `conn.uri`

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

[GitHub] [airflow] mik-laj commented on a change in pull request #6792: [AIRFLOW-5930] Use cached-SQL query building for hot-path queries

mik-laj commented on a change in pull request #6792: [AIRFLOW-5930] Use cached-SQL query building for hot-path queries URL: https://github.com/apache/airflow/pull/6792#discussion_r356991452 ## File path: airflow/jobs/scheduler_job.py ## @@ -1057,30 +1027,34 @@ def _find_executable_task_instances(self, simple_dag_bag, states, session=None): TI = models.TaskInstance DR = models.DagRun DM = models.DagModel -ti_query = ( -session -.query(TI) -.filter(TI.dag_id.in_(simple_dag_bag.dag_ids)) +ti_query = BAKED_QUERIES( +lambda session: session.query(TI).filter( +TI.dag_id.in_(simple_dag_bag.dag_ids) +) .outerjoin( DR, and_(DR.dag_id == TI.dag_id, DR.execution_date == TI.execution_date) ) -.filter(or_(DR.run_id == None, # noqa: E711 pylint: disable=singleton-comparison -not_(DR.run_id.like(BackfillJob.ID_PREFIX + '%' +.filter(or_(DR.run_id.is_(None), +not_(DR.run_id.like(BackfillJob.ID_PREFIX + '%' Review comment: I really don't like filtering with the like expression. This makes the query very difficult to optimize. It is not possible to store it in a simple data structure. We have to have a very complex binary tree, but which takes more memory than a simple structure with 3 values. Which causes other problems, e.g. unbalanced tree, and thus performance degradation. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] mik-laj commented on a change in pull request #6792: [AIRFLOW-5930] Use cached-SQL query building for hot-path queries

mik-laj commented on a change in pull request #6792: [AIRFLOW-5930] Use

cached-SQL query building for hot-path queries

URL: https://github.com/apache/airflow/pull/6792#discussion_r356988665

##

File path: airflow/jobs/scheduler_job.py

##

@@ -1006,8 +978,7 @@ def _change_state_for_tis_without_dagrun(self,

)

Stats.gauge('scheduler.tasks.without_dagrun', tis_changed)

-@provide_session

-def __get_concurrency_maps(self, states, session=None):

+def __get_concurrency_maps(self, states, session):

Review comment:

Why did you delete this decorator? It has no effect on performance because

it is very simple logic.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

[GitHub] [airflow] mik-laj commented on a change in pull request #6792: [AIRFLOW-5930] Use cached-SQL query building for hot-path queries

mik-laj commented on a change in pull request #6792: [AIRFLOW-5930] Use cached-SQL query building for hot-path queries URL: https://github.com/apache/airflow/pull/6792#discussion_r356988211 ## File path: airflow/jobs/scheduler_job.py ## @@ -686,10 +664,10 @@ def _process_dags(self, dagbag, dags, tis_out): :type dagbag: airflow.models.DagBag :param dags: the DAGs from the DagBag to process :type dags: airflow.models.DAG -:param tis_out: A list to add generated TaskInstance objects -:type tis_out: list[TaskInstance] -:rtype: None +:return: A list of TaskInstance objects +:rtype: list[TaskInstance] Review comment: Can you also add rtype for _process_task_instances also? Now it is difficult to check if this is true. Especially since this method previously use TaskInstanceKeyType. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] mik-laj commented on a change in pull request #6792: [AIRFLOW-5930] Use cached-SQL query building for hot-path queries

mik-laj commented on a change in pull request #6792: [AIRFLOW-5930] Use cached-SQL query building for hot-path queries URL: https://github.com/apache/airflow/pull/6792#discussion_r356986778 ## File path: airflow/__init__.py ## @@ -48,3 +48,8 @@ login: Optional[Callable] = None integrate_plugins() + + +# Ensure that this query is build in the master process, before we fork of a sub-process to parse the DAGs +from . import ti_deps Review comment: I don't know if this should be done here or when starting SchedulerJob. In my opinion, adding additional logic to init is not the best solution and we can probably avoid it in this situation. We don't need this query to be loaded in many cases, e.g. on workers. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] mik-laj commented on a change in pull request #6792: [AIRFLOW-5930] Use cached-SQL query building for hot-path queries

mik-laj commented on a change in pull request #6792: [AIRFLOW-5930] Use cached-SQL query building for hot-path queries URL: https://github.com/apache/airflow/pull/6792#discussion_r356986173 ## File path: airflow/ti_deps/deps/trigger_rule_dep.py ## @@ -34,9 +35,38 @@ class TriggerRuleDep(BaseTIDep): IGNOREABLE = True IS_TASK_DEP = True +@staticmethod +def bake_dep_status_query(): +TI = airflow.models.TaskInstance +# TODO(unknown): this query becomes quite expensive with dags that have many +# tasks. It should be refactored to let the task report to the dag run and get the +# aggregates from there. +q = BAKED_QUERIES(lambda session: session.query( +func.coalesce(func.sum(case([(TI.state == State.SUCCESS, 1)], else_=0)), 0), Review comment: Can you provide me this query in SQL format? I think it can be optimized for PostgresQL by using COUNT...FILTER syntax. However, this also requires checking if this syntax has an effect on performance, or is it just syntactic sugar. But for logic this additional information can be used by the planner to make a more efficient query. https://www.postgresql.org/docs/9.4/sql-expressions.html#SYNTAX-AGGREGATES This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] mik-laj commented on a change in pull request #6792: [AIRFLOW-5930] Use cached-SQL query building for hot-path queries

mik-laj commented on a change in pull request #6792: [AIRFLOW-5930] Use cached-SQL query building for hot-path queries URL: https://github.com/apache/airflow/pull/6792#discussion_r356986173 ## File path: airflow/ti_deps/deps/trigger_rule_dep.py ## @@ -34,9 +35,38 @@ class TriggerRuleDep(BaseTIDep): IGNOREABLE = True IS_TASK_DEP = True +@staticmethod +def bake_dep_status_query(): +TI = airflow.models.TaskInstance +# TODO(unknown): this query becomes quite expensive with dags that have many +# tasks. It should be refactored to let the task report to the dag run and get the +# aggregates from there. +q = BAKED_QUERIES(lambda session: session.query( +func.coalesce(func.sum(case([(TI.state == State.SUCCESS, 1)], else_=0)), 0), Review comment: Can you provide me this query in SQL format? I think it can be optimized for PostgresQL by using COUNT...FILTER syntax. However, this also requires checking if this syntax has an effect on performance, or is it just syntactic sugar. https://www.postgresql.org/docs/9.4/sql-expressions.html#SYNTAX-AGGREGATES This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] mik-laj commented on issue #6791: [AIRFLOW-XXX] Add link to XCom section in concepts.rst

mik-laj commented on issue #6791: [AIRFLOW-XXX] Add link to XCom section in concepts.rst URL: https://github.com/apache/airflow/pull/6791#issuecomment-564874675 @dimberman This is just a change in the documentation. Does this require a ticket? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[jira] [Comment Edited] (AIRFLOW-6214) Spark driver status tracking for standalone, YARN, Mesos and K8s with cluster deploy mode

[

https://issues.apache.org/jira/browse/AIRFLOW-6214?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16994276#comment-16994276

]

xifeng edited comment on AIRFLOW-6214 at 12/12/19 6:32 AM:

---

Hi Albertus,

yes, I agree, I think it the conn.host should be only hostname, without

scheme.

I'm not sure why in testcase it is written as

host='spark://spark-standalone-master:6066'.

I just issued a PR: https://github.com/apache/airflow/pull/6795

was (Author: dennisli):

yes, I agree, I think the conn.host should be only hostname, without scheme.

But I'm not sure why in testcase it is written as

host='spark://spark-standalone-master:6066'.

I just issued a PR: https://github.com/apache/airflow/pull/6795

> Spark driver status tracking for standalone, YARN, Mesos and K8s with cluster

> deploy mode

> -

>

> Key: AIRFLOW-6214

> URL: https://issues.apache.org/jira/browse/AIRFLOW-6214

> Project: Apache Airflow

> Issue Type: Improvement

> Components: hooks, operators

>Affects Versions: 1.10.6

>Reporter: Albertus Kelvin

>Assignee: xifeng

>Priority: Minor

>

> Based on the following code snippet:

> {code:python}

> def _resolve_should_track_driver_status(self):

> return ('spark://' in self._connection['master'] and

> self._connection['deploy_mode'] == 'cluster')

> {code}

>

> It seems that the above code will always return *False* because the master

> address for standalone cluster doesn't contain *spark://* as shown from the

> below code snippet.

> {code:python}

> conn = self.get_connection(self._conn_id)

> if conn.port:

> conn_data['master'] = "{}:{}".format(conn.host, conn.port)

> else:

> conn_data['master'] = conn.host

> {code}

> Additionally, I think this driver status tracker should also be enabled for

> mesos and kubernetes with cluster mode since the *--status* argument supports

> all of these cluster managers. Refer to

> [this|https://github.com/apache/spark/blob/be867e8a9ee8fc5e4831521770f51793e9265550/core/src/main/scala/org/apache/spark/deploy/SparkSubmitArguments.scala#L543].

> For YARN cluster mode, I think we can use built-in commands from yarn itself,

> such as *yarn application -status *.

> Therefore, the *_build_track_driver_status_command* method should be updated

> accordingly to accommodate such a need, such as the following.

> {code:python}

> def _build_track_driver_status_command(self):

> # The driver id so we can poll for its status

> if not self._driver_id:

> raise AirflowException(

> "Invalid status: attempted to poll driver " +

> "status but no driver id is known. Giving up.")

> if self._connection['master'].startswith("spark://") or

>self._connection['master'].startswith("mesos://") or

>self._connection['master'].startswith("k8s://"):

> # standalone, mesos, kubernetes

> connection_cmd = self._get_spark_binary_path()

> connection_cmd += ["--master", self._connection['master']]

> connection_cmd += ["--status", self._driver_id]

> else:

> # yarn

> connection_cmd = ["yarn application -status"]

> connection_cmd += [self._driver_id]

> self.log.debug("Poll driver status cmd: %s", connection_cmd)

> return connection_cmd

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (AIRFLOW-6214) Spark driver status tracking for standalone, YARN, Mesos and K8s with cluster deploy mode

[

https://issues.apache.org/jira/browse/AIRFLOW-6214?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16994276#comment-16994276

]

xifeng commented on AIRFLOW-6214:

-

yes, I agree, I think the conn.host should be only hostname, without scheme.

But I'm not sure why in testcase it is written as

host='spark://spark-standalone-master:6066'.

I just issued a PR: https://github.com/apache/airflow/pull/6795

> Spark driver status tracking for standalone, YARN, Mesos and K8s with cluster

> deploy mode

> -

>

> Key: AIRFLOW-6214

> URL: https://issues.apache.org/jira/browse/AIRFLOW-6214

> Project: Apache Airflow

> Issue Type: Improvement

> Components: hooks, operators

>Affects Versions: 1.10.6

>Reporter: Albertus Kelvin

>Assignee: xifeng

>Priority: Minor

>

> Based on the following code snippet:

> {code:python}

> def _resolve_should_track_driver_status(self):

> return ('spark://' in self._connection['master'] and

> self._connection['deploy_mode'] == 'cluster')

> {code}

>

> It seems that the above code will always return *False* because the master

> address for standalone cluster doesn't contain *spark://* as shown from the

> below code snippet.

> {code:python}

> conn = self.get_connection(self._conn_id)

> if conn.port:

> conn_data['master'] = "{}:{}".format(conn.host, conn.port)

> else:

> conn_data['master'] = conn.host

> {code}

> Additionally, I think this driver status tracker should also be enabled for

> mesos and kubernetes with cluster mode since the *--status* argument supports

> all of these cluster managers. Refer to

> [this|https://github.com/apache/spark/blob/be867e8a9ee8fc5e4831521770f51793e9265550/core/src/main/scala/org/apache/spark/deploy/SparkSubmitArguments.scala#L543].

> For YARN cluster mode, I think we can use built-in commands from yarn itself,

> such as *yarn application -status *.

> Therefore, the *_build_track_driver_status_command* method should be updated

> accordingly to accommodate such a need, such as the following.

> {code:python}

> def _build_track_driver_status_command(self):

> # The driver id so we can poll for its status

> if not self._driver_id:

> raise AirflowException(

> "Invalid status: attempted to poll driver " +

> "status but no driver id is known. Giving up.")

> if self._connection['master'].startswith("spark://") or

>self._connection['master'].startswith("mesos://") or

>self._connection['master'].startswith("k8s://"):

> # standalone, mesos, kubernetes

> connection_cmd = self._get_spark_binary_path()

> connection_cmd += ["--master", self._connection['master']]

> connection_cmd += ["--status", self._driver_id]

> else:

> # yarn

> connection_cmd = ["yarn application -status"]

> connection_cmd += [self._driver_id]

> self.log.debug("Poll driver status cmd: %s", connection_cmd)

> return connection_cmd

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (AIRFLOW-6212) SparkSubmitHook failed to execute spark-submit to standalone cluster

[

https://issues.apache.org/jira/browse/AIRFLOW-6212?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16994260#comment-16994260

]

xifeng commented on AIRFLOW-6212:

-

Fix it with: https://github.com/apache/airflow/pull/6795

> SparkSubmitHook failed to execute spark-submit to standalone cluster

>

>

> Key: AIRFLOW-6212

> URL: https://issues.apache.org/jira/browse/AIRFLOW-6212

> Project: Apache Airflow

> Issue Type: Bug

> Components: hooks, operators

>Affects Versions: 1.10.6

>Reporter: Albertus Kelvin

>Assignee: xifeng

>Priority: Trivial

>

> I was trying to submit a pyspark job with spark-submit using

> SparkSubmitOperator. I already set up the master appropriately via

> environment variable (AIRFLOW_CONN_SPARK_DEFAULT). The value was something

> like *spark://host:port*.

> However, an exception occurred:

> {noformat}

> airflow.exceptions.AirflowException: Cannot execute: ['path/to/spark-submit',

> '--master', 'host:port', 'job.py']

> {noformat}

> Turns out that the master should have *spark://* preceding the host:port. I

> checked the code and found that this wasn't handled.

> {code:python}

> conn = self.get_connection(self._conn_id)

> if conn.port:

> conn_data['master'] = "{}:{}".format(conn.host, conn.port)

> else:

> conn_data['master'] = conn.host

> {code}

> I think the protocol should be added like the following.

> {code:python}

> conn_data['master'] = "spark://{}:{}".format(conn.host, conn.port)

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [airflow] baolsen commented on a change in pull request #6773: [AIRFLOW-6038] AWS DataSync example_dags added

baolsen commented on a change in pull request #6773: [AIRFLOW-6038] AWS DataSync example_dags added URL: https://github.com/apache/airflow/pull/6773#discussion_r356971393 ## File path: airflow/providers/amazon/aws/operators/datasync.py ## @@ -27,25 +25,45 @@ from airflow.utils.decorators import apply_defaults -class AWSDataSyncCreateTaskOperator(BaseOperator): -r"""Create an AWS DataSync Task. +# pylint: disable=too-many-instance-attributes, too-many-arguments +class AWSDataSyncOperator(BaseOperator): +r"""Find, Create, Update, Execute and Delete AWS DataSync Tasks. + +If ``do_xcom_push`` is True, then the TaskArn and TaskExecutionArn which +were executed will be pushed to an XCom. -If there are existing Locations which match the specified -source and destination URIs then these will be used for the Task. -Otherwise, new Locations can be created automatically, -depending on input parameters. +.. seealso:: +For more information on how to use this operator, take a look at the guide: +:ref:`howto/operator:AWSDataSyncOperator` -If ``do_xcom_push`` is True, the TaskArn which is created -will be pushed to an XCom. +.. note:: There may be 0, 1, or many existing DataSync Tasks. The default +behavior is to create a new Task if there are 0, or execute the Task +if there was 1 Task, or fail if there were many Tasks. :param str aws_conn_id: AWS connection to use. -:param str source_location_uri: Source location URI. +:param int wait_for_task_execution: Time to wait between two +consecutive calls to check TaskExecution status. +:param str task_arn: AWS DataSync TaskArn to use. If None, then this operator will +attempt to either search for an existing Task or create a new Task. +:param str source_location_uri: Source location URI to search for. All DataSync +Tasks with a LocationArn with this URI will be considered. Example: ``smb://server/subdir`` -:param str destination_location_uri: Destination location URI. +:param str destination_location_uri: Destination location URI to search for. +All DataSync Tasks with a LocationArn with this URI will be considered. Example: ``s3://airflow_bucket/stuff`` -:param bool case_sensitive_location_search: Whether or not to do a +:param bool location_search_case_sensitive: Whether or not to do a Review comment: Happy with that suggestion, I will make it default as it is more intuitive. I will leave the option in the datasync_hook constructor, in case the user wants to change this default behavior. They can inherit and override the Operator methods if they want. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] baolsen commented on a change in pull request #6773: [AIRFLOW-6038] AWS DataSync example_dags added

baolsen commented on a change in pull request #6773: [AIRFLOW-6038] AWS

DataSync example_dags added

URL: https://github.com/apache/airflow/pull/6773#discussion_r356969219

##

File path:

airflow/providers/amazon/aws/example_dags/example_datasync_complex.py

##

@@ -0,0 +1,101 @@

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+"""

+This is an example dag for using `AWSDataSyncOperator` in a more complex

manner.

+

+- Try to get a TaskArn. If one exists, update it.

+- If no tasks exist, try to create a new DataSync Task.

+- If source and destination locations dont exist for the new task, create

them first

+- If many tasks exist, raise an Exception

+- After getting or creating a DataSync Task, run it

+

+This DAG relies on the following environment variables:

+

+* SOURCE_LOCATION_URI - Source location URI, usually on premisis SMB or NFS

+* DESTINATION_LOCATION_URI - Destination location URI, usually S3

+* CREATE_TASK_KWARGS - Passed to boto3.create_task(**kwargs)

+* CREATE_SOURCE_LOCATION_KWARGS - Passed to boto3.create_location(**kwargs)

+* CREATE_DESTINATION_LOCATION_KWARGS - Passed to

boto3.create_location(**kwargs)

+* UPDATE_TASK_KWARGS - Passed to boto3.update_task(**kwargs)

+"""

+

+import json

+from os import getenv

+

+from airflow import models, utils

+from airflow.providers.amazon.aws.operators.datasync import AWSDataSyncOperator

+

+# [START howto_operator_datasync_complex_args]

+SOURCE_LOCATION_URI = getenv(

+"SOURCE_LOCATION_URI", "smb://hostname/directory/")

+

+DESTINATION_LOCATION_URI = getenv(

+"DESTINATION_LOCATION_URI", "s3://mybucket/prefix")

+

+default_create_task_kwargs = '{"Name": "Created by Airflow"}'

+CREATE_TASK_KWARGS = json.loads(

+getenv("CREATE_TASK_KWARGS", default_create_task_kwargs)

+)

+

+default_create_source_location_kwargs = "{}"

+CREATE_SOURCE_LOCATION_KWARGS = json.loads(

+getenv("CREATE_SOURCE_LOCATION_KWARGS",

+ default_create_source_location_kwargs)

+)

+

+bucket_access_role_arn = (

+"arn:aws:iam::2223344:role/r-2223344-my-bucket-access-role"

+)

+default_destination_location_kwargs = """\

+{"S3BucketArn": "arn:aws:s3:::mybucket",

+"S3Config": {"BucketAccessRoleArn": bucket_access_role_arn}

+}"""

+CREATE_DESTINATION_LOCATION_KWARGS = json.loads(

+getenv("CREATE_DESTINATION_LOCATION_KWARGS",

+ default_destination_location_kwargs)

+)

+

+default_update_task_kwargs = '{"Name": "Updated by Airflow"}'

+UPDATE_TASK_KWARGS = json.loads(

+getenv("UPDATE_TASK_KWARGS", default_update_task_kwargs)

+)

+

+default_args = {"start_date": utils.dates.days_ago(1)}

+# [END howto_operator_datasync_complex_args]

+

+with models.DAG(

+"example_datasync_complex",

Review comment:

Agreed :) I'll change them to "example_1" and "example_2" to make it clearer.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

[jira] [Comment Edited] (AIRFLOW-6214) Spark driver status tracking for standalone, YARN, Mesos and K8s with cluster deploy mode

[

https://issues.apache.org/jira/browse/AIRFLOW-6214?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16994238#comment-16994238

]

Albertus Kelvin edited comment on AIRFLOW-6214 at 12/12/19 5:44 AM:

hi [~dennisli], thanks for your comment. Really appreciate.

Just fyi, I set up the connection via environment variables and provided the

URI. But I think it should apply to db as well.

I investigated the *Connection* module (airflow.models.connection) further and

found that if we provide the URI (ex: spark://host:port), then the attributes

will be derived by parsing the URI.

When parsing the host

([code|https://github.com/apache/airflow/blob/master/airflow/models/connection.py#L137]),

the resulting value was only the hostname without the scheme.

Therefore, the *conn.host* in the following code will only contain the hostname.

{code:python}

conn = self.get_connection(self._conn_id)

if conn.port:

conn_data['master'] = "{}:{}".format(conn.host, conn.port)

else:

conn_data['master'] = conn.host

{code}

Since *conn* consists of several attributes, including scheme (conn_type), host

(host), and port (_port_), I think the *conn_data['master']* should be resolved

like:

{code:python}

conn = self.get_connection(self._conn_id)

if conn.port:

conn_data['master'] = "{}://{}:{}".format(conn.conn_type, conn.host,

conn.port)

else:

conn_data['master'] = "{}://{}".format(conn.conn_type, conn.host)

{code}

In addition to your note about the scheme should be put in the *host* (like in

the unit test), I think it is somewhat not relevant to how the *Connection*

module works. It also might result in some kinds of exception since the

*Connection* table has a dedicated column for *scheme* and *host*. Moreover, I

didn't find any method that parse the scheme from the host.

What do you think?

was (Author: albertus-kelvin):

hi [~dennisli], thanks for your comment. Really appreciate.

Just fyi, I set up the connection via environment variables and provided the

URI. But I think it should apply to db as well.

I investigated the *Connection* module (airflow.models.connection) further and

found that if we provide the URI (ex: spark://host:port), then the attributes

will be derived by parsing the URI.

When parsing the host

([code|https://github.com/apache/airflow/blob/master/airflow/models/connection.py#L137]),

the resulting value was only the hostname without the scheme.

Therefore, the *conn.host* in the following code will only contain the hostname.

{code:python}

conn = self.get_connection(self._conn_id)

if conn.port:

conn_data['master'] = "{}:{}".format(conn.host, conn.port)

else:

conn_data['master'] = conn.host

{code}

Since *conn* consists of several attributes, including scheme, host, and port,

I think the *conn_data['master']* should be resolved like:

{code:python}

conn = self.get_connection(self._conn_id)

if conn.port:

conn_data['master'] = "{}://{}:{}".format(conn.conn_type, conn.host,

conn.port)

else:

conn_data['master'] = "{}://{}".format(conn.conn_type, conn.host)

{code}

In addition to your note about the scheme should be put in the *host* (like in

the unit test), I think it is somewhat not relevant to how the *Connection*

module works. It also might result in some kinds of exception since the

*Connection* table has a dedicated column for *scheme* and *host*. Moreover, I

didn't find any method that parse the scheme from the host.

What do you think?

> Spark driver status tracking for standalone, YARN, Mesos and K8s with cluster

> deploy mode

> -

>

> Key: AIRFLOW-6214

> URL: https://issues.apache.org/jira/browse/AIRFLOW-6214

> Project: Apache Airflow

> Issue Type: Improvement

> Components: hooks, operators

>Affects Versions: 1.10.6

>Reporter: Albertus Kelvin

>Assignee: xifeng

>Priority: Minor

>

> Based on the following code snippet:

> {code:python}

> def _resolve_should_track_driver_status(self):

> return ('spark://' in self._connection['master'] and

> self._connection['deploy_mode'] == 'cluster')

> {code}

>

> It seems that the above code will always return *False* because the master

> address for standalone cluster doesn't contain *spark://* as shown from the

> below code snippet.

> {code:python}

> conn = self.get_connection(self._conn_id)

> if conn.port:

> conn_data['master'] = "{}:{}".format(conn.host, conn.port)

> else:

> conn_data['master'] = conn.host

> {code}

> Additionally, I think this driver status tracker should also be enabled for

> mesos and kubernetes with cluster mode since the *--status* argument supports

> all of these cluster managers. Refer to

>

[jira] [Commented] (AIRFLOW-6214) Spark driver status tracking for standalone, YARN, Mesos and K8s with cluster deploy mode

[

https://issues.apache.org/jira/browse/AIRFLOW-6214?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16994238#comment-16994238

]

Albertus Kelvin commented on AIRFLOW-6214:

--

hi [~dennisli], thanks for your comment. Really appreciate.

Just fyi, I set up the connection via environment variables and provided the

URI. But I think it should apply to db as well.

I investigated the *Connection* module (airflow.models.connection) further and

found that if we provide the URI (ex: spark://host:port), then the attributes

will be derived by parsing the URI.

When parsing the host

([code|https://github.com/apache/airflow/blob/master/airflow/models/connection.py#L137]),

the resulting value was only the hostname without the scheme.

Therefore, the *conn.host* in the following code will only contain the hostname.

{code:python}

conn = self.get_connection(self._conn_id)

if conn.port:

conn_data['master'] = "{}:{}".format(conn.host, conn.port)

else:

conn_data['master'] = conn.host

{code}

Since *conn* consists of several attributes, including scheme, host, and port,

I think the *conn_data['master']* should be resolved like:

{code:python}

conn = self.get_connection(self._conn_id)

if conn.port:

conn_data['master'] = "{}://{}:{}".format(conn.conn_type, conn.host,

conn.port)

else:

conn_data['master'] = "{}://{}".format(conn.conn_type, conn.host)

{code}

In addition to your note about the scheme should be put in the *host* (like in

the unit test), I think it is somewhat not relevant to how the *Connection*

module works. It also might result in some kinds of exception since the

*Connection* table has a dedicated column for *scheme* and *host*. Moreover, I

didn't find any method that parse the scheme from the host.

What do you think?

> Spark driver status tracking for standalone, YARN, Mesos and K8s with cluster

> deploy mode

> -

>

> Key: AIRFLOW-6214

> URL: https://issues.apache.org/jira/browse/AIRFLOW-6214

> Project: Apache Airflow

> Issue Type: Improvement

> Components: hooks, operators

>Affects Versions: 1.10.6

>Reporter: Albertus Kelvin

>Assignee: xifeng

>Priority: Minor

>

> Based on the following code snippet:

> {code:python}

> def _resolve_should_track_driver_status(self):

> return ('spark://' in self._connection['master'] and

> self._connection['deploy_mode'] == 'cluster')

> {code}

>

> It seems that the above code will always return *False* because the master

> address for standalone cluster doesn't contain *spark://* as shown from the

> below code snippet.

> {code:python}

> conn = self.get_connection(self._conn_id)

> if conn.port:

> conn_data['master'] = "{}:{}".format(conn.host, conn.port)

> else:

> conn_data['master'] = conn.host

> {code}

> Additionally, I think this driver status tracker should also be enabled for

> mesos and kubernetes with cluster mode since the *--status* argument supports

> all of these cluster managers. Refer to

> [this|https://github.com/apache/spark/blob/be867e8a9ee8fc5e4831521770f51793e9265550/core/src/main/scala/org/apache/spark/deploy/SparkSubmitArguments.scala#L543].

> For YARN cluster mode, I think we can use built-in commands from yarn itself,

> such as *yarn application -status *.

> Therefore, the *_build_track_driver_status_command* method should be updated

> accordingly to accommodate such a need, such as the following.

> {code:python}

> def _build_track_driver_status_command(self):

> # The driver id so we can poll for its status

> if not self._driver_id:

> raise AirflowException(

> "Invalid status: attempted to poll driver " +

> "status but no driver id is known. Giving up.")

> if self._connection['master'].startswith("spark://") or

>self._connection['master'].startswith("mesos://") or

>self._connection['master'].startswith("k8s://"):

> # standalone, mesos, kubernetes

> connection_cmd = self._get_spark_binary_path()

> connection_cmd += ["--master", self._connection['master']]

> connection_cmd += ["--status", self._driver_id]

> else:

> # yarn

> connection_cmd = ["yarn application -status"]

> connection_cmd += [self._driver_id]

> self.log.debug("Poll driver status cmd: %s", connection_cmd)

> return connection_cmd

> {code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [airflow] baolsen commented on issue #6773: [AIRFLOW-6038] AWS DataSync example_dags added

baolsen commented on issue #6773: [AIRFLOW-6038] AWS DataSync example_dags added URL: https://github.com/apache/airflow/pull/6773#issuecomment-564857060 Thanks for the great feedback @potiuk and @dimberman , I'll work through them now. A good opportunity for me to try some of the Git features suggested by @potiuk before :) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] vsoch commented on issue #4846: [AIRFLOW-4030] adding start to singularity for airflow

vsoch commented on issue #4846: [AIRFLOW-4030] adding start to singularity for airflow URL: https://github.com/apache/airflow/pull/4846#issuecomment-564847214 I thought so too! This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] pbranson commented on issue #4846: [AIRFLOW-4030] adding start to singularity for airflow

pbranson commented on issue #4846: [AIRFLOW-4030] adding start to singularity for airflow URL: https://github.com/apache/airflow/pull/4846#issuecomment-564846284 I would like to add some community support for this to be merged please We would make use of this for using airflow in the HPC context This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] codecov-io edited a comment on issue #6765: [AIRFLOW-5889] Fix polling for AWS Batch job status

codecov-io edited a comment on issue #6765: [AIRFLOW-5889] Fix polling for AWS Batch job status URL: https://github.com/apache/airflow/pull/6765#issuecomment-563889078 # [Codecov](https://codecov.io/gh/apache/airflow/pull/6765?src=pr=h1) Report > Merging [#6765](https://codecov.io/gh/apache/airflow/pull/6765?src=pr=desc) into [master](https://codecov.io/gh/apache/airflow/commit/0863d41254f9eea0bd66fd096dccf574fa041960?src=pr=desc) will **decrease** coverage by `0.01%`. > The diff coverage is `98.61%`. [](https://codecov.io/gh/apache/airflow/pull/6765?src=pr=tree) ```diff @@Coverage Diff@@ ## master #6765 +/- ## = - Coverage 84.32% 84.3% -0.02% = Files 672 672 Lines 38179 38210 +31 = + Hits32195 32214 +19 - Misses 59845996 +12 ``` | [Impacted Files](https://codecov.io/gh/apache/airflow/pull/6765?src=pr=tree) | Coverage Δ | | |---|---|---| | [airflow/contrib/operators/awsbatch\_operator.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9jb250cmliL29wZXJhdG9ycy9hd3NiYXRjaF9vcGVyYXRvci5weQ==) | `95.83% <98.61%> (+17.18%)` | :arrow_up: | | [airflow/kubernetes/volume\_mount.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9rdWJlcm5ldGVzL3ZvbHVtZV9tb3VudC5weQ==) | `44.44% <0%> (-55.56%)` | :arrow_down: | | [airflow/kubernetes/volume.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9rdWJlcm5ldGVzL3ZvbHVtZS5weQ==) | `52.94% <0%> (-47.06%)` | :arrow_down: | | [airflow/kubernetes/pod\_launcher.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9rdWJlcm5ldGVzL3BvZF9sYXVuY2hlci5weQ==) | `45.25% <0%> (-46.72%)` | :arrow_down: | | [airflow/kubernetes/refresh\_config.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9rdWJlcm5ldGVzL3JlZnJlc2hfY29uZmlnLnB5) | `50.98% <0%> (-23.53%)` | :arrow_down: | | [...rflow/contrib/operators/kubernetes\_pod\_operator.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9jb250cmliL29wZXJhdG9ycy9rdWJlcm5ldGVzX3BvZF9vcGVyYXRvci5weQ==) | `78.2% <0%> (-20.52%)` | :arrow_down: | | [airflow/utils/dag\_processing.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy91dGlscy9kYWdfcHJvY2Vzc2luZy5weQ==) | `87.42% <0%> (-0.39%)` | :arrow_down: | | [airflow/hooks/dbapi\_hook.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9ob29rcy9kYmFwaV9ob29rLnB5) | `91.52% <0%> (+0.84%)` | :arrow_up: | | [airflow/models/connection.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9tb2RlbHMvY29ubmVjdGlvbi5weQ==) | `68.96% <0%> (+0.98%)` | :arrow_up: | | [airflow/hooks/hive\_hooks.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9ob29rcy9oaXZlX2hvb2tzLnB5) | `77.6% <0%> (+1.52%)` | :arrow_up: | | ... and [3 more](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree-more) | | -- [Continue to review full report at Codecov](https://codecov.io/gh/apache/airflow/pull/6765?src=pr=continue). > **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta) > `Δ = absolute (impact)`, `ø = not affected`, `? = missing data` > Powered by [Codecov](https://codecov.io/gh/apache/airflow/pull/6765?src=pr=footer). Last update [0863d41...c52463e](https://codecov.io/gh/apache/airflow/pull/6765?src=pr=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments). This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] codecov-io edited a comment on issue #6765: [AIRFLOW-5889] Fix polling for AWS Batch job status

codecov-io edited a comment on issue #6765: [AIRFLOW-5889] Fix polling for AWS Batch job status URL: https://github.com/apache/airflow/pull/6765#issuecomment-563889078 # [Codecov](https://codecov.io/gh/apache/airflow/pull/6765?src=pr=h1) Report > Merging [#6765](https://codecov.io/gh/apache/airflow/pull/6765?src=pr=desc) into [master](https://codecov.io/gh/apache/airflow/commit/0863d41254f9eea0bd66fd096dccf574fa041960?src=pr=desc) will **decrease** coverage by `0.01%`. > The diff coverage is `98.61%`. [](https://codecov.io/gh/apache/airflow/pull/6765?src=pr=tree) ```diff @@Coverage Diff@@ ## master #6765 +/- ## = - Coverage 84.32% 84.3% -0.02% = Files 672 672 Lines 38179 38210 +31 = + Hits32195 32214 +19 - Misses 59845996 +12 ``` | [Impacted Files](https://codecov.io/gh/apache/airflow/pull/6765?src=pr=tree) | Coverage Δ | | |---|---|---| | [airflow/contrib/operators/awsbatch\_operator.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9jb250cmliL29wZXJhdG9ycy9hd3NiYXRjaF9vcGVyYXRvci5weQ==) | `95.83% <98.61%> (+17.18%)` | :arrow_up: | | [airflow/kubernetes/volume\_mount.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9rdWJlcm5ldGVzL3ZvbHVtZV9tb3VudC5weQ==) | `44.44% <0%> (-55.56%)` | :arrow_down: | | [airflow/kubernetes/volume.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9rdWJlcm5ldGVzL3ZvbHVtZS5weQ==) | `52.94% <0%> (-47.06%)` | :arrow_down: | | [airflow/kubernetes/pod\_launcher.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9rdWJlcm5ldGVzL3BvZF9sYXVuY2hlci5weQ==) | `45.25% <0%> (-46.72%)` | :arrow_down: | | [airflow/kubernetes/refresh\_config.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9rdWJlcm5ldGVzL3JlZnJlc2hfY29uZmlnLnB5) | `50.98% <0%> (-23.53%)` | :arrow_down: | | [...rflow/contrib/operators/kubernetes\_pod\_operator.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9jb250cmliL29wZXJhdG9ycy9rdWJlcm5ldGVzX3BvZF9vcGVyYXRvci5weQ==) | `78.2% <0%> (-20.52%)` | :arrow_down: | | [airflow/utils/dag\_processing.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy91dGlscy9kYWdfcHJvY2Vzc2luZy5weQ==) | `87.42% <0%> (-0.39%)` | :arrow_down: | | [airflow/hooks/dbapi\_hook.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9ob29rcy9kYmFwaV9ob29rLnB5) | `91.52% <0%> (+0.84%)` | :arrow_up: | | [airflow/models/connection.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9tb2RlbHMvY29ubmVjdGlvbi5weQ==) | `68.96% <0%> (+0.98%)` | :arrow_up: | | [airflow/hooks/hive\_hooks.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9ob29rcy9oaXZlX2hvb2tzLnB5) | `77.6% <0%> (+1.52%)` | :arrow_up: | | ... and [3 more](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree-more) | | -- [Continue to review full report at Codecov](https://codecov.io/gh/apache/airflow/pull/6765?src=pr=continue). > **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta) > `Δ = absolute (impact)`, `ø = not affected`, `? = missing data` > Powered by [Codecov](https://codecov.io/gh/apache/airflow/pull/6765?src=pr=footer). Last update [0863d41...c52463e](https://codecov.io/gh/apache/airflow/pull/6765?src=pr=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments). This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] codecov-io edited a comment on issue #6765: [AIRFLOW-5889] Fix polling for AWS Batch job status

codecov-io edited a comment on issue #6765: [AIRFLOW-5889] Fix polling for AWS Batch job status URL: https://github.com/apache/airflow/pull/6765#issuecomment-563889078 # [Codecov](https://codecov.io/gh/apache/airflow/pull/6765?src=pr=h1) Report > Merging [#6765](https://codecov.io/gh/apache/airflow/pull/6765?src=pr=desc) into [master](https://codecov.io/gh/apache/airflow/commit/0863d41254f9eea0bd66fd096dccf574fa041960?src=pr=desc) will **decrease** coverage by `0.01%`. > The diff coverage is `98.61%`. [](https://codecov.io/gh/apache/airflow/pull/6765?src=pr=tree) ```diff @@Coverage Diff@@ ## master #6765 +/- ## = - Coverage 84.32% 84.3% -0.02% = Files 672 672 Lines 38179 38210 +31 = + Hits32195 32214 +19 - Misses 59845996 +12 ``` | [Impacted Files](https://codecov.io/gh/apache/airflow/pull/6765?src=pr=tree) | Coverage Δ | | |---|---|---| | [airflow/contrib/operators/awsbatch\_operator.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9jb250cmliL29wZXJhdG9ycy9hd3NiYXRjaF9vcGVyYXRvci5weQ==) | `95.83% <98.61%> (+17.18%)` | :arrow_up: | | [airflow/kubernetes/volume\_mount.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9rdWJlcm5ldGVzL3ZvbHVtZV9tb3VudC5weQ==) | `44.44% <0%> (-55.56%)` | :arrow_down: | | [airflow/kubernetes/volume.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9rdWJlcm5ldGVzL3ZvbHVtZS5weQ==) | `52.94% <0%> (-47.06%)` | :arrow_down: | | [airflow/kubernetes/pod\_launcher.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9rdWJlcm5ldGVzL3BvZF9sYXVuY2hlci5weQ==) | `45.25% <0%> (-46.72%)` | :arrow_down: | | [airflow/kubernetes/refresh\_config.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9rdWJlcm5ldGVzL3JlZnJlc2hfY29uZmlnLnB5) | `50.98% <0%> (-23.53%)` | :arrow_down: | | [...rflow/contrib/operators/kubernetes\_pod\_operator.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9jb250cmliL29wZXJhdG9ycy9rdWJlcm5ldGVzX3BvZF9vcGVyYXRvci5weQ==) | `78.2% <0%> (-20.52%)` | :arrow_down: | | [airflow/utils/dag\_processing.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy91dGlscy9kYWdfcHJvY2Vzc2luZy5weQ==) | `87.42% <0%> (-0.39%)` | :arrow_down: | | [airflow/hooks/dbapi\_hook.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9ob29rcy9kYmFwaV9ob29rLnB5) | `91.52% <0%> (+0.84%)` | :arrow_up: | | [airflow/models/connection.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9tb2RlbHMvY29ubmVjdGlvbi5weQ==) | `68.96% <0%> (+0.98%)` | :arrow_up: | | [airflow/hooks/hive\_hooks.py](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree#diff-YWlyZmxvdy9ob29rcy9oaXZlX2hvb2tzLnB5) | `77.6% <0%> (+1.52%)` | :arrow_up: | | ... and [3 more](https://codecov.io/gh/apache/airflow/pull/6765/diff?src=pr=tree-more) | | -- [Continue to review full report at Codecov](https://codecov.io/gh/apache/airflow/pull/6765?src=pr=continue). > **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta) > `Δ = absolute (impact)`, `ø = not affected`, `? = missing data` > Powered by [Codecov](https://codecov.io/gh/apache/airflow/pull/6765?src=pr=footer). Last update [0863d41...c52463e](https://codecov.io/gh/apache/airflow/pull/6765?src=pr=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments). This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[jira] [Commented] (AIRFLOW-4184) Add an AWS Athena Helper to insert into table

[

https://issues.apache.org/jira/browse/AIRFLOW-4184?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16994113#comment-16994113

]

Junyoung Park commented on AIRFLOW-4184:

Now Athena support INSERT INTO clause.

[https://docs.aws.amazon.com/athena/latest/ug/insert-into.html]

> Add an AWS Athena Helper to insert into table

> -

>

> Key: AIRFLOW-4184

> URL: https://issues.apache.org/jira/browse/AIRFLOW-4184

> Project: Apache Airflow

> Issue Type: New Feature

>Reporter: Bryan Yang

>Assignee: Bryan Yang

>Priority: Major

>

> AWS Athena does not support {{inert into table}} clause now, but this

> function is really critical for ETL.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

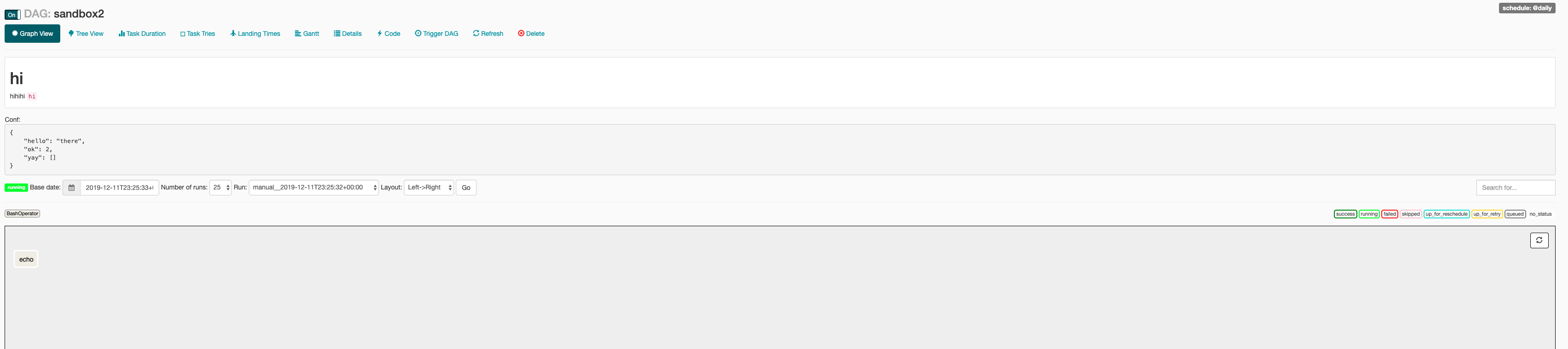

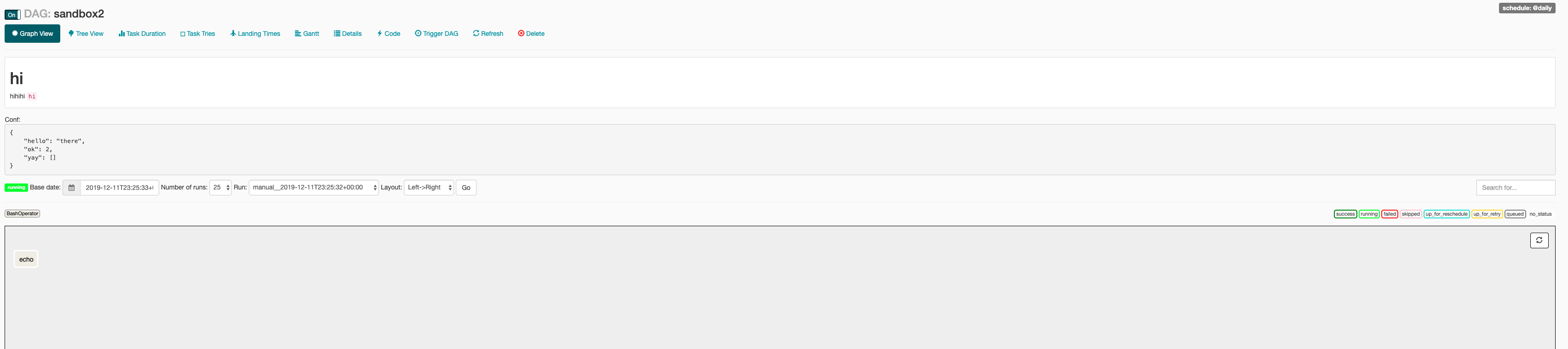

[GitHub] [airflow] codecov-io edited a comment on issue #6794: [AIRFLOW-6231] Display DAG run conf in the graph view

codecov-io edited a comment on issue #6794: [AIRFLOW-6231] Display DAG run conf in the graph view URL: https://github.com/apache/airflow/pull/6794#issuecomment-564805241 # [Codecov](https://codecov.io/gh/apache/airflow/pull/6794?src=pr=h1) Report > Merging [#6794](https://codecov.io/gh/apache/airflow/pull/6794?src=pr=desc) into [master](https://codecov.io/gh/apache/airflow/commit/3bf5195e9e32cc9bfff4e0c1b3f958740225f444?src=pr=desc) will **decrease** coverage by `75.06%`. > The diff coverage is `0%`. [](https://codecov.io/gh/apache/airflow/pull/6794?src=pr=tree) ```diff @@Coverage Diff @@ ## master #6794 +/- ## == - Coverage 84.54% 9.48% -75.07% == Files 672 671-1 Lines 38175 38169-6 == - Hits322753619-28656 - Misses 5900 34550+28650 ``` | [Impacted Files](https://codecov.io/gh/apache/airflow/pull/6794?src=pr=tree) | Coverage Δ | | |---|---|---| | [airflow/www/views.py](https://codecov.io/gh/apache/airflow/pull/6794/diff?src=pr=tree#diff-YWlyZmxvdy93d3cvdmlld3MucHk=) | `0% <0%> (-75.94%)` | :arrow_down: | | [...low/contrib/operators/wasb\_delete\_blob\_operator.py](https://codecov.io/gh/apache/airflow/pull/6794/diff?src=pr=tree#diff-YWlyZmxvdy9jb250cmliL29wZXJhdG9ycy93YXNiX2RlbGV0ZV9ibG9iX29wZXJhdG9yLnB5) | `0% <0%> (-100%)` | :arrow_down: | | [...flow/contrib/example\_dags/example\_qubole\_sensor.py](https://codecov.io/gh/apache/airflow/pull/6794/diff?src=pr=tree#diff-YWlyZmxvdy9jb250cmliL2V4YW1wbGVfZGFncy9leGFtcGxlX3F1Ym9sZV9zZW5zb3IucHk=) | `0% <0%> (-100%)` | :arrow_down: | | [airflow/example\_dags/subdags/subdag.py](https://codecov.io/gh/apache/airflow/pull/6794/diff?src=pr=tree#diff-YWlyZmxvdy9leGFtcGxlX2RhZ3Mvc3ViZGFncy9zdWJkYWcucHk=) | `0% <0%> (-100%)` | :arrow_down: | | [airflow/gcp/sensors/bigquery\_dts.py](https://codecov.io/gh/apache/airflow/pull/6794/diff?src=pr=tree#diff-YWlyZmxvdy9nY3Avc2Vuc29ycy9iaWdxdWVyeV9kdHMucHk=) | `0% <0%> (-100%)` | :arrow_down: | | [airflow/operators/dummy\_operator.py](https://codecov.io/gh/apache/airflow/pull/6794/diff?src=pr=tree#diff-YWlyZmxvdy9vcGVyYXRvcnMvZHVtbXlfb3BlcmF0b3IucHk=) | `0% <0%> (-100%)` | :arrow_down: | | [airflow/gcp/operators/text\_to\_speech.py](https://codecov.io/gh/apache/airflow/pull/6794/diff?src=pr=tree#diff-YWlyZmxvdy9nY3Avb3BlcmF0b3JzL3RleHRfdG9fc3BlZWNoLnB5) | `0% <0%> (-100%)` | :arrow_down: | | [...ample\_dags/example\_emr\_job\_flow\_automatic\_steps.py](https://codecov.io/gh/apache/airflow/pull/6794/diff?src=pr=tree#diff-YWlyZmxvdy9jb250cmliL2V4YW1wbGVfZGFncy9leGFtcGxlX2Vtcl9qb2JfZmxvd19hdXRvbWF0aWNfc3RlcHMucHk=) | `0% <0%> (-100%)` | :arrow_down: | | [...irflow/providers/apache/cassandra/sensors/table.py](https://codecov.io/gh/apache/airflow/pull/6794/diff?src=pr=tree#diff-YWlyZmxvdy9wcm92aWRlcnMvYXBhY2hlL2Nhc3NhbmRyYS9zZW5zb3JzL3RhYmxlLnB5) | `0% <0%> (-100%)` | :arrow_down: | | [...contrib/example\_dags/example\_papermill\_operator.py](https://codecov.io/gh/apache/airflow/pull/6794/diff?src=pr=tree#diff-YWlyZmxvdy9jb250cmliL2V4YW1wbGVfZGFncy9leGFtcGxlX3BhcGVybWlsbF9vcGVyYXRvci5weQ==) | `0% <0%> (-100%)` | :arrow_down: | | ... and [596 more](https://codecov.io/gh/apache/airflow/pull/6794/diff?src=pr=tree-more) | | -- [Continue to review full report at Codecov](https://codecov.io/gh/apache/airflow/pull/6794?src=pr=continue). > **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta) > `Δ = absolute (impact)`, `ø = not affected`, `? = missing data` > Powered by [Codecov](https://codecov.io/gh/apache/airflow/pull/6794?src=pr=footer). Last update [3bf5195...8e856e2](https://codecov.io/gh/apache/airflow/pull/6794?src=pr=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments). This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] codecov-io commented on issue #6794: [AIRFLOW-6231] Display DAG run conf in the graph view

codecov-io commented on issue #6794: [AIRFLOW-6231] Display DAG run conf in the graph view URL: https://github.com/apache/airflow/pull/6794#issuecomment-564805241 # [Codecov](https://codecov.io/gh/apache/airflow/pull/6794?src=pr=h1) Report > Merging [#6794](https://codecov.io/gh/apache/airflow/pull/6794?src=pr=desc) into [master](https://codecov.io/gh/apache/airflow/commit/3bf5195e9e32cc9bfff4e0c1b3f958740225f444?src=pr=desc) will **decrease** coverage by `75.06%`. > The diff coverage is `0%`. [](https://codecov.io/gh/apache/airflow/pull/6794?src=pr=tree) ```diff @@Coverage Diff @@ ## master #6794 +/- ## == - Coverage 84.54% 9.48% -75.07% == Files 672 671-1 Lines 38175 38169-6 == - Hits322753619-28656 - Misses 5900 34550+28650 ``` | [Impacted Files](https://codecov.io/gh/apache/airflow/pull/6794?src=pr=tree) | Coverage Δ | | |---|---|---| | [airflow/www/views.py](https://codecov.io/gh/apache/airflow/pull/6794/diff?src=pr=tree#diff-YWlyZmxvdy93d3cvdmlld3MucHk=) | `0% <0%> (-75.94%)` | :arrow_down: | | [...low/contrib/operators/wasb\_delete\_blob\_operator.py](https://codecov.io/gh/apache/airflow/pull/6794/diff?src=pr=tree#diff-YWlyZmxvdy9jb250cmliL29wZXJhdG9ycy93YXNiX2RlbGV0ZV9ibG9iX29wZXJhdG9yLnB5) | `0% <0%> (-100%)` | :arrow_down: | | [...flow/contrib/example\_dags/example\_qubole\_sensor.py](https://codecov.io/gh/apache/airflow/pull/6794/diff?src=pr=tree#diff-YWlyZmxvdy9jb250cmliL2V4YW1wbGVfZGFncy9leGFtcGxlX3F1Ym9sZV9zZW5zb3IucHk=) | `0% <0%> (-100%)` | :arrow_down: | | [airflow/example\_dags/subdags/subdag.py](https://codecov.io/gh/apache/airflow/pull/6794/diff?src=pr=tree#diff-YWlyZmxvdy9leGFtcGxlX2RhZ3Mvc3ViZGFncy9zdWJkYWcucHk=) | `0% <0%> (-100%)` | :arrow_down: | | [airflow/gcp/sensors/bigquery\_dts.py](https://codecov.io/gh/apache/airflow/pull/6794/diff?src=pr=tree#diff-YWlyZmxvdy9nY3Avc2Vuc29ycy9iaWdxdWVyeV9kdHMucHk=) | `0% <0%> (-100%)` | :arrow_down: | | [airflow/operators/dummy\_operator.py](https://codecov.io/gh/apache/airflow/pull/6794/diff?src=pr=tree#diff-YWlyZmxvdy9vcGVyYXRvcnMvZHVtbXlfb3BlcmF0b3IucHk=) | `0% <0%> (-100%)` | :arrow_down: | | [airflow/gcp/operators/text\_to\_speech.py](https://codecov.io/gh/apache/airflow/pull/6794/diff?src=pr=tree#diff-YWlyZmxvdy9nY3Avb3BlcmF0b3JzL3RleHRfdG9fc3BlZWNoLnB5) | `0% <0%> (-100%)` | :arrow_down: | | [...ample\_dags/example\_emr\_job\_flow\_automatic\_steps.py](https://codecov.io/gh/apache/airflow/pull/6794/diff?src=pr=tree#diff-YWlyZmxvdy9jb250cmliL2V4YW1wbGVfZGFncy9leGFtcGxlX2Vtcl9qb2JfZmxvd19hdXRvbWF0aWNfc3RlcHMucHk=) | `0% <0%> (-100%)` | :arrow_down: | | [...irflow/providers/apache/cassandra/sensors/table.py](https://codecov.io/gh/apache/airflow/pull/6794/diff?src=pr=tree#diff-YWlyZmxvdy9wcm92aWRlcnMvYXBhY2hlL2Nhc3NhbmRyYS9zZW5zb3JzL3RhYmxlLnB5) | `0% <0%> (-100%)` | :arrow_down: | | [...contrib/example\_dags/example\_papermill\_operator.py](https://codecov.io/gh/apache/airflow/pull/6794/diff?src=pr=tree#diff-YWlyZmxvdy9jb250cmliL2V4YW1wbGVfZGFncy9leGFtcGxlX3BhcGVybWlsbF9vcGVyYXRvci5weQ==) | `0% <0%> (-100%)` | :arrow_down: | | ... and [596 more](https://codecov.io/gh/apache/airflow/pull/6794/diff?src=pr=tree-more) | | -- [Continue to review full report at Codecov](https://codecov.io/gh/apache/airflow/pull/6794?src=pr=continue). > **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta) > `Δ = absolute (impact)`, `ø = not affected`, `? = missing data` > Powered by [Codecov](https://codecov.io/gh/apache/airflow/pull/6794?src=pr=footer). Last update [3bf5195...8e856e2](https://codecov.io/gh/apache/airflow/pull/6794?src=pr=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments). This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] darrenleeweber commented on a change in pull request #6765: [AIRFLOW-5889] Fix polling for AWS Batch job status

darrenleeweber commented on a change in pull request #6765: [AIRFLOW-5889] Fix

polling for AWS Batch job status

URL: https://github.com/apache/airflow/pull/6765#discussion_r356908610

##

File path: airflow/contrib/operators/awsbatch_operator.py

##

@@ -156,32 +179,68 @@ def _wait_for_task_ended(self):

waiter.config.max_attempts = sys.maxsize # timeout is managed by

airflow

waiter.wait(jobs=[self.jobId])

except ValueError:

-# If waiter not available use expo

+self._poll_for_task_ended()

-# Allow a batch job some time to spin up. A random interval

-# decreases the chances of exceeding an AWS API throttle

-# limit when there are many concurrent tasks.

-pause = randint(5, 30)

+def _poll_for_task_ended(self):

+"""

+Poll for task status using a exponential backoff

-retries = 1

-while retries <= self.max_retries:

-self.log.info('AWS Batch job (%s) status check (%d of %d) in

the next %.2f seconds',

- self.jobId, retries, self.max_retries, pause)

-sleep(pause)

+* docs.aws.amazon.com/general/latest/gr/api-retries.html

+"""

+# Allow a batch job some time to spin up. A random interval

+# decreases the chances of exceeding an AWS API throttle

+# limit when there are many concurrent tasks.

+pause = randint(5, 30)

Review comment:

The details on how quickly a batch job can possibly start are complex and

captured in some JIRA tickets related to that change (see commit message for

JIRA ticket). That was all reviewed in a prior PR, so I'd prefer not to

revisit that every time. Details are to be found in:

- https://issues.apache.org/jira/browse/AIRFLOW-5218

- https://github.com/apache/airflow/pull/5825

If it should be configured, please open a new JIRA issue for that

enhancement and propose how to handle/allow the configuration options. My best

guess is that it might be a callable, but I don't want to confuse the focus of

this PR with that enhancement.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

[GitHub] [airflow] darrenleeweber commented on a change in pull request #6765: [WIP][AIRFLOW-5889] Fix polling for AWS Batch job status

darrenleeweber commented on a change in pull request #6765: [WIP][AIRFLOW-5889]

Fix polling for AWS Batch job status

URL: https://github.com/apache/airflow/pull/6765#discussion_r356908842

##

File path: airflow/contrib/operators/awsbatch_operator.py

##

@@ -156,32 +179,68 @@ def _wait_for_task_ended(self):

waiter.config.max_attempts = sys.maxsize # timeout is managed by

airflow

waiter.wait(jobs=[self.jobId])

except ValueError:

-# If waiter not available use expo

+self._poll_for_task_ended()

-# Allow a batch job some time to spin up. A random interval

-# decreases the chances of exceeding an AWS API throttle

-# limit when there are many concurrent tasks.

-pause = randint(5, 30)

+def _poll_for_task_ended(self):

+"""

+Poll for task status using a exponential backoff

-retries = 1

-while retries <= self.max_retries:

-self.log.info('AWS Batch job (%s) status check (%d of %d) in

the next %.2f seconds',

- self.jobId, retries, self.max_retries, pause)

-sleep(pause)

+* docs.aws.amazon.com/general/latest/gr/api-retries.html

+"""

+# Allow a batch job some time to spin up. A random interval

+# decreases the chances of exceeding an AWS API throttle

+# limit when there are many concurrent tasks.

+pause = randint(5, 30)

+

+retries = 1

+while retries <= self.max_retries:

+self.log.info(

+'AWS Batch job (%s) status check (%d of %d) in the next %.2f

seconds',

+self.jobId,

+retries,

+self.max_retries,

+pause,

+)

+sleep(pause)

+

+response = self._get_job_status()

+status = response['jobs'][-1]['status'] # check last job status

+self.log.info('AWS Batch job (%s) status: %s', self.jobId, status)

+

+# jobStatus:

'SUBMITTED'|'PENDING'|'RUNNABLE'|'STARTING'|'RUNNING'|'SUCCEEDED'|'FAILED'

+if status in ['SUCCEEDED', 'FAILED']:

+break

+

+retries += 1

+pause = 1 + pow(retries * 0.3, 2)

+

+def _get_job_status(self) -> Optional[dict]:

+"""

+Get job description

+*

https://docs.aws.amazon.com/batch/latest/APIReference/API_DescribeJobs.html

+"""

+tries = 0

+while tries <= 10:

+tries += 1

+try:

response = self.client.describe_jobs(jobs=[self.jobId])

-status = response['jobs'][-1]['status']

-self.log.info('AWS Batch job (%s) status: %s', self.jobId,

status)

-if status in ['SUCCEEDED', 'FAILED']:

-break

-

-retries += 1

-pause = 1 + pow(retries * 0.3, 2)

+if response and response.get('jobs'):

+return response

+except botocore.exceptions.ClientError as err:

+response = err.response

+self.log.info('Failed to get job status: ', response)

+if response:

+if response.get('Error', {}).get('Code') ==

'TooManyRequestsException':

+self.log.info('Continue for TooManyRequestsException')

+sleep(randint(1, 10)) # avoid excess requests with a

random pause

+continue

+

+self.log.error('Failed to get job status: ', self.jobId)

Review comment:

The latest commits should resolve this.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

[GitHub] [airflow] darrenleeweber commented on a change in pull request #6765: [WIP][AIRFLOW-5889] Fix polling for AWS Batch job status

darrenleeweber commented on a change in pull request #6765: [WIP][AIRFLOW-5889]

Fix polling for AWS Batch job status

URL: https://github.com/apache/airflow/pull/6765#discussion_r356908610

##

File path: airflow/contrib/operators/awsbatch_operator.py

##

@@ -156,32 +179,68 @@ def _wait_for_task_ended(self):

waiter.config.max_attempts = sys.maxsize # timeout is managed by

airflow

waiter.wait(jobs=[self.jobId])

except ValueError:

-# If waiter not available use expo

+self._poll_for_task_ended()

-# Allow a batch job some time to spin up. A random interval

-# decreases the chances of exceeding an AWS API throttle

-# limit when there are many concurrent tasks.

-pause = randint(5, 30)

+def _poll_for_task_ended(self):

+"""

+Poll for task status using a exponential backoff

-retries = 1

-while retries <= self.max_retries:

-self.log.info('AWS Batch job (%s) status check (%d of %d) in

the next %.2f seconds',

- self.jobId, retries, self.max_retries, pause)

-sleep(pause)

+* docs.aws.amazon.com/general/latest/gr/api-retries.html

+"""

+# Allow a batch job some time to spin up. A random interval

+# decreases the chances of exceeding an AWS API throttle

+# limit when there are many concurrent tasks.

+pause = randint(5, 30)

Review comment:

The details on how quickly a batch job can possibly start are complex and

captured in some JIRA tickets related to that change (see commit message for

JIRA ticket). That was all reviewed in a prior PR, so I'd prefer not to

revisit that every time. If it must be configured, please open a new JIRA

issue for it and propose how to handle/allow the configuration options.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

[GitHub] [airflow] konqui0 commented on a change in pull request #6767: [AIRFLOW-6208] Implement fileno in StreamLogWriter

konqui0 commented on a change in pull request #6767: [AIRFLOW-6208] Implement fileno in StreamLogWriter URL: https://github.com/apache/airflow/pull/6767#discussion_r356894253 ## File path: airflow/utils/log/logging_mixin.py ## @@ -116,6 +116,13 @@ def isatty(self): """ return False +def fileno(self): +""" +Returns the stdout file descriptor 1. +For compatibility reasons e.g python subprocess module stdout redirection. +""" +return 1 Review comment: Is there a way to identify if the stream is stderr within the StreamLogWriter? That's true, the only alternative I can think of would be creating a pipe, returning its fd and writing everything written to that pipe. I'm not sure if this would be an acceptable solution / workaround. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] dimberman commented on issue #6643: [AIRFLOW-6040] Fix KubernetesJobWatcher Read time out error

dimberman commented on issue #6643: [AIRFLOW-6040] Fix KubernetesJobWatcher Read time out error URL: https://github.com/apache/airflow/pull/6643#issuecomment-564787657 @ashb @davlum bumping this ticket as I would like to get this merged. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[jira] [Commented] (AIRFLOW-6084) Add info endpoint to experimental api

[ https://issues.apache.org/jira/browse/AIRFLOW-6084?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=16994007#comment-16994007 ] ASF GitHub Bot commented on AIRFLOW-6084: - dimberman commented on pull request #6651: [AIRFLOW-6084] Add info endpoint to experimental api URL: https://github.com/apache/airflow/pull/6651 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Add info endpoint to experimental api > - > > Key: AIRFLOW-6084 > URL: https://issues.apache.org/jira/browse/AIRFLOW-6084 > Project: Apache Airflow > Issue Type: Improvement > Components: api >Affects Versions: 1.10.6 >Reporter: Alexandre YANG >Assignee: Alexandre YANG >Priority: Minor > > Add version info endpoint to experimental api. > Use case: version info is useful for audit/monitoring purpose. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (AIRFLOW-6084) Add info endpoint to experimental api