[GitHub] [airflow] galuszkak commented on issue #6660: [AIRFLOW-6065] Add Stackdriver Task Handler

galuszkak commented on issue #6660: [AIRFLOW-6065] Add Stackdriver Task Handler URL: https://github.com/apache/airflow/pull/6660#issuecomment-588672062 @mik-laj We hold a mini hackathon every week (Thursdays, 8:00-9:30, with 5-6 people), so if this is not urgent we could work on it there. We are currently working on enabling multiple remote loggers in Airflow, and we can also try to take on displaying these Stackdriver logs in the Web UI. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[jira] [Assigned] (AIRFLOW-6848) Can i create the viewer filter in LDAP ad group

[ https://issues.apache.org/jira/browse/AIRFLOW-6848?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Deepak Parashar reassigned AIRFLOW-6848: Assignee: Kamil Bregula > Can i create the viewer filter in LDAP ad group > Key: AIRFLOW-6848 > URL: https://issues.apache.org/jira/browse/AIRFLOW-6848 > Project: Apache Airflow > Issue Type: New Feature > Components: authentication > Affects Versions: 1.10.5, 1.10.8 > Reporter: Deepak Parashar > Assignee: Kamil Bregula > Priority: Major > Labels: authentication, authorization, ldap > > Hi, > I am using AD-based authentication to access Airflow in my company. I have created two groups in AD (LDAP), one for superuser_filter and one for data_profiler_filter. I want to create or integrate a third group, such as viewer, that grants read-only access to DAGs and other system information to users who are members of this viewer group. > Has anyone already done this? > group_member_attr = memberOf > superuser_filter = memberOf=CN=airflow-super-users,OU=Groups,OU=RWC,OU=US,OU=NORAM,DC=example,DC=com > data_profiler_filter = memberOf=CN=airflow-data-profilers,OU=Groups,OU=RWC,OU=US,OU=NORAM,DC=example,DC=com > Like the two groups above, can we similarly create one for viewer? > If it is not already available, can someone please help me implement it in the current setup? > -- This message was sent by Atlassian Jira (v8.3.4#803005)
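The configuration in the issue defines `superuser_filter` and `data_profiler_filter` as `memberOf=<group DN>` filters, and asks for a third, read-only viewer group. The following is a minimal sketch of the role-resolution logic such a group would need, written as plain Python over a user's `memberOf` values rather than against any Airflow or LDAP client API. The `airflow-viewers` DN, `ROLE_BY_GROUP` and `resolve_role` are hypothetical names, and returning a single most-privileged role is a simplification.

```python
from typing import Iterable, List, Optional, Tuple

# DNs taken from the issue's configuration.
SUPERUSER_DN = "CN=airflow-super-users,OU=Groups,OU=RWC,OU=US,OU=NORAM,DC=example,DC=com"
DATA_PROFILER_DN = "CN=airflow-data-profilers,OU=Groups,OU=RWC,OU=US,OU=NORAM,DC=example,DC=com"
# Hypothetical viewer group; not part of the original configuration.
VIEWER_DN = "CN=airflow-viewers,OU=Groups,OU=RWC,OU=US,OU=NORAM,DC=example,DC=com"

# Hypothetical mapping, ordered from most to least privileged; first match wins.
ROLE_BY_GROUP: List[Tuple[str, str]] = [
    ("superuser", SUPERUSER_DN),
    ("data_profiler", DATA_PROFILER_DN),
    ("viewer", VIEWER_DN),
]


def resolve_role(member_of: Iterable[str]) -> Optional[str]:
    """Return the most privileged role whose group DN is in the user's memberOf values.

    DNs are compared case-insensitively; this is a simplification, since real
    DN matching also normalises whitespace and escaping.
    """
    groups = {dn.lower() for dn in member_of}
    for role, group_dn in ROLE_BY_GROUP:
        if group_dn.lower() in groups:
            return role
    return None  # user is not in any mapped group


print(resolve_role([VIEWER_DN]))  # viewer
```

Ordering the mapping from most to least privileged means a user who belongs to both the data-profiler and viewer groups resolves to `data_profiler`, so adding a viewer group never downgrades existing users.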

[jira] [Updated] (AIRFLOW-6848) Can i create the viewer filter in LDAP ad group

[ https://issues.apache.org/jira/browse/AIRFLOW-6848?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Deepak Parashar updated AIRFLOW-6848: - Labels: authentication authorization ldap (was: ) > Can i create the viewer filter in LDAP ad group > Key: AIRFLOW-6848 > URL: https://issues.apache.org/jira/browse/AIRFLOW-6848 > Project: Apache Airflow > Issue Type: New Feature > Components: authentication > Affects Versions: 1.10.5, 1.10.8 > Reporter: Deepak Parashar > Priority: Major > Labels: authentication, authorization, ldap

[jira] [Created] (AIRFLOW-6848) Can i create the viewer filter in LDAP ad group

Deepak Parashar created AIRFLOW-6848: Summary: Can i create the viewer filter in LDAP ad group Key: AIRFLOW-6848 URL: https://issues.apache.org/jira/browse/AIRFLOW-6848 Project: Apache Airflow Issue Type: New Feature Components: authentication Affects Versions: 1.10.8, 1.10.5 Reporter: Deepak Parashar

[GitHub] [airflow] codecov-io edited a comment on issue #7471: [AIRFLOW-6840] Bump up version of future

codecov-io edited a comment on issue #7471: [AIRFLOW-6840] Bump up version of future URL: https://github.com/apache/airflow/pull/7471#issuecomment-588604085

# [Codecov](https://codecov.io/gh/apache/airflow/pull/7471?src=pr&el=h1) Report
> Merging [#7471](https://codecov.io/gh/apache/airflow/pull/7471?src=pr&el=desc) into [v1-10-test](https://codecov.io/gh/apache/airflow/commit/6135f7dcd76db27acdb70e4394db7449fa1bcf7a?src=pr&el=desc) will **increase** coverage by `<.01%`.
> The diff coverage is `n/a`.

```diff
@@              Coverage Diff               @@
##           v1-10-test    #7471      +/-   ##
==============================================
+ Coverage       81.63%   81.64%   +<.01%
==============================================
  Files             529      529
  Lines           36437    36437
==============================================
+ Hits            29747    29749       +2
+ Misses           6690     6688       -2
```

| [Impacted Files](https://codecov.io/gh/apache/airflow/pull/7471?src=pr&el=tree) | Coverage Δ | |
|---|---|---|
| [airflow/operators/postgres\_operator.py](https://codecov.io/gh/apache/airflow/pull/7471/diff?src=pr&el=tree#diff-YWlyZmxvdy9vcGVyYXRvcnMvcG9zdGdyZXNfb3BlcmF0b3IucHk=) | `100% <0%> (ø)` | :arrow_up: |
| [airflow/operators/mysql\_operator.py](https://codecov.io/gh/apache/airflow/pull/7471/diff?src=pr&el=tree#diff-YWlyZmxvdy9vcGVyYXRvcnMvbXlzcWxfb3BlcmF0b3IucHk=) | `100% <0%> (ø)` | :arrow_up: |
| [airflow/operators/mysql\_to\_hive.py](https://codecov.io/gh/apache/airflow/pull/7471/diff?src=pr&el=tree#diff-YWlyZmxvdy9vcGVyYXRvcnMvbXlzcWxfdG9faGl2ZS5weQ==) | `100% <0%> (ø)` | :arrow_up: |
| [airflow/operators/generic\_transfer.py](https://codecov.io/gh/apache/airflow/pull/7471/diff?src=pr&el=tree#diff-YWlyZmxvdy9vcGVyYXRvcnMvZ2VuZXJpY190cmFuc2Zlci5weQ==) | `100% <0%> (ø)` | :arrow_up: |
| [airflow/contrib/kubernetes/volume\_mount.py](https://codecov.io/gh/apache/airflow/pull/7471/diff?src=pr&el=tree#diff-YWlyZmxvdy9jb250cmliL2t1YmVybmV0ZXMvdm9sdW1lX21vdW50LnB5) | `100% <0%> (ø)` | :arrow_up: |
| [airflow/hooks/hdfs\_hook.py](https://codecov.io/gh/apache/airflow/pull/7471/diff?src=pr&el=tree#diff-YWlyZmxvdy9ob29rcy9oZGZzX2hvb2sucHk=) | `92.5% <0%> (ø)` | :arrow_up: |
| [airflow/contrib/kubernetes/volume.py](https://codecov.io/gh/apache/airflow/pull/7471/diff?src=pr&el=tree#diff-YWlyZmxvdy9jb250cmliL2t1YmVybmV0ZXMvdm9sdW1lLnB5) | `100% <0%> (ø)` | :arrow_up: |
| [airflow/contrib/kubernetes/pod\_launcher.py](https://codecov.io/gh/apache/airflow/pull/7471/diff?src=pr&el=tree#diff-YWlyZmxvdy9jb250cmliL2t1YmVybmV0ZXMvcG9kX2xhdW5jaGVyLnB5) | `92.48% <0%> (ø)` | :arrow_up: |
| [airflow/security/kerberos.py](https://codecov.io/gh/apache/airflow/pull/7471/diff?src=pr&el=tree#diff-YWlyZmxvdy9zZWN1cml0eS9rZXJiZXJvcy5weQ==) | `75.55% <0%> (ø)` | :arrow_up: |
| [airflow/contrib/kubernetes/pod\_generator.py](https://codecov.io/gh/apache/airflow/pull/7471/diff?src=pr&el=tree#diff-YWlyZmxvdy9jb250cmliL2t1YmVybmV0ZXMvcG9kX2dlbmVyYXRvci5weQ==) | `87.5% <0%> (ø)` | :arrow_up: |
| ... and [25 more](https://codecov.io/gh/apache/airflow/pull/7471/diff?src=pr&el=tree-more) | | |

-- [Continue to review full report at Codecov](https://codecov.io/gh/apache/airflow/pull/7471?src=pr&el=continue).
> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)
> `Δ = absolute (impact)`, `ø = not affected`, `? = missing data`
> Powered by [Codecov](https://codecov.io/gh/apache/airflow/pull/7471?src=pr&el=footer). Last update [6135f7d...2506022](https://codecov.io/gh/apache/airflow/pull/7471?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).
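The `Coverage` percentages in these reports are hits divided by tracked lines. A minimal sketch, assuming Codecov's default settings of two decimal places rounded down, reproduces the figures from the `Hits` and `Lines` rows of the #7471 diff; `coverage_pct` and `misses` are hypothetical helper names.

```python
def coverage_pct(hits: int, lines: int) -> str:
    """Return hits/lines as a percentage string, truncated to two decimals.

    Assumes Codecov-style rounding down (precision 2). Integer arithmetic
    avoids floating-point surprises at the truncation boundary.
    """
    if lines <= 0:
        raise ValueError("lines must be positive")
    hundredths = hits * 10000 // lines  # percentage * 100, rounded down
    return f"{hundredths // 100}.{hundredths % 100:02d}"


def misses(hits: int, lines: int) -> int:
    """Tracked lines that no test executed."""
    return lines - hits


# Base and head of PR #7471 (v1-10-test), from the coverage diff.
print(coverage_pct(29747, 36437), misses(29747, 36437))  # 81.63 6690
print(coverage_pct(29749, 36437), misses(29749, 36437))  # 81.64 6688
```

Under ordinary rounding the base would read 81.64% (29747 / 36437 ≈ 81.6395%), which is why the sketch truncates instead.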

[GitHub] [airflow] codecov-io commented on issue #7471: [AIRFLOW-6840] Bump up version of future

codecov-io commented on issue #7471: [AIRFLOW-6840] Bump up version of future URL: https://github.com/apache/airflow/pull/7471#issuecomment-588604085

# [Codecov](https://codecov.io/gh/apache/airflow/pull/7471?src=pr&el=h1) Report
> Merging [#7471](https://codecov.io/gh/apache/airflow/pull/7471?src=pr&el=desc) into [v1-10-test](https://codecov.io/gh/apache/airflow/commit/6135f7dcd76db27acdb70e4394db7449fa1bcf7a?src=pr&el=desc) will **increase** coverage by `<.01%`.
> The diff coverage is `n/a`.

```diff
@@              Coverage Diff               @@
##           v1-10-test    #7471      +/-   ##
==============================================
+ Coverage       81.63%   81.64%   +<.01%
==============================================
  Files             529      529
  Lines           36437    36437
==============================================
+ Hits            29747    29749       +2
+ Misses           6690     6688       -2
```

| [Impacted Files](https://codecov.io/gh/apache/airflow/pull/7471?src=pr&el=tree) | Coverage Δ | |
|---|---|---|
| [airflow/utils/dag\_processing.py](https://codecov.io/gh/apache/airflow/pull/7471/diff?src=pr&el=tree#diff-YWlyZmxvdy91dGlscy9kYWdfcHJvY2Vzc2luZy5weQ==) | `92.33% <0%> (+0.32%)` | :arrow_up: |

-- [Continue to review full report at Codecov](https://codecov.io/gh/apache/airflow/pull/7471?src=pr&el=continue). Last update [6135f7d...2506022](https://codecov.io/gh/apache/airflow/pull/7471?src=pr&el=lastupdated).

[GitHub] [airflow] codecov-io commented on issue #7470: [AIRFLOW-6834] Fix flaky test_scheduler_job by sorting TaskInstance

codecov-io commented on issue #7470: [AIRFLOW-6834] Fix flaky test_scheduler_job by sorting TaskInstance URL: https://github.com/apache/airflow/pull/7470#issuecomment-588602591

# [Codecov](https://codecov.io/gh/apache/airflow/pull/7470?src=pr&el=h1) Report
> Merging [#7470](https://codecov.io/gh/apache/airflow/pull/7470?src=pr&el=desc) into [master](https://codecov.io/gh/apache/airflow/commit/1a9a9f7618f1c22e3e9a6ef4ec73b717c7760c7d?src=pr&el=desc) will **decrease** coverage by `0.45%`.
> The diff coverage is `100%`.

```diff
@@            Coverage Diff             @@
##           master    #7470      +/-   ##
==========================================
- Coverage   86.68%   86.22%   -0.46%
==========================================
  Files         882      882
  Lines       41526    41526
==========================================
- Hits        35997    35806     -191
- Misses       5529     5720     +191
```

| [Impacted Files](https://codecov.io/gh/apache/airflow/pull/7470?src=pr&el=tree) | Coverage Δ | |
|---|---|---|
| [airflow/models/dagrun.py](https://codecov.io/gh/apache/airflow/pull/7470/diff?src=pr&el=tree#diff-YWlyZmxvdy9tb2RlbHMvZGFncnVuLnB5) | `96.55% <100%> (ø)` | :arrow_up: |
| [...w/providers/apache/hive/operators/mysql\_to\_hive.py](https://codecov.io/gh/apache/airflow/pull/7470/diff?src=pr&el=tree#diff-YWlyZmxvdy9wcm92aWRlcnMvYXBhY2hlL2hpdmUvb3BlcmF0b3JzL215c3FsX3RvX2hpdmUucHk=) | `35.84% <0%> (-64.16%)` | :arrow_down: |
| [airflow/kubernetes/volume\_mount.py](https://codecov.io/gh/apache/airflow/pull/7470/diff?src=pr&el=tree#diff-YWlyZmxvdy9rdWJlcm5ldGVzL3ZvbHVtZV9tb3VudC5weQ==) | `44.44% <0%> (-55.56%)` | :arrow_down: |
| [airflow/kubernetes/volume.py](https://codecov.io/gh/apache/airflow/pull/7470/diff?src=pr&el=tree#diff-YWlyZmxvdy9rdWJlcm5ldGVzL3ZvbHVtZS5weQ==) | `52.94% <0%> (-47.06%)` | :arrow_down: |
| [airflow/security/kerberos.py](https://codecov.io/gh/apache/airflow/pull/7470/diff?src=pr&el=tree#diff-YWlyZmxvdy9zZWN1cml0eS9rZXJiZXJvcy5weQ==) | `30.43% <0%> (-45.66%)` | :arrow_down: |
| [airflow/kubernetes/pod\_launcher.py](https://codecov.io/gh/apache/airflow/pull/7470/diff?src=pr&el=tree#diff-YWlyZmxvdy9rdWJlcm5ldGVzL3BvZF9sYXVuY2hlci5weQ==) | `47.18% <0%> (-45.08%)` | :arrow_down: |
| [airflow/providers/mysql/operators/mysql.py](https://codecov.io/gh/apache/airflow/pull/7470/diff?src=pr&el=tree#diff-YWlyZmxvdy9wcm92aWRlcnMvbXlzcWwvb3BlcmF0b3JzL215c3FsLnB5) | `55% <0%> (-45%)` | :arrow_down: |
| [...viders/cncf/kubernetes/operators/kubernetes\_pod.py](https://codecov.io/gh/apache/airflow/pull/7470/diff?src=pr&el=tree#diff-YWlyZmxvdy9wcm92aWRlcnMvY25jZi9rdWJlcm5ldGVzL29wZXJhdG9ycy9rdWJlcm5ldGVzX3BvZC5weQ==) | `69.38% <0%> (-25.52%)` | :arrow_down: |
| [airflow/kubernetes/refresh\_config.py](https://codecov.io/gh/apache/airflow/pull/7470/diff?src=pr&el=tree#diff-YWlyZmxvdy9rdWJlcm5ldGVzL3JlZnJlc2hfY29uZmlnLnB5) | `50.98% <0%> (-23.53%)` | :arrow_down: |
| [airflow/providers/apache/hive/hooks/hive.py](https://codecov.io/gh/apache/airflow/pull/7470/diff?src=pr&el=tree#diff-YWlyZmxvdy9wcm92aWRlcnMvYXBhY2hlL2hpdmUvaG9va3MvaGl2ZS5weQ==) | `76.02% <0%> (-1.54%)` | :arrow_down: |
| ... and [2 more](https://codecov.io/gh/apache/airflow/pull/7470/diff?src=pr&el=tree-more) | | |

-- [Continue to review full report at Codecov](https://codecov.io/gh/apache/airflow/pull/7470?src=pr&el=continue). Last update [1a9a9f7...13e180b](https://codecov.io/gh/apache/airflow/pull/7470?src=pr&el=lastupdated).

[GitHub] [airflow] yuqian90 opened a new pull request #7471: [AIRFLOW-6840] Bump up version of future

yuqian90 opened a new pull request #7471: [AIRFLOW-6840] Bump up version of future URL: https://github.com/apache/airflow/pull/7471

---

Issue link: WILL BE INSERTED BY [boring-cyborg](https://github.com/kaxil/boring-cyborg)

Make sure to mark the boxes below before creating PR: [x]

- [ ] Description above provides context of the change
- [ ] Commit message/PR title starts with `[AIRFLOW-]`. AIRFLOW- = JIRA ID*
- [ ] Unit tests coverage for changes (not needed for documentation changes)
- [ ] Commits follow "[How to write a good git commit message](http://chris.beams.io/posts/git-commit/)"
- [ ] Relevant documentation is updated including usage instructions.
- [ ] I will engage committers as explained in [Contribution Workflow Example](https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst#contribution-workflow-example).

* For document-only changes commit message can start with `[AIRFLOW-]`.

---

In case of fundamental code change, Airflow Improvement Proposal ([AIP](https://cwiki.apache.org/confluence/display/AIRFLOW/Airflow+Improvements+Proposals)) is needed.
In case of a new dependency, check compliance with the [ASF 3rd Party License Policy](https://www.apache.org/legal/resolved.html#category-x).
In case of backwards incompatible changes please leave a note in [UPDATING.md](https://github.com/apache/airflow/blob/master/UPDATING.md).
Read the [Pull Request Guidelines](https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst#pull-request-guidelines) for more information.

[jira] [Commented] (AIRFLOW-6840) Bump up version of future to 0.18.2 in v-10-testing to get closer to python 3.8

[ https://issues.apache.org/jira/browse/AIRFLOW-6840?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17040608#comment-17040608 ] ASF GitHub Bot commented on AIRFLOW-6840: - yuqian90 commented on pull request #7471: [AIRFLOW-6840] Bump up version of future URL: https://github.com/apache/airflow/pull/7471 > Bump up version of future to 0.18.2 in v-10-testing to get closer to python 3.8 > Key: AIRFLOW-6840 > URL: https://issues.apache.org/jira/browse/AIRFLOW-6840 > Project: Apache Airflow > Issue Type: Bug > Components: core > Affects Versions: 1.10.9 > Reporter: Qian Yu > Assignee: Qian Yu > Priority: Major > Bump up future from 0.17 to 0.18.2.

[jira] [Assigned] (AIRFLOW-6834) Fix flaky test test_scheduler_job.py::TestDagFileProcessor::test_dag_file_processor_process_task_instances_depends_on_past

[ https://issues.apache.org/jira/browse/AIRFLOW-6834?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Qian Yu reassigned AIRFLOW-6834:
Assignee: Qian Yu
> Fix flaky test test_scheduler_job.py::TestDagFileProcessor::test_dag_file_processor_process_task_instances_depends_on_past
> Key: AIRFLOW-6834
> URL: https://issues.apache.org/jira/browse/AIRFLOW-6834
> Project: Apache Airflow
> Issue Type: Bug
> Components: tests
> Affects Versions: 1.10.9
> Reporter: Qian Yu
> Assignee: Qian Yu
> Priority: Major
>
> test_scheduler_job.py has a few flaky tests. Some are marked with pytest.mark.xfail, but this one is not marked as flaky. It sometimes fails in Travis. For example:
>
> {code:python}
> FAILURES
> _ TestDagFileProcessor.test_dag_file_processor_process_task_instances_depends_on_past_0 __
> a = ( testMethod=test_dag_file_processor_process_task_instances_depends_on_past_0>,)
> @wraps(func)
> def standalone_func(*a):
> > return func(*(a + p.args), **p.kwargs)
> /usr/local/lib/python3.6/site-packages/parameterized/parameterized.py:518:
> _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _
> self = testMethod=test_dag_file_processor_process_task_instances_depends_on_past_0>
> state = None, start_date = None, end_date = None
> @parameterized.expand([
> [State.NONE, None, None],
> [State.UP_FOR_RETRY, timezone.utcnow() -
> datetime.timedelta(minutes=30),
> timezone.utcnow() - datetime.timedelta(minutes=15)],
> [State.UP_FOR_RESCHEDULE, timezone.utcnow() -
> datetime.timedelta(minutes=30),
> timezone.utcnow() - datetime.timedelta(minutes=15)],
> ])
> def test_dag_file_processor_process_task_instances_depends_on_past(self,
> state, start_date, end_date):
> """
> Test if _process_task_instances puts the right task instances into the
> mock_list.
> """
> dag = DAG(
> dag_id='test_scheduler_process_execute_task_depends_on_past',
> start_date=DEFAULT_DATE,
> default_args={
> 'depends_on_past': True,
> },
> )
> dag_task1 = DummyOperator(
> task_id='dummy1',
> dag=dag,
> owner='airflow')
> dag_task2 = DummyOperator(
> task_id='dummy2',
> dag=dag,
> owner='airflow')
> with create_session() as session:
> orm_dag = DagModel(dag_id=dag.dag_id)
> session.merge(orm_dag)
> dag_file_processor = DagFileProcessor(dag_ids=[],
> log=mock.MagicMock())
> dag.clear()
> dr = dag_file_processor.create_dag_run(dag)
> self.assertIsNotNone(dr)
> with create_session() as session:
> tis = dr.get_task_instances(session=session)
> for ti in tis:
> ti.state = state
> ti.start_date = start_date
> ti.end_date = end_date
> ti_to_schedule = []
> dag_file_processor._process_task_instances(dag,
> task_instances_list=ti_to_schedule)
> > assert ti_to_schedule == [
> (dag.dag_id, dag_task1.task_id, DEFAULT_DATE, TRY_NUMBER),
> (dag.dag_id, dag_task2.task_id, DEFAULT_DATE, TRY_NUMBER),
> ]
> E AssertionError: assert
> [('test_scheduler_process_execute_task_depends_on_past',\n 'dummy2',\n
> datetime.datetime(2016, 1, 1, 0, 0, tzinfo= +00:00:00, STD]>),\n 1),\n
> ('test_scheduler_process_execute_task_depends_on_past',\n 'dummy1',\n
> datetime.datetime(2016, 1, 1, 0, 0, tzinfo= +00:00:00, STD]>),\n 1)] ==
> [('test_scheduler_process_execute_task_depends_on_past',\n 'dummy1',\n
> datetime.datetime(2016, 1, 1, 0, 0, tzinfo=),\n 1),\n
> ('test_scheduler_process_execute_task_depends_on_past',\n 'dummy2',\n
> datetime.datetime(2016, 1, 1, 0, 0, tzinfo=),\n 1)]
> E At index 0 diff:
> ('test_scheduler_process_execute_task_depends_on_past', 'dummy2',
> datetime.datetime(2016, 1, 1, 0, 0, tzinfo= +00:00:00, STD]>), 1) !=
> ('test_scheduler_process_execute_task_depends_on_past', 'dummy1',
> datetime.datetime(2016, 1, 1, 0, 0, tzinfo=), 1)
> E Full diff:
> E [
> E ('test_scheduler_process_execute_task_depends_on_past',
> E - 'dummy2',
> E ? ^
> E + 'dummy1',
> E ? ^
> E - datetime.datetime(2016, 1, 1, 0, 0, tzinfo= GMT, +00:00:00, STD]>),
>
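The failure above is an order-sensitive list comparison: both task instances are scheduled, but `dummy2` comes back before `dummy1`. The PR title says the fix sorts `TaskInstance`s; the sketch below does not reproduce that patch, it only illustrates the general idea with plain tuples shaped like the traceback's `(dag_id, task_id, execution_date, try_number)` entries. The helper `normalize` is hypothetical, and `DEFAULT_DATE` here is a stand-in built with the standard library rather than the test's own constant.

```python
import datetime
from typing import Iterable, List, Tuple

# Shape of each entry in ti_to_schedule, per the traceback.
Key = Tuple[str, str, datetime.datetime, int]

DAG_ID = "test_scheduler_process_execute_task_depends_on_past"
# Stand-in for the test's DEFAULT_DATE (2016-01-01 00:00 UTC).
DEFAULT_DATE = datetime.datetime(2016, 1, 1, tzinfo=datetime.timezone.utc)
TRY_NUMBER = 1


def normalize(entries: Iterable[Key]) -> List[Key]:
    """Sort entries so the comparison no longer depends on query order.

    Tuples compare element by element: dag_id first, then task_id, and so on.
    """
    return sorted(entries)


# Order observed in the failing Travis run: dummy2 before dummy1.
observed = [
    (DAG_ID, "dummy2", DEFAULT_DATE, TRY_NUMBER),
    (DAG_ID, "dummy1", DEFAULT_DATE, TRY_NUMBER),
]
expected = [
    (DAG_ID, "dummy1", DEFAULT_DATE, TRY_NUMBER),
    (DAG_ID, "dummy2", DEFAULT_DATE, TRY_NUMBER),
]

assert observed != expected                        # plain list comparison is order-sensitive
assert normalize(observed) == normalize(expected)  # sorted comparison is not
```

Comparing as sets (or with `collections.Counter` when duplicates matter) would also work; sorting keeps the assertion failure message readable as a list.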

[jira] [Commented] (AIRFLOW-6834) Fix flaky test test_scheduler_job.py::TestDagFileProcessor::test_dag_file_processor_process_task_instances_depends_on_past

[ https://issues.apache.org/jira/browse/AIRFLOW-6834?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17040604#comment-17040604 ] ASF GitHub Bot commented on AIRFLOW-6834:

yuqian90 commented on pull request #7470: [AIRFLOW-6834] Fix flaky test_scheduler_job by sorting TaskInstance
URL: https://github.com/apache/airflow/pull/7470

Fix a flaky test that fails because `TaskInstance` objects are returned in non-deterministic order:

```
test_scheduler_job.py::TestDagFileProcessor::test_dag_file_processor_process_task_instances_depends_on_past
```

---

Issue link: WILL BE INSERTED BY [boring-cyborg](https://github.com/kaxil/boring-cyborg)

Make sure to mark the boxes below before creating PR: [x]

- [x] Description above provides context of the change
- [x] Commit message/PR title starts with `[AIRFLOW-]`. AIRFLOW- = JIRA ID*
- [x] Unit tests coverage for changes (not needed for documentation changes)
- [x] Commits follow "[How to write a good git commit message](http://chris.beams.io/posts/git-commit/)"
- [x] Relevant documentation is updated including usage instructions.
- [x] I will engage committers as explained in [Contribution Workflow Example](https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst#contribution-workflow-example).

* For document-only changes commit message can start with `[AIRFLOW-]`.

---

In case of fundamental code change, Airflow Improvement Proposal ([AIP](https://cwiki.apache.org/confluence/display/AIRFLOW/Airflow+Improvements+Proposals)) is needed.
In case of a new dependency, check compliance with the [ASF 3rd Party License Policy](https://www.apache.org/legal/resolved.html#category-x).
In case of backwards incompatible changes please leave a note in [UPDATING.md](https://github.com/apache/airflow/blob/master/UPDATING.md).
Read the [Pull Request Guidelines](https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst#pull-request-guidelines) for more information.

> Fix flaky test test_scheduler_job.py::TestDagFileProcessor::test_dag_file_processor_process_task_instances_depends_on_past
> Key: AIRFLOW-6834
> URL: https://issues.apache.org/jira/browse/AIRFLOW-6834
> Project: Apache Airflow
> Issue Type: Bug
> Components: tests
> Affects Versions: 1.10.9
> Reporter: Qian Yu
> Priority: Major


[GitHub] [airflow] yuqian90 opened a new pull request #7470: [AIRFLOW-6834] Fix flaky test_scheduler_job by sorting TaskInstance

yuqian90 opened a new pull request #7470: [AIRFLOW-6834] Fix flaky test_scheduler_job by sorting TaskInstance URL: https://github.com/apache/airflow/pull/7470

[GitHub] [airflow] codecov-io edited a comment on issue #6870: [AIRFLOW-0578] Check return code

codecov-io edited a comment on issue #6870: [AIRFLOW-0578] Check return code URL: https://github.com/apache/airflow/pull/6870#issuecomment-569120114

# [Codecov](https://codecov.io/gh/apache/airflow/pull/6870?src=pr&el=h1) Report
> Merging [#6870](https://codecov.io/gh/apache/airflow/pull/6870?src=pr&el=desc) into [master](https://codecov.io/gh/apache/airflow/commit/3730c24c41470cd331c5109539ee2fa0c9f4e74a?src=pr&el=desc) will **increase** coverage by `0.88%`.
> The diff coverage is `100%`.

```diff
@@            Coverage Diff             @@
##           master    #6870      +/-   ##
==========================================
+ Coverage   85.52%    86.4%    +0.88%
==========================================
  Files         758      882      +124
  Lines       39932    41541     +1609
==========================================
+ Hits        34150    35892     +1742
+ Misses       5782     5649      -133
```

| [Impacted Files](https://codecov.io/gh/apache/airflow/pull/6870?src=pr&el=tree) | Coverage Δ | |
|---|---|---|
| [airflow/utils/state.py](https://codecov.io/gh/apache/airflow/pull/6870/diff?src=pr&el=tree#diff-YWlyZmxvdy91dGlscy9zdGF0ZS5weQ==) | `96.55% <100%> (+0.25%)` | :arrow_up: |
| [airflow/jobs/local\_task\_job.py](https://codecov.io/gh/apache/airflow/pull/6870/diff?src=pr&el=tree#diff-YWlyZmxvdy9qb2JzL2xvY2FsX3Rhc2tfam9iLnB5) | `90.8% <100%> (+1.06%)` | :arrow_up: |
| [airflow/jobs/base\_job.py](https://codecov.io/gh/apache/airflow/pull/6870/diff?src=pr&el=tree#diff-YWlyZmxvdy9qb2JzL2Jhc2Vfam9iLnB5) | `91.15% <100%> (-1.05%)` | :arrow_down: |
| [airflow/operators/postgres\_operator.py](https://codecov.io/gh/apache/airflow/pull/6870/diff?src=pr&el=tree#diff-YWlyZmxvdy9vcGVyYXRvcnMvcG9zdGdyZXNfb3BlcmF0b3IucHk=) | `0% <0%> (-100%)` | :arrow_down: |
| [airflow/operators/mysql\_to\_hive.py](https://codecov.io/gh/apache/airflow/pull/6870/diff?src=pr&el=tree#diff-YWlyZmxvdy9vcGVyYXRvcnMvbXlzcWxfdG9faGl2ZS5weQ==) | `0% <0%> (-100%)` | :arrow_down: |
| [...rflow/providers/apache/cassandra/sensors/record.py](https://codecov.io/gh/apache/airflow/pull/6870/diff?src=pr&el=tree#diff-YWlyZmxvdy9wcm92aWRlcnMvYXBhY2hlL2Nhc3NhbmRyYS9zZW5zb3JzL3JlY29yZC5weQ==) | `0% <0%> (-100%)` | :arrow_down: |
| [...irflow/providers/apache/cassandra/sensors/table.py](https://codecov.io/gh/apache/airflow/pull/6870/diff?src=pr&el=tree#diff-YWlyZmxvdy9wcm92aWRlcnMvYXBhY2hlL2Nhc3NhbmRyYS9zZW5zb3JzL3RhYmxlLnB5) | `0% <0%> (-100%)` | :arrow_down: |
| [airflow/contrib/operators/snowflake\_operator.py](https://codecov.io/gh/apache/airflow/pull/6870/diff?src=pr&el=tree#diff-YWlyZmxvdy9jb250cmliL29wZXJhdG9ycy9zbm93Zmxha2Vfb3BlcmF0b3IucHk=) | `0% <0%> (-95.84%)` | :arrow_down: |
| [airflow/operators/s3\_to\_hive\_operator.py](https://codecov.io/gh/apache/airflow/pull/6870/diff?src=pr&el=tree#diff-YWlyZmxvdy9vcGVyYXRvcnMvczNfdG9faGl2ZV9vcGVyYXRvci5weQ==) | `0% <0%> (-93.97%)` | :arrow_down: |
| [airflow/contrib/hooks/grpc\_hook.py](https://codecov.io/gh/apache/airflow/pull/6870/diff?src=pr&el=tree#diff-YWlyZmxvdy9jb250cmliL2hvb2tzL2dycGNfaG9vay5weQ==) | `0% <0%> (-91.94%)` | :arrow_down: |
| ... and [979 more](https://codecov.io/gh/apache/airflow/pull/6870/diff?src=pr&el=tree-more) | | |

------

[Continue to review full report at Codecov](https://codecov.io/gh/apache/airflow/pull/6870?src=pr&el=continue).
> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)
> `Δ = absolute (impact)`, `ø = not affected`, `? = missing data`
> Powered by [Codecov](https://codecov.io/gh/apache/airflow/pull/6870?src=pr&el=footer). Last update [3730c24...6ea63bd](https://codecov.io/gh/apache/airflow/pull/6870?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).
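The headline numbers in the Codecov report follow from the Hits and Lines rows. Coverage is hits divided by lines, and the reported change is the difference between the two percentages. Misses are lines minus hits. Below is a quick arithmetic check; the helper name is illustrative only.

```python
def coverage_percent(hits: int, lines: int) -> float:
    """Line coverage as a percentage, rounded to two decimals like the report."""
    return round(100 * hits / lines, 2)


base = coverage_percent(34150, 39932)  # master
head = coverage_percent(35892, 41541)  # PR #6870
print(base, head, round(head - base, 2))  # 85.52 86.4 0.88
print(39932 - 34150, 41541 - 35892)       # 5782 5649 (the Misses row)
```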

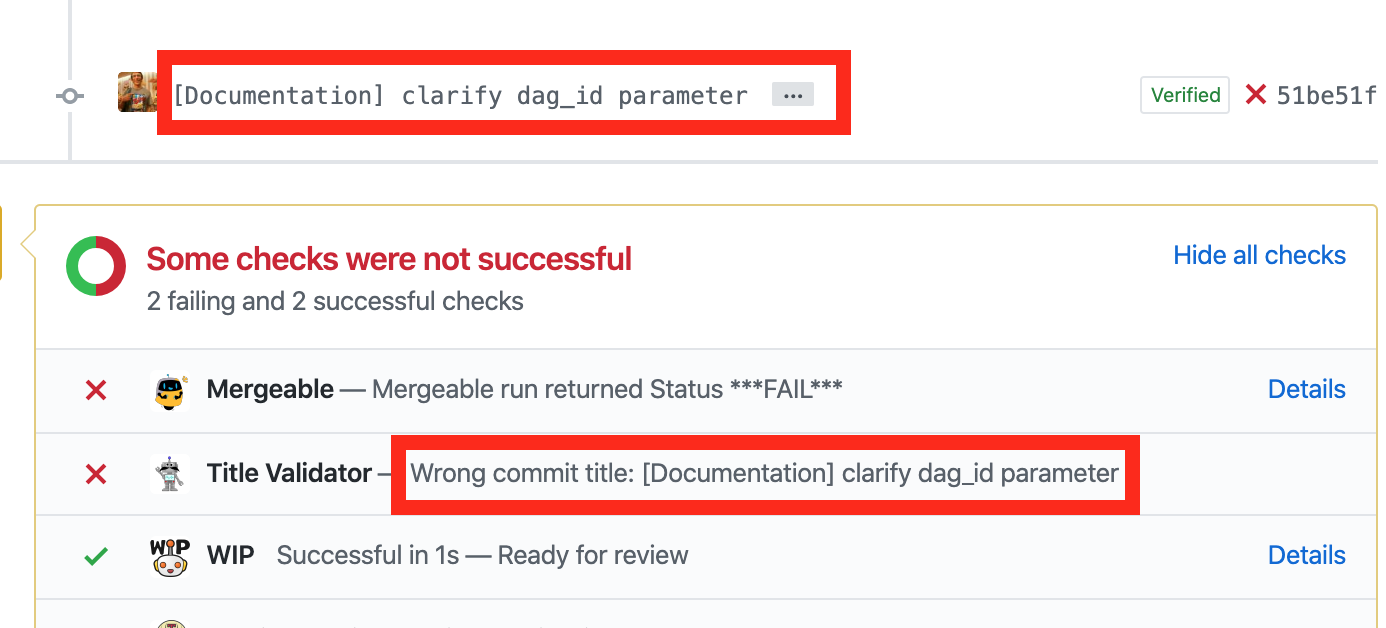

[GitHub] [airflow] zhongjiajie commented on issue #7463: [AIRFLOW-XXXX] clarify dag_id parameter

zhongjiajie commented on issue #7463: [AIRFLOW-XXXX] clarify dag_id parameter URL: https://github.com/apache/airflow/pull/7463#issuecomment-588573087 @MichaelChirico Not only your PR title, but also your git commit message needs to be updated. You could change it and then force push to restart the validator.

[GitHub] [airflow] mik-laj edited a comment on issue #5177: [AIRFLOW-4084] Fix bug downloading incomplete logs from ElasticSearch

mik-laj edited a comment on issue #5177: [AIRFLOW-4084] Fix bug downloading incomplete logs from ElasticSearch URL: https://github.com/apache/airflow/pull/5177#issuecomment-557788417

I wonder why this change had to modify the ``views.py`` file. Why were the task handlers not updated instead? In my opinion, we should check for the ``download_logs`` key in the handler implementation and disable the pagination mechanism there. As it stands, the logic is leaking out of the abstraction, and we may be breaking other handlers that expected different behavior.

I am working on documentation for this class, and if we revert this change we will be able to document it as follows. In my opinion this is the original behavior of this code.

```python
import logging
from abc import ABC, abstractmethod
from typing import Dict, List, Optional, Tuple

from airflow.models import TaskInstance


class TaskHandler(logging.Handler, ABC):
    """
    Handler that allows you to write and read information about a specific task.
    """

    @abstractmethod
    def read(
        self,
        task_instance: TaskInstance,
        try_number: Optional[int] = None,
        metadata: Optional[Dict] = None
    ) -> Tuple[List[str], List[Dict]]:
        """
        Read logs of given task instance.

        It supports log pagination. To do this, the first call to this function contains an
        empty metadata object. As a result, a list of logs and a list of metadata should be
        returned. The resulting metadata should contain the key ``end_of_logs``, which
        determines whether pagination should be continued. It is possible to return more
        metadata objects, but only the first is used, so you should always return a list
        with one item. The remaining keys in the dictionary are sent back to the method
        without changes, which means that if you add an additional key with a token or page
        number, you can expect that the key will be available in the next request for logs.

        If the metadata in the call contains the ``download_logs`` key, then full logs
        should be returned without pagination.

        :param task_instance: task instance object
        :param try_number: task instance try_number to read logs from.
            If None it returns all logs separated by try_number
        :param metadata: log metadata, can be used for streaming log reading and auto-tailing.
        :return: a list of logs and list of metadata objects.
        """
        ...

    @abstractmethod
    def set_context(self, task_instance: TaskInstance) -> None:
        """
        Provide task_instance context to airflow task handler.

        Different implementations provide different behavior. Examples of behavior are:

        * in the case of handlers writing to a file, it may start writing to another file;
        * for remote services, it can start adding labels to logs. This allows us to later
          search for logs for a single task

        :param task_instance: task instance object
        """
        ...
```

I would also like to point out that this PR duplicates the handling of the case when try_number is empty, i.e. when the logs for all try numbers are downloaded. Previously this was part of the file_task_handler, and now it has been copied to a second place, even though es_task_handler extends that handler.

https://github.com/apache/airflow/blob/master/airflow/utils/log/file_task_handler.py#L155-L164
https://github.com/apache/airflow/blob/master/airflow/utils/log/es_task_handler.py#L36
https://github.com/apache/airflow/blob/master/airflow/www/views.py#L595-L599
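The pagination contract in the ``read`` docstring can be illustrated with a small, self-contained sketch. It does not import Airflow. To keep it short, it drops the `task_instance` argument and requires an explicit try number. `InMemoryTaskLogReader`, `page_size` and the `offset` metadata key are hypothetical names chosen for illustration; only `end_of_logs` and `download_logs` come from the docstring.

```python
from typing import Dict, List, Optional, Tuple


class InMemoryTaskLogReader:
    """Hypothetical reader that follows the read() pagination contract.

    Logs are kept in memory as a list of lines per try number instead of
    being fetched from a file or a remote service.
    """

    def __init__(self, logs: Dict[int, List[str]], page_size: int = 2) -> None:
        self.logs = logs  # try_number -> list of log lines
        self.page_size = page_size

    def read(
        self,
        try_number: int,
        metadata: Optional[Dict] = None,
    ) -> Tuple[List[str], List[Dict]]:
        metadata = dict(metadata or {})  # copy so the caller's dict is not mutated
        lines = self.logs.get(try_number, [])

        if metadata.get("download_logs"):
            # Full download requested: return everything, no pagination.
            return ["\n".join(lines)], [{"end_of_logs": True}]

        # "offset" is an extra key that the caller sends back unchanged.
        offset = metadata.get("offset", 0)
        page = lines[offset:offset + self.page_size]
        new_offset = offset + len(page)
        metadata["offset"] = new_offset
        metadata["end_of_logs"] = new_offset >= len(lines)
        # Always return a list with exactly one metadata object.
        return ["\n".join(page)], [metadata]


reader = InMemoryTaskLogReader({1: ["a", "b", "c"]}, page_size=2)
chunks, meta = reader.read(1)           # first call: empty metadata
# chunks == ["a\nb"], meta[0]["end_of_logs"] is False
chunks, meta = reader.read(1, meta[0])  # pass the metadata back unchanged
# chunks == ["c"], meta[0]["end_of_logs"] is True
```

With this shape, a full download is handled inside the handler itself rather than in the view. That is the placement argued for in the comment above.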

[GitHub] [airflow] digger commented on issue #6371: [AIRFLOW-5691] Rewrite Dataproc operators to use python library

digger commented on issue #6371: [AIRFLOW-5691] Rewrite Dataproc operators to use python library URL: https://github.com/apache/airflow/pull/6371#issuecomment-588548518 @dossett, I don't know if that was intentional. I just shared my feedback on changes made in AIRFLOW-3211. In the company I work for we use Airflow and we had to patch Airflow 1.10.7 and 1.10.9, reverting changes from AIRFLOW-3211, in order to make the dataproc functionality work.

[airflow-site] branch asf-site updated: Update - Thu Feb 20 00:19:54 UTC 2020

This is an automated email from the ASF dual-hosted git repository.

kamilbregula pushed a commit to branch asf-site
in repository https://gitbox.apache.org/repos/asf/airflow-site.git

The following commit(s) were added to refs/heads/asf-site by this push:
new 3ffa600 Update - Thu Feb 20 00:19:54 UTC 2020
3ffa600 is described below

commit 3ffa6004f0a4a9b1e22574263453a40aef2be76c
Author: Kamil Bregula
AuthorDate: Thu Feb 20 00:19:55 2020 +

Update - Thu Feb 20 00:19:54 UTC 2020
---
404.html | 4 +-
blog/airflow-survey/index.html | 4 +-
blog/announcing-new-website/index.html | 4 +-
.../index.html | 4 +-
.../index.html | 4 +-
.../index.html | 4 +-
blog/index.html | 4 +-
.../index.html | 4 +-
blog/tags/community/index.html | 4 +-
blog/tags/development/index.html | 4 +-
blog/tags/documentation/index.html | 4 +-
blog/tags/survey/index.html | 4 +-
blog/tags/users/index.html | 4 +-
categories/index.html | 4 +-
community/index.html | 4 +-
index.html | 32 ++---
install/index.html | 4 +-
integration-logos/apache/cassandra-3.png | Bin 0 -> 87960 bytes
integration-logos/apache/druid-1.png | Bin 0 -> 28442 bytes
integration-logos/apache/hadoop.png | Bin 0 -> 52103 bytes
integration-logos/apache/hive.png | Bin 0 -> 141920 bytes
integration-logos/apache/pig.png | Bin 0 -> 112286 bytes
integration-logos/apache/pinot.png | Bin 0 -> 26792 bytes
integration-logos/apache/spark.png | Bin 0 -> 66895 bytes
integration-logos/apache/sqoop.png | Bin 0 -> 42874 bytes
integration-logos/aws/aws-batch_light...@4x.png | Bin 0 -> 7274 bytes
integration-logos/aws/aws-glue_light...@4x.png | Bin 0 -> 4392 bytes
integration-logos/aws/aws-lambda_light...@4x.png | Bin 0 -> 4262 bytes
.../aws/amazon-athena_light...@4x.png | Bin 0 -> 10215 bytes
.../aws/amazon-cloudwatch_light...@4x.png | Bin 0 -> 7248 bytes
.../aws/amazon-dynamodb_light...@4x.png | Bin 0 -> 7570 bytes
integration-logos/aws/amazon-ec2_light...@4x.png | Bin 0 -> 2134 bytes
integration-logos/aws/amazon-emr_light...@4x.png | Bin 0 -> 9456 bytes
.../amazon-kinesis-data-firehose_light...@4x.png | Bin 0 -> 5259 bytes
.../aws/amazon-redshift_light...@4x.png | Bin 0 -> 6391 bytes
.../aws/amazon-sagemaker_light...@4x.png | Bin 0 -> 7702 bytes
...simple-notification-service-sns_light...@4x.png | Bin 0 -> 8103 bytes
...amazon-simple-queue-service-sqs_light...@4x.png | Bin 0 -> 8759 bytes
...mazon-simple-storage-service-s3_light...@4x.png | Bin 0 -> 7252 bytes
integration-logos/azure-logo.svg | 24 --
integration-logos/azure/Azure Cosmos DB.svg | 11 +
integration-logos/azure/Azure Files.svg | 8
integration-logos/azure/Blob Storage.svg | 9
integration-logos/azure/Container Instances.svg | 9
integration-logos/azure/Data Lake Storage.svg | 37 +++
integration-logos/gcp/AI-Platform.png | Bin 0 -> 6284 bytes
integration-logos/gcp/BigQuery.png | Bin 0 -> 6210 bytes
integration-logos/gcp/Cloud-AutoML.png | Bin 0 -> 6100 bytes
integration-logos/gcp/Cloud-Bigtable.png | Bin 0 -> 8346 bytes
integration-logos/gcp/Cloud-Build.png | Bin 0 -> 7075 bytes
integration-logos/gcp/Cloud-Dataflow.png | Bin 0 -> 7332 bytes
integration-logos/gcp/Cloud-Dataproc.png | Bin 0 -> 7257 bytes
integration-logos/gcp/Cloud-Datastore.png | Bin 0 -> 4355 bytes
integration-logos/gcp/Cloud-Functions.png | Bin 0 -> 5004 bytes
integration-logos/gcp/Cloud-Memorystore.png | Bin 0 -> 4184 bytes
integration-logos/gcp/Cloud-NLP.png | Bin 0 -> 3824 bytes
integration-logos/gcp/Cloud-PubSub.png | Bin 0 -> 6756 bytes
integration-logos/gcp/Cloud-SQL.png | Bin 0 -> 6704 bytes
integration-logos/gcp/Cloud-Spanner.png | Bin 0 -> 5940 bytes
integration-logos/gcp/Cloud-Speech-to-Text.png | Bin 0 -> 3830 bytes
integration-logos/gcp/Cloud-Storage.png | Bin 0 -> 4189 bytes
integration-logos/gcp/Cloud-Tasks.png | Bin 0 -> 17170 bytes
integration-logos/gcp/Cloud-Text-to-Speech.png | Bin 0 -> 5030 bytes
integration-logos/gcp/Cloud-Translation-API.png | Bin 0 -> 6645 bytes
.../g

[GitHub] [airflow-site] mik-laj merged pull request #245: Add logos to integrations list

mik-laj merged pull request #245: Add logos to integrations list URL: https://github.com/apache/airflow-site/pull/245

[airflow-site] branch master updated: Add logos to integrations list (#245)

This is an automated email from the ASF dual-hosted git repository.

kamilbregula pushed a commit to branch master
in repository https://gitbox.apache.org/repos/asf/airflow-site.git

The following commit(s) were added to refs/heads/master by this push:
new 8495412 Add logos to integrations list (#245)
8495412 is described below

commit 8495412957c20862448eae671064125d8f887a4e
Author: Louis Guitton
AuthorDate: Thu Feb 20 01:10:02 2020 +0100

Add logos to integrations list (#245)
---
landing-pages/site/assets/scss/_list-boxes.scss | 2 +
.../integration-logos/apache/cassandra-3.png | Bin 0 -> 87960 bytes
.../static/integration-logos/apache/druid-1.png | Bin 0 -> 28442 bytes
.../static/integration-logos/apache/hadoop.png | Bin 0 -> 52103 bytes
.../site/static/integration-logos/apache/hive.png | Bin 0 -> 141920 bytes
.../site/static/integration-logos/apache/pig.png | Bin 0 -> 112286 bytes
.../site/static/integration-logos/apache/pinot.png | Bin 0 -> 26792 bytes
.../site/static/integration-logos/apache/spark.png | Bin 0 -> 66895 bytes
.../site/static/integration-logos/apache/sqoop.png | Bin 0 -> 42874 bytes
.../aws/aws-batch_light...@4x.png | Bin 0 -> 7274 bytes
.../integration-logos/aws/aws-glue_light...@4x.png | Bin 0 -> 4392 bytes
.../aws/aws-lambda_light...@4x.png | Bin 0 -> 4262 bytes
.../aws/amazon-athena_light...@4x.png | Bin 0 -> 10215 bytes
.../aws/amazon-cloudwatch_light...@4x.png | Bin 0 -> 7248 bytes
.../aws/amazon-dynamodb_light...@4x.png | Bin 0 -> 7570 bytes
.../aws/amazon-ec2_light...@4x.png | Bin 0 -> 2134 bytes
.../aws/amazon-emr_light...@4x.png | Bin 0 -> 9456 bytes
.../amazon-kinesis-data-firehose_light...@4x.png | Bin 0 -> 5259 bytes
.../aws/amazon-redshift_light...@4x.png | Bin 0 -> 6391 bytes
.../aws/amazon-sagemaker_light...@4x.png | Bin 0 -> 7702 bytes
...simple-notification-service-sns_light...@4x.png | Bin 0 -> 8103 bytes
...amazon-simple-queue-service-sqs_light...@4x.png | Bin 0 -> 8759 bytes
...mazon-simple-storage-service-s3_light...@4x.png | Bin 0 -> 7252 bytes
.../site/static/integration-logos/azure-logo.svg | 24 --
.../integration-logos/azure/Azure Cosmos DB.svg | 11 +
.../static/integration-logos/azure/Azure Files.svg | 8
.../integration-logos/azure/Blob Storage.svg | 9
.../azure/Container Instances.svg | 9
.../integration-logos/azure/Data Lake Storage.svg | 37 +++
.../static/integration-logos/gcp/AI-Platform.png | Bin 0 -> 6284 bytes
.../site/static/integration-logos/gcp/BigQuery.png | Bin 0 -> 6210 bytes
.../static/integration-logos/gcp/Cloud-AutoML.png | Bin 0 -> 6100 bytes
.../integration-logos/gcp/Cloud-Bigtable.png | Bin 0 -> 8346 bytes
.../static/integration-logos/gcp/Cloud-Build.png | Bin 0 -> 7075 bytes
.../integration-logos/gcp/Cloud-Dataflow.png | Bin 0 -> 7332 bytes
.../integration-logos/gcp/Cloud-Dataproc.png | Bin 0 -> 7257 bytes
.../integration-logos/gcp/Cloud-Datastore.png | Bin 0 -> 4355 bytes
.../integration-logos/gcp/Cloud-Functions.png | Bin 0 -> 5004 bytes
.../integration-logos/gcp/Cloud-Memorystore.png | Bin 0 -> 4184 bytes
.../static/integration-logos/gcp/Cloud-NLP.png | Bin 0 -> 3824 bytes
.../static/integration-logos/gcp/Cloud-PubSub.png | Bin 0 -> 6756 bytes
.../static/integration-logos/gcp/Cloud-SQL.png | Bin 0 -> 6704 bytes
.../static/integration-logos/gcp/Cloud-Spanner.png | Bin 0 -> 5940 bytes
.../integration-logos/gcp/Cloud-Speech-to-Text.png | Bin 0 -> 3830 bytes
.../static/integration-logos/gcp/Cloud-Storage.png | Bin 0 -> 4189 bytes
.../static/integration-logos/gcp/Cloud-Tasks.png | Bin 0 -> 17170 bytes
.../integration-logos/gcp/Cloud-Text-to-Speech.png | Bin 0 -> 5030 bytes
.../gcp/Cloud-Translation-API.png | Bin 0 -> 6645 bytes
.../gcp/Cloud-Video-Intelligence-API.png | Bin 0 -> 5810 bytes
.../integration-logos/gcp/Cloud-Vision-API.png | Bin 0 -> 5822 bytes
.../integration-logos/gcp/Compute-Engine.png | Bin 0 -> 4817 bytes
.../gcp/Key-Management-Service.png | Bin 0 -> 7056 bytes
.../integration-logos/gcp/Kubernetes-Engine.png | Bin 0 -> 6967 bytes
landing-pages/site/static/integrations.json | 52 +
54 files changed, 128 insertions(+), 24 deletions(-)

diff --git a/landing-pages/site/assets/scss/_list-boxes.scss b/landing-pages/site/assets/scss/_list-boxes.scss
index 30709b3..c65afab 100644
--- a/landing-pages/site/assets/scss/_list-boxes.scss
+++ b/landing-pages/site/assets/scss/_list-boxes.scss
@@ -192,6 +192,8 @@ $card-margin: 20px;
margin: auto 0;
filter: grayscale(1);
opacity: 0.6;
+ max-width: 100%;
+ max-height: 100%;
}

&--name {

diff --git a/landing-pages/site/static/integration-logos/

[GitHub] [airflow-site] mik-laj commented on issue #245: Add logos to integrations list

mik-laj commented on issue #245: Add logos to integrations list URL: https://github.com/apache/airflow-site/pull/245#issuecomment-588540722 @kaxil This person is no longer working on Airflow, but I have a response from the design team: > Cool, only those gray squares could be reduced a bit (while still staying centered), because right now they are a bit close to the text. It's "cool", so we can merge it.

[GitHub] [airflow-site] mik-laj commented on issue #246: Expand the guide for contributors

mik-laj commented on issue #246: Expand the guide for contributors URL: https://github.com/apache/airflow-site/pull/246#issuecomment-588539848 Thanks @kaxil

[GitHub] [airflow] mik-laj commented on a change in pull request #7163: [AIRFLOW-6542] add spark-on-k8s operator/hook/sensor

mik-laj commented on a change in pull request #7163: [AIRFLOW-6542] add spark-on-k8s operator/hook/sensor URL: https://github.com/apache/airflow/pull/7163#discussion_r381607848

## File path: tests/test_project_structure.py ##
@@ -36,6 +36,9 @@
    'tests/providers/apache/pig/operators/test_pig.py',
    'tests/providers/apache/spark/hooks/test_spark_jdbc_script.py',
    'tests/providers/cncf/kubernetes/operators/test_kubernetes_pod.py',
+    'tests/providers/cncf/kubernetes/operators/test_spark_kubernetes_operator.py',
+    'tests/providers/cncf/kubernetes/hooks/test_kubernetes_hook.py',
+    'tests/providers/cncf/kubernetes/sensors/test_spark_kubernetes_sensor.py',

Review comment: Why is this not possible? I think that shouldn't be a problem.
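The list in `tests/test_project_structure.py` appears to act as an allowlist of test files that are expected but still missing. Adding a path to it silences the check, which is why the reviewer asks why the tests cannot be written. Below is a minimal sketch of that kind of check. It assumes a `test_<module>.py` naming convention and uses hypothetical helper names (`expected_test_path`, `find_missing_tests`). It is not the actual Airflow implementation.

```python
from pathlib import PurePosixPath
from typing import Iterable, List, Set


def expected_test_path(source_path: str) -> str:
    """Map airflow/x/y/module.py to tests/x/y/test_module.py (assumed convention)."""
    path = PurePosixPath(source_path)
    parts = list(path.parts)
    parts[0] = "tests"  # replace the top-level "airflow" package with "tests"
    parts[-1] = f"test_{path.name}"
    return str(PurePosixPath(*parts))


def find_missing_tests(
    source_files: Iterable[str],
    existing_tests: Set[str],
    allowed_missing: Set[str],
) -> List[str]:
    """Return expected test files that neither exist nor are on the allowlist."""
    missing = []
    for source in source_files:
        test_path = expected_test_path(source)
        if test_path not in existing_tests and test_path not in allowed_missing:
            missing.append(test_path)
    return missing


sources = ["airflow/providers/cncf/kubernetes/hooks/kubernetes_hook.py"]
print(find_missing_tests(sources, existing_tests=set(), allowed_missing=set()))
# ['tests/providers/cncf/kubernetes/hooks/test_kubernetes_hook.py']
```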

[GitHub] [airflow] dossett commented on issue #6371: [AIRFLOW-5691] Rewrite Dataproc operators to use python library

dossett commented on issue #6371: [AIRFLOW-5691] Rewrite Dataproc operators to use python library URL: https://github.com/apache/airflow/pull/6371#issuecomment-588523405 @digger I take your points about the original change. Do you know if reverting that functionality was an intentional part of this PR?

[GitHub] [airflow-site] kaxil commented on issue #245: Add logos to integrations list

kaxil commented on issue #245: Add logos to integrations list URL: https://github.com/apache/airflow-site/pull/245#issuecomment-588512075 Awesome work @louisguitton 🎉 @kgabryje Any comments?

[airflow-site] branch master updated: Expand the guide for contributors (#246)

This is an automated email from the ASF dual-hosted git repository.

kaxilnaik pushed a commit to branch master
in repository https://gitbox.apache.org/repos/asf/airflow-site.git

The following commit(s) were added to refs/heads/master by this push:
new ed0cf1a Expand the guide for contributors (#246)
ed0cf1a is described below

commit ed0cf1a0c0432e7f3f72c35080fc8953e042402d
Author: Kamil Breguła
AuthorDate: Wed Feb 19 23:41:14 2020 +0100

Expand the guide for contributors (#246)
---
CONTRIBUTE.md | 559 ++
site.sh | 2 +-
2 files changed, 452 insertions(+), 109 deletions(-)

diff --git a/CONTRIBUTE.md b/CONTRIBUTE.md
index ea6f089..5a0d671 100644
--- a/CONTRIBUTE.md
+++ b/CONTRIBUTE.md
@@ -17,9 +17,10 @@ under the License.
-->

-## General directory structure
+Contributor Guide
+=

-```bash
+```
.
├── dist
├── docs-archive
@@ -54,49 +55,120 @@
└── sphinx_airflow_theme
```

-## Working with the project
+# Working with the project
+
+Work with the site and documentation requires that your computer be properly prepared. Most tasks can
+be done by the site.sh script
+
+### Prerequisite Tasks
+
+The following applications must be installed to use the project:
+
+* git
+* docker
+
+It is also worth adding SSH keys for the `github.com` server to trusted ones. It is necessary to clone repositories. You can do this using following command:
+```bash
+ssh-keyscan -t rsa -H github.com >> ~/.ssh/known_hosts
+```
+
+**Debian instalation**
+
+To install git on Debian, run the following command:
+```bash
+sudo apt install git -y
+```
+
+To install docker, run the following command:
+```bash
+curl -fsSL https://get.docker.com -o get-docker.sh && sh get-docker.sh
+sudo usermod -aG docker $USER
+```
+
+Git must have commit author information configured, run these commands
+```bash
+git config --global user.email ''
+git config --global user.name ''
+```
+
+### Static checks
+
+The project uses many static checks using fantastic [pre-commit](https://pre-commit.com/). Every change is checked on CI and if it does not pass the tests it cannot be accepted. If you want to check locally then you should install Python3.6 or newer together with pip and run following command to install pre-commit:
+
+```bash
+pip install -r requirements.txt
+```
+
+To turn on pre-commit checks for commit operations in git, enter:
+```bash
+pre-commit install
+```
+
+To run all checks on your staged files, enter:
+```bash
+pre-commit run
+```
+
+To run all checks on all files, enter:
+```bash
+pre-commit run --all-files
+```
+
+Pre-commit check results are also attached to your PR through integration with Travis CI.
+
+### Clone repository
+
+To clone repository from github.com to local disk, run following command
+
+```bash
+git clone g...@github.com:apache/airflow-site.git
+git submodule update --init --recursive
+```
+
+### Use `site.sh` script

In order to run an environment for the project, make sure that you have Docker installed. Then, use the `site.sh` script to work with the website in a Docker container. `site.sh` provides the following commands.

-build-site Prepare dist directory with landing pages and documentation
-preview-site Starts the web server with preview of the website
-build-landing-pages Builds a landing pages
-prepare-theme Prepares and copies files needed for the proper functioning of the sphinx theme.
-shell Start shell
-build-image Build a Docker image with a environment
-install-node-deps Download all the Node dependencies
-check-site-links Checks if the links are correct in the website
-lint-css Lint CSS files
-lint-js Lint Javascript files
-cleanup Delete the virtual environment in Docker
-stop Stop the environment
-help Display usage
+build-site Prepare dist directory with landing pages and documentation
+preview-landing-pages Starts the web server with preview of the website
+build-landing-pages Builds a landing pages
+prepare-theme Prepares and copies files needed for the proper functioning of the sphinx theme.
+shell Start shell
+build-image Build a Docker image with a environment
+install-node-deps Download all the Node dependencies
+check-site-links Checks if the links are correct in the website
+lint-css Lint CSS files
+lint-js Lint Javascript files
+cleanup Delete the virtual environment in Docker
+stop Stop the environment
+help Display usage

### How to add a new blogpost

To add a new blogpost with pre-filled frontmatter, in `/landing-pages/site` run:
-
-hugo new blog/my-new-blogpost

[GitHub] [airflow-site] kaxil merged pull request #246: Expand the guide for contributors

kaxil merged pull request #246: Expand the guide for contributors URL: https://github.com/apache/airflow-site/pull/246

[GitHub] [airflow] roitvt commented on a change in pull request #7163: [AIRFLOW-6542] add spark-on-k8s operator/hook/sensor

roitvt commented on a change in pull request #7163: [AIRFLOW-6542] add spark-on-k8s operator/hook/sensor
URL: https://github.com/apache/airflow/pull/7163#discussion_r381585525

## File path: airflow/providers/cncf/kubernetes/operators/spark_kubernetes_operator.py ##

@@ -0,0 +1,83 @@
+#
+# Licensed to the Apache Software Foundation (ASF) under one
+# or more contributor license agreements. See the NOTICE file
+# distributed with this work for additional information
+# regarding copyright ownership. The ASF licenses this file
+# to you under the Apache License, Version 2.0 (the
+# "License"); you may not use this file except in compliance
+# with the License. You may obtain a copy of the License at
+#
+# http://www.apache.org/licenses/LICENSE-2.0
+#
+# Unless required by applicable law or agreed to in writing,
+# software distributed under the License is distributed on an
+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
+# KIND, either express or implied. See the License for the
+# specific language governing permissions and limitations
+# under the License.
+from typing import Optional
+
+import yaml
+from kubernetes import client
+
+from airflow.exceptions import AirflowException
+from airflow.models import BaseOperator
+from airflow.providers.cncf.kubernetes.hooks.kubernetes_hook import Kuberneteshook
+from airflow.utils.decorators import apply_defaults
+
+
+class SparkKubernetesOperator(BaseOperator):
+    """
+    Creates sparkApplication object in kubernetes cluster:
+    .. seealso::
+        For more detail about Spark Application Object have a look at the reference:
+        https://github.com/GoogleCloudPlatform/spark-on-k8s-operator/blob/master/docs/api-docs.md#sparkapplication
+
+    :param sparkapplication_file: filepath to kubernetes custom_resource_definition of sparkApplication
+    :type sparkapplication_file: str
+    :param namespace: kubernetes namespace to put sparkApplication
+    :type namespace: str
+    :param conn_id: the connection to Kubernetes cluster
+    :type conn_id: str
+    """
+
+    template_fields = ['sparkapplication_file', 'namespace']
+    template_ext = ('yaml', 'yml', 'json')
+    ui_color = '#f4a460'
+
+    @apply_defaults
+    def __init__(self,
+                 sparkapplication_file: str,
+                 namespace: Optional[str] = None,
+                 conn_id: str = 'kubernetes_default',
+                 *args, **kwargs) -> None:
+        super().__init__(*args, **kwargs)
+        self.sparkapplication_file = sparkapplication_file
+        self.namespace = namespace
+        self.conn_id = conn_id
+
+    def execute(self, context):
+        self.log.info("Creating sparkApplication")
+        hook = Kuberneteshook(conn_id=self.conn_id)
+        api_client = hook.get_conn()
+        api = client.CustomObjectsApi(api_client)

Review comment:
But then I'll need to write code in the hook for every Kubernetes API, instead of just returning a general API connection and letting the operator decide which API kind it opens.
What do you think is right?
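Suppose the hook returns only a generic API client. The operator then becomes responsible for turning the sparkApplication manifest into arguments for the Kubernetes Python client's `CustomObjectsApi.create_namespaced_custom_object(group, version, namespace, plural, body)`. The helper below is a hypothetical, dependency-free sketch of that step. It makes several assumptions:

- the manifest has already been parsed into a dict;
- the custom resource's plural is `sparkapplications`;
- the example `apiVersion` value is illustrative only.

It does not contact a cluster.

```python
from typing import Any, Dict, Optional


def custom_object_request(
    manifest: Dict[str, Any],
    namespace: Optional[str] = None,
    plural: str = "sparkapplications",  # assumed plural of the SparkApplication CRD
) -> Dict[str, Any]:
    """Build keyword arguments for CustomObjectsApi.create_namespaced_custom_object.

    Hypothetical helper: splits apiVersion ("group/version") and picks the
    namespace from the argument, then the manifest metadata, then "default".
    """
    group, _, version = manifest["apiVersion"].partition("/")
    if not version:
        raise ValueError("custom resources need an apiVersion of the form group/version")
    resolved_namespace = (
        namespace
        or manifest.get("metadata", {}).get("namespace")
        or "default"
    )
    return {
        "group": group,
        "version": version,
        "namespace": resolved_namespace,
        "plural": plural,
        "body": manifest,
    }


# Example manifest (values are illustrative only).
manifest = {
    "apiVersion": "sparkoperator.k8s.io/v1beta2",
    "kind": "SparkApplication",
    "metadata": {"name": "spark-pi"},
}
kwargs = custom_object_request(manifest, namespace="spark-jobs")
# With a real client, the operator would then call:
# client.CustomObjectsApi(api_client).create_namespaced_custom_object(**kwargs)
```

Keeping this mapping in the operator lets the hook stay generic. The alternative raised in the thread is to add such methods to the hook for each API instead.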


[GitHub] [airflow] mik-laj commented on issue #7458: [AIRFLOW-6838][WIP] Introduce real subcommands for Breeze

mik-laj commented on issue #7458: [AIRFLOW-6838][WIP] Introduce real subcommands for Breeze URL: https://github.com/apache/airflow/pull/7458#issuecomment-588507636 > they're still there. pre-commit got stuck when asking to rebuild the image. I pushed the changes now. > Not necessary. that's just splitting help - nothing else. One parser should be enough. Again - happy to add it. I invite you to contribute.

[GitHub] [airflow] roitvt commented on a change in pull request #7163: [AIRFLOW-6542] add spark-on-k8s operator/hook/sensor

roitvt commented on a change in pull request #7163: [AIRFLOW-6542] add spark-on-k8s operator/hook/sensor URL: https://github.com/apache/airflow/pull/7163#discussion_r381583482

## File path: tests/test_project_structure.py ##
@@ -36,6 +36,9 @@
    'tests/providers/apache/pig/operators/test_pig.py',
    'tests/providers/apache/spark/hooks/test_spark_jdbc_script.py',
    'tests/providers/cncf/kubernetes/operators/test_kubernetes_pod.py',
+    'tests/providers/cncf/kubernetes/operators/test_spark_kubernetes_operator.py',
+    'tests/providers/cncf/kubernetes/hooks/test_kubernetes_hook.py',
+    'tests/providers/cncf/kubernetes/sensors/test_spark_kubernetes_sensor.py',

Review comment: I know :( Unfortunately it's still not possible to mock the Kubernetes API in Python for unit testing, but I'll try to write tests for what I can.
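For comparison with this exchange, here is a minimal sketch of how an object exposing the Kubernetes client's `create_namespaced_custom_object` method could be replaced in a unit test with the standard library's `unittest.mock`, so that no cluster is needed. `submit_spark_application` is a hypothetical function standing in for the operator's `execute` logic. Only the method name comes from the Kubernetes Python client.

```python
from typing import Any, Dict
from unittest import mock


def submit_spark_application(api: Any, manifest: Dict[str, Any], namespace: str) -> Any:
    """Hypothetical stand-in for the operator logic: create the custom object."""
    group, version = manifest["apiVersion"].split("/", 1)
    return api.create_namespaced_custom_object(
        group=group,
        version=version,
        namespace=namespace,
        plural="sparkapplications",
        body=manifest,
    )


def test_submit_spark_application() -> None:
    # A MagicMock accepts any method call and records it, so no cluster is needed.
    api = mock.MagicMock()
    api.create_namespaced_custom_object.return_value = {"metadata": {"name": "spark-pi"}}
    manifest = {"apiVersion": "sparkoperator.k8s.io/v1beta2", "kind": "SparkApplication"}

    result = submit_spark_application(api, manifest, namespace="default")

    # Verify the exact arguments the code under test passed to the API object.
    api.create_namespaced_custom_object.assert_called_once_with(
        group="sparkoperator.k8s.io",
        version="v1beta2",
        namespace="default",
        plural="sparkapplications",
        body=manifest,
    )
    assert result == {"metadata": {"name": "spark-pi"}}
```

Some code constructs the API object itself, for example `client.CustomObjectsApi(api_client)` inside `execute`. For that code, `mock.patch` on the class it looks up could play the same role.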

[GitHub] [airflow] mik-laj commented on a change in pull request #7450: [AIRFLOW-6829][WIP] Introduce BaseOperatorMetaClass - auto-apply apply_default

mik-laj commented on a change in pull request #7450: [AIRFLOW-6829][WIP] Introduce BaseOperatorMetaClass - auto-apply apply_default
URL: https://github.com/apache/airflow/pull/7450#discussion_r381580741

## File path: airflow/operators/check_operator.py ##
@@ -123,11 +120,10 @@ class ValueCheckOperator(BaseOperator):
    __mapper_args__ = {
        'polymorphic_identity': 'ValueCheckOperator'
    }
-    template_fields = ('sql', 'pass_value',)  # type: Iterable[str]
+    template_fields = ('sql', 'pass_value',)  # type: Tuple[str, ...]

Review comment:
mypy is going crazy in many places now. It was one of the attempts to fix it.
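The thread does not explain why the annotation change helps mypy. One plausible reason, offered here as an assumption: subclasses often extend a parent's `template_fields` with `+`. mypy rejects `+` on an attribute declared as `Iterable[str]`, because iterables in general have no `__add__`, but accepts it for `Tuple[str, ...]`. The classes below are hypothetical and do not import Airflow. Runtime behavior is the same either way; only the static check differs.

```python
from typing import Tuple


class BaseCheck:
    # Declared as a variable-length tuple, so "+" is a valid operation for mypy.
    template_fields: Tuple[str, ...] = ('sql',)


class ValueCheck(BaseCheck):
    # Extends the parent's fields. If the parent were annotated as Iterable[str],
    # mypy would report an unsupported left operand type for "+" here.
    template_fields: Tuple[str, ...] = BaseCheck.template_fields + ('pass_value',)


print(ValueCheck.template_fields)  # ('sql', 'pass_value')
```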


[GitHub] [airflow] roitvt commented on a change in pull request #7163: [AIRFLOW-6542] add spark-on-k8s operator/hook/sensor

roitvt commented on a change in pull request #7163: [AIRFLOW-6542] add spark-on-k8s operator/hook/sensor URL: https://github.com/apache/airflow/pull/7163#discussion_r381580906

## File path: airflow/providers/cncf/kubernetes/hooks/kubernetes_hook.py ##
@@ -0,0 +1,77 @@
+# Licensed to the Apache Software Foundation (ASF) under one
+# or more contributor license agreements. See the NOTICE file
+# distributed with this work for additional information
+# regarding copyright ownership. The ASF licenses this file
+# to you under the Apache License, Version 2.0 (the
+# "License"); you may not use this file except in compliance
+# with the License. You may obtain a copy of the License at
+#
+# http://www.apache.org/licenses/LICENSE-2.0
+#
+# Unless required by applicable law or agreed to in writing,
+# software distributed under the License is distributed on an
+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
+# KIND, either express or implied. See the License for the
+# specific language governing permissions and limitations
+# under the License.
+import tempfile
+
+from kubernetes import client, config
+
+from airflow.hooks.base_hook import BaseHook
+
+
+class Kuberneteshook(BaseHook):

Review comment: So would you like me to open a new PR for the Kubernetes connection? I think that KubePodOperator could benefit from using this hook, as it would make it work on remote k8s clusters.
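The hook imports `tempfile` together with the Kubernetes client's `config` module. That suggests a kubeconfig taken from the Airflow connection is written to a temporary file and then loaded, which is what would let an operator target a remote cluster. The following is a hedged sketch of only the temporary-file step. `write_kubeconfig` is a hypothetical helper, and the idea that the kubeconfig text comes from the connection is an assumption. The `config.load_kube_config(config_file=...)` call appears only as a comment.

```python
import os
import tempfile


def write_kubeconfig(kubeconfig_text: str) -> str:
    """Write kubeconfig contents to a temporary file and return its path.

    Hypothetical helper: the caller is responsible for deleting the file.
    """
    # delete=False keeps the file after closing so it can be read by path.
    with tempfile.NamedTemporaryFile("w", suffix=".yaml", delete=False) as handle:
        handle.write(kubeconfig_text)
        path = handle.name
    # With the Kubernetes client installed, the next step would be:
    # config.load_kube_config(config_file=path)
    return path


path = write_kubeconfig("apiVersion: v1\nkind: Config\n")
try:
    with open(path) as f:
        print(f.read().splitlines()[1])  # kind: Config
finally:
    os.remove(path)  # the file may hold credentials, so remove it after use
```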

[GitHub] [airflow] mik-laj commented on a change in pull request #7450: [AIRFLOW-6829][WIP] Introduce BaseOperatorMetaClass - auto-apply apply_default

mik-laj commented on a change in pull request #7450: [AIRFLOW-6829][WIP] Introduce BaseOperatorMetaClass - auto-apply apply_default
URL: https://github.com/apache/airflow/pull/7450#discussion_r381580741

##
File path: airflow/operators/check_operator.py
##
@@ -123,11 +120,10 @@ class ValueCheckOperator(BaseOperator):
     __mapper_args__ = {
         'polymorphic_identity': 'ValueCheckOperator'
     }
-    template_fields = ('sql', 'pass_value',)  # type: Iterable[str]
+    template_fields = ('sql', 'pass_value',)  # type: Tuple[str, ...]

Review comment:
mypy is going crazy in many places now. This change was one of the attempts to fix that.
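The review comment does not say which mypy errors prompted this change. As a hedged illustration of how the two annotations differ for a type checker (hypothetical class and function names, not code from the PR): `Iterable[str]` only promises that the value can be iterated, so mypy rejects tuple operations such as `+` or indexing on it, whereas `Tuple[str, ...]` allows them. The runtime value is the same tuple either way; only mypy's view of it changes.

```python
from typing import Tuple


class HypotheticalCheckOperator:
    # Same annotation style as the "+" line of the diff: a type comment naming
    # a variable-length tuple of strings.
    template_fields = ('sql', 'pass_value',)  # type: Tuple[str, ...]


class HypotheticalExtendedCheckOperator(HypotheticalCheckOperator):
    # mypy accepts this "+" because both operands are known to be tuples.
    # With "# type: Iterable[str]" on the parent attribute, mypy would report
    # an unsupported operand type, since Iterable defines no __add__.
    template_fields = HypotheticalCheckOperator.template_fields + ('extra_sql',)  # type: Tuple[str, ...]


def first_template_field(fields):
    # type: (Tuple[str, ...]) -> str
    # Indexing also type-checks for Tuple[str, ...] but not for Iterable[str].
    return fields[0]
```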


[jira] [Commented] (AIRFLOW-6847) Integrate Apache Hive tests with Breeze

[ https://issues.apache.org/jira/browse/AIRFLOW-6847?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17040477#comment-17040477 ]

Kamil Bregula commented on AIRFLOW-6847:

I updated the title and the description to better describe the necessary scope of work.

> Integrate Apache Hive tests with Breeze
> ---
>
> Key: AIRFLOW-6847
> URL: https://issues.apache.org/jira/browse/AIRFLOW-6847
> Project: Apache Airflow
> Issue Type: Improvement
> Components: tests
> Affects Versions: 1.10.9
> Reporter: Cooper Gillan
> Priority: Minor
>
> Currently, tests for Apache Hive are not run on CI. This is very sad.:crying_cat_face:
> However, the tests exist in the repository and can be run using AIRFLOW_RUNALL_TESTS environment variable.
> I think that to solve this problem we need to follow these steps.
> # Add Hive integration in Breeze
> # Replace AIRFLOW_RUNALL_TESTS env variable with pytest marker
> # Update .travis.yml
>
> This problem was found while working on splitting out Hive tests captured in AIRFLOW-6721 and initially worked on in [https://github.com/apache/airflow/pull/7468],
> More info on airflow's use of Breeze integration and pytest markers can be found here: [https://github.com/apache/airflow/blob/master/TESTING.rst#airflow-integration-tests]
> This ticket rose out of [this comment in PR #7468's review|https://github.com/apache/airflow/pull/7468#issuecomment-588458850].
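Step 2 in the description swaps an all-or-nothing environment-variable gate for per-integration pytest markers. The sketch below models only the skip decision in plain Python so the difference is visible without running a test suite. The marker name `integration`, the integration name `hive`, and the idea of a set of integrations enabled for the run (for example, services started by Breeze) are assumptions modelled on the TESTING.rst section linked in the issue, not Airflow's actual `conftest.py`.

```python
from typing import Iterable, Mapping, Set

# Old style: one opt-in switch for every gated test, whatever it actually needs.
RUNALL_ENV_VAR = "AIRFLOW_RUNALL_TESTS"


def skipped_by_env_var(env: Mapping[str, str]) -> bool:
    """Skip unless the single opt-in variable is present in the environment."""
    return RUNALL_ENV_VAR not in env


def skipped_by_markers(required: Iterable[str], enabled: Set[str]) -> bool:
    """Marker style (hypothetical model): a test tagged with e.g. integration("hive")
    runs only when every integration it requires is enabled for this run."""
    return not set(required) <= enabled
```

In a real suite, `required` would come from each test's markers (pytest items expose `iter_markers(name=...)`) inside a `conftest.py` hook such as `pytest_collection_modifyitems` or `pytest_runtest_setup`, and the skip itself would go through `pytest.skip(...)`. The design gain is granularity: CI can enable Hive alone instead of switching on every gated test at once.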

[jira] [Updated] (AIRFLOW-6847) Integrate Apache Hive tests with Breeze

[ https://issues.apache.org/jira/browse/AIRFLOW-6847?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Kamil Bregula updated AIRFLOW-6847:
---
Description:
Currently, tests for Apache Hive are not run on CI. This is very sad.:crying_cat_face:
However, the tests exist in the repository and can be run using AIRFLOW_RUNALL_TESTS environment variable.
I think that to solve this problem we need to follow these steps.
# Add Hive integration in Breeze
# Replace AIRFLOW_RUNALL_TESTS env variable with pytest marker
# Update .travis.yml

This problem was found while working on splitting out Hive tests captured in AIRFLOW-6721 and initially worked on in [https://github.com/apache/airflow/pull/7468],
More info on airflow's use of Breeze integration and pytest markers can be found here: [https://github.com/apache/airflow/blob/master/TESTING.rst#airflow-integration-tests]
This ticket rose out of [this comment in PR #7468's review|https://github.com/apache/airflow/pull/7468#issuecomment-588458850].

was:
Currently, tests for Apache Hive are not run on CI. This is very sad.:crying_cat_face:
However, the tests exist in the repository and can be run using AIRFLOW_RUNALL_TESTS environment variable.
I think that to solve this problem we need to follow these steps.
# Add Hive integration in Breeze
# Replace AIRFLOW_RUNALL_TESTS env variable use of with pytest marker
# Update .travis.yml

This problem was found while working on splitting out Hive tests captured in AIRFLOW-6721 and initially worked on in [https://github.com/apache/airflow/pull/7468],
More info on airflow's use of Breeze integration and pytest markers can be found here: [https://github.com/apache/airflow/blob/master/TESTING.rst#airflow-integration-tests]
This ticket rose out of [this comment in PR #7468's review|https://github.com/apache/airflow/pull/7468#issuecomment-588458850].

> Integrate Apache Hive tests with Breeze
> ---
>
> Key: AIRFLOW-6847
> URL: https://issues.apache.org/jira/browse/AIRFLOW-6847
> Project: Apache Airflow
> Issue Type: Improvement
> Components: tests
> Affects Versions: 1.10.9
> Reporter: Cooper Gillan
> Priority: Minor
>
> Currently, tests for Apache Hive are not run on CI. This is very sad.:crying_cat_face:
> However, the tests exist in the repository and can be run using AIRFLOW_RUNALL_TESTS environment variable.
> I think that to solve this problem we need to follow these steps.
> # Add Hive integration in Breeze
> # Replace AIRFLOW_RUNALL_TESTS env variable with pytest marker
> # Update .travis.yml
>
> This problem was found while working on splitting out Hive tests captured in AIRFLOW-6721 and initially worked on in [https://github.com/apache/airflow/pull/7468],
> More info on airflow's use of Breeze integration and pytest markers can be found here: [https://github.com/apache/airflow/blob/master/TESTING.rst#airflow-integration-tests]
> This ticket rose out of [this comment in PR #7468's review|https://github.com/apache/airflow/pull/7468#issuecomment-588458850].

[GitHub] [airflow] roitvt commented on a change in pull request #7163: [AIRFLOW-6542] add spark-on-k8s operator/hook/sensor

roitvt commented on a change in pull request #7163: [AIRFLOW-6542] add spark-on-k8s operator/hook/sensor
URL: https://github.com/apache/airflow/pull/7163#discussion_r381579048

##
File path: airflow/providers/cncf/kubernetes/hooks/kubernetes_hook.py
##
@@ -0,0 +1,77 @@
+# Licensed to the Apache Software Foundation (ASF) under one
+# or more contributor license agreements. See the NOTICE file
+# distributed with this work for additional information
+# regarding copyright ownership. The ASF licenses this file
+# to you under the Apache License, Version 2.0 (the
+# "License"); you may not use this file except in compliance
+# with the License. You may obtain a copy of the License at
+#
+# http://www.apache.org/licenses/LICENSE-2.0
+#
+# Unless required by applicable law or agreed to in writing,
+# software distributed under the License is distributed on an
+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
+# KIND, either express or implied. See the License for the
+# specific language governing permissions and limitations
+# under the License.
+import tempfile
+
+from kubernetes import client, config
+
+from airflow.hooks.base_hook import BaseHook
+
+
+class Kuberneteshook(BaseHook):
+    """
+    Creates Kubernetes API connection.
+
+    :param conn_id: the connection to Kubernetes cluster
+    """
+
+    def __init__(
+            self,
+            conn_id="kubernetes_default"
+    ):
+        self.connection = self.get_connection(conn_id)
+        self.extras = self.connection.extra_dejson
+
+    def get_conn(self):
+        """
+        Returns kubernetes api session for use with requests
+        """
+
+        if self._get_field(("in_cluster")):
+            self.log.debug("loading kube_config from: in_cluster configuration")
+            config.load_incluster_config()
+        elif self._get_field("kube_config") is None or self._get_field("kube_config") == '':
+            self.log.debug("loading kube_config from: default file")
+        else:
+            with tempfile.NamedTemporaryFile() as temp_config:
+                self.log.debug("loading kube_config from: connection kube_config")
+                temp_config.write(self._get_field("kube_config").encode())
+                config.load_kube_config(temp_config.name)
+                temp_config.flush()

Review comment:

I don't like this solution either, but I dug into the Kubernetes Python API and didn't find a better one, so I write the kube config to a temp file, let the Kubernetes Python API load it, and then flush.
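The order of the last three calls matters. Writes to a `NamedTemporaryFile` go through a buffer, so the data is only guaranteed to be visible to another reader of the same path after `flush()`. In the quoted diff, `flush()` runs after `config.load_kube_config(temp_config.name)`, so a small kube config may still sit in the buffer and the loader can see an empty or truncated file. Below is a minimal sketch of the write, flush, then load order. The helper name and its `loader` callable parameter are hypothetical (the callable stands in for the Kubernetes loader so the pattern can be exercised without a cluster), and it assumes a POSIX system, where a `NamedTemporaryFile` can be reopened by name while it is still open.

```python
import tempfile
from typing import Callable, TypeVar

T = TypeVar("T")


def load_from_temp_file(content: str, loader: Callable[[str], T]) -> T:
    """Write `content` to a named temp file, flush it, then pass its path to `loader`.

    The file is deleted when the `with` block exits, so `loader` must finish
    reading it before returning.
    """
    with tempfile.NamedTemporaryFile(mode="w", suffix=".yaml") as temp_config:
        temp_config.write(content)
        # Push buffered data to the OS so a reader opening the path sees all of it.
        temp_config.flush()
        return loader(temp_config.name)
```

With the Kubernetes client this could be called as `load_from_temp_file(kube_config_text, lambda path: config.load_kube_config(config_file=path))`, where `kube_config_text` is a placeholder for the connection's `kube_config` extra.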


[jira] [Updated] (AIRFLOW-6847) Integrate Apache Hive tests with Breeze

[ https://issues.apache.org/jira/browse/AIRFLOW-6847?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Kamil Bregula updated AIRFLOW-6847:
---
Description:
Currently, tests for Apache Hive are not run on CI. This is very sad.:crying_cat_face:
However, the tests exist in the repository and can be run using AIRFLOW_RUNALL_TESTS environment variable.
I think that to solve this problem we need to follow these steps.
# Add Hive integration in Breeze
# Replace AIRFLOW_RUNALL_TESTS env variable use of with pytest marker
# Update .travis.yml

This problem was found while working on splitting out Hive tests captured in AIRFLOW-6721 and initially worked on in [https://github.com/apache/airflow/pull/7468],
More info on airflow's use of Breeze integration and pytest markers can be found here: [https://github.com/apache/airflow/blob/master/TESTING.rst#airflow-integration-tests]
This ticket rose out of [this comment in PR #7468's review|https://github.com/apache/airflow/pull/7468#issuecomment-588458850].

was:
Building off work completed to split out Hive tests captured in AIRFLOW-6721 and initially worked on in https://github.com/apache/airflow/pull/7468, remove use of the {{AIRFLOW_RUNALL_TESTS}} environment variable for skipping certain tests in favor of using pytest markers.
More info on airflow's use of pytest markers can be found here: https://github.com/apache/airflow/blob/master/TESTING.rst#airflow-integration-tests
This ticket rose out of [this comment in PR #7468's review|https://github.com/apache/airflow/pull/7468#issuecomment-588458850].

Summary: Integrate Apache Hive tests with Breeze (was: Replace use of AIRFLOW_RUNALL_TESTS with pytest markers)

> Integrate Apache Hive tests with Breeze
> ---
>
> Key: AIRFLOW-6847
> URL: https://issues.apache.org/jira/browse/AIRFLOW-6847
> Project: Apache Airflow
> Issue Type: Improvement
> Components: tests
> Affects Versions: 1.10.9
> Reporter: Cooper Gillan
> Priority: Minor
>
> Currently, tests for Apache Hive are not run on CI. This is very sad.:crying_cat_face:
> However, the tests exist in the repository and can be run using AIRFLOW_RUNALL_TESTS environment variable.
> I think that to solve this problem we need to follow these steps.
> # Add Hive integration in Breeze
> # Replace AIRFLOW_RUNALL_TESTS env variable use of with pytest marker
> # Update .travis.yml
>
> This problem was found while working on splitting out Hive tests captured in AIRFLOW-6721 and initially worked on in [https://github.com/apache/airflow/pull/7468],
> More info on airflow's use of Breeze integration and pytest markers can be found here: [https://github.com/apache/airflow/blob/master/TESTING.rst#airflow-integration-tests]
> This ticket rose out of [this comment in PR #7468's review|https://github.com/apache/airflow/pull/7468#issuecomment-588458850].

--

This message was sent by Atlassian Jira