[GitHub] [airflow] fuguixing edited a comment on issue #17429: Scan the DAGs directory for new files througth restfull API

fuguixing edited a comment on issue #17429:
URL: https://github.com/apache/airflow/issues/17429#issuecomment-894013281

Thanks for your reply! In our environment, I need to make a newly added DAG file available immediately, without waiting for Airflow to call **_refresh_dag_dir** (in dag_processing.py). Setting **dag_dir_list_interval** too small may waste resources. I tried the following code, which loads the specified new DAG file into the database and makes it available immediately; it uses **DagFileProcessor** from scheduler_job.py. Can I encapsulate it as a RESTful API?

heartbeat() in dag_processing.py:
`processor = self._processor_factory(file_path, self._zombies)`
`processor.start()`

--
This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the
URL above to go to the specific comment.
To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org
For queries about this service, please contact Infrastructure at:
us...@infra.apache.org

[GitHub] [airflow] baolsen commented on issue #10874: SSHHook get_conn() does not re-use client

baolsen commented on issue #10874:
URL: https://github.com/apache/airflow/issues/10874#issuecomment-894009918

PR is up :) Observations and suggestions welcome. Happy to make other improvements based on the feedback.
https://github.com/apache/airflow/pull/17378

[GitHub] [airflow] goodhamgupta edited a comment on issue #16728: SparkKubernetesOperator to support arguments

goodhamgupta edited a comment on issue #16728:

URL: https://github.com/apache/airflow/issues/16728#issuecomment-894005544

Hi @potiuk,

I think I don't understand the issue well enough. I see the following code segment in the SparkKubernetesOperator:

```py
class SparkKubernetesOperator(BaseOperator):
    """
    """

    template_fields = ['application_file', 'namespace']
    template_ext = ('.yaml', '.yml', '.json')
    ui_color = '#f4a460'
```

I think this should already allow a .json file to be uploaded from the UI? I would appreciate any pointers on how to get started on this issue.

Thanks!
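As a rough illustration of what `template_ext` is generally understood to do: when a templated field holds a string ending in one of the listed extensions, the value is treated as a file path and replaced by the file's contents. The sketch below is a simplified stand-in, not Airflow's implementation. The helper name `resolve_templated_value` and the file name `spark_app.json` are assumptions made for the example, and Jinja rendering and template search paths are left out.

```python
import os
import tempfile
from typing import Sequence


def resolve_templated_value(value: str, template_ext: Sequence[str]) -> str:
    """Return the file contents if `value` ends with a templated extension.

    Hypothetical helper for illustration only; the real operator machinery
    also renders the content with Jinja and resolves template search paths.
    """
    if value.endswith(tuple(template_ext)):
        with open(value, encoding="utf-8") as handle:
            return handle.read()
    # Anything else is kept as an inline string.
    return value


TEMPLATE_EXT = (".yaml", ".yml", ".json")

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "spark_app.json")  # hypothetical file name
    with open(path, "w", encoding="utf-8") as handle:
        handle.write('{"kind": "SparkApplication"}')

    # A path ending in ".json" is replaced by the file's contents.
    loaded = resolve_templated_value(path, TEMPLATE_EXT)
    # A plain string without a listed extension is passed through unchanged.
    inline = resolve_templated_value("kind: SparkApplication", TEMPLATE_EXT)
```

Under this reading, `template_ext` affects how `application_file` is loaded by the operator rather than adding an upload path in the UI.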

[GitHub] [airflow] github-actions[bot] commented on pull request #17456: Adding TaskGroup support in chain()

github-actions[bot] commented on pull request #17456:
URL: https://github.com/apache/airflow/pull/17456#issuecomment-894005427

The PR most likely needs to run full matrix of tests because it modifies parts of the core of Airflow. However, committers might decide to merge it quickly and take the risk. If they don't merge it quickly - please rebase it to the latest main at your convenience, or amend the last commit of the PR, and push it with --force-with-lease.

[GitHub] [airflow] uranusjr commented on a change in pull request #16866: Remove default_args pattern + added get_current_context() use for Core Airflow example DAGs

uranusjr commented on a change in pull request #16866:
URL: https://github.com/apache/airflow/pull/16866#discussion_r683950115

##
File path: airflow/example_dags/tutorial_taskflow_api_etl_virtualenv.py
##
@@ -19,24 +19,14 @@
 # [START tutorial]
 # [START import_module]
-import json

Review comment:
Why move this?

[GitHub] [airflow] josh-fell opened a new pull request #17456: Adding TaskGroup support in chain()

josh-fell opened a new pull request #17456:
URL: https://github.com/apache/airflow/pull/17456

Related to: #17083, #16635

This PR ensures that `TaskGroups` can be used to set dependencies while calling the `chain()` method. Support for `XComArgs` and `EdgeModifiers` has been implemented in previous PRs: #16732, #17099
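To make the idea concrete, here is a minimal, self-contained model of pairwise chaining in which a group is simply one more link in the chain. It is only a sketch under stated assumptions: `Node`, `Group`, and `chain_sketch` are hypothetical stand-ins written for this example and are not Airflow classes or the code from this PR.

```python
from typing import List, Sequence, Union


class Node:
    """Hypothetical stand-in for a task; records the names of downstream nodes."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.downstream: List[str] = []

    def set_downstream(self, other: "Node") -> None:
        self.downstream.append(other.name)


class Group(Node):
    """Hypothetical stand-in for a task group, treated as a single chain link."""


Link = Union[Node, Sequence[Node]]


def _as_list(link: Link) -> List[Node]:
    return list(link) if isinstance(link, (list, tuple)) else [link]


def chain_sketch(*links: Link) -> None:
    """Set dependencies between each pair of consecutive links."""
    for up, down in zip(links, links[1:]):
        ups, downs = _as_list(up), _as_list(down)
        if len(ups) > 1 and len(downs) > 1:
            # Two adjacent lists are paired element by element.
            if len(ups) != len(downs):
                raise ValueError("adjacent lists must have the same length")
            pairs = list(zip(ups, downs))
        else:
            # A single node or group fans out to (or in from) every neighbour.
            pairs = [(u, d) for u in ups for d in downs]
        for u, d in pairs:
            u.set_downstream(d)


start = Node("start")
group = Group("group")
end_a, end_b = Node("end_a"), Node("end_b")
chain_sketch(start, group, [end_a, end_b])
```

After the call, `start` points at `group`, and `group` points at both `end_a` and `end_b`.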

[jira] [Commented] (AIRFLOW-4922) If a task crashes, host name is not committed to the database so logs aren't able to be seen in the UI

[

https://issues.apache.org/jira/browse/AIRFLOW-4922?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17394452#comment-17394452

]

ASF GitHub Bot commented on AIRFLOW-4922:

-

gowdra01 commented on pull request #6722:

URL: https://github.com/apache/airflow/pull/6722#issuecomment-893961036

I am stuck with this too. I am using version 2.1.0 and getting the error:

*** Failed to fetch log file from worker. 503 Server Error: Service Unavailable for url:

> If a task crashes, host name is not committed to the database so logs aren't

> able to be seen in the UI

> --

>

> Key: AIRFLOW-4922

> URL: https://issues.apache.org/jira/browse/AIRFLOW-4922

> Project: Apache Airflow

> Issue Type: Bug

> Components: logging

>Affects Versions: 1.10.3

>Reporter: Andrew Harmon

>Assignee: wanghong-T

>Priority: Major

>

> Sometimes when a task fails, the log shows the following
> {code}
> *** Log file does not exist: /usr/local/airflow/logs/my_dag/my_task/2019-07-07T09:00:00+00:00/1.log
> *** Fetching from: http://:8793/log/my_dag/my_task/2019-07-07T09:00:00+00:00/1.log
> *** Failed to fetch log file from worker. Invalid URL 'http://:8793/log/my_dag/my_task/2019-07-07T09:00:00+00:00/1.log': No host supplied
> {code}

> I believe this is due to the fact that the row is not committed to the

> database until after the task finishes.

> https://github.com/apache/airflow/blob/a1f9d9a03faecbb4ab52def2735e374b2e88b2b9/airflow/models/taskinstance.py#L857
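The symptom in the report can be reproduced in isolation: if the hostname is still empty when the log URL is assembled, the result has a port but no host. The sketch below is illustrative only. `worker_log_url`, `has_host`, and the host name `worker-1` are assumptions for this example rather than Airflow code; the port and path are taken from the log excerpt.

```python
from urllib.parse import urlparse

WORKER_LOG_PORT = 8793  # port shown in the log excerpt


def worker_log_url(hostname: str, log_relative_path: str) -> str:
    """Build a worker log URL the way the excerpt suggests (hypothetical helper)."""
    return f"http://{hostname}:{WORKER_LOG_PORT}/log/{log_relative_path}"


def has_host(url: str) -> bool:
    """Return True when the URL carries a non-empty host component."""
    return urlparse(url).hostname is not None


path = "my_dag/my_task/2019-07-07T09:00:00+00:00/1.log"
broken = worker_log_url("", path)       # hostname not yet stored
ok = worker_log_url("worker-1", path)   # "worker-1" is a made-up host name
```

A guard such as `has_host` would let a caller report "hostname not recorded yet" instead of attempting a request against an invalid URL.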

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (AIRFLOW-4922) If a task crashes, host name is not committed to the database so logs aren't able to be seen in the UI

[

https://issues.apache.org/jira/browse/AIRFLOW-4922?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17394442#comment-17394442

]

ASF GitHub Bot commented on AIRFLOW-4922:

-

xuemengran commented on pull request #6722:

URL: https://github.com/apache/airflow/pull/6722#issuecomment-893956847

In version 2.1.1, I tried modifying airflow/utils/log/file_task_handler.py to obtain the hostname by reading the log table. I confirmed through debugging that I could get the host information this way, but a bigger problem appeared: the task is marked as successful without being scheduled, and the log is still not viewable. I therefore conclude that, to solve this problem, the host information must be written to the task_instance table before the task is executed.

I think this bug is very important because it directly affects the use of Airflow in distributed scenarios. Please solve it as soon as possible!

[GitHub] [airflow] Sonins edited a comment on pull request #17428: Fix elasticsearch-secret template port default function

Sonins edited a comment on pull request #17428:
URL: https://github.com/apache/airflow/pull/17428#issuecomment-893932430

Thank you for your review, @jedcunningham. I ran the unit tests using pytest in the Breeze environment, but I don't know how to attach the results to this PR in a fancy style, so I am just pasting the pytest output.

```
root@fa3d600de005:/opt/airflow/chart# pytest tests/
= test session starts ==
platform linux -- Python 3.6.14, pytest-6.2.4, py-1.10.0, pluggy-0.13.1 -- /usr/local/bin/python
cachedir: .pytest_cache
rootdir: /opt/airflow, configfile: pytest.ini
plugins: requests-mock-1.9.3, flaky-3.7.0, timeouts-1.2.1, anyio-3.3.0, forked-1.3.0, httpx-0.12.0, instafail-0.4.2, cov-2.12.1, xdist-2.3.0, rerunfailures-9.1.1, celery-4.4.7
setup timeout: 0.0s, execution timeout: 0.0s, teardown timeout: 0.0s
collected 400 items

tests/test_airflow_common.py::AirflowCommon::test_annotations PASSED [ 0%]
tests/test_airflow_common.py::AirflowCommon::test_dags_mount_0 PASSED [ 0%]
tests/test_airflow_common.py::AirflowCommon::test_dags_mount_1 PASSED [ 0%]
tests/test_airflow_common.py::AirflowCommon::test_dags_mount_2 PASSED [ 1%]
tests/test_annotations.py::AnnotationsTest::test_service_account_annotations PASSED [ 1%]
tests/test_basic_helm_chart.py::TestBaseChartTest::test_annotations_on_airflow_pods_in_deployment PASSED [ 1%]
tests/test_basic_helm_chart.py::TestBaseChartTest::test_basic_deployment_without_default_users PASSED [ 1%]
tests/test_basic_helm_chart.py::TestBaseChartTest::test_basic_deployments PASSED [ 2%]
tests/test_basic_helm_chart.py::TestBaseChartTest::test_chart_is_consistent_with_official_airflow_image PASSED [ 2%]
tests/test_basic_helm_chart.py::TestBaseChartTest::test_invalid_dags_access_mode PASSED [ 2%]
tests/test_basic_helm_chart.py::TestBaseChartTest::test_invalid_pull_policy_0_airflow PASSED [ 2%]
tests/test_basic_helm_chart.py::TestBaseChartTest::test_invalid_pull_policy_1_pod_template PASSED [ 3%]
tests/test_basic_helm_chart.py::TestBaseChartTest::test_invalid_pull_policy_2_flower PASSED [ 3%]
tests/test_basic_helm_chart.py::TestBaseChartTest::test_invalid_pull_policy_3_statsd PASSED [ 3%]
tests/test_basic_helm_chart.py::TestBaseChartTest::test_invalid_pull_policy_4_redis PASSED [ 3%]
tests/test_basic_helm_chart.py::TestBaseChartTest::test_invalid_pull_policy_5_pgbouncer PASSED [ 4%]
tests/test_basic_helm_chart.py::TestBaseChartTest::test_invalid_pull_policy_6_pgbouncerExporter PASSED [ 4%]
tests/test_basic_helm_chart.py::TestBaseChartTest::test_invalid_pull_policy_7_gitSync PASSED [ 4%]
tests/test_basic_helm_chart.py::TestBaseChartTest::test_labels_are_valid PASSED [ 4%]
tests/test_basic_helm_chart.py::TestBaseChartTest::test_network_policies_are_valid PASSED [ 5%]
tests/test_basic_helm_chart.py::TestBaseChartTest::test_unsupported_executor PASSED [ 5%]
tests/test_celery_kubernetes_executor.py::CeleryKubernetesExecutorTest::test_should_create_a_worker_deployment_with_the_celery_executor PASSED [ 5%]
tests/test_celery_kubernetes_executor.py::CeleryKubernetesExecutorTest::test_should_create_a_worker_deployment_with_the_celery_kubernetes_executor PASSED [ 5%]
tests/test_chart_quality.py::ChartQualityTest::test_values_validate_schema PASSED [ 6%]
tests/test_cleanup_pods.py::CleanupPodsTest::test_should_change_image_when_set_airflow_image PASSED [ 6%]
tests/test_cleanup_pods.py::CleanupPodsTest::test_should_create_cronjob_for_enabled_cleanup PASSED [ 6%]
tests/test_cleanup_pods.py::CleanupPodsTest::test_should_create_valid_affinity_tolerations_and_node_selector PASSED [ 6%]
tests/test_configmap.py::ConfigmapTest::test_airflow_local_settings PASSED [ 7%]
tests/test_configmap.py::ConfigmapTest::test_kerberos_config_available_with_celery_executor PASSED [ 7%]
tests/test_configmap.py::ConfigmapTest::test_multiple_annotations PASSED [ 7%]
tests/test_configmap.py::ConfigmapTest::test_no_airflow_local_settings_by_default PASSED [ 7%]
tests/test_configmap.py::ConfigmapTest::test_single_annotation PASSED [ 8%]
tests/test_create_user_job.py::CreateUserJobTest::test_should_create_valid_affinity_tolerations_and_node_selector PASSED [ 8%]
tests/test_create_user_job.py::CreateUserJobTest::test_should_run_by_default PASSED [ 8%]
tests/test_create_user_job.py::CreateUserJobTest::test_should_support_annotations PASSED [ 8%]
tests/test_dags_persistent_volume_claim.py::DagsPersistentVolumeClaimTest::test_should_generate_a_document_if_persistence_is_enabled_and_not_using_an_existing_claim PASSED [ 9%]
tests/test_dags_persistent_volume_claim.py::DagsPersistentVolumeClaimTest::test_should_not_generate_a_document_if_persistence_is_disabled PASSED [ 9%]
```

[GitHub] [airflow] mik-laj commented on a change in pull request #16571: Implemented Basic EKS Integration

mik-laj commented on a change in pull request #16571:

URL: https://github.com/apache/airflow/pull/16571#discussion_r683872000

##

File path: airflow/providers/amazon/aws/hooks/eks.py

##

@@ -0,0 +1,420 @@
+# Licensed to the Apache Software Foundation (ASF) under one
+# or more contributor license agreements. See the NOTICE file
+# distributed with this work for additional information
+# regarding copyright ownership. The ASF licenses this file
+# to you under the Apache License, Version 2.0 (the
+# "License"); you may not use this file except in compliance
+# with the License. You may obtain a copy of the License at
+#
+# http://www.apache.org/licenses/LICENSE-2.0
+#
+# Unless required by applicable law or agreed to in writing,
+# software distributed under the License is distributed on an
+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
+# KIND, either express or implied. See the License for the
+# specific language governing permissions and limitations
+# under the License.
+
+"""Interact with Amazon EKS, using the boto3 library."""
+import base64
+import json
+import re
+import tempfile
+from contextlib import contextmanager
+from functools import partial
+from typing import Callable, Dict, List, Optional
+
+import yaml
+from botocore.signers import RequestSigner
+
+from airflow.providers.amazon.aws.hooks.base_aws import AwsBaseHook
+from airflow.utils.json import AirflowJsonEncoder
+
+DEFAULT_CONTEXT_NAME = 'aws'
+DEFAULT_PAGINATION_TOKEN = ''
+DEFAULT_POD_USERNAME = 'aws'
+STS_TOKEN_EXPIRES_IN = 60
+
+
+class EKSHook(AwsBaseHook):
+    """
+    Interact with Amazon EKS, using the boto3 library.
+
+    Additional arguments (such as ``aws_conn_id``) may be specified and
+    are passed down to the underlying AwsBaseHook.
+
+    .. seealso::
+        :class:`~airflow.providers.amazon.aws.hooks.base_aws.AwsBaseHook`
+    """
+
+    client_type = 'eks'
+
+    def __init__(self, *args, **kwargs) -> None:
+        kwargs["client_type"] = self.client_type
+        super().__init__(*args, **kwargs)
+
+    def create_cluster(self, name: str, roleArn: str, resourcesVpcConfig: Dict, **kwargs) -> Dict:
+        """
+        Creates an Amazon EKS control plane.
+
+        :param name: The unique name to give to your Amazon EKS Cluster.
+        :type name: str
+        :param roleArn: The Amazon Resource Name (ARN) of the IAM role that provides permissions
+          for the Kubernetes control plane to make calls to AWS API operations on your behalf.
+        :type roleArn: str
+        :param resourcesVpcConfig: The VPC configuration used by the cluster control plane.
+        :type resourcesVpcConfig: Dict
+
+        :return: Returns descriptive information about the created EKS Cluster.
+        :rtype: Dict
+        """
+        eks_client = self.conn
+
+        response = eks_client.create_cluster(
+            name=name, roleArn=roleArn, resourcesVpcConfig=resourcesVpcConfig, **kwargs
+        )
+
+        self.log.info("Created cluster with the name %s.", response.get('cluster').get('name'))
+        return response
+
+    def create_nodegroup(
+        self, clusterName: str, nodegroupName: str, subnets: List[str], nodeRole: str, **kwargs
+    ) -> Dict:
+        """
+        Creates an Amazon EKS Managed Nodegroup for an EKS Cluster.
+
+        :param clusterName: The name of the cluster to create the EKS Managed Nodegroup in.
+        :type clusterName: str
+        :param nodegroupName: The unique name to give your managed nodegroup.
+        :type nodegroupName: str
+        :param subnets: The subnets to use for the Auto Scaling group that is created for your nodegroup.
+        :type subnets: List[str]
+        :param nodeRole: The Amazon Resource Name (ARN) of the IAM role to associate with your nodegroup.
+        :type nodeRole: str
+
+        :return: Returns descriptive information about the created EKS Managed Nodegroup.
+        :rtype: Dict
+        """
+        eks_client = self.conn
+        # The below tag is mandatory and must have a value of either 'owned' or 'shared'
+        # A value of 'owned' denotes that the subnets are exclusive to the nodegroup.
+        # The 'shared' value allows more than one resource to use the subnet.
+        tags = {'kubernetes.io/cluster/' + clusterName: 'owned'}
+        if "tags" in kwargs:
+            tags = {**tags, **kwargs["tags"]}
+            kwargs.pop("tags")
+
+        response = eks_client.create_nodegroup(
+            clusterName=clusterName,
+            nodegroupName=nodegroupName,
+            subnets=subnets,
+            nodeRole=nodeRole,
+            tags=tags,
+            **kwargs,
+        )
+
+        self.log.info(
+            "Created a managed nodegroup named %s in cluster %s",
+            response.get('nodegroup').get('nodegroupName'),
+            response.get('nodegroup').get('clusterName'),
+        )
+        return response
+
+    def delete_cluster(self, name: str) ->
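
The tag handling in `create_nodegroup` from the diff above can be looked at in isolation. The sketch below is a standalone approximation, not the hook's code: `build_nodegroup_tags` is a hypothetical helper, and the cluster name `demo` and the extra keyword arguments are made-up inputs. It merges caller-supplied tags over the mandatory cluster tag and removes `tags` from the remaining keyword arguments so it is not passed twice.

```python
from typing import Any, Dict


def build_nodegroup_tags(cluster_name: str, kwargs: Dict[str, Any]) -> Dict[str, str]:
    """Merge user tags over the mandatory cluster tag (hypothetical helper).

    Mutates `kwargs` by removing the "tags" entry, mirroring the diff's
    intent of forwarding `tags` only once.
    """
    tags = {f"kubernetes.io/cluster/{cluster_name}": "owned"}
    # pop() with a default covers both the "present" and "absent" cases.
    tags.update(kwargs.pop("tags", {}))
    return tags


extra = {"tags": {"team": "data"}, "diskSize": 20}  # illustrative inputs
merged = build_nodegroup_tags("demo", extra)
```

Because the user tags are applied last, a caller who supplies the `kubernetes.io/cluster/<name>` key themselves would override the default `'owned'` value.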

[GitHub] [airflow] github-actions[bot] commented on pull request #16268: Change Regex For Inclusive Words

github-actions[bot] commented on pull request #16268:
URL: https://github.com/apache/airflow/pull/16268#issuecomment-893904676

This pull request has been automatically marked as stale because it has not had recent activity. It will be closed in 5 days if no further activity occurs. Thank you for your contributions.

[GitHub] [airflow] github-actions[bot] commented on pull request #16012: Fixing Glue hooks/operators

github-actions[bot] commented on pull request #16012:
URL: https://github.com/apache/airflow/pull/16012#issuecomment-893904709

This pull request has been automatically marked as stale because it has not had recent activity. It will be closed in 5 days if no further activity occurs. Thank you for your contributions.

[GitHub] [airflow] mik-laj edited a comment on issue #17429: Scan the DAGs directory for new files througth restfull API

mik-laj edited a comment on issue #17429:
URL: https://github.com/apache/airflow/issues/17429#issuecomment-893892411

Will the proposed improvements from https://github.com/apache/airflow/issues/17437 solve your problem?

[GitHub] [airflow] jarfgit edited a comment on pull request #17145: Adds an s3 list prefixes operator

jarfgit edited a comment on pull request #17145: URL: https://github.com/apache/airflow/pull/17145#issuecomment-893890145 @o-nikolas @iostreamdoth @potiuk Ok, at long last I've updated this pull request: * Refactored `S3ListOperator` to take a `recursive` parameter * Refactored the `s3_list_keys` hook to return both a list of keys and list of common prefixes (I know this is out of scope for the issue, but making unnecessary API calls bothers me and it was easy enough to do) * Experimented with deleting the `s3_list_prefixes` hook altogether, but it's used elsewhere and constituted some serious scope creep * If `recursive == True` then we add the prefixes to the list of keys returned by the operator * Ensured `recursive` defaults to `False` and _shouldn't_ present a breaking change * Added unit tests to both the `s3_list_keys` and `s3_list_prefixes` hooks to make sure I (and presumably future contributors) fully understood what the `delimiter` and `prefix` args were doing and how various combinations affected what was returned when `recursive == True` The above assumes the following: * The user wants to retrieve both keys and prefixes using the same optional params (i.e. `delimiter` and `prefix`). * The user can distinguish keys from prefixes from the list returned by the operator. I would assume that keys have a file extension and prefixes would include the delimiter... but it seems possible that keys may not _always_ have a file extension and I'm basing my understanding of the prefixes containing the delimiter on the unit tests. So I have this question: If we want to refactor the operator to return both prefixes and keys but a user might want to use different optional params between keys and prefixes, I don't see an alternative other than requiring the user to use the operator twice with different params. With this in mind, is there a valid argument to have a dedicated `S3ListPrefixes` operator after all? 
-- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org
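
To make the `delimiter` / `prefix` interplay discussed in the comment above easier to follow, here is a minimal in-memory sketch of how an S3-style listing splits results into keys and common prefixes. It is not the Airflow hook and does not call boto3; the function name `list_keys_and_prefixes` and the sample bucket contents are assumptions made purely for illustration.

```python
from typing import Iterable, List, Tuple


def list_keys_and_prefixes(
    all_keys: Iterable[str], prefix: str = "", delimiter: str = ""
) -> Tuple[List[str], List[str]]:
    """Hypothetical helper: emulate an S3-style listing over an in-memory key list."""
    keys: List[str] = []
    prefixes: List[str] = []
    for key in sorted(all_keys):
        if not key.startswith(prefix):
            continue  # outside the requested prefix
        remainder = key[len(prefix):]
        if delimiter and delimiter in remainder:
            # Everything up to and including the first delimiter is rolled up
            # into a single "common prefix" instead of being listed as a key.
            common = prefix + remainder.split(delimiter, 1)[0] + delimiter
            if common not in prefixes:
                prefixes.append(common)
        else:
            keys.append(key)
    return keys, prefixes


# Sample data (assumed); note that "dir/sub/d" is a key without a file extension.
bucket = ["a.txt", "dir/b.txt", "dir/sub/c.txt", "dir/sub/d"]
top_keys, top_prefixes = list_keys_and_prefixes(bucket, delimiter="/")
dir_keys, dir_prefixes = list_keys_and_prefixes(bucket, prefix="dir/", delimiter="/")
```

In this sketch a common prefix always ends with the delimiter, which is one way a caller could tell prefixes from keys; a key such as `dir/sub/d` shows why relying on file extensions alone would not be enough.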

[GitHub] [airflow] mik-laj commented on issue #17429: Scan the DAGs directory for new files througth restfull API

mik-laj commented on issue #17429: URL: https://github.com/apache/airflow/issues/17429#issuecomment-893892411 Related PR: https://github.com/apache/airflow/issues/17437

[GitHub] [airflow] mik-laj commented on issue #17429: Scan the DAGs directory for new files througth restfull API

mik-laj commented on issue #17429: URL: https://github.com/apache/airflow/issues/17429#issuecomment-893891678 This can be problematic as the webserver is stateless and does not have access to the DAG files.

[GitHub] [airflow] mik-laj commented on issue #17443: No link for kibana when using frontend configuration

mik-laj commented on issue #17443:
URL: https://github.com/apache/airflow/issues/17443#issuecomment-893891221

To enable external links for logs, you need to use a task handler that supports external links. In this case, you should use https://github.com/apache/airflow/blob/866a601b76e219b3c043e1dbbc8fb22300866351/airflow/providers/elasticsearch/log/es_task_handler.py#L44

It looks like you don't have remote logging turned on, so this task handler is not used.

```
[logging]
remote_logging = True
```

To check the current task handler, you can use the ``airflow info`` command:

```
$ airflow info | grep 'task_logging_handler'
task_logging_handler | airflow.utils.log.file_task_handler.FileTaskHandler
```

For more details, see: http://airflow.apache.org/docs/apache-airflow-providers-elasticsearch/stable/logging.html

[GitHub] [airflow] jarfgit commented on pull request #17145: Adds an s3 list prefixes operator

jarfgit commented on pull request #17145:
URL: https://github.com/apache/airflow/pull/17145#issuecomment-893890145

@o-nikolas @iostreamdoth @potiuk Ok, at long last I've updated this pull request:

* Refactored `S3ListOperator` to take a `recursive` parameter
* Refactored the S3 list keys hook to return both a list of keys and a list of common prefixes (I know this is out of scope for the issue, but making unnecessary API calls bothers me and it was easy enough to do)
* If `recursive == True` then we add the prefixes to the list of keys returned by the operator
* Ensured `recursive` defaults to `False` and _shouldn't_ present a breaking change
* Added unit tests to both the `s3_list_keys` and `s3_list_prefixes` hooks to make sure I (and presumably future contributors) fully understood what the `delimiter` and `prefix` args were doing and how various combinations affected what was returned when `recursive == True`

The above assumes the following:

* The user wants to retrieve both keys and prefixes using the same optional params (i.e. `delimiter` and `prefix`).
* The user can distinguish keys from prefixes in the list returned by the operator. I would assume that keys have a file extension and prefixes would include the delimiter... but it seems possible that keys may not _always_ have a file extension, and I'm basing my understanding of the prefixes containing the delimiter on the unit tests.

So I have this question: If we want to refactor the operator to return both prefixes and keys but a user might want to use different optional params between keys and prefixes, I don't see an alternative other than requiring the user to use the operator twice with different params. With this in mind, is there a valid argument to have a dedicated `S3ListPrefixes` operator after all?

[GitHub] [airflow] mik-laj commented on a change in pull request #17400: Example dag slackfile

mik-laj commented on a change in pull request #17400:

URL: https://github.com/apache/airflow/pull/17400#discussion_r683846325

##

File path: airflow/providers/slack/operators/slack.py

##

@@ -195,49 +196,50 @@ class SlackAPIFileOperator(SlackAPIOperator):

:type content: str

"""

-template_fields = ('channel', 'initial_comment', 'filename', 'filetype', 'content')

+template_fields = ('channel', 'initial_comment', 'filetype', 'content')

ui_color = '#44BEDF'

def __init__(

self,

channel: str = '#general',

initial_comment: str = 'No message has been set!',

-filename: str = None,

+file: str = None,

Review comment:

It looks like a breaking change. Is there any way we can keep backward compatibility? If not, can you add a note to the changelog? For an example note, see the Google provider changelog:

https://github.com/apache/airflow/blob/main/airflow/providers/google/CHANGELOG.rst
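
One common way to keep backward compatibility when a keyword argument is renamed, as asked in the review comment above, is to keep accepting the old name and emit a deprecation warning. The sketch below is only an illustration under that assumption: `FileOperatorSketch` is a hypothetical stand-in class, not the actual `SlackAPIFileOperator`, and the PR may well have resolved this differently.

```python
import warnings
from typing import Optional


class FileOperatorSketch:
    """Hypothetical stand-in for an operator whose ``filename`` argument was renamed to ``file``."""

    def __init__(self, file: Optional[str] = None, filename: Optional[str] = None) -> None:
        if filename is not None:
            if file is not None:
                # Both names given: refuse rather than silently pick one.
                raise ValueError("Pass either 'file' or the deprecated 'filename', not both.")
            warnings.warn(
                "'filename' is deprecated; use 'file' instead.",
                DeprecationWarning,
                stacklevel=2,
            )
            file = filename  # map the old argument onto the new one
        self.file = file
```

Existing callers that still pass `filename=` keep working and see a warning, while new callers use `file=` directly.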


[GitHub] [airflow] mik-laj commented on a change in pull request #17426: Allow using default celery commands for custom Celery executors subclassed from existing

mik-laj commented on a change in pull request #17426:

URL: https://github.com/apache/airflow/pull/17426#discussion_r683844854

##

File path: airflow/cli/cli_parser.py

##

@@ -60,10 +62,17 @@ def _check_value(self, action, value):

if action.dest == 'subcommand' and value == 'celery':

executor = conf.get('core', 'EXECUTOR')

if executor not in (CELERY_EXECUTOR, CELERY_KUBERNETES_EXECUTOR):

-message = (

-f'celery subcommand works only with CeleryExecutor, your current executor: {executor}'

-)

-raise ArgumentError(action, message)

+executor_cls = import_string(ExecutorLoader.executors.get(executor, executor))

Review comment:

I am not sure if this will allow the executor to load in all cases, and in particular whether it works with executors provided by plugins.

`ExecutorLoader.load_executor` supports one more syntax:

https://github.com/apache/airflow/blob/8505d2f0a4524313e3eff7a4f16b9a9439c7a79f/airflow/executors/executor_loader.py#L73


[GitHub] [airflow] mik-laj commented on a change in pull request #17448: Aws secrets manager backend

mik-laj commented on a change in pull request #17448:

URL: https://github.com/apache/airflow/pull/17448#discussion_r683842856

##

File path: airflow/providers/amazon/aws/secrets/secrets_manager.py

##

@@ -96,28 +97,74 @@ def __init__(

@cached_property

def client(self):

-"""Create a Secrets Manager client"""

+"""

+Create a Secrets Manager client

+"""

session = boto3.session.Session(

-profile_name=self.profile_name,

+profile_name=self.profile_name

)

return session.client(service_name="secretsmanager", **self.kwargs)

-def get_conn_uri(self, conn_id: str) -> Optional[str]:

+def _get_extra(self, secret, conn_string):

+if 'extra' in secret:

+extra_dict = secret['extra']

+kvs = "&".join([f"{key}={value}" for key, value in extra_dict.items()])

Review comment:

What do you think about using [urllib.parse.urlencode](https://docs.python.org/3/library/urllib.parse.html#urllib.parse.urlencode)? I'm afraid the current implementation may have problems with some special characters.
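
To illustrate the concern raised in the review comment above, the following sketch compares the hand-rolled `"&".join(...)` approach with `urllib.parse.urlencode` on values that contain `&`, `=` and a space. The `extra_dict` contents are made up for the example and are not taken from the PR.

```python
from urllib.parse import parse_qs, urlencode

# Hypothetical "extra" values; the "&" and "=" inside the values are the problem case.
extra_dict = {"role": "admin&ops", "note": "a=b c"}

# Hand-rolled join, as in the diff under review: special characters are left as-is.
naive = "&".join(f"{key}={value}" for key, value in extra_dict.items())

# urlencode percent-encodes reserved characters, so the string can be parsed back.
encoded = urlencode(extra_dict)

naive_round_trip = parse_qs(naive)
encoded_round_trip = parse_qs(encoded)
```

Here `encoded` is `role=admin%26ops&note=a%3Db+c` and parses back to the original values, whereas the naive string loses the `&ops` part of `role` when parsed.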


[GitHub] [airflow] mik-laj commented on pull request #17448: Aws secrets manager backend

mik-laj commented on pull request #17448: URL: https://github.com/apache/airflow/pull/17448#issuecomment-893875377 Can you also update the docs? http://airflow.apache.org/docs/apache-airflow-providers-amazon/stable/secrets-backends/aws-secrets-manager.html

[GitHub] [airflow] wolfier opened a new issue #17453: SQLAlchemy constraint for apache-airflow-snowflake-provider installation

wolfier opened a new issue #17453:

URL: https://github.com/apache/airflow/issues/17453

**Apache Airflow version**: 2.1.0, or really any Airflow version

**What happened**:

When I try to install the snowflake provider, the version of SQLAlchemy also gets upgraded. Due to the dependencies of the packages installed by the snowflake provider, more specifically the requirements of snowflake-sqlalchemy, SQLAlchemy is forced to be upgraded.

This upgrade caused some issues with the webserver startup, which generated this unhelpful error log.

```

[2021-08-04 19:37:45,566] {abstract.py:229} ERROR - Failed to add operation

for GET /api/v1/connections

Traceback (most recent call last):

File

"/usr/local/lib/python3.7/site-packages/airflow/_vendor/connexion/apis/abstract.py",

line 209, in add_paths

self.add_operation(path, method)

File

"/usr/local/lib/python3.7/site-packages/airflow/_vendor/connexion/apis/abstract.py",

line 173, in add_operation

pass_context_arg_name=self.pass_context_arg_name

File

"/usr/local/lib/python3.7/site-packages/airflow/_vendor/connexion/operations/__init__.py",

line 8, in make_operation

return spec.operation_cls.from_spec(spec, *args, **kwargs)

File

"/usr/local/lib/python3.7/site-packages/airflow/_vendor/connexion/operations/openapi.py",

line 138, in from_spec

**kwargs

File

"/usr/local/lib/python3.7/site-packages/airflow/_vendor/connexion/operations/openapi.py",

line 89, in __init__

pass_context_arg_name=pass_context_arg_name

File

"/usr/local/lib/python3.7/site-packages/airflow/_vendor/connexion/operations/abstract.py",

line 96, in __init__

self._resolution = resolver.resolve(self)

File

"/usr/local/lib/python3.7/site-packages/airflow/_vendor/connexion/resolver.py",

line 40, in resolve

return Resolution(self.resolve_function_from_operation_id(operation_id),

operation_id)

File

"/usr/local/lib/python3.7/site-packages/airflow/_vendor/connexion/resolver.py",

line 66, in resolve_function_from_operation_id

raise ResolverError(str(e), sys.exc_info())

airflow._vendor.connexion.exceptions.ResolverError:

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/usr/local/bin/airflow", line 8, in

sys.exit(main())

File "/usr/local/lib/python3.7/site-packages/airflow/__main__.py", line

40, in main

args.func(args)

File "/usr/local/lib/python3.7/site-packages/airflow/cli/cli_parser.py",

line 48, in command

return func(*args, **kwargs)

File "/usr/local/lib/python3.7/site-packages/airflow/utils/cli.py", line

91, in wrapper

return f(*args, **kwargs)

File

"/usr/local/lib/python3.7/site-packages/airflow/cli/commands/sync_perm_command.py",

line 26, in sync_perm

appbuilder = cached_app().appbuilder # pylint: disable=no-member

File "/usr/local/lib/python3.7/site-packages/airflow/www/app.py", line

146, in cached_app

app = create_app(config=config, testing=testing)

File "/usr/local/lib/python3.7/site-packages/airflow/www/app.py", line

130, in create_app

init_api_connexion(flask_app)

File

"/usr/local/lib/python3.7/site-packages/airflow/www/extensions/init_views.py",

line 186, in init_api_connexion

specification='v1.yaml', base_path=base_path, validate_responses=True,

strict_validation=True

File

"/usr/local/lib/python3.7/site-packages/airflow/_vendor/connexion/apps/flask_app.py",

line 57, in add_api

api = super(FlaskApp, self).add_api(specification, **kwargs)

File

"/usr/local/lib/python3.7/site-packages/airflow/_vendor/connexion/apps/abstract.py",

line 156, in add_api

options=api_options.as_dict())

File

"/usr/local/lib/python3.7/site-packages/airflow/_vendor/connexion/apis/abstract.py",

line 111, in __init__

self.add_paths()

File

"/usr/local/lib/python3.7/site-packages/airflow/_vendor/connexion/apis/abstract.py",

line 216, in add_paths

self._handle_add_operation_error(path, method, err.exc_info)

File

"/usr/local/lib/python3.7/site-packages/airflow/_vendor/connexion/apis/abstract.py",

line 231, in _handle_add_operation_error

raise value.with_traceback(traceback)

File

"/usr/local/lib/python3.7/site-packages/airflow/_vendor/connexion/resolver.py",

line 61, in resolve_function_from_operation_id

return self.function_resolver(operation_id)

File

"/usr/local/lib/python3.7/site-packages/airflow/_vendor/connexion/utils.py",

line 111, in get_function_from_name

module = importlib.import_module(module_name)

File "/usr/local/lib/python3.7/importlib/__init__.py", line 127, in

import_module

return _bootstrap._gcd_import(name[level:], package, level)

File "", line 1006, in _gcd_import

File "", line 983, in _find_and_load

File "", line 967, in _find_and_load_unlocked

File "", line 677, in

[GitHub] [airflow] jedcunningham opened a new pull request #17452: Pin snowflake-sqlalchemy

jedcunningham opened a new pull request #17452:
URL: https://github.com/apache/airflow/pull/17452

snowflake-sqlalchemy 1.3 now depends on sqlalchemy 1.4+, so pin to a max of 1.2.x. The webserver cannot start when we have sqlalchemy 1.4, throwing the following exceptions:

```
airflow._vendor.connexion.exceptions.ResolverError:
```

and

```
AttributeError: columns
```

As a workaround, users installing `apache-airflow-providers-snowflake` can also add `snowflake-sqlalchemy==1.2.5` (as of writing) to avoid pulling in the latest version of `snowflake-sqlalchemy` and, as a result, the latest `sqlalchemy`.
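
As a rough way to check whether an environment is affected by the problem described above, one could compare the installed SQLAlchemy version string against the 1.4 boundary. The sketch below only parses version strings; the helper names are hypothetical, and feeding it something like `sqlalchemy.__version__` is left to the caller.

```python
from typing import Tuple


def parse_major_minor(version: str) -> Tuple[int, int]:
    """Hypothetical helper: extract (major, minor) from a version string such as '1.3.24'."""
    major, minor = version.split(".")[:2]
    return int(major), int(minor)


def sqlalchemy_is_pre_1_4(version: str) -> bool:
    """Return True when the given SQLAlchemy version is below 1.4 (the boundary named in the PR)."""
    return parse_major_minor(version) < (1, 4)
```

For example, `sqlalchemy_is_pre_1_4("1.3.24")` is true, while a 1.4.x version string returns false and would indicate the upgrade described in the issue has happened.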

[GitHub] [airflow] Wittline commented on issue #8177: provide_context=True not working with PythonVirtualenvOperator

Wittline commented on issue #8177: URL: https://github.com/apache/airflow/issues/8177#issuecomment-893833279 Hi @jatejeda Please check my github, I used the **PythonVirtualenvOperator** in a personal project using the version 2. https://github.com/Wittline/uber-expenses-tracking

[GitHub] [airflow] jatejeda commented on issue #8177: provide_context=True not working with PythonVirtualenvOperator

jatejeda commented on issue #8177:
URL: https://github.com/apache/airflow/issues/8177#issuecomment-893831486

no, it wasn't fixed ... I have the same bug in 2.0.2

> Hi,
>
> Was this bug fixed in Airflow version 2?

[GitHub] [airflow] fatmumuhomer commented on a change in pull request #17236: [Airflow 16364] Add conn_timeout and cmd_timeout params to SSHOperator; add conn_timeout param to SSHHook

fatmumuhomer commented on a change in pull request #17236:
URL: https://github.com/apache/airflow/pull/17236#discussion_r683804274

##
File path: docs/apache-airflow-providers-ssh/connections/ssh.rst
##
@@ -47,7 +47,8 @@ Extra (optional)
* ``key_file`` - Full Path of the private SSH Key file that will be used to connect to the remote_host.
* ``private_key`` - Content of the private key used to connect to the remote_host.
* ``private_key_passphrase`` - Content of the private key passphrase used to decrypt the private key.
-* ``timeout`` - An optional timeout (in seconds) for the TCP connect. Default is ``10``.
+* ``conn_timeout`` - An optional timeout (in seconds) for the TCP connect. Default is ``10``. The paramater to :class:`~airflow.providers.ssh.hooks.ssh.SSHHook` conn_timeout takes precedence.

Review comment:
Sorry - what do you mean by "on the other side"?
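
The precedence rule stated in the quoted docs line (hook argument first, then the connection extra, then the default of ``10``) could be sketched as follows. This is a guess at the intent for illustration only; `resolve_conn_timeout` is a hypothetical helper and not code from `SSHHook`.

```python
from typing import Any, Mapping, Optional

DEFAULT_CONN_TIMEOUT = 10  # default value stated in the quoted documentation


def resolve_conn_timeout(hook_conn_timeout: Optional[int], extra: Mapping[str, Any]) -> int:
    """Hypothetical helper: pick the effective TCP connect timeout in seconds."""
    if hook_conn_timeout is not None:
        # An explicit hook argument takes precedence over the connection extra.
        return hook_conn_timeout
    if "conn_timeout" in extra:
        return int(extra["conn_timeout"])
    return DEFAULT_CONN_TIMEOUT
```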

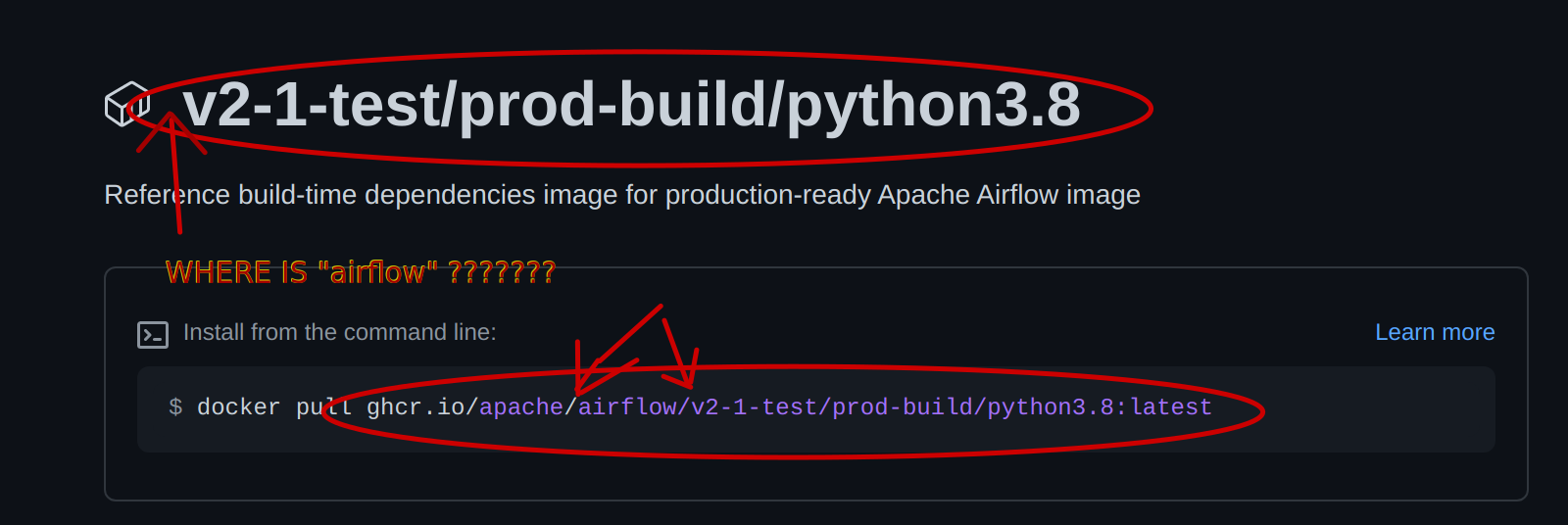

[airflow] 17/17: Switches to "/" convention in ghcr.io images (#17356)

This is an automated email from the ASF dual-hosted git repository.

potiuk pushed a commit to branch v2-1-test

in repository https://gitbox.apache.org/repos/asf/airflow.git

commit 8fc76971c80d1b52a32bc244548c058aaffe27dd

Author: Jarek Potiuk

AuthorDate: Thu Aug 5 18:39:43 2021 +0200

Switches to "/" convention in ghcr.io images (#17356)

We are using ghcr.io as image cache for our CI builds and Breeze, and it seems ghcr.io is being "rebuilt" while running.

We had been using the "airflow-.." image convention before, because multiple nesting levels of images were not supported. However, we recently experienced errors when pushing 2.1 images (https://issues.apache.org/jira/browse/INFRA-22124), and during investigation it turned out that it is now possible to use "/" in the name of the image. While it still does not introduce multiple nesting levels and folder structure, the UI of GitHub treats it like that: if you have an image which starts with "airflow/", the airflow prefix is stripped out, and you can have even more "/" in the name to introduce further hierarchy.

Since we have to change the image naming convention due to the (still unresolved) bug with no permission to push the v2-1-test image, we've decided to change the naming convention for all our cache images to follow this now-available "/" convention, to make it better structured and easier to manage/understand.

Some more optimisations are implemented: Python, prod-build and ci-manifest images are only pushed when the "latest" image is prepared. They are not needed for the COMMIT builds because we only need final images for those builds. This simplified the code quite a bit.

The push of the cache image in CI is done in one job for both CI and PROD images, and the image is rebuilt again with the latest constraints, to account for the latest constraints but to make sure that UPGRADE_TO_NEWER_DEPENDENCIES is not set during the build (which invalidates the cache for the next non-upgrade builds).

Backwards-compatibility was implemented to allow PRs that have not been upgraded to continue building after this one is merged. A workaround has also been implemented to make this change work even if it is not merged yet to main.

This "legacy" mode will be removed in about a week, when everybody has rebased on top of main.

Documentation is updated reflecting those changes.

(cherry picked from commit 1bd3a5c68c88cf3840073d6276460a108f864187)

---

.github/workflows/build-images.yml | 18 +++

.github/workflows/ci.yml | 159 +++--

CI.rst | 51 ---

IMAGES.rst | 24 ++--

README.md | 127 +---

breeze | 17 +--

dev/retag_docker_images.py | 9 +-

scripts/ci/images/ci_prepare_ci_image_on_ci.sh | 19 +--

scripts/ci/images/ci_prepare_prod_image_on_ci.sh | 29 +---

...ify_ci_image.sh => ci_push_legacy_ci_images.sh} | 35 +

...y_ci_image.sh => ci_push_legacy_prod_images.sh} | 35 +

.../images/ci_wait_for_and_verify_all_ci_images.sh | 2 +

.../ci_wait_for_and_verify_all_prod_images.sh | 2 +

.../ci/images/ci_wait_for_and_verify_ci_image.sh | 27 ++--

.../ci/images/ci_wait_for_and_verify_prod_image.sh | 32 +++--

scripts/ci/libraries/_build_images.sh | 109 --

scripts/ci/libraries/_initialization.sh| 16 +--

scripts/ci/libraries/_kind.sh | 16 +--

scripts/ci/libraries/_parallel.sh | 7 +-

scripts/ci/libraries/_push_pull_remove_images.sh | 117 +--

scripts/ci/libraries/_script_init.sh | 2 +-

scripts/ci/selective_ci_checks.sh | 10 +-

22 files changed, 423 insertions(+), 440 deletions(-)

diff --git a/.github/workflows/build-images.yml b/.github/workflows/build-images.yml

index ec8f435..c2a9054 100644

--- a/.github/workflows/build-images.yml

+++ b/.github/workflows/build-images.yml

@@ -203,6 +203,10 @@ jobs:

run: ./scripts/ci/images/ci_prepare_ci_image_on_ci.sh

- name: "Push CI images ${{ matrix.python-version }}:${{ env.TARGET_COMMIT_SHA }}"

run: ./scripts/ci/images/ci_push_ci_images.sh

+ # Remove me on 15th of August 2021 after all users had chance to rebase

+ - name: "Push Legacy CI images ${{ matrix.python-version }}:${{ env.TARGET_COMMIT_SHA }}"

+run: ./scripts/ci/images/ci_push_legacy_ci_images.sh

+if: github.event_name == 'pull_request_target'

build-prod-images:

permissions:

@@ -229,8 +233,11 @@ jobs:

VERSION_SUFFIX_FOR_PYPI: ".dev0"

steps:

- name: Set envs

+# Set pull image tag for CI image build,

[airflow] 11/17: Increases timeout for helm chart builds (#17417)

This is an automated email from the ASF dual-hosted git repository.

potiuk pushed a commit to branch v2-1-test

in repository https://gitbox.apache.org/repos/asf/airflow.git

commit 38c7115599884e1b927fc3c06d8035fb59aff3f2

Author: Jarek Potiuk

AuthorDate: Wed Aug 4 22:02:46 2021 +0200

Increases timeout for helm chart builds (#17417)

(cherry picked from commit 4348239686b3e2d3df17e5e8ed6462dfc6b98164)

---

.github/workflows/ci.yml | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/.github/workflows/ci.yml b/.github/workflows/ci.yml

index 8c31784..22634cf 100644

--- a/.github/workflows/ci.yml

+++ b/.github/workflows/ci.yml

@@ -561,7 +561,7 @@ ${{ hashFiles('.pre-commit-config.yaml') }}"

PACKAGE_FORMAT: "sdist"

tests-helm:

-timeout-minutes: 20

+timeout-minutes: 40

name: "Python unit tests for helm chart"

runs-on: ${{ fromJson(needs.build-info.outputs.runsOn) }}

needs: [build-info, ci-images]

[airflow] 05/17: bump dnspython (#16698)

This is an automated email from the ASF dual-hosted git repository.

potiuk pushed a commit to branch v2-1-test
in repository https://gitbox.apache.org/repos/asf/airflow.git

commit 867adda5e28b5d3c366e3cd3e1f8f60f5f412c4b
Author: kurtqq <47721902+kur...@users.noreply.github.com>
AuthorDate: Tue Jun 29 00:21:24 2021 +0300

bump dnspython (#16698)

(cherry picked from commit 57dcac22137bc958c1ed9f12fa54484e13411a6f)
---
setup.py | 2 +-
1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/setup.py b/setup.py
index e6c1d46..9e8c28d 100644
--- a/setup.py
+++ b/setup.py
@@ -370,7 +370,7 @@ ldap = [
]
leveldb = ['plyvel']
mongo = [
-'dnspython>=1.13.0,<2.0.0',
+'dnspython>=1.13.0,<3.0.0',
'pymongo>=3.6.0',
]
mssql = [

[airflow] 07/17: Update alias for field_mask in Google Memmcache (#16975)

This is an automated email from the ASF dual-hosted git repository.

potiuk pushed a commit to branch v2-1-test
in repository https://gitbox.apache.org/repos/asf/airflow.git

commit 37c935d601ff6c3f501fcfab3bf1b57665fddbc1
Author: Jarek Potiuk
AuthorDate: Tue Jul 13 20:54:38 2021 +0200

Update alias for field_mask in Google Memmcache (#16975)

The July 12 2021 release of google-memcache library removed field_mask alias from the library which broke our typechecking and made google provider unimportable. This PR fixes the import to use the actual import.

(cherry picked from commit a3f5c93806258b5ad396a638ba0169eca7f9d065)
---
.../providers/google/cloud/hooks/cloud_memorystore.py | 16
setup.py | 4 +++-
2 files changed, 11 insertions(+), 9 deletions(-)

diff --git a/airflow/providers/google/cloud/hooks/cloud_memorystore.py b/airflow/providers/google/cloud/hooks/cloud_memorystore.py
index caf1cd6..8f4165b 100644
--- a/airflow/providers/google/cloud/hooks/cloud_memorystore.py
+++ b/airflow/providers/google/cloud/hooks/cloud_memorystore.py
@@ -487,8 +487,8 @@ class CloudMemorystoreHook(GoogleBaseHook):
- ``redisConfig``
If a dict is provided, it must be of the same form as the protobuf message
-:class:`~google.cloud.redis_v1.types.FieldMask`
-:type update_mask: Union[Dict, google.cloud.redis_v1.types.FieldMask]
+:class:`~google.protobuf.field_mask_pb2.FieldMask`
+:type update_mask: Union[Dict, google.protobuf.field_mask_pb2.FieldMask]
:param instance: Required. Update description. Only fields specified in ``update_mask`` are updated.
If a dict is provided, it must be of the same form as the protobuf message
@@ -871,7 +871,7 @@ class CloudMemorystoreMemcachedHook(GoogleBaseHook):
@GoogleBaseHook.fallback_to_default_project_id
def update_instance(
self,
-update_mask: Union[Dict, cloud_memcache.field_mask.FieldMask],
+update_mask: Union[Dict, FieldMask],
instance: Union[Dict, cloud_memcache.Instance],
project_id: str,
location: Optional[str] = None,
@@ -889,9 +889,9 @@ class CloudMemorystoreMemcachedHook(GoogleBaseHook):
- ``displayName``
If a dict is provided, it must be of the same form as the protobuf message
-:class:`~google.cloud.memcache_v1beta2.types.cloud_memcache.field_mask.FieldMask`
+:class:`~google.protobuf.field_mask_pb2.FieldMask`)
:type update_mask:
-Union[Dict, google.cloud.memcache_v1beta2.types.cloud_memcache.field_mask.FieldMask]
+Union[Dict, google.protobuf.field_mask_pb2.FieldMask]
:param instance: Required. Update description. Only fields specified in ``update_mask`` are updated.
If a dict is provided, it must be of the same form as the protobuf message
@@ -935,7 +935,7 @@ class CloudMemorystoreMemcachedHook(GoogleBaseHook):
@GoogleBaseHook.fallback_to_default_project_id
def update_parameters(
self,
-update_mask: Union[Dict, cloud_memcache.field_mask.FieldMask],
+update_mask: Union[Dict, FieldMask],
parameters: Union[Dict, cloud_memcache.MemcacheParameters],
project_id: str,
location: str,
@@ -951,9 +951,9 @@ class CloudMemorystoreMemcachedHook(GoogleBaseHook):
:param update_mask: Required. Mask of fields to update.
If a dict is provided, it must be of the same form as the protobuf message
-:class:`~google.cloud.memcache_v1beta2.types.cloud_memcache.field_mask.FieldMask`
+:class:`~google.protobuf.field_mask_pb2.FieldMask`
:type update_mask:
-Union[Dict, google.cloud.memcache_v1beta2.types.cloud_memcache.field_mask.FieldMask]
+Union[Dict, google.protobuf.field_mask_pb2.FieldMask]
:param parameters: The parameters to apply to the instance.
If a dict is provided, it must be of the same form as the protobuf message
:class:`~google.cloud.memcache_v1beta2.types.cloud_memcache.MemcacheParameters`
diff --git a/setup.py b/setup.py
index 9dde824..46ff73d 100644
--- a/setup.py
+++ b/setup.py
@@ -292,7 +292,9 @@ google = [
'google-cloud-kms>=2.0.0,<3.0.0',
'google-cloud-language>=1.1.1,<2.0.0',
'google-cloud-logging>=2.1.1,<3.0.0',
-'google-cloud-memcache>=0.2.0',
+# 1.1.0 removed field_mask and broke import for released providers
+# We can remove the <1.1.0 limitation after we release new Google Provider
+'google-cloud-memcache>=0.2.0,<1.1.0',
'google-cloud-monitoring>=2.0.0,<3.0.0',
'google-cloud-os-login>=2.0.0,<3.0.0',
'google-cloud-pubsub>=2.0.0,<3.0.0',

[airflow] 15/17: Improve diagnostics message when users have secret_key misconfigured (#17410)

This is an automated email from the ASF dual-hosted git repository.

potiuk pushed a commit to branch v2-1-test
in repository https://gitbox.apache.org/repos/asf/airflow.git

commit 2df7e6e41cf6968b48658c21e989868cb3960027
Author: Jarek Potiuk
AuthorDate: Wed Aug 4 15:15:38 2021 +0200

Improve diagnostics message when users have secret_key misconfigured (#17410)

* Improve diagnostics message when users have secret_key misconfigured

The recently fixed log open-access vulnerability has caused quite a lot of questions and issues from affected users who did not have webserver/secret_key configured for their workers (effectively leading to a random value for those keys on the workers).

This PR explicitly explains the possible reason for the problem and encourages the user to configure their webserver's secret_key in both workers and webserver.

Related to: #17251 and a number of similar slack discussions.

(cherry picked from commit 2321020e29511f3741940440739e4cc01c0a7ba2)
---
airflow/utils/log/file_task_handler.py | 5 +
1 file changed, 5 insertions(+)

diff --git a/airflow/utils/log/file_task_handler.py b/airflow/utils/log/file_task_handler.py
index 2dc9beb..56b9d23 100644
--- a/airflow/utils/log/file_task_handler.py
+++ b/airflow/utils/log/file_task_handler.py
@@ -186,6 +186,11 @@ class FileTaskHandler(logging.Handler):
)
response.encoding = "utf-8"
+if response.status_code == 403:
+log += "*** Please make sure that all your webservers and workers have" \
+ " the same 'secret_key' configured in 'webserver' section !\n***"
+log += "*** See more at https://airflow.apache.org/docs/apache-airflow/" \
+ "stable/configurations-ref.html#secret-key\n***"
# Check if the resource was properly fetched
response.raise_for_status()
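
The idea behind the change above, appending a configuration hint to the log text when the log server answers 403, can be shown in isolation. The sketch below is a simplified standalone version under that assumption; `annotate_forbidden` is a hypothetical helper, not the method in `FileTaskHandler`, and the message text is shortened.

```python
# Shortened hint text (assumed wording, modelled on the commit above).
SECRET_KEY_HINT = (
    "*** Please make sure that all your webservers and workers have"
    " the same 'secret_key' configured in 'webserver' section !\n"
)


def annotate_forbidden(log: str, status_code: int) -> str:
    """Hypothetical helper: add the secret_key hint only for HTTP 403 responses."""
    if status_code == 403:
        log += SECRET_KEY_HINT
    return log
```

Any other status code leaves the log text unchanged, so the hint appears only where a mismatched `secret_key` is a plausible cause.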

[airflow] 13/17: Optimizes structure of the Dockerfiles and use latest tools (#17418)

This is an automated email from the ASF dual-hosted git repository.

potiuk pushed a commit to branch v2-1-test

in repository https://gitbox.apache.org/repos/asf/airflow.git

commit 106d9f09852090da21f3dfb744c69e4be5fd90e5

Author: Jarek Potiuk

AuthorDate: Wed Aug 4 23:32:12 2021 +0200

Optimizes structure of the Dockerfiles and use latest tools (#17418)

* Remove CONTINUE_ON_PIP_CHECK_FAILURE parameter

This parameter was useful when upgrading new dependencies,

however it is going to be replaced with better approach in the

upcoming image convention change.

* Optimizes structure of the Dockerfiles and use latest tools

This PR optimizes the structure of Dockerfile by moving some

expensive operations before the COPY sources so that

rebuilding image when only few sources change is much faster.

At the same time, we upgrade PIP and HELM chart used to latest

versions and clean-up some parameter inconsistencies.

(cherry picked from commit 94b03f6f43277e7332c25fdc63aedfde605f9773)
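Why moving expensive operations before the COPY of sources speeds up rebuilds can be shown with a toy model. This is a sketch under a simplifying assumption: a cache miss at one build step forces every later step to be re-run, which is how Docker's layer cache behaves. The step names below are hypothetical and are not taken from the actual Dockerfile.

```python
def rebuilt_layers(steps, changed):
    """Count the steps that must be re-run when the steps in `changed` miss the cache.

    Models the rule that the first cache miss invalidates every later step.
    """
    for index, step in enumerate(steps):
        if step in changed:
            return len(steps) - index
    return 0


# Hypothetical step orderings, for illustration only.
sources_first = ["COPY sources", "install dependencies", "install airflow"]
deps_first = ["install dependencies", "COPY sources", "install airflow"]

# Only the sources changed between two builds.
print(rebuilt_layers(sources_first, {"COPY sources"}))  # 3
print(rebuilt_layers(deps_first, {"COPY sources"}))  # 2
```

With the dependency installation placed first, a source-only change no longer re-runs that expensive step.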

---

.github/workflows/build-images.yml | 1 -

BREEZE.rst | 10 +

Dockerfile | 27 +--

Dockerfile.ci | 52 +++---

IMAGES.rst | 6 ---

breeze | 9

breeze-complete| 2 +-

docs/docker-stack/build-arg-ref.rst| 6 ---

scripts/ci/libraries/_build_images.sh | 20 -

scripts/ci/libraries/_initialization.sh| 10 ++---

scripts/docker/install_additional_dependencies.sh | 5 +--

scripts/docker/install_airflow.sh | 4 +-

...nstall_airflow_dependencies_from_branch_tip.sh} | 11 ++---

.../docker/install_from_docker_context_files.sh| 2 +-

14 files changed, 55 insertions(+), 110 deletions(-)

diff --git a/.github/workflows/build-images.yml b/.github/workflows/build-images.yml

index f29e199..ec8f435 100644

--- a/.github/workflows/build-images.yml

+++ b/.github/workflows/build-images.yml

@@ -148,7 +148,6 @@ jobs:

BACKEND: postgres

PYTHON_MAJOR_MINOR_VERSION: ${{ matrix.python-version }}

UPGRADE_TO_NEWER_DEPENDENCIES: ${{ needs.build-info.outputs.upgradeToNewerDependencies }}

- CONTINUE_ON_PIP_CHECK_FAILURE: "true"

DOCKER_CACHE: ${{ needs.build-info.outputs.cacheDirective }}

CHECK_IF_BASE_PYTHON_IMAGE_UPDATED: >

${{ github.event_name == 'pull_request_target' && 'false' || 'true' }}

diff --git a/BREEZE.rst b/BREEZE.rst

index 90d3f0b..468709b 100644

--- a/BREEZE.rst

+++ b/BREEZE.rst

@@ -1279,9 +1279,6 @@ This is the current syntax for `./breeze <./breeze>`_:

--upgrade-to-newer-dependencies

Upgrades PIP packages to latest versions available without looking

at the constraints.

- --continue-on-pip-check-failure

- Continue even if 'pip check' fails.

-

-I, --production-image

Use production image for entering the environment and builds (not

for tests).

@@ -2382,9 +2379,9 @@ This is the current syntax for `./breeze <./breeze>`_:

Helm version - only used in case one of kind-cluster commands is

used.

One of:

- v3.2.4

+ v3.6.3

- Default: v3.2.4

+ Default: v3.6.3

--executor EXECUTOR

Executor to use in a kubernetes cluster.

@@ -2435,9 +2432,6 @@ This is the current syntax for `./breeze <./breeze>`_:

--upgrade-to-newer-dependencies

Upgrades PIP packages to latest versions available without looking

at the constraints.

- --continue-on-pip-check-failure

- Continue even if 'pip check' fails.

-

Use different Airflow version at runtime in CI image

diff --git a/Dockerfile b/Dockerfile

index 66c9649..210918c 100644

--- a/Dockerfile

+++ b/Dockerfile

@@ -44,7 +44,8 @@ ARG AIRFLOW_GID="5"

ARG PYTHON_BASE_IMAGE="python:3.6-slim-buster"

-ARG AIRFLOW_PIP_VERSION=21.1.2

+ARG AIRFLOW_PIP_VERSION=21.2.2

+ARG AIRFLOW_IMAGE_REPOSITORY="https://github.com/apache/airflow"

# By default PIP has progress bar but you can disable it.

ARG PIP_PROGRESS_BAR="on"

@@ -108,12 +109,13 @@ ARG DEV_APT_COMMAND="\

&& curl https://dl.yarnpkg.com/debian/pubkey.gpg | apt-key add - > /dev/null \

&& echo 'deb https://dl.yarnpkg.com/debian/ stable main' > /etc/apt/sources.list.d/yarn.list"

ARG ADDITIONAL_DEV_APT_COMMAND="echo"

+ARG ADDITIONAL_DEV_APT_ENV=""

ENV DEV_APT_DEPS=${DEV_APT_DEPS} \

ADDITIONAL_DEV_APT_DEPS=${ADDITIONAL_DEV_APT_DEPS} \

DEV_APT_COMMAND=${DEV_APT_COMMAND} \

ADDITIONAL_DEV_APT_COMMAND=${ADDITIONAL_DEV_APT_COMMAND} \

-ADDITIONAL_DEV_APT_ENV=""

+

[airflow] 12/17: Improve image building documentation for new users (#17409)

This is an automated email from the ASF dual-hosted git repository.

potiuk pushed a commit to branch v2-1-test

in repository https://gitbox.apache.org/repos/asf/airflow.git

commit 8609c82c7cdd3047efee0c55bb214541e3ea293d

Author: Jarek Potiuk

AuthorDate: Wed Aug 4 23:07:22 2021 +0200

Improve image building documentation for new users (#17409)

* Improve image building documentation for new users

This PR improves documentation for building images of airflow,

specifically targeting users who do not have big experience with

building the images. It shows examples on how custom image building

can be easily used to upgrade provider packages as well as how

image building can be easily integrated in quick-start using

docker-compose.

(cherry picked from commit 4ee4199f6f0107f39726fc3551d1bf20b8b1283c)

---

docs/apache-airflow-providers/index.rst | 5 +

docs/apache-airflow/start/docker-compose.yaml | 6 +-

docs/apache-airflow/start/docker.rst| 12

docs/docker-stack/build.rst | 13 +

.../docker-examples/extending/add-apt-packages/Dockerfile | 2 +-

.../extending/add-build-essential-extend/Dockerfile | 2 +-

.../extending/{embedding-dags => add-providers}/Dockerfile | 7 +++

.../docker-examples/extending/add-pypi-packages/Dockerfile | 2 +-

.../docker-examples/extending/embedding-dags/Dockerfile | 2 +-

.../docker-examples/extending/writable-directory/Dockerfile | 2 +-

10 files changed, 43 insertions(+), 10 deletions(-)

diff --git a/docs/apache-airflow-providers/index.rst b/docs/apache-airflow-providers/index.rst

index 7329b7f..71c5132 100644

--- a/docs/apache-airflow-providers/index.rst

+++ b/docs/apache-airflow-providers/index.rst

@@ -21,6 +21,8 @@ Provider packages

.. contents:: :local:

+.. _providers:community-maintained-providers:

+

Community maintained providers

''''''''''''''''''''''''''''''

@@ -31,6 +33,9 @@ Those provider packages are separated per-provider (for example ``amazon``, ``go

etc.). Those packages are available as ``apache-airflow-providers`` packages - separately per each provider

(for example there is an ``apache-airflow-providers-amazon`` or ``apache-airflow-providers-google`` package).

+The full list of community managed providers is available at

+`Providers Index

`_.

+

You can install those provider packages separately in order to interface with a given service. For those

providers that have corresponding extras, the provider packages (latest version from PyPI) are installed

automatically when Airflow is installed with the extra.

diff --git a/docs/apache-airflow/start/docker-compose.yaml b/docs/apache-airflow/start/docker-compose.yaml

index 5a301cf..06991e7 100644

--- a/docs/apache-airflow/start/docker-compose.yaml

+++ b/docs/apache-airflow/start/docker-compose.yaml

@@ -44,7 +44,11 @@

version: '3'

x-airflow-common:

+ # In order to add custom dependencies or upgrade provider packages you can use your extended image.

+ # Comment the image line, place your Dockerfile in the directory where you placed the docker-compose.yaml

+ # and uncomment the "build" line below, Then run `docker-compose build` to build the images.

image: ${AIRFLOW_IMAGE_NAME:-apache/airflow:|version|}

+ # build: .

environment:

AIRFLOW__CORE__EXECUTOR: CeleryExecutor

@@ -60,7 +64,7 @@ x-airflow-common:

- ./dags:/opt/airflow/dags

- ./logs:/opt/airflow/logs

- ./plugins:/opt/airflow/plugins

- user: "${AIRFLOW_UID:-5}:${AIRFLOW_GID:-5}"

+ user: "${AIRFLOW_UID:-5}:${AIRFLOW_GID:-0}"

depends_on:

redis:

condition: service_healthy

diff --git a/docs/apache-airflow/start/docker.rst b/docs/apache-airflow/start/docker.rst

index 77c9333..747f87c 100644

--- a/docs/apache-airflow/start/docker.rst

+++ b/docs/apache-airflow/start/docker.rst

@@ -68,6 +68,18 @@ If you need install a new Python library or system library, you can :doc:`build

.. _initializing_docker_compose_environment:

+Using custom images

+===================

+

+When you want to run Airflow locally, you might want to use an extended image, containing some additional dependencies - for

+example you might add new python packages, or upgrade airflow providers to a later version. This can be done very easily

+by placing a custom Dockerfile alongside your `docker-compose.yaml`. Then you can use `docker-compose build` command to build your image (you need to

+do it only once). You can also add the `--build` flag to your `docker-compose` commands to rebuild the images

+on-the-fly when you run other `docker-compose` commands.

+

+Examples of how you can extend the image with custom providers, python packages,

+apt packages and more can be found in :doc:`Building the image

`.

+

[airflow] 04/17: Add type annotations to setup.py (#16658)