[GitHub] [airflow] potiuk commented on pull request #18419: Fix ``docker-stack`` docs build

potiuk commented on pull request #18419:
URL: https://github.com/apache/airflow/pull/18419#issuecomment-924639800

Thanks @jedcunningham!

--
This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the
URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org

For queries about this service, please contact Infrastructure at:
us...@infra.apache.org

[airflow] branch main updated: Fix ``docker-stack`` docs build (#18419)

This is an automated email from the ASF dual-hosted git repository.

potiuk pushed a commit to branch main

in repository https://gitbox.apache.org/repos/asf/airflow.git

The following commit(s) were added to refs/heads/main by this push:

new bbb5fe2 Fix ``docker-stack`` docs build (#18419)

bbb5fe2 is described below

commit bbb5fe28066809e26232e403417c92c53cfa13d3

Author: Jed Cunningham <66968678+jedcunning...@users.noreply.github.com>

AuthorDate: Wed Sep 22 00:54:21 2021 -0600

Fix ``docker-stack`` docs build (#18419)

---

docs/docker-stack/build-arg-ref.rst | 2 ++

scripts/ci/pre_commit/pre_commit_check_order_dockerfile_extras.py | 4 ++--

2 files changed, 4 insertions(+), 2 deletions(-)

diff --git a/docs/docker-stack/build-arg-ref.rst b/docs/docker-stack/build-arg-ref.rst
index 6dc4238..2f4afe9 100644
--- a/docs/docker-stack/build-arg-ref.rst
+++ b/docs/docker-stack/build-arg-ref.rst
@@ -70,6 +70,7 @@ Those are the most common arguments that you use when you want to build a custom
 List of default extras in the production Dockerfile:

 .. BEGINNING OF EXTRAS LIST UPDATED BY PRE COMMIT
+
 * amazon
 * async
 * celery
@@ -96,6 +97,7 @@ List of default extras in the production Dockerfile:
 * ssh
 * statsd
 * virtualenv
+
 .. END OF EXTRAS LIST UPDATED BY PRE COMMIT

 Image optimization options
diff --git a/scripts/ci/pre_commit/pre_commit_check_order_dockerfile_extras.py b/scripts/ci/pre_commit/pre_commit_check_order_dockerfile_extras.py
index 17309e7..113d0c2 100755
--- a/scripts/ci/pre_commit/pre_commit_check_order_dockerfile_extras.py
+++ b/scripts/ci/pre_commit/pre_commit_check_order_dockerfile_extras.py
@@ -67,12 +67,12 @@ def check_dockerfile():
     is_copying = True
     for line in content:
         if line.startswith(START_LINE):
-            result.append(line)
+            result.append(f"{line}\n")
             is_copying = False
             for extra in extras_list:
                 result.append(f'* {extra}')
         elif line.startswith(END_LINE):
-            result.append(line)
+            result.append(f"\n{line}")
             is_copying = True
         elif is_copying:
             result.append(line)
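The loop changed in the commit above can be sketched as a standalone function. This is a minimal sketch, not the script itself: the function name `rewrite_extras_block`, the assumption that `content` holds lines without trailing newlines, and the final `"\n".join` are all illustrative guesses, since the diff shows only the loop body. The point it illustrates is that the generated bullet list now gets a blank line after the start marker and before the end marker, which reStructuredText generally expects between a `..` comment and a list.

```python
START_LINE = ".. BEGINNING OF EXTRAS LIST UPDATED BY PRE COMMIT"
END_LINE = ".. END OF EXTRAS LIST UPDATED BY PRE COMMIT"


def rewrite_extras_block(content, extras_list):
    """Replace the lines between the two markers with a fresh bullet list.

    Hypothetical helper: `content` is assumed to be a list of lines
    without trailing newlines (the diff does not show how it is read).
    """
    result = []
    is_copying = True
    for line in content:
        if line.startswith(START_LINE):
            # Appending "\n" yields an empty line after the marker once joined.
            result.append(f"{line}\n")
            is_copying = False
            for extra in extras_list:
                result.append(f"* {extra}")
        elif line.startswith(END_LINE):
            # Prepending "\n" yields an empty line before the marker once joined.
            result.append(f"\n{line}")
            is_copying = True
        elif is_copying:
            result.append(line)
    return "\n".join(result)


if __name__ == "__main__":
    sample = ["Intro", START_LINE, "* stale", END_LINE, "Outro"]
    print(rewrite_extras_block(sample, ["amazon", "async"]))
```

Lines between the markers are dropped because `is_copying` is `False` while they are being read, so stale entries never reach the output.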

[GitHub] [airflow] potiuk merged pull request #18419: Fix ``docker-stack`` docs build

potiuk merged pull request #18419:
URL: https://github.com/apache/airflow/pull/18419

[GitHub] [airflow] yuanke7 commented on issue #18422: No response from request api(longer than 30min) while using SimpleHttpOperator

yuanke7 commented on issue #18422:
URL: https://github.com/apache/airflow/issues/18422#issuecomment-924637244

> I had a similar issue recently (with a flask app I created myself).
> I was using postman to do some test and I didn't realize I had the content-length header still defined. It caused all my requests to wait indefinitely until I would get a time-out.
> I'm just guessing here but is it possible that something is happening here as well?
>
> 1. Are you setting any headers?
> 2. What do you get when you query any other endpoint?

No, I didn't set any headers because my own API is really simple. I'll try it, thanks!!!

[GitHub] [airflow] dstandish commented on issue #18401: downstream tasks "upstream failed" when upstream retries and succeeds

dstandish commented on issue #18401:
URL: https://github.com/apache/airflow/issues/18401#issuecomment-924631409

I did nothing manually. The scheduler retried the tasks (which are green now because they succeeded on second try). But the orange tasks never ran -- they seem to've gone immediately to "upstream failed".

[GitHub] [airflow] Jorricks commented on issue #18422: No response from request api(longer than 30min) while using SimpleHttpOperator

Jorricks commented on issue #18422:
URL: https://github.com/apache/airflow/issues/18422#issuecomment-924620575

I had a similar issue recently (with a flask app I created myself).
I was using postman to do some test and I didn't realize I had the content-length header still defined. It caused all my requests to wait indefinitely until I would get a time-out.
I'm just guessing here but is it possible that something is happening here as well?

1. Are you setting any headers?
2. What do you get when you query any other endpoint?

[GitHub] [airflow] Jorricks commented on issue #18401: downstream tasks "upstream failed" when upstream retries and succeeds

Jorricks commented on issue #18401:
URL: https://github.com/apache/airflow/issues/18401#issuecomment-924617163

The DagRuns (circles at the top) make me think that the task first failed completely and then you manually asked it to retry.
So the question: did you manually retry them or did the Airflow scheduler do it automatically for you?

[GitHub] [airflow] Jorricks commented on issue #18409: Setting max_active_runs = 1 - still have multiple instances of the same dag running

Jorricks commented on issue #18409:
URL: https://github.com/apache/airflow/issues/18409#issuecomment-924615761

Hello @denysivanov,

In the example code you submitted, you create a DagRun with status Running. However, this is done directly on the database and thereby you could consider this to be a manual overwrite.

The limits, max_active_runs, are enforced by the scheduler component of Airflow. This component will create DagRuns based on the schedule and put them in the queued state first. It will only mark them as running if that will not surpass any thresholds like the max_active_runs. By completely skipping those scheduler steps, there is no way for Airflow to enforce the limit on max_active_runs.

Hence, I propose you change the code you showed to set the DagRun to Queued state instead of Running. That should fix your issue :)
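The queued-then-running behaviour described in the comment above can be illustrated with a toy model. This is a sketch under stated assumptions, not Airflow's scheduler code: the `Run` class, the state strings, and `promote_queued_runs` are hypothetical names invented for the illustration. It only shows why a run written directly as "running" is never checked against the limit.

```python
from dataclasses import dataclass

QUEUED, RUNNING = "queued", "running"


@dataclass
class Run:
    """Toy stand-in for a DagRun row (hypothetical, not the Airflow model)."""
    run_id: str
    state: str = QUEUED


def promote_queued_runs(runs, max_active_runs):
    """Move queued runs to running only while the active count is below the limit."""
    active = sum(1 for run in runs if run.state == RUNNING)
    for run in runs:
        if active >= max_active_runs:
            break  # limit reached: remaining queued runs keep waiting
        if run.state == QUEUED:
            run.state = RUNNING
            active += 1
    return runs


# Runs created as queued are gated by the limit.
scheduled = promote_queued_runs([Run("a"), Run("b"), Run("c")], max_active_runs=1)

# Runs inserted directly as running never pass through the gate,
# so nothing in this loop can bring the count back under the limit.
bypassed = promote_queued_runs([Run("a", RUNNING), Run("b", RUNNING)], max_active_runs=1)

if __name__ == "__main__":
    print([run.state for run in scheduled])
    print([run.state for run in bypassed])
```

In this model the limit is applied only at the queued-to-running transition, which matches the advice to create the run in the queued state.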

[GitHub] [airflow] pritampbonde commented on issue #18091: Possible PyJWT dependency upgrade?

pritampbonde commented on issue #18091:
URL: https://github.com/apache/airflow/issues/18091#issuecomment-924581725

@potiuk: As suggested, I went to raise a new request, but I found there is already one along similar lines: https://github.com/dpgaspar/Flask-AppBuilder/issues/1691. I have added a comment on that issue; let us know if you need a new request raised with the Flask-AppBuilder team.

[GitHub] [airflow] r-richmond edited a comment on issue #18051: Airflow scheduling only 1 dag at a time leaving other dags in a queued state

r-richmond edited a comment on issue #18051:

URL: https://github.com/apache/airflow/issues/18051#issuecomment-924580052

Going to close this out since I assume #18061 tackled it and I'm on 2.1.4 now. If this issue presents itself again I'll re-open.

Thanks again @ephraimbuddy

Note: the only thing that I still find strange is the error logs from the scheduler that I originally had, in particular the error

```
Traceback (most recent call last):
  File "/usr/local/lib/python3.8/multiprocessing/process.py", line 315, in _bootstrap
    self.run()
  File "/usr/local/lib/python3.8/multiprocessing/process.py", line 108, in run
    self._target(*self._args, **self._kwargs)
  File "/usr/local/lib/python3.8/site-packages/airflow/dag_processing/manager.py", line 370, in _run_processor_manager
    processor_manager.start()
  File "/usr/local/lib/python3.8/site-packages/airflow/dag_processing/manager.py", line 610, in start
    return self._run_parsing_loop()
  File "/usr/local/lib/python3.8/site-packages/airflow/dag_processing/manager.py", line 671, in _run_parsing_loop
    self._collect_results_from_processor(processor)
  File "/usr/local/lib/python3.8/site-packages/airflow/dag_processing/manager.py", line 981, in _collect_results_from_processor
    if processor.result is not None:
  File "/usr/local/lib/python3.8/site-packages/airflow/dag_processing/processor.py", line 321, in result
    if not self.done:
  File "/usr/local/lib/python3.8/site-packages/airflow/dag_processing/processor.py", line 286, in done
    if self._parent_channel.poll():
  File "/usr/local/lib/python3.8/multiprocessing/connection.py", line 255, in poll
    self._check_closed()
  File "/usr/local/lib/python3.8/multiprocessing/connection.py", line 136, in _check_closed
    raise OSError("handle is closed")
```

However, that hasn't come up again so... fingers crossed it won't happen again?


[GitHub] [airflow] r-richmond closed issue #18051: Airflow scheduling only 1 dag at a time leaving other dags in a queued state

r-richmond closed issue #18051:
URL: https://github.com/apache/airflow/issues/18051

[GitHub] [airflow] r-richmond commented on issue #18051: Airflow scheduling only 1 dag at a time leaving other dags in a queued state

r-richmond commented on issue #18051:
URL: https://github.com/apache/airflow/issues/18051#issuecomment-924580052

Going to close this out since I assume #18061 tackled it and I'm on 2.1.4 now. If this issue presents itself again I'll re-open.

Thanks again @ephraimbuddy

[GitHub] [airflow] uranusjr commented on a change in pull request #18226: Add start date to trigger_dagrun operator

uranusjr commented on a change in pull request #18226:
URL: https://github.com/apache/airflow/pull/18226#discussion_r713582288

##
File path: tests/operators/test_trigger_dagrun.py
##
@@ -250,8 +250,36 @@ def test_trigger_dagrun_with_wait_for_completion_true_fail(self):
             execution_date=execution_date,
             wait_for_completion=True,
             poke_interval=10,
-            failed_states=[State.RUNNING],
+            failed_states=[State.QUEUED],
             dag=self.dag,
         )
         with pytest.raises(AirflowException):
             task.run(start_date=execution_date, end_date=execution_date)
+
+    def test_trigger_dagrun_triggering_itself(self):
+        """Test TriggerDagRunOperator that triggers itself"""
+        execution_date = DEFAULT_DATE
+        task = TriggerDagRunOperator(
+            task_id="test_task",
+            trigger_dag_id=self.dag.dag_id,
+            dag=self.dag,
+        )
+        task.run(start_date=execution_date, end_date=execution_date)
+
+        with create_session() as session:
+            dagruns = session.query(DagRun).filter(DagRun.dag_id == self.dag.dag_id).all()

Review comment:
You probably want to add an explicit `order_by` here, since the db does not always return rows in a deterministic order.
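The point of the review comment above can be shown in isolation. The sketch below uses the standard-library `sqlite3` module rather than the SQLAlchemy session from the PR, and the `dag_run` table and its two columns are a simplified, hypothetical stand-in for the real model. Without an `ORDER BY`, SQL makes no promise about row order, so a test that indexes into the result can become flaky; with it, the order is fixed.

```python
import sqlite3

# In-memory database with a simplified, hypothetical dag_run table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE dag_run (dag_id TEXT, execution_date TEXT)")
conn.executemany(
    "INSERT INTO dag_run VALUES (?, ?)",
    [("test_dag", "2021-01-02"), ("test_dag", "2021-01-01")],
)

# The explicit ORDER BY makes the row order part of the query's contract,
# independent of insertion order or the backend's storage layout.
rows = conn.execute(
    "SELECT execution_date FROM dag_run WHERE dag_id = ? ORDER BY execution_date",
    ("test_dag",),
).fetchall()

if __name__ == "__main__":
    print(rows)
```

In SQLAlchemy the same effect comes from chaining `.order_by(...)` onto the query before `.all()`.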

[GitHub] [airflow] uranusjr commented on pull request #18226: Add start date to trigger_dagrun operator

uranusjr commented on pull request #18226:
URL: https://github.com/apache/airflow/pull/18226#issuecomment-924573995

Failure seems unrelated

```
_ test_external_task_marker_exception __

dag_bag_ext =

    def test_external_task_marker_exception(dag_bag_ext):
        """
        Clearing across multiple DAGs should raise AirflowException if more levels are being cleared
        than allowed by the recursion_depth of the first ExternalTaskMarker being cleared.
        """
>       run_tasks(dag_bag_ext)
...
>       assert task_instance.state == state
E       AssertionError: assert None ==
E        +  where None = .state
```

[GitHub] [airflow] uranusjr commented on a change in pull request #18309: task logs for dummy operators

uranusjr commented on a change in pull request #18309:

URL: https://github.com/apache/airflow/pull/18309#discussion_r713580537

##

File path: tests/utils/log/test_log_reader.py

##

@@ -79,29 +81,66 @@ def configure_loggers(self, log_dir, settings_folder):
     @pytest.fixture(autouse=True)
     def prepare_log_files(self, log_dir):
-        dir_path = f"{log_dir}/{self.DAG_ID}/{self.TASK_ID}/2017-09-01T00.00.00+00.00/"
-        os.makedirs(dir_path)
-        for try_number in range(1, 4):
-            with open(f"{dir_path}/{try_number}.log", "w+") as f:
-                f.write(f"try_number={try_number}.\n")
-                f.flush()
+        for dag_id in {self.DAG_ID, self.DUMMY_DAG_ID, self.EXTERNAL_TASK_MARKER_DAG_ID}:
+            dir_path = f"{log_dir}/{dag_id}/{self.TASK_ID}/2017-09-01T00.00.00+00.00/"
+            os.makedirs(dir_path)
+            for try_number in range(1, 4):
+                with open(f"{dir_path}/{try_number}.log", "w+") as f:
+                    f.write(f"try_number={try_number}.\n")
+                    f.flush()
     @pytest.fixture(autouse=True)
-    def prepare_db(self, session, create_task_instance):
-        ti = create_task_instance(
+    def prepare_db(self, session, create_task_instance_of_operator):
+        from airflow.operators.bash import BashOperator
+
+        ti = create_task_instance_of_operator(
+            operator_class=BashOperator,
             dag_id=self.DAG_ID,
             task_id=self.TASK_ID,
             start_date=self.DEFAULT_DATE,
             run_type=DagRunType.SCHEDULED,
             execution_date=self.DEFAULT_DATE,
             state=TaskInstanceState.RUNNING,
+            bash_command="echo 'hello'",
         )
         ti.try_number = 3
         self.ti = ti
         yield
         clear_db_runs()
         clear_db_dags()
+    @pytest.fixture(scope="function")
+    def dummy_ti(self, session, create_task_instance):
+        dummy_ti = create_task_instance(
+            dag_id=self.DUMMY_DAG_ID,
+            task_id=self.TASK_ID,
+            start_date=self.DEFAULT_DATE,
+            run_type=DagRunType.SCHEDULED,
+            execution_date=self.DEFAULT_DATE,
+            state=TaskInstanceState.RUNNING,
+        )
+        dummy_ti.operator = 'DummyOperator'
+        self.dummy_ti = dummy_ti
+        yield
+        clear_db_runs()
+        clear_db_dags()
+
+    @pytest.fixture(scope="function")
+    def external_task_marker_ti(self, session, create_task_instance):
+        external_task_marker_ti = create_task_instance(
+            dag_id=self.EXTERNAL_TASK_MARKER_DAG_ID,
+            task_id=self.TASK_ID,
+            start_date=self.DEFAULT_DATE,
+            run_type=DagRunType.SCHEDULED,
+            execution_date=self.DEFAULT_DATE,
+            state=TaskInstanceState.RUNNING,
+        )
+        external_task_marker_ti.operator = 'ExternalTaskMarker'
+        self.external_task_marker_ti = external_task_marker_ti
+        yield

Review comment:
You can instead `yield external_task_marker_ti` and use the fixture directly instead of `self.external_task_marker_ti` in tests. This makes the code a bit easier to trace IMO.


[GitHub] [airflow] uranusjr commented on pull request #18425: Installation Page

uranusjr commented on pull request #18425:
URL: https://github.com/apache/airflow/pull/18425#issuecomment-924564644

Your change does not match your PR description at all. Is there something wrong?

[GitHub] [airflow] boring-cyborg[bot] commented on pull request #18425: Installation Page

boring-cyborg[bot] commented on pull request #18425:
URL: https://github.com/apache/airflow/pull/18425#issuecomment-924562811

Congratulations on your first Pull Request and welcome to the Apache Airflow community! If you have any issues or are unsure about anything please check our Contribution Guide (https://github.com/apache/airflow/blob/main/CONTRIBUTING.rst)

Here are some useful points:
- Pay attention to the quality of your code (flake8, mypy and type annotations). Our [pre-commits](https://github.com/apache/airflow/blob/main/STATIC_CODE_CHECKS.rst#prerequisites-for-pre-commit-hooks) will help you with that.
- In case of a new feature add useful documentation (in docstrings or in `docs/` directory). Adding a new operator? Check this short [guide](https://github.com/apache/airflow/blob/main/docs/apache-airflow/howto/custom-operator.rst) Consider adding an example DAG that shows how users should use it.
- Consider using [Breeze environment](https://github.com/apache/airflow/blob/main/BREEZE.rst) for testing locally, it’s a heavy docker but it ships with a working Airflow and a lot of integrations.
- Be patient and persistent. It might take some time to get a review or get the final approval from Committers.
- Please follow [ASF Code of Conduct](https://www.apache.org/foundation/policies/conduct) for all communication including (but not limited to) comments on Pull Requests, Mailing list and Slack.
- Be sure to read the [Airflow Coding style](https://github.com/apache/airflow/blob/main/CONTRIBUTING.rst#coding-style-and-best-practices).

Apache Airflow is a community-driven project and together we are making it better 🚀.

In case of doubts contact the developers at:
Mailing List: d...@airflow.apache.org
Slack: https://s.apache.org/airflow-slack

[GitHub] [airflow] DeathGun01 opened a new pull request #18425: Installation Page

DeathGun01 opened a new pull request #18425:
URL: https://github.com/apache/airflow/pull/18425

Briefly defining the installation options to be easily understood before the user dives in on any of them. Along with it, the documentation could use a lot more flowcharts or something similar to go along with the website's UI.

---
**^ Add meaningful description above**

Read the **[Pull Request Guidelines](https://github.com/apache/airflow/blob/main/CONTRIBUTING.rst#pull-request-guidelines)** for more information.
In case of fundamental code change, Airflow Improvement Proposal ([AIP](https://cwiki.apache.org/confluence/display/AIRFLOW/Airflow+Improvements+Proposals)) is needed.
In case of a new dependency, check compliance with the [ASF 3rd Party License Policy](https://www.apache.org/legal/resolved.html#category-x).
In case of backwards incompatible changes please leave a note in [UPDATING.md](https://github.com/apache/airflow/blob/main/UPDATING.md).

[GitHub] [airflow] uranusjr commented on pull request #18424: Delete extra space in adding connections doc

uranusjr commented on pull request #18424:
URL: https://github.com/apache/airflow/pull/18424#issuecomment-924561752

It’s probably better to change the entire block to indent by four spaces (it’s currently indented by three). This makes future changes easier.

[GitHub] [airflow] uranusjr commented on a change in pull request #18042: Fixing ses email backend

uranusjr commented on a change in pull request #18042:

URL: https://github.com/apache/airflow/pull/18042#discussion_r713571942

##

File path: airflow/utils/email.py

##

@@ -87,8 +91,10 @@ def send_email_smtp(
     """
     smtp_mail_from = conf.get('smtp', 'SMTP_MAIL_FROM')
+    mail_from = smtp_mail_from or from_email

Review comment:
Yes, but I don’t think it’s a bad thing since those functions are fundamentally different. It also allows a new backend to choose to *not* respect the `email_from` config if that makes sense.


[GitHub] [airflow] boring-cyborg[bot] commented on pull request #18424: Delete extra space in adding connections doc

boring-cyborg[bot] commented on pull request #18424:
URL: https://github.com/apache/airflow/pull/18424#issuecomment-924561121

Congratulations on your first Pull Request and welcome to the Apache Airflow community! If you have any issues or are unsure about anything please check our Contribution Guide (https://github.com/apache/airflow/blob/main/CONTRIBUTING.rst)

Here are some useful points:
- Pay attention to the quality of your code (flake8, mypy and type annotations). Our [pre-commits](https://github.com/apache/airflow/blob/main/STATIC_CODE_CHECKS.rst#prerequisites-for-pre-commit-hooks) will help you with that.
- In case of a new feature add useful documentation (in docstrings or in `docs/` directory). Adding a new operator? Check this short [guide](https://github.com/apache/airflow/blob/main/docs/apache-airflow/howto/custom-operator.rst) Consider adding an example DAG that shows how users should use it.
- Consider using [Breeze environment](https://github.com/apache/airflow/blob/main/BREEZE.rst) for testing locally, it’s a heavy docker but it ships with a working Airflow and a lot of integrations.
- Be patient and persistent. It might take some time to get a review or get the final approval from Committers.
- Please follow [ASF Code of Conduct](https://www.apache.org/foundation/policies/conduct) for all communication including (but not limited to) comments on Pull Requests, Mailing list and Slack.
- Be sure to read the [Airflow Coding style](https://github.com/apache/airflow/blob/main/CONTRIBUTING.rst#coding-style-and-best-practices).

Apache Airflow is a community-driven project and together we are making it better 🚀.

In case of doubts contact the developers at:
Mailing List: d...@airflow.apache.org
Slack: https://s.apache.org/airflow-slack

[GitHub] [airflow] GonzaloUlla opened a new pull request #18424: Delete extra space in adding connections doc

GonzaloUlla opened a new pull request #18424:
URL: https://github.com/apache/airflow/pull/18424

Extra space in secret keys was causing an error while parsing values.yaml

Before this change, copying and pasting the example from the docs was causing:

```bash
Error: failed to parse values.yaml: error converting YAML to JSON: yaml: line 19: did not find expected key
```

[GitHub] [airflow] fredthomsen opened a new pull request #18423: Add Opus Interactive to INTHEWILD

fredthomsen opened a new pull request #18423:
URL: https://github.com/apache/airflow/pull/18423

Adds Opus Interactive to list of companies using Airflow.

[GitHub] [airflow] uranusjr commented on issue #18398: When clearing a successful Subdag, child tasks are not ran

uranusjr commented on issue #18398:
URL: https://github.com/apache/airflow/issues/18398#issuecomment-924545967

cc @ephraimbuddy

[GitHub] [airflow] uranusjr closed issue #18102: Snowflake provider not accepting private_key_file in extras

uranusjr closed issue #18102:
URL: https://github.com/apache/airflow/issues/18102

[GitHub] [airflow] yuanke7 opened a new issue #18422: No response from request api(longer than 30min) while using SimpleHttpOperator

yuanke7 opened a new issue #18422:

URL: https://github.com/apache/airflow/issues/18422

### Apache Airflow version

2.1.2

### Operating System

CentOS Linux 7

### Versions of Apache Airflow Providers

apache-airflow-providers-amazon==2.0.0

apache-airflow-providers-celery==2.0.0

apache-airflow-providers-cncf-kubernetes==2.0.0

apache-airflow-providers-docker==2.0.0

apache-airflow-providers-elasticsearch==2.0.2

apache-airflow-providers-ftp==2.0.0

apache-airflow-providers-google==4.0.0

apache-airflow-providers-grpc==2.0.0

apache-airflow-providers-hashicorp==2.0.0

apache-airflow-providers-http==2.0.0

apache-airflow-providers-imap==2.0.0

apache-airflow-providers-microsoft-azure==3.0.0

apache-airflow-providers-mysql==2.0.0

apache-airflow-providers-postgres==2.0.0

apache-airflow-providers-redis==2.0.0

apache-airflow-providers-sendgrid==2.0.0

apache-airflow-providers-sftp==2.0.0

apache-airflow-providers-slack==4.0.0

apache-airflow-providers-sqlite==2.0.0

apache-airflow-providers-ssh==2.0.0

### Deployment

Docker-Compose

### Deployment details

_No response_

### What happened

I'm using SimpleHttpOperator to call an API that often takes longer than 30 minutes to respond, but the task stays in the running state even after the API has returned a response.

Here is the code of one of my tasks:

`psd_report = SimpleHttpOperator(task_id='psd_report', method='GET', http_conn_id=WIN_SERVER_1, endpoint='general/psd_report', response_check=lambda response: response.json().get('Success'), response_filter=lambda response: response.json(), execution_timeout=timedelta(hours=1), dag=dag)`

Here is my API response data:

`{"success":True}`

### What you expected to happen

I expected that the task using SimpleHttpOperator would switch from running to success so that downstream tasks could be queued.

### How to reproduce

Create a SimpleHttpOperator task that requests an API needing at least 30 minutes to respond. Trigger this task and you will find that even after the API has returned a response, the task is still in the running state.

### Anything else

_No response_

### Are you willing to submit PR?

- [ ] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of

Conduct](https://github.com/apache/airflow/blob/main/CODE_OF_CONDUCT.md)
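One detail in the task code quoted in the issue above may be worth checking separately from the reported hang: the `response_check` lambda looks up the key `'Success'`, while the sample response uses `"success"`. Dictionary lookups in Python are case-sensitive, so the check as written would return `None` for that payload. The sketch below uses plain dictionaries in place of a real HTTP response, and both function names are hypothetical; it is not a diagnosis of why the task stays in the running state.

```python
def response_check_as_written(payload):
    """Mirrors the lookup in the issue: capital-S key (hypothetical helper)."""
    return payload.get("Success")


def response_check_matching_case(payload):
    """Same lookup with the key spelled as in the sample response."""
    return payload.get("success")


# Stand-in for response.json() with the payload shown in the issue.
payload = {"success": True}

as_written = response_check_as_written(payload)
matching = response_check_matching_case(payload)

if __name__ == "__main__":
    print(as_written, matching)
```

A falsy `response_check` result would normally be treated as a failed check rather than a hang, so this mismatch, if it exists in the real code, is likely a second problem rather than the cause of the one reported.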


[GitHub] [airflow] uranusjr commented on a change in pull request #18370: Properly fix dagrun update state endpoint

uranusjr commented on a change in pull request #18370:

URL: https://github.com/apache/airflow/pull/18370#discussion_r713535348

##
File path: tests/api_connexion/endpoints/test_dag_run_endpoint.py
##
@@ -1162,16 +1162,19 @@ def test_should_raises_403_unauthorized(self):
 class TestPatchDagRunState(TestDagRunEndpoint):
-    @pytest.mark.need_serialized_dag(True)
     @pytest.mark.parametrize("state", ["failed", "success"])
-    @freeze_time(TestDagRunEndpoint.default_time)
-    def test_should_respond_200(self, state, dag_maker, session):
+    def test_should_respond_200(self, state, session):
         dag_id = "TEST_DAG_ID"
         dag_run_id = 'TEST_DAG_RUN_ID'
-        with dag_maker(dag_id):
-            DummyOperator(task_id='task_id')
-        dr = dag_maker.create_dagrun(run_id=dag_run_id)
+        date = datetime(2021, 1, 1)
+        dag = DAG(dag_id, schedule_interval=None, start_date=date)
+        task = DummyOperator(task_id='task_id', dag=dag)
+        dag.tasks.append(task)

Review comment:

Why is this needed?


[GitHub] [airflow] uranusjr commented on a change in pull request #18421: Correctly select DagRun.execution_date from db

uranusjr commented on a change in pull request #18421:
URL: https://github.com/apache/airflow/pull/18421#discussion_r713525205

##
File path: airflow/models/dag.py
##
@@ -1269,8 +1269,8 @@ def get_task_instances_before(
         ``base_date``, or more if there are manual task runs between the requested period, which does not count toward ``num``.
         """
-        min_date = (
-            session.query(DagRun)
+        min_date: Optional[datetime] = (
+            session.query(DagRun.execution_date)

Review comment:
This line is the only relevant fix; the rest are type annotations I added for debugging, they should be useful in the future as well.

[GitHub] [airflow] uranusjr opened a new pull request #18421: Correctly select DagRun.execution_date from db

uranusjr opened a new pull request #18421: URL: https://github.com/apache/airflow/pull/18421 Fix #3413. The importance of test coverage and type annotations…

[GitHub] [airflow] uranusjr commented on a change in pull request #18418: Fix task instance url in webserver utils

uranusjr commented on a change in pull request #18418:

URL: https://github.com/apache/airflow/pull/18418#discussion_r713520604

##
File path: airflow/www/utils.py
##
@@ -228,7 +228,7 @@ def task_instance_link(attr):
     dag_id = attr.get('dag_id')
     task_id = attr.get('task_id')
     execution_date = attr.get('dag_run.execution_date') or attr.get('execution_date') or timezone.utcnow()
-    url = url_for('Airflow.task', dag_id=dag_id, task_id=task_id)
+    url = url_for('Airflow.task', dag_id=dag_id, task_id=task_id, execution_date=execution_date)

Review comment:

```suggestion
    url = url_for('Airflow.task', dag_id=dag_id, task_id=task_id, execution_date=execution_date.isoformat())
```

See https://github.com/apache/airflow/commit/944dcfbb918050274fd3a1cc51d8fdf460ea2429#diff-9baa686d200246adbc21b33dc8e57a2cc395c53330b67d304949479db99eb191L229
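
To illustrate why the suggestion calls `.isoformat()` explicitly, here is a minimal sketch of how a datetime ends up in a query string. It uses the standard library's `urlencode` as a stand-in; how `url_for` serialises values is not shown in this thread, so treat the parallel as an assumption. The date value is made up for the example.

```python
from datetime import datetime, timezone
from urllib.parse import urlencode

# Hypothetical execution date, chosen only for the example.
execution_date = datetime(2021, 9, 21, 12, 30, tzinfo=timezone.utc)

# Passing the datetime object: urlencode falls back to str(),
# which separates date and time with a space.
implicit = urlencode({"execution_date": execution_date})

# Passing an explicit ISO 8601 string keeps the "T" separator.
explicit = urlencode({"execution_date": execution_date.isoformat()})

print(implicit)
print(explicit)
```

The two strings differ only in the date/time separator, which is enough to matter to a consumer that parses the parameter strictly as ISO 8601.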


[GitHub] [airflow] github-actions[bot] commented on pull request #18419: Fix ``docker-stack`` docs build

github-actions[bot] commented on pull request #18419: URL: https://github.com/apache/airflow/pull/18419#issuecomment-924498839 The PR most likely needs to run full matrix of tests because it modifies parts of the core of Airflow. However, committers might decide to merge it quickly and take the risk. If they don't merge it quickly - please rebase it to the latest main at your convenience, or amend the last commit of the PR, and push it with --force-with-lease.

[GitHub] [airflow] jedcunningham closed pull request #18306: Chart: warn when webserver secret key isn't set

jedcunningham closed pull request #18306: URL: https://github.com/apache/airflow/pull/18306

[GitHub] [airflow] uranusjr commented on a change in pull request #18420: Custom timetable must return aware datetimes

uranusjr commented on a change in pull request #18420:
URL: https://github.com/apache/airflow/pull/18420#discussion_r713508561

##
File path: airflow/utils/timezone.py
##
@@ -176,11 +176,11 @@ def parse(string: str, timezone=None) -> DateTime:
 def coerce_datetime(v: Union[None, dt.datetime, DateTime]) -> Optional[DateTime]:
-    """Convert whatever is passed in to ``pendulum.DateTime``."""
+    """Convert whatever is passed in to an timezone-aware ``pendulum.DateTime``."""
     if v is None:
         return None
-    if isinstance(v, DateTime):
-        return v
     if v.tzinfo is None:
         v = make_aware(v)
+    if isinstance(v, DateTime):
+        return v

Review comment:
``pendulum.DateTime`` can still be naive (as demonstrated by this PR’s existence), so we must call `make_aware` to be sure.
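
The ordering point in the review comment can be sketched with the standard library alone. The function name `coerce_to_aware` and the choice of UTC as the fallback zone are assumptions made for this example; the diff above calls Airflow's own `make_aware`, whose behaviour is not reproduced here.

```python
from datetime import datetime, timezone
from typing import Optional


def coerce_to_aware(value: Optional[datetime]) -> Optional[datetime]:
    """Return a timezone-aware datetime, or None if nothing was passed."""
    if value is None:
        return None
    # Check awareness first, whatever subclass the value is,
    # so a naive instance can never slip through an early return.
    if value.tzinfo is None:
        value = value.replace(tzinfo=timezone.utc)  # UTC is an assumed default
    return value


naive = datetime(2021, 9, 21, 12, 0)
aware = coerce_to_aware(naive)
print(aware.tzinfo)  # UTC
```

Placing any type-based early return after the awareness check is the design choice the diff makes: the type of the object says nothing about whether it carries a timezone.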

[GitHub] [airflow] uranusjr opened a new pull request #18420: Custom timetable must return aware datetimes

uranusjr opened a new pull request #18420: URL: https://github.com/apache/airflow/pull/18420 The example included in the recent AIP-39 documentation change had a bug that returns naive datetime for runs scheduled after a Friday. This also fixes a bug in `coerce_datetime` that may return a naive `pendulum.DateTime` in some edge cases.

[GitHub] [airflow] alex-astronomer commented on issue #18217: Audit Logging for Variables, Connections, Pools

alex-astronomer commented on issue #18217: URL: https://github.com/apache/airflow/issues/18217#issuecomment-924485066 Upon further consideration, I'd like to not use a SQLAlchemy event to make this happen. The way that we handle audit logging right now happens (from what I've seen) mostly through decorators and I'd like to take a similar approach to this problem just for clarity. I feel like a sqlalchemy event could have the potential to be lost in the code in some obscure spot and I'd like to have the audit logging occur at the source of the change, right next to the code that makes that change. Right now after the research that I've done both the API and CLI call the `Variable.set(...)` `classmethod` when making their changes to variables. I'm starting with Audit Logging for setting Variables through CLI, API, and UI. What do you think about that @potiuk?
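
A decorator-based approach of the kind described in this comment could look roughly like the sketch below. Everything in it is hypothetical: `audit`, `AUDIT_LOG`, `VARIABLES` and `set_variable` are illustration names, not Airflow APIs, and the in-memory list stands in for whatever store a real implementation would write to.

```python
import functools
from typing import Any, Callable, Dict, List

# Hypothetical in-memory audit trail; a real system would persist this.
AUDIT_LOG: List[Dict[str, Any]] = []


def audit(event: str) -> Callable:
    """Record each successful call to the wrapped function under `event`."""
    def decorator(func: Callable) -> Callable:
        @functools.wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            result = func(*args, **kwargs)
            # Log only after the change itself succeeded.
            AUDIT_LOG.append({"event": event, "args": args, "kwargs": kwargs})
            return result
        return wrapper
    return decorator


# Hypothetical variable store, standing in for the real backend.
VARIABLES: Dict[str, str] = {}


@audit("variable.set")
def set_variable(key: str, value: str) -> None:
    VARIABLES[key] = value


set_variable("env", "prod")
print(AUDIT_LOG[0]["event"])  # variable.set
```

The decorator sits directly on the function that makes the change, which matches the stated goal of keeping the audit logging next to the code it audits.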

[GitHub] [airflow] kazanzhy closed pull request #18394: Add hook_params in SqlSensor

kazanzhy closed pull request #18394: URL: https://github.com/apache/airflow/pull/18394

[airflow] branch constraints-2-2 updated (17cff54 -> 60412d5)

This is an automated email from the ASF dual-hosted git repository.

jedcunningham pushed a change to branch constraints-2-2
in repository https://gitbox.apache.org/repos/asf/airflow.git.

    from 17cff54  Update to latest source/no providers
     add 520291e  Switch main to patched version of Flask OpenId
     add 247f530  Switched to released 1.3.0 version of Flask-OpenID
     add 2a5cd2f  Updated constraints after fixing celery constraints
     add 8f65711  Updating constraints. Build id:1227697754
     add 0ee0232  Bump importlib-resources
     add cdd43c5  Revert "Bump importlib-resources"
     add 1e48096  Updating constraints. Build id:1235775074
     add 157e1d9  Bump importlib-resources
     add d966773  Fixed moto to non-dev version
     add bfc5ccb  Manually bumped constraints to latest
     add 905b005  Updating constraints. Build id:1249723992
     add 9301a9c  Updating constraints. Build id:1251488358
     add f416a4c8  Updating constraints. Build id:1251858004
     add 2eb9e87  Updating constraints. Build id:1252340473
     add f5ed7eb  Updating constraints. Build id:1254678188
     add 60412d5  Updating constraints. Build id:1258278603

No new revisions were added by this update.

Summary of changes:
 constraints-3.6.txt                  | 310 +
 constraints-3.7.txt                  | 325 +-
 constraints-3.8.txt                  | 326 ++-
 constraints-3.9.txt                  | 323 +-
 constraints-no-providers-3.6.txt     | 48 --
 constraints-no-providers-3.7.txt     | 52 +++---
 constraints-no-providers-3.8.txt     | 50 +++---
 constraints-no-providers-3.9.txt     | 47 +++--
 constraints-source-providers-3.6.txt | 128 +++---
 constraints-source-providers-3.7.txt | 142 ---
 constraints-source-providers-3.8.txt | 142 ---
 constraints-source-providers-3.9.txt | 141 ---
 12 files changed, 1081 insertions(+), 953 deletions(-)

[airflow] 01/01: Bump version to 2.2.0b2

This is an automated email from the ASF dual-hosted git repository.

jedcunningham pushed a commit to branch v2-2-test
in repository https://gitbox.apache.org/repos/asf/airflow.git

commit 63b1c270cb09ff897702dafda28c59559f9e6080
Author: Jed Cunningham <66968678+jedcunning...@users.noreply.github.com>
AuthorDate: Tue Sep 21 16:45:33 2021 -0600

    Bump version to 2.2.0b2
---
 setup.py | 2 +-
 1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/setup.py b/setup.py
index 923a0f5..0ca2c24 100644
--- a/setup.py
+++ b/setup.py
@@ -41,7 +41,7 @@ PY39 = sys.version_info >= (3, 9)
 logger = logging.getLogger(__name__)
-version = '2.2.0.dev0'
+version = '2.2.0b2'
 my_dir = dirname(__file__)

[airflow] branch v2-2-test updated (e994d36 -> 63b1c27)

This is an automated email from the ASF dual-hosted git repository.

jedcunningham pushed a change to branch v2-2-test
in repository https://gitbox.apache.org/repos/asf/airflow.git.

    omit e994d36  Add 2.2.0b1 changelog
    omit faff793  Bump version to `2.2.0b1`
     add 4ee8f82  Allow users to submit issues for 2.2.0beta1 (#18165)
     add 8593d7f  Improves quick-start docker-compose warnings and documentation (#18164)
     add 3d6c86c  Fix spelling mistake in documentation (#18167)
     add 3e3c48a  Apply parent dag permissions to subdags. (#18160)
     add 0b2b711  Allow publishing Docker images with more pre-release versions (#18170)
     add f9969c1  Separate Installing from sources section and add more details (#18171)
     add db72f40  Remove redundant single quote from Breeze build image script (#18173)
     add e2d069f  Set task state to failed when pod is DELETED while running (#18095)
     add 29af57b  Fix usage of ``range(len())`` to ``enumerate`` (#18174)
     add 15a9945  Update various pre-commits (#18176)
     add d7aed84  Add note about params on trigger DAG page (#18166)
     add a6c9f95  Fix typo in task fail migration (#18180)
     add 1cf7240  Remove Brent from Collaborators (#18182)
     add f222aad  Fix broken link in ``dev/REFRESHING_CI_CACHE.md`` (#18181)
     add 9d05b32  Doc: Use ``closer.lua`` script for downloading sources (#18179)
     add 42bbeb0  Fix minor issues in Airflow release guide (#18177)
     add 0239776  Deprecate default pod name in EKSPodOperator (#18036)
     add 2f1ed34  sets encoding to utf-8 by default while reading task logs (#17965)
     add 0c43e68  Automatically create section when migrating config (#16814)
     add 1cb456c  Add official download page for providers (#18187)
     add 1fdde76  Fix typo in decorator test (#18191)
     add d6e7c45  Adding Variable.update method and improving detection of variable key collisions (#18159)
     add ec79da0  Doc: Improve installing from sources (#18194)
     add 81ebd78  Added upsert method on S3ToRedshift operator (#18027)
     add d119ae8  Rename LocalToAzureDataLakeStorageOperator to LocalFilesystemToADLSOperator (#18168)
     add 8ae2bb9  Fix error when create external table using table resource (#17998)
     add d3d847a  Swap dag import error dropdown icons (#18207)
     add 2474f89  Migrate Google Cloud Build from Discovery API to Python SDK (#18184)
     add 9c8f7ac  Add heartbeat to TriggererJob (#18129)
     add 9d49772  BugFix: Wipe ``next_kwargs`` and ``next_method`` on task failure (#18210)
     add 3b2b7e7  Upgrade ``importlib-resources`` version (#18209)
     add dd313a5  Omit ``airflow._vendor`` package in coverage report (#18221)
     add b7f366c  Fix failing main due to #18209 (#18215)
     add ca45bba  Chart: Allow running and waiting for DB Migrations using default image (#18218)
     add 27144bd  Doc change: XCOM / Taskflow (#18212)
     add fe6a769  Add some basic metrics to the Triggerer (#18214)
     add 23a68fa  Advanced Params using json-schema (#17100)
     add a9776d3  Remove loading dots even when last run data is empty (#18230)
     add 7a19124  Return explicit error on user-add for duplicated email (#18224)
     add 37ca990  Don't check for `__init__.py` under pycache folders. (#18238)
     add 778be79  Fix example dag of PostgresOperator (#18236)
     add c73004d  Revert Changes to ``importlib-resources`` (#18250)
     add 292751c  Make sure create_user arguments are keyword-ed (#18248)
     add 67fddbf  Improves installing from sources pages for all components (#18251)
     add 848f206  Reduce lengths of the name, username and email fields for this test (#18263)
     add 2d4f3cb  Adding missing `replace` param in docstring (#18241)
     add 9f7c10b  Fix deleting of zipped Dags in Serialized Dag Table (#18243)
     add e7925d8  Fix external_executor_id not being set for manually run jobs (#17207)
     add 9a7243a  Sort adopted tasks in _check_for_stalled_adopted_tasks method (#18208)
     add 902aeb7  Fix DB session handling in XCom.set. (#18240)
     add b0e91f6  Fix dag_run FK check in pre-db upgrade (#18266)
     add 55649f3  Add ``triggerer`` to ``./breeze start-airflow`` command (#18259)
     add 9ed87e5  show next run if not none (#18273)
     add 686c3d7  remove all reference to date in dropdown tests (#18271)
     add 29d700d  Add metrics docs for triggerer metrics (#18254)
     add 184f394  Use try/except when closing temporary file in task_runner (#18269)
     add 671de3f  Improved log handling for zombie tasks (#18277)
     add ed10edd  Silence warnings in tests from using SubDagOperator (#18275)
     add c313feb  Fix web view rendering errors without a DAG run (#18244)
     add 21d53ed  Fix provider test acessing importlib-resources (#18228)
     add f8ba475  Make `XCom.get_one` return full, not abbreviated values (#18274)
     add 2dac083  Fixed wasb hook attempting to create container when getting a blob client (#18287)
     add 73044af  Ch

[airflow] branch main updated (f74d0ab -> a5afd1b)

This is an automated email from the ASF dual-hosted git repository.

jedcunningham pushed a change to branch main
in repository https://gitbox.apache.org/repos/asf/airflow.git.

    from f74d0ab  Require can_edit on DAG privileges to modify TaskInstances and DagRuns (#16634)
     add a5afd1b  Update 2.2.0 changelog for b2 (#18417)

No new revisions were added by this update.

Summary of changes:
 CHANGELOG.txt | 57 -
 1 file changed, 56 insertions(+), 1 deletion(-)

[GitHub] [airflow] jedcunningham merged pull request #18417: Update 2.2.0 changelog for b2

jedcunningham merged pull request #18417: URL: https://github.com/apache/airflow/pull/18417

[GitHub] [airflow] jedcunningham commented on pull request #18417: Update 2.2.0 changelog for b2

jedcunningham commented on pull request #18417: URL: https://github.com/apache/airflow/pull/18417#issuecomment-924481855 Docs build failure is unrelated and will be fixed by #18419.

[GitHub] [airflow] jedcunningham opened a new pull request #18419: Fix ``docker-stack`` docs build

jedcunningham opened a new pull request #18419:
URL: https://github.com/apache/airflow/pull/18419

Missing newlines in the new pre-commit hook are causing the `docker-stack` docs build to fail, e.g.:
https://github.com/apache/airflow/pull/18417/checks?check_run_id=3669346986

```
## Start docs build errors summary ##

== docker-stack ==
-- Error 1
WARNING: Explicit markup ends without a blank line; unexpected unindent.
File path: docker-stack/build-arg-ref.rst (73)
   68 | +--+--+-+
   69 |
   70 | List of default extras in the production Dockerfile:
   71 |
   72 | .. BEGINNING OF EXTRAS LIST UPDATED BY PRE COMMIT
>  73 | * amazon
   74 | * async
   75 | * celery
   76 | * cncf.kubernetes
   77 | * dask
   78 | * docker
-- Error 2
WARNING: Bullet list ends without a blank line; unexpected unindent.
File path: docker-stack/build-arg-ref.rst (99)
   94 | * sftp
   95 | * slack
   96 | * ssh
   97 | * statsd
   98 | * virtualenv
>  99 | .. END OF EXTRAS LIST UPDATED BY PRE COMMIT
  100 |
  101 | Image optimization options
  102 | ..
  103 |
  104 | The main advantage of Customization method of building Airflow image, is that it allows to build highly optimized image because

## End docs build errors summary ##
```
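
Both warnings come down to the same reStructuredText rule: a comment marker and a bullet list must be separated by a blank line. A generator that writes the list between the two markers therefore needs to emit those blank lines itself. The sketch below shows one way to do that; `render_extras_block` is a hypothetical helper written for this example, not the code of the actual pre-commit script, while the marker strings are taken from the error output above.

```python
from typing import List

BEGIN_MARKER = ".. BEGINNING OF EXTRAS LIST UPDATED BY PRE COMMIT"
END_MARKER = ".. END OF EXTRAS LIST UPDATED BY PRE COMMIT"


def render_extras_block(extras: List[str]) -> List[str]:
    """Return the marker comments and bullet list, separated by blank lines."""
    lines = [BEGIN_MARKER, ""]          # blank line after the opening comment
    lines.extend(f"* {extra}" for extra in extras)
    lines.extend(["", END_MARKER])      # blank line before the closing comment
    return lines


block = render_extras_block(["amazon", "async", "celery"])
print("\n".join(block))
```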

[GitHub] [airflow] github-actions[bot] commented on pull request #16498: gitpodify Apache Airflow - online development workspace

github-actions[bot] commented on pull request #16498: URL: https://github.com/apache/airflow/pull/16498#issuecomment-924475513 This pull request has been automatically marked as stale because it has not had recent activity. It will be closed in 5 days if no further activity occurs. Thank you for your contributions.

[GitHub] [airflow] github-actions[bot] commented on pull request #17354: Add Docker Sensor

github-actions[bot] commented on pull request #17354: URL: https://github.com/apache/airflow/pull/17354#issuecomment-924475476 This pull request has been automatically marked as stale because it has not had recent activity. It will be closed in 5 days if no further activity occurs. Thank you for your contributions.

[GitHub] [airflow] jedcunningham closed pull request #18417: Update 2.2.0 changelog for b2

jedcunningham closed pull request #18417: URL: https://github.com/apache/airflow/pull/18417

[GitHub] [airflow] ephraimbuddy opened a new pull request #18418: Fix task instance url in webserver utils

ephraimbuddy opened a new pull request #18418: URL: https://github.com/apache/airflow/pull/18418 `execution_date` was omitted when generating the task instance URL. This PR fixes it

[GitHub] [airflow] github-actions[bot] commented on pull request #18417: Update 2.2.0 changelog for b2

github-actions[bot] commented on pull request #18417: URL: https://github.com/apache/airflow/pull/18417#issuecomment-924434477 The PR is likely ready to be merged. No tests are needed as no important environment files, nor python files were modified by it. However, committers might decide that full test matrix is needed and add the 'full tests needed' label. Then you should rebase it to the latest main or amend the last commit of the PR, and push it with --force-with-lease.

[GitHub] [airflow] jedcunningham commented on a change in pull request #18417: Update 2.2.0 changelog for b2

jedcunningham commented on a change in pull request #18417:
URL: https://github.com/apache/airflow/pull/18417#discussion_r713460021

##
File path: CHANGELOG.txt
##
@@ -1,13 +1,20 @@
-Airflow 2.2.0b1, TBD
+Airflow 2.2.0b2, TBD
 -
 New Features
 - AIP-39: Handle DAG scheduling with timetables (#15397)
 - AIP-39: ``DagRun.data_interval_start|end`` (#16352)
+- Add docs for AIP 39: Timetables (#17552)
 - AIP-40: Add Deferrable "Async" Tasks (#15389)
+- Add a Docker Taskflow decorator (#15330)
 - Add Airflow Standalone command (#15826)
+- Production-level support for MSSQL (#18382)

Review comment:
Probably should be a docs-only change instead on second look.

[GitHub] [airflow] kaxil commented on a change in pull request #18417: Update 2.2.0 changelog for b2

kaxil commented on a change in pull request #18417:
URL: https://github.com/apache/airflow/pull/18417#discussion_r713457018

##
File path: CHANGELOG.txt
##
@@ -119,6 +133,33 @@ Improvements
 Bug Fixes
 "
+- Properly handle ti state difference between executor and scheduler (#17819)
+- Fix stuck "queued" tasks in KubernetesExecutor (#18152)
+- Don't permanently add zip DAGs to ``sys.path`` (#18384)
+- Fix random deadlocks in MSSQL database (#18362)
+- Deactivating DAGs which have been removed from files (#17121)
+- Dag bulk_sync_to_db dag_tag only remove not exists (#8231)
+- Graceful scheduler shutdown on error (#18092)
+- Fix mini scheduler not respecting ``wait_for_downstream`` dep (#18338)
+- Pass exception to ``run_finished_callback`` for Debug Executor (#17983)
+- Make `XCom.get_one` return full, not abbreviated values (#18274)
+- Fix web view rendering errors without a DAG run (#18244)
+- Use try/except when closing temporary file in task_runner (#18269)
+- show next run if not none (#18273)
+- Fix dag_run FK check in pre-db upgrade (#18266)
+- Fix DB session handling in XCom.set. (#18240)

Review comment:

```suggestion
- Fix DB session handling in ``XCom.set`` (#18240)
```

##
File path: CHANGELOG.txt
##
@@ -37,6 +44,13 @@ New Features
 Improvements
+- Require can_edit on DAG privileges to modify TaskInstances and DagRuns (#16634)
+- Make Kubernetes job description fit on one log line (#18377)
+- Always draw borders if task instance state is null or undefined (#18033)
+- Inclusive Language (#18349)
+- Improved log handling for zombie tasks (#18277)
+- Adding Variable.update method and improving detection of variable key collisions (#18159)

Review comment:

```suggestion
- Adding ``Variable.update`` method and improving detection of variable key collisions (#18159)
```

##
File path: CHANGELOG.txt
##
@@ -173,6 +214,14 @@ Bug Fixes
 Doc only changes
+- Explain scheduler fine-tuning better (#18356)
+- Added example JSON for airflow pools import (#18376)
+- add sla_miss_callback section to the documentation (#18305)

Review comment:

```suggestion
- Add ``sla_miss_callback`` section to the documentation (#18305)
```

##
File path: CHANGELOG.txt
##
@@ -119,6 +133,33 @@ Improvements
 Bug Fixes
 "
+- Properly handle ti state difference between executor and scheduler (#17819)
+- Fix stuck "queued" tasks in KubernetesExecutor (#18152)
+- Don't permanently add zip DAGs to ``sys.path`` (#18384)
+- Fix random deadlocks in MSSQL database (#18362)
+- Deactivating DAGs which have been removed from files (#17121)
+- Dag bulk_sync_to_db dag_tag only remove not exists (#8231)
+- Graceful scheduler shutdown on error (#18092)
+- Fix mini scheduler not respecting ``wait_for_downstream`` dep (#18338)
+- Pass exception to ``run_finished_callback`` for Debug Executor (#17983)
+- Make `XCom.get_one` return full, not abbreviated values (#18274)
+- Fix web view rendering errors without a DAG run (#18244)
+- Use try/except when closing temporary file in task_runner (#18269)
+- show next run if not none (#18273)
+- Fix dag_run FK check in pre-db upgrade (#18266)
+- Fix DB session handling in XCom.set. (#18240)
+- Sort adopted tasks in _check_for_stalled_adopted_tasks method (#18208)
+- Fix external_executor_id not being set for manually run jobs (#17207)
+- Fix deleting of zipped Dags in Serialized Dag Table (#18243)
+- Return explicit error on user-add for duplicated email (#18224)
+- Remove loading dots even when last run data is empty (#18230)
+- BugFix: Wipe ``next_kwargs`` and ``next_method`` on task failure (#18210)
+- Add heartbeat to TriggererJob (#18129)

Review comment:

```suggestion
- Add heartbeat to ``TriggererJob`` (#18129)
```

##
File path: CHANGELOG.txt
##
@@ -1,13 +1,20 @@
-Airflow 2.2.0b1, TBD
+Airflow 2.2.0b2, TBD
 -
 New Features
 - AIP-39: Handle DAG scheduling with timetables (#15397)
 - AIP-39: ``DagRun.data_interval_start|end`` (#16352)
+- Add docs for AIP 39: Timetables (#17552)
 - AIP-40: Add Deferrable "Async" Tasks (#15389)
+- Add a Docker Taskflow decorator (#15330)
 - Add Airflow Standalone command (#15826)
+- Production-level support for MSSQL (#18382)

Review comment:
Probably worth moving to Misc/Internal

```suggestion
- Dockerfile: Production-level support for MSSQL (#18382)
```

##
File path: CHANGELOG.txt
##
@@ -119,6 +133,33 @@ Improvements
 Bug Fixes
 "
+- Properly handle ti state difference between executor and scheduler (#17819)
+- Fix stuck "queued" tasks in KubernetesExecutor (#18152)
+- Don't permanently add zip DAGs to ``sys.path`` (#18384)
+- Fix random deadlocks in MSSQL database (#18362)
+- Deactivating DAGs which have been removed from files (#17121)
+- Dag bulk_sync_to_db dag_tag only remove not exists

[GitHub] [airflow] maxcountryman commented on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

maxcountryman commented on issue #13542: URL: https://github.com/apache/airflow/issues/13542#issuecomment-924426797 @Jorricks thanks for your note--we'll do both 1. and 2. when this happens next. We're actually using the Celery executor with Redis; I don't see any weird resource contention or other obvious issues between the ECS services. The overall status of the DagRun in these situations is "running".

[GitHub] [airflow] andrewgodwin commented on issue #18392: TriggerEvent fires, and then defers a second time (doesn't fire a second time though).

andrewgodwin commented on issue #18392: URL: https://github.com/apache/airflow/issues/18392#issuecomment-924416527 Yup, @MatrixManAtYrService and I discussed this yesterday. It's not a bad bug - triggers are designed to be re-entrant, and it won't resume the task twice - just something that needs cleaning up a little to save some cycles.

[GitHub] [airflow] potiuk edited a comment on pull request #18382: Production-level support for MSSQL

potiuk edited a comment on pull request #18382: URL: https://github.com/apache/airflow/pull/18382#issuecomment-924410641 > How much does this add to the docker images size btw? It increases the size of the image by 4MB - from 972 MB to 976 MB (~ 0.5%) See the discussion here: https://apache-airflow.slack.com/archives/CQAMHKWSJ/p1632131354042400

[GitHub] [airflow] kazanzhy commented on a change in pull request #17592: Support for passing arguments to SqlSensor underlying hooks

kazanzhy commented on a change in pull request #17592:

URL: https://github.com/apache/airflow/pull/17592#discussion_r712553155

##

File path: tests/sensors/test_sql_sensor.py

##

@@ -242,6 +242,22 @@ def test_sql_sensor_postgres_poke_invalid_success(self,

mock_hook):

op.poke(None)

assert "self.success is present, but not callable -> [1]" ==

str(ctx.value)

+@mock.patch('airflow.sensors.sql.BaseHook')

+def test_sql_sensor_bigquery_hook_kwargs(self, mock_hook):

+op = SqlSensor(

+task_id='sql_sensor_check',

+conn_id='postgres_default',

+sql="SELECT 1",

+hook_kwargs={

+'use_legacy_sql': False,

+'location': 'test_location',

+},

+)

+

+mock_hook.get_connection('google_cloud_default').conn_type =

"google_cloud_platform"

+assert op._get_hook().use_legacy_sql

+assert op._get_hook().location == 'test_location'

Review comment:

I think this may raise an AssertionError because

`op._get_hook().use_legacy_sql` is False.


[GitHub] [airflow] potiuk commented on pull request #18382: Production-level support for MSSQL

potiuk commented on pull request #18382: URL: https://github.com/apache/airflow/pull/18382#issuecomment-924410641 > How much does this add to the docker images size btw? It increases the size of the image by 4MB - from 972 to 976. See the discussion here: https://apache-airflow.slack.com/archives/CQAMHKWSJ/p1632131354042400

[GitHub] [airflow] SamWheating commented on a change in pull request #18370: Properly fix dagrun update state endpoint

SamWheating commented on a change in pull request #18370:

URL: https://github.com/apache/airflow/pull/18370#discussion_r713422834

##

File path: airflow/api_connexion/endpoints/dag_run_endpoint.py

##

@@ -302,6 +306,10 @@ def update_dag_run_state(dag_id: str, dag_run_id: str, session) -> dict:

raise BadRequest(detail=str(err))

state = post_body['state']

-dag_run.set_state(state=DagRunState(state))

-session.merge(dag_run)

+dag = current_app.dag_bag.get_dag(dag_id)

+if state == State.SUCCESS:

+set_dag_run_state_to_success(dag, dag_run.execution_date, commit=True)

+else:

+set_dag_run_state_to_failed(dag, dag_run.execution_date, commit=True)

Review comment:

My mistake, I didn't know about - Thanks for the explanation.

[GitHub] [airflow] SamWheating commented on a change in pull request #18370: Properly fix dagrun update state endpoint

SamWheating commented on a change in pull request #18370:

URL: https://github.com/apache/airflow/pull/18370#discussion_r713422834

##

File path: airflow/api_connexion/endpoints/dag_run_endpoint.py

##

@@ -302,6 +306,10 @@ def update_dag_run_state(dag_id: str, dag_run_id: str, session) -> dict:

raise BadRequest(detail=str(err))

state = post_body['state']

-dag_run.set_state(state=DagRunState(state))

-session.merge(dag_run)

+dag = current_app.dag_bag.get_dag(dag_id)

+if state == State.SUCCESS:

+set_dag_run_state_to_success(dag, dag_run.execution_date, commit=True)

+else:

+set_dag_run_state_to_failed(dag, dag_run.execution_date, commit=True)

Review comment:

Oh I didn't see that - super cool. Thanks for clearing this up.

[airflow] branch main updated: Require can_edit on DAG privileges to modify TaskInstances and DagRuns (#16634)

This is an automated email from the ASF dual-hosted git repository.

jedcunningham pushed a commit to branch main

in repository https://gitbox.apache.org/repos/asf/airflow.git

The following commit(s) were added to refs/heads/main by this push:

new f74d0ab Require can_edit on DAG privileges to modify TaskInstances

and DagRuns (#16634)

f74d0ab is described below

commit f74d0ab38e00b83b49e20d410736e2a8c42de095

Author: Jorrick Sleijster

AuthorDate: Tue Sep 21 23:04:38 2021 +0200

Require can_edit on DAG privileges to modify TaskInstances and DagRuns

(#16634)

---

INTHEWILD.md | 1 +

.../api_connexion/endpoints/dag_run_endpoint.py| 2 +-

.../endpoints/task_instance_endpoint.py| 4 +-

airflow/www/views.py | 134 +++--

.../endpoints/test_dag_run_endpoint.py | 23 +++-

.../endpoints/test_task_instance_endpoint.py | 58 +++--

tests/www/views/test_views_acl.py | 2 +-

tests/www/views/test_views_dagrun.py | 77 +++-

tests/www/views/test_views_decorators.py | 56 -

tests/www/views/test_views_tasks.py| 83 -

10 files changed, 386 insertions(+), 54 deletions(-)

diff --git a/INTHEWILD.md b/INTHEWILD.md

index c1a5b32..588db42 100644

--- a/INTHEWILD.md

+++ b/INTHEWILD.md

@@ -33,6 +33,7 @@ Currently, **officially** using Airflow:

1. [Accenture](https://www.accenture.com/au-en)

[[@nijanthanvijayakumar](https://github.com/nijanthanvijayakumar)]

1. [AdBOOST](https://www.adboost.sk) [[AdBOOST](https://github.com/AdBOOST)]

1. [Adobe](https://www.adobe.com/)

[[@mishikaSingh](https://github.com/mishikaSingh),

[@ramandumcs](https://github.com/ramandumcs),

[@vardancse](https://github.com/vardancse)]

+1. [Adyen](https://www.adyen.com/) [[@jorricks](https://github.com/jorricks)]

1. [Agari](https://github.com/agaridata) [[@r39132](https://github.com/r39132)]

1. [Agoda](https://agoda.com) [[@akki](https://github.com/akki)]

1. [Airbnb](https://airbnb.io/)

[[@mistercrunch](https://github.com/mistercrunch),

[@artwr](https://github.com/artwr)]

diff --git a/airflow/api_connexion/endpoints/dag_run_endpoint.py

b/airflow/api_connexion/endpoints/dag_run_endpoint.py

index 29e0efc..9327804 100644

--- a/airflow/api_connexion/endpoints/dag_run_endpoint.py

+++ b/airflow/api_connexion/endpoints/dag_run_endpoint.py

@@ -40,7 +40,7 @@ from airflow.utils.types import DagRunType

@security.requires_access(

[

-(permissions.ACTION_CAN_READ, permissions.RESOURCE_DAG),

+(permissions.ACTION_CAN_EDIT, permissions.RESOURCE_DAG),

(permissions.ACTION_CAN_DELETE, permissions.RESOURCE_DAG_RUN),

]

)

diff --git a/airflow/api_connexion/endpoints/task_instance_endpoint.py

b/airflow/api_connexion/endpoints/task_instance_endpoint.py

index 361d29e..cfa27ac 100644

--- a/airflow/api_connexion/endpoints/task_instance_endpoint.py

+++ b/airflow/api_connexion/endpoints/task_instance_endpoint.py

@@ -227,7 +227,7 @@ def get_task_instances_batch(session=None):

@security.requires_access(

[

-(permissions.ACTION_CAN_READ, permissions.RESOURCE_DAG),

+(permissions.ACTION_CAN_EDIT, permissions.RESOURCE_DAG),

(permissions.ACTION_CAN_READ, permissions.RESOURCE_DAG_RUN),

(permissions.ACTION_CAN_EDIT, permissions.RESOURCE_TASK_INSTANCE),

]

@@ -261,7 +261,7 @@ def post_clear_task_instances(dag_id: str, session=None):

@security.requires_access(

[

-(permissions.ACTION_CAN_READ, permissions.RESOURCE_DAG),

+(permissions.ACTION_CAN_EDIT, permissions.RESOURCE_DAG),

(permissions.ACTION_CAN_READ, permissions.RESOURCE_DAG_RUN),

(permissions.ACTION_CAN_EDIT, permissions.RESOURCE_TASK_INSTANCE),

]

diff --git a/airflow/www/views.py b/airflow/www/views.py

index ee09908..b2c8712 100644

--- a/airflow/www/views.py

+++ b/airflow/www/views.py

@@ -27,9 +27,10 @@ import sys

import traceback

from collections import defaultdict

from datetime import timedelta

+from functools import wraps

from json import JSONDecodeError

from operator import itemgetter

-from typing import Any, Iterable, List, Optional, Tuple

+from typing import Any, Callable, Iterable, List, Optional, Set, Tuple, Union

from urllib.parse import parse_qsl, unquote, urlencode, urlparse

import lazy_object_proxy

@@ -1515,7 +1516,7 @@ class Airflow(AirflowBaseView):

@expose('/run', methods=['POST'])

@auth.has_access(

[

-(permissions.ACTION_CAN_READ, permissions.RESOURCE_DAG),

+(permissions.ACTION_CAN_EDIT, permissions.RESOURCE_DAG),

(permissions.ACTION_CAN_CREATE,

permissions.RESOURCE_TASK_INSTANCE),

]

)

@@ -1793,7 +1794,7 @@ class Airflow(AirflowBaseView):

@expose('/clear', methods=['POST'])

@auth.has_access(

[

-(permissions.ACTION_CAN_READ, permissions.RESOURCE_DAG),

+

[GitHub] [airflow] jedcunningham merged pull request #16634: Require can_edit on DAG privileges to modify TaskInstances and DagRuns

jedcunningham merged pull request #16634: URL: https://github.com/apache/airflow/pull/16634

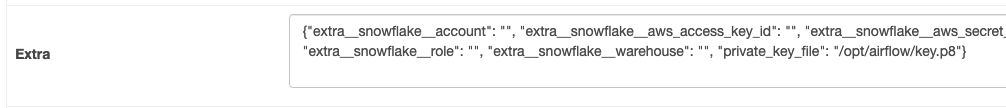

[GitHub] [airflow] weiminmei commented on issue #18102: Snowflake provider not accepting private_key_file in extras

weiminmei commented on issue #18102: URL: https://github.com/apache/airflow/issues/18102#issuecomment-924381869 Hi, On version 2.2.0b1, looks like this is addressed.

[GitHub] [airflow] kimyen commented on issue #18317: Better Backfill User Experience

kimyen commented on issue #18317:

URL: https://github.com/apache/airflow/issues/18317#issuecomment-924377251

> What I'm after is a way to insert multiple dag-runs for historical dates

in bulk from the UI, possibly with some tasks already marked as

complete/skipped, as well as clearing tasks/dagruns between certain dates.

@thejens, without knowing your exact use case, everything you listed here

reminds me of this doc I wrote. I think there are already multiple ways to

"backfill" using the scheduler. See Manual Backfill > Airflow

builtin options.

*Note that the "backfill request view" in the doc below is for the first

backfill tool I mentioned above.*

# How to backfill your DAG

## Background

There are multiple ways to backfill a DAG in Airflow. We will attempt to

describe when to use each option.

### Use case 1: New DAG

A new DAG is created on April 4th, 2020 and we want the DAG to collect

data starting from March 1st, 2020.

To achieve this, while writing the DAG definition, we can set `catchup=True`

on the DAG and `"start_date": datetime(2020, 3, 1)` in the DAG's `default_args`.

When the code is deployed to production, the backfill (from March 1st to

current time) is automatically started by the scheduler.
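
To make the size of that backfill concrete, here is a rough sketch of which runs a catchup would cover. It is not Airflow code: `catchup_logical_dates` is a hypothetical helper, and it assumes a daily schedule and that "now" is midnight on April 4th. It relies only on the fact that a run is scheduled once its interval has fully elapsed.

```python
from datetime import datetime, timedelta


def catchup_logical_dates(start_date, now, interval=timedelta(days=1)):
    """Hypothetical helper: list the logical dates a catchup would cover.

    A run for logical date d covers [d, d + interval) and only becomes
    eligible once that interval has ended, i.e. when d + interval <= now.
    """
    dates = []
    current = start_date
    while current + interval <= now:
        dates.append(current)
        current += interval
    return dates


# Assumed scenario from Use case 1: start_date March 1st, deployed April 4th.
runs = catchup_logical_dates(datetime(2020, 3, 1), datetime(2020, 4, 4))
print(len(runs), runs[0].date(), runs[-1].date())
```

Under these assumptions the scheduler would have one run per day from March 1st through April 3rd to work through; a different schedule interval changes the count accordingly.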

### Use case 2: Extend DAG runs further in the past

An existing DAG has DAG runs starting from March 1st, 2020. We want to

extend it to January 1st, 2020. We can achieve this by:

- ensuring that the DAG has `catchup=True`, and

- changing the start date to January 1st, 2020

When the code is deployed to production, the backfill (from January 1st to

March 1st) is automatically started by the scheduler.

**If there are any successful DAG runs after the start date, Airflow will not

catch up. See [Start from Dag Runs view](#start-from-dag-runs-view)

to delete the successful DAG run and trigger the catchup process.**

This can also be achieved by manually backfilling the DAG from January 1st

to March 1st. See [manual backfill section](#manual-backfill).

### Use case 3: DAG logic change

An existing DAG was used to calculate some metrics. However, the

calculations need to be updated, and all past successful DAG runs need to be

rerun to update the resulting data for those days.

For example, the change was deployed to production on May 1st, 2020. The May

1st DAG run is then scheduled to run and uses the new DAG logic. However, all