[GitHub] [airflow] dstandish commented on a change in pull request #18447: add RedshiftStatementHook, RedshiftOperator

dstandish commented on a change in pull request #18447:

URL: https://github.com/apache/airflow/pull/18447#discussion_r717216128

##
File path: tests/providers/amazon/aws/hooks/test_redshift_statement.py
##

@@ -0,0 +1,72 @@
+#
+# Licensed to the Apache Software Foundation (ASF) under one
+# or more contributor license agreements. See the NOTICE file
+# distributed with this work for additional information
+# regarding copyright ownership. The ASF licenses this file
+# to you under the Apache License, Version 2.0 (the
+# "License"); you may not use this file except in compliance
+# with the License. You may obtain a copy of the License at
+#
+# http://www.apache.org/licenses/LICENSE-2.0
+#
+# Unless required by applicable law or agreed to in writing,
+# software distributed under the License is distributed on an
+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
+# KIND, either express or implied. See the License for the
+# specific language governing permissions and limitations
+# under the License.
+#
+import json
+import unittest
+from unittest import mock
+
+from airflow.models import Connection
+from airflow.providers.amazon.aws.hooks.redshift_statement import RedshiftStatementHook
+
+
+class TestRedshiftStatementHookConn(unittest.TestCase):
+    def setUp(self):
+        super().setUp()
+
+        self.connection = Connection(login='login', password='password', host='host', port=5439, schema="dev")
+
+        class UnitTestRedshiftStatementHook(RedshiftStatementHook):
+            conn_name_attr = "redshift_conn_id"
+            conn_type = 'redshift+redshift_connector'
+
+        self.db_hook = UnitTestRedshiftStatementHook()
+        self.db_hook.get_connection = mock.Mock()
+        self.db_hook.get_connection.return_value = self.connection
+
+    def test_get_uri(self):
+        uri_shouldbe = 'redshift+redshift_connector://login:password@host:5439/dev'
+        x = self.db_hook.get_uri()
+        assert uri_shouldbe == x

Review comment:

```suggestion
        assert x == uri_shouldbe
```

The convention with pytest is `assert actual == expected`.

Nitpick, but it would also be easier to read and more conventional if you called it `expected` or `uri_expected` instead of `uri_shouldbe`.

--
This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the
URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org

For queries about this service, please contact Infrastructure at:
us...@infra.apache.org
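As an illustration of the `assert actual == expected` convention raised in the review comment on `test_get_uri`, here is a minimal sketch. The `build_uri` helper is a hypothetical stand-in for the hook's `get_uri()` and is not the Airflow implementation; only the naming and operand order are the point.

```python
def build_uri(login: str, password: str, host: str, port: int, database: str) -> str:
    """Hypothetical stand-in for the hook's get_uri(); not Airflow code."""
    return f"redshift+redshift_connector://{login}:{password}@{host}:{port}/{database}"


def test_get_uri() -> None:
    # Name the values for what they are: the expectation and the actual result.
    expected = "redshift+redshift_connector://login:password@host:5439/dev"
    actual = build_uri("login", "password", "host", 5439, "dev")
    # Actual value on the left, expected value on the right.
    assert actual == expected
```

Keeping the operand order consistent across a test suite makes failure output easier to scan, since the reader always knows which side came from the code under test.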

[GitHub] [airflow] dstandish commented on a change in pull request #18447: add RedshiftStatementHook, RedshiftOperator

dstandish commented on a change in pull request #18447:

URL: https://github.com/apache/airflow/pull/18447#discussion_r717131359

##

File path: airflow/providers/amazon/aws/hooks/redshift_statement.py

##

@@ -0,0 +1,131 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+"""Execute statements against Amazon Redshift, using redshift_connector."""

+try:

+from functools import cached_property

+except ImportError:

+from cached_property import cached_property

+from typing import Dict, Union

+

+import redshift_connector

+from redshift_connector import Connection as RedshiftConnection

+

+from airflow.hooks.dbapi import DbApiHook

+

+

+class RedshiftStatementHook(DbApiHook):

+"""

+Execute statements against Amazon Redshift, using redshift_connector

+

+This hook requires the redshift_conn_id connection. This connection must

+be initialized with the host, port, login, password. Additional connection

+options can be passed to extra as a JSON string.

+

+:param redshift_conn_id: reference to

+:ref:`Amazon Redshift connection id`

+:type redshift_conn_id: str

+

+.. note::

+get_sqlalchemy_engine() and get_uri() depend on sqlalchemy-amazon-redshift

+"""

+

+conn_name_attr = 'redshift_conn_id'

+default_conn_name = 'redshift_default'

+conn_type = 'redshift+redshift_connector'

+hook_name = 'Amazon Redshift'

+supports_autocommit = True

+

+@staticmethod

+def get_ui_field_behavior() -> Dict:

+"""Returns custom field behavior"""

+return {

+"hidden_fields": [],

+"relabeling": {'login': 'User', 'schema': 'Database'},

+}

+

+def __init__(self, *args, **kwargs) -> None:

+super().__init__(*args, **kwargs)

+

+@cached_property

+def conn(self):

+return self.get_connection(

+self.redshift_conn_id # type: ignore[attr-defined] # pylint: disable=no-member

+)

+

+def _get_conn_params(self) -> Dict[str, Union[str, int]]:

+"""Helper method to retrieve connection args"""

+conn = self.conn

+

+conn_params: Dict[str, Union[str, int]] = {}

+

+if conn.login:

+conn_params['user'] = conn.login

+if conn.password:

+conn_params['password'] = conn.password

+if conn.host:

+conn_params['host'] = conn.host

+if conn.port:

+conn_params['port'] = conn.port

+if conn.schema:

+conn_params['database'] = conn.schema

+

+return conn_params

+

+def get_uri(self) -> str:

+"""

+Override DbApiHook get_uri method for get_sqlalchemy_engine()

+

+.. note::

+Value passed to connection extra parameter will be excluded

+from returned uri but passed to get_sqlalchemy_engine()

+by default

+"""

+from sqlalchemy.engine.url import URL

+

+conn_params = self._get_conn_params()

+

+conn = self.conn

+

+conn_type = conn.conn_type or RedshiftStatementHook.conn_type

+

+if 'user' in conn_params:

+conn_params['username'] = conn_params.pop('user')

+

+return URL(drivername=conn_type, **conn_params).__str__()

Review comment:

```suggestion

return str(URL(drivername=conn_type, **conn_params))

```

I believe `str()` is preferred to calling `__str__()` directly
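A small sketch of the point in the review comment above: `str(obj)` is the idiomatic way to obtain the string form, and it calls the type's `__str__` for you. The `Url` class here is a hypothetical stand-in, not `sqlalchemy.engine.url.URL`.

```python
class Url:
    """Hypothetical stand-in for a URL object; not the SQLAlchemy class."""

    def __init__(self, drivername: str, username: str, host: str, port: int, database: str) -> None:
        self.drivername = drivername
        self.username = username
        self.host = host
        self.port = port
        self.database = database

    def __str__(self) -> str:
        return f"{self.drivername}://{self.username}@{self.host}:{self.port}/{self.database}"


def render(url: Url) -> str:
    # Prefer the built-in over calling the dunder method directly.
    return str(url)
```

Both spellings give the same text for an ordinary class like this one; the built-in is simply the conventional form and also works uniformly with f-strings and `format()`.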

##

File path: airflow/providers/amazon/aws/operators/redshift.py

##

@@ -0,0 +1,73 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

[GitHub] [airflow] mik-laj commented on a change in pull request #18563: Test kubernetes refresh config

mik-laj commented on a change in pull request #18563:

URL: https://github.com/apache/airflow/pull/18563#discussion_r717209660

##

File path: tests/kubernetes/test_refresh_config.py

##

@@ -35,3 +43,64 @@ def test_parse_timestamp_should_convert_regular_timezone_to_unix_timestamp(self)

def test_parse_timestamp_should_throw_exception(self):

with pytest.raises(ParserError):

_parse_timestamp("foobar")

+

+def test_get_kube_config_loader_for_yaml_file(self):

+refresh_kube_config_loader = _get_kube_config_loader_for_yaml_file('./kube_config')

+

+assert refresh_kube_config_loader is not None

+

+assert refresh_kube_config_loader.current_context['name'] == 'federal-context'

+

+context = refresh_kube_config_loader.current_context['context']

+assert context is not None

+assert context['cluster'] == 'horse-cluster'

+assert context['namespace'] == 'chisel-ns'

+assert context['user'] == 'green-user'

+

+def test_get_api_key_with_prefix(self):

+

+refresh_config = RefreshConfiguration()

+refresh_config.api_key['key'] = '1234'

+assert refresh_config is not None

+

+api_key = refresh_config.get_api_key_with_prefix("key")

+

+assert api_key == '1234'

+

+def test_refresh_kube_config_loader(self):

+

+current_context = _get_kube_config_loader_for_yaml_file('./kube_config').current_context

+

+config_dict = {}

+config_dict['current-context'] = 'federal-context'

+config_dict['contexts'] = []

+config_dict['contexts'].append(current_context)

+

+config_dict['clusters'] = []

+

+cluster_config = {}

+cluster_config['api-version'] = 'v1'

+cluster_config['server'] = 'http://cow.org:8080'

+cluster_config['name'] = 'horse-cluster'

+cluster_root_config = {}

+cluster_root_config['cluster'] = cluster_config

+cluster_root_config['name'] = 'horse-cluster'

+config_dict['clusters'].append(cluster_root_config)

+

+refresh_kube_config_loader = RefreshKubeConfigLoader(config_dict=config_dict)

+refresh_kube_config_loader._user = {}

+refresh_kube_config_loader._user['exec'] = 'test'

+

+config_node = ConfigNode('command', 'test')

+config_node.__dict__['apiVersion'] = '2.0'

+config_node.__dict__['command'] = 'test'

+

+ExecProvider.__init__ = Mock()

Review comment:

Can you use `unittest.mock` here to avoid side-effects?
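One way to read this request, sketched under assumptions: assigning `ExecProvider.__init__ = Mock()` replaces the method for every later test in the process, whereas `unittest.mock.patch.object` restores the original when the `with` block exits. The `ExecProvider` class below is a hypothetical stand-in, not the Kubernetes client class.

```python
from unittest import mock


class ExecProvider:
    """Hypothetical stand-in for the real ExecProvider; not the kubernetes client class."""

    def __init__(self, exec_config: dict) -> None:
        self.command = exec_config["command"]


def test_init_is_restored_after_patch() -> None:
    original_init = ExecProvider.__init__

    # The patch only lives inside the with-block; __init__ must return None.
    with mock.patch.object(ExecProvider, "__init__", return_value=None) as mock_init:
        ExecProvider({"not": "validated"})  # the real __init__ would raise KeyError
        mock_init.assert_called_once()

    # After the block, the real method is back, so other tests are unaffected.
    assert ExecProvider.__init__ is original_init
```

The same effect can be had with the `@mock.patch.object(...)` decorator form on the test method; either way the cleanup is automatic.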


[GitHub] [airflow] josh-fell opened a new pull request #18565: Updating the Elasticsearch example DAG to use the TaskFlow API

josh-fell opened a new pull request #18565: URL: https://github.com/apache/airflow/pull/18565 Related: #9415 This was missed from #18278 which updated miscellaneous example DAGs in providers to use the TaskFlow API. This PR updates the example DAG for Elasticsearch in the same manner. Also there is a refactoring of `default_args` similar to previous example DAG PRs.

[GitHub] [airflow] josh-fell opened a new pull request #18564: Adding `task_group` parameter to the `BaseOperator` docstring

josh-fell opened a new pull request #18564: URL: https://github.com/apache/airflow/pull/18564 In the `BaseOperator` docstring the `task_group` parameter was missing and therefore missing from the documentation.

[GitHub] [airflow] subkanthi opened a new pull request #18563: Test kubernetes refresh config

subkanthi opened a new pull request #18563: URL: https://github.com/apache/airflow/pull/18563

[GitHub] [airflow] r-richmond commented on issue #14396: Make context less nebulous

r-richmond commented on issue #14396: URL: https://github.com/apache/airflow/issues/14396#issuecomment-928642301 @kaxil Noticed this keeps getting pushed. Can you provide any additional context?

[GitHub] [airflow] josh-fell opened a new pull request #18562: Updating core example DAGs to use TaskFlow API where applicable

josh-fell opened a new pull request #18562: URL: https://github.com/apache/airflow/pull/18562 Related: #9415 This PR aims to replace the use of PythonOperator tasks for the TaskFlow API in several core example DAGs. Additionally, there are instances of replacing `trigger_rule` values with the appropriate `TriggerRule` attr instead of the literal string, removing an unnecessary `dag` arg in _example_skip_dag_, and replacing `PythonOperator` in _example_complex_ for `BashOperator` (since it was the only task that wasn't a `BashOperator` and using a `PythonOperator` task really wasn't adding any value to the example).

[GitHub] [airflow] ShakaibKhan commented on issue #17487: Make gantt view to show also retries

ShakaibKhan commented on issue #17487: URL: https://github.com/apache/airflow/issues/17487#issuecomment-928564345 I would like to try implementing this

[GitHub] [airflow] boring-cyborg[bot] commented on pull request #18561: Update s3_list.py

boring-cyborg[bot] commented on pull request #18561: URL: https://github.com/apache/airflow/pull/18561#issuecomment-928522307

Congratulations on your first Pull Request and welcome to the Apache Airflow community! If you have any issues or are unsure about anything, please check our Contribution Guide (https://github.com/apache/airflow/blob/main/CONTRIBUTING.rst)

Here are some useful points:

- Pay attention to the quality of your code (flake8, mypy and type annotations). Our [pre-commits](https://github.com/apache/airflow/blob/main/STATIC_CODE_CHECKS.rst#prerequisites-for-pre-commit-hooks) will help you with that.
- In case of a new feature add useful documentation (in docstrings or in `docs/` directory). Adding a new operator? Check this short [guide](https://github.com/apache/airflow/blob/main/docs/apache-airflow/howto/custom-operator.rst). Consider adding an example DAG that shows how users should use it.
- Consider using [Breeze environment](https://github.com/apache/airflow/blob/main/BREEZE.rst) for testing locally, it's a heavy docker but it ships with a working Airflow and a lot of integrations.
- Be patient and persistent. It might take some time to get a review or get the final approval from Committers.
- Please follow [ASF Code of Conduct](https://www.apache.org/foundation/policies/conduct) for all communication including (but not limited to) comments on Pull Requests, Mailing list and Slack.
- Be sure to read the [Airflow Coding style](https://github.com/apache/airflow/blob/main/CONTRIBUTING.rst#coding-style-and-best-practices).

Apache Airflow is a community-driven project and together we are making it better.

In case of doubts contact the developers at:
Mailing List: d...@airflow.apache.org
Slack: https://s.apache.org/airflow-slack

[GitHub] [airflow] Xilorole opened a new pull request #18561: Update s3_list.py

Xilorole opened a new pull request #18561:

URL: https://github.com/apache/airflow/pull/18561

removed inappropriate character `{` from the error message


[GitHub] [airflow] GaoJiaChengPaul edited a comment on issue #16881: Re-deploy scheduler tasks failing with SIGTERM on K8s executor

GaoJiaChengPaul edited a comment on issue #16881: URL: https://github.com/apache/airflow/issues/16881#issuecomment-928504787 Hi All, We are having the same problem as in the discussion below. In our case we are running 2 schedulers in two different clusters with Kubernetes Executor. #18455 @ashb Hi Ashb, any suggestions about this? Do we need to reset a running task?

[GitHub] [airflow] GaoJiaChengPaul commented on issue #16881: Re-deploy scheduler tasks failing with SIGTERM on K8s executor

GaoJiaChengPaul commented on issue #16881: URL: https://github.com/apache/airflow/issues/16881#issuecomment-928504787 Hi All, We are having the same problem as in the discussion below. In our case we are running 2 schedulers in two different clusters with Kubernetes Executor. #18455 @ashb Hi Ashb, any suggestions about this?

[GitHub] [airflow] Brooke-white commented on a change in pull request #18447: add RedshiftStatementHook, RedshiftOperator

Brooke-white commented on a change in pull request #18447:

URL: https://github.com/apache/airflow/pull/18447#discussion_r717126873

##

File path: airflow/providers/amazon/aws/hooks/redshift_statement.py

##

@@ -0,0 +1,159 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+"""Execute statements against Amazon Redshift, using redshift_connector."""

+

+from typing import Callable, Dict, Optional, Union

+

+import redshift_connector

+from redshift_connector import Connection as RedshiftConnection

+

+from airflow.hooks.dbapi import DbApiHook

+

+

+class RedshiftStatementHook(DbApiHook):

+"""

+Execute statements against Amazon Redshift, using redshift_connector

+

+This hook requires the redshift_conn_id connection. This connection must

+be initialized with the host, port, login, password. Additional connection

+options can be passed to extra as a JSON string.

+

+:param redshift_conn_id: reference to

+:ref:`Amazon Redshift connection id`

+:type redshift_conn_id: str

+

+.. note::

+get_sqlalchemy_engine() and get_uri() depend on sqlalchemy-amazon-redshift

+"""

+

+conn_name_attr = 'redshift_conn_id'

+default_conn_name = 'redshift_default'

+conn_type = 'redshift+redshift_connector'

+hook_name = 'Amazon Redshift'

+supports_autocommit = True

+

+@staticmethod

+def get_ui_field_behavior() -> Dict:

+"""Returns custom field behavior"""

+return {

+"hidden_fields": [],

+"relabeling": {'login': 'User', 'schema': 'Database'},

+}

+

+def __init__(self, *args, **kwargs) -> None:

+super().__init__(*args, **kwargs)

+

+def _get_conn_params(self) -> Dict[str, Union[str, int]]:

+"""Helper method to retrieve connection args"""

+conn = self.get_connection(

+self.redshift_conn_id # type: ignore[attr-defined] # pylint: disable=no-member

+)

+

+conn_params: Dict[str, Union[str, int]] = {

+"user": conn.login or '',

+"password": conn.password or '',

+"host": conn.host or '',

+"port": conn.port or 5439,

+"database": conn.schema or '',

+}

+

+return conn_params

+

+def _get_conn_kwargs(self) -> Dict:

+"""Helper method to retrieve connection kwargs"""

+conn = self.get_connection(

Review comment:

cached property `conn` added, and `_get_conn_kwargs` removed in a1de44e
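For readers unfamiliar with the pattern mentioned in this reply, here is a minimal sketch of a cached `conn` property, assuming Python 3.8+ for `functools.cached_property`. `ExampleHook` and its `get_connection` are hypothetical stand-ins rather than the hook in the pull request; the lookup counter exists only to make the caching visible.

```python
from functools import cached_property


class ExampleHook:
    """Hypothetical hook; shows the caching pattern only, not the Airflow class."""

    def __init__(self, redshift_conn_id: str) -> None:
        self.redshift_conn_id = redshift_conn_id
        self.lookups = 0  # counts how often the connection is actually fetched

    def get_connection(self, conn_id: str) -> dict:
        # Stand-in for a metadata-database or secrets-backend lookup.
        self.lookups += 1
        return {"conn_id": conn_id, "host": "host", "port": 5439}

    @cached_property
    def conn(self) -> dict:
        # Evaluated on first access, then stored on the instance.
        return self.get_connection(self.redshift_conn_id)

    def get_conn_params(self) -> dict:
        # Every helper can reuse self.conn without triggering another lookup.
        return {"host": self.conn["host"], "port": self.conn["port"]}
```

With this shape, helpers that previously each called `get_connection` can share one lookup per hook instance.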

##

File path: airflow/providers/amazon/aws/hooks/redshift_statement.py

##

@@ -0,0 +1,159 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+"""Execute statements against Amazon Redshift, using redshift_connector."""

+

+from typing import Callable, Dict, Optional, Union

+

+import redshift_connector

+from redshift_connector import Connection as RedshiftConnection

+

+from airflow.hooks.dbapi import DbApiHook

+

+

+class RedshiftStatementHook(DbApiHook):

+"""

+Execute statements against Amazon Redshift, using redshift_connector

+

+This hook requires the redshift_conn_id connection. This connection must

+be initialized with the host, port, login, password. Additional connection

+options can be passed to extra as a JSON string.

+

+:param redshift_conn_id: reference to

+:ref:`Amazon Redshift connection id`

+:type redshift_conn_id: str

+

+.. note::

+get_sqlalchemy_engine() and get_uri() depend on sqlalchemy-amazon-redshift

+"""

+

+

[GitHub] [airflow] github-actions[bot] closed pull request #16647: Move FABs base Security Manager into Airflow.

github-actions[bot] closed pull request #16647: URL: https://github.com/apache/airflow/pull/16647

[GitHub] [airflow] github-actions[bot] closed pull request #16498: gitpodify Apache Airflow - online development workspace

github-actions[bot] closed pull request #16498: URL: https://github.com/apache/airflow/pull/16498

[GitHub] [airflow] github-actions[bot] closed pull request #17354: Add Docker Sensor

github-actions[bot] closed pull request #17354: URL: https://github.com/apache/airflow/pull/17354

[GitHub] [airflow] github-actions[bot] commented on pull request #16795: GCP Dataflow - Fixed getting job status by job id

github-actions[bot] commented on pull request #16795: URL: https://github.com/apache/airflow/pull/16795#issuecomment-928481553 This pull request has been automatically marked as stale because it has not had recent activity. It will be closed in 5 days if no further activity occurs. Thank you for your contributions.

[GitHub] [airflow] t4n1o edited a comment on issue #18541: Error when running dag & something to do with parsing the start date

t4n1o edited a comment on issue #18541: URL: https://github.com/apache/airflow/issues/18541#issuecomment-928440208

Would it help if I upgraded airflow to a newer version (2.14)? The dag I'm running that caused this has `start_date` set to:

```
with DAG(
    'Archives Mirror',
    default_args=default_args,
    description='A simple tutorial DAG',
    schedule_interval=timedelta(days=1),
    start_date=days_ago(2),
    tags=['example'],
) as dag:
    t1 = BashOperator(
        task_id='mirror_csv_gz_archives',
        bash_command='cd /opt/repo/ && /opt/repo/venv/bin/python -m path.to.my.mirror_script',
    )
```

I copied days_ago(2) from this sample tutorial: https://airflow.apache.org/docs/apache-airflow/stable/tutorial.html

[GitHub] [airflow] t4n1o edited a comment on issue #18541: Error when running dag & something to do with parsing the start date

t4n1o edited a comment on issue #18541: URL: https://github.com/apache/airflow/issues/18541#issuecomment-928440208

Would it help if I upgraded airflow to a newer version (2.14)? The dag I'm running that caused this has `start_date` set to:

```
with DAG(
    'Archives Mirror',
    default_args=default_args,
    description='A simple tutorial DAG',
    schedule_interval=timedelta(days=1),
    start_date=days_ago(2),
    tags=['example'],
) as dag:
    t1 = BashOperator(
        task_id='mirror_csv_gz_archives',
        bash_command='set -e; cd /opt/repo/; /opt/repo/venv/bin/python -m path.to.my.mirror_script',
    )
```

I copied days_ago(2) from this sample tutorial: https://airflow.apache.org/docs/apache-airflow/stable/tutorial.html

[GitHub] [airflow] t4n1o edited a comment on issue #18541: Error when running dag & something to do with parsing the start date

t4n1o edited a comment on issue #18541: URL: https://github.com/apache/airflow/issues/18541#issuecomment-928440208

Would it help if I upgraded airflow to a newer version (2.14)? The dag I'm running that caused this has `start_date` set to:

```
with DAG(
    'Archives Mirror',
    default_args=default_args,
    description='A simple tutorial DAG',
    schedule_interval=timedelta(days=1),
    start_date=days_ago(2),
    tags=['example'],
) as dag:
    t1 = BashOperator(
        task_id='mirror_csv_gz_archives',
        bash_command='set -e; cd /opt/repo/; /opt/repo/venv/bin/python -m path.to.my.mirror_script',
    )
```

I used days_ago(2) as per this tutorial: https://airflow.apache.org/docs/apache-airflow/stable/tutorial.html

[GitHub] [airflow] t4n1o commented on issue #18541: Error when running dag & something to do with parsing the start date

t4n1o commented on issue #18541: URL: https://github.com/apache/airflow/issues/18541#issuecomment-928440208

Would it help if I upgraded airflow to a newer version (2.14)? The dag I'm running that caused this has start_date set to:

```
with DAG(
    'Archives Mirror',
    default_args=default_args,
    description='A simple tutorial DAG',
    schedule_interval=timedelta(days=1),
    start_date=days_ago(2),
    tags=['example'],
) as dag:
    t1 = BashOperator(
        task_id='mirror_csv_gz_archives',
        bash_command='set -e; cd /opt/repo/; /opt/repo/venv/bin/python -m path.to.my.mirror_script',
    )
```

I used days_ago(2) as per this tutorial: https://airflow.apache.org/docs/apache-airflow/stable/tutorial.html

[GitHub] [airflow] ernest-kr commented on pull request #16666: Replace execution_date with run_id in airflow tasks run command

ernest-kr commented on pull request #16666: URL: https://github.com/apache/airflow/pull/16666#issuecomment-928439654 @SamWheating

[GitHub] [airflow] ernest-kr commented on pull request #16666: Replace execution_date with run_id in airflow tasks run command

ernest-kr commented on pull request #16666: URL: https://github.com/apache/airflow/pull/16666#issuecomment-928439191 I see that the CLI help docs are not updated.

[GitHub] [airflow] ChristianDavis opened a new pull request #18560: typo in docs

ChristianDavis opened a new pull request #18560: URL: https://github.com/apache/airflow/pull/18560 --- **^ Add meaningful description above** Read the **[Pull Request Guidelines](https://github.com/apache/airflow/blob/main/CONTRIBUTING.rst#pull-request-guidelines)** for more information. In case of fundamental code change, Airflow Improvement Proposal ([AIP](https://cwiki.apache.org/confluence/display/AIRFLOW/Airflow+Improvements+Proposals)) is needed. In case of a new dependency, check compliance with the [ASF 3rd Party License Policy](https://www.apache.org/legal/resolved.html#category-x). In case of backwards incompatible changes please leave a note in [UPDATING.md](https://github.com/apache/airflow/blob/main/UPDATING.md).

[GitHub] [airflow] boring-cyborg[bot] commented on pull request #18560: typo in docs

boring-cyborg[bot] commented on pull request #18560: URL: https://github.com/apache/airflow/pull/18560#issuecomment-928400554

Congratulations on your first Pull Request and welcome to the Apache Airflow community! If you have any issues or are unsure about anything please check our Contribution Guide (https://github.com/apache/airflow/blob/main/CONTRIBUTING.rst)

Here are some useful points:

- Pay attention to the quality of your code (flake8, mypy and type annotations). Our [pre-commits](https://github.com/apache/airflow/blob/main/STATIC_CODE_CHECKS.rst#prerequisites-for-pre-commit-hooks) will help you with that.
- In case of a new feature add useful documentation (in docstrings or in `docs/` directory). Adding a new operator? Check this short [guide](https://github.com/apache/airflow/blob/main/docs/apache-airflow/howto/custom-operator.rst) Consider adding an example DAG that shows how users should use it.
- Consider using [Breeze environment](https://github.com/apache/airflow/blob/main/BREEZE.rst) for testing locally, it’s a heavy docker but it ships with a working Airflow and a lot of integrations.
- Be patient and persistent. It might take some time to get a review or get the final approval from Committers.
- Please follow [ASF Code of Conduct](https://www.apache.org/foundation/policies/conduct) for all communication including (but not limited to) comments on Pull Requests, Mailing list and Slack.
- Be sure to read the [Airflow Coding style](https://github.com/apache/airflow/blob/main/CONTRIBUTING.rst#coding-style-and-best-practices).

Apache Airflow is a community-driven project and together we are making it better.

In case of doubts contact the developers at:
Mailing List: d...@airflow.apache.org
Slack: https://s.apache.org/airflow-slack

[GitHub] [airflow] rafaelwsan commented on issue #18558: Login failed in UI after setting Postgres external database in the helm chart

rafaelwsan commented on issue #18558: URL: https://github.com/apache/airflow/issues/18558#issuecomment-928360874 All tables were created successfully after the chart upgrade, but UI login still fails.

[GitHub] [airflow] rafaelwsan commented on issue #18558: Login failed in UI after setting Postgres external database in the helm chart

rafaelwsan commented on issue #18558: URL: https://github.com/apache/airflow/issues/18558#issuecomment-928356991

NAME                                 READY   STATUS    RESTARTS   AGE
airflow-pgbouncer-6df5c988f7-xbp7q   2/2     Running   0          26m
airflow-scheduler-5dd5f7bf6b-g4q9g   3/3     Running   0          26m
airflow-statsd-84f4f9898-2cb2k       1/1     Running   0          149m
airflow-webserver-d6cb44b9d-ml4ws    1/1     Running   0          26m

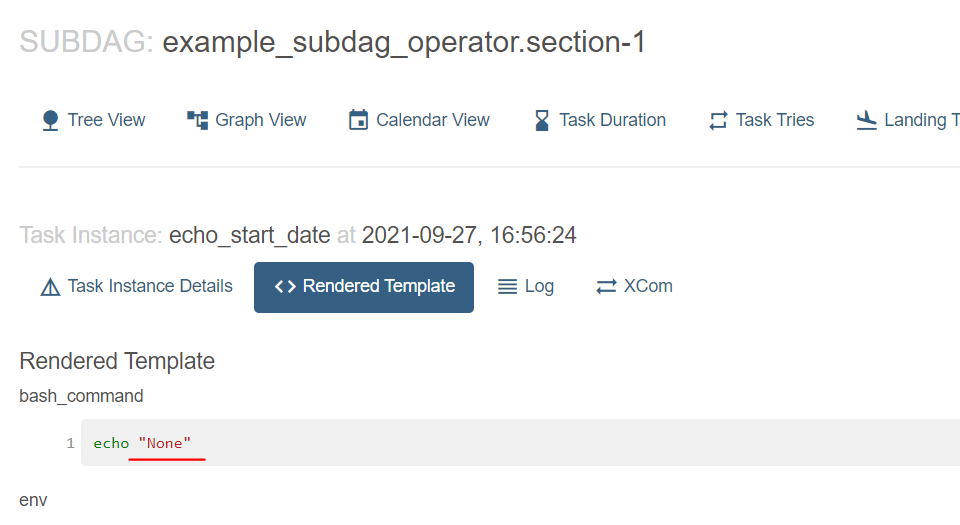

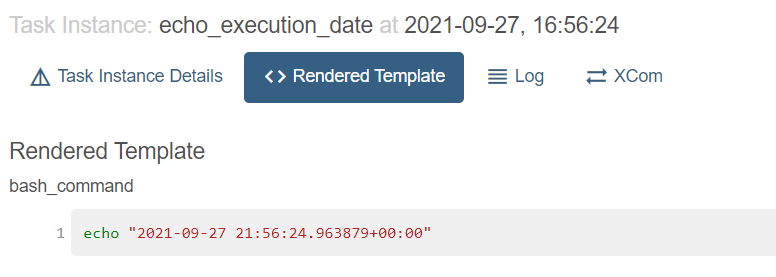

[GitHub] [airflow] enriqueayala opened a new issue #18559: dag_run.start_date not available from SubDag

enriqueayala opened a new issue #18559:

URL: https://github.com/apache/airflow/issues/18559

### Apache Airflow version

2.1.4 (latest released)

### Operating System

Ubuntu 20.04

### Versions of Apache Airflow Providers

_No response_

### Deployment

Virtualenv installation

### Deployment details

_No response_

### What happened

Access to dag_run.start_date attribute from subdag returns `None`.

### What you expected to happen

Return DagRun.start_date of parent_dag (working on 2.1.0)

### How to reproduce

Modify example_dags\subdags\subdag.py to:

```
from airflow import DAG
from airflow.operators.bash import BashOperator
from airflow.utils.dates import days_ago


def subdag(parent_dag_name, child_dag_name, args):
    with DAG(
        dag_id=f'{parent_dag_name}.{child_dag_name}',
        default_args=args,
        start_date=days_ago(2),
        schedule_interval="@daily",
    ) as dag_subdag:
        t1 = BashOperator(
            task_id='echo_execution_date',
            bash_command='echo "{{dag_run.execution_date}}"',
        )
        t2 = BashOperator(
            task_id='echo_start_date',
            bash_command='echo "{{dag_run.start_date}}"',
        )
        t1 >> t2
    return dag_subdag
```

### Anything else

It also occurs within a task through context.
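
The access through context mentioned here could look roughly like the sketch below. It assumes a Python callable that receives the task context as keyword arguments. The `SimpleNamespace` objects are hypothetical stand-ins for the `dag_run` object that Airflow would supply at run time, so the snippet runs without an Airflow installation.

```python
from datetime import datetime, timezone
from types import SimpleNamespace


def echo_start_date(**context):
    """Callable in the style passed to a PythonOperator; reads dag_run from context."""
    dag_run = context["dag_run"]
    # Per the report, this attribute comes back as None inside a SubDag.
    return dag_run.start_date


# Stand-in contexts (hypothetical): real ones are built by Airflow at run time.
parent_ctx = {"dag_run": SimpleNamespace(start_date=datetime(2021, 9, 27, tzinfo=timezone.utc))}
subdag_ctx = {"dag_run": SimpleNamespace(start_date=None)}

print(echo_start_date(**parent_ctx))
print(echo_start_date(**subdag_ctx))
```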

### Are you willing to submit PR?

- [ ] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://github.com/apache/airflow/blob/main/CODE_OF_CONDUCT.md)

[GitHub] [airflow] rafaelwsan opened a new issue #18558: Login failed in UI after setting Postgres external database in the helm chart

rafaelwsan opened a new issue #18558:

URL: https://github.com/apache/airflow/issues/18558

### Official Helm Chart version

1.1.0 (latest released)

### Apache Airflow version

2.1.2

### Kubernetes Version

1.20.9

### Helm Chart configuration

# Licensed to the Apache Software Foundation (ASF) under one
# or more contributor license agreements. See the NOTICE file
# distributed with this work for additional information
# regarding copyright ownership. The ASF licenses this file
# to you under the Apache License, Version 2.0 (the
# "License"); you may not use this file except in compliance
# with the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing,
# software distributed under the License is distributed on an
# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
# KIND, either express or implied. See the License for the
# specific language governing permissions and limitations
# under the License.
---
# Default values for airflow.
# This is a YAML-formatted file.
# Declare variables to be passed into your templates.

# Provide a name to substitute for the full names of resources
fullnameOverride: ""

# Provide a name to substitute for the name of the chart
nameOverride: ""

# User and group of airflow user
uid: 5
gid: 0

# Airflow home directory
# Used for mount paths
airflowHome: /opt/airflow

# Default airflow repository -- overrides all the specific images below
defaultAirflowRepository: rafaelsan/airflow

# Default airflow tag to deploy
defaultAirflowTag: "airflow-custom-1.0.0"

# Airflow version (Used to make some decisions based on Airflow Version being deployed)
airflowVersion: "2.1.2"

# Images
images:
  airflow:
    repository: ~
    tag: ~
    pullPolicy: IfNotPresent
  pod_template:
    repository: ~
    tag: ~
    pullPolicy: IfNotPresent
  flower:
    repository: ~
    tag: ~
    pullPolicy: IfNotPresent
  statsd:
    repository: apache/airflow
    tag: airflow-statsd-exporter-2021.04.28-v0.17.0
    pullPolicy: IfNotPresent
  redis:
    repository: redis
    tag: 6-buster
    pullPolicy: IfNotPresent
  pgbouncer:
    repository: apache/airflow
    tag: airflow-pgbouncer-2021.04.28-1.14.0
    pullPolicy: IfNotPresent
  pgbouncerExporter:
    repository: apache/airflow
    tag: airflow-pgbouncer-exporter-2021.04.28-0.5.0
    pullPolicy: IfNotPresent
  gitSync:
    repository: k8s.gcr.io/git-sync/git-sync
    tag: v3.3.0
    pullPolicy: IfNotPresent

# Select certain nodes for airflow pods.
nodeSelector: {}
affinity: {}
tolerations: []

# Add common labels to all objects and pods defined in this chart.
labels: {}

# Ingress configuration
ingress:
  # Enable ingress resource
  enabled: false

  # Configs for the Ingress of the web Service
  web:
    # Annotations for the web Ingress
    annotations: {}

    # The path for the web Ingress
    path: ""

    # The hostname for the web Ingress
    host: ""

    # configs for web Ingress TLS
    tls:
      # Enable TLS termination for the web Ingress
      enabled: false
      # the name of a pre-created Secret containing a TLS private key and certificate
      secretName: ""

    # HTTP paths to add to the web Ingress before the default path
    precedingPaths: []

    # Http paths to add to the web Ingress after the default path
    succeedingPaths: []

  # Configs for the Ingress of the flower Service
  flower:
    # Annotations for the flower Ingress
    annotations: {}

    # The path for the flower Ingress
    path: ""

    # The hostname for the flower Ingress
    host: ""

    # configs for web Ingress TLS
    tls:
      # Enable TLS termination for the flower Ingress
      enabled: false
      # the name of a pre-created Secret containing a TLS private key and certificate
      secretName: ""

    # HTTP paths to add to the flower Ingress before the default path
    precedingPaths: []

    # Http paths to add to the flower Ingress after the default path
    succeedingPaths: []

# Network policy configuration
networkPolicies:
  # Enabled network policies
  enabled: false

# Extra annotations to apply to all
# Airflow pods
airflowPodAnnotations: {}

# Extra annotations to apply to
# main Airflow configmap
airflowConfigAnnotations: {}

# `airflow_local_settings` file as a string (can be templated).
airflowLocalSettings: ~

# Enable RBAC (default on most clusters these days)
rbac:
  # Specifies whether RBAC

[GitHub] [airflow] boring-cyborg[bot] commented on issue #18558: Login failed in UI after setting Postgres external database in the helm chart

boring-cyborg[bot] commented on issue #18558: URL: https://github.com/apache/airflow/issues/18558#issuecomment-928345567 Thanks for opening your first issue here! Be sure to follow the issue template!

[GitHub] [airflow] ShakaibKhan commented on issue #17962: Warn if robots.txt is accessed

ShakaibKhan commented on issue #17962: URL: https://github.com/apache/airflow/issues/17962#issuecomment-928292345 Started a PR to address this: https://github.com/apache/airflow/pull/18557

[GitHub] [airflow] ShakaibKhan opened a new pull request #18557: Warning of public exposure of deployment in UI with on/off config

ShakaibKhan opened a new pull request #18557: URL: https://github.com/apache/airflow/pull/18557 related: #17962 Added a warning banner message for when /robots.txt is hit, with an on/off config.

[GitHub] [airflow] jedcunningham closed issue #18458: Airflow deployed on Kubernetes cluster NOT showing airflow app metrics in STATSD Exporter

jedcunningham closed issue #18458: URL: https://github.com/apache/airflow/issues/18458

[GitHub] [airflow] flolas edited a comment on pull request #17329: Split sql statements in DbApi run

flolas edited a comment on pull request #17329: URL: https://github.com/apache/airflow/pull/17329#issuecomment-928259116 @potiuk Tests fail :( what's wrong?

[GitHub] [airflow] flolas commented on pull request #17329: Split sql statements in DbApi run

flolas commented on pull request #17329: URL: https://github.com/apache/airflow/pull/17329#issuecomment-928259116 @potiuk Tests fail :( what's wrong?

[GitHub] [airflow] collinmcnulty opened a new pull request #18555: Add task information to logs about k8s pods

collinmcnulty opened a new pull request #18555: URL: https://github.com/apache/airflow/pull/18555 Add annotations, which contain the task information, to log lines that reference a specific pod so that logs can be searched by task or DAG id. Also condenses a few more log elements into a single line to play better with Elastic. I did not have the confidence/time to go through every log line that references a pod name to add annotations, as some of them would require passing the annotations through several layers that I do not understand and do not want to break. I think I got the most common and critical log lines though. closes: #18329
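
The idea described in this PR can be sketched as follows. This is an illustration only, not the code from the PR: the helper names `annotations_for_logging` and `format_pod_event`, and the annotation keys, are assumptions. It shows task-identifying annotations being attached to a pod log message that stays on a single line.

```python
import logging

log = logging.getLogger("pod_events_sketch")


def annotations_for_logging(annotations):
    """Keep only the task-identifying annotations, in a stable order."""
    keys = ("dag_id", "task_id", "execution_date", "try_number")  # assumed key names
    return {k: annotations[k] for k in keys if k in annotations}


def format_pod_event(pod_name, event, annotations):
    """Build one single-line message so a log shipper indexes it as one record."""
    return f"Pod {pod_name} {event} annotations={annotations_for_logging(annotations)}"


msg = format_pod_event(
    "mytask-abc123",
    "failed",
    {"dag_id": "example_dag", "task_id": "t1", "unrelated": "x"},
)
log.warning(msg)
```

Because the DAG and task ids appear in the same line as the pod name, a search on either id finds the pod event directly.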

[GitHub] [airflow] Brooke-white commented on a change in pull request #18447: add RedshiftStatementHook, RedshiftOperator

Brooke-white commented on a change in pull request #18447: URL: https://github.com/apache/airflow/pull/18447#discussion_r716999098

## File path: airflow/providers/amazon/aws/hooks/redshift_statement.py ##

@@ -0,0 +1,144 @@
+#
+# Licensed to the Apache Software Foundation (ASF) under one
+# or more contributor license agreements. See the NOTICE file
+# distributed with this work for additional information
+# regarding copyright ownership. The ASF licenses this file
+# to you under the Apache License, Version 2.0 (the
+# "License"); you may not use this file except in compliance
+# with the License. You may obtain a copy of the License at
+#
+# http://www.apache.org/licenses/LICENSE-2.0
+#
+# Unless required by applicable law or agreed to in writing,
+# software distributed under the License is distributed on an
+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
+# KIND, either express or implied. See the License for the
+# specific language governing permissions and limitations
+# under the License.
+"""Interact with AWS Redshift, using the boto3 library."""
+
+from typing import Callable, Dict, Optional, Tuple, Union
+
+import redshift_connector
+from redshift_connector import Connection as RedshiftConnection
+
+from airflow.hooks.dbapi import DbApiHook
+
+
+class RedshiftStatementHook(DbApiHook):

Review comment: that sounds good to me, I'm happy to make this change if the others here agree with taking this route @josh-fell @JavierLopezT

[GitHub] [airflow] zachliu commented on issue #15000: When an ECS Task fails to start, ECS Operator raises a CloudWatch exception

zachliu commented on issue #15000: URL: https://github.com/apache/airflow/issues/15000#issuecomment-928219338

> @zachliu @kanga333 did remove retry for now #16150 solved the problem?

@eladkal unfortunately no, #16150 was for another issue that's only somewhat related to this one

[GitHub] [airflow] ephraimbuddy commented on a change in pull request #18554: Bugfix: dag_bag.get_dag should not raise exception

ephraimbuddy commented on a change in pull request #18554: URL: https://github.com/apache/airflow/pull/18554#discussion_r716990684

## File path: airflow/exceptions.py ##

@@ -150,10 +150,6 @@ class DuplicateTaskIdFound(AirflowException):
     """Raise when a Task with duplicate task_id is defined in the same DAG"""
 
 
-class SerializedDagNotFound(DagNotFound):

Review comment: Another option would be to use `try`/`except` in all the places where we call `get_dag`.
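
The alternative raised in this review comment, keeping the exception and catching it at each call site, might look like the sketch below. The classes here are stand-ins written for illustration, not the Airflow implementations, and `get_dag_or_none` is a hypothetical helper name.

```python
class SerializedDagNotFound(Exception):
    """Stand-in for the exception discussed in the review (not the Airflow class)."""


class FakeDagBag:
    """Hypothetical DagBag substitute whose get_dag raises on a missing DAG."""

    def __init__(self, dags):
        self._dags = dags

    def get_dag(self, dag_id):
        try:
            return self._dags[dag_id]
        except KeyError:
            raise SerializedDagNotFound(f"DAG '{dag_id}' not found") from None


def get_dag_or_none(dag_bag, dag_id):
    """Call-site wrapper: translate the exception into None."""
    try:
        return dag_bag.get_dag(dag_id)
    except SerializedDagNotFound:
        return None


bag = FakeDagBag({"known": object()})
```

The trade-off is that every caller has to repeat the `try`/`except` (or go through a wrapper like this one), whereas changing `get_dag` itself handles the missing-DAG case in one place.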

[GitHub] [airflow] ephraimbuddy edited a comment on pull request #18554: Bugfix: dag_bag.get_dag should not raise exception

ephraimbuddy edited a comment on pull request #18554: URL: https://github.com/apache/airflow/pull/18554#issuecomment-928203949

> The API has been returning 404 for quite some time (and IMO it's the correct behaviour). #18523 only refactored the implementation to raise 404 in a different way.

I meant on the webserver. Sorry I didn't make that clear. If you change the URL for the dag on the tree/graph view such that the dag id is not in SerializedDagModel, you will get the above error, which I think should be for the REST API.

[GitHub] [airflow] eladkal commented on issue #12680: SparkSubmitHook - allow log parsing

eladkal commented on issue #12680: URL: https://github.com/apache/airflow/issues/12680#issuecomment-928205522 This feels like a very custom use case for your needs. You can do that with a custom operator.

[GitHub] [airflow] eladkal closed issue #12680: SparkSubmitHook - allow log parsing

eladkal closed issue #12680: URL: https://github.com/apache/airflow/issues/12680

[airflow] branch main updated (892c5fc -> 80ae70c)

This is an automated email from the ASF dual-hosted git repository.

kaxilnaik pushed a change to branch main in repository https://gitbox.apache.org/repos/asf/airflow.git.

    from 892c5fc Add package filter info to Breeze build docs (#18550)
     add 80ae70c Fix ``DetachedInstanceError`` when dag_run attrs are accessed from ti (#18499)

No new revisions were added by this update.

Summary of changes:
 airflow/models/taskinstance.py    | 5 +
 tests/jobs/test_local_task_job.py | 2 +-
 tests/models/test_taskinstance.py | 2 +-
 3 files changed, 3 insertions(+), 6 deletions(-)

[GitHub] [airflow] kaxil merged pull request #18499: Fix DetachedInstanceError when dag_run attrs are accessed from ti

kaxil merged pull request #18499: URL: https://github.com/apache/airflow/pull/18499

[GitHub] [airflow] ephraimbuddy commented on pull request #18554: Bugfix: dag_bag.get_dag should not raise exception

ephraimbuddy commented on pull request #18554: URL: https://github.com/apache/airflow/pull/18554#issuecomment-928203949

> The API has been returning 404 for quite some time (and IMO it's the correct behaviour). #18523 only refactored the implementation to raise 404 in a different way.

I meant on the webserver. Sorry I didn't make that clear.

[GitHub] [airflow] eladkal commented on issue #15000: When an ECS Task fails to start, ECS Operator raises a CloudWatch exception

eladkal commented on issue #15000: URL: https://github.com/apache/airflow/issues/15000#issuecomment-928203500 @zachliu @kanga333 did remove retry for now #16150 solved the problem?

[GitHub] [airflow] ashb commented on pull request #18503: When calling `dr.get_task_instance` automatically set `dag_run` relationship

ashb commented on pull request #18503: URL: https://github.com/apache/airflow/pull/18503#issuecomment-928202842 We don't need this now with Ephraim's latest pr

[GitHub] [airflow] ashb closed pull request #18503: When calling `dr.get_task_instance` automatically set `dag_run` relationship

ashb closed pull request #18503: URL: https://github.com/apache/airflow/pull/18503

[GitHub] [airflow] github-actions[bot] commented on pull request #18499: Fix DetachedInstanceError when dag_run attrs are accessed from ti

github-actions[bot] commented on pull request #18499: URL: https://github.com/apache/airflow/pull/18499#issuecomment-928201848 The PR most likely needs to run full matrix of tests because it modifies parts of the core of Airflow. However, committers might decide to merge it quickly and take the risk. If they don't merge it quickly - please rebase it to the latest main at your convenience, or amend the last commit of the PR, and push it with --force-with-lease.

[GitHub] [airflow] eladkal closed issue #17314: Kerberos configuration to enable allow kinit -f -A

eladkal closed issue #17314: URL: https://github.com/apache/airflow/issues/17314

[GitHub] [airflow] eladkal commented on issue #17314: Kerberos configuration to enable allow kinit -f -A

eladkal commented on issue #17314: URL: https://github.com/apache/airflow/issues/17314#issuecomment-928201286 solved in https://github.com/apache/airflow/pull/17816

[GitHub] [airflow] eladkal commented on issue #16919: error when using mysql_to_s3 (TypeError: cannot safely cast non-equivalent object to int64)

eladkal commented on issue #16919: URL: https://github.com/apache/airflow/issues/16919#issuecomment-928199673 @SasanAhmadi are you working on this issue?

[GitHub] [airflow] dstandish commented on a change in pull request #18447: add RedshiftStatementHook, RedshiftOperator

dstandish commented on a change in pull request #18447: URL: https://github.com/apache/airflow/pull/18447#discussion_r716962976

## File path: airflow/providers/amazon/aws/hooks/redshift_statement.py ##

@@ -0,0 +1,144 @@
+#
+# Licensed to the Apache Software Foundation (ASF) under one
+# or more contributor license agreements. See the NOTICE file
+# distributed with this work for additional information
+# regarding copyright ownership. The ASF licenses this file
+# to you under the Apache License, Version 2.0 (the
+# "License"); you may not use this file except in compliance
+# with the License. You may obtain a copy of the License at
+#
+# http://www.apache.org/licenses/LICENSE-2.0
+#
+# Unless required by applicable law or agreed to in writing,
+# software distributed under the License is distributed on an
+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
+# KIND, either express or implied. See the License for the
+# specific language governing permissions and limitations
+# under the License.
+"""Interact with AWS Redshift, using the boto3 library."""
+
+from typing import Callable, Dict, Optional, Tuple, Union
+
+import redshift_connector
+from redshift_connector import Connection as RedshiftConnection
+
+from airflow.hooks.dbapi import DbApiHook
+
+
+class RedshiftStatementHook(DbApiHook):

Review comment: It's a good point re IAM auth. What if we just call the module `redshift_sql` but the hook `RedshiftHook`? Then there are two redshift hooks until 3.0, but in different modules. After 3.0 the old one is renamed. And I guess we rename the new module at 3.0 too?
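
One common way to carry out a rename like the one floated in this comment is to keep the old name as a deprecated alias for a while. The sketch below illustrates that general pattern only. It is not what the PR does, and the class name `RedshiftClusterHook` is a hypothetical placeholder for whatever the old hook would be renamed to.

```python
import warnings


class RedshiftClusterHook:
    """Hypothetical new name for the existing boto3-based hook."""

    def describe(self):
        return "cluster management"


class RedshiftHook(RedshiftClusterHook):
    """Old name kept as a thin alias that warns on instantiation."""

    def __init__(self, *args, **kwargs):
        warnings.warn(
            "RedshiftHook is deprecated here; use RedshiftClusterHook instead.",
            DeprecationWarning,
            stacklevel=2,
        )
        super().__init__(*args, **kwargs)
```

Code written against the old name keeps working and surfaces a `DeprecationWarning`, which gives users a release cycle to migrate before the alias is removed.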

[GitHub] [airflow] dstandish commented on a change in pull request #18447: add RedshiftStatementHook, RedshiftOperator

dstandish commented on a change in pull request #18447: URL: https://github.com/apache/airflow/pull/18447#discussion_r716962976 ## File path: airflow/providers/amazon/aws/hooks/redshift_statement.py ## @@ -0,0 +1,144 @@ +# +# Licensed to the Apache Software Foundation (ASF) under one +# or more contributor license agreements. See the NOTICE file +# distributed with this work for additional information +# regarding copyright ownership. The ASF licenses this file +# to you under the Apache License, Version 2.0 (the +# "License"); you may not use this file except in compliance +# with the License. You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, +# software distributed under the License is distributed on an +# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY +# KIND, either express or implied. See the License for the +# specific language governing permissions and limitations +# under the License. +"""Interact with AWS Redshift, using the boto3 library.""" + +from typing import Callable, Dict, Optional, Tuple, Union + +import redshift_connector +from redshift_connector import Connection as RedshiftConnection + +from airflow.hooks.dbapi import DbApiHook + + +class RedshiftStatementHook(DbApiHook): Review comment: What if we just call the module redshift_sql but the hook RedshiftHook Then there are two redshift hooks until 3.0, but in different modules After 3.0 the old one is renamed And I guess we rename the new module at 3.0 too? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] uranusjr commented on pull request #18554: Bugfix: dag_bag.get_dag should not raise exception

uranusjr commented on pull request #18554:

URL: https://github.com/apache/airflow/pull/18554#issuecomment-928180413

The API has been returning 404 for quite some time (and IMO it's the correct behaviour). #18523 only refactored the implementation to raise 404 in a different way.

[GitHub] [airflow] ephraimbuddy commented on pull request #18554: Bugfix: dag_bag.get_dag should not raise exception

ephraimbuddy commented on pull request #18554:

URL: https://github.com/apache/airflow/pull/18554#issuecomment-928176400

Also, the error for a missing DAG now returns a REST API not-found error:

```
{
  "detail": null,
  "status": 404,
  "title": "DAG 'dag_pod_operatorxco' not found in serialized_dag table",
  "type": "http://apache-airflow-docs.s3-website.eu-central-1.amazonaws.com/docs/apache-airflow/latest/stable-rest-api-ref.html#section/Errors/NotFound"
}
```

I think PR https://github.com/apache/airflow/pull/18523 caused it.

[GitHub] [airflow] mik-laj commented on pull request #17951: Refresh credentials for long-running pods on EKS

mik-laj commented on pull request #17951:

URL: https://github.com/apache/airflow/pull/17951#issuecomment-928175875

@potiuk Can you look at it? It is ready for review now.

[GitHub] [airflow] ephraimbuddy opened a new pull request #18554: Bugfix: dag_bag.get_dag should not raise exception

ephraimbuddy opened a new pull request #18554:

URL: https://github.com/apache/airflow/pull/18554

`get_dag` raising an exception is breaking many parts of the codebase. Its usage suggests that it should return None when a DAG is not found: about 30 call sites expect that behaviour. Currently, a missing DAG errors out in the UI instead of showing a message that the DAG is missing.

This PR adds a try/except and returns None when a DAG is not found.
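The try/except approach described in this PR can be illustrated with a minimal, self-contained sketch. It does not use Airflow's code: `DagNotFound`, `DagStore`, and this standalone `get_dag` function are hypothetical stand-ins, assumed only for illustration of the "return None instead of raising" contract.

```python
from typing import Dict, Optional


class DagNotFound(Exception):
    """Hypothetical error raised by the underlying lookup."""


class DagStore:
    """Hypothetical stand-in for a DAG store; not Airflow's API."""

    def __init__(self, dags: Dict[str, str]) -> None:
        self._dags = dags

    def load(self, dag_id: str) -> str:
        # The low-level lookup raises when the DAG is absent.
        try:
            return self._dags[dag_id]
        except KeyError:
            raise DagNotFound(f"DAG {dag_id!r} not found") from None


def get_dag(store: DagStore, dag_id: str) -> Optional[str]:
    """Return the DAG, or None when it is missing, instead of raising."""
    try:
        return store.load(dag_id)
    except DagNotFound:
        return None


store = DagStore({"example_dag": "<DAG: example_dag>"})
found = get_dag(store, "example_dag")
missing = get_dag(store, "no_such_dag")
```

With this shape, callers that already test the result against None keep working, and only the lookup layer needs to know about the exception.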

[GitHub] [airflow] potiuk commented on pull request #18147: Allow airflow standard images to run in openshift utilising the official helm chart #18136

potiuk commented on pull request #18147:

URL: https://github.com/apache/airflow/pull/18147#issuecomment-928152614

Some static/helm unit tests failed.

[GitHub] [airflow] jedcunningham closed pull request #18553: Extra debugging for helm tests

jedcunningham closed pull request #18553:

URL: https://github.com/apache/airflow/pull/18553

[GitHub] [airflow] jedcunningham opened a new pull request #18553: Extra debugging for helm tests

jedcunningham opened a new pull request #18553:

URL: https://github.com/apache/airflow/pull/18553

I'm just seeing if I can identify why public runners are failing on

[GitHub] [airflow] andrewgodwin opened a new pull request #18552: Allow core Triggerer loops to yield control

andrewgodwin opened a new pull request #18552:

URL: https://github.com/apache/airflow/pull/18552

In the case of having several hundred triggers, the core triggerer creation/deletion loops would block the main thread for several hundred milliseconds and bring the event loop to a halt. This change allows them to yield control after every trigger they process, preventing this.

It's unfortunately not possible to unit test reliably, but I ran it through its paces with 500 triggers locally.
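The general idea of yielding control inside a long loop can be sketched with plain `asyncio`. This is not the triggerer's code: `create_triggers`, `heartbeat`, and the `running` dict are hypothetical names, and `await asyncio.sleep(0)` is assumed here as one common way to hand control back to the event loop after each item.

```python
import asyncio


async def create_triggers(pending, running):
    """Process each pending trigger, yielding to the event loop in between."""
    for trigger_id in pending:
        running[trigger_id] = "started"  # stand-in for the real setup work
        # sleep(0) suspends this coroutine once so other tasks can run.
        await asyncio.sleep(0)


async def heartbeat(running, seen):
    """A competing task that records how far the loop has progressed."""
    for _ in range(3):
        seen.append(len(running))
        await asyncio.sleep(0)


async def main():
    running, seen = {}, []
    await asyncio.gather(
        create_triggers(range(500), running),
        heartbeat(running, seen),
    )
    return running, seen


running, seen = asyncio.run(main())
```

Because the loop yields after every trigger, the competing task gets scheduled while the 500 items are still being processed rather than only after the whole batch is done.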

[GitHub] [airflow] Brooke-white commented on a change in pull request #18447: add RedshiftStatementHook, RedshiftOperator