[GitHub] [airflow] uranusjr commented on pull request #20664: Switch to buildx to build airflow images

uranusjr commented on pull request #20664: URL: https://github.com/apache/airflow/pull/20664#issuecomment-1005457445 I’m not too familiar with buildx in the first place and will dismiss my request for review. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] github-actions[bot] commented on pull request #20338: Breeze2 build ci image

github-actions[bot] commented on pull request #20338: URL: https://github.com/apache/airflow/pull/20338#issuecomment-1005457194 The PR is likely ready to be merged. No tests are needed, as no important environment files or Python files were modified by it. However, committers might decide that the full test matrix is needed and add the 'full tests needed' label. In that case, you should rebase it onto the latest main or amend the last commit of the PR, and push it with --force-with-lease.

[GitHub] [airflow] potiuk commented on a change in pull request #20338: Breeze2 build ci image

potiuk commented on a change in pull request #20338:

URL: https://github.com/apache/airflow/pull/20338#discussion_r778608790

##

File path: dev/breeze/src/airflow_breeze/breeze.py

##

@@ -95,12 +93,103 @@ def shell(verbose: bool):

@option_verbose

-@main.command()

-def build_ci_image(verbose: bool):

-"""Builds breeze.ci image for breeze.py."""

+@main.command(name='build-ci-image')

+@click.option(

+'--additional-extras',

+help='This installs additional extra package while installing airflow in the image.',

+)

+@click.option('-p', '--python', help='Choose your python version')

+@click.option(

+'--additional-dev-apt-deps', help='Additional apt dev dependencies to use when building the images.'

+)

+@click.option(

+'--additional-runtime-apt-deps',

+help='Additional apt runtime dependencies to use when building the images.',

+)

+@click.option(

+'--additional-python-deps', help='Additional python dependencies to use when building the images.'

+)

+@click.option(

+'--additional_dev_apt_command', help='Additional command executed before dev apt deps are installed.'

+)

+@click.option(

+'--additional_runtime_apt_command',

+help='Additional command executed before runtime apt deps are installed.',

+)

+@click.option(

+'--additional_dev_apt_env', help='Additional environment variables set when adding dev dependencies.'

+)

+@click.option(

+'--additional_runtime_apt_env',

+help='Additional environment variables set when adding runtime dependencies.',

+)

+@click.option('--dev-apt-command', help='The basic command executed before dev apt deps are installed.')

+@click.option(

+'--dev-apt-deps',

+help='The basic apt dev dependencies to use when building the images.',

+)

+@click.option(

+'--runtime-apt-command', help='The basic command executed before runtime apt deps are installed.'

+)

+@click.option(

+'--runtime-apt-deps',

+help='The basic apt runtime dependencies to use when building the images.',

+)

+@click.option('--github-repository', help='Choose repository to push/pull image.')

+@click.option('--build-cache', help='Cache option')

+@click.option('--upgrade-to-newer-dependencies', is_flag=True)

+def build_ci_image(

+verbose: bool,

+additional_extras: Optional[str],

+python: Optional[float],

+additional_dev_apt_deps: Optional[str],

+additional_runtime_apt_deps: Optional[str],

+additional_python_deps: Optional[str],

+additional_dev_apt_command: Optional[str],

+additional_runtime_apt_command: Optional[str],

+additional_dev_apt_env: Optional[str],

+additional_runtime_apt_env: Optional[str],

+dev_apt_command: Optional[str],

+dev_apt_deps: Optional[str],

+runtime_apt_command: Optional[str],

+runtime_apt_deps: Optional[str],

+github_repository: Optional[str],

+build_cache: Optional[str],

+upgrade_to_newer_dependencies: bool,

+):

+"""Builds docker CI image without entering the container."""

+from airflow_breeze.ci.build_image import build_image

+

if verbose:

console.print(f"\n[blue]Building image of airflow from {__AIRFLOW_SOURCES_ROOT}[/]\n")

-raise ClickException("\nPlease implement building the CI image\n")

+build_image(

+verbose,

+additional_extras=additional_extras,

+python_version=python,

+additional_dev_apt_deps=additional_dev_apt_deps,

+additional_runtime_apt_deps=additional_runtime_apt_deps,

+additional_python_deps=additional_python_deps,

+additional_runtime_apt_command=additional_runtime_apt_command,

+additional_dev_apt_command=additional_dev_apt_command,

+additional_dev_apt_env=additional_dev_apt_env,

+additional_runtime_apt_env=additional_runtime_apt_env,

+dev_apt_command=dev_apt_command,

+dev_apt_deps=dev_apt_deps,

+runtime_apt_command=runtime_apt_command,

+runtime_apt_deps=runtime_apt_deps,

+github_repository=github_repository,

+docker_cache=build_cache,

+upgrade_to_newer_dependencies=upgrade_to_newer_dependencies,

+)

+

+

+@option_verbose

+@main.command(name='build-prod-image')

+def build_prod_image(verbose: bool):

+"""Builds docker Production image without entering the container."""

+if verbose:

+console.print("\n[blue]Building image[/]\n")

+raise ClickException("\nPlease implement building the Production image\n")

if __name__ == '__main__':

Review comment:

It's correct!
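For readers following the diff above: click hands each option to `build_ci_image` as a keyword argument whose name it derives from the option's flags. As far as I know, click's default rule is to prefer the long (`--`) flag, drop the dash prefix, replace `-` with `_` and lowercase the result, which is why `-p/--python` arrives as `python` and both the hyphenated and the underscored flags map onto snake_case parameters. The helper below is a hypothetical, simplified re-implementation of that rule for illustration only; it is not part of click's API.

```python
from typing import List, Tuple


def click_param_name(*decls: str) -> str:
    """Simplified approximation of how click names an option's Python parameter."""
    split: List[Tuple[str, str]] = []
    for decl in decls:
        prefix = "--" if decl.startswith("--") else "-"
        split.append((prefix, decl[len(prefix):]))
    # Long options ("--") are preferred; sort() is stable, so the first long flag wins.
    split.sort(key=lambda item: -len(item[0]))
    return split[0][1].replace("-", "_").lower()


for decls in (("-p", "--python"), ("--additional-dev-apt-deps",), ("--additional_dev_apt_command",)):
    print(decls, "->", click_param_name(*decls))
```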


[GitHub] [airflow] uranusjr opened a new pull request #20669: Fix ECSProtocol compat shim inheritance

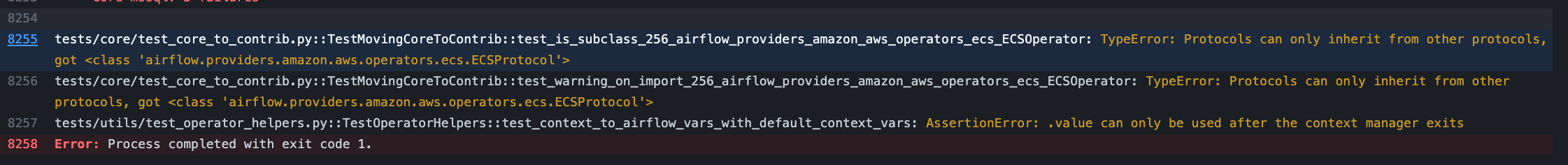

uranusjr opened a new pull request #20669: URL: https://github.com/apache/airflow/pull/20669

Tests are failing:

```
TypeError: Protocols can only inherit from other protocols, got
```

This is because the compatibility shim for `ECSProtocol` incorrectly double-inherits `Protocol`. Since the “real” `ECSProtocol` class (i.e. `NewECSProtocol`) is already a protocol, the compat `ECSProtocol` does not need to (and can’t) also inherit from `Protocol`. Similarly, the `@runtime_checkable` decorator shouldn’t be applied, since the parent `ECSProtocol` is already runtime-checkable.
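The two typing rules this fix relies on can be checked directly. The sketch below uses a simplified, hypothetical `NewECSProtocol` with a single method (the real protocol is not shown here) and does not reproduce the exact Airflow shim. It shows that a class listing `Protocol` among its bases may only have protocol bases, and that a plain subclass of a protocol is an ordinary class which `@runtime_checkable` refuses, while it still counts as a subclass of the runtime-checkable parent. Requires Python 3.8+ for `typing.Protocol`.

```python
from typing import Optional, Protocol, runtime_checkable


@runtime_checkable
class NewECSProtocol(Protocol):
    """Simplified stand-in: the real protocol declares more methods."""

    def run_task(self, **kwargs) -> dict:
        ...


class PlainBase:
    """An ordinary, non-protocol class."""


# Rule 1: a class that lists Protocol among its bases may only have protocol bases.
protocol_error: Optional[str] = None
try:

    class BrokenShim(PlainBase, Protocol):
        pass

except TypeError as exc:
    protocol_error = str(exc)


# The compat shim as a plain subclass: not itself a protocol,
# but still a subclass of the runtime-checkable parent.
class ECSProtocol(NewECSProtocol):
    """Backward-compatible alias (sketch)."""


# Rule 2: @runtime_checkable only accepts protocol classes.
decorator_error: Optional[str] = None
try:
    runtime_checkable(ECSProtocol)
except TypeError as exc:
    decorator_error = str(exc)

print(protocol_error)
print(decorator_error)
print(issubclass(ECSProtocol, NewECSProtocol))  # True
```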

[airflow] branch main updated (b83084b -> 64c0bd5)

This is an automated email from the ASF dual-hosted git repository.

ephraimanierobi pushed a change to branch main in repository https://gitbox.apache.org/repos/asf/airflow.git.

from b83084b Add filter by state in DagRun REST API (List Dag Runs) (#20485)

add 64c0bd5 bugfix: deferred tasks does not cancel when DAG is marked fail (#20649)

No new revisions were added by this update.

Summary of changes:

airflow/api/common/experimental/mark_tasks.py | 119 ++

1 file changed, 84 insertions(+), 35 deletions(-)

[GitHub] [airflow] ephraimbuddy closed issue #20580: Triggers are not terminated when DAG is mark failed

ephraimbuddy closed issue #20580: URL: https://github.com/apache/airflow/issues/20580

[GitHub] [airflow] ephraimbuddy merged pull request #20649: bugfix: deferred tasks does not cancel when DAG is marked fail

ephraimbuddy merged pull request #20649: URL: https://github.com/apache/airflow/pull/20649

[GitHub] [airflow] eladkal commented on a change in pull request #20360: Standardize DynamoDB naming

eladkal commented on a change in pull request #20360: URL: https://github.com/apache/airflow/pull/20360#discussion_r778603523

##

File path: airflow/providers/amazon/aws/hooks/dynamodb.py

##

@@ -18,13 +18,14 @@

"""This module contains the AWS DynamoDB hook"""

+import warnings

from typing import Iterable, List, Optional

from airflow.exceptions import AirflowException

from airflow.providers.amazon.aws.hooks.base_aws import AwsBaseHook

-class AwsDynamoDBHook(AwsBaseHook):

+class DynamoDBHook(AwsBaseHook):

Review comment:

The entry in the deprecated classes list points the contrib hook to `AwsDynamoDBHook`. This is no longer true; it should point to `DynamoDBHook`.
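The diff adds `import warnings` next to the rename, which suggests the old name is kept as a deprecated alias. A minimal sketch of that pattern, assuming the alias warns when instantiated; the stand-in base class, the warning text and the `table_name` argument are illustrative, not the PR's code.

```python
import warnings


class DynamoDBHook:
    """Stand-in for the renamed hook (the real one subclasses AwsBaseHook)."""

    def __init__(self, *args, **kwargs):
        self.args = args
        self.kwargs = kwargs


class AwsDynamoDBHook(DynamoDBHook):
    """Deprecated name kept so that existing imports keep working."""

    def __init__(self, *args, **kwargs):
        warnings.warn(
            "This class is deprecated. Please use `DynamoDBHook`.",
            DeprecationWarning,
            stacklevel=2,  # point the warning at the caller, not at this __init__
        )
        super().__init__(*args, **kwargs)


with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    hook = AwsDynamoDBHook(table_name="my_table")
```

Because the alias subclasses the new hook, `isinstance(hook, DynamoDBHook)` holds, so code that checks for the new name also accepts old-style instances.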

[GitHub] [airflow] eladkal commented on a change in pull request #20369: Standardize AWS Batch naming

eladkal commented on a change in pull request #20369: URL: https://github.com/apache/airflow/pull/20369#discussion_r778602745

##

File path: airflow/providers/amazon/aws/operators/batch.py

##

@@ -26,17 +26,18 @@

- http://boto3.readthedocs.io/en/latest/reference/services/batch.html

- https://docs.aws.amazon.com/batch/latest/APIReference/Welcome.html

"""

-from typing import TYPE_CHECKING, Any, Optional, Sequence

+import warnings

+from typing import Any, Optional, Sequence, TYPE_CHECKING

Review comment:

```suggestion
from typing import TYPE_CHECKING, Any, Optional, Sequence
```

to fix static checks
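Why `TYPE_CHECKING` goes first: as far as I can tell, isort's default `order_by_type` setting sorts imported names with CONSTANT_CASE names first, then CamelCase names (treated as classes), then everything else, comparing case-insensitively within each group. The function below is a rough, hypothetical approximation of that rule for illustration, not isort's own code.

```python
from typing import List


def isort_member_key(name: str) -> str:
    """Rough approximation of isort's order_by_type key for imported names."""
    if name.isupper() and len(name) > 1:
        kind = "A"  # CONSTANT_CASE, e.g. TYPE_CHECKING
    elif name[:1].isupper():
        kind = "B"  # CamelCase, treated as a class
    else:
        kind = "C"  # everything else (functions, variables)
    return kind + name.lower()


def sort_members(names: List[str]) -> List[str]:
    return sorted(names, key=isort_member_key)


print(sort_members(["Any", "Optional", "Sequence", "TYPE_CHECKING"]))
# ['TYPE_CHECKING', 'Any', 'Optional', 'Sequence']
```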

[GitHub] [airflow] pingzh commented on a change in pull request #20361: Add context var hook to inject more env vars

pingzh commented on a change in pull request #20361:

URL: https://github.com/apache/airflow/pull/20361#discussion_r778598911

##

File path: tests/utils/test_operator_helpers.py

##

@@ -71,6 +71,29 @@ def test_context_to_airflow_vars_all_context(self):

'AIRFLOW_CTX_DAG_EMAIL': 'ema...@test.com',

}

+def test_context_to_airflow_vars_with_default_context_vars(self):

+with mock.patch('airflow.settings.get_airflow_context_vars') as mock_method:

+airflow_cluster = 'cluster-a'

+mock_method.return_value = {'airflow_cluster': airflow_cluster}

+

+context_vars = operator_helpers.context_to_airflow_vars(self.context)

+assert context_vars['airflow.ctx.airflow_cluster'] == airflow_cluster

+

+context_vars = operator_helpers.context_to_airflow_vars(self.context, in_env_var_format=True)

+assert context_vars['AIRFLOW_CTX_AIRFLOW_CLUSTER'] == airflow_cluster

+

+with mock.patch('airflow.settings.get_airflow_context_vars') as mock_method:

+mock_method.return_value = {'airflow_cluster': [1, 2]}

+with pytest.raises(TypeError) as error:

+assert "value of key must be string, not " == error.value

+operator_helpers.context_to_airflow_vars(self.context)

+

+with mock.patch('airflow.settings.get_airflow_context_vars') as mock_method:

+mock_method.return_value = {1: "value"}

+with pytest.raises(TypeError) as error:

+assert 'key <1> must be string' == error.value

+operator_helpers.context_to_airflow_vars(self.context)

Review comment:

Thanks, ah, my bad, fixed.


[GitHub] [airflow] Bowrna commented on a change in pull request #20338: Breeze2 build ci image

Bowrna commented on a change in pull request #20338:

URL: https://github.com/apache/airflow/pull/20338#discussion_r778596878

##

File path: dev/breeze/src/airflow_breeze/breeze.py

##

@@ -95,12 +93,103 @@ def shell(verbose: bool):

@option_verbose

-@main.command()

-def build_ci_image(verbose: bool):

-"""Builds breeze.ci image for breeze.py."""

+@main.command(name='build-ci-image')

+@click.option(

+'--additional-extras',

+help='This installs additional extra package while installing airflow in the image.',

+)

+@click.option('-p', '--python', help='Choose your python version')

+@click.option(

+'--additional-dev-apt-deps', help='Additional apt dev dependencies to use when building the images.'

+)

+@click.option(

+'--additional-runtime-apt-deps',

+help='Additional apt runtime dependencies to use when building the images.',

+)

+@click.option(

+'--additional-python-deps', help='Additional python dependencies to use when building the images.'

+)

+@click.option(

+'--additional_dev_apt_command', help='Additional command executed before dev apt deps are installed.'

+)

+@click.option(

+'--additional_runtime_apt_command',

+help='Additional command executed before runtime apt deps are installed.',

+)

+@click.option(

+'--additional_dev_apt_env', help='Additional environment variables set when adding dev dependencies.'

+)

+@click.option(

+'--additional_runtime_apt_env',

+help='Additional environment variables set when adding runtime dependencies.',

+)

+@click.option('--dev-apt-command', help='The basic command executed before dev apt deps are installed.')

+@click.option(

+'--dev-apt-deps',

+help='The basic apt dev dependencies to use when building the images.',

+)

+@click.option(

+'--runtime-apt-command', help='The basic command executed before runtime apt deps are installed.'

+)

+@click.option(

+'--runtime-apt-deps',

+help='The basic apt runtime dependencies to use when building the images.',

+)

+@click.option('--github-repository', help='Choose repository to push/pull image.')

+@click.option('--build-cache', help='Cache option')

+@click.option('--upgrade-to-newer-dependencies', is_flag=True)

+def build_ci_image(

+verbose: bool,

+additional_extras: Optional[str],

+python: Optional[float],

+additional_dev_apt_deps: Optional[str],

+additional_runtime_apt_deps: Optional[str],

+additional_python_deps: Optional[str],

+additional_dev_apt_command: Optional[str],

+additional_runtime_apt_command: Optional[str],

+additional_dev_apt_env: Optional[str],

+additional_runtime_apt_env: Optional[str],

+dev_apt_command: Optional[str],

+dev_apt_deps: Optional[str],

+runtime_apt_command: Optional[str],

+runtime_apt_deps: Optional[str],

+github_repository: Optional[str],

+build_cache: Optional[str],

+upgrade_to_newer_dependencies: bool,

+):

+"""Builds docker CI image without entering the container."""

+from airflow_breeze.ci.build_image import build_image

+

if verbose:

console.print(f"\n[blue]Building image of airflow from {__AIRFLOW_SOURCES_ROOT}[/]\n")

-raise ClickException("\nPlease implement building the CI image\n")

+build_image(

+verbose,

+additional_extras=additional_extras,

+python_version=python,

+additional_dev_apt_deps=additional_dev_apt_deps,

+additional_runtime_apt_deps=additional_runtime_apt_deps,

+additional_python_deps=additional_python_deps,

+additional_runtime_apt_command=additional_runtime_apt_command,

+additional_dev_apt_command=additional_dev_apt_command,

+additional_dev_apt_env=additional_dev_apt_env,

+additional_runtime_apt_env=additional_runtime_apt_env,

+dev_apt_command=dev_apt_command,

+dev_apt_deps=dev_apt_deps,

+runtime_apt_command=runtime_apt_command,

+runtime_apt_deps=runtime_apt_deps,

+github_repository=github_repository,

+docker_cache=build_cache,

+upgrade_to_newer_dependencies=upgrade_to_newer_dependencies,

+)

+

+

+@option_verbose

+@main.command(name='build-prod-image')

+def build_prod_image(verbose: bool):

+"""Builds docker Production image without entering the container."""

+if verbose:

+console.print("\n[blue]Building image[/]\n")

+raise ClickException("\nPlease implement building the Production image\n")

if __name__ == '__main__':

Review comment:

@potiuk I have resolved this issue, but I hit a circular import error when I added the import at the beginning of the file. So I added the import just before the mkdir command. Is this the correct way to handle it, or can you suggest a better way?
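Moving the import into the command body, as Bowrna did, is a common way to break an import cycle: the import statement only executes when the command runs, by which point both modules have finished loading. The self-contained sketch below reproduces the situation with two throwaway modules. It assumes, as the error suggests, that the builder module imports something back from the CLI module; `sketch_cli`, `sketch_builder` and `SOURCES_ROOT` are hypothetical stand-ins for `breeze.py`, `airflow_breeze.ci.build_image` and `__AIRFLOW_SOURCES_ROOT`.

```python
import importlib
import sys
import tempfile
import textwrap
from pathlib import Path

# The builder needs a constant defined in the CLI module.
BUILDER_SOURCE = '''
from sketch_cli import SOURCES_ROOT

def build_image():
    return f"building from {SOURCES_ROOT}"
'''

# Variant 1: import at the top of the CLI module, which creates the cycle.
CLI_TOP_LEVEL_IMPORT = '''
from sketch_builder import build_image

SOURCES_ROOT = "/opt/airflow"

def build_ci_image():
    return build_image()
'''

# Variant 2: import deferred into the command body.
CLI_DEFERRED_IMPORT = '''
SOURCES_ROOT = "/opt/airflow"

def build_ci_image():
    from sketch_builder import build_image  # resolved only when the command runs
    return build_image()
'''


def run_layout(cli_source: str) -> str:
    """Write both modules to a temp dir, import the CLI module and run the command."""
    with tempfile.TemporaryDirectory() as tmp:
        Path(tmp, "sketch_cli.py").write_text(textwrap.dedent(cli_source))
        Path(tmp, "sketch_builder.py").write_text(textwrap.dedent(BUILDER_SOURCE))
        sys.path.insert(0, tmp)
        importlib.invalidate_caches()
        try:
            cli = importlib.import_module("sketch_cli")
            return cli.build_ci_image()
        except ImportError as exc:
            return f"ImportError: {exc}"
        finally:
            sys.path.remove(tmp)
            for name in ("sketch_cli", "sketch_builder"):
                sys.modules.pop(name, None)


print(run_layout(CLI_TOP_LEVEL_IMPORT))  # ImportError: cannot import name 'SOURCES_ROOT' ...
print(run_layout(CLI_DEFERRED_IMPORT))   # building from /opt/airflow
```

A common alternative is to move the shared constants into a small third module that both files import, which removes the cycle rather than deferring it.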


[GitHub] [airflow] uranusjr commented on a change in pull request #20361: Add context var hook to inject more env vars

uranusjr commented on a change in pull request #20361:

URL: https://github.com/apache/airflow/pull/20361#discussion_r778592427

##

File path: tests/utils/test_operator_helpers.py

##

@@ -71,6 +71,29 @@ def test_context_to_airflow_vars_all_context(self):

'AIRFLOW_CTX_DAG_EMAIL': 'ema...@test.com',

}

+def test_context_to_airflow_vars_with_default_context_vars(self):

+with mock.patch('airflow.settings.get_airflow_context_vars') as mock_method:

+airflow_cluster = 'cluster-a'

+mock_method.return_value = {'airflow_cluster': airflow_cluster}

+

+context_vars = operator_helpers.context_to_airflow_vars(self.context)

+assert context_vars['airflow.ctx.airflow_cluster'] == airflow_cluster

+

+context_vars = operator_helpers.context_to_airflow_vars(self.context, in_env_var_format=True)

+assert context_vars['AIRFLOW_CTX_AIRFLOW_CLUSTER'] == airflow_cluster

+

+with mock.patch('airflow.settings.get_airflow_context_vars') as mock_method:

+mock_method.return_value = {'airflow_cluster': [1, 2]}

+with pytest.raises(TypeError) as error:

+assert "value of key must be string, not " == error.value

+operator_helpers.context_to_airflow_vars(self.context)

Review comment:

```suggestion

with pytest.raises(TypeError) as error:

operator_helpers.context_to_airflow_vars(self.context)

assert "value of key must be string, not " == error.value

```

##

File path: tests/utils/test_operator_helpers.py

##

@@ -71,6 +71,29 @@ def test_context_to_airflow_vars_all_context(self):

'AIRFLOW_CTX_DAG_EMAIL': 'ema...@test.com',

}

+def test_context_to_airflow_vars_with_default_context_vars(self):

+with mock.patch('airflow.settings.get_airflow_context_vars') as mock_method:

+airflow_cluster = 'cluster-a'

+mock_method.return_value = {'airflow_cluster': airflow_cluster}

+

+context_vars = operator_helpers.context_to_airflow_vars(self.context)

+assert context_vars['airflow.ctx.airflow_cluster'] == airflow_cluster

+

+context_vars = operator_helpers.context_to_airflow_vars(self.context, in_env_var_format=True)

+assert context_vars['AIRFLOW_CTX_AIRFLOW_CLUSTER'] == airflow_cluster

+

+with mock.patch('airflow.settings.get_airflow_context_vars') as mock_method:

+mock_method.return_value = {'airflow_cluster': [1, 2]}

+with pytest.raises(TypeError) as error:

+assert "value of key must be string, not " == error.value

+operator_helpers.context_to_airflow_vars(self.context)

+

+with mock.patch('airflow.settings.get_airflow_context_vars') as mock_method:

+mock_method.return_value = {1: "value"}

+with pytest.raises(TypeError) as error:

+assert 'key <1> must be string' == error.value

+operator_helpers.context_to_airflow_vars(self.context)

Review comment:

```suggestion

with pytest.raises(TypeError) as error:

operator_helpers.context_to_airflow_vars(self.context)

assert 'key <1> must be string' == error.value

```


[GitHub] [airflow] potiuk commented on pull request #20664: Switch to buildx to build airflow images

potiuk commented on pull request #20664: URL: https://github.com/apache/airflow/pull/20664#issuecomment-1005438706 cc: @Bowrna @edithturn This will make your job much easier, as it simplifies a lot of the "caching" complexity. That's why I did not want you to start looking at it before: I knew we were going to get it WAY simpler with BuildKit.

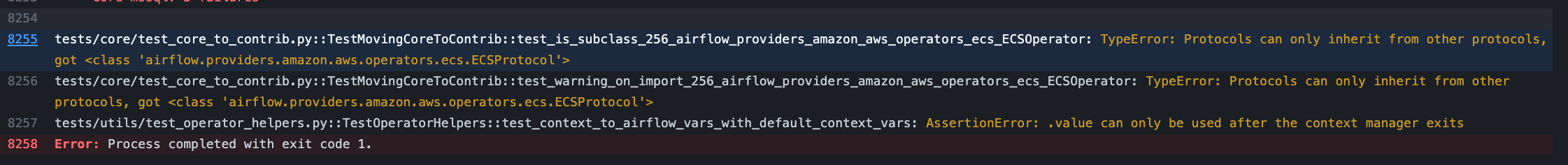

[GitHub] [airflow] uranusjr commented on pull request #20361: Add context var hook to inject more env vars

uranusjr commented on pull request #20361: URL: https://github.com/apache/airflow/pull/20361#issuecomment-1005438826 Oh, the last one is from your change though; you’re using `with pytest.raises(TypeError) as error` incorrectly.
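uranusjr's point, spelled out: inside `with pytest.raises(...) as error:`, any statement placed after the call that raises never runs, and any statement placed before it runs before pytest has captured anything, so `error.value` is not usable yet. Also, `error.value` is the exception object itself, so comparing it with a string is always false; compare `str(error.value)` or pass `match=`. A minimal sketch with a hypothetical `validate_context_key` stand-in (not Airflow's code); it needs pytest installed.

```python
import pytest


def validate_context_key(key):
    """Hypothetical stand-in for the key check in context_to_airflow_vars."""
    if not isinstance(key, str):
        raise TypeError(f"key <{key}> must be string")
    return key


def test_rejects_non_string_key():
    # Only the call expected to raise goes inside the block; any statement
    # after it inside the block would never run.
    with pytest.raises(TypeError) as error:
        validate_context_key(1)
    # error.value is the exception object, so compare its string form.
    assert str(error.value) == "key <1> must be string"


def test_rejects_non_string_key_with_match():
    # match= applies re.search() to str() of the raised exception.
    with pytest.raises(TypeError, match=r"key <1> must be string"):
        validate_context_key(1)
```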

[GitHub] [airflow] uranusjr commented on pull request #20361: Add context var hook to inject more env vars

uranusjr commented on pull request #20361: URL: https://github.com/apache/airflow/pull/20361#issuecomment-1005437824 Looks unrelated, probably someone merged some bad code to main. I’ll investigate separately.

[GitHub] [airflow] potiuk closed pull request #20238: Optimize dockerfiles for local rebuilds

potiuk closed pull request #20238: URL: https://github.com/apache/airflow/pull/20238

[GitHub] [airflow] potiuk commented on pull request #20664: Switch to buildx to build airflow images

potiuk commented on pull request #20664: URL: https://github.com/apache/airflow/pull/20664#issuecomment-1005437311 This is a VAST simplification of our docker build caching (using the modern BuildKit plugin). I have to do some more testing with it, but on top of removing several hundred lines of Bash that I implemented when BuildKit did not have all the capabilities needed, it's also very stable and robust and opens the path to multi-architecture images (for ARM). I will do some more testing, but I would also love to merge #20238 (optimization of Dockerfiles), which this build is based on.

[GitHub] [airflow] uranusjr commented on a change in pull request #20651: Fix mypy in providers/grpc and providers/imap

uranusjr commented on a change in pull request #20651: URL: https://github.com/apache/airflow/pull/20651#discussion_r778589556

##

File path: airflow/providers/imap/hooks/imap.py

##

@@ -71,7 +71,7 @@ def get_conn(self) -> 'ImapHook':

"""

if not self.mail_client:

conn = self.get_connection(self.imap_conn_id)

-self.mail_client = imaplib.IMAP4_SSL(conn.host, conn.port or imaplib.IMAP4_SSL_PORT)

+self.mail_client = imaplib.IMAP4_SSL(conn.host, conn.port or imaplib.IMAP4_SSL.port)

Review comment:

I don’t think `IMAP4_SSL.port` is going to work. Whether `IMAP4_SSL_PORT` is public or not is slightly ambiguous: it is not by module definition, [but the variable actually appears in documentation](https://docs.python.org/3/library/imaplib.html#imaplib.IMAP4_SSL). In either case, the IMAP-over-SSL port is standardised (993), so maybe we should not rely on `imaplib.IMAP4_SSL_PORT` at all and just define our own variable.
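Following that suggestion, a minimal sketch with a hook-local constant. The names `IMAP_SSL_DEFAULT_PORT`, `resolve_imap_port` and `open_mail_client` are hypothetical, and `configured_port` stands in for `conn.port`. As far as I can tell, `port` is only set on an `IMAP4_SSL` instance once it connects, which would explain why the class attribute lookup `IMAP4_SSL.port` fails.

```python
import imaplib
from typing import Optional

# Hypothetical module-level constant: IMAP over SSL uses the well-known port 993,
# so the hook does not depend on imaplib's IMAP4_SSL_PORT.
IMAP_SSL_DEFAULT_PORT = 993


def resolve_imap_port(configured_port: Optional[int]) -> int:
    """Use the connection's port if set, otherwise fall back to 993."""
    return configured_port or IMAP_SSL_DEFAULT_PORT


def open_mail_client(host: str, configured_port: Optional[int] = None) -> "imaplib.IMAP4_SSL":
    # Sketch of get_conn(); it connects immediately, so it is not called here.
    return imaplib.IMAP4_SSL(host, resolve_imap_port(configured_port))


print(resolve_imap_port(None))  # 993
print(resolve_imap_port(1993))  # 1993
```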

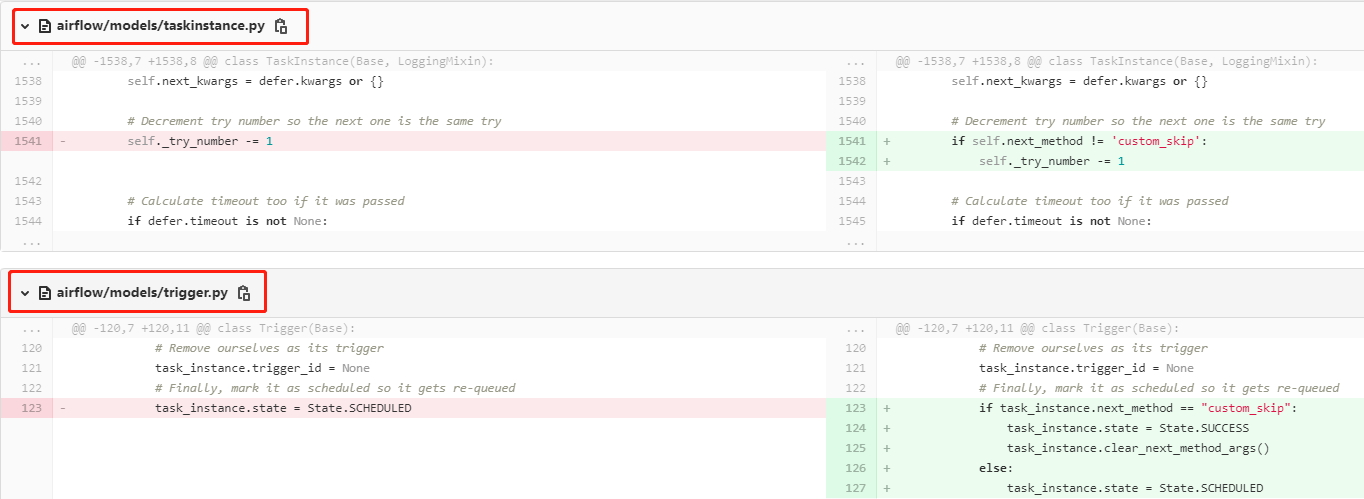

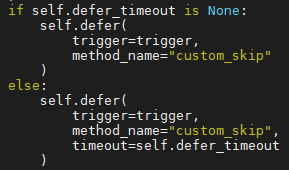

[GitHub] [airflow] Greetlist commented on issue #20308: Multi Schedulers don't synchronize task instance state when using Triggerer

Greetlist commented on issue #20308: URL: https://github.com/apache/airflow/issues/20308#issuecomment-1005435631 For now, I work around this problem as follows: I pass *custom_skip* args to method_name when invoking the **defer** function. It's a tricky solution.

[airflow] branch main updated (9c0ba1b -> b83084b)

uranusjr pushed a change to branch main in repository https://gitbox.apache.org/repos/asf/airflow.git.

from 9c0ba1b Standardize AWS ECS naming (#20332)

add b83084b Add filter by state in DagRun REST API (List Dag Runs) (#20485)

No new revisions were added by this update.

Summary of changes:

.../api_connexion/endpoints/dag_run_endpoint.py | 10 +++-

airflow/api_connexion/openapi/v1.yaml | 1 +

airflow/api_connexion/schemas/dag_run_schema.py | 1 +

.../endpoints/test_dag_run_endpoint.py | 66 ++

4 files changed, 54 insertions(+), 24 deletions(-)

[GitHub] [airflow] potiuk opened a new pull request #20664: Switch to buildx to build airflow images

potiuk opened a new pull request #20664: URL: https://github.com/apache/airflow/pull/20664

BuildKit is a much more modern Docker build mechanism. It supports multi-architecture builds, which makes it suitable for our future ARM support; it also has a nicer UI and much more sophisticated caching mechanisms, and it better supports multi-segment builds. BuildKit was promoted to official quite a while ago and is rather stable now.

We can also now install the BuildKit plugin to Docker, which adds the capability of building and managing cache using dedicated builders (previously the BuildKit cache had to be managed with rather complex external tools). This gives us an opportunity to vastly simplify our build scripts, because BuildKit now has a much more robust caching mechanism than the old docker build (which forced us to pull images before using them as cache).

Efficient caching involved a lot of complexity, but with BuildKit all of it can be vastly simplified and we can get rid of:

* keeping base python images in our registry
* keeping build segments for the prod image in our registry
* keeping manifest images in our registry
* deciding whether to pull the image (not needed now: we can always build the image with --cache-from and BuildKit will pull cached layers as needed)
* building the image when performing pre-commit (instead, we simply encourage users to rebuild the image via the breeze command)
* pulling the images before building
* a separate 'build' cache kept in our registry (not needed any more, as BuildKit can keep the cache for all segments of a multi-segment build in a single cache)
* the manual spinner (the nice animated tty UI of BuildKit eliminates the need for it)
* and a number of other complexities.

Depends on #20238
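The description says that with BuildKit we can always build with `--cache-from` and keep the cache for all segments of a multi-segment build in a single cache. A hedged sketch of how a Python build script might assemble such a `docker buildx build` invocation; the function name, image tag and cache reference are placeholders, not the PR's code. `--cache-to type=registry,...,mode=max` is BuildKit's option for exporting cache for every stage rather than only the final image.

```python
from typing import List, Sequence


def buildx_command(
    image_tag: str,
    cache_ref: str,
    platforms: Sequence[str] = ("linux/amd64",),
    export_cache: bool = False,
    dockerfile: str = "Dockerfile",
    context: str = ".",
) -> List[str]:
    """Assemble a `docker buildx build` command line (does not run it)."""
    cmd = [
        "docker", "buildx", "build",
        "--file", dockerfile,
        "--tag", image_tag,
        # Listing several platforms (e.g. adding linux/arm64) builds a multi-architecture image.
        "--platform", ",".join(platforms),
        # BuildKit fetches only the cached layers it needs; no explicit pull beforehand.
        "--cache-from", f"type=registry,ref={cache_ref}",
    ]
    if export_cache:
        # mode=max exports cache for all stages of a multi-stage build.
        cmd += ["--cache-to", f"type=registry,ref={cache_ref},mode=max"]
    cmd.append(context)
    return cmd


cmd = buildx_command(
    "registry.example.com/airflow-ci:latest",
    "registry.example.com/airflow-ci:cache",
    platforms=("linux/amd64", "linux/arm64"),
    export_cache=True,
)
print(" ".join(cmd))
```

Returning the argument list rather than running it keeps the sketch testable; a real script would pass it to `subprocess.run(cmd, check=True)`.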

[GitHub] [airflow] pingzh edited a comment on pull request #20361: Add context var hook to inject more env vars

pingzh edited a comment on pull request #20361: URL: https://github.com/apache/airflow/pull/20361#issuecomment-1005433855 @potiuk @uranusjr any ideas about this error? https://github.com/apache/airflow/runs/4711391810?check_suite_focus=true#step:8:8255 I have rebased onto the latest `main` branch.


[GitHub] [airflow] pingzh commented on pull request #20361: Add context var hook to inject more env vars

pingzh commented on pull request #20361: URL: https://github.com/apache/airflow/pull/20361#issuecomment-1005433855 @potiuk @uranusjr any ideas about this error? I have rebased onto the latest `main` branch.

[GitHub] [airflow] uranusjr merged pull request #20485: Add filter by state in DagRun REST API (List Dag Runs)

uranusjr merged pull request #20485: URL: https://github.com/apache/airflow/pull/20485

[GitHub] [airflow] boring-cyborg[bot] commented on pull request #20485: Add filter by state in DagRun REST API (List Dag Runs)

boring-cyborg[bot] commented on pull request #20485: URL: https://github.com/apache/airflow/pull/20485#issuecomment-1005429527 Awesome work, congrats on your first merged pull request!

[GitHub] [airflow] pingzh commented on a change in pull request #20361: Add context var hook to inject more env vars

pingzh commented on a change in pull request #20361:

URL: https://github.com/apache/airflow/pull/20361#discussion_r778558416

##

File path: airflow/utils/operator_helpers.py

##

@@ -77,6 +87,21 @@ def context_to_airflow_vars(context: Mapping[str, Any],

in_env_var_format: bool

(dag_run, 'run_id', 'AIRFLOW_CONTEXT_DAG_RUN_ID'),

]

+context_params = settings.get_airflow_context_vars(context)

+for key, value in context_params.items():

+if not isinstance(key, str):

+raise TypeError("key must be string")

+if not isinstance(value, str):

+raise TypeError("value must be string")

Review comment:

I think this should be clear enough, but it wouldn't hurt to be more explicit.

[GitHub] [airflow] github-actions[bot] commented on pull request #20656: Update base sensor operator to support XCOM return value

github-actions[bot] commented on pull request #20656: URL: https://github.com/apache/airflow/pull/20656#issuecomment-1005398080 The PR most likely needs to run the full matrix of tests because it modifies parts of the core of Airflow. However, committers might decide to merge it quickly and take the risk. If they don't merge it quickly, please rebase it to the latest main at your convenience, or amend the last commit of the PR, and push it with --force-with-lease.

[GitHub] [airflow] uranusjr commented on a change in pull request #20361: Add context var hook to inject more env vars

uranusjr commented on a change in pull request #20361:

URL: https://github.com/apache/airflow/pull/20361#discussion_r778554258

##

File path: airflow/utils/operator_helpers.py

##

@@ -77,6 +87,21 @@ def context_to_airflow_vars(context: Mapping[str, Any],

in_env_var_format: bool

(dag_run, 'run_id', 'AIRFLOW_CONTEXT_DAG_RUN_ID'),

]

+context_params = settings.get_airflow_context_vars(context)

+for key, value in context_params.items():

+if not isinstance(key, str):

+raise TypeError("key must be string")

+if not isinstance(value, str):

+raise TypeError("value must be string")

Review comment:

Can we show the value of `key` and `value` in the exception message? This would help the user debug the problem. Something like `f"key must be string, not {key!r}"` would be enough (and the value variant should show both `key` and `value`).
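
A minimal sketch of the suggested messages; `validate_context_vars` is a hypothetical helper name, not the PR's code:

```python
from typing import Any, Dict, Mapping


def validate_context_vars(context_params: Mapping[Any, Any]) -> Dict[str, str]:
    """Hypothetical helper: reject non-string keys/values with a descriptive TypeError."""
    for key, value in context_params.items():
        if not isinstance(key, str):
            # Show the offending key so the user can find it in their hook.
            raise TypeError(f"key must be string, not {key!r}")
        if not isinstance(value, str):
            # Show both the key and the bad value, as suggested above.
            raise TypeError(f"value for key {key!r} must be string, not {value!r}")
    return dict(context_params)
```

For example, `validate_context_vars({"team": 1})` raises `TypeError("value for key 'team' must be string, not 1")`, which points straight at the entry to fix.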

[GitHub] [airflow] github-actions[bot] commented on pull request #20657: Clarify variable names and use cleaner set diff syntax

github-actions[bot] commented on pull request #20657: URL: https://github.com/apache/airflow/pull/20657#issuecomment-1005392592 The PR most likely needs to run the full matrix of tests because it modifies parts of the core of Airflow. However, committers might decide to merge it quickly and take the risk. If they don't merge it quickly, please rebase it to the latest main at your convenience, or amend the last commit of the PR, and push it with --force-with-lease.

[GitHub] [airflow] jedcunningham closed pull request #20661: Chart: 1.4.0 changelog

jedcunningham closed pull request #20661: URL: https://github.com/apache/airflow/pull/20661

[GitHub] [airflow] uranusjr commented on issue #20320: Airflow API throwing 500 when user does not have last name

uranusjr commented on issue #20320: URL: https://github.com/apache/airflow/issues/20320#issuecomment-1005381988 Go ahead.

[GitHub] [airflow] github-actions[bot] commented on pull request #20663: Allow Viewing DagRuns and TIs if a user has DAG "read" perms

github-actions[bot] commented on pull request #20663: URL: https://github.com/apache/airflow/pull/20663#issuecomment-1005381486 The PR is likely OK to be merged with just a subset of tests for default Python and Database versions without running the full matrix of tests, because it does not modify the core of Airflow. If the committers decide that the full test matrix is needed, they will add the label 'full tests needed'. Then you should rebase to the latest main or amend the last commit of the PR, and push it with --force-with-lease.

[GitHub] [airflow] arsalanfardi commented on issue #20320: Airflow API throwing 500 when user does not have last name

arsalanfardi commented on issue #20320: URL: https://github.com/apache/airflow/issues/20320#issuecomment-1005381384 Can I take this up as my first issue?

[GitHub] [airflow] uranusjr commented on a change in pull request #20530: Add sensor decorator

uranusjr commented on a change in pull request #20530:

URL: https://github.com/apache/airflow/pull/20530#discussion_r778544900

##

File path: airflow/decorators/sensor.py

##

@@ -0,0 +1,89 @@

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+

+from inspect import signature

+from typing import Any, Callable, Collection, Dict, Iterable, Mapping, Optional, Tuple

+

+from airflow.decorators.base import get_unique_task_id, task_decorator_factory

+from airflow.models.taskinstance import Context

+from airflow.sensors.base import BaseSensorOperator

+

+

+class DecoratedSensorOperator(BaseSensorOperator):

+"""

+Wraps a Python callable and captures args/kwargs when called for execution.

+

+:param python_callable: A reference to an object that is callable

+:type python_callable: python callable

+:param task_id: task Id

+:type task_id: str

+:param op_args: a list of positional arguments that will get unpacked when

+calling your callable (templated)

+:type op_args: list

+:param op_kwargs: a dictionary of keyword arguments that will get unpacked

+in your function (templated)

+:type op_kwargs: dict

+:param kwargs_to_upstream: For certain operators, we might need to upstream certain arguments

+that would otherwise be absorbed by the DecoratedOperator (for example python_callable for the

+PythonOperator). This gives a user the option to upstream kwargs as needed.

+:type kwargs_to_upstream: dict

+"""

+

+template_fields: Iterable[str] = ('op_args', 'op_kwargs')

+template_fields_renderers: Dict[str, str] = {"op_args": "py", "op_kwargs": "py"}

+

+# since we won't mutate the arguments, we should just do the shallow copy

+# there are some cases we can't deepcopy the objects (e.g protobuf).

+shallow_copy_attrs: Tuple[str, ...] = ('python_callable',)

Review comment:

Why does this need to be a tuple specifically, not a more general protocol, e.g. Sequence or Collection?
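
A sketch of why shallow-copy attributes matter, assuming an operator's `__deepcopy__` copies the listed attributes with `copy.copy()` and everything else with `copy.deepcopy()`. `SketchOperator`, `_base_shallow_copy_attrs` and `NotDeepCopyable` are hypothetical. In this sketch the two lists are combined with `+`, which only works tuple-to-tuple; if the real base class does something similar, that could be one reason for the concrete `Tuple` annotation, but the thread doesn't confirm it.

```python
import copy
from typing import Any, Dict, Tuple


class SketchOperator:
    """Hypothetical operator that honours shallow_copy_attrs in __deepcopy__."""

    # Attributes copied with copy.copy() rather than copy.deepcopy().
    shallow_copy_attrs: Tuple[str, ...] = ("python_callable",)
    # Hypothetical base-level list; `+` below needs both sides to be tuples.
    _base_shallow_copy_attrs: Tuple[str, ...] = ()

    def __init__(self, python_callable: Any, op_kwargs: Dict[str, Any]) -> None:
        self.python_callable = python_callable
        self.op_kwargs = op_kwargs

    def __deepcopy__(self, memo: Dict[int, Any]) -> "SketchOperator":
        cls = self.__class__
        result = cls.__new__(cls)
        memo[id(self)] = result  # register early so cycles resolve to the copy
        shallow = cls.shallow_copy_attrs + cls._base_shallow_copy_attrs
        for name, value in self.__dict__.items():
            if name in shallow:
                setattr(result, name, copy.copy(value))
            else:
                setattr(result, name, copy.deepcopy(value, memo))
        return result


class NotDeepCopyable:
    """Stands in for an object that cannot be deep-copied (the hunk cites protobuf)."""

    def __deepcopy__(self, memo: Dict[int, Any]) -> "NotDeepCopyable":
        raise TypeError("cannot deepcopy this object")
```

With `op = SketchOperator(NotDeepCopyable(), {"rows": [1, 2]})`, `copy.deepcopy(op)` succeeds and `op_kwargs` is fully duplicated; dropping `"python_callable"` from `shallow_copy_attrs` would make the same call raise `TypeError`.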

[GitHub] [airflow] kaxil commented on pull request #20663: Allow Viewing DagRuns and TIs if a user has DAG "read" perms

kaxil commented on pull request #20663: URL: https://github.com/apache/airflow/pull/20663#issuecomment-1005377013 @Jorricks, can you take a look at it, please? This PR still respects the table you had in the PR description for https://github.com/apache/airflow/pull/16634 but allows viewing records when users have read-only perms.

[GitHub] [airflow] kaxil opened a new pull request #20663: Allow Viewing DagRuns and TIs if a user has DAG "read" perms

kaxil opened a new pull request #20663: URL: https://github.com/apache/airflow/pull/20663 This behaviour was changed in Airflow 2.2.0 by https://github.com/apache/airflow/pull/16634, which prevents a user from even viewing DagRun and TI records if they don't have "edit" permissions on the DAG, even though they have "read" permissions. The behaviour seems inconsistent, as the user can still view those records from the Graph and Tree views of the DAG. Since all the actions, such as Delete and Clear, are already guarded by `@action_has_dag_edit_access`, the approach in this PR is better: when a user tries to perform any action from the List Dag Run view, such as deleting a record, they get an Access Denied error. cc @Jorricks
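
A toy model of the permission split this PR describes: listing needs only read access, while actions are guarded by an edit-access check. `User`, `requires_dag_edit`, `list_dag_runs` and `delete_dag_run` are hypothetical stand-ins; they are not Airflow's Flask-AppBuilder security manager or the real `@action_has_dag_edit_access` decorator.

```python
from dataclasses import dataclass, field
from functools import wraps
from typing import Callable, List, Set


@dataclass
class User:
    """Hypothetical user with per-DAG permissions (not Airflow's FAB model)."""

    readable_dags: Set[str] = field(default_factory=set)
    editable_dags: Set[str] = field(default_factory=set)


def requires_dag_edit(func: Callable) -> Callable:
    """Toy analogue of an edit-access guard placed on an action."""

    @wraps(func)
    def wrapper(user: User, dag_id: str, *args, **kwargs):
        if dag_id not in user.editable_dags:
            raise PermissionError("Access Denied")
        return func(user, dag_id, *args, **kwargs)

    return wrapper


def list_dag_runs(user: User, runs: List[dict]) -> List[dict]:
    # Listing only needs read access, so read-only users still see the records.
    return [run for run in runs if run["dag_id"] in user.readable_dags]


@requires_dag_edit
def delete_dag_run(user: User, dag_id: str, run_id: str) -> str:
    return f"deleted {dag_id}/{run_id}"
```

A read-only user can list runs for `etl` but gets `PermissionError("Access Denied")` from `delete_dag_run`, which mirrors the behaviour the description argues for.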

[GitHub] [airflow] pingzh commented on a change in pull request #20361: Add context var hook to inject more env vars

pingzh commented on a change in pull request #20361: URL: https://github.com/apache/airflow/pull/20361#discussion_r778523450 ## File path: airflow/utils/operator_helpers.py ## @@ -75,6 +86,19 @@ def context_to_airflow_vars(context: Mapping[str, Any], in_env_var_format: bool (dag_run, 'run_id', 'AIRFLOW_CONTEXT_DAG_RUN_ID'), ] +context_params = settings.get_airflow_context_vars(context) +for key, value in context_params.items(): +assert type(key) == str, "key must be string" +assert type(value) == str, "value must be string" Review comment: Ah, thanks for pointing this out.

[GitHub] [airflow] uranusjr commented on a change in pull request #20361: Add context var hook to inject more env vars

uranusjr commented on a change in pull request #20361: URL: https://github.com/apache/airflow/pull/20361#discussion_r778521753 ## File path: airflow/utils/operator_helpers.py ## @@ -75,6 +86,16 @@ def context_to_airflow_vars(context: Mapping[str, Any], in_env_var_format: bool (dag_run, 'run_id', 'AIRFLOW_CONTEXT_DAG_RUN_ID'), ] +context_params = settings.get_airflow_context_vars(context) +for key, value in context_params.items(): +if in_env_var_format: +if not key.startswith(ENV_VAR_FORMAT_PREFIX): +key = ENV_VAR_FORMAT_PREFIX + key.upper() +else: +if not key.startswith(DEFAULT_FORMAT_PREFIX): +key = DEFAULT_FORMAT_PREFIX + key +params[key] = value Review comment: Should probably mention this in the documentation page as well.
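
For the documentation, a worked example of the prefixing rule in this hunk may help. The prefix values below are assumptions (check the `ENV_VAR_FORMAT_PREFIX` and `DEFAULT_FORMAT_PREFIX` constants in `airflow/utils/operator_helpers.py`), and `prefix_context_params` is a hypothetical standalone copy of the loop:

```python
from typing import Dict, Mapping

# Assumed prefix values; verify against the real constants before documenting them.
ENV_VAR_FORMAT_PREFIX = "AIRFLOW_CTX_"
DEFAULT_FORMAT_PREFIX = "airflow.ctx."


def prefix_context_params(
    context_params: Mapping[str, str], in_env_var_format: bool
) -> Dict[str, str]:
    """Standalone copy of the key-prefixing loop from the hunk."""
    params: Dict[str, str] = {}
    for key, value in context_params.items():
        if in_env_var_format:
            # Env-var form: add the prefix and upper-case the key, unless already prefixed.
            if not key.startswith(ENV_VAR_FORMAT_PREFIX):
                key = ENV_VAR_FORMAT_PREFIX + key.upper()
        else:
            # Default form: dotted prefix, key case preserved.
            if not key.startswith(DEFAULT_FORMAT_PREFIX):
                key = DEFAULT_FORMAT_PREFIX + key
        params[key] = value
    return params
```

One behaviour worth documenting: a key that already carries the env-var prefix is kept as given, so `AIRFLOW_CTX_team` is not upper-cased.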

[GitHub] [airflow] uranusjr commented on a change in pull request #20361: Add context var hook to inject more env vars

uranusjr commented on a change in pull request #20361: URL: https://github.com/apache/airflow/pull/20361#discussion_r778521582 ## File path: airflow/utils/operator_helpers.py ## @@ -75,6 +86,19 @@ def context_to_airflow_vars(context: Mapping[str, Any], in_env_var_format: bool (dag_run, 'run_id', 'AIRFLOW_CONTEXT_DAG_RUN_ID'), ] +context_params = settings.get_airflow_context_vars(context) +for key, value in context_params.items(): +assert type(key) == str, "key must be string" +assert type(value) == str, "value must be string" Review comment: This should use `isinstance` instead, IMO. There’s also a rule against using asserts in the code base (although I personally disagree with it): https://github.com/apache/airflow/blob/main/CONTRIBUTING.rst#don-t-use-asserts-outside-tests
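
A small sketch of both points in this comment. `TaggedStr` and `assert_fires` are hypothetical names for illustration; the `-O` behaviour is standard CPython, where `assert` statements are compiled away when `__debug__` is false.

```python
import subprocess
import sys


class TaggedStr(str):
    """Hypothetical str subclass, standing in for any string-like key type."""


key = TaggedStr("team")

# Exact-type comparison (the pattern in the hunk) rejects str subclasses...
exact_match = type(key) == str
# ...whereas isinstance() accepts them, which is what "must be a string" usually means.
isinstance_match = isinstance(key, str)


def assert_fires(optimize: bool) -> bool:
    """Run `assert False` in a child interpreter; return True if it raised."""
    cmd = [sys.executable] + (["-O"] if optimize else []) + ["-c", "assert False"]
    return subprocess.run(cmd, capture_output=True).returncode != 0
```

`assert_fires(False)` is `True` but `assert_fires(True)` is `False`: under `python -O` a check written as an assert silently disappears, while an explicit `raise TypeError(...)` still runs.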

[GitHub] [airflow] github-actions[bot] commented on pull request #20659: Disabled edit button in task instances list view page

github-actions[bot] commented on pull request #20659: URL: https://github.com/apache/airflow/pull/20659#issuecomment-1005347651 The PR is likely OK to be merged with just a subset of tests for default Python and Database versions without running the full matrix of tests, because it does not modify the core of Airflow. If the committers decide that the full test matrix is needed, they will add the label 'full tests needed'. Then you should rebase to the latest main or amend the last commit of the PR, and push it with --force-with-lease.

[GitHub] [airflow] github-actions[bot] commented on pull request #19965: Map and Partial DAG authoring interface for Dynamic Task Mapping

github-actions[bot] commented on pull request #19965: URL: https://github.com/apache/airflow/pull/19965#issuecomment-1005326870 The PR most likely needs to run the full matrix of tests because it modifies parts of the core of Airflow. However, committers might decide to merge it quickly and take the risk. If they don't merge it quickly, please rebase it to the latest main at your convenience, or amend the last commit of the PR, and push it with --force-with-lease.

[GitHub] [airflow] pingzh commented on a change in pull request #20361: Add context var hook to inject more env vars

pingzh commented on a change in pull request #20361: URL: https://github.com/apache/airflow/pull/20361#discussion_r778486378 ## File path: airflow/utils/operator_helpers.py ## @@ -75,6 +86,16 @@ def context_to_airflow_vars(context: Mapping[str, Any], in_env_var_format: bool (dag_run, 'run_id', 'AIRFLOW_CONTEXT_DAG_RUN_ID'), ] +context_params = settings.get_airflow_context_vars(context) +for key, value in context_params.items(): +if in_env_var_format: +if not key.startswith(ENV_VAR_FORMAT_PREFIX): +key = ENV_VAR_FORMAT_PREFIX + key.upper() +else: +if not key.startswith(DEFAULT_FORMAT_PREFIX): +key = DEFAULT_FORMAT_PREFIX + key +params[key] = value Review comment: Good point. @potiuk @uranusjr, I have addressed the comment.

[GitHub] [airflow] Bowrna commented on a change in pull request #20338: Breeze2 build ci image

Bowrna commented on a change in pull request #20338: URL: https://github.com/apache/airflow/pull/20338#discussion_r778483376 ## File path: dev/breeze/src/airflow_breeze/cache.py ## @@ -0,0 +1,67 @@ +# Licensed to the Apache Software Foundation (ASF) under one +# or more contributor license agreements. See the NOTICE file +# distributed with this work for additional information +# regarding copyright ownership. The ASF licenses this file +# to you under the Apache License, Version 2.0 (the +# "License"); you may not use this file except in compliance +# with the License. You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, +# software distributed under the License is distributed on an +# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY +# KIND, either express or implied. See the License for the +# specific language governing permissions and limitations +# under the License. + +import sys +from pathlib import Path +from typing import Any, List, Tuple + +from airflow_breeze import global_constants +from airflow_breeze.breeze import get_airflow_sources_root +from airflow_breeze.console import console + +BUILD_CACHE_DIR = Path(get_airflow_sources_root(), '.build') + + +def read_from_cache_file(param_name: str) -> str: Review comment: Yes @potiuk, I think that would be more apt than returning an empty string.
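
The thread doesn't show exactly what potiuk proposed. Assuming the alternative is returning `None` when no value has been cached, a minimal sketch could look like this; the extra `cache_dir` parameter and the `.<param_name>` file layout are assumptions made so the example is self-contained.

```python
from pathlib import Path
from typing import Optional


def read_from_cache_file(cache_dir: Path, param_name: str) -> Optional[str]:
    """Sketch: return the cached value, or None when nothing has been cached yet."""
    # Hypothetical layout: one hidden file per parameter, e.g. <cache_dir>/.python
    cache_file = cache_dir / f".{param_name}"
    if not cache_file.exists():
        return None
    return cache_file.read_text().strip()
```

Returning `None` lets a caller tell "never cached" apart from "cached as an empty string", which an empty-string return value cannot express.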

[GitHub] [airflow] jedcunningham opened a new pull request #20661: Chart: 1.4.0 changelog

jedcunningham opened a new pull request #20661: URL: https://github.com/apache/airflow/pull/20661 Add changelog for chart version 1.4.0.

[GitHub] [airflow] kazanzhy commented on a change in pull request #20642: Added Hook for Amazon RDS

kazanzhy commented on a change in pull request #20642:

URL: https://github.com/apache/airflow/pull/20642#discussion_r778472926

##

File path: airflow/providers/amazon/aws/hooks/rds.py

##

@@ -0,0 +1,165 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+"""Interact with AWS RDS."""

+

+from typing import Any, Callable, Dict, List, Optional, Union

+

+from airflow.exceptions import AirflowException

+from airflow.providers.amazon.aws.hooks.base_aws import AwsBaseHook

+

+

+class RDSHook(AwsBaseHook):

+"""

+Interact with AWS RDS using the boto3 library.

+Client methods could be used from hook object, like: hook.create_db_instance()

+

+Additional arguments (such as ``aws_conn_id``) may be specified and

+are passed down to the underlying AwsBaseHook.

+

+All available methods are listed in documentation

+.. seealso::

+https://boto3.amazonaws.com/v1/documentation/api/latest/reference/services/rds.html

+

+.. seealso::

+:class:`~airflow.providers.amazon.aws.hooks.base_aws.AwsBaseHook`

+

+:param aws_conn_id: The Airflow connection used for AWS credentials.

+:type aws_conn_id: str

+"""

+

+def __init__(self, *args, **kwargs) -> None:

+kwargs["client_type"] = "rds"

+super().__init__(*args, **kwargs)

+

+def __getattr__(self, item: str) -> Union[Callable, AirflowException]:

+client = self.get_conn()

+if hasattr(client, item):

+self.log.info("Calling boto3 RDS client's method:", item)

+return getattr(client, item)

+else:

+raise AirflowException("Boto3 RDS client doesn't have a method:", item)

+

+

+class RDSHookAlt(AwsBaseHook):

+"""

+Interact with AWS RDS, using the boto3 library.

+

+Additional arguments (such as ``aws_conn_id``) may be specified and

+are passed down to the underlying AwsBaseHook.

+

+All unimplemented methods available from boto3 RDS client -> hook.get_conn()

+.. seealso::

+https://boto3.amazonaws.com/v1/documentation/api/latest/reference/services/rds.html

+

+.. seealso::

+:class:`~airflow.providers.amazon.aws.hooks.base_aws.AwsBaseHook`

+

+:param aws_conn_id: The Airflow connection used for AWS credentials.

+:type aws_conn_id: str

+"""

+

+def __init__(self, *args, **kwargs) -> None:

+kwargs["client_type"] = "rds"

+super().__init__(*args, **kwargs)

+

+def describe_db_snapshots(

Review comment:

Thanks a lot for clarifying how to implement this, @dstandish.

The solutions below don't work, which is expected:

```
from typing import Type
from botocore.client import BaseClient
self.client: Type[BaseClient]

import boto3
rds_client = boto3.client('rds', 'us-east-1')
self.client: type(rds_client)
```

Did you mean the solution that I started to implement?

https://github.com/apache/airflow/pull/20642/files#diff-07892cfb7f2a919afec9185e36b417d75d5322d243096667ca1cf05c506d9533
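
Separately from the typing question, the `RDSHook.__getattr__` in this hunk passes `item` to `self.log.info()` without a `%s` placeholder, so when the record is emitted the logging module reports a formatting error instead of the message, and `AirflowException("...:", item)` stores a tuple of arguments rather than a formatted message. A minimal sketch of the delegation pattern follows; `FakeRDSClient` and `DelegatingHook` are hypothetical stand-ins (no real boto3 client or `AwsBaseHook`). It raises `AttributeError`, which is what `hasattr()` and `getattr(obj, name, default)` expect; whether the hook should raise `AirflowException` instead is a design choice for the PR.

```python
import logging
from typing import Any, Dict


class FakeRDSClient:
    """Hypothetical stand-in for a boto3 RDS client."""

    def describe_db_instances(self, **kwargs: Any) -> Dict[str, Any]:
        return {"DBInstances": []}


class DelegatingHook:
    """Hypothetical hook that forwards unknown attributes to its client."""

    def __init__(self, client: Any) -> None:
        self._client = client
        self.log = logging.getLogger(__name__)

    def get_conn(self) -> Any:
        return self._client

    def __getattr__(self, item: str) -> Any:
        # Only reached when normal attribute lookup fails. Refusing private names
        # avoids infinite recursion if `_client` itself is missing (e.g. mid-unpickling).
        if item.startswith("_"):
            raise AttributeError(item)
        client = self.get_conn()
        if hasattr(client, item):
            # %s placeholder: logging formats the message lazily with `item`.
            self.log.info("Calling boto3 RDS client's method: %s", item)
            return getattr(client, item)
        # AttributeError keeps hasattr()/getattr(default) working on the hook.
        raise AttributeError(f"Boto3 RDS client doesn't have a method: {item}")
```

The return annotation is `Any` here because a raised exception is never returned, so `Union[Callable, AirflowException]` in the hunk describes a value the method cannot produce.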

[GitHub] [airflow] kazanzhy removed a comment on pull request #20642: Added Hook for Amazon RDS

kazanzhy removed a comment on pull request #20642:

URL: https://github.com/apache/airflow/pull/20642#issuecomment-1005276831

Thanks a lot for clarifying how to implement this, @dstandish.

The solutions below don't work, which is expected:

```
from typing import Type
from botocore.client import BaseClient
self.client: Type[BaseClient]

import boto3
rds_client = boto3.client('rds', 'us-east-1')
self.client: type(rds_client)
```

Did you mean the solution that I started to implement?

[GitHub] [airflow] kazanzhy commented on pull request #20642: Added Hook for Amazon RDS

kazanzhy commented on pull request #20642:

URL: https://github.com/apache/airflow/pull/20642#issuecomment-1005276831

Thanks a lot for clarifying how to implement this, @dstandish.

The solutions below don't work, which is expected:

```
from typing import Type
from botocore.client import BaseClient
self.client: Type[BaseClient]

import boto3
rds_client = boto3.client('rds', 'us-east-1')
self.client: type(rds_client)
```

Did you mean the solution that I started to implement?
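
One common way to get static typing for a boto3 client, which may or may not be what @dstandish meant: boto3 builds client classes at runtime, so `BaseClient` has no RDS methods for a type checker to see, `Type[BaseClient]` describes the class object rather than an instance, and `type(rds_client)` is a runtime call that a checker can't use as an annotation. The third-party `mypy-boto3-rds` stub package provides an `RDSClient` type; the sketch imports it only under `TYPE_CHECKING`, so it runs without the stubs installed. The import path and `RdsHookSketch` are assumptions, not part of the PR.

```python
from typing import TYPE_CHECKING, Any

if TYPE_CHECKING:
    # Assumed import path from the third-party `mypy-boto3-rds` stub package;
    # only the type checker evaluates this, so it is not needed at runtime.
    from mypy_boto3_rds import RDSClient


class RdsHookSketch:
    """Hypothetical hook with an instance-typed RDS client attribute."""

    def __init__(self, client: Any) -> None:
        # Annotate with the client *instance* type (as a string, resolved only by
        # the checker), not Type[BaseClient], which describes the class object.
        self.client: "RDSClient" = client

    def describe_db_snapshots(self, **kwargs: Any) -> Any:
        # With the stubs installed, a checker can validate the method name and kwargs.
        return self.client.describe_db_snapshots(**kwargs)
```

At runtime the hook accepts any object with the right methods, so it can be exercised with a fake client in tests while the checker still sees the full RDS client surface.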

[GitHub] [airflow] ferruzzi closed pull request #20369: Standardize AWS Batch naming

ferruzzi closed pull request #20369: URL: https://github.com/apache/airflow/pull/20369

[GitHub] [airflow] ferruzzi commented on pull request #20374: Standardize AWS Redshift naming

ferruzzi commented on pull request #20374: URL: https://github.com/apache/airflow/pull/20374#issuecomment-1005268141 This one is throwing the Protocol inheritance error that I mentioned in the other PR: https://github.com/apache/airflow/runs/4708697673?check_suite_focus=true#step:8:8489

[GitHub] [airflow] ferruzzi edited a comment on pull request #20374: Standardize AWS Redshift naming

ferruzzi edited a comment on pull request #20374: URL: https://github.com/apache/airflow/pull/20374#issuecomment-1005258276 rebased and I think I covered https://github.com/apache/airflow/pull/20374#discussion_r771918489

[GitHub] [airflow] ferruzzi commented on pull request #20374: Standardize AWS Redshift naming

ferruzzi commented on pull request #20374: URL: https://github.com/apache/airflow/pull/20374#issuecomment-1005258276 rebased and I think I covered https://github.com/apache/airflow/pull/20374#discussion_r771535786

[GitHub] [airflow] ferruzzi commented on a change in pull request #20360: Standardize DynamoDB naming

ferruzzi commented on a change in pull request #20360:

URL: https://github.com/apache/airflow/pull/20360#discussion_r778457959

##

File path: airflow/providers/amazon/aws/hooks/dynamodb.py

##

@@ -18,13 +18,14 @@
"""This module contains the AWS DynamoDB hook"""
+import warnings
from typing import Iterable, List, Optional
from airflow.exceptions import AirflowException
from airflow.providers.amazon.aws.hooks.base_aws import AwsBaseHook
-class AwsDynamoDBHook(AwsBaseHook):
+class DynamoDBHook(AwsBaseHook):

Review comment:

I'm sorry, I don't follow. What needs to be done there?
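The hunk adds `import warnings` alongside the rename from `AwsDynamoDBHook` to `DynamoDBHook`, which suggests (an assumption; the hunk does not show it) that the old name is kept as a deprecated alias. A minimal sketch of that pattern, using a hypothetical `AwsBaseHookStub` in place of the real `AwsBaseHook`:

```python
import warnings
from typing import Any


class AwsBaseHookStub:
    """Hypothetical stand-in for AwsBaseHook; it just stores its arguments."""

    def __init__(self, *args: Any, **kwargs: Any) -> None:
        self.args = args
        self.kwargs = kwargs


class DynamoDBHook(AwsBaseHookStub):
    """New, standardized name."""


class AwsDynamoDBHook(DynamoDBHook):
    """Old name, kept only so that existing imports keep working."""

    def __init__(self, *args: Any, **kwargs: Any) -> None:
        # stacklevel=2 points the warning at the caller that instantiated the old name.
        warnings.warn(
            "This class is deprecated. Please use `DynamoDBHook`.",
            DeprecationWarning,
            stacklevel=2,
        )
        super().__init__(*args, **kwargs)
```

Subclassing the new class keeps `isinstance(hook, DynamoDBHook)` true for code that still uses the old name.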

[GitHub] [airflow] williamfmr commented on issue #20320: Airflow API throwing 500 when user does not have last name

williamfmr commented on issue #20320: URL: https://github.com/apache/airflow/issues/20320#issuecomment-1005250016 @potiuk, unfortunately my employer does not allow for contributing code to external projects. I have removed myself from this ticket. Very sorry :(

[GitHub] [airflow] yehoshuadimarsky opened a new issue #20660: Exception unfairly raised in SQL Operator when using Application Default Credentials with Workload Identity for Cloud SQL Auth proxy

yehoshuadimarsky opened a new issue #20660:

URL: https://github.com/apache/airflow/issues/20660

### Apache Airflow Provider(s)

google

### Versions of Apache Airflow Providers

```
apache-airflow-providers-amazon==2.3.0
apache-airflow-providers-celery==2.1.0
apache-airflow-providers-cncf-kubernetes==2.0.0
apache-airflow-providers-docker==2.2.0
apache-airflow-providers-elasticsearch==2.0.3
apache-airflow-providers-ftp==2.0.1
apache-airflow-providers-google==6.0.0
apache-airflow-providers-grpc==2.0.1
apache-airflow-providers-hashicorp==2.1.1
apache-airflow-providers-http==2.0.1
apache-airflow-providers-imap==2.0.1
apache-airflow-providers-microsoft-azure==3.2.0
apache-airflow-providers-mysql==2.1.1
apache-airflow-providers-odbc==2.0.1
apache-airflow-providers-postgres==2.3.0
apache-airflow-providers-redis==2.0.1
apache-airflow-providers-sendgrid==2.0.1
apache-airflow-providers-sftp==2.1.1
apache-airflow-providers-slack==4.1.0
apache-airflow-providers-sqlite==2.0.1
apache-airflow-providers-ssh==2.2.0
```

### Apache Airflow version

2.2.1

### Operating System

Debian Buster

### Deployment

Official Apache Airflow Helm Chart

### Deployment details

Running the official Airflow Helm chart on GKE. I have Workload Identity set up and working, and have linked the Google and Kubernetes service accounts.

Created an Airflow connection for GCP ADC, per the [instructions](https://airflow.apache.org/docs/apache-airflow-providers-google/6.2.0/connections/gcp.html#note-on-application-default-credentials):

```bash
airflow connections add \
    --conn-type google_cloud_platform \
    'gcp-airflow-svc-acct-dev'
```

The SQL connection - note, no password!

```bash
# --conn-login is the GSA linked to the KSA via WI
airflow connections add \
    --conn-type gcpcloudsql \
    --conn-host "$SQL_INSTANCE_PUBLIC_IP" \
    --conn-login {GSA_NAME}@{PROJECT_ID}.iam \
    --conn-extra '{"instance": "INSTANCE_NAME", "location": "us-east1",
        "database_type": "postgres", "project_id": "PROJECT_ID", "use_proxy": true,
        "sql_proxy_use_tcp": true}' \
    'gcp-sql-ods-dev'
```
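Because `--conn-extra` must be valid JSON (double quotes, lowercase `true`), one way to avoid hand-quoting mistakes is to generate the argument with the Python standard library. A minimal sketch, reusing the placeholder values from the command above:

```python
import json
import shlex

extra = {
    "instance": "INSTANCE_NAME",
    "location": "us-east1",
    "database_type": "postgres",
    "project_id": "PROJECT_ID",
    "use_proxy": True,          # serialized as JSON true
    "sql_proxy_use_tcp": True,  # serialized as JSON true
}

# json.dumps turns Python True into JSON true; shlex.quote makes the
# result safe to paste into a POSIX shell as a single argument.
conn_extra_arg = shlex.quote(json.dumps(extra))
print(f"--conn-extra {conn_extra_arg}")
```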

### What happened

Getting this error when trying to run a [CloudSQLExecuteQueryOperator](https://airflow.apache.org/docs/apache-airflow-providers-google/6.0.0/_api/airflow/providers/google/cloud/operators/cloud_sql/index.html#airflow.providers.google.cloud.operators.cloud_sql.CloudSQLExecuteQueryOperator):

```
[2022-01-04, 18:03:49 EST] {taskinstance.py:1703} ERROR - Task failed with exception
Traceback (most recent call last):
  File "/home/airflow/.local/lib/python3.9/site-packages/airflow/models/taskinstance.py", line 1332, in _run_raw_task
    self._execute_task_with_callbacks(context)
  File "/home/airflow/.local/lib/python3.9/site-packages/airflow/models/taskinstance.py", line 1458, in _execute_task_with_callbacks
    result = self._execute_task(context, self.task)
  File "/home/airflow/.local/lib/python3.9/site-packages/airflow/models/taskinstance.py", line 1514, in _execute_task
    result = execute_callable(context=context)
  File "/home/airflow/.local/lib/python3.9/site-packages/airflow/providers/google/cloud/operators/cloud_sql.py", line 1076, in execute
    connection = hook.create_connection()
  File "/home/airflow/.local/lib/python3.9/site-packages/airflow/providers/google/cloud/hooks/cloud_sql.py", line 932, in create_connection
    uri = self._generate_connection_uri()
  File "/home/airflow/.local/lib/python3.9/site-packages/airflow/providers/google/cloud/hooks/cloud_sql.py", line 893, in _generate_connection_uri
    raise AirflowException("The password parameter needs to be set in connection")
airflow.exceptions.AirflowException: The password parameter needs to be set in connection
```

### What you expected to happen

I expect to be able to use the Cloud SQL Auth proxy from GKE with IAM database authentication for the Workload Identity-provided GSA, without needing any passwords at all. However, the Airflow hook is hardcoded to raise an error if the SQL connection has no password. Why? Outside of Airflow this works without a password via the Cloud SQL Auth proxy, WI, etc. Can we allow that in Airflow too?
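The traceback ends in `_generate_connection_uri`, which refuses to build a URI without a password. Below is a minimal, hypothetical sketch of the kind of check being requested, where a password is required only when IAM database authentication is not in use. The function name, the `use_iam_auth` flag, and the URI layout are illustrative assumptions, not the provider's actual code:

```python
from typing import Optional
from urllib.parse import quote_plus


class AirflowException(Exception):
    """Stand-in for airflow.exceptions.AirflowException."""


def generate_connection_uri(
    host: str,
    port: int,
    login: str,
    password: Optional[str],
    database: str,
    use_iam_auth: bool = False,  # hypothetical flag for this sketch
) -> str:
    # Only insist on a password when IAM database authentication is not used.
    if not password and not use_iam_auth:
        raise AirflowException("The password parameter needs to be set in connection")
    # Percent-encode credentials: IAM logins contain '@', which would otherwise
    # be confused with the user/host separator in the URI.
    credentials = quote_plus(login)
    if password:
        credentials += ":" + quote_plus(password)
    return f"postgresql://{credentials}@{host}:{port}/{database}"
```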

### How to reproduce

_No response_

### Anything else

_No response_

### Are you willing to submit PR?

- [X] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://github.com/apache/airflow/blob/main/CODE_OF_CONDUCT.md)


[GitHub] [airflow] dimon222 commented on issue #20615: Status of testing Providers that were prepared on December 31, 2021

dimon222 commented on issue #20615: URL: https://github.com/apache/airflow/issues/20615#issuecomment-1005238859 #19726 briefly tested, seems to work as intended and not raising any problems.

[GitHub] [airflow] tikikun commented on issue #15635: ARM64 support in docker images

tikikun commented on issue #15635: URL: https://github.com/apache/airflow/issues/15635#issuecomment-1005236541 Has anyone done this?

[GitHub] [airflow] subkanthi opened a new pull request #20659: Disabled edit button in task instances list view page