[GitHub] [airflow] potiuk opened a new pull request, #23612: Make Breeze help generation indepdent from having breeze installed

potiuk opened a new pull request, #23612:

URL: https://github.com/apache/airflow/pull/23612

Generating the Breeze help requires Breeze to be installed. However, if you have installed Breeze locally with different dependencies and did not run self-upgrade, the generated images might differ (for example, when a different rich version is used). This change works as follows:

* you do not have to have breeze installed at all to make it work

* it always upgrades to latest breeze when it is not installed

* but this only happens when you actually modified some breeze code
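
The "only when you actually modified some breeze code" condition can be sketched as a digest check over the source files. This is a minimal illustration, not the code from the pull request: the function names, the `*.py` pattern, and the stamp file are all assumptions made for the example.

```python
import hashlib
from pathlib import Path


def sources_digest(root: Path, pattern: str = "*.py") -> str:
    """Return one SHA-256 digest covering every matching file under root."""
    digest = hashlib.sha256()
    for path in sorted(root.rglob(pattern)):
        # Include the relative path so that renames also change the digest.
        digest.update(path.relative_to(root).as_posix().encode())
        digest.update(path.read_bytes())
    return digest.hexdigest()


def needs_regeneration(root: Path, stamp_file: Path) -> bool:
    """True when the sources differ from the digest stored in stamp_file."""
    current = sources_digest(root)
    previous = stamp_file.read_text().strip() if stamp_file.exists() else ""
    return current != previous


def record_generation(root: Path, stamp_file: Path) -> None:
    """Store the current digest after the help output was regenerated."""
    stamp_file.write_text(sources_digest(root))
```

A caller would regenerate the help output only when `needs_regeneration` returns `True`, and then call `record_generation` (both hypothetical helpers).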

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] YoavGaudin commented on issue #23610: AttributeError: 'CeleryKubernetesExecutor' object has no attribute 'send_callback'

YoavGaudin commented on issue #23610:

URL: https://github.com/apache/airflow/issues/23610#issuecomment-1122002123

We are experiencing this as well, and it doesn't seem like a complicated fix. I hope it will be fixed promptly so we can use the awesome new features of 2.3.

[GitHub] [airflow] hsrocks commented on a diff in pull request #21863: Add Quicksight create ingestion Hook and Operator

hsrocks commented on code in PR #21863:

URL: https://github.com/apache/airflow/pull/21863#discussion_r868859964

##
airflow/providers/amazon/aws/sensors/quicksight.py:
##
@@ -0,0 +1,86 @@
+#
+# Licensed to the Apache Software Foundation (ASF) under one
+# or more contributor license agreements. See the NOTICE file
+# distributed with this work for additional information
+# regarding copyright ownership. The ASF licenses this file
+# to you under the Apache License, Version 2.0 (the
+# "License"); you may not use this file except in compliance
+# with the License. You may obtain a copy of the License at
+#
+# http://www.apache.org/licenses/LICENSE-2.0
+#
+# Unless required by applicable law or agreed to in writing,
+# software distributed under the License is distributed on an
+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
+# KIND, either express or implied. See the License for the
+# specific language governing permissions and limitations
+# under the License.
+
+from typing import TYPE_CHECKING, Optional
+
+from airflow.exceptions import AirflowException
+from airflow.providers.amazon.aws.hooks.quicksight import QuickSightHook
+from airflow.providers.amazon.aws.hooks.sts import StsHook
+from airflow.sensors.base import BaseSensorOperator
+
+if TYPE_CHECKING:
+    from airflow.utils.context import Context
+
+
+class QuickSightSensor(BaseSensorOperator):
+    """
+    Watches for the status of an Amazon QuickSight Ingestion.
+

Review Comment:

Done

[airflow] branch main updated: Update min requirements for rich to 12.4.1 (#23604)

This is an automated email from the ASF dual-hosted git repository.

potiuk pushed a commit to branch main in repository https://gitbox.apache.org/repos/asf/airflow.git

The following commit(s) were added to refs/heads/main by this push:
     new 1bd75ddbe3 Update min requirements for rich to 12.4.1 (#23604)
1bd75ddbe3 is described below

commit 1bd75ddbe3b1e590e38d735757d99b43db1725d6
Author: Jarek Potiuk
AuthorDate: Tue May 10 08:36:28 2022 +0200

    Update min requirements for rich to 12.4.1 (#23604)
---
 .pre-commit-config.yaml | 40
 dev/breeze/README.md    | 2 +-
 dev/breeze/setup.cfg    | 2 +-
 setup.cfg               | 2 +-
 4 files changed, 23 insertions(+), 23 deletions(-)

diff --git a/.pre-commit-config.yaml b/.pre-commit-config.yaml
index 70c0c80d6e..f1f88129c4 100644
--- a/.pre-commit-config.yaml
+++ b/.pre-commit-config.yaml
@@ -324,7 +324,7 @@ repos:
         files: ^setup\.cfg$|^setup\.py$
         pass_filenames: false
         entry: ./scripts/ci/pre_commit/pre_commit_check_order_setup.py
-        additional_dependencies: ['rich']
+        additional_dependencies: ['rich>=12.4.1']
       - id: check-extra-packages-references
         name: Checks setup extra packages
         description: Checks if all the libraries in setup.py are listed in extra-packages-ref.rst file
@@ -332,7 +332,7 @@ repos:
         files: ^setup\.py$|^docs/apache-airflow/extra-packages-ref\.rst$
         pass_filenames: false
         entry: ./scripts/ci/pre_commit/pre_commit_check_setup_extra_packages_ref.py
-        additional_dependencies: ['rich']
+        additional_dependencies: ['rich>=12.4.1']
       # This check might be removed when min-airflow-version in providers is 2.2
       - id: check-airflow-2-1-compatibility
         name: Check that providers are 2.1 compatible.
@@ -340,14 +340,14 @@ repos:
         language: python
         pass_filenames: true
         files: ^airflow/providers/.*\.py$
-        additional_dependencies: ['rich']
+        additional_dependencies: ['rich>=12.4.1']
       - id: update-breeze-file
         name: Update output of breeze commands in BREEZE.rst
         entry: ./scripts/ci/pre_commit/pre_commit_breeze_cmd_line.py
         language: python
         files: ^BREEZE\.rst$|^dev/breeze/.*$
         pass_filenames: false
-        additional_dependencies: ['rich', 'rich-click']
+        additional_dependencies: ['rich>=12.4.1', 'rich-click']
       - id: update-local-yml-file
         name: Update mounts in the local yml file
         entry: ./scripts/ci/pre_commit/pre_commit_local_yml_mounts.sh
@@ -379,7 +379,7 @@ repos:
         language: python
         files: ^Dockerfile$
         pass_filenames: false
-        additional_dependencies: ['rich']
+        additional_dependencies: ['rich>=12.4.1']
       - id: update-supported-versions
         name: Updates supported versions in documentation
         entry: ./scripts/ci/pre_commit/pre_commit_supported_versions.py
@@ -601,7 +601,7 @@ repos:
           - 'jsonschema>=3.2.0,<5.0.0'
           - 'tabulate==0.8.8'
           - 'jsonpath-ng==1.5.3'
-          - 'rich'
+          - 'rich>=12.4.1'
       - id: check-pre-commit-information-consistent
         name: Update information about pre-commit hooks and verify ids and names
         entry: ./scripts/ci/pre_commit/pre_commit_check_pre_commit_hooks.py
@@ -609,7 +609,7 @@ repos:
           - --max-length=70
         language: python
         files: ^\.pre-commit-config\.yaml$|^scripts/ci/pre_commit/pre_commit_check_pre_commit_hook_names\.py$
-        additional_dependencies: ['pyyaml', 'jinja2', 'black==22.3.0', 'tabulate', 'rich']
+        additional_dependencies: ['pyyaml', 'jinja2', 'black==22.3.0', 'tabulate', 'rich>=12.4.1']
         require_serial: true
         pass_filenames: false
       - id: check-airflow-providers-have-extras
@@ -619,7 +619,7 @@ repos:
         files: ^setup\.py$|^airflow/providers/.*\.py$
         pass_filenames: false
         require_serial: true
-        additional_dependencies: ['rich']
+        additional_dependencies: ['rich>=12.4.1']
       - id: update-breeze-readme-config-hash
         name: Update Breeze README.md with config files hash
         language: python
@@ -634,7 +634,7 @@ repos:
         files: ^dev/breeze/.*$
         pass_filenames: false
         require_serial: true
-        additional_dependencies: ['click', 'rich']
+        additional_dependencies: ['click', 'rich>=12.4.1']
       - id: check-system-tests-present
         name: Check if system tests have required segments of code
         entry: ./scripts/ci/pre_commit/pre_commit_check_system_tests.py
@@ -642,7 +642,7 @@ repos:
         files: ^tests/system/.*/example_[^/]*.py$
         exclude: ^tests/system/providers/google/bigquery/example_bigquery_queries\.py$
         pass_filenames: true
-        additional_dependencies: ['rich']
+        additional_dependencies: ['rich>=12.4.1']
       - id: lint-markdown

[GitHub] [airflow] potiuk merged pull request #23604: Update min requirements for rich to 12.4.1

potiuk merged PR #23604:

URL: https://github.com/apache/airflow/pull/23604

[GitHub] [airflow] potiuk commented on pull request #23604: Update min requirements for rich to 12.4.1

potiuk commented on PR #23604:

URL: https://github.com/apache/airflow/pull/23604#issuecomment-1121986877

Random failures. Merging..

[GitHub] [airflow] hsrocks commented on a diff in pull request #21863: Add Quicksight create ingestion Hook and Operator

hsrocks commented on code in PR #21863:

URL: https://github.com/apache/airflow/pull/21863#discussion_r868861090

##
airflow/providers/amazon/aws/sensors/quicksight.py:
##
@@ -0,0 +1,86 @@
+#
+# Licensed to the Apache Software Foundation (ASF) under one
+# or more contributor license agreements. See the NOTICE file
+# distributed with this work for additional information
+# regarding copyright ownership. The ASF licenses this file
+# to you under the Apache License, Version 2.0 (the
+# "License"); you may not use this file except in compliance
+# with the License. You may obtain a copy of the License at
+#
+# http://www.apache.org/licenses/LICENSE-2.0
+#
+# Unless required by applicable law or agreed to in writing,
+# software distributed under the License is distributed on an
+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
+# KIND, either express or implied. See the License for the
+# specific language governing permissions and limitations
+# under the License.
+
+from typing import TYPE_CHECKING, Optional
+
+from airflow.exceptions import AirflowException
+from airflow.providers.amazon.aws.hooks.quicksight import QuickSightHook
+from airflow.providers.amazon.aws.hooks.sts import StsHook
+from airflow.sensors.base import BaseSensorOperator
+
+if TYPE_CHECKING:
+    from airflow.utils.context import Context
+
+
+class QuickSightSensor(BaseSensorOperator):
+    """
+    Watches for the status of an Amazon QuickSight Ingestion.
+

Review Comment:

@vincbeck I appreciate the feedback and details. I have implemented the requested changes and suggestions. Kindly review them. Thanks :)

[GitHub] [airflow] hsrocks commented on pull request #21863: Add Quicksight create ingestion Hook and Operator

hsrocks commented on PR #21863:

URL: https://github.com/apache/airflow/pull/21863#issuecomment-1121981040

> I am preparing new providers release. Last chance to get that in :)

Thanks @potiuk, I have implemented the changes and will wait for review comments from the team.

[GitHub] [airflow] hsrocks commented on a diff in pull request #21863: Add Quicksight create ingestion Hook and Operator

hsrocks commented on code in PR #21863:

URL: https://github.com/apache/airflow/pull/21863#discussion_r868859353

##
airflow/providers/amazon/aws/operators/quicksight.py:
##
@@ -0,0 +1,94 @@
+# Licensed to the Apache Software Foundation (ASF) under one
+# or more contributor license agreements. See the NOTICE file
+# distributed with this work for additional information
+# regarding copyright ownership. The ASF licenses this file
+# to you under the Apache License, Version 2.0 (the
+# "License"); you may not use this file except in compliance
+# with the License. You may obtain a copy of the License at
+#
+# http://www.apache.org/licenses/LICENSE-2.0
+#
+# Unless required by applicable law or agreed to in writing,
+# software distributed under the License is distributed on an
+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
+# KIND, either express or implied. See the License for the
+# specific language governing permissions and limitations
+# under the License.
+
+from typing import TYPE_CHECKING, Optional, Sequence
+
+from airflow.models import BaseOperator
+from airflow.providers.amazon.aws.hooks.quicksight import QuickSightHook
+
+if TYPE_CHECKING:
+    from airflow.utils.context import Context
+
+DEFAULT_CONN_ID = "aws_default"
+
+
+class QuickSightCreateIngestionOperator(BaseOperator):
+    """
+    Creates and starts a new SPICE ingestion for a dataset.
+    Also, helps to Refresh existing SPICE datasets
+

Review Comment:

Done

##
airflow/providers/amazon/aws/sensors/quicksight.py:
##
@@ -0,0 +1,86 @@
+#
+# Licensed to the Apache Software Foundation (ASF) under one
+# or more contributor license agreements. See the NOTICE file
+# distributed with this work for additional information
+# regarding copyright ownership. The ASF licenses this file
+# to you under the Apache License, Version 2.0 (the
+# "License"); you may not use this file except in compliance
+# with the License. You may obtain a copy of the License at
+#
+# http://www.apache.org/licenses/LICENSE-2.0
+#
+# Unless required by applicable law or agreed to in writing,
+# software distributed under the License is distributed on an
+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
+# KIND, either express or implied. See the License for the
+# specific language governing permissions and limitations
+# under the License.
+
+from typing import TYPE_CHECKING, Optional
+
+from airflow.exceptions import AirflowException
+from airflow.providers.amazon.aws.hooks.quicksight import QuickSightHook
+from airflow.providers.amazon.aws.hooks.sts import StsHook
+from airflow.sensors.base import BaseSensorOperator
+
+if TYPE_CHECKING:
+    from airflow.utils.context import Context
+
+
+class QuickSightSensor(BaseSensorOperator):
+    """
+    Watches for the status of an Amazon QuickSight Ingestion.
+

Review Comment:

Done

[GitHub] [airflow] hsrocks commented on a diff in pull request #21863: Add Quicksight create ingestion Hook and Operator

hsrocks commented on code in PR #21863:

URL: https://github.com/apache/airflow/pull/21863#discussion_r868859190

##

airflow/providers/amazon/aws/example_dags/example_quicksight.py:

##

@@ -0,0 +1,65 @@

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+

+import os

+from datetime import datetime

+

+from airflow import DAG

+from airflow.providers.amazon.aws.operators.quicksight import QuickSightCreateIngestionOperator

+from airflow.providers.amazon.aws.sensors.quicksight import QuickSightSensor

+

+DATA_SET_ID = os.getenv("DATA_SET_ID", "DemoDataSet_Test")

+INGESTION_WAITING_ID = os.getenv("INGESTION_WAITING_ID", "DemoDataSet_Ingestion_Waiting_Test")

+INGESTION_NO_WAITING_ID = os.getenv("INGESTION_NO_WAITING_ID", "DemoDataSet_Ingestion_No_Waiting_Test")

+

+with DAG(

+dag_id="example_quicksight",

+schedule_interval=None,

+start_date=datetime(2021, 1, 1),

+tags=["example"],

+catchup=False,

+) as dag:

+# Create and Start the QuickSight SPICE data ingestion

+# and waits for its completion.

+# [START howto_operator_quicksight]

+quicksight_create_ingestion = QuickSightCreateIngestionOperator(

+data_set_id=DATA_SET_ID,

+ingestion_id=INGESTION_WAITING_ID,

+task_id="sample_quicksight_dag",

+)

+quicksight_create_ingestion

+# [END howto_operator_quicksight]

+

+# Create and Start the QuickSight SPICE data ingestion

+# and does not wait for its completion

+# [START howto_operator_quicksight_non_waiting]

+quicksight_create_ingestion_no_waiting = QuickSightCreateIngestionOperator(

+data_set_id=DATA_SET_ID,

+ingestion_id=INGESTION_NO_WAITING_ID,

+wait_for_completion=False,

+task_id="sample_quicksight_no_waiting_dag",

+)

+

+# The following task checks the status of the QuickSight SPICE ingestion

+# job until it succeeds.

+quicksight_job_status = QuickSightSensor(

+data_set_id=DATA_SET_ID,

+ingestion_id=INGESTION_NO_WAITING_ID,

+task_id="check_quicksight_job_status",

+)

Review Comment:

Done

[GitHub] [airflow] humit0 commented on a diff in pull request #23468: Rename cluster_policy to task_policy

humit0 commented on code in PR #23468:

URL: https://github.com/apache/airflow/pull/23468#discussion_r868833606

##
docs/apache-airflow/concepts/cluster-policies.rst:
##
@@ -57,12 +57,17 @@ This policy checks if each DAG has at least one tag defined:
 Task policies
 -

-Here's an example of enforcing a maximum timeout policy on every task:
+Here's an example of enforcing a maximum timeout policy on every task::

-.. literalinclude:: /../../tests/cluster_policies/__init__.py

Review Comment:

@potiuk When `literalinclude` is used, it raises a `redefinition` error.

https://user-images.githubusercontent.com/8676247/167551873-1c26791f-3246-4a84-b6bb-8c1d78124ffd.png

Can I create a new file, `tests/cluster_policies/example_task_policy.py`, to hold that code?

[GitHub] [airflow] tirkarthi commented on issue #23609: "RuntimeError: dictionary changed size during iteration" happens in scheduler after running for a few hours

tirkarthi commented on issue #23609:

URL: https://github.com/apache/airflow/issues/23609#issuecomment-1121938990

This seems to be a problem with Python itself. It seems to have been fixed in https://github.com/python/cpython/pull/24868

Python 3.9 backport: https://github.com/python/cpython/commit/3b9d886567c4fc6279c2198b6711f0590dbf3336
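
For readers unfamiliar with the error message in the issue title, here is a small sketch of the general condition that produces it. It only shows the generic cause (mutating a dict while iterating over it) and a common defensive pattern. It does not reproduce the scheduler problem or the CPython change linked above, and the function names are made up for the example.

```python
def drop_finished(tasks: dict) -> None:
    """Remove finished entries while iterating over a snapshot of the keys."""
    for key in list(tasks):  # list() copies the keys before the dict is mutated
        if tasks[key] == "done":
            del tasks[key]


def drop_finished_unsafe(tasks: dict) -> None:
    """Same logic without the snapshot; deleting mid-iteration raises RuntimeError."""
    for key in tasks:
        if tasks[key] == "done":
            del tasks[key]
```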

[GitHub] [airflow] Anurag-Shetty commented on issue #23520: Duplicate task instances starts if scheduler restarts in middle of execution of task

Anurag-Shetty commented on issue #23520:

URL: https://github.com/apache/airflow/issues/23520#issuecomment-1121937825

I have a standalone deployment with a Postgres database as the backend. It's not a Celery or Kubernetes deployment. I can see it happening quite regularly.

[GitHub] [airflow] tirkarthi commented on issue #23610: AttributeError: 'CeleryKubernetesExecutor' object has no attribute 'send_callback'

tirkarthi commented on issue #23610:

URL: https://github.com/apache/airflow/issues/23610#issuecomment-1121936423

The changes were added in #21301. `CeleryKubernetesExecutor` inherits from `LoggingMixin` instead of `BaseExecutor` and might be missing this method.

cc: @mhenc
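
The failure mode described above can be illustrated with a toy example: a wrapper class that does not inherit from the base class never receives a method added to that base. The classes below are hypothetical stand-ins, not Airflow code, and the delegation shown is only one possible remedy, not necessarily the fix that was adopted.

```python
class BaseExecutorSketch:
    """Hypothetical stand-in for a base executor that defines send_callback."""

    def __init__(self):
        self.sent = []

    def send_callback(self, request):
        self.sent.append(request)


class WrapperWithoutBase:
    """Wraps two executors but does not inherit from the base class."""

    def __init__(self, celery, kubernetes):
        self.celery_executor = celery
        self.kubernetes_executor = kubernetes


class WrapperWithDelegation(WrapperWithoutBase):
    """Same wrapper, with the missing method forwarded explicitly."""

    def send_callback(self, request):
        # Assumption for illustration: forward to the first wrapped executor.
        self.celery_executor.send_callback(request)
```

Calling `send_callback` on `WrapperWithoutBase` raises `AttributeError`, which matches the shape of the error in the issue title.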

[GitHub] [airflow] Greetlist commented on issue #20308: Multi Schedulers don't synchronize task instance state when using Triggerer

Greetlist commented on issue #20308:

URL: https://github.com/apache/airflow/issues/20308#issuecomment-1121933204

@jskalasariya If you also use deferrable tasks, you can temporarily fix this via https://github.com/apache/airflow/issues/20308#issuecomment-1005435631. You can try changing that code and running some tests to check whether the problem disappears.

At the moment, my production environment:

**airflow version**:
> 2.2.0

**airflow components**:
> 1. four schedulers
> 2. three triggerers
> 3. two web servers

**operating system**:
> Ubuntu16.04

**machine resource**:
> 64G memory
> 6Core(2slots * 3Core) NUMA Disabled

**database**:
> percona-server: 8.0.17 in k8s cluster as Deployment

works as expected.

By the way, if you want to use the deferrable operator's defer_timeout parameter, you may run into **dead-lock** problems: ```models/trigger.py[func submit_* clean_unused]``` will hold a row lock **AND** ```jobs/scheduler_job.py[func check_trigger_timeouts]``` will hold another row lock.

I fixed this dead-lock by adding ```with_row_locks``` and ```order by```, and by splitting the query and the update into two steps:
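
One reading of the fix above is that ordering the locked rows makes every transaction take its locks in the same sequence. The sketch below shows that idea with in-process `threading` locks as an analogy only. It is not the database code from the comment, and all names in it are hypothetical.

```python
import threading


def update_rows(locks, row_ids, log, name):
    """Lock the requested rows in sorted order, then record the update."""
    ordered = sorted(row_ids)  # every worker uses the same acquisition order
    for row_id in ordered:
        locks[row_id].acquire()
    try:
        log.append((name, tuple(ordered)))
    finally:
        for row_id in reversed(ordered):
            locks[row_id].release()


def run_two_workers():
    """Start two workers that request the same rows in opposite orders."""
    locks = {1: threading.Lock(), 2: threading.Lock()}
    log = []
    a = threading.Thread(target=update_rows, args=(locks, [1, 2], log, "a"))
    b = threading.Thread(target=update_rows, args=(locks, [2, 1], log, "b"))
    a.start()
    b.start()
    a.join(timeout=5)
    b.join(timeout=5)
    # The second value is True if either worker is still blocked.
    return log, a.is_alive() or b.is_alive()
```

Without the `sorted` call, the two workers could each hold one lock while waiting for the other, which is the pattern the comment describes for the two row locks.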

[GitHub] [airflow] Bowrna commented on issue #23227: Ability to clear a specific DAG Run's task instances via REST APIs

Bowrna commented on issue #23227:

URL: https://github.com/apache/airflow/issues/23227#issuecomment-1121904927

@ephraimbuddy @bbovenzi How should `map_index` be passed when using the `/api/v1/dags/{dag_id}/clearTaskInstances` endpoint?

In `/api/v1/dags/{dag_id}/clearTaskInstances` we can pass an array of task_id values. If we pass an array of task_id together with map_index, how do we determine which task_id each map_index belongs to?

[GitHub] [airflow] jskalasariya commented on issue #20308: Multi Schedulers don't synchronize task instance state when using Triggerer

jskalasariya commented on issue #20308:

URL: https://github.com/apache/airflow/issues/20308#issuecomment-1121897356

Is there any workaround other than the one suggested by @Greetlist? I am also running into the same problem when I run two schedulers.

[GitHub] [airflow] jskalasariya commented on issue #20460: TimeDeltaSensorAsync task is failing occasionally

jskalasariya commented on issue #20460:

URL: https://github.com/apache/airflow/issues/20460#issuecomment-1121895300

Is there any workaround other than the one suggested by @Greetlist? I am also running into the same problem when I run two schedulers.

[GitHub] [airflow] github-actions[bot] commented on pull request #23604: Update min requirements for rich to 12.4.1

github-actions[bot] commented on PR #23604:

URL: https://github.com/apache/airflow/pull/23604#issuecomment-1121894245

The PR most likely needs to run full matrix of tests because it modifies parts of the core of Airflow. However, committers might decide to merge it quickly and take the risk. If they don't merge it quickly - please rebase it to the latest main at your convenience, or amend the last commit of the PR, and push it with --force-with-lease.

[GitHub] [airflow] rahulgoyal2987 commented on a diff in pull request #23490: Fixed Kubernetes Operator large xcom content Defect

rahulgoyal2987 commented on code in PR #23490:

URL: https://github.com/apache/airflow/pull/23490#discussion_r868781207

##

airflow/providers/cncf/kubernetes/utils/pod_manager.py:

##

@@ -380,9 +380,15 @@ def _exec_pod_command(self, resp, command: str) -> Optional[str]:

resp.write_stdin(command + '\n')

while resp.is_open():

resp.update(timeout=1)

-if resp.peek_stdout():

-return resp.read_stdout()

-if resp.peek_stderr():

-self.log.info("stderr from command: %s", resp.read_stderr())

+res = None

+while resp.peek_stdout():

+res = res + resp.read_stdout()

+if res:

+return res

Review Comment:

Do we get both stdout and stderr in a real scenario? I have seen that in Spark we can get both together. If so, I should move this return to the end. This API doesn't have much documentation, so we can only reason from how a normal system works.
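
As a side note on the hunk above: `res = None` followed by `res = res + resp.read_stdout()` would raise a `TypeError` on the first chunk, because `None` cannot be added to a string. Below is a minimal sketch of accumulating chunks safely. `FakeExecStream` is a hypothetical stand-in that only mimics the method names used in the diff, and `read_all_stdout` is an illustrative helper, not the code that was merged.

```python
from typing import Optional


class FakeExecStream:
    """Hypothetical stand-in for the exec stream; yields queued stdout chunks."""

    def __init__(self, chunks):
        self._chunks = list(chunks)

    def is_open(self) -> bool:
        return bool(self._chunks)

    def update(self, timeout: int = 0) -> None:
        pass  # a real stream would poll the connection here

    def peek_stdout(self) -> str:
        return self._chunks[0] if self._chunks else ""

    def read_stdout(self) -> str:
        return self._chunks.pop(0)


def read_all_stdout(resp) -> Optional[str]:
    """Concatenate every available stdout chunk instead of returning the first."""
    parts = []  # a list avoids the None + str TypeError of `res = None`
    while resp.is_open():
        resp.update(timeout=1)
        while resp.peek_stdout():
            parts.append(resp.read_stdout())
        if parts:
            return "".join(parts)
    return None
```

Like the diff, this returns after the first batch of available chunks. Whether stderr should also be drained before returning is the open question raised in the review comment.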

[GitHub] [airflow] rahulgoyal2987 commented on a diff in pull request #23490: Fixed Kubernetes Operator large xcom content Defect

rahulgoyal2987 commented on code in PR #23490:

URL: https://github.com/apache/airflow/pull/23490#discussion_r868781207

##

airflow/providers/cncf/kubernetes/utils/pod_manager.py:

##

@@ -380,9 +380,15 @@ def _exec_pod_command(self, resp, command: str) ->

Optional[str]:

resp.write_stdin(command + '\n')

while resp.is_open():

resp.update(timeout=1)

-if resp.peek_stdout():

-return resp.read_stdout()

-if resp.peek_stderr():

-self.log.info("stderr from command: %s",

resp.read_stderr())

+res = None

+while resp.peek_stdout():

+res = res + resp.read_stdout()

+if res:

+return res

Review Comment:

Do we get stdout and stderr in real scenario? I have seen in spark we can

get both together. Then I should move this return part to in end.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

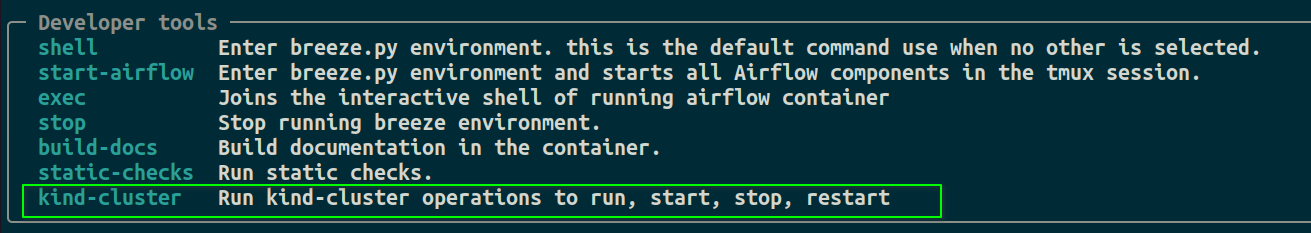

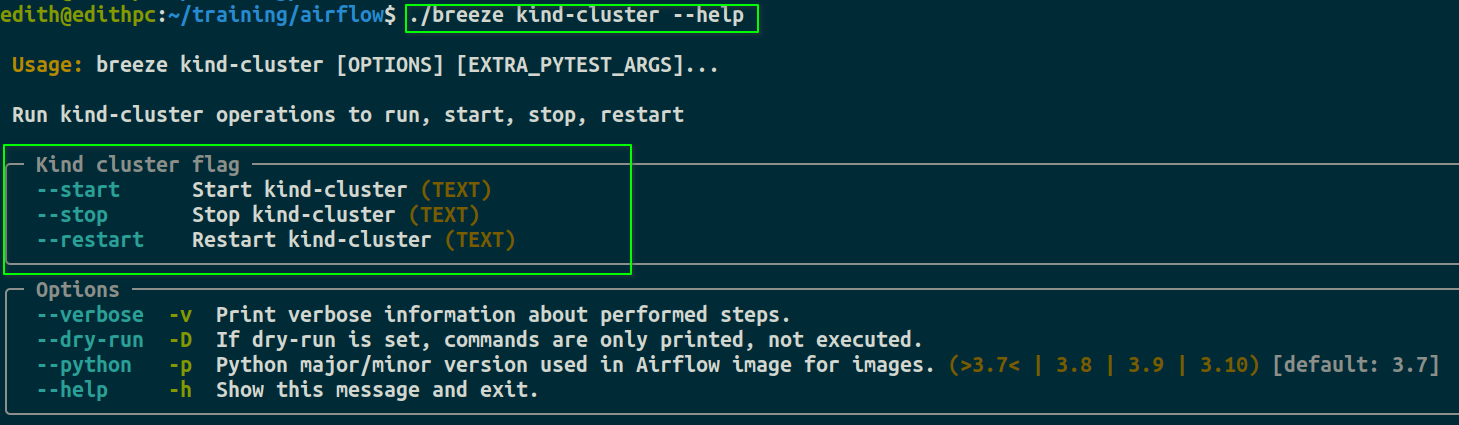

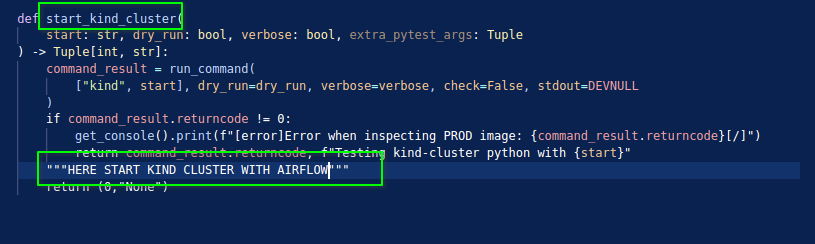

[GitHub] [airflow] edithturn commented on issue #23085: Breeze: Running K8S tests with Kind with Breeze

edithturn commented on issue #23085:

URL: https://github.com/apache/airflow/issues/23085#issuecomment-1121849684

@potiuk let me know if I am on the right path:

1. In the Developer tools section, I am adding `kind-cluster` as a command (`./breeze --help`).

   * As options of `kind-cluster`, I am adding just "start", "stop" and "restart" for now.

2. I added a new class in `dev/breeze/src/airflow_breeze/utils/kind_command_utils.py`. It will contain the validations to start, stop, etc. kind with the Airflow version, for this: `./breeze-legacy kind-cluster start`

Now, `--start`, `--stop` and `--restart` should be commands and not options, right? In `./breeze kind-cluster start`, `kind-cluster` is a command and **start** should be a command too.
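
The command-versus-option question can be sketched with nested subcommands. This is only an illustration using the standard library's `argparse`, not the way Breeze itself is implemented; `build_parser` is a hypothetical helper, and the action names are the three mentioned in the comment.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Build a parser where `kind-cluster` is a command and start/stop/restart are sub-commands."""
    parser = argparse.ArgumentParser(prog="breeze")
    commands = parser.add_subparsers(dest="command", required=True)

    kind_cluster = commands.add_parser("kind-cluster", help="Manage the kind cluster")
    actions = kind_cluster.add_subparsers(dest="action", required=True)
    for name in ("start", "stop", "restart"):
        # Each action is its own sub-command, so it can grow its own options later.
        actions.add_parser(name, help=f"{name} the kind cluster")

    return parser


if __name__ == "__main__":
    args = build_parser().parse_args(["kind-cluster", "start"])
    print(args.command, args.action)
```

With this layout, `breeze kind-cluster start` parses as two nested commands, while something like `breeze kind-cluster --start` would be rejected as an unknown option.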

[GitHub] [airflow] eduardchai opened a new issue, #23610: AttributeError: 'CeleryKubernetesExecutor' object has no attribute 'send_callback'

eduardchai opened a new issue, #23610:

URL: https://github.com/apache/airflow/issues/23610

### Apache Airflow version

2.3.0 (latest released)

### What happened

The issue started to occur after upgrading airflow from v2.2.5 to v2.3.0. The schedulers are crashing when DAG's SLA is configured. Only occurred when I used `CeleryKubernetesExecutor`. Tested on `CeleryExecutor` and it works as expected.

```
Traceback (most recent call last):
  File "/home/airflow/.local/bin/airflow", line 8, in
    sys.exit(main())
  File "/home/airflow/.local/lib/python3.9/site-packages/airflow/__main__.py", line 38, in main
    args.func(args)
  File "/home/airflow/.local/lib/python3.9/site-packages/airflow/cli/cli_parser.py", line 51, in command
    return func(*args, **kwargs)
  File "/home/airflow/.local/lib/python3.9/site-packages/airflow/utils/cli.py", line 99, in wrapper
    return f(*args, **kwargs)
  File "/home/airflow/.local/lib/python3.9/site-packages/airflow/cli/commands/scheduler_command.py", line 75, in scheduler
    _run_scheduler_job(args=args)
  File "/home/airflow/.local/lib/python3.9/site-packages/airflow/cli/commands/scheduler_command.py", line 46, in _run_scheduler_job
    job.run()
  File "/home/airflow/.local/lib/python3.9/site-packages/airflow/jobs/base_job.py", line 244, in run
    self._execute()
  File "/home/airflow/.local/lib/python3.9/site-packages/airflow/jobs/scheduler_job.py", line 736, in _execute
    self._run_scheduler_loop()
  File "/home/airflow/.local/lib/python3.9/site-packages/airflow/jobs/scheduler_job.py", line 824, in _run_scheduler_loop
    num_queued_tis = self._do_scheduling(session)
  File "/home/airflow/.local/lib/python3.9/site-packages/airflow/jobs/scheduler_job.py", line 919, in _do_scheduling
    self._send_dag_callbacks_to_processor(dag, callback_to_run)
  File "/home/airflow/.local/lib/python3.9/site-packages/airflow/jobs/scheduler_job.py", line 1179, in _send_dag_callbacks_to_processor
    self._send_sla_callbacks_to_processor(dag)
  File "/home/airflow/.local/lib/python3.9/site-packages/airflow/jobs/scheduler_job.py", line 1195, in _send_sla_callbacks_to_processor
    self.executor.send_callback(request)
AttributeError: 'CeleryKubernetesExecutor' object has no attribute 'send_callback'
```

### What you think should happen instead

_No response_

### How to reproduce

1. Use `CeleryKubernetesExecutor`

2. Configure DAG's SLA

DAG to reproduce:

```
#
# Licensed to the Apache Software Foundation (ASF) under one
# or more contributor license agreements. See the NOTICE file
# distributed with this work for additional information
# regarding copyright ownership. The ASF licenses this file
# to you under the Apache License, Version 2.0 (the
# "License"); you may not use this file except in compliance
# with the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing,
# software distributed under the License is distributed on an
# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
# KIND, either express or implied. See the License for the
# specific
```

[GitHub] [airflow] thaysa commented on issue #17481: Execution date is in future

thaysa commented on issue #17481: URL: https://github.com/apache/airflow/issues/17481#issuecomment-1121830025 @lewismc in my case, the dates were out of sync between the Airflow metadatabase server and the Airflow scheduler pod. They were both in UTC but they were out of sync.

[GitHub] [airflow] kaojunsong opened a new issue, #23609: "RuntimeError: dictionary changed size during iteration" happens in scheduler after running for a few hours

kaojunsong opened a new issue, #23609:

URL: https://github.com/apache/airflow/issues/23609

### Apache Airflow version

2.2.5

### What happened

The following error happens in the Airflow scheduler:

[2022-05-09 11:22:44,122] [INFO] base_executor.py:85 - Adding to queue: ['airflow', 'tasks', 'run', 'stress-ingest', 'ingest', 'manual__2022-05-09T10:31:12.256131+00:00', '--local', '--subdir', 'DAGS_FOLDER/stress_ingest.py']

Exception in thread Thread-7940:

Traceback (most recent call last):

  File "/usr/local/lib/python3.9/threading.py", line 973, in _bootstrap_inner

    self.run()

  File "/usr/local/lib/python3.9/concurrent/futures/process.py", line 317, in run

    result_item, is_broken, cause = self.wait_result_broken_or_wakeup()

  File "/usr/local/lib/python3.9/concurrent/futures/process.py", line 376, in wait_result_broken_or_wakeup

    worker_sentinels = [p.sentinel for p in self.processes.values()]

  File "/usr/local/lib/python3.9/concurrent/futures/process.py", line 376, in

    worker_sentinels = [p.sentinel for p in self.processes.values()]

RuntimeError: dictionary changed size during iteration
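
The snippet below does not diagnose the scheduler problem; it only illustrates the class of error in the last traceback line: iterating over a dict's live `values()` view while keys are being added. `collect_values` is a hypothetical helper, and the in-loop insertion is a single-threaded stand-in for another thread changing the dict.

```python
from typing import Dict, List


def collect_values(processes: Dict[str, int], snapshot: bool) -> List[int]:
    """Iterate over dict values while the dict grows, with or without a snapshot."""
    # list(...) copies the values up front; the bare view tracks the live dict.
    values = list(processes.values()) if snapshot else processes.values()
    seen: List[int] = []
    for n, value in enumerate(values):
        # Hypothetical stand-in for another thread registering a new entry.
        processes[f"late-{n}"] = -1
        seen.append(value)
    return seen


if __name__ == "__main__":
    print(collect_values({"a": 1, "b": 2}, snapshot=True))
    try:
        collect_values({"a": 1, "b": 2}, snapshot=False)
    except RuntimeError as err:
        print(err)
```

Iterating over a snapshot trades a small copy for immunity to concurrent insertions, which is the usual way this error is avoided in application code.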

### What you think should happen instead

No error happens

### How to reproduce

I have created two DAGs; the first DAG triggers the second one thousands of times:

```
from datetime import datetime, timedelta
from distutils.command.config import config
from textwrap import dedent
from common.utils import httpclient

# The DAG object; we'll need this to instantiate a DAG
from airflow import DAG

# Operators; we need this to operate!
from airflow.operators.trigger_dagrun import TriggerDagRunOperator
from airflow.operators.python import PythonOperator

DELEGATOR_END_POINT = 'http://space-common-delegator-clusteripsvc:9049/api/pipelines/dags/stress-ingest/dagRuns/'


def trigger(**kwargs):
    conf = kwargs.get('dag_run').conf
    loop_number=conf["loop"]
    print("Loop: "+str(loop_number))
    for i in range(loop_number):
        httpclient.post(DELEGATOR_END_POINT, conf)
        print("Trigger: "+str(i))


with DAG(
    'stress-test',
    # These args will get passed on to each operator
    # You can override them on a per-task basis during operator initialization
    default_args={
        'depends_on_past': False,
        'email': ['kao-jun.s...@zontal.io'],
        'email_on_failure': False,
        'email_on_retry': False,
        'retries': 1,
        'retry_delay': timedelta(minutes=5),
    },
    description='A Stress Test DAG',
    start_date=datetime(2022, 1, 1),
    catchup=False,
    schedule_interval=None,
    tags=['stress','test'],
) as dag:
    trigger_via_delegator = PythonOperator(
        task_id='trigger_via_delegator',
        python_callable=trigger,
        dag=dag,
        do_xcom_push=True,
        retries=0
    )

    trigger_via_delegator
```

```
from datetime import datetime, timedelta
from distutils.command.config import config
from textwrap import dedent
from common.utils import httpclient

# The DAG object; we'll need this to instantiate a DAG
from airflow import DAG

# Operators; we need this to operate!
from airflow.operators.trigger_dagrun import TriggerDagRunOperator
from airflow.operators.python import PythonOperator
from common.utils.httpclient import post, get
from common.constants import INGEST_PULL_END_POINT


def ingest(**kwargs):
    conf = kwargs.get('dag_run').conf
    body = conf['body']
    post(INGEST_PULL_END_POINT, body, 'stress test')


with DAG(
    'stress-ingest',
    default_args={
        'depends_on_past': False,
        'email': ['kao-jun.s...@zontal.io'],
        'email_on_failure': False,
        'email_on_retry': False,
        'retries': 1,
        'retry_delay': timedelta(minutes=5),
    },
    description='A Stress Test DAG',
    start_date=datetime(2022, 1, 1),
    catchup=False,
    schedule_interval=None,
    tags=['stress','test'],
) as dag:
    trigger_via_delegator = PythonOperator(
        task_id='ingest',
        python_callable=ingest,
        dag=dag,
        do_xcom_push=True,
        retries=0
    )

    trigger_via_delegator
```

### Operating System

PRETTY_NAME="Debian GNU/Linux 9 (stretch)" NAME="Debian GNU/Linux"

VERSION_ID="9" VERSION="9 (stretch)" VERSION_CODENAME=stretch ID=debian

HOME_URL="https://www.debian.org/" SUPPORT_URL="https://www.debian.org/support"

BUG_REPORT_URL="https://bugs.debian.org/"

### Versions of Apache Airflow Providers

apache-airflow[kubernetes,postgres,celery,redis,trino,ldap,elasticsearch,amazon,crypto,oracle,jdbc,microsoft-mssql]==2.2.5

\

--constraint

"http

[GitHub] [airflow] kolfild26 commented on issue #23607: airflow/configuration.py [logging] log_filename_template FutureWarning

kolfild26 commented on issue #23607: URL: https://github.com/apache/airflow/issues/23607#issuecomment-1121736955 `log_filename_template = dag_id=...` This looks strange, btw. I didn't find any other lines containing two `=` in airflow.cfg. I then tried deleting `"dag_id="`, but with no success: the exception remains.

[GitHub] [airflow] kolfild26 opened a new issue, #23607: airflow/configuration.py [logging] log_filename_template FutureWarning

kolfild26 opened a new issue, #23607:

URL: https://github.com/apache/airflow/issues/23607

### Apache Airflow version

2.3.0 (latest released)

### What happened

Hi,

Tried to upgrade from v.2.2.3 to v.2.3.

`airflow db upgrade:`

```

./airflow2.3/lib/python3.8/site-packages/airflow/configuration.py:412:

FutureWarning: The 'log_filename_template' setting in [logging] has the old

default value of '{{ ti.dag_id }}/{{ ti.task_id }}/{{ ts }}/{{ try_number

}}.log'. This value has been changed to 'dag_id={{ ti.dag_id }}/run_id={{

ti.run_id }}/task_id={{ ti.task_id }}/{% if ti.map_index >= 0 %}map_index={{

ti.map_index }}/{% endif %}attempt={{ try_number }}.log' in the running config,

but please update your config before Apache Airflow 3.0.

```

I reviewed my airflow.cfg file and changed what was recommended by the

warning message. I also checked [the default config

file](https://github.com/apache/airflow/blob/main/airflow/config_templates/default_airflow.cfg)

in the Airflow GitHub repository. It contains the same string:

`log_filename_template = dag_id= ti.dag_id /run_id= ti.run_id

/task_id= ti.task_id /{{%% if ti.map_index >= 0 %%}}map_index=

ti.map_index /{{%% endif %%}}attempt= try_number .log`

But when I run it again, I get this exception:

`airflow db upgrade --show-sql-only:`

```

Unable to load the config, contains a configuration error.

Traceback (most recent call last):

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/logging/config.py", line

563, in configure

handler = self.configure_handler(handlers[name])

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/logging/config.py", line

744, in configure_handler

result = factory(**kwargs)

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/airflow/utils/log/file_task_handler.py",

line 51, in __init__

self.filename_template, self.filename_jinja_template =

parse_template_string(filename_template)

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/airflow/utils/helpers.py",

line 176, in parse_template_string

return None, jinja2.Template(template_string)

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/jinja2/environment.py",

line 1195, in __new__

return env.from_string(source, template_class=cls)

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/jinja2/environment.py",

line 1092, in from_string

return cls.from_code(self, self.compile(source), gs, None)

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/jinja2/environment.py",

line 757, in compile

self.handle_exception(source=source_hint)

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/jinja2/environment.py",

line 925, in handle_exception

raise rewrite_traceback_stack(source=source)

File "", line 1, in template

jinja2.exceptions.TemplateSyntaxError: expected token ':', got '}'

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/media/data/anaconda3/envs/airflow2.3/bin/airflow", line 5, in

from airflow.__main__ import main

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/airflow/__init__.py",

line 47, in

settings.initialize()

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/airflow/settings.py",

line 561, in initialize

LOGGING_CLASS_PATH = configure_logging()

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/airflow/logging_config.py",

line 73, in configure_logging

raise e

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/airflow/logging_config.py",

line 68, in configure_logging

dictConfig(logging_config)

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/logging/config.py", line

808, in dictConfig

dictConfigClass(config).configure()

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/logging/config.py", line

570, in configure

raise ValueError('Unable to configure handler '

ValueError: Unable to configure handler 'task'

```

It seems that the **log_filename_template** parameter causes the error,

because:

- there are no other exceptions or error messages

- the exception disappears when the changes are reverted

I don't know whether this FutureWarning causes any problems when

working with Airflow. Is it okay to stay with the old value of

**log_filename_template**?
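
One possible reading of the `TemplateSyntaxError`, offered as an assumption rather than a confirmed diagnosis: the linked default config file may be a template that is first passed through `str.format` (where `{{` and `}}` are escapes for single braces) and then parsed by `configparser` (where `%%` is an escape for `%`). A value copied verbatim from such a file would still carry the extra escaping when it reaches Jinja. The sketch below uses only the standard library; `TEMPLATE_FILE_LINE` is reconstructed under that assumption and `render_template_file_value` is a hypothetical helper.

```python
import configparser

# Assumed form of the line inside the template file: braces doubled for
# str.format and percent signs doubled for configparser interpolation.
TEMPLATE_FILE_LINE = (
    "log_filename_template = dag_id={{{{ ti.dag_id }}}}/run_id={{{{ ti.run_id }}}}/"
    "task_id={{{{ ti.task_id }}}}/{{%% if ti.map_index >= 0 %%}}map_index="
    "{{{{ ti.map_index }}}}/{{%% endif %%}}attempt={{{{ try_number }}}}.log"
)


def render_template_file_value(line: str) -> str:
    """Undo both escaping layers and return the value a Jinja parser would expect."""
    # Step 1: str.format collapses '{{' to '{' and '}}' to '}'.
    formatted = line.format()
    # Step 2: configparser's default interpolation collapses '%%' to '%'.
    parser = configparser.ConfigParser()
    parser.read_string("[logging]\n" + formatted)
    return parser.get("logging", "log_filename_template")


if __name__ == "__main__":
    print(render_template_file_value(TEMPLATE_FILE_LINE))
```

Under these assumptions, the value to put into airflow.cfg would be the one quoted in the FutureWarning (with `%` doubled if the file is read with interpolation enabled), not the line as it appears in the template file.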

### What you think should happen instead

_No response_

### How to reproduce

1. upgrade airflow installation from [pip apache

airflow](https://pypi.org/project/apache-airflow/)

2. run `airflow db upgrade`

### Operating System

[GitHub] [airflow] kolfild26 commented on issue #23605: 2.2.3 -> 2.3 upgrade db error with mysql metadb

kolfild26 commented on issue #23605: URL: https://github.com/apache/airflow/issues/23605#issuecomment-1121704745 So, currently unable to move to v.2.3. p.s. I didn't try to initiate the db from scratch, because I need to keep the past data and settings.

[GitHub] [airflow] boring-cyborg[bot] commented on issue #23605: 2.2.3 -> 2.3 upgrade db error with mysql metadb

boring-cyborg[bot] commented on issue #23605: URL: https://github.com/apache/airflow/issues/23605#issuecomment-1121702896 Thanks for opening your first issue here! Be sure to follow the issue template!

[GitHub] [airflow] kolfild26 opened a new issue, #23605: 2.2.3 -> 2.3 upgrade db error with mysql metadb

kolfild26 opened a new issue, #23605:

URL: https://github.com/apache/airflow/issues/23605

### Apache Airflow version

2.3.0 (latest released)

### What happened

Hi,

Tried to upgrade from v.2.2.3 to v.2.3

`airflow db upgrade:`

```

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/sqlalchemy/engine/base.py",

line 1705, in _execute_context

self.dialect.do_execute(

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/sqlalchemy/engine/default.py",

line 716, in do_execute

cursor.execute(statement, parameters)

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/mysql/connector/cursor_cext.py",

line 269, in execute

result = self._cnx.cmd_query(stmt, raw=self._raw,

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/mysql/connector/connection_cext.py",

line 528, in cmd_query

raise errors.get_mysql_exception(exc.errno, msg=exc.msg,

mysql.connector.errors.ProgrammingError: 1054 (42S22): Unknown column

'rendered_task_instance_fields.dag_id' in 'on clause'

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/media/data/anaconda3/envs/airflow2.3/bin/airflow", line 8, in

sys.exit(main())

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/airflow/__main__.py",

line 38, in main

args.func(args)

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/airflow/cli/cli_parser.py",

line 51, in command

return func(*args, **kwargs)

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/airflow/utils/cli.py",

line 99, in wrapper

return f(*args, **kwargs)

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/airflow/cli/commands/db_command.py",

line 82, in upgradedb

db.upgradedb(to_revision=to_revision, from_revision=from_revision,

show_sql_only=args.show_sql_only)

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/airflow/utils/session.py",

line 71, in wrapper

return func(*args, session=session, **kwargs)

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/airflow/utils/db.py",

line 1400, in upgradedb

for err in _check_migration_errors(session=session):

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/airflow/utils/db.py",

line 1285, in _check_migration_errors

yield from check_fn(session=session)

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/airflow/utils/db.py",

line 1243, in check_bad_references

invalid_row_count = invalid_rows_query.count()

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/sqlalchemy/orm/query.py",

line 3062, in count

return self._from_self(col).enable_eagerloads(False).scalar()

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/sqlalchemy/orm/query.py",

line 2803, in scalar

ret = self.one()

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/sqlalchemy/orm/query.py",

line 2780, in one

return self._iter().one()

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/sqlalchemy/orm/query.py",

line 2818, in _iter

result = self.session.execute(

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/sqlalchemy/orm/session.py",

line 1670, in execute

result = conn._execute_20(statement, params or {}, execution_options)

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/sqlalchemy/engine/base.py",

line 1520, in _execute_20

return meth(self, args_10style, kwargs_10style, execution_options)

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/sqlalchemy/sql/elements.py",

line 313, in _execute_on_connection

return connection._execute_clauseelement(

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/sqlalchemy/engine/base.py",

line 1389, in _execute_clauseelement

ret = self._execute_context(

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/sqlalchemy/engine/base.py",

line 1748, in _execute_context

self._handle_dbapi_exception(

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/sqlalchemy/engine/base.py",

line 1929, in _handle_dbapi_exception

util.raise_(

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/sqlalchemy/util/compat.py",

line 211, in raise_

raise exception

File

"/media/data/anaconda3/envs/airflow2.3/lib/python3.8/site-packages/sqlalchemy/engine/base.py",

line 1705, in _execute_context

self.di

[GitHub] [airflow] potiuk commented on pull request #23604: Update min requirements for rich to 12.4.1

potiuk commented on PR #23604: URL: https://github.com/apache/airflow/pull/23604#issuecomment-1121665957 Hey @josh-fell - updated min-requirements ...

[GitHub] [airflow] potiuk commented on pull request #23506: Added postgres 14 to support versions(including breeze)

potiuk commented on PR #23506: URL: https://github.com/apache/airflow/pull/23506#issuecomment-1121665587 You want to rebase to latest version for rich 12.4.1 min-version after #23604 is merged.

[GitHub] [airflow] potiuk opened a new pull request, #23604: Update min requirements for rich to 12.4.1

potiuk opened a new pull request, #23604:

URL: https://github.com/apache/airflow/pull/23604


[airflow] branch main updated (e63dbdc431 -> d21e49dfda)

This is an automated email from the ASF dual-hosted git repository.

potiuk pushed a change to branch main

in repository https://gitbox.apache.org/repos/asf/airflow.git

from e63dbdc431 Add exception to catch single line private keys (#23043)

add d21e49dfda Add sample dag and doc for S3ListPrefixesOperator (#23448)

No new revisions were added by this update.

Summary of changes:

.../amazon/aws/example_dags/example_s3.py | 39 ++

airflow/providers/amazon/aws/operators/s3.py | 4 +++

.../operators/s3.rst | 16 +

3 files changed, 46 insertions(+), 13 deletions(-)

[GitHub] [airflow] potiuk merged pull request #23448: Add sample dag and doc for S3ListPrefixesOperator

potiuk merged PR #23448: URL: https://github.com/apache/airflow/pull/23448

[airflow] branch main updated (d7b85d9a0a -> e63dbdc431)

This is an automated email from the ASF dual-hosted git repository.

potiuk pushed a change to branch main

in repository https://gitbox.apache.org/repos/asf/airflow.git

from d7b85d9a0a Use inclusive words in apache airflow project (#23090)

add e63dbdc431 Add exception to catch single line private keys (#23043)

No new revisions were added by this update.

Summary of changes:

airflow/providers/ssh/hooks/ssh.py| 3 +++

tests/providers/ssh/hooks/test_ssh.py | 18 ++

2 files changed, 21 insertions(+)

[GitHub] [airflow] boring-cyborg[bot] commented on pull request #23043: Add exception to catch single line private keys

boring-cyborg[bot] commented on PR #23043: URL: https://github.com/apache/airflow/pull/23043#issuecomment-1121658704 Awesome work, congrats on your first merged pull request!

[GitHub] [airflow] potiuk merged pull request #23043: Add exception to catch single line private keys

potiuk merged PR #23043: URL: https://github.com/apache/airflow/pull/23043

[GitHub] [airflow] potiuk closed issue #22576: SFTP connection hook not working when using inline Ed25519 key from Airflow connection

potiuk closed issue #22576: SFTP connection hook not working when using inline Ed25519 key from Airflow connection URL: https://github.com/apache/airflow/issues/22576

[GitHub] [airflow] edithturn commented on pull request #23090: Use inclusive words in apache airflow project

edithturn commented on PR #23090: URL: https://github.com/apache/airflow/pull/23090#issuecomment-1121621186 wohoo! Thank you! 🙌🏽💃🏽

[airflow] branch main updated: Use inclusive words in apache airflow project (#23090)

This is an automated email from the ASF dual-hosted git repository.

potiuk pushed a commit to branch main

in repository https://gitbox.apache.org/repos/asf/airflow.git

The following commit(s) were added to refs/heads/main by this push:

new d7b85d9a0a Use inclusive words in apache airflow project (#23090)

d7b85d9a0a is described below

commit d7b85d9a0a09fd7b287ec928d3b68c38481b0225

Author: Edith Puclla <58795858+editht...@users.noreply.github.com>

AuthorDate: Mon May 9 16:52:29 2022 -0500

Use inclusive words in apache airflow project (#23090)

---

.pre-commit-config.yaml| 39 --

airflow/sensors/base.py| 4 +--

airflow/utils/orm_event_handlers.py| 2 +-

.../commands/production_image_commands.py | 4 +--

scripts/ci/libraries/_sanity_checks.sh | 2 +-

scripts/in_container/run_system_tests.sh | 2 +-

tests/conftest.py | 2 +-

7 files changed, 37 insertions(+), 18 deletions(-)

diff --git a/.pre-commit-config.yaml b/.pre-commit-config.yaml

index c8ab403182..70c0c80d6e 100644

--- a/.pre-commit-config.yaml

+++ b/.pre-commit-config.yaml

@@ -429,19 +429,38 @@ repos:

- id: check-for-inclusive-language

language: pygrep

name: Check for language that we do not accept as community

-description: Please use "deny_list" or "allow_list" instead.

-entry: "(?i)(black|white)[_-]?list"

+description: Please use more appropriate words for community documentation.

+entry: >

+ (?i)

+ (black|white)[_-]?list|

+ \bshe\b|

+ \bhe\b|

+ \bher\b|

+ \bhis\b|

+ \bmaster\b|

+ \bslave\b|

+ \bsanity\b|

+ \bdummy\b

pass_filenames: true

exclude: >

(?x)

- ^airflow/www/fab_security/manager\.py$|

- ^airflow/providers/apache/cassandra/hooks/cassandra\.py$|

- ^airflow/providers/apache/hive/operators/hive_stats\.py$|

- ^airflow/providers/apache/hive/.*PROVIDER_CHANGES_*|

- ^airflow/providers/apache/hive/.*README\.md$|

- ^tests/providers/apache/cassandra/hooks/test_cassandra\.py$|

- ^docs/apache-airflow-providers-apache-cassandra/connections/cassandra\.rst$|

- ^docs/apache-airflow-providers-apache-hive/commits\.rst$|

+ ^airflow/www/fab_security/manager.py$|

+ ^airflow/www/static/|

+ ^airflow/providers/|

+ ^tests/providers/apache/cassandra/hooks/test_cassandra.py$|

+ ^docs/apache-airflow-providers-apache-cassandra/connections/cassandra.rst$|

+ ^docs/apache-airflow-providers-apache-hive/commits.rst$|

+ ^airflow/api_connexion/openapi/v1.yaml$|

+ ^tests/cli/commands/test_webserver_command.py$|

+ ^airflow/cli/commands/webserver_command.py$|

+ ^airflow/ui/yarn.lock$|

+ ^airflow/config_templates/default_airflow.cfg$|

+ ^airflow/config_templates/config.yml$|

+ ^docs/*.*$|

+ ^tests/providers/|

+ ^.pre-commit-config\.yaml$|

+ ^.*RELEASE_NOTES\.rst$|

+ ^.*CHANGELOG\.txt$|^.*CHANGELOG\.rst$|

git

- id: check-base-operator-usage

language: pygrep

diff --git a/airflow/sensors/base.py b/airflow/sensors/base.py

index 1a3bd72a33..5cbe009c82 100644

--- a/airflow/sensors/base.py

+++ b/airflow/sensors/base.py

@@ -157,10 +157,10 @@ class BaseSensorOperator(BaseOperator, SkipMixin):

f".{self.task_id}'; received '{self.mode}'."

)

-# Sanity check for poke_interval isn't immediately over MySQL's TIMESTAMP limit.

+# Quick check for poke_interval isn't immediately over MySQL's TIMESTAMP limit.

# This check is only rudimentary to catch trivial user errors, e.g. mistakenly

# set the value to milliseconds instead of seconds. There's another check when

-# we actually try to reschedule to ensure database sanity.

+# we actually try to reschedule to ensure database coherence.

if self.reschedule and _is_metadatabase_mysql():

if timezone.utcnow() + datetime.timedelta(seconds=self.poke_interval) > _MYSQL_TIMESTAMP_MAX:

raise AirflowException(

diff --git a/airflow/utils/orm_event_handlers.py b/airflow/utils/orm_event_handlers.py

index 23c99d8866..f3c08c9c07 100644

--- a/airflow/utils/orm_event_handlers.py

+++ b/airflow/utils/orm_event_handlers.py

@@ -43,7 +43,7 @@ def setup_event_handlers(engine):

cursor.execute("PRAGMA foreign_keys=ON")

cursor.close()

-# this ensures sanity in mysql when storing datetimes (not required for postgres)

+# this ensures coherence in mysql when storing datetimes (not required for postgres)

if engine.dialect.name == "mysql":

@event.listens_for(engine, "connect")

diff --git

a/dev/breeze/src/a

[GitHub] [airflow] potiuk closed issue #15994: Use inclusive words in Apache Airflow project

potiuk closed issue #15994: Use inclusive words in Apache Airflow project

URL: https://github.com/apache/airflow/issues/15994

[GitHub] [airflow] potiuk merged pull request #23090: Use inclusive words in apache airflow project

potiuk merged PR #23090:

URL: https://github.com/apache/airflow/pull/23090
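For reference, the `check-for-inclusive-language` pattern from #23090 can be tried outside pre-commit. The following is a minimal sketch, not part of the commit: it assumes Python's `re` module with `re.VERBOSE` (so the line breaks between alternatives are ignored) and `re.IGNORECASE` in place of the inline `(?i)`. The helper name `flagged_terms` is hypothetical, and how the `pygrep` hook itself treats the folded YAML whitespace is not covered here.

```python
import re
from typing import List

# Alternatives copied from the `entry:` value in the diff above.
# re.VERBOSE ignores the whitespace between them (an assumption of this
# sketch); re.IGNORECASE stands in for the inline (?i) flag.
INCLUSIVE_LANGUAGE = re.compile(
    r"""
    (black|white)[_-]?list|
    \bshe\b|
    \bhe\b|
    \bher\b|
    \bhis\b|
    \bmaster\b|
    \bslave\b|
    \bsanity\b|
    \bdummy\b
    """,
    re.IGNORECASE | re.VERBOSE,
)


def flagged_terms(line: str) -> List[str]:
    """Return every substring of `line` that the pattern would flag."""
    return [match.group(0) for match in INCLUSIVE_LANGUAGE.finditer(line)]


if __name__ == "__main__":
    for sample in (
        "# Sanity check for poke_interval",
        "use white_list or Black-List",
        "the mastery of this theme",
    ):
        print(sample, "->", flagged_terms(sample))
```

The `\b` word boundaries are what keep words such as "the", "this", or "mastery" from being flagged, while the list alternative deliberately has no boundaries so that identifiers like `white_list` are caught.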

[airflow] branch constraints-main updated: Updating constraints. Build id:2296793856

This is an automated email from the ASF dual-hosted git repository.

github-bot pushed a commit to branch constraints-main
in repository https://gitbox.apache.org/repos/asf/airflow.git

The following commit(s) were added to refs/heads/constraints-main by this push:

new be078fa89a Updating constraints. Build id:2296793856

be078fa89a is described below

commit be078fa89aa6460dfb1fdda3dcc8c2a4cff0fdf4
Author: Automated GitHub Actions commit
AuthorDate: Mon May 9 21:44:14 2022 +

Updating constraints. Build id:2296793856

This update in constraints is automatically committed by the CI 'constraints-push' step based on
HEAD of 'refs/heads/main' in 'apache/airflow'
with commit sha 9a6baab5a271b28b6b3cbf96ffa151ac7dc79013.

All tests passed in this build so we determined we can push the updated constraints.

See https://github.com/apache/airflow/blob/main/README.md#installing-from-pypi for details.

---
constraints-3.10.txt | 42 +--
constraints-3.7.txt | 42 +--
constraints-3.8.txt | 42 +--
constraints-3.9.txt | 42 +--
constraints-no-providers-3.10.txt | 6 ++---
constraints-no-providers-3.7.txt | 6 ++---
constraints-no-providers-3.8.txt | 6 ++---
constraints-no-providers-3.9.txt | 6 ++---
constraints-source-providers-3.10.txt | 12 +-
constraints-source-providers-3.7.txt | 12 +-
constraints-source-providers-3.8.txt | 12 +-
constraints-source-providers-3.9.txt | 12 +-
12 files changed, 120 insertions(+), 120 deletions(-)

diff --git a/constraints-3.10.txt b/constraints-3.10.txt
index 77034f795b..60345f0333 100644
--- a/constraints-3.10.txt
+++ b/constraints-3.10.txt
@@ -1,5 +1,5 @@
#
-# This constraints file was automatically generated on 2022-05-06T12:25:27Z
+# This constraints file was automatically generated on 2022-05-09T21:43:53Z
# via "eager-upgrade" mechanism of PIP. For the "main" branch of Airflow.
# This variant of constraints install uses the HEAD of the branch version for 'apache-airflow' but installs
# the providers from PIP-released packages at the moment of the constraint generation.
@@ -139,7 +139,7 @@ argcomplete==1.12.3
arrow==1.2.2
asana==0.10.13
asn1crypto==1.5.1
-astroid==2.11.4
+astroid==2.11.5
asttokens==2.0.5
async-timeout==4.0.2
atlasclient==1.0.0
@@ -147,7 +147,7 @@ attrs==20.3.0
aws-xray-sdk==2.9.0
azure-batch==12.0.0
azure-common==1.1.28
-azure-core==1.23.1
+azure-core==1.24.0
azure-cosmos==4.2.0
azure-datalake-store==0.0.52
azure-identity==1.10.0
@@ -172,9 +172,9 @@ billiard==3.6.4.0
black==22.3.0
bleach==5.0.0
blinker==1.4
-boto3==1.22.8
+boto3==1.22.10
boto==2.49.0
-botocore==1.25.8
+botocore==1.25.10
bowler==0.9.0
cached-property==1.5.2
cachelib==0.6.0
@@ -209,7 +209,7 @@ cx-Oracle==8.3.0
dask==2022.5.0
databricks-sql-connector==1.0.2
datadog==0.44.0
-db-dtypes==1.0.0
+db-dtypes==1.0.1
decorator==5.1.1
defusedxml==0.7.1
dill==0.3.1.1
@@ -282,7 +282,7 @@ google-cloud-secret-manager==1.0.1
google-cloud-spanner==1.19.2
google-cloud-speech==1.3.3
google-cloud-storage==1.44.0
-google-cloud-tasks==2.8.1
+google-cloud-tasks==2.9.0
google-cloud-texttospeech==1.0.2
google-cloud-translate==1.7.1
google-cloud-videointelligence==1.16.2
@@ -328,13 +328,13 @@ json-merge-patch==0.2
jsondiff==2.0.0
jsonpath-ng==1.5.3
jsonschema==4.5.1
-jupyter-client==7.3.0
+jupyter-client==7.3.1
jupyter-core==4.10.0
jwcrypto==1.2
keyring==23.5.0
kombu==5.2.4
krb5==0.3.0
-kubernetes==23.3.0
+kubernetes==23.6.0
kylinpy==2.8.4
lazy-object-proxy==1.7.1
ldap3==2.9.1
@@ -351,7 +351,7 @@ mccabe==0.6.1
mongomock==4.0.0
monotonic==1.6
moreorless==0.4.0
-moto==3.1.7
+moto==3.1.8
msal-extensions==1.0.0
msal==1.17.0
msgpack==1.0.3
@@ -359,13 +359,13 @@ msrest==0.6.21
msrestazure==0.6.4
multi-key-dict==2.0.3
multidict==6.0.2
-mypy-boto3-rds==1.22.8
+mypy-boto3-rds==1.22.9
mypy-boto3-redshift-data==1.22.8
mypy-extensions==0.4.3
mypy==0.910
mysql-connector-python==8.0.29
mysqlclient==2.1.0
-nbclient==0.6.2
+nbclient==0.6.3
nbformat==5.4.0
neo4j==4.4.3
nest-asyncio==1.5.5
@@ -450,7 +450,7 @@ pytest-rerunfailures==9.1.1
pytest-timeouts==1.2.1
pytest-xdist==2.5.0
pytest==6.2.5
-python-arango==7.3.3
+python-arango==7.3.4
python-daemon==2.3.0
python-dateutil==2.8.2
python-http-client==3.3.7
@@ -480,7 +480,7 @@ requests==2.27.1
responses==0.20.0
rfc3986==1.5.0
rich-click==1.3.0
-rich==12.3.0
+rich==12.4.1
rsa==4.8
s3transfer==0.5.2
sasl==0.3.1
@@ -546,7 +546,7 @@ tqdm==4.64.0
traitlets==5.1.1
trino==0.313.0
twine==4.0.0
-types-Deprecated==1.2.7
+types-Deprecated==1.2.8
types-Markdown==3.3.14
types-PyMySQL==1.0.19
types-PyYAML==6.0.7
@@ -558,16 +558,16 @@ types-docutils==0.18.3
types-freezegun==1.1.9
types-paramiko==2.10.0
types-protobuf==3

[GitHub] [airflow] pingzh commented on issue #23540: tasks log is not correctly captured

pingzh commented on issue #23540:

URL: https://github.com/apache/airflow/issues/23540#issuecomment-1121604396

While investigating this issue, I found that if I set `DONOT_MODIFY_HANDLERS` to `True`, `airflow tasks run` hangs.

For example, in my local setup, I changed `DONOT_MODIFY_HANDLERS` to `True` and forced the `StandardTaskRunner` to use `_start_by_exec`:

```

airflow tasks run example_bash_operator also_run_this scheduled__2022-05-05T00:00:00+00:00 --job-id 272 --local --subdir /Users/ping_zhang/airlab/repos/airflow-internal-1.10.4/airflow/example_dags/example_bash_operator.py -f

```

It got stuck without making any progress.

[GitHub] [airflow] eladkal commented on pull request #23531: make resources for KubernetesPodTemplate templated

eladkal commented on PR #23531:

URL: https://github.com/apache/airflow/pull/23531#issuecomment-1121587702

Do we mean something like this?

```

V1ResourceRequirements(

requests={"cpu": "{{ dag_run.conf[\'request_cpu\'] }}",

"memory": "8G"},

limits={"cpu": "16000m", "memory": "128G"},

)

```
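One way to picture what templating a non-string field would involve is to walk the structure and render only its string leaves. The sketch below is hypothetical and is not Airflow's implementation: `render_nested` and the tiny `{{ name }}` substitution are stand-ins (a real setup would use Jinja and expressions such as `dag_run.conf[...]`), and a plain dict stands in for `V1ResourceRequirements`.

```python
import re
from typing import Any, Dict

# Matches simple `{{ name }}` placeholders only; a stand-in for Jinja.
_PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_string(value: str, context: Dict[str, str]) -> str:
    """Replace each `{{ name }}` placeholder with its value from `context`."""
    return _PLACEHOLDER.sub(lambda m: str(context[m.group(1)]), value)


def render_nested(value: Any, context: Dict[str, str]) -> Any:
    """Render string leaves inside nested dicts, lists, and tuples."""
    if isinstance(value, str):
        return render_string(value, context)
    if isinstance(value, dict):
        return {key: render_nested(item, context) for key, item in value.items()}
    if isinstance(value, (list, tuple)):
        return type(value)(render_nested(item, context) for item in value)
    # Anything else (numbers, None, arbitrary objects) is returned unchanged.
    return value


# A plain dict standing in for the resources object from the comment above.
resources = {
    "requests": {"cpu": "{{ request_cpu }}", "memory": "8G"},
    "limits": {"cpu": "16000m", "memory": "128G"},
}
rendered = render_nested(resources, {"request_cpu": "500m"})
```

Only the `cpu` request changes here; values without placeholders pass through untouched, which is the behaviour a test for the templated field would presumably want to assert.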


[GitHub] [airflow] eladkal commented on pull request #23531: make resources for KubernetesPodTemplate templated

eladkal commented on PR #23531:

URL: https://github.com/apache/airflow/pull/23531#issuecomment-1121584193

How can we template resources? It's not a string. It's a list object of V1ResourceRequirements. Maybe I'm missing something here, but I would love to see an actual test that templates this field.

[GitHub] [airflow] potiuk commented on pull request #23562: Improve caching for multi-platform images.

potiuk commented on PR #23562:

URL: https://github.com/apache/airflow/pull/23562#issuecomment-1121578933

Merged. Refreshing cache now!

[GitHub] [airflow] potiuk merged pull request #23562: Improve caching for multi-platform images.

potiuk merged PR #23562:

URL: https://github.com/apache/airflow/pull/23562

[GitHub] [airflow] github-actions[bot] commented on pull request #23562: Improve caching for multi-platform images.