[GitHub] [airflow] eladkal commented on issue #27890: SFTP Sensor is not working with File Pattern Parameter

eladkal commented on issue #27890:

URL: https://github.com/apache/airflow/issues/27890#issuecomment-1327112678

cc @Bowrna

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] ephraimbuddy commented on pull request #27829: Improving the release process

ephraimbuddy commented on PR #27829:

URL: https://github.com/apache/airflow/pull/27829#issuecomment-1327111335

I added a new function, `user_confirm_bools`, that returns a bool using `user_confirm` under the hood. This let me remove a lot of `if`/`else` statements. I also added `console_print`, which uses `get_console().print` to print messages to the screen.
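For readers who have not seen the helper, the idea of wrapping a multi-valued confirmation in a boolean can be sketched as below. This is only an illustration under stated assumptions: `Answer`, `user_confirm`, and the injectable `read_input` parameter here are simplified stand-ins written for this example, not the actual Breeze utilities, and the wrapper is named `user_confirm_bool` to keep it distinct from the function in the PR.

```python
from enum import Enum
from typing import Callable


class Answer(Enum):
    """Hypothetical stand-in for the answer type returned by a confirm prompt."""

    YES = "y"
    NO = "n"
    QUIT = "q"


def user_confirm(message: str, read_input: Callable[[str], str] = input) -> Answer:
    """Simplified stand-in prompt: map the typed reply onto an Answer."""
    reply = read_input(f"{message} [y/n/q] ").strip().lower()
    if reply in ("y", "yes"):
        return Answer.YES
    if reply in ("q", "quit"):
        return Answer.QUIT
    return Answer.NO


def user_confirm_bool(message: str, read_input: Callable[[str], str] = input) -> bool:
    """Collapse the three-valued answer into a bool: only YES counts as True."""
    return user_confirm(message, read_input) is Answer.YES
```

With a wrapper like this, a call site can read `if user_confirm_bool("Proceed?"):` instead of comparing against each `Answer` member in an `if`/`else` chain.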

[GitHub] [airflow] dstandish commented on pull request #27344: Add retry to submit_event in trigger to avoid deadlock

dstandish commented on PR #27344:

URL: https://github.com/apache/airflow/pull/27344#issuecomment-1327107144

@NickYadance did you give up on this one?

[GitHub] [airflow] bolkedebruin commented on a diff in pull request #27887: Add allow list for imports during deserialization

bolkedebruin commented on code in PR #27887:

URL: https://github.com/apache/airflow/pull/27887#discussion_r1032070380

##

airflow/utils/json.py:

##

@@ -189,7 +189,7 @@ def __init__(self, *args, **kwargs) -> None:
         if not kwargs.get("object_hook"):
             kwargs["object_hook"] = self.object_hook
-        patterns = conf.getjson("core", "allowed_deserialization_classes")
+        patterns = cast(list, conf.getjson("core", "allowed_deserialization_classes"))

Review Comment:

Mmm yes, I'd prefer that check at configure time rather than here. The config file shouldn't pass validation if this value is not a list.
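As a rough illustration of what checking "at configure time" could look like, the sketch below parses a JSON option once and fails fast unless it is a list of strings, so later code needs no `cast`. `load_allowed_classes` is a hypothetical helper written for this example; it does not use Airflow's `conf` API and is not the change proposed in the PR.

```python
from __future__ import annotations

import json


def load_allowed_classes(raw: str) -> list[str]:
    """Parse a JSON config value and reject anything that is not a list of strings."""
    value = json.loads(raw)
    if not isinstance(value, list) or not all(isinstance(item, str) for item in value):
        raise ValueError(
            "allowed_deserialization_classes must be a JSON list of strings, "
            f"got {type(value).__name__}"
        )
    return value
```

Validating once when the option is read means every consumer can rely on the type, whereas a `cast` only silences the type checker and leaves a bad value to fail later.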


[GitHub] [airflow] Bowrna opened a new pull request, #27905: listener plugin example added

Bowrna opened a new pull request, #27905:

URL: https://github.com/apache/airflow/pull/27905

related: #15353

---

**^ Add meaningful description above**

Read the **[Pull Request

Guidelines](https://github.com/apache/airflow/blob/main/CONTRIBUTING.rst#pull-request-guidelines)**

for more information.

In case of fundamental code changes, an Airflow Improvement Proposal

([AIP](https://cwiki.apache.org/confluence/display/AIRFLOW/Airflow+Improvement+Proposals))

is needed.

In case of a new dependency, check compliance with the [ASF 3rd Party

License Policy](https://www.apache.org/legal/resolved.html#category-x).

In case of backwards incompatible changes please leave a note in a

newsfragment file, named `{pr_number}.significant.rst` or

`{issue_number}.significant.rst`, in

[newsfragments](https://github.com/apache/airflow/tree/main/newsfragments).


[GitHub] [airflow] Bowrna closed pull request #27435: listener plugin example and documentation

Bowrna closed pull request #27435: listener plugin example and documentation

URL: https://github.com/apache/airflow/pull/27435

[GitHub] [airflow] Bowrna opened a new pull request, #27435: listener plugin example and documentation

Bowrna opened a new pull request, #27435:

URL: https://github.com/apache/airflow/pull/27435

This PR contains example code and documentation for using the listener plugin feature in Airflow.

related: https://github.com/apache/airflow/issues/15353


[GitHub] [airflow] blag commented on a diff in pull request #27828: Soft delete datasets that are no longer referenced in DAG schedules or task outlets

blag commented on code in PR #27828:

URL: https://github.com/apache/airflow/pull/27828#discussion_r1032052680

##

airflow/jobs/scheduler_job.py:

##

@@ -1574,3 +1585,33 @@ def _cleanup_stale_dags(self, session: Session =

NEW_SESSION) -> None:

dag.is_active = False

SerializedDagModel.remove_dag(dag_id=dag.dag_id, session=session)

session.flush()

+

+@provide_session

+def _orphan_unreferenced_datasets(self, session: Session = NEW_SESSION) ->

None:

+"""

+Detects datasets that are no longer referenced in any DAG schedule

parameters or task outlets and

+sets the dataset is_orphaned flags to True

+"""

+orphaned_dataset_query = (

+session.query(DatasetModel)

+.join(

+DagScheduleDatasetReference,

+DagScheduleDatasetReference.dataset_id == DatasetModel.id,

+isouter=True,

+)

+.join(

+TaskOutletDatasetReference,

+TaskOutletDatasetReference.dataset_id == DatasetModel.id,

+isouter=True,

+)

+.group_by(DatasetModel.id)

+.having(

+and_(

+func.count(DagScheduleDatasetReference.dag_id) == 0,

+func.count(TaskOutletDatasetReference.dag_id) == 0,

+)

+)

+)

+for dataset in orphaned_dataset_query:

+self.log.info("Orphaning unreferenced dataset '%s'", dataset.uri)

+dataset.is_orphaned = True

Review Comment:

Nope, didn't work. Good idea though. :)

```

sqlalchemy.exc.InvalidRequestError: Can't call Query.update() or

Query.delete() when group_by() has been called

```
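The error arises because `Query.update()` refuses to run on a query that already carries `group_by()`. One shape that sidesteps this is to keep the grouped query as a subquery of ids and issue the UPDATE against those ids. The sketch below shows that shape at the SQL level with the standard-library `sqlite3` module; it is not the SQLAlchemy code from the PR, the table and column names are assumptions modelled on the diff, and other database backends may treat a self-referencing subquery differently.

```python
import sqlite3

# Assumed, simplified schema modelled on the models named in the diff.
SCHEMA = """
CREATE TABLE dataset (id INTEGER PRIMARY KEY, uri TEXT, is_orphaned INTEGER DEFAULT 0);
CREATE TABLE dag_schedule_dataset_reference (dataset_id INTEGER, dag_id TEXT);
CREATE TABLE task_outlet_dataset_reference (dataset_id INTEGER, dag_id TEXT, task_id TEXT);
"""

# The GROUP BY / HAVING part lives in a subquery; the UPDATE itself is ungrouped.
ORPHAN_SQL = """
UPDATE dataset
SET is_orphaned = 1
WHERE id IN (
    SELECT d.id
    FROM dataset AS d
    LEFT JOIN dag_schedule_dataset_reference AS s ON s.dataset_id = d.id
    LEFT JOIN task_outlet_dataset_reference AS t ON t.dataset_id = d.id
    GROUP BY d.id
    HAVING COUNT(s.dag_id) = 0 AND COUNT(t.dag_id) = 0
)
"""


def orphan_unreferenced(conn: sqlite3.Connection) -> int:
    """Flag datasets with no schedule or outlet references; return rows changed."""
    cursor = conn.execute(ORPHAN_SQL)
    conn.commit()
    return cursor.rowcount


def demo() -> list:
    """Build a tiny in-memory database and return the URIs that end up orphaned."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany(
        "INSERT INTO dataset (id, uri) VALUES (?, ?)",
        [(1, "s3://scheduled"), (2, "s3://outlet"), (3, "s3://unused")],
    )
    conn.execute("INSERT INTO dag_schedule_dataset_reference VALUES (1, 'consumer')")
    conn.execute("INSERT INTO task_outlet_dataset_reference VALUES (2, 'producer', 'write')")
    orphan_unreferenced(conn)
    rows = conn.execute("SELECT uri FROM dataset WHERE is_orphaned = 1 ORDER BY id").fetchall()
    return [uri for (uri,) in rows]
```

In this toy data only the third dataset has no reference in either table, so it is the only one flagged.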


[GitHub] [airflow] blag commented on a diff in pull request #27828: Soft delete datasets that are no longer referenced in DAG schedules or task outlets

blag commented on code in PR #27828:

URL: https://github.com/apache/airflow/pull/27828#discussion_r1032045393

##

airflow/jobs/scheduler_job.py:

##

@@ -1574,3 +1585,33 @@ def _cleanup_stale_dags(self, session: Session =

NEW_SESSION) -> None:

dag.is_active = False

SerializedDagModel.remove_dag(dag_id=dag.dag_id, session=session)

session.flush()

+

+@provide_session

+def _orphan_unreferenced_datasets(self, session: Session = NEW_SESSION) ->

None:

+"""

+Detects datasets that are no longer referenced in any DAG schedule

parameters or task outlets and

+sets the dataset is_orphaned flags to True

+"""

+orphaned_dataset_query = (

+session.query(DatasetModel)

+.join(

+DagScheduleDatasetReference,

+DagScheduleDatasetReference.dataset_id == DatasetModel.id,

+isouter=True,

+)

+.join(

+TaskOutletDatasetReference,

+TaskOutletDatasetReference.dataset_id == DatasetModel.id,

+isouter=True,

+)

+.group_by(DatasetModel.id)

+.having(

+and_(

+func.count(DagScheduleDatasetReference.dag_id) == 0,

+func.count(TaskOutletDatasetReference.dag_id) == 0,

+)

+)

+)

+for dataset in orphaned_dataset_query:

+self.log.info("Orphaning unreferenced dataset '%s'", dataset.uri)

+dataset.is_orphaned = True

Review Comment:

The group by expression might interfere but I'll try it, thanks!


[GitHub] [airflow] tanelk opened a new pull request, #27904: Order TIs by map_index

tanelk opened a new pull request, #27904:

URL: https://github.com/apache/airflow/pull/27904

Sort TIs by the `map_index` field when selecting them for queueing.

Currently TIs are only ordered by `priority_weight` and `execution_date`. This change does not fix any bug, but it makes the execution order easier to follow and "cleaner" in the UI.

Without this, every now and then the TIs get executed starting from the middle, probably because of database internals.
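The effect of the extra sort key can be sketched in plain Python. This is an illustration only: the `TI` dataclass and `queue_order` function are hypothetical stand-ins rather than the scheduler query, and the assumption that a higher `priority_weight` sorts first is stated here, not taken from the PR description.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class TI:
    """Hypothetical, minimal stand-in for a task instance row."""

    task_id: str
    priority_weight: int
    execution_date: datetime
    map_index: int


def queue_order(tis):
    """Order by priority (assumed highest first), then date, then map_index as tie-breaker."""
    return sorted(
        tis,
        key=lambda ti: (-ti.priority_weight, ti.execution_date, ti.map_index),
    )


# Three mapped instances that tie on priority and date, listed out of order.
same_run = datetime(2022, 11, 25)
shuffled = [
    TI("expand", 1, same_run, 2),
    TI("expand", 1, same_run, 0),
    TI("expand", 1, same_run, 1),
]
ordered_indexes = [ti.map_index for ti in queue_order(shuffled)]
```

Without the third key, rows that tie on the first two keys come back in whatever order the database happens to return them, which matches the "executed from the middle" observation.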


[GitHub] [airflow] ephraimbuddy commented on a diff in pull request #27828: Soft delete datasets that are no longer referenced in DAG schedules or task outlets

ephraimbuddy commented on code in PR #27828:

URL: https://github.com/apache/airflow/pull/27828#discussion_r1032034170

##

airflow/jobs/scheduler_job.py:

##

@@ -1574,3 +1585,33 @@ def _cleanup_stale_dags(self, session: Session =

NEW_SESSION) -> None:

dag.is_active = False

SerializedDagModel.remove_dag(dag_id=dag.dag_id, session=session)

session.flush()

+

+@provide_session

+def _orphan_unreferenced_datasets(self, session: Session = NEW_SESSION) ->

None:

+"""

+Detects datasets that are no longer referenced in any DAG schedule

parameters or task outlets and

+sets the dataset is_orphaned flags to True

+"""

+orphaned_dataset_query = (

+session.query(DatasetModel)

+.join(

+DagScheduleDatasetReference,

+DagScheduleDatasetReference.dataset_id == DatasetModel.id,

+isouter=True,

+)

+.join(

+TaskOutletDatasetReference,

+TaskOutletDatasetReference.dataset_id == DatasetModel.id,

+isouter=True,

+)

+.group_by(DatasetModel.id)

+.having(

+and_(

+func.count(DagScheduleDatasetReference.dag_id) == 0,

+func.count(TaskOutletDatasetReference.dag_id) == 0,

+)

+)

+)

+for dataset in orphaned_dataset_query:

+self.log.info("Orphaning unreferenced dataset '%s'", dataset.uri)

+dataset.is_orphaned = True

Review Comment:

```suggestion

).update({DatasetModel.is_orphaned:True},

synchronize_session='fetch')

)

```

If this works, I think it's faster.

##

airflow/migrations/versions/0122_2_5_0_add_is_orphaned_to_datasetmodel.py:

##

@@ -0,0 +1,49 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+

+"""Add is_orphaned to DatasetModel

+

+Revision ID: 290244fb8b83

+Revises: 65a852f26899

+Create Date: 2022-11-22 00:12:53.432961

+

+"""

+

+from __future__ import annotations

+

+import sqlalchemy as sa

+from alembic import op

+

+# revision identifiers, used by Alembic.

+revision = "290244fb8b83"

+down_revision = "65a852f26899"

+branch_labels = None

+depends_on = None

+airflow_version = "2.5.0"

+

+

+def upgrade():

+"""Add is_orphaned to DatasetModel"""

+with op.batch_alter_table("dataset") as batch_op:

Review Comment:

I think so, due to SQLite but I don't think we need the server_default since

it's `False`


[GitHub] [airflow] Taragolis commented on a diff in pull request #27901: Add information on how to run tests in Breeze via the PyCharm IDE

Taragolis commented on code in PR #27901:

URL: https://github.com/apache/airflow/pull/27901#discussion_r1032025382

##

TESTING.rst:

##

@@ -61,20 +61,51 @@ Running Unit Tests from PyCharm IDE
 To run unit tests from the PyCharm IDE, create the `local virtualenv `_, select it as the default project's environment, then configure your test runner:

-.. image:: images/configure_test_runner.png
+.. image:: images/pycharm/configure_test_runner.png
     :align: center
     :alt: Configuring test runner

 and run unit tests as follows:

-.. image:: images/running_unittests.png
+.. image:: images/pycharm/running_unittests.png
     :align: center
     :alt: Running unit tests

 **NOTE:** You can run the unit tests in the standalone local virtualenv (with no Breeze installed) if they do not have dependencies such as Postgres/MySQL/Hadoop/etc.

+Running Unit Tests from PyCharm IDE using Breeze
+
+
+Ideally, all unit tests should be run using the standardized Breeze environment. While not
+as convenient as the one-click "play button" in PyCharm, the IDE can be configured to do
+this in two clicks.
+
+1. Add Breeze as an "External Tool"
+   a. File > Settings > Tools > External Tools
+   b. Click the little plus symbol to open the "Create Tool" popup and fill it out:

Review Comment:

Some macOS-specific stuff. On macOS (and only there), the user should navigate to `PyCharm -> Preferences` instead of `File > Settings`.

[GitHub] [airflow] jedcunningham commented on a diff in pull request #27828: Soft delete datasets that are no longer referenced in DAG schedules or task outlets

jedcunningham commented on code in PR #27828:

URL: https://github.com/apache/airflow/pull/27828#discussion_r1031991662

##

airflow/jobs/scheduler_job.py:

##

@@ -1574,3 +1585,33 @@ def _cleanup_stale_dags(self, session: Session =

NEW_SESSION) -> None:

dag.is_active = False

SerializedDagModel.remove_dag(dag_id=dag.dag_id, session=session)

session.flush()

+

+@provide_session

+def _orphan_unreferenced_datasets(self, session: Session = NEW_SESSION) ->

None:

+"""

+Detects datasets that are no longer referenced in any DAG schedule

parameters or task outlets and

+sets the dataset is_orphaned flags to True

+"""

+orphaned_dataset_query = (

+session.query(DatasetModel)

+.join(

+DagScheduleDatasetReference,

+DagScheduleDatasetReference.dataset_id == DatasetModel.id,

+isouter=True,

+)

+.join(

+TaskOutletDatasetReference,

+TaskOutletDatasetReference.dataset_id == DatasetModel.id,

+isouter=True,

+)

+.group_by(DatasetModel.id)

+.having(

+and_(

+func.count(DagScheduleDatasetReference.dag_id) == 0,

+func.count(TaskOutletDatasetReference.dag_id) == 0,

+)

+)

+)

+for dataset in orphaned_dataset_query.all():

Review Comment:

```suggestion

for dataset in orphaned_dataset_query:

```

##

airflow/www/views.py:

##

@@ -3648,7 +3648,7 @@ def datasets_summary(self):

if has_event_filters:

count_query = count_query.join(DatasetEvent,

DatasetEvent.dataset_id == DatasetModel.id)

-filters = []

+filters = [DatasetModel.is_orphaned.is_(False)]

Review Comment:

```suggestion

filters = [~DatasetModel.is_orphaned]

```

##

airflow/jobs/scheduler_job.py:

##

@@ -1574,3 +1585,33 @@ def _cleanup_stale_dags(self, session: Session =

NEW_SESSION) -> None:

dag.is_active = False

SerializedDagModel.remove_dag(dag_id=dag.dag_id, session=session)

session.flush()

+

+@provide_session

+def _orphan_unreferenced_datasets(self, session: Session = NEW_SESSION) ->

None:

+"""

+Detects datasets that are no longer referenced in any DAG schedule

parameters or task outlets and

+sets the dataset is_orphaned flags to True

+"""

+orphaned_dataset_query = (

+session.query(DatasetModel)

+.join(

+DagScheduleDatasetReference,

+DagScheduleDatasetReference.dataset_id == DatasetModel.id,

+isouter=True,

+)

+.join(

+TaskOutletDatasetReference,

+TaskOutletDatasetReference.dataset_id == DatasetModel.id,

+isouter=True,

+)

+.group_by(DatasetModel.id)

+.having(

+and_(

+func.count(DagScheduleDatasetReference.dag_id) == 0,

+func.count(TaskOutletDatasetReference.dag_id) == 0,

+)

+)

+)

+for dataset in orphaned_dataset_query.all():

+self.log.info("Orphaning dataset '%s'", dataset.uri)

Review Comment:

```suggestion

self.log.info("Orphaning unreferenced dataset '%s'", dataset.uri)

```

##

airflow/dag_processing/manager.py:

##

@@ -433,8 +433,10 @@ def __init__(

self.last_stat_print_time = 0

# Last time we cleaned up DAGs which are no longer in files

self.last_deactivate_stale_dags_time =

timezone.make_aware(datetime.fromtimestamp(0))

-# How often to check for DAGs which are no longer in files

-self.deactivate_stale_dags_interval = conf.getint("scheduler",

"deactivate_stale_dags_interval")

+# How often to clean up:

+# * DAGs which are no longer in files

+# * datasets that are no longer referenced by any DAG schedule

parameters or task outlets

Review Comment:

```suggestion

# How often to check for DAGs which are no longer in files

```

##

airflow/models/dag.py:

##

@@ -2828,6 +2828,7 @@ def bulk_write_to_db(

for dataset in all_datasets:

stored_dataset =

session.query(DatasetModel).filter(DatasetModel.uri == dataset.uri).first()

if stored_dataset:

+stored_dataset.is_orphaned = False

Review Comment:

Test this situation.

##

airflow/migrations/versions/0122_2_5_0_add_is_orphaned_to_datasetmodel.py:

##

@@ -0,0 +1,49 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regard

[GitHub] [airflow] Ken-poc commented on issue #27903: dag.timezone can not have start_date.tzinfo

Ken-poc commented on issue #27903:

URL: https://github.com/apache/airflow/issues/27903#issuecomment-1326986647

This is not a bug. Could you remove the label?

[GitHub] [airflow] Ken-poc commented on issue #27903: dag.timezone can not have start_date.tzinfo

Ken-poc commented on issue #27903:

URL: https://github.com/apache/airflow/issues/27903#issuecomment-1326984633

Yes, I know that works.

    if start_date and start_date.tzinfo:
        tzinfo = None if start_date.tzinfo else settings.TIMEZONE
        tz = pendulum.instance(start_date, tz=tzinfo).timezone

Even though `start_date` has its own `tzinfo`, the local `tzinfo` is always assigned _None_, and `tz` is ultimately derived from `start_date`, not from `tzinfo`. This makes the code confusing even though it actually works.
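The observation about the conditional can be checked in isolation with the standard library. In this sketch `DEFAULT_TZ` is a stand-in for `settings.TIMEZONE` and `tz_argument` is a hypothetical helper that mirrors only the quoted branch; it is not Airflow code and does not involve pendulum.

```python
from __future__ import annotations

from datetime import datetime, timedelta, timezone, tzinfo

DEFAULT_TZ = timezone.utc  # stand-in for settings.TIMEZONE


def tz_argument(start_date: datetime | None) -> tzinfo | None:
    """Return the value the quoted branch would bind to its local `tzinfo`."""
    if start_date and start_date.tzinfo:
        # start_date.tzinfo is already known to be truthy here, so the
        # `else DEFAULT_TZ` arm of the conditional expression can never run.
        return None if start_date.tzinfo else DEFAULT_TZ
    raise ValueError("branch not taken: start_date is missing or naive")


# An aware datetime enters the branch and always yields None.
aware = datetime(2022, 11, 25, tzinfo=timezone(timedelta(hours=8)))
result_for_aware = tz_argument(aware)
```

Since the inner condition repeats the outer one, the expression reduces to `None` inside the branch, which is the redundancy being pointed out.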

[GitHub] [airflow] zsdyx commented on issue #13668: scheduler dies with "MySQLdb._exceptions.OperationalError: (1213, 'Deadlock found when trying to get lock; try restarting transaction')"

zsdyx commented on issue #13668:

URL: https://github.com/apache/airflow/issues/13668#issuecomment-1326980476

A MySQL deadlock occurs when I use 2.4.1. Has this problem never been solved?

[GitHub] [airflow] NickYadance closed pull request #27344: Add retry to submit_event in trigger to avoid deadlock

NickYadance closed pull request #27344: Add retry to submit_event in trigger to avoid deadlock

URL: https://github.com/apache/airflow/pull/27344

[GitHub] [airflow] uranusjr commented on issue #27026: Parameterise key sorting in "Rendered Template" view

uranusjr commented on issue #27026:

URL: https://github.com/apache/airflow/issues/27026#issuecomment-1326964845

The problem is that `template_fields` does not contain all operator fields, and you’d have no way to sort those non-templated fields (especially if some of them can’t be templated to begin with).

[GitHub] [airflow] Ken-poc commented on issue #27903: dag.timezone can not have start_date.tzinfo

Ken-poc commented on issue #27903:

URL: https://github.com/apache/airflow/issues/27903#issuecomment-1326961733

Yes, that's right. I pointed out that `tzinfo` is always assigned None. I think this is unnecessary.

https://github.com/apache/airflow/blob/3e288abd0bc3e5788dcd7f6d9f6bef26ec4c7281/airflow/models/dag.py#L465

[GitHub] [airflow] vksunilk commented on issue #27894: Status of testing Providers that were prepared on November 24, 2022

vksunilk commented on issue #27894:

URL: https://github.com/apache/airflow/issues/27894#issuecomment-1326959302

#26986 works as expected. I am able to view the DataprocLink irrespective of job status.

[GitHub] [airflow] uranusjr commented on issue #27903: dag.timezone can not have start_date.tzinfo

uranusjr commented on issue #27903:

URL: https://github.com/apache/airflow/issues/27903#issuecomment-1326956524

Not sure what you mean. The attribute is set, from what I can tell.

```pycon

>>> from airflow.models.dag import DAG

>>> import pendulum

>>> d = pendulum.now()

>>> d.tzinfo

Timezone('Etc/UTC')

>>> dag = DAG(dag_id="xxx", start_date=d)

>>> dag.timezone

Timezone('Etc/UTC')

```


[GitHub] [airflow] boring-cyborg[bot] commented on issue #27903: dag.timezone can not have start_date.tzinfo

boring-cyborg[bot] commented on issue #27903:

URL: https://github.com/apache/airflow/issues/27903#issuecomment-1326954259

Thanks for opening your first issue here! Be sure to follow the issue template!

[GitHub] [airflow] Ken-poc opened a new issue, #27903: dag.timezone can not have start_date.tzinfo

Ken-poc opened a new issue, #27903:

URL: https://github.com/apache/airflow/issues/27903

### Apache Airflow version

main (development)

### What happened

I found some odd code where `dag.timezone` is assigned in the DAG model. The timezone of the DAG is always assigned None, even though `start_date` has a `tzinfo`. Is this intended?

### What you think should happen instead

The DAG should have a timezone if the `start_date` passed in has `tzinfo`.

### How to reproduce

The DAG can't get its timezone from `start_date`.

### Operating System

macOS Monterey

### Versions of Apache Airflow Providers

apache-airflow-providers-amazon==6.0.0
apache-airflow-providers-cncf-kubernetes==4.4.0
apache-airflow-providers-common-sql==1.2.0
apache-airflow-providers-ftp==3.1.0
apache-airflow-providers-http==4.0.0
apache-airflow-providers-imap==3.0.0
apache-airflow-providers-sqlite==3.2.1

### Deployment

Virtualenv installation

### Deployment details

None

### Anything else

None

### Are you willing to submit PR?

- [X] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://github.com/apache/airflow/blob/main/CODE_OF_CONDUCT.md)

[GitHub] [airflow] uranusjr commented on pull request #27740: Remove XCom API endpoint full deserialization option

uranusjr commented on PR #27740:

URL: https://github.com/apache/airflow/pull/27740#issuecomment-1326951283

Sounds to me the most reasonable approach here would be to add a config to allow this feature.

[airflow] branch main updated: Remove is_mapped attribute (#27881)

This is an automated email from the ASF dual-hosted git repository.

uranusjr pushed a commit to branch main

in repository https://gitbox.apache.org/repos/asf/airflow.git

The following commit(s) were added to refs/heads/main by this push:

new 3e288abd0b Remove is_mapped attribute (#27881)

3e288abd0b is described below

commit 3e288abd0bc3e5788dcd7f6d9f6bef26ec4c7281

Author: Tzu-ping Chung

AuthorDate: Fri Nov 25 09:21:01 2022 +0800

Remove is_mapped attribute (#27881)

---

 .../endpoints/task_instance_endpoint.py      |  3 +-
 airflow/api_connexion/schemas/task_schema.py | 17 ++--
 airflow/cli/commands/task_command.py         |  3 +-
 airflow/models/baseoperator.py               |  2 -
 airflow/models/mappedoperator.py             |  2 -
 airflow/models/operator.py                   | 23 -
 airflow/models/taskinstance.py               |  5 +-
 airflow/models/xcom_arg.py                   |  3 +-
 airflow/ti_deps/deps/ready_to_reschedule.py  |  4 +-
 airflow/ti_deps/deps/trigger_rule_dep.py     |  3 +-
 airflow/www/views.py                         |  7 +-
 tests/decorators/test_python.py              |  7 +-
 tests/models/test_taskinstance.py            | 99 +-
 13 files changed, 151 insertions(+), 27 deletions(-)

diff --git a/airflow/api_connexion/endpoints/task_instance_endpoint.py

b/airflow/api_connexion/endpoints/task_instance_endpoint.py

index 4e9d6cb9a1..9d5d54ba58 100644

--- a/airflow/api_connexion/endpoints/task_instance_endpoint.py

+++ b/airflow/api_connexion/endpoints/task_instance_endpoint.py

@@ -45,6 +45,7 @@ from airflow.api_connexion.schemas.task_instance_schema

import (

from airflow.api_connexion.types import APIResponse

from airflow.models import SlaMiss

from airflow.models.dagrun import DagRun as DR

+from airflow.models.operator import needs_expansion

from airflow.models.taskinstance import TaskInstance as TI,

clear_task_instances

from airflow.security import permissions

from airflow.utils.airflow_flask_app import get_airflow_app

@@ -202,7 +203,7 @@ def get_mapped_task_instances(

if not task:

error_message = f"Task id {task_id} not found"

raise NotFound(error_message)

-if not task.is_mapped:

+if not needs_expansion(task):

error_message = f"Task id {task_id} is not mapped"

raise NotFound(error_message)

diff --git a/airflow/api_connexion/schemas/task_schema.py

b/airflow/api_connexion/schemas/task_schema.py

index 0fcb9ff18f..5715ca2ea0 100644

--- a/airflow/api_connexion/schemas/task_schema.py

+++ b/airflow/api_connexion/schemas/task_schema.py

@@ -27,6 +27,7 @@ from airflow.api_connexion.schemas.common_schema import (

WeightRuleField,

)

from airflow.api_connexion.schemas.dag_schema import DAGSchema

+from airflow.models.mappedoperator import MappedOperator

from airflow.models.operator import Operator

@@ -59,22 +60,28 @@ class TaskSchema(Schema):

template_fields = fields.List(fields.String(), dump_only=True)

sub_dag = fields.Nested(DAGSchema, dump_only=True)

downstream_task_ids = fields.List(fields.String(), dump_only=True)

-params = fields.Method("get_params", dump_only=True)

-is_mapped = fields.Boolean(dump_only=True)

+params = fields.Method("_get_params", dump_only=True)

+is_mapped = fields.Method("_get_is_mapped", dump_only=True)

-def _get_class_reference(self, obj):

+@staticmethod

+def _get_class_reference(obj):

result = ClassReferenceSchema().dump(obj)

return result.data if hasattr(result, "data") else result

-def _get_operator_name(self, obj):

+@staticmethod

+def _get_operator_name(obj):

return obj.operator_name

@staticmethod

-def get_params(obj):

+def _get_params(obj):

"""Get the Params defined in a Task."""

params = obj.params

return {k: v.dump() for k, v in params.items()}

+@staticmethod

+def _get_is_mapped(obj):

+return isinstance(obj, MappedOperator)

+

class TaskCollection(NamedTuple):

"""List of Tasks with metadata."""

diff --git a/airflow/cli/commands/task_command.py b/airflow/cli/commands/task_command.py

index a217d2c78d..078565dc38 100644

--- a/airflow/cli/commands/task_command.py

+++ b/airflow/cli/commands/task_command.py

@@ -42,6 +42,7 @@ from airflow.models import DagPickle, TaskInstance

from airflow.models.baseoperator import BaseOperator

from airflow.models.dag import DAG

from airflow.models.dagrun import DagRun

+from airflow.models.operator import needs_expansion

from airflow.ti_deps.dep_context import DepContext

from airflow.ti_deps.dependencies_deps import SCHEDULER_QUEUED_DEPS

from airflow.typing_compat import Literal

@@ -150,7 +151,7 @@ def _get_ti(

"""Get the task instance through DagRun.run_id, if that fails, get the TI the old way."""

if not exec_date_or_run_id and not create_if_necessary:

[GitHub] [airflow] uranusjr merged pull request #27881: Remove is_mapped attribute

uranusjr merged PR #27881: URL: https://github.com/apache/airflow/pull/27881 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] uranusjr closed issue #27879: Getting error in scheduler logs "Task killed externally" when running a dag with task group mapping

uranusjr closed issue #27879: Getting error in scheduler logs "Task killed externally" when running a dag with task group mapping URL: https://github.com/apache/airflow/issues/27879 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] uranusjr commented on a diff in pull request #27778: Lambda hook: make runtime and handler optional

uranusjr commented on code in PR #27778:

URL: https://github.com/apache/airflow/pull/27778#discussion_r1031930667

##

airflow/providers/amazon/aws/hooks/lambda_function.py:

##

@@ -93,6 +93,12 @@ def create_lambda(

code_signing_config_arn: str | None = None,

architectures: list[str] | None = None,

) -> dict:

+if package_type == "Zip":

+if handler is None:

+raise ValueError("Parameter 'handler' is required if 'package_type' is 'Zip'")

+if runtime is None:

+raise ValueError("Parameter 'runtime' is required if 'package_type' is 'Zip'")

Review Comment:

These should be TypeError to mirror Python’s default behaviour.

```pycon

>>> def f(*, a): pass

...

>>> f()

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

TypeError: f() missing 1 required keyword-only argument: 'a'

```
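
Applied to the hunk above, the suggestion could look roughly like the following. This is a minimal sketch, not the hook's actual code: `create_lambda_sketch` and its reduced parameter list are hypothetical stand-ins for `create_lambda`, and the returned dict only echoes the inputs.

```python
from typing import Optional


def create_lambda_sketch(
    *,
    function_name: str,
    package_type: str = "Zip",
    handler: Optional[str] = None,
    runtime: Optional[str] = None,
) -> dict:
    """Hypothetical, reduced stand-in for the hook method under review."""
    if package_type == "Zip":
        # TypeError is what Python itself raises for a missing required argument.
        if handler is None:
            raise TypeError("Parameter 'handler' is required if 'package_type' is 'Zip'")
        if runtime is None:
            raise TypeError("Parameter 'runtime' is required if 'package_type' is 'Zip'")
    # A real hook would call the AWS API here; this sketch only echoes its inputs.
    return {
        "FunctionName": function_name,
        "PackageType": package_type,
        "Handler": handler,
        "Runtime": runtime,
    }
```

Callers that already catch `TypeError` for missing arguments would then handle both the built-in and the conditional case the same way.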

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] uranusjr commented on a diff in pull request #27844: Detect alternative container runtime automatically

uranusjr commented on code in PR #27844: URL: https://github.com/apache/airflow/pull/27844#discussion_r1031929935 ## CONTRIBUTORS_QUICK_START.rst: ## @@ -50,7 +50,7 @@ Local machine development If you do not work with remote development environment, you need those prerequisites. -1. Docker Community Edition (you can also use Colima, see instructions below) +1. Container runtime: Docker Community Edition (recommended), Colima. Review Comment: FWIW, last time I tried using containerd with breeze, Docker CLI is not main problem (Podman is actually close enough you can just change a few constants to make things work), but docker-compose. But that’s off-topic; the main point here is the terminology here needs to be fixed to not introduce confusion unnecessarily. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] uranusjr commented on issue #27859: DYNAMICALLY CREATING TASKS issue : "_TaskDecorator' object has no attribute 'update_relative': "

uranusjr commented on issue #27859: URL: https://github.com/apache/airflow/issues/27859#issuecomment-1326922559 I suspect a call is missed somewhere in how you instantiate tasks. Note that a `@task` function needs to be _called_ (either with `f.expand()`, `f.expand_kwargs()`, or just `f()` like a function) to become a concrete task. We can probably check for this user error and emit a better message, but we need a reproduction first to identify the issue. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] uranusjr closed issue #27862: airflow failure and success callbacks read task instance state as 'running'

uranusjr closed issue #27862: airflow failure and success callbacks read task instance state as 'running' URL: https://github.com/apache/airflow/issues/27862 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] uranusjr commented on issue #27862: airflow failure and success callbacks read task instance state as 'running'

uranusjr commented on issue #27862: URL: https://github.com/apache/airflow/issues/27862#issuecomment-1326920978 Duplicate of #26760. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] uranusjr commented on a diff in pull request #27887: Add allow list for imports during deserialization

uranusjr commented on code in PR #27887:

URL: https://github.com/apache/airflow/pull/27887#discussion_r1031925774

##

airflow/utils/json.py:

##

@@ -189,7 +189,7 @@ def __init__(self, *args, **kwargs) -> None:

if not kwargs.get("object_hook"):

kwargs["object_hook"] = self.object_hook

-patterns = conf.getjson("core", "allowed_deserialization_classes")

+patterns = cast(list, conf.getjson("core", "allowed_deserialization_classes"))

Review Comment:

This would result in a confusing error if the value is not set to a list.

It’s probably better to explicitly check the value is a list instead (and raise

a clear message explaining the config value is the exact source of failure).
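
An explicit check along those lines might look like the sketch below. It is standalone and uses only the standard library: `load_allowed_patterns` is a hypothetical helper that takes the raw config string rather than calling `conf.getjson`, and the choice of `ValueError` and the message wording are assumptions.

```python
import json


def load_allowed_patterns(raw_value: str) -> list:
    """Parse a JSON config value and insist that it is a list (hypothetical helper)."""
    patterns = json.loads(raw_value)
    if not isinstance(patterns, list):
        # Name the config option so the user knows exactly what to fix.
        raise ValueError(
            "The [core] allowed_deserialization_classes config value must be a JSON list, "
            f"got {type(patterns).__name__}: {raw_value!r}"
        )
    return patterns
```

Unlike `cast(list, ...)`, which only informs the type checker, this fails at load time with a message that points at the config option.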

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

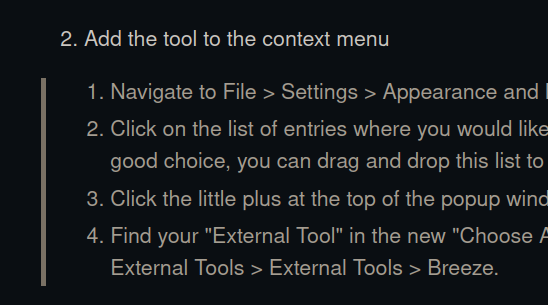

[GitHub] [airflow] ferruzzi commented on a diff in pull request #27901: Add information on how to run tests in Breeze via the PyCharm IDE

ferruzzi commented on code in PR #27901: URL: https://github.com/apache/airflow/pull/27901#discussion_r1031925157 ## TESTING.rst: ## @@ -61,20 +61,51 @@ Running Unit Tests from PyCharm IDE To run unit tests from the PyCharm IDE, create the `local virtualenv `_, select it as the default project's environment, then configure your test runner: -.. image:: images/configure_test_runner.png +.. image:: images/pycharm/configure_test_runner.png :align: center :alt: Configuring test runner and run unit tests as follows: -.. image:: images/running_unittests.png +.. image:: images/pycharm/running_unittests.png :align: center :alt: Running unit tests **NOTE:** You can run the unit tests in the standalone local virtualenv (with no Breeze installed) if they do not have dependencies such as Postgres/MySQL/Hadoop/etc. +Running Unit Tests from PyCharm IDE using Breeze + + +Ideally, all unit tests should be run using the standardized Breeze environment. While not +as convenient as the one-click "play button" in PyCharm, the IDE can be configured to do +this in two clicks. + +1. Add Breeze as an "External Tool" + a. File > Settings > Tools > External Tools + b. Click the little plus symbol to open the "Create Tool" popup and fill it out: + +.. image:: images/pycharm/pycharm_create_tool.png +:align: center +:alt: Installing Python extension + +2. Add the tool to the context menu + a. File > Settings > Appearance and Behavior > Menus and Toolbars > Project View Popup Menu + b. Click on the list of entries where you would like it to be added. Right above or below + "Project View Popup Menu Run Group" may be a good choice, you can drag and drop this list + to rearrange the placement later. + c. Click the little plus at the top of the popup window + d. Find your "External Tool" in the new "Choose Actions to Add" popup and click OK. If you + followed the image above, it will be at External Tools > External Tools > Breeze Review Comment: Committed the phrasing change, thanks. 
For the bullet styles, this is how it renders, despite the letter-bullets in the raw code.  -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] uranusjr commented on a diff in pull request #27834: Make sure we can get out of a faulty scheduler state

uranusjr commented on code in PR #27834: URL: https://github.com/apache/airflow/pull/27834#discussion_r1031924843 ## airflow/models/dagrun.py: ## @@ -780,8 +780,7 @@ def _expand_mapped_task_if_needed(ti: TI) -> Iterable[TI] | None: except NotMapped: # Not a mapped task, nothing needed. return None if expanded_tis: -assert expanded_tis[0] is ti -return expanded_tis[1:] +return expanded_tis Review Comment: Since this function only returns _new_ ti objects, should we do something like this?

```python
if expanded_tis[0] is ti:
    return expanded_tis[1:]
return expanded_tis
```

-- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] uranusjr commented on a diff in pull request #27834: Make sure we can get out of a faulty scheduler state

uranusjr commented on code in PR #27834:

URL: https://github.com/apache/airflow/pull/27834#discussion_r1031924565

##

airflow/models/abstractoperator.py:

##

@@ -494,18 +495,30 @@ def expand_mapped_task(self, run_id: str, *, session: Session) -> tuple[Sequence

total_length,

)

unmapped_ti.state = TaskInstanceState.SKIPPED

-indexes_to_map = ()

else:

-# Otherwise convert this into the first mapped index, and create

-# TaskInstance for other indexes.

-unmapped_ti.map_index = 0

-self.log.debug("Updated in place to become %s", unmapped_ti)

-all_expanded_tis.append(unmapped_ti)

-indexes_to_map = range(1, total_length)

-state = unmapped_ti.state

-elif not total_length:

+zero_index_ti_exists = session.query(

+exists().where(

+TaskInstance.dag_id == self.dag_id,

+TaskInstance.task_id == self.task_id,

+TaskInstance.run_id == run_id,

+TaskInstance.map_index == 0,

+)

+).scalar()

Review Comment:

IIRC `EXISTS` has some compatibility issues across databases (don’t remember

what exactly), so we generally use `query(count())...scalar() > 0` instead.
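
The count-based existence check can be illustrated without SQLAlchemy. The sketch below is an assumption-laden stand-in: it uses the standard-library `sqlite3` module with raw SQL, and the `task_instance` table is a simplified, hypothetical schema rather than Airflow's real one.

```python
import sqlite3


def zero_index_ti_exists(conn, dag_id, task_id, run_id) -> bool:
    """Return True if a row with map_index 0 exists, using COUNT instead of EXISTS."""
    (count,) = conn.execute(
        "SELECT COUNT(*) FROM task_instance "
        "WHERE dag_id = ? AND task_id = ? AND run_id = ? AND map_index = 0",
        (dag_id, task_id, run_id),
    ).fetchone()
    return count > 0


# Simplified, hypothetical table used only for this illustration.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE task_instance (dag_id TEXT, task_id TEXT, run_id TEXT, map_index INTEGER)"
)
conn.execute("INSERT INTO task_instance VALUES ('d', 't', 'r1', 0)")
```

`COUNT(*)` always yields exactly one integer row, so comparing it with zero gives a plain boolean on any backend.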

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] xlanor commented on a diff in pull request #27898: fix: current_state method on TaskInstance doesn't filter by map_index

xlanor commented on code in PR #27898: URL: https://github.com/apache/airflow/pull/27898#discussion_r1031921282 ## airflow/models/taskinstance.py: ## @@ -719,6 +719,7 @@ def current_state(self, session: Session = NEW_SESSION) -> str: .filter( TaskInstance.dag_id == self.dag_id, TaskInstance.task_id == self.task_id, +TaskInstance.map_index == self.map_index, Review Comment: Thanks, will work on this PR tomorrow and hopefully get it ready for review shortly -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] uranusjr commented on a diff in pull request #27898: fix: current_state method on TaskInstance doesn't filter by map_index

uranusjr commented on code in PR #27898: URL: https://github.com/apache/airflow/pull/27898#discussion_r1031920687 ## airflow/models/taskinstance.py: ## @@ -719,6 +719,7 @@ def current_state(self, session: Session = NEW_SESSION) -> str: .filter( TaskInstance.dag_id == self.dag_id, TaskInstance.task_id == self.task_id, +TaskInstance.map_index == self.map_index, Review Comment: Honestly, `current_state` is used almost nowhere in the code base (the only use is for `airflow tasks state`, and I’d argue even that usage is unnecessary), so the test coverage is mostly non-existent. You can probably add a test for the `airflow tasks` CLI command (in `tests/cli/commands/test_task_command.py`) to cover this. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] xlanor commented on a diff in pull request #27898: fix: current_state method on TaskInstance doesn't filter by map_index

xlanor commented on code in PR #27898: URL: https://github.com/apache/airflow/pull/27898#discussion_r1031919560 ## airflow/models/taskinstance.py: ## @@ -719,6 +719,7 @@ def current_state(self, session: Session = NEW_SESSION) -> str: .filter( TaskInstance.dag_id == self.dag_id, TaskInstance.task_id == self.task_id, +TaskInstance.map_index == self.map_index, Review Comment: Thanks! I've looked at the tests in tests/model.py and I don't see any examples of a test of a mapped task there. Is there any test that you would suggest so that a regression does not occur in the future? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] uranusjr commented on a diff in pull request #27898: fix: current_state method on TaskInstance doesn't filter by map_index

uranusjr commented on code in PR #27898: URL: https://github.com/apache/airflow/pull/27898#discussion_r1031919036 ## airflow/models/taskinstance.py: ## @@ -719,6 +719,7 @@ def current_state(self, session: Session = NEW_SESSION) -> str: .filter( TaskInstance.dag_id == self.dag_id, TaskInstance.task_id == self.task_id, +TaskInstance.map_index == self.map_index, Review Comment: It’s probably a good chance to rewrite this to something like

```python
from sqlalchemy.inspection import inspect

# Filter on every primary key column; the generator is unpacked because
# filter() takes each criterion as a separate positional argument.
session.query(TaskInstance.state).filter(
    *(col == getattr(self, col.name) for col in inspect(TaskInstance).primary_key)
).scalar()
```

This would be resilient to any primary key changes in the future. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] pulquero opened a new issue, #27902: HdfsSensor has no clear failure mode

pulquero opened a new issue, #27902: URL: https://github.com/apache/airflow/issues/27902 ### Description Currently, HdfsSensor pings forever if some failure causes the file not to be written. Some sort of timeout parameter would be nice. ### Use case/motivation If there is a failure earlier in the pipeline that prevents the file of interest being written, HdfsSensor just pings forever, and everything looks fine. I would like some sort of way to have HdfsSensor fail, so that my team can detect issues promptly and address them. ### Related issues _No response_ ### Are you willing to submit a PR? - [ ] Yes I am willing to submit a PR! ### Code of Conduct - [X] I agree to follow this project's [Code of Conduct](https://github.com/apache/airflow/blob/main/CODE_OF_CONDUCT.md) -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] uranusjr commented on a diff in pull request #27901: Add information on how to run tests in Breeze via the PyCharm IDE

uranusjr commented on code in PR #27901: URL: https://github.com/apache/airflow/pull/27901#discussion_r1031914073 ## TESTING.rst: ## @@ -61,20 +61,51 @@ Running Unit Tests from PyCharm IDE To run unit tests from the PyCharm IDE, create the `local virtualenv `_, select it as the default project's environment, then configure your test runner: -.. image:: images/configure_test_runner.png +.. image:: images/pycharm/configure_test_runner.png :align: center :alt: Configuring test runner and run unit tests as follows: -.. image:: images/running_unittests.png +.. image:: images/pycharm/running_unittests.png :align: center :alt: Running unit tests **NOTE:** You can run the unit tests in the standalone local virtualenv (with no Breeze installed) if they do not have dependencies such as Postgres/MySQL/Hadoop/etc. +Running Unit Tests from PyCharm IDE using Breeze + + +Ideally, all unit tests should be run using the standardized Breeze environment. While not +as convenient as the one-click "play button" in PyCharm, the IDE can be configured to do +this in two clicks. + +1. Add Breeze as an "External Tool" + a. File > Settings > Tools > External Tools + b. Click the little plus symbol to open the "Create Tool" popup and fill it out: + +.. image:: images/pycharm/pycharm_create_tool.png +:align: center +:alt: Installing Python extension + +2. Add the tool to the context menu + a. File > Settings > Appearance and Behavior > Menus and Toolbars > Project View Popup Menu + b. Click on the list of entries where you would like it to be added. Right above or below + "Project View Popup Menu Run Group" may be a good choice, you can drag and drop this list + to rearrange the placement later. + c. Click the little plus at the top of the popup window + d. Find your "External Tool" in the new "Choose Actions to Add" popup and click OK. If you + followed the image above, it will be at External Tools > External Tools > Breeze Review Comment: ```suggestion a. 
Navigate to File > Settings > Appearance and Behavior > Menus and Toolbars > Project View Popup Menu. b. Click on the list of entries where you would like it to be added. Right above or below "Project View Popup Menu Run Group" may be a good choice, you can drag and drop this list to rearrange the placement later. c. Click the little plus at the top of the popup window. d. Find your "External Tool" in the new "Choose Actions to Add" popup and click OK. If you followed the image above, it will be at External Tools > External Tools > Breeze. ``` Maybe unify the style of bullet items? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[airflow] branch main updated (eba04d7c40 -> bad875b58d)

This is an automated email from the ASF dual-hosted git repository. ephraimanierobi pushed a change to branch main in repository https://gitbox.apache.org/repos/asf/airflow.git from eba04d7c40 tests: always cleanup registered test listeners (#27896) add bad875b58d Only get changelog for core commits (#27900) No new revisions were added by this update. Summary of changes: dev/airflow-github | 5 - 1 file changed, 4 insertions(+), 1 deletion(-)

[GitHub] [airflow] ephraimbuddy merged pull request #27900: Only get changelog for core commits

ephraimbuddy merged PR #27900: URL: https://github.com/apache/airflow/pull/27900 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] pierrejeambrun commented on pull request #27805: Automatically save and allow restore of recent DAG run configs

pierrejeambrun commented on PR #27805: URL: https://github.com/apache/airflow/pull/27805#issuecomment-1326861132 @aaronabraham311 There are a lot of examples of querying resources from the db in the views.py file. In this case DagRun should have what you need. In the example you mentioned above (json config displayed in the dagrun details), it's coming from the grid_data view of that file :) -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] ferruzzi opened a new pull request, #27901: Add information on how to run tests in Breeze via the PyCharm IDE

ferruzzi opened a new pull request, #27901: URL: https://github.com/apache/airflow/pull/27901 How to add a context menu entry in PyCharm to run selected unit tests in the Breeze environment instead of in your working venv. Also moved the two existing PyCharm-specific images into a new subdirectory for organizational reasons. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] ferruzzi commented on a diff in pull request #27823: Amazon Provider Package user agent

ferruzzi commented on code in PR #27823: URL: https://github.com/apache/airflow/pull/27823#discussion_r1031869533 ## airflow/providers/amazon/aws/hooks/base_aws.py: ## @@ -42,11 +46,13 @@ from dateutil.tz import tzlocal from slugify import slugify +from airflow import __version__ as airflow_version Review Comment: I think I addressed this in https://github.com/apache/airflow/pull/27823/commits/a5fc3bc4a39855b9f3c7fc5ac26505709d191a98 by moving it to a local import in the helper method. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] ferruzzi commented on a diff in pull request #27823: Amazon Provider Package user agent

ferruzzi commented on code in PR #27823: URL: https://github.com/apache/airflow/pull/27823#discussion_r1031868722 ## airflow/providers/amazon/aws/hooks/base_aws.py: ## @@ -405,9 +411,68 @@ def __init__( self.resource_type = resource_type self._region_name = region_name -self._config = config +self._config = config or botocore.config.Config() self._verify = verify +@classmethod +def _get_provider_version(cls) -> str: +"""Checks the Providers Manager for the package version.""" +manager = ProvidersManager() +provider_name = manager.hooks[cls.conn_type].package_name # type: ignore[union-attr] Review Comment: Sorry for the delay, just got back from vacation and it took a little longer to get back into gear. I ended up wrapping it in a try/except as mentioned and dropped the `if not hook`. If `hook` is falsy, then it'll error out on the next line at `hook.package_name` anyway and get caught by the `except`. Addressed in https://github.com/apache/airflow/pull/27823/commits/a5fc3bc4a39855b9f3c7fc5ac26505709d191a98 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] vandonr-amz opened a new pull request, #27899: fix sagemaker system test to run on Apple Silicon

vandonr-amz opened a new pull request, #27899: URL: https://github.com/apache/airflow/pull/27899 this test was failing when launched from an M1 mac because the docker image was built for the local CPU type (arm64) and then uploaded to an amd64 linux, which didn't work. `--platform` is a flag for buildx, but breeze has it replacing the default `docker build`, so this works alright. tested on an M1 macbook pro and on an EC2 ubuntu instance. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[airflow] branch v2-5-test updated (523df868dd -> 9bae336e69)

This is an automated email from the ASF dual-hosted git repository. ephraimanierobi pushed a change to branch v2-5-test in repository https://gitbox.apache.org/repos/asf/airflow.git omit 523df868dd Add release notes add 9bae336e69 Add release notes This update added new revisions after undoing existing revisions. That is to say, some revisions that were in the old version of the branch are not in the new version. This situation occurs when a user --force pushes a change and generates a repository containing something like this: * -- * -- B -- O -- O -- O (523df868dd) \ N -- N -- N refs/heads/v2-5-test (9bae336e69) You should already have received notification emails for all of the O revisions, and so the following emails describe only the N revisions from the common base, B. Any revisions marked "omit" are not gone; other references still refer to them. Any revisions marked "discard" are gone forever. No new revisions were added by this update. Summary of changes: RELEASE_NOTES.rst | 19 --- 1 file changed, 4 insertions(+), 15 deletions(-)

[GitHub] [airflow] vincbeck commented on a diff in pull request #27820: Add retry option in RedshiftDeleteClusterOperator to retry when an operation is running in the cluster

vincbeck commented on code in PR #27820: URL: https://github.com/apache/airflow/pull/27820#discussion_r1031804824 ## airflow/providers/amazon/aws/operators/redshift_cluster.py: ## @@ -498,22 +502,38 @@ def __init__( wait_for_completion: bool = True, aws_conn_id: str = "aws_default", poll_interval: float = 30.0, +retry: bool = False, +retry_attempts: int = 10, Review Comment: Should be good now @eladkal -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] ajaykarthick27 commented on issue #27890: SFTP Sensor is not working with File Pattern Parameter

ajaykarthick27 commented on issue #27890: URL: https://github.com/apache/airflow/issues/27890#issuecomment-1326810050 Yes, I did not notice that. I will close this issue.

[GitHub] [airflow] ajaykarthick27 closed issue #27890: SFTP Sensor is not working with File Pattern Parameter

ajaykarthick27 closed issue #27890: SFTP Sensor is not working with File Pattern Parameter URL: https://github.com/apache/airflow/issues/27890

[GitHub] [airflow] eladkal commented on issue #27890: SFTP Sensor is not working with File Pattern Parameter

eladkal commented on issue #27890: URL: https://github.com/apache/airflow/issues/27890#issuecomment-1326807715 Duplicate of https://github.com/apache/airflow/issues/27418 ?

[GitHub] [airflow] vandonr-amz commented on a diff in pull request #27786: Add operators + sensor for aws sagemaker pipelines

vandonr-amz commented on code in PR #27786:
URL: https://github.com/apache/airflow/pull/27786#discussion_r1031797200
## airflow/providers/amazon/aws/hooks/sagemaker.py: ##
@@ -647,28 +649,28 @@ def describe_endpoint(self, name: str) -> dict:
     def check_status(
         self,
-        job_name: str,
+        resource_name: str,
Review Comment:
Ah, that's a good point... I can keep it as `job_name` to avoid that; there is no strong need to rename it. I can also add a small comment noting that it can be used to check more than jobs. To be honest, I think this method should have been private: it's mostly a helper, only used in `wait_for_completion` cases, but now it's too late to change that.
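The reviewer's point is not quoted here, so the following rests on an assumption: that the concern is existing callers passing the argument by keyword. Under that assumption, a minimal sketch of why renaming a parameter of a public method is a breaking change (both functions are simplified, hypothetical stand-ins, not the hook's real code):

```python
from typing import Callable


def check_status_old(job_name: str) -> str:
    # Simplified stand-in for the existing public signature.
    return f"checking {job_name}"


def check_status_renamed(resource_name: str) -> str:
    # Same behavior; only the parameter name differs.
    return f"checking {resource_name}"


def keyword_call_works(func: Callable[..., str]) -> bool:
    """Return True if func still accepts the old keyword argument."""
    try:
        func(job_name="my-training-job")
    except TypeError:
        return False
    return True
```

Positional calls keep working after the rename; only keyword callers see a `TypeError`, which is why keeping `job_name` avoids the problem.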

[GitHub] [airflow] xlanor commented on pull request #27898: fix: current_state method on TaskInstance doesn't filter by map_index

xlanor commented on PR #27898: URL: https://github.com/apache/airflow/pull/27898#issuecomment-1326802435 Currently I'm still trying to figure out how to run the unit tests as I'm fairly new to this code base, opening a PR first for CI.

[GitHub] [airflow] boring-cyborg[bot] commented on pull request #27898: fix: current_state method on TaskInstance doesn't filter by map_index

boring-cyborg[bot] commented on PR #27898:
URL: https://github.com/apache/airflow/pull/27898#issuecomment-1326802257
Congratulations on your first Pull Request and welcome to the Apache Airflow community! If you have any issues or are unsure about anything, please check our Contribution Guide (https://github.com/apache/airflow/blob/main/CONTRIBUTING.rst)
Here are some useful points:
- Pay attention to the quality of your code (flake8, mypy and type annotations). Our [pre-commits](https://github.com/apache/airflow/blob/main/STATIC_CODE_CHECKS.rst#prerequisites-for-pre-commit-hooks) will help you with that.
- In case of a new feature add useful documentation (in docstrings or in `docs/` directory). Adding a new operator? Check this short [guide](https://github.com/apache/airflow/blob/main/docs/apache-airflow/howto/custom-operator.rst). Consider adding an example DAG that shows how users should use it.
- Consider using [Breeze environment](https://github.com/apache/airflow/blob/main/BREEZE.rst) for testing locally; it's a heavy docker but it ships with a working Airflow and a lot of integrations.
- Be patient and persistent. It might take some time to get a review or get the final approval from Committers.
- Please follow [ASF Code of Conduct](https://www.apache.org/foundation/policies/conduct) for all communication including (but not limited to) comments on Pull Requests, Mailing list and Slack.
- Be sure to read the [Airflow Coding style](https://github.com/apache/airflow/blob/main/CONTRIBUTING.rst#coding-style-and-best-practices).
Apache Airflow is a community-driven project and together we are making it better 🚀.
In case of doubts contact the developers at:
Mailing List: d...@airflow.apache.org
Slack: https://s.apache.org/airflow-slack

[GitHub] [airflow] xlanor opened a new pull request, #27898: fix: current_state method on TaskInstance doesn't filter by map_index

xlanor opened a new pull request, #27898:
URL: https://github.com/apache/airflow/pull/27898

Signed-off-by: xlanor
Fixes #27864

---
The `current_state` method on `TaskInstance` doesn't filter by `map_index`, so calling this method on a mapped task instance fails.

Read the **[Pull Request Guidelines](https://github.com/apache/airflow/blob/main/CONTRIBUTING.rst#pull-request-guidelines)** for more information.
In case of fundamental code changes, an Airflow Improvement Proposal ([AIP](https://cwiki.apache.org/confluence/display/AIRFLOW/Airflow+Improvement+Proposals)) is needed.
In case of a new dependency, check compliance with the [ASF 3rd Party License Policy](https://www.apache.org/legal/resolved.html#category-x).
In case of backwards incompatible changes please leave a note in a newsfragment file, named `{pr_number}.significant.rst` or `{issue_number}.significant.rst`, in [newsfragments](https://github.com/apache/airflow/tree/main/newsfragments).
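To illustrate the problem described in PR #27898, here is a small self-contained sketch using `sqlite3` and a deliberately simplified, hypothetical `task_instance` table (the real Airflow model has more columns and is queried through SQLAlchemy). It shows that a lookup keyed only on DAG, task, and run matches several rows for a mapped task, while adding `map_index` narrows it to one.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE task_instance ("
    "dag_id TEXT, task_id TEXT, run_id TEXT, map_index INTEGER, state TEXT)"
)
# One mapped task expanded into three task instances within the same run.
conn.executemany(
    "INSERT INTO task_instance VALUES (?, ?, ?, ?, ?)",
    [
        ("example_dag", "mapped_task", "run_1", 0, "success"),
        ("example_dag", "mapped_task", "run_1", 1, "running"),
        ("example_dag", "mapped_task", "run_1", 2, "failed"),
    ],
)


def states_without_map_index(dag_id: str, task_id: str, run_id: str) -> list:
    """Lookup that ignores map_index: ambiguous for mapped tasks."""
    rows = conn.execute(
        "SELECT state FROM task_instance "
        "WHERE dag_id = ? AND task_id = ? AND run_id = ? ORDER BY map_index",
        (dag_id, task_id, run_id),
    ).fetchall()
    return [row[0] for row in rows]


def state_with_map_index(dag_id: str, task_id: str, run_id: str, map_index: int):
    """Lookup that also filters by map_index: at most one row matches."""
    row = conn.execute(
        "SELECT state FROM task_instance "
        "WHERE dag_id = ? AND task_id = ? AND run_id = ? AND map_index = ?",
        (dag_id, task_id, run_id, map_index),
    ).fetchone()
    return row[0] if row else None
```

Any code that expects exactly one result from the first query would fail on a mapped task, which matches the symptom the PR describes.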

[GitHub] [airflow] syedahsn closed pull request #27873: EMR Notebook Execution Sensor

syedahsn closed pull request #27873: EMR Notebook Execution Sensor URL: https://github.com/apache/airflow/pull/27873

[GitHub] [airflow] aaronabraham311 commented on pull request #27805: Automatically save and allow restore of recent DAG run configs

aaronabraham311 commented on PR #27805: URL: https://github.com/apache/airflow/pull/27805#issuecomment-1326792202 @pierrejeambrun Oh that's great! Is there any example on how to access the DagRun configs from the db? Or is there a code snippet that we can use as an example?

[GitHub] [airflow] pierrejeambrun commented on pull request #27805: Automatically save and allow restore of recent DAG run configs

pierrejeambrun commented on PR #27805: URL: https://github.com/apache/airflow/pull/27805#issuecomment-1326777609 Now that you mention it, DagRun confs are already stored and available for each run. Isn't it easier to just retrieve them and provide them to the `trigger.html` template directly? We also have a lot of control over which confs should be retrieved (the 5 most recent confs for a specific dag, etc.)
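As a rough sketch of the "5 most recent confs for a specific dag" idea, the query below runs against a hypothetical, simplified `dag_run` table in an in-memory SQLite database. The table layout, the JSON-encoded `conf` column, and the `recent_confs` helper are assumptions for illustration; Airflow itself stores and queries DagRun through its own SQLAlchemy models.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE dag_run (dag_id TEXT, execution_date TEXT, conf TEXT)")
# Seven runs of one DAG plus one run of another, each with its own conf.
for day in range(1, 8):
    conn.execute(
        "INSERT INTO dag_run VALUES (?, ?, ?)",
        ("example_dag", f"2022-11-{day:02d}", json.dumps({"n": day})),
    )
conn.execute(
    "INSERT INTO dag_run VALUES (?, ?, ?)",
    ("other_dag", "2022-11-30", json.dumps({"n": 99})),
)


def recent_confs(connection: sqlite3.Connection, dag_id: str, limit: int = 5) -> list:
    """Return the confs of the most recent runs of dag_id, newest first."""
    rows = connection.execute(
        "SELECT conf FROM dag_run "
        "WHERE dag_id = ? AND conf IS NOT NULL "
        "ORDER BY execution_date DESC LIMIT ?",
        (dag_id, limit),
    ).fetchall()
    return [json.loads(row[0]) for row in rows]
```

The returned list could then be handed to whatever renders the trigger form, so no extra storage is needed for previously used configs.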

[GitHub] [airflow] george-zubrienko commented on issue #27838: apache-airflow-providers-common-sql==1.3.0 breaks BigQuery operators

george-zubrienko commented on issue #27838: URL: https://github.com/apache/airflow/issues/27838#issuecomment-1326771187 @potiuk I usually pin versions (`==1.2.3`) of providers that ship a lot of dependencies to what is shown in the official image by running `pip show `, and only upgrade a provider if it was upgraded in the next release. Also, we don't upgrade to every release right away, so the snippets I posted were for version 2.4.1, where we did some dependency shuffling (no version bumps, a simple `poetry update`), and then I saw errors popping up on the test env after the new image was deployed. The reason we use poetry is to resolve potential incompatibilities between our own libraries and airflow dependencies. For some providers, like datadog in the example above, it is more or less safe to only lock major and minor with `~`, but for google, after running into problems with the protobuf library upgrade, I learned it is safer to pin dependencies.
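The difference between an exact pin (`==1.2.3`) and a Poetry-style tilde constraint (`~1.2.3`, which allows `>=1.2.3,<1.3.0`) can be sketched in a few lines. The helpers below are illustrative only and assume plain three-part numeric versions; real resolvers handle pre-releases, epochs, and other cases that this sketch ignores.

```python
def parse(version: str) -> tuple:
    """Split a plain 'X.Y.Z' version string into a tuple of integers."""
    return tuple(int(part) for part in version.split("."))


def satisfies_exact(installed: str, pinned: str) -> bool:
    """'==X.Y.Z': only the pinned version is acceptable."""
    return parse(installed) == parse(pinned)


def satisfies_tilde(installed: str, base: str) -> bool:
    """'~X.Y.Z': at least X.Y.Z, but below the next minor release."""
    lower = parse(base)
    upper = (lower[0], lower[1] + 1, 0)
    return lower <= parse(installed) < upper
```

With a tilde constraint a `poetry update` may move to a newer patch release without any change to the project file, whereas an exact pin only changes when it is edited by hand.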

[airflow] branch constraints-2-5 updated: Updating constraints. Build id: