[GitHub] [airflow] boring-cyborg[bot] commented on issue #29456: How to automaticlly rerun the zombie tasks

boring-cyborg[bot] commented on issue #29456: URL: https://github.com/apache/airflow/issues/29456#issuecomment-1425334083 Thanks for opening your first issue here! Be sure to follow the issue template! -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] linhgao opened a new issue, #29456: How to automaticlly rerun the zombie tasks

linhgao opened a new issue, #29456: URL: https://github.com/apache/airflow/issues/29456 ### Description My airflow cluster is deployed on the k8s cluster, when the worker container is restarted, the long-run jobs will failed. And now I only rerun manually, if I don't rerun in time, it will affect the normal operation of workflow. So, I want to achieve the feature that the airflow scheduler can automaticlly rerun the zombie tasks. ### Use case/motivation When the scheduler find the zombie tasks, we can choose by the airflow configuration that mark failed these tasks or automaticlly add these task into queue. ### Related issues no ### Are you willing to submit a PR? - [X] Yes I am willing to submit a PR! ### Code of Conduct - [X] I agree to follow this project's [Code of Conduct](https://github.com/apache/airflow/blob/main/CODE_OF_CONDUCT.md) -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] wolfier opened a new issue, #29455: Not all zombies are caused by missed heartbeat

wolfier opened a new issue, #29455:

URL: https://github.com/apache/airflow/issues/29455

### Apache Airflow version

2.5.1

### What happened

When the scheduler [finds

zombies](https://github.com/apache/airflow/blob/2.5.1/airflow/jobs/scheduler_job.py#L1541-L1542),

a log emitted to indicate how many jobs was found without heartbeats.

```

[2023-01-12T03:26:33.347+] {scheduler_job.py:1533} WARNING - Failing (1)

jobs without heartbeat after 2023-01-12 03:21:33.317638+00:00

```

An odd case where a task instance became a zombie right after being executed.

---

The task is scheduled and queued by the scheduler and passed to the executor.

```

[2023-01-12T03:26:14.983+] {scheduler_job.py:360} INFO - 1 tasks up for

execution:

[2023-01-12T03:26:14.984+] {scheduler_job.py:511} INFO - Setting the

following tasks to queued state:

[2023-01-12T03:26:14.991+] {base_executor.py:95} INFO - Adding to queue:

['airflow', 'tasks', 'run', 'model_contracts', 'wait_for_last_product_astro',

'scheduled__2023-01-12T02:00:00+00:00', '--local', '--subdir',

'DAGS_FOLDER/declarative/gusty/hourly/hourly.py']

[2023-01-12T03:26:14.991+] {scheduler_job.py:550} INFO - Sending

TaskInstanceKey(dag_id='model_contracts',

task_id='wait_for_last_product_astro',

run_id='scheduled__2023-01-12T02:00:00+00:00', try_number=1, map_index=-1) to

executor with priority 15500 and queue default

```

Celery worker picks up task instance, assigns celery task id (uuid), and

emits executor event into event_buffer.

```

[2023-01-12 03:26:15,005: INFO/MainProcess] Task

airflow.executors.celery_executor.execute_command[f4242c9e-9426-4b2d-b55c-323731d74e09]

received

[2023-01-12 03:26:15,022: INFO/ForkPoolWorker-2]

[f4242c9e-9426-4b2d-b55c-323731d74e09] Executing command in Celery: ['airflow',

'tasks', 'run', 'model_contracts', 'wait_for_last_product_astro',

'scheduled__2023-01-12T02:00:00+00:00', '--local', '--subdir',

'DAGS_FOLDER/declarative/gusty/hourly/hourly.py']

```

Scheduler reads event_buffer and acknowledges the task instances as assigned

in Celery.

```

[2023-01-12T03:26:15.145+] {scheduler_job.py:635} INFO - Setting

external_id for to

f4242c9e-9426-4b2d-b55c-323731d74e09

```

The task instance is marked as zombie soon after.

```

[2023-01-12T03:26:33.347+] {scheduler_job.py:1533} WARNING - Failing (1)

jobs without heartbeat after 2023-01-12 03:21:33.317638+00:00

[2023-01-12T03:26:33.355+] {scheduler_job.py:1543} ERROR - Detected

zombie job: {'full_filepath':

'/usr/local/airflow/dags/declarative/gusty/hourly/hourly.py',

'processor_subdir': '/usr/local/airflow/dags', 'msg': "{'DAG Id':

'model_contracts', 'Task Id': 'wait_for_last_product_astro', 'Run Id':

'scheduled__2023-01-12T02:00:00+00:00', 'Hostname': '10.2.124.99', 'External

Executor Id': 'f4242c9e-9426-4b2d-b55c-323731d74e09'}", 'simple_task_instance':

,

'is_failure_callback': True}

[2023-01-12T03:26:36.044+] {taskinstance.py:1774} ERROR - {'DAG Id':

'model_contracts', 'Task Id': 'wait_for_last_product_astro', 'Run Id':

'scheduled__2023-01-12T02:00:00+00:00', 'Hostname': '10.2.124.99', 'External

Executor Id': 'f4242c9e-9426-4b2d-b55c-323731d74e09'}

```

Based on the task logs, the task run command never got to the task execution

part.

```

[2023-01-12, 03:26:17 UTC] {standard_task_runner.py:83} INFO - Job 4184045:

Subtask wait_for_last_product_astro

[2023-01-12, 03:26:22 UTC] {standard_task_runner.py:100} ERROR - Failed to

execute job 4184045 for task wait_for_last_product_astro

((psycopg2.OperationalError) could not translate host name

"geocentric-spacecraft-1886-pgbouncer.geocentric-spacecraft-1886.svc.cluster.local"

to address: Temporary failure in name resolution

(Background on this error at: https://sqlalche.me/e/14/e3q8); 7131)

[2023-01-12, 03:26:23 UTC] {local_task_job.py:159} INFO - Task exited with

return code 1

```

Given the [command execution encounter an

exception](https://github.com/apache/airflow/blob/2.5.0/airflow/cli/commands/task_command.py#L383-L389)

before running the execute method, the StandardTaskRunner exited followed by

the LocalTaskJob also exiting with the state success without handling the state

of the task instance. At this point the state of the [task instance is

running](https://github.com/apache/airflow/blob/2.5.0/airflow/jobs/local_task_job.py#L88-L97)

because the LocalTaskJob successfully created the StandardTaskRunner.

A task instance in the running state with its corresponding LocalTaskJob in

the success state means the task instance is now a zombie but not because of

the lack of heartbeats.

### What you think should happen instead

As explained above not all zombies are caused by missed heartbeat. When a

`LocalTaskJob` succeeds or fails while the task instance is

[GitHub] [airflow-client-python] VeereshPatil commented on issue #21: trigger dag api is broken

VeereshPatil commented on issue #21:

URL:

https://github.com/apache/airflow-client-python/issues/21#issuecomment-1425257059

you can not pass **dag_id** and **external_trigger** as part of DagRun (

dag_id is passed in post_dag_run() function)

The following worked for me,

```

dag_run = DAGRun(

dag_run_id='some_test_run',

conf={"key":"values"}

)

api_response = dag_run_api_instance.post_dag_run(dag_id, dag_run)

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] uranusjr commented on a diff in pull request #29440: Bring back fixed tenacity

uranusjr commented on code in PR #29440: URL: https://github.com/apache/airflow/pull/29440#discussion_r1102278823 ## setup.cfg: ## @@ -134,9 +134,7 @@ install_requires = sqlalchemy>=1.4,<2.0 sqlalchemy_jsonfield>=1.0 tabulate>=0.7.5 -# The 8.2.0 release of tenacity has a mypy error that breaks our CI -# The upper-bound limit can be removed after https://github.com/jd/tenacity/issues/389 is resolved -tenacity>=6.2.0,<8.2.0 +tenacity>=8.2.1 Review Comment: ```suggestion tenacity>=6.2.0,!=8.2.0 ``` since the older versions aren’t incompatible? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] uranusjr commented on a diff in pull request #29451: Fix nested fields rendering in mapped operators

uranusjr commented on code in PR #29451: URL: https://github.com/apache/airflow/pull/29451#discussion_r1102278121 ## airflow/models/mappedoperator.py: ## @@ -687,7 +687,7 @@ def render_template_fields( unmapped_task = self.unmap(mapped_kwargs) context_update_for_unmapped(context, unmapped_task) -self._do_render_template_fields( +unmapped_task._do_render_template_fields( Review Comment: A comment here containing the explaination you gave in the PR description would be a good idea. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] BrunoGrandePhD commented on issue #29137: Add ability to access context in functions decorated by task.sensor

BrunoGrandePhD commented on issue #29137: URL: https://github.com/apache/airflow/issues/29137#issuecomment-1425156771 I see that a PR to address this is already open and already approved by at least one reviewer, but if anyone else stumbles upon this issue, you can use `airflow.operators.python.get_current_context()` as a temporary solution to access context variables ([docs](https://airflow.apache.org/docs/apache-airflow/stable/tutorial/taskflow.html#:~:text=Also%2C%20sometimes%20you%20might%20want%20to%20access%20the%20context%20somewhere%20deep%20in%20the%20stack%2C%20but%20you%20do%20not%20want%20to%20pass%20the%20context%20variables%20from%20the%20task%20callable.%20You%20can%20still%20access%20execution%20context%20via%20the%20get_current_context%20method.)). -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] blag commented on a diff in pull request #29441: datasets, next_run_datasets, remove unnecessary timestamp filter

blag commented on code in PR #29441: URL: https://github.com/apache/airflow/pull/29441#discussion_r1102128972 ## airflow/www/views.py: ## @@ -3715,7 +3715,6 @@ def next_run_datasets(self, dag_id): DatasetEvent, and_( DatasetEvent.dataset_id == DatasetModel.id, -DatasetEvent.timestamp > DatasetDagRunQueue.created_at, Review Comment: It's been a long minute since I wrote this, but... I believe when I wrote this the intent with the `lastUpdate` field was to only show last updates _since the last time the dag was queued/run_. But yeah, the `lastUpdate` label isn't descriptive enough for that. Option 1: Personally, I would consider changing the name of what the `lastUpdate` field is rendered to, something like "Last update since last run" or something more wordy. Option 2: But if you don't want to do that, and you want to display the last update for every dataset regardless of whether has already been "consumed" by a DagRun (eg: in either the DatasetDagRunQueue or actually scheduled into a DagRun), then yeah it makes sense to remove this filter. However, I would also remove the `and_` around it since then there would only be one filter condition in that join: ```python .join( DatasetEvent, DatasetEvent.dataset_id == DatasetModel.id, isouter=True, ) ``` If you go for option 2, I think you should be able to compare the existence and creation time of the DDRQ with the DatasetEvent timestamp to figure out whether or not the last update time has already triggered a DDRQ/DagRun or if it has partially satisfied the conditions of a future DagRun. Hope this makes sense. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] hussein-awala commented on issue #29432: Jinja templating doesn't work with container_resources when using dymanic task mapping with Kubernetes Pod Operator

hussein-awala commented on issue #29432: URL: https://github.com/apache/airflow/issues/29432#issuecomment-1425036759 @pshrivastava27 I created this PR #29451 and it solved the problem for me, can you test it on your Dev environment? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] hussein-awala commented on issue #29442: Logging from inside on_failure_callback not propagated with Cloud Composer

hussein-awala commented on issue #29442:

URL: https://github.com/apache/airflow/issues/29442#issuecomment-1425031828

The callback is executed when the task/run finishes, and it is executed by

the scheduler, you will find this log in the scheduler log files:

```

[2023-02-10T00:01:08.696+] {logging_mixin.py:151} INFO -

[2023-02-10T00:01:08.696+] {test_dag.py:19} INFO - Minimal example -

failure callback with context: {'conf':

, 'dag':

, 'dag_run': , 'data_interval_end':

DateTime(2023, 2, 10, 0, 0, 0, tzinfo=Timezone('UTC')), 'data_interval_start':

DateTime(2023, 2, 9, 0, 0, 0, tzinfo=Timezone('UTC')), 'ds': '2023-02-09',

'ds_nodash': '20230209', 'execution_date': DateTime(2023, 2, 9, 0, 0, 0,

tzinfo=Timezone('UTC')), 'expanded_ti_count': None, 'inlets': [],

'logical_date': DateTime(2023, 2, 9, 0, 0, 0, tzinfo=Timezone('UTC')),

'macros': , 'next_ds': '2023-02-10', 'next_ds_nodash': '20230210',

'next_execution_date': DateTime(2023, 2, 10, 0, 0, 0, tzinfo=Timezone('UTC')),

'outlets': [], 'params': {}, 'prev_data_interval_start_success': None,

'prev_data_interval_end_success': None, 'prev_ds': '2023-02-08',

'prev_ds_nodash': '20230208', 'prev_execution_date': DateTime(2023, 2, 8, 0, 0,

0, tzinfo=Timezone('UTC')), 'prev_execution_date_success': None,

'prev_start_date_success': None, 'run_id':

'scheduled__2023-02-09T00:00:00+00:00', 'task': , 'task_instance': , 'task_instance_key_str':

'INT_tableau_others_recommendation_classifications__sleep_a_while__20230209',

'test_mode': False, 'ti': , 'tomorrow_ds': '2023-02-10',

'tomorrow_ds_no

dash': '20230210', 'triggering_dataset_events': .get_triggering_events at

0x7f2c14fa0290>>, 'ts': '2023-02-09T00:00:00+00:00', 'ts_nodash':

'20230209T00', 'ts_nodash_with_tz': '20230209T00+', 'var': {'json':

None, 'value': None}, 'conn': None, 'yesterday_ds': '2023-02-08',

'yesterday_ds_nodash': '20230208', 'reason': 'timed_out'}

```

You can find this log in the path `$AIRFLOW_HOME/logs/scheduler`, and for

cloud composer you will find it on gcs `gs:///logs/scheduler`

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] github-actions[bot] commented on pull request #27797: Add tests to PythonOperator

github-actions[bot] commented on PR #27797: URL: https://github.com/apache/airflow/pull/27797#issuecomment-1425005377 This pull request has been automatically marked as stale because it has not had recent activity. It will be closed in 5 days if no further activity occurs. Thank you for your contributions. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] akrava opened a new pull request, #29454: Make `prev_logical_date` variable offset-aware

akrava opened a new pull request, #29454:

URL: https://github.com/apache/airflow/pull/29454

Can fix https://github.com/apache/airflow/issues/29453

---

**^ Add meaningful description above**

Read the **[Pull Request

Guidelines](https://github.com/apache/airflow/blob/main/CONTRIBUTING.rst#pull-request-guidelines)**

for more information.

In case of fundamental code changes, an Airflow Improvement Proposal

([AIP](https://cwiki.apache.org/confluence/display/AIRFLOW/Airflow+Improvement+Proposals))

is needed.

In case of a new dependency, check compliance with the [ASF 3rd Party

License Policy](https://www.apache.org/legal/resolved.html#category-x).

In case of backwards incompatible changes please leave a note in a

newsfragment file, named `{pr_number}.significant.rst` or

`{issue_number}.significant.rst`, in

[newsfragments](https://github.com/apache/airflow/tree/main/newsfragments).

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] Taragolis commented on pull request #29447: Remove non-public interface usage in EcsRunTaskOperator

Taragolis commented on PR #29447:

URL: https://github.com/apache/airflow/pull/29447#issuecomment-1424981137

Not sure is it changes, breeze itself or something else but I have

interesting behaviour with logs during execution this operator

```console

[2023-02-09, 23:33:53 UTC] {ecs.py:489} INFO - ECS task ID is:

6beb6333b8dc4cf7afbd36b524efd026*** Could not read served logs: Parent instance

is not bound to a Session; lazy load operation

of attribute 'trigger' cannot proceed (Background on this error at:

https://sqlalche.me/e/14/bhk3)

*** Found local files:

*** *

/root/airflow/logs/dag_id=test_ecs_operator_reatach/run_id=manual__2023-02-09T23:33:49.777801+00:00/task_id=ecs-task-regular-no-reattach/attempt=1.log

*** Could not read served logs: Parent instance is not bound to a Session; lazy load operation of attribute

'trigger' cannot proceed (Background on this error at:

https://sqlalche.me/e/14/bhk3)

*** Found local files:

*** *

/root/airflow/logs/dag_id=test_ecs_operator_reatach/run_id=manual__2023-02-09T23:33:49.777801+00:00/task_id=ecs-task-regular-no-reattach/attempt=1.log

*** Could not read served logs: Parent instance is not bound to a Session; lazy load operation of attribute

'trigger' cannot proceed (Background on this error at:

https://sqlalche.me/e/14/bhk3)

*** Found local files:

*** *

/root/airflow/logs/dag_id=test_ecs_operator_reatach/run_id=manual__2023-02-09T23:33:49.777801+00:00/task_id=ecs-task-regular-no-reattach/attempt=1.log

*** Could not read served logs: Parent instance is not bound to a Session; lazy load operation of attribute

'trigger' cannot proceed (Background on this error at:

https://sqlalche.me/e/14/bhk3)

*** Found local files:

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

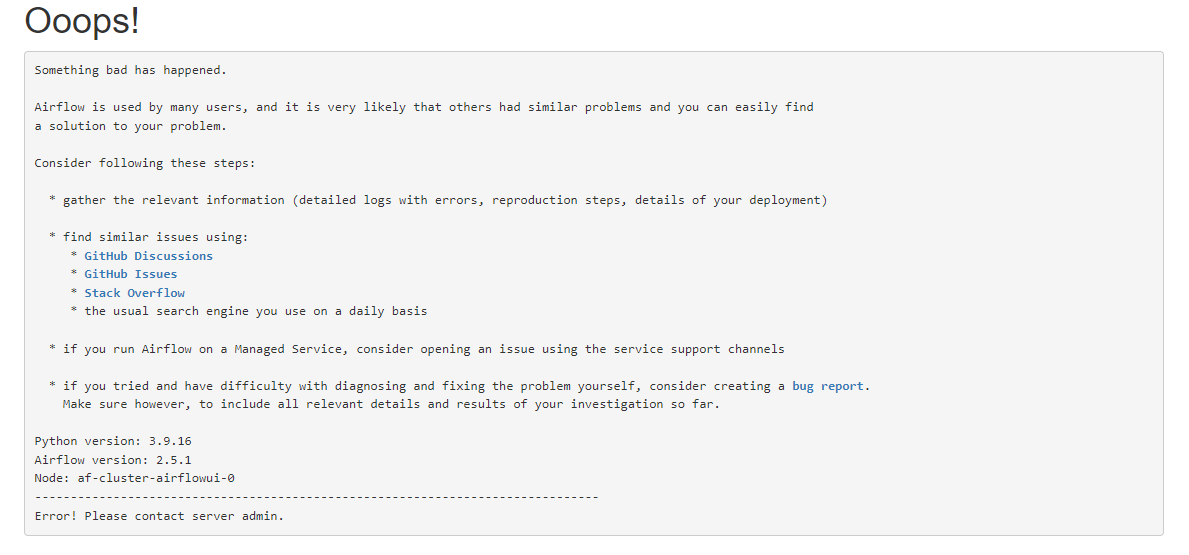

[GitHub] [airflow] akrava opened a new issue, #29453: Couldn't open Calendar tab of DAG after triggering DAG

akrava opened a new issue, #29453:

URL: https://github.com/apache/airflow/issues/29453

### Apache Airflow version

2.5.1

### What happened

After triggering any DAG and trying to open Calendar tab I see this:

Here is the logs:

```

[2023-02-09T23:19:27.482+] {app.py:1741} ERROR - Exception on

/dags/dtap_dag_with_bash/calendar [GET]

Traceback (most recent call last):

File "/home/airflow/.local/lib/python3.9/site-packages/flask/app.py", line

2525, in wsgi_app

response = self.full_dispatch_request()

File "/home/airflow/.local/lib/python3.9/site-packages/flask/app.py", line

1822, in full_dispatch_request

rv = self.handle_user_exception(e)

File "/home/airflow/.local/lib/python3.9/site-packages/flask/app.py", line

1820, in full_dispatch_request

rv = self.dispatch_request()

File "/home/airflow/.local/lib/python3.9/site-packages/flask/app.py", line

1796, in dispatch_request

return self.ensure_sync(self.view_functions[rule.endpoint])(**view_args)

File

"/home/airflow/.local/lib/python3.9/site-packages/airflow/www/auth.py", line

47, in decorated

return func(*args, **kwargs)

File

"/home/airflow/.local/lib/python3.9/site-packages/airflow/www/decorators.py",

line 166, in view_func

return f(*args, **kwargs)

File

"/home/airflow/.local/lib/python3.9/site-packages/airflow/www/decorators.py",

line 125, in wrapper

return f(*args, **kwargs)

File

"/home/airflow/.local/lib/python3.9/site-packages/airflow/utils/session.py",

line 75, in wrapper

return func(*args, session=session, **kwargs)

File

"/home/airflow/.local/lib/python3.9/site-packages/airflow/www/views.py", line

2756, in calendar

if curr_info.logical_date <= prev_logical_date:

TypeError: can't compare offset-naive and offset-aware datetimes

```

### What you think should happen instead

_No response_

### How to reproduce

_No response_

### Operating System

Ubuntu 20.04.5

### Versions of Apache Airflow Providers

_No response_

### Deployment

Other Docker-based deployment

### Deployment details

_No response_

### Anything else

_No response_

### Are you willing to submit PR?

- [ ] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of

Conduct](https://github.com/apache/airflow/blob/main/CODE_OF_CONDUCT.md)

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] blag commented on a diff in pull request #29441: datasets, next_run_datasets, remove unnecessary timestamp filter

blag commented on code in PR #29441: URL: https://github.com/apache/airflow/pull/29441#discussion_r1102128972 ## airflow/www/views.py: ## @@ -3715,7 +3715,6 @@ def next_run_datasets(self, dag_id): DatasetEvent, and_( DatasetEvent.dataset_id == DatasetModel.id, -DatasetEvent.timestamp > DatasetDagRunQueue.created_at, Review Comment: It's been a long minute since I wrote this, but... I believe when I wrote this the intent with the `lastUpdate` field was to only show last updates _since the last time the dag was queued/run_. But yeah, the `lastUpdate` label isn't descriptive enough for that. Option 1: Personally, I would consider changing the name of what the `lastUpdate` field is rendered to, something like "Last update since last run" or something more wordy. Option 2: But if you don't want to do that, and you want to display the last update for every dataset regardless of whether has already been "consumed" by a DagRun (eg: in either the DatasetDagRunQueue or actually scheduled into a DagRun), then yeah it makes sense to remove this filter. However, I would also remove the `and_` around it since then there would only be one filter condition in that join: ```python .join( DatasetEvent, DatasetEvent.dataset_id == DatasetModel.id, isouter=True, ) ``` If you go for option 2, I think you should be able to compare the existence and creation time of the DDRQ with the DatasetEvent timestamp to figure out whether or not the last update time has already triggered a DagRun or if it has partially satisfied the conditions of a future DagRun. Hope this makes sense. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] Taragolis commented on a diff in pull request #29452: Add support of a different AWS connection for DynamoDB

Taragolis commented on code in PR #29452: URL: https://github.com/apache/airflow/pull/29452#discussion_r1102125057 ## airflow/providers/amazon/aws/transfers/dynamodb_to_s3.py: ## @@ -103,6 +106,7 @@ def __init__( self, *, dynamodb_table_name: str, +dynamodb_conn_id: str | None = None, Review Comment: It is more about less known behaviour of boto3-based Hooks, `None` it is a legit value and could force to use boto3 default behaviour strategy (without any call to Connections), e.g. env Variables, IAM Profile, ECS Task Role and etc. I think better to use specific sentinel as default value instead of `None` here https://github.com/apache/airflow/blob/a76e0fe16ef12749c3fea1b68d82936b238fafbb/airflow/utils/types.py#L28-L44 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] harishkrao commented on a diff in pull request #28950: Sensor for Databricks partition and table changes

harishkrao commented on code in PR #28950:

URL: https://github.com/apache/airflow/pull/28950#discussion_r1102114019

##

airflow/providers/databricks/sensors/databricks_table_changes.py:

##

@@ -0,0 +1,164 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+#

+"""This module contains Databricks sensors."""

+

+from __future__ import annotations

+

+from datetime import datetime, timedelta

+from typing import Any, Callable, Sequence

+

+from airflow.exceptions import AirflowException

+from airflow.providers.common.sql.hooks.sql import fetch_all_handler

+from airflow.providers.databricks.hooks.databricks_sql import DatabricksSqlHook

+from airflow.providers.databricks.sensors.databricks_sql import

DatabricksSqlSensor

+from airflow.utils.context import Context

+

+

+class DatabricksTableChangesSensor(DatabricksSqlSensor):

+"""Sensor to detect changes in a Delta table.

+

+

+:param databricks_conn_id: Reference to :ref:`Databricks

+connection id` (templated), defaults to

+DatabricksSqlHook.default_conn_name

+:param http_path: Optional string specifying HTTP path of Databricks SQL

Endpoint or cluster.

+If not specified, it should be either specified in the Databricks

connection's

+extra parameters, or ``sql_endpoint_name`` must be specified.

+:param sql_endpoint_name: Optional name of Databricks SQL Endpoint. If not

specified, ``http_path`` must

+be provided as described above, defaults to None

+:param session_configuration: An optional dictionary of Spark session

parameters. If not specified,

+it could be specified in the Databricks connection's extra

parameters., defaults to None

+:param http_headers: An optional list of (k, v) pairs

+that will be set as HTTP headers on every request. (templated).

+:param catalog: An optional initial catalog to use.

+Requires DBR version 9.0+ (templated), defaults to ""

+:param schema: An optional initial schema to use.

+Requires DBR version 9.0+ (templated), defaults to "default"

+:param table_name: Table name to generate the SQL query, defaults to ""

+:param handler: Handler for DbApiHook.run() to return results, defaults to

fetch_all_handler

+:param caller: String passed to name a hook to Databricks, defaults to

"DatabricksPartitionSensor"

+:param client_parameters: Additional parameters internal to Databricks SQL

Connector parameters.

+:param timestamp: Timestamp to check event history for a Delta table,

+defaults to datetime.now()-timedelta(days=7)

+"""

+

+template_fields: Sequence[str] = ("databricks_conn_id", "catalog",

"schema", "table_name", "timestamp")

+

+def __init__(

+self,

+*,

+databricks_conn_id: str = DatabricksSqlHook.default_conn_name,

+http_path: str | None = None,

+sql_endpoint_name: str | None = None,

+session_configuration=None,

+http_headers: list[tuple[str, str]] | None = None,

+catalog: str = "",

+schema: str = "default",

+table_name: str = "",

+handler: Callable[[Any], Any] = fetch_all_handler,

+timestamp: datetime = datetime.now() - timedelta(days=7),

+caller: str = "DatabricksTableChangesSensor",

+client_parameters: dict[str, Any] | None = None,

+**kwargs,

+) -> None:

+super().__init__(**kwargs)

+self.databricks_conn_id = databricks_conn_id

+self._http_path = http_path

+self._sql_endpoint_name = sql_endpoint_name

+self.session_config = session_configuration

+self.http_headers = http_headers

+self.catalog = catalog

+self.schema = schema

+self.table_name = table_name

+self.timestamp = timestamp

+self.caller = caller

+self.client_parameters = client_parameters or {}

+self.hook_params = kwargs.pop("hook_params", {})

+self.handler = handler

+

+def _get_hook(self) -> DatabricksSqlHook:

+return DatabricksSqlHook(

+self.databricks_conn_id,

+self._http_path,

+self._sql_endpoint_name,

+self.session_config,

+self.http_headers,

+self.catalog,

[GitHub] [airflow] harishkrao commented on a diff in pull request #28950: Sensor for Databricks partition and table changes

harishkrao commented on code in PR #28950:

URL: https://github.com/apache/airflow/pull/28950#discussion_r1102113638

##

airflow/providers/databricks/sensors/databricks_table_changes.py:

##

@@ -0,0 +1,164 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+#

+"""This module contains Databricks sensors."""

+

+from __future__ import annotations

+

+from datetime import datetime, timedelta

+from typing import Any, Callable, Sequence

+

+from airflow.exceptions import AirflowException

+from airflow.providers.common.sql.hooks.sql import fetch_all_handler

+from airflow.providers.databricks.hooks.databricks_sql import DatabricksSqlHook

+from airflow.providers.databricks.sensors.databricks_sql import

DatabricksSqlSensor

+from airflow.utils.context import Context

+

+

+class DatabricksTableChangesSensor(DatabricksSqlSensor):

+"""Sensor to detect changes in a Delta table.

+

+

+:param databricks_conn_id: Reference to :ref:`Databricks

+connection id` (templated), defaults to

+DatabricksSqlHook.default_conn_name

+:param http_path: Optional string specifying HTTP path of Databricks SQL

Endpoint or cluster.

+If not specified, it should be either specified in the Databricks

connection's

+extra parameters, or ``sql_endpoint_name`` must be specified.

+:param sql_endpoint_name: Optional name of Databricks SQL Endpoint. If not

specified, ``http_path`` must

+be provided as described above, defaults to None

+:param session_configuration: An optional dictionary of Spark session

parameters. If not specified,

+it could be specified in the Databricks connection's extra

parameters., defaults to None

+:param http_headers: An optional list of (k, v) pairs

+that will be set as HTTP headers on every request. (templated).

+:param catalog: An optional initial catalog to use.

+Requires DBR version 9.0+ (templated), defaults to ""

+:param schema: An optional initial schema to use.

+Requires DBR version 9.0+ (templated), defaults to "default"

+:param table_name: Table name to generate the SQL query, defaults to ""

+:param handler: Handler for DbApiHook.run() to return results, defaults to

fetch_all_handler

+:param caller: String passed to name a hook to Databricks, defaults to

"DatabricksPartitionSensor"

+:param client_parameters: Additional parameters internal to Databricks SQL

Connector parameters.

+:param timestamp: Timestamp to check event history for a Delta table,

+defaults to datetime.now()-timedelta(days=7)

+"""

+

+template_fields: Sequence[str] = ("databricks_conn_id", "catalog",

"schema", "table_name", "timestamp")

+

+def __init__(

+self,

+*,

+databricks_conn_id: str = DatabricksSqlHook.default_conn_name,

+http_path: str | None = None,

+sql_endpoint_name: str | None = None,

+session_configuration=None,

+http_headers: list[tuple[str, str]] | None = None,

+catalog: str = "",

+schema: str = "default",

+table_name: str = "",

+handler: Callable[[Any], Any] = fetch_all_handler,

+timestamp: datetime = datetime.now() - timedelta(days=7),

+caller: str = "DatabricksTableChangesSensor",

+client_parameters: dict[str, Any] | None = None,

+**kwargs,

+) -> None:

+super().__init__(**kwargs)

+self.databricks_conn_id = databricks_conn_id

+self._http_path = http_path

+self._sql_endpoint_name = sql_endpoint_name

+self.session_config = session_configuration

+self.http_headers = http_headers

+self.catalog = catalog

+self.schema = schema

+self.table_name = table_name

+self.timestamp = timestamp

+self.caller = caller

+self.client_parameters = client_parameters or {}

+self.hook_params = kwargs.pop("hook_params", {})

+self.handler = handler

+

+def _get_hook(self) -> DatabricksSqlHook:

+return DatabricksSqlHook(

+self.databricks_conn_id,

+self._http_path,

+self._sql_endpoint_name,

+self.session_config,

+self.http_headers,

+self.catalog,

[GitHub] [airflow] harishkrao commented on a diff in pull request #28950: Sensor for Databricks partition and table changes

harishkrao commented on code in PR #28950:

URL: https://github.com/apache/airflow/pull/28950#discussion_r1102111386

##

airflow/providers/databricks/sensors/databricks_table_changes.py:

##

@@ -0,0 +1,164 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+#

+"""This module contains Databricks sensors."""

+

+from __future__ import annotations

+

+from datetime import datetime, timedelta

+from typing import Any, Callable, Sequence

+

+from airflow.exceptions import AirflowException

+from airflow.providers.common.sql.hooks.sql import fetch_all_handler

+from airflow.providers.databricks.hooks.databricks_sql import DatabricksSqlHook

+from airflow.providers.databricks.sensors.databricks_sql import

DatabricksSqlSensor

+from airflow.utils.context import Context

+

+

+class DatabricksTableChangesSensor(DatabricksSqlSensor):

+"""Sensor to detect changes in a Delta table.

+

+

+:param databricks_conn_id: Reference to :ref:`Databricks

+connection id` (templated), defaults to

+DatabricksSqlHook.default_conn_name

+:param http_path: Optional string specifying HTTP path of Databricks SQL

Endpoint or cluster.

+If not specified, it should be either specified in the Databricks

connection's

+extra parameters, or ``sql_endpoint_name`` must be specified.

+:param sql_endpoint_name: Optional name of Databricks SQL Endpoint. If not

specified, ``http_path`` must

+be provided as described above, defaults to None

+:param session_configuration: An optional dictionary of Spark session

parameters. If not specified,

+it could be specified in the Databricks connection's extra

parameters., defaults to None

+:param http_headers: An optional list of (k, v) pairs

+that will be set as HTTP headers on every request. (templated).

+:param catalog: An optional initial catalog to use.

+Requires DBR version 9.0+ (templated), defaults to ""

+:param schema: An optional initial schema to use.

+Requires DBR version 9.0+ (templated), defaults to "default"

+:param table_name: Table name to generate the SQL query, defaults to ""

+:param handler: Handler for DbApiHook.run() to return results, defaults to

fetch_all_handler

+:param caller: String passed to name a hook to Databricks, defaults to

"DatabricksPartitionSensor"

+:param client_parameters: Additional parameters internal to Databricks SQL

Connector parameters.

+:param timestamp: Timestamp to check event history for a Delta table,

+defaults to datetime.now()-timedelta(days=7)

+"""

+

+template_fields: Sequence[str] = ("databricks_conn_id", "catalog",

"schema", "table_name", "timestamp")

+

+def __init__(

+self,

+*,

+databricks_conn_id: str = DatabricksSqlHook.default_conn_name,

+http_path: str | None = None,

+sql_endpoint_name: str | None = None,

+session_configuration=None,

+http_headers: list[tuple[str, str]] | None = None,

+catalog: str = "",

+schema: str = "default",

+table_name: str = "",

+handler: Callable[[Any], Any] = fetch_all_handler,

+timestamp: datetime = datetime.now() - timedelta(days=7),

+caller: str = "DatabricksTableChangesSensor",

+client_parameters: dict[str, Any] | None = None,

Review Comment:

Changed it.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] harishkrao commented on a diff in pull request #28950: Sensor for Databricks partition and table changes

harishkrao commented on code in PR #28950:

URL: https://github.com/apache/airflow/pull/28950#discussion_r110234

##

airflow/providers/databricks/sensors/databricks_partition.py:

##

@@ -0,0 +1,157 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+#

+"""This module contains Databricks sensors."""

+

+from __future__ import annotations

+

+from typing import Any, Callable, Sequence

+

+from airflow.exceptions import AirflowException

+from airflow.providers.common.sql.hooks.sql import fetch_one_handler

+from airflow.providers.databricks.hooks.databricks_sql import DatabricksSqlHook

+from airflow.providers.databricks.sensors.databricks_sql import

DatabricksSqlSensor

+from airflow.utils.context import Context

+

+template_fields: Sequence[str] = ("databricks_conn_id", "catalog", "schema",

"table_name", "partition_name")

+

+

+class DatabricksPartitionSensor(DatabricksSqlSensor):

+"""Sensor to detect the existence of partitions in a Delta table.

+

+:param databricks_conn_id: Reference to :ref:`Databricks

+connection id` (templated).

+:param http_path: Optional string specifying HTTP path of Databricks SQL

Endpoint or cluster.

+If not specified, it should be either specified in the Databricks

connection's extra parameters,

+or ``sql_endpoint_name`` must be specified.

+:param sql_endpoint_name: Optional name of Databricks SQL Endpoint.

+If not specified, ``http_path`` must be provided as described above.

+:param session_configuration: An optional dictionary of Spark session

parameters. If not specified,

+it could be specified in the Databricks connection's extra parameters.

+:param http_headers: An optional list of (k, v) pairs that will be set

+as HTTP headers on every request. (templated)

+:param catalog: An optional initial catalog to use. Requires DBR version

9.0+ (templated)

+:param schema: An optional initial schema to use. Requires DBR version

9.0+ (templated)

+:param table_name: Table name to generate the SQL query.

+:param partition_name: Partition to check.

+:param handler: Handler for DbApiHook.run() to return results, defaults to

fetch_one_handler

+:param caller: String passed to name a hook to Databricks, defaults to

"DatabricksPartitionSensor"

+:param client_parameters: Additional parameters internal to Databricks SQL

Connector parameters.

+:param partition_operator: Comparison operator for partitions.

+"""

+

+template_fields: Sequence[str] = (

+"databricks_conn_id",

+"schema",

+"http_headers",

+"catalog",

+"table_name",

+"partition_name",

+)

+

+def __init__(

+self,

+*,

+databricks_conn_id: str = DatabricksSqlHook.default_conn_name,

+http_path: str | None = None,

+sql_endpoint_name: str | None = None,

+session_configuration=None,

+http_headers: list[tuple[str, str]] | None = None,

+catalog: str = "",

+schema: str = "default",

+table_name: str = "",

+partition_name: dict,

+handler: Callable[[Any], Any] = fetch_one_handler,

+caller: str = "DatabricksPartitionSensor",

+client_parameters: dict[str, Any] | None = None,

+partition_operator: str = "=",

+**kwargs,

+) -> None:

+super().__init__(**kwargs)

+self.databricks_conn_id = databricks_conn_id

+self._http_path = http_path

+self._sql_endpoint_name = sql_endpoint_name

+self.session_config = session_configuration

+self.http_headers = http_headers

+self.catalog = catalog

+self.schema = schema

+self.table_name = table_name

+self.partition_name = partition_name

+self.caller = caller

+self.client_parameters = client_parameters or {}

+self.hook_params = kwargs.pop("hook_params", {})

+self.handler = handler

+self.partition_operator = partition_operator

+

+def _get_hook(self) -> DatabricksSqlHook:

+return DatabricksSqlHook(

+self.databricks_conn_id,

+self._http_path,

+self._sql_endpoint_name,

+self.session_config,

+

[GitHub] [airflow] harishkrao commented on a diff in pull request #28950: Sensor for Databricks partition and table changes

harishkrao commented on code in PR #28950:

URL: https://github.com/apache/airflow/pull/28950#discussion_r1102110885

##

airflow/providers/databricks/sensors/databricks_partition.py:

##

@@ -0,0 +1,157 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+#

+"""This module contains Databricks sensors."""

+

+from __future__ import annotations

+

+from typing import Any, Callable, Sequence

+

+from airflow.exceptions import AirflowException

+from airflow.providers.common.sql.hooks.sql import fetch_one_handler

+from airflow.providers.databricks.hooks.databricks_sql import DatabricksSqlHook

+from airflow.providers.databricks.sensors.databricks_sql import

DatabricksSqlSensor

+from airflow.utils.context import Context

+

+template_fields: Sequence[str] = ("databricks_conn_id", "catalog", "schema",

"table_name", "partition_name")

+

+

+class DatabricksPartitionSensor(DatabricksSqlSensor):

+"""Sensor to detect the existence of partitions in a Delta table.

+

+:param databricks_conn_id: Reference to :ref:`Databricks

+connection id` (templated).

+:param http_path: Optional string specifying HTTP path of Databricks SQL

Endpoint or cluster.

+If not specified, it should be either specified in the Databricks

connection's extra parameters,

+or ``sql_endpoint_name`` must be specified.

+:param sql_endpoint_name: Optional name of Databricks SQL Endpoint.

+If not specified, ``http_path`` must be provided as described above.

+:param session_configuration: An optional dictionary of Spark session

parameters. If not specified,

+it could be specified in the Databricks connection's extra parameters.

+:param http_headers: An optional list of (k, v) pairs that will be set

+as HTTP headers on every request. (templated)

+:param catalog: An optional initial catalog to use. Requires DBR version

9.0+ (templated)

+:param schema: An optional initial schema to use. Requires DBR version

9.0+ (templated)

+:param table_name: Table name to generate the SQL query.

+:param partition_name: Partition to check.

+:param handler: Handler for DbApiHook.run() to return results, defaults to

fetch_one_handler

+:param caller: String passed to name a hook to Databricks, defaults to

"DatabricksPartitionSensor"

+:param client_parameters: Additional parameters internal to Databricks SQL

Connector parameters.

+:param partition_operator: Comparison operator for partitions.

+"""

+

+template_fields: Sequence[str] = (

+"databricks_conn_id",

+"schema",

+"http_headers",

+"catalog",

+"table_name",

+"partition_name",

+)

+

+def __init__(

+self,

+*,

+databricks_conn_id: str = DatabricksSqlHook.default_conn_name,

+http_path: str | None = None,

+sql_endpoint_name: str | None = None,

+session_configuration=None,

+http_headers: list[tuple[str, str]] | None = None,

+catalog: str = "",

+schema: str = "default",

+table_name: str = "",

Review Comment:

Yes, changed it.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] Taragolis commented on a diff in pull request #29447: Remove non-public interface usage in EcsRunTaskOperator

Taragolis commented on code in PR #29447:

URL: https://github.com/apache/airflow/pull/29447#discussion_r1102108095

##

tests/providers/amazon/aws/utils/test_identifiers.py:

##

@@ -0,0 +1,74 @@

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+from __future__ import annotations

+

+import random

+import string

+import uuid

+

+import pytest

+

+from airflow.providers.amazon.aws.utils.identifiers import generate_uuid

+from airflow.utils.types import NOTSET

+

+

+class TestGenerateUuid:

+@pytest.fixture(

+autouse=True,

+params=[

+pytest.param(NOTSET, id="default-namespace"),

+pytest.param(uuid.UUID(int=42), id="custom-namespace"),

+],

+)

+def setup_namespace(self, request):

+self.default_namespace = request.param is NOTSET

+self.namespace = uuid.NAMESPACE_OID if self.default_namespace else

request.param

+self.kwargs = {"namespace": self.namespace} if not

self.default_namespace else {}

+

+def test_deterministic(self):

+"""Test that result deterministic and valid UUID object"""

+args = [

+"".join(random.choice(string.ascii_letters) for _ in

range(random.randint(3, 13)))

+for _ in range(100)

+]

Review Comment:

That the basically a problem with any hashing algorithm and testing that.

This case mostly for catch some changes in logic when two run with the same

value could return different result (I hope it never happen).

So static values here would be "foo", "bar", "spam", "egg", "airflow"

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] potiuk commented on a diff in pull request #29449: Breeze StatsD Integration

potiuk commented on code in PR #29449: URL: https://github.com/apache/airflow/pull/29449#discussion_r1102103056 ## scripts/ci/docker-compose/integration-statsd.yml: ## @@ -19,15 +19,37 @@ version: "3.7" services: statsd-exporter: image: apache/airflow:airflow-statsd-exporter-2020.09.05-v0.17.0 +container_name: "breeze-statsd-exporter" ports: - "9125:9125" - "9125:9125/udp" - "29102:9102" + prometheus: +image: prom/prometheus +container_name: "breeze-prometheus" +user: "0" +ports: + - "29090:9090" +volumes: + - ./prometheus.yml:/etc/prometheus/prometheus.yml + - ./prometheus/volume:/prometheus + grafana: image: grafana/grafana:8.2.4 +container_name: "breeze-grafana" +environment: + GF_AUTH_ANONYMOUS_ENABLED: true + GF_AUTH_ANONYMOUS_ORG_NAME: "Main Org." + GF_AUTH_ANONYMOUS_ORG_ROLE: "Admin" Review Comment: We have plenty of admin/admin , airflow/airflow in those files already :). -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] potiuk commented on a diff in pull request #29449: Breeze StatsD Integration

potiuk commented on code in PR #29449: URL: https://github.com/apache/airflow/pull/29449#discussion_r1102102697 ## scripts/ci/docker-compose/integration-statsd.yml: ## @@ -19,15 +19,36 @@ version: "3.7" services: statsd-exporter: image: apache/airflow:airflow-statsd-exporter-2020.09.05-v0.17.0 +container_name: "breeze-statsd-exporter" ports: - "9125:9125" - "9125:9125/udp" - "29102:9102" + prometheus: +image: prom/prometheus +container_name: "breeze-prometheus" +user: "0" +ports: + - "29090:9090" Review Comment: This looks cool. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] ferruzzi commented on a diff in pull request #29449: Breeze StatsD Integration

ferruzzi commented on code in PR #29449: URL: https://github.com/apache/airflow/pull/29449#discussion_r1102103235 ## scripts/ci/docker-compose/integration-statsd.yml: ## @@ -19,15 +19,37 @@ version: "3.7" services: statsd-exporter: image: apache/airflow:airflow-statsd-exporter-2020.09.05-v0.17.0 +container_name: "breeze-statsd-exporter" ports: - "9125:9125" - "9125:9125/udp" - "29102:9102" + prometheus: +image: prom/prometheus +container_name: "breeze-prometheus" +user: "0" +ports: + - "29090:9090" +volumes: + - ./prometheus.yml:/etc/prometheus/prometheus.yml + - ./prometheus/volume:/prometheus + grafana: image: grafana/grafana:8.2.4 +container_name: "breeze-grafana" +environment: + GF_AUTH_ANONYMOUS_ENABLED: true + GF_AUTH_ANONYMOUS_ORG_NAME: "Main Org." + GF_AUTH_ANONYMOUS_ORG_ROLE: "Admin" Review Comment: "Main Org." is the default org and only available option without also configuring the organization/team/user structure which felt excessive in this case. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] ferruzzi commented on a diff in pull request #29449: Breeze StatsD Integration

ferruzzi commented on code in PR #29449: URL: https://github.com/apache/airflow/pull/29449#discussion_r1102099891 ## scripts/ci/docker-compose/integration-statsd.yml: ## @@ -19,15 +19,36 @@ version: "3.7" services: statsd-exporter: image: apache/airflow:airflow-statsd-exporter-2020.09.05-v0.17.0 +container_name: "breeze-statsd-exporter" ports: - "9125:9125" - "9125:9125/udp" - "29102:9102" + prometheus: +image: prom/prometheus +container_name: "breeze-prometheus" +user: "0" +ports: + - "29090:9090" Review Comment: In the end it felt a little better in the BREEZE doc. I dropped it there, but I'll move it if you prefer it in TESTING -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] ferruzzi commented on a diff in pull request #29449: Breeze StatsD Integration

ferruzzi commented on code in PR #29449: URL: https://github.com/apache/airflow/pull/29449#discussion_r1102099122 ## scripts/ci/docker-compose/integration-statsd.yml: ## @@ -19,15 +19,37 @@ version: "3.7" services: statsd-exporter: image: apache/airflow:airflow-statsd-exporter-2020.09.05-v0.17.0 +container_name: "breeze-statsd-exporter" ports: - "9125:9125" - "9125:9125/udp" - "29102:9102" + prometheus: +image: prom/prometheus +container_name: "breeze-prometheus" +user: "0" +ports: + - "29090:9090" +volumes: + - ./prometheus.yml:/etc/prometheus/prometheus.yml + - ./prometheus/volume:/prometheus + grafana: image: grafana/grafana:8.2.4 +container_name: "breeze-grafana" +environment: + GF_AUTH_ANONYMOUS_ENABLED: true + GF_AUTH_ANONYMOUS_ORG_NAME: "Main Org." + GF_AUTH_ANONYMOUS_ORG_ROLE: "Admin" Review Comment: Not a good idea in production, but this configures Grafana to skip the login screen and auto-login as an admin. Seems fine to me since this is a test/dev environment. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] ferruzzi commented on a diff in pull request #29449: Breeze StatsD Integration

ferruzzi commented on code in PR #29449: URL: https://github.com/apache/airflow/pull/29449#discussion_r1102099122 ## scripts/ci/docker-compose/integration-statsd.yml: ## @@ -19,15 +19,37 @@ version: "3.7" services: statsd-exporter: image: apache/airflow:airflow-statsd-exporter-2020.09.05-v0.17.0 +container_name: "breeze-statsd-exporter" ports: - "9125:9125" - "9125:9125/udp" - "29102:9102" + prometheus: +image: prom/prometheus +container_name: "breeze-prometheus" +user: "0" +ports: + - "29090:9090" +volumes: + - ./prometheus.yml:/etc/prometheus/prometheus.yml + - ./prometheus/volume:/prometheus + grafana: image: grafana/grafana:8.2.4 +container_name: "breeze-grafana" +environment: + GF_AUTH_ANONYMOUS_ENABLED: true + GF_AUTH_ANONYMOUS_ORG_NAME: "Main Org." + GF_AUTH_ANONYMOUS_ORG_ROLE: "Admin" Review Comment: Not a good idea in production, but this configures Grafana to auto-login as an admin. Seems fine to me since this is a test/dev environment. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] ferruzzi commented on pull request #29449: Breeze StatsD Integration

ferruzzi commented on PR #29449:

URL: https://github.com/apache/airflow/pull/29449#issuecomment-1424931439

Failing test is

```

tests/models/test_xcom.py .[2023-02-09T21:36:22.304+]

{xcom.py:632} ERROR - Object of type PickleRce is not JSON serializable. If you

are using pickle instead of JSON for XCom, then you need to enable pickle

support for XCom in your airflow config or make sure to decorate your object

with attr.

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] jedcunningham commented on pull request #29136: Dataproc batches

jedcunningham commented on PR #29136: URL: https://github.com/apache/airflow/pull/29136#issuecomment-1424928053 It gets squashed automatically, so you don't need to worry about it. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] o-nikolas commented on issue #29424: Status of testing Providers that were prepared on February 08, 2023

o-nikolas commented on issue #29424: URL: https://github.com/apache/airflow/issues/29424#issuecomment-1424909095 I added code to kill the process while running to test the emr `on_kill` changes, and the cleanup code ran successfully (and I confirmed the resource was deleted in my AWS account): ``` INFO [airflow.task.operators] Job flow with id j-1ADYKTIV4THO5 created INFO [airflow.task.operators] SLEEPING NOW, KILL THE PROCESS TO TEST ON_KILL INFO [airflow.task.operators] TRYING TO KILL MYSELF ERROR [airflow.task] Received SIGTERM. Terminating subprocesses. INFO [airflow.task.operators] Terminating job flow j-1ADYKTIV4THO5 ``` -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] eladkal closed issue #28431: Celery Stalled Running Tasks (SRT)

eladkal closed issue #28431: Celery Stalled Running Tasks (SRT) URL: https://github.com/apache/airflow/issues/28431 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] boring-cyborg[bot] commented on pull request #29452: Add support of a different AWS connection for DynamoDB

boring-cyborg[bot] commented on PR #29452: URL: https://github.com/apache/airflow/pull/29452#issuecomment-1424905565 Congratulations on your first Pull Request and welcome to the Apache Airflow community! If you have any issues or are unsure about any anything please check our Contribution Guide (https://github.com/apache/airflow/blob/main/CONTRIBUTING.rst) Here are some useful points: - Pay attention to the quality of your code (ruff, mypy and type annotations). Our [pre-commits]( https://github.com/apache/airflow/blob/main/STATIC_CODE_CHECKS.rst#prerequisites-for-pre-commit-hooks) will help you with that. - In case of a new feature add useful documentation (in docstrings or in `docs/` directory). Adding a new operator? Check this short [guide](https://github.com/apache/airflow/blob/main/docs/apache-airflow/howto/custom-operator.rst) Consider adding an example DAG that shows how users should use it. - Consider using [Breeze environment](https://github.com/apache/airflow/blob/main/BREEZE.rst) for testing locally, it's a heavy docker but it ships with a working Airflow and a lot of integrations. - Be patient and persistent. It might take some time to get a review or get the final approval from Committers. - Please follow [ASF Code of Conduct](https://www.apache.org/foundation/policies/conduct) for all communication including (but not limited to) comments on Pull Requests, Mailing list and Slack. - Be sure to read the [Airflow Coding style]( https://github.com/apache/airflow/blob/main/CONTRIBUTING.rst#coding-style-and-best-practices). Apache Airflow is a community-driven project and together we are making it better . In case of doubts contact the developers at: Mailing List: d...@airflow.apache.org Slack: https://s.apache.org/airflow-slack -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] 1inuxoid opened a new pull request, #29452: Add support of a different AWS connection for DynamoDB

1inuxoid opened a new pull request, #29452: URL: https://github.com/apache/airflow/pull/29452 This change adds a new optional argument `dynamodb_conn_id` to `DynamoDBToS3Operator` so that a separate AWS connection can be used to scan a DynamoDB table. If not specified, the connection from `aws_conn_id` is used, as before. This makes it useful for cross-account transfers. closes: #29422 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] kevingoss2 commented on pull request #26786: Fix parsing of optional `mode` field in BigQuery Result Schema

kevingoss2 commented on PR #26786: URL: https://github.com/apache/airflow/pull/26786#issuecomment-1424890246 @patricker You are correct. That version of the code does not have the error. The issue is that when I do a pip freeze on the docker container it shows 8.8.0. Will try to see what is going on. Thanks for the response! -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] hussein-awala opened a new pull request, #29451: Fix/render nested fields

hussein-awala opened a new pull request, #29451: URL: https://github.com/apache/airflow/pull/29451 closes: #29432 related: #25588 --- **^ Add meaningful description above** By default, to render nested fields, we need to provide a nested argument `template_fields` contains the list of nested fields names to render. In some of the operator (ex `KubernetesPodOperator`) we override the method `_render_nested_template_fields` to define how we want to render the nested fields. This works fine with the normal operator because they extend the `BaseOperator`, and since they are not sub classes of `MappedOperator`, when it calls this method we call directly `Templater._render_nested_template_fields` instead of calling the method we overrode. To solve this problem, I replaced `self._do_render_template_fields` by `unmapped_task._do_render_template_fields` where `unmapped_task` is an instance of the operator where we override the method. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] ephraimbuddy opened a new pull request, #29450: Rename `db export-cleaned` to `db export-archived`

ephraimbuddy opened a new pull request, #29450: URL: https://github.com/apache/airflow/pull/29450 This is more appropriate because what is exported are the contents of the archived tables. Even though the contents are the cleaned data, they are still archived and we are 'exporting' from the archived tables. This also aligns with the `--drop-archives` option and `db drop-archived` command in terms of naming. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] o-nikolas commented on issue #29422: Multiple AWS connections support in DynamoDBToS3Operator

o-nikolas commented on issue #29422: URL: https://github.com/apache/airflow/issues/29422#issuecomment-1424875593 @eladkal Are you thinking of something like a generic "source" and "destination" connection that would be implemented in a base AWS transfer operator that all concrete transfer operators would use? Would both those connections be based on the aws connection? I suppose we could use extras to plumb anything service specific through. It's an interesting thought. What do you think @Taragolis? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] patricker commented on pull request #26786: Fix parsing of optional `mode` field in BigQuery Result Schema

patricker commented on PR #26786: URL: https://github.com/apache/airflow/pull/26786#issuecomment-1424873696 @kevingoss2 All I can think is that you somehow aren't actually using Google Providers 8.8.0? Looking at the code for the 8.80 Tag, the line numbers/code in the error don't line up with that version at all: https://github.com/apache/airflow/blob/providers-google/8.8.0/airflow/providers/google/cloud/hooks/bigquery.py#L2992 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] bmoon4 commented on issue #22067: DAG Import Errors not show in webUI if DAG is first time upload to airflow is not currect

bmoon4 commented on issue #22067: URL: https://github.com/apache/airflow/issues/22067#issuecomment-1424863269 Hi, im seeing same issue in airflow 2.5.1.. If a new DAG has import errors, error message does not appear in GUI. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@airflow.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[airflow] branch main updated: Add `airflow db drop-archived` command (#29309)

This is an automated email from the ASF dual-hosted git repository.

ephraimanierobi pushed a commit to branch main

in repository https://gitbox.apache.org/repos/asf/airflow.git

The following commit(s) were added to refs/heads/main by this push:

new a76e0fe16e Add `airflow db drop-archived` command (#29309)

a76e0fe16e is described below

commit a76e0fe16ef12749c3fea1b68d82936b238fafbb

Author: Ephraim Anierobi

AuthorDate: Thu Feb 9 22:26:29 2023 +0100

Add `airflow db drop-archived` command (#29309)

* Add `airflow db drop-archived` command

This command drops the archive tables directly

As part of this, the _confirm_drop_archives function was made more

interactive

* fixup! Add `airflow db drop-archived` command

* Fix test and add doc

* Apply suggestions from code review

Co-authored-by: Jed Cunningham

<66968678+jedcunning...@users.noreply.github.com>

---

airflow/cli/cli_parser.py | 6 ++

airflow/cli/commands/db_command.py | 11 +++-

airflow/utils/db_cleanup.py | 58 ++

docs/apache-airflow/howto/usage-cli.rst | 10

tests/cli/commands/test_db_command.py | 28 +

tests/utils/test_db_cleanup.py | 100 +---

6 files changed, 191 insertions(+), 22 deletions(-)

diff --git a/airflow/cli/cli_parser.py b/airflow/cli/cli_parser.py

index 9a82d2f06e..bf2e98d0f3 100644

--- a/airflow/cli/cli_parser.py

+++ b/airflow/cli/cli_parser.py

@@ -1626,6 +1626,12 @@ DB_COMMANDS = (

ARG_DB_TABLES,

),

),

+ActionCommand(

+name="drop-archived",

+help="Drop archived tables created through the db clean command",

+

func=lazy_load_command("airflow.cli.commands.db_command.drop_archived"),

+args=(ARG_DB_TABLES, ARG_YES),

+),

)

CONNECTIONS_COMMANDS = (

ActionCommand(

diff --git a/airflow/cli/commands/db_command.py

b/airflow/cli/commands/db_command.py

index 468aa3d87f..72ee55c86d 100644

--- a/airflow/cli/commands/db_command.py

+++ b/airflow/cli/commands/db_command.py

@@ -27,7 +27,7 @@ from airflow import settings

from airflow.exceptions import AirflowException

from airflow.utils import cli as cli_utils, db

from airflow.utils.db import REVISION_HEADS_MAP

-from airflow.utils.db_cleanup import config_dict, export_cleaned_records,

run_cleanup

+from airflow.utils.db_cleanup import config_dict, drop_archived_tables,

export_cleaned_records, run_cleanup

from airflow.utils.process_utils import execute_interactive

@@ -218,3 +218,12 @@ def export_cleaned(args):

table_names=args.tables,

drop_archives=args.drop_archives,

)

+

+

+@cli_utils.action_cli(check_db=False)

+def drop_archived(args):

+"""Drops archived tables from metadata database."""

+drop_archived_tables(

+table_names=args.tables,

+needs_confirm=not args.yes,

+)

diff --git a/airflow/utils/db_cleanup.py b/airflow/utils/db_cleanup.py

index a9d12c7aa3..41b89931f5 100644

--- a/airflow/utils/db_cleanup.py

+++ b/airflow/utils/db_cleanup.py

@@ -39,6 +39,7 @@ from airflow.cli.simple_table import AirflowConsole

from airflow.models import Base

from airflow.utils import timezone

from airflow.utils.db import reflect_tables

+from airflow.utils.helpers import ask_yesno

from airflow.utils.session import NEW_SESSION, provide_session

logger = logging.getLogger(__file__)

@@ -301,13 +302,21 @@ def _confirm_delete(*, date: DateTime, tables: list[str]):

def _confirm_drop_archives(*, tables: list[str]):

+# if length of tables is greater than 3, show the total count

+if len(tables) > 3:

+text_ = f"{len(tables)} archived tables prefixed with

{ARCHIVE_TABLE_PREFIX}"

+else:

+text_ = f"the following archived tables {tables}"

question = (

-f"You have requested that we drop archived records for tables

{tables!r}.\n"

-f"This is irreversible. Consider backing up the tables first \n"

-f"Enter 'drop archived tables' (without quotes) to proceed."

+f"You have requested that we drop {text_}.\n"