[GitHub] [airflow] kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability

kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability URL: https://github.com/apache/airflow/pull/5743#issuecomment-542431579 @mik-laj Agree with most of your comments, will update it by end of this week. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability

kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability URL: https://github.com/apache/airflow/pull/5743#issuecomment-535914795 I am going to squash this PR. I have created a branch on my fork (https://github.com/kaxil/airflow/tree/dag-serialization-pr-27-sep-2019) if we need to check the history This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability

kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability URL: https://github.com/apache/airflow/pull/5743#issuecomment-532172943 > > * Do we want to let users use old versions of MySQL (and Mariadb that doesn't support JSON with sqlalchemy) or add a note and tell them that if you want to use serialisation you would need to use > > newer version of DB or a DB (Postgres) that supports JSON columns. Or else just keep store_serialised_dags=False. If we want to support old versions or DB that don't support JSON columns, that we will have to handle those exceptions and store the serialised DAG (python dicts) as string and use json.loads and json.dump. > > The one problem with this idea is that the migration is independent of the config setting, so we need a migration that works with either config setting. > > The tests currently run against Mysql 5.7 too :) Actually, that's how I figured too. I didn't knew that we were using that old version of Mysql in test. One of the tests errored and then I used your suggestion: https://github.com/apache/airflow/blob/614a8d0b4fe66f42f97ee397015834d70ce8068b/airflow/migrations/versions/d38e04c12aa2_add_serialized_dag_table.py#L37-L49 And it worked. Although I was expecting that I will have to do some changes in SerializedDagModel too: https://github.com/apache/airflow/blob/614a8d0b4fe66f42f97ee397015834d70ce8068b/airflow/models/serialized_dag.py#L62-L71 but it worked without having it change anything. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability

kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability URL: https://github.com/apache/airflow/pull/5743#issuecomment-530789821 Few things to do: - [ ] Add https://github.com/astronomer/airflow/commit/baf12f626e6d56dfde735faaed71b2c30cb4befb and add tests for it - [ ] Reduce the info we store in Serialized DAGs by removing all the default arguments that are not overridden by users. Eg `owner` in DAG & Task etc. This will help reduce blob size as well as reduce the time spent in `_deserialise` method. - [ ] Agree / dis-agree on using https://pypi.org/project/SQLAlchemy-JSONField/ instead of our code . It also has a nice option of specifying json library as compared to providing that info in the `create_engine.json_serializer` and `create_engine.json_deserializer` parameters in https://docs.sqlalchemy.org/en/13/core/type_basics.html#sqlalchemy.types.JSON cc @coufon @ashb This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability

kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability URL: https://github.com/apache/airflow/pull/5743#issuecomment-530785443 Few things to do: - [ ] Add https://github.com/astronomer/airflow/commit/baf12f626e6d56dfde735faaed71b2c30cb4befb and add tests for it - [ ] Reduce the info we store in Serialized DAGs by removing all the default arguments that are not overridden by users. Eg `owner` in DAG & Task etc. This will help reduce blob size as well as reduce the time spent in `_deserialise` method. - [ ] Agree / dis-agree on using https://pypi.org/project/SQLAlchemy-JSONField/ instead of our code . It also has a nice option of specifying json library as compared to providing that info in the `create_engine.json_serializer` and `create_engine.json_deserializer` parameters in https://docs.sqlalchemy.org/en/13/core/type_basics.html#sqlalchemy.types.JSON cc @coufon @ashb This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability

kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized

DAG in DB for webserver scalability

URL: https://github.com/apache/airflow/pull/5743#issuecomment-529024391

@ashb @coufon With the above commit:

https://github.com/apache/airflow/pull/5743/commits/951cee34e6ecb60bfa8b3f3f946938a43caa5a3a

I have basically enforced JSON column type.

The following code (Ash had suggested this one) that we might want to use to

be a little flexible and let people use old versions of MySQL and use JSONB for

postgres:

```

json_type = sa.JSON

conn = op.get_bind() # pylint: disable=no-member

if conn.dialect.name == "mysql":

# Mysql 5.7+/MariaDB 10.2.3 has JSON support. Rather than checking

for

# versions, check for the function existing.

try:

conn.execute("SELECT JSON_VALID(1)").fetchone()

except sa.exc.OperationalError:

json_type = sa.UnicodeText

elif conn.dialect.name == "postgresql":

json_type = postgresql.JSONB

```

Few related notes that I would like to know your feedback:

* Do we want to let users use old versions of MySQL (and Mariadb that

doesn't support JSON with sqlalchemy) or add a note and tell them that if you

want to use serialisation you would need to use

newer version of DB or a DB (Postgres) that supports JSON columns. Or else

just keep `store_serialised_dags=False`. If we want to support old versions or

DB that don't support JSON columns, that we will have to handle those

exceptions and store the serialised DAG (python dicts) as string and use

json.loads and json.dump.

* JSON vs JSONB? - Opinions ??

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

[GitHub] [airflow] kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability

kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized

DAG in DB for webserver scalability

URL: https://github.com/apache/airflow/pull/5743#issuecomment-528982114

> That last point is interesting - cos at the SQL level we still need to

write it as a string. I wonder if previously we were JSONing things twice (I.e.

in the JSON coloum in the db we were storing single string of the JSON

represention (`"(\"key\”:\”val\"}"` type of thing)?

Not sure. Currently in this PR the data is stored in a **str** column

(`sa.Column('data', sa.Text(), nullable=False)) . We were using `json.dumps` to

convert python dict to str and then store it in this column. So I feel we were

not double-serializing.

Last Night in this commit:

https://github.com/astronomer/airflow/commit/2ab9c9b803a5697410c649337fe0602c3b852654

I changed it to Json columns and removed all json.loads & `json.dumps` code.

I think you were under the assumption that we already changed to json

columns or maybe I might be missing something.

I will update this PR with the json column thing with the migration code you

suggested (was able to do it yet with 1.10.5 and other things).

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

[GitHub] [airflow] kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability

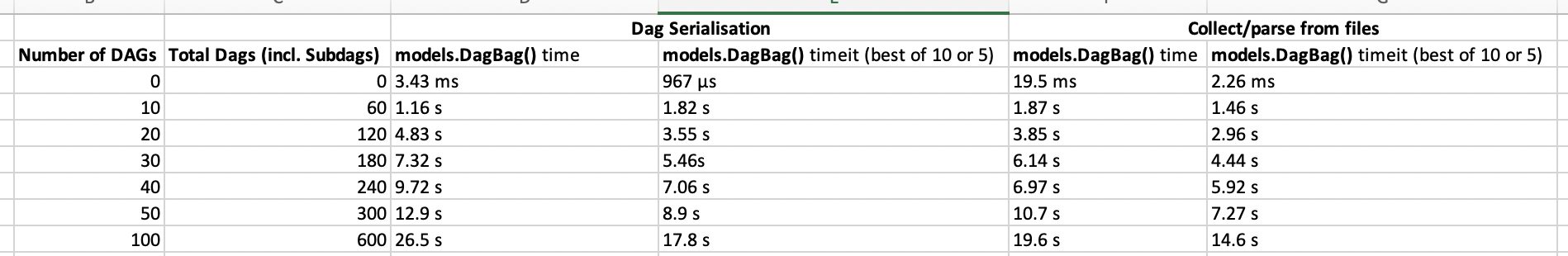

kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability URL: https://github.com/apache/airflow/pull/5743#issuecomment-528697431 Some more testing: For another trial, I am completely removing the need to load JSON from a str by using JSON columns instead of str columns. Just did some benchmarks on my local machine and it is very impressive. Not having to loads json from str and vice-versa seemed to have halved the time needed to de-serialized dags. For 100 Dags, Parsing from file: 19.6 s (14.6 s - Best run after 5 runs) Dag Serialisation with `json.loads`: 26.5 s (17.8 s - Best Run after 5 runs) Dag Serialisation with `ujson`: 25.8 s (17.3 s - Best Run after 5 runs) Dag Serialisation with *Json Columns* (removed converting str to json & vice-versa): 12.1 s (6.98 s ± 169 ms - Best Run after 5 runs) Need to however tests this results on our staging cluster too as it can be very different. Will do it tomorrow. has been a long day fighting with json libraries - ~5AM here :sleeping: Postgres Jsonb might be even quicker ! Will try that out to tomorrow This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability

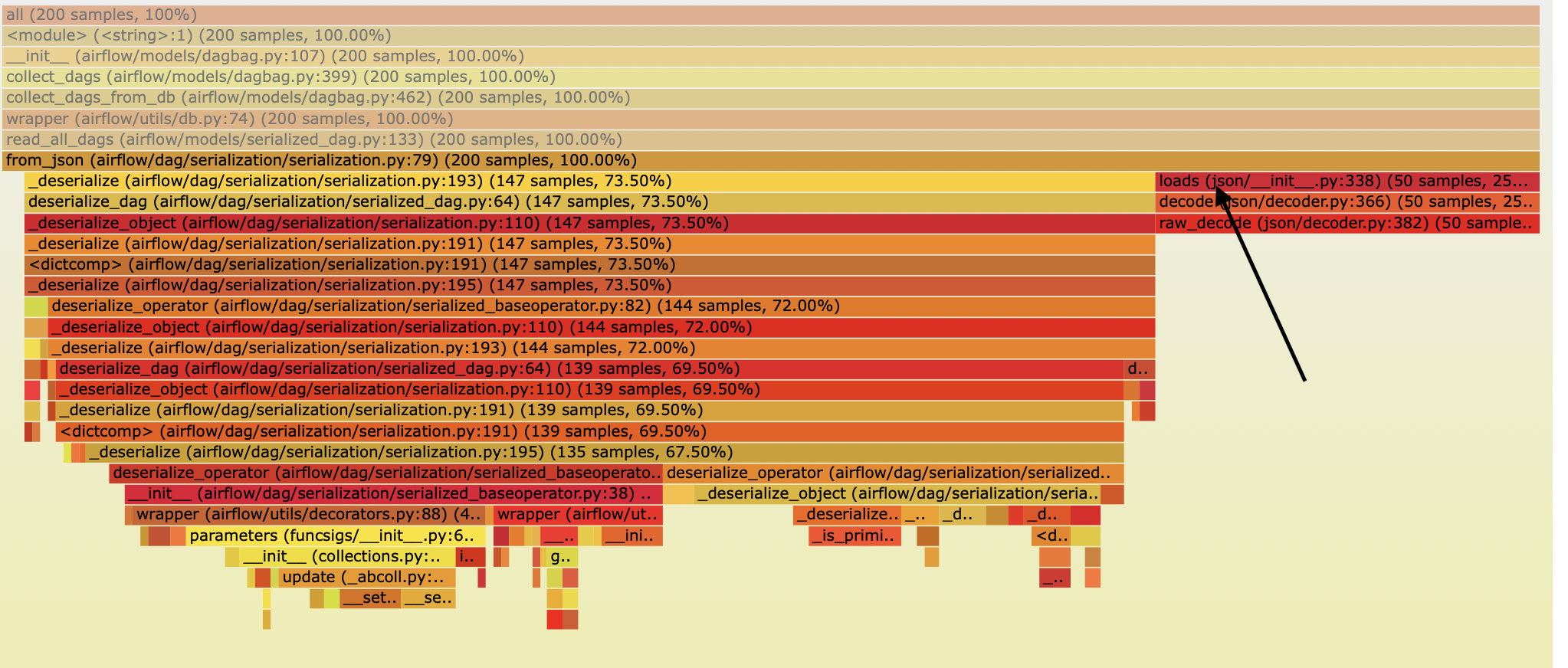

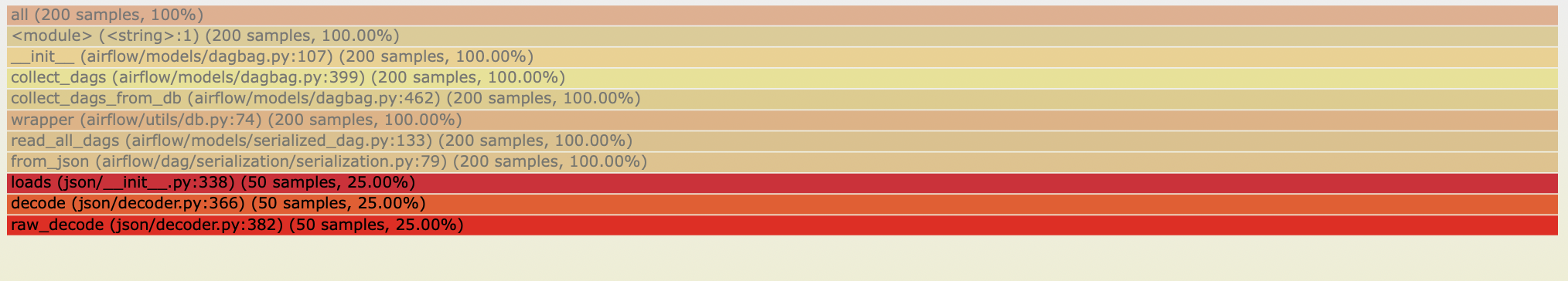

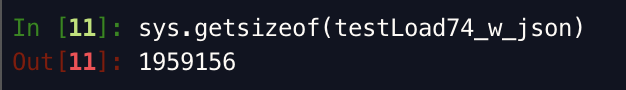

kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability URL: https://github.com/apache/airflow/pull/5743#issuecomment-528654768 Have done some more benchmarking:  As you can fetching DAGs from DB is still taking more time than parsing DAGs from files. The flame graphs below show that a good amount of time is spent in loading the json (`json.loads`) into a Python dictionary.   So we have 2 solutions: (1) Replace `json` package with a faster json-parsing package. Many online benchmark showed that the `json` package is relatively slow. Based on that I carried out benchmarks of few popular json packages for our use-case: ``` import json import yajl import ujson In [21]: %timeit -n100 json.loads(testLoad74_w_json) 100 loops, best of 3: 25.5 ms per loop In [20]: %timeit -n100 yajl.loads(testLoad74_w_json) 100 loops, best of 3: 11.2 ms per loop In [22]: %timeit -n100 ujson.loads(testLoad74_w_json) 100 loops, best of 3: 9.67 ms per loop ``` Based on the above results I will change `json.loads` to `ujson.loads` and bechmark again. Will post the results tomorrow. (2) Reduce the number of fields that we store in DB even further. The current size of 1 of our test Serialized DAG is ~1.95 mb  This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability

kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability URL: https://github.com/apache/airflow/pull/5743#issuecomment-52749 > How about instead of a background thread (I'm wary of using threads in python) could we instead query the last modified time of the serialised dag on each request? > > i.e. when asking for dag X we check if dag X has been updated in the db since we last loaded it? How about this -> https://github.com/kaxil/airflow/commit/d2ec5371ae987ff87f60ee6b0143eb66dd5dc1e7 ? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability

kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability URL: https://github.com/apache/airflow/pull/5743#issuecomment-523847270 > fileloc is not stored in DB for subdag, because subdag does not have a row in DB. subdag's fileloc is different from its parent's. Should we just keep that? Yes, you are right. Let's keep it in the blob then as it is. Good catch. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability

kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized

DAG in DB for webserver scalability

URL: https://github.com/apache/airflow/pull/5743#issuecomment-523416060

> Bug:

>

> ```

> [2019-08-21 12:19:17,625] {app.py:1891} ERROR - Exception on /task [GET]

> Traceback (most recent call last):

> File

"/Users/ash/.virtualenvs/airflow/lib/python3.7/site-packages/flask/app.py",

line 2446, in wsgi_app

> response = self.full_dispatch_request()

> File

"/Users/ash/.virtualenvs/airflow/lib/python3.7/site-packages/flask/app.py",

line 1951, in full_dispatch_request

> rv = self.handle_user_exception(e)

> File

"/Users/ash/.virtualenvs/airflow/lib/python3.7/site-packages/flask/app.py",

line 1820, in handle_user_exception

> reraise(exc_type, exc_value, tb)

> File

"/Users/ash/.virtualenvs/airflow/lib/python3.7/site-packages/flask/_compat.py",

line 39, in reraise

> raise value

> File

"/Users/ash/.virtualenvs/airflow/lib/python3.7/site-packages/flask/app.py",

line 1949, in full_dispatch_request

> rv = self.dispatch_request()

> File

"/Users/ash/.virtualenvs/airflow/lib/python3.7/site-packages/flask/app.py",

line 1935, in dispatch_request

> return self.view_functions[rule.endpoint](**req.view_args)

> File

"/Users/ash/code/python/incubator-airflow/airflow/www/decorators.py", line 121,

in wrapper

> return f(self, *args, **kwargs)

> File

"/Users/ash/.virtualenvs/airflow/lib/python3.7/site-packages/flask_appbuilder/security/decorators.py",

line 101, in wraps

> return f(self, *args, **kwargs)

> File

"/Users/ash/code/python/incubator-airflow/airflow/www/decorators.py", line 56,

in wrapper

> return f(*args, **kwargs)

> File "/Users/ash/code/python/incubator-airflow/airflow/www/views.py",

line 736, in task

> dep_context=dep_context)]

> File "/Users/ash/code/python/incubator-airflow/airflow/www/views.py",

line 734, in

> failed_dep_reasons = [(dep.dep_name, dep.reason) for dep in

> File

"/Users/ash/code/python/incubator-airflow/airflow/models/taskinstance.py", line

626, in get_failed_dep_statuses

> dep_context):

> File

"/Users/ash/code/python/incubator-airflow/airflow/ti_deps/deps/base_ti_dep.py",

line 106, in get_dep_statuses

> yield from self._get_dep_statuses(ti, session, dep_context)

> File

"/Users/ash/code/python/incubator-airflow/airflow/ti_deps/deps/dagrun_id_dep.py",

line 50, in _get_dep_statuses

> if not dagrun.run_id or not match(BackfillJob.ID_PREFIX + '.*',

dagrun.run_id):

> AttributeError: 'NoneType' object has no attribute 'run_id'

> ```

>

> To reproduce:

>

> 1. Navigate to /graph of a disabled dag with no runs

> 2. Click on a task "blob"

> 3. Hit the Task Instance Details button

> 4. boom

I seem to get that when the DAG Serialization is turned off too. So this

might be a bug in master branch itself.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

With regards,

Apache Git Services

[GitHub] [airflow] kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability

kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability URL: https://github.com/apache/airflow/pull/5743#issuecomment-523239054 As far as I can tell, this PR is now working & ready for Code Review. **Notes**: - **Rendered Template** tab will still have to parse Python file as it needs all the details like the execution date and even the data passed by the upstream task using Xcom. - **Code View** will have to open the DAG File & Show it using Pygments. **It does not need to Parse the Python files** - **_ToDo_** : Add documentation around DAG Serialization in a `.rst` file cc @ashb @coufon Can you please review and let me know what you think? If you are happy with the PR, I will draft documentation and create the `.rst` file and send an email to the mailing list for a vote on this. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability

kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability URL: https://github.com/apache/airflow/pull/5743#issuecomment-521358954 > > Pending Issues: > > > > * We still have the issue of `SerializedBaseOperator` being displayed in Graph View. > > This is because graph.html and tree.html use `op.__class__.__name__`. Replaced that by op.task_type to fix it. Found this issue with that fix: We have a `BashOperator` label for each task instead of unique lablels.  This is causes because we are making a dict of unique TaskInstance and not Operator Class in **L1335**: https://github.com/apache/airflow/blob/b814f8dfd9448ee3ceef2722c7f0291d8a680700/airflow/www/views.py#L1333-L1336 Previously it was comparing Classes directly, hence it would remove duplicates. https://github.com/apache/airflow/blob/42bf5cb6782994610c722fb56adfe7b837dfeabb/airflow/www/views.py#L1422-L1436 Fixing this now This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability

kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability URL: https://github.com/apache/airflow/pull/5743#issuecomment-520101071 Pending Issues: - ~Add Timezone support to `serialized_dag` table~ - We still have the issue of `SerializedBaseOperator` being displayed in Graph View.  - Issue displaying SubDags:  This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability

kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability URL: https://github.com/apache/airflow/pull/5743#issuecomment-519723159 Just rebased This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability

kaxil commented on issue #5743: [AIRFLOW-5088][AIP-24] Persisting serialized DAG in DB for webserver scalability URL: https://github.com/apache/airflow/pull/5743#issuecomment-519647789 > hmm. It still failing on rat. This is strange. I am taking a look. Thanks This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services