[GitHub] [hudi] liujinhui1994 commented on issue #2162: [SUPPORT] Deltastreamer transform cannot add fields

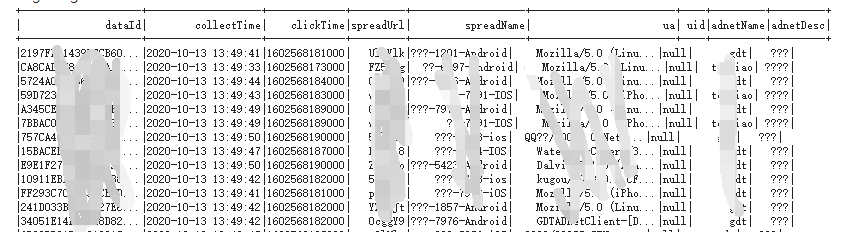

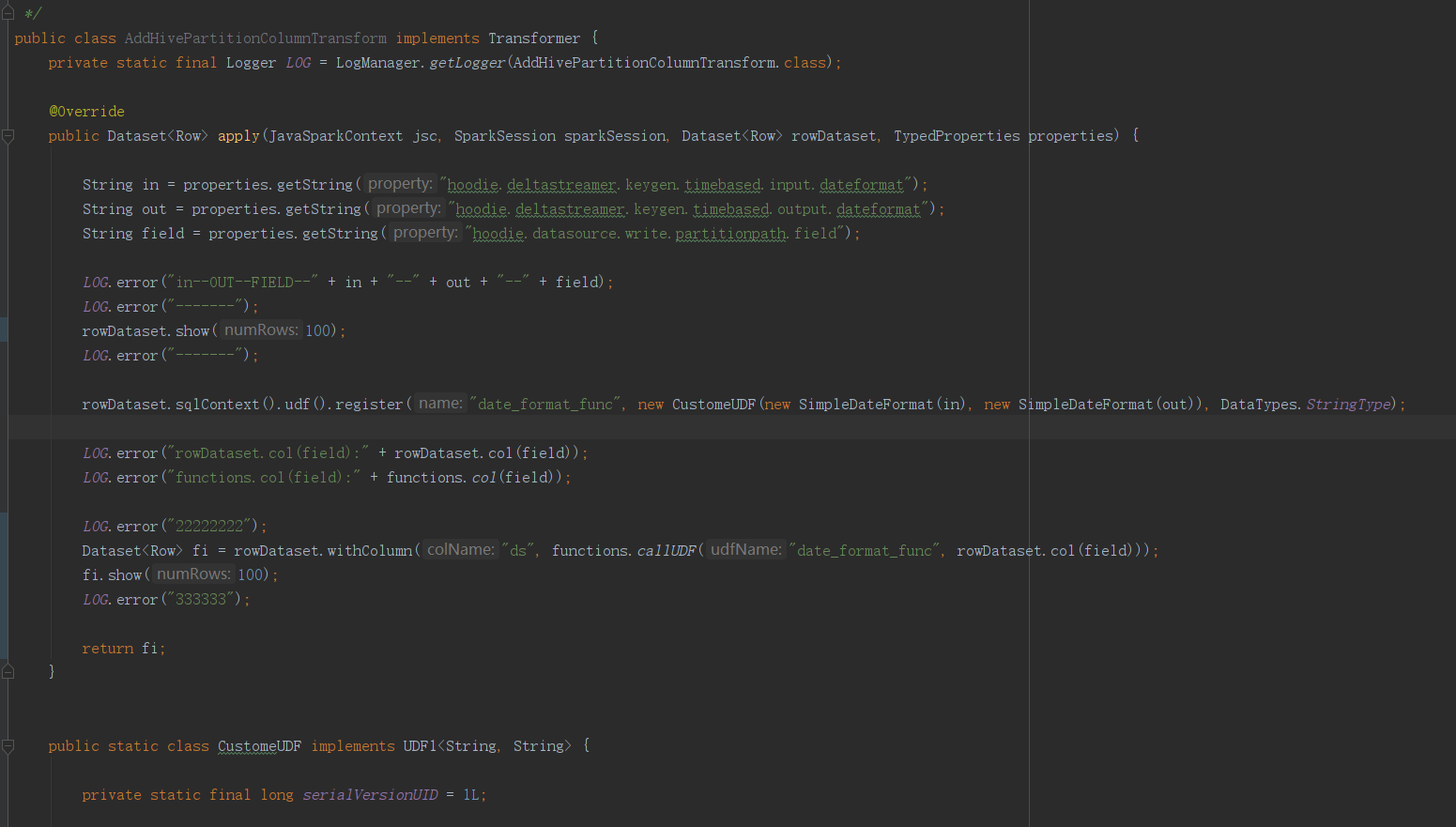

liujinhui1994 commented on issue #2162:
URL: https://github.com/apache/hudi/issues/2162#issuecomment-707525990

This is the data I printed in the transform:
1. This is before adding the ds field
2. This is after adding the ds field

This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the
URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:
us...@infra.apache.org

[GitHub] [hudi] liujinhui1994 removed a comment on issue #2162: [SUPPORT] Deltastreamer transform cannot add fields

liujinhui1994 removed a comment on issue #2162:
URL: https://github.com/apache/hudi/issues/2162#issuecomment-707521050

[GitHub] [hudi] liujinhui1994 commented on issue #2162: [SUPPORT] Deltastreamer transform cannot add fields

liujinhui1994 commented on issue #2162:
URL: https://github.com/apache/hudi/issues/2162#issuecomment-707521050

This is the rowDataset I printed in the transform.

1. Before adding the ds field:

+--------------------+-------------------+-------------+---------+----------------+--------------------+----+---------+---------+----+
|              dataId|        collectTime|    clickTime|spreadUrl|      spreadName|                  ua| uid|adnetName|adnetDesc|  ds|
+--------------------+-------------------+-------------+---------+----------------+--------------------+----+---------+---------+----+
|2197FF31439FFCB60...|2020-10-13 13:49:41|1602568181000|   UloYlk|???-1201-Android|Mozilla/5.0 (Linu...|null|      gdt|      ???|null|
|CA8CAD6F8162B0AAB...|2020-10-13 13:49:33|1602568173000|   FZ5k8g| ??-6797-Android|Mozilla/5.0 (Linu...|null|  toutiao|         |null|
|5724A0019D6D9FDE9...|2020-10-13 13:49:44|1602568184000|   0cggY9|???-7976-Android|Mozilla/5.0 (Linu...|null|      gdt|      ???|null|
|59D7231E1EEE5B7ED...|2020-10-13 13:49:43|1602568183000|   wtMhEd|     ??-7991-IOS|Mozilla/5.0 (iPho...|null|  toutiao|         |null|
|A345CE8B34F17C0BA...|2020-10-13 13:49:49|1602568189000|   0cggY9|???-7976-Android|Mozilla/5.0 (Linu...|null|      gdt|      ???|null|
|7BBAC0DA1ED53D050...|2020-10-13 13:49:49|1602568189000|   wtMhEd|     ??-7991-IOS|Mozilla/5.0 (iPho...|null|  toutiao|         |null|
|757CA415D7252F0DC...|2020-10-13 13:49:50|    160256819|   5whw5s|    ???-5423-ios|QQ??/20002 CFNetw...|null|      gdt|      ???|null|
|15BACEDE444B1E3D2...|2020-10-13 13:49:47|1602568187000|   NJ1Al8|    ???-1854-IOS|WaterMarkCamera/3...|null|      gdt|      ???|null|
|E9E1F2770A5B90724...|2020-10-13 13:49:50|    160256819|   Zdcc0o|???-5423-Android|Dalvik/2.1.0 (Lin...|null|      gdt|      ???|null|
|10911EBF2E25FE5B8...|2020-10-13 13:49:42|1602568182000|   5whw5s|    ???-5423-ios|kugou/10.3.0.4 CF...|null|      gdt|      ???|null|

2. After adding the ds field:

+--------------------+-------------------+-------------+---------+----------------+--------------------+----+---------+---------+----------+
|              dataId|        collectTime|    clickTime|spreadUrl|      spreadName|                  ua| uid|adnetName|adnetDesc|        ds|
+--------------------+-------------------+-------------+---------+----------------+--------------------+----+---------+---------+----------+
|2197FF31439FFCB60...|2020-10-13 13:49:41|1602568181000|   UloYlk|???-1201-Android|Mozilla/5.0 (Linu...|null|      gdt|      ???|2020/10/13|
|CA8CAD6F8162B0AAB...|2020-10-13 13:49:33|1602568173000|   FZ5k8g| ??-6797-Android|Mozilla/5.0 (Linu...|null|  toutiao|         |2020/10/13|
|5724A0019D6D9FDE9...|2020-10-13 13:49:44|1602568184000|   0cggY9|???-7976-Android|Mozilla/5.0 (Linu...|null|      gdt|      ???|2020/10/13|
|59D7231E1EEE5B7ED...|2020-10-13 13:49:43|1602568183000|   wtMhEd|     ??-7991-IOS|Mozilla/5.0 (iPho...|null|  toutiao|         |2020/10/13|
|A345CE8B34F17C0BA...|2020-10-13 13:49:49|1602568189000|   0cggY9|???-7976-Android|Mozilla/5.0 (Linu...|null|      gdt|      ???|2020/10/13|
|7BBAC0DA1ED53D050...|2020-10-13 13:49:49|1602568189000|   wtMhEd|     ??-7991-IOS|Mozilla/5.0 (iPho...|null|  toutiao|         |2020/10/13|

[GitHub] [hudi] liujinhui1994 commented on issue #2162: [SUPPORT] Deltastreamer transform cannot add fields

liujinhui1994 commented on issue #2162:
URL: https://github.com/apache/hudi/issues/2162#issuecomment-707519900

1. I have now changed all fields to "type": ["null", "string"], "default": null
2. printSchema()

root
 |-- dataId: string (nullable = true)
 |-- collectTime: string (nullable = true)
 |-- clickTime: string (nullable = true)
 |-- spreadUrl: string (nullable = true)
 |-- spreadName: string (nullable = true)
 |-- ua: string (nullable = true)
 |-- uid: string (nullable = true)
 |-- adnetName: string (nullable = true)
 |-- adnetDesc: string (nullable = true)
 |-- ds: string (nullable = true)

@bvaradar

[GitHub] [hudi] lw309637554 commented on pull request #2082: [WIP] hudi cluster write path poc

lw309637554 commented on pull request #2082:
URL: https://github.com/apache/hudi/pull/2082#issuecomment-707514223

> > > > @leesf #2048 is landed. is it possible to merge this and address Balaji's comments? (I can help if needed)
> > >
> > > Sure, considering I am a little busy these days, it is wonderful if you @satishkotha would take over the PR and land it. Thanks
> >
> > @leesf @satishkotha what is your process? I am interested to take this and land it. Thanks
>
> @lw309637554 I've already started working on this. Perhaps, you could help with one of the followup tasks of #2048? These are tracked as subtasks here https://issues.apache.org/jira/browse/HUDI-868? Subtasks 2,4 are easy to get started. But, feel free to pick others too?
>
> @vinothchandar Maybe we can close this PR to avoid confusion? I'll open new PR when i'm ready and run some basic tests.

@satishkotha ok, I can take some subtasks in https://issues.apache.org/jira/browse/HUDI-868

[GitHub] [hudi] bvaradar commented on issue #2162: [SUPPORT] Deltastreamer transform cannot add fields

bvaradar commented on issue #2162:

URL: https://github.com/apache/hudi/issues/2162#issuecomment-707502829

I am not able to pinpoint the issue right away, but let me help debug this with you. A couple of things:

1. Can you make the ds field, and any additional fields you are adding, nullable:

   {
     "name": "ds",
     "type": ["null", "string"],
     "default": null
   }

2. In the transformer implementation, after you have constructed the dataset "fi", can you call printSchema() and add the output here?

It would make things much easier if you could construct some form of test that I can use to reproduce the issue and debug it here.
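
The nullable-field suggestion above can be checked mechanically before the schema is handed to DeltaStreamer. The following is only a minimal sketch, not part of Hudi or Avro: the helper names and the example record (`ClickEvent`) are hypothetical, and it assumes the traditional Avro rule that a union field's default must match the first branch of the union, which is why "null" is listed first.

```python
import json


def is_nullable_with_null_default(field: dict) -> bool:
    """True if the field is a union whose first branch is "null" and whose default is null."""
    field_type = field.get("type")
    return (
        isinstance(field_type, list)
        and len(field_type) > 0
        and field_type[0] == "null"
        and "default" in field
        and field["default"] is None
    )


def non_nullable_fields(schema_json: str) -> list:
    """Return the names of record fields that do not follow the nullable pattern."""
    schema = json.loads(schema_json)
    return [
        f["name"]
        for f in schema.get("fields", [])
        if not is_nullable_with_null_default(f)
    ]


# Hypothetical target schema, used only for illustration.
EXAMPLE_SCHEMA = """
{
  "type": "record",
  "name": "ClickEvent",
  "fields": [
    {"name": "dataId", "type": "string"},
    {"name": "ds", "type": ["null", "string"], "default": null}
  ]
}
"""

if __name__ == "__main__":
    # Lists the fields that would still need the nullable union and default.
    print(non_nullable_fields(EXAMPLE_SCHEMA))
```

Running a check like this against the target schema file shows at a glance which fields still lack the nullable union.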

[GitHub] [hudi] satishkotha commented on pull request #2082: [WIP] hudi cluster write path poc

satishkotha commented on pull request #2082:
URL: https://github.com/apache/hudi/pull/2082#issuecomment-707476925

> > > @leesf #2048 is landed. is it possible to merge this and address Balaji's comments? (I can help if needed)
> >
> > Sure, considering I am a little busy these days, it is wonderful if you @satishkotha would take over the PR and land it. Thanks
>
> @leesf @satishkotha what is your process? I am interested to take this and land it. Thanks

@lw309637554 I've already started working on this. Perhaps, you could help with one of the followup tasks of #2048? These are tracked as subtasks here https://issues.apache.org/jira/browse/HUDI-868? Subtasks 2,4 are easy to get started. But, feel free to pick others too?

@vinothchandar Maybe we can close this PR to avoid confusion? I'll open new PR when i'm ready and run some basic tests.

[jira] [Resolved] (HUDI-1304) test compaction workflow with replacecommit action

[ https://issues.apache.org/jira/browse/HUDI-1304?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] satish resolved HUDI-1304. -- Resolution: Fixed > test compaction workflow with replacecommit action > -- > > Key: HUDI-1304 > URL: https://issues.apache.org/jira/browse/HUDI-1304 > Project: Apache Hudi > Issue Type: Sub-task >Reporter: satish >Assignee: satish >Priority: Major > Labels: pull-request-available > Fix For: 0.7.0 > > > Replacecommit hides certain file groups from FileSystemView. Make sure > pending/inflight compactions work as expected when file groups are hidden. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (HUDI-1260) Reader changes to support insert overwrite

[ https://issues.apache.org/jira/browse/HUDI-1260?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] satish updated HUDI-1260: - Status: In Progress (was: Open) > Reader changes to support insert overwrite > - > > Key: HUDI-1260 > URL: https://issues.apache.org/jira/browse/HUDI-1260 > Project: Apache Hudi > Issue Type: Sub-task >Reporter: satish >Assignee: satish >Priority: Major > Fix For: 0.7.0 > > > Same as HUDI-1072, but creating subtask for insert overwrite -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Resolved] (HUDI-1260) Reader changes to support insert overwrite

[ https://issues.apache.org/jira/browse/HUDI-1260?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] satish resolved HUDI-1260. -- Resolution: Fixed > Reader changes to support insert overwrite > - > > Key: HUDI-1260 > URL: https://issues.apache.org/jira/browse/HUDI-1260 > Project: Apache Hudi > Issue Type: Sub-task >Reporter: satish >Assignee: satish >Priority: Major > Fix For: 0.7.0 > > > Same as HUDI-1072, but creating subtask for insert overwrite -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (HUDI-1260) Reader changes to support insert overwrite

[ https://issues.apache.org/jira/browse/HUDI-1260?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] satish updated HUDI-1260: - Status: Open (was: New) > Reader changes to support insert overwrite > - > > Key: HUDI-1260 > URL: https://issues.apache.org/jira/browse/HUDI-1260 > Project: Apache Hudi > Issue Type: Sub-task >Reporter: satish >Assignee: satish >Priority: Major > Fix For: 0.7.0 > > > Same as HUDI-1072, but creating subtask for insert overwrite -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (HUDI-1304) test compaction workflow with replacecommit action

[ https://issues.apache.org/jira/browse/HUDI-1304?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] satish updated HUDI-1304: - Status: Open (was: New) > test compaction workflow with replacecommit action > -- > > Key: HUDI-1304 > URL: https://issues.apache.org/jira/browse/HUDI-1304 > Project: Apache Hudi > Issue Type: Sub-task >Reporter: satish >Assignee: satish >Priority: Major > Labels: pull-request-available > Fix For: 0.7.0 > > > Replacecommit hides certain file groups from FileSystemView. Make sure > pending/inflight compactions work as expected when file groups are hidden. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [hudi] bvaradar commented on issue #2165: [SUPPORT] Exception while Querying Hive _rt table

bvaradar commented on issue #2165:
URL: https://github.com/apache/hudi/issues/2165#issuecomment-707475775

@tandonraghav : Yes, you need to shade the jar containing the custom record payload. Here is some context: http://hudi.apache.org/releases.html#release-highlights-1

Look for the section starting with...
```
With 0.5.1, hudi-hadoop-mr-bundle which is used by query engines such as presto and hive includes shaded avro package to support hudi real time queries through these
```

More Context: https://issues.apache.org/jira/browse/HUDI-519

[jira] [Updated] (HUDI-1304) test compaction workflow with replacecommit action

[ https://issues.apache.org/jira/browse/HUDI-1304?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] satish updated HUDI-1304: - Status: In Progress (was: Open) > test compaction workflow with replacecommit action > -- > > Key: HUDI-1304 > URL: https://issues.apache.org/jira/browse/HUDI-1304 > Project: Apache Hudi > Issue Type: Sub-task >Reporter: satish >Assignee: satish >Priority: Major > Labels: pull-request-available > Fix For: 0.7.0 > > > Replacecommit hides certain file groups from FileSystemView. Make sure > pending/inflight compactions work as expected when file groups are hidden. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [hudi] lw309637554 edited a comment on pull request #2127: [HUDI-284] add more test for UpdateSchemaEvolution

lw309637554 edited a comment on pull request #2127:
URL: https://github.com/apache/hudi/pull/2127#issuecomment-706813674

> lagging a bit. Will take a pass today and circle back.

@pratyakshsharma thanks, please help to review

[jira] [Updated] (HUDI-781) Re-design test utilities

[ https://issues.apache.org/jira/browse/HUDI-781?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu updated HUDI-781: Fix Version/s: 0.6.1 > Re-design test utilities > > > Key: HUDI-781 > URL: https://issues.apache.org/jira/browse/HUDI-781 > Project: Apache Hudi > Issue Type: Test > Components: Testing >Reporter: Raymond Xu >Assignee: Raymond Xu >Priority: Major > Labels: pull-request-available > Fix For: 0.6.1 > > > Test utility classes are to be re-designed with considerations like > * Use more mockings > * Reduce spark context setup > * Improve/clean up data generator > An RFC would be preferred for illustrating the design work. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (HUDI-995) Organize test utils methods and classes

[ https://issues.apache.org/jira/browse/HUDI-995?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu updated HUDI-995: Fix Version/s: (was: 0.6.1) > Organize test utils methods and classes > --- > > Key: HUDI-995 > URL: https://issues.apache.org/jira/browse/HUDI-995 > Project: Apache Hudi > Issue Type: Sub-task > Components: Testing >Reporter: Raymond Xu >Assignee: Raymond Xu >Priority: Major > Labels: pull-request-available > > * Move test utils classes to hudi-common where appropriate, e.g. > TestRawTripPayload, HoodieDataGenerator > * Organize test utils into separate utils classes like `TransformUtils` for > transformations, `SchemaUtils` for schema loading, etc > * Migrate HoodieTestUtils APIs to new utility class HoodieTestTable or > HoodieWriteableTestTable -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Resolved] (HUDI-779) [Umbrella] Unit tests improvements

[ https://issues.apache.org/jira/browse/HUDI-779?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu resolved HUDI-779. - Assignee: Raymond Xu Resolution: Done > [Umbrella] Unit tests improvements > -- > > Key: HUDI-779 > URL: https://issues.apache.org/jira/browse/HUDI-779 > Project: Apache Hudi > Issue Type: Test > Components: Testing >Reporter: Raymond Xu >Assignee: Raymond Xu >Priority: Major > > Long-running track ticket for tasks regarding unit test improvements. > > Email thread > [https://lists.apache.org/thread.html/recd284114d9bfe5f82cdd6a5a3ead1c5e1545cf0f44c74a6bb4c813b%40%3Cdev.hudi.apache.org%3E] -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Resolved] (HUDI-781) Re-design test utilities

[ https://issues.apache.org/jira/browse/HUDI-781?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu resolved HUDI-781. - Resolution: Implemented > Re-design test utilities > > > Key: HUDI-781 > URL: https://issues.apache.org/jira/browse/HUDI-781 > Project: Apache Hudi > Issue Type: Test > Components: Testing >Reporter: Raymond Xu >Assignee: Raymond Xu >Priority: Major > Labels: pull-request-available > > Test utility classes are to be re-designed with considerations like > * Use more mockings > * Reduce spark context setup > * Improve/clean up data generator > An RFC would be preferred for illustrating the design work. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Closed] (HUDI-781) Re-design test utilities

[ https://issues.apache.org/jira/browse/HUDI-781?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu closed HUDI-781. --- > Re-design test utilities > > > Key: HUDI-781 > URL: https://issues.apache.org/jira/browse/HUDI-781 > Project: Apache Hudi > Issue Type: Test > Components: Testing >Reporter: Raymond Xu >Assignee: Raymond Xu >Priority: Major > Labels: pull-request-available > > Test utility classes are to be re-designed with considerations like > * Use more mockings > * Reduce spark context setup > * Improve/clean up data generator > An RFC would be preferred for illustrating the design work. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (HUDI-779) [Umbrella] Unit tests improvements

[ https://issues.apache.org/jira/browse/HUDI-779?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu updated HUDI-779: Status: Open (was: New) > [Umbrella] Unit tests improvements > -- > > Key: HUDI-779 > URL: https://issues.apache.org/jira/browse/HUDI-779 > Project: Apache Hudi > Issue Type: Test > Components: Testing >Reporter: Raymond Xu >Priority: Major > > Long-running track ticket for tasks regarding unit test improvements. > > Email thread > [https://lists.apache.org/thread.html/recd284114d9bfe5f82cdd6a5a3ead1c5e1545cf0f44c74a6bb4c813b%40%3Cdev.hudi.apache.org%3E] -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Closed] (HUDI-779) [Umbrella] Unit tests improvements

[ https://issues.apache.org/jira/browse/HUDI-779?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu closed HUDI-779. --- > [Umbrella] Unit tests improvements > -- > > Key: HUDI-779 > URL: https://issues.apache.org/jira/browse/HUDI-779 > Project: Apache Hudi > Issue Type: Test > Components: Testing >Reporter: Raymond Xu >Assignee: Raymond Xu >Priority: Major > > Long-running track ticket for tasks regarding unit test improvements. > > Email thread > [https://lists.apache.org/thread.html/recd284114d9bfe5f82cdd6a5a3ead1c5e1545cf0f44c74a6bb4c813b%40%3Cdev.hudi.apache.org%3E] -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (HUDI-1010) Fix the memory leak for hudi-client unit tests

[ https://issues.apache.org/jira/browse/HUDI-1010?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu updated HUDI-1010: - Parent: (was: HUDI-781) Issue Type: Bug (was: Sub-task) > Fix the memory leak for hudi-client unit tests > -- > > Key: HUDI-1010 > URL: https://issues.apache.org/jira/browse/HUDI-1010 > Project: Apache Hudi > Issue Type: Bug > Components: Testing >Reporter: Yanjia Gary Li >Assignee: Nishith Agarwal >Priority: Major > Labels: help-wanted > Fix For: 0.6.1 > > Attachments: image-2020-06-08-09-22-08-864.png > > > The hudi-client unit tests have a memory leak, possibly because some resources are > not properly released during cleanup. Memory consumption > accumulated over time and led to the Travis CI failure. > Using the IntelliJ memory analysis tool, we found that the major leaks were > HoodieLogFormatWriter, HoodieWrapperFileSystem, HoodieLogFileReader, etc. > Related PR: [https://github.com/apache/hudi/pull/1707] > [https://github.com/apache/hudi/pull/1697] > !image-2020-06-08-09-22-08-864.png! -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (HUDI-994) Identify functional tests that are convertible to unit tests with mocks

[ https://issues.apache.org/jira/browse/HUDI-994?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu updated HUDI-994: Status: Open (was: New) > Identify functional tests that are convertible to unit tests with mocks > --- > > Key: HUDI-994 > URL: https://issues.apache.org/jira/browse/HUDI-994 > Project: Apache Hudi > Issue Type: Sub-task > Components: Testing >Reporter: Raymond Xu >Assignee: Prashant Wason >Priority: Major > Labels: pull-request-available > > * Identify convertible functional tests and re-implement by using mock > * remove/merge duplicate/overlapping functional tests if possible -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Resolved] (HUDI-994) Identify functional tests that are convertible to unit tests with mocks

[ https://issues.apache.org/jira/browse/HUDI-994?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu resolved HUDI-994. - Resolution: Done > Identify functional tests that are convertible to unit tests with mocks > --- > > Key: HUDI-994 > URL: https://issues.apache.org/jira/browse/HUDI-994 > Project: Apache Hudi > Issue Type: Sub-task > Components: Testing >Reporter: Raymond Xu >Assignee: Raymond Xu >Priority: Major > Labels: pull-request-available > > * Identify convertible functional tests and re-implement by using mock > * remove/merge duplicate/overlapping functional tests if possible -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Assigned] (HUDI-994) Identify functional tests that are convertible to unit tests with mocks

[ https://issues.apache.org/jira/browse/HUDI-994?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu reassigned HUDI-994: --- Assignee: Raymond Xu (was: Prashant Wason) > Identify functional tests that are convertible to unit tests with mocks > --- > > Key: HUDI-994 > URL: https://issues.apache.org/jira/browse/HUDI-994 > Project: Apache Hudi > Issue Type: Sub-task > Components: Testing >Reporter: Raymond Xu >Assignee: Raymond Xu >Priority: Major > Labels: pull-request-available > > * Identify convertible functional tests and re-implement by using mock > * remove/merge duplicate/overlapping functional tests if possible -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Closed] (HUDI-994) Identify functional tests that are convertible to unit tests with mocks

[ https://issues.apache.org/jira/browse/HUDI-994?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu closed HUDI-994. --- > Identify functional tests that are convertible to unit tests with mocks > --- > > Key: HUDI-994 > URL: https://issues.apache.org/jira/browse/HUDI-994 > Project: Apache Hudi > Issue Type: Sub-task > Components: Testing >Reporter: Raymond Xu >Assignee: Raymond Xu >Priority: Major > Labels: pull-request-available > > * Identify convertible functional tests and re-implement by using mock > * remove/merge duplicate/overlapping functional tests if possible -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Resolved] (HUDI-996) Use shared spark session provider

[ https://issues.apache.org/jira/browse/HUDI-996?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu resolved HUDI-996. - Resolution: Done Closing this as the functional test utilities are implemented. The future work is to decide which classes are to be migrated to the functional test suite. > Use shared spark session provider > -- > > Key: HUDI-996 > URL: https://issues.apache.org/jira/browse/HUDI-996 > Project: Apache Hudi > Issue Type: Sub-task > Components: Testing >Reporter: Raymond Xu >Priority: Major > Labels: pull-request-available > > * implement a shared spark session provider to be used for test suites, set up > and tear down fewer spark sessions and other mini servers > * add functional tests with similar setup logic to test suites, to make use > of shared spark session -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Closed] (HUDI-996) Use shared spark session provider

[ https://issues.apache.org/jira/browse/HUDI-996?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu closed HUDI-996. --- Assignee: Raymond Xu > Use shared spark session provider > -- > > Key: HUDI-996 > URL: https://issues.apache.org/jira/browse/HUDI-996 > Project: Apache Hudi > Issue Type: Sub-task > Components: Testing >Reporter: Raymond Xu >Assignee: Raymond Xu >Priority: Major > Labels: pull-request-available > > * implement a shared spark session provider to be used for test suites, set up > and tear down fewer spark sessions and other mini servers > * add functional tests with similar setup logic to test suites, to make use > of shared spark session -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Closed] (HUDI-896) Parallelize CI testing to reduce CI wait time

[ https://issues.apache.org/jira/browse/HUDI-896?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu closed HUDI-896. --- > Parallelize CI testing to reduce CI wait time > - > > Key: HUDI-896 > URL: https://issues.apache.org/jira/browse/HUDI-896 > Project: Apache Hudi > Issue Type: Sub-task > Components: Testing >Reporter: Raymond Xu >Assignee: Raymond Xu >Priority: Major > Labels: pull-request-available > Fix For: 0.6.0 > > > - > > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Closed] (HUDI-995) Organize test utils methods and classes

[ https://issues.apache.org/jira/browse/HUDI-995?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu closed HUDI-995. --- > Organize test utils methods and classes > --- > > Key: HUDI-995 > URL: https://issues.apache.org/jira/browse/HUDI-995 > Project: Apache Hudi > Issue Type: Sub-task > Components: Testing >Reporter: Raymond Xu >Assignee: Raymond Xu >Priority: Major > Labels: pull-request-available > Fix For: 0.6.1 > > > * Move test utils classes to hudi-common where appropriate, e.g. > TestRawTripPayload, HoodieDataGenerator > * Organize test utils into separate utils classes like `TransformUtils` for > transformations, `SchemaUtils` for schema loading, etc > * Migrate HoodieTestUtils APIs to new utility class HoodieTestTable or > HoodieWriteableTestTable -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Resolved] (HUDI-995) Organize test utils methods and classes

[ https://issues.apache.org/jira/browse/HUDI-995?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Raymond Xu resolved HUDI-995. - Fix Version/s: 0.6.1 Resolution: Done > Organize test utils methods and classes > --- > > Key: HUDI-995 > URL: https://issues.apache.org/jira/browse/HUDI-995 > Project: Apache Hudi > Issue Type: Sub-task > Components: Testing >Reporter: Raymond Xu >Assignee: Raymond Xu >Priority: Major > Labels: pull-request-available > Fix For: 0.6.1 > > > * Move test utils classes to hudi-common where appropriate, e.g. > TestRawTripPayload, HoodieDataGenerator > * Organize test utils into separate utils classes like `TransformUtils` for > transformations, `SchemaUtils` for schema loading, etc > * Migrate HoodieTestUtils APIs to new utility class HoodieTestTable or > HoodieWriteableTestTable -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Assigned] (HUDI-1323) Fence metadata reads using latest data timeline commit times!

[ https://issues.apache.org/jira/browse/HUDI-1323?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Vinoth Chandar reassigned HUDI-1323: Assignee: Vinoth Chandar > Fence metadata reads using latest data timeline commit times! > - > > Key: HUDI-1323 > URL: https://issues.apache.org/jira/browse/HUDI-1323 > Project: Apache Hudi > Issue Type: Sub-task >Reporter: Prashant Wason >Assignee: Vinoth Chandar >Priority: Major > > Problem D: We need to fence metadata reads using latest data timeline commit > times! and limit to only handing out files that belong to a committed instant > on the data timeline. Otherwise, metadata table can hand uncommitted files to > cleaner etc and cause us to delete legit latest file slices i.e data loss -- This message was sent by Atlassian Jira (v8.3.4#803005)
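
The fencing idea described in HUDI-1323 above can be illustrated with a small sketch. This is not Hudi's implementation: the `FileEntry` type, the `fence_files` function, and the example instant times are all hypothetical, and the sketch assumes each file listed by the metadata table can be associated with the instant that wrote it. Files are handed out only if that instant is completed on the data timeline, which also implies they are not newer than the latest completed commit.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set


@dataclass(frozen=True)
class FileEntry:
    """Hypothetical record of a file listed by the metadata table."""
    path: str
    instant_time: str  # instant (commit time) that wrote the file


def fence_files(metadata_files: Iterable[FileEntry],
                completed_instants: Set[str]) -> List[FileEntry]:
    """Keep only files whose writing instant is completed on the data timeline.

    Membership in the completed set also guarantees the file is not newer
    than the latest completed instant, so uncommitted files are never
    handed to consumers such as the cleaner.
    """
    return [f for f in metadata_files if f.instant_time in completed_instants]


if __name__ == "__main__":
    listed = [
        FileEntry("part-a.parquet", "20201013134941"),
        FileEntry("part-b.parquet", "20201013134950"),  # written by an inflight instant
        FileEntry("part-c.parquet", "20201013135000"),
    ]
    committed = {"20201013134941", "20201013135000"}
    for entry in fence_files(listed, committed):
        print(entry.path)
```

The design choice here is to filter by set membership rather than by a single cut-off time, since an inflight instant can be older than the latest completed one.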

[jira] [Updated] (HUDI-1323) Fence metadata reads using latest data timeline commit times!

[ https://issues.apache.org/jira/browse/HUDI-1323?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Vinoth Chandar updated HUDI-1323: - Status: Open (was: New) > Fence metadata reads using latest data timeline commit times! > - > > Key: HUDI-1323 > URL: https://issues.apache.org/jira/browse/HUDI-1323 > Project: Apache Hudi > Issue Type: Sub-task >Reporter: Prashant Wason >Assignee: Vinoth Chandar >Priority: Major > > Problem D: We need to fence metadata reads using latest data timeline commit > times! and limit to only handing out files that belong to a committed instant > on the data timeline. Otherwise, metadata table can hand uncommitted files to > cleaner etc and cause us to delete legit latest file slices i.e data loss -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (HUDI-1323) Fence metadata reads using latest data timeline commit times!

[ https://issues.apache.org/jira/browse/HUDI-1323?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Vinoth Chandar updated HUDI-1323: - Status: In Progress (was: Open) > Fence metadata reads using latest data timeline commit times! > - > > Key: HUDI-1323 > URL: https://issues.apache.org/jira/browse/HUDI-1323 > Project: Apache Hudi > Issue Type: Sub-task >Reporter: Prashant Wason >Assignee: Vinoth Chandar >Priority: Major > > Problem D: We need to fence metadata reads using latest data timeline commit > times! and limit to only handing out files that belong to a committed instant > on the data timeline. Otherwise, metadata table can hand uncommitted files to > cleaner etc and cause us to delete legit latest file slices i.e data loss -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (HUDI-1312) Query side use of Metadata Table

[ https://issues.apache.org/jira/browse/HUDI-1312?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17212745#comment-17212745 ] Vinoth Chandar commented on HUDI-1312: -- [~uditme] are you interested in taking this up. this is a good ramp up task > Query side use of Metadata Table > > > Key: HUDI-1312 > URL: https://issues.apache.org/jira/browse/HUDI-1312 > Project: Apache Hudi > Issue Type: Sub-task >Reporter: Prashant Wason >Priority: Major > > Add support for opening Metadata Table on the query side and using it for > eliminating file listings. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [hudi] vinothchandar merged pull request #2150: [HUDI-1304] Add unit test for testing compaction on replaced file groups

vinothchandar merged pull request #2150: URL: https://github.com/apache/hudi/pull/2150 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[hudi] branch master updated (c5e10d6 -> 0d40734)

This is an automated email from the ASF dual-hosted git repository. vinoth pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/hudi.git. from c5e10d6 [HUDI-995] Migrate HoodieTestUtils APIs to HoodieTestTable (#2167) add 0d40734 [HUDI-1304] Add unit test for testing compaction on replaced file groups (#2150) No new revisions were added by this update. Summary of changes: .../table/action/compact/CompactionTestBase.java | 26 +++ .../table/action/compact/TestAsyncCompaction.java | 52 ++ 2 files changed, 78 insertions(+)

[GitHub] [hudi] codecov-io edited a comment on pull request #2150: [HUDI-1304] Add unit test for testing compaction on replaced file groups

codecov-io edited a comment on pull request #2150: URL: https://github.com/apache/hudi/pull/2150#issuecomment-704505827

# [Codecov](https://codecov.io/gh/apache/hudi/pull/2150?src=pr&el=h1) Report

> Merging [#2150](https://codecov.io/gh/apache/hudi/pull/2150?src=pr&el=desc) into [master](https://codecov.io/gh/apache/hudi/commit/fdae388626b8d97acc01191aa0e7075c36a41132?el=desc) will **increase** coverage by `1.83%`.
> The diff coverage is `n/a`.

```diff
@@             Coverage Diff              @@
##             master    #2150      +/-   ##
+ Coverage     51.79%   53.63%   +1.83%
- Complexity     2532     2848     +316
  Files           318      359      +41
  Lines         14417    16545    +2128
  Branches       1460     1780     +320
+ Hits           7468     8874    +1406
- Misses         6354     6912     +558
- Partials        595      759     +164
```

| Flag | Coverage Δ | Complexity Δ | |
|---|---|---|---|
| #hudicli | `38.37% <ø> (ø)` | `193.00 <ø> (ø)` | |
| #hudiclient | `100.00% <ø> (ø)` | `0.00 <ø> (ø)` | |
| #hudicommon | `54.75% <ø> (+<0.01%)` | `1795.00 <ø> (+2.00)` | |
| #hudihadoopmr | `33.05% <ø> (ø)` | `181.00 <ø> (ø)` | |
| #hudispark | `65.48% <ø> (?)` | `304.00 <ø> (?)` | |
| #huditimelineservice | `62.29% <ø> (ø)` | `50.00 <ø> (ø)` | |
| #hudiutilities | `70.07% <ø> (+0.60%)` | `325.00 <ø> (+10.00)` | |

Flags with carried forward coverage won't be shown. [Click here](https://docs.codecov.io/docs/carryforward-flags#carryforward-flags-in-the-pull-request-comment) to find out more.

| [Impacted Files](https://codecov.io/gh/apache/hudi/pull/2150?src=pr&el=tree) | Coverage Δ | Complexity Δ | | |---|---|---|---| | [.../main/java/org/apache/hudi/common/util/Option.java](https://codecov.io/gh/apache/hudi/pull/2150/diff?src=pr&el=tree#diff-aHVkaS1jb21tb24vc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvY29tbW9uL3V0aWwvT3B0aW9uLmphdmE=) | `66.66% <0.00%> (-3.61%)` | `23.00% <0.00%> (+1.00%)` | :arrow_down: | | [...udi/utilities/sources/helpers/DFSPathSelector.java](https://codecov.io/gh/apache/hudi/pull/2150/diff?src=pr&el=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NvdXJjZXMvaGVscGVycy9ERlNQYXRoU2VsZWN0b3IuamF2YQ==) | `82.05% <0.00%> (-2.16%)` | `12.00% <0.00%> (ø%)` | | | [.../org/apache/hudi/common/model/HoodieTableType.java](https://codecov.io/gh/apache/hudi/pull/2150/diff?src=pr&el=tree#diff-aHVkaS1jb21tb24vc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvY29tbW9uL21vZGVsL0hvb2RpZVRhYmxlVHlwZS5qYXZh) | `100.00% <0.00%> (ø)` | `1.00% <0.00%> (ø%)` | | | [...rg/apache/hudi/common/util/SerializationUtils.java](https://codecov.io/gh/apache/hudi/pull/2150/diff?src=pr&el=tree#diff-aHVkaS1jb21tb24vc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvY29tbW9uL3V0aWwvU2VyaWFsaXphdGlvblV0aWxzLmphdmE=) | `88.00% <0.00%> (ø)` | `3.00% <0.00%> (ø%)` | | | [.../apache/hudi/common/table/TableSchemaResolver.java](https://codecov.io/gh/apache/hudi/pull/2150/diff?src=pr&el=tree#diff-aHVkaS1jb21tb24vc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvY29tbW9uL3RhYmxlL1RhYmxlU2NoZW1hUmVzb2x2ZXIuamF2YQ==) | `0.00% <0.00%> (ø)` | `0.00% <0.00%> (ø%)` | | | [...pache/hudi/io/storage/HoodieFileReaderFactory.java](https://codecov.io/gh/apache/hudi/pull/2150/diff?src=pr&el=tree#diff-aHVkaS1jb21tb24vc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvaW8vc3RvcmFnZS9Ib29kaWVGaWxlUmVhZGVyRmFjdG9yeS5qYXZh) | `50.00% <0.00%> (ø)` | `3.00% <0.00%> (ø%)` | | | 
[.../hudi/utilities/schema/SchemaRegistryProvider.java](https://codecov.io/gh/apache/hudi/pull/2150/diff?src=pr&el=tree#diff-aHVkaS11dGlsaXRpZXMvc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvdXRpbGl0aWVzL3NjaGVtYS9TY2hlbWFSZWdpc3RyeVByb3ZpZGVyLmphdmE=) | `0.00% <0.00%> (ø)` | `0.00% <0.00%> (ø%)` | | | [...i/common/model/OverwriteWithLatestAvroPayload.java](https://codecov.io/gh/apache/hudi/pull/2150/diff?src=pr&el=tree#diff-aHVkaS1jb21tb24vc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvY29tbW9uL21vZGVsL092ZXJ3cml0ZVdpdGhMYXRlc3RBdnJvUGF5bG9hZC5qYXZh) | `64.70% <0.00%> (ø)` | `10.00% <0.00%> (ø%)` | | | [...del/OverwriteNonDefaultsWithLatestAvroPayload.java](https://codecov.io/gh/apache/hudi/pull/2150/diff?src=pr&el=tree#diff-aHVkaS1jb21tb24vc3JjL21haW4vamF2YS9vcmcvYXBhY2hlL2h1ZGkvY29tbW9uL21vZGVsL092ZXJ3cml0ZU5vbkRlZmF1bHRzV2l0aExhdGVzdEF2cm9QYXlsb2FkLmphdmE=) | `78.94% <0.00%> (ø)` | `5.00% <0.00%> (ø%)` | | | [...s/deltastreamer/HoodieMultiTableDeltaStreamer.java](https://codecov.io/gh/apache/hudi/pull/2150/diff?src=pr&el=tree#diff-aHVkaS11dGlsaXRpZXMvc3

[GitHub] [hudi] satishkotha commented on a change in pull request #2150: [HUDI-1304] Add unit test for testing compaction on replaced file groups

satishkotha commented on a change in pull request #2150:

URL: https://github.com/apache/hudi/pull/2150#discussion_r503565568

##

File path:

hudi-client/hudi-spark-client/src/test/java/org/apache/hudi/table/action/compact/TestAsyncCompaction.java

##

@@ -332,4 +336,51 @@ public void testInterleavedCompaction() throws Exception {

executeCompaction(compactionInstantTime, client, hoodieTable, cfg,

numRecs, true);

}

}

+

+ @Test

+ public void testCompactionOnReplacedFiles() throws Exception {

Review comment:

@bvaradar Added comment. PTAL

[GitHub] [hudi] umehrot2 commented on pull request #2147: [HUDI-1289] Remove shading pattern for hbase dependencies in hudi-spark-bundle

umehrot2 commented on pull request #2147: URL: https://github.com/apache/hudi/pull/2147#issuecomment-707334174

@rmpifer A couple of points:

- As @vinothchandar mentioned, it would be worth exploring whether just removing the dependency relocation, while still continuing to shade, avoids the issues with the HBase index without breaking the bootstrap code.

- If we do go ahead with removing relocation for HBase, we may also want to remove the relocation in `hudi-hadoop-mr-bundle` and `hudi-presto-bundle`, to avoid any other issues this might cause. One such issue we ran into with bootstrap was that HBase was writing the KeyValue comparator class name in the HFile footer. At read time it would expect to see the exact same class. However, this was resolved by creating our own comparator class for HBase. https://github.com/apache/hudi/blob/master/hudi-common/src/main/java/org/apache/hudi/common/bootstrap/index/HFileBootstrapIndex.java#L584

- Let's fix the commit message. We are not removing shading, but avoiding relocation as part of the shading process.

[jira] [Updated] (HUDI-1320) Move static invocations of HoodieMetadata.xxx to HoodieTable

[ https://issues.apache.org/jira/browse/HUDI-1320?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Prashant Wason updated HUDI-1320: - Status: Open (was: New) > Move static invocations of HoodieMetadata.xxx to HoodieTable > > > Key: HUDI-1320 > URL: https://issues.apache.org/jira/browse/HUDI-1320 > Project: Apache Hudi > Issue Type: Sub-task >Reporter: Prashant Wason >Assignee: Prashant Wason >Priority: Major > > Also take care to guard against multi invocations -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (HUDI-1322) Refactor into Reader & Writer side for Metadata

[ https://issues.apache.org/jira/browse/HUDI-1322?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Prashant Wason updated HUDI-1322: - Status: Open (was: New) > Refactor into Reader & Writer side for Metadata > --- > > Key: HUDI-1322 > URL: https://issues.apache.org/jira/browse/HUDI-1322 > Project: Apache Hudi > Issue Type: Sub-task >Reporter: Prashant Wason >Assignee: Prashant Wason >Priority: Major > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (HUDI-1320) Move static invocations of HoodieMetadata.xxx to HoodieTable

[ https://issues.apache.org/jira/browse/HUDI-1320?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Prashant Wason updated HUDI-1320: - Status: In Progress (was: Open) > Move static invocations of HoodieMetadata.xxx to HoodieTable > > > Key: HUDI-1320 > URL: https://issues.apache.org/jira/browse/HUDI-1320 > Project: Apache Hudi > Issue Type: Sub-task >Reporter: Prashant Wason >Assignee: Prashant Wason >Priority: Major > > Also take care to guard against multi invocations -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Assigned] (HUDI-1322) Refactor into Reader & Writer side for Metadata

[ https://issues.apache.org/jira/browse/HUDI-1322?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Prashant Wason reassigned HUDI-1322: Assignee: Prashant Wason > Refactor into Reader & Writer side for Metadata > --- > > Key: HUDI-1322 > URL: https://issues.apache.org/jira/browse/HUDI-1322 > Project: Apache Hudi > Issue Type: Sub-task >Reporter: Prashant Wason >Assignee: Prashant Wason >Priority: Major > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Assigned] (HUDI-1320) Move static invocations of HoodieMetadata.xxx to HoodieTable

[ https://issues.apache.org/jira/browse/HUDI-1320?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Prashant Wason reassigned HUDI-1320: Assignee: Prashant Wason > Move static invocations of HoodieMetadata.xxx to HoodieTable > > > Key: HUDI-1320 > URL: https://issues.apache.org/jira/browse/HUDI-1320 > Project: Apache Hudi > Issue Type: Sub-task >Reporter: Prashant Wason >Assignee: Prashant Wason >Priority: Major > > Also take care to guard against multi invocations -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [hudi] tandonraghav commented on issue #2165: [SUPPORT] Exception while Querying Hive _rt table

tandonraghav commented on issue #2165: URL: https://github.com/apache/hudi/issues/2165#issuecomment-707231701 @bvaradar I was trying this on Presto with Glue on AWS EMR. presto-bundle is present inside /plugins/hive-hadoop2/. But my question is why this error occurs: `Caused by: java.lang.ClassCastException: org.apache.hudi.org.apache.avro.generic.GenericData$Record cannot be cast to org.apache.avro.generic.GenericRecord` Why is there a difference in the Generic Record class (HudiRecordPayload.class) between spark_bundle and presto/hudi-hadoop-mr-bundle? I am also aware that only specific versions of Presto support snapshot queries. But as the stack trace says, it is not able to cast properly.

[GitHub] [hudi] bvaradar commented on issue #2165: [SUPPORT] Exception while Querying Hive _rt table

bvaradar commented on issue #2165: URL: https://github.com/apache/hudi/issues/2165#issuecomment-707226897 @tandonraghav: It was not clear from your original description of the issue whether you are making a Spark or a Presto query. Looking at the previous comments, it looks like you are making Presto queries? Have you included presto-bundle, which is the only bundle you should have in the runtime for Presto? @bhasudha: Are there any other things that we need to be aware of?

[jira] [Created] (HUDI-1342) hudi-dla-sync support modify table properties

liwei created HUDI-1342: --- Summary: hudi-dla-sync support modify table properties Key: HUDI-1342 URL: https://issues.apache.org/jira/browse/HUDI-1342 Project: Apache Hudi Issue Type: Improvement Components: Hive Integration Reporter: liwei Assignee: liwei hudi-dla-sync support modify table properties -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [hudi] lw309637554 commented on a change in pull request #2082: [WIP] hudi cluster write path poc

lw309637554 commented on a change in pull request #2082:

URL: https://github.com/apache/hudi/pull/2082#discussion_r503376335

##

File path:

hudi-common/src/main/java/org/apache/hudi/common/table/view/HoodieTableFileSystemView.java

##

@@ -110,6 +114,11 @@ protected void resetViewState() {

return fileIdToPendingCompaction;

}

+ protected Map>

createFileIdToPendingClusteringMap(

Review comment:

make sense

[GitHub] [hudi] lw309637554 commented on a change in pull request #2082: [WIP] hudi cluster write path poc

lw309637554 commented on a change in pull request #2082:

URL: https://github.com/apache/hudi/pull/2082#discussion_r503376042

##

File path: hudi-common/src/main/avro/HoodieClusteringPlan.avsc

##

@@ -0,0 +1,71 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+{

Review comment:

will do

##

File path:

hudi-common/src/main/java/org/apache/hudi/common/table/timeline/HoodieActiveTimeline.java

##

@@ -65,7 +65,8 @@

COMMIT_EXTENSION, INFLIGHT_COMMIT_EXTENSION, REQUESTED_COMMIT_EXTENSION,

DELTA_COMMIT_EXTENSION,

INFLIGHT_DELTA_COMMIT_EXTENSION, REQUESTED_DELTA_COMMIT_EXTENSION,

SAVEPOINT_EXTENSION,

INFLIGHT_SAVEPOINT_EXTENSION, CLEAN_EXTENSION,

REQUESTED_CLEAN_EXTENSION, INFLIGHT_CLEAN_EXTENSION,

- INFLIGHT_COMPACTION_EXTENSION, REQUESTED_COMPACTION_EXTENSION,

INFLIGHT_RESTORE_EXTENSION, RESTORE_EXTENSION));

+ INFLIGHT_COMPACTION_EXTENSION, REQUESTED_COMPACTION_EXTENSION,

INFLIGHT_RESTORE_EXTENSION, RESTORE_EXTENSION,

Review comment:

make sense

##

File path:

hudi-common/src/main/java/org/apache/hudi/common/table/timeline/HoodieInstant.java

##

@@ -159,6 +163,14 @@ public String getFileName() {

} else {

return HoodieTimeline.makeCommitFileName(timestamp);

}

+} else if (HoodieTimeline.CLUSTERING_ACTION.equals(action)) {

Review comment:

make sense

[GitHub] [hudi] lw309637554 commented on a change in pull request #2082: [WIP] hudi cluster write path poc

lw309637554 commented on a change in pull request #2082:

URL: https://github.com/apache/hudi/pull/2082#discussion_r503375885

##

File path:

hudi-client/src/main/java/org/apache/hudi/table/action/clustering/updates/UpdateStrategy.java

##

@@ -0,0 +1,26 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.table.action.clustering.updates;

+

+import org.apache.hudi.common.table.HoodieTableMetaClient;

+import org.apache.hudi.table.WorkloadProfile;

+

+public interface UpdateStrategy {

Review comment:

ok

[GitHub] [hudi] lw309637554 commented on a change in pull request #2082: [WIP] hudi cluster write path poc

lw309637554 commented on a change in pull request #2082:

URL: https://github.com/apache/hudi/pull/2082#discussion_r503375808

##

File path:

hudi-client/src/main/java/org/apache/hudi/table/action/clustering/updates/RejectUpdateStrategy.java

##

@@ -0,0 +1,77 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.table.action.clustering.updates;

+

+import org.apache.hudi.avro.model.HoodieClusteringOperation;

+import org.apache.hudi.avro.model.HoodieClusteringPlan;

+import org.apache.hudi.common.fs.FSUtils;

+import org.apache.hudi.common.table.HoodieTableMetaClient;

+import org.apache.hudi.common.table.timeline.HoodieInstant;

+import org.apache.hudi.common.util.ClusteringUtils;

+import org.apache.hudi.common.util.collection.Pair;

+import org.apache.hudi.exception.HoodieUpdateRejectException;

+import org.apache.hudi.table.WorkloadProfile;

+import org.apache.hudi.table.WorkloadStat;

+import org.apache.log4j.LogManager;

+import org.apache.log4j.Logger;

+

+import java.util.List;

+import java.util.Map;

+import java.util.Set;

+import java.util.stream.Collectors;

+

+public class RejectUpdateStrategy implements UpdateStrategy {

+ private static final Logger LOG =

LogManager.getLogger(RejectUpdateStrategy.class);

+

+ @Override

+ public void apply(HoodieTableMetaClient client, WorkloadProfile

workloadProfile) {

+List> plans =

ClusteringUtils.getAllPendingClusteringPlans(client);

+if (plans == null || plans.size() == 0) {

+ return;

+}

+List> partitionFileIdPairs = plans.stream().map(entry

-> {

+ HoodieClusteringPlan plan = entry.getValue();

+ List operations = plan.getOperations();

+ List> partitionFileIdPair =

+ operations.stream()

+ .flatMap(operation ->

operation.getBaseFilePaths().stream().map(filePath ->

Pair.of(operation.getPartitionPath(), FSUtils.getFileId(filePath

+ .collect(Collectors.toList());

+ return partitionFileIdPair;

+}).collect(Collectors.toList()).stream().flatMap(list ->

list.stream()).collect(Collectors.toList());

+

+if (partitionFileIdPairs.size() == 0) {

+ return;

+}

+

+Set> partitionStatEntries =

workloadProfile.getPartitionPathStatMap().entrySet();

+for (Map.Entry partitionStat : partitionStatEntries)

{

+ for (Map.Entry> updateLocEntry :

+ partitionStat.getValue().getUpdateLocationToCount().entrySet()) {

+String partitionPath = partitionStat.getKey();

+String fileId = updateLocEntry.getKey();

+if (partitionFileIdPairs.contains(Pair.of(partitionPath, fileId))) {

+ LOG.error("Not allowed to update the clustering files, partition: "

+ partitionPath + ", fileID " + fileId + ", please use other strategy.");

Review comment:

yes, first step will not support update when clustering
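
The rule confirmed in this reply, that an update touching a file group with a pending clustering plan is refused, can be illustrated with a small self-contained sketch. It does not use Hudi classes: `PendingClusteringGuard`, `Update`, `key` and `checkUpdates` are hypothetical names, and `IllegalStateException` merely stands in for whatever exception the PR ends up throwing. As a deliberate simplification, the sketch looks pairs up in a hash set rather than scanning a list.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical sketch; none of these names are Hudi APIs.
final class PendingClusteringGuard {

  // One incoming update, identified by the partition and file group it targets.
  static final class Update {
    final String partitionPath;
    final String fileId;

    Update(String partitionPath, String fileId) {
      this.partitionPath = partitionPath;
      this.fileId = fileId;
    }
  }

  // Combine partition path and file id into a single lookup key.
  static String key(String partitionPath, String fileId) {
    return partitionPath + "|" + fileId;
  }

  // Throw if any update targets a file group that is part of a pending clustering plan.
  static void checkUpdates(Set<String> pendingClusteringKeys, List<Update> updates) {
    for (Update update : updates) {
      if (pendingClusteringKeys.contains(key(update.partitionPath, update.fileId))) {
        throw new IllegalStateException("Not allowed to update a file group under clustering, partition: "
            + update.partitionPath + ", fileId: " + update.fileId);
      }
    }
  }

  public static void main(String[] args) {
    Set<String> pending = new HashSet<>(Arrays.asList(key("2020/10/13", "file-1")));
    // file-2 is not being clustered, so this call returns normally.
    checkUpdates(pending, Arrays.asList(new Update("2020/10/13", "file-2")));
    try {
      checkUpdates(pending, Arrays.asList(new Update("2020/10/13", "file-1")));
    } catch (IllegalStateException e) {
      System.out.println("rejected: " + e.getMessage());
    }
  }
}
```

Failing the whole write on the first conflicting update matches the "first step" described in the reply; a later strategy could instead route such updates elsewhere, which is outside what this thread specifies.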

[GitHub] [hudi] lw309637554 commented on a change in pull request #2082: [WIP] hudi cluster write path poc

lw309637554 commented on a change in pull request #2082:

URL: https://github.com/apache/hudi/pull/2082#discussion_r503375182

##

File path:

hudi-client/src/main/java/org/apache/hudi/table/action/clustering/strategy/BaseFileSizeBasedClusteringStrategy.java

##

@@ -0,0 +1,73 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.table.action.clustering.strategy;

+

+import org.apache.hudi.avro.model.HoodieClusteringOperation;

+import org.apache.hudi.avro.model.HoodieClusteringPlan;

+import org.apache.hudi.common.model.HoodieBaseFile;

+import org.apache.hudi.config.HoodieWriteConfig;

+import

org.apache.hudi.table.action.compact.strategy.BoundedIOCompactionStrategy;

+import org.apache.hudi.table.action.compact.strategy.CompactionStrategy;

+

+import java.util.Comparator;

+import java.util.List;

+import java.util.Map;

+import java.util.stream.Collectors;

+

+/**

+ * LogFileSizeBasedClusteringStrategy orders the compactions based on the

total log files size and limits the

+ * clusterings within a configured IO bound.

+ *

+ * @see BoundedIOCompactionStrategy

+ * @see CompactionStrategy

+ */

+public class BaseFileSizeBasedClusteringStrategy extends

BoundedIOClusteringStrategy

+implements Comparator {

+

+ private static final String TOTAL_BASE_FILE_SIZE = "TOTAL_BASE_FILE_SIZE";

+

+ @Override

+ public Map captureMetrics(HoodieWriteConfig config,

List dataFile,

+ String partitionPath) {

+Map metrics = super.captureMetrics(config, dataFile,

partitionPath);

+

+// Total size of all the data files

+Long totalBaseFileSize =

dataFile.stream().map(HoodieBaseFile::getFileSize).filter(size -> size >= 0)

+.reduce(Long::sum).orElse(0L);

+// save the metrics needed during the order

+metrics.put(TOTAL_BASE_FILE_SIZE, totalBaseFileSize.doubleValue());

+return metrics;

+ }

+

+ @Override

+ public List orderAndFilter(HoodieWriteConfig

writeConfig,

+ List operations, List

pendingCompactionPlans) {

+// Order the operations based on the reverse size of the logs and limit

them by the IO

+return super.orderAndFilter(writeConfig,

operations.stream().sorted(this).collect(Collectors.toList()),

+pendingCompactionPlans);

+ }

+

+ @Override

+ public int compare(HoodieClusteringOperation op1, HoodieClusteringOperation

op2) {

Review comment:

ok
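
For readers skimming the quoted diff, the metric and ordering it sets up can be sketched in isolation. This is a simplified illustration, not the PR's code: `BaseFileSizeOrdering`, `totalBaseFileSize` and `largestFirst` are hypothetical names, file sizes are plain `Long` values rather than `HoodieBaseFile` objects, and the largest-first order is an assumption, since the body of `compare` is not shown in the quoted hunk.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical sketch; none of these names are Hudi APIs.
final class BaseFileSizeOrdering {

  // Sum the base file sizes, skipping negative values as the quoted diff does.
  static long totalBaseFileSize(List<Long> fileSizes) {
    return fileSizes.stream()
        .filter(size -> size >= 0)
        .mapToLong(Long::longValue)
        .sum();
  }

  // Order candidate groups so that the group with the largest total size comes first
  // (assumed direction; the comparator in the PR is not visible here).
  static List<List<Long>> largestFirst(List<List<Long>> groups) {
    return groups.stream()
        .sorted((a, b) -> Long.compare(totalBaseFileSize(b), totalBaseFileSize(a)))
        .collect(Collectors.toList());
  }

  public static void main(String[] args) {
    List<Long> small = Arrays.asList(10L, -1L, 5L);
    List<Long> large = Arrays.asList(100L);
    System.out.println(totalBaseFileSize(small));
    System.out.println(largestFirst(Arrays.asList(small, large)));
  }
}
```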

##

File path:

hudi-client/src/main/java/org/apache/hudi/table/action/clustering/strategy/BoundedIOClusteringStrategy.java

##

@@ -0,0 +1,54 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.table.action.clustering.strategy;

+

+import org.apache.hudi.avro.model.HoodieClusteringOperation;

+import org.apache.hudi.avro.model.HoodieClusteringPlan;

+import org.apache.hudi.config.HoodieWriteConfig;

+

+import java.util.ArrayList;

+import java.util.List;

+

+/**

+ * CompactionStrategy which looks at total IO to be done for the compaction (read + write) and limits the list of

Review comment:

ok
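
As a rough illustration of the idea described in the two Javadoc comments quoted in this message (order operations by total base file size, then cap the selection by an IO budget), here is a minimal Java sketch. `SketchOperation`, `BoundedIoSketch`, the estimate of IO as twice the input size (read + write), and the rule of stopping at the first operation that would exceed the budget are all assumptions made for illustration; none of them is taken from the PR or from Hudi's API.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Hypothetical stand-in for a clustering operation; not a Hudi class.
final class SketchOperation {
  final String fileId;
  final long totalBaseFileSizeBytes;

  SketchOperation(String fileId, long totalBaseFileSizeBytes) {
    this.fileId = fileId;
    this.totalBaseFileSizeBytes = totalBaseFileSizeBytes;
  }
}

final class BoundedIoSketch {
  // Sorts largest-first, then keeps operations while the estimated IO fits the budget.
  static List<SketchOperation> orderAndFilter(List<SketchOperation> operations, long ioBudgetBytes) {
    List<SketchOperation> sorted = new ArrayList<>(operations);
    sorted.sort(Comparator.comparingLong((SketchOperation op) -> op.totalBaseFileSizeBytes).reversed());

    List<SketchOperation> selected = new ArrayList<>();
    long usedBytes = 0L;
    for (SketchOperation op : sorted) {
      // Assumption: read + write is roughly twice the input size.
      long estimatedIo = 2L * op.totalBaseFileSizeBytes;
      if (usedBytes + estimatedIo > ioBudgetBytes) {
        break; // stop at the first operation that would exceed the budget
      }
      usedBytes += estimatedIo;
      selected.add(op);
    }
    return selected;
  }
}
```

With operations of 100, 300 and 200 bytes and a budget of 1000 bytes, this sketch keeps the 300-byte and 200-byte operations and drops the smallest one. Whether the real strategy should skip an oversized operation instead of stopping is a design choice the sketch does not settle.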


[GitHub] [hudi] lw309637554 commented on a change in pull request #2082: [WIP] hudi cluster write path poc

lw309637554 commented on a change in pull request #2082:

URL: https://github.com/apache/hudi/pull/2082#discussion_r503375018

##

File path:

hudi-client/src/main/java/org/apache/hudi/table/action/clustering/HoodieCopyOnWriteTableCluster.java

##

@@ -0,0 +1,243 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.table.action.clustering;

+

+import org.apache.avro.Schema;

+import org.apache.avro.generic.GenericRecord;

+import org.apache.hadoop.fs.Path;

+import org.apache.hudi.avro.HoodieAvroUtils;

+import org.apache.hudi.avro.model.HoodieClusteringOperation;

+import org.apache.hudi.avro.model.HoodieClusteringPlan;

+import org.apache.hudi.client.WriteStatus;

+import org.apache.hudi.common.fs.FSUtils;

+import org.apache.hudi.common.model.ClusteringOperation;

+import org.apache.hudi.common.model.FileSlice;

+import org.apache.hudi.common.model.HoodieBaseFile;

+import org.apache.hudi.common.model.HoodieFileGroupId;

+import org.apache.hudi.common.model.HoodieKey;

+import org.apache.hudi.common.model.HoodieRecord;

+import org.apache.hudi.common.model.HoodieRecordPayload;

+import org.apache.hudi.common.model.HoodieWriteStat.RuntimeStats;

+import org.apache.hudi.common.model.OverwriteWithLatestAvroPayload;

+import org.apache.hudi.common.table.HoodieTableMetaClient;

+import org.apache.hudi.common.table.view.SyncableFileSystemView;

+import org.apache.hudi.common.table.view.TableFileSystemView.SliceView;

+import org.apache.hudi.common.util.ClusteringUtils;

+import org.apache.hudi.common.util.Option;

+import org.apache.hudi.common.util.ValidationUtils;

+import org.apache.hudi.common.util.collection.Pair;

+import org.apache.hudi.config.HoodieWriteConfig;

+import org.apache.hudi.exception.HoodieException;

+import org.apache.hudi.io.storage.HoodieFileReader;

+import org.apache.hudi.io.storage.HoodieFileReaderFactory;

+import org.apache.hudi.table.HoodieCopyOnWriteTable;

+import org.apache.hudi.table.HoodieTable;

+import org.apache.log4j.LogManager;

+import org.apache.log4j.Logger;

+import org.apache.spark.api.java.JavaRDD;

+import org.apache.spark.api.java.JavaSparkContext;

+import org.apache.spark.api.java.function.Function;

+import org.apache.spark.util.AccumulatorV2;

+import org.apache.spark.util.LongAccumulator;

+

+import java.io.IOException;

+import java.util.Collection;

+import java.util.Iterator;

+import java.util.List;

+import java.util.Map;

+import java.util.Set;

+import java.util.stream.Collectors;

+import java.util.stream.Stream;

+import java.util.stream.StreamSupport;

+

+import static java.util.stream.Collectors.toList;

+

+public class HoodieCopyOnWriteTableCluster implements HoodieCluster {

+

+ private static final Logger LOG = LogManager.getLogger(HoodieCopyOnWriteTableCluster.class);

+ // Accumulator to keep track of total file slices for a table

+ private AccumulatorV2 totalFileSlices;

+

+ public static class BaseFileIterator implements Iterator> {

+List readers;

+Iterator currentReader;

+Schema schema;

+

+public BaseFileIterator(List readers, Schema schema) {

+ this.readers = readers;

+ this.schema = schema;

+ if (readers.size() > 0) {

+try {

+ currentReader = readers.remove(0).getRecordIterator(schema);

+} catch (Exception e) {

+ throw new HoodieException(e);

+}

+ }

+}

+

+@Override

+public boolean hasNext() {

+ if (currentReader == null) {

+return false;

+ } else if (currentReader.hasNext()) {

+return true;

+ } else if (readers.size() > 0) {

+try {

+ currentReader = readers.remove(0).getRecordIterator(schema);

+ return currentReader.hasNext();

+} catch (Exception e) {

+ throw new HoodieException("unable to initialize read with base file ", e);

+}

+ }

+ return false;

+}

+

+@Override

+public HoodieRecord next() {

+ //GenericRecord record = currentReader.next();

+ return transform(currentReader.next());

+}

+

+private HoodieRecord transform(GenericRecord record) {

+ OverwriteWithLatestAvroPayload payload = new OverwriteWithLatestAvroPayload(Option.of(record));

+ String key =

reco
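
The `BaseFileIterator` quoted above chains several record iterators into one. A minimal, generic Java sketch of that pattern follows; `ChainedIteratorSketch` is a hypothetical name and works on plain `java.util.Iterator` sources, not on Hudi's file readers. Unlike the quoted `hasNext()`, which checks only the next reader after the current one is exhausted, this sketch keeps advancing past empty sources, and `next()` throws `NoSuchElementException` when nothing is left. Whether the PR needs that behavior is an assumption here.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.Iterator;
import java.util.List;
import java.util.NoSuchElementException;

// Hypothetical helper: iterates over several source iterators in order.
final class ChainedIteratorSketch<T> implements Iterator<T> {
  private final Deque<Iterator<T>> pending;
  private Iterator<T> current;

  ChainedIteratorSketch(List<Iterator<T>> sources) {
    this.pending = new ArrayDeque<>(sources);
    this.current = pending.poll(); // null when there are no sources
  }

  @Override
  public boolean hasNext() {
    // Skip over exhausted (or empty) sources until one has an element.
    while (current != null) {
      if (current.hasNext()) {
        return true;
      }
      current = pending.poll();
    }
    return false;
  }

  @Override
  public T next() {
    if (!hasNext()) {
      throw new NoSuchElementException();
    }
    return current.next();
  }
}
```

Chaining the sources `[1, 2]`, `[]` and `[3]` with this sketch yields 1, 2, 3; the empty middle source does not end the iteration early.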

[GitHub] [hudi] lw309637554 commented on a change in pull request #2082: [WIP] hudi cluster write path poc

lw309637554 commented on a change in pull request #2082:

URL: https://github.com/apache/hudi/pull/2082#discussion_r503374310

##

File path:

hudi-client/src/main/java/org/apache/hudi/table/HoodieCopyOnWriteTable.java

##

@@ -125,6 +128,16 @@ public HoodieWriteMetadata bulkInsertPrepped(JavaSparkContext jsc, String instan

this, instantTime, preppedRecords, bulkInsertPartitioner).execute();

}

+ @Override

+ public Option scheduleClustering(JavaSparkContext jsc, String instantTime, Option> extraMetadata) {

+return new ScheduleClusteringActionExecutor(jsc, config, this, instantTime, extraMetadata).execute();

+ }

+

+ @Override

+ public HoodieWriteMetadata clustering(JavaSparkContext jsc, String compactionInstantTime) {

+return new RunClusteringActionExecutor(jsc, config, this, compactionInstantTime).execute();

+ }

+

Review comment:

Thanks, will pay attention to this.


[GitHub] [hudi] tandonraghav edited a comment on issue #2165: [SUPPORT] Exception while Querying Hive _rt table

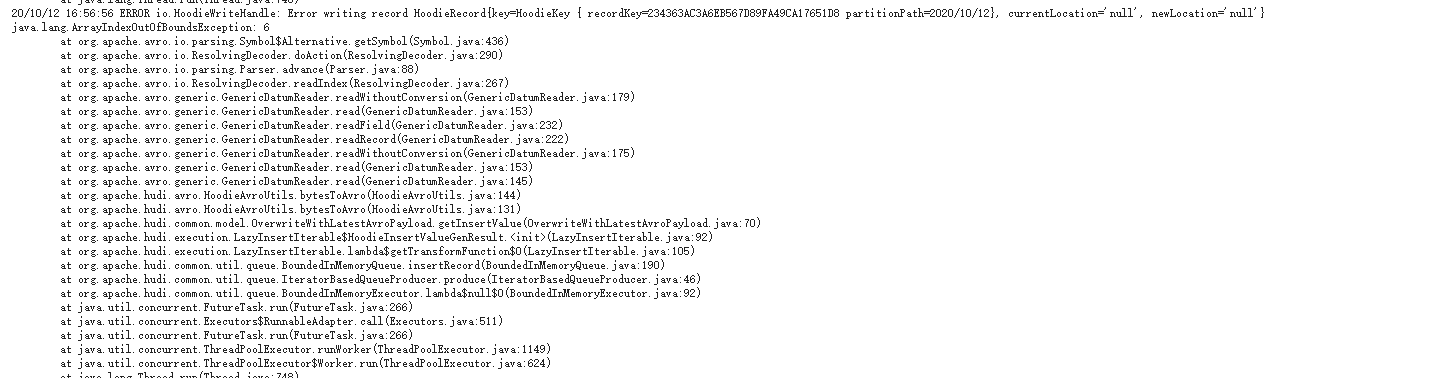

tandonraghav edited a comment on issue #2165:
URL: https://github.com/apache/hudi/issues/2165#issuecomment-707163257

Attaching the Presto logs:

2020-10-12T14:41:49.229Z INFO 20201012_144143_00011_zymbu.1.0.0-0-44 org.apache.hudi.common.table.log.AbstractHoodieLogRecordScanner Merging the final data blocks
2020-10-12T14:41:49.229Z INFO 20201012_144143_00011_zymbu.1.0.0-0-44 org.apache.hudi.common.table.log.AbstractHoodieLogRecordScanner Number of remaining logblocks to merge 1
2020-10-12T14:41:49.283Z ERROR 20201012_144143_00011_zymbu.1.0.0-0-44 org.apache.hudi.common.table.log.AbstractHoodieLogRecordScanner Got exception when reading log file
org.apache.hudi.exception.HoodieException: Unable to instantiate payload class
at org.apache.hudi.common.util.ReflectionUtils.loadPayload(ReflectionUtils.java:69)
at org.apache.hudi.common.util.SpillableMapUtils.convertToHoodieRecordPayload(SpillableMapUtils.java:116)
at org.apache.hudi.common.table.log.AbstractHoodieLogRecordScanner.processAvroDataBlock(AbstractHoodieLogRecordScanner.java:276)
at org.apache.hudi.common.table.log.AbstractHoodieLogRecordScanner.processQueuedBlocksForInstant(AbstractHoodieLogRecordScanner.java:305)
at org.apache.hudi.common.table.log.AbstractHoodieLogRecordScanner.scan(AbstractHoodieLogRecordScanner.java:238)
at org.apache.hudi.common.table.log.HoodieMergedLogRecordScanner.<init>(HoodieMergedLogRecordScanner.java:81)
at org.apache.hudi.hadoop.realtime.RealtimeCompactedRecordReader.getMergedLogRecordScanner(RealtimeCompactedRecordReader.java:69)
at org.apache.hudi.hadoop.realtime.RealtimeCompactedRecordReader.<init>(RealtimeCompactedRecordReader.java:52)
at org.apache.hudi.hadoop.realtime.HoodieRealtimeRecordReader.constructRecordReader(HoodieRealtimeRecordReader.java:69)
at org.apache.hudi.hadoop.realtime.HoodieRealtimeRecordReader.<init>(HoodieRealtimeRecordReader.java:47)
at org.apache.hudi.hadoop.realtime.HoodieParquetRealtimeInputFormat.getRecordReader(HoodieParquetRealtimeInputFormat.java:253)
at com.facebook.presto.hive.HiveUtil.createRecordReader(HiveUtil.java:251)
at com.facebook.presto.hive.GenericHiveRecordCursorProvider.lambda$createRecordCursor$0(GenericHiveRecordCursorProvider.java:74)
at com.facebook.presto.hive.authentication.UserGroupInformationUtils.lambda$executeActionInDoAs$0(UserGroupInformationUtils.java:29)
at java.security.AccessController.doPrivileged(Native Method)
at javax.security.auth.Subject.doAs(Subject.java:360)
at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1824)
at com.facebook.presto.hive.authentication.UserGroupInformationUtils.executeActionInDoAs(UserGroupInformationUtils.java:27)
at com.facebook.presto.hive.authentication.ImpersonatingHdfsAuthentication.doAs(ImpersonatingHdfsAuthentication.java:39)
at com.facebook.presto.hive.HdfsEnvironment.doAs(HdfsEnvironment.java:82)
at com.facebook.presto.hive.GenericHiveRecordCursorProvider.createRecordCursor(GenericHiveRecordCursorProvider.java:73)
at com.facebook.presto.hive.HivePageSourceProvider.createHivePageSource(HivePageSourceProvider.java:370)
at com.facebook.presto.hive.HivePageSourceProvider.createPageSource(HivePageSourceProvider.java:137)
at com.facebook.presto.hive.HivePageSourceProvider.createPageSource(HivePageSourceProvider.java:113)
at com.facebook.presto.spi.connector.classloader.ClassLoaderSafeConnectorPageSourceProvider.createPageSource(ClassLoaderSafeConnectorPageSourceProvider.java:52)
at com.facebook.presto.split.PageSourceManager.createPageSource(PageSourceManager.java:69)
at com.facebook.presto.operator.TableScanOperator.getOutput(TableScanOperator.java:259)
at com.facebook.presto.operator.Driver.processInternal(Driver.java:379)
at com.facebook.presto.operator.Driver.lambda$processFor$8(Driver.java:283)
at com.facebook.presto.operator.Driver.tryWithLock(Driver.java:675)
at com.facebook.presto.operator.Driver.processFor(Driver.java:276)
at com.facebook.presto.execution.SqlTaskExecution$DriverSplitRunner.processFor(SqlTaskExecution.java:1077)
at com.facebook.presto.execution.executor.PrioritizedSplitRunner.process(PrioritizedSplitRunner.java:162)
at com.facebook.presto.execution.executor.TaskExecutor$TaskRunner.run(TaskExecutor.java:545)
at com.facebook.presto.$gen.Presto_0_23220201012_144123_1.run(Unknown Source)
at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
at java.lang.Thread.run(Thread.java:748)
Caused by: java.lang.reflect.InvocationTargetException
at sun.reflect.NativeConstructorAccessorImpl.
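
The trace above is cut off right after `Caused by: java.lang.reflect.InvocationTargetException`. In Java, that exception only wraps whatever the reflectively invoked constructor threw, so the underlying error is found through `getCause()`. The sketch below shows this with plain JDK reflection; `PayloadLoadingSketch` and `FailingPayload` are hypothetical names, and the sketch makes no claim about what actually failed in this report or about how Hudi's `ReflectionUtils.loadPayload` is written.

```java
import java.lang.reflect.InvocationTargetException;

final class PayloadLoadingSketch {
  // Hypothetical payload whose constructor always fails; not a Hudi class.
  public static final class FailingPayload {
    public FailingPayload(String value) {
      throw new IllegalStateException("constructor failed for " + value);
    }
  }

  // Returns the underlying failure of a reflective constructor call, or null on success.
  static Throwable rootCauseOfReflectiveFailure(Class<?> clazz, String arg) {
    try {
      clazz.getConstructor(String.class).newInstance(arg);
      return null;
    } catch (InvocationTargetException e) {
      // The constructor itself threw; the real error is the wrapped cause.
      return e.getCause();
    } catch (ReflectiveOperationException e) {
      // No matching constructor, inaccessible class, abstract class, and so on.
      return e;
    }
  }
}
```

Calling `rootCauseOfReflectiveFailure(PayloadLoadingSketch.FailingPayload.class, "x")` returns the `IllegalStateException` thrown inside the constructor. When reporting a trace like the one above, the lines after `Caused by:` are the part that identifies the actual problem.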

[GitHub] [hudi] tandonraghav commented on issue #2165: [SUPPORT] Exception while Querying Hive _rt table