[jira] [Commented] (HUDI-2576) flink do checkpoint error because parquet file is missing

[ https://issues.apache.org/jira/browse/HUDI-2576?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17430351#comment-17430351 ] liyuanzhao435 commented on HUDI-2576: - the *20211019124727* instant commit twice, why? > flink do checkpoint error because parquet file is missing > -- > > Key: HUDI-2576 > URL: https://issues.apache.org/jira/browse/HUDI-2576 > Project: Apache Hudi > Issue Type: Bug > Components: Flink Integration >Affects Versions: 0.10.0 >Reporter: liyuanzhao435 >Priority: Major > Labels: flink, hudi > Fix For: 0.10.0 > > Attachments: error.txt > > Original Estimate: 96h > Remaining Estimate: 96h > > hudi:0.10.0, flink 1.13.1 > some times when flink do checkpoint , error occurs, the error shows a hudi > parquet file is missing (says file not exists) : > *2021-10-19 09:20:03,796 INFO > org.apache.hudi.io.storage.row.HoodieRowDataCreateHandle [] - start close > hoodie row data* > *2021-10-19 09:20:03,800 WARN org.apache.hadoop.hdfs.DataStreamer [] - > DataStreamer Exception* > *java.io.FileNotFoundException: File does not exist: > /tmp/test_liyz2/aa/2ff301cc-8db2-478e-b707-e8f2327ba38f-0_0-1-4_20211019091917.parquet > (inode 32234795) Holder DFSClient_NONMAPREDUCE_633610786_99 does not have > any open files.* > *at > org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkLease(FSNamesystem.java:2815)* > > detail see appendix -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Comment Edited] (HUDI-2563) Refactor XScheduleCompactionActionExecutor and CompactionTriggerStrategy.

[ https://issues.apache.org/jira/browse/HUDI-2563?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17430028#comment-17430028 ] Yuepeng Pan edited comment on HUDI-2563 at 10/19/21, 6:41 AM: -- Hi,[~xleesf] [~xushiyan] [~danny0405] Could you help me to review this pr ? Thank you. was (Author: rocmarshal): Hi, [~danny0405] Could you help me to review this pr ? Thank you. > Refactor XScheduleCompactionActionExecutor and CompactionTriggerStrategy. > - > > Key: HUDI-2563 > URL: https://issues.apache.org/jira/browse/HUDI-2563 > Project: Apache Hudi > Issue Type: Improvement > Components: CLI, Compaction, Writer Core >Reporter: Yuepeng Pan >Assignee: Yuepeng Pan >Priority: Minor > Labels: pull-request-available > > # Pull up some common methods from XXXScheduleCompactionActionExecutor to > BaseScheduleCompactionActionExecutor. > # Replace conditional in XXXScheduleCompactionActionExecutor with > polymorphsim of CompactionTriggerStrategy class. -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Comment Edited] (HUDI-2576) flink do checkpoint error because parquet file is missing

[

https://issues.apache.org/jira/browse/HUDI-2576?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17430326#comment-17430326

]

liyuanzhao435 edited comment on HUDI-2576 at 10/19/21, 6:31 AM:

Hoodie table says:

*LOG.info("Removing duplicate data files created due to spark retries before

committing. Paths=" + invalidDataPaths);*

however, the invalid path means have spececial extension: parquet, log, orc,

hfile

but my deleted file is end with the extension parquet, why the jobmanager

delete it? I can't understand it

was (Author: liyuanzhao435):

Hoodie table says:

*LOG.info("Removing duplicate data files created due to spark retries before

committing. Paths=" + invalidDataPaths);*

however, the invalid path means have spececial extension: parquet, log, orc,

hfile

bug my deleted file is end with the extension parquet, why the jobmanager

delete it? I can't understand it

> flink do checkpoint error because parquet file is missing

> --

>

> Key: HUDI-2576

> URL: https://issues.apache.org/jira/browse/HUDI-2576

> Project: Apache Hudi

> Issue Type: Bug

> Components: Flink Integration

>Affects Versions: 0.10.0

>Reporter: liyuanzhao435

>Priority: Major

> Labels: flink, hudi

> Fix For: 0.10.0

>

> Attachments: error.txt

>

> Original Estimate: 96h

> Remaining Estimate: 96h

>

> hudi:0.10.0, flink 1.13.1

> some times when flink do checkpoint , error occurs, the error shows a hudi

> parquet file is missing (says file not exists) :

> *2021-10-19 09:20:03,796 INFO

> org.apache.hudi.io.storage.row.HoodieRowDataCreateHandle [] - start close

> hoodie row data*

> *2021-10-19 09:20:03,800 WARN org.apache.hadoop.hdfs.DataStreamer [] -

> DataStreamer Exception*

> *java.io.FileNotFoundException: File does not exist:

> /tmp/test_liyz2/aa/2ff301cc-8db2-478e-b707-e8f2327ba38f-0_0-1-4_20211019091917.parquet

> (inode 32234795) Holder DFSClient_NONMAPREDUCE_633610786_99 does not have

> any open files.*

> *at

> org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkLease(FSNamesystem.java:2815)*

>

> detail see appendix

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (HUDI-2576) flink do checkpoint error because parquet file is missing

[

https://issues.apache.org/jira/browse/HUDI-2576?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17430326#comment-17430326

]

liyuanzhao435 commented on HUDI-2576:

-

Hoodie table says:

*LOG.info("Removing duplicate data files created due to spark retries before

committing. Paths=" + invalidDataPaths);*

however, the invalid path means have spececial extension: parquet, log, orc,

hfile

bug my deleted file is end with the extension parquet, why the jobmanager

delete it? I can't understand it

> flink do checkpoint error because parquet file is missing

> --

>

> Key: HUDI-2576

> URL: https://issues.apache.org/jira/browse/HUDI-2576

> Project: Apache Hudi

> Issue Type: Bug

> Components: Flink Integration

>Affects Versions: 0.10.0

>Reporter: liyuanzhao435

>Priority: Major

> Labels: flink, hudi

> Fix For: 0.10.0

>

> Attachments: error.txt

>

> Original Estimate: 96h

> Remaining Estimate: 96h

>

> hudi:0.10.0, flink 1.13.1

> some times when flink do checkpoint , error occurs, the error shows a hudi

> parquet file is missing (says file not exists) :

> *2021-10-19 09:20:03,796 INFO

> org.apache.hudi.io.storage.row.HoodieRowDataCreateHandle [] - start close

> hoodie row data*

> *2021-10-19 09:20:03,800 WARN org.apache.hadoop.hdfs.DataStreamer [] -

> DataStreamer Exception*

> *java.io.FileNotFoundException: File does not exist:

> /tmp/test_liyz2/aa/2ff301cc-8db2-478e-b707-e8f2327ba38f-0_0-1-4_20211019091917.parquet

> (inode 32234795) Holder DFSClient_NONMAPREDUCE_633610786_99 does not have

> any open files.*

> *at

> org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkLease(FSNamesystem.java:2815)*

>

> detail see appendix

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [hudi] xushiyan commented on issue #3821: [SUPPORT] Ingestion taking very long time getting small files from partitions/

xushiyan commented on issue #3821: URL: https://github.com/apache/hudi/issues/3821#issuecomment-946406362 @rohit-m-99 it depends on your business logic. you can choose any field(s) that make sense for you (year/month/day, country, city, timezone, etc anything similar to those that makes sense) or simply use `hash(run_id)%10` to partition by the hash value's mod, which guarantees the number of partitions. Hope this helps. And closing this now. if you run into further issues, feel free to follow up here. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] xushiyan closed issue #3821: [SUPPORT] Ingestion taking very long time getting small files from partitions/

xushiyan closed issue #3821: URL: https://github.com/apache/hudi/issues/3821 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] xushiyan commented on issue #3804: [SUPPORT] Error with metadata table

xushiyan commented on issue #3804: URL: https://github.com/apache/hudi/issues/3804#issuecomment-946402297 @rubenssoto On the metadata issue on EMR, please note that EMR has its own hudi built to work with other libraries bundled on each EMR release. See the version matrix here https://docs.aws.amazon.com/emr/latest/ReleaseGuide/emr-640-release.html Therefore you'd be better off to stay with EMR's Hudi / Spark / Flink versions. Otherwise, if you want to use a different Hudi version, you may also need to install your own and desired+compatible Spark version on the EMR machines. It'd take much more effort on environment setup so stay with EMR's version support is the best choice, plus this allows you to engage with AWS support if anything goes wrong, which should be the first choice of support. On your local build problem, please follow readme instructions closely. Double check java version, maven version, and purge your local maven repo if needed. Our CI build is passing so there should be no issue to build the project. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] xushiyan closed issue #3804: [SUPPORT] Error with metadata table

xushiyan closed issue #3804: URL: https://github.com/apache/hudi/issues/3804 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] rohit-m-99 commented on issue #3821: [SUPPORT] Ingestion taking very long time getting small files from partitions/

rohit-m-99 commented on issue #3821: URL: https://github.com/apache/hudi/issues/3821#issuecomment-946395852 I see, as of now the main problem is that intuitively we'd partition by each `run` but each `run` is only about 2000-4000k records, so it is not immediately obvious on what field we should be partitioning by. Any advice here would be appreciated. We chose to not partition by the `run` id for query performance (not to have too many partitions). But not sure about alternatives - our use case has pretty high variability between time periods so have moved away from time base partitioning. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] xushiyan commented on issue #3821: [SUPPORT] Ingestion taking very long time getting small files from partitions/

xushiyan commented on issue #3821:

URL: https://github.com/apache/hudi/issues/3821#issuecomment-946393073

@rohit-m-99 you'd need to partition your dataset the normal way you

partition a spark dataset. Something like

`df.repartition().write().format("hudi").partitionBy().mode().options().save()`.

You can search more on how to do Spark partitioning.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] xushiyan commented on issue #3814: [SUPPORT] Error o Trying to create a table using Spark SQL

xushiyan commented on issue #3814: URL: https://github.com/apache/hudi/issues/3814#issuecomment-946390788 @rubenssoto since you're on EMR, please use EMR pre-installed hudi jars instead of open source ones ``` --packages org.apache.hudi:hudi-spark3-bundle_2.12:0.9.0,org.apache.spark:spark-avro_2.12:3.0.1 ``` change to ``` --jars /usr/lib/hudi/hudi-spark-bundle.jar,/usr/lib/spark/external/lib/spark-avro.jar ``` See more from https://docs.aws.amazon.com/emr/latest/ReleaseGuide/emr-hudi-installation-and-configuration.html https://docs.aws.amazon.com/emr/latest/ReleaseGuide/emr-hudi-work-with-dataset.html And please engage with AWS support for EMR specific setup problems. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] xushiyan closed issue #3814: [SUPPORT] Error o Trying to create a table using Spark SQL

xushiyan closed issue #3814: URL: https://github.com/apache/hudi/issues/3814 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] rohit-m-99 commented on issue #3821: [SUPPORT] Ingestion taking very long time getting small files from partitions/

rohit-m-99 commented on issue #3821: URL: https://github.com/apache/hudi/issues/3821#issuecomment-946385171 Thank you for the advice, how do you set the number of partitions when using df.write()? Currently basing my code off of the intro guide found here: https://hudi.apache.org/docs/quick-start-guide. I am specifically using pyspark. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] xushiyan commented on issue #3821: [SUPPORT] Ingestion taking very long time getting small files from partitions/

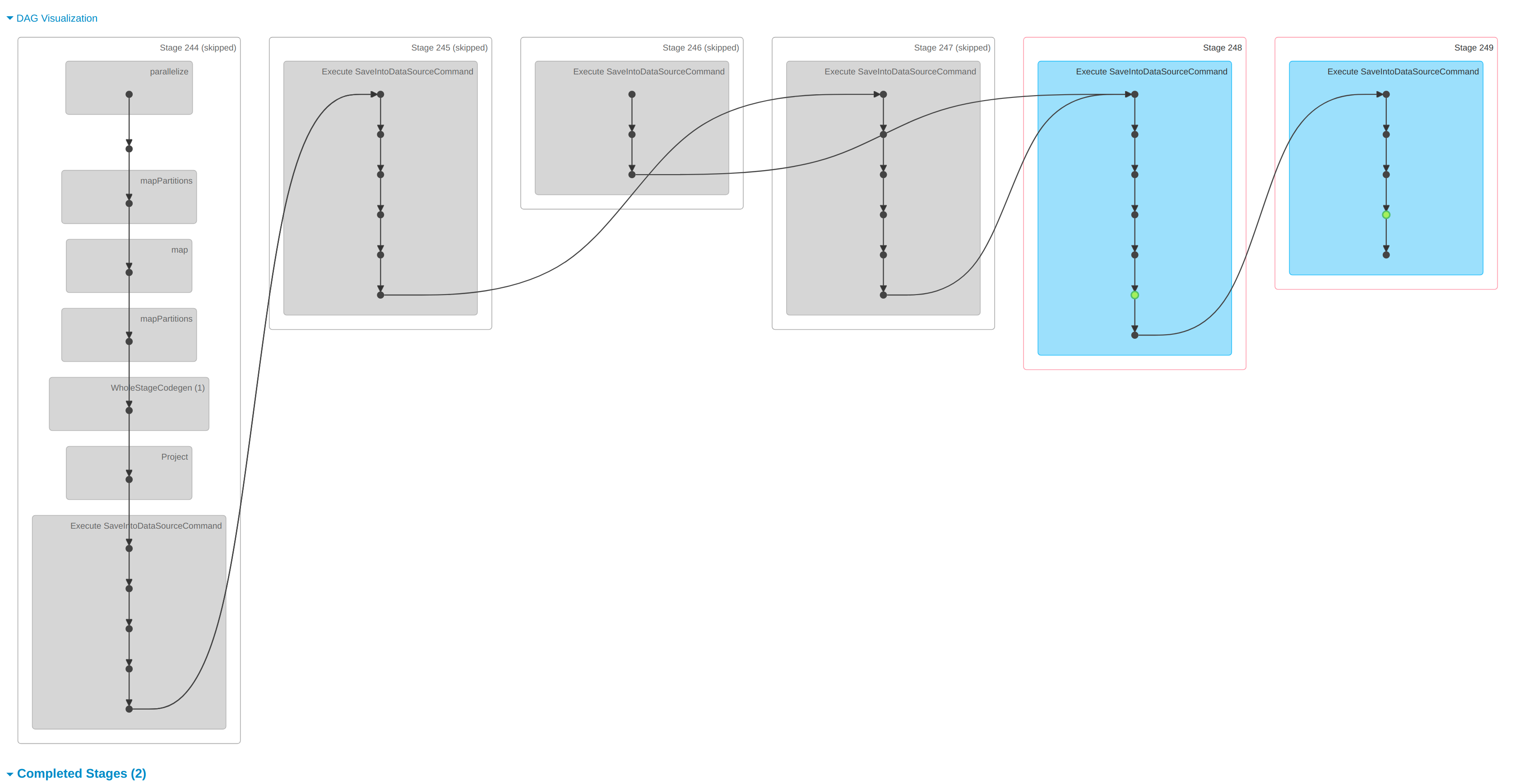

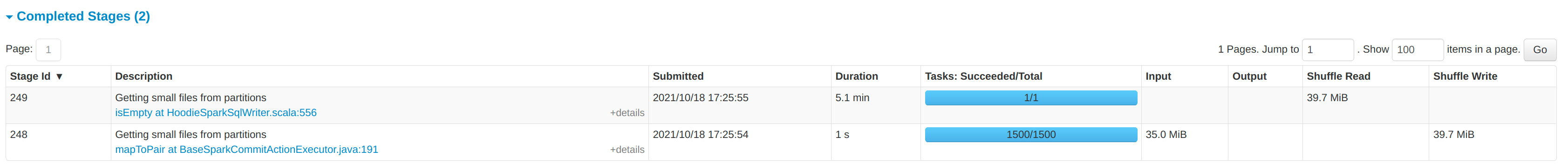

xushiyan commented on issue #3821: URL: https://github.com/apache/hudi/issues/3821#issuecomment-946383159 @rohit-m-99 this is likely due to non-partitioned dataset https://github.com/apache/hudi/blob/dbcf60f370e93ab490cf82e677387a07ea743cda/hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/table/action/commit/UpsertPartitioner.java#L254 getSmallFilesForPartitions() is parallelized over partitions. Try use 10-20 partitions may get this faster to <50s and make use of multiple executors. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Comment Edited] (HUDI-2576) flink do checkpoint error because parquet file is missing

[

https://issues.apache.org/jira/browse/HUDI-2576?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17430316#comment-17430316

]

liyuanzhao435 edited comment on HUDI-2576 at 10/19/21, 5:33 AM:

flink jobmanager deleted the file :

*2021-10-19 12:47:34,606 INFO org.apache.hudi.common.util.CommitUtils [] -

Creating metadata for null numWriteStats:1numReplaceFileIds:0*

*2021-10-19 12:47:34,607 INFO org.apache.hudi.client.AbstractHoodieWriteClient

[] - Committing 20211019124727 action deltacommit*

*2021-10-19 12:47:34,615 INFO org.apache.hudi.table.HoodieTable [] - Removing

duplicate data files created due to spark retries before committing.

Paths=[aa/c6eff439-d4e0-4deb-af43-f6906ab71d2b-0_0-1-0_20211019124727.parquet]*

*2021-10-19 12:47:34,617 INFO org.apache.hudi.table.HoodieTable [] -

{color:#de350b}Deleting invalid data

files{color}=[(hdfs://:/tmp/test_liyz2/aa,hdfs://:/tmp/test_liyz2/aa/c6eff439-d4e0-4deb-af43-f6906ab71d2b-0_0-1-0_20211019124727.parquet)]*

*2021-10-19 12:47:34,676 INFO

org.apache.hudi.common.table.timeline.HoodieActiveTimeline [] - Marking instant

complete [==>20211019124727__deltacommit__INFLIGHT]*

was (Author: liyuanzhao435):

flink jobmanager deleted the file :

*2021-10-19 12:47:34,606 INFO org.apache.hudi.common.util.CommitUtils [] -

Creating metadata for null numWriteStats:1numReplaceFileIds:0*

*2021-10-19 12:47:34,607 INFO org.apache.hudi.client.AbstractHoodieWriteClient

[] - Committing 20211019124727 action deltacommit*

*2021-10-19 12:47:34,615 INFO org.apache.hudi.table.HoodieTable [] - Removing

duplicate data files created due to spark retries before committing.

Paths=[aa/c6eff439-d4e0-4deb-af43-f6906ab71d2b-0_0-1-0_20211019124727.parquet]*

*2021-10-19 12:47:34,617 INFO org.apache.hudi.table.HoodieTable [] -

{color:#de350b}Deleting invalid data

files{color}=[(hdfs://:/tmp/test_liyz2/aa,hdfs://:/tmp/test_liyz2/aa/c6eff439-d4e0-4deb-af43-f6906ab71d2b-0_0-1-0_20211019124727.parquet)]*

*2021-10-19 12:47:34,676 INFO

org.apache.hudi.common.table.timeline.HoodieActiveTimeline [] - Marking instant

complete [==>20211019124727__deltacommit__INFLIGHT]*

*2021-10-19 12:47:34,677 INFO

org.apache.hudi.common.table.timeline.HoodieActiveTimeline [] - Checking for

file exists

?hdfs://26.6.4.165:8020/tmp/test_liyz2/.hoodie/20211019124727.deltacommit.inflight*

*2021-10-19 12:47:34,691 INFO

org.apache.hudi.common.table.timeline.HoodieActiveTimeline [] - Create new file

for toInstant

?hdfs://26.6.4.165:8020/tmp/test_liyz2/.hoodie/20211019124727.deltacommit*

*2021-10-19 12:47:34,691 INFO

org.apache.hudi.common.table.timeline.HoodieActiveTimeline [] - Completed

[==>20211019124727__deltacommit__INFLIGHT]*

*20*

> flink do checkpoint error because parquet file is missing

> --

>

> Key: HUDI-2576

> URL: https://issues.apache.org/jira/browse/HUDI-2576

> Project: Apache Hudi

> Issue Type: Bug

> Components: Flink Integration

>Affects Versions: 0.10.0

>Reporter: liyuanzhao435

>Priority: Major

> Labels: flink, hudi

> Fix For: 0.10.0

>

> Attachments: error.txt

>

> Original Estimate: 96h

> Remaining Estimate: 96h

>

> hudi:0.10.0, flink 1.13.1

> some times when flink do checkpoint , error occurs, the error shows a hudi

> parquet file is missing (says file not exists) :

> *2021-10-19 09:20:03,796 INFO

> org.apache.hudi.io.storage.row.HoodieRowDataCreateHandle [] - start close

> hoodie row data*

> *2021-10-19 09:20:03,800 WARN org.apache.hadoop.hdfs.DataStreamer [] -

> DataStreamer Exception*

> *java.io.FileNotFoundException: File does not exist:

> /tmp/test_liyz2/aa/2ff301cc-8db2-478e-b707-e8f2327ba38f-0_0-1-4_20211019091917.parquet

> (inode 32234795) Holder DFSClient_NONMAPREDUCE_633610786_99 does not have

> any open files.*

> *at

> org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkLease(FSNamesystem.java:2815)*

>

> detail see appendix

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Comment Edited] (HUDI-2576) flink do checkpoint error because parquet file is missing

[

https://issues.apache.org/jira/browse/HUDI-2576?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17430316#comment-17430316

]

liyuanzhao435 edited comment on HUDI-2576 at 10/19/21, 5:33 AM:

flink jobmanager deleted the file :

*2021-10-19 12:47:34,606 INFO org.apache.hudi.common.util.CommitUtils [] -

Creating metadata for null numWriteStats:1numReplaceFileIds:0*

*2021-10-19 12:47:34,607 INFO org.apache.hudi.client.AbstractHoodieWriteClient

[] - Committing 20211019124727 action deltacommit*

*2021-10-19 12:47:34,615 INFO org.apache.hudi.table.HoodieTable [] - Removing

duplicate data files created due to spark retries before committing.

Paths=[aa/c6eff439-d4e0-4deb-af43-f6906ab71d2b-0_0-1-0_20211019124727.parquet]*

*2021-10-19 12:47:34,617 INFO org.apache.hudi.table.HoodieTable [] -

{color:#de350b}Deleting invalid data

files{color}=[(hdfs://:/tmp/test_liyz2/aa,hdfs://:/tmp/test_liyz2/aa/c6eff439-d4e0-4deb-af43-f6906ab71d2b-0_0-1-0_20211019124727.parquet)]*

*2021-10-19 12:47:34,676 INFO

org.apache.hudi.common.table.timeline.HoodieActiveTimeline [] - Marking instant

complete [==>20211019124727__deltacommit__INFLIGHT]*

*2021-10-19 12:47:34,677 INFO

org.apache.hudi.common.table.timeline.HoodieActiveTimeline [] - Checking for

file exists

?hdfs://26.6.4.165:8020/tmp/test_liyz2/.hoodie/20211019124727.deltacommit.inflight*

*2021-10-19 12:47:34,691 INFO

org.apache.hudi.common.table.timeline.HoodieActiveTimeline [] - Create new file

for toInstant

?hdfs://26.6.4.165:8020/tmp/test_liyz2/.hoodie/20211019124727.deltacommit*

*2021-10-19 12:47:34,691 INFO

org.apache.hudi.common.table.timeline.HoodieActiveTimeline [] - Completed

[==>20211019124727__deltacommit__INFLIGHT]*

*20*

was (Author: liyuanzhao435):

flink jobmanager deleted the file :

*2021-10-19 12:47:34,606 INFO org.apache.hudi.common.util.CommitUtils [] -

Creating metadata for null numWriteStats:1numReplaceFileIds:0*

*2021-10-19 12:47:34,607 INFO org.apache.hudi.client.AbstractHoodieWriteClient

[] - Committing 20211019124727 action deltacommit*

*2021-10-19 12:47:34,615 INFO org.apache.hudi.table.HoodieTable [] - Removing

duplicate data files created due to spark retries before committing.

Paths=[aa/c6eff439-d4e0-4deb-af43-f6906ab71d2b-0_0-1-0_20211019124727.parquet]*

*2021-10-19 12:47:34,617 INFO org.apache.hudi.table.HoodieTable [] -

{color:#de350b}Deleting invalid data

files{color}=[(hdfs://:/tmp/test_liyz2/aa,hdfs://26.6.4.165:8020/tmp/test_liyz2/aa/c6eff439-d4e0-4deb-af43-f6906ab71d2b-0_0-1-0_20211019124727.parquet)]*

*2021-10-19 12:47:34,676 INFO

org.apache.hudi.common.table.timeline.HoodieActiveTimeline [] - Marking instant

complete [==>20211019124727__deltacommit__INFLIGHT]*

*2021-10-19 12:47:34,677 INFO

org.apache.hudi.common.table.timeline.HoodieActiveTimeline [] - Checking for

file exists

?hdfs://26.6.4.165:8020/tmp/test_liyz2/.hoodie/20211019124727.deltacommit.inflight*

*2021-10-19 12:47:34,691 INFO

org.apache.hudi.common.table.timeline.HoodieActiveTimeline [] - Create new file

for toInstant

?hdfs://26.6.4.165:8020/tmp/test_liyz2/.hoodie/20211019124727.deltacommit*

*2021-10-19 12:47:34,691 INFO

org.apache.hudi.common.table.timeline.HoodieActiveTimeline [] - Completed

[==>20211019124727__deltacommit__INFLIGHT]*

*20*

> flink do checkpoint error because parquet file is missing

> --

>

> Key: HUDI-2576

> URL: https://issues.apache.org/jira/browse/HUDI-2576

> Project: Apache Hudi

> Issue Type: Bug

> Components: Flink Integration

>Affects Versions: 0.10.0

>Reporter: liyuanzhao435

>Priority: Major

> Labels: flink, hudi

> Fix For: 0.10.0

>

> Attachments: error.txt

>

> Original Estimate: 96h

> Remaining Estimate: 96h

>

> hudi:0.10.0, flink 1.13.1

> some times when flink do checkpoint , error occurs, the error shows a hudi

> parquet file is missing (says file not exists) :

> *2021-10-19 09:20:03,796 INFO

> org.apache.hudi.io.storage.row.HoodieRowDataCreateHandle [] - start close

> hoodie row data*

> *2021-10-19 09:20:03,800 WARN org.apache.hadoop.hdfs.DataStreamer [] -

> DataStreamer Exception*

> *java.io.FileNotFoundException: File does not exist:

> /tmp/test_liyz2/aa/2ff301cc-8db2-478e-b707-e8f2327ba38f-0_0-1-4_20211019091917.parquet

> (inode 32234795) Holder DFSClient_NONMAPREDUCE_633610786_99 does not have

> any open files.*

> *at

> org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkLease(FSNamesystem.java:2815)*

>

> detail see appendix

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (HUDI-2576) flink do checkpoint error because parquet file is missing

[

https://issues.apache.org/jira/browse/HUDI-2576?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17430316#comment-17430316

]

liyuanzhao435 commented on HUDI-2576:

-

flink jobmanager deleted the file :

*2021-10-19 12:47:34,606 INFO org.apache.hudi.common.util.CommitUtils [] -

Creating metadata for null numWriteStats:1numReplaceFileIds:0*

*2021-10-19 12:47:34,607 INFO org.apache.hudi.client.AbstractHoodieWriteClient

[] - Committing 20211019124727 action deltacommit*

*2021-10-19 12:47:34,615 INFO org.apache.hudi.table.HoodieTable [] - Removing

duplicate data files created due to spark retries before committing.

Paths=[aa/c6eff439-d4e0-4deb-af43-f6906ab71d2b-0_0-1-0_20211019124727.parquet]*

*2021-10-19 12:47:34,617 INFO org.apache.hudi.table.HoodieTable [] -

{color:#de350b}Deleting invalid data

files{color}=[(hdfs://:/tmp/test_liyz2/aa,hdfs://26.6.4.165:8020/tmp/test_liyz2/aa/c6eff439-d4e0-4deb-af43-f6906ab71d2b-0_0-1-0_20211019124727.parquet)]*

*2021-10-19 12:47:34,676 INFO

org.apache.hudi.common.table.timeline.HoodieActiveTimeline [] - Marking instant

complete [==>20211019124727__deltacommit__INFLIGHT]*

*2021-10-19 12:47:34,677 INFO

org.apache.hudi.common.table.timeline.HoodieActiveTimeline [] - Checking for

file exists

?hdfs://26.6.4.165:8020/tmp/test_liyz2/.hoodie/20211019124727.deltacommit.inflight*

*2021-10-19 12:47:34,691 INFO

org.apache.hudi.common.table.timeline.HoodieActiveTimeline [] - Create new file

for toInstant

?hdfs://26.6.4.165:8020/tmp/test_liyz2/.hoodie/20211019124727.deltacommit*

*2021-10-19 12:47:34,691 INFO

org.apache.hudi.common.table.timeline.HoodieActiveTimeline [] - Completed

[==>20211019124727__deltacommit__INFLIGHT]*

*20*

> flink do checkpoint error because parquet file is missing

> --

>

> Key: HUDI-2576

> URL: https://issues.apache.org/jira/browse/HUDI-2576

> Project: Apache Hudi

> Issue Type: Bug

> Components: Flink Integration

>Affects Versions: 0.10.0

>Reporter: liyuanzhao435

>Priority: Major

> Labels: flink, hudi

> Fix For: 0.10.0

>

> Attachments: error.txt

>

> Original Estimate: 96h

> Remaining Estimate: 96h

>

> hudi:0.10.0, flink 1.13.1

> some times when flink do checkpoint , error occurs, the error shows a hudi

> parquet file is missing (says file not exists) :

> *2021-10-19 09:20:03,796 INFO

> org.apache.hudi.io.storage.row.HoodieRowDataCreateHandle [] - start close

> hoodie row data*

> *2021-10-19 09:20:03,800 WARN org.apache.hadoop.hdfs.DataStreamer [] -

> DataStreamer Exception*

> *java.io.FileNotFoundException: File does not exist:

> /tmp/test_liyz2/aa/2ff301cc-8db2-478e-b707-e8f2327ba38f-0_0-1-4_20211019091917.parquet

> (inode 32234795) Holder DFSClient_NONMAPREDUCE_633610786_99 does not have

> any open files.*

> *at

> org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkLease(FSNamesystem.java:2815)*

>

> detail see appendix

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Commented] (HUDI-2576) flink do checkpoint error because parquet file is missing

[ https://issues.apache.org/jira/browse/HUDI-2576?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17430311#comment-17430311 ] liyuanzhao435 commented on HUDI-2576: - I checked the hdfs audit log, the parquet file created and then deleted immediately, now I hive to fund the reason > flink do checkpoint error because parquet file is missing > -- > > Key: HUDI-2576 > URL: https://issues.apache.org/jira/browse/HUDI-2576 > Project: Apache Hudi > Issue Type: Bug > Components: Flink Integration >Affects Versions: 0.10.0 >Reporter: liyuanzhao435 >Priority: Major > Labels: flink, hudi > Fix For: 0.10.0 > > Attachments: error.txt > > Original Estimate: 96h > Remaining Estimate: 96h > > hudi:0.10.0, flink 1.13.1 > some times when flink do checkpoint , error occurs, the error shows a hudi > parquet file is missing (says file not exists) : > *2021-10-19 09:20:03,796 INFO > org.apache.hudi.io.storage.row.HoodieRowDataCreateHandle [] - start close > hoodie row data* > *2021-10-19 09:20:03,800 WARN org.apache.hadoop.hdfs.DataStreamer [] - > DataStreamer Exception* > *java.io.FileNotFoundException: File does not exist: > /tmp/test_liyz2/aa/2ff301cc-8db2-478e-b707-e8f2327ba38f-0_0-1-4_20211019091917.parquet > (inode 32234795) Holder DFSClient_NONMAPREDUCE_633610786_99 does not have > any open files.* > *at > org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkLease(FSNamesystem.java:2815)* > > detail see appendix -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [hudi] xushiyan closed issue #3728: [SUPPORT] Hudi Flink S3 Java Example

xushiyan closed issue #3728: URL: https://github.com/apache/hudi/issues/3728 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] nsivabalan commented on issue #2544: [SUPPORT]failed to read timestamp column in version 0.7.0 even when HIVE_SUPPORT_TIMESTAMP is enabled

nsivabalan commented on issue #2544: URL: https://github.com/apache/hudi/issues/2544#issuecomment-946369974 Closing due to inactivity and the issue is not reproducible anymore. thanks -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] nsivabalan closed issue #2544: [SUPPORT]failed to read timestamp column in version 0.7.0 even when HIVE_SUPPORT_TIMESTAMP is enabled

nsivabalan closed issue #2544: URL: https://github.com/apache/hudi/issues/2544 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] nsivabalan commented on issue #3603: [SUPPORT] delta streamer Failed to archive commits

nsivabalan commented on issue #3603: URL: https://github.com/apache/hudi/issues/3603#issuecomment-946369090 @fengjian428 : hey, can you give us any updates. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] nsivabalan commented on issue #3559: [SUPPORT] Failed to archive commits

nsivabalan commented on issue #3559: URL: https://github.com/apache/hudi/issues/3559#issuecomment-946367293 This was fixed in 090. closing it out. If you run into any issues, do reach out to us. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] nsivabalan closed issue #3559: [SUPPORT] Failed to archive commits

nsivabalan closed issue #3559: URL: https://github.com/apache/hudi/issues/3559 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] nsivabalan commented on issue #2802: Hive read issues when different partition have different schemas.

nsivabalan commented on issue #2802: URL: https://github.com/apache/hudi/issues/2802#issuecomment-946364855 @aditiwari01 : when you get a chance can you respond. Will close out in a week if we don't hear from you. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] nsivabalan commented on issue #3739: Hoodie clean is not deleting old files

nsivabalan commented on issue #3739: URL: https://github.com/apache/hudi/issues/3739#issuecomment-946363380 @codope : Can you create a ticket for adding ability via hudi-cli to clean up dangling data files. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (HUDI-2511) Aggressive archival configs compared to cleaner configs make cleaning moot

[ https://issues.apache.org/jira/browse/HUDI-2511?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] sivabalan narayanan updated HUDI-2511: -- Priority: Blocker (was: Major) > Aggressive archival configs compared to cleaner configs make cleaning moot > -- > > Key: HUDI-2511 > URL: https://issues.apache.org/jira/browse/HUDI-2511 > Project: Apache Hudi > Issue Type: Improvement >Reporter: sivabalan narayanan >Assignee: sivabalan narayanan >Priority: Blocker > Labels: sev:high, user-support-issues > > if hoodie.keep.max.commits <= hoodie.cleaner.commits.retained, then cleaner > will never kick in only. Bcoz, by then archival will kick in and will move > entries from active to archived. > We need to revisit this and either throw exception or make cleaner also look > into archived commits. > Related issue: [https://github.com/apache/hudi/issues/3739] > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [hudi] nsivabalan closed issue #2564: Hoodie clean is not deleting old files

nsivabalan closed issue #2564: URL: https://github.com/apache/hudi/issues/2564 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] absognety edited a comment on issue #3758: [SUPPORT] Issues when writing dataframe to hudi format with hive syncing enabled for AWS Athena and Glue metadata persistence

absognety edited a comment on issue #3758: URL: https://github.com/apache/hudi/issues/3758#issuecomment-946311994 @nsivabalan I can confidently say that this is intermittently occurring issue, especially when we have concurrency in our code - doing concurrent writes to multiple tables in S3 (using threading or multiprocessing libraries). -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] absognety edited a comment on issue #3758: [SUPPORT] Issues when writing dataframe to hudi format with hive syncing enabled for AWS Athena and Glue metadata persistence

absognety edited a comment on issue #3758: URL: https://github.com/apache/hudi/issues/3758#issuecomment-946311994 @nsivabalan I can confidently say that this is intermittently occurring issue, especially when we have concurrency in our code - doing concurrent writes to different hudi partitions for multiple tables in S3 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot edited a comment on pull request #3519: [DO NOT MERGE] 0.9.0 release patch for flink

hudi-bot edited a comment on pull request #3519: URL: https://github.com/apache/hudi/pull/3519#issuecomment-903204631 ## CI report: * d108ef91b835ec89276863ac062bcc5cad6a2081 Azure: [CANCELED](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=2712) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run travis` re-run the last Travis build - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot edited a comment on pull request #3519: [DO NOT MERGE] 0.9.0 release patch for flink

hudi-bot edited a comment on pull request #3519: URL: https://github.com/apache/hudi/pull/3519#issuecomment-903204631 ## CI report: * c4ed928cfa949daca478608bee6046995b106c7d Azure: [CANCELED](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=2710) * d108ef91b835ec89276863ac062bcc5cad6a2081 UNKNOWN Bot commands @hudi-bot supports the following commands: - `@hudi-bot run travis` re-run the last Travis build - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] yanghua commented on pull request #3773: [HUDI-2507] Generate more dependency list file for other bundles

yanghua commented on pull request #3773: URL: https://github.com/apache/hudi/pull/3773#issuecomment-946329906 @vinothchandar Do you have any thoughts? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] yanghua commented on pull request #3773: [HUDI-2507] Generate more dependency list file for other bundles

yanghua commented on pull request #3773: URL: https://github.com/apache/hudi/pull/3773#issuecomment-946329594 > LGTM. Optional: maybe having a test PR to show what diffs people will get if changed/added a dependency can help understand the impact easily. sounds good, will try to write a guide and blog to explain how it works. Actually, I still did not figure out how to test it since the diff comes from the change of the dependencies. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot edited a comment on pull request #3519: [DO NOT MERGE] 0.9.0 release patch for flink

hudi-bot edited a comment on pull request #3519: URL: https://github.com/apache/hudi/pull/3519#issuecomment-903204631 ## CI report: * c4ed928cfa949daca478608bee6046995b106c7d Azure: [CANCELED](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=2710) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run travis` re-run the last Travis build - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot edited a comment on pull request #3519: [DO NOT MERGE] 0.9.0 release patch for flink

hudi-bot edited a comment on pull request #3519: URL: https://github.com/apache/hudi/pull/3519#issuecomment-903204631 ## CI report: * 0e29ebfbbc37cd342017bdd8290e34bf5336210d Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=2702) * c4ed928cfa949daca478608bee6046995b106c7d UNKNOWN Bot commands @hudi-bot supports the following commands: - `@hudi-bot run travis` re-run the last Travis build - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Resolved] (HUDI-2572) Strength flink compaction rollback strategy

[ https://issues.apache.org/jira/browse/HUDI-2572?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Danny Chen resolved HUDI-2572. -- Resolution: Fixed Fixed via master branch: 3a78be9203a9c3cea33fa6120c89f7702275fc31 > Strength flink compaction rollback strategy > --- > > Key: HUDI-2572 > URL: https://issues.apache.org/jira/browse/HUDI-2572 > Project: Apache Hudi > Issue Type: Improvement > Components: Flink Integration >Reporter: Danny Chen >Assignee: Danny Chen >Priority: Major > Labels: pull-request-available > Fix For: 0.10.0 > > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[hudi] branch master updated: [HUDI-2572] Strength flink compaction rollback strategy (#3819)

This is an automated email from the ASF dual-hosted git repository.

danny0405 pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/hudi.git

The following commit(s) were added to refs/heads/master by this push:

new 3a78be9 [HUDI-2572] Strength flink compaction rollback strategy

(#3819)

3a78be9 is described below

commit 3a78be9203a9c3cea33fa6120c89f7702275fc31

Author: Danny Chan

AuthorDate: Tue Oct 19 10:47:38 2021 +0800

[HUDI-2572] Strength flink compaction rollback strategy (#3819)

* make the events of commit task distinct by file id

* fix the existence check for inflight state file

* make the compaction task fail-safe

---

.../apache/hudi/sink/compact/CompactFunction.java | 2 +-

.../hudi/sink/compact/CompactionCommitEvent.java | 17 +++-

.../hudi/sink/compact/CompactionCommitSink.java| 47 +++---

.../hudi/sink/compact/CompactionPlanOperator.java | 27 +

4 files changed, 50 insertions(+), 43 deletions(-)

diff --git

a/hudi-flink/src/main/java/org/apache/hudi/sink/compact/CompactFunction.java

b/hudi-flink/src/main/java/org/apache/hudi/sink/compact/CompactFunction.java

index 5916244..57b79df 100644

--- a/hudi-flink/src/main/java/org/apache/hudi/sink/compact/CompactFunction.java

+++ b/hudi-flink/src/main/java/org/apache/hudi/sink/compact/CompactFunction.java

@@ -99,7 +99,7 @@ public class CompactFunction extends

ProcessFunction collector) throws

IOException {

List writeStatuses = FlinkCompactHelpers.compact(writeClient,

instantTime, compactionOperation);

-collector.collect(new CompactionCommitEvent(instantTime, writeStatuses,

taskID));

+collector.collect(new CompactionCommitEvent(instantTime,

compactionOperation.getFileId(), writeStatuses, taskID));

}

@VisibleForTesting

diff --git

a/hudi-flink/src/main/java/org/apache/hudi/sink/compact/CompactionCommitEvent.java

b/hudi-flink/src/main/java/org/apache/hudi/sink/compact/CompactionCommitEvent.java

index 52c0812..0444944 100644

---

a/hudi-flink/src/main/java/org/apache/hudi/sink/compact/CompactionCommitEvent.java

+++

b/hudi-flink/src/main/java/org/apache/hudi/sink/compact/CompactionCommitEvent.java

@@ -33,6 +33,12 @@ public class CompactionCommitEvent implements Serializable {

* The compaction commit instant time.

*/

private String instant;

+

+ /**

+ * The file ID.

+ */

+ private String fileId;

+

/**

* The write statuses.

*/

@@ -45,8 +51,9 @@ public class CompactionCommitEvent implements Serializable {

public CompactionCommitEvent() {

}

- public CompactionCommitEvent(String instant, List

writeStatuses, int taskID) {

+ public CompactionCommitEvent(String instant, String fileId,

List writeStatuses, int taskID) {

this.instant = instant;

+this.fileId = fileId;

this.writeStatuses = writeStatuses;

this.taskID = taskID;

}

@@ -55,6 +62,10 @@ public class CompactionCommitEvent implements Serializable {

this.instant = instant;

}

+ public void setFileId(String fileId) {

+this.fileId = fileId;

+ }

+

public void setWriteStatuses(List writeStatuses) {

this.writeStatuses = writeStatuses;

}

@@ -67,6 +78,10 @@ public class CompactionCommitEvent implements Serializable {

return instant;

}

+ public String getFileId() {

+return fileId;

+ }

+

public List getWriteStatuses() {

return writeStatuses;

}

diff --git

a/hudi-flink/src/main/java/org/apache/hudi/sink/compact/CompactionCommitSink.java

b/hudi-flink/src/main/java/org/apache/hudi/sink/compact/CompactionCommitSink.java

index e6c4ced..d90af2c 100644

---

a/hudi-flink/src/main/java/org/apache/hudi/sink/compact/CompactionCommitSink.java

+++

b/hudi-flink/src/main/java/org/apache/hudi/sink/compact/CompactionCommitSink.java

@@ -20,8 +20,6 @@ package org.apache.hudi.sink.compact;

import org.apache.hudi.avro.model.HoodieCompactionPlan;

import org.apache.hudi.client.WriteStatus;

-import org.apache.hudi.common.model.HoodieCommitMetadata;

-import org.apache.hudi.common.model.HoodieWriteStat;

import org.apache.hudi.common.util.CompactionUtils;

import org.apache.hudi.common.util.Option;

import org.apache.hudi.configuration.FlinkOptions;

@@ -33,7 +31,6 @@ import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.io.IOException;

-import java.util.ArrayList;

import java.util.Collection;

import java.util.HashMap;

import java.util.List;

@@ -61,9 +58,12 @@ public class CompactionCommitSink extends

CleanFunction {

/**

* Buffer to collect the event from each compact task {@code

CompactFunction}.

- * The key is the instant time.

+ *

+ * Stores the mapping of instant_time -> file_id -> event. Use a map to

collect the

+ * events because the rolling back of intermediate compaction tasks

generates corrupt

+ * events.

*/

- private transient Map> commitBuffer;

+ private transient Map>

commitBuffer;

public CompactionCommitSink(Configuration conf) {

[GitHub] [hudi] danny0405 merged pull request #3819: [HUDI-2572] Strength flink compaction rollback strategy

danny0405 merged pull request #3819: URL: https://github.com/apache/hudi/pull/3819 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (HUDI-2576) flink do checkpoint error because parquet file is missing

[ https://issues.apache.org/jira/browse/HUDI-2576?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17430282#comment-17430282 ] liyuanzhao435 commented on HUDI-2576: - the missing parquet file , either not created or deleted. according to the code, the file won't be deleted , so the reason is file not created but, there is no exception reported > flink do checkpoint error because parquet file is missing > -- > > Key: HUDI-2576 > URL: https://issues.apache.org/jira/browse/HUDI-2576 > Project: Apache Hudi > Issue Type: Bug > Components: Flink Integration >Affects Versions: 0.10.0 >Reporter: liyuanzhao435 >Priority: Major > Labels: flink, hudi > Fix For: 0.10.0 > > Attachments: error.txt > > Original Estimate: 96h > Remaining Estimate: 96h > > hudi:0.10.0, flink 1.13.1 > some times when flink do checkpoint , error occurs, the error shows a hudi > parquet file is missing (says file not exists) : > *2021-10-19 09:20:03,796 INFO > org.apache.hudi.io.storage.row.HoodieRowDataCreateHandle [] - start close > hoodie row data* > *2021-10-19 09:20:03,800 WARN org.apache.hadoop.hdfs.DataStreamer [] - > DataStreamer Exception* > *java.io.FileNotFoundException: File does not exist: > /tmp/test_liyz2/aa/2ff301cc-8db2-478e-b707-e8f2327ba38f-0_0-1-4_20211019091917.parquet > (inode 32234795) Holder DFSClient_NONMAPREDUCE_633610786_99 does not have > any open files.* > *at > org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkLease(FSNamesystem.java:2815)* > > detail see appendix -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [hudi] nsivabalan commented on pull request #3820: [BUGFIX] Merge commit state from previous commit instead of current

nsivabalan commented on pull request #3820: URL: https://github.com/apache/hudi/pull/3820#issuecomment-946312014 @davehagman let's proceed with the approach you suggested. If others have any thoughts, I can take it up in a follow up PR. but lets proceed with this for now. One more request: Do add a unit test for the changes in TransactionUtils. should be easy to add one. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] absognety commented on issue #3758: [SUPPORT] Issues when writing dataframe to hudi format with hive syncing enabled for AWS Athena and Glue metadata persistence

absognety commented on issue #3758: URL: https://github.com/apache/hudi/issues/3758#issuecomment-946311994 @nsivabalan I can confidently say that this is intermittently occurring issue, especially when we have concurrency in our code - doing concurrent writes to different hudi partitions in S3 . -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

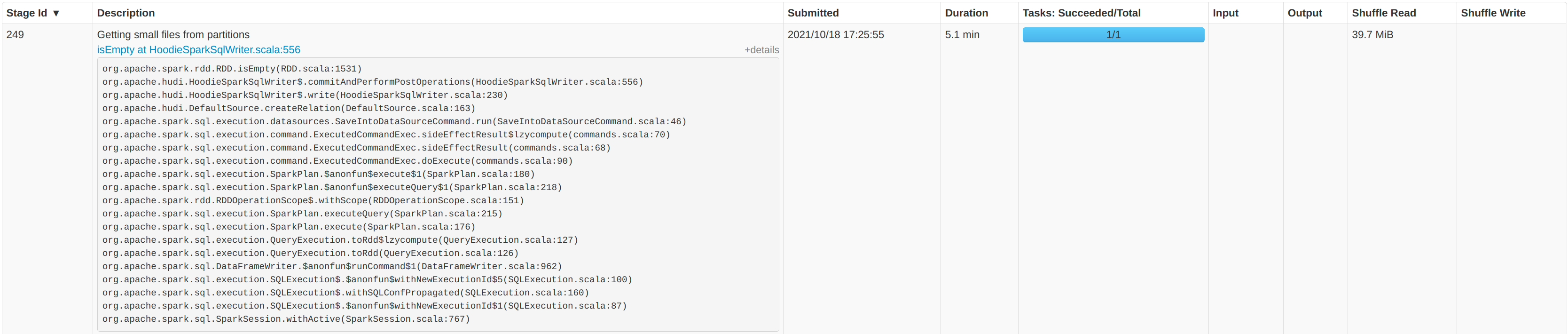

[GitHub] [hudi] rohit-m-99 opened a new issue #3821: [SUPPORT] Ingestion taking very long time getting small files from partitions/

rohit-m-99 opened a new issue #3821: URL: https://github.com/apache/hudi/issues/3821 **Describe the problem you faced** Currently running Hudi 0.9.0 in production without a specific partition field. We are running using 6 workers each with 7 cores and 28GB of RAM. The files are stored in S3. We run 50 `runs` each with about `4000` records. When then combine the runs into one dataframe, writing around 200k records at once using the `upsert` operation. Each record has around 280 columns. We see the majority of time being spent `GettingSmallFiles from partitions`.    * Hudi version : spark_hudi_0.9.0-SNAPSHOT * Spark version : 3.0.3 * Hadoop version : 3.2.0 * Storage (HDFS/S3/GCS..) : S# * Running on Docker? (yes/no) : K8S -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (HUDI-2576) flink do checkpoint error because parquet file is missing

[ https://issues.apache.org/jira/browse/HUDI-2576?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] liyuanzhao435 updated HUDI-2576: Attachment: error.txt > flink do checkpoint error because parquet file is missing > -- > > Key: HUDI-2576 > URL: https://issues.apache.org/jira/browse/HUDI-2576 > Project: Apache Hudi > Issue Type: Bug > Components: Flink Integration >Affects Versions: 0.10.0 >Reporter: liyuanzhao435 >Priority: Major > Labels: flink, hudi > Fix For: 0.10.0 > > Attachments: error.txt > > Original Estimate: 96h > Remaining Estimate: 96h > > hudi:0.10.0, flink 1.13.1 > some times when flink do checkpoint , error occurs, the error shows a hudi > parquet file is missing (says file not exists) : > *2021-10-19 09:20:03,796 INFO > org.apache.hudi.io.storage.row.HoodieRowDataCreateHandle [] - start close > hoodie row data* > *2021-10-19 09:20:03,800 WARN org.apache.hadoop.hdfs.DataStreamer [] - > DataStreamer Exception* > *java.io.FileNotFoundException: File does not exist: > /tmp/test_liyz2/aa/2ff301cc-8db2-478e-b707-e8f2327ba38f-0_0-1-4_20211019091917.parquet > (inode 32234795) Holder DFSClient_NONMAPREDUCE_633610786_99 does not have > any open files.* > *at > org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkLease(FSNamesystem.java:2815)* > > detail see appendix -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Created] (HUDI-2576) flink do checkpoint error because parquet file is missing

liyuanzhao435 created HUDI-2576: --- Summary: flink do checkpoint error because parquet file is missing Key: HUDI-2576 URL: https://issues.apache.org/jira/browse/HUDI-2576 Project: Apache Hudi Issue Type: Bug Components: Flink Integration Affects Versions: 0.10.0 Reporter: liyuanzhao435 Fix For: 0.10.0 Attachments: error.txt hudi:0.10.0, flink 1.13.1 some times when flink do checkpoint , error occurs, the error shows a hudi parquet file is missing (says file not exists) : *2021-10-19 09:20:03,796 INFO org.apache.hudi.io.storage.row.HoodieRowDataCreateHandle [] - start close hoodie row data* *2021-10-19 09:20:03,800 WARN org.apache.hadoop.hdfs.DataStreamer [] - DataStreamer Exception* *java.io.FileNotFoundException: File does not exist: /tmp/test_liyz2/aa/2ff301cc-8db2-478e-b707-e8f2327ba38f-0_0-1-4_20211019091917.parquet (inode 32234795) Holder DFSClient_NONMAPREDUCE_633610786_99 does not have any open files.* *at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkLease(FSNamesystem.java:2815)* detail see appendix -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [hudi] nsivabalan commented on pull request #3820: [BUGFIX] Merge commit state from previous commit instead of current

nsivabalan commented on pull request #3820: URL: https://github.com/apache/hudi/pull/3820#issuecomment-946148718 yeah, the naming looks fine by me. btw, Can you please attach jira ticket to PR. prefix w/ ticket id. Especially for bugs, we need a tracking ticket. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[hudi] branch master updated (588a34a -> 335e80e)

This is an automated email from the ASF dual-hosted git repository. vinoth pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/hudi.git. from 588a34a [HUDI-2571] Remove include-flink-sql-connector-hive profile from flink bundle (#3818) add 335e80e [HUDI-2561] BitCaskDiskMap - avoiding hostname resolution when logging messages (#3811) No new revisions were added by this update. Summary of changes: .../java/org/apache/hudi/common/util/collection/BitCaskDiskMap.java | 5 + 1 file changed, 1 insertion(+), 4 deletions(-)

[GitHub] [hudi] davehagman commented on pull request #3820: [BUGFIX] Merge commit state from previous commit instead of current

davehagman commented on pull request #3820: URL: https://github.com/apache/hudi/pull/3820#issuecomment-946035803 I like that idea a lot. It reduces the chance of error as well. Here are some thoughts: > a new config called `hoodie.copy.over.deltastreamer.checkpoints` Since this is very specific to multi-writer/OCC what about putting it under the `concurrency` namespace? Something like `hoodie.write.concurrency.merge.deltastreamer.state`. This also removes the implementation detail of "checkpoint" in favor of a generalized "state" which will allow us to extend this to other keys in the future if necessary without needing more configs. > fetch value of "deltastreamer.checkpoint.key" from last committed transaction and copy to cur inflight commit extra metadata. Yea we can even re-use the existing code (still need my fix) that merges a key from the previous instant's metadata to the inflight (current) one. Now we will just make this access private and only expose a new method which is specific to copying over checkpoint state if the above config is set. Something like: `TransactionUtils.mergeCheckpointStateFromPreviousCommit(thisInstant, previousCommit)` this will ultimately just call the existing `overrideWithLatestCommitMetadata` (now private) specifically with the metadata key `deltastreamer.checkpoint.key`, successfully abstracting details and removing the need for users to know anything about the internal state of commits. Thoughts? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] nsivabalan edited a comment on pull request #3820: [BUGFIX] Merge commit state from previous commit instead of current

nsivabalan edited a comment on pull request #3820: URL: https://github.com/apache/hudi/pull/3820#issuecomment-946024720 thanks a lot for fixing this Dave. I would like to propose something here. I am wondering why do we need to retrofit copying over delta streamer checkpoint into logic meant for hoodie.write.meta.key.prefixes. to me, this new requirement is very simple and not really tied to `hoodie.write.meta.key.prefixes`. Let me propose something and see how that looks like. Introduce a new config called `hoodie.copy.over.deltastreamer.checkpoints`. we can brainstorm on actual naming later. When set to true, within TransactionUtils::overrideWithLatestCommitMetadata ``` fetch value of "deltastreamer.checkpoint.key" from last committed transaction and copy to cur inflight commit extra metadata. ``` This is very tight and not error prone. Users don't need to set two different config as below which is not very intuitive as to why they need to do this. ``` hoodie.write.meta.key.prefixes = 'deltastreamer.checkpoint.key' ``` and optionally ``` deltastreamer.checkpoint.key =. "" ``` All users have to do is, for all of their spark writers, they need to set `hoodie.copy.over.deltastreamer.checkpoints` to true. welcome thoughts @n3nash @vinothchandar @davehagman -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] nsivabalan edited a comment on pull request #3820: [BUGFIX] Merge commit state from previous commit instead of current

nsivabalan edited a comment on pull request #3820: URL: https://github.com/apache/hudi/pull/3820#issuecomment-946024720 thanks a lot for fixing this Dave. I would like to propose something here. I am wondering why do we need to retrofit copying over delta streamer checkpoint into hoodie.write.meta.key.prefixes. to me, this new requirement is very simple and not really tied to `hoodie.write.meta.key.prefixes`. Let me propose something and see how that looks like. Introduce a new config called `hoodie.copy.over.deltastreamer.checkpoints`. we can brainstorm on actual naming later. When set to true, within TransactionUtils::overrideWithLatestCommitMetadata ``` fetch value of "deltastreamer.checkpoint.key" from last committed transaction and copy to cur inflight commit extra metadata. ``` This is very tight and not error prone. Users don't need to set two different config as below which is not very intuitive as to why they need to do this. ``` hoodie.write.meta.key.prefixes = 'deltastreamer.checkpoint.key' ``` and optionally ``` deltastreamer.checkpoint.key =. "" ``` All users have to do is, for all of their spark writers, they need to set `hoodie.copy.over.deltastreamer.checkpoints` to true. welcome thoughts @n3nash @vinothchandar @davehagman -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] nsivabalan commented on pull request #3820: [BUGFIX] Merge commit state from previous commit instead of current

nsivabalan commented on pull request #3820: URL: https://github.com/apache/hudi/pull/3820#issuecomment-946024720 thanks a lot for fixing this Dave. I would like to propose something here. I am wondering why do we need to retrofit copying over delta streamer checkpoint into hoodie.write.meta.key.prefixes. to me, this new requirement is very simple and not really tied to `hoodie.write.meta.key.prefixes`. Let me propose something and see how that looks like. Introduce a new config called `hoodie.copy.over.deltastreamer.checkpoints`. we can brainstorm on actual naming later. When set to true, within TransactionUtils::overrideWithLatestCommitMetadata ``` fetch value of "deltastreamer.checkpoint.key" from last committed transaction and copy to cur inflight commit extra metadata. ``` This is very tight and not error prone. Users don't need to set two different config as below which is not very intuitive as to why they need to do this. ``` hoodie.write.meta.key.prefixes = 'deltastreamer.checkpoint.key' ``` and optionally ``` deltastreamer.checkpoint.key =. "" ``` All users have to do is, for all of their spark writers, they need to set `hoodie.copy.over.deltastreamer.checkpoints` to true. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot edited a comment on pull request #3820: [BUGFIX] Merge commit state from previous commit instead of current

hudi-bot edited a comment on pull request #3820: URL: https://github.com/apache/hudi/pull/3820#issuecomment-945958915 ## CI report: * 8de6afb8a205a41de2a4b214c8982488b2b8ec19 Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=2708) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run travis` re-run the last Travis build - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] vinothchandar merged pull request #3811: [HUDI-2561] BitCaskDiskMap - avoiding hostname resolution when logging messages

vinothchandar merged pull request #3811: URL: https://github.com/apache/hudi/pull/3811 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] xushiyan commented on pull request #3781: [HUDI-2540] Fixed wrong validation for metadataTableEnabled in HoodieTable

xushiyan commented on pull request #3781: URL: https://github.com/apache/hudi/pull/3781#issuecomment-945974656 @RocMarshal for this PR's failure, it's most likely based on an impacted master build. you may want to rebase next time to stay on top of master. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (HUDI-2573) Deadlock w/ multi writer due to double locking

[ https://issues.apache.org/jira/browse/HUDI-2573?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] sivabalan narayanan updated HUDI-2573: -- Priority: Blocker (was: Major) > Deadlock w/ multi writer due to double locking > -- > > Key: HUDI-2573 > URL: https://issues.apache.org/jira/browse/HUDI-2573 > Project: Apache Hudi > Issue Type: Sub-task >Reporter: sivabalan narayanan >Assignee: sivabalan narayanan >Priority: Blocker > Labels: release-blocker, sev:critical > Fix For: 0.10.0 > > > With synchronous metadata patch, we added locking for cleaning and rollbacks. > but there are code paths, where we do double locking and hence it hangs or > fails after sometime. > > inline cleaning enabled. > > C1 acquires lock. > post commit -> triggers cleaning. > cleaning again tries to acquire lock when about to > commit and this is problematic. > > Also, when upgrade is needed, we take a lock and rollback failed writes. this > again will run into issues w/ double locking. > > > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (HUDI-2559) Ensure unique timestamps are generated for commit times with concurrent writers

[

https://issues.apache.org/jira/browse/HUDI-2559?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

sivabalan narayanan updated HUDI-2559:

--

Priority: Blocker (was: Major)

> Ensure unique timestamps are generated for commit times with concurrent

> writers

> ---

>

> Key: HUDI-2559

> URL: https://issues.apache.org/jira/browse/HUDI-2559

> Project: Apache Hudi

> Issue Type: Sub-task

>Reporter: sivabalan narayanan

>Assignee: sivabalan narayanan

>Priority: Blocker

> Labels: release-blocker, sev:critical

>

> Ensure unique timestamps are generated for commit times with concurrent

> writers.

> this is the piece of code in HoodieActiveTimeline which creates a new commit

> time.

> {code:java}

> public static String createNewInstantTime(long milliseconds) {

> return lastInstantTime.updateAndGet((oldVal) -> {

> String newCommitTime;

> do {

> newCommitTime = HoodieActiveTimeline.COMMIT_FORMATTER.format(new

> Date(System.currentTimeMillis() + milliseconds));

> } while (HoodieTimeline.compareTimestamps(newCommitTime,

> LESSER_THAN_OR_EQUALS, oldVal));

> return newCommitTime;

> });

> }

> {code}

> There are chances that a deltastreamer and a concurrent spark ds writer gets

> same timestamp and one of them fails.

> Related issues and github jiras:

> [https://github.com/apache/hudi/issues/3782]

> https://issues.apache.org/jira/browse/HUDI-2549

>

>

>

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Updated] (HUDI-2573) Deadlock w/ multi writer due to double locking

[ https://issues.apache.org/jira/browse/HUDI-2573?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] sivabalan narayanan updated HUDI-2573: -- Parent: HUDI-1292 Issue Type: Sub-task (was: Bug) > Deadlock w/ multi writer due to double locking > -- > > Key: HUDI-2573 > URL: https://issues.apache.org/jira/browse/HUDI-2573 > Project: Apache Hudi > Issue Type: Sub-task >Reporter: sivabalan narayanan >Assignee: sivabalan narayanan >Priority: Major > Labels: release-blocker, sev:critical > Fix For: 0.10.0 > > > With synchronous metadata patch, we added locking for cleaning and rollbacks. > but there are code paths, where we do double locking and hence it hangs or > fails after sometime. > > inline cleaning enabled. > > C1 acquires lock. > post commit -> triggers cleaning. > cleaning again tries to acquire lock when about to > commit and this is problematic. > > Also, when upgrade is needed, we take a lock and rollback failed writes. this > again will run into issues w/ double locking. > > > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (HUDI-2559) Ensure unique timestamps are generated for commit times with concurrent writers

[

https://issues.apache.org/jira/browse/HUDI-2559?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

sivabalan narayanan updated HUDI-2559:

--

Parent: HUDI-1292

Issue Type: Sub-task (was: Improvement)

> Ensure unique timestamps are generated for commit times with concurrent

> writers

> ---

>

> Key: HUDI-2559

> URL: https://issues.apache.org/jira/browse/HUDI-2559

> Project: Apache Hudi

> Issue Type: Sub-task

>Reporter: sivabalan narayanan

>Assignee: sivabalan narayanan

>Priority: Major

> Labels: release-blocker, sev:critical

>

> Ensure unique timestamps are generated for commit times with concurrent

> writers.

> this is the piece of code in HoodieActiveTimeline which creates a new commit

> time.

> {code:java}

> public static String createNewInstantTime(long milliseconds) {

> return lastInstantTime.updateAndGet((oldVal) -> {

> String newCommitTime;

> do {

> newCommitTime = HoodieActiveTimeline.COMMIT_FORMATTER.format(new

> Date(System.currentTimeMillis() + milliseconds));

> } while (HoodieTimeline.compareTimestamps(newCommitTime,

> LESSER_THAN_OR_EQUALS, oldVal));

> return newCommitTime;

> });

> }

> {code}

> There are chances that a deltastreamer and a concurrent spark ds writer gets

> same timestamp and one of them fails.

> Related issues and github jiras:

> [https://github.com/apache/hudi/issues/3782]

> https://issues.apache.org/jira/browse/HUDI-2549

>

>

>

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [hudi] hudi-bot edited a comment on pull request #3820: [BUGFIX] Merge commit state from previous commit instead of current

hudi-bot edited a comment on pull request #3820: URL: https://github.com/apache/hudi/pull/3820#issuecomment-945958915 ## CI report: * 8de6afb8a205a41de2a4b214c8982488b2b8ec19 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=2708) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run travis` re-run the last Travis build - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] davehagman commented on pull request #3820: [BUGFIX] Merge commit state from previous commit instead of current

davehagman commented on pull request #3820: URL: https://github.com/apache/hudi/pull/3820#issuecomment-945961702 I also noticed that there isn't any documentation around `hoodie.write.meta.key.prefixes` config in the multi-writer docs. We should add something about it since it is very important if you're multi-writer table includes a deltastreamer. Thoughts? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #3820: [BUGFIX] Merge commit state from previous commit instead of current

hudi-bot commented on pull request #3820: URL: https://github.com/apache/hudi/pull/3820#issuecomment-945958915 ## CI report: * 8de6afb8a205a41de2a4b214c8982488b2b8ec19 UNKNOWN Bot commands @hudi-bot supports the following commands: - `@hudi-bot run travis` re-run the last Travis build - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (HUDI-2573) Deadlock w/ multi writer due to double locking

[ https://issues.apache.org/jira/browse/HUDI-2573?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] sivabalan narayanan updated HUDI-2573: -- Labels: release-blocker sev:critical (was: ) > Deadlock w/ multi writer due to double locking > -- > > Key: HUDI-2573 > URL: https://issues.apache.org/jira/browse/HUDI-2573 > Project: Apache Hudi > Issue Type: Bug >Reporter: sivabalan narayanan >Assignee: sivabalan narayanan >Priority: Major > Labels: release-blocker, sev:critical > Fix For: 0.10.0 > > > With synchronous metadata patch, we added locking for cleaning and rollbacks. > but there are code paths, where we do double locking and hence it hangs or > fails after sometime. > > inline cleaning enabled. > > C1 acquires lock. > post commit -> triggers cleaning. > cleaning again tries to acquire lock when about to > commit and this is problematic. > > Also, when upgrade is needed, we take a lock and rollback failed writes. this > again will run into issues w/ double locking. > > > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (HUDI-2559) Ensure unique timestamps are generated for commit times with concurrent writers

[

https://issues.apache.org/jira/browse/HUDI-2559?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

sivabalan narayanan updated HUDI-2559:

--

Labels: release-blocker sev:critical (was: )

> Ensure unique timestamps are generated for commit times with concurrent

> writers

> ---

>

> Key: HUDI-2559

> URL: https://issues.apache.org/jira/browse/HUDI-2559

> Project: Apache Hudi

> Issue Type: Improvement

>Reporter: sivabalan narayanan

>Assignee: sivabalan narayanan

>Priority: Major

> Labels: release-blocker, sev:critical

>

> Ensure unique timestamps are generated for commit times with concurrent

> writers.

> this is the piece of code in HoodieActiveTimeline which creates a new commit

> time.

> {code:java}

> public static String createNewInstantTime(long milliseconds) {

> return lastInstantTime.updateAndGet((oldVal) -> {

> String newCommitTime;

> do {

> newCommitTime = HoodieActiveTimeline.COMMIT_FORMATTER.format(new

> Date(System.currentTimeMillis() + milliseconds));

> } while (HoodieTimeline.compareTimestamps(newCommitTime,

> LESSER_THAN_OR_EQUALS, oldVal));

> return newCommitTime;

> });

> }

> {code}

> There are chances that a deltastreamer and a concurrent spark ds writer gets

> same timestamp and one of them fails.

> Related issues and github jiras:

> [https://github.com/apache/hudi/issues/3782]

> https://issues.apache.org/jira/browse/HUDI-2549

>

>

>

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[jira] [Updated] (HUDI-1912) Presto defaults to GenericHiveRecordCursor for all Hudi tables

[