[GitHub] [hudi] TJX2014 commented on a diff in pull request #6567: [HUDI-4767] Fix non partition table in hudi-flink ignore KEYGEN_CLASS…

TJX2014 commented on code in PR #6567:

URL: https://github.com/apache/hudi/pull/6567#discussion_r962542029

##

hudi-flink-datasource/hudi-flink/src/main/java/org/apache/hudi/table/HoodieTableFactory.java:

##

@@ -217,31 +217,33 @@ private static void setupHoodieKeyOptions(Configuration

conf, CatalogTable table

}

}

-// tweak the key gen class if possible

-final String[] partitions =

conf.getString(FlinkOptions.PARTITION_PATH_FIELD).split(",");

-final String[] pks =

conf.getString(FlinkOptions.RECORD_KEY_FIELD).split(",");

-if (partitions.length == 1) {

- final String partitionField = partitions[0];

- if (partitionField.isEmpty()) {

-conf.setString(FlinkOptions.KEYGEN_CLASS_NAME,

NonpartitionedAvroKeyGenerator.class.getName());

-LOG.info("Table option [{}] is reset to {} because this is a

non-partitioned table",

-FlinkOptions.KEYGEN_CLASS_NAME.key(),

NonpartitionedAvroKeyGenerator.class.getName());

-return;

+if (StringUtils.isNullOrEmpty(conf.get(FlinkOptions.KEYGEN_CLASS_NAME))) {

+ // tweak the key gen class if possible

Review Comment:

Hudi configure keygen clazz auto is great, so the option should not exists,

once configured but not effect, is it strange?The code in spark has changed to

follow hudi-partition way, but in historical data, if the layout of

non-partitioned table with complex key by spark, the only chance for hudi-flink

it to configure keygen.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #6587: [HUDI-4775] Fixing incremental source for MOR table

hudi-bot commented on PR #6587: URL: https://github.com/apache/hudi/pull/6587#issuecomment-1236609160 ## CI report: * 9c996aa5881d2a9e341b5181ef635750a7f4c926 Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=11142) * a8bbdf4475b8a9c204c2547071ecdb7ba26691ae UNKNOWN Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #6046: [HUDI-4363] Support Clustering row writer to improve performance

hudi-bot commented on PR #6046: URL: https://github.com/apache/hudi/pull/6046#issuecomment-1236608382 ## CI report: * 1c913457d2dd531fd1ecae6b0d60e600f59e261b Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=10760) * b8e848d0f8b32ff3c75762951e3af4c911419927 UNKNOWN Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #5478: [HUDI-3998] Fix getCommitsSinceLastCleaning failed when async cleaning

hudi-bot commented on PR #5478: URL: https://github.com/apache/hudi/pull/5478#issuecomment-1236607892 ## CI report: * 9b10ad3fb80db31e34e46abbd5d0b3ba9f179a8b Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=11140) * 9b0e2c00879a4b3b8fdfebb1a4ead10b1eed60eb UNKNOWN Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] TJX2014 commented on a diff in pull request #6567: [HUDI-4767] Fix non partition table in hudi-flink ignore KEYGEN_CLASS…

TJX2014 commented on code in PR #6567:

URL: https://github.com/apache/hudi/pull/6567#discussion_r962542972

##

hudi-flink-datasource/hudi-flink/src/main/java/org/apache/hudi/table/HoodieTableFactory.java:

##

@@ -217,31 +217,33 @@ private static void setupHoodieKeyOptions(Configuration

conf, CatalogTable table

}

}

-// tweak the key gen class if possible

-final String[] partitions =

conf.getString(FlinkOptions.PARTITION_PATH_FIELD).split(",");

-final String[] pks =

conf.getString(FlinkOptions.RECORD_KEY_FIELD).split(",");

-if (partitions.length == 1) {

- final String partitionField = partitions[0];

- if (partitionField.isEmpty()) {

-conf.setString(FlinkOptions.KEYGEN_CLASS_NAME,

NonpartitionedAvroKeyGenerator.class.getName());

-LOG.info("Table option [{}] is reset to {} because this is a

non-partitioned table",

-FlinkOptions.KEYGEN_CLASS_NAME.key(),

NonpartitionedAvroKeyGenerator.class.getName());

-return;

+if (StringUtils.isNullOrEmpty(conf.get(FlinkOptions.KEYGEN_CLASS_NAME))) {

+ // tweak the key gen class if possible

Review Comment:

Hudi configure keygen clazz auto is great, so the option should not exists,

once configured but not effect, is it strange?The code in spark has changed to

follow hudi-partition way, but in historical data, if the layout of

non-partitioned table with complex key by spark, the only chance for hudi-flink

is to configure keygen.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] TJX2014 commented on a diff in pull request #6567: [HUDI-4767] Fix non partition table in hudi-flink ignore KEYGEN_CLASS…

TJX2014 commented on code in PR #6567:

URL: https://github.com/apache/hudi/pull/6567#discussion_r962542972

##

hudi-flink-datasource/hudi-flink/src/main/java/org/apache/hudi/table/HoodieTableFactory.java:

##

@@ -217,31 +217,33 @@ private static void setupHoodieKeyOptions(Configuration

conf, CatalogTable table

}

}

-// tweak the key gen class if possible

-final String[] partitions =

conf.getString(FlinkOptions.PARTITION_PATH_FIELD).split(",");

-final String[] pks =

conf.getString(FlinkOptions.RECORD_KEY_FIELD).split(",");

-if (partitions.length == 1) {

- final String partitionField = partitions[0];

- if (partitionField.isEmpty()) {

-conf.setString(FlinkOptions.KEYGEN_CLASS_NAME,

NonpartitionedAvroKeyGenerator.class.getName());

-LOG.info("Table option [{}] is reset to {} because this is a

non-partitioned table",

-FlinkOptions.KEYGEN_CLASS_NAME.key(),

NonpartitionedAvroKeyGenerator.class.getName());

-return;

+if (StringUtils.isNullOrEmpty(conf.get(FlinkOptions.KEYGEN_CLASS_NAME))) {

+ // tweak the key gen class if possible

Review Comment:

Hudi configure keygen clazz auto is great, so the option should not exists,

once configured but not effect, is it strange?The code in spark has changed to

follow hudi-partition way, but in historical data, if the layout of

non-partitioned table with complex key by spark, the only chance for hudi-flink

it to configure keygen.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] TJX2014 commented on a diff in pull request #6567: [HUDI-4767] Fix non partition table in hudi-flink ignore KEYGEN_CLASS…

TJX2014 commented on code in PR #6567:

URL: https://github.com/apache/hudi/pull/6567#discussion_r962542029

##

hudi-flink-datasource/hudi-flink/src/main/java/org/apache/hudi/table/HoodieTableFactory.java:

##

@@ -217,31 +217,33 @@ private static void setupHoodieKeyOptions(Configuration

conf, CatalogTable table

}

}

-// tweak the key gen class if possible

-final String[] partitions =

conf.getString(FlinkOptions.PARTITION_PATH_FIELD).split(",");

-final String[] pks =

conf.getString(FlinkOptions.RECORD_KEY_FIELD).split(",");

-if (partitions.length == 1) {

- final String partitionField = partitions[0];

- if (partitionField.isEmpty()) {

-conf.setString(FlinkOptions.KEYGEN_CLASS_NAME,

NonpartitionedAvroKeyGenerator.class.getName());

-LOG.info("Table option [{}] is reset to {} because this is a

non-partitioned table",

-FlinkOptions.KEYGEN_CLASS_NAME.key(),

NonpartitionedAvroKeyGenerator.class.getName());

-return;

+if (StringUtils.isNullOrEmpty(conf.get(FlinkOptions.KEYGEN_CLASS_NAME))) {

+ // tweak the key gen class if possible

Review Comment:

Hudi configure keygen clazz auto is great, so the option should not exists,

once configured but not effect, is it strange?The code in spark has changed to

follow hudi-partition way, but in historical data, if the layout of

non-partitioned table with complex key by spark, the only chance for hudi-flink

it to configure keygen.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #6566: [HUDI-4766] Fix HoodieFlinkClusteringJob

hudi-bot commented on PR #6566: URL: https://github.com/apache/hudi/pull/6566#issuecomment-1236605102 ## CI report: * b10c9d062f03c2c2675866c6f4bf6346dc03ea49 UNKNOWN * a2dcd81f74603e88c4db895900d43eee6702a6da UNKNOWN * c404647afc6d26bc0e69a7a8ef93f378b397bb96 UNKNOWN * 1709f71ae9494da4d7ca6b9c62ac97cd11dd8046 Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=11146) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

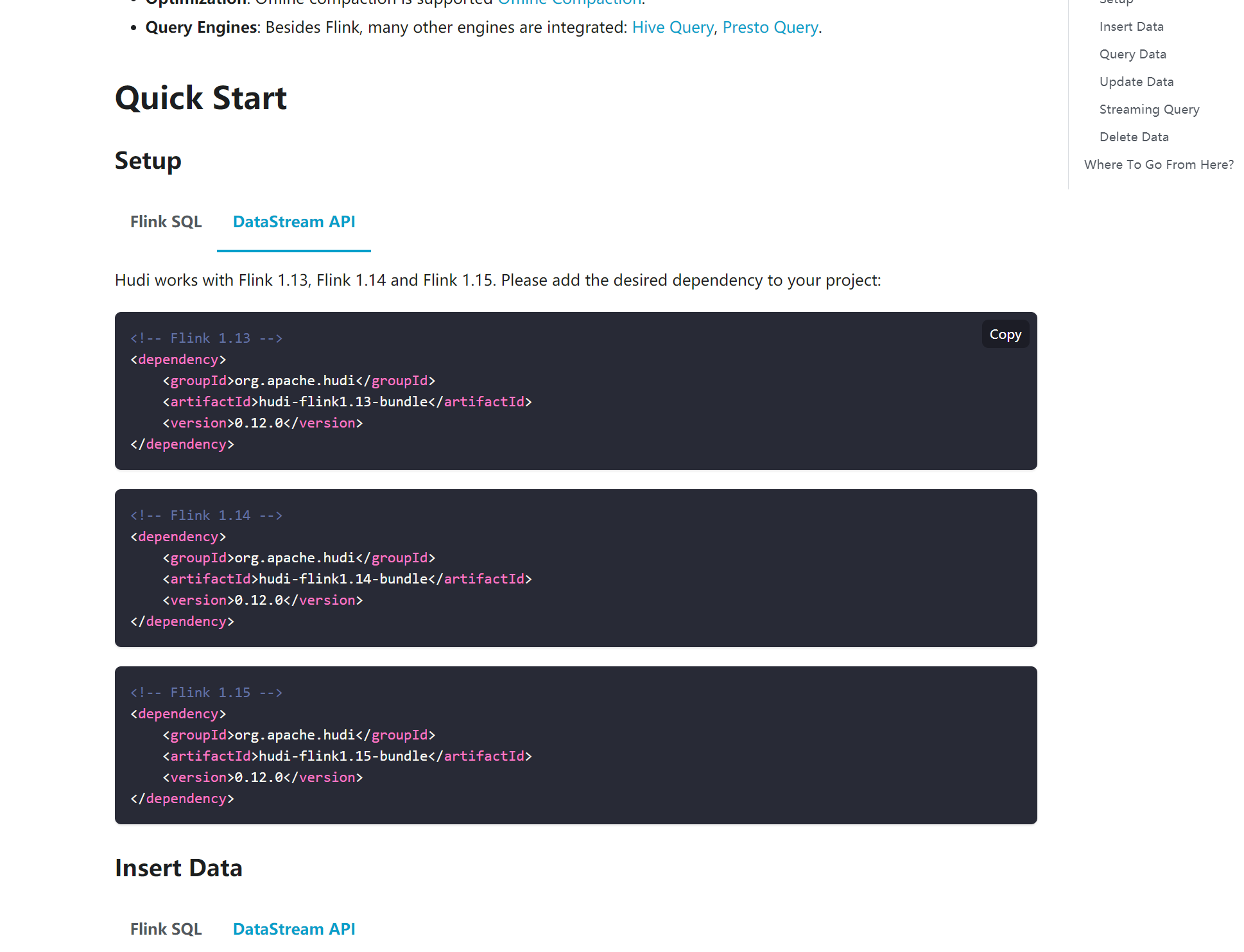

[GitHub] [hudi] danny0405 commented on pull request #6582: [DOCS] Add Flink DataStream API demo in Flink Guide.

danny0405 commented on PR #6582: URL: https://github.com/apache/hudi/pull/6582#issuecomment-1236604028 Thanks, can we also add the doc for archived release 0.12.0 ? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] boneanxs commented on a diff in pull request #6046: [HUDI-4363] Support Clustering row writer to improve performance

boneanxs commented on code in PR #6046:

URL: https://github.com/apache/hudi/pull/6046#discussion_r962540143

##

hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/execution/bulkinsert/RowSpatialCurveSortPartitioner.java:

##

@@ -0,0 +1,75 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.execution.bulkinsert;

+

+import org.apache.hudi.config.HoodieClusteringConfig;

+import org.apache.hudi.config.HoodieWriteConfig;

+import org.apache.hudi.sort.SpaceCurveSortingHelper;

+import org.apache.spark.sql.Dataset;

+import org.apache.spark.sql.Row;

+

+import java.util.Arrays;

+import java.util.List;

+

+public class RowSpatialCurveSortPartitioner extends

RowCustomColumnsSortPartitioner {

+

+ private final String[] orderByColumns;

+ private final HoodieClusteringConfig.LayoutOptimizationStrategy

layoutOptStrategy;

+ private final HoodieClusteringConfig.SpatialCurveCompositionStrategyType

curveCompositionStrategyType;

+

+ public RowSpatialCurveSortPartitioner(HoodieWriteConfig config) {

+super(config);

+this.layoutOptStrategy = config.getLayoutOptimizationStrategy();

+if (config.getClusteringSortColumns() != null) {

+ this.orderByColumns =

Arrays.stream(config.getClusteringSortColumns().split(","))

+ .map(String::trim).toArray(String[]::new);

+} else {

+ this.orderByColumns = getSortColumnNames();

+}

+this.curveCompositionStrategyType =

config.getLayoutOptimizationCurveBuildMethod();

+ }

+

+ @Override

+ public Dataset repartitionRecords(Dataset records, int

outputPartitions) {

+return reorder(records, outputPartitions);

Review Comment:

Looks When building clustering plan, we already consider this, only same

partition files will be combined to one `clusteringGroup`, so maybe we don't

need to handle it here?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] danny0405 commented on a diff in pull request #6385: [HUDI-4614] fix primary key extract of delete_record when complexKeyGen configured and ChangeLogDisabled

danny0405 commented on code in PR #6385:

URL: https://github.com/apache/hudi/pull/6385#discussion_r962539009

##

hudi-client/hudi-client-common/src/main/java/org/apache/hudi/keygen/KeyGenUtils.java:

##

@@ -73,21 +73,20 @@ public static String

getPartitionPathFromGenericRecord(GenericRecord genericReco

*/

public static String[] extractRecordKeys(String recordKey) {

String[] fieldKV = recordKey.split(",");

-if (fieldKV.length == 1) {

- return fieldKV;

-} else {

- // a complex key

- return Arrays.stream(fieldKV).map(kv -> {

-final String[] kvArray = kv.split(":");

-if (kvArray[1].equals(NULL_RECORDKEY_PLACEHOLDER)) {

- return null;

-} else if (kvArray[1].equals(EMPTY_RECORDKEY_PLACEHOLDER)) {

- return "";

-} else {

- return kvArray[1];

-}

- }).toArray(String[]::new);

-}

+

+return Arrays.stream(fieldKV).map(kv -> {

+ final String[] kvArray = kv.split(":");

Review Comment:

Thanks, we can rebase the PR and fix it.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] prasannarajaperumal commented on a diff in pull request #6476: [HUDI-3478] Support CDC for Spark in Hudi

prasannarajaperumal commented on code in PR #6476:

URL: https://github.com/apache/hudi/pull/6476#discussion_r962517070

##

hudi-common/src/main/java/org/apache/hudi/common/table/cdc/CDCExtractor.java:

##

@@ -0,0 +1,359 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.common.table.cdc;

+

+import org.apache.hadoop.fs.FileStatus;

+import org.apache.hadoop.fs.FileSystem;

+import org.apache.hadoop.fs.Path;

+

+import org.apache.hudi.common.fs.FSUtils;

+import org.apache.hudi.common.model.HoodieBaseFile;

+import org.apache.hudi.common.model.HoodieCommitMetadata;

+import org.apache.hudi.common.model.HoodieFileFormat;

+import org.apache.hudi.common.model.HoodieFileGroupId;

+import org.apache.hudi.common.model.HoodieLogFile;

+import org.apache.hudi.common.model.HoodieReplaceCommitMetadata;

+import org.apache.hudi.common.model.HoodieTableType;

+import org.apache.hudi.common.model.HoodieWriteStat;

+import org.apache.hudi.common.model.FileSlice;

+import org.apache.hudi.common.model.WriteOperationType;

+import org.apache.hudi.common.table.HoodieTableConfig;

+import org.apache.hudi.common.table.HoodieTableMetaClient;

+import org.apache.hudi.common.table.timeline.HoodieActiveTimeline;

+import org.apache.hudi.common.table.timeline.HoodieInstant;

+import org.apache.hudi.common.table.timeline.HoodieTimeline;

+import org.apache.hudi.common.table.view.HoodieTableFileSystemView;

+import org.apache.hudi.common.util.Option;

+import org.apache.hudi.common.util.StringUtils;

+import org.apache.hudi.common.util.collection.Pair;

+import org.apache.hudi.exception.HoodieException;

+import org.apache.hudi.exception.HoodieIOException;

+import org.apache.hudi.exception.HoodieNotSupportedException;

+

+import java.io.IOException;

+import java.util.ArrayList;

+import java.util.Arrays;

+import java.util.HashMap;

+import java.util.HashSet;

+import java.util.Locale;

+import java.util.List;

+import java.util.Map;

+import java.util.Set;

+import java.util.stream.Collectors;

+

+import static

org.apache.hudi.common.table.cdc.HoodieCDCLogicalFileType.ADD_BASE_FILE;

+import static

org.apache.hudi.common.table.cdc.HoodieCDCLogicalFileType.CDC_LOG_FILE;

+import static

org.apache.hudi.common.table.cdc.HoodieCDCLogicalFileType.MOR_LOG_FILE;

+import static

org.apache.hudi.common.table.cdc.HoodieCDCLogicalFileType.REMOVE_BASE_FILE;

+import static

org.apache.hudi.common.table.cdc.HoodieCDCLogicalFileType.REPLACED_FILE_GROUP;

+import static

org.apache.hudi.common.table.timeline.HoodieTimeline.COMMIT_ACTION;

+import static

org.apache.hudi.common.table.timeline.HoodieTimeline.DELTA_COMMIT_ACTION;

+import static org.apache.hudi.common.table.timeline.HoodieTimeline.isInRange;

+import static

org.apache.hudi.common.table.timeline.HoodieTimeline.REPLACE_COMMIT_ACTION;

+

+public class CDCExtractor {

+

+ private final HoodieTableMetaClient metaClient;

+

+ private final Path basePath;

+

+ private final FileSystem fs;

+

+ private final String supplementalLoggingMode;

+

+ private final String startInstant;

+

+ private final String endInstant;

+

+ // TODO: this will be used when support the cdc query type of

'read_optimized'.

+ private final String cdcQueryType;

+

+ private Map commits;

+

+ private HoodieTableFileSystemView fsView;

+

+ public CDCExtractor(

+ HoodieTableMetaClient metaClient,

+ String startInstant,

+ String endInstant,

+ String cdcqueryType) {

+this.metaClient = metaClient;

+this.basePath = metaClient.getBasePathV2();

+this.fs = metaClient.getFs().getFileSystem();

+this.supplementalLoggingMode =

metaClient.getTableConfig().cdcSupplementalLoggingMode();

+this.startInstant = startInstant;

+this.endInstant = endInstant;

+if (HoodieTableType.MERGE_ON_READ == metaClient.getTableType()

+&& cdcqueryType.equals("read_optimized")) {

+ throw new HoodieNotSupportedException("The 'read_optimized' cdc query

type hasn't been supported for now.");

+}

+this.cdcQueryType = cdcqueryType;

+init();

+ }

+

+ private void init() {

+initInstantAndCommitMetadatas();

+initFSView();

+ }

+

+ /**

+ * At the granularity of a file group, trace the mapping between

+ * eac

[GitHub] [hudi] prasannarajaperumal commented on a diff in pull request #6476: [HUDI-3478] Support CDC for Spark in Hudi

prasannarajaperumal commented on code in PR #6476:

URL: https://github.com/apache/hudi/pull/6476#discussion_r962510356

##

hudi-common/src/main/java/org/apache/hudi/avro/SerializableRecord.java:

##

@@ -0,0 +1,42 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.avro;

+

+import org.apache.avro.generic.GenericData;

+

+import java.io.Serializable;

+

+/**

+ * In some cases like putting the [[GenericData.Record]] into

[[ExternalSpillableMap]],

+ * objects is asked to extend [[Serializable]].

+ *

+ * This class wraps [[GenericData.Record]].

+ */

+public class SerializableRecord implements Serializable {

Review Comment:

How does this work? GenericData.Record is not serializable so how would

storing this in SpillableMap actually serialize and de-serialize the data when

spilled.

1. We should write a test on the spillable property with CDC context.

2. If the serialization is not thought through - Create something similar to

HoodieAvroPayload (HoodieCDCPayload) and store the contents as byte[]

##

hudi-common/src/main/java/org/apache/hudi/common/table/cdc/CDCExtractor.java:

##

@@ -0,0 +1,359 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.common.table.cdc;

+

+import org.apache.hadoop.fs.FileStatus;

+import org.apache.hadoop.fs.FileSystem;

+import org.apache.hadoop.fs.Path;

+

+import org.apache.hudi.common.fs.FSUtils;

+import org.apache.hudi.common.model.HoodieBaseFile;

+import org.apache.hudi.common.model.HoodieCommitMetadata;

+import org.apache.hudi.common.model.HoodieFileFormat;

+import org.apache.hudi.common.model.HoodieFileGroupId;

+import org.apache.hudi.common.model.HoodieLogFile;

+import org.apache.hudi.common.model.HoodieReplaceCommitMetadata;

+import org.apache.hudi.common.model.HoodieTableType;

+import org.apache.hudi.common.model.HoodieWriteStat;

+import org.apache.hudi.common.model.FileSlice;

+import org.apache.hudi.common.model.WriteOperationType;

+import org.apache.hudi.common.table.HoodieTableConfig;

+import org.apache.hudi.common.table.HoodieTableMetaClient;

+import org.apache.hudi.common.table.timeline.HoodieActiveTimeline;

+import org.apache.hudi.common.table.timeline.HoodieInstant;

+import org.apache.hudi.common.table.timeline.HoodieTimeline;

+import org.apache.hudi.common.table.view.HoodieTableFileSystemView;

+import org.apache.hudi.common.util.Option;

+import org.apache.hudi.common.util.StringUtils;

+import org.apache.hudi.common.util.collection.Pair;

+import org.apache.hudi.exception.HoodieException;

+import org.apache.hudi.exception.HoodieIOException;

+import org.apache.hudi.exception.HoodieNotSupportedException;

+

+import java.io.IOException;

+import java.util.ArrayList;

+import java.util.Arrays;

+import java.util.HashMap;

+import java.util.HashSet;

+import java.util.Locale;

+import java.util.List;

+import java.util.Map;

+import java.util.Set;

+import java.util.stream.Collectors;

+

+import static

org.apache.hudi.common.table.cdc.HoodieCDCLogicalFileType.ADD_BASE_FILE;

+import static

org.apache.hudi.common.table.cdc.HoodieCDCLogicalFileType.CDC_LOG_FILE;

+import static

org.apache.hudi.common.table.cdc.HoodieCDCLogicalFileType.MOR_LOG_FILE;

+import static

org.apache.hudi.common.table.cdc.HoodieCDCLogicalFileType.REMOVE_BASE_FILE;

+import static

org.apache.hudi.common.table.cdc.HoodieCDCLogicalFileType.REPLACED_FILE_GROUP;

+import static

org.apache.hudi.co

[GitHub] [hudi] nleena123 commented on issue #5540: [SUPPORT]HoodieException: Commit 20220509105215 failed and rolled-back ! at org.apache.hudi.utilities.deltastreamer.DeltaSync.writeToSink(DeltaSync.

nleena123 commented on issue #5540: URL: https://github.com/apache/hudi/issues/5540#issuecomment-1236584029 still i could see the same issue do i need to follow the below step to fix the issue ? --conf spark.driver.extraJavaOptions="-Dlog4j.configuration=file:/home/hadoop/log4j.properties" --conf spark.executor.extraJavaOptions="-Dlog4j.configuration=file:/home/hadoop/log4j.properties" but i am getting this issue while running data bricks job ? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] dik111 closed issue #6588: [SUPPORT]Caused by: java.lang.ClassNotFoundException: org.apache.hudi.org.apache.avro.util.Utf8

dik111 closed issue #6588: [SUPPORT]Caused by: java.lang.ClassNotFoundException: org.apache.hudi.org.apache.avro.util.Utf8 URL: https://github.com/apache/hudi/issues/6588 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] dik111 commented on issue #6588: [SUPPORT]Caused by: java.lang.ClassNotFoundException: org.apache.hudi.org.apache.avro.util.Utf8

dik111 commented on issue #6588: URL: https://github.com/apache/hudi/issues/6588#issuecomment-1236580669 I solved this problem by adding the following configuration in Packaging/Hudi-spark-bundle/pom.xml ``` ... org.apache.avro:avro ... ... org.apache.avro. org.apache.hudi.org.apache.avro. ... ... org.apache.avro avro 1.8.2 compile ... ``` -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] codope commented on a diff in pull request #6587: [HUDI-4775] Fixing incremental source for MOR table

codope commented on code in PR #6587:

URL: https://github.com/apache/hudi/pull/6587#discussion_r962515108

##

hudi-utilities/src/test/java/org/apache/hudi/utilities/sources/TestHoodieIncrSource.java:

##

@@ -55,20 +66,39 @@ public class TestHoodieIncrSource extends

SparkClientFunctionalTestHarness {

private HoodieTestDataGenerator dataGen;

private HoodieTableMetaClient metaClient;

+ private HoodieTableType tableType = COPY_ON_WRITE;

@BeforeEach

public void setUp() throws IOException {

dataGen = new HoodieTestDataGenerator();

-metaClient = getHoodieMetaClient(hadoopConf(), basePath());

}

- @Test

- public void testHoodieIncrSource() throws IOException {

+ @Override

+ public HoodieTableMetaClient getHoodieMetaClient(Configuration hadoopConf,

String basePath, Properties props) throws IOException {

+props = HoodieTableMetaClient.withPropertyBuilder()

+.setTableName(RAW_TRIPS_TEST_NAME)

+.setTableType(tableType)

+.setPayloadClass(HoodieAvroPayload.class)

+.fromProperties(props)

+.build();

+return HoodieTableMetaClient.initTableAndGetMetaClient(hadoopConf,

basePath, props);

+ }

+

+ private static Stream tableTypeParams() {

+return Arrays.stream(new HoodieTableType[][]

{{HoodieTableType.COPY_ON_WRITE},

{HoodieTableType.MERGE_ON_READ}}).map(Arguments::of);

+ }

+

+ @ParameterizedTest

+ @MethodSource("tableTypeParams")

+ public void testHoodieIncrSource(HoodieTableType tableType) throws

IOException {

+this.tableType = tableType;

+metaClient = getHoodieMetaClient(hadoopConf(), basePath());

HoodieWriteConfig writeConfig = getConfigBuilder(basePath(), metaClient)

.withArchivalConfig(HoodieArchivalConfig.newBuilder().archiveCommitsWith(2,

3).build())

.withCleanConfig(HoodieCleanConfig.newBuilder().retainCommits(1).build())

+

.withCompactionConfig(HoodieCompactionConfig.newBuilder().withInlineCompaction(true).withMaxNumDeltaCommitsBeforeCompaction(3).build())

.withMetadataConfig(HoodieMetadataConfig.newBuilder()

-.withMaxNumDeltaCommitsBeforeCompaction(1).build())

+.enable(false).build())

Review Comment:

got it

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[hudi] branch master updated (edbd7fd6cc -> d2c46fb62a)

This is an automated email from the ASF dual-hosted git repository. codope pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/hudi.git from edbd7fd6cc [HUDI-4528] Add diff tool to compare commit metadata (#6485) add d2c46fb62a [HUDI-4648] Support rename partition through CLI (#6569) No new revisions were added by this update. Summary of changes: .../apache/hudi/cli/commands/RepairsCommand.java | 32 +++- .../org/apache/hudi/cli/commands/SparkMain.java| 87 +- .../hudi/cli/commands/TestRepairsCommand.java | 48 3 files changed, 146 insertions(+), 21 deletions(-)

[GitHub] [hudi] codope merged pull request #6569: [HUDI-4648] Support rename partition through CLI

codope merged PR #6569: URL: https://github.com/apache/hudi/pull/6569 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[hudi] branch master updated (24dd00724c -> edbd7fd6cc)

This is an automated email from the ASF dual-hosted git repository. codope pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/hudi.git from 24dd00724c [HUDI-4739] Wrong value returned when key's length equals 1 (#6539) add edbd7fd6cc [HUDI-4528] Add diff tool to compare commit metadata (#6485) No new revisions were added by this update. Summary of changes: .../apache/hudi/cli/HoodieTableHeaderFields.java | 32 +++ .../apache/hudi/cli/commands/CommitsCommand.java | 229 - .../hudi/cli/commands/CompactionCommand.java | 52 ++--- .../org/apache/hudi/cli/commands/DiffCommand.java | 184 + .../cli/commands/HoodieSyncValidateCommand.java| 5 +- .../apache/hudi/cli/commands/RollbacksCommand.java | 34 +++ .../java/org/apache/hudi/cli/utils/CommitUtil.java | 4 +- .../hudi/cli/commands/TestCommitsCommand.java | 87 +--- .../hudi/cli/commands/TestCompactionCommand.java | 2 +- .../apache/hudi/cli/commands/TestDiffCommand.java | 147 + .../HoodieTestCommitMetadataGenerator.java | 32 ++- .../hudi/common/table/HoodieTableMetaClient.java | 7 +- .../table/timeline/HoodieArchivedTimeline.java | 2 +- .../table/timeline/HoodieDefaultTimeline.java | 15 ++ .../hudi/common/table/timeline/TimelineUtils.java | 10 +- 15 files changed, 630 insertions(+), 212 deletions(-) create mode 100644 hudi-cli/src/main/java/org/apache/hudi/cli/commands/DiffCommand.java create mode 100644 hudi-cli/src/test/java/org/apache/hudi/cli/commands/TestDiffCommand.java

[GitHub] [hudi] codope merged pull request #6485: [HUDI-4528] Add diff tool to compare commit metadata

codope merged PR #6485: URL: https://github.com/apache/hudi/pull/6485 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] nsivabalan commented on a diff in pull request #6587: [HUDI-4775] Fixing incremental source for MOR table

nsivabalan commented on code in PR #6587:

URL: https://github.com/apache/hudi/pull/6587#discussion_r962507509

##

hudi-utilities/src/test/java/org/apache/hudi/utilities/sources/TestHoodieIncrSource.java:

##

@@ -55,20 +66,39 @@ public class TestHoodieIncrSource extends

SparkClientFunctionalTestHarness {

private HoodieTestDataGenerator dataGen;

private HoodieTableMetaClient metaClient;

+ private HoodieTableType tableType = COPY_ON_WRITE;

@BeforeEach

public void setUp() throws IOException {

dataGen = new HoodieTestDataGenerator();

-metaClient = getHoodieMetaClient(hadoopConf(), basePath());

}

- @Test

- public void testHoodieIncrSource() throws IOException {

+ @Override

+ public HoodieTableMetaClient getHoodieMetaClient(Configuration hadoopConf,

String basePath, Properties props) throws IOException {

+props = HoodieTableMetaClient.withPropertyBuilder()

+.setTableName(RAW_TRIPS_TEST_NAME)

+.setTableType(tableType)

+.setPayloadClass(HoodieAvroPayload.class)

+.fromProperties(props)

+.build();

+return HoodieTableMetaClient.initTableAndGetMetaClient(hadoopConf,

basePath, props);

+ }

+

+ private static Stream tableTypeParams() {

+return Arrays.stream(new HoodieTableType[][]

{{HoodieTableType.COPY_ON_WRITE},

{HoodieTableType.MERGE_ON_READ}}).map(Arguments::of);

+ }

+

+ @ParameterizedTest

+ @MethodSource("tableTypeParams")

+ public void testHoodieIncrSource(HoodieTableType tableType) throws

IOException {

+this.tableType = tableType;

+metaClient = getHoodieMetaClient(hadoopConf(), basePath());

HoodieWriteConfig writeConfig = getConfigBuilder(basePath(), metaClient)

.withArchivalConfig(HoodieArchivalConfig.newBuilder().archiveCommitsWith(2,

3).build())

.withCleanConfig(HoodieCleanConfig.newBuilder().retainCommits(1).build())

+

.withCompactionConfig(HoodieCompactionConfig.newBuilder().withInlineCompaction(true).withMaxNumDeltaCommitsBeforeCompaction(3).build())

.withMetadataConfig(HoodieMetadataConfig.newBuilder()

-.withMaxNumDeltaCommitsBeforeCompaction(1).build())

+.enable(false).build())

Review Comment:

it messes w/ metadata compaction/archival. and so data table archival does

not kick in. I just want to simulate archival in datatable. also, in this test,

there is no real benefit w/ metadata enabled. we are just interested in the

timeline files.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

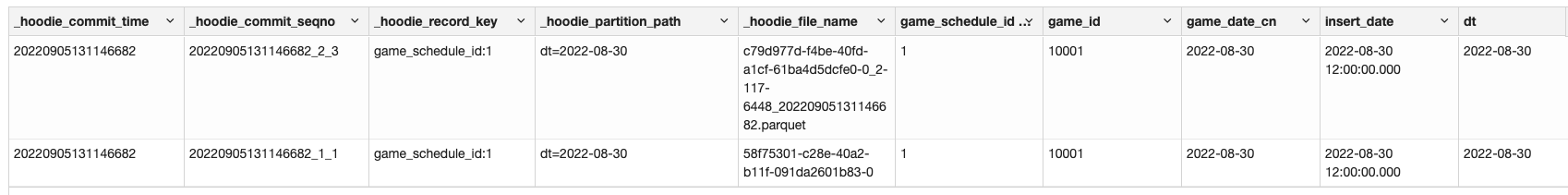

[GitHub] [hudi] FredMkl opened a new issue, #6591: [SUPPORT]Duplicate records in MOR

FredMkl opened a new issue, #6591:

URL: https://github.com/apache/hudi/issues/6591

**Describe the problem you faced**

We use MOR table, we found that when updating an existing set of rows to

another partition will result in both a)generate a parquet file b)an update

written to a log file. This brings duplicate records

**To Reproduce**

```

//action1: spark-dataframe write

import org.apache.spark.sql.SaveMode._

import org.apache.hudi.DataSourceReadOptions._

import org.apache.hudi.DataSourceWriteOptions._

import org.apache.spark.sql.{DataFrame, Row, SparkSession}

import scala.collection.mutable

val tableName = "f_schedule_test"

val basePath = "oss://nbadatalake-poc/fred/warehouse/dw/f_schedule_test"

val spark = SparkSession.builder.enableHiveSupport.getOrCreate

import spark.implicits._

// spark-shell

val df = Seq(

("1", "10001", "2022-08-30","2022-08-30 12:00:00.000","2022-08-30"),

("2", "10002", "2022-08-31","2022-08-30 12:00:00.000","2022-08-30"),

("3", "10003", "2022-08-31","2022-08-30 12:00:00.000","2022-08-30"),

("4", "10004", "2022-08-31","2022-08-30 12:00:00.000","2022-08-30"),

("5", "10005", "2022-08-31","2022-08-30 12:00:00.000","2022-08-30"),

("6", "10006", "2022-08-31","2022-08-30 12:00:00.000","2022-08-30")

).toDF("game_schedule_id", "game_id", "game_date_cn", "insert_date",

"dt")

// df.show()

val hudiOptions = mutable.Map(

"hoodie.datasource.write.table.type" -> "MERGE_ON_READ",

"hoodie.datasource.write.operation" -> "upsert",

"hoodie.datasource.write.recordkey.field" -> "game_schedule_id",

"hoodie.datasource.write.precombine.field" -> "insert_date",

"hoodie.datasource.write.partitionpath.field" -> "dt",

"hoodie.index.type" -> "GLOBAL_BLOOM",

"hoodie.compact.inline" -> "true",

"hoodie.datasource.write.keygenerator.class" ->

"org.apache.hudi.keygen.ComplexKeyGenerator"

)

//step1: insert --no issue

df.write.format("hudi").

options(hudiOptions).

mode(Append).

save(basePath)

//step2: move part data to another partition --no issue

val df1 = spark.sql("select * from dw.f_schedule_test where dt =

'2022-08-30'").withColumn("dt",lit("2022-08-31")).limit(3)

df1.write.format("hudi").

options(hudiOptions).

mode(Append).

save(basePath)

//step3: move back --duplicate occurs

//Updating an existing set of rows will result in either a) a companion

log/delta file for an existing base parquet file generated from a previous

compaction or b) an update written to a log/delta file in case no compaction

ever happened for it.

val df2 = spark.sql("select * from dw.f_schedule_test where dt =

'2022-08-31'").withColumn("dt",lit("2022-08-30")).limit(3)

df2.write.format("hudi").

options(hudiOptions).

mode(Append).

save(basePath)

```

**Checking scripts:**

```

select * from dw.f_schedule_test where game_schedule_id = 1;

select _hoodie_file_name,count(*) as co from dw.f_schedule_test group by

_hoodie_file_name;

```

**results:**

**Expected behavior**

Duplicate records should not occur

**Environment Description**

* Hudi version :0.10.1

* Spark version :3.2.1

* Hive version :3.1.2

* Hadoop version :3.2.1

* Storage (HDFS/S3/GCS..) :OSS

* Running on Docker? (yes/no) :no

[hoodie.zip](https://github.com/apache/hudi/files/9487065/hoodie.zip)

**Stacktrace**

```Pls check logs as attached

[hoodie.zip](https://github.com/apache/hudi/files/9487078/hoodie.zip)

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] Xiaohan-Shen opened a new issue, #6590: [SUPPORT] HoodieDeltaStreamer AWSDmsAvroPayload fails to handle deletes in MySQL

Xiaohan-Shen opened a new issue, #6590: URL: https://github.com/apache/hudi/issues/6590 **Describe the problem you faced** inspired by this [blog](https://cwiki.apache.org/confluence/display/HUDI/2020/01/20/Change+Capture+Using+AWS+Database+Migration+Service+and+Hudi), I am trying to set up Hudi Deltastreamer to continuously pick up changes in MySQL for a performance benchmark. My setup hosts MySQL on **AWS RDS**, captures changes in MySQL with **AWS DMS** as Parquet in S3, and runs HoodieDeltaStreamer with `--continuous` on **AWS EMR** to write the changes into a Hudi table on S3. It's working fine with updates and inserts but throws exceptions on deletes. The row deleted in MySQL is not deleted in the Hudi table. I am new to Hudi so it's possible I have something configured wrong. **To Reproduce** Steps to reproduce the behavior: 1. Follow the setup steps in the [blog](https://cwiki.apache.org/confluence/display/HUDI/2020/01/20/Change+Capture+Using+AWS+Database+Migration+Service+and+Hudi) 2. use this command for starting HoodieDeltaStreamer: ``` spark-submit --jars /usr/lib/spark/external/lib/spark-avro.jar,/usr/lib/hudi/hudi-spark-bundle.jar,/usr/lib/hudi/hudi-utilities-bundle.jar --class org.apache.hudi.utilities.deltastreamer.HoodieDeltaStreamer --packages org.apache.hudi:hudi-spark-bundle_2.12:0.11.0,org.apache.spark:spark-avro_2.12:3.2.1 --master yarn --deploy-mode client /usr/lib/hudi/hudi-utilities-bundle.jar --table-type COPY_ON_WRITE --source-ordering-field updated_at --source-class org.apache.hudi.utilities.sources.ParquetDFSSource --target-base-path s3://mysql-data-replication/hudi_orders --target-table hudi_orders --transformer-class org.apache.hudi.utilities.transform.AWSDmsTransformer --continuous --hoodie-conf hoodie.datasource.write.recordkey.field=order_id --hoodie-conf hoodie.datasource.write.partitionpath.field=customer_name --hoodie-conf hoodie.deltastreamer.source.dfs.root=s3://mysql-data-replication/hudi_dms/orders --payload-class org.apache.hudi.payload.AWSDmsAvroPayload ``` 3. Insert a few rows to the MySQL table 4. Delete a row **Expected behavior** Hudi should monitor and capture any changes (Inserts, updates, and deletes) in the MySQL table and writes them into the Hudi table. I specified `--payload-class org.apache.hudi.payload.AWSDmsAvroPayload`, which should tell Hudi the right way to handle a row with `Op = D`. I.e. when a row in MySQL is deleted, Hudi should capture the change and delete the corresponding row in the Hudi table. **Environment Description** * Hudi version : 0.11.0 * Spark version : 3.2.1 * Hive version : should be irrelevant, but 3.1.3 * Hadoop version : 3.2.1 * Storage (HDFS/S3/GCS..) : S3 * Running on Docker? (yes/no) : no **Additional context** The command I ran to start Hudi is slightly different from that provided in the blog. The original one didn't work for me out of the box. Please let me know if I passed in the wrong configs in the command that might've caused this issue. **Stacktrace** ``` Caused by: java.util.NoSuchElementException: No value present in Option at org.apache.hudi.common.util.Option.get(Option.java:89) at org.apache.hudi.payload.AWSDmsAvroPayload.getInsertValue(AWSDmsAvroPayload.java:74) at org.apache.hudi.io.HoodieMergeHandle.writeInsertRecord(HoodieMergeHandle.java:272) at org.apache.hudi.io.HoodieMergeHandle.writeIncomingRecords(HoodieMergeHandle.java:380) at org.apache.hudi.io.HoodieMergeHandle.close(HoodieMergeHandle.java:388) at org.apache.hudi.table.action.commit.HoodieMergeHelper.runMerge(HoodieMergeHelper.java:154) at org.apache.hudi.table.action.commit.BaseSparkCommitActionExecutor.handleUpdateInternal(BaseSparkCommitActionExecutor.java:358) at org.apache.hudi.table.action.commit.BaseSparkCommitActionExecutor.handleUpdate(BaseSparkCommitActionExecutor.java:349) at org.apache.hudi.table.action.commit.BaseSparkCommitActionExecutor.handleUpsertPartition(BaseSparkCommitActionExecutor.java:322) ... 28 more Driver stacktrace: at org.apache.hudi.utilities.deltastreamer.HoodieDeltaStreamer$DeltaSyncService.lambda$startService$0(HoodieDeltaStreamer.java:709) at java.util.concurrent.CompletableFuture$AsyncSupply.run(CompletableFuture.java:1604) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) at java.lang.Thread.run(Thread.java:750) Caused by: org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 156.0 failed 4 times, most recent failure: Lost task 0.3 in stage 156.0 (TID 6494)

[GitHub] [hudi] hudi-bot commented on pull request #6574: Keep a clustering running at the same time.#6573

hudi-bot commented on PR #6574: URL: https://github.com/apache/hudi/pull/6574#issuecomment-1236562876 ## CI report: * bcc7396d9357eb792a0c7a61335910cb16746a62 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9) * 6dd530a55de90fe931c22597d453c92b56bb31c1 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=11148) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] ROOBALJINDAL commented on issue #5540: [SUPPORT]HoodieException: Commit 20220509105215 failed and rolled-back ! at org.apache.hudi.utilities.deltastreamer.DeltaSync.writeToSink(DeltaSy

ROOBALJINDAL commented on issue #5540: URL: https://github.com/apache/hudi/issues/5540#issuecomment-1236559491 @nsivabalan this was my issue which I have already closed. https://github.com/apache/hudi/issues/6348 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #6574: Keep a clustering running at the same time.#6573

hudi-bot commented on PR #6574: URL: https://github.com/apache/hudi/pull/6574#issuecomment-1236559694 ## CI report: * bcc7396d9357eb792a0c7a61335910cb16746a62 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9) * 6dd530a55de90fe931c22597d453c92b56bb31c1 UNKNOWN Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] flashJd commented on a diff in pull request #6385: [HUDI-4614] fix primary key extract of delete_record when complexKeyGen configured and ChangeLogDisabled

flashJd commented on code in PR #6385:

URL: https://github.com/apache/hudi/pull/6385#discussion_r962494062

##

hudi-client/hudi-client-common/src/main/java/org/apache/hudi/keygen/KeyGenUtils.java:

##

@@ -73,21 +73,20 @@ public static String

getPartitionPathFromGenericRecord(GenericRecord genericReco

*/

public static String[] extractRecordKeys(String recordKey) {

String[] fieldKV = recordKey.split(",");

-if (fieldKV.length == 1) {

- return fieldKV;

-} else {

- // a complex key

- return Arrays.stream(fieldKV).map(kv -> {

-final String[] kvArray = kv.split(":");

-if (kvArray[1].equals(NULL_RECORDKEY_PLACEHOLDER)) {

- return null;

-} else if (kvArray[1].equals(EMPTY_RECORDKEY_PLACEHOLDER)) {

- return "";

-} else {

- return kvArray[1];

-}

- }).toArray(String[]::new);

-}

+

+return Arrays.stream(fieldKV).map(kv -> {

+ final String[] kvArray = kv.split(":");

Review Comment:

> Yeah, i have merged #6539 , so this pr can be closed.

@danny0405 #6539 has little problem, if it's single pk and simple key

generator, we'll store 'danny' not 'id:danny', so kvArray[1] will be null point

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] CaesarWangX commented on issue #6543: [SUPPORT] Unable to load class of UserDefinedMetricsReporter in hudi0.11

CaesarWangX commented on issue #6543: URL: https://github.com/apache/hudi/issues/6543#issuecomment-1236558188 Hi @Zouxxyy Thanks for your suggestion. I tried your method, but I still got the same error spark-submit \ --master yarn \ --deploy-mode cluster \ --name \ --queue clustering \ --class com.test.MainClass \ --files test.conf \ --conf spark.driver.extraClassPath=my-jar-1.0.0-SNAPSHOT-jar-with-dependencies.jar \ --jars /usr/lib/hudi/hudi-spark-bundle.jar,/usr/lib/spark/external/lib/spark-avro.jar,/usr/lib/spark/external/lib/spark-sql-kafka-0-10.jar,/usr/lib/spark/external/lib/spark-streaming-kafka-0-10-assembly.jar,/usr/lib/spark/external/lib/spark-token-provider-kafka-0-10.jar \ my-jar-1.0.0-SNAPSHOT-jar-with-dependencies.jar -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #6489: [HUDI-4485] [cli] Bumped spring shell to 2.1.1. Updated the default …

hudi-bot commented on PR #6489: URL: https://github.com/apache/hudi/pull/6489#issuecomment-1236557079 ## CI report: * 47680402da599615de30c13a1f22f79f3573ee30 UNKNOWN * 5613f14b3d5f1c8aaf8de1730e2f21b78a657150 UNKNOWN * 61586bd9583dd4cb3fe6572d572911ca193faecf Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=11144) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] codope commented on a diff in pull request #6587: [HUDI-4775] Fixing incremental source for MOR table

codope commented on code in PR #6587:

URL: https://github.com/apache/hudi/pull/6587#discussion_r962489007

##

hudi-utilities/src/test/java/org/apache/hudi/utilities/sources/TestHoodieIncrSource.java:

##

@@ -55,20 +66,39 @@ public class TestHoodieIncrSource extends

SparkClientFunctionalTestHarness {

private HoodieTestDataGenerator dataGen;

private HoodieTableMetaClient metaClient;

+ private HoodieTableType tableType = COPY_ON_WRITE;

@BeforeEach

public void setUp() throws IOException {

dataGen = new HoodieTestDataGenerator();

-metaClient = getHoodieMetaClient(hadoopConf(), basePath());

}

- @Test

- public void testHoodieIncrSource() throws IOException {

+ @Override

+ public HoodieTableMetaClient getHoodieMetaClient(Configuration hadoopConf,

String basePath, Properties props) throws IOException {

+props = HoodieTableMetaClient.withPropertyBuilder()

+.setTableName(RAW_TRIPS_TEST_NAME)

+.setTableType(tableType)

+.setPayloadClass(HoodieAvroPayload.class)

+.fromProperties(props)

+.build();

+return HoodieTableMetaClient.initTableAndGetMetaClient(hadoopConf,

basePath, props);

+ }

+

+ private static Stream tableTypeParams() {

+return Arrays.stream(new HoodieTableType[][]

{{HoodieTableType.COPY_ON_WRITE},

{HoodieTableType.MERGE_ON_READ}}).map(Arguments::of);

+ }

+

+ @ParameterizedTest

+ @MethodSource("tableTypeParams")

+ public void testHoodieIncrSource(HoodieTableType tableType) throws

IOException {

+this.tableType = tableType;

+metaClient = getHoodieMetaClient(hadoopConf(), basePath());

HoodieWriteConfig writeConfig = getConfigBuilder(basePath(), metaClient)

.withArchivalConfig(HoodieArchivalConfig.newBuilder().archiveCommitsWith(2,

3).build())

.withCleanConfig(HoodieCleanConfig.newBuilder().retainCommits(1).build())

+

.withCompactionConfig(HoodieCompactionConfig.newBuilder().withInlineCompaction(true).withMaxNumDeltaCommitsBeforeCompaction(3).build())

.withMetadataConfig(HoodieMetadataConfig.newBuilder()

-.withMaxNumDeltaCommitsBeforeCompaction(1).build())

+.enable(false).build())

Review Comment:

Why false? Let's keep it default?

##

hudi-utilities/src/main/java/org/apache/hudi/utilities/sources/helpers/IncrSourceHelper.java:

##

@@ -73,7 +73,7 @@ public static Pair>

calculateBeginAndEndInstants(Ja

HoodieTableMetaClient srcMetaClient =

HoodieTableMetaClient.builder().setConf(jssc.hadoopConfiguration()).setBasePath(srcBasePath).setLoadActiveTimelineOnLoad(true).build();

final HoodieTimeline activeCommitTimeline =

-

srcMetaClient.getActiveTimeline().getCommitTimeline().filterCompletedInstants();

+

srcMetaClient.getCommitsAndCompactionTimeline().filterCompletedInstants();

Review Comment:

Eventually, we should replace this API. Simply use

`metaClient.getActiveTimeline().getWriteTimeline()` as much as possible. I

don't think this API brings any real benefit apart from filtering out certain

types (deltacommit and compaction) for COW table. Anyway, such commits won't be

there for COW table and active timeline has already been loaded by that time.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] hechao-ustc commented on pull request #6582: [DOCS] Add Flink DataStream API demo in Flink Guide.

hechao-ustc commented on PR #6582: URL: https://github.com/apache/hudi/pull/6582#issuecomment-1236542371 > Thanks for the contribution @hechao-ustc , i have left one small comment. @danny0405 hi danny,Thanks for your comment,I have updated the content:  -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #6589: [HUDI-4776] fix merge into use unresolved assignment

hudi-bot commented on PR #6589: URL: https://github.com/apache/hudi/pull/6589#issuecomment-1236532554 ## CI report: * 2779ca40748e4aa90ddecb01288c61fe478767a1 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=11147) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #6566: [HUDI-4766] Fix HoodieFlinkClusteringJob

hudi-bot commented on PR #6566: URL: https://github.com/apache/hudi/pull/6566#issuecomment-1236532503 ## CI report: * b10c9d062f03c2c2675866c6f4bf6346dc03ea49 UNKNOWN * a2dcd81f74603e88c4db895900d43eee6702a6da UNKNOWN * c404647afc6d26bc0e69a7a8ef93f378b397bb96 UNKNOWN * 257a2f2acf08448c082c89510cd731b4d8f1b877 Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=11130) * 1709f71ae9494da4d7ca6b9c62ac97cd11dd8046 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=11146) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #6589: [HUDI-4776] fix merge into use unresolved assignment

hudi-bot commented on PR #6589: URL: https://github.com/apache/hudi/pull/6589#issuecomment-1236530342 ## CI report: * 2779ca40748e4aa90ddecb01288c61fe478767a1 UNKNOWN Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #6566: [HUDI-4766] Fix HoodieFlinkClusteringJob

hudi-bot commented on PR #6566: URL: https://github.com/apache/hudi/pull/6566#issuecomment-1236530294 ## CI report: * b10c9d062f03c2c2675866c6f4bf6346dc03ea49 UNKNOWN * a2dcd81f74603e88c4db895900d43eee6702a6da UNKNOWN * c404647afc6d26bc0e69a7a8ef93f378b397bb96 UNKNOWN * 257a2f2acf08448c082c89510cd731b4d8f1b877 Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=11130) * 1709f71ae9494da4d7ca6b9c62ac97cd11dd8046 UNKNOWN Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] danny0405 commented on issue #6588: [SUPPORT]Caused by: java.lang.ClassNotFoundException: org.apache.hudi.org.apache.avro.util.Utf8

danny0405 commented on issue #6588: URL: https://github.com/apache/hudi/issues/6588#issuecomment-1236524891 what jar did you use for spark, you can open the spark jar with command: ```shell vim xxx.jar ``` and search about the missing clazz to see if the jar includes it. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] danny0405 commented on a diff in pull request #6385: [HUDI-4614] fix primary key extract of delete_record when complexKeyGen configured and ChangeLogDisabled

danny0405 commented on code in PR #6385:

URL: https://github.com/apache/hudi/pull/6385#discussion_r962462481

##

hudi-client/hudi-client-common/src/main/java/org/apache/hudi/keygen/KeyGenUtils.java:

##

@@ -73,21 +73,20 @@ public static String

getPartitionPathFromGenericRecord(GenericRecord genericReco

*/

public static String[] extractRecordKeys(String recordKey) {

String[] fieldKV = recordKey.split(",");

-if (fieldKV.length == 1) {

- return fieldKV;

-} else {

- // a complex key

- return Arrays.stream(fieldKV).map(kv -> {

-final String[] kvArray = kv.split(":");

-if (kvArray[1].equals(NULL_RECORDKEY_PLACEHOLDER)) {

- return null;

-} else if (kvArray[1].equals(EMPTY_RECORDKEY_PLACEHOLDER)) {

- return "";

-} else {

- return kvArray[1];

-}

- }).toArray(String[]::new);

-}

+

+return Arrays.stream(fieldKV).map(kv -> {

+ final String[] kvArray = kv.split(":");

Review Comment:

Yeah, i have merged #6539 , so this pr can be closed.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Resolved] (HUDI-4739) Wrong value returned when length equals 1

[ https://issues.apache.org/jira/browse/HUDI-4739?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Danny Chen resolved HUDI-4739. -- > Wrong value returned when length equals 1 > - > > Key: HUDI-4739 > URL: https://issues.apache.org/jira/browse/HUDI-4739 > Project: Apache Hudi > Issue Type: Bug > Components: writer-core >Reporter: wuwenchi >Assignee: wuwenchi >Priority: Major > Labels: pull-request-available > Fix For: 0.12.1 > > > In "KeyGenUtils#extractRecordKeys" function, it will return the value > corresponding to the key, but when the length is equal to 1, the key and > value are returned. -- This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Commented] (HUDI-4739) Wrong value returned when length equals 1

[ https://issues.apache.org/jira/browse/HUDI-4739?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17600191#comment-17600191 ] Danny Chen commented on HUDI-4739: -- Fixed via master branch: 24dd00724cf8f49b8e2d5ad07afaa7756165e0a7 > Wrong value returned when length equals 1 > - > > Key: HUDI-4739 > URL: https://issues.apache.org/jira/browse/HUDI-4739 > Project: Apache Hudi > Issue Type: Bug > Components: writer-core >Reporter: wuwenchi >Assignee: wuwenchi >Priority: Major > Labels: pull-request-available > Fix For: 0.12.1 > > > In "KeyGenUtils#extractRecordKeys" function, it will return the value > corresponding to the key, but when the length is equal to 1, the key and > value are returned. -- This message was sent by Atlassian Jira (v8.20.10#820010)

[hudi] branch master updated: [HUDI-4739] Wrong value returned when key's length equals 1 (#6539)

This is an automated email from the ASF dual-hosted git repository.

danny0405 pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/hudi.git

The following commit(s) were added to refs/heads/master by this push:

new 24dd00724c [HUDI-4739] Wrong value returned when key's length equals 1

(#6539)

24dd00724c is described below

commit 24dd00724cf8f49b8e2d5ad07afaa7756165e0a7

Author: wuwenchi

AuthorDate: Mon Sep 5 12:10:13 2022 +0800

[HUDI-4739] Wrong value returned when key's length equals 1 (#6539)

* extracts key fields

Co-authored-by: 吴文池

---

.../java/org/apache/hudi/keygen/KeyGenUtils.java | 25 ++-

.../org/apache/hudi/keygen/TestKeyGenUtils.java| 37 ++

2 files changed, 47 insertions(+), 15 deletions(-)

diff --git

a/hudi-client/hudi-client-common/src/main/java/org/apache/hudi/keygen/KeyGenUtils.java

b/hudi-client/hudi-client-common/src/main/java/org/apache/hudi/keygen/KeyGenUtils.java

index 362ef208d4..1fd46d31e5 100644

---

a/hudi-client/hudi-client-common/src/main/java/org/apache/hudi/keygen/KeyGenUtils.java

+++

b/hudi-client/hudi-client-common/src/main/java/org/apache/hudi/keygen/KeyGenUtils.java

@@ -73,21 +73,16 @@ public class KeyGenUtils {

*/

public static String[] extractRecordKeys(String recordKey) {

String[] fieldKV = recordKey.split(",");

-if (fieldKV.length == 1) {

- return fieldKV;

-} else {

- // a complex key

- return Arrays.stream(fieldKV).map(kv -> {

-final String[] kvArray = kv.split(":");

-if (kvArray[1].equals(NULL_RECORDKEY_PLACEHOLDER)) {

- return null;

-} else if (kvArray[1].equals(EMPTY_RECORDKEY_PLACEHOLDER)) {

- return "";

-} else {

- return kvArray[1];

-}

- }).toArray(String[]::new);

-}

+return Arrays.stream(fieldKV).map(kv -> {

+ final String[] kvArray = kv.split(":");

+ if (kvArray[1].equals(NULL_RECORDKEY_PLACEHOLDER)) {

+return null;

+ } else if (kvArray[1].equals(EMPTY_RECORDKEY_PLACEHOLDER)) {

+return "";

+ } else {

+return kvArray[1];

+ }

+}).toArray(String[]::new);

}

public static String getRecordKey(GenericRecord record, List

recordKeyFields, boolean consistentLogicalTimestampEnabled) {

diff --git

a/hudi-client/hudi-client-common/src/test/java/org/apache/hudi/keygen/TestKeyGenUtils.java

b/hudi-client/hudi-client-common/src/test/java/org/apache/hudi/keygen/TestKeyGenUtils.java

new file mode 100644

index 00..06a6fcd7d7

--- /dev/null

+++

b/hudi-client/hudi-client-common/src/test/java/org/apache/hudi/keygen/TestKeyGenUtils.java

@@ -0,0 +1,37 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.keygen;

+

+import org.junit.jupiter.api.Assertions;

+import org.junit.jupiter.api.Test;

+

+public class TestKeyGenUtils {

+

+ @Test

+ public void testExtractRecordKeys() {

+String[] s1 = KeyGenUtils.extractRecordKeys("id:1");

+Assertions.assertArrayEquals(new String[]{"1"}, s1);

+

+String[] s2 = KeyGenUtils.extractRecordKeys("id:1,id:2");

+Assertions.assertArrayEquals(new String[]{"1", "2"}, s2);

+

+String[] s3 =

KeyGenUtils.extractRecordKeys("id:1,id2:__null__,id3:__empty__");

+Assertions.assertArrayEquals(new String[]{"1", null, ""}, s3);

+ }

+}

[GitHub] [hudi] danny0405 merged pull request #6539: [HUDI-4739] Wrong value returned when key's length equals 1

danny0405 merged PR #6539: URL: https://github.com/apache/hudi/pull/6539 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] danny0405 commented on a diff in pull request #6539: [HUDI-4739] Wrong value returned when key's length equals 1

danny0405 commented on code in PR #6539:

URL: https://github.com/apache/hudi/pull/6539#discussion_r962460133

##

hudi-client/hudi-client-common/src/main/java/org/apache/hudi/keygen/KeyGenUtils.java:

##

@@ -73,21 +73,16 @@ public static String

getPartitionPathFromGenericRecord(GenericRecord genericReco

*/

public static String[] extractRecordKeys(String recordKey) {

String[] fieldKV = recordKey.split(",");

-if (fieldKV.length == 1) {

- return fieldKV;

-} else {

- // a complex key

- return Arrays.stream(fieldKV).map(kv -> {

-final String[] kvArray = kv.split(":");

-if (kvArray[1].equals(NULL_RECORDKEY_PLACEHOLDER)) {

- return null;

-} else if (kvArray[1].equals(EMPTY_RECORDKEY_PLACEHOLDER)) {

- return "";

-} else {

- return kvArray[1];

-}

- }).toArray(String[]::new);

-}

+return Arrays.stream(fieldKV).map(kv -> {

+ final String[] kvArray = kv.split(":");

+ if (kvArray[1].equals(NULL_RECORDKEY_PLACEHOLDER)) {

+return null;

+ } else if (kvArray[1].equals(EMPTY_RECORDKEY_PLACEHOLDER)) {

+return "";

+ } else {

+return kvArray[1];

+ }

+}).toArray(String[]::new);

Review Comment:

Thanks, generally we should not use `Complex` key generators for single

field primary key, but the fix makes the logic more robust,

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] dik111 commented on issue #6588: [SUPPORT]Caused by: java.lang.ClassNotFoundException: org.apache.hudi.org.apache.avro.util.Utf8

dik111 commented on issue #6588: URL: https://github.com/apache/hudi/issues/6588#issuecomment-1236519071 > Seems that spark bundle jar does not contain the shaded avro clazz. What should I do about it ? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (HUDI-4776) missing specify value for the preCombineField when use merge into

[

https://issues.apache.org/jira/browse/HUDI-4776?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

ASF GitHub Bot updated HUDI-4776:

-

Labels: pull-request-available (was: )

> missing specify value for the preCombineField when use merge into

> -

>

> Key: HUDI-4776

> URL: https://issues.apache.org/jira/browse/HUDI-4776

> Project: Apache Hudi

> Issue Type: Bug

> Components: spark-sql

>Reporter: KnightChess

>Assignee: KnightChess

>Priority: Minor

> Labels: pull-request-available

>

>

> {code:java}

> org.apache.spark.sql.AnalysisException: Missing specify value for the

> preCombineField: ts in merge-into update action. You should add '... update

> set ts = xx' to the when-matched clause. at

> org.apache.spark.sql.hudi.analysis.HoodieResolveReferences$$anonfun$apply$1.$anonfun$applyOrElse$19(HoodieAnalysis.scala:387)

> at

> scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:238)

> at scala.collection.immutable.List.foreach(List.scala:392) at

> scala.collection.TraversableLike.map(TraversableLike.scala:238) at

> scala.collection.TraversableLike.map$(TraversableLike.scala:231) at

> scala.collection.immutable.List.map(List.scala:298) at

> org.apache.spark.sql.hudi.analysis.HoodieResolveReferences$$anonfun$apply$1.$anonfun$applyOrElse$14(HoodieAnalysis.scala:377)

> at

> scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:238)

> at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)

> at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)

> {code}

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

[GitHub] [hudi] KnightChess opened a new pull request, #6589: [HUDI-4776] fix merge into use unresolved assignment

KnightChess opened a new pull request, #6589: URL: https://github.com/apache/hudi/pull/6589 ### Change Logs fix merge into sql use unresolved attr cause use the wrong condition branch `resolve Star assignment` ### Impact _Describe any public API or user-facing feature change or any performance impact._ **Risk level: none | low | medium | high** _Choose one. If medium or high, explain what verification was done to mitigate the risks._ ### Contributor's checklist - [ ] Read through [contributor's guide](https://hudi.apache.org/contribute/how-to-contribute) - [ ] Change Logs and Impact were stated clearly - [ ] Adequate tests were added if applicable - [ ] CI passed -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] danny0405 commented on issue #6588: [SUPPORT]Caused by: java.lang.ClassNotFoundException: org.apache.hudi.org.apache.avro.util.Utf8

danny0405 commented on issue #6588: URL: https://github.com/apache/hudi/issues/6588#issuecomment-1236516899 Seems that spark bundle jar does not contain the shaded avro clazz. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org