[jira] [Created] (HUDI-4820) ORC dependency conflict with spark 3.1

xi chaomin created HUDI-4820:

Summary: ORC dependency conflict with spark 3.1
Key: HUDI-4820
URL: https://issues.apache.org/jira/browse/HUDI-4820
Project: Apache Hudi
Issue Type: Bug
Reporter: xi chaomin

--
This message was sent by Atlassian Jira (v8.20.10#820010)

[GitHub] [hudi] paul8263 commented on pull request #6489: [HUDI-4485] [cli] Bumped spring shell to 2.1.1. Updated the default …

paul8263 commented on PR #6489: URL: https://github.com/apache/hudi/pull/6489#issuecomment-1241525099 @hudi-bot run azure -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #6489: [HUDI-4485] [cli] Bumped spring shell to 2.1.1. Updated the default …

hudi-bot commented on PR #6489: URL: https://github.com/apache/hudi/pull/6489#issuecomment-1241501161 ## CI report: * 252b9f49e2c86c7aad7908e5887064b0d0b36932 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=11258) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build

[GitHub] [hudi] hudi-bot commented on pull request #6550: [HUDI-4691] Cleaning up duplicated classes in Spark 3.3 module

hudi-bot commented on PR #6550: URL: https://github.com/apache/hudi/pull/6550#issuecomment-1241498549 ## CI report: * 684ca9bdec8d75a27bf78ec09bf2ba31f67bdda4 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=11132) * bd41f0de1043153c18b1a716e6362c56cf03bbf9 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=11263)

[GitHub] [hudi] hudi-bot commented on pull request #6489: [HUDI-4485] [cli] Bumped spring shell to 2.1.1. Updated the default …

hudi-bot commented on PR #6489: URL: https://github.com/apache/hudi/pull/6489#issuecomment-1241498437 ## CI report: * 252b9f49e2c86c7aad7908e5887064b0d0b36932 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=11258)

[jira] [Created] (HUDI-4819) run_sync_tool.sh in hudi-hive-sync fails with classpath errors on release-0.12.0

Pramod Biligiri created HUDI-4819:

-

Summary: run_sync_tool.sh in hudi-hive-sync fails with classpath errors on release-0.12.0

Key: HUDI-4819

URL: https://issues.apache.org/jira/browse/HUDI-4819

Project: Apache Hudi

Issue Type: Bug

Components: hive, meta-sync

Affects Versions: 0.12.0

Reporter: Pramod Biligiri

Attachments: modified_run_sync_tool.sh

I ran the run_sync_tool.sh script after git cloning and building a new instance of apache-hudi (branch: release-0.12.0). The script failed with classpath related errors. Find below the relevant sequence of commands I used:

$ git branch

* (HEAD detached at release-0.12.0)

$ mvn -Dspark3.2 -Dscala-2.12 -DskipTests -Dcheckstyle.skip -Drat.skip clean install

$ echo $HADOOP_HOME

/home/pramod/2installers/hadoop-2.7.4

$ echo $HIVE_HOME

/home/pramod/2installers/apache-hive-3.1.3-bin

$ /run_sync_tool.sh --jdbc-url jdbc:hive2://hiveserver:1 --partitioned-by bucket --base-path /2-pramod/tmp/gcs-integration-test/data/meta-gcs --database default --table gcs_meta_hive_4 > log.out 2>&1

setting hadoop conf dir

Running Command : java -cp

/home/pramod/2installers/apache-hive-3.1.3-bin/lib/hive-metastore-3.1.3.jar::/home/pramod/2installers/apache-hive-3.1.3-bin/lib/hive-service-3.1.3.jar::/home/pramod/2installers/apache-hive-3.1.3-bin/lib/hive-exec-3.1.3.jar::/home/pramod/2installers/apache-hive-3.1.3-bin/lib/hive-jdbc-3.1.3.jar:/home/pramod/2installers/apache-hive-3.1.3-bin/lib/hive-jdbc-handler-3.1.3.jar::/home/pramod/2installers/apache-hive-3.1.3-bin/lib/jackson-annotations-2.12.0.jar:/home/pramod/2installers/apache-hive-3.1.3-bin/lib/jackson-core-2.12.0.jar:/home/pramod/2installers/apache-hive-3.1.3-bin/lib/jackson-core-asl-1.9.13.jar:/home/pramod/2installers/apache-hive-3.1.3-bin/lib/jackson-databind-2.12.0.jar:/home/pramod/2installers/apache-hive-3.1.3-bin/lib/jackson-dataformat-smile-2.12.0.jar:/home/pramod/2installers/apache-hive-3.1.3-bin/lib/jackson-mapper-asl-1.9.13.jar:/home/pramod/2installers/apache-hive-3.1.3-bin/lib/jackson-module-scala_2.11-2.12.0.jar::/home/pramod/2installers/hadoop-2.7.4/share/hadoop/common/*:/home/pramod/2installers/hadoop-2.7.4/share/hadoop/mapreduce/*:/home/pramod/2installers/hadoop-2.7.4/share/hadoop/hdfs/*:/home/pramod/2installers/hadoop-2.7.4/share/hadoop/common/lib/*:/home/pramod/2installers/hadoop-2.7.4/share/hadoop/hdfs/lib/*:/home/pramod/2installers/hadoop-2.7.4/etc/hadoop:/3-pramod/3workspace/apache-hudi/hudi-sync/hudi-hive-sync/../../packaging/hudi-hive-sync-bundle/target/hudi-hive-sync-bundle-0.12.0.jar

org.apache.hudi.hive.HiveSyncTool --jdbc-url jdbc:hive2://hiveserver:1

--partitioned-by bucket --base-path

/2-pramod/tmp/gcs-integration-test/data/meta-gcs --database default --table

gcs_meta_hive_4

2022-09-08 10:53:24,335 INFO [main] conf.HiveConf

(HiveConf.java:findConfigFile(187)) - Found configuration file

file:/home/pramod/2installers/apache-hive-3.1.3-bin/conf/hive-site.xml

WARNING: An illegal reflective access operation has occurred

WARNING: Illegal reflective access by

org.apache.hadoop.security.authentication.util.KerberosUtil

(file:/2-pramod/installers/hadoop-2.7.4/share/hadoop/common/lib/hadoop-auth-2.7.4.jar)

to method sun.security.krb5.Config.getInstance()

WARNING: Please consider reporting this to the maintainers of

org.apache.hadoop.security.authentication.util.KerberosUtil

WARNING: Use --illegal-access=warn to enable warnings of further illegal

reflective access operations

WARNING: All illegal access operations will be denied in a future release

2022-09-08 10:53:25,876 WARN [main] util.NativeCodeLoader

(NativeCodeLoader.java:(62)) - Unable to load native-hadoop library for

your platform... using builtin-java classes where applicable

2022-09-08 10:53:26,359 INFO [main] table.HoodieTableMetaClient

(HoodieTableMetaClient.java:(121)) - Loading HoodieTableMetaClient from

/2-pramod/tmp/gcs-integration-test/data/meta-gcs

2022-09-08 10:53:26,568 INFO [main] table.HoodieTableConfig

(HoodieTableConfig.java:(243)) - Loading table properties from

/2-pramod/tmp/gcs-integration-test/data/meta-gcs/.hoodie/hoodie.properties

2022-09-08 10:53:26,585 INFO [main] table.HoodieTableMetaClient

(HoodieTableMetaClient.java:(140)) - Finished Loading Table of type

COPY_ON_WRITE(version=1, baseFileFormat=PARQUET) from

/2-pramod/tmp/gcs-integration-test/data/meta-gcs

2022-09-08 10:53:26,586 INFO [main] table.HoodieTableMetaClient

(HoodieTableMetaClient.java:(143)) - Loading Active commit timeline for

/2-pramod/tmp/gcs-integration-test/data/meta-gcs

2022-09-08 10:53:26,727 INFO [main] timeline.HoodieActiveTimeline

(HoodieActiveTimeline.java:(129)) - Loaded instants upto :

Option{val=[20220907220948700__commit__COMPLETED]}

Exception in thread "main" java.lang.NoClassDefFoundError:

org/apache/http/config/Lookup

at

[GitHub] [hudi] paul8263 commented on pull request #6489: [HUDI-4485] [cli] Bumped spring shell to 2.1.1. Updated the default …

paul8263 commented on PR #6489: URL: https://github.com/apache/hudi/pull/6489#issuecomment-1241480297 @hudi-bot run azure

[GitHub] [hudi] hudi-bot commented on pull request #6550: [HUDI-4691] Cleaning up duplicated classes in Spark 3.3 module

hudi-bot commented on PR #6550: URL: https://github.com/apache/hudi/pull/6550#issuecomment-1241471920 ## CI report: * 684ca9bdec8d75a27bf78ec09bf2ba31f67bdda4 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=11132) * bd41f0de1043153c18b1a716e6362c56cf03bbf9 UNKNOWN

[GitHub] [hudi] danny0405 commented on a diff in pull request #6256: [RFC-51][HUDI-3478] Update RFC: CDC support

danny0405 commented on code in PR #6256:
URL: https://github.com/apache/hudi/pull/6256#discussion_r966599692

##
rfc/rfc-51/rfc-51.md:
##
@@ -215,18 +245,31 @@ Note:
 - Only instants that are active can be queried in a CDC scenario.
 - `CDCReader` manages all the things on CDC, and all the spark entrances(DataFrame, SQL, Streaming) call the functions in `CDCReader`.
-- If `hoodie.table.cdc.supplemental.logging` is false, we need to do more work to get the change data. The following illustration explains the difference when this config is true or false.
+- If `hoodie.table.cdc.supplemental.logging.mode=KEY_OP`, we need to compute the changed data. The following illustrates the difference.

 COW table

-Reading COW table in CDC query mode is equivalent to reading a simplified MOR table that has no normal log files.
+Reading COW tables in CDC query mode is equivalent to reading MOR tables in RO mode.

 MOR table

-According to the design of the writing part, only the cases where writing mor tables will write out the base file (which call the `HoodieMergeHandle` and it's subclasses) will write out the cdc files.
-In other words, cdc files will be written out only for the index and file size reasons.
+According to the section "Persisting CDC in MOR", CDC data is available upon base files' generation.
+
+When users want to get fresher real-time CDC results:
+
+- users are to set `hoodie.datasource.query.incremental.type=snapshot`
+- the implementation logic is to compute the results in-flight by reading log files and the corresponding base files (
+  current and previous file slices).
+- this is equivalent to running incremental-query on MOR RT tables
+
+When users want to optimize compute-cost and are tolerant with latency of CDC results,
+
+- users are to set `hoodie.datasource.query.incremental.type=read_optimized`
+- the implementation logic is to extract the results by reading persisted CDC data and the corresponding base files (
+  current and previous file slices).

Review Comment:
> can you pls clarify what cases exactly represent writer anomalies

I'm a little worried about the correctness of the CDC semantics before it is widely used in production, such as the deletion, the merging, the data sequence.

> 2nd step to support read_optimized i

We can call it an optimization, but not expose it as read_optimized, which conflicts a little with the current read_optimized view, WDYT.

[GitHub] [hudi] hudi-bot commented on pull request #6631: [HUDI-4810] Fixing Hudi bundles requiring log4j2 on the classpath

hudi-bot commented on PR #6631: URL: https://github.com/apache/hudi/pull/6631#issuecomment-1241469308 ## CI report: * e8e8c4d8047b5985764f7534bd84e82763c3ad28 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=11243) * cf25a4e0d37980de4284afe841eced2f205b97a5 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=11262)

[GitHub] [hudi] danny0405 commented on a diff in pull request #6256: [RFC-51][HUDI-3478] Update RFC: CDC support

danny0405 commented on code in PR #6256:
URL: https://github.com/apache/hudi/pull/6256#discussion_r966598104

##
rfc/rfc-51/rfc-51.md:
##
@@ -148,20 +152,46 @@ hudi_cdc_table/
 Under a partition directory, the `.log` file with `CDCBlock` above will keep the changing data we have to materialize.

-There is an option to control what data is written to `CDCBlock`, that is `hoodie.table.cdc.supplemental.logging`. See the description of this config above.
+ Persisting CDC in MOR: Write-on-indexing vs Write-on-compaction
+
+2 design choices on when to persist CDC in MOR tables:
+
+Write-on-indexing allows CDC info to be persisted at the earliest, however, in case of Flink writer or Bucket
+indexing, `op` (I/U/D) data is not available at indexing.
+
+Write-on-compaction can always persist CDC info and achieve standardization of implementation logic across engines,
+however, some delays are added to the CDC query results. Based on the business requirements, Log Compaction (RFC-48) or
+scheduling more frequent compaction can be used to minimize the latency.

-Spark DataSource example:
+The semantics we propose to establish are: when base files are written, the corresponding CDC data is also persisted.
+
+- For Spark
+  - inserts are written to base files: the CDC data `op=I` will be persisted
+  - updates/deletes that written to log files are compacted into base files: the CDC data `op=U|D` will be persisted
+- For Flink
+  - inserts/updates/deletes that written to log files are compacted into base files: the CDC data `op=I|U|D` will be
+persisted
+

Review Comment:
That's true, but by default flink does not take the look up(for better throughput), and we may generate the changelog for reader on the fly.

[GitHub] [hudi] hudi-bot commented on pull request #6631: [HUDI-4810] Fixing Hudi bundles requiring log4j2 on the classpath

hudi-bot commented on PR #6631: URL: https://github.com/apache/hudi/pull/6631#issuecomment-1241466870 ## CI report: * e8e8c4d8047b5985764f7534bd84e82763c3ad28 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=11243) * cf25a4e0d37980de4284afe841eced2f205b97a5 UNKNOWN

[GitHub] [hudi] hudi-bot commented on pull request #6489: [HUDI-4485] [cli] Bumped spring shell to 2.1.1. Updated the default …

hudi-bot commented on PR #6489: URL: https://github.com/apache/hudi/pull/6489#issuecomment-1241466655 ## CI report: * 252b9f49e2c86c7aad7908e5887064b0d0b36932 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=11258)

[GitHub] [hudi] hudi-bot commented on pull request #6489: [HUDI-4485] [cli] Bumped spring shell to 2.1.1. Updated the default …

hudi-bot commented on PR #6489: URL: https://github.com/apache/hudi/pull/6489#issuecomment-1241464172 ## CI report: * 252b9f49e2c86c7aad7908e5887064b0d0b36932 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=11258)

[GitHub] [hudi] TJX2014 commented on pull request #6595: [HUDI-4777] Fix flink gen bucket index of mor table not consistent wi…

TJX2014 commented on PR #6595:
URL: https://github.com/apache/hudi/pull/6595#issuecomment-1241461060

> > but in flink side, I think deduplicate should also open as default option for mor table , when duplicate write to log file, very hard for compact to read, also lead mor table not stable due to the duplicate record twice read into memory.
>
> Do you mean that there are two client writing to the same partition at the same time?

Not exactly, if we deduplicate the record in memory, and then write to log is elegant for MOR because result is same. As @danny0405 say, in cdc situation, we need to retain origin records, not compact firstly in memory, which is acceptable.

[GitHub] [hudi] TJX2014 commented on pull request #6595: [HUDI-4777] Fix flink gen bucket index of mor table not consistent wi…

TJX2014 commented on PR #6595:
URL: https://github.com/apache/hudi/pull/6595#issuecomment-1241460834

> Not exactly, if we deduplicate the record in memory, and then write to log is elegant for MOR because result is same. As @danny0405 say, in cdc situation, we need to retain origin records, not compact firstly in memory, which is acceptable.

Not exactly, if we deduplicate the record in memory, and then write to log is elegant for MOR because result is same. As @danny0405 say, in cdc situation, we need to retain origin records, not compact firstly in memory, which is acceptable.

[GitHub] [hudi] TJX2014 commented on pull request #6595: [HUDI-4777] Fix flink gen bucket index of mor table not consistent wi…

TJX2014 commented on PR #6595: URL: https://github.com/apache/hudi/pull/6595#issuecomment-1241460494 > Not exactly, if we deduplicate the record in memory, and then write to log is elegant for MOR because result is same. As @danny0405 say, in cdc situation, we need to retain origin records, not compact firstly in memory, which is acceptable.

[GitHub] [hudi] danny0405 commented on a diff in pull request #6256: [RFC-51][HUDI-3478] Update RFC: CDC support

danny0405 commented on code in PR #6256:
URL: https://github.com/apache/hudi/pull/6256#discussion_r966591772

##
rfc/rfc-51/rfc-51.md:
##
@@ -64,69 +65,72 @@ We follow the debezium output format: four columns as shown below
 Note: the illustration here ignores all the Hudi metadata columns like `_hoodie_commit_time` in `before` and `after` columns.

-## Goals
+## Design Goals

 1. Support row-level CDC records generation and persistence;
 2. Support both MOR and COW tables;
 3. Support all the write operations;
 4. Support Spark DataFrame/SQL/Streaming Query;

-## Implementation
+## Configurations

-### CDC Architecture
+| key | default | description |
+|-|--|--|
+| hoodie.table.cdc.enabled| `false` | The master switch of the CDC features. If `true`, writers and readers will respect CDC configurations and behave accordingly.|
+| hoodie.table.cdc.supplemental.logging | `false` | If `true`, persist the required information about the changed data, including `before`. If `false`, only `op` and record keys will be persisted. |
+| hoodie.table.cdc.supplemental.logging.include_after | `false` | If `true`, persist `after` as well. |

-
+To perform CDC queries, users need to set `hoodie.table.cdc.enable=true` and `hoodie.datasource.query.type=incremental`.

-Note: Table operations like `Compact`, `Clean`, `Index` do not write/change any data. So we don't need to consider them in CDC scenario.
-
-### Modifiying code paths
+| key| default| description |
+|||--|
+| hoodie.table.cdc.enabled | `false`| set to `true` for CDC queries|
+| hoodie.datasource.query.type | `snapshot` | set to `incremental` for CDC queries |
+| hoodie.datasource.read.start.timestamp | - | requried. |
+| hoodie.datasource.read.end.timestamp | - | optional. |

-
+### Logical File Types

-### Config Definitions
+We define 4 logical file types for the CDC scenario.

Review Comment:
> always easier for people to digest when leverage on existing knowledge (actions here) instead of bringing up new definitions

Can't agree more, let's not introduce new terminology.
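The read-side table in the quoted RFC-51 diff can be made concrete with a small sketch. It only assembles the four option keys as they appear in the draft table; the class name `CdcQueryOptions` and its `build` method are hypothetical, and the key names are taken from the draft under review, so they may not match what a released Hudi version accepts.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper, not part of Hudi: collects the CDC read options named in the RFC-51 draft. */
final class CdcQueryOptions {
    private CdcQueryOptions() {}

    /**
     * @param startTimestamp value for hoodie.datasource.read.start.timestamp (listed as required in the draft)
     * @param endTimestamp   value for hoodie.datasource.read.end.timestamp (optional, may be null)
     */
    static Map<String, String> build(String startTimestamp, String endTimestamp) {
        if (startTimestamp == null || startTimestamp.isEmpty()) {
            throw new IllegalArgumentException("hoodie.datasource.read.start.timestamp is required");
        }
        Map<String, String> opts = new LinkedHashMap<>();
        // Key names copied from the draft table; treat them as assumptions.
        opts.put("hoodie.table.cdc.enabled", "true");
        opts.put("hoodie.datasource.query.type", "incremental");
        opts.put("hoodie.datasource.read.start.timestamp", startTimestamp);
        if (endTimestamp != null) {
            opts.put("hoodie.datasource.read.end.timestamp", endTimestamp);
        }
        return opts;
    }

    public static void main(String[] args) {
        // Sample instant time only; any commit timestamp of the table would do.
        build("20220907220948700", null).forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```

A map like this could then be handed to whichever reader accepts string options; how the reader consumes it is outside the scope of the sketch.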

[GitHub] [hudi] paul8263 commented on pull request #6489: [HUDI-4485] [cli] Bumped spring shell to 2.1.1. Updated the default …

paul8263 commented on PR #6489: URL: https://github.com/apache/hudi/pull/6489#issuecomment-1241457900 @hudi-bot run azure

[GitHub] [hudi] TJX2014 commented on a diff in pull request #6630: [HUDI-4808] Fix HoodieSimpleBucketIndex not consider bucket num in lo…

TJX2014 commented on code in PR #6630:

URL: https://github.com/apache/hudi/pull/6630#discussion_r966590804

##

hudi-client/hudi-client-common/src/main/java/org/apache/hudi/index/HoodieIndexUtils.java:

##

@@ -72,6 +73,26 @@ public static List

getLatestBaseFilesForPartition(

return Collections.emptyList();

}

+ /**

+ * Fetches Pair of partition path and {@link FileSlice}s for interested

partitions.

+ *

+ * @param partition Partition of interest

+ * @param hoodieTable Instance of {@link HoodieTable} of interest

+ * @return the list of {@link FileSlice}

+ */

+ public static List getLatestFileSlicesForPartition(

+ final String partition,

+ final HoodieTable hoodieTable) {

+Option latestCommitTime =

hoodieTable.getMetaClient().getCommitsTimeline()

+.filterCompletedInstants().lastInstant();

+if (latestCommitTime.isPresent()) {

+ return hoodieTable.getHoodieView()

+ .getLatestFileSlicesBeforeOrOn(partition,

latestCommitTime.get().getTimestamp(), true)

+ .collect(toList());

Review Comment:

Ok, I will add a test for this pr.
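The guard used in the quoted `getLatestFileSlicesForPartition` hunk (list file slices only if a completed instant exists) can be sketched without Hudi on the classpath. Everything here is a stand-in: `LatestSliceLookup`, the `Optional<String>` instant and the `BiFunction` lister are hypothetical, and the empty-list fallback is an assumption, since the quoted hunk is cut off before its else branch.

```java
import java.util.Collections;
import java.util.List;
import java.util.Optional;
import java.util.function.BiFunction;

/** Hypothetical stand-in for the lookup pattern in the diff; not Hudi code. */
final class LatestSliceLookup {
    private LatestSliceLookup() {}

    /**
     * @param partition            partition of interest
     * @param lastCompletedInstant timestamp of the last completed instant, if any
     * @param listBeforeOrOn       lists slices for (partition, maxInstantTime)
     * @return slices up to the last completed instant, or an empty list when there is none (assumed fallback)
     */
    static <T> List<T> latestSlices(String partition,
                                    Optional<String> lastCompletedInstant,
                                    BiFunction<String, String, List<T>> listBeforeOrOn) {
        return lastCompletedInstant
            .map(ts -> listBeforeOrOn.apply(partition, ts))
            .orElse(Collections.emptyList());
    }
}
```

A unit test for such a method would typically cover both branches: a table with at least one completed commit, and a table with none.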


[GitHub] [hudi] TJX2014 commented on a diff in pull request #6634: [HUDI-4813] Fix infer keygen not work in sparksql side issue

TJX2014 commented on code in PR #6634:

URL: https://github.com/apache/hudi/pull/6634#discussion_r966590414

##

hudi-spark-datasource/hudi-spark-common/src/main/scala/org/apache/hudi/DataSourceOptions.scala:

##

@@ -787,9 +787,13 @@ object DataSourceOptionsHelper {

def inferKeyGenClazz(props: TypedProperties): String = {

val partitionFields =

props.getString(DataSourceWriteOptions.PARTITIONPATH_FIELD.key(), null)

+val recordsKeyFields =

props.getString(DataSourceWriteOptions.RECORDKEY_FIELD.key(),

DataSourceWriteOptions.RECORDKEY_FIELD.defaultValue())

+genKeyGenerator(recordsKeyFields, partitionFields)

+ }

+

+ def genKeyGenerator(recordsKeyFields: String, partitionFields: String):

String = {

if (partitionFields != null) {

Review Comment:

Ok, I will do it right now.


[GitHub] [hudi] the-other-tim-brown commented on a diff in pull request #6631: [HUDI-4810] Fixing Hudi bundles requiring log4j2 on the classpath

the-other-tim-brown commented on code in PR #6631: URL: https://github.com/apache/hudi/pull/6631#discussion_r966589943 ## hudi-client/hudi-flink-client/pom.xml: ## @@ -35,7 +35,21 @@ - + + Review Comment: nitpick: indentation is off here

[jira] [Updated] (HUDI-4810) Fix Hudi bundles requiring log4j2 on the classpath

[

https://issues.apache.org/jira/browse/HUDI-4810?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Alexey Kudinkin updated HUDI-4810:

--

Status: Patch Available (was: In Progress)

> Fix Hudi bundles requiring log4j2 on the classpath

> --

>

> Key: HUDI-4810

> URL: https://issues.apache.org/jira/browse/HUDI-4810

> Project: Apache Hudi

> Issue Type: Bug

>Reporter: Alexey Kudinkin

>Assignee: Alexey Kudinkin

>Priority: Blocker

> Labels: pull-request-available

> Fix For: 0.12.1

>

>

> As part of addressing HUDI-4441, we erroneously rebased Hudi onto the
> "log4j-1.2-api" module under the impression that it is an API module (as
> advertised). That turned out not to be the case: it is an actual bridge
> implementation that requires Log4j2 to be provided on the classpath as a
> required dependency.
> For versions of Spark < 3.3 this triggers exceptions like the following one
> (reported by [~akmodi])

>

> {code:java}

> Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/logging/log4j/LogManager
>     at org.apache.hudi.metrics.datadog.DatadogReporter.(DatadogReporter.java:55)
>     at org.apache.hudi.metrics.datadog.DatadogMetricsReporter.(DatadogMetricsReporter.java:62)
>     at org.apache.hudi.metrics.MetricsReporterFactory.createReporter(MetricsReporterFactory.java:70)
>     at org.apache.hudi.metrics.Metrics.(Metrics.java:50)
>     at org.apache.hudi.metrics.Metrics.init(Metrics.java:96)
>     at org.apache.hudi.utilities.deltastreamer.HoodieDeltaStreamerMetrics.(HoodieDeltaStreamerMetrics.java:44)
>     at org.apache.hudi.utilities.deltastreamer.DeltaSync.(DeltaSync.java:243)
>     at org.apache.hudi.utilities.deltastreamer.HoodieDeltaStreamer$DeltaSyncService.(HoodieDeltaStreamer.java:663)
>     at org.apache.hudi.utilities.deltastreamer.HoodieDeltaStreamer.(HoodieDeltaStreamer.java:143)
>     at org.apache.hudi.utilities.deltastreamer.HoodieDeltaStreamer.(HoodieDeltaStreamer.java:116)
>     at org.apache.hudi.utilities.deltastreamer.HoodieDeltaStreamer.main(HoodieDeltaStreamer.java:562)
>     at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
>     at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
>     at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
>     at java.lang.reflect.Method.invoke(Method.java:498)
>     at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)
>     at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:1000)
>     at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:180)
>     at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:203)
>     at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:90)
>     at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:1089)
>     at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:1098)
>     at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
> Caused by: java.lang.ClassNotFoundException: org.apache.logging.log4j.LogManager
>     at java.net.URLClassLoader.findClass(URLClassLoader.java:387)
>     at java.lang.ClassLoader.loadClass(ClassLoader.java:418)
>     at java.lang.ClassLoader.loadClass(ClassLoader.java:351)
>     ... 23 more
> {code}
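
A `NoClassDefFoundError` like the one above means the named class was not visible to the class loader at run time. As a rough diagnostic, and only as a sketch, a probe such as the following can be run with the same classpath as the failing job to check whether a class can be located. `ClasspathProbe` is a hypothetical name; the only library call used is the standard three-argument `Class.forName`.

```java
/** Hypothetical diagnostic, not part of Hudi: reports whether a class can be located on the classpath. */
final class ClasspathProbe {
    private ClasspathProbe() {}

    static boolean isLoadable(String className) {
        try {
            // initialize=false: only locate and load the class, do not run its static initializers.
            Class.forName(className, false, ClasspathProbe.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Class name taken from the stack trace above.
        String name = "org.apache.logging.log4j.LogManager";
        System.out.println(name + " loadable: " + isLoadable(name));
    }
}
```

The probe only answers whether the class is present; it says nothing about which jar should provide it or whether versions are compatible.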


[jira] [Updated] (HUDI-3391) presto and hive beeline fails to read MOR table w/ 2 or more array fields

[

https://issues.apache.org/jira/browse/HUDI-3391?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Sagar Sumit updated HUDI-3391:

--

Status: Patch Available (was: In Progress)

> presto and hive beeline fails to read MOR table w/ 2 or more array fields

> -

>

> Key: HUDI-3391

> URL: https://issues.apache.org/jira/browse/HUDI-3391

> Project: Apache Hudi

> Issue Type: Bug

> Components: dependencies, reader-core, trino-presto

>Reporter: sivabalan narayanan

>Assignee: Sagar Sumit

>Priority: Blocker

> Fix For: 0.12.1

>

> Original Estimate: 4h

> Remaining Estimate: 4h

>

> We have an issue reported by a user
> [here|https://github.com/apache/hudi/issues/2657]. It looks like with 0.10.0 or
> later, the Spark datasource read works, but Hive beeline does not. Querying
> through spark.sql (Hive table) works as well.
> Another related ticket:
> [https://github.com/apache/hudi/issues/3834#issuecomment-997307677]
>
> Steps that I tried:
> [https://gist.github.com/nsivabalan/fdb8794104181f93b9268380c7f7f079]
> From beeline, you will encounter the exception below:
> {code:java}
> Failed with exception
> java.io.IOException:org.apache.hudi.org.apache.avro.SchemaParseException:
> Can't redefine: array
> {code}
> All linked tickets state that upgrading parquet to 1.11.0 or greater should
> work. We need to try it out with the latest master and go from there.


[jira] [Closed] (HUDI-4465) Optimizing file-listing path in MT

[ https://issues.apache.org/jira/browse/HUDI-4465?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Sagar Sumit closed HUDI-4465.

Resolution: Done

> Optimizing file-listing path in MT
>
> Key: HUDI-4465
> URL: https://issues.apache.org/jira/browse/HUDI-4465
> Project: Apache Hudi
> Issue Type: Bug
> Reporter: Alexey Kudinkin
> Assignee: Alexey Kudinkin
> Priority: Blocker
> Labels: pull-request-available
> Fix For: 0.13.0
>
> We should review file-listing path and try to optimize the file-listing path
> as much as possible.

[hudi] branch master updated: [HUDI-4465] Optimizing file-listing sequence of Metadata Table (#6016)

This is an automated email from the ASF dual-hosted git repository.

codope pushed a commit to branch master
in repository https://gitbox.apache.org/repos/asf/hudi.git

The following commit(s) were added to refs/heads/master by this push:
new 4af60dcfba [HUDI-4465] Optimizing file-listing sequence of Metadata Table (#6016)

4af60dcfba is described below

commit 4af60dcfbaeea5e79bc4b9457477e9ee0f9cdb79
Author: Alexey Kudinkin
AuthorDate: Thu Sep 8 20:09:00 2022 -0700

[HUDI-4465] Optimizing file-listing sequence of Metadata Table (#6016)

Optimizes file-listing sequence of the Metadata Table to make sure it's on par or better than FS-based file-listing

Change log:

- Cleaned up avoidable instantiations of Hadoop's Path
- Replaced new Path w/ createUnsafePath where possible
- Cached TimestampFormatter, DateFormatter for timezone
- Avoid loading defaults in Hadoop conf when init-ing HFile reader
- Avoid re-instantiating BaseTableMetadata twice w/in BaseHoodieTableFileIndex
- Avoid looking up FileSystem for every partition when listing partitioned table, instead do it just once

---
docker/demo/config/dfs-source.properties | 4 +
.../metadata/HoodieBackedTableMetadataWriter.java | 6 +-
.../apache/hudi/keygen/BuiltinKeyGenerator.java | 1 -
.../org/apache/hudi/keygen/SimpleKeyGenerator.java | 7 ++
.../datasources/SparkParsePartitionUtil.scala | 12 +-
.../org/apache/spark/sql/hudi/SparkAdapter.scala | 2 +-
.../functional/TestConsistentBucketIndex.java | 2 +-
.../hudi/table/TestHoodieMergeOnReadTable.java | 26 +++-
.../TestHoodieSparkMergeOnReadTableCompaction.java | 19 ++-
...HoodieSparkMergeOnReadTableIncrementalRead.java | 2 +-
.../TestHoodieSparkMergeOnReadTableRollback.java | 9 +-
.../hudi/testutils/HoodieClientTestHarness.java | 6 +-
.../SparkClientFunctionalTestHarness.java | 12 +-
.../org/apache/hudi/BaseHoodieTableFileIndex.java | 87 +
.../java/org/apache/hudi/common/fs/FSUtils.java | 13 +-
.../org/apache/hudi/common/model/BaseFile.java | 9 ++
.../hudi/common/table/HoodieTableMetaClient.java | 2 +-
.../table/log/block/HoodieHFileDataBlock.java | 26 ++--
.../table/view/AbstractTableFileSystemView.java | 13 +-
.../apache/hudi/common/util/CollectionUtils.java | 9 ++
.../java/org/apache/hudi/hadoop/CachingPath.java | 59 -
.../org/apache/hudi/hadoop/SerializablePath.java | 9 +-
.../apache/hudi/io/storage/HoodieHFileUtils.java | 4 +-
.../apache/hudi/metadata/BaseTableMetadata.java | 135 -
.../hudi/metadata/HoodieBackedTableMetadata.java | 11 +-
.../hudi/metadata/HoodieMetadataPayload.java | 18 ++-
.../apache/hudi/metadata/HoodieTableMetadata.java | 21 +++-
.../hudi/common/testutils/HoodieTestUtils.java | 35 +++---
.../hudi/hadoop/testutils/InputFormatTestUtil.java | 5 +-
.../apache/hudi/SparkHoodieTableFileIndex.scala | 28 +++--
.../hudi/functional/TestTimeTravelQuery.scala | 6 +-
.../apache/spark/sql/adapter/Spark2Adapter.scala | 2 +-
.../datasources/Spark2ParsePartitionUtil.scala | 14 +--
.../apache/hudi/spark3/internal/ReflectUtil.java | 4 +-
.../spark/sql/adapter/BaseSpark3Adapter.scala | 4 +-
.../datasources/Spark3ParsePartitionUtil.scala | 48
.../functional/TestHoodieDeltaStreamer.java | 10 +-
.../utilities/sources/TestHoodieIncrSource.java | 4 +-
38 files changed, 451 insertions(+), 233 deletions(-)

diff --git a/docker/demo/config/dfs-source.properties b/docker/demo/config/dfs-source.properties
index ac7080e141..a90629ef8e 100644
--- a/docker/demo/config/dfs-source.properties
+++ b/docker/demo/config/dfs-source.properties
@@ -19,6 +19,10 @@ include=base.properties
 # Key fields, for kafka example
 hoodie.datasource.write.recordkey.field=key
 hoodie.datasource.write.partitionpath.field=date
+# NOTE: We have to duplicate configuration since this is being used
+# w/ both Spark and DeltaStreamer
+hoodie.table.recordkey.fields=key
+hoodie.table.partition.fields=date
 # Schema provider props (change to absolute path based on your installation)
 hoodie.deltastreamer.schemaprovider.source.schema.file=/var/demo/config/schema.avsc
 hoodie.deltastreamer.schemaprovider.target.schema.file=/var/demo/config/schema.avsc
diff --git a/hudi-client/hudi-client-common/src/main/java/org/apache/hudi/metadata/HoodieBackedTableMetadataWriter.java b/hudi-client/hudi-client-common/src/main/java/org/apache/hudi/metadata/HoodieBackedTableMetadataWriter.java
index c7cc50967a..962875fb92 100644
--- a/hudi-client/hudi-client-common/src/main/java/org/apache/hudi/metadata/HoodieBackedTableMetadataWriter.java
+++ b/hudi-client/hudi-client-common/src/main/java/org/apache/hudi/metadata/HoodieBackedTableMetadataWriter.java
@@ -1006,21 +1006,21 @@ public abstract class HoodieBackedTableMetadataWriter

[GitHub] [hudi] codope merged pull request #6016: [HUDI-4465] Optimizing file-listing sequence of Metadata Table

codope merged PR #6016: URL: https://github.com/apache/hudi/pull/6016

[GitHub] [hudi] codope commented on a diff in pull request #6016: [HUDI-4465] Optimizing file-listing sequence of Metadata Table

codope commented on code in PR #6016:

URL: https://github.com/apache/hudi/pull/6016#discussion_r966582484

##

hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/keygen/SimpleKeyGenerator.java:

##

@@ -46,6 +47,12 @@ public SimpleKeyGenerator(TypedProperties props) {
   SimpleKeyGenerator(TypedProperties props, String recordKeyField, String partitionPathField) {
     super(props);
+    // Make sure key-generator is configured properly
+    ValidationUtils.checkArgument(recordKeyField == null || !recordKeyField.isEmpty(),
+        "Record key field has to be non-empty!");
+    ValidationUtils.checkArgument(partitionPathField == null || !partitionPathField.isEmpty(),

Review Comment:

Discussed offline. It will be taken up separately. @alexeykudinkin if you
have a JIRA, please link it here. For the simple keygen we need the validation
because some misconfigured tests were passing “” as partition fields.
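The condition in the diff above accepts a `null` field but rejects an empty string. A minimal sketch of that rule follows; the class name, the `isAccepted` helper, and the local `checkArgument` stand-in are hypothetical, and the stand-in assumes that Hudi's `ValidationUtils.checkArgument` throws `IllegalArgumentException` when the condition is false.

```java
public class KeyFieldValidationSketch {

  // Local stand-in for ValidationUtils.checkArgument (assumption: the real
  // helper throws IllegalArgumentException when the condition is false).
  static void checkArgument(boolean condition, String message) {
    if (!condition) {
      throw new IllegalArgumentException(message);
    }
  }

  // Same condition as in the diff: null passes, "" is rejected.
  static void validateField(String field, String message) {
    checkArgument(field == null || !field.isEmpty(), message);
  }

  // Hypothetical helper that turns the exception into a boolean for the demo.
  static boolean isAccepted(String field) {
    try {
      validateField(field, "Field has to be non-empty!");
      return true;
    } catch (IllegalArgumentException e) {
      return false;
    }
  }

  public static void main(String[] args) {
    System.out.println(isAccepted(null));   // null is allowed
    System.out.println(isAccepted("date")); // a named field is allowed
    System.out.println(isAccepted(""));     // the misconfigured test input
  }
}
```

Under these assumptions, only the empty string that the misconfigured tests were passing is rejected; an unset (`null`) field still goes through.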

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] LinMingQiang commented on issue #6618: Caused by: org.apache.http.NoHttpResponseException: xxxxxx:34812 failed to respond[SUPPORT]

LinMingQiang commented on issue #6618: URL: https://github.com/apache/hudi/issues/6618#issuecomment-1241441100 I have encountered this problem. This PR may solve it: https://github.com/apache/hudi/pull/6393

[GitHub] [hudi] Zhifeiyu commented on issue #6640: [SUPPORT] HUDI partition table duplicate data cow hudi 0.10.0 flink 1.13.1

Zhifeiyu commented on issue #6640: URL: https://github.com/apache/hudi/issues/6640#issuecomment-1241440579 mark

[jira] [Updated] (HUDI-4818) Using CustomKeyGenerator fails w/ SparkHoodieTableFileIndex

[

https://issues.apache.org/jira/browse/HUDI-4818?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

ASF GitHub Bot updated HUDI-4818:

-

Labels: pull-request-available (was: )

> Using CustomKeyGenerator fails w/ SparkHoodieTableFileIndex

> ---

>

> Key: HUDI-4818

> URL: https://issues.apache.org/jira/browse/HUDI-4818

> Project: Apache Hudi

> Issue Type: Bug

>Reporter: Alexey Kudinkin

>Assignee: Alexey Kudinkin

>Priority: Blocker

> Labels: pull-request-available

> Fix For: 0.13.0

>

>

> Currently using `CustomKeyGenerator` with the partition-path config
> {hoodie.datasource.write.partitionpath.field=ts:timestamp} fails w/
> {code:java}
> Caused by: java.lang.RuntimeException: Failed to cast value `2022-05-11` to `LongType` for partition column `ts_ms`
> at org.apache.spark.sql.execution.datasources.Spark3ParsePartitionUtil.$anonfun$parsePartition$2(Spark3ParsePartitionUtil.scala:72)
> at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:286)
> at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)
> at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)
> at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)
> at scala.collection.TraversableLike.map(TraversableLike.scala:286)
> at scala.collection.TraversableLike.map$(TraversableLike.scala:279)
> at scala.collection.AbstractTraversable.map(Traversable.scala:108)
> at org.apache.spark.sql.execution.datasources.Spark3ParsePartitionUtil.$anonfun$parsePartition$1(Spark3ParsePartitionUtil.scala:65)
> at scala.Option.map(Option.scala:230)
> at org.apache.spark.sql.execution.datasources.Spark3ParsePartitionUtil.parsePartition(Spark3ParsePartitionUtil.scala:63)
> at org.apache.hudi.SparkHoodieTableFileIndex.parsePartitionPath(SparkHoodieTableFileIndex.scala:274)
> at org.apache.hudi.SparkHoodieTableFileIndex.parsePartitionColumnValues(SparkHoodieTableFileIndex.scala:258)
> at org.apache.hudi.BaseHoodieTableFileIndex.lambda$getAllQueryPartitionPaths$3(BaseHoodieTableFileIndex.java:190)
> at java.util.stream.ReferencePipeline$3$1.accept(ReferencePipeline.java:193)
> at java.util.ArrayList$ArrayListSpliterator.forEachRemaining(ArrayList.java:1384)
> at java.util.stream.AbstractPipeline.copyInto(AbstractPipeline.java:482)
> at java.util.stream.AbstractPipeline.wrapAndCopyInto(AbstractPipeline.java:472)
> at java.util.stream.ReduceOps$ReduceOp.evaluateSequential(ReduceOps.java:708)
> at java.util.stream.AbstractPipeline.evaluate(AbstractPipeline.java:234)
> at java.util.stream.ReferencePipeline.collect(ReferencePipeline.java:566)
> at org.apache.hudi.BaseHoodieTableFileIndex.getAllQueryPartitionPaths(BaseHoodieTableFileIndex.java:193)
> {code}
>
> This occurs b/c SparkHoodieTableFileIndex produces an incorrect partition
> schema at XXX, where it properly handles only `TimestampBasedKeyGenerator`s
> but not the other key-generators that might change the data-type of the
> partition-value as compared to the source partition-column (in this case,
> `ts` is a long in the source table schema, but the key-generator produces
> the partition-value as a string).


[GitHub] [hudi] alexeykudinkin opened a new pull request, #6641: [WIP][HUDI-4818] Fixing SparkHoodieTableFileIndex handling of KeyGenerators changing the type of returned partition-value

alexeykudinkin opened a new pull request, #6641:

URL: https://github.com/apache/hudi/pull/6641

### Change Logs

_Describe context and summary for this change. Highlight if any code was copied._

### Impact

_Describe any public API or user-facing feature change or any performance impact._

**Risk level: none | low | medium | high**

_Choose one. If medium or high, explain what verification was done to mitigate the risks._

### Contributor's checklist

- [ ] Read through [contributor's guide](https://hudi.apache.org/contribute/how-to-contribute)
- [ ] Change Logs and Impact were stated clearly
- [ ] Adequate tests were added if applicable
- [ ] CI passed

[GitHub] [hudi] hudi-bot commented on pull request #6489: [HUDI-4485] [cli] Bumped spring shell to 2.1.1. Updated the default …

hudi-bot commented on PR #6489:

URL: https://github.com/apache/hudi/pull/6489#issuecomment-1241435958

## CI report:

* 252b9f49e2c86c7aad7908e5887064b0d0b36932 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=11258)

Bot commands

@hudi-bot supports the following commands:

- `@hudi-bot run azure` re-run the last Azure build

[jira] [Created] (HUDI-4818) Using CustomKeyGenerator fails w/ SparkHoodieTableFileIndex

Alexey Kudinkin created HUDI-4818:

-

Summary: Using CustomKeyGenerator fails w/

SparkHoodieTableFileIndex

Key: HUDI-4818

URL: https://issues.apache.org/jira/browse/HUDI-4818

Project: Apache Hudi

Issue Type: Bug

Reporter: Alexey Kudinkin

Assignee: Alexey Kudinkin

Fix For: 0.13.0

Currently using `CustomKeyGenerator` with the partition-path config
{hoodie.datasource.write.partitionpath.field=ts:timestamp} fails w/
{code:java}
Caused by: java.lang.RuntimeException: Failed to cast value `2022-05-11` to `LongType` for partition column `ts_ms`
at org.apache.spark.sql.execution.datasources.Spark3ParsePartitionUtil.$anonfun$parsePartition$2(Spark3ParsePartitionUtil.scala:72)
at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:286)
at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)
at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)
at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)
at scala.collection.TraversableLike.map(TraversableLike.scala:286)
at scala.collection.TraversableLike.map$(TraversableLike.scala:279)
at scala.collection.AbstractTraversable.map(Traversable.scala:108)
at org.apache.spark.sql.execution.datasources.Spark3ParsePartitionUtil.$anonfun$parsePartition$1(Spark3ParsePartitionUtil.scala:65)
at scala.Option.map(Option.scala:230)
at org.apache.spark.sql.execution.datasources.Spark3ParsePartitionUtil.parsePartition(Spark3ParsePartitionUtil.scala:63)
at org.apache.hudi.SparkHoodieTableFileIndex.parsePartitionPath(SparkHoodieTableFileIndex.scala:274)
at org.apache.hudi.SparkHoodieTableFileIndex.parsePartitionColumnValues(SparkHoodieTableFileIndex.scala:258)
at org.apache.hudi.BaseHoodieTableFileIndex.lambda$getAllQueryPartitionPaths$3(BaseHoodieTableFileIndex.java:190)
at java.util.stream.ReferencePipeline$3$1.accept(ReferencePipeline.java:193)
at java.util.ArrayList$ArrayListSpliterator.forEachRemaining(ArrayList.java:1384)
at java.util.stream.AbstractPipeline.copyInto(AbstractPipeline.java:482)
at java.util.stream.AbstractPipeline.wrapAndCopyInto(AbstractPipeline.java:472)
at java.util.stream.ReduceOps$ReduceOp.evaluateSequential(ReduceOps.java:708)
at java.util.stream.AbstractPipeline.evaluate(AbstractPipeline.java:234)
at java.util.stream.ReferencePipeline.collect(ReferencePipeline.java:566)
at org.apache.hudi.BaseHoodieTableFileIndex.getAllQueryPartitionPaths(BaseHoodieTableFileIndex.java:193)
{code}

This occurs b/c SparkHoodieTableFileIndex produces an incorrect partition
schema at XXX, where it properly handles only `TimestampBasedKeyGenerator`s but
not the other key-generators that might change the data-type of the
partition-value as compared to the source partition-column (in this case, `ts`
is a long in the source table schema, but the key-generator produces the
partition-value as a string).
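
The mismatch described above can be reproduced in isolation. The sketch below is not Hudi or Spark code: `ColumnType`, `parsePartitionValue`, and `canParse` are hypothetical names used only to show why a date string written by a key generator cannot be read back under a partition schema that still declares the source column's long type, while a string-typed partition column reads it back unchanged.

```java
public class PartitionValueCastSketch {

  // Hypothetical stand-in for the data types a partition column can declare.
  enum ColumnType { LONG, STRING }

  // Simplified, hypothetical parser: converts the raw string taken from the
  // partition path into the type declared by the partition schema.
  static Object parsePartitionValue(String raw, ColumnType declaredType) {
    if (declaredType == ColumnType.LONG) {
      try {
        return Long.parseLong(raw);
      } catch (NumberFormatException e) {
        throw new RuntimeException(
            "Failed to cast value `" + raw + "` to `LongType`", e);
      }
    }
    return raw;
  }

  // Returns true when the raw value can be parsed as the declared type.
  static boolean canParse(String raw, ColumnType declaredType) {
    try {
      parsePartitionValue(raw, declaredType);
      return true;
    } catch (RuntimeException e) {
      return false;
    }
  }

  public static void main(String[] args) {
    // Schema taken from the source column (long): the date string fails.
    System.out.println(canParse("2022-05-11", ColumnType.LONG));
    // Schema matching what the key generator wrote (string): it succeeds.
    System.out.println(canParse("2022-05-11", ColumnType.STRING));
  }
}
```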


[jira] [Updated] (HUDI-4818) Using CustomKeyGenerator fails w/ SparkHoodieTableFileIndex

[

https://issues.apache.org/jira/browse/HUDI-4818?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Alexey Kudinkin updated HUDI-4818:

--

Story Points: 4 (was: 2)


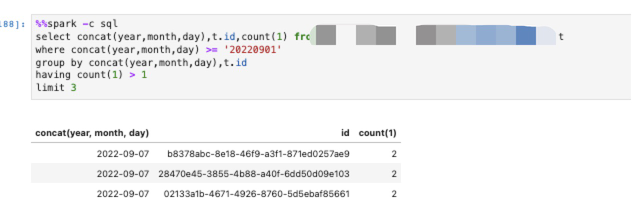

[GitHub] [hudi] zwj0110 opened a new issue, #6640: [SUPPORT] HUDI partition table duplicate data cow

zwj0110 opened a new issue, #6640:

URL: https://github.com/apache/hudi/issues/6640

**_Tips before filing an issue_**

- Have you gone through our [FAQs](https://hudi.apache.org/learn/faq/)?
- Join the mailing list to engage in conversations and get faster support at dev-subscr...@hudi.apache.org.
- If you have triaged this as a bug, then file an [issue](https://issues.apache.org/jira/projects/HUDI/issues) directly.

**Describe the problem you faced**

A clear and concise description of the problem.

**To Reproduce**

Steps to reproduce the behavior:

## hudi sink config

```sql
'connector' = 'hudi',
'hoodie.table.name' = 'xxx',
'table.type' = 'COPY_ON_WRITE',
'path' = '',
'hoodie.datasource.write.keygenerator.type' = 'COMPLEX',
'hoodie.datasource.write.recordkey.field' = 'id',
'hoodie.cleaner.policy' = 'KEEP_LATEST_FILE_VERSIONS',
'hoodie.cleaner.fileversions.retained' = '20',
'hoodie.keep.min.commits' = '30',
'hoodie.keep.max.commits' = '40',
'hoodie.cleaner.commits.retained' = '20',
'write.operation' = 'upsert',
'write.commit.ack.timeout' = '6000',
'write.sort.memory' = '128',
'write.task.max.size' = '1024',
'write.merge.max_memory' = '100',
'write.tasks' = '96',
'write.precombine' = 'true',
'write.precombine.field' = 'meta_es_offset',
'index.state.ttl' = '0',
'index.global.enabled' = 'false',
'hive_sync.enable' = 'true',
'hive_sync.table' = 'xxx',
'hive_sync.auto_create_db' = 'true',
'hive_sync.mode' = 'hms',
'hive_sync.metastore.uris' = 'xxx',
'hive_sync.db' = 'xxx',
'hoodie.datasource.write.partitionpath.field' = 'year,month,day',
'hoodie.datasource.write.hive_style_partitioning' = 'true',
'hive_sync.partition_fields' = 'year,month,day',
'hive_sync.partition_extractor_class' = 'org.apache.hudi.hive.MultiPartKeysValueExtractor',
'index.bootstrap.enabled' = 'true'
```

## description:

After initializing the history data, we set `scan.startup.mode` to `timestamp` and set the timestamp ahead. Duplicates then occur. If we restart the job from a checkpoint, the data is fine.

## data duplicate result:

**Expected behavior**

A clear and concise description of what you expected to happen.

**Environment Description**

* Hudi version : 0.10.0
* flink version : 1.13.1
* Hive version : 3.1.2
* Hadoop version : 3.1.0
* Storage (HDFS/S3/GCS..) : s3
* Running on Docker? (yes/no) : no

**Additional context**

Add any other context about the problem here.

**Stacktrace**

```Add the stacktrace of the error.```

[GitHub] [hudi] wqwl611 commented on pull request #6636: add new index RANGE_BUCKET , when primary key is auto-increment like most mysql table

wqwl611 commented on PR #6636:

URL: https://github.com/apache/hudi/pull/6636#issuecomment-1241432142

> Nice feature, can we log a JIRA issue and change the commit title to
"[HUDI-${JIRA_ID}] ${your title}"

@danny0405 Yes, thanks.


[GitHub] [hudi] wqwl611 commented on pull request #6636: add new index RANGE_BUCKET , when primary key is auto-increment like most mysql table

wqwl611 commented on PR #6636:

URL: https://github.com/apache/hudi/pull/6636#issuecomment-1241430662

> Nice feature, can we log a JIRA issue and change the commit title to
"[HUDI-${JIRA_ID}] ${your title}"

yes


[GitHub] [hudi] YuweiXiao commented on pull request #6632: [HUDI-4753] more accurate record size estimation for log writing and spillable map

YuweiXiao commented on PR #6632: URL: https://github.com/apache/hudi/pull/6632#issuecomment-1241421818 @yihua Hey Yihua, could you help review this PR?

[GitHub] [hudi] YuweiXiao commented on pull request #6629: [HUDI-4807] Use base table instant for metadata table initialization

YuweiXiao commented on PR #6629:

URL: https://github.com/apache/hudi/pull/6629#issuecomment-1241421204

> @YuweiXiao Can you please add more details in the PR description? It would be great if you add a test as well.

I have added some details. It does not seem easy to add a test for it, as the failure only occurs when we modify `HoodieAppendHandle` (i.e., in the consistent hashing PR).

[GitHub] [hudi] danny0405 commented on pull request #6595: [HUDI-4777] Fix flink gen bucket index of mor table not consistent wi…

danny0405 commented on PR #6595:

URL: https://github.com/apache/hudi/pull/6595#issuecomment-1241417330

> I will give a PR to fix this on the Spark side too. On the Flink side, I think deduplication should also be enabled by default for MOR tables: when duplicates are written to the log file, they are very hard for compaction to read, and they make the MOR table unstable because the duplicate record is read into memory twice.

The initial idea is to keep the details of the log records, such as in the CDC change log feed.

[GitHub] [hudi] danny0405 commented on a diff in pull request #6634: [HUDI-4813] Fix infer keygen not work in sparksql side issue

danny0405 commented on code in PR #6634:

URL: https://github.com/apache/hudi/pull/6634#discussion_r966556852

##

hudi-spark-datasource/hudi-spark-common/src/main/scala/org/apache/hudi/DataSourceOptions.scala:

##

@@ -787,9 +787,13 @@ object DataSourceOptionsHelper {
   def inferKeyGenClazz(props: TypedProperties): String = {
     val partitionFields = props.getString(DataSourceWriteOptions.PARTITIONPATH_FIELD.key(), null)
+    val recordsKeyFields = props.getString(DataSourceWriteOptions.RECORDKEY_FIELD.key(),
+      DataSourceWriteOptions.RECORDKEY_FIELD.defaultValue())
+    genKeyGenerator(recordsKeyFields, partitionFields)
+  }
+
+  def genKeyGenerator(recordsKeyFields: String, partitionFields: String): String = {
     if (partitionFields != null) {

Review Comment:

Let's just rename `genKeyGenerator` to `inferKeyGenClazz`. That is okay because
the two methods have different signatures. Also, can we write a test case for
Spark SQL?
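
The rename suggested above relies on method overloading: two methods can share a name as long as their parameter lists differ. The sketch below illustrates that idea in Java rather than Scala. `Properties` stands in for `TypedProperties`, the `"uuid"` default is an assumption, and the selection rule in the two-argument overload is a placeholder for illustration, not the logic from the PR.

```java
import java.util.Properties;

public class InferKeyGenOverloadSketch {

  static final String RECORDKEY_FIELD = "hoodie.datasource.write.recordkey.field";
  static final String PARTITIONPATH_FIELD = "hoodie.datasource.write.partitionpath.field";

  // Overload 1: reads the two fields from the properties, then delegates.
  static String inferKeyGenClazz(Properties props) {
    String partitionFields = props.getProperty(PARTITIONPATH_FIELD);
    // "uuid" as the record-key default is an assumption made for this sketch.
    String recordKeyFields = props.getProperty(RECORDKEY_FIELD, "uuid");
    return inferKeyGenClazz(recordKeyFields, partitionFields);
  }

  // Overload 2: same name, different parameter list, so the compiler can
  // tell the two apart. The rule below is a placeholder.
  static String inferKeyGenClazz(String recordKeyFields, String partitionFields) {
    if (partitionFields == null) {
      return "NonpartitionedKeyGenerator";
    }
    boolean singleFields = recordKeyFields.split(",").length == 1
        && partitionFields.split(",").length == 1;
    return singleFields ? "SimpleKeyGenerator" : "ComplexKeyGenerator";
  }

  public static void main(String[] args) {
    Properties props = new Properties();
    props.setProperty(RECORDKEY_FIELD, "id");
    props.setProperty(PARTITIONPATH_FIELD, "year,month,day");
    System.out.println(inferKeyGenClazz(props));
    System.out.println(inferKeyGenClazz("id", "date"));
  }
}
```

A caller that already holds both field strings can use the two-argument overload directly, while existing callers keep passing the properties object.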


[jira] [Commented] (HUDI-4811) Fix the checkstyle of hudi flink

[ https://issues.apache.org/jira/browse/HUDI-4811?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17602058#comment-17602058 ]

Danny Chen commented on HUDI-4811:

Fixed via master branch: 13eb892081fc4ddd5e1592ef8698831972012666

> Fix the checkstyle of hudi flink
>
> Key: HUDI-4811
> URL: https://issues.apache.org/jira/browse/HUDI-4811
> Project: Apache Hudi
> Issue Type: Task
> Components: flink-sql
> Reporter: Danny Chen
> Priority: Major
> Labels: pull-request-available
> Fix For: 0.12.1

[jira] [Resolved] (HUDI-4811) Fix the checkstyle of hudi flink

[ https://issues.apache.org/jira/browse/HUDI-4811?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Danny Chen resolved HUDI-4811.

> Fix the checkstyle of hudi flink
>
> Key: HUDI-4811
> URL: https://issues.apache.org/jira/browse/HUDI-4811
> Project: Apache Hudi
> Issue Type: Task
> Components: flink-sql
> Reporter: Danny Chen
> Priority: Major
> Labels: pull-request-available
> Fix For: 0.12.1

[jira] [Updated] (HUDI-4811) Fix the checkstyle of hudi flink

[ https://issues.apache.org/jira/browse/HUDI-4811?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Danny Chen updated HUDI-4811:

Fix Version/s: 0.12.1

> Fix the checkstyle of hudi flink
>
> Key: HUDI-4811
> URL: https://issues.apache.org/jira/browse/HUDI-4811
> Project: Apache Hudi
> Issue Type: Task
> Components: flink-sql
> Reporter: Danny Chen
> Priority: Major
> Labels: pull-request-available
> Fix For: 0.12.1

[hudi] branch master updated (e1da06fa70 -> 13eb892081)

This is an automated email from the ASF dual-hosted git repository.

danny0405 pushed a change to branch master
in repository https://gitbox.apache.org/repos/asf/hudi.git

from e1da06fa70 [MINOR] Typo fix for kryo in flink-bundle (#6639)
add 13eb892081 [HUDI-4811] Fix the checkstyle of hudi flink (#6633)

No new revisions were added by this update.

Summary of changes:
hudi-client/hudi-flink-client/pom.xml | 561 +++--
.../hudi/client/FlinkTaskContextSupplier.java | 2 +-
.../apache/hudi/client/HoodieFlinkWriteClient.java | 8 +
.../client/common/HoodieFlinkEngineContext.java | 7 +-
.../hudi/execution/FlinkLazyInsertIterable.java | 5 +
.../java/org/apache/hudi/io/MiniBatchHandle.java | 3 +-
.../io/storage/row/HoodieRowDataParquetWriter.java | 2 +-
.../row/parquet/ParquetSchemaConverter.java | 5 +-
.../FlinkHoodieBackedTableMetadataWriter.java | 3 +
.../hudi/table/ExplicitWriteHandleTable.java | 22 +-
.../hudi/table/HoodieFlinkCopyOnWriteTable.java | 23 +-
.../hudi/table/HoodieFlinkMergeOnReadTable.java | 3 +
.../org/apache/hudi/table/HoodieFlinkTable.java | 3 +
.../commit/FlinkDeleteCommitActionExecutor.java | 3 +
.../table/action/commit/FlinkDeleteHelper.java | 3 +
.../commit/FlinkInsertCommitActionExecutor.java | 3 +
.../FlinkInsertOverwriteCommitActionExecutor.java | 3 +
...nkInsertOverwriteTableCommitActionExecutor.java | 3 +
.../FlinkInsertPreppedCommitActionExecutor.java | 3 +
.../hudi/table/action/commit/FlinkMergeHelper.java | 3 +
.../commit/FlinkUpsertCommitActionExecutor.java | 3 +
.../FlinkUpsertPreppedCommitActionExecutor.java | 3 +
.../delta/BaseFlinkDeltaCommitActionExecutor.java | 3 +
.../FlinkUpsertDeltaCommitActionExecutor.java | 3 +
...linkUpsertPreppedDeltaCommitActionExecutor.java | 3 +
.../common/TestHoodieFlinkEngineContext.java | 2 +-
.../index/bloom/TestFlinkHoodieBloomIndex.java | 13 +-
.../testutils/HoodieFlinkClientTestHarness.java | 9 +-
.../testutils/HoodieFlinkWriteableTestTable.java | 5 +-
.../apache/hudi/configuration/FlinkOptions.java | 2 +-
.../hudi/configuration/HadoopConfigurations.java | 2 +-
.../sink/clustering/FlinkClusteringConfig.java | 10 +-
.../sink/clustering/HoodieFlinkClusteringJob.java | 2 +-
.../hudi/sink/compact/CompactionPlanOperator.java | 2 +-
.../hudi/sink/compact/HoodieFlinkCompactor.java | 2 +-
.../java/org/apache/hudi/sink/utils/Pipelines.java | 18 +-
.../hudi/source/stats/ExpressionEvaluator.java | 1 +
.../apache/hudi/streamer/FlinkStreamerConfig.java | 4 +-
.../org/apache/hudi/table/HoodieTableSource.java | 2 +-
.../apache/hudi/table/catalog/HiveSchemaUtils.java | 16 +-
.../hudi/table/catalog/HoodieHiveCatalog.java | 2 +-
.../org/apache/hudi/util/AvroSchemaConverter.java | 2 +-
.../java/org/apache/hudi/util/ClusteringUtil.java | 2 +-
.../java/org/apache/hudi/util/CompactionUtil.java | 2 +-
.../java/org/apache/hudi/util/DataTypeUtils.java | 2 +-
.../hudi/util/FlinkStateBackendConverter.java | 6 +-
.../java/org/apache/hudi/util/HoodiePipeline.java | 62 +--
.../java/org/apache/hudi/util/StreamerUtil.java | 4 +-
.../apache/hudi/sink/ITTestDataStreamWrite.java | 4 +-
.../org/apache/hudi/sink/TestWriteMergeOnRead.java | 52 +-
.../sink/compact/ITTestHoodieFlinkCompactor.java | 2 +-
.../sink/compact/TestCompactionPlanStrategy.java | 39 +-
.../hudi/source/stats/TestExpressionEvaluator.java | 2 +-
.../apache/hudi/table/ITTestHoodieDataSource.java | 4 +-
.../hudi/table/catalog/HoodieCatalogTestUtils.java | 8 +-
.../table/catalog/TestHoodieCatalogFactory.java | 2 +-
.../hudi/table/catalog/TestHoodieHiveCatalog.java | 4 +-
.../test/java/org/apache/hudi/utils/TestData.java | 16 +-
.../hudi-flink/src/test/resources/hive-site.xml | 16 +-
.../test-catalog-factory-conf/hive-site.xml | 10 +-
.../format/cow/vector/HeapMapColumnVector.java | 2 +-
61 files changed, 546 insertions(+), 470 deletions(-)

[GitHub] [hudi] danny0405 merged pull request #6633: [HUDI-4811] Fix the checkstyle of hudi flink

danny0405 merged PR #6633:
URL: https://github.com/apache/hudi/pull/6633

[GitHub] [hudi] hudi-bot commented on pull request #6489: [HUDI-4485] [cli] Bumped spring shell to 2.1.1. Updated the default …

hudi-bot commented on PR #6489:
URL: https://github.com/apache/hudi/pull/6489#issuecomment-1241399541

## CI report:

* 3ae4fb8b374e12b1097a86d56e5996b7dc0ac79f Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=11215)
* 252b9f49e2c86c7aad7908e5887064b0d0b36932 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=11258)

[GitHub] [hudi] danny0405 commented on pull request #6636: add new index RANGE_BUCKET , when primary key is auto-increment like most mysql table

danny0405 commented on PR #6636:

URL: https://github.com/apache/hudi/pull/6636#issuecomment-1241399179

Nice feature. Can we log a JIRA issue and change the commit title to

"[HUDI-${JIRA_ID}] ${your title}"
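The title convention requested above can be checked mechanically. The following is a minimal sketch, not a tool from the Hudi repository: the pattern and the function name `is_conventional_title` are assumptions for illustration, and the `[MINOR]` form is inferred only from commit titles that appear elsewhere in this archive.

```python
import re

# Hypothetical pattern (an assumption, not an official Hudi rule):
# a title starts with "[HUDI-<number>]" or "[MINOR]", followed by text.
TITLE_PATTERN = re.compile(r"^\[(HUDI-\d+|MINOR)\]\s+\S.*$")


def is_conventional_title(title: str) -> bool:
    """Return True if the title starts with a JIRA tag or the MINOR tag."""
    return TITLE_PATTERN.match(title) is not None


if __name__ == "__main__":
    for title in (
        "[HUDI-4811] Fix the checkstyle of hudi flink",
        "[MINOR] Typo fix for kryo in flink-bundle",
        "add new index RANGE_BUCKET",
    ):
        print(title, "->", is_conventional_title(title))
```

A check like this only validates the shape of the title; it does not confirm that the referenced JIRA issue exists.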


[hudi] branch master updated: [MINOR] Typo fix for kryo in flink-bundle (#6639)

This is an automated email from the ASF dual-hosted git repository.

danny0405 pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/hudi.git

The following commit(s) were added to refs/heads/master by this push:

new e1da06fa70 [MINOR] Typo fix for kryo in flink-bundle (#6639)

e1da06fa70 is described below

commit e1da06fa7024b9fa84811ec030b8f5f06ce52b06

Author: Xingcan Cui

AuthorDate: Thu Sep 8 21:25:31 2022 -0400

[MINOR] Typo fix for kryo in flink-bundle (#6639)

---

packaging/hudi-flink-bundle/pom.xml | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/packaging/hudi-flink-bundle/pom.xml b/packaging/hudi-flink-bundle/pom.xml

index 8ccf92c77b..de0478ff06 100644

--- a/packaging/hudi-flink-bundle/pom.xml

+++ b/packaging/hudi-flink-bundle/pom.xml

@@ -130,7 +130,7 @@

javax.servlet:javax.servlet-api

- com.esotericsoftware:kryo-shaded

+ com.esotericsoftware:kryo-shaded

org.apache.flink:${flink.hadoop.compatibility.artifactId}

org.apache.flink:flink-json

[GitHub] [hudi] danny0405 merged pull request #6639: [MINOR] Typo fix for kryo in flink-bundle

danny0405 merged PR #6639:
URL: https://github.com/apache/hudi/pull/6639

[GitHub] [hudi] hudi-bot commented on pull request #6489: [HUDI-4485] [cli] Bumped spring shell to 2.1.1. Updated the default …

hudi-bot commented on PR #6489:
URL: https://github.com/apache/hudi/pull/6489#issuecomment-1241397077

## CI report:

* 3ae4fb8b374e12b1097a86d56e5996b7dc0ac79f Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=11215)
* 252b9f49e2c86c7aad7908e5887064b0d0b36932 UNKNOWN

[GitHub] [hudi] dongkelun commented on a diff in pull request #5478: [HUDI-3998] Fix getCommitsSinceLastCleaning failed when async cleaning

dongkelun commented on code in PR #5478:

URL: https://github.com/apache/hudi/pull/5478#discussion_r966535607

##

hudi-timeline-service/src/main/java/org/apache/hudi/timeline/service/RequestHandler.java:

##

@@ -539,4 +543,19 @@ public void handle(@NotNull Context context) throws Exception {

}

}

}

+

+ /**

+ * Determine whether to throw an exception when local view of table's timeline is behind that of client's view.

+ */

+ private boolean shouldThrowExceptionIfLocalViewBehind(HoodieTimeline localTimeline, String lastInstantTs) {

Review Comment:

I modified the logic of the `shouldThrowExceptionIfLocalViewBehind` method. I think it's better this way.


[GitHub] [hudi] hudi-bot commented on pull request #6639: [MINOR] Typo fix for kryo in flink-bundle

hudi-bot commented on PR #6639:
URL: https://github.com/apache/hudi/pull/6639#issuecomment-1241323712

## CI report:

* e83471bf24d848fbb3c8ec16decf1bcbe0d5449a Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=11257)

[jira] [Updated] (HUDI-4817) Markers are not deleted after bootstrap operation

[

https://issues.apache.org/jira/browse/HUDI-4817?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Ethan Guo updated HUDI-4817:

Sprint: 2022/09/05

> Markers are not deleted after bootstrap operation

> -

>

> Key: HUDI-4817

> URL: https://issues.apache.org/jira/browse/HUDI-4817

> Project: Apache Hudi

> Issue Type: Bug

> Components: bootstrap

>Affects Versions: 0.12.0

>Reporter: Ethan Guo

>Assignee: Ethan Guo

>Priority: Critical

> Labels: bootstrap

> Fix For: 0.12.1

>

>

> After the bootstrap operation finishes, the markers are left behind, not

> cleaned up.

>

> {code:java}

> ls -l .hoodie/.temp/02/

> total 24

> -rw-r--r-- MARKERS.type

> -rw-r--r-- MARKERS0

> -rw-r--r-- MARKERS4 {code}

> {code:java}

> ls -l .hoodie/

> total 40

> -rw-r--r-- 02.commit

> -rw-r--r-- 02.commit.requested

> -rw-r--r-- 02.inflight

> drwxr-xr-x archived

> -rw-r--r-- hoodie.properties

> drwxr-xr-x metadata {code}

>
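The symptom in the listings above (a marker directory under `.hoodie/.temp/` that outlives its completed commit) can be detected on a local filesystem with a short script. This is a rough sketch under stated assumptions: the layout is taken only from the two `ls` listings in this issue, the function name `leftover_marker_dirs` is hypothetical, and the script neither uses any Hudi API nor handles non-local storage.

```python
from pathlib import Path


def leftover_marker_dirs(table_path):
    """List marker directories whose instant already has a completed commit.

    Assumes the layout shown in the issue: completed instants appear as
    `.hoodie/<instant>.commit` and markers live in `.hoodie/.temp/<instant>/`.
    """
    hoodie = Path(table_path) / ".hoodie"
    temp = hoodie / ".temp"
    if not temp.is_dir():
        return []
    # "*.commit" does not match "<instant>.commit.requested" or "<instant>.inflight".
    completed = {p.name[: -len(".commit")] for p in hoodie.glob("*.commit")}
    return sorted(
        d.name for d in temp.iterdir() if d.is_dir() and d.name in completed
    )


if __name__ == "__main__":
    # Prints the instants whose markers were left behind, if any.
    print(leftover_marker_dirs("."))
```

An empty result means no completed instant still has a marker directory; a non-empty result reproduces the condition described in this issue.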


[jira] [Updated] (HUDI-4817) Markers are not deleted after bootstrap operation

[

https://issues.apache.org/jira/browse/HUDI-4817?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Ethan Guo updated HUDI-4817:

Fix Version/s: 0.12.1

> Markers are not deleted after bootstrap operation

> -

>

> Key: HUDI-4817

> URL: https://issues.apache.org/jira/browse/HUDI-4817

> Project: Apache Hudi

> Issue Type: Bug

>Reporter: Ethan Guo

>Assignee: Ethan Guo

>Priority: Major

> Fix For: 0.12.1

>



[jira] [Updated] (HUDI-4817) Markers are not deleted after bootstrap operation

[

https://issues.apache.org/jira/browse/HUDI-4817?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Ethan Guo updated HUDI-4817:

Priority: Critical (was: Major)

> Markers are not deleted after bootstrap operation

> -

>

> Key: HUDI-4817

> URL: https://issues.apache.org/jira/browse/HUDI-4817

> Project: Apache Hudi

> Issue Type: Bug

> Components: bootstrap

>Affects Versions: 0.12.0

>Reporter: Ethan Guo

>Assignee: Ethan Guo

>Priority: Critical

> Labels: bootstrap

> Fix For: 0.12.1

>

>