[GitHub] [hudi] hudi-bot commented on pull request #6782: [HUDI-4911][HUDI-3301] Fixing `HoodieMetadataLogRecordReader` to avoid flushing cache for every lookup

hudi-bot commented on PR #6782: URL: https://github.com/apache/hudi/pull/6782#issuecomment-1370587477 ## CI report: * 182351f81d81cbfa9be87fa2e13d5d58a4d7bec4 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14090) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] yihua commented on a diff in pull request #7561: [HUDI-5477] Optimize timeline loading in Hudi sync client

yihua commented on code in PR #7561:

URL: https://github.com/apache/hudi/pull/7561#discussion_r1061210337

##

hudi-common/src/main/java/org/apache/hudi/common/table/timeline/TimelineUtils.java:

##

@@ -210,11 +210,30 @@ public static HoodieDefaultTimeline

getTimeline(HoodieTableMetaClient metaClient

return activeTimeline;

}

+ /**

+ * Returns a Hudi timeline with commits after the given instant time

(exclusive).

+ *

+ * @param metaClient{@link HoodieTableMetaClient} instance.

+ * @param exclusiveStartInstantTime Start instant time (exclusive).

+ * @return Hudi timeline.

+ */

+ public static HoodieTimeline getCommitsTimelineAfter(

+ HoodieTableMetaClient metaClient, String exclusiveStartInstantTime) {

+HoodieActiveTimeline activeTimeline = metaClient.getActiveTimeline();

+HoodieDefaultTimeline timeline =

+activeTimeline.isBeforeTimelineStarts(exclusiveStartInstantTime)

+? metaClient.getArchivedTimeline(exclusiveStartInstantTime)

+.mergeTimeline(activeTimeline)

+: activeTimeline;

+return timeline.getCommitsTimeline()

+.findInstantsAfter(exclusiveStartInstantTime, Integer.MAX_VALUE);

+ }

Review Comment:

I think I misunderstood your comment before. When

`activeTimeline.isBeforeTimelineStarts(exclusiveStartInstantTime)` is true,

`metaClient.getArchivedTimeline(exclusiveStartInstantTime)` is called to return

the archived timeline, which still contains the instant

exclusiveStartInstantTime (inclusive). So we still need `#findInstantsAfter`

as the last step to exclude that instant.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] voonhous commented on pull request #7159: [HUDI-5173]Skip if there is only one file in clusteringGroup

voonhous commented on PR #7159: URL: https://github.com/apache/hudi/pull/7159#issuecomment-1370582777 # Issue Issue at hand: Clustering will be performed for inputGroups with only 1 fileSlice, which may cause unnecessary file re-writes and write amplifications should there be no column sorting required. # Edge cases CMIIW, the changes here does not fully fix the cluster of inputGroups with only 1 fileSlice issue. I am not sure if I have missed out any scenarios, at the top of my head, I can only think of these 3 scenarios. 1. No sorting required 2. Sorting required; column has not been sorted (replacecommit/clustering not performed yet) 3. Sorting required; column has already been sorted (replacecommit/clustering has been performed) While this fix is able to fix the issue for case (1), it is not able to differentiate between the cases (2) and (3). As such, if a parquet file has the required columns that are already sorted, an unnecessary rewrite will be performed again. I am not sure if there are any way around this issue other than reading required replacecommit files (if they are not archived) to check if a sort operation has been performed. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] SabyasachiDasTR opened a new issue, #7600: Hoodie clean is not deleting old files for MOR table

SabyasachiDasTR opened a new issue, #7600: URL: https://github.com/apache/hudi/issues/7600 **Describe the problem you faced** We are incrementally upserting data into our Hudi table/s every 5 minutes. We have set CLEANER_POLICY as KEEP_LATEST_BY_HOURS with CLEANER_HOURS_RETAINED = 48. The old delta log files in our partition from 2 months back are still not cleaned and we can see in cli last cleanup happened 2 months back on November. I do not see any action being performed on cleaning the old log files. The only command we execute is Upsert and we have single writer and compaction runs every hour. We think this is causing out emr job to underperform and crash multiple times as very large number of delta log files are getting piled up in the partitions and compaction is trying to read them while processing the job.  **Options used during Upsert:**  **Writing to s3**  Partition structure: s3://bucket/table/partition/parquet and .log files **Expected behavior** As per my understanding the logs should be deleted beyond CLEANER_HOURS_RETAINED which is 2 days . **Environment Description** * Hudi version : 0.11.1 * Spark version : 3.2.1 * Hive version : Hive not install on EMR Cluster emr-6.7.0 * Hadoop version : 3.2.1 * Storage (HDFS/S3/GCS..) : s3 * Running on Docker? (yes/no) : No -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #7573: [HUDI-5484] Avoid using GenericRecord in ColumnStatMetadata

hudi-bot commented on PR #7573: URL: https://github.com/apache/hudi/pull/7573#issuecomment-1370533946 ## CI report: * 1ac267ba9af690ecd47f74f60c34851387aee9eb Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14080) Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14083) Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14089) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #7528: [HUDI-5443] Fixing exception trying to read MOR table after `NestedSchemaPruning` rule has been applied

hudi-bot commented on PR #7528: URL: https://github.com/apache/hudi/pull/7528#issuecomment-1370533874 ## CI report: * f3a439884f90500e29da0075f4d0ad7d73a484b3 UNKNOWN * 801223083980fed8f400a34588df98cca453e439 Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14088) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] danny0405 commented on pull request #7584: [HUDI-5205] Support Flink 1.16.0

danny0405 commented on PR #7584: URL: https://github.com/apache/hudi/pull/7584#issuecomment-1370531069 Thanks for the contribution, I have reviewed again and created another patch: [5205.patch.zip](https://github.com/apache/hudi/files/10341889/5205.patch.zip) The tests failed because the `JsonRowDataDeserializationSchema` is refactorted where the `#open` method must be called for some JSON infrustructures initialization. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] BruceKellan commented on pull request #7445: [HUDI-5380] Fixing change table path but table location in metastore …

BruceKellan commented on PR #7445: URL: https://github.com/apache/hudi/pull/7445#issuecomment-1370524442 The failure of CI seems to have flaky test. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Closed] (HUDI-5496) Prevent Hudi from generating clustering plans with filegroups consisting of only 1 fileSlice

[

https://issues.apache.org/jira/browse/HUDI-5496?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

voon closed HUDI-5496.

--

Resolution: Duplicate

> Prevent Hudi from generating clustering plans with filegroups consisting of

> only 1 fileSlice

>

>

> Key: HUDI-5496

> URL: https://issues.apache.org/jira/browse/HUDI-5496

> Project: Apache Hudi

> Issue Type: Bug

>Reporter: voon

>Assignee: voon

>Priority: Major

> Labels: pull-request-available

>

> Suppose a partition is no longer being written/updated, i.e. there will be no

> changes to the partition, therefore, size of parquet files will always be the

> same.

>

> If the parquet files in the partition (even after prior clustering) is

> smaller than {*}hoodie.clustering.plan.strategy.small.file.limit{*}, the

> fileSlice will always be returned as a candidate for

> {_}getFileSlicesEligibleForClustering(){_}.

>

> This may cause inputGroups with only 1 fileSlice to be selected as candidates

> for clustering. An of a clusteringPlan demonstrating such a case in JSON

> format is seen below.

>

>

> {code:java}

> {

> "inputGroups": [

> {

> "slices": [

> {

> "dataFilePath":

> "/path/clustering_test_table/dt=2023-01-03/cf2929a7-78dc-4e99-be0c-926e9487187d-0_0-2-0_20230104102201656.parquet",

> "deltaFilePaths": [],

> "fileId": "cf2929a7-78dc-4e99-be0c-926e9487187d-0",

> "partitionPath": "dt=2023-01-03",

> "bootstrapFilePath": "",

> "version": 1

> }

> ],

> "metrics": {

> "TOTAL_LOG_FILES": 0.0,

> "TOTAL_IO_MB": 260.0,

> "TOTAL_IO_READ_MB": 130.0,

> "TOTAL_LOG_FILES_SIZE": 0.0,

> "TOTAL_IO_WRITE_MB": 130.0

> },

> "numOutputFileGroups": 1,

> "extraMetadata": null,

> "version": 1

> },

> {

> "slices": [

> {

> "dataFilePath":

> "/path/clustering_test_table/dt=2023-01-04/b101162e-4813-4de6-9881-4ee0ff918f32-0_0-2-0_20230104103401458.parquet",

> "deltaFilePaths": [],

> "fileId": "b101162e-4813-4de6-9881-4ee0ff918f32-0",

> "partitionPath": "dt=2023-01-04",

> "bootstrapFilePath": "",

> "version": 1

> },

> {

> "dataFilePath":

> "/path/clustering_test_table/dt=2023-01-04/9b1c1494-2a58-43f1-890d-4b52070937b1-0_0-2-0_20230104102201656.parquet",

> "deltaFilePaths": [],

> "fileId": "9b1c1494-2a58-43f1-890d-4b52070937b1-0",

> "partitionPath": "dt=2023-01-04",

> "bootstrapFilePath": "",

> "version": 1

> }

> ],

> "metrics": {

> "TOTAL_LOG_FILES": 0.0,

> "TOTAL_IO_MB": 418.0,

> "TOTAL_IO_READ_MB": 209.0,

> "TOTAL_LOG_FILES_SIZE": 0.0,

> "TOTAL_IO_WRITE_MB": 209.0

> },

> "numOutputFileGroups": 1,

> "extraMetadata": null,

> "version": 1

> }

> ],

> "strategy": {

> "strategyClassName":

> "org.apache.hudi.client.clustering.run.strategy.SparkSortAndSizeExecutionStrategy",

> "strategyParams": {},

> "version": 1

> },

> "extraMetadata": {},

> "version": 1,

> "preserveHoodieMetadata": true

> }{code}

>

> Such a case will cause performance issues as a parquet file is re-written

> unnecessarily (write amplification).

>

> The fix is to only select inputGroups with more than 1 fileSlice as

> candidates for clustering.

>

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

[jira] [Commented] (HUDI-5496) Prevent Hudi from generating clustering plans with filegroups consisting of only 1 fileSlice

[

https://issues.apache.org/jira/browse/HUDI-5496?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17654303#comment-17654303

]

voon commented on HUDI-5496:

Duplicate of HUDI-5173.

> Prevent Hudi from generating clustering plans with filegroups consisting of

> only 1 fileSlice

>

>

> Key: HUDI-5496

> URL: https://issues.apache.org/jira/browse/HUDI-5496

> Project: Apache Hudi

> Issue Type: Bug

>Reporter: voon

>Assignee: voon

>Priority: Major

> Labels: pull-request-available

>

> Suppose a partition is no longer being written/updated, i.e. there will be no

> changes to the partition, therefore, size of parquet files will always be the

> same.

>

> If the parquet files in the partition (even after prior clustering) is

> smaller than {*}hoodie.clustering.plan.strategy.small.file.limit{*}, the

> fileSlice will always be returned as a candidate for

> {_}getFileSlicesEligibleForClustering(){_}.

>

> This may cause inputGroups with only 1 fileSlice to be selected as candidates

> for clustering. An of a clusteringPlan demonstrating such a case in JSON

> format is seen below.

>

>

> {code:java}

> {

> "inputGroups": [

> {

> "slices": [

> {

> "dataFilePath":

> "/path/clustering_test_table/dt=2023-01-03/cf2929a7-78dc-4e99-be0c-926e9487187d-0_0-2-0_20230104102201656.parquet",

> "deltaFilePaths": [],

> "fileId": "cf2929a7-78dc-4e99-be0c-926e9487187d-0",

> "partitionPath": "dt=2023-01-03",

> "bootstrapFilePath": "",

> "version": 1

> }

> ],

> "metrics": {

> "TOTAL_LOG_FILES": 0.0,

> "TOTAL_IO_MB": 260.0,

> "TOTAL_IO_READ_MB": 130.0,

> "TOTAL_LOG_FILES_SIZE": 0.0,

> "TOTAL_IO_WRITE_MB": 130.0

> },

> "numOutputFileGroups": 1,

> "extraMetadata": null,

> "version": 1

> },

> {

> "slices": [

> {

> "dataFilePath":

> "/path/clustering_test_table/dt=2023-01-04/b101162e-4813-4de6-9881-4ee0ff918f32-0_0-2-0_20230104103401458.parquet",

> "deltaFilePaths": [],

> "fileId": "b101162e-4813-4de6-9881-4ee0ff918f32-0",

> "partitionPath": "dt=2023-01-04",

> "bootstrapFilePath": "",

> "version": 1

> },

> {

> "dataFilePath":

> "/path/clustering_test_table/dt=2023-01-04/9b1c1494-2a58-43f1-890d-4b52070937b1-0_0-2-0_20230104102201656.parquet",

> "deltaFilePaths": [],

> "fileId": "9b1c1494-2a58-43f1-890d-4b52070937b1-0",

> "partitionPath": "dt=2023-01-04",

> "bootstrapFilePath": "",

> "version": 1

> }

> ],

> "metrics": {

> "TOTAL_LOG_FILES": 0.0,

> "TOTAL_IO_MB": 418.0,

> "TOTAL_IO_READ_MB": 209.0,

> "TOTAL_LOG_FILES_SIZE": 0.0,

> "TOTAL_IO_WRITE_MB": 209.0

> },

> "numOutputFileGroups": 1,

> "extraMetadata": null,

> "version": 1

> }

> ],

> "strategy": {

> "strategyClassName":

> "org.apache.hudi.client.clustering.run.strategy.SparkSortAndSizeExecutionStrategy",

> "strategyParams": {},

> "version": 1

> },

> "extraMetadata": {},

> "version": 1,

> "preserveHoodieMetadata": true

> }{code}

>

> Such a case will cause performance issues as a parquet file is re-written

> unnecessarily (write amplification).

>

> The fix is to only select inputGroups with more than 1 fileSlice as

> candidates for clustering.

>

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

[GitHub] [hudi] voonhous closed pull request #7599: [HUDI-5496] Prevent unnecessary rewrites when performing clustering

voonhous closed pull request #7599: [HUDI-5496] Prevent unnecessary rewrites when performing clustering URL: https://github.com/apache/hudi/pull/7599 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] voonhous commented on pull request #7599: [HUDI-5496] Prevent unnecessary rewrites when performing clustering

voonhous commented on PR #7599: URL: https://github.com/apache/hudi/pull/7599#issuecomment-1370517402 @SteNicholas great, I'll close my PR then. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] SteNicholas commented on pull request #7599: [HUDI-5496] Prevent unnecessary rewrites when performing clustering

SteNicholas commented on PR #7599: URL: https://github.com/apache/hudi/pull/7599#issuecomment-1370516264 @voonhous, you could take a look at the pull request: https://github.com/apache/hudi/pull/7159 . -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #7599: [HUDI-5496] Prevent unnecessary rewrites when performing clustering

hudi-bot commented on PR #7599: URL: https://github.com/apache/hudi/pull/7599#issuecomment-1370500287 ## CI report: * efb347ea9684ccafeb7208fb870c4ff19b300412 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14093) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #7599: [HUDI-5496] Prevent unnecessary rewrites when performing clustering

hudi-bot commented on PR #7599: URL: https://github.com/apache/hudi/pull/7599#issuecomment-1370497402 ## CI report: * efb347ea9684ccafeb7208fb870c4ff19b300412 UNKNOWN Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #7445: [HUDI-5380] Fixing change table path but table location in metastore …

hudi-bot commented on PR #7445: URL: https://github.com/apache/hudi/pull/7445#issuecomment-1370493785 ## CI report: * 36bc81d1f49d09d22eb8ad87d280b0f1f61f4a44 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=13998) Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14025) Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14031) Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14087) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

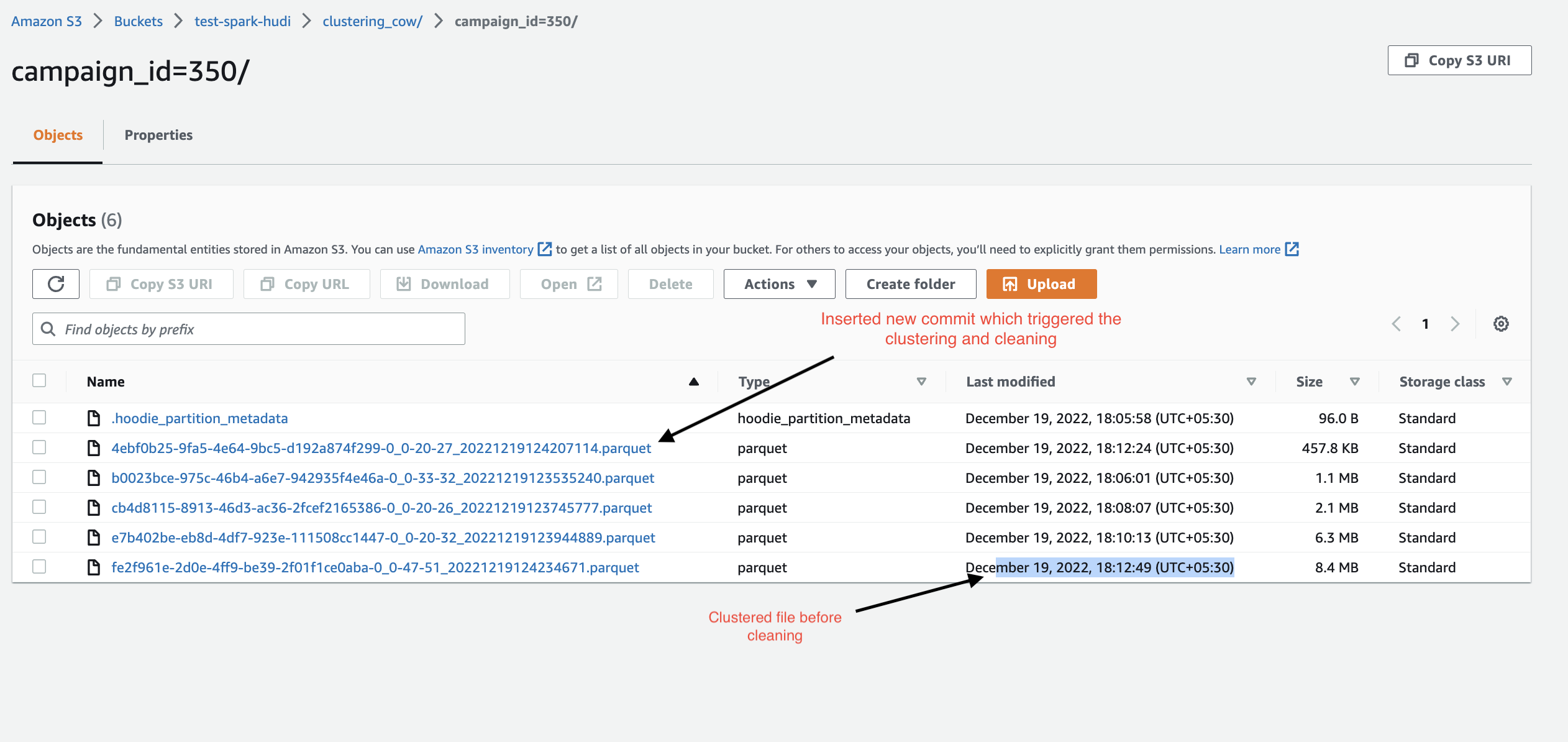

[GitHub] [hudi] maheshguptags commented on issue #7589: Keep only clustered file(all) after cleaning

maheshguptags commented on issue #7589: URL: https://github.com/apache/hudi/issues/7589#issuecomment-1370489489 Hi @yihua ,Thanks for looking into this. you are partially right but I want to preserve all the clustered file from 1 clustered file to till very end of the pipeline. let me give you the example. step 1 image : it contains the 3 commit of the file  step 2 image contain after the clustering file :  step 3 it contains only the clustered file and the latest commit files  step 4 image inserted few more commit then perform the clustering  Step 5 Image : Now this time clustering and cleaning will be triggered (4 commits completed) so it will clean the last cluster file (size exactly 8.4MB) and create new cluster file having all updated data. Whereas my wish is to preserve the old clustered file (8.4MB) file and create new clustered file. This way I will be able to maintain historical data of my process.  I hope I am able to explain the use case, If not we can quick catch up on call. Let me know your thought on this Thanks!! -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] BalaMahesh commented on issue #7595: [SUPPORT] Hudi Clean and Delta commits taking ~50 mins to finish frequently

BalaMahesh commented on issue #7595: URL: https://github.com/apache/hudi/issues/7595#issuecomment-1370487901 This is a non-partitioned table with minimum file size set to 1 MB and ~150 parquet files are created. Below are the screenshots from spark web ui. https://user-images.githubusercontent.com/25053668/210485678-01569009-8b3c-4b62-8d04-7d5149cbdb7b.png;> https://user-images.githubusercontent.com/25053668/210485684-b5314c87-a794-4242-889f-3a7a6623e395.png;> https://user-images.githubusercontent.com/25053668/210485691-d3112ff4-739e-429a-bca1-3abd44394ba6.png;> https://user-images.githubusercontent.com/25053668/210485699-26aeede3-24fa-46ec-9e4f-b07d87b842c9.png;> https://user-images.githubusercontent.com/25053668/210485707-bdd31536-0399-4a89-98b8-2816ed1fdff3.png;> I am adding the logs between 23/01/04 03:48:51 and 23/01/04 04:41:25 - which took longer duration for delta commit. [hudi_logs.txt](https://github.com/apache/hudi/files/10341543/hudi_logs.txt) -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Assigned] (HUDI-5496) Prevent Hudi from generating clustering plans with filegroups consisting of only 1 fileSlice

[

https://issues.apache.org/jira/browse/HUDI-5496?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

voon reassigned HUDI-5496:

--

Assignee: voon

> Prevent Hudi from generating clustering plans with filegroups consisting of

> only 1 fileSlice

>

>

> Key: HUDI-5496

> URL: https://issues.apache.org/jira/browse/HUDI-5496

> Project: Apache Hudi

> Issue Type: Bug

>Reporter: voon

>Assignee: voon

>Priority: Major

> Labels: pull-request-available

>

> Suppose a partition is no longer being written/updated, i.e. there will be no

> changes to the partition, therefore, size of parquet files will always be the

> same.

>

> If the parquet files in the partition (even after prior clustering) is

> smaller than {*}hoodie.clustering.plan.strategy.small.file.limit{*}, the

> fileSlice will always be returned as a candidate for

> {_}getFileSlicesEligibleForClustering(){_}.

>

> This may cause inputGroups with only 1 fileSlice to be selected as candidates

> for clustering. An of a clusteringPlan demonstrating such a case in JSON

> format is seen below.

>

>

> {code:java}

> {

> "inputGroups": [

> {

> "slices": [

> {

> "dataFilePath":

> "/path/clustering_test_table/dt=2023-01-03/cf2929a7-78dc-4e99-be0c-926e9487187d-0_0-2-0_20230104102201656.parquet",

> "deltaFilePaths": [],

> "fileId": "cf2929a7-78dc-4e99-be0c-926e9487187d-0",

> "partitionPath": "dt=2023-01-03",

> "bootstrapFilePath": "",

> "version": 1

> }

> ],

> "metrics": {

> "TOTAL_LOG_FILES": 0.0,

> "TOTAL_IO_MB": 260.0,

> "TOTAL_IO_READ_MB": 130.0,

> "TOTAL_LOG_FILES_SIZE": 0.0,

> "TOTAL_IO_WRITE_MB": 130.0

> },

> "numOutputFileGroups": 1,

> "extraMetadata": null,

> "version": 1

> },

> {

> "slices": [

> {

> "dataFilePath":

> "/path/clustering_test_table/dt=2023-01-04/b101162e-4813-4de6-9881-4ee0ff918f32-0_0-2-0_20230104103401458.parquet",

> "deltaFilePaths": [],

> "fileId": "b101162e-4813-4de6-9881-4ee0ff918f32-0",

> "partitionPath": "dt=2023-01-04",

> "bootstrapFilePath": "",

> "version": 1

> },

> {

> "dataFilePath":

> "/path/clustering_test_table/dt=2023-01-04/9b1c1494-2a58-43f1-890d-4b52070937b1-0_0-2-0_20230104102201656.parquet",

> "deltaFilePaths": [],

> "fileId": "9b1c1494-2a58-43f1-890d-4b52070937b1-0",

> "partitionPath": "dt=2023-01-04",

> "bootstrapFilePath": "",

> "version": 1

> }

> ],

> "metrics": {

> "TOTAL_LOG_FILES": 0.0,

> "TOTAL_IO_MB": 418.0,

> "TOTAL_IO_READ_MB": 209.0,

> "TOTAL_LOG_FILES_SIZE": 0.0,

> "TOTAL_IO_WRITE_MB": 209.0

> },

> "numOutputFileGroups": 1,

> "extraMetadata": null,

> "version": 1

> }

> ],

> "strategy": {

> "strategyClassName":

> "org.apache.hudi.client.clustering.run.strategy.SparkSortAndSizeExecutionStrategy",

> "strategyParams": {},

> "version": 1

> },

> "extraMetadata": {},

> "version": 1,

> "preserveHoodieMetadata": true

> }{code}

>

> Such a case will cause performance issues as a parquet file is re-written

> unnecessarily (write amplification).

>

> The fix is to only select inputGroups with more than 1 fileSlice as

> candidates for clustering.

>

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

[jira] [Updated] (HUDI-5496) Prevent Hudi from generating clustering plans with filegroups consisting of only 1 fileSlice

[

https://issues.apache.org/jira/browse/HUDI-5496?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

ASF GitHub Bot updated HUDI-5496:

-

Labels: pull-request-available (was: )

> Prevent Hudi from generating clustering plans with filegroups consisting of

> only 1 fileSlice

>

>

> Key: HUDI-5496

> URL: https://issues.apache.org/jira/browse/HUDI-5496

> Project: Apache Hudi

> Issue Type: Bug

>Reporter: voon

>Priority: Major

> Labels: pull-request-available

>

> Suppose a partition is no longer being written/updated, i.e. there will be no

> changes to the partition, therefore, size of parquet files will always be the

> same.

>

> If the parquet files in the partition (even after prior clustering) is

> smaller than {*}hoodie.clustering.plan.strategy.small.file.limit{*}, the

> fileSlice will always be returned as a candidate for

> {_}getFileSlicesEligibleForClustering(){_}.

>

> This may cause inputGroups with only 1 fileSlice to be selected as candidates

> for clustering. An of a clusteringPlan demonstrating such a case in JSON

> format is seen below.

>

>

> {code:java}

> {

> "inputGroups": [

> {

> "slices": [

> {

> "dataFilePath":

> "/path/clustering_test_table/dt=2023-01-03/cf2929a7-78dc-4e99-be0c-926e9487187d-0_0-2-0_20230104102201656.parquet",

> "deltaFilePaths": [],

> "fileId": "cf2929a7-78dc-4e99-be0c-926e9487187d-0",

> "partitionPath": "dt=2023-01-03",

> "bootstrapFilePath": "",

> "version": 1

> }

> ],

> "metrics": {

> "TOTAL_LOG_FILES": 0.0,

> "TOTAL_IO_MB": 260.0,

> "TOTAL_IO_READ_MB": 130.0,

> "TOTAL_LOG_FILES_SIZE": 0.0,

> "TOTAL_IO_WRITE_MB": 130.0

> },

> "numOutputFileGroups": 1,

> "extraMetadata": null,

> "version": 1

> },

> {

> "slices": [

> {

> "dataFilePath":

> "/path/clustering_test_table/dt=2023-01-04/b101162e-4813-4de6-9881-4ee0ff918f32-0_0-2-0_20230104103401458.parquet",

> "deltaFilePaths": [],

> "fileId": "b101162e-4813-4de6-9881-4ee0ff918f32-0",

> "partitionPath": "dt=2023-01-04",

> "bootstrapFilePath": "",

> "version": 1

> },

> {

> "dataFilePath":

> "/path/clustering_test_table/dt=2023-01-04/9b1c1494-2a58-43f1-890d-4b52070937b1-0_0-2-0_20230104102201656.parquet",

> "deltaFilePaths": [],

> "fileId": "9b1c1494-2a58-43f1-890d-4b52070937b1-0",

> "partitionPath": "dt=2023-01-04",

> "bootstrapFilePath": "",

> "version": 1

> }

> ],

> "metrics": {

> "TOTAL_LOG_FILES": 0.0,

> "TOTAL_IO_MB": 418.0,

> "TOTAL_IO_READ_MB": 209.0,

> "TOTAL_LOG_FILES_SIZE": 0.0,

> "TOTAL_IO_WRITE_MB": 209.0

> },

> "numOutputFileGroups": 1,

> "extraMetadata": null,

> "version": 1

> }

> ],

> "strategy": {

> "strategyClassName":

> "org.apache.hudi.client.clustering.run.strategy.SparkSortAndSizeExecutionStrategy",

> "strategyParams": {},

> "version": 1

> },

> "extraMetadata": {},

> "version": 1,

> "preserveHoodieMetadata": true

> }{code}

>

> Such a case will cause performance issues as a parquet file is re-written

> unnecessarily (write amplification).

>

> The fix is to only select inputGroups with more than 1 fileSlice as

> candidates for clustering.

>

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

[GitHub] [hudi] voonhous opened a new pull request, #7599: [HUDI-5496] Prevent unnecessary rewrites when performing clustering

voonhous opened a new pull request, #7599: URL: https://github.com/apache/hudi/pull/7599 ### Change Logs Prevent unnecessary rewrites when performing clustering by only selecting _HoodieClusteringGroup_s with more than 1 fileSlice as candidates for clustering. ### Impact _Describe any public API or user-facing feature change or any performance impact._ None ### Risk level (write none, low medium or high below) None _If medium or high, explain what verification was done to mitigate the risks._ ### Documentation Update _Describe any necessary documentation update if there is any new feature, config, or user-facing change_ - _The config description must be updated if new configs are added or the default value of the configs are changed_ - _Any new feature or user-facing change requires updating the Hudi website. Please create a Jira ticket, attach the ticket number here and follow the [instruction](https://hudi.apache.org/contribute/developer-setup#website) to make changes to the website._ ### Contributor's checklist - [ ] Read through [contributor's guide](https://hudi.apache.org/contribute/how-to-contribute) - [ ] Change Logs and Impact were stated clearly - [ ] Adequate tests were added if applicable - [ ] CI passed -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (HUDI-5496) Prevent Hudi from generating clustering plans with filegroups consisting of only 1 fileSlice

[

https://issues.apache.org/jira/browse/HUDI-5496?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

voon updated HUDI-5496:

---

Description:

Suppose a partition is no longer being written/updated, i.e. there will be no

changes to the partition, therefore, size of parquet files will always be the

same.

If the parquet files in the partition (even after prior clustering) is smaller

than {*}hoodie.clustering.plan.strategy.small.file.limit{*}, the fileSlice will

always be returned as a candidate for

{_}getFileSlicesEligibleForClustering(){_}.

This may cause inputGroups with only 1 fileSlice to be selected as candidates

for clustering. An of a clusteringPlan demonstrating such a case in JSON format

is seen below.

{code:java}

{

"inputGroups": [

{

"slices": [

{

"dataFilePath":

"/path/clustering_test_table/dt=2023-01-03/cf2929a7-78dc-4e99-be0c-926e9487187d-0_0-2-0_20230104102201656.parquet",

"deltaFilePaths": [],

"fileId": "cf2929a7-78dc-4e99-be0c-926e9487187d-0",

"partitionPath": "dt=2023-01-03",

"bootstrapFilePath": "",

"version": 1

}

],

"metrics": {

"TOTAL_LOG_FILES": 0.0,

"TOTAL_IO_MB": 260.0,

"TOTAL_IO_READ_MB": 130.0,

"TOTAL_LOG_FILES_SIZE": 0.0,

"TOTAL_IO_WRITE_MB": 130.0

},

"numOutputFileGroups": 1,

"extraMetadata": null,

"version": 1

},

{

"slices": [

{

"dataFilePath":

"/path/clustering_test_table/dt=2023-01-04/b101162e-4813-4de6-9881-4ee0ff918f32-0_0-2-0_20230104103401458.parquet",

"deltaFilePaths": [],

"fileId": "b101162e-4813-4de6-9881-4ee0ff918f32-0",

"partitionPath": "dt=2023-01-04",

"bootstrapFilePath": "",

"version": 1

},

{

"dataFilePath":

"/path/clustering_test_table/dt=2023-01-04/9b1c1494-2a58-43f1-890d-4b52070937b1-0_0-2-0_20230104102201656.parquet",

"deltaFilePaths": [],

"fileId": "9b1c1494-2a58-43f1-890d-4b52070937b1-0",

"partitionPath": "dt=2023-01-04",

"bootstrapFilePath": "",

"version": 1

}

],

"metrics": {

"TOTAL_LOG_FILES": 0.0,

"TOTAL_IO_MB": 418.0,

"TOTAL_IO_READ_MB": 209.0,

"TOTAL_LOG_FILES_SIZE": 0.0,

"TOTAL_IO_WRITE_MB": 209.0

},

"numOutputFileGroups": 1,

"extraMetadata": null,

"version": 1

}

],

"strategy": {

"strategyClassName":

"org.apache.hudi.client.clustering.run.strategy.SparkSortAndSizeExecutionStrategy",

"strategyParams": {},

"version": 1

},

"extraMetadata": {},

"version": 1,

"preserveHoodieMetadata": true

}{code}

Such a case will cause performance issues as a parquet file is re-written

unnecessarily (write amplification).

The fix is to only select inputGroups with more than 1 fileSlice as candidates

for clustering.

was:

Suppose a partition is no longer being written/updated, i.e. there will be no

changes to the partition, therefore, size of parquet files will always be the

same.

If the parquet files in the partition (even after prior clustering) is smaller

than {*}hoodie.clustering.plan.strategy.small.file.limit{*}, ** the fileSlice

will always be returned as a candidate for

{_}getFileSlicesEligibleForClustering(){_}.

This may cause inputGroups with only 1 fileSlice to be selected as candidates

for clustering. An of a clusteringPlan demonstrating such a case in JSON format

is seen below.

{code:java}

{

"inputGroups": [

{

"slices": [

{

"dataFilePath":

"/path/clustering_test_table/dt=2023-01-03/cf2929a7-78dc-4e99-be0c-926e9487187d-0_0-2-0_20230104102201656.parquet",

"deltaFilePaths": [],

"fileId": "cf2929a7-78dc-4e99-be0c-926e9487187d-0",

"partitionPath": "dt=2023-01-03",

"bootstrapFilePath": "",

"version": 1

}

],

"metrics": {

"TOTAL_LOG_FILES": 0.0,

"TOTAL_IO_MB": 260.0,

"TOTAL_IO_READ_MB": 130.0,

"TOTAL_LOG_FILES_SIZE": 0.0,

"TOTAL_IO_WRITE_MB": 130.0

},

"numOutputFileGroups": 1,

"extraMetadata": null,

"version": 1

},

{

"slices": [

{

"dataFilePath":

"/path/clustering_test_table/dt=2023-01-04/b101162e-4813-4de6-9881-4ee0ff918f32-0_0-2-0_20230104103401458.parquet",

"deltaFilePaths": [],

"fileId": "b101162e-4813-4de6-9881-4ee0ff918f32-0",

"partitionPath": "dt=2023-01-04",

"bootstrapFilePath": "",

"version": 1

},

{

"dataFilePath":

"/path/clustering_test_table/dt=2023-01-04/9b1c1494-2a58-43f1-890d-4b52070937b1-0_0-2-0_20230104102201656.parquet",

"deltaFilePaths": [],

"fileId": "9b1c1494-2a58-43f1-890d-4b52070937b1-0",

"partitionPath":

[jira] [Created] (HUDI-5496) Prevent Hudi from generating clustering plans with filegroups consisting of only 1 fileSlice

voon created HUDI-5496:

--

Summary: Prevent Hudi from generating clustering plans with

filegroups consisting of only 1 fileSlice

Key: HUDI-5496

URL: https://issues.apache.org/jira/browse/HUDI-5496

Project: Apache Hudi

Issue Type: Bug

Reporter: voon

Suppose a partition is no longer being written/updated, i.e. there will be no

changes to the partition, therefore, size of parquet files will always be the

same.

If the parquet files in the partition (even after prior clustering) is smaller

than {*}hoodie.clustering.plan.strategy.small.file.limit{*}, ** the fileSlice

will always be returned as a candidate for

{_}getFileSlicesEligibleForClustering(){_}.

This may cause inputGroups with only 1 fileSlice to be selected as candidates

for clustering. An of a clusteringPlan demonstrating such a case in JSON format

is seen below.

{code:java}

{

"inputGroups": [

{

"slices": [

{

"dataFilePath":

"/path/clustering_test_table/dt=2023-01-03/cf2929a7-78dc-4e99-be0c-926e9487187d-0_0-2-0_20230104102201656.parquet",

"deltaFilePaths": [],

"fileId": "cf2929a7-78dc-4e99-be0c-926e9487187d-0",

"partitionPath": "dt=2023-01-03",

"bootstrapFilePath": "",

"version": 1

}

],

"metrics": {

"TOTAL_LOG_FILES": 0.0,

"TOTAL_IO_MB": 260.0,

"TOTAL_IO_READ_MB": 130.0,

"TOTAL_LOG_FILES_SIZE": 0.0,

"TOTAL_IO_WRITE_MB": 130.0

},

"numOutputFileGroups": 1,

"extraMetadata": null,

"version": 1

},

{

"slices": [

{

"dataFilePath":

"/path/clustering_test_table/dt=2023-01-04/b101162e-4813-4de6-9881-4ee0ff918f32-0_0-2-0_20230104103401458.parquet",

"deltaFilePaths": [],

"fileId": "b101162e-4813-4de6-9881-4ee0ff918f32-0",

"partitionPath": "dt=2023-01-04",

"bootstrapFilePath": "",

"version": 1

},

{

"dataFilePath":

"/path/clustering_test_table/dt=2023-01-04/9b1c1494-2a58-43f1-890d-4b52070937b1-0_0-2-0_20230104102201656.parquet",

"deltaFilePaths": [],

"fileId": "9b1c1494-2a58-43f1-890d-4b52070937b1-0",

"partitionPath": "dt=2023-01-04",

"bootstrapFilePath": "",

"version": 1

}

],

"metrics": {

"TOTAL_LOG_FILES": 0.0,

"TOTAL_IO_MB": 418.0,

"TOTAL_IO_READ_MB": 209.0,

"TOTAL_LOG_FILES_SIZE": 0.0,

"TOTAL_IO_WRITE_MB": 209.0

},

"numOutputFileGroups": 1,

"extraMetadata": null,

"version": 1

}

],

"strategy": {

"strategyClassName":

"org.apache.hudi.client.clustering.run.strategy.SparkSortAndSizeExecutionStrategy",

"strategyParams": {},

"version": 1

},

"extraMetadata": {},

"version": 1,

"preserveHoodieMetadata": true

}{code}

Such a case will cause performance issues as a parquet file is re-written

unnecessarily (write amplification).

The fix is to only select inputGroups with more than 1 fileSlice as candidates

for clustering.

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

[GitHub] [hudi] hudi-bot commented on pull request #7598: [HUDI-5495] add some property to table config

hudi-bot commented on PR #7598: URL: https://github.com/apache/hudi/pull/7598#issuecomment-1370458184 ## CI report: * 2c35d032539bd064a57a1e25f062e5f93dceeccd Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14092) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #7593: [HUDI-5492] spark call command show_compaction doesn't return the com…

hudi-bot commented on PR #7593: URL: https://github.com/apache/hudi/pull/7593#issuecomment-1370458167 ## CI report: * 8dac276274844f65a48d2e877a3cb1ed1d4ec3e3 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14079) * eebec335595a73242a23d682c401b5b5c5d54284 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14091) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #7528: [HUDI-5443] Fixing exception trying to read MOR table after `NestedSchemaPruning` rule has been applied

hudi-bot commented on PR #7528: URL: https://github.com/apache/hudi/pull/7528#issuecomment-1370458072 ## CI report: * f3a439884f90500e29da0075f4d0ad7d73a484b3 UNKNOWN * 636b3000094521146d90c541b8cfd3b4ee6e Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=13929) * 801223083980fed8f400a34588df98cca453e439 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14088) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #6782: [HUDI-4911][HUDI-3301] Fixing `HoodieMetadataLogRecordReader` to avoid flushing cache for every lookup

hudi-bot commented on PR #6782: URL: https://github.com/apache/hudi/pull/6782#issuecomment-1370457674 ## CI report: * 0a57ee15196e2b6978dbf74efad95cd58f6e7f13 Azure: [CANCELED](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=13923) Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=13931) * 182351f81d81cbfa9be87fa2e13d5d58a4d7bec4 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14090) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #7598: [HUDI-5495] add some property to table config

hudi-bot commented on PR #7598: URL: https://github.com/apache/hudi/pull/7598#issuecomment-1370455843 ## CI report: * 2c35d032539bd064a57a1e25f062e5f93dceeccd UNKNOWN Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #7593: [HUDI-5492] spark call command show_compaction doesn't return the com…

hudi-bot commented on PR #7593: URL: https://github.com/apache/hudi/pull/7593#issuecomment-1370455818 ## CI report: * 8dac276274844f65a48d2e877a3cb1ed1d4ec3e3 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14079) * eebec335595a73242a23d682c401b5b5c5d54284 UNKNOWN Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #7528: [HUDI-5443] Fixing exception trying to read MOR table after `NestedSchemaPruning` rule has been applied

hudi-bot commented on PR #7528: URL: https://github.com/apache/hudi/pull/7528#issuecomment-1370455694 ## CI report: * f3a439884f90500e29da0075f4d0ad7d73a484b3 UNKNOWN * 636b3000094521146d90c541b8cfd3b4ee6e Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=13929) * 801223083980fed8f400a34588df98cca453e439 UNKNOWN Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #6782: [HUDI-4911][HUDI-3301] Fixing `HoodieMetadataLogRecordReader` to avoid flushing cache for every lookup

hudi-bot commented on PR #6782: URL: https://github.com/apache/hudi/pull/6782#issuecomment-1370455241 ## CI report: * 0a57ee15196e2b6978dbf74efad95cd58f6e7f13 Azure: [CANCELED](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=13923) Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=13931) * 182351f81d81cbfa9be87fa2e13d5d58a4d7bec4 UNKNOWN Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #7573: [HUDI-5484] Avoid using GenericRecord in ColumnStatMetadata

hudi-bot commented on PR #7573: URL: https://github.com/apache/hudi/pull/7573#issuecomment-1370453158 ## CI report: * 1ac267ba9af690ecd47f74f60c34851387aee9eb Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14080) Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14083) Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14089) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[hudi] 41/45: fix read log not exist

This is an automated email from the ASF dual-hosted git repository.

forwardxu pushed a commit to branch release-0.12.1

in repository https://gitbox.apache.org/repos/asf/hudi.git

commit 4fe2aec44acefc7ec857836b54af69dce5f41bda

Author: XuQianJin-Stars

AuthorDate: Tue Dec 13 14:52:03 2022 +0800

fix read log not exist

---

.../org/apache/hudi/common/table/log/HoodieLogFormatReader.java | 8 ++--

1 file changed, 6 insertions(+), 2 deletions(-)

diff --git

a/hudi-common/src/main/java/org/apache/hudi/common/table/log/HoodieLogFormatReader.java

b/hudi-common/src/main/java/org/apache/hudi/common/table/log/HoodieLogFormatReader.java

index c48107e392..7f67c76870 100644

---

a/hudi-common/src/main/java/org/apache/hudi/common/table/log/HoodieLogFormatReader.java

+++

b/hudi-common/src/main/java/org/apache/hudi/common/table/log/HoodieLogFormatReader.java

@@ -67,8 +67,12 @@ public class HoodieLogFormatReader implements

HoodieLogFormat.Reader {

this.internalSchema = internalSchema == null ?

InternalSchema.getEmptyInternalSchema() : internalSchema;

if (logFiles.size() > 0) {

HoodieLogFile nextLogFile = logFiles.remove(0);

- this.currentReader = new HoodieLogFileReader(fs, nextLogFile,

readerSchema, bufferSize, readBlocksLazily, false,

- enableRecordLookups, recordKeyField, internalSchema);

+ if (fs.exists(nextLogFile.getPath())) {

+this.currentReader = new HoodieLogFileReader(fs, nextLogFile,

readerSchema, bufferSize, readBlocksLazily, false,

+enableRecordLookups, recordKeyField, internalSchema);

+ } else {

+LOG.warn("File does not exist: " + nextLogFile.getPath());

+ }

}

}

[hudi] 16/45: [HUDI-2624] Implement Non Index type for HUDI

This is an automated email from the ASF dual-hosted git repository.

forwardxu pushed a commit to branch release-0.12.1

in repository https://gitbox.apache.org/repos/asf/hudi.git

commit 8ba01dc70a718ddab3044b45f96a545f0aae4084

Author: XuQianJin-Stars

AuthorDate: Fri Oct 28 12:33:22 2022 +0800

[HUDI-2624] Implement Non Index type for HUDI

---

.../org/apache/hudi/config/HoodieIndexConfig.java | 43

.../org/apache/hudi/config/HoodieWriteConfig.java | 12 +

.../java/org/apache/hudi/index/HoodieIndex.java| 2 +-

.../java/org/apache/hudi/io/HoodieMergeHandle.java | 3 +-

.../apache/hudi/keygen/EmptyAvroKeyGenerator.java | 65 +

.../hudi/table/action/commit/BucketInfo.java | 4 +

.../hudi/table/action/commit/BucketType.java | 2 +-

.../apache/hudi/index/FlinkHoodieIndexFactory.java | 2 +

.../org/apache/hudi/index/FlinkHoodieNonIndex.java | 65 +

.../apache/hudi/index/SparkHoodieIndexFactory.java | 3 +

.../hudi/index/nonindex/SparkHoodieNonIndex.java | 73 ++

.../hudi/io/storage/row/HoodieRowCreateHandle.java | 5 +-

.../org/apache/hudi/keygen/EmptyKeyGenerator.java | 80 +++

.../commit/BaseSparkCommitActionExecutor.java | 17 ++

.../table/action/commit/UpsertPartitioner.java | 35 ++-

.../org/apache/hudi/common/model/FileSlice.java| 13 +

.../org/apache/hudi/common/model/HoodieKey.java| 2 +

.../table/log/HoodieMergedLogRecordScanner.java| 3 +-

.../apache/hudi/configuration/OptionsResolver.java | 4 +

.../org/apache/hudi/sink/StreamWriteFunction.java | 32 ++-

.../hudi/sink/StreamWriteOperatorCoordinator.java | 5 +

.../sink/nonindex/NonIndexStreamWriteFunction.java | 265 +

.../sink/nonindex/NonIndexStreamWriteOperator.java | 25 +-

.../java/org/apache/hudi/sink/utils/Pipelines.java | 7 +

.../org/apache/hudi/table/HoodieTableFactory.java | 12 +

.../org/apache/hudi/sink/TestWriteMergeOnRead.java | 54 +

.../hudi/sink/utils/InsertFunctionWrapper.java | 6 +

.../sink/utils/StreamWriteFunctionWrapper.java | 23 +-

.../hudi/sink/utils/TestFunctionWrapper.java | 6 +

.../org/apache/hudi/sink/utils/TestWriteBase.java | 48

.../test/java/org/apache/hudi/utils/TestData.java | 34 +++

.../test/scala/org/apache/hudi/TestNonIndex.scala | 110 +

32 files changed, 1030 insertions(+), 30 deletions(-)

diff --git

a/hudi-client/hudi-client-common/src/main/java/org/apache/hudi/config/HoodieIndexConfig.java

b/hudi-client/hudi-client-common/src/main/java/org/apache/hudi/config/HoodieIndexConfig.java

index ee5b83a43a..b5edaf4abc 100644

---

a/hudi-client/hudi-client-common/src/main/java/org/apache/hudi/config/HoodieIndexConfig.java

+++

b/hudi-client/hudi-client-common/src/main/java/org/apache/hudi/config/HoodieIndexConfig.java

@@ -270,6 +270,34 @@ public class HoodieIndexConfig extends HoodieConfig {

.withDocumentation("Index key. It is used to index the record and find

its file group. "

+ "If not set, use record key field as default");

+ /**

+ * public static final String

NON_INDEX_PARTITION_FILE_GROUP_CACHE_INTERVAL_MINUTE =

"hoodie.non.index.partition.file.group.cache.interval.minute"; //minutes

+ * public static final String

DEFAULT_NON_INDEX_PARTITION_FILE_GROUP_CACHE_INTERVAL_MINUTE = "1800";

+ *

+ * public static final String NON_INDEX_PARTITION_FILE_GROUP_STORAGE_TYPE

= "hoodie.non.index.partition.file.group.storage.type";

+ * public static final String

DEFAULT_NON_INDEX_PARTITION_FILE_GROUP_CACHE_STORAGE_TYPE = "IN_MEMORY";

+ *

+ * public static final String NON_INDEX_PARTITION_FILE_GROUP_CACHE_SIZE =

"hoodie.non.index.partition.file.group.cache.size"; //byte

+ * public static final String

DEFAULT_NON_INDEX_PARTITION_FILE_GROUP_CACHE_SIZE = String.valueOf(1048576000);

+ */

+ public static final ConfigProperty

NON_INDEX_PARTITION_FILE_GROUP_CACHE_INTERVAL_MINUTE = ConfigProperty

+ .key("hoodie.non.index.partition.file.group.cache.interval.minute")

+ .defaultValue(1800)

+ .withDocumentation("Only applies if index type is BUCKET. Determine the

number of buckets in the hudi table, "

+ + "and each partition is divided to N buckets.");

+

+ public static final ConfigProperty

NON_INDEX_PARTITION_FILE_GROUP_STORAGE_TYPE = ConfigProperty

+ .key("hoodie.non.index.partition.file.group.storage.type")

+ .defaultValue("IN_MEMORY")

+ .withDocumentation("Only applies if index type is BUCKET. Determine the

number of buckets in the hudi table, "

+ + "and each partition is divided to N buckets.");

+

+ public static final ConfigProperty

NON_INDEX_PARTITION_FILE_GROUP_CACHE_SIZE = ConfigProperty

+ .key("hoodie.non.index.partition.file.group.cache.size")

+ .defaultValue(1024 * 1024 * 1024L)

+ .withDocumentation("Only applies if index type is BUCKET. Determine the

number of buckets in the hudi table, "

+ + "and each partition is

[hudi] 22/45: [HUDI-4898] presto/hive respect payload during merge parquet file and logfile when reading mor table (#6741)

This is an automated email from the ASF dual-hosted git repository.

forwardxu pushed a commit to branch release-0.12.1

in repository https://gitbox.apache.org/repos/asf/hudi.git

commit 738e2cce8f437ed3a2f7fc474e4143bb42fbad22

Author: xiarixiaoyao

AuthorDate: Thu Nov 3 23:11:39 2022 +0800

[HUDI-4898] presto/hive respect payload during merge parquet file and

logfile when reading mor table (#6741)

* [HUDI-4898] presto/hive respect payload during merge parquet file and

logfile when reading mor table

* Update HiveAvroSerializer.java otherwise payload string type combine

field will cause cast exception

(cherry picked from commit cd314b8cfa58c32f731f7da2aa6377a09df4c6f9)

---

.../realtime/AbstractRealtimeRecordReader.java | 72 +++-

.../realtime/HoodieHFileRealtimeInputFormat.java | 2 +-

.../realtime/HoodieParquetRealtimeInputFormat.java | 14 +-

.../realtime/RealtimeCompactedRecordReader.java| 25 +-

.../hudi/hadoop/utils/HiveAvroSerializer.java | 409 +

.../utils/HoodieRealtimeInputFormatUtils.java | 19 +-

.../utils/HoodieRealtimeRecordReaderUtils.java | 5 +

.../hudi/hadoop/utils/TestHiveAvroSerializer.java | 148

8 files changed, 678 insertions(+), 16 deletions(-)

diff --git

a/hudi-hadoop-mr/src/main/java/org/apache/hudi/hadoop/realtime/AbstractRealtimeRecordReader.java

b/hudi-hadoop-mr/src/main/java/org/apache/hudi/hadoop/realtime/AbstractRealtimeRecordReader.java

index dfdda9dfc8..83b69812e1 100644

---

a/hudi-hadoop-mr/src/main/java/org/apache/hudi/hadoop/realtime/AbstractRealtimeRecordReader.java

+++

b/hudi-hadoop-mr/src/main/java/org/apache/hudi/hadoop/realtime/AbstractRealtimeRecordReader.java

@@ -18,26 +18,34 @@

package org.apache.hudi.hadoop.realtime;

-import org.apache.hudi.common.model.HoodieAvroPayload;

-import org.apache.hudi.common.model.HoodiePayloadProps;

-import org.apache.hudi.common.table.HoodieTableMetaClient;

-import org.apache.hudi.exception.HoodieException;

-import org.apache.hudi.common.table.TableSchemaResolver;

-import org.apache.hudi.hadoop.utils.HoodieRealtimeRecordReaderUtils;

-

import org.apache.avro.Schema;

import org.apache.avro.Schema.Field;

import org.apache.hadoop.hive.metastore.api.hive_metastoreConstants;

+import org.apache.hadoop.hive.ql.io.parquet.serde.ArrayWritableObjectInspector;

+import org.apache.hadoop.hive.serde.serdeConstants;

import org.apache.hadoop.hive.serde2.ColumnProjectionUtils;

+import org.apache.hadoop.hive.serde2.typeinfo.StructTypeInfo;

+import org.apache.hadoop.hive.serde2.typeinfo.TypeInfo;

+import org.apache.hadoop.hive.serde2.typeinfo.TypeInfoFactory;

+import org.apache.hadoop.hive.serde2.typeinfo.TypeInfoUtils;

import org.apache.hadoop.mapred.JobConf;

+import org.apache.hudi.common.model.HoodieAvroPayload;

+import org.apache.hudi.common.model.HoodiePayloadProps;

+import org.apache.hudi.common.table.HoodieTableMetaClient;

+import org.apache.hudi.common.table.TableSchemaResolver;

+import org.apache.hudi.exception.HoodieException;

+import org.apache.hudi.hadoop.utils.HiveAvroSerializer;

+import org.apache.hudi.hadoop.utils.HoodieRealtimeRecordReaderUtils;

import org.apache.log4j.LogManager;

import org.apache.log4j.Logger;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.List;

+import java.util.Locale;

import java.util.Map;

import java.util.Properties;

+import java.util.Set;

import java.util.stream.Collectors;

/**

@@ -55,6 +63,10 @@ public abstract class AbstractRealtimeRecordReader {

private Schema writerSchema;

private Schema hiveSchema;

private HoodieTableMetaClient metaClient;

+ // support merge operation

+ protected boolean supportPayload = true;

+ // handle hive type to avro record

+ protected HiveAvroSerializer serializer;

public AbstractRealtimeRecordReader(RealtimeSplit split, JobConf job) {

this.split = split;

@@ -62,6 +74,7 @@ public abstract class AbstractRealtimeRecordReader {

LOG.info("cfg ==> " +

job.get(ColumnProjectionUtils.READ_COLUMN_NAMES_CONF_STR));

LOG.info("columnIds ==> " +

job.get(ColumnProjectionUtils.READ_COLUMN_IDS_CONF_STR));

LOG.info("partitioningColumns ==> " +

job.get(hive_metastoreConstants.META_TABLE_PARTITION_COLUMNS, ""));

+this.supportPayload =

Boolean.parseBoolean(job.get("hoodie.support.payload", "true"));

try {

metaClient =

HoodieTableMetaClient.builder().setConf(jobConf).setBasePath(split.getBasePath()).build();

if (metaClient.getTableConfig().getPreCombineField() != null) {

@@ -73,6 +86,7 @@ public abstract class AbstractRealtimeRecordReader {

} catch (Exception e) {

throw new HoodieException("Could not create HoodieRealtimeRecordReader

on path " + this.split.getPath(), e);

}

+prepareHiveAvroSerializer();

}

private boolean usesCustomPayload(HoodieTableMetaClient metaClient) {

@@ -80,6 +94,34 @@ public abstract class AbstractRealtimeRecordReader {

||

[hudi] 20/45: remove hudi-kafka-connect module

This is an automated email from the ASF dual-hosted git repository. forwardxu pushed a commit to branch release-0.12.1 in repository https://gitbox.apache.org/repos/asf/hudi.git commit 5f6d6ae42d4a4be1396c4cc585c503b1c23e9deb Author: XuQianJin-Stars AuthorDate: Wed Nov 2 14:44:30 2022 +0800 remove hudi-kafka-connect module --- pom.xml | 4 ++-- 1 file changed, 2 insertions(+), 2 deletions(-) diff --git a/pom.xml b/pom.xml index 0adb64838b..01be2c1f89 100644 --- a/pom.xml +++ b/pom.xml @@ -58,9 +58,9 @@ packaging/hudi-trino-bundle hudi-examples hudi-flink-datasource -hudi-kafka-connect + packaging/hudi-flink-bundle -packaging/hudi-kafka-connect-bundle + hudi-tests-common

[hudi] 06/45: [MINOR] fix Invalid value for YearOfEra

This is an automated email from the ASF dual-hosted git repository.

forwardxu pushed a commit to branch release-0.12.1

in repository https://gitbox.apache.org/repos/asf/hudi.git

commit c1ceb628e576dd50f9c3bdf1ab830dcf61f70296

Author: XuQianJin-Stars

AuthorDate: Sun Oct 23 17:35:58 2022 +0800

[MINOR] fix Invalid value for YearOfEra

---

.../apache/hudi/client/BaseHoodieWriteClient.java | 28 ++

.../apache/hudi/client/SparkRDDWriteClient.java| 22 +

.../table/timeline/HoodieActiveTimeline.java | 18 ++

3 files changed, 58 insertions(+), 10 deletions(-)

diff --git

a/hudi-client/hudi-client-common/src/main/java/org/apache/hudi/client/BaseHoodieWriteClient.java

b/hudi-client/hudi-client-common/src/main/java/org/apache/hudi/client/BaseHoodieWriteClient.java

index d9f260e633..ff500a617e 100644

---

a/hudi-client/hudi-client-common/src/main/java/org/apache/hudi/client/BaseHoodieWriteClient.java

+++

b/hudi-client/hudi-client-common/src/main/java/org/apache/hudi/client/BaseHoodieWriteClient.java

@@ -104,6 +104,7 @@ import org.apache.log4j.Logger;

import java.io.IOException;

import java.nio.charset.StandardCharsets;

+import java.text.ParseException;

import java.util.Collection;

import java.util.Collections;

import java.util.HashMap;

@@ -115,6 +116,7 @@ import java.util.stream.Collectors;

import java.util.stream.Stream;

import static org.apache.hudi.common.model.HoodieCommitMetadata.SCHEMA_KEY;

+import static org.apache.hudi.common.model.TableServiceType.CLEAN;

/**

* Abstract Write Client providing functionality for performing commit, index

updates and rollback

@@ -306,14 +308,20 @@ public abstract class BaseHoodieWriteClient createTable(HoodieWriteConfig

config, Configuration hadoopConf);

void emitCommitMetrics(String instantTime, HoodieCommitMetadata metadata,

String actionType) {

-if (writeTimer != null) {

- long durationInMs = metrics.getDurationInMs(writeTimer.stop());

- // instantTime could be a non-standard value, so use

`parseDateFromInstantTimeSafely`

- // e.g. INIT_INSTANT_TS, METADATA_BOOTSTRAP_INSTANT_TS and

FULL_BOOTSTRAP_INSTANT_TS in HoodieTimeline

-

HoodieActiveTimeline.parseDateFromInstantTimeSafely(instantTime).ifPresent(parsedInstant

->

- metrics.updateCommitMetrics(parsedInstant.getTime(), durationInMs,

metadata, actionType)

- );

- writeTimer = null;

+try {

+ if (writeTimer != null) {

+long durationInMs = metrics.getDurationInMs(writeTimer.stop());

+long commitEpochTimeInMs = 0;

+if (HoodieActiveTimeline.checkDateTime(instantTime)) {

+ commitEpochTimeInMs =

HoodieActiveTimeline.parseDateFromInstantTime(instantTime).getTime();

+}

+metrics.updateCommitMetrics(commitEpochTimeInMs, durationInMs,

+metadata, actionType);

+writeTimer = null;

+ }

+} catch (ParseException e) {

+ throw new HoodieCommitException("Failed to complete commit " +

config.getBasePath() + " at time " + instantTime

+ + "Instant time is not of valid format", e);

}

}

@@ -862,7 +870,7 @@ public abstract class BaseHoodieWriteClient> extraMetadata) throws HoodieIOException {

-return scheduleTableService(instantTime, extraMetadata,

TableServiceType.CLEAN).isPresent();

+return scheduleTableService(instantTime, extraMetadata, CLEAN).isPresent();

}

/**

diff --git

a/hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/client/SparkRDDWriteClient.java

b/hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/client/SparkRDDWriteClient.java

index 7110e26bb0..32c4a0a06d 100644

---

a/hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/client/SparkRDDWriteClient.java

+++

b/hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/client/SparkRDDWriteClient.java

@@ -45,6 +45,7 @@ import org.apache.hudi.common.util.collection.Pair;

import org.apache.hudi.config.HoodieWriteConfig;

import org.apache.hudi.data.HoodieJavaRDD;

import org.apache.hudi.exception.HoodieClusteringException;

+import org.apache.hudi.exception.HoodieCommitException;

import org.apache.hudi.exception.HoodieWriteConflictException;

import org.apache.hudi.index.HoodieIndex;

import org.apache.hudi.index.SparkHoodieIndexFactory;

@@ -68,6 +69,7 @@ import org.apache.spark.api.java.JavaRDD;

import org.apache.spark.api.java.JavaSparkContext;

import java.nio.charset.StandardCharsets;

+import java.text.ParseException;

import java.util.List;

import java.util.Map;

import java.util.stream.Collectors;

@@ -324,6 +326,16 @@ public class SparkRDDWriteClient extends

HoodieActiveTimeline.parseDateFromInstantTimeSafely(compactionCommitTime).ifPresent(parsedInstant

->

metrics.updateCommitMetrics(parsedInstant.getTime(), durationInMs,

metadata, HoodieActiveTimeline.COMPACTION_ACTION)

);

+ try {

+long commitEpochTimeInMs = 0;

+if

[hudi] 28/45: [HUDI-5095] Flink: Stores a special watermark(flag) to identify the current progress of writing data

This is an automated email from the ASF dual-hosted git repository.

forwardxu pushed a commit to branch release-0.12.1

in repository https://gitbox.apache.org/repos/asf/hudi.git

commit 005e913403824fc6d5494bbefe8a370712656782

Author: XuQianJin-Stars

AuthorDate: Thu Nov 24 13:17:21 2022 +0800

[HUDI-5095] Flink: Stores a special watermark(flag) to identify the current

progress of writing data

---

.../hudi/sink/StreamWriteOperatorCoordinator.java | 21 ++---

1 file changed, 10 insertions(+), 11 deletions(-)

diff --git

a/hudi-flink-datasource/hudi-flink/src/main/java/org/apache/hudi/sink/StreamWriteOperatorCoordinator.java

b/hudi-flink-datasource/hudi-flink/src/main/java/org/apache/hudi/sink/StreamWriteOperatorCoordinator.java

index 578bb10db5..4a3674ec29 100644

---

a/hudi-flink-datasource/hudi-flink/src/main/java/org/apache/hudi/sink/StreamWriteOperatorCoordinator.java

+++

b/hudi-flink-datasource/hudi-flink/src/main/java/org/apache/hudi/sink/StreamWriteOperatorCoordinator.java

@@ -511,28 +511,27 @@ public class StreamWriteOperatorCoordinator

}

setMinEventTime();

doCommit(instant, writeResults);

-resetMinEventTime();

return true;

}

public void setMinEventTime() {

if (commitEventTimeEnable) {

- LOG.info("[setMinEventTime] receive event time for current commit: {} ",

Arrays.stream(eventBuffer).map(WriteMetadataEvent::getMaxEventTime).map(String::valueOf)

- .collect(Collectors.joining(", ")));

- this.minEventTime = Arrays.stream(eventBuffer)

+ List eventTimes = Arrays.stream(eventBuffer)

.filter(Objects::nonNull)

- .filter(maxEventTime -> maxEventTime.getMaxEventTime() > 0)

.map(WriteMetadataEvent::getMaxEventTime)

- .min(Comparator.naturalOrder())

- .map(aLong -> Math.min(aLong,

this.minEventTime)).orElse(Long.MAX_VALUE);

+ .filter(maxEventTime -> maxEventTime > 0)

+ .collect(Collectors.toList());

+

+ if (!eventTimes.isEmpty()) {

+LOG.info("[setMinEventTime] receive event time for current commit: {}

",

+

eventTimes.stream().map(String::valueOf).collect(Collectors.joining(", ")));

+this.minEventTime = eventTimes.stream().min(Comparator.naturalOrder())

+.map(aLong -> Math.min(aLong,

this.minEventTime)).orElse(Long.MAX_VALUE);

+ }

LOG.info("[setMinEventTime] minEventTime: {} ", this.minEventTime);

}

}

- public void resetMinEventTime() {

-this.minEventTime = Long.MAX_VALUE;

- }

-

/**

* Performs the actual commit action.

*/

[hudi] 42/45: improve checkstyle

This is an automated email from the ASF dual-hosted git repository.

forwardxu pushed a commit to branch release-0.12.1

in repository https://gitbox.apache.org/repos/asf/hudi.git

commit 79abc24265debcd0ce4a57adbd1ca33e8591d1a4

Author: XuQianJin-Stars

AuthorDate: Thu Dec 15 16:45:05 2022 +0800

improve checkstyle

---

dev/tencent-install.sh | 5 +++--

dev/tencent-release.sh | 1 +

pom.xml| 10 ++

3 files changed, 14 insertions(+), 2 deletions(-)

diff --git a/dev/tencent-install.sh b/dev/tencent-install.sh

index 1e34f40440..173ca06671 100644

--- a/dev/tencent-install.sh

+++ b/dev/tencent-install.sh

@@ -40,6 +40,7 @@ echo "Preparing source for $tagrc"

# change version

echo "Change version for ${version}"

mvn versions:set -DnewVersion=${version} -DgenerateBackupPom=false -s

dev/settings.xml -U

+mvn -N versions:update-child-modules

mvn versions:commit -s dev/settings.xml -U

function git_push() {

@@ -118,9 +119,9 @@ function deploy_spark() {

FLINK_VERSION=$3

if [ ${release_repo} = "Y" ]; then

-COMMON_OPTIONS="-Dscala-${SCALA_VERSION} -Dspark${SPARK_VERSION}

-Dflink${FLINK_VERSION} -DskipTests -s dev/settings.xml

-DretryFailedDeploymentCount=30 -T 2.5C"

+COMMON_OPTIONS="-Dscala-${SCALA_VERSION} -Dspark${SPARK_VERSION}

-Dflink${FLINK_VERSION} -DskipTests -Dcheckstyle.skip=true

-Dscalastyle.skip=true -s dev/settings.xml -DretryFailedDeploymentCount=30 -T

2.5C"

else

-COMMON_OPTIONS="-Dscala-${SCALA_VERSION} -Dspark${SPARK_VERSION}

-Dflink${FLINK_VERSION} -DskipTests -s dev/settings.xml

-DretryFailedDeploymentCount=30 -T 2.5C"

+COMMON_OPTIONS="-Dscala-${SCALA_VERSION} -Dspark${SPARK_VERSION}

-Dflink${FLINK_VERSION} -DskipTests -Dcheckstyle.skip=true

-Dscalastyle.skip=true -s dev/settings.xml -DretryFailedDeploymentCount=30 -T

2.5C"

fi

# INSTALL_OPTIONS="-U -Drat.skip=true -Djacoco.skip=true

-Dscala-${SCALA_VERSION} -Dspark${SPARK_VERSION} -DskipTests -s

dev/settings.xml -T 2.5C"

diff --git a/dev/tencent-release.sh b/dev/tencent-release.sh

index b788d62dc7..54631f5c0f 100644

--- a/dev/tencent-release.sh

+++ b/dev/tencent-release.sh

@@ -40,6 +40,7 @@ echo "Preparing source for $tagrc"

# change version

echo "Change version for ${version}"

mvn versions:set -DnewVersion=${version} -DgenerateBackupPom=false -s

dev/settings.xml -U

+mvn -N versions:update-child-modules

mvn versions:commit -s dev/settings.xml -U

# create version.txt for this release

diff --git a/pom.xml b/pom.xml

index 01be2c1f89..230b338df6 100644

--- a/pom.xml

+++ b/pom.xml

@@ -583,6 +583,16 @@

+

+

+

+ org.codehaus.mojo

+ versions-maven-plugin

+ 2.7

+

+false

+

+

[hudi] 07/45: add 'backup_invalid_parquet' procedure

This is an automated email from the ASF dual-hosted git repository.

forwardxu pushed a commit to branch release-0.12.1

in repository https://gitbox.apache.org/repos/asf/hudi.git

commit 1d029e668bde07f764d3781d51b6c18c6fc025e1

Author: jiimmyzhan

AuthorDate: Wed Aug 24 22:27:11 2022 +0800

add 'backup_invalid_parquet' procedure

---

.../procedures/BackupInvalidParquetProcedure.scala | 89 ++

.../hudi/command/procedures/HoodieProcedures.scala | 1 +

.../TestBackupInvalidParquetProcedure.scala| 83

3 files changed, 173 insertions(+)

diff --git

a/hudi-spark-datasource/hudi-spark/src/main/scala/org/apache/spark/sql/hudi/command/procedures/BackupInvalidParquetProcedure.scala

b/hudi-spark-datasource/hudi-spark/src/main/scala/org/apache/spark/sql/hudi/command/procedures/BackupInvalidParquetProcedure.scala

new file mode 100644

index 00..fbbb1247fa

--- /dev/null

+++

b/hudi-spark-datasource/hudi-spark/src/main/scala/org/apache/spark/sql/hudi/command/procedures/BackupInvalidParquetProcedure.scala

@@ -0,0 +1,89 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.hudi.command.procedures

+

+import org.apache.hadoop.fs.Path

+import org.apache.hudi.client.common.HoodieSparkEngineContext

+import org.apache.hudi.common.config.SerializableConfiguration

+import org.apache.hudi.common.fs.FSUtils

+import

org.apache.parquet.format.converter.ParquetMetadataConverter.SKIP_ROW_GROUPS

+import org.apache.parquet.hadoop.ParquetFileReader

+import org.apache.spark.api.java.JavaRDD

+import org.apache.spark.sql.Row

+import org.apache.spark.sql.types.{DataTypes, Metadata, StructField,

StructType}

+

+import java.util.function.Supplier

+

+class BackupInvalidParquetProcedure extends BaseProcedure with

ProcedureBuilder {

+ private val PARAMETERS = Array[ProcedureParameter](

+ProcedureParameter.required(0, "path", DataTypes.StringType, None)

+ )

+

+ private val OUTPUT_TYPE = new StructType(Array[StructField](

+StructField("backup_path", DataTypes.StringType, nullable = true,

Metadata.empty),

+StructField("invalid_parquet_size", DataTypes.LongType, nullable = true,

Metadata.empty))

+ )

+