[jira] [Updated] (HUDI-5656) Metadata Bootstrap flow resulting in NPE

[ https://issues.apache.org/jira/browse/HUDI-5656?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

ASF GitHub Bot updated HUDI-5656:

-

Labels: pull-request-available (was: )

> Metadata Bootstrap flow resulting in NPE
>
> Key: HUDI-5656
> URL: https://issues.apache.org/jira/browse/HUDI-5656
> Project: Apache Hudi
> Issue Type: Bug
> Components: bootstrap
> Affects Versions: 0.13.0
> Reporter: Alexey Kudinkin
> Assignee: Alexey Kudinkin
> Priority: Blocker
> Labels: pull-request-available
> Fix For: 0.13.0
>

> After adding a simple statement that forces the test to read the whole bootstrapped table:
> {code:java}
> sqlContext.sql("select * from bootstrapped").show();
> {code}
>

> The following NPE has been observed on master (testBulkInsertsAndUpsertsWithBootstrap):

> {code:java}
> org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 183.0 failed 1 times, most recent failure: Lost task 0.0 in stage 183.0 (TID 971, localhost, executor driver): java.lang.NullPointerException
>   at org.apache.spark.sql.catalyst.expressions.codegen.UnsafeWriter.write(UnsafeWriter.java:109)
>   at org.apache.spark.sql.catalyst.expressions.GeneratedClass$SpecificUnsafeProjection.writeFields_0_1$(Unknown Source)
>   at org.apache.spark.sql.catalyst.expressions.GeneratedClass$SpecificUnsafeProjection.apply(Unknown Source)
>   at org.apache.spark.sql.catalyst.expressions.GeneratedClass$SpecificUnsafeProjection.apply(Unknown Source)
>   at scala.collection.Iterator$$anon$10.next(Iterator.scala:448)
>   at org.apache.spark.sql.execution.SparkPlan.$anonfun$getByteArrayRdd$1(SparkPlan.scala:256)
>   at org.apache.spark.rdd.RDD.$anonfun$mapPartitionsInternal$2(RDD.scala:836)
>   at org.apache.spark.rdd.RDD.$anonfun$mapPartitionsInternal$2$adapted(RDD.scala:836)
>   at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)
>   at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)
>   at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)
>   at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)
>   at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)
>   at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)
>   at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)
>   at org.apache.spark.scheduler.Task.run(Task.scala:123)
>   at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:411)
>   at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)
>   at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)
>   at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
>   at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
>   at java.lang.Thread.run(Thread.java:748)
> Driver stacktrace:
>   at org.apache.spark.scheduler.DAGScheduler.failJobAndIndependentStages(DAGScheduler.scala:1889)
>   at org.apache.spark.scheduler.DAGScheduler.$anonfun$abortStage$2(DAGScheduler.scala:1877)
>   at org.apache.spark.scheduler.DAGScheduler.$anonfun$abortStage$2$adapted(DAGScheduler.scala:1876)
>   at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:59)
>   at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:52)
>   at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:48)
>   at org.apache.spark.scheduler.DAGScheduler.abortStage(DAGScheduler.scala:1876)
>   at org.apache.spark.scheduler.DAGScheduler.$anonfun$handleTaskSetFailed$1(DAGScheduler.scala:926)
>   at org.apache.spark.scheduler.DAGScheduler.$anonfun$handleTaskSetFailed$1$adapted(DAGScheduler.scala:926)
>   at scala.Option.foreach(Option.scala:257)
>   at org.apache.spark.scheduler.DAGScheduler.handleTaskSetFailed(DAGScheduler.scala:926)
>   at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:2110)
>   at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2059)
>   at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2048)
>   at org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:49)
>   at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:737)
>   at org.apache.spark.SparkContext.runJob(SparkContext.scala:2061)
>   at org.apache.spark.SparkContext.runJob(SparkContext.scala:2082)
>   at org.apache.spark.SparkContext.runJob(SparkContext.scala:2101)
>   at org.apache.spark.sql.execution.SparkPlan.executeTake(SparkPlan.scala:365)
>   at ...

[GitHub] [hudi] alexeykudinkin opened a new pull request, #7804: [HUDI-5656] Fixing NPE while reading `HoodieBootstrapRelation`

alexeykudinkin opened a new pull request, #7804: URL: https://github.com/apache/hudi/pull/7804

### Change Logs

Currently, `HoodieBootstrapRelation` handles partitioned tables improperly, which results in an NPE when reading a bootstrapped table. To address that, `HoodieBootstrapRelation` has been rebased onto `HoodieBaseRelation`. The base class provides semantics common to all of Hudi's file-based Relation implementations (schema handling, file listing, etc.).

### Impact

Addresses the NPE in the current implementation of `HoodieBootstrapRelation`.

### Risk level (write none, low, medium or high below)

Medium

### Documentation Update

TBA

### Contributor's checklist

- [ ] Read through [contributor's guide](https://hudi.apache.org/contribute/how-to-contribute)
- [ ] Change Logs and Impact were stated clearly
- [ ] Adequate tests were added if applicable
- [ ] CI passed

-- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org
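The change log describes a familiar refactoring pattern. Shared behaviour lives in a base class, and each concrete relation supplies only what differs. The sketch below illustrates that pattern in Java. It assumes that deriving partition values from Hive-style `key=value` path segments is one of the shared pieces. `BaseRelationSketch`, `FileRelation` and `BootstrapRelation` are hypothetical names, not Hudi's classes; the real relations are built on Spark's data source APIs. In the sketch, a declared partition column with no value fails fast instead of leaving a null behind. An unexpected null of that kind can surface later as an NPE inside Spark's `UnsafeWriter`.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class BaseRelationSketch {

  /** Hypothetical base class holding logic shared by every file-based relation. */
  abstract static class FileRelation {
    private final List<String> partitionColumns;

    FileRelation(List<String> partitionColumns) {
      this.partitionColumns = partitionColumns;
    }

    /**
     * Extracts partition values from a relative path such as "year=2023/month=01/f.parquet".
     * Every declared partition column must receive a value; a missing one is rejected
     * here rather than passed downstream as null.
     */
    final Map<String, String> partitionValues(String relativePath) {
      Map<String, String> parsed = new LinkedHashMap<>();
      for (String segment : relativePath.split("/")) {
        int eq = segment.indexOf('=');
        if (eq > 0) {
          parsed.put(segment.substring(0, eq), segment.substring(eq + 1));
        }
      }
      Map<String, String> values = new LinkedHashMap<>();
      for (String column : partitionColumns) {
        String value = parsed.get(column);
        if (value == null) {
          throw new IllegalArgumentException(
              "Missing partition column '" + column + "' in path: " + relativePath);
        }
        values.put(column, value);
      }
      return values;
    }

    /** Subclasses describe only the part of the read that is specific to them. */
    abstract String describeRead(String relativePath);
  }

  /** Hypothetical bootstrap relation that reuses the shared partition handling. */
  static final class BootstrapRelation extends FileRelation {
    BootstrapRelation(List<String> partitionColumns) {
      super(partitionColumns);
    }

    @Override
    String describeRead(String relativePath) {
      return "bootstrap read of " + relativePath + " with " + partitionValues(relativePath);
    }
  }
}
```

Keeping `partitionValues` `final` in the base class means no subclass can reintroduce its own, divergent partition handling.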

[GitHub] [hudi] SteNicholas commented on pull request #7669: [HUDI-5553] Prevent partition(s) from being dropped if there are pending…

SteNicholas commented on PR #7669: URL: https://github.com/apache/hudi/pull/7669#issuecomment-1409895036 @voonhous, I had a voice call with @YannByron. This PR is only a temporary check for deleting partitions. The proper long-term implementation is to put the check in the compaction or clustering table service; in other words, table services should not affect the execution of DDL such as deleting partitions. Even so, it is useful to add the check in this PR for now.

For example, user A creates a Flink job that writes to Hudi table 1 with asynchronous clustering enabled. When user B later wants to delete partitions, the check detects that the partition has a pending table service. It can then at least temporarily refuse user B's deletion, so that user A's Flink job is not affected.

To sum up, you can merge this first and create a ticket to move this check into the table service.
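The temporary check discussed above refuses to drop a partition while a table service is still pending on it. A minimal sketch of that check follows. `PendingServiceGuard`, `PendingInstant` and the representation of a pending plan as a set of partition paths are assumptions for illustration, not the code in PR #7669.

```java
import java.util.List;
import java.util.Set;

class PendingServiceGuard {

  /** Hypothetical view of a pending compaction or clustering plan. */
  static final class PendingInstant {
    final String instantTime;
    final String action;          // e.g. "compaction" or "clustering"
    final Set<String> partitions; // partitions touched by the pending plan

    PendingInstant(String instantTime, String action, Set<String> partitions) {
      this.instantTime = instantTime;
      this.action = action;
      this.partitions = partitions;
    }
  }

  /**
   * Throws if any partition to be dropped is still referenced by a pending table
   * service, so that another writer's async compaction or clustering is not broken.
   */
  static void checkDropAllowed(List<String> partitionsToDrop, List<PendingInstant> pending) {
    for (String partition : partitionsToDrop) {
      for (PendingInstant instant : pending) {
        if (instant.partitions.contains(partition)) {
          throw new IllegalStateException("Cannot drop partition " + partition
              + ": pending " + instant.action + " at instant " + instant.instantTime);
        }
      }
    }
  }
}
```

As the comment notes, a longer-term design would move this responsibility into the table service itself rather than the DDL path.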

[hudi] branch master updated (1377143656a -> 252c4033010)

This is an automated email from the ASF dual-hosted git repository.

mengtao pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/hudi.git

from 1377143656a [HUDI-5487] Reduce duplicate logs in ExternalSpillableMap (#7579)
 add 252c4033010 [MINOR] Standardise schema concepts on Flink Engine (#7761)

No new revisions were added by this update.

Summary of changes:
 .../internal/schema/utils/InternalSchemaUtils.java | 4 +-
 .../hudi/table/format/InternalSchemaManager.java | 57 +-
 .../apache/hudi/table/format/RecordIterators.java | 8 +--
 3 files changed, 41 insertions(+), 28 deletions(-)

[GitHub] [hudi] xiarixiaoyao merged pull request #7761: [MINOR] Standardise schema concepts on Flink Engine

xiarixiaoyao merged PR #7761: URL: https://github.com/apache/hudi/pull/7761

[GitHub] [hudi] xiarixiaoyao commented on pull request #7761: [MINOR] Standardise schema concepts on Flink Engine

xiarixiaoyao commented on PR #7761: URL: https://github.com/apache/hudi/pull/7761#issuecomment-1409863546 The UT failure is unrelated to this PR.

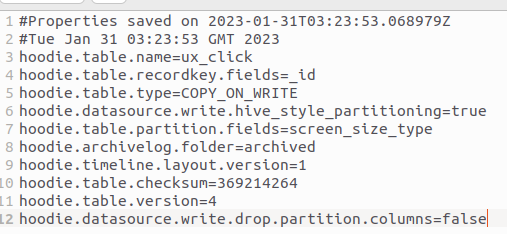

[GitHub] [hudi] duc-dn commented on issue #7791: [SUPPORT] Don't see metadata folder in .hoodie folder when ingesting data use hudi kafka connector

duc-dn commented on issue #7791: URL: https://github.com/apache/hudi/issues/7791#issuecomment-1409860750 @danny0405 This is my hoodie.properties file

[GitHub] [hudi] yuzhaojing commented on a diff in pull request #5926: [HUDI-3475] Initialize hudi table management module

yuzhaojing commented on code in PR #5926:
URL: https://github.com/apache/hudi/pull/5926#discussion_r1091522679

##
hudi-platform-service/hudi-table-service-manager/src/main/java/org/apache/hudi/table/service/manager/entity/AssistQueryEntity.java:
##
@@ -0,0 +1,46 @@
+/*
+ * Licensed to the Apache Software Foundation (ASF) under one
+ * or more contributor license agreements. See the NOTICE file
+ * distributed with this work for additional information
+ * regarding copyright ownership. The ASF licenses this file
+ * to you under the Apache License, Version 2.0 (the
+ * "License"); you may not use this file except in compliance
+ * with the License. You may obtain a copy of the License at
+ *
+ * http://www.apache.org/licenses/LICENSE-2.0
+ *
+ * Unless required by applicable law or agreed to in writing, software
+ * distributed under the License is distributed on an "AS IS" BASIS,
+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+ * See the License for the specific language governing permissions and
+ * limitations under the License.
+ */
+
+package org.apache.hudi.table.service.manager.entity;
+
+import org.apache.hudi.table.service.manager.common.ServiceConfig;
+import org.apache.hudi.table.service.manager.util.DateTimeUtils;
+
+import lombok.Getter;
+
+import java.util.Date;

Review Comment:

Ok.

[GitHub] [hudi] yuzhaojing commented on a diff in pull request #5926: [HUDI-3475] Initialize hudi table management module

yuzhaojing commented on code in PR #5926:

URL: https://github.com/apache/hudi/pull/5926#discussion_r1091520884

##

hudi-platform-service/hudi-table-service-manager/src/main/java/org/apache/hudi/table/service/manager/executor/submitter/ExecutionEngine.java:

##

@@ -0,0 +1,55 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.table.service.manager.executor.submitter;

+

+import org.apache.hudi.table.service.manager.common.HoodieTableServiceManagerConfig;

+import org.apache.hudi.table.service.manager.entity.Instance;

+import org.apache.hudi.table.service.manager.exception.HoodieTableServiceManagerException;

+import org.apache.hudi.table.service.manager.store.impl.InstanceService;

+

+import org.apache.logging.log4j.LogManager;

+import org.apache.logging.log4j.Logger;

+

+import java.util.Map;

+

+public abstract class ExecutionEngine {

Review Comment:

Sure, will add doc.


[GitHub] [hudi] yuzhaojing commented on a diff in pull request #5926: [HUDI-3475] Initialize hudi table management module

yuzhaojing commented on code in PR #5926:

URL: https://github.com/apache/hudi/pull/5926#discussion_r1091520637

##

hudi-platform-service/hudi-table-service-manager/src/main/java/org/apache/hudi/table/service/manager/service/BaseService.java:

##

@@ -0,0 +1,29 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.table.service.manager.service;

+

+public interface BaseService {

+

+ void init();

+

+ void startService();

Review Comment:

Ok.

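The `BaseService` interface in this hunk exposes `init()` and `startService()`. The hunk is cut off, so the interface may declare more methods. The sketch below shows one way a manager could drive such services, assuming every service should be initialized before any of them is started. `ServiceRegistrySketch` and its nested `Service` interface are hypothetical stand-ins, not the PR's code.

```java
import java.util.ArrayList;
import java.util.List;

class ServiceRegistrySketch {

  /** Mirrors only the two methods visible in the BaseService diff. */
  interface Service {
    void init();

    void startService();
  }

  private final List<Service> services = new ArrayList<>();

  /** Initializes all given services first, then starts them in registration order. */
  void registerAndStart(List<Service> toRegister) {
    // Initializing everything first means a failing init() aborts before any service starts.
    for (Service service : toRegister) {
      service.init();
    }
    for (Service service : toRegister) {
      service.startService();
      services.add(service);
    }
  }

  int size() {
    return services.size();
  }
}
```

Separating the two phases keeps a half-initialized set of services from running. The trade-off is that startup waits for the slowest `init()`.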

[GitHub] [hudi] yuzhaojing commented on a diff in pull request #5926: [HUDI-3475] Initialize hudi table management module

yuzhaojing commented on code in PR #5926:

URL: https://github.com/apache/hudi/pull/5926#discussion_r1091520367

##

hudi-platform-service/hudi-table-service-manager/src/main/java/org/apache/hudi/table/service/manager/store/impl/InstanceService.java:

##

@@ -0,0 +1,155 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.table.service.manager.store.impl;

+

+import org.apache.hudi.table.service.manager.common.ServiceContext;

+import org.apache.hudi.table.service.manager.entity.AssistQueryEntity;

+import org.apache.hudi.table.service.manager.entity.Instance;

+import org.apache.hudi.table.service.manager.entity.InstanceStatus;

+

+import org.apache.hudi.table.service.manager.store.jdbc.JdbcMapper;

+

+import org.apache.ibatis.session.RowBounds;

+import org.apache.logging.log4j.LogManager;

+import org.apache.logging.log4j.Logger;

+

+import java.util.List;

+import java.util.concurrent.TimeUnit;

+

+public class InstanceService {

+

+ private static Logger LOG = LogManager.getLogger(InstanceService.class);

+

+ private JdbcMapper jdbcMapper = ServiceContext.getJdbcMapper();

+

+ private static final String NAMESPACE = "Instance";

+

+ public void createInstance() {

+try {

+ jdbcMapper.updateObject(statement(NAMESPACE, "createInstance"), null);

+} catch (Exception e) {

+ throw new RuntimeException(e);

+}

+ }

+

+ public void saveInstance(Instance instance) {

+try {

+ jdbcMapper.saveObject(statement(NAMESPACE, "saveInstance"), instance);

+} catch (Exception e) {

+ throw new RuntimeException(e);

+}

+ }

+

+ public void updateStatus(Instance instance) {

+try {

+ int ret = jdbcMapper.updateObject(statement(NAMESPACE, getUpdateStatusSqlId(instance)), instance);

+ if (ret != 1) {

+LOG.error("Fail update status instance: " + instance);

+throw new RuntimeException("Fail update status instance: " + instance.getIdentifier());

+ }

+ LOG.info("Success update status instance: " + instance.getIdentifier());

+} catch (Exception e) {

+ LOG.error("Fail update status, instance: " + instance.getIdentifier() + ", errMsg: ", e);

+ throw new RuntimeException(e);

+}

+ }

+

+ public void updateExecutionInfo(Instance instance) {

+int retryNum = 0;

+try {

+ while (retryNum++ < 3) {

Review Comment:

Will update it.

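The `updateExecutionInfo` loop under review retries the update up to three times (`while (retryNum++ < 3)`). The sketch below is a generic version of such a bounded retry. It assumes that success is signalled by exactly one updated row, as in `updateStatus` above. `RetrySketch.retryUpdate` is a hypothetical helper, not part of the PR.

```java
import java.util.function.IntSupplier;

class RetrySketch {

  /**
   * Runs an update that reports how many rows it changed, retrying up to maxAttempts
   * times until exactly one row is updated. Exceptions thrown by the update are not
   * caught here and propagate immediately.
   *
   * @return the number of attempts that were needed
   * @throws IllegalStateException if no attempt updated exactly one row
   */
  static int retryUpdate(IntSupplier update, int maxAttempts) {
    for (int attempt = 1; attempt <= maxAttempts; attempt++) {
      if (update.getAsInt() == 1) {
        return attempt;
      }
    }
    throw new IllegalStateException("Update failed after " + maxAttempts + " attempts");
  }
}
```

Passing the update as an `IntSupplier` keeps the retry policy separate from the JDBC call, so the same helper could wrap any `jdbcMapper.updateObject(...)` invocation.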

[GitHub] [hudi] yuzhaojing commented on a diff in pull request #5926: [HUDI-3475] Initialize hudi table management module

yuzhaojing commented on code in PR #5926:

URL: https://github.com/apache/hudi/pull/5926#discussion_r1091520137

##

hudi-platform-service/hudi-table-service-manager/src/main/java/org/apache/hudi/table/service/manager/RequestHandler.java:

##

@@ -0,0 +1,168 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.table.service.manager;

+

+import org.apache.hudi.client.HoodieTableServiceManagerClient;

+import org.apache.hudi.table.service.manager.entity.Action;

+import org.apache.hudi.table.service.manager.entity.Engine;

+import org.apache.hudi.table.service.manager.entity.Instance;

+import org.apache.hudi.table.service.manager.entity.InstanceStatus;

+import org.apache.hudi.table.service.manager.handlers.ActionHandler;

+import org.apache.hudi.table.service.manager.store.MetadataStore;

+import org.apache.hudi.table.service.manager.util.InstanceUtil;

+

+import io.javalin.Context;

+import io.javalin.Handler;

+import io.javalin.Javalin;

+import org.apache.hadoop.conf.Configuration;

+import org.apache.logging.log4j.LogManager;

+import org.apache.logging.log4j.Logger;

+import org.jetbrains.annotations.NotNull;

+

+import java.util.Locale;

+

+/**

+ * Main REST Handler class that handles and delegates calls to timeline relevant handlers.

+ */

+public class RequestHandler {

+

+ private static final Logger LOG = LogManager.getLogger(RequestHandler.class);

+

+ private final Javalin app;

+ private final ActionHandler actionHandler;

+

+ public RequestHandler(Javalin app,

+Configuration conf,

+MetadataStore metadataStore) {

+this.app = app;

+this.actionHandler = new ActionHandler(conf, metadataStore);

+ }

+

+ public void register() {

+registerCompactionAPI();

+registerClusteringAPI();

+registerCleanAPI();

+ }

+

+ /**

+ * Register Compaction API calls.

+ */

+ private void registerCompactionAPI() {

+app.get(HoodieTableServiceManagerClient.EXECUTE_COMPACTION, new ViewHandler(ctx -> {

+ for (String instant : ctx.validatedQueryParam(HoodieTableServiceManagerClient.INSTANT_PARAM).getOrThrow().split(",")) {

+Instance instance = Instance.builder()

+.basePath(ctx.validatedQueryParam(HoodieTableServiceManagerClient.BASEPATH_PARAM).getOrThrow())

+.dbName(ctx.validatedQueryParam(HoodieTableServiceManagerClient.DATABASE_NAME_PARAM).getOrThrow())

+.tableName(ctx.validatedQueryParam(HoodieTableServiceManagerClient.TABLE_NAME_PARAM).getOrThrow())

+.action(Action.COMPACTION.getValue())

+.instant(instant)

+.executionEngine(Engine.valueOf(ctx.validatedQueryParam(HoodieTableServiceManagerClient.EXECUTION_ENGINE).getOrThrow().toUpperCase(Locale.ROOT)))

+.userName(ctx.validatedQueryParam(HoodieTableServiceManagerClient.USERNAME).getOrThrow())

+.queue(ctx.validatedQueryParam(HoodieTableServiceManagerClient.QUEUE).getOrThrow())

+.resource(ctx.validatedQueryParam(HoodieTableServiceManagerClient.RESOURCE).getOrThrow())

+.parallelism(ctx.validatedQueryParam(HoodieTableServiceManagerClient.PARALLELISM).getOrThrow())

+.status(InstanceStatus.SCHEDULED.getStatus())

+.build();

+InstanceUtil.checkArgument(instance);

+actionHandler.scheduleCompaction(instance);

+ }

+}));

+ }

+

+ /**

+ * Register Clustering API calls.

+ */

+ private void registerClusteringAPI() {

+app.get(HoodieTableServiceManagerClient.EXECUTE_CLUSTERING, new ViewHandler(ctx -> {

+ Instance instance = Instance.builder()

+.basePath(ctx.validatedQueryParam(HoodieTableServiceManagerClient.BASEPATH_PARAM).getOrThrow())

+.dbName(ctx.validatedQueryParam(HoodieTableServiceManagerClient.DATABASE_NAME_PARAM).getOrThrow())

+.tableName(ctx.validatedQueryParam(HoodieTableServiceManagerClient.TABLE_NAME_PARAM).getOrThrow())

+ .action(Action.CLUSTERING.getValue())

+.instant(ctx.validatedQueryParam(HoodieTableServiceManagerClient.INSTANT_PARAM).getOrThrow())

+
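In the hunk above, `registerCompactionAPI` accepts a comma-separated `INSTANT_PARAM` and schedules one `Instance` per instant, whereas `registerClusteringAPI` reads a single instant. The code under review calls `split(",")` directly, which keeps surrounding whitespace and empty entries between consecutive commas. The sketch below shows that splitting step, assuming blank entries and surrounding whitespace should instead be dropped. `InstantParams.parseInstants` is a hypothetical helper.

```java
import java.util.ArrayList;
import java.util.List;

class InstantParams {

  /** Splits a comma-separated instant list, trimming entries and skipping blanks. */
  static List<String> parseInstants(String raw) {
    List<String> instants = new ArrayList<>();
    for (String part : raw.split(",")) {
      String instant = part.trim();
      if (!instant.isEmpty()) {
        instants.add(instant);
      }
    }
    // Reject a parameter that contains no usable instant at all.
    if (instants.isEmpty()) {
      throw new IllegalArgumentException("No instant provided in: '" + raw + "'");
    }
    return instants;
  }
}
```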

[GitHub] [hudi] hudi-bot commented on pull request #7803: [HUDI-5661] Add ConflictResolutionStrategy for bucket index

hudi-bot commented on PR #7803: URL: https://github.com/apache/hudi/pull/7803#issuecomment-1409856892 ## CI report: * 410d0d504acaa6ff46ee85bb3dddb46cf5fb18fb UNKNOWN * 53807f6493b7056be1afdd7e78353a354514f845 UNKNOWN Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build

[GitHub] [hudi] yuzhaojing commented on a diff in pull request #5926: [HUDI-3475] Initialize hudi table management module

yuzhaojing commented on code in PR #5926:

URL: https://github.com/apache/hudi/pull/5926#discussion_r1091519698

##

hudi-platform-service/hudi-table-service-manager/src/main/java/org/apache/hudi/table/service/manager/store/jdbc/SqlSessionFactoryUtil.java:

##

@@ -0,0 +1,82 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.table.service.manager.store.jdbc;

+

+import org.apache.hudi.table.service.manager.exception.HoodieTableServiceManagerException;

+

+import org.apache.ibatis.io.Resources;

+import org.apache.ibatis.session.SqlSession;

+import org.apache.ibatis.session.SqlSessionFactory;

+import org.apache.ibatis.session.SqlSessionFactoryBuilder;

+

+import java.io.IOException;

+import java.io.InputStream;

+import java.sql.PreparedStatement;

+import java.util.stream.Collectors;

+

+public class SqlSessionFactoryUtil {

Review Comment:

In a follow-up PR, hudi-platform-common will be extracted to unify this; let's follow up there.

##

hudi-platform-service/hudi-table-service-manager/src/main/java/org/apache/hudi/table/service/manager/HoodieTableServiceManager.java:

##

@@ -0,0 +1,152 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.table.service.manager;

+

+import org.apache.hudi.common.fs.FSUtils;

+import org.apache.hudi.common.util.ReflectionUtils;

+import org.apache.hudi.table.service.manager.common.CommandConfig;

+import org.apache.hudi.table.service.manager.common.HoodieTableServiceManagerConfig;

+import org.apache.hudi.table.service.manager.service.BaseService;

+import org.apache.hudi.table.service.manager.service.CleanService;

+import org.apache.hudi.table.service.manager.service.ExecutorService;

+import org.apache.hudi.table.service.manager.service.MonitorService;

+import org.apache.hudi.table.service.manager.service.RestoreService;

+import org.apache.hudi.table.service.manager.service.RetryService;

+import org.apache.hudi.table.service.manager.service.ScheduleService;

+import org.apache.hudi.table.service.manager.store.MetadataStore;

+

+import com.beust.jcommander.JCommander;

+import io.javalin.Javalin;

+import org.apache.hadoop.conf.Configuration;

+import org.apache.logging.log4j.LogManager;

+import org.apache.logging.log4j.Logger;

+

+import java.io.IOException;

+import java.util.ArrayList;

+import java.util.List;

+

+/**

+ * Main class of hoodie table service manager.

+ *

+ * @Experimental

+ * @since 0.13.0

+ */

+public class HoodieTableServiceManager {

+

+ private static final Logger LOG = LogManager.getLogger(HoodieTableServiceManager.class);

+

+ private final int serverPort;

+ private final Configuration conf;

+ private transient Javalin app = null;

+ private List services;

+ private final MetadataStore metadataStore;

+ private final HoodieTableServiceManagerConfig tableServiceManagerConfig;

+

+ public HoodieTableServiceManager(CommandConfig config) {

+this.conf = FSUtils.prepareHadoopConf(new Configuration());

+this.tableServiceManagerConfig = CommandConfig.toTableServiceManagerConfig(config);

+this.serverPort = config.serverPort;

+this.metadataStore = initMetadataStore();

+ }

+

+ public void startService() {

+app = Javalin.create();

+RequestHandler requestHandler = new RequestHandler(app, conf, metadataStore);

+app.get("/", ctx -> ctx.result("Hello World"));

+requestHandler.register();

+app.start(serverPort);

+registerService();

[GitHub] [hudi] hudi-bot commented on pull request #7614: [HUDI-5509] check if dfs support atomic creation when using filesyste…

hudi-bot commented on PR #7614: URL: https://github.com/apache/hudi/pull/7614#issuecomment-1409856482 ## CI report: * 48630689184d006a4be0ad9eef2ade76919458cd Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14174) * 058ab2703bda207fc9f5861d5e4b865e83ee1b45 UNKNOWN * 3c010a86327c341b29aaea9ff6ca571855951bd3 UNKNOWN Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build

[GitHub] [hudi] hudi-bot commented on pull request #7680: [HUDI-5548] spark sql show | update hudi's table properties

hudi-bot commented on PR #7680: URL: https://github.com/apache/hudi/pull/7680#issuecomment-1409856659 ## CI report: * 0970573f82ef1a49184d1875975463f76f7d791d Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14686) Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14760) * b87dbc4ca43aa4e2565f11d87d74cd018b95b6cf Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14807) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build

[GitHub] [hudi] yuzhaojing commented on a diff in pull request #5926: [HUDI-3475] Initialize hudi table management module

yuzhaojing commented on code in PR #5926:

URL: https://github.com/apache/hudi/pull/5926#discussion_r1091518781

##

hudi-platform-service/hudi-table-service-manager/src/main/java/org/apache/hudi/table/service/manager/util/DateTimeUtils.java:

##

@@ -0,0 +1,39 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.table.service.manager.util;

+

+import java.util.Calendar;

+import java.util.Date;

+

+public class DateTimeUtils {

Review Comment:

Sure.


[GitHub] [hudi] xicm commented on pull request #7675: [HUDI-5561] The preCombine method of PartialUpdateAvroPayload is not called

xicm commented on PR #7675: URL: https://github.com/apache/hudi/pull/7675#issuecomment-1409854509 @danny0405 The problem has been fixed by #7759, so we can close this PR.

[GitHub] [hudi] voonhous commented on pull request #7669: [HUDI-5553] Prevent partition(s) from being dropped if there are pending…

voonhous commented on PR #7669: URL: https://github.com/apache/hudi/pull/7669#issuecomment-1409854140 @YannByron Hmmm, are you envisioning option 3 as a solution for the issue described here? https://github.com/apache/hudi/pull/7669#discussion_r1090237751 i.e. any new filegroup that is created from a filegroup flagged for deletion should also be flagged for deletion?
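The "option 3" rule in the comment above (a file group created from a flagged file group inherits the flag) amounts to a transitive closure over a child-to-parent relation. A minimal sketch, assuming the lineage is available as a plain `Map` from child file group ID to parent file group ID; the class and method names are hypothetical and not part of Hudi's API.

```java
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical helper, not a Hudi API: propagates a "pending deletion" flag from a
// file group to every file group derived from it, directly or transitively.
final class DeletionFlagPropagation {

    // flagged:  file group IDs already marked for deletion
    // parentOf: child file group ID -> ID of the file group it was created from
    static Set<String> propagate(Set<String> flagged, Map<String, String> parentOf) {
        Set<String> result = new HashSet<>(flagged);
        boolean changed = true;
        // Repeat until a pass flags nothing new (a fixpoint). Each productive pass adds
        // at least one ID, so there are at most parentOf.size() + 1 passes.
        while (changed) {
            changed = false;
            for (Map.Entry<String, String> e : parentOf.entrySet()) {
                if (result.contains(e.getValue()) && result.add(e.getKey())) {
                    changed = true;
                }
            }
        }
        return result;
    }
}
```

For example, with `fg1` flagged and lineage `fg2 <- fg1`, `fg3 <- fg2`, `fg4 <- fg9`, the result is `{fg1, fg2, fg3}`; `fg4` stays unflagged because its ancestor was never flagged.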

[GitHub] [hudi] hudi-bot commented on pull request #7803: [HUDI-5661] Add ConflictResolutionStrategy for bucket index

hudi-bot commented on PR #7803: URL: https://github.com/apache/hudi/pull/7803#issuecomment-1409850518 ## CI report: * 410d0d504acaa6ff46ee85bb3dddb46cf5fb18fb UNKNOWN

[GitHub] [hudi] hudi-bot commented on pull request #7802: [DNM] Disable default Avro schema validation

hudi-bot commented on PR #7802: URL: https://github.com/apache/hudi/pull/7802#issuecomment-1409850470 ## CI report: * 88d82b6c21f0c4d81409dae0f5420cea116954ba Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14805)

[GitHub] [hudi] hudi-bot commented on pull request #7787: [HUDI-5646] Guard dropping columns by a config, do not allow by default

hudi-bot commented on PR #7787: URL: https://github.com/apache/hudi/pull/7787#issuecomment-1409850396 ## CI report: * a930137a8435863ad4b01997b731895328c0fa59 Azure: [CANCELED](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14790) * 8849a731a979b687f490674e7fc15f8301947b27 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14804)

[GitHub] [hudi] hudi-bot commented on pull request #7706: [HUDI-5585][flink]Fix flink creates and writes the table, the spark alter table reports an error

hudi-bot commented on PR #7706: URL: https://github.com/apache/hudi/pull/7706#issuecomment-1409850237 ## CI report: * 126951c4f2e2581ffbfb996df3d2ea325290f7f6 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14447) * 8c4ddec99d740d881bbabb9ca27e860c217acc2c Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14803)

[GitHub] [hudi] hudi-bot commented on pull request #7680: [HUDI-5548] spark sql show | update hudi's table properties

hudi-bot commented on PR #7680: URL: https://github.com/apache/hudi/pull/7680#issuecomment-1409850148 ## CI report: * 0970573f82ef1a49184d1875975463f76f7d791d Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14686) Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14760) * b87dbc4ca43aa4e2565f11d87d74cd018b95b6cf UNKNOWN

[GitHub] [hudi] hudi-bot commented on pull request #7669: [HUDI-5553] Prevent partition(s) from being dropped if there are pending…

hudi-bot commented on PR #7669: URL: https://github.com/apache/hudi/pull/7669#issuecomment-1409850055 ## CI report: * 6e1d03f8dd6c292959ee29c8592ca4340d2aca46 Azure: [CANCELED](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14796) * 343b22a4f7cd783ff6a69ba19df828f221c69c5a Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14802)

[GitHub] [hudi] hudi-bot commented on pull request #7378: [HUDI-5329] spark reads hudi table error when flink creates the table without preCombine fields

hudi-bot commented on PR #7378: URL: https://github.com/apache/hudi/pull/7378#issuecomment-1409849655 ## CI report: * e6dd84eef8e98d43c19f31df2a88f6b1d3f6f717 Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=13455) * 576dad186d2bfbe4bc497d75d17c6ded88df35a5 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14801)

[GitHub] [hudi] hudi-bot commented on pull request #7614: [HUDI-5509] check if dfs support atomic creation when using filesyste…

hudi-bot commented on PR #7614: URL: https://github.com/apache/hudi/pull/7614#issuecomment-1409849911 ## CI report: * 48630689184d006a4be0ad9eef2ade76919458cd Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14174) * 058ab2703bda207fc9f5861d5e4b865e83ee1b45 UNKNOWN

[jira] [Created] (HUDI-5662) Build failure on upgrading hudi-presto-bundle to version 0.13.0 in Presto

Sagar Sumit created HUDI-5662:

-

Summary: Build failure on upgrading hudi-presto-bundle to version 0.13.0 in Presto

Key: HUDI-5662

URL: https://issues.apache.org/jira/browse/HUDI-5662

Project: Apache Hudi

Issue Type: Task

Reporter: Sagar Sumit

After upgrading to 0.13.0-rc1 as shown in [https://github.com/codope/presto/commit/8779d4f17be10861d7726226e74397c6d9b316fd] and building the project, we get the following error:

{code:java}
Failed while enforcing RequireUpperBoundDeps. The error(s) are [
Require upper bound dependencies error for org.objenesis:objenesis:1.3 paths to dependency are:
+-com.facebook.presto:presto-hive:0.280-SNAPSHOT
  +-org.apache.hudi:hudi-presto-bundle:0.13.0-rc1
    +-com.esotericsoftware:kryo-shaded:4.0.2
      +-org.objenesis:objenesis:1.3 (managed) <-- org.objenesis:objenesis:2.5.1
] {code}
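The enforcer error says that Presto's dependency management resolves objenesis to 1.3, while kryo-shaded 4.0.2 (pulled in through hudi-presto-bundle) asks for 2.5.1. As a rough illustration of the kind of check RequireUpperBoundDeps performs, here is a minimal Java sketch. It assumes purely numeric dotted versions; Maven's real version comparison also handles qualifiers such as `-rc1` or `-SNAPSHOT`, which this ignores. The class and method names are hypothetical.

```java
import java.util.List;

// Hypothetical, simplified model of an "upper bound" dependency check.
final class UpperBoundCheck {

    // Compares two purely numeric dotted versions, e.g. "1.3" vs "2.5.1".
    // Missing components count as 0, so "1.3" equals "1.3.0".
    static int compareVersions(String a, String b) {
        String[] pa = a.split("\\.");
        String[] pb = b.split("\\.");
        int n = Math.max(pa.length, pb.length);
        for (int i = 0; i < n; i++) {
            int va = i < pa.length ? Integer.parseInt(pa[i]) : 0;
            int vb = i < pb.length ? Integer.parseInt(pb[i]) : 0;
            if (va != vb) {
                return Integer.compare(va, vb);
            }
        }
        return 0;
    }

    // True when the resolved (managed) version is lower than any version
    // that a dependency in the tree explicitly requires.
    static boolean violatesUpperBound(String resolved, List<String> requested) {
        for (String r : requested) {
            if (compareVersions(resolved, r) < 0) {
                return true;
            }
        }
        return false;
    }
}
```

Under this model, `violatesUpperBound("1.3", List.of("2.5.1"))` is true, which matches the situation reported here. Common remedies are to manage objenesis at 2.5.1 or higher on the Presto side, or to shade it inside the bundle; which one fits depends on the rest of Presto's dependency tree.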

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

[GitHub] [hudi] YannByron commented on pull request #7669: [HUDI-5553] Prevent partition(s) from being dropped if there are pending…

YannByron commented on PR #7669: URL: https://github.com/apache/hudi/pull/7669#issuecomment-1409849451 @voonhous @SteNicholas @XuQianJin-Stars hey guys, sorry, but I see this differently. I think the drop-partition operation should be allowed to execute, and the table service action should re-check, when it actually executes, whether the file groups it touches have been updated. IMO, operations on the data take priority over table management operations.
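The proposal above moves the safety check from scheduling time to execution time: a drop-partition proceeds, and a table service plan scheduled earlier re-validates its inputs just before it runs. A minimal sketch of that re-check, assuming the plan's file group IDs and the set of file groups replaced since scheduling are both available as plain sets; the names are hypothetical and not Hudi's API.

```java
import java.util.HashSet;
import java.util.Set;

// Hypothetical sketch: before executing a previously scheduled table service plan
// (e.g. compaction or clustering), find which of its file groups were replaced or
// dropped (e.g. by a drop-partition) after the plan was scheduled.
final class TableServiceRecheck {

    // Returns the planned file groups that are no longer valid.
    static Set<String> staleFileGroups(Set<String> plannedFileGroups,
                                       Set<String> replacedSinceScheduling) {
        Set<String> stale = new HashSet<>(plannedFileGroups);
        stale.retainAll(replacedSinceScheduling);
        return stale;
    }

    // The plan can run as-is only if none of its inputs changed underneath it.
    static boolean canExecute(Set<String> plannedFileGroups,
                              Set<String> replacedSinceScheduling) {
        return staleFileGroups(plannedFileGroups, replacedSinceScheduling).isEmpty();
    }
}
```

Whether a plan with stale inputs should be aborted entirely or executed only on its remaining file groups is a separate policy choice that this sketch leaves open.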

[GitHub] [hudi] hudi-bot commented on pull request #7802: [DNM] Disable default Avro schema validation

hudi-bot commented on PR #7802: URL: https://github.com/apache/hudi/pull/7802#issuecomment-1409843991 ## CI report: * 88d82b6c21f0c4d81409dae0f5420cea116954ba UNKNOWN

[GitHub] [hudi] hudi-bot commented on pull request #7787: [HUDI-5646] Guard dropping columns by a config, do not allow by default

hudi-bot commented on PR #7787: URL: https://github.com/apache/hudi/pull/7787#issuecomment-1409843915 ## CI report: * 4a25ef8aaf5415ea6a918b7f32c5f3a84597ddec Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14781) * a930137a8435863ad4b01997b731895328c0fa59 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14790) * 8849a731a979b687f490674e7fc15f8301947b27 UNKNOWN

[GitHub] [hudi] hudi-bot commented on pull request #7769: [HUDI-5633] Fixing performance regression in `HoodieSparkRecord`

hudi-bot commented on PR #7769: URL: https://github.com/apache/hudi/pull/7769#issuecomment-1409843852 ## CI report: * 9bfa20f45fcc675b79053bb8b4f379b09c6cd6c5 UNKNOWN * 325244765016f67034fd8f364942028fe217ecb5 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14788) * 24020a964671b35fb9aa7b86748771fd71512495 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14800)

[GitHub] [hudi] hudi-bot commented on pull request #7706: [HUDI-5585][flink]Fix flink creates and writes the table, the spark alter table reports an error

hudi-bot commented on PR #7706: URL: https://github.com/apache/hudi/pull/7706#issuecomment-1409843749 ## CI report: * 126951c4f2e2581ffbfb996df3d2ea325290f7f6 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14447) * 8c4ddec99d740d881bbabb9ca27e860c217acc2c UNKNOWN

[GitHub] [hudi] hudi-bot commented on pull request #7669: [HUDI-5553] Prevent partition(s) from being dropped if there are pending…

hudi-bot commented on PR #7669: URL: https://github.com/apache/hudi/pull/7669#issuecomment-1409843559 ## CI report: * c4ecd3d09de159fab46b6bcadc502cea3a76e4cb Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14770) * 6e1d03f8dd6c292959ee29c8592ca4340d2aca46 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14796) * 343b22a4f7cd783ff6a69ba19df828f221c69c5a UNKNOWN

[GitHub] [hudi] hudi-bot commented on pull request #7378: [HUDI-5329] spark reads hudi table error when flink creates the table without preCombine fields

hudi-bot commented on PR #7378: URL: https://github.com/apache/hudi/pull/7378#issuecomment-1409843214 ## CI report: * e6dd84eef8e98d43c19f31df2a88f6b1d3f6f717 Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=13455) * 576dad186d2bfbe4bc497d75d17c6ded88df35a5 UNKNOWN

[jira] [Updated] (HUDI-5661) Add ConflictResolutionStrategy for bucket index

[ https://issues.apache.org/jira/browse/HUDI-5661?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated HUDI-5661: - Labels: pull-request-available (was: ) > Add ConflictResolutionStrategy for bucket index > --- > > Key: HUDI-5661 > URL: https://issues.apache.org/jira/browse/HUDI-5661 > Project: Apache Hudi > Issue Type: Improvement >Reporter: xi chaomin >Priority: Major > Labels: pull-request-available >

[GitHub] [hudi] xicm opened a new pull request, #7803: [HUDI-5661] Add ConflictResolutionStrategy for bucket index

xicm opened a new pull request, #7803: URL: https://github.com/apache/hudi/pull/7803 ### Change Logs `SimpleConcurrentFileWritesConflictResolutionStrategy` checks for conflicts by file ID, while for the bucket index we should check the bucket ID to see if there is a conflict. ### Impact none ### Risk level (write none, low medium or high below) low ### Documentation Update _Describe any necessary documentation update if there is any new feature, config, or user-facing change_ - _The config description must be updated if new configs are added or the default value of the configs are changed_ - _Any new feature or user-facing change requires updating the Hudi website. Please create a Jira ticket, attach the ticket number here and follow the [instruction](https://hudi.apache.org/contribute/developer-setup#website) to make changes to the website._ ### Contributor's checklist - [ ] Read through [contributor's guide](https://hudi.apache.org/contribute/how-to-contribute) - [ ] Change Logs and Impact were stated clearly - [ ] Adequate tests were added if applicable - [ ] CI passed
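The point of the change is that with a bucket index two writers can create different file groups (different file IDs) for the same bucket, so comparing file IDs misses the conflict. A minimal sketch of a bucket-level check, assuming file IDs begin with an 8-digit zero-padded bucket number followed by a suffix (e.g. `00000003-<uuid>`); that naming is an assumption here, and the class is hypothetical rather than the strategy added in #7803.

```java
import java.util.HashSet;
import java.util.Set;

// Hypothetical sketch of bucket-level conflict detection between two concurrent writers.
final class BucketConflictCheck {

    // Assumption: the first 8 characters of a bucket-index file ID encode the bucket number.
    static int bucketIdFromFileId(String fileId) {
        return Integer.parseInt(fileId.substring(0, 8));
    }

    static Set<Integer> bucketIds(Set<String> fileIds) {
        Set<Integer> ids = new HashSet<>();
        for (String fileId : fileIds) {
            ids.add(bucketIdFromFileId(fileId));
        }
        return ids;
    }

    // Writers conflict if they touched the same bucket, even when the file IDs
    // differ (a plain file-ID comparison would report no conflict in that case).
    static boolean hasConflict(Set<String> thisWriterFileIds, Set<String> otherWriterFileIds) {
        Set<Integer> mine = bucketIds(thisWriterFileIds);
        for (Integer bucket : bucketIds(otherWriterFileIds)) {
            if (mine.contains(bucket)) {
                return true;
            }
        }
        return false;
    }
}
```

With this check, `00000003-aaaa` and `00000003-bbbb` conflict (same bucket 3), while `00000003-aaaa` and `00000004-aaaa` do not.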

[GitHub] [hudi] hudi-bot commented on pull request #7793: [HUDI-5317] Fix insert overwrite table for partitioned table

hudi-bot commented on PR #7793: URL: https://github.com/apache/hudi/pull/7793#issuecomment-1409837284 ## CI report: * 6f3efd8db2ef71ad0861f468a491b6b22e032037 Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14791)

[GitHub] [hudi] hudi-bot commented on pull request #7633: Fix Deletes issued without any prior commits

hudi-bot commented on PR #7633: URL: https://github.com/apache/hudi/pull/7633#issuecomment-1409836312 ## CI report: * 6edffd10a0abadfc1f169b10f131b755c8e1280e Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14789) Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14799)

[GitHub] [hudi] hudi-bot commented on pull request #7434: [HUDI-5240] Clean content when recursive Invocation inflate

hudi-bot commented on PR #7434: URL: https://github.com/apache/hudi/pull/7434#issuecomment-1409836047 ## CI report: * 3494a8f423725c70f16e1f2f4ae2e7ef45a06b35 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=13645) Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14798)

[GitHub] [hudi] nfarah86 commented on pull request #7549: [DOCS] improve spark quickstart, info about MT and async services

nfarah86 commented on PR #7549: URL: https://github.com/apache/hudi/pull/7549#issuecomment-1409835064 cc @codope

[jira] [Created] (HUDI-5661) Add ConflictResolutionStrategy for bucket index

xi chaomin created HUDI-5661: Summary: Add ConflictResolutionStrategy for bucket index Key: HUDI-5661 URL: https://issues.apache.org/jira/browse/HUDI-5661 Project: Apache Hudi Issue Type: Improvement Reporter: xi chaomin

[GitHub] [hudi] XuQianJin-Stars commented on pull request #7434: [HUDI-5240] Clean content when recursive Invocation inflate

XuQianJin-Stars commented on PR #7434: URL: https://github.com/apache/hudi/pull/7434#issuecomment-1409823440 @hudi-bot run azure

[GitHub] [hudi] liaotian1005 commented on pull request #7633: Fix Deletes issued without any prior commits

liaotian1005 commented on PR #7633: URL: https://github.com/apache/hudi/pull/7633#issuecomment-1409806899 @hudi-bot run azure

[GitHub] [hudi] alexeykudinkin opened a new pull request, #7802: [DNM] Disable default Avro schema validation

alexeykudinkin opened a new pull request, #7802: URL: https://github.com/apache/hudi/pull/7802 ### Change Logs As we discussed earlier today, this disables Avro schema validation by default. ### Impact No impact ### Risk level (write none, low medium or high below) Low ### Documentation Update _Describe any necessary documentation update if there is any new feature, config, or user-facing change_ - _The config description must be updated if new configs are added or the default value of the configs are changed_ - _Any new feature or user-facing change requires updating the Hudi website. Please create a Jira ticket, attach the ticket number here and follow the [instruction](https://hudi.apache.org/contribute/developer-setup#website) to make changes to the website._ ### Contributor's checklist - [ ] Read through [contributor's guide](https://hudi.apache.org/contribute/how-to-contribute) - [ ] Change Logs and Impact were stated clearly - [ ] Adequate tests were added if applicable - [ ] CI passed

[GitHub] [hudi] maheshguptags commented on issue #7613: [SUPPORT] Not able to Delete record

maheshguptags commented on issue #7613: URL: https://github.com/apache/hudi/issues/7613#issuecomment-1409804936 Hi @danny0405, I tried both options, Upsert and Delete, but the behavior is the same, so it is not working. If you have any working code for the cross-platform case (Flink insert, Spark delete) that deletes records from a Hudi table, please share it with us as well. -Mahesh

[jira] [Created] (HUDI-5660) Support bucket index for spark bulk_insert

Danny Chen created HUDI-5660: Summary: Support bucket index for spark bulk_insert Key: HUDI-5660 URL: https://issues.apache.org/jira/browse/HUDI-5660 Project: Apache Hudi Issue Type: Improvement Components: spark Reporter: Danny Chen

[GitHub] [hudi] waywtdcc commented on a diff in pull request #7706: [HUDI-5585][flink]Fix flink creates and writes the table, the spark alter table reports an error

waywtdcc commented on code in PR #7706:

URL: https://github.com/apache/hudi/pull/7706#discussion_r1091477435

##

hudi-flink-datasource/hudi-flink/src/main/java/org/apache/hudi/table/catalog/HoodieHiveCatalog.java:

##

@@ -438,8 +439,10 @@ public CatalogBaseTable getTable(ObjectPath tablePath)

throws TableNotExistExcep

LOG.warn("{} does not have any hoodie schema, and use hive table schema

to infer the table schema", tablePath);

schema = HiveSchemaUtils.convertTableSchema(hiveTable);

}

+org.apache.flink.table.api.Schema resultSchema =

DataTypeUtils.dropIfExistsColumns(schema,

HoodieRecord.HOODIE_META_COLUMNS_WITH_OPERATION);

+

Review Comment:

Indeed, I have removed.
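The diff above drops Hudi's meta columns from the schema returned by the Flink catalog via `DataTypeUtils.dropIfExistsColumns(schema, HoodieRecord.HOODIE_META_COLUMNS_WITH_OPERATION)`. A minimal sketch of the same "drop if exists" idea over a plain list of column names; the meta column list below is assumed to mirror `HOODIE_META_COLUMNS_WITH_OPERATION`, and the class is hypothetical, not the Flink `Schema` handling used in the PR.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// Hypothetical sketch: remove Hudi meta columns from a column list.
final class DropMetaColumns {

    // Assumed to mirror HoodieRecord.HOODIE_META_COLUMNS_WITH_OPERATION.
    static final Set<String> META_COLUMNS = new LinkedHashSet<>(Arrays.asList(
            "_hoodie_commit_time", "_hoodie_commit_seqno", "_hoodie_record_key",
            "_hoodie_partition_path", "_hoodie_file_name", "_hoodie_operation"));

    // Keeps column order; names in toDrop that are absent are simply ignored,
    // which is the "drop if exists" behavior.
    static List<String> dropIfExists(List<String> columns, Set<String> toDrop) {
        List<String> kept = new ArrayList<>();
        for (String column : columns) {
            if (!toDrop.contains(column)) {
                kept.add(column);
            }
        }
        return kept;
    }
}
```

For example, `[_hoodie_commit_time, id, name, _hoodie_operation]` becomes `[id, name]`, and a schema with no meta columns passes through unchanged.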


[GitHub] [hudi] danny0405 commented on pull request #7684: [HUDI-5567] Modified to make bootstrapping exception message clearer

danny0405 commented on PR #7684: URL: https://github.com/apache/hudi/pull/7684#issuecomment-1409796950 The failed test is kind of unrelated: https://dev.azure.com/apache-hudi-ci-org/apache-hudi-ci/_build/results?buildId=14754=logs=dcedfe73-9485-5cc5-817a-73b61fc5dcb0=746585d8-b50a-55c3-26c5-517d93af9934=40524

[GitHub] [hudi] danny0405 commented on issue #7613: Not able to Delete record

danny0405 commented on issue #7613: URL: https://github.com/apache/hudi/issues/7613#issuecomment-1409794955 I noticed that there is a write operation named `DELETE`, so just set `hoodie.datasource.write.operation` to that value and try again.
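The suggestion above is a configuration change on the Spark writer: keep the usual Hudi options but set the write operation to `delete` instead of the default `upsert`. A minimal sketch of such an option map in Java; the table name and field names (`trips`, `uuid`, `ts`) are placeholders, and whether this resolves the cross-engine (Flink write, Spark delete) case reported in the issue is not established by the thread.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper that builds Hudi Spark datasource options for a delete write.
final class HudiDeleteOptions {

    static Map<String, String> deleteOptions(String tableName,
                                             String recordKeyField,
                                             String precombineField) {
        Map<String, String> opts = new LinkedHashMap<>();
        opts.put("hoodie.table.name", tableName);
        opts.put("hoodie.datasource.write.recordkey.field", recordKeyField);
        opts.put("hoodie.datasource.write.precombine.field", precombineField);
        // Switch the write operation from the default (upsert) to delete.
        opts.put("hoodie.datasource.write.operation", "delete");
        return opts;
    }
}
```

With Spark's Java API, the map would be passed along the lines of `df.write().format("hudi").options(HudiDeleteOptions.deleteOptions("trips", "uuid", "ts")).mode(SaveMode.Append).save(basePath)`, where `df` holds the records (identified by record key) to delete.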

[jira] [Closed] (HUDI-5487) Reduce duplicate Logs in ExternalSpillableMap

[

https://issues.apache.org/jira/browse/HUDI-5487?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Danny Chen closed HUDI-5487.

Reviewers: Danny Chen

Resolution: Fixed

Fixed via master branch: 1377143656a447ddd6f884380c93be6fb5ecf459

> Reduce duplicate Logs in ExternalSpillableMap

> -

>

> Key: HUDI-5487

> URL: https://issues.apache.org/jira/browse/HUDI-5487

> Project: Apache Hudi

> Issue Type: Improvement

>Reporter: dzcxzl

>Assignee: Danny Chen

>Priority: Minor

> Labels: pull-request-available

> Fix For: 0.13.1

>

>

> We see hundreds of thousands of duplicate logs in the executor log.

> {code:java}

> 22/12/26 21:13:40,864 [Executor task launch worker for task 0.0 in stage

> 480.0 (TID 211376)] INFO ExternalSpillableMap: Update Estimated Payload size

> to => 4567

> 22/12/26 21:13:40,864 [Executor task launch worker for task 0.0 in stage

> 480.0 (TID 211376)] INFO ExternalSpillableMap: Update Estimated Payload size

> to => 4567

> 22/12/26 21:13:40,864 [Executor task launch worker for task 0.0 in stage

> 480.0 (TID 211376)] INFO ExternalSpillableMap: Update Estimated Payload size

> to => 4567

> 22/12/26 21:13:40,864 [Executor task launch worker for task 0.0 in stage

> 480.0 (TID 211376)] INFO ExternalSpillableMap: Update Estimated Payload size

> to => 4567

> 22/12/26 21:13:40,864 [Executor task launch worker for task 0.0 in stage

> 480.0 (TID 211376)] INFO ExternalSpillableMap: Update Estimated Payload size

> to => 4567

> 22/12/26 21:13:40,864 [Executor task launch worker for task 0.0 in stage

> 480.0 (TID 211376)] INFO ExternalSpillableMap: Update Estimated Payload size

> to => 4567

> 22/12/26 21:13:40,864 [Executor task launch worker for task 0.0 in stage

> 480.0 (TID 211376)] INFO ExternalSpillableMap: Update Estimated Payload size

> to => 4567 {code}
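The log excerpt shows the same estimate (4567) being reported on every re-estimation. One common way to cut this noise is to log only when the estimate actually changes (or to demote the message to DEBUG). A minimal sketch of the change-only approach; this is a hypothetical illustration, not the fix merged in commit 1377143656a447ddd6f884380c93be6fb5ecf459, and it collects messages into a list instead of calling a real logger so the behavior is easy to check.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: emit the "Update Estimated Payload size" message only on change.
final class PayloadSizeLogThrottle {

    private long lastLoggedEstimate = -1;
    // Stands in for a logger; each entry is one line that would have been logged.
    final List<String> emitted = new ArrayList<>();

    // Called on every size re-estimation.
    void onEstimate(long estimatedPayloadSize) {
        if (estimatedPayloadSize != lastLoggedEstimate) {
            emitted.add("Update Estimated Payload size to => " + estimatedPayloadSize);
            lastLoggedEstimate = estimatedPayloadSize;
        }
    }
}
```

Feeding it seven estimates of 4567 followed by one of 4600 produces two log lines instead of eight.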


[jira] [Updated] (HUDI-5487) Reduce duplicate Logs in ExternalSpillableMap

[

https://issues.apache.org/jira/browse/HUDI-5487?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Danny Chen updated HUDI-5487:

-

Fix Version/s: 0.13.1

> Reduce duplicate Logs in ExternalSpillableMap

> -

>

> Key: HUDI-5487

> URL: https://issues.apache.org/jira/browse/HUDI-5487

> Project: Apache Hudi

> Issue Type: Improvement

>Reporter: dzcxzl

>Priority: Minor

> Labels: pull-request-available

> Fix For: 0.13.1

>

>


[jira] [Assigned] (HUDI-5487) Reduce duplicate Logs in ExternalSpillableMap

[

https://issues.apache.org/jira/browse/HUDI-5487?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Danny Chen reassigned HUDI-5487:

Assignee: Danny Chen

> Reduce duplicate Logs in ExternalSpillableMap

> -

>

> Key: HUDI-5487

> URL: https://issues.apache.org/jira/browse/HUDI-5487

> Project: Apache Hudi

> Issue Type: Improvement

>Reporter: dzcxzl

>Assignee: Danny Chen

>Priority: Minor

> Labels: pull-request-available

> Fix For: 0.13.1

>

>


[hudi] branch master updated: [HUDI-5487] Reduce duplicate logs in ExternalSpillableMap (#7579)

This is an automated email from the ASF dual-hosted git repository.

danny0405 pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/hudi.git

The following commit(s) were added to refs/heads/master by this push:

new 1377143656a [HUDI-5487] Reduce duplicate logs in ExternalSpillableMap (#7579)

1377143656a is described below

commit 1377143656a447ddd6f884380c93be6fb5ecf459

Author: cxzl25

AuthorDate: Tue Jan 31 13:35:27 2023 +0800

[HUDI-5487] Reduce duplicate logs in ExternalSpillableMap (#7579)

---

.../apache/hudi/common/util/collection/ExternalSpillableMap.java | 9 ++---

1 file changed, 6 insertions(+), 3 deletions(-)

diff --git a/hudi-common/src/main/java/org/apache/hudi/common/util/collection/ExternalSpillableMap.java b/hudi-common/src/main/java/org/apache/hudi/common/util/collection/ExternalSpillableMap.java
index ee930e588d0..b540b204214 100644
--- a/hudi-common/src/main/java/org/apache/hudi/common/util/collection/ExternalSpillableMap.java
+++ b/hudi-common/src/main/java/org/apache/hudi/common/util/collection/ExternalSpillableMap.java

@@ -202,10 +202,13 @@ public class ExternalSpillableMap= maxInMemorySizeInBytes ||

inMemoryMap.size() % NUMBER_OF_RECORDS_TO_ESTIMATE_PAYLOAD_SIZE == 0) {

-      this.estimatedPayloadSize = (long) (this.estimatedPayloadSize * 0.9
-          + (keySizeEstimator.sizeEstimate(key) + valueSizeEstimator.sizeEstimate(value)) * 0.1);
+      long tmpEstimatedPayloadSize = (long) (this.estimatedPayloadSize * 0.9
+          + (keySizeEstimator.sizeEstimate(key) + valueSizeEstimator.sizeEstimate(value)) * 0.1);
+      if (this.estimatedPayloadSize != tmpEstimatedPayloadSize) {
+        LOG.info("Update Estimated Payload size to => " + this.estimatedPayloadSize);
+      }
+      this.estimatedPayloadSize = tmpEstimatedPayloadSize;
       this.currentInMemoryMapSize = this.inMemoryMap.size() * this.estimatedPayloadSize;
-      LOG.info("Update Estimated Payload size to => " + this.estimatedPayloadSize);
     }
     if (this.currentInMemoryMapSize < maxInMemorySizeInBytes || inMemoryMap.containsKey(key)) {
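A minimal standalone sketch of the idea behind this commit, assuming a simplified estimator rather than the real `ExternalSpillableMap` (the class `PayloadSizeEstimator` and its methods are hypothetical, and the re-estimation cadence based on `NUMBER_OF_RECORDS_TO_ESTIMATE_PAYLOAD_SIZE` is left out). The estimate is an exponential moving average with the same weighting as the diff (90% previous estimate, 10% newly sampled size), and a log line is emitted only when the truncated estimate actually changes. One difference is deliberate: the committed diff logs `this.estimatedPayloadSize` before it is reassigned, i.e. the previous estimate, whereas this sketch logs the new value.

```java
/**
 * Hypothetical, simplified stand-in for the payload-size estimation in
 * ExternalSpillableMap: an exponential moving average that only logs
 * when the (truncated) estimate actually changes.
 */
class PayloadSizeEstimator {
  private long estimatedPayloadSize;
  private int updateLogCount = 0;

  PayloadSizeEstimator(long initialEstimate) {
    this.estimatedPayloadSize = initialEstimate;
  }

  /** Folds one sampled record size into the estimate; returns true if the estimate changed. */
  boolean update(long sampledRecordSize) {
    // Same weighting as the diff: 90% previous estimate, 10% new sample.
    long newEstimate = (long) (estimatedPayloadSize * 0.9 + sampledRecordSize * 0.1);
    boolean changed = newEstimate != estimatedPayloadSize;
    if (changed) {
      // Log the new value, and only when it differs from the previous one.
      System.out.println("Update Estimated Payload size to => " + newEstimate);
      updateLogCount++;
    }
    estimatedPayloadSize = newEstimate;
    return changed;
  }

  long getEstimatedPayloadSize() {
    return estimatedPayloadSize;
  }

  int getUpdateLogCount() {
    return updateLogCount;
  }

  public static void main(String[] args) {
    PayloadSizeEstimator est = new PayloadSizeEstimator(1000);
    for (int i = 0; i < 1000; i++) {
      est.update(1000); // identical samples keep the estimate at 1000, so nothing is logged
    }
    est.update(2000); // 1000 * 0.9 + 2000 * 0.1 = 1100, so exactly one line is logged
    System.out.println("log lines emitted: " + est.getUpdateLogCount());
  }
}
```

Running `main` prints a single `Update Estimated Payload size to => 1100` line followed by `log lines emitted: 1`; logging on every re-estimation, as before the change, would have produced 1,001 lines for the same input.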

[GitHub] [hudi] danny0405 merged pull request #7579: [HUDI-5487] Reduce duplicate Logs in ExternalSpillableMap

danny0405 merged PR #7579: URL: https://github.com/apache/hudi/pull/7579 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] danny0405 commented on pull request #7647: [HUDI-5530] Fix WARNING during compile.

danny0405 commented on PR #7647: URL: https://github.com/apache/hudi/pull/7647#issuecomment-1409787529 cc @alexeykudinkin if you have any time for this?

[GitHub] [hudi] danny0405 commented on issue #7791: [SUPPORT] Don't see metadata folder in .hoodie folder when ingesting data use hudi kafka connector

danny0405 commented on issue #7791: URL: https://github.com/apache/hudi/issues/7791#issuecomment-1409780774 Can you check the `.hoodie/hoodie.properties` file so that we make sure the metadata table is enabled in the table config? Just to make sure there are no conflicts.
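One way to act on this suggestion is to inspect the table config programmatically. The Java sketch below is hypothetical: it assumes that `hoodie.properties` records the initialized metadata-table partitions as a comma-separated list under the key `hoodie.table.metadata.partitions` (for example `files,column_stats`). The key name and the `MetadataTableCheck` class are assumptions to verify against the Hudi version in use, and a missing `files` entry only suggests, rather than proves, that the metadata table was never initialized.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Arrays;
import java.util.Properties;

/**
 * Hypothetical helper: reports whether a hoodie.properties file lists the
 * "files" partition of the metadata table.
 */
class MetadataTableCheck {
  // ASSUMPTION: table-config key holding the initialized metadata partitions.
  static final String METADATA_PARTITIONS_KEY = "hoodie.table.metadata.partitions";

  static boolean hasFilesPartition(Reader hoodieProperties) {
    Properties props = new Properties();
    try {
      props.load(hoodieProperties); // standard java.util.Properties key=value parsing
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }
    // A missing key is treated as "no metadata partitions recorded".
    String partitions = props.getProperty(METADATA_PARTITIONS_KEY, "");
    return Arrays.stream(partitions.split(","))
        .map(String::trim)
        .anyMatch("files"::equals);
  }

  public static void main(String[] args) {
    // In practice, read <basePath>/.hoodie/hoodie.properties, e.g. with a java.io.FileReader.
    String sample = "hoodie.table.name=demo\nhoodie.table.metadata.partitions=files,column_stats\n";
    System.out.println(hasFilesPartition(new StringReader(sample)));
  }
}
```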

[jira] [Updated] (HUDI-5659) Support cleaning for archived files

[ https://issues.apache.org/jira/browse/HUDI-5659?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Danny Chen updated HUDI-5659:

-

Labels: hudi-on-call (was: )

> Support cleaning for archived files

> ---

>

> Key: HUDI-5659

> URL: https://issues.apache.org/jira/browse/HUDI-5659

> Project: Apache Hudi

> Issue Type: Improvement

> Components: writer-core

>Reporter: Danny Chen

>Priority: Major

> Labels: hudi-on-call

>

> Details come from the issue: https://github.com/apache/hudi/issues/7800

[GitHub] [hudi] danny0405 commented on issue #7800: "java.lang.OutOfMemoryError: Requested array size exceeds VM limit" while writing to Hudi COW table

danny0405 commented on issue #7800: URL: https://github.com/apache/hudi/issues/7800#issuecomment-1409773708 Thanks for the feedback @phani482, sorry to tell you that cleaning of archival files is not supported yet. I have created https://issues.apache.org/jira/browse/HUDI-5659 to track this. I also noticed that you use the `INSERT` operation, so which Spark stage did you perceive as slow?

[GitHub] [hudi] hudi-bot commented on pull request #7769: [HUDI-5633] Fixing performance regression in `HoodieSparkRecord`

hudi-bot commented on PR #7769: URL: https://github.com/apache/hudi/pull/7769#issuecomment-1409773643 ## CI report: * 9bfa20f45fcc675b79053bb8b4f379b09c6cd6c5 UNKNOWN * 325244765016f67034fd8f364942028fe217ecb5 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14788) * 24020a964671b35fb9aa7b86748771fd71512495 UNKNOWN Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build

[GitHub] [hudi] hudi-bot commented on pull request #7669: [HUDI-5553] Prevent partition(s) from being dropped if there are pending…

hudi-bot commented on PR #7669: URL: https://github.com/apache/hudi/pull/7669#issuecomment-1409773412 ## CI report: * c4ecd3d09de159fab46b6bcadc502cea3a76e4cb Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14770) * 6e1d03f8dd6c292959ee29c8592ca4340d2aca46 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=14796) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build

[GitHub] [hudi] nfarah86 commented on pull request #7549: [DOCS] improve spark quickstart, info about MT and async services