[GitHub] [hudi] codope commented on issue #6304: Hudi MultiTable Deltastreamer not updating glue catalog when new column added on Source

codope commented on issue #6304: URL: https://github.com/apache/hudi/issues/6304#issuecomment-1488046159 Closing due to inactivity. Have also tested the adding column scenario with glue sync for upcoming 0.12.3 release. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] codope closed issue #6304: Hudi MultiTable Deltastreamer not updating glue catalog when new column added on Source

codope closed issue #6304: Hudi MultiTable Deltastreamer not updating glue catalog when new column added on Source URL: https://github.com/apache/hudi/issues/6304 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] codope commented on issue #6283: [SUPPORT] No .marker files

codope commented on issue #6283: URL: https://github.com/apache/hudi/issues/6283#issuecomment-1488040027 Closing due to inactivity. Please reopen with steps to reproduce. The general flow works in master as well as 0.12.2 an d0.13.0. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] codope closed issue #6283: [SUPPORT] No .marker files

codope closed issue #6283: [SUPPORT] No .marker files URL: https://github.com/apache/hudi/issues/6283 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] voonhous commented on a diff in pull request #8307: [HUDI-5992] Fix (de)serialization for avro versions > 1.10.0

voonhous commented on code in PR #8307:

URL: https://github.com/apache/hudi/pull/8307#discussion_r1151467972

##

hudi-common/src/main/java/org/apache/hudi/avro/GenericAvroSerializer.java:

##

@@ -0,0 +1,148 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.avro;

+

+import com.esotericsoftware.kryo.Kryo;

+import com.esotericsoftware.kryo.Serializer;

+import com.esotericsoftware.kryo.io.Input;

+import com.esotericsoftware.kryo.io.Output;

+import org.apache.avro.Schema;

+import org.apache.avro.SchemaNormalization;

+import org.apache.avro.generic.GenericContainer;

+import org.apache.avro.generic.GenericDatumReader;

+import org.apache.avro.generic.GenericDatumWriter;

+import org.apache.avro.io.DatumReader;

+import org.apache.avro.io.DatumWriter;

+import org.apache.avro.io.Decoder;

+import org.apache.avro.io.DecoderFactory;

+import org.apache.avro.io.Encoder;

+import org.apache.avro.io.EncoderFactory;

+

+import java.io.IOException;

+import java.nio.charset.StandardCharsets;

+import java.util.HashMap;

+

+

+/**

+ * Custom serializer used for generic Avro containers.

+ *

+ * Heavily adapted from:

+ *

+ * https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/serializer/GenericAvroSerializer.scala";>GenericAvroSerializer.scala

+ *

+ * As {@link org.apache.hudi.common.util.SerializationUtils} is not shared

between threads and does not concern any

+ * shuffling operations, compression and decompression cache is omitted as

network IO is not a concern.

+ *

+ * Unlike Spark's implementation, the class and constructor is not initialized

with a predefined map of avro schemas.

+ * This is the case as schemas to read and write are not known beforehand.

+ *

+ * @param the subtype of [[GenericContainer]] handled by this serializer

+ */

+public class GenericAvroSerializer extends

Serializer {

+

+ // reuses the same datum reader/writer since the same schema will be used

many times

+ private final HashMap> writerCache = new HashMap<>();

+ private final HashMap> readerCache = new HashMap<>();

+

+ // fingerprinting is very expensive so this alleviates most of the work

+ private final HashMap fingerprintCache = new HashMap<>();

+ private final HashMap schemaCache = new HashMap<>();

+

+ private Long getFingerprint(Schema schema) {

+if (fingerprintCache.containsKey(schema)) {

+ return fingerprintCache.get(schema);

+} else {

+ Long fingerprint = SchemaNormalization.parsingFingerprint64(schema);

+ fingerprintCache.put(schema, fingerprint);

+ return fingerprint;

+}

+ }

+

+ private Schema getSchema(Long fingerprint, byte[] schemaBytes) {

+if (schemaCache.containsKey(fingerprint)) {

+ return schemaCache.get(fingerprint);

+} else {

+ String schema = new String(schemaBytes, StandardCharsets.UTF_8);

+ Schema parsedSchema = new Schema.Parser().parse(schema);

+ schemaCache.put(fingerprint, parsedSchema);

+ return parsedSchema;

+}

+ }

+

+ private DatumWriter getDatumWriter(Schema schema) {

+DatumWriter writer;

+if (writerCache.containsKey(schema)) {

+ writer = writerCache.get(schema);

+} else {

+ writer = new GenericDatumWriter<>(schema);

+ writerCache.put(schema, writer);

+}

+return writer;

+ }

+

+ private DatumReader getDatumReader(Schema schema) {

+DatumReader reader;

+if (readerCache.containsKey(schema)) {

+ reader = readerCache.get(schema);

+} else {

+ reader = new GenericDatumReader<>(schema);

+ readerCache.put(schema, reader);

+}

+return reader;

+ }

+

+ private void serializeDatum(D datum, Output output) throws IOException {

+Encoder encoder = EncoderFactory.get().directBinaryEncoder(output, null);

+Schema schema = datum.getSchema();

+Long fingerprint = this.getFingerprint(schema);

+byte[] schemaBytes = schema.toString().getBytes(StandardCharsets.UTF_8);

Review Comment:

In that case, I'll change the cache as such?

```java

private final HashMap fingerprintCache = new HashMap<>();

private final HashMap schemaCache = new HashMap<>();

```

--

This is an automated message from the Apache Git Service.

To respond t

[jira] [Created] (HUDI-5997) Support DFS Schema Provider with S3/GCS EventsHoodieIncrSource

Sagar Sumit created HUDI-5997: - Summary: Support DFS Schema Provider with S3/GCS EventsHoodieIncrSource Key: HUDI-5997 URL: https://issues.apache.org/jira/browse/HUDI-5997 Project: Apache Hudi Issue Type: Improvement Components: deltastreamer Reporter: Sagar Sumit Fix For: 0.14.0 See for more details -- This message was sent by Atlassian Jira (v8.20.10#820010)

[GitHub] [hudi] codope commented on issue #8211: [SUPPORT] DFS Schema Provider not working with S3EventsHoodieIncrSource

codope commented on issue #8211: URL: https://github.com/apache/hudi/issues/8211#issuecomment-1488037163 The incremental source infers schema by simply loading the dataset from the source table. What you're proposing is a good enhancement. Would you like to take it up? HUDI-5997 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] voonhous commented on a diff in pull request #8307: [HUDI-5992] Fix (de)serialization for avro versions > 1.10.0

voonhous commented on code in PR #8307:

URL: https://github.com/apache/hudi/pull/8307#discussion_r1151467972

##

hudi-common/src/main/java/org/apache/hudi/avro/GenericAvroSerializer.java:

##

@@ -0,0 +1,148 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.avro;

+

+import com.esotericsoftware.kryo.Kryo;

+import com.esotericsoftware.kryo.Serializer;

+import com.esotericsoftware.kryo.io.Input;

+import com.esotericsoftware.kryo.io.Output;

+import org.apache.avro.Schema;

+import org.apache.avro.SchemaNormalization;

+import org.apache.avro.generic.GenericContainer;

+import org.apache.avro.generic.GenericDatumReader;

+import org.apache.avro.generic.GenericDatumWriter;

+import org.apache.avro.io.DatumReader;

+import org.apache.avro.io.DatumWriter;

+import org.apache.avro.io.Decoder;

+import org.apache.avro.io.DecoderFactory;

+import org.apache.avro.io.Encoder;

+import org.apache.avro.io.EncoderFactory;

+

+import java.io.IOException;

+import java.nio.charset.StandardCharsets;

+import java.util.HashMap;

+

+

+/**

+ * Custom serializer used for generic Avro containers.

+ *

+ * Heavily adapted from:

+ *

+ * https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/serializer/GenericAvroSerializer.scala";>GenericAvroSerializer.scala

+ *

+ * As {@link org.apache.hudi.common.util.SerializationUtils} is not shared

between threads and does not concern any

+ * shuffling operations, compression and decompression cache is omitted as

network IO is not a concern.

+ *

+ * Unlike Spark's implementation, the class and constructor is not initialized

with a predefined map of avro schemas.

+ * This is the case as schemas to read and write are not known beforehand.

+ *

+ * @param the subtype of [[GenericContainer]] handled by this serializer

+ */

+public class GenericAvroSerializer extends

Serializer {

+

+ // reuses the same datum reader/writer since the same schema will be used

many times

+ private final HashMap> writerCache = new HashMap<>();

+ private final HashMap> readerCache = new HashMap<>();

+

+ // fingerprinting is very expensive so this alleviates most of the work

+ private final HashMap fingerprintCache = new HashMap<>();

+ private final HashMap schemaCache = new HashMap<>();

+

+ private Long getFingerprint(Schema schema) {

+if (fingerprintCache.containsKey(schema)) {

+ return fingerprintCache.get(schema);

+} else {

+ Long fingerprint = SchemaNormalization.parsingFingerprint64(schema);

+ fingerprintCache.put(schema, fingerprint);

+ return fingerprint;

+}

+ }

+

+ private Schema getSchema(Long fingerprint, byte[] schemaBytes) {

+if (schemaCache.containsKey(fingerprint)) {

+ return schemaCache.get(fingerprint);

+} else {

+ String schema = new String(schemaBytes, StandardCharsets.UTF_8);

+ Schema parsedSchema = new Schema.Parser().parse(schema);

+ schemaCache.put(fingerprint, parsedSchema);

+ return parsedSchema;

+}

+ }

+

+ private DatumWriter getDatumWriter(Schema schema) {

+DatumWriter writer;

+if (writerCache.containsKey(schema)) {

+ writer = writerCache.get(schema);

+} else {

+ writer = new GenericDatumWriter<>(schema);

+ writerCache.put(schema, writer);

+}

+return writer;

+ }

+

+ private DatumReader getDatumReader(Schema schema) {

+DatumReader reader;

+if (readerCache.containsKey(schema)) {

+ reader = readerCache.get(schema);

+} else {

+ reader = new GenericDatumReader<>(schema);

+ readerCache.put(schema, reader);

+}

+return reader;

+ }

+

+ private void serializeDatum(D datum, Output output) throws IOException {

+Encoder encoder = EncoderFactory.get().directBinaryEncoder(output, null);

+Schema schema = datum.getSchema();

+Long fingerprint = this.getFingerprint(schema);

+byte[] schemaBytes = schema.toString().getBytes(StandardCharsets.UTF_8);

Review Comment:

In that case, I'll change the cache as such?

```

private final HashMap fingerprintCache = new HashMap<>();

private final HashMap schemaCache = new HashMap<>();

```

--

This is an automated message from the Apache Git Service.

To respond to th

[GitHub] [hudi] danny0405 commented on issue #8087: [SUPPORT] split_reader don't checkpoint before consuming all splits

danny0405 commented on issue #8087: URL: https://github.com/apache/hudi/issues/8087#issuecomment-1488035083 > Can i create a pr then you review it Sure, but let's test the PR in production for at least one week, we also need to test the failover/restart for data completeness. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] danny0405 commented on issue #8267: [SUPPORT] Why some delta commit logs files are not converted to parquet ?

danny0405 commented on issue #8267: URL: https://github.com/apache/hudi/issues/8267#issuecomment-1488033858 > there is actually no filedId parquet file Confused by your words, can you re-organize it a little? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] codope commented on issue #8222: [SUPPORT] Incremental read with MOR does not work as COW

codope commented on issue #8222: URL: https://github.com/apache/hudi/issues/8222#issuecomment-1488033021 Maybe https://github.com/apache/hudi/pull/8299 fixes this issue @parisni -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] danny0405 commented on a diff in pull request #8307: [HUDI-5992] Fix (de)serialization for avro versions > 1.10.0

danny0405 commented on code in PR #8307:

URL: https://github.com/apache/hudi/pull/8307#discussion_r1151463202

##

hudi-common/src/main/java/org/apache/hudi/avro/GenericAvroSerializer.java:

##

@@ -0,0 +1,148 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.avro;

+

+import com.esotericsoftware.kryo.Kryo;

+import com.esotericsoftware.kryo.Serializer;

+import com.esotericsoftware.kryo.io.Input;

+import com.esotericsoftware.kryo.io.Output;

+import org.apache.avro.Schema;

+import org.apache.avro.SchemaNormalization;

+import org.apache.avro.generic.GenericContainer;

+import org.apache.avro.generic.GenericDatumReader;

+import org.apache.avro.generic.GenericDatumWriter;

+import org.apache.avro.io.DatumReader;

+import org.apache.avro.io.DatumWriter;

+import org.apache.avro.io.Decoder;

+import org.apache.avro.io.DecoderFactory;

+import org.apache.avro.io.Encoder;

+import org.apache.avro.io.EncoderFactory;

+

+import java.io.IOException;

+import java.nio.charset.StandardCharsets;

+import java.util.HashMap;

+

+

+/**

+ * Custom serializer used for generic Avro containers.

+ *

+ * Heavily adapted from:

+ *

+ * https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/serializer/GenericAvroSerializer.scala";>GenericAvroSerializer.scala

+ *

+ * As {@link org.apache.hudi.common.util.SerializationUtils} is not shared

between threads and does not concern any

+ * shuffling operations, compression and decompression cache is omitted as

network IO is not a concern.

+ *

+ * Unlike Spark's implementation, the class and constructor is not initialized

with a predefined map of avro schemas.

+ * This is the case as schemas to read and write are not known beforehand.

+ *

+ * @param the subtype of [[GenericContainer]] handled by this serializer

+ */

+public class GenericAvroSerializer extends

Serializer {

+

+ // reuses the same datum reader/writer since the same schema will be used

many times

+ private final HashMap> writerCache = new HashMap<>();

+ private final HashMap> readerCache = new HashMap<>();

+

+ // fingerprinting is very expensive so this alleviates most of the work

+ private final HashMap fingerprintCache = new HashMap<>();

+ private final HashMap schemaCache = new HashMap<>();

+

+ private Long getFingerprint(Schema schema) {

+if (fingerprintCache.containsKey(schema)) {

+ return fingerprintCache.get(schema);

+} else {

+ Long fingerprint = SchemaNormalization.parsingFingerprint64(schema);

+ fingerprintCache.put(schema, fingerprint);

+ return fingerprint;

+}

+ }

+

+ private Schema getSchema(Long fingerprint, byte[] schemaBytes) {

+if (schemaCache.containsKey(fingerprint)) {

+ return schemaCache.get(fingerprint);

+} else {

+ String schema = new String(schemaBytes, StandardCharsets.UTF_8);

+ Schema parsedSchema = new Schema.Parser().parse(schema);

+ schemaCache.put(fingerprint, parsedSchema);

+ return parsedSchema;

+}

+ }

+

+ private DatumWriter getDatumWriter(Schema schema) {

+DatumWriter writer;

+if (writerCache.containsKey(schema)) {

+ writer = writerCache.get(schema);

+} else {

+ writer = new GenericDatumWriter<>(schema);

+ writerCache.put(schema, writer);

+}

+return writer;

+ }

+

+ private DatumReader getDatumReader(Schema schema) {

+DatumReader reader;

+if (readerCache.containsKey(schema)) {

+ reader = readerCache.get(schema);

+} else {

+ reader = new GenericDatumReader<>(schema);

+ readerCache.put(schema, reader);

+}

+return reader;

+ }

+

+ private void serializeDatum(D datum, Output output) throws IOException {

+Encoder encoder = EncoderFactory.get().directBinaryEncoder(output, null);

+Schema schema = datum.getSchema();

+Long fingerprint = this.getFingerprint(schema);

+byte[] schemaBytes = schema.toString().getBytes(StandardCharsets.UTF_8);

Review Comment:

You are right, we can use the schema bytes as the cache key. But be caution

of the `ByteBuffer` equals impls.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to th

[GitHub] [hudi] nsivabalan commented on a diff in pull request #8107: [HUDI-5514] Adding auto generation of record keys support to Hudi/Spark

nsivabalan commented on code in PR #8107:

URL: https://github.com/apache/hudi/pull/8107#discussion_r1151448306

##

hudi-common/src/main/java/org/apache/hudi/common/table/HoodieTableConfig.java:

##

@@ -260,6 +260,18 @@ public class HoodieTableConfig extends HoodieConfig {

.sinceVersion("0.13.0")

.withDocumentation("The metadata of secondary indexes");

+ public static final ConfigProperty AUTO_GENERATE_RECORD_KEYS =

ConfigProperty

+ .key("hoodie.table.auto.generate.record.keys")

+ .defaultValue("false")

+ .withDocumentation("Enables automatic generation of the record-keys in

cases when dataset bears "

Review Comment:

yes. @yihua had some tracking ticket for this. Ethan: I could not locate

one. can you share the jira link.

##

hudi-spark-datasource/hudi-spark-common/src/main/scala/org/apache/hudi/AutoRecordKeyGenerationUtils.scala:

##

@@ -0,0 +1,103 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing,

+ * software distributed under the License is distributed on an

+ * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+ * KIND, either express or implied. See the License for the

+ * specific language governing permissions and limitations

+ * under the License.

+ */

+

+package org.apache.hudi

+

+import org.apache.avro.generic.GenericRecord

+import org.apache.hudi.DataSourceWriteOptions.INSERT_DROP_DUPS

+import org.apache.hudi.common.config.HoodieConfig

+import org.apache.hudi.common.model.{HoodieRecord, WriteOperationType}

+import org.apache.hudi.common.table.HoodieTableConfig

+import org.apache.hudi.config.HoodieWriteConfig

+import org.apache.hudi.exception.HoodieException

+import org.apache.spark.TaskContext

+

+object AutoRecordKeyGenerationUtils {

+

+ // supported operation types when auto generation of record keys is enabled.

+ val supportedOperations: Set[String] =

+Set(WriteOperationType.INSERT, WriteOperationType.BULK_INSERT,

WriteOperationType.DELETE,

+ WriteOperationType.INSERT_OVERWRITE,

WriteOperationType.INSERT_OVERWRITE_TABLE,

+ WriteOperationType.DELETE_PARTITION).map(_.name())

Review Comment:

yeah. we can't generate for an upsert. its only insert or bulk_insert for

spark-datasource writes. but w/ spark-sql, we might want to support MIT,

updates, deletes. so, will fix this accordingly.

##

hudi-client/hudi-client-common/src/main/java/org/apache/hudi/keygen/SimpleAvroKeyGenerator.java:

##

@@ -29,24 +31,29 @@

public class SimpleAvroKeyGenerator extends BaseKeyGenerator {

public SimpleAvroKeyGenerator(TypedProperties props) {

-this(props,

props.getString(KeyGeneratorOptions.RECORDKEY_FIELD_NAME.key()),

+this(props,

Option.ofNullable(props.getString(KeyGeneratorOptions.RECORDKEY_FIELD_NAME.key(),

null)),

props.getString(KeyGeneratorOptions.PARTITIONPATH_FIELD_NAME.key()));

}

SimpleAvroKeyGenerator(TypedProperties props, String partitionPathField) {

-this(props, null, partitionPathField);

+this(props, Option.empty(), partitionPathField);

}

- SimpleAvroKeyGenerator(TypedProperties props, String recordKeyField, String

partitionPathField) {

+ SimpleAvroKeyGenerator(TypedProperties props, Option recordKeyField,

String partitionPathField) {

Review Comment:

we are good. everywhere we use the constructor w/ just TypedProps

##

hudi-client/hudi-spark-client/src/main/scala/org/apache/hudi/HoodieDatasetBulkInsertHelper.scala:

##

@@ -82,9 +85,19 @@ object HoodieDatasetBulkInsertHelper

val keyGenerator =

ReflectionUtils.loadClass(keyGeneratorClassName, new

TypedProperties(config.getProps))

.asInstanceOf[SparkKeyGeneratorInterface]

+ val partitionId = TaskContext.getPartitionId()

Review Comment:

yeah. if entire source RDD is not re-computed, we should be ok.

##

hudi-spark-datasource/hudi-spark-common/src/main/scala/org/apache/hudi/AutoRecordKeyGenerationUtils.scala:

##

@@ -0,0 +1,103 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LI

[GitHub] [hudi] codope commented on issue #7452: [SUPPORT]SparkSQL can not read the latest data(snapshot mode) after write by flink

codope commented on issue #7452: URL: https://github.com/apache/hudi/issues/7452#issuecomment-1488030005 Closing it due to inactivity and not being a code issue. The workaround is mentioned above. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #8307: [HUDI-5992] Fix (de)serialization for avro versions > 1.10.0

hudi-bot commented on PR #8307: URL: https://github.com/apache/hudi/pull/8307#issuecomment-1488030487 ## CI report: * d8521565c1a8a4e215f779c525b7d123b44b94b3 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=15965) Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=15966) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] voonhous commented on pull request #8307: [HUDI-5992] Fix (de)serialization for avro versions > 1.10.0

voonhous commented on PR #8307: URL: https://github.com/apache/hudi/pull/8307#issuecomment-1488030287 @hudi-bot run azure -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] codope closed issue #7452: [SUPPORT]SparkSQL can not read the latest data(snapshot mode) after write by flink

codope closed issue #7452: [SUPPORT]SparkSQL can not read the latest data(snapshot mode) after write by flink URL: https://github.com/apache/hudi/issues/7452 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] 1032851561 commented on issue #8087: [SUPPORT] split_reader don't checkpoint before consuming all splits

1032851561 commented on issue #8087: URL: https://github.com/apache/hudi/issues/8087#issuecomment-1488028533 > Should be fine, we need to test it in practice about the performace and whether it resolves the problem that ckp takes too long time to be timedout. I have tested my case, and it looks good. Can i create a pr then you review it?  -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] codope commented on issue #7531: [SUPPORT] table comments not fully supported

codope commented on issue #7531: URL: https://github.com/apache/hudi/issues/7531#issuecomment-1488020421 Tracked in HUDI-5533 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #8307: [HUDI-5992] Fix (de)serialization for avro versions > 1.10.0

hudi-bot commented on PR #8307: URL: https://github.com/apache/hudi/pull/8307#issuecomment-1488016726 ## CI report: * d8521565c1a8a4e215f779c525b7d123b44b94b3 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=15965) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Resolved] (HUDI-5976) Add fs in the constructor of HoodieAvroHFileReader to avoid potential NPE

[ https://issues.apache.org/jira/browse/HUDI-5976?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Sagar Sumit resolved HUDI-5976. --- > Add fs in the constructor of HoodieAvroHFileReader to avoid potential NPE > - > > Key: HUDI-5976 > URL: https://issues.apache.org/jira/browse/HUDI-5976 > Project: Apache Hudi > Issue Type: Bug >Reporter: Sagar Sumit >Priority: Major > Labels: pull-request-available > > See https://github.com/apache/hudi/issues/8257 -- This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Updated] (HUDI-5976) Add fs in the constructor of HoodieAvroHFileReader to avoid potential NPE

[ https://issues.apache.org/jira/browse/HUDI-5976?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Sagar Sumit updated HUDI-5976: -- Fix Version/s: 0.14.0 > Add fs in the constructor of HoodieAvroHFileReader to avoid potential NPE > - > > Key: HUDI-5976 > URL: https://issues.apache.org/jira/browse/HUDI-5976 > Project: Apache Hudi > Issue Type: Bug >Reporter: Sagar Sumit >Priority: Major > Labels: pull-request-available > Fix For: 0.14.0 > > > See https://github.com/apache/hudi/issues/8257 -- This message was sent by Atlassian Jira (v8.20.10#820010)

[hudi] branch master updated (7243393c688 -> 21f83594a9c)

This is an automated email from the ASF dual-hosted git repository. codope pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/hudi.git from 7243393c688 [HUDI-5952] Fix NPE when use kafka callback (#8227) add 21f83594a9c [HUDI-5976] Add fs in the constructor of HoodieAvroHFileReader (#8277) No new revisions were added by this update. Summary of changes: .../src/main/java/org/apache/hudi/common/fs/FSUtils.java | 8 .../hudi/common/table/log/block/HoodieHFileDataBlock.java | 10 +- .../hudi/common/table/log/block/HoodieParquetDataBlock.java| 6 ++ 3 files changed, 15 insertions(+), 9 deletions(-)

[GitHub] [hudi] codope merged pull request #8277: [HUDI-5976] Add fs in the constructor of HoodieAvroHFileReader

codope merged PR #8277: URL: https://github.com/apache/hudi/pull/8277 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] nsivabalan commented on a diff in pull request #8107: [HUDI-5514] Adding auto generation of record keys support to Hudi/Spark

nsivabalan commented on code in PR #8107:

URL: https://github.com/apache/hudi/pull/8107#discussion_r1151439496

##

hudi-client/hudi-client-common/src/main/java/org/apache/hudi/keygen/SimpleAvroKeyGenerator.java:

##

@@ -29,24 +31,29 @@

public class SimpleAvroKeyGenerator extends BaseKeyGenerator {

public SimpleAvroKeyGenerator(TypedProperties props) {

-this(props,

props.getString(KeyGeneratorOptions.RECORDKEY_FIELD_NAME.key()),

+this(props,

Option.ofNullable(props.getString(KeyGeneratorOptions.RECORDKEY_FIELD_NAME.key(),

null)),

Review Comment:

we use TypedProperties and got getString, we call checkKey() which might

throw exception if key is not found

https://github.com/apache/hudi/blob/7243393c6881802803c0233cbac42daf1271afb3/hudi-common/src/main/java/org/apache/hudi/common/config/TypedProperties.java#L72

and hence.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Created] (HUDI-5996) We should verify the consistency of bucket num at job startup.

HunterXHunter created HUDI-5996: --- Summary: We should verify the consistency of bucket num at job startup. Key: HUDI-5996 URL: https://issues.apache.org/jira/browse/HUDI-5996 Project: Apache Hudi Issue Type: Improvement Reporter: HunterXHunter Users may sometimes modify the bucket num, and the inconsistency of the bucket num will lead to data duplication and make it unavailability. Maybe there are some other parameters that should also be checked before the job starts -- This message was sent by Atlassian Jira (v8.20.10#820010)

[GitHub] [hudi] nsivabalan commented on a diff in pull request #8107: [HUDI-5514] Adding auto generation of record keys support to Hudi/Spark

nsivabalan commented on code in PR #8107:

URL: https://github.com/apache/hudi/pull/8107#discussion_r1151437700

##

hudi-client/hudi-client-common/src/main/java/org/apache/hudi/keygen/NonpartitionedAvroKeyGenerator.java:

##

@@ -36,8 +39,9 @@ public class NonpartitionedAvroKeyGenerator extends

BaseKeyGenerator {

public NonpartitionedAvroKeyGenerator(TypedProperties props) {

super(props);

-this.recordKeyFields =

Arrays.stream(props.getString(KeyGeneratorOptions.RECORDKEY_FIELD_NAME.key())

-.split(",")).map(String::trim).filter(s ->

!s.isEmpty()).collect(Collectors.toList());

+this.recordKeyFields = autoGenerateRecordKeys() ? Collections.emptyList() :

Review Comment:

we have 10+ classes to fix on this. wondering if we can make it in a

separate patch https://issues.apache.org/jira/browse/HUDI-5995

dont want to add more changes to this patch.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [hudi] codope closed issue #8261: [SUPPORT] How to reduce hoodie commit latency

codope closed issue #8261: [SUPPORT] How to reduce hoodie commit latency URL: https://github.com/apache/hudi/issues/8261 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Created] (HUDI-5995) Refactor key generators to set record keys in base class

sivabalan narayanan created HUDI-5995: - Summary: Refactor key generators to set record keys in base class Key: HUDI-5995 URL: https://issues.apache.org/jira/browse/HUDI-5995 Project: Apache Hudi Issue Type: Improvement Reporter: sivabalan narayanan Refactor key generators to set record keys in base class (BaseKeyGenerator) rather than in each individual sub classes. -- This message was sent by Atlassian Jira (v8.20.10#820010)

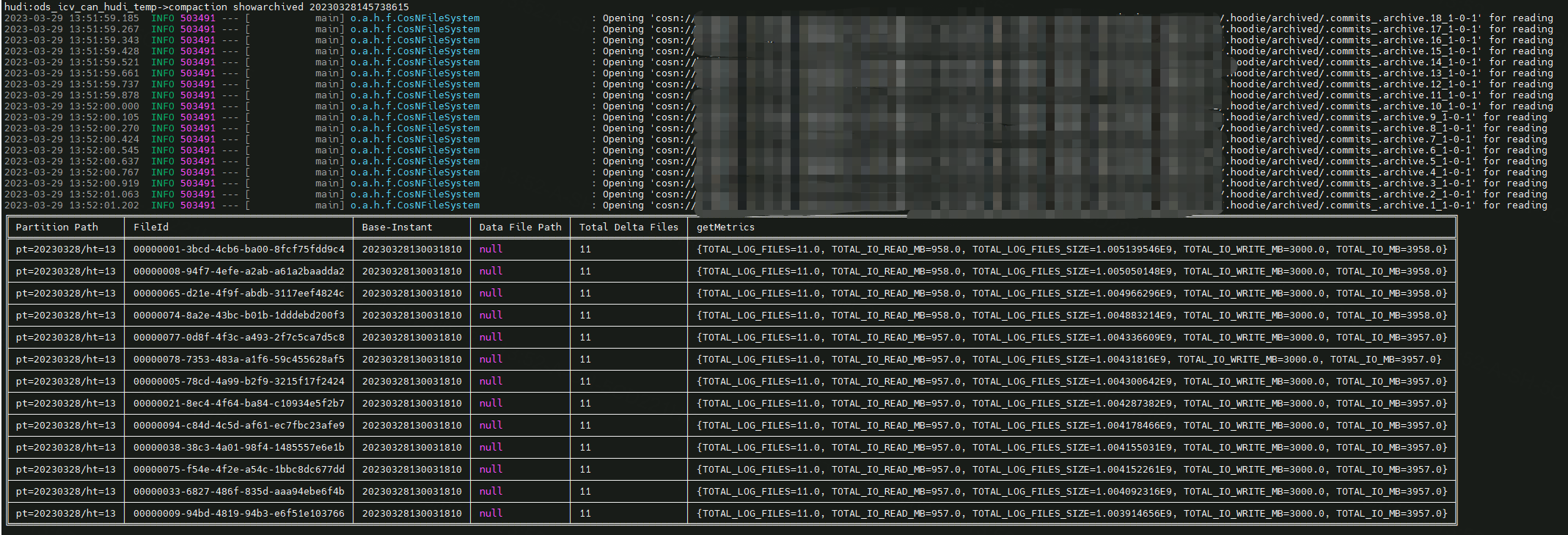

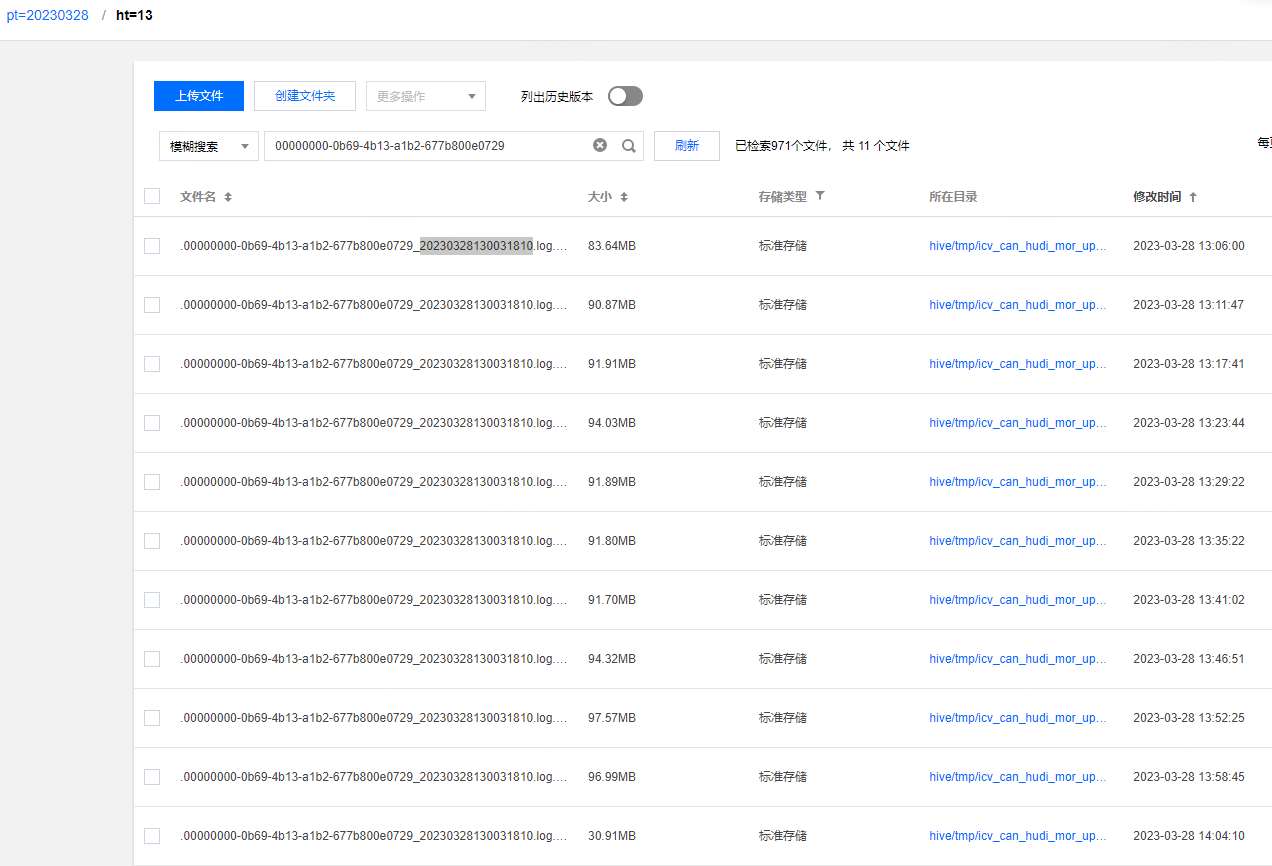

[GitHub] [hudi] DavidZ1 commented on issue #8267: [SUPPORT] Why some delta commit logs files are not converted to parquet ?

DavidZ1 commented on issue #8267: URL: https://github.com/apache/hudi/issues/8267#issuecomment-1487993254 Yes, we checked the Compaction archive file and found that the corresponding commit has completed the Compaction, but there is actually no filedId parquet file.    -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] nsivabalan commented on a diff in pull request #8304: [HUDI-5993] Connection leak for lock provider

nsivabalan commented on code in PR #8304:

URL: https://github.com/apache/hudi/pull/8304#discussion_r1151428902

##

hudi-client/hudi-client-common/src/main/java/org/apache/hudi/client/transaction/lock/LockManager.java:

##

@@ -111,6 +111,7 @@ public void unlock() {

if

(writeConfig.getWriteConcurrencyMode().supportsOptimisticConcurrencyControl()) {

getLockProvider().unlock();

metrics.updateLockHeldTimerMetrics();

+ close();

Review Comment:

@vinothchandar : yes you are right. for streaming ingestion, we keep

re-using the same write client and hence.

##

hudi-client/hudi-client-common/src/test/java/org/apache/hudi/client/transaction/TestLockManager.java:

##

@@ -0,0 +1,119 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.client.transaction;

+

+import org.apache.hudi.client.transaction.lock.LockManager;

+import org.apache.hudi.client.transaction.lock.ZookeeperBasedLockProvider;

+import org.apache.hudi.common.model.HoodieFailedWritesCleaningPolicy;

+import org.apache.hudi.common.model.WriteConcurrencyMode;

+import org.apache.hudi.common.testutils.HoodieCommonTestHarness;

+import org.apache.hudi.config.HoodieCleanConfig;

+import org.apache.hudi.config.HoodieLockConfig;

+import org.apache.hudi.config.HoodieWriteConfig;

+

+import org.apache.curator.test.TestingServer;

+import org.apache.log4j.LogManager;

+import org.apache.log4j.Logger;

+import org.junit.jupiter.api.AfterAll;

+import org.junit.jupiter.api.BeforeAll;

+import org.junit.jupiter.api.BeforeEach;

+import org.junit.jupiter.api.Test;

+

+import java.io.IOException;

+import java.lang.reflect.Field;

+

+import static org.junit.jupiter.api.Assertions.assertDoesNotThrow;

+import static org.junit.jupiter.api.Assertions.assertNotNull;

+import static org.junit.jupiter.api.Assertions.assertNull;

+

+public class TestLockManager extends HoodieCommonTestHarness {

+

+ private static final Logger LOG =

LogManager.getLogger(TestLockManager.class);

+

+ private static TestingServer server;

+ private static String zk_basePath = "/hudi/test/lock";

+ private static String key = "table1";

+

+ HoodieWriteConfig writeConfig;

+ LockManager lockManager;

+

+ @BeforeAll

+ public static void setup() {

+while (server == null) {

+ try {

+server = new TestingServer();

+ } catch (Exception e) {

+LOG.error("Getting bind exception - retrying to allocate server");

+server = null;

+ }

+}

+ }

+

+ @AfterAll

+ public static void tearDown() throws IOException {

+if (server != null) {

+ server.close();

+}

+ }

+

+ private HoodieWriteConfig getWriteConfig() {

+return HoodieWriteConfig.newBuilder()

+.withPath(basePath)

+.withCleanConfig(HoodieCleanConfig.newBuilder()

+

.withFailedWritesCleaningPolicy(HoodieFailedWritesCleaningPolicy.LAZY)

+.build())

+

.withWriteConcurrencyMode(WriteConcurrencyMode.OPTIMISTIC_CONCURRENCY_CONTROL)

+.withLockConfig(HoodieLockConfig.newBuilder()

+.withLockProvider(ZookeeperBasedLockProvider.class)

+.withZkBasePath(zk_basePath)

+.withZkLockKey(key)

+.withZkQuorum(server.getConnectString())

+.build())

+.build();

+ }

+

+ @BeforeEach

+ private void init() throws IOException {

+initPath();

+initMetaClient();

+this.writeConfig = getWriteConfig();

+this.lockManager = new LockManager(this.writeConfig,

this.metaClient.getFs());

+ }

+

+ @Test

+ public void testLockAndUnlock() throws NoSuchFieldException,

IllegalAccessException{

+

+Field lockProvider =

lockManager.getClass().getDeclaredField("lockProvider");

+lockProvider.setAccessible(true);

+

+assertDoesNotThrow(() -> {

+ lockManager.lock();

+});

+

+assertNotNull(lockProvider.get(lockManager));

+

+assertDoesNotThrow(() -> {

+ lockManager.unlock();

Review Comment:

+1

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org

For queries about this

[GitHub] [hudi] nsivabalan commented on pull request #8304: [HUDI-5993] Connection leak for lock provider

nsivabalan commented on PR #8304: URL: https://github.com/apache/hudi/pull/8304#issuecomment-1487992098 LGTM. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] voonhous commented on a diff in pull request #8307: [HUDI-5992] Fix (de)serialization for avro versions > 1.10.0

voonhous commented on code in PR #8307:

URL: https://github.com/apache/hudi/pull/8307#discussion_r1151405975

##

hudi-common/src/main/java/org/apache/hudi/avro/GenericAvroSerializer.java:

##

@@ -0,0 +1,148 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.avro;

+

+import com.esotericsoftware.kryo.Kryo;

+import com.esotericsoftware.kryo.Serializer;

+import com.esotericsoftware.kryo.io.Input;

+import com.esotericsoftware.kryo.io.Output;

+import org.apache.avro.Schema;

+import org.apache.avro.SchemaNormalization;

+import org.apache.avro.generic.GenericContainer;

+import org.apache.avro.generic.GenericDatumReader;

+import org.apache.avro.generic.GenericDatumWriter;

+import org.apache.avro.io.DatumReader;

+import org.apache.avro.io.DatumWriter;

+import org.apache.avro.io.Decoder;

+import org.apache.avro.io.DecoderFactory;

+import org.apache.avro.io.Encoder;

+import org.apache.avro.io.EncoderFactory;

+

+import java.io.IOException;

+import java.nio.charset.StandardCharsets;

+import java.util.HashMap;

+

+

+/**

+ * Custom serializer used for generic Avro containers.

+ *

+ * Heavily adapted from:

+ *

+ * https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/serializer/GenericAvroSerializer.scala";>GenericAvroSerializer.scala

+ *

+ * As {@link org.apache.hudi.common.util.SerializationUtils} is not shared

between threads and does not concern any

+ * shuffling operations, compression and decompression cache is omitted as

network IO is not a concern.

+ *

+ * Unlike Spark's implementation, the class and constructor is not initialized

with a predefined map of avro schemas.

+ * This is the case as schemas to read and write are not known beforehand.

+ *

+ * @param the subtype of [[GenericContainer]] handled by this serializer

+ */

+public class GenericAvroSerializer extends

Serializer {

+

+ // reuses the same datum reader/writer since the same schema will be used

many times

+ private final HashMap> writerCache = new HashMap<>();

+ private final HashMap> readerCache = new HashMap<>();

+

+ // fingerprinting is very expensive so this alleviates most of the work

+ private final HashMap fingerprintCache = new HashMap<>();

+ private final HashMap schemaCache = new HashMap<>();

+

+ private Long getFingerprint(Schema schema) {

+if (fingerprintCache.containsKey(schema)) {

+ return fingerprintCache.get(schema);

+} else {

+ Long fingerprint = SchemaNormalization.parsingFingerprint64(schema);

+ fingerprintCache.put(schema, fingerprint);

+ return fingerprint;

+}

+ }

+

+ private Schema getSchema(Long fingerprint, byte[] schemaBytes) {

+if (schemaCache.containsKey(fingerprint)) {

+ return schemaCache.get(fingerprint);

+} else {

+ String schema = new String(schemaBytes, StandardCharsets.UTF_8);

+ Schema parsedSchema = new Schema.Parser().parse(schema);

+ schemaCache.put(fingerprint, parsedSchema);

+ return parsedSchema;

+}

+ }

+

+ private DatumWriter getDatumWriter(Schema schema) {

+DatumWriter writer;

+if (writerCache.containsKey(schema)) {

+ writer = writerCache.get(schema);

+} else {

+ writer = new GenericDatumWriter<>(schema);

+ writerCache.put(schema, writer);

+}

+return writer;

+ }

+

+ private DatumReader getDatumReader(Schema schema) {

+DatumReader reader;

+if (readerCache.containsKey(schema)) {

+ reader = readerCache.get(schema);

+} else {

+ reader = new GenericDatumReader<>(schema);

+ readerCache.put(schema, reader);

+}

+return reader;

+ }

+

+ private void serializeDatum(D datum, Output output) throws IOException {

+Encoder encoder = EncoderFactory.get().directBinaryEncoder(output, null);

+Schema schema = datum.getSchema();

+Long fingerprint = this.getFingerprint(schema);

+byte[] schemaBytes = schema.toString().getBytes(StandardCharsets.UTF_8);

Review Comment:

> When fingleprint is disabled, the schema is decoded directly from the

compressee bytes.

Yeap. In this PR, our `schemaCache` serves the purpose of the

`decompressCache`, which is the cache used in the `decompress` function.

If you look at Spark

[GitHub] [hudi] voonhous commented on a diff in pull request #8307: [HUDI-5992] Fix (de)serialization for avro versions > 1.10.0

voonhous commented on code in PR #8307:

URL: https://github.com/apache/hudi/pull/8307#discussion_r1151405975

##

hudi-common/src/main/java/org/apache/hudi/avro/GenericAvroSerializer.java:

##

@@ -0,0 +1,148 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.avro;

+

+import com.esotericsoftware.kryo.Kryo;

+import com.esotericsoftware.kryo.Serializer;

+import com.esotericsoftware.kryo.io.Input;

+import com.esotericsoftware.kryo.io.Output;

+import org.apache.avro.Schema;

+import org.apache.avro.SchemaNormalization;

+import org.apache.avro.generic.GenericContainer;

+import org.apache.avro.generic.GenericDatumReader;

+import org.apache.avro.generic.GenericDatumWriter;

+import org.apache.avro.io.DatumReader;

+import org.apache.avro.io.DatumWriter;

+import org.apache.avro.io.Decoder;

+import org.apache.avro.io.DecoderFactory;

+import org.apache.avro.io.Encoder;

+import org.apache.avro.io.EncoderFactory;

+

+import java.io.IOException;

+import java.nio.charset.StandardCharsets;

+import java.util.HashMap;

+

+

+/**

+ * Custom serializer used for generic Avro containers.

+ *

+ * Heavily adapted from:

+ *

+ * https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/serializer/GenericAvroSerializer.scala";>GenericAvroSerializer.scala

+ *

+ * As {@link org.apache.hudi.common.util.SerializationUtils} is not shared

between threads and does not concern any

+ * shuffling operations, compression and decompression cache is omitted as

network IO is not a concern.

+ *

+ * Unlike Spark's implementation, the class and constructor is not initialized

with a predefined map of avro schemas.

+ * This is the case as schemas to read and write are not known beforehand.

+ *

+ * @param the subtype of [[GenericContainer]] handled by this serializer

+ */

+public class GenericAvroSerializer extends

Serializer {

+

+ // reuses the same datum reader/writer since the same schema will be used

many times

+ private final HashMap> writerCache = new HashMap<>();

+ private final HashMap> readerCache = new HashMap<>();

+

+ // fingerprinting is very expensive so this alleviates most of the work

+ private final HashMap fingerprintCache = new HashMap<>();

+ private final HashMap schemaCache = new HashMap<>();

+

+ private Long getFingerprint(Schema schema) {

+if (fingerprintCache.containsKey(schema)) {

+ return fingerprintCache.get(schema);

+} else {

+ Long fingerprint = SchemaNormalization.parsingFingerprint64(schema);

+ fingerprintCache.put(schema, fingerprint);

+ return fingerprint;

+}

+ }

+

+ private Schema getSchema(Long fingerprint, byte[] schemaBytes) {

+if (schemaCache.containsKey(fingerprint)) {

+ return schemaCache.get(fingerprint);

+} else {

+ String schema = new String(schemaBytes, StandardCharsets.UTF_8);

+ Schema parsedSchema = new Schema.Parser().parse(schema);

+ schemaCache.put(fingerprint, parsedSchema);

+ return parsedSchema;

+}

+ }

+

+ private DatumWriter getDatumWriter(Schema schema) {

+DatumWriter writer;

+if (writerCache.containsKey(schema)) {

+ writer = writerCache.get(schema);

+} else {

+ writer = new GenericDatumWriter<>(schema);

+ writerCache.put(schema, writer);

+}

+return writer;

+ }

+

+ private DatumReader getDatumReader(Schema schema) {

+DatumReader reader;

+if (readerCache.containsKey(schema)) {

+ reader = readerCache.get(schema);

+} else {

+ reader = new GenericDatumReader<>(schema);

+ readerCache.put(schema, reader);

+}

+return reader;

+ }

+

+ private void serializeDatum(D datum, Output output) throws IOException {

+Encoder encoder = EncoderFactory.get().directBinaryEncoder(output, null);

+Schema schema = datum.getSchema();

+Long fingerprint = this.getFingerprint(schema);

+byte[] schemaBytes = schema.toString().getBytes(StandardCharsets.UTF_8);

Review Comment:

> When fingleprint is disabled, the schema is decoded directly from the

compressee bytes.

Yeap. In this PR, our `schemaCache` serves the purpose of the

`decompressCache`, which is the cache used in the `decompress` function.

If you look at Spark

[GitHub] [hudi] danny0405 commented on issue #8305: [SUPPORT] fluent-hc max connection restriction

danny0405 commented on issue #8305: URL: https://github.com/apache/hudi/issues/8305#issuecomment-1487936671 Nice catch, can you show me the code where the `org.apache.http.client.fluent.Executor` is used? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Closed] (HUDI-5952) NullPointerException when use kafka callback

[ https://issues.apache.org/jira/browse/HUDI-5952?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Danny Chen closed HUDI-5952. Fix Version/s: 0.14.0 Resolution: Fixed Fixed via master branch: 7243393c6881802803c0233cbac42daf1271afb3 > NullPointerException when use kafka callback > > > Key: HUDI-5952 > URL: https://issues.apache.org/jira/browse/HUDI-5952 > Project: Apache Hudi > Issue Type: Bug > Components: hudi-utilities >Reporter: wuzhenhua >Priority: Major > Labels: pull-request-available > Fix For: 0.14.0 > > Attachments: image-2023-03-18-11-41-35-135.png > > > hudi.conf: > hoodie.write.commit.callback.on true > hoodie.write.commit.callback.class > org.apache.hudi.utilities.callback.kafka.HoodieWriteCommitKafkaCallback > hoodie.write.commit.callback.kafka.bootstrap.servers localhost:9082 > hoodie.write.commit.callback.kafka.topic hudi-callback > hoodie.write.commit.callback.kafka.partition 1 > > !image-2023-03-18-11-41-35-135.png! -- This message was sent by Atlassian Jira (v8.20.10#820010)

[hudi] branch master updated (2023302ebee -> 7243393c688)

This is an automated email from the ASF dual-hosted git repository. danny0405 pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/hudi.git from 2023302ebee [HUDI-5986] Empty preCombineKey should never be stored in hoodie.properties (#8296) add 7243393c688 [HUDI-5952] Fix NPE when use kafka callback (#8227) No new revisions were added by this update. Summary of changes: .../kafka/HoodieWriteCommitKafkaCallback.java | 4 +- .../callback/TestKafkaCallbackProvider.java| 90 ++ 2 files changed, 92 insertions(+), 2 deletions(-) create mode 100644 hudi-utilities/src/test/java/org/apache/hudi/utilities/callback/TestKafkaCallbackProvider.java

[GitHub] [hudi] danny0405 merged pull request #8227: [HUDI-5952] Fix NPE when use kafka callback

danny0405 merged PR #8227: URL: https://github.com/apache/hudi/pull/8227 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Resolved] (HUDI-5986) empty preCombineKey should never be stored in hoodie.properties

[

https://issues.apache.org/jira/browse/HUDI-5986?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Danny Chen resolved HUDI-5986.

--

> empty preCombineKey should never be stored in hoodie.properties

> ---

>

> Key: HUDI-5986

> URL: https://issues.apache.org/jira/browse/HUDI-5986

> Project: Apache Hudi

> Issue Type: Bug

> Components: hudi-utilities

>Reporter: Wechar

>Priority: Major

> Labels: pull-request-available

>

> *Overview:*

> We found {{hoodie.properties}} will keep the empty preCombineKey if the table

> does not have preCombineKey. And the empty preCombineKey will cause the

> exception when insert data:

> {code:bash}

> Caused by: org.apache.hudi.exception.HoodieException: (Part -) field not

> found in record. Acceptable fields were :[id, name, price]

> at

> org.apache.hudi.avro.HoodieAvroUtils.getNestedFieldVal(HoodieAvroUtils.java:557)

> at

> org.apache.hudi.HoodieSparkSqlWriter$$anonfun$createHoodieRecordRdd$1$$anonfun$apply$5.apply(HoodieSparkSqlWriter.scala:1134)

> at

> org.apache.hudi.HoodieSparkSqlWriter$$anonfun$createHoodieRecordRdd$1$$anonfun$apply$5.apply(HoodieSparkSqlWriter.scala:1127)

> at scala.collection.Iterator$$anon$11.next(Iterator.scala:410)

> at scala.collection.Iterator$$anon$11.next(Iterator.scala:410)

> at

> org.apache.spark.util.collection.ExternalSorter.insertAll(ExternalSorter.scala:193)

> at

> org.apache.spark.shuffle.sort.SortShuffleWriter.write(SortShuffleWriter.scala:62)

> at

> org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:99)

> at

> org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:55)

> at org.apache.spark.scheduler.Task.run(Task.scala:123)

> at

> org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)

> at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

> at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)

> at

> java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

> at

> java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

> at java.lang.Thread.run(Thread.java:748)

> {code}

> *Steps to Reproduce:*

> {code:sql}

> -- 1. create a table without preCombineKey

> CREATE TABLE default.test_hudi_default_cm (

> uuid int,

> name string,

> price double

> ) USING hudi

> options (

> primaryKey='uuid');

> -- 2. config write operation to insert

> set hoodie.datasource.write.operation=insert;

> set hoodie.merge.allow.duplicate.on.inserts=true;

> -- 3. insert data

> insert into default.test_hudi_default_cm select 1, 'name1', 1.1;

> -- 4. insert overwrite

> insert overwrite table default.test_hudi_default_cm select 2, 'name3', 1.1;

> -- 5. insert data will occur exception

> insert into default.test_hudi_default_cm select 1, 'name3', 1.1;

> {code}

> *Root Cause:*

> Hudi re-construct the table when *insert overwrite table* in sql but the

> configured operation is not, then it stores the default empty preCombineKey

> in {{hoodie.properties}}.

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

[jira] [Updated] (HUDI-5986) empty preCombineKey should never be stored in hoodie.properties

[

https://issues.apache.org/jira/browse/HUDI-5986?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Danny Chen updated HUDI-5986:

-

Fix Version/s: 0.14.0

> empty preCombineKey should never be stored in hoodie.properties

> ---

>

> Key: HUDI-5986

> URL: https://issues.apache.org/jira/browse/HUDI-5986

> Project: Apache Hudi

> Issue Type: Bug

> Components: hudi-utilities

>Reporter: Wechar

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.14.0

>

>

> *Overview:*

> We found {{hoodie.properties}} will keep the empty preCombineKey if the table

> does not have preCombineKey. And the empty preCombineKey will cause the

> exception when insert data:

> {code:bash}

> Caused by: org.apache.hudi.exception.HoodieException: (Part -) field not

> found in record. Acceptable fields were :[id, name, price]

> at

> org.apache.hudi.avro.HoodieAvroUtils.getNestedFieldVal(HoodieAvroUtils.java:557)

> at

> org.apache.hudi.HoodieSparkSqlWriter$$anonfun$createHoodieRecordRdd$1$$anonfun$apply$5.apply(HoodieSparkSqlWriter.scala:1134)

> at

> org.apache.hudi.HoodieSparkSqlWriter$$anonfun$createHoodieRecordRdd$1$$anonfun$apply$5.apply(HoodieSparkSqlWriter.scala:1127)

> at scala.collection.Iterator$$anon$11.next(Iterator.scala:410)

> at scala.collection.Iterator$$anon$11.next(Iterator.scala:410)

> at

> org.apache.spark.util.collection.ExternalSorter.insertAll(ExternalSorter.scala:193)

> at

> org.apache.spark.shuffle.sort.SortShuffleWriter.write(SortShuffleWriter.scala:62)

> at

> org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:99)

> at

> org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:55)

> at org.apache.spark.scheduler.Task.run(Task.scala:123)

> at

> org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)

> at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

> at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)

> at

> java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

> at

> java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

> at java.lang.Thread.run(Thread.java:748)

> {code}

> *Steps to Reproduce:*

> {code:sql}

> -- 1. create a table without preCombineKey

> CREATE TABLE default.test_hudi_default_cm (

> uuid int,

> name string,

> price double

> ) USING hudi

> options (

> primaryKey='uuid');

> -- 2. config write operation to insert

> set hoodie.datasource.write.operation=insert;

> set hoodie.merge.allow.duplicate.on.inserts=true;

> -- 3. insert data

> insert into default.test_hudi_default_cm select 1, 'name1', 1.1;

> -- 4. insert overwrite

> insert overwrite table default.test_hudi_default_cm select 2, 'name3', 1.1;

> -- 5. insert data will occur exception

> insert into default.test_hudi_default_cm select 1, 'name3', 1.1;

> {code}

> *Root Cause:*

> Hudi re-construct the table when *insert overwrite table* in sql but the

> configured operation is not, then it stores the default empty preCombineKey

> in {{hoodie.properties}}.

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

[jira] [Closed] (HUDI-5986) empty preCombineKey should never be stored in hoodie.properties

[

https://issues.apache.org/jira/browse/HUDI-5986?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Danny Chen closed HUDI-5986.

Resolution: Fixed

Fixed via master branch: 2023302ebeed728e632e43e2475c0045a9263067

> empty preCombineKey should never be stored in hoodie.properties

> ---

>

> Key: HUDI-5986

> URL: https://issues.apache.org/jira/browse/HUDI-5986

> Project: Apache Hudi

> Issue Type: Bug

> Components: hudi-utilities

>Reporter: Wechar

>Priority: Major

> Labels: pull-request-available

> Fix For: 0.14.0

>

>

> *Overview:*

> We found {{hoodie.properties}} will keep the empty preCombineKey if the table

> does not have preCombineKey. And the empty preCombineKey will cause the

> exception when insert data:

> {code:bash}

> Caused by: org.apache.hudi.exception.HoodieException: (Part -) field not

> found in record. Acceptable fields were :[id, name, price]

> at

> org.apache.hudi.avro.HoodieAvroUtils.getNestedFieldVal(HoodieAvroUtils.java:557)

> at

> org.apache.hudi.HoodieSparkSqlWriter$$anonfun$createHoodieRecordRdd$1$$anonfun$apply$5.apply(HoodieSparkSqlWriter.scala:1134)

> at

> org.apache.hudi.HoodieSparkSqlWriter$$anonfun$createHoodieRecordRdd$1$$anonfun$apply$5.apply(HoodieSparkSqlWriter.scala:1127)

> at scala.collection.Iterator$$anon$11.next(Iterator.scala:410)

> at scala.collection.Iterator$$anon$11.next(Iterator.scala:410)

> at

> org.apache.spark.util.collection.ExternalSorter.insertAll(ExternalSorter.scala:193)

> at

> org.apache.spark.shuffle.sort.SortShuffleWriter.write(SortShuffleWriter.scala:62)

> at

> org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:99)

> at

> org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:55)

> at org.apache.spark.scheduler.Task.run(Task.scala:123)

> at

> org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)

> at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

> at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)

> at

> java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

> at

> java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

> at java.lang.Thread.run(Thread.java:748)

> {code}

> *Steps to Reproduce:*

> {code:sql}

> -- 1. create a table without preCombineKey

> CREATE TABLE default.test_hudi_default_cm (

> uuid int,

> name string,

> price double

> ) USING hudi

> options (

> primaryKey='uuid');

> -- 2. config write operation to insert

> set hoodie.datasource.write.operation=insert;

> set hoodie.merge.allow.duplicate.on.inserts=true;

> -- 3. insert data

> insert into default.test_hudi_default_cm select 1, 'name1', 1.1;

> -- 4. insert overwrite

> insert overwrite table default.test_hudi_default_cm select 2, 'name3', 1.1;

> -- 5. insert data will occur exception

> insert into default.test_hudi_default_cm select 1, 'name3', 1.1;

> {code}

> *Root Cause:*

> Hudi re-construct the table when *insert overwrite table* in sql but the

> configured operation is not, then it stores the default empty preCombineKey

> in {{hoodie.properties}}.

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

[GitHub] [hudi] danny0405 merged pull request #8296: [HUDI-5986] Empty preCombineKey should never be stored in hoodie.properties

danny0405 merged PR #8296: URL: https://github.com/apache/hudi/pull/8296 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[hudi] branch master updated (e1741ddc7d9 -> 2023302ebee)

This is an automated email from the ASF dual-hosted git repository. danny0405 pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/hudi.git from e1741ddc7d9 [MINOR] Fix typo for method in AvroSchemaConverter (#8306) add 2023302ebee [HUDI-5986] Empty preCombineKey should never be stored in hoodie.properties (#8296) No new revisions were added by this update. Summary of changes: .../hudi/common/table/HoodieTableMetaClient.java | 2 +- .../apache/spark/sql/hudi/TestInsertTable.scala| 34 ++ 2 files changed, 35 insertions(+), 1 deletion(-)

[GitHub] [hudi] hudi-bot commented on pull request #8307: [HUDI-5992] Fix (de)serialization for avro versions > 1.10.0

hudi-bot commented on PR #8307: URL: https://github.com/apache/hudi/pull/8307#issuecomment-1487931181 ## CI report: * 8aef9327c27d3f716a7f4c40f9a6d0ea6f370d3e Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=15957) Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=15961) * d8521565c1a8a4e215f779c525b7d123b44b94b3 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=15965) Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] Zouxxyy commented on issue #8312: [SUPPORT] Metadata of hudi mor table (without ro/rt suffix) is not synchronized to HMS

Zouxxyy commented on issue #8312: URL: https://github.com/apache/hudi/issues/8312#issuecomment-1487927646 My idea is to remove `hoodie.datasource.hive_sync.skip_ro_suffix`, and control the metadata synchronization behavior only by `hoodie.datasource.hive_sync.table.strategy`: ALL: table (behavior is consistent with table_rt) table_ro table_rt RO table (behavior is consistent with table_rt) table_ro RT table (behavior is consistent with table_rt) table_rt But this change is a bit big, what do you think? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #8307: [HUDI-5992] Fix (de)serialization for avro versions > 1.10.0

hudi-bot commented on PR #8307: URL: https://github.com/apache/hudi/pull/8307#issuecomment-1487927175 ## CI report: * 8aef9327c27d3f716a7f4c40f9a6d0ea6f370d3e Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=15957) Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=15961) * d8521565c1a8a4e215f779c525b7d123b44b94b3 UNKNOWN Bot commands @hudi-bot supports the following commands: - `@hudi-bot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [hudi] Zouxxyy opened a new issue, #8312: [SUPPORT] Metadata of hudi mor table (without ro/rt suffix) is not synchronized to HMS

Zouxxyy opened a new issue, #8312:

URL: https://github.com/apache/hudi/issues/8312

**Describe the problem you faced**

When scan partitioned hudi mor table (without ro/rt suffix), we get nothing,

because metadata (especially partitions) is not synchronized to HMS. As follows

```java

protected void doSync() {

switch (syncClient.getTableType()) {

case COPY_ON_WRITE:

syncHoodieTable(snapshotTableName, false, false);

break;

case MERGE_ON_READ:

switch (HoodieSyncTableStrategy.valueOf(hiveSyncTableStrategy)) {

case RO :

// sync a RO table for MOR

syncHoodieTable(tableName, false, true);

break;

case RT :

// sync a RT table for MOR

syncHoodieTable(tableName, true, false);

break;

default:

// sync a RO table for MOR

syncHoodieTable(roTableName.get(), false, true);

// sync a RT table for MOR

syncHoodieTable(snapshotTableName, true, false);

}

break;

default:

LOG.error("Unknown table type " + syncClient.getTableType());

throw new InvalidTableException(syncClient.getBasePath());

}

}

```

In addition, there is a parameter,

`hoodie.datasource.hive_sync.skip_ro_suffix`, when set it to true, **will

register table without ro suffix as ro table**;

**This contradicts spark's behavior**, because the behavior of querying

table without suffix is **actually rt table**.

**To Reproduce**

Steps to reproduce the behavior:

```sql

-- spark

create table hudi_mor_test_tbl (

id bigint,

name string,

ts bigint,

dt string,

hh string

) using hudi

tblproperties (

type = 'mor',

primaryKey = 'id',

preCombineField = 'ts'

)

partitioned by (dt, hh);

insert into hudi_mor_test_tbl values (1, 'a1', 1001, '2021-12-09', '10');

-- hive

select * from hudi_mor_test_tbl;

```

**Expected behavior**

We should get query result

**Environment Description**

* Hudi version : 0.13.0

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@hudi.apache.org.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org