[GitHub] [hudi] bradleyhurley commented on issue #2068: [SUPPORT]Deltastreamer Upsert Very Slow / Never Completes After Initial Data Load

bradleyhurley commented on issue #2068: URL: https://github.com/apache/hudi/issues/2068#issuecomment-690292104 Thanks @bvaradar - I think I have read most of the guides and documentation that I could find. Is there a formula that should drive the number of executors, cores per executor, driver memory, and executor memory? With a properly sized configuration do you have a ballpark of how long you would expect it to take to upsert 100M rows into a Hudi table with 100M existing rows with 99%+ of the data being an insert vs update? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

bradleyhurley commented on issue #2068: URL: https://github.com/apache/hudi/issues/2068#issuecomment-689064559

I made some tweaks and was able to get the job to complete.

- Executor Cores = 1
- Executors = 300
- Driver Memory = 4G
- Executor Memory = 6G
- spark.kryoserializer.buffer.max=512m

I am still spilling to disk, but I am unsure whether that's the area where I should focus my tuning efforts.

**Stage 13 Details - 1.5 Hours**
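For readers who want to reproduce the settings listed in this comment, here is a minimal sketch that turns them into `spark-submit` arguments and adds up the resources they imply. The dictionary layout and the helper names `spark_submit_args` and `totals` are hypothetical and used only for illustration; the values are the ones quoted in the comment, and `--num-executors` assumes a YARN deployment such as EMR.

```python
import shlex

# Values copied from the comment; the dict keys are illustrative names only.
settings = {
    "num_executors": 300,
    "executor_cores": 1,
    "executor_memory_gb": 6,
    "driver_memory_gb": 4,
    "kryo_buffer_max": "512m",
}


def spark_submit_args(s):
    """Build the resource-related spark-submit arguments for these settings."""
    return [
        "--num-executors", str(s["num_executors"]),
        "--executor-cores", str(s["executor_cores"]),
        "--executor-memory", f"{s['executor_memory_gb']}G",
        "--driver-memory", f"{s['driver_memory_gb']}G",
        "--conf", f"spark.kryoserializer.buffer.max={s['kryo_buffer_max']}",
    ]


def totals(s):
    """Concurrent task slots and total executor heap implied by the settings."""
    return {
        "task_slots": s["num_executors"] * s["executor_cores"],
        "executor_heap_gb": s["num_executors"] * s["executor_memory_gb"],
    }


args = spark_submit_args(settings)
summary = totals(settings)
print("spark-submit " + " ".join(shlex.quote(a) for a in args))
print(summary)
```

The totals count executor heap only. On YARN each container also requests memory overhead on top of the heap, so the cluster footprint is somewhat larger than the figure computed here.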

bradleyhurley commented on issue #2068: URL: https://github.com/apache/hudi/issues/2068#issuecomment-688369846

Failed Stages:

**Failed To Connect To XXX**

We see these errors when nodes leave the EMR cluster.

```
org.apache.spark.shuffle.FetchFailedException: Failed to connect to ip-100-64-115-173.us-west-2.compute.internal/100.64.115.173:7337
	at org.apache.spark.storage.ShuffleBlockFetcherIterator.throwFetchFailedException(ShuffleBlockFetcherIterator.scala:640)
	at org.apache.spark.storage.ShuffleBlockFetcherIterator.next(ShuffleBlockFetcherIterator.scala:562)
	at org.apache.spark.storage.ShuffleBlockFetcherIterator.next(ShuffleBlockFetcherIterator.scala:66)
	at scala.collection.Iterator$$anon$12.nextCur(Iterator.scala:435)
	at scala.collection.Iterator$$anon$12.hasNext(Iterator.scala:441)
	at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:409)
	at org.apache.spark.util.CompletionIterator.hasNext(CompletionIterator.scala:31)
	at org.apache.spark.InterruptibleIterator.hasNext(InterruptibleIterator.scala:37)
	at org.apache.spark.util.collection.ExternalAppendOnlyMap.insertAll(ExternalAppendOnlyMap.scala:156)
	at org.apache.spark.Aggregator.combineCombinersByKey(Aggregator.scala:50)
	at org.apache.spark.shuffle.BlockStoreShuffleReader.read(BlockStoreShuffleReader.scala:106)
	at org.apache.spark.rdd.ShuffledRDD.compute(ShuffledRDD.scala:105)
	at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:346)
	at org.apache.spark.rdd.RDD.iterator(RDD.scala:310)
	at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)
	at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:346)
	at org.apache.spark.rdd.RDD.iterator(RDD.scala:310)
	at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)
	at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:346)
	at org.apache.spark.rdd.RDD.iterator(RDD.scala:310)
	at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:99)
	at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:55)
	at org.apache.spark.scheduler.Task.run(Task.scala:123)
	at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)
	at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1405)
	at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
	at java.lang.Thread.run(Thread.java:748)
Caused by: java.io.IOException: Failed to connect to ip-100-64-115-173.us-west-2.compute.internal/100.64.115.173:7337
	at org.apache.spark.network.client.TransportClientFactory.createClient(TransportClientFactory.java:245)
	at org.apache.spark.network.client.TransportClientFactory.createClient(TransportClientFactory.java:187)
	at org.apache.spark.network.shuffle.ExternalShuffleClient.lambda$fetchBlocks$0(ExternalShuffleClient.java:100)
	at org.apache.spark.network.shuffle.RetryingBlockFetcher.fetchAllOutstanding(RetryingBlockFetcher.java:141)
	at org.apache.spark.network.shuffle.RetryingBlockFetcher.lambda$initiateRetry$0(RetryingBlockFetcher.java:169)
	at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)
	at java.util.concurrent.FutureTask.run(FutureTask.java:266)
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
	at io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)
	... 1 more
Caused by: io.netty.channel.AbstractChannel$AnnotatedNoRouteToHostException: No route to host: ip-100-64-115-173.us-west-2.compute.internal/100.64.115.173:7337
Caused by: java.net.NoRouteToHostException: No route to host
	at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)
	at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:714)
	at io.netty.channel.socket.nio.NioSocketChannel.doFinishConnect(NioSocketChannel.java:327)
	at io.netty.channel.nio.AbstractNioChannel$AbstractNioUnsafe.finishConnect(AbstractNioChannel.java:334)
	at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:688)
	at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:635)
	at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:552)
	at io.netty.channel.nio.NioEventLoop.run(NioEventLo
```

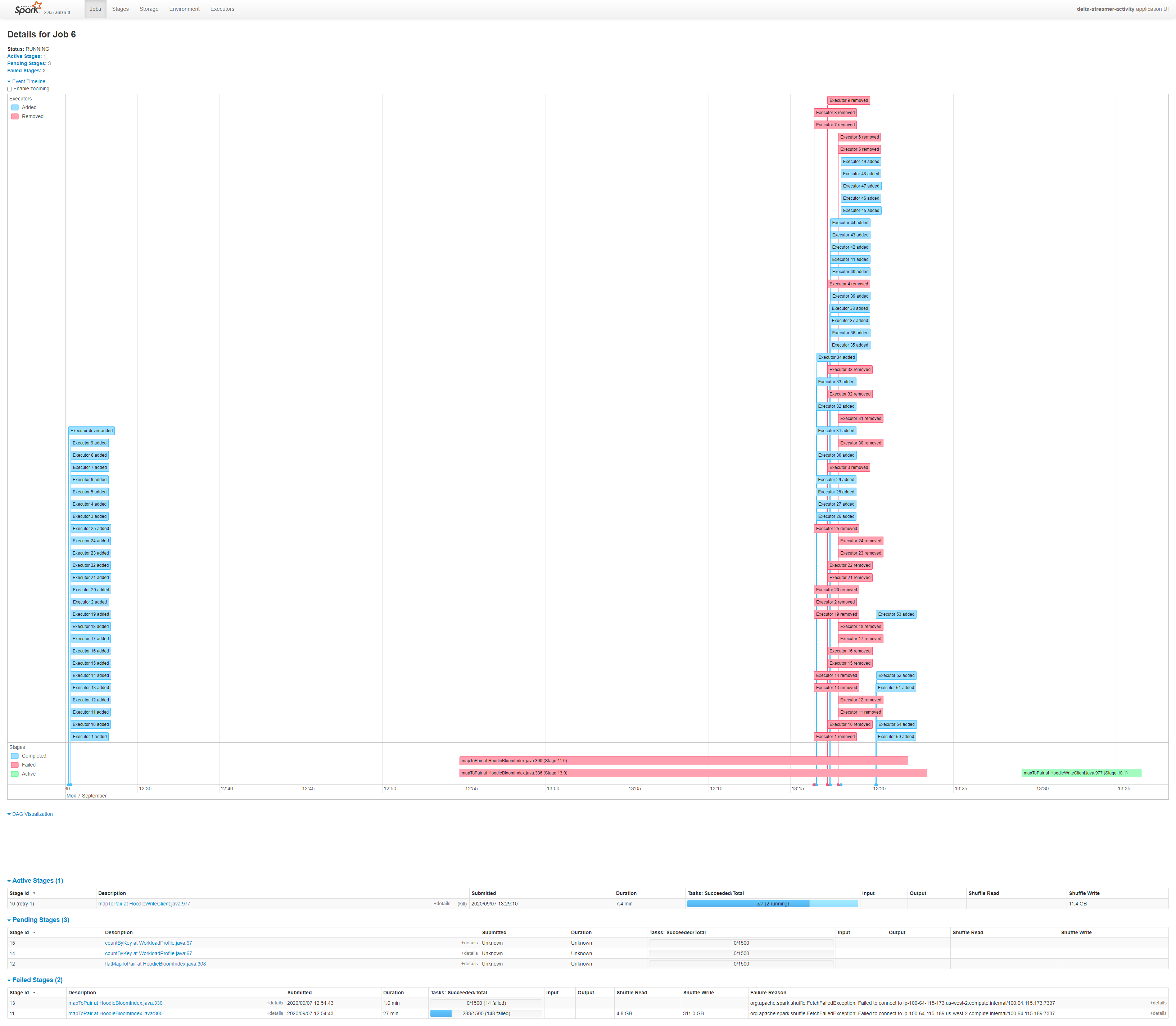

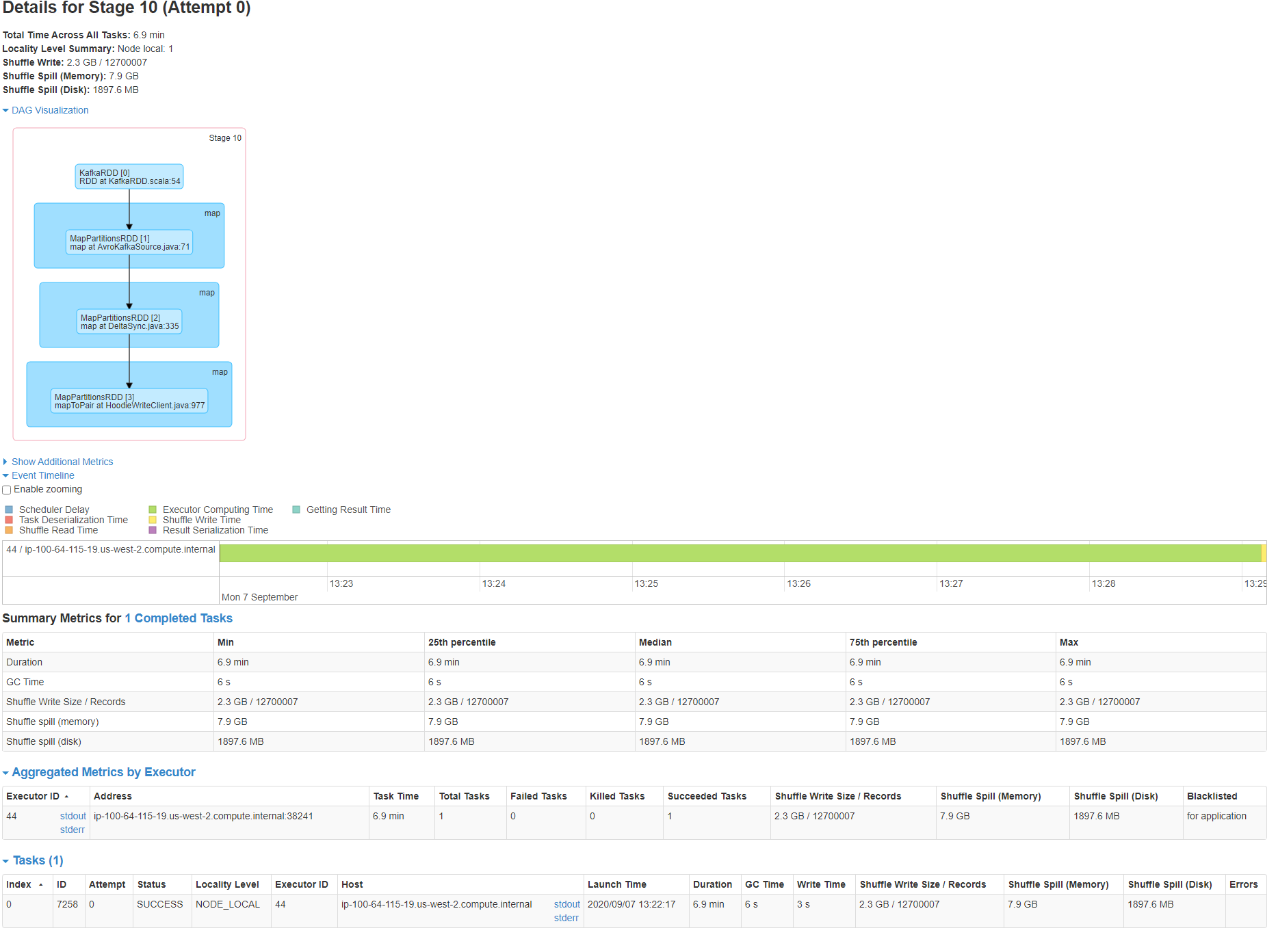

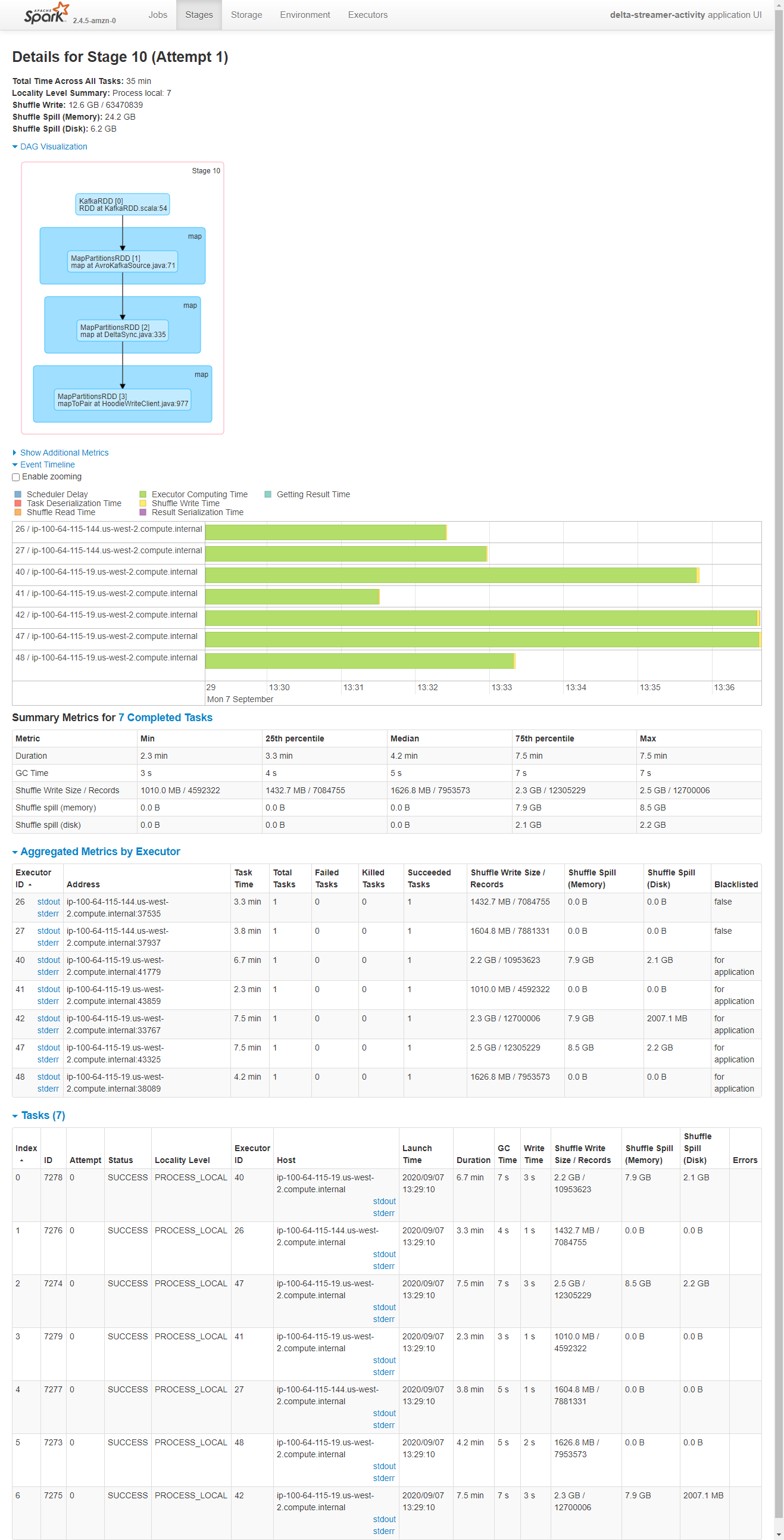

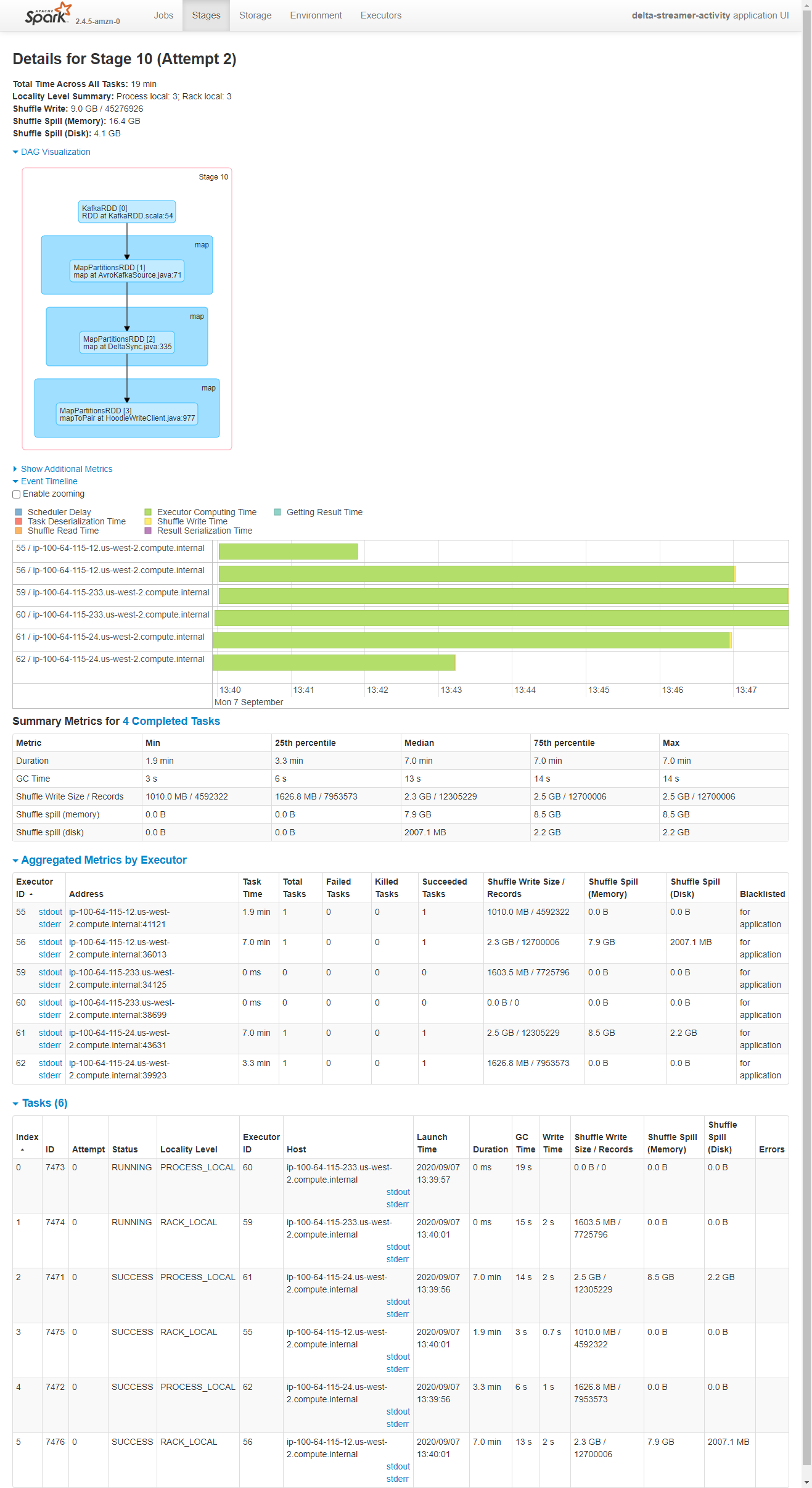

bradleyhurley commented on issue #2068: URL: https://github.com/apache/hudi/issues/2068#issuecomment-688365307

Thanks for following up @bvaradar. I have attached screenshots below. Please let me know what else I can provide.

- Running Applications
- Job 2
- Job 2 Stage 2
- Job 5
- Job 5 Stage 8
- Job 6
- Job 6 Stage 10 - Attempt 0
- Job 6 Stage 10 - Attempt 1
- Job 6 Stage 10 - Attempt 2
- Job 6 Stage 11 - Attempt 0
- Job 6 Stage 11 - Attempt 1
- Job 6 Stage 13 - Attempt 0
- Job 6 Stage 13 - Attempt 1