[incubator-mxnet] branch master updated (7dde0eb -> d60f37b)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a change to branch master
in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git.

from 7dde0eb [MXNET-1234] Fix shape inference problems in Activation backward (#13409)
add d60f37b Docs & website sphinx errors squished (#13488)

No new revisions were added by this update.

Summary of changes:
docs/api/scala/index.md | 18 +--
docs/api/scala/kvstore.md| 98 +++---
docs/api/scala/ndarray.md| 186 +--
docs/api/scala/symbol.md | 66 +-
docs/gluon/index.md | 10 +-
docs/install/ubuntu_setup.md | 12 --
docs/tutorials/r/fiveMinutesNeuralNetwork.md | 4 +-
python/mxnet/gluon/parameter.py | 2 +-
8 files changed, 192 insertions(+), 204 deletions(-)

[incubator-mxnet] branch master updated: fix toctree Sphinx errors (#13489)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a commit to branch master
in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:
new f2dcd7c fix toctree Sphinx errors (#13489)
f2dcd7c is described below

commit f2dcd7c7b8676b55d912997fc3f9c62c55915307
Author: Aaron Markham
AuthorDate: Mon Dec 3 17:27:41 2018 -0800

fix toctree Sphinx errors (#13489)

* fix toctree errors
* nudging file for CI
---
docs/api/index.md | 2 ++
docs/tutorials/index.md | 3 ++-
2 files changed, 4 insertions(+), 1 deletion(-)

diff --git a/docs/api/index.md b/docs/api/index.md
index eff6807..9e7a58f 100644
--- a/docs/api/index.md
+++ b/docs/api/index.md
@@ -1,11 +1,13 @@
# MXNet APIs
+
```eval_rst
.. toctree::
:maxdepth: 1
c++/index.md
clojure/index.md
+ java/index.md
julia/index.md
perl/index.md
python/index.md
diff --git a/docs/tutorials/index.md b/docs/tutorials/index.md
index 52e2be8..7d102bb 100644
--- a/docs/tutorials/index.md
+++ b/docs/tutorials/index.md
@@ -3,12 +3,13 @@
```eval_rst
.. toctree::
:hidden:
-
+
basic/index.md
c++/index.md
control_flow/index.md
embedded/index.md
gluon/index.md
+ java/index.md
nlp/index.md
onnx/index.md
python/index.md

[incubator-mxnet] branch master updated (3c4a97d -> 3a50ae0)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a change to branch master
in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git.

from 3c4a97d Fix github status context if running inside a folder (#13350)
add 3a50ae0 Fix sphinx docstring warnings. (#13315)

No new revisions were added by this update.

Summary of changes:
python/mxnet/contrib/io.py| 4 ++--
python/mxnet/contrib/svrg_optimization/svrg_module.py | 16
python/mxnet/contrib/text/embedding.py| 6 --
python/mxnet/gluon/data/vision/datasets.py| 19 ++-
python/mxnet/image/image.py | 4 ++--
python/mxnet/optimizer/optimizer.py | 3 ++-
python/mxnet/rnn/rnn_cell.py | 3 ++-
python/mxnet/symbol/contrib.py| 18 +-
python/mxnet/torch.py | 2 +-
src/operator/contrib/adaptive_avg_pooling.cc | 4 ++--
10 files changed, 42 insertions(+), 37 deletions(-)

[incubator-mxnet] branch master updated: Fix descriptions in scaladocs for macro ndarray/sybmol APIs (#13210)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new dfeb6b0 Fix descriptions in scaladocs for macro ndarray/sybmol APIs

(#13210)

dfeb6b0 is described below

commit dfeb6b017445b4a6b37a67abc8d8e23e3b8f4a0a

Author: Zach Kimberg

AuthorDate: Fri Nov 16 16:49:04 2018 -0800

Fix descriptions in scaladocs for macro ndarray/sybmol APIs (#13210)

---

.../src/main/scala/org/apache/mxnet/APIDocGenerator.scala | 15 ---

1 file changed, 12 insertions(+), 3 deletions(-)

diff --git

a/scala-package/macros/src/main/scala/org/apache/mxnet/APIDocGenerator.scala

b/scala-package/macros/src/main/scala/org/apache/mxnet/APIDocGenerator.scala

index 7c1edb5..ce12dc7 100644

--- a/scala-package/macros/src/main/scala/org/apache/mxnet/APIDocGenerator.scala

+++ b/scala-package/macros/src/main/scala/org/apache/mxnet/APIDocGenerator.scala

@@ -114,11 +114,20 @@ private[mxnet] object APIDocGenerator extends

GeneratorBase {

}

def generateAPIDocFromBackend(func: Func, withParam: Boolean = true): String

= {

-val desc = func.desc.split("\n")

- .mkString(" * \n", "\n * ", " * \n")

+def fixDesc(desc: String): String = {

+ var curDesc = desc

+ var prevDesc = ""

+ while ( curDesc != prevDesc ) {

+prevDesc = curDesc

+curDesc = curDesc.replace("[[", "`[ [").replace("]]", "] ]")

+ }

+ curDesc

+}

+val desc = fixDesc(func.desc).split("\n")

+ .mkString(" *\n * {{{\n *\n * ", "\n * ", "\n * }}}\n * ")

val params = func.listOfArgs.map { absClassArg =>

- s" * @param ${absClassArg.safeArgName}\t\t${absClassArg.argDesc}"

+ s" * @param ${absClassArg.safeArgName}\t\t${fixDesc(absClassArg.argDesc)}"

}

val returnType = s" * @return ${func.returnType}"
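
Note on the fixDesc helper in the hunk above: it applies the two replacements repeatedly until the text stops changing. The Python sketch below mirrors that loop for illustration only. The name `fix_desc` is hypothetical, the replacement strings are copied from the diff, and the authoritative implementation remains the Scala code in the commit.

```python
def fix_desc(desc):
    """Mirror of the Scala fixDesc loop: rewrite until a fixed point is reached."""
    cur, prev = desc, None
    while cur != prev:
        prev = cur
        # Same literal replacements as in the diff: "[[" -> "`[ [" and "]]" -> "] ]".
        cur = cur.replace("[[", "`[ [").replace("]]", "] ]")
    return cur


print(fix_desc("shape [[1, 2]]"))
```

The loop matters for runs of three or more brackets: one pass over "[[[" leaves a "[[" behind, and the second pass removes it.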

[incubator-mxnet] branch master updated (46e870b -> 1aa6a38)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a change to branch master
in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git.

from 46e870b fix the flag (#13293)
add 1aa6a38 Made fixes to sparse.py and sparse.md (#13305)

No new revisions were added by this update.

Summary of changes:
docs/api/python/ndarray/sparse.md | 1 +
python/mxnet/ndarray/sparse.py| 70 +++
2 files changed, 36 insertions(+), 35 deletions(-)

[incubator-mxnet] branch master updated: Addressed "dumplicate object reference" issues (#13214)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a commit to branch master
in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:
new 6ae5b65 Addressed "dumplicate object reference" issues (#13214)
6ae5b65 is described below

commit 6ae5b657eba67c565c08012889777fd7ca4552c4
Author: vdantu <36211508+vda...@users.noreply.github.com>
AuthorDate: Fri Nov 16 14:13:05 2018 -0800

Addressed "dumplicate object reference" issues (#13214)
---
docs/api/python/symbol/random.md | 1 +
1 file changed, 1 insertion(+)

diff --git a/docs/api/python/symbol/random.md b/docs/api/python/symbol/random.md
index 22c686f..b93f641 100644
--- a/docs/api/python/symbol/random.md
+++ b/docs/api/python/symbol/random.md
@@ -48,6 +48,7 @@ In the rest of this document, we list routines provided by the `symbol.random` p
.. automodule:: mxnet.symbol.random
:members:
+:noindex:
.. automodule:: mxnet.random
:members:

[incubator-mxnet] branch master updated: Visualization doc fix. Added notes for shortform (#13291)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new 5af975e Visualization doc fix. Added notes for shortform (#13291)

5af975e is described below

commit 5af975e8b1d5f368f5c9a039ec6d2184c583eb99

Author: vdantu <36211508+vda...@users.noreply.github.com>

AuthorDate: Fri Nov 16 13:35:10 2018 -0800

Visualization doc fix. Added notes for shortform (#13291)

---

python/mxnet/visualization.py | 25 +

1 file changed, 25 insertions(+)

diff --git a/python/mxnet/visualization.py b/python/mxnet/visualization.py

index 9297ede..1ebdcb5 100644

--- a/python/mxnet/visualization.py

+++ b/python/mxnet/visualization.py

@@ -57,9 +57,22 @@ def print_summary(symbol, shape=None, line_length=120,

positions=[.44, .64, .74,

Rotal length of printed lines

positions: list

Relative or absolute positions of log elements in each line.

+

Returns

--

None

+

+Notes

+-

+If ``mxnet`` is imported, the visualization module can be used in its

short-form.

+For example, if we ``import mxnet`` as follows::

+

+import mxnet

+

+this method in visualization module can be used in its short-form as::

+

+mxnet.viz.print_summary(...)

+

"""

if not isinstance(symbol, Symbol):

raise TypeError("symbol must be Symbol")

@@ -238,6 +251,18 @@ def plot_network(symbol, title="plot", save_format='pdf',

shape=None, node_attrs

>>> digraph = mx.viz.plot_network(net, shape={'data':(100,200)},

... node_attrs={"fixedsize":"false"})

>>> digraph.view()

+

+Notes

+-

+If ``mxnet`` is imported, the visualization module can be used in its

short-form.

+For example, if we ``import mxnet`` as follows::

+

+import mxnet

+

+this method in visualization module can be used in its short-form as::

+

+mxnet.viz.plot_network(...)

+

"""

# todo add shape support

try:

[incubator-mxnet] branch master updated (20a23ef -> 21fc3af)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a change to branch master
in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git.

from 20a23ef Sphinx errors in Gluon (#13275)
add 21fc3af Fix Sphinx python docstring formatting error. (#13194)

No new revisions were added by this update.

Summary of changes:
src/operator/nn/batch_norm.cc | 7 +++
1 file changed, 3 insertions(+), 4 deletions(-)

[incubator-mxnet] branch master updated: stop gap fix to let website builds through; scaladoc fix pending (#13298)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new d73e4df stop gap fix to let website builds through; scaladoc fix

pending (#13298)

d73e4df is described below

commit d73e4df122302bd47790ff42810f6e71989420b1

Author: Aaron Markham

AuthorDate: Fri Nov 16 10:30:45 2018 -0800

stop gap fix to let website builds through; scaladoc fix pending (#13298)

---

docs/mxdoc.py | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/docs/mxdoc.py b/docs/mxdoc.py

index 8b26c89..5e86c1c 100644

--- a/docs/mxdoc.py

+++ b/docs/mxdoc.py

@@ -115,7 +115,7 @@ def build_scala_docs(app):

'`find native -name "*.jar" | grep "target/lib/" | tr "\\n" ":" `',

'`find macros -name "*-SNAPSHOT.jar" | tr "\\n" ":" `'

])

-_run_cmd('cd {}; scaladoc `{}` -classpath {} -feature -deprecation'

+_run_cmd('cd {}; scaladoc `{}` -classpath {} -feature -deprecation; exit 0'

.format(scala_path, scala_doc_sources, scala_doc_classpath))

dest_path = app.builder.outdir + '/api/scala/docs'

_run_cmd('rm -rf ' + dest_path)
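
Note on the `; exit 0` suffix added in the hunk above: it makes the shell report success even when the command before it fails. The sketch below shows that effect with the standard library, assuming a POSIX shell. `run_shell` is a hypothetical stand-in, not the `_run_cmd` helper from mxdoc.py (whose body does not appear in this diff), and `false` stands in for a failing scaladoc run.

```python
import subprocess


def run_shell(cmd):
    """Run cmd through the shell and return its exit status (hypothetical helper)."""
    return subprocess.call(cmd, shell=True)


# "false" always fails; appending "; exit 0" replaces its status with 0.
failing = run_shell("false")
masked = run_shell("false; exit 0")
print(failing, masked)
```

The trade-off is that genuine scaladoc failures are hidden as well, which fits the commit's own description of the change as a stop gap.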

[incubator-mxnet] branch master updated: [MXNET-1203] Tutorial infogan (#13144)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new 97fdfd9 [MXNET-1203] Tutorial infogan (#13144)

97fdfd9 is described below

commit 97fdfd947e841b2556c8a8a19bdeed8f4e7bd407

Author: Nathalie Rauschmayr

AuthorDate: Thu Nov 15 15:08:19 2018 -0800

[MXNET-1203] Tutorial infogan (#13144)

* Adding info_gan example

* adjust paths of filenames

* Update index.md

* Update index.md

* Update index.md

* Update info_gan.md

Added an image

* Update info_gan.md

Applied some fixes

* Update info_gan.md

Applied some fixes

* Update info_gan.md

Applied some fixes

* Update info_gan.md

* Updated index.md file

* Updated index.md file

* change links

* Fixed typo

* Delete Untitled.ipynb

* Adding Vishaals comments

* Adding Anirudh's comments

* Fixed some bugs

* Adding Anirudh's comments

* some minor fixes

---

docs/tutorials/gluon/info_gan.md | 437 ++

docs/tutorials/index.md | 1 +

tests/tutorials/test_tutorials.py | 3 +

3 files changed, 441 insertions(+)

diff --git a/docs/tutorials/gluon/info_gan.md b/docs/tutorials/gluon/info_gan.md

new file mode 100644

index 000..c8f07c6

--- /dev/null

+++ b/docs/tutorials/gluon/info_gan.md

@@ -0,0 +1,437 @@

+

+# Image similarity search with InfoGAN

+

+This notebook shows how to implement an InfoGAN based on Gluon. InfoGAN is an

extension of GANs, where the generator input is split in 2 parts: random noise

and a latent code (see [InfoGAN Paper](https://arxiv.org/pdf/1606.03657.pdf)).

+The codes are made meaningful by maximizing the mutual information between

code and generator output. InfoGAN learns a disentangled representation in a

completely unsupervised manner. It can be used for many applications such as

image similarity search. This notebook uses the DCGAN example from the

[Straight Dope

Book](https://gluon.mxnet.io/chapter14_generative-adversarial-networks/dcgan.html)

and extends it to create an InfoGAN.

+

+

+```python

+from __future__ import print_function

+from datetime import datetime

+import logging

+import multiprocessing

+import os

+import sys

+import tarfile

+import time

+

+import numpy as np

+from matplotlib import pyplot as plt

+from mxboard import SummaryWriter

+import mxnet as mx

+from mxnet import gluon

+from mxnet import ndarray as nd

+from mxnet.gluon import nn, utils

+from mxnet import autograd

+

+```

+

+The latent code vector can contain several variables, which can be categorical

and/or continuous. We set `n_continuous` to 2 and `n_categories` to 10.

+

+

+```python

+batch_size = 64

+z_dim= 100

+n_continuous = 2

+n_categories = 10

+ctx = mx.gpu() if mx.test_utils.list_gpus() else mx.cpu()

+```

+

+Some functions to load and normalize images.

+

+

+```python

+lfw_url = 'http://vis-www.cs.umass.edu/lfw/lfw-deepfunneled.tgz'

+data_path = 'lfw_dataset'

+if not os.path.exists(data_path):

+os.makedirs(data_path)

+data_file = utils.download(lfw_url)

+with tarfile.open(data_file) as tar:

+tar.extractall(path=data_path)

+

+```

+

+

+```python

+def transform(data, width=64, height=64):

+data = mx.image.imresize(data, width, height)

+data = nd.transpose(data, (2,0,1))

+data = data.astype(np.float32)/127.5 - 1

+if data.shape[0] == 1:

+data = nd.tile(data, (3, 1, 1))

+return data.reshape((1,) + data.shape)

+```

+

+

+```python

+def get_files(data_dir):

+images= []

+filenames = []

+for path, _, fnames in os.walk(data_dir):

+for fname in fnames:

+if not fname.endswith('.jpg'):

+continue

+img = os.path.join(path, fname)

+img_arr = mx.image.imread(img)

+img_arr = transform(img_arr)

+images.append(img_arr)

+filenames.append(path + "/" + fname)

+return images, filenames

+```

+

+Load the dataset `lfw_dataset` which contains images of celebrities.

+

+

+```python

+data_dir = 'lfw_dataset'

+images, filenames = get_files(data_dir)

+split = int(len(images)*0.8)

+test_images = images[split:]

+test_filenames = filenames[split:]

+train_images = images[:split]

+train_filenames = filenames[:split]

+

+train_data = gluon.data.ArrayDataset(nd.concatenate(train_images))

+train_dataloader = gluon.data.DataLoader(train_data, batch_size=batch_size,

shuffle=True, last_batch='rollover', num_workers=multiprocessing.cpu_count()-1)

+```

+

+## Generator

+Define the Generator model. Architecture is taken from the DCGAN

implementation in [Straight Dope

Book](https://gluon.mxnet.io/chapter14_generative-adversarial-networks/
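
Note on the tutorial text in the diff above: it says the generator input is split into random noise and a latent code, with `z_dim = 100`, `n_continuous = 2` and `n_categories = 10`. The sketch below shows one way such an input could be assembled, using only the standard library so it runs without MXNet. The sampling distributions, the single one-hot categorical variable, and the name `sample_generator_input` are assumptions for illustration. The part of the tutorial that builds the real input is cut off in this message.

```python
import random

Z_DIM = 100        # noise size, matching z_dim in the tutorial
N_CONTINUOUS = 2   # matching n_continuous
N_CATEGORIES = 10  # matching n_categories


def sample_generator_input(rng):
    """Build one generator input: noise + one-hot category + continuous codes."""
    # Assumption: standard normal noise.
    noise = [rng.gauss(0.0, 1.0) for _ in range(Z_DIM)]
    # Assumption: one categorical variable, drawn uniformly and one-hot encoded.
    category = rng.randrange(N_CATEGORIES)
    one_hot = [1.0 if i == category else 0.0 for i in range(N_CATEGORIES)]
    # Assumption: continuous codes drawn uniformly from [-1, 1].
    continuous = [rng.uniform(-1.0, 1.0) for _ in range(N_CONTINUOUS)]
    return noise + one_hot + continuous, category


vector, category = sample_generator_input(random.Random(0))
print(len(vector), category)
```

Under these assumptions the input has 100 + 10 + 2 = 112 entries, and only the last 12 form the latent code.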

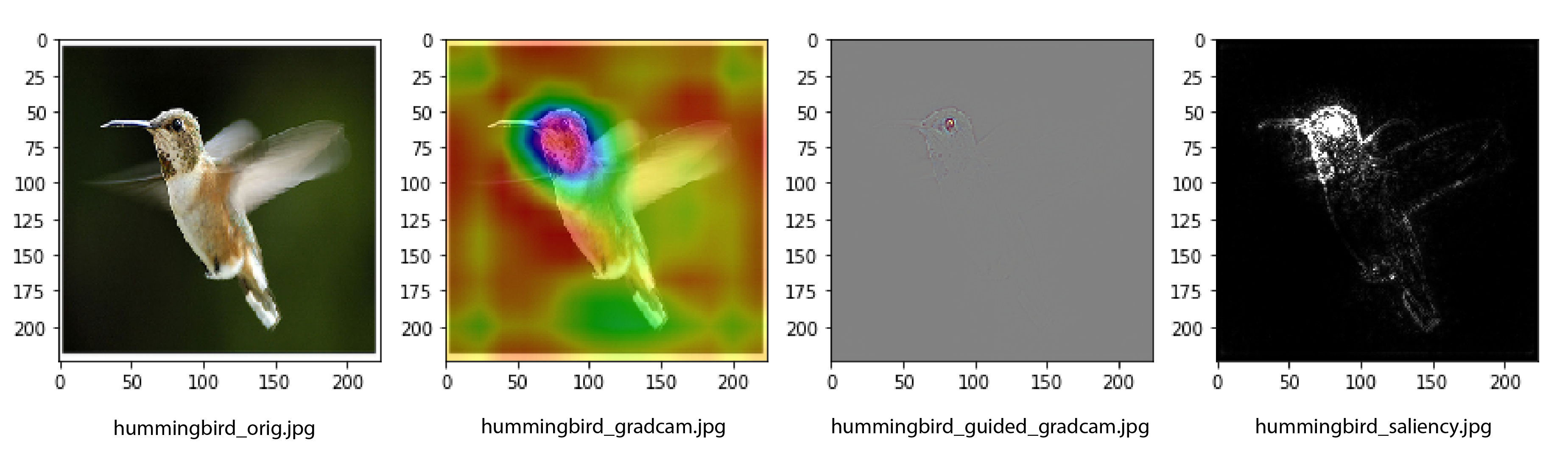

[incubator-mxnet] branch master updated: [Example] Gradcam consolidation in tutorial (#13255)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new 8ac7fb9 [Example] Gradcam consolidation in tutorial (#13255)

8ac7fb9 is described below

commit 8ac7fb930fdfa6ef3ac61be7569a17eb95f1ad4c

Author: Ankit Khedia <36249596+ankkhe...@users.noreply.github.com>

AuthorDate: Thu Nov 15 15:05:36 2018 -0800

[Example] Gradcam consolidation in tutorial (#13255)

* fixing gradcam

* changed loading parameters code

* fixing type conversions issue with previous versions of matplotlib

* gradcam consolidation

* creating directory structures in utils

* changing location

* empty commit

---

docs/conf.py | 2 +-

.../vision}/cnn_visualization/gradcam.py | 0

docs/tutorials/vision/cnn_visualization.md | 3 +-

example/cnn_visualization/README.md| 17

example/cnn_visualization/gradcam_demo.py | 110 -

example/cnn_visualization/vgg.py | 90 -

6 files changed, 3 insertions(+), 219 deletions(-)

diff --git a/docs/conf.py b/docs/conf.py

index 656a1da..af23521 100644

--- a/docs/conf.py

+++ b/docs/conf.py

@@ -107,7 +107,7 @@ master_doc = 'index'

# List of patterns, relative to source directory, that match files and

# directories to ignore when looking for source files.

-exclude_patterns = ['3rdparty', 'build_version_doc', 'virtualenv',

'api/python/model.md', 'README.md']

+exclude_patterns = ['3rdparty', 'build_version_doc', 'virtualenv',

'api/python/model.md', 'README.md', 'tutorial_utils']

# The reST default role (used for this markup: `text`) to use for all

documents.

#default_role = None

diff --git a/example/cnn_visualization/gradcam.py

b/docs/tutorial_utils/vision/cnn_visualization/gradcam.py

similarity index 100%

rename from example/cnn_visualization/gradcam.py

rename to docs/tutorial_utils/vision/cnn_visualization/gradcam.py

diff --git a/docs/tutorials/vision/cnn_visualization.md

b/docs/tutorials/vision/cnn_visualization.md

index a350fff..fd6a464 100644

--- a/docs/tutorials/vision/cnn_visualization.md

+++ b/docs/tutorials/vision/cnn_visualization.md

@@ -22,7 +22,7 @@ from matplotlib import pyplot as plt

import numpy as np

gradcam_file = "gradcam.py"

-base_url = "https://raw.githubusercontent.com/indhub/mxnet/cnnviz/example/cnn_visualization/{}?raw=true"

+base_url = "https://github.com/apache/incubator-mxnet/tree/master/docs/tutorial_utils/vision/cnn_visualization/{}?raw=true"

mx.test_utils.download(base_url.format(gradcam_file), fname=gradcam_file)

import gradcam

```

@@ -182,6 +182,7 @@ Next, we'll write a method to get an image, preprocess it,

predict category and

2. **Guided Grad-CAM:** Guided Grad-CAM shows which exact pixels contributed

the most to the CNN's decision.

3. **Saliency map:** Saliency map is a monochrome image showing which pixels

contributed the most to the CNN's decision. Sometimes, it is easier to see the

areas in the image that most influence the output in a monochrome image than in

a color image.

+

```python

def visualize(net, img_path, conv_layer_name):

orig_img = mx.img.imread(img_path)

diff --git a/example/cnn_visualization/README.md

b/example/cnn_visualization/README.md

deleted file mode 100644

index 10b9149..000

--- a/example/cnn_visualization/README.md

+++ /dev/null

@@ -1,17 +0,0 @@

-# Visualzing CNN decisions

-

-This folder contains an MXNet Gluon implementation of

[Grad-CAM](https://arxiv.org/abs/1610.02391) that helps visualize CNN decisions.

-

-A tutorial on how to use this from Jupyter notebook is available

[here](https://mxnet.incubator.apache.org/tutorials/vision/cnn_visualization.html).

-

-You can also do the visualization from terminal:

-```

-$ python gradcam_demo.py hummingbird.jpg

-Predicted category : hummingbird (94)

-Original Image : hummingbird_orig.jpg

-Grad-CAM: hummingbird_gradcam.jpg

-Guided Grad-CAM : hummingbird_guided_gradcam.jpg

-Saliency Map: hummingbird_saliency.jpg

-```

-

-

diff --git a/example/cnn_visualization/gradcam_demo.py

b/example/cnn_visualization/gradcam_demo.py

deleted file mode 100644

index d9ca5dd..000

--- a/example/cnn_visualization/gradcam_demo.py

+++ /dev/null

@@ -1,110 +0,0 @@

-# Licensed to the Apache Software Foundation (ASF) under one

-# or more contributor license agreements. See the NOTICE file

-# distributed with this work for additional information

-# regarding copyright ownership. The ASF licenses this file

-# to you under the Apache License, Version 2.0 (the

-# "License"

[incubator-mxnet] branch master updated: [Example] Fixing Gradcam implementation (#13196)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new e655f62 [Example] Fixing Gradcam implementation (#13196)

e655f62 is described below

commit e655f62bcccdf55fbc62b96cd6b12e7fbe68aaba

Author: Ankit Khedia <36249596+ankkhe...@users.noreply.github.com>

AuthorDate: Tue Nov 13 15:38:06 2018 -0800

[Example] Fixing Gradcam implementation (#13196)

* fixing gradcam

* changed loading parameters code

* fixing type conversions issue with previous versions of matplotlib

---

docs/tutorials/vision/cnn_visualization.md | 3 ++-

example/cnn_visualization/gradcam.py | 4 ++--

example/cnn_visualization/vgg.py | 16 +++-

3 files changed, 15 insertions(+), 8 deletions(-)

diff --git a/docs/tutorials/vision/cnn_visualization.md

b/docs/tutorials/vision/cnn_visualization.md

index 940c261..a350fff 100644

--- a/docs/tutorials/vision/cnn_visualization.md

+++ b/docs/tutorials/vision/cnn_visualization.md

@@ -151,7 +151,8 @@ def show_images(pred_str, images):

for i in range(num_images):

fig.add_subplot(rows, cols, i+1)

plt.xlabel(titles[i])

-plt.imshow(images[i], cmap='gray' if i==num_images-1 else None)

+img = images[i].astype(np.uint8)

+plt.imshow(img, cmap='gray' if i==num_images-1 else None)

plt.show()

```

diff --git a/example/cnn_visualization/gradcam.py

b/example/cnn_visualization/gradcam.py

index a8708f7..54cb65e 100644

--- a/example/cnn_visualization/gradcam.py

+++ b/example/cnn_visualization/gradcam.py

@@ -249,8 +249,8 @@ def visualize(net, preprocessed_img, orig_img,

conv_layer_name):

imggrad = get_image_grad(net, preprocessed_img)

conv_out, conv_out_grad = get_conv_out_grad(net, preprocessed_img,

conv_layer_name=conv_layer_name)

-cam = get_cam(imggrad, conv_out)

-

+cam = get_cam(conv_out_grad, conv_out)

+cam = cv2.resize(cam, (imggrad.shape[1], imggrad.shape[2]))

ggcam = get_guided_grad_cam(cam, imggrad)

img_ggcam = grad_to_image(ggcam)

diff --git a/example/cnn_visualization/vgg.py b/example/cnn_visualization/vgg.py

index b6215a3..a8a0ef6 100644

--- a/example/cnn_visualization/vgg.py

+++ b/example/cnn_visualization/vgg.py

@@ -72,11 +72,17 @@ def get_vgg(num_layers, pretrained=False, ctx=mx.cpu(),

root=os.path.join('~', '.mxnet', 'models'), **kwargs):

layers, filters = vgg_spec[num_layers]

net = VGG(layers, filters, **kwargs)

-if pretrained:

-from mxnet.gluon.model_zoo.model_store import get_model_file

-batch_norm_suffix = '_bn' if kwargs.get('batch_norm') else ''

-net.load_params(get_model_file('vgg%d%s'%(num_layers,

batch_norm_suffix),

- root=root), ctx=ctx)

+net.initialize(ctx=ctx)

+

+# Get the pretrained model

+vgg = mx.gluon.model_zoo.vision.get_vgg(num_layers, pretrained=True,

ctx=ctx)

+

+# Set the parameters in the new network

+params = vgg.collect_params()

+for key in params:

+param = params[key]

+net.collect_params()[net.prefix+key.replace(vgg.prefix,

'')].set_data(param.data())

+

return net

def vgg16(**kwargs):
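
Note on the parameter-copy loop in the vgg.py hunk above: each pretrained key is renamed by dropping the pretrained network's prefix and prepending the new network's prefix before the data is copied. The sketch below reproduces only that key arithmetic with plain dicts, so it runs without MXNet. `remap_params`, the prefixes, and the values are hypothetical.

```python
def remap_params(src_params, src_prefix, dst_prefix):
    """Return a new dict whose keys use dst_prefix instead of src_prefix."""
    remapped = {}
    for key, value in src_params.items():
        # Same key arithmetic as the diff: drop the source prefix, prepend the target one.
        remapped[dst_prefix + key.replace(src_prefix, "")] = value
    return remapped


# Hypothetical prefixes and values, chosen only for illustration.
pretrained = {"vgg0_conv0_weight": [0.1, 0.2], "vgg0_conv0_bias": [0.0]}
print(remap_params(pretrained, "vgg0_", "vgg1_"))
```

As in the diff, `str.replace` removes every occurrence of the prefix, not only the leading one, so the approach relies on the prefix not appearing elsewhere in a key.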

[incubator-mxnet] branch master updated: Add Java API docs generation (#13071)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new ea6ee0d Add Java API docs generation (#13071)

ea6ee0d is described below

commit ea6ee0d217dcdac29fe0a22bdce019168a76a060

Author: Aaron Markham

AuthorDate: Tue Nov 13 12:17:44 2018 -0800

Add Java API docs generation (#13071)

* add Java API docs generation; split out from Scala API docs

* bumping file for ci

* make scala docs build compatible for 2.11.x and 2.12.x scala

fix typo

* fix exit bug

---

docs/mxdoc.py | 31 ---

docs/settings.ini | 11 +++

2 files changed, 35 insertions(+), 7 deletions(-)

diff --git a/docs/mxdoc.py b/docs/mxdoc.py

index 7092b9e..8570cae 100644

--- a/docs/mxdoc.py

+++ b/docs/mxdoc.py

@@ -40,11 +40,12 @@ if _DOC_SET not in parser.sections():

for section in [ _DOC_SET ]:

print("Document sets to generate:")

-for candidate in [ 'scala_docs', 'clojure_docs', 'doxygen_docs', 'r_docs'

]:

+for candidate in [ 'scala_docs', 'java_docs', 'clojure_docs',

'doxygen_docs', 'r_docs' ]:

print('%-12s : %s' % (candidate, parser.get(section, candidate)))

_MXNET_DOCS_BUILD_MXNET = parser.getboolean('mxnet', 'build_mxnet')

_SCALA_DOCS = parser.getboolean(_DOC_SET, 'scala_docs')

+_JAVA_DOCS = parser.getboolean(_DOC_SET, 'java_docs')

_CLOJURE_DOCS = parser.getboolean(_DOC_SET, 'clojure_docs')

_DOXYGEN_DOCS = parser.getboolean(_DOC_SET, 'doxygen_docs')

_R_DOCS = parser.getboolean(_DOC_SET, 'r_docs')

@@ -58,7 +59,8 @@ _CODE_MARK = re.compile('^([ ]*)```([\w]*)')

# language names and the according file extensions and comment symbol

_LANGS = {'python' : ('py', '#'),

'r' : ('R','#'),

- 'scala' : ('scala', '#'),

+ 'scala' : ('scala', '//'),

+ 'java' : ('java', '//'),

'julia' : ('jl', '#'),

'perl' : ('pl', '#'),

'cpp' : ('cc', '//'),

@@ -101,7 +103,7 @@ def build_r_docs(app):

_run_cmd('mkdir -p ' + dest_path + '; mv ' + pdf_path + ' ' + dest_path)

def build_scala(app):

-"""build scala for scala docs and clojure docs to use"""

+"""build scala for scala docs, java docs, and clojure docs to use"""

_run_cmd("cd %s/.. && make scalapkg" % app.builder.srcdir)

_run_cmd("cd %s/.. && make scalainstall" % app.builder.srcdir)

@@ -109,13 +111,26 @@ def build_scala_docs(app):

"""build scala doc and then move the outdir"""

scala_path = app.builder.srcdir + '/../scala-package'

# scaldoc fails on some apis, so exit 0 to pass the check

-_run_cmd('cd ' + scala_path + '; scaladoc `find . -type f -name "*.scala"

| egrep \"\/core|\/infer\" | egrep -v \"Suite\"`; exit 0')

+_run_cmd('cd ' + scala_path + '; scaladoc `find . -type f -name "*.scala"

| egrep \"\/core|\/infer\" | egrep -v \"Suite|javaapi\"`; exit 0')

dest_path = app.builder.outdir + '/api/scala/docs'

_run_cmd('rm -rf ' + dest_path)

_run_cmd('mkdir -p ' + dest_path)

+# 'index' and 'package.html' do not exist in later versions of scala;

delete these after upgrading scala>2.12.x

scaladocs = ['index', 'index.html', 'org', 'lib', 'index.js',

'package.html']

for doc_file in scaladocs:

-_run_cmd('cd ' + scala_path + ' && mv -f ' + doc_file + ' ' +

dest_path)

+_run_cmd('cd ' + scala_path + ' && mv -f ' + doc_file + ' ' +

dest_path + '; exit 0')

+

+def build_java_docs(app):

+"""build java docs and then move the outdir"""

+java_path = app.builder.srcdir +

'/../scala-package/core/src/main/scala/org/apache/mxnet/'

+# scaldoc fails on some apis, so exit 0 to pass the check

+_run_cmd('cd ' + java_path + '; scaladoc `find . -type f -name "*.scala" |

egrep \"\/javaapi\" | egrep -v \"Suite\"`; exit 0')

+dest_path = app.builder.outdir + '/api/java/docs'

+_run_cmd('rm -rf ' + dest_path)

+_run_cmd('mkdir -p ' + dest_path)

+javadocs = ['index', 'index.html', 'org', 'lib', 'index.js',

'package.html']

+for doc_file in javadocs:

+_run_cmd('cd ' + java_path + ' && mv -f ' + doc_file + ' ' + dest_path

+ '; exit 0')

def build_clojure_docs(app):

"""build clojure doc and then move the outdir"""

@@ -125,7 +140,7 @@ def build_clojure_docs(app):

_run_cmd('rm -rf ' + dest_path)

_run_cmd('mkdir -p ' + dest_path)

clojure_doc_path = app.builder.srcdir +

'/../contrib/clojure-package/target/doc'

-_run_cmd('cd ' + clojure_doc_path + ' && cp -r * ' + dest_path)

+
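
Note on the source selection in the mxdoc.py hunks above: the Scala docs step keeps files whose path contains `/core` or `/infer` and drops anything matching `Suite` or `javaapi`, while the Java docs step keeps only paths containing `/javaapi` (again dropping `Suite`). The sketch below imitates the `egrep | egrep -v` pipelines with Python's `re` module. The patterns are copied from the diff; the file names and the `select` helper are hypothetical. For simplicity both filters run over one path list, whereas the diff runs them from different directories.

```python
import re

# Patterns copied from the egrep calls in the diff.
SCALA_INCLUDE = re.compile(r"/core|/infer")
SCALA_EXCLUDE = re.compile(r"Suite|javaapi")
JAVA_INCLUDE = re.compile(r"/javaapi")
JAVA_EXCLUDE = re.compile(r"Suite")


def select(paths, include, exclude):
    """Keep paths matching include and not matching exclude (egrep | egrep -v)."""
    return [p for p in paths if include.search(p) and not exclude.search(p)]


# Hypothetical file names, for illustration only.
paths = [
    "./core/src/main/scala/org/apache/mxnet/NDArray.scala",
    "./core/src/main/scala/org/apache/mxnet/javaapi/NDArray.scala",
    "./core/src/test/scala/org/apache/mxnet/NDArraySuite.scala",
    "./infer/src/main/scala/org/apache/mxnet/infer/Predictor.scala",
]
print(select(paths, SCALA_INCLUDE, SCALA_EXCLUDE))
print(select(paths, JAVA_INCLUDE, JAVA_EXCLUDE))
```

With these filters the two documentation sets do not overlap: a file under `javaapi` is excluded from the Scala set and is the only kind included in the Java set.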

[incubator-mxnet] branch master updated: Updates to several examples (#13068)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a commit to branch master
in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:
new 012288f Updates to several examples (#13068)
012288f is described below

commit 012288f0325d58582d1b12499e9daccdad696080
Author: Thomas Delteil
AuthorDate: Thu Nov 8 09:53:33 2018 -0800

Updates to several examples (#13068)

* Minor updates to several examples
* fix typo
* update following review
---
example/reinforcement-learning/ddpg/README.md | 2 +
example/reinforcement-learning/dqn/setup.sh| 6 +-
example/restricted-boltzmann-machine/README.md | 52
example/rnn-time-major/bucket_io.py| 264 -
example/rnn-time-major/get_sherlockholmes_data.sh | 43
example/rnn-time-major/readme.md | 24 --
example/rnn-time-major/rnn_cell_demo.py| 189 ---
example/rnn/README.md | 5 +
example/rnn/bucketing/README.md| 13 +-
example/rnn/large_word_lm/data.py | 2 +-
example/rnn/large_word_lm/readme.md| 66 --
example/rnn/word_lm/README.md | 2 +-
example/sparse/linear_classification/data.py | 5 +-
example/speech_recognition/README.md | 4 +-
example/speech_recognition/label_util.py | 2 +-
example/speech_recognition/log_util.py | 74 +++---
example/speech_recognition/main.py | 6 +-
example/speech_recognition/singleton.py| 32 ++-
example/speech_recognition/stt_datagenerator.py| 6 +-
example/speech_recognition/stt_io_iter.py | 6 +-
example/speech_recognition/stt_metric.py | 2 +-
example/speech_recognition/stt_utils.py| 6 +-
example/speech_recognition/train.py| 2 +-
example/ssd/README.md | 8 +
example/stochastic-depth/sd_cifar10.py | 31 ++-
example/stochastic-depth/sd_mnist.py | 6 +-
example/svm_mnist/svm_mnist.py | 110 +
example/svrg_module/README.md | 6 +-
.../svrg_module/benchmarks/svrg_benchmark.ipynb| 111 -
.../svrg_module/linear_regression/data_reader.py | 24 +-
example/svrg_module/linear_regression/train.py | 4 +-
31 files changed, 326 insertions(+), 787 deletions(-)

diff --git a/example/reinforcement-learning/ddpg/README.md b/example/reinforcement-learning/ddpg/README.md
index 37f42a8..2e299dd 100644
--- a/example/reinforcement-learning/ddpg/README.md
+++ b/example/reinforcement-learning/ddpg/README.md
@@ -1,6 +1,8 @@
# mx-DDPG
MXNet Implementation of DDPG
+## /!\ This example depends on RLLAB which is deprecated /!\
+
# Introduction
This is the MXNet implementation of [DDPG](https://arxiv.org/abs/1509.02971). It is tested in the rllab cart pole environment against rllab's native implementation and achieves comparably similar results. You can substitute with this anywhere you use rllab's DDPG with minor modifications.
diff --git a/example/reinforcement-learning/dqn/setup.sh b/example/reinforcement-learning/dqn/setup.sh
index 3069fef..012ff8f 100755
--- a/example/reinforcement-learning/dqn/setup.sh
+++ b/example/reinforcement-learning/dqn/setup.sh
@@ -26,11 +26,11 @@ pip install pygame
# Install arcade learning environment
if [[ "$OSTYPE" == "linux-gnu" ]]; then
-sudo apt-get install libsdl1.2-dev libsdl-gfx1.2-dev libsdl-image1.2-dev cmake
+sudo apt-get install libsdl1.2-dev libsdl-gfx1.2-dev libsdl-image1.2-dev cmake ninja-build
elif [[ "$OSTYPE" == "darwin"* ]]; then
brew install sdl sdl_image sdl_mixer sdl_ttf portmidi
fi
-git clone g...@github.com:mgbellemare/Arcade-Learning-Environment.git || true
+git clone https://github.com/mgbellemare/Arcade-Learning-Environment || true
pushd .
cd Arcade-Learning-Environment
mkdir -p build
@@ -43,6 +43,6 @@ popd
cp Arcade-Learning-Environment/ale.cfg .
# Copy roms
-git clone g...@github.com:npow/atari.git || true
+git clone https://github.com/npow/atari || true
cp -R atari/roms .
diff --git a/example/restricted-boltzmann-machine/README.md b/example/restricted-boltzmann-machine/README.md
index 129120b..a8769a5 100644
--- a/example/restricted-boltzmann-machine/README.md
+++ b/example/restricted-boltzmann-machine/README.md
@@ -8,6 +8,58 @@ Here are some samples generated by the RBM with the default hyperparameters. The
+Usage:
+
+```
+python binary_rbm_gluon.py --help
+usage: binary_rbm_gluon.py [-h] [--num-hidden NUM_HIDDEN] [--k K]
+ [--batch-size BATCH_SIZE] [--num-epoch NUM_EPOCH]
+ [--learning-rate LEARNING_RATE]
+

[incubator-mxnet] branch master updated: Updated capsnet example (#12934)

This is an automated email from the ASF dual-hosted git repository. indhub pushed a commit to branch master in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git The following commit(s) were added to refs/heads/master by this push: new 68bc9b7 Updated capsnet example (#12934) 68bc9b7 is described below commit 68bc9b7f444e76e42c02adfa97ec12149ba0d996 Author: Thomas Delteil AuthorDate: Thu Nov 8 08:38:42 2018 -0800 Updated capsnet example (#12934) * Updated capsnet * trigger CI * Update README.md --- example/capsnet/README.md | 132 example/capsnet/capsulenet.py | 695 +- 2 files changed, 413 insertions(+), 414 deletions(-) diff --git a/example/capsnet/README.md b/example/capsnet/README.md index 49a6dd1..500c7df 100644 --- a/example/capsnet/README.md +++ b/example/capsnet/README.md @@ -1,66 +1,66 @@ -**CapsNet-MXNet** -= - -This example is MXNet implementation of [CapsNet](https://arxiv.org/abs/1710.09829): -Sara Sabour, Nicholas Frosst, Geoffrey E Hinton. Dynamic Routing Between Capsules. NIPS 2017 -- The current `best test error is 0.29%` and `average test error is 0.303%` -- The `average test error on paper is 0.25%` - -Log files for the error rate are uploaded in [repository](https://github.com/samsungsds-rnd/capsnet.mxnet). 
-* * * -## **Usage** -Install scipy with pip -``` -pip install scipy -``` -Install tensorboard with pip -``` -pip install tensorboard -``` - -On Single gpu -``` -python capsulenet.py --devices gpu0 -``` -On Multi gpus -``` -python capsulenet.py --devices gpu0,gpu1 -``` -Full arguments -``` -python capsulenet.py --batch_size 100 --devices gpu0,gpu1 --num_epoch 100 --lr 0.001 --num_routing 3 --model_prefix capsnet -``` - -* * * -## **Prerequisities** - -MXNet version above (0.11.0) -scipy version above (0.19.0) - -*** -## **Results** -Train time takes about 36 seconds for each epoch (batch_size=100, 2 gtx 1080 gpus) - -CapsNet classification test error on MNIST - -``` -python capsulenet.py --devices gpu0,gpu1 --lr 0.0005 --decay 0.99 --model_prefix lr_0_0005_decay_0_99 --batch_size 100 --num_routing 3 --num_epoch 200 -``` - - - -| Trial | Epoch | train err(%) | test err(%) | train loss | test loss | -| :---: | :---: | :---: | :---: | :---: | :---: | -| 1 | 120 | 0.06 | 0.31 | 0.0056 | 0.0064 | -| 2 | 167 | 0.03 | 0.29 | 0.0048 | 0.0058 | -| 3 | 182 | 0.04 | 0.31 | 0.0046 | 0.0058 | -| average | - | 0.043 | 0.303 | 0.005 | 0.006 | - -We achieved `the best test error rate=0.29%` and `average test error=0.303%`. It is the best accuracy and fastest training time result among other implementations(Keras, Tensorflow at 2017-11-23). -The result on paper is `0.25% (average test error rate)`. - -| Implementation| test err(%) | ※train time/epoch | GPU Used| -| :---: | :---: | :---: |:---: | -| MXNet | 0.29 | 36 sec | 2 GTX 1080 | -| tensorflow | 0.49 | ※ 10 min | Unknown(4GB Memory) | -| Keras | 0.30 | 55 sec | 2 GTX 1080 Ti | +**CapsNet-MXNet** += + +This example is MXNet implementation of [CapsNet](https://arxiv.org/abs/1710.09829): +Sara Sabour, Nicholas Frosst, Geoffrey E Hinton. Dynamic Routing Between Capsules. 
NIPS 2017 +- The current `best test error is 0.29%` and `average test error is 0.303%` +- The `average test error on paper is 0.25%` + +Log files for the error rate are uploaded in [repository](https://github.com/samsungsds-rnd/capsnet.mxnet). +* * * +## **Usage** +Install scipy with pip +``` +pip install scipy +``` +Install tensorboard and mxboard with pip +``` +pip install mxboard tensorflow +``` + +On Single gpu +``` +python capsulenet.py --devices gpu0 +``` +On Multi gpus +``` +python capsulenet.py --devices gpu0,gpu1 +``` +Full arguments +``` +python capsulenet.py --batch_size 100 --devices gpu0,gpu1 --num_epoch 100 --lr 0.001 --num_routing 3 --model_prefix capsnet +``` + +* * * +## **Prerequisities** + +MXNet version above (1.2.0) +scipy version above (0.19.0) + +*** +## **Results** +Train time takes about 36 seconds for each epoch (batch_size=100, 2 gtx 1080 gpus) + +CapsNet classification test error on MNIST: + +``` +python capsulenet.py --devices gpu0,gpu1 --lr 0.0005 --decay 0.99 --model_prefix lr_0_0005_decay_0_99 --batch_size 100 --num_routing 3 --num_epoch 200 +``` + + + +| Trial | Epoch | train err(%) | test err(%) | train loss | test loss | +| :---: | :---: | :---: | :---: | :---: | :---: | +| 1 | 120 | 0.06 | 0.31 | 0.0056 | 0.0064 | +| 2 | 167 | 0.03 | 0.29 | 0.0048 | 0.0058 | +| 3 | 182 | 0.04 | 0.31 | 0.0046 | 0.0058 | +| average | - | 0.043 | 0.303 | 0.005 | 0.006 | + +We achieved `the best test error rate=0.29%` and `average test error=0.303%`. It is the best accuracy and fastest training time result among other implementations(Keras, Tensorflow at 2017-11-23). +The result on paper

[incubator-mxnet] branch master updated: Update Gluon example folder (#12951)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new c169b14 Update Gluon example folder (#12951)

c169b14 is described below

commit c169b14b35cb8eb25df166d2e5f5ddc1c4fa5d5f

Author: Thomas Delteil

AuthorDate: Tue Nov 6 11:01:43 2018 -0800

Update Gluon example folder (#12951)

* Reorganized the Gluon folder in example

* trigger CI

* update reference

* fix out of place accumulation

---

docs/tutorials/unsupervised_learning/gan.md| 2 +-

example/gluon/{ => actor_critic}/actor_critic.py | 0

example/gluon/{DCGAN => dc_gan}/README.md | 0

example/gluon/{DCGAN => dc_gan}/__init__.py| 0

example/gluon/{DCGAN => dc_gan}/dcgan.py | 0

example/gluon/{DCGAN => dc_gan}/inception_score.py | 0

.../kaggle_k_fold_cross_validation.py | 0

example/gluon/learning_rate_manipulation.py| 63 --

example/gluon/{ => lstm_crf}/lstm_crf.py | 10 ++--

example/gluon/{ => mnist}/mnist.py | 0

example/gluon/sn_gan/data.py | 2 +-

example/gluon/sn_gan/model.py | 2 +-

example/gluon/sn_gan/train.py | 2 +-

example/gluon/sn_gan/utils.py | 2 +-

.../{ => super_resolution}/super_resolution.py | 0

example/notebooks/README.md| 4 --

16 files changed, 12 insertions(+), 75 deletions(-)

diff --git a/docs/tutorials/unsupervised_learning/gan.md

b/docs/tutorials/unsupervised_learning/gan.md

index 1556bf6..f436a15 100644

--- a/docs/tutorials/unsupervised_learning/gan.md

+++ b/docs/tutorials/unsupervised_learning/gan.md

@@ -394,7 +394,7 @@ As a result, we have created two neural nets: a Generator,

which is able to crea

Along the way, we have learned how to do the image manipulation and

visualization that is associated with the training of deep neural nets. We have

also learned how to use MXNet's Module APIs to perform advanced model training

functionality to fit the model.

## Acknowledgements

-This tutorial is based on [MXNet DCGAN

codebase](https://github.com/apache/incubator-mxnet/blob/master/example/gluon/dcgan.py),

+This tutorial is based on [MXNet DCGAN

codebase](https://github.com/apache/incubator-mxnet/blob/master/example/gluon/dc_gan/dcgan.py),

[The original paper on GANs](https://arxiv.org/abs/1406.2661), as well as

[this paper on deep convolutional GANs](https://arxiv.org/abs/1511.06434).

\ No newline at end of file

diff --git a/example/gluon/actor_critic.py

b/example/gluon/actor_critic/actor_critic.py

similarity index 100%

rename from example/gluon/actor_critic.py

rename to example/gluon/actor_critic/actor_critic.py

diff --git a/example/gluon/DCGAN/README.md b/example/gluon/dc_gan/README.md

similarity index 100%

rename from example/gluon/DCGAN/README.md

rename to example/gluon/dc_gan/README.md

diff --git a/example/gluon/DCGAN/__init__.py b/example/gluon/dc_gan/__init__.py

similarity index 100%

rename from example/gluon/DCGAN/__init__.py

rename to example/gluon/dc_gan/__init__.py

diff --git a/example/gluon/DCGAN/dcgan.py b/example/gluon/dc_gan/dcgan.py

similarity index 100%

rename from example/gluon/DCGAN/dcgan.py

rename to example/gluon/dc_gan/dcgan.py

diff --git a/example/gluon/DCGAN/inception_score.py

b/example/gluon/dc_gan/inception_score.py

similarity index 100%

rename from example/gluon/DCGAN/inception_score.py

rename to example/gluon/dc_gan/inception_score.py

diff --git a/example/gluon/kaggle_k_fold_cross_validation.py

b/example/gluon/house_prices/kaggle_k_fold_cross_validation.py

similarity index 100%

rename from example/gluon/kaggle_k_fold_cross_validation.py

rename to example/gluon/house_prices/kaggle_k_fold_cross_validation.py

diff --git a/example/gluon/learning_rate_manipulation.py

b/example/gluon/learning_rate_manipulation.py

deleted file mode 100644

index be1ffc2..000

--- a/example/gluon/learning_rate_manipulation.py

+++ /dev/null

@@ -1,63 +0,0 @@

-# Licensed to the Apache Software Foundation (ASF) under one

-# or more contributor license agreements. See the NOTICE file

-# distributed with this work for additional information

-# regarding copyright ownership. The ASF licenses this file

-# to you under the Apache License, Version 2.0 (the

-# "License"); you may not use this file except in compliance

-# with the License. You may obtain a copy of the License at

-#

-# http://www.apache.org/licenses/LICENSE-2.0

-#

-# Unless required by applicable law or agreed to in writing,

-# software distributed under the License is distributed on an

-# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

-# KIND, either express or implied. See the License for the

-# specific language go

[incubator-mxnet] branch master updated: Updated / Deleted some examples (#12968)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new 3e8a976 Updated / Deleted some examples (#12968)

3e8a976 is described below

commit 3e8a976d805dee130831d4f54b7a5dd9f1a7c7bd

Author: Thomas Delteil

AuthorDate: Fri Nov 2 16:03:51 2018 -0700

Updated / Deleted some examples (#12968)

* Updated / Deleted some examples

* remove onnx test

* remove onnx test

---

ci/docker/runtime_functions.sh | 1 -

example/multivariate_time_series/README.md | 4 +-

example/named_entity_recognition/README.md | 1 -

example/named_entity_recognition/src/metrics.py| 2 +-

example/named_entity_recognition/src/ner.py| 2 +-

example/nce-loss/README.md | 2 +-

example/numpy-ops/numpy_softmax.py | 84 -

example/onnx/super_resolution.py | 86 --

example/python-howto/README.md | 37 --

example/python-howto/data_iter.py | 76 ---

example/python-howto/debug_conv.py | 39 --

example/python-howto/monitor_weights.py| 46

example/python-howto/multiple_outputs.py | 38 --

.../{mxnet_adversarial_vae => vae-gan}/README.md | 0

.../convert_data.py| 0

.../vaegan_mxnet.py| 0

.../python-pytest/onnx/import/onnx_import_test.py | 15

17 files changed, 6 insertions(+), 427 deletions(-)

diff --git a/ci/docker/runtime_functions.sh b/ci/docker/runtime_functions.sh

index 0adec07..095eb57 100755

--- a/ci/docker/runtime_functions.sh

+++ b/ci/docker/runtime_functions.sh

@@ -877,7 +877,6 @@ unittest_centos7_gpu() {

integrationtest_ubuntu_cpu_onnx() {

set -ex

export PYTHONPATH=./python/

- python example/onnx/super_resolution.py

pytest tests/python-pytest/onnx/import/mxnet_backend_test.py

pytest tests/python-pytest/onnx/import/onnx_import_test.py

pytest tests/python-pytest/onnx/import/gluon_backend_test.py

diff --git a/example/multivariate_time_series/README.md

b/example/multivariate_time_series/README.md

index 704c86a..87baca3 100644

--- a/example/multivariate_time_series/README.md

+++ b/example/multivariate_time_series/README.md

@@ -3,6 +3,8 @@

- This repo contains an MXNet implementation of

[this](https://arxiv.org/pdf/1703.07015.pdf) state of the art time series

forecasting model.

- You can find my blog post on the model

[here](https://opringle.github.io/2018/01/05/deep_learning_multivariate_ts.html)

+- A Gluon implementation is available

[here](https://github.com/safrooze/LSTNet-Gluon)

+

## Running the code

@@ -22,7 +24,7 @@

## Hyperparameters

-The default arguements in `lstnet.py` achieve equivolent performance to the

published results. For other datasets, the following hyperparameters provide a

good starting point:

+The default arguements in `lstnet.py` achieve equivalent performance to the

published results. For other datasets, the following hyperparameters provide a

good starting point:

- q = {2^0, 2^1, ... , 2^9} (1 week is typical value)

- Convolutional num filters = {50, 100, 200}

diff --git a/example/named_entity_recognition/README.md

b/example/named_entity_recognition/README.md

index 260c19d..2b28b3b 100644

--- a/example/named_entity_recognition/README.md

+++ b/example/named_entity_recognition/README.md

@@ -11,7 +11,6 @@ To reproduce the preprocessed training data:

1. Download and unzip the data:

https://www.kaggle.com/abhinavwalia95/entity-annotated-corpus/downloads/ner_dataset.csv

2. Move ner_dataset.csv into `./data`

-3. create `./preprocessed_data` directory

3. `$ cd src && python preprocess.py`

To train the model:

diff --git a/example/named_entity_recognition/src/metrics.py

b/example/named_entity_recognition/src/metrics.py

index 40c5015..d3d7378 100644

--- a/example/named_entity_recognition/src/metrics.py

+++ b/example/named_entity_recognition/src/metrics.py

@@ -27,7 +27,7 @@ def load_obj(name):

with open(name + '.pkl', 'rb') as f:

return pickle.load(f)

-tag_dict = load_obj("../preprocessed_data/tag_to_index")

+tag_dict = load_obj("../data/tag_to_index")

not_entity_index = tag_dict["O"]

def classifer_metrics(label, pred):

diff --git a/example/named_entity_recognition/src/ner.py

b/example/named_entity_recognition/src/ner.py

index 561db4c..7f5dd84 100644

--- a/example/named_entity_recognition/src/ner.py

+++ b/example/named_entity_recognition/src/ner.py

@@ -34,7 +34,7 @@ logging.basicConfig(level=logging.DEBUG)

parser = argparse.ArgumentParser(description="Deep neural net

[incubator-mxnet] branch master updated: fix mac r install and windows python build from source docs (#12919)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new ffeaf31 fix mac r install and windows python build from source docs

(#12919)

ffeaf31 is described below

commit ffeaf315a41b1bda48deeaab5a8c4109ea63d7cc

Author: Aaron Markham

AuthorDate: Fri Oct 26 13:15:56 2018 -0700

fix mac r install and windows python build from source docs (#12919)

* fix mac r install and windows python build from source docs

* reorder macos r install instructions

---

docs/install/index.md | 19 ++-

1 file changed, 14 insertions(+), 5 deletions(-)

diff --git a/docs/install/index.md b/docs/install/index.md

index fc13697..7ddbaa8 100644

--- a/docs/install/index.md

+++ b/docs/install/index.md

@@ -597,7 +597,8 @@ MXNet developers should refer to the MXNet wiki's https://cwiki.apache.

-Install OpenCV and OpenBLAS.

+

+To run MXNet you also should have OpenCV and OpenBLAS installed. You may

install them with `brew` as follows:

```bash

brew install opencv

@@ -757,7 +758,7 @@ All MKL pip packages are experimental prior to version

1.3.0.

-

+

Docker images with *MXNet* are available at [Docker

Hub](https://hub.docker.com/r/mxnet/).

@@ -800,7 +801,13 @@ mxnet/python1.3.0_cpu_mkl deaf9bf61d29

4 days ago

**Step 4** Validate the installation.

-

+

+

+

+

+Refer to the MXNet Windows installation guide

+

+

@@ -886,7 +893,7 @@ Refer to

[#8671](https://github.com/apache/incubator-mxnet/issues/8671) for stat

You can either upgrade your CUDA install or install the MXNet package that

supports your CUDA version.

-

+

@@ -894,7 +901,7 @@ You can either upgrade your CUDA install or install the

MXNet package that suppo

To build from source, refer to the MXNet Windows

installation guide.

-

+

@@ -915,6 +922,8 @@ options(repos = cran)

install.packages("mxnet")

```

+To run MXNet you also should have OpenCV and OpenBLAS installed.

+

[incubator-mxnet] branch master updated: fix cnn visualization tutorial (#12719)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new 865255a fix cnn visualization tutorial (#12719)

865255a is described below

commit 865255a398223510d857820f3061a26065bcdb74

Author: Thomas Delteil

AuthorDate: Wed Oct 10 11:31:14 2018 -0700

fix cnn visualization tutorial (#12719)

---

docs/tutorials/vision/cnn_visualization.md | 16 +++-

1 file changed, 11 insertions(+), 5 deletions(-)

diff --git a/docs/tutorials/vision/cnn_visualization.md

b/docs/tutorials/vision/cnn_visualization.md

index ea027df..940c261 100644

--- a/docs/tutorials/vision/cnn_visualization.md

+++ b/docs/tutorials/vision/cnn_visualization.md

@@ -99,12 +99,18 @@ def get_vgg(num_layers, ctx=mx.cpu(),

root=os.path.join('~', '.mxnet', 'models')

# Get the number of convolution layers and filters

layers, filters = vgg_spec[num_layers]

-# Build the VGG network

+# Build the modified VGG network

net = VGG(layers, filters, **kwargs)

-

-# Load pretrained weights from model zoo

-from mxnet.gluon.model_zoo.model_store import get_model_file

-net.load_params(get_model_file('vgg%d' % num_layers, root=root), ctx=ctx)

+net.initialize(ctx=ctx)

+

+# Get the pretrained model

+vgg = mx.gluon.model_zoo.vision.get_vgg(num_layers, pretrained=True,

ctx=ctx)

+

+# Set the parameters in the new network

+params = vgg.collect_params()

+for key in params:

+param = params[key]

+net.collect_params()[net.prefix+key.replace(vgg.prefix,

'')].set_data(param.data())

return net
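The hunk above stops loading a parameter file directly. It now copies each parameter from a pretrained model-zoo VGG into the modified network, matching names after swapping the name prefix. The name mapping can be sketched with plain dictionaries instead of MXNet objects. The prefixes `vgg0_` and `vgg1_`, the parameter names, and the helper names `remap_key` and `copy_params` are hypothetical and chosen only for illustration.

```python
def remap_key(key, src_prefix, dst_prefix):
    """Translate a source parameter name into the destination naming scheme."""
    # Mirrors `net.prefix + key.replace(vgg.prefix, '')` from the diff.
    return dst_prefix + key.replace(src_prefix, '')


def copy_params(src_params, dst_params, src_prefix, dst_prefix):
    """Copy every source value into the matching destination slot."""
    for key, value in src_params.items():
        target = remap_key(key, src_prefix, dst_prefix)
        if target not in dst_params:
            # A missing target means the two networks do not line up.
            raise KeyError(target)
        dst_params[target] = value
    return dst_params


# Hypothetical parameter tables standing in for collect_params() results.
pretrained = {'vgg0_conv0_weight': [1.0, 2.0], 'vgg0_conv0_bias': [0.5]}
modified = {'vgg1_conv0_weight': None, 'vgg1_conv0_bias': None}
copy_params(pretrained, modified, 'vgg0_', 'vgg1_')
```

Raising on a missing target is a choice made for this sketch; it makes a layer mismatch between the two networks visible at copy time.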

[incubator-mxnet] branch master updated: Updated download links and verification instructions (#12651)

This is an automated email from the ASF dual-hosted git repository. indhub pushed a commit to branch master in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git The following commit(s) were added to refs/heads/master by this push: new 76ae725 Updated download links and verification instructions (#12651) 76ae725 is described below commit 76ae7252a6751275565600d287b7d432b685fd7d Author: Aaron Markham AuthorDate: Mon Sep 24 14:28:11 2018 -0700 Updated download links and verification instructions (#12651) * use mirror link; add verification instructions * use https links --- docs/install/download.md | 56 +--- 1 file changed, 48 insertions(+), 8 deletions(-) diff --git a/docs/install/download.md b/docs/install/download.md index 3660204..b61b815 100644 --- a/docs/install/download.md +++ b/docs/install/download.md @@ -4,11 +4,51 @@ These source archives are generated from tagged releases. Updates and patches wi | Version | Source | PGP | SHA | |-|-|-|-| -| 1.3.0 | [Download](http://apache.org/dist/incubator/mxnet/1.3.0/apache-mxnet-src-1.3.0-incubating.tar.gz) | [Download](http://apache.org/dist/incubator/mxnet/1.3.0/apache-mxnet-src-1.3.0-incubating.tar.gz.asc) | [Download](http://apache.org/dist/incubator/mxnet/1.3.0/apache-mxnet-src-1.3.0-incubating.tar.gz.sha512) | -| 1.2.1 | [Download](http://archive.apache.org/dist/incubator/mxnet/1.2.1/apache-mxnet-src-1.2.1-incubating.tar.gz) | [Download](http://archive.apache.org/dist/incubator/mxnet/1.2.1/apache-mxnet-src-1.2.1-incubating.tar.gz.asc) | [Download](http://archive.apache.org/dist/incubator/mxnet/1.2.1/apache-mxnet-src-1.2.1-incubating.tar.gz.sha512) | -| 1.2.0 | [Download](http://archive.apache.org/dist/incubator/mxnet/1.2.0/apache-mxnet-src-1.2.0-incubating.tar.gz) | [Download](http://archive.apache.org/dist/incubator/mxnet/1.2.0/apache-mxnet-src-1.2.0-incubating.tar.gz.asc) | [Download](http://archive.apache.org/dist/incubator/mxnet/1.2.0/apache-mxnet-src-1.2.0-incubating.tar.gz.sha512) | -| 
1.1.0 | [Download](http://archive.apache.org/dist/incubator/mxnet/1.1.0/apache-mxnet-src-1.1.0-incubating.tar.gz) | [Download](http://archive.apache.org/dist/incubator/mxnet/1.1.0/apache-mxnet-src-1.1.0-incubating.tar.gz.asc) | [Download](http://archive.apache.org/dist/incubator/mxnet/1.1.0/apache-mxnet-src-1.1.0-incubating.tar.gz.sha512) | -| 1.0.0 | [Download](http://archive.apache.org/dist/incubator/mxnet/1.0.0/apache-mxnet-src-1.0.0-incubating.tar.gz) | [Download](http://archive.apache.org/dist/incubator/mxnet/1.0.0/apache-mxnet-src-1.0.0-incubating.tar.gz.asc) | [Download](http://archive.apache.org/dist/incubator/mxnet/1.0.0/apache-mxnet-src-1.0.0-incubating.tar.gz.sha512) | -| 0.12.1 | [Download](http://archive.apache.org/dist/incubator/mxnet/0.12.1/apache-mxnet-src-0.12.1-incubating.tar.gz) | [Download](http://archive.apache.org/dist/incubator/mxnet/0.12.1/apache-mxnet-src-0.12.1-incubating.tar.gz.asc) | [Download](http://archive.apache.org/dist/incubator/mxnet/0.12.1/apache-mxnet-src-0.12.1-incubating.tar.gz.sha512) | -| 0.12.0 | [Download](http://archive.apache.org/dist/incubator/mxnet/0.12.0/apache-mxnet-src-0.12.0-incubating.tar.gz) | [Download](http://archive.apache.org/dist/incubator/mxnet/0.12.0/apache-mxnet-src-0.12.0-incubating.tar.gz.asc) | [Download](http://archive.apache.org/dist/incubator/mxnet/0.12.0/apache-mxnet-src-0.12.0-incubating.tar.gz.sha512) | -| 0.11.0 | [Download](http://archive.apache.org/dist/incubator/mxnet/0.11.0/apache-mxnet-src-0.11.0-incubating.tar.gz) | [Download](http://archive.apache.org/dist/incubator/mxnet/0.11.0/apache-mxnet-src-0.11.0-incubating.tar.gz.asc) | [Download](http://archive.apache.org/dist/incubator/mxnet/0.11.0/apache-mxnet-src-0.11.0-incubating.tar.gz.sha512) | +| 1.3.0 | [Download](https://www.apache.org/dyn/closer.cgi/incubator/mxnet/1.3.0/apache-mxnet-src-1.3.0-incubating.tar.gz) | [Download](https://apache.org/dist/incubator/mxnet/1.3.0/apache-mxnet-src-1.3.0-incubating.tar.gz.asc) | 
[Download](https://apache.org/dist/incubator/mxnet/1.3.0/apache-mxnet-src-1.3.0-incubating.tar.gz.sha512) | +| 1.2.1 | [Download](https
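The commit message above mentions added verification instructions, and the table links a `.sha512` file for each release. The instructions themselves are cut off in this excerpt, so the following is only a minimal sketch of checking a SHA-512 digest with Python's standard library. The function names are hypothetical, and the sketch assumes the expected digest is available as a bare hex string; published `.sha512` files may use a different layout.

```python
import hashlib


def sha512_of_file(path, chunk_size=1 << 20):
    """Return the hex SHA-512 digest of a file, read in chunks."""
    digest = hashlib.sha512()
    with open(path, 'rb') as handle:
        # Read fixed-size chunks so large archives do not need to fit in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b''):
            digest.update(chunk)
    return digest.hexdigest()


def digest_matches(path, expected_hex):
    """Compare a file's digest with an expected hex string (assumed bare hex)."""
    return sha512_of_file(path) == expected_hex.strip().lower()
```

A digest check only detects corruption or tampering relative to the published hash; verifying the PGP signature is a separate step that this sketch does not cover.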

[incubator-mxnet] branch master updated (d4991a0 -> dba9487)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a change to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git.

from d4991a0 [MXNET-360]auto convert str to bytes in img.imdecode when py3 (#10697)

add dba9487 Update and modify Windows docs (#12620)

No new revisions were added by this update.

Summary of changes:

docs/install/windows_setup.md | 5 +++--

1 file changed, 3 insertions(+), 2 deletions(-)

[incubator-mxnet] branch master updated: Remove pip overwrites (#12604)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new 0f8d2d2 Remove pip overwrites (#12604)

0f8d2d2 is described below

commit 0f8d2d2267880ec575e8d41255f9b02c5d6ad552

Author: Aaron Markham

AuthorDate: Thu Sep 20 10:17:34 2018 -0700

Remove pip overwrites (#12604)

* remove overwrites on pip instructions coming from source

* removing examples version overwrite

---

docs/build_version_doc/AddVersion.py | 25 -

1 file changed, 25 deletions(-)

diff --git a/docs/build_version_doc/AddVersion.py

b/docs/build_version_doc/AddVersion.py

index f5e28df..f429d34 100755

--- a/docs/build_version_doc/AddVersion.py

+++ b/docs/build_version_doc/AddVersion.py

@@ -87,30 +87,5 @@ if __name__ == '__main__':

outstr = outstr.replace('http://mxnet.io',

'https://mxnet.incubator.apache.org/'

'versions/%s' %

(args.current_version))

-# Fix git clone and pip installation to specific tag

-pip_pattern = ['', '-cu80', '-cu75', '-cu80mkl', '-cu75mkl',

'-mkl']

-if args.current_version == 'master':

-outstr = outstr.replace('git clone --recursive

https://github.com/dmlc/mxnet',

-'git clone --recursive

https://github.com/apache/incubator-mxnet.git mxnet')

-for trail in pip_pattern:

-outstr = outstr.replace('pip install mxnet%s<' % (trail),

-'pip install mxnet%s --pre<' %

(trail))

-outstr = outstr.replace('pip install mxnet%s\n<' % (trail),

-'pip install mxnet%s --pre\n<' %

(trail))

-else:

-outstr = outstr.replace('git clone --recursive

https://github.com/dmlc/mxnet',

-'git clone --recursive

https://github.com/apache/incubator-mxnet.git mxnet '

-'--branch %s' % (args.current_version))

-for trail in pip_pattern:

-outstr = outstr.replace('pip install mxnet%s<' % (trail),

-'pip install mxnet%s==%s<' %

(trail, args.current_version))

-outstr = outstr.replace('pip install mxnet%s\n<' % (trail),

-'pip install mxnet%s==%s\n<' %

(trail, args.current_version))

-

-# Add tag for example link

-outstr =

outstr.replace('https://github.com/apache/incubator-mxnet/tree/master/example',

-

'https://github.com/apache/incubator-mxnet/tree/%s/example' %

-(args.current_version))

-

with open(os.path.join(path, name), "w") as outf:

outf.write(outstr)
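The block removed above rewrote `pip install` commands in the generated HTML so that each versioned copy of the docs pinned its own release, while `master` got `--pre`. A simplified sketch of that behavior follows, assuming only the `<`-terminated pattern (the removed code also handled a trailing newline). The function name `pin_pip_commands` and the sample strings in the usage note are hypothetical.

```python
# Package-name suffixes taken from the removed `pip_pattern` list.
PIP_SUFFIXES = ['', '-cu80', '-cu75', '-cu80mkl', '-cu75mkl', '-mkl']


def pin_pip_commands(html, version):
    """Rewrite pip commands in `html` the way the removed block did."""
    for suffix in PIP_SUFFIXES:
        if version == 'master':
            # Master docs pointed readers at pre-release packages.
            replacement = 'pip install mxnet%s --pre<' % suffix
        else:
            # Versioned docs pinned the matching release.
            replacement = 'pip install mxnet%s==%s<' % (suffix, version)
        html = html.replace('pip install mxnet%s<' % suffix, replacement)
    return html
```

For example, `pin_pip_commands('<pre>pip install mxnet-cu80</pre>', '1.2.1')` yields a command pinned to `mxnet-cu80==1.2.1`. With the block removed, the generated pages keep whatever install command the source docs contain.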

[incubator-mxnet] branch master updated: improve tutorial redirection (#12607)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new ba993d1 improve tutorial redirection (#12607)

ba993d1 is described below

commit ba993d1091044ab9e74e84dae21cef340f263bf5

Author: Aaron Markham

AuthorDate: Thu Sep 20 09:53:09 2018 -0700

improve tutorial redirection (#12607)

---

docs/build_version_doc/artifacts/.htaccess | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/docs/build_version_doc/artifacts/.htaccess b/docs/build_version_doc/artifacts/.htaccess

index 95b311a..d553ce5 100644

--- a/docs/build_version_doc/artifacts/.htaccess

+++ b/docs/build_version_doc/artifacts/.htaccess

@@ -11,7 +11,7 @@ RewriteRule ^versions/[^\/]+/community/.*$ /community/ [R=301,L]

 RewriteRule ^versions/[^\/]+/faq/.*$ /faq/ [R=301,L]

 RewriteRule ^versions/[^\/]+/gluon/.*$ /gluon/ [R=301,L]

 RewriteRule ^versions/[^\/]+/install/.*$ /install/ [R=301,L]

-RewriteRule ^versions/[^\/]+/tutorials/.*$ /tutorials/ [R=301,L]

+RewriteRule ^versions/[^\/]+/tutorials/(.*)$ /tutorials/$1 [R=301,L]

 # Redirect navbar APIs that did not exist

 RewriteRule ^versions/0.11.0/api/python/contrib/onnx.html /error/api.html [R=301,L]
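The rule change in this commit adds a capture group, so a versioned tutorial URL redirects to the same page under `/tutorials/` rather than to the tutorials index. The effect can be approximated with Python's `re` module. This is only an illustration of the pattern, not of Apache's full mod_rewrite semantics; the `redirect` helper and the sample paths are hypothetical, and `$1` is written as `\1` in Python's replacement syntax.

```python
import re

# (pattern, target) pairs transcribed from the old and new RewriteRule lines.
OLD_RULE = (r'^versions/[^\/]+/tutorials/.*$', r'/tutorials/')
NEW_RULE = (r'^versions/[^\/]+/tutorials/(.*)$', r'/tutorials/\1')


def redirect(path, rule):
    """Return the redirect target for `path`, or None if the rule does not match."""
    pattern, target = rule
    match = re.match(pattern, path)
    if match is None:
        return None
    # expand() substitutes captured groups into the target template.
    return match.expand(target)


old_target = redirect('versions/1.2.0/tutorials/gluon/mnist.html', OLD_RULE)
new_target = redirect('versions/1.2.0/tutorials/gluon/mnist.html', NEW_RULE)
```

Under the old rule every versioned tutorial path collapses to `/tutorials/`; under the new rule the trailing part of the path is preserved.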

[incubator-mxnet] branch master updated: add TensorRT tutorial to index and fix ToC (#12587)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new 73d8897 add TensorRT tutorial to index and fix ToC (#12587)

73d8897 is described below

commit 73d88974f8bca1e68441606fb0787a2cd17eb364

Author: Aaron Markham

AuthorDate: Wed Sep 19 10:32:22 2018 -0700

add TensorRT tutorial to index and fix ToC (#12587)

* adding tensorrt tutorial to index

* fix toc for tutorials

* adding back new tutorials

* add control_flow index to whitelist

---

docs/tutorials/control_flow/index.md | 8

docs/tutorials/index.md | 4 +++-

tests/tutorials/test_sanity_tutorials.py | 1 +

3 files changed, 12 insertions(+), 1 deletion(-)

diff --git a/docs/tutorials/control_flow/index.md b/docs/tutorials/control_flow/index.md

new file mode 100644

index 000..87d7289

--- /dev/null

+++ b/docs/tutorials/control_flow/index.md

@@ -0,0 +1,8 @@

+# Tutorials

+

+```eval_rst

+.. toctree::

+ :glob:

+

+ *

+```

diff --git a/docs/tutorials/index.md b/docs/tutorials/index.md

index 8a6ac40..df1d892 100644

--- a/docs/tutorials/index.md

+++ b/docs/tutorials/index.md

@@ -3,9 +3,9 @@

 ```eval_rst

 .. toctree::

 :hidden:

-

 basic/index.md

 c++/index.md

+ control_flow/index.md

 embedded/index.md

 gluon/index.md

 nlp/index.md

@@ -15,6 +15,7 @@

 scala/index.md

 sparse/index.md

 speech_recognition/index.md

+ tensorrt/index.md

 unsupervised_learning/index.md

 vision/index.md

 ```

@@ -118,6 +119,7 @@ Select API:

 * [Large-Scale Multi-Host Multi-GPU Image Classification](/tutorials/vision/large_scale_classification.html)

 * [Importing an ONNX model into MXNet](/tutorials/onnx/super_resolution.html)

 * [Hybridize Gluon models with control flows](/tutorials/control_flow/ControlFlowTutorial.html)

+* [Optimizing Deep Learning Computation Graphs with TensorRT](/tutorials/tensorrt/inference_with_trt.html)

 * API Guides

 * Core APIs

 * NDArray

diff --git a/tests/tutorials/test_sanity_tutorials.py b/tests/tutorials/test_sanity_tutorials.py

index 0fa3ccb..f9fb0ac 100644

--- a/tests/tutorials/test_sanity_tutorials.py

+++ b/tests/tutorials/test_sanity_tutorials.py

@@ -27,6 +27,7 @@ import re

 whitelist = ['basic/index.md',

 'c++/basics.md',

 'c++/index.md',

+ 'control_flow/index.md',

 'embedded/index.md',

 'embedded/wine_detector.md',

 'gluon/index.md',

[incubator-mxnet] branch master updated: Disable installation nightly test (#12571)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new f6d2bef Disable installation nightly test (#12571)

f6d2bef is described below

commit f6d2bef6562116c479b4e615bc9294a8bc404af3

Author: Aaron Markham

AuthorDate: Tue Sep 18 10:07:16 2018 -0700

Disable installation nightly test (#12571)

* force versions to go to API version

* fully disable the nightly installation tests

---

tests/nightly/Jenkinsfile | 5 ++---

1 file changed, 2 insertions(+), 3 deletions(-)

diff --git a/tests/nightly/Jenkinsfile b/tests/nightly/Jenkinsfile

index 35996fb..de550a0 100755

--- a/tests/nightly/Jenkinsfile

+++ b/tests/nightly/Jenkinsfile

@@ -45,7 +45,7 @@ core_logic: {

//Some install guide tests are currently diabled and tracked here:

//1. https://github.com/apache/incubator-mxnet/issues/11369

//2. https://github.com/apache/incubator-mxnet/issues/11288

- utils.docker_run('ubuntu_base_cpu', 'nightly_test_installation

ubuntu_python_cpu_virtualenv', false)

+ //utils.docker_run('ubuntu_base_cpu', 'nightly_test_installation

ubuntu_python_cpu_virtualenv', false)

//docker_run('ubuntu_base_cpu', 'nightly_test_installation

ubuntu_python_cpu_pip', false)

//docker_run('ubuntu_base_cpu', 'nightly_test_installation

ubuntu_python_cpu_docker', false)

//docker_run('ubuntu_base_cpu', 'nightly_test_installation

ubuntu_python_cpu_source', false)

@@ -59,7 +59,7 @@ core_logic: {

//Some install guide tests are currently diabled and tracked here:

//1. https://github.com/apache/incubator-mxnet/issues/11369

//2. https://github.com/apache/incubator-mxnet/issues/11288

- utils.docker_run('ubuntu_base_gpu', 'nightly_test_installation

ubuntu_python_gpu_virtualenv', true)

+ //utils.docker_run('ubuntu_base_gpu', 'nightly_test_installation

ubuntu_python_gpu_virtualenv', true)

//docker_run('ubuntu_base_gpu', 'nightly_test_installation

ubuntu_python_gpu_pip', true)

//docker_run('ubuntu_base_gpu', 'nightly_test_installation

ubuntu_python_gpu_docker', true)

utils.docker_run('ubuntu_base_gpu', 'nightly_test_installation

ubuntu_python_gpu_source', true)

@@ -131,4 +131,3 @@ failure_handler: {

}

}

)

-

[incubator-mxnet] branch master updated (9032e93 -> d8c51e5)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a change to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git.

from 9032e93 Updating news, readme files and bumping master version to 1.3.1 (#12525)

add d8c51e5 replacing windows setup with newer instructions (#12504)

No new revisions were added by this update.

Summary of changes:

docs/install/windows_setup.md | 246 --

1 file changed, 190 insertions(+), 56 deletions(-)

[incubator-mxnet] branch master updated: update apachecon links to https (#12521)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new e81c0e2 update apachecon links to https (#12521)

e81c0e2 is described below

commit e81c0e246f0bb00a5e0d0b134e93a1af7f0c82bb

Author: Aaron Markham

AuthorDate: Wed Sep 12 15:53:44 2018 -0700

update apachecon links to https (#12521)

* update apachecon links to https

* nudging file to get past flakey test

---

docs/_static/mxnet-theme/index.html | 3 +--

1 file changed, 1 insertion(+), 2 deletions(-)

diff --git a/docs/_static/mxnet-theme/index.html b/docs/_static/mxnet-theme/index.html

index c8417ef..d23d45d 100644

--- a/docs/_static/mxnet-theme/index.html

+++ b/docs/_static/mxnet-theme/index.html

@@ -15,7 +15,7 @@

-http://www.apachecon.com/acna18/; class="section-tout-promo">http://www.apachecon.com/acna18/banners/acna-sleek-highres.png; width="65%" alt="apachecon"/>

+https://www.apachecon.com/acna18/; class="section-tout-promo">https://www.apachecon.com/acna18/banners/acna-sleek-highres.png; width="65%" alt="apachecon"/>

@@ -42,7 +42,6 @@

-

[incubator-mxnet] branch master updated: fix subscribe links, remove disabled icons (#12474)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new acede67 fix subscribe links, remove disabled icons (#12474)

acede67 is described below

commit acede67649bf40bdbcf0081d4aea0a13aba8f500

Author: Aaron Markham

AuthorDate: Mon Sep 10 16:54:17 2018 -0700

fix subscribe links, remove disabled icons (#12474)

* fix subscribe links, remove disabled icons

* update slack channel

---

docs/community/mxnet_channels.md | 8

1 file changed, 4 insertions(+), 4 deletions(-)

diff --git a/docs/community/mxnet_channels.md b/docs/community/mxnet_channels.md

index 18dc1bc..98cce94 100644

--- a/docs/community/mxnet_channels.md

+++ b/docs/community/mxnet_channels.md

@@ -2,9 +2,9 @@

Converse with the MXNet community via the following channels:

-- [Forum](https://discuss.mxnet.io/): [discuss.mxnet.io](https://discuss.mxnet.io/)

-- [MXNet Apache developer mailing list](https://lists.apache.org/list.html?d...@mxnet.apache.org) (d...@mxnet.apache.org): To subscribe, send an email to mailto:user-subscr...@mxnet.apache.org;>dev-subscr...@mxnet.apache.org

-- [MXNet Apache user mailing list](https://lists.apache.org/list.html?u...@mxnet.apache.org) (u...@mxnet.apache.org): To subscribe, send an email to mailto:dev-subscr...@mxnet.apache.org;>user-subscr...@mxnet.apache.org

-- [MXNet Slack channel](https://apache-mxnet.slack.com): To request an invitation to the channel please subscribe to the mailing list above and then email: mailto:d...@mxnet.apache.org;>d...@mxnet.apache.org

+- [Forum](https://discuss.mxnet.io/): [discuss.mxnet.io](https://discuss.mxnet.io/)

+- [MXNet Apache developer mailing list](https://lists.apache.org/list.html?d...@mxnet.apache.org) (d...@mxnet.apache.org): To subscribe, send an email to mailto:user-subscr...@mxnet.apache.org;>user-subscr...@mxnet.apache.org

+- [MXNet Apache user mailing list](https://lists.apache.org/list.html?u...@mxnet.apache.org) (u...@mxnet.apache.org): To subscribe, send an email to mailto:dev-subscr...@mxnet.apache.org;>dev-subscr...@mxnet.apache.org

+- [MXNet Slack channel](https://the-asf.slack.com/) (Channel: #mxnet): To request an invitation to the channel please subscribe to the mailing list above and then email: mailto:d...@mxnet.apache.org;>d...@mxnet.apache.org

Note: if you have an email address with apache.org, you do not need an approval to join the MXNet Slack channel.

[incubator-mxnet] branch master updated: Installation instructions consolidation (#12388)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new d7111d3 Installation instructions consolidation (#12388)

d7111d3 is described below

commit d7111d357dd682e9e75c6adc5c73e6b74c5541dd

Author: Aaron Markham

AuthorDate: Wed Sep 5 14:31:42 2018 -0700

Installation instructions consolidation (#12388)

* initial edits for c++ instructions

* consolidation and reorg of install docs

* simplify python section

* fix url rewrite to allow for testing

* further simplify install

* fix render issues

* adjust formatting

* adjust formatting

* fix python/gpu/master

* install footers

* update footers

* add mac dev setup link for osx

* adjust formatting for footer

* separate validation page

* add toc

* adjust formatting

* break out julia and perl

* fix pypi link

* fix formatting

* fix formatting

* fix formatting

* add build from source link

* updating from PR feedback

* add c++ and clojure to ubuntu guide; clarify c++ setup

* added pip chart to install; updates from PR feedback

* correct reference to c infer api

---

docs/_static/js/options.js| 20 +-

docs/install/build_from_source.md | 493 ++--

docs/install/c_plus_plus.md | 29 +

docs/install/centos_setup.md | 98 +-

docs/install/index.md | 2523 -

docs/install/osx_setup.md | 11 +

docs/install/ubuntu_setup.md | 181 +--

docs/install/validate_mxnet.md| 185 +++

docs/install/windows_setup.md | 14 +-

9 files changed, 1093 insertions(+), 2461 deletions(-)

diff --git a/docs/_static/js/options.js b/docs/_static/js/options.js

index 87e50b8..b43f391 100644

--- a/docs/_static/js/options.js

+++ b/docs/_static/js/options.js

@@ -8,7 +8,7 @@ $(document).ready(function () {

function label(lbl) {

return lbl.replace(/[ .]/g, '-').toLowerCase();

}

-

+

function urlSearchParams(searchString) {

let urlDict = new Map();

let searchParams = searchString.substring(1).split("&");

@@ -45,11 +45,11 @@ $(document).ready(function () {

showContent();

if (window.location.href.indexOf("/install/index.html") >= 0) {

if (versionSelect.indexOf(defaultVersion) >= 0) {

-history.pushState(null, null, '/install/index.html?platform=' + platformSelect + '=' + languageSelect + '=' + processorSelect);

+history.pushState(null, null, 'index.html?platform=' + platformSelect + '=' + languageSelect + '=' + processorSelect);

} else {

-history.pushState(null, null, '/install/index.html?version=' + versionSelect + '=' + platformSelect + '=' + languageSelect + '=' + processorSelect);

+history.pushState(null, null, 'index.html?version=' + versionSelect + '=' + platformSelect + '=' + languageSelect + '=' + processorSelect);

}

-}

+}

}

function showContent() {

@@ -73,22 +73,22 @@ $(document).ready(function () {

$('.current-version').html( $(this).text() + ' ' );

if ($(this).text().indexOf(defaultVersion) < 0) {

if (window.location.search.indexOf("version") < 0) {

-history.pushState(null, null, '/install/index.html' + window.location.search.concat( '=' + $(this).text() ));

+history.pushState(null, null, 'index.html' + window.location.search.concat( '=' + $(this).text() ));

} else {

-history.pushState(null, null, '/install/index.html' + window.location.search.replace( urlParams.get('version'), $(this).text() ));

+history.pushState(null, null, 'index.html' + window.location.search.replace( urlParams.get('version'), $(this).text() ));

}

} else if (window.location.search.indexOf("version") >= 0) {

- history.pushState(null, null, '/install/index.html' + window.location.search.replace( 'version', 'prev' ));

+ history.pushState(null, null, 'index.html' + window.location.search.replace( 'version', 'prev' ));

}

}

else if ($(this).hasClass("platforms")) {

-history.pushState(null, null, '/install/index.html' + window.location.search.replace( urlParams.get('platform'), $(this).text() ));

+history.pushState(null, null, 'index.html' + window.location.search.replace( urlParams.get('platform'), $(this).text() ));

}

else if ($(this).hasClass("lang
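
Editor's note on the options.js hunks above: every change swaps the root-relative target '/install/index.html' for the document-relative 'index.html' in history.pushState. The commit message only describes this as "fix url rewrite to allow for testing". A likely reading is that a document-relative target keeps the rewritten URL inside whatever directory the install page is served from. The sketch below illustrates that URL resolution rule only. The helper name, the example.org host and the /versions/master/ prefix are assumptions made for illustration and do not come from the commit.

```javascript
// Hypothetical helper (not part of options.js): resolve a pushState target
// against the URL of the currently loaded page, following standard URL
// resolution rules, and return the resulting path plus query string.
function resolvePushStateTarget(target, currentPageUrl) {
  const resolved = new URL(target, currentPageUrl);
  return resolved.pathname + resolved.search;
}

// Assumed example location: an install page served from a subdirectory.
const page = 'https://example.org/versions/master/install/index.html?platform=Linux';

// Old form: a leading slash resolves from the site root and drops the prefix.
const rootRelative = resolvePushStateTarget('/install/index.html?platform=MacOS', page);

// New form: no leading slash resolves relative to the current directory.
const documentRelative = resolvePushStateTarget('index.html?platform=MacOS', page);

console.log(rootRelative);     // /install/index.html?platform=MacOS
console.log(documentRelative); // /versions/master/install/index.html?platform=MacOS
```

With the root-relative form, the address bar would point at /install/ even when the page was loaded from another directory, so a reload could land on a different copy of the page or on a missing one.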

[incubator-mxnet] branch master updated: [MXAPPS-581] Straight Dope nightly fixes. (#11934)

This is an automated email from the ASF dual-hosted git repository.

indhub pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new c000930 [MXAPPS-581] Straight Dope nightly fixes. (#11934)

c000930 is described below

commit c00093053e1d18f82c6b5109bf56471891986e19

Author: vishaalkapoor <40836875+vishaalkap...@users.noreply.github.com>

AuthorDate: Wed Aug 8 13:31:48 2018 -0700

[MXAPPS-581] Straight Dope nightly fixes. (#11934)

Enable 3 notebooks that were failing tests after making updates to the

Straight Dope book. We also add pandas required by one of these

notebooks.

---

ci/docker/install/ubuntu_nightly_tests.sh | 5 +

.../nightly/straight_dope/test_notebooks_single_gpu.py | 17 +++--

2 files changed, 12 insertions(+), 10 deletions(-)

diff --git a/ci/docker/install/ubuntu_nightly_tests.sh b/ci/docker/install/ubuntu_nightly_tests.sh

index df56cf5..0e6b437 100755

--- a/ci/docker/install/ubuntu_nightly_tests.sh

+++ b/ci/docker/install/ubuntu_nightly_tests.sh

@@ -30,3 +30,8 @@ apt-get -y install time

# Install for RAT License Check Nightly Test

apt-get install -y subversion maven -y #>/dev/null

+

+# Packages needed for the Straight Dope Nightly tests.

+pip2 install pandas

+pip3 install pandas

+

diff --git a/tests/nightly/straight_dope/test_notebooks_single_gpu.py b/tests/nightly/straight_dope/test_notebooks_single_gpu.py

index ee7c94c..06ced96 100644

--- a/tests/nightly/straight_dope/test_notebooks_single_gpu.py