spark git commit: [SPARK-18874][SQL][FOLLOW-UP] Improvement type mismatched message

Repository: spark

Updated Branches:

refs/heads/master 78e0a725e -> 7a5fd4a91

[SPARK-18874][SQL][FOLLOW-UP] Improvement type mismatched message

## What changes were proposed in this pull request?

Improve the type-mismatch message of the `IN` predicate. Before this patch:

```sql

Mismatched columns:

[(, t, 4, ., `, t, 4, a, `, :, d, o, u, b, l, e, ,, , t, 5, ., `, t, 5, a, `,

:, d, e, c, i, m, a, l, (, 1, 8, ,, 0, ), ), (, t, 4, ., `, t, 4, c, `, :, s,

t, r, i, n, g, ,, , t, 5, ., `, t, 5, c, `, :, b, i, g, i, n, t, )]

```

After this patch:

```sql

Mismatched columns:

[(t4.`t4a`:double, t5.`t5a`:decimal(18,0)), (t4.`t4c`:string, t5.`t5c`:bigint)]

```
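The character-by-character output above is what a flattening operation produces when it is handed a bare string instead of a collection holding that string. The sketch below is a Python analogue of that behavior, not the Spark code itself: `flat_map` and `pairs` are hypothetical names, and `itertools.chain.from_iterable` stands in for Scala's `flatMap`.

```python
from itertools import chain


def flat_map(func, items):
    """Apply func to each item and flatten one level, similar to flatMap."""
    return list(chain.from_iterable(func(item) for item in items))


# Hypothetical (left, right) column descriptions with mismatched types.
pairs = [("t4a:double", "t5a:decimal(18,0)"), ("t4c:string", "t5c:bigint")]

# Returning a bare string: the string itself is flattened into characters.
broken = flat_map(lambda p: f"({p[0]}, {p[1]})", pairs)

# Returning a one-element list: each message survives as a whole string.
fixed = flat_map(lambda p: [f"({p[0]}, {p[1]})"], pairs)

print(broken[:4])
print(fixed)
```

Wrapping the message in a one-element collection, as the patch does with `Seq(...)`, keeps each mismatch description intact.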

## How was this patch tested?

unit tests

Author: Yuming Wang

Closes #21863 from wangyum/SPARK-18874.

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/7a5fd4a9

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/7a5fd4a9

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/7a5fd4a9

Branch: refs/heads/master

Commit: 7a5fd4a91e19ee32b365eaf5678c627ad6c6d4c2

Parents: 78e0a72

Author: Yuming Wang

Authored: Tue Jul 24 23:59:13 2018 -0700

Committer: Xiao Li

Committed: Tue Jul 24 23:59:13 2018 -0700

--

.../sql/catalyst/expressions/predicates.scala | 2 +-

.../negative-cases/subq-input-typecheck.sql | 16 -

.../negative-cases/subq-input-typecheck.sql.out | 66

3 files changed, 70 insertions(+), 14 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/7a5fd4a9/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/predicates.scala

--

diff --git

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/predicates.scala

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/predicates.scala

index 699601e..f4077f78 100644

---

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/predicates.scala

+++

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/predicates.scala

@@ -189,7 +189,7 @@ case class In(value: Expression, list: Seq[Expression])

extends Predicate {

} else {

val mismatchedColumns = valExprs.zip(childOutputs).flatMap {

case (l, r) if l.dataType != r.dataType =>

-s"(${l.sql}:${l.dataType.catalogString},

${r.sql}:${r.dataType.catalogString})"

+Seq(s"(${l.sql}:${l.dataType.catalogString},

${r.sql}:${r.dataType.catalogString})")

case _ => None

}

TypeCheckResult.TypeCheckFailure(

http://git-wip-us.apache.org/repos/asf/spark/blob/7a5fd4a9/sql/core/src/test/resources/sql-tests/inputs/subquery/negative-cases/subq-input-typecheck.sql

--

diff --git

a/sql/core/src/test/resources/sql-tests/inputs/subquery/negative-cases/subq-input-typecheck.sql

b/sql/core/src/test/resources/sql-tests/inputs/subquery/negative-cases/subq-input-typecheck.sql

index b15f4da..95b115a 100644

---

a/sql/core/src/test/resources/sql-tests/inputs/subquery/negative-cases/subq-input-typecheck.sql

+++

b/sql/core/src/test/resources/sql-tests/inputs/subquery/negative-cases/subq-input-typecheck.sql

@@ -13,6 +13,14 @@ CREATE TEMPORARY VIEW t3 AS SELECT * FROM VALUES

(3, 1, 2)

AS t3(t3a, t3b, t3c);

+CREATE TEMPORARY VIEW t4 AS SELECT * FROM VALUES

+ (CAST(1 AS DOUBLE), CAST(2 AS STRING), CAST(3 AS STRING))

+AS t1(t4a, t4b, t4c);

+

+CREATE TEMPORARY VIEW t5 AS SELECT * FROM VALUES

+ (CAST(1 AS DECIMAL(18, 0)), CAST(2 AS STRING), CAST(3 AS BIGINT))

+AS t1(t5a, t5b, t5c);

+

-- TC 01.01

SELECT

( SELECT max(t2b), min(t2b)

@@ -44,4 +52,10 @@ WHERE

(t1a, t1b) IN (SELECT t2a

FROM t2

WHERE t1a = t2a);

-

+-- TC 01.05

+SELECT * FROM t4

+WHERE

+(t4a, t4b, t4c) IN (SELECT t5a,

+ t5b,

+ t5c

+FROM t5);

http://git-wip-us.apache.org/repos/asf/spark/blob/7a5fd4a9/sql/core/src/test/resources/sql-tests/results/subquery/negative-cases/subq-input-typecheck.sql.out

--

diff --git

a/sql/core/src/test/resources/sql-tests/results/subquery/negative-cases/subq-input-typecheck.sql.out

b/sql/core/src/test/resources/sql-tests/results/subquery/negative-cases/subq-input-typecheck.sql.out

index 70aeb93..dcd3005 100644

---

a/sql/core/src/test/resources/sql-tests/results/subquery/negative-cases/subq-input-typecheck.sql.out

+++

b/sql/core/src/test/resources/sql-tests/results/subquery/negative-cases/subq-input-typecheck.sql.out

@@ -1,5 +1,5 @@

-- Automatically generated by SQLQueryTestSuite

--- Number of queries: 7

+-- Number of queries: 10

-- !query 0

@@ -33,6 +33,2

spark git commit: [SPARK-19018][SQL] Add support for custom encoding on csv writer

Repository: spark

Updated Branches:

refs/heads/master afb062753 -> 78e0a725e

[SPARK-19018][SQL] Add support for custom encoding on csv writer

## What changes were proposed in this pull request?

Add support for custom encoding on csv writer, see

https://issues.apache.org/jira/browse/SPARK-19018
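To illustrate what a writer-side `encoding` option controls, here is a minimal sketch that uses only the Python standard library, not Spark. The helper `write_csv` and the file names are assumptions made for this example; it falls back to UTF-8 when no encoding is given, mirroring the default described in this commit.

```python
import csv
import os
import tempfile


def write_csv(path, rows, encoding=None):
    """Write rows as CSV; fall back to UTF-8 when no encoding is given."""
    with open(path, "w", newline="", encoding=encoding or "UTF-8") as f:
        csv.writer(f).writerows(rows)


rows = [["name"], ["caf\u00e9"]]  # one value containing a non-ASCII character
tmp_dir = tempfile.mkdtemp()
utf8_path = os.path.join(tmp_dir, "utf8.csv")
latin1_path = os.path.join(tmp_dir, "latin1.csv")

write_csv(utf8_path, rows)                   # default charset
write_csv(latin1_path, rows, "ISO-8859-1")   # custom charset

with open(utf8_path, "rb") as f:
    utf8_bytes = f.read()
with open(latin1_path, "rb") as f:
    latin1_bytes = f.read()

# The same text is stored as different bytes depending on the charset.
print(utf8_bytes)
print(latin1_bytes)
```

In PySpark, this commit exposes the same choice through the new `encoding` keyword of `DataFrameWriter.csv`.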

## How was this patch tested?

Added two unit tests in CSVSuite

Author: crafty-coder

Author: Carlos

Closes #20949 from crafty-coder/master.

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/78e0a725

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/78e0a725

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/78e0a725

Branch: refs/heads/master

Commit: 78e0a725e06665cf92d4b8f987ee01947a1d620c

Parents: afb0627

Author: crafty-coder

Authored: Wed Jul 25 14:17:20 2018 +0800

Committer: hyukjinkwon

Committed: Wed Jul 25 14:17:20 2018 +0800

--

python/pyspark/sql/readwriter.py| 7 +++-

.../org/apache/spark/sql/DataFrameWriter.scala | 2 +

.../datasources/csv/CSVFileFormat.scala | 6 ++-

.../execution/datasources/csv/CSVSuite.scala| 39 +++-

4 files changed, 50 insertions(+), 4 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/78e0a725/python/pyspark/sql/readwriter.py

--

diff --git a/python/pyspark/sql/readwriter.py b/python/pyspark/sql/readwriter.py

index 3efe2ad..98b2cd9 100644

--- a/python/pyspark/sql/readwriter.py

+++ b/python/pyspark/sql/readwriter.py

@@ -859,7 +859,7 @@ class DataFrameWriter(OptionUtils):

def csv(self, path, mode=None, compression=None, sep=None, quote=None,

escape=None,

header=None, nullValue=None, escapeQuotes=None, quoteAll=None,

dateFormat=None,

timestampFormat=None, ignoreLeadingWhiteSpace=None,

ignoreTrailingWhiteSpace=None,

-charToEscapeQuoteEscaping=None):

+charToEscapeQuoteEscaping=None, encoding=None):

"""Saves the content of the :class:`DataFrame` in CSV format at the

specified path.

:param path: the path in any Hadoop supported file system

@@ -909,6 +909,8 @@ class DataFrameWriter(OptionUtils):

the quote character. If None is set,

the default value is

escape character when escape and

quote characters are

different, ``\0`` otherwise..

+:param encoding: sets the encoding (charset) of saved csv files. If

None is set,

+ the default UTF-8 charset will be used.

>>> df.write.csv(os.path.join(tempfile.mkdtemp(), 'data'))

"""

@@ -918,7 +920,8 @@ class DataFrameWriter(OptionUtils):

dateFormat=dateFormat, timestampFormat=timestampFormat,

ignoreLeadingWhiteSpace=ignoreLeadingWhiteSpace,

ignoreTrailingWhiteSpace=ignoreTrailingWhiteSpace,

- charToEscapeQuoteEscaping=charToEscapeQuoteEscaping)

+ charToEscapeQuoteEscaping=charToEscapeQuoteEscaping,

+ encoding=encoding)

self._jwrite.csv(path)

@since(1.5)

http://git-wip-us.apache.org/repos/asf/spark/blob/78e0a725/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

--

diff --git a/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

b/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

index 90bea2d..b9fa43f 100644

--- a/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

@@ -629,6 +629,8 @@ final class DataFrameWriter[T] private[sql](ds: Dataset[T])

{

* enclosed in quotes. Default is to only escape values containing a quote

character.

* `header` (default `false`): writes the names of columns as the first

line.

* `nullValue` (default empty string): sets the string representation of

a null value.

+ * `encoding` (by default it is not set): specifies encoding (charset)

of saved csv

+ * files. If it is not set, the UTF-8 charset will be used.

* `compression` (default `null`): compression codec to use when saving

to file. This can be

* one of the known case-insensitive shorten names (`none`, `bzip2`, `gzip`,

`lz4`,

* `snappy` and `deflate`).

http://git-wip-us.apache.org/repos/asf/spark/blob/78e0a725/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/csv/CSVFileFormat.scala

--

diff --git

a/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/csv/CSVFileFormat.scala

spark git commit: [SPARK-23957][SQL] Sorts in subqueries are redundant and can be removed

Repository: spark

Updated Branches:

refs/heads/master d4c341589 -> afb062753

[SPARK-23957][SQL] Sorts in subqueries are redundant and can be removed

## What changes were proposed in this pull request?

Thanks to henryr for the original idea at

https://github.com/apache/spark/pull/21049

Description from the original PR:

Subqueries (at least in SQL) have 'bag of tuples' semantics. Ordering

them is therefore redundant (unless combined with a limit).

This patch removes the top sort operators from the subquery plans.

This closes https://github.com/apache/spark/pull/21049.
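The rewrite can be modeled on a toy plan tree. The following is a simplified Python sketch, not Catalyst: the `Scan`, `Sort`, `Project`, and `Limit` classes are hypothetical stand-ins for logical plan nodes, and `remove_top_level_sort` mirrors the shape of the helper added in this commit.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Scan:
    table: str


@dataclass(frozen=True)
class Sort:
    order: Tuple[str, ...]
    child: object


@dataclass(frozen=True)
class Project:
    fields: Tuple[str, ...]
    child: object


@dataclass(frozen=True)
class Limit:
    n: int
    child: object


def remove_top_level_sort(plan):
    """Drop a Sort at the top of a subquery plan, looking through Projects."""
    if isinstance(plan, Sort):
        return plan.child
    if isinstance(plan, Project):
        return Project(plan.fields, remove_top_level_sort(plan.child))
    return plan  # any other node, including Limit, is left untouched


subquery = Project(("a",), Sort(("a",), Scan("t")))
optimized = remove_top_level_sort(subquery)

# A sort underneath a limit changes the result, so it must be kept.
limited = Limit(1, Sort(("a",), Scan("t")))
kept = remove_top_level_sort(limited)
```

Because only `Sort` and `Project` are matched, a sort sitting below a limit is preserved, which is consistent with the "unless combined with a limit" caveat above.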

## How was this patch tested?

Added test cases in SubquerySuite to cover in, exists and scalar subqueries.


Author: Dilip Biswal

Closes #21853 from dilipbiswal/SPARK-23957.

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/afb06275

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/afb06275

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/afb06275

Branch: refs/heads/master

Commit: afb0627536494c654ce5dd72db648f1ee7da641c

Parents: d4c3415

Author: Dilip Biswal

Authored: Tue Jul 24 20:46:27 2018 -0700

Committer: Xiao Li

Committed: Tue Jul 24 20:46:27 2018 -0700

--

.../sql/catalyst/optimizer/Optimizer.scala | 12 +-

.../org/apache/spark/sql/SubquerySuite.scala| 300 ++-

2 files changed, 310 insertions(+), 2 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/afb06275/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/Optimizer.scala

--

diff --git

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/Optimizer.scala

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/Optimizer.scala

index 5ed7412..adb1350 100644

---

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/Optimizer.scala

+++

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/Optimizer.scala

@@ -180,10 +180,20 @@ abstract class Optimizer(sessionCatalog: SessionCatalog)

* Optimize all the subqueries inside expression.

*/

object OptimizeSubqueries extends Rule[LogicalPlan] {

+private def removeTopLevelSort(plan: LogicalPlan): LogicalPlan = {

+ plan match {

+case Sort(_, _, child) => child

+case Project(fields, child) => Project(fields,

removeTopLevelSort(child))

+case other => other

+ }

+}

def apply(plan: LogicalPlan): LogicalPlan = plan transformAllExpressions {

case s: SubqueryExpression =>

val Subquery(newPlan) = Optimizer.this.execute(Subquery(s.plan))

-s.withNewPlan(newPlan)

+// At this point we have an optimized subquery plan that we are going

to attach

+// to this subquery expression. Here we can safely remove any top

level sort

+// in the plan as tuples produced by a subquery are un-ordered.

+s.withNewPlan(removeTopLevelSort(newPlan))

}

}

http://git-wip-us.apache.org/repos/asf/spark/blob/afb06275/sql/core/src/test/scala/org/apache/spark/sql/SubquerySuite.scala

--

diff --git a/sql/core/src/test/scala/org/apache/spark/sql/SubquerySuite.scala

b/sql/core/src/test/scala/org/apache/spark/sql/SubquerySuite.scala

index acef62d..cbffed9 100644

--- a/sql/core/src/test/scala/org/apache/spark/sql/SubquerySuite.scala

+++ b/sql/core/src/test/scala/org/apache/spark/sql/SubquerySuite.scala

@@ -17,7 +17,10 @@

package org.apache.spark.sql

-import org.apache.spark.sql.catalyst.plans.logical.Join

+import scala.collection.mutable.ArrayBuffer

+

+import org.apache.spark.sql.catalyst.expressions.SubqueryExpression

+import org.apache.spark.sql.catalyst.plans.logical.{Join, LogicalPlan, Sort}

import org.apache.spark.sql.test.SharedSQLContext

class SubquerySuite extends QueryTest with SharedSQLContext {

@@ -970,4 +973,299 @@ class SubquerySuite extends QueryTest with

SharedSQLContext {

Row("3", "b") :: Row("4", "b") :: Nil)

}

}

+

+ private def getNumSortsInQuery(query: String): Int = {

+val plan = sql(query).queryExecution.optimizedPlan

+getNumSorts(plan) + getSubqueryExpressions(plan).map{s =>

getNumSorts(s.plan)}.sum

+ }

+

+ private def getSubqueryExpressions(plan: LogicalPlan):

Seq[SubqueryExpression] = {

+val subqueryExpressions = ArrayBuffer.empty[SubqueryExpression]

+plan transformAllExpressions {

+ case s: SubqueryExpression =>

+subqueryExpressions ++= (getSubqueryExpressions(s.plan) :+ s)

+s

+}

+subqueryExpressions

+ }

+

+ private def getNumSorts(plan: LogicalPlan): Int = {

+plan.collect { case s: Sort => s

spark git commit: [SPARK-24890][SQL] Short circuiting the `if` condition when `trueValue` and `falseValue` are the same

Repository: spark

Updated Branches:

refs/heads/master c26b09216 -> d4c341589

[SPARK-24890][SQL] Short circuiting the `if` condition when `trueValue` and

`falseValue` are the same

## What changes were proposed in this pull request?

When `trueValue` and `falseValue` are semantically equivalent and the condition
is deterministic, the condition expression in `if` can be removed to avoid
extra computation at runtime.
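A small Python model of this simplification is sketched below. It is not the Catalyst rule: the expression classes are hypothetical, and dataclass equality is used as a stand-in for `semanticEquals`, which is an assumption of this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Literal:
    value: object


@dataclass(frozen=True)
class Column:
    name: str
    deterministic: bool = True


@dataclass(frozen=True)
class Rand:
    """Stand-in for a non-deterministic expression."""
    deterministic: bool = False


@dataclass(frozen=True)
class If:
    cond: object
    true_value: object
    false_value: object


def simplify_if(expr):
    """Return the branch value when both branches match and cond is deterministic."""
    if (isinstance(expr, If)
            and expr.cond.deterministic
            and expr.true_value == expr.false_value):
        return expr.true_value
    return expr


same_branches = If(Column("a"), Literal(1), Literal(1))
random_cond = If(Rand(), Literal(1), Literal(1))
different_branches = If(Column("a"), Literal(1), Literal(2))
```

The deterministic check matters because dropping a non-deterministic condition would also drop its evaluation, which could change the behavior of the query.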

## How was this patch tested?

Test added.

Author: DB Tsai

Closes #21848 from dbtsai/short-circuit-if.

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/d4c34158

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/d4c34158

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/d4c34158

Branch: refs/heads/master

Commit: d4c341589499099654ed4febf235f19897a21601

Parents: c26b092

Author: DB Tsai

Authored: Tue Jul 24 20:21:11 2018 -0700

Committer: Xiao Li

Committed: Tue Jul 24 20:21:11 2018 -0700

--

.../sql/catalyst/optimizer/expressions.scala| 7 --

.../optimizer/SimplifyConditionalSuite.scala| 24 +++-

.../apache/spark/sql/test/SQLTestUtils.scala| 2 +-

3 files changed, 29 insertions(+), 4 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/d4c34158/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/expressions.scala

--

diff --git

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/expressions.scala

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/expressions.scala

index cf17f59..4696699 100644

---

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/expressions.scala

+++

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/expressions.scala

@@ -390,6 +390,8 @@ object SimplifyConditionals extends Rule[LogicalPlan] with

PredicateHelper {

case If(TrueLiteral, trueValue, _) => trueValue

case If(FalseLiteral, _, falseValue) => falseValue

case If(Literal(null, _), _, falseValue) => falseValue

+ case If(cond, trueValue, falseValue)

+if cond.deterministic && trueValue.semanticEquals(falseValue) =>

trueValue

case e @ CaseWhen(branches, elseValue) if branches.exists(x =>

falseOrNullLiteral(x._1)) =>

// If there are branches that are always false, remove them.

@@ -403,14 +405,14 @@ object SimplifyConditionals extends Rule[LogicalPlan]

with PredicateHelper {

e.copy(branches = newBranches)

}

- case e @ CaseWhen(branches, _) if branches.headOption.map(_._1) ==

Some(TrueLiteral) =>

+ case CaseWhen(branches, _) if

branches.headOption.map(_._1).contains(TrueLiteral) =>

// If the first branch is a true literal, remove the entire CaseWhen

and use the value

// from that. Note that CaseWhen.branches should never be empty, and

as a result the

// headOption (rather than head) added above is just an extra (and

unnecessary) safeguard.

branches.head._2

case CaseWhen(branches, _) if branches.exists(_._1 == TrueLiteral) =>

-// a branc with a TRue condition eliminates all following branches,

+// a branch with a true condition eliminates all following branches,

// these branches can be pruned away

val (h, t) = branches.span(_._1 != TrueLiteral)

CaseWhen( h :+ t.head, None)

@@ -651,6 +653,7 @@ object SimplifyCaseConversionExpressions extends

Rule[LogicalPlan] {

}

}

+

/**

* Combine nested [[Concat]] expressions.

*/

http://git-wip-us.apache.org/repos/asf/spark/blob/d4c34158/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/optimizer/SimplifyConditionalSuite.scala

--

diff --git

a/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/optimizer/SimplifyConditionalSuite.scala

b/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/optimizer/SimplifyConditionalSuite.scala

index b597c8e..e210874 100644

---

a/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/optimizer/SimplifyConditionalSuite.scala

+++

b/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/optimizer/SimplifyConditionalSuite.scala

@@ -17,6 +17,8 @@

package org.apache.spark.sql.catalyst.optimizer

+import org.apache.spark.sql.catalyst.analysis.UnresolvedAttribute

+import org.apache.spark.sql.catalyst.dsl.expressions._

import org.apache.spark.sql.catalyst.dsl.plans._

import org.apache.spark.sql.catalyst.expressions._

import org.apache.spark.sql.catalyst.expressions.Literal.{FalseLiteral,

TrueLiteral}

@@ -29,7 +31,8 @@ import org.apache.spark.sql.types.{IntegerType, NullType}

class SimplifyConditionalSuite extends PlanTest with PredicateHelper {

object Optimize extends RuleExe

svn commit: r28337 - in /dev/spark/2.4.0-SNAPSHOT-2018_07_24_20_02-c26b092-docs: ./ _site/ _site/api/ _site/api/R/ _site/api/java/ _site/api/java/lib/ _site/api/java/org/ _site/api/java/org/apache/ _s

Author: pwendell Date: Wed Jul 25 03:17:00 2018 New Revision: 28337 Log: Apache Spark 2.4.0-SNAPSHOT-2018_07_24_20_02-c26b092 docs [This commit notification would consist of 1469 parts, which exceeds the limit of 50 ones, so it was shortened to the summary.] - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

spark git commit: [SPARK-24891][SQL] Fix HandleNullInputsForUDF rule

Repository: spark

Updated Branches:

refs/heads/branch-2.3 740a23d7d -> 6a5999286

[SPARK-24891][SQL] Fix HandleNullInputsForUDF rule

The HandleNullInputsForUDF rule would add a new `If` node every time it was
applied. As a result, a plan analyzed once differed from the same plan analyzed
twice (or more), which caused issues such as plans failing to match in the
cache manager. The solution is to mark the arguments as null-checked by adding
a "KnownNotNull" node above them when placing the UDF under an `If` node,
because the UDF will clearly not be called when any of those arguments is null.
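The idea of making the rule idempotent by marking inputs can be sketched in Python. This is a simplified model under stated assumptions, not the analyzer code: every class and the `handle_null_inputs` function are hypothetical, all UDF inputs are assumed to be primitive-typed, and `IfAnyNull` collapses the `If(IsNull(...) OR ..., null, udf)` expression into one node.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Col:
    name: str


@dataclass(frozen=True)
class KnownNotNull:
    child: object


@dataclass(frozen=True)
class Udf:
    children: Tuple[object, ...]


@dataclass(frozen=True)
class IfAnyNull:
    """Stand-in for If(IsNull(a) OR IsNull(b) ..., null, udf)."""
    checked: Tuple[object, ...]
    udf: Udf


def handle_null_inputs(expr):
    """Guard a UDF with a null check once; already-marked inputs are skipped."""
    if isinstance(expr, IfAnyNull):
        return IfAnyNull(expr.checked, handle_null_inputs(expr.udf))
    if isinstance(expr, Udf):
        unchecked = tuple(c for c in expr.children
                          if not isinstance(c, KnownNotNull))
        if not unchecked:
            return expr  # every input is already marked, nothing to add
        marked = tuple(c if isinstance(c, KnownNotNull) else KnownNotNull(c)
                       for c in expr.children)
        return IfAnyNull(unchecked, Udf(marked))
    return expr


once = handle_null_inputs(Udf((Col("a"), Col("b"))))
twice = handle_null_inputs(once)
```

Applying the function a second time returns an equal tree, which is the property the cache manager relies on when comparing plans.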

Add new tests under sql/UDFSuite and AnalysisSuite.

Author: maryannxue

Closes #21851 from maryannxue/spark-24891.

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/6a599928

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/6a599928

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/6a599928

Branch: refs/heads/branch-2.3

Commit: 6a59992866a971abf6052e479ba48c6abded4d04

Parents: 740a23d

Author: maryannxue

Authored: Tue Jul 24 19:35:34 2018 -0700

Committer: Xiao Li

Committed: Tue Jul 24 19:39:23 2018 -0700

--

.../spark/sql/catalyst/analysis/Analyzer.scala | 22

.../expressions/constraintExpressions.scala | 35

.../sql/catalyst/analysis/AnalysisSuite.scala | 16 +++--

.../scala/org/apache/spark/sql/UDFSuite.scala | 31 -

4 files changed, 94 insertions(+), 10 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/6a599928/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/Analyzer.scala

--

diff --git

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/Analyzer.scala

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/Analyzer.scala

index 2858bee..5963c14 100644

---

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/Analyzer.scala

+++

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/Analyzer.scala

@@ -27,7 +27,7 @@ import org.apache.spark.sql.catalyst.encoders.OuterScopes

import org.apache.spark.sql.catalyst.expressions._

import org.apache.spark.sql.catalyst.expressions.SubExprUtils._

import org.apache.spark.sql.catalyst.expressions.aggregate._

-import org.apache.spark.sql.catalyst.expressions.objects.{LambdaVariable,

MapObjects, NewInstance, UnresolvedMapObjects}

+import org.apache.spark.sql.catalyst.expressions.objects._

import org.apache.spark.sql.catalyst.plans._

import org.apache.spark.sql.catalyst.plans.logical._

import org.apache.spark.sql.catalyst.rules._

@@ -2046,14 +2046,24 @@ class Analyzer(

val parameterTypes = ScalaReflection.getParameterTypes(func)

assert(parameterTypes.length == inputs.length)

+ // TODO: skip null handling for not-nullable primitive inputs after

we can completely

+ // trust the `nullable` information.

+ // (cls, expr) => cls.isPrimitive && expr.nullable

+ val needsNullCheck = (cls: Class[_], expr: Expression) =>

+cls.isPrimitive && !expr.isInstanceOf[KnowNotNull]

val inputsNullCheck = parameterTypes.zip(inputs)

-// TODO: skip null handling for not-nullable primitive inputs

after we can completely

-// trust the `nullable` information.

-// .filter { case (cls, expr) => cls.isPrimitive && expr.nullable }

-.filter { case (cls, _) => cls.isPrimitive }

+.filter { case (cls, expr) => needsNullCheck(cls, expr) }

.map { case (_, expr) => IsNull(expr) }

.reduceLeftOption[Expression]((e1, e2) => Or(e1, e2))

- inputsNullCheck.map(If(_, Literal.create(null, udf.dataType),

udf)).getOrElse(udf)

+ // Once we add an `If` check above the udf, it is safe to mark those

checked inputs

+ // as not nullable (i.e., wrap them with `KnownNotNull`), because

the null-returning

+ // branch of `If` will be called if any of these checked inputs is

null. Thus we can

+ // prevent this rule from being applied repeatedly.

+ val newInputs = parameterTypes.zip(inputs).map{ case (cls, expr) =>

+if (needsNullCheck(cls, expr)) KnowNotNull(expr) else expr }

+ inputsNullCheck

+.map(If(_, Literal.create(null, udf.dataType), udf.copy(children =

newInputs)))

+.getOrElse(udf)

}

}

}

http://git-wip-us.apache.org/repos/asf/spark/blob/6a599928/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/constraintExpressions.scala

--

diff --git

a/sql/catalyst/src/main/scala/org/apa

spark git commit: [SPARK-24891][SQL] Fix HandleNullInputsForUDF rule

Repository: spark

Updated Branches:

refs/heads/master 15fff7903 -> c26b09216

[SPARK-24891][SQL] Fix HandleNullInputsForUDF rule

## What changes were proposed in this pull request?

The HandleNullInputsForUDF rule would add a new `If` node every time it was
applied. As a result, a plan analyzed once differed from the same plan analyzed
twice (or more), which caused issues such as plans failing to match in the
cache manager. The solution is to mark the arguments as null-checked by adding
a "KnownNotNull" node above them when placing the UDF under an `If` node,
because the UDF will clearly not be called when any of those arguments is null.

## How was this patch tested?

Add new tests under sql/UDFSuite and AnalysisSuite.

Author: maryannxue

Closes #21851 from maryannxue/spark-24891.

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/c26b0921

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/c26b0921

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/c26b0921

Branch: refs/heads/master

Commit: c26b0921693814f0726507f16b836d82e2e8cfe0

Parents: 15fff79

Author: maryannxue

Authored: Tue Jul 24 19:35:34 2018 -0700

Committer: Xiao Li

Committed: Tue Jul 24 19:35:34 2018 -0700

--

.../spark/sql/catalyst/analysis/Analyzer.scala | 22

.../expressions/constraintExpressions.scala | 35

.../sql/catalyst/analysis/AnalysisSuite.scala | 16 +++--

.../scala/org/apache/spark/sql/UDFSuite.scala | 31 -

4 files changed, 94 insertions(+), 10 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/c26b0921/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/Analyzer.scala

--

diff --git

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/Analyzer.scala

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/Analyzer.scala

index 866396c..4f474f4 100644

---

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/Analyzer.scala

+++

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/Analyzer.scala

@@ -30,7 +30,7 @@ import org.apache.spark.sql.catalyst.encoders.OuterScopes

import org.apache.spark.sql.catalyst.expressions._

import org.apache.spark.sql.catalyst.expressions.SubExprUtils._

import org.apache.spark.sql.catalyst.expressions.aggregate._

-import org.apache.spark.sql.catalyst.expressions.objects.{LambdaVariable,

MapObjects, NewInstance, UnresolvedMapObjects}

+import org.apache.spark.sql.catalyst.expressions.objects._

import org.apache.spark.sql.catalyst.plans._

import org.apache.spark.sql.catalyst.plans.logical._

import org.apache.spark.sql.catalyst.rules._

@@ -2145,14 +2145,24 @@ class Analyzer(

val parameterTypes = ScalaReflection.getParameterTypes(func)

assert(parameterTypes.length == inputs.length)

+ // TODO: skip null handling for not-nullable primitive inputs after

we can completely

+ // trust the `nullable` information.

+ // (cls, expr) => cls.isPrimitive && expr.nullable

+ val needsNullCheck = (cls: Class[_], expr: Expression) =>

+cls.isPrimitive && !expr.isInstanceOf[KnowNotNull]

val inputsNullCheck = parameterTypes.zip(inputs)

-// TODO: skip null handling for not-nullable primitive inputs

after we can completely

-// trust the `nullable` information.

-// .filter { case (cls, expr) => cls.isPrimitive && expr.nullable }

-.filter { case (cls, _) => cls.isPrimitive }

+.filter { case (cls, expr) => needsNullCheck(cls, expr) }

.map { case (_, expr) => IsNull(expr) }

.reduceLeftOption[Expression]((e1, e2) => Or(e1, e2))

- inputsNullCheck.map(If(_, Literal.create(null, udf.dataType),

udf)).getOrElse(udf)

+ // Once we add an `If` check above the udf, it is safe to mark those

checked inputs

+ // as not nullable (i.e., wrap them with `KnownNotNull`), because

the null-returning

+ // branch of `If` will be called if any of these checked inputs is

null. Thus we can

+ // prevent this rule from being applied repeatedly.

+ val newInputs = parameterTypes.zip(inputs).map{ case (cls, expr) =>

+if (needsNullCheck(cls, expr)) KnowNotNull(expr) else expr }

+ inputsNullCheck

+.map(If(_, Literal.create(null, udf.dataType), udf.copy(children =

newInputs)))

+.getOrElse(udf)

}

}

}

http://git-wip-us.apache.org/repos/asf/spark/blob/c26b0921/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/constraintExpressions.scala

spark git commit: [SPARK-24297][CORE] Fetch-to-disk by default for > 2gb

Repository: spark

Updated Branches:

refs/heads/master 3efdf3532 -> 15fff7903

[SPARK-24297][CORE] Fetch-to-disk by default for > 2gb

Fetch-to-mem is guaranteed to fail if the message is bigger than 2 GB,

so we might as well use fetch-to-disk in that case. The message includes

some metadata in addition to the block data itself (in particular

UploadBlock has a lot of metadata), so we leave a little room.
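The arithmetic behind the new default can be checked with a short Python sketch. The constant and function names are made up for this illustration; only the value `Int.MaxValue - 512` and the rule that blocks above the threshold go to disk come from the commit.

```python
INT_MAX = 2**31 - 1  # value of Int.MaxValue on the JVM

# Default after this change: leave 512 bytes of room for message metadata.
DEFAULT_MAX_REMOTE_BLOCK_SIZE_FETCH_TO_MEM = INT_MAX - 512


def fetch_to_disk(block_size_bytes,
                  threshold=DEFAULT_MAX_REMOTE_BLOCK_SIZE_FETCH_TO_MEM):
    """Return True when a remote block should be fetched to disk."""
    return block_size_bytes > threshold


two_gib = 2**31
two_hundred_mib = 200 * 1024 * 1024

print(DEFAULT_MAX_REMOTE_BLOCK_SIZE_FETCH_TO_MEM)
print(fetch_to_disk(two_gib))
print(fetch_to_disk(two_hundred_mib))
```

With the old default of `Long.MaxValue`, no realistic block would ever exceed the threshold, so fetch-to-disk was effectively off unless the user configured it.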

Author: Imran Rashid

Closes #21474 from squito/SPARK-24297.

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/15fff790

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/15fff790

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/15fff790

Branch: refs/heads/master

Commit: 15fff79032f6d708d8570b5e83144f1f84519552

Parents: 3efdf35

Author: Imran Rashid

Authored: Wed Jul 25 09:08:42 2018 +0800

Committer: jerryshao

Committed: Wed Jul 25 09:08:42 2018 +0800

--

.../scala/org/apache/spark/internal/config/package.scala | 6 +-

docs/configuration.md | 10 ++

2 files changed, 11 insertions(+), 5 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/15fff790/core/src/main/scala/org/apache/spark/internal/config/package.scala

--

diff --git a/core/src/main/scala/org/apache/spark/internal/config/package.scala

b/core/src/main/scala/org/apache/spark/internal/config/package.scala

index ba892bf..8fef2aa 100644

--- a/core/src/main/scala/org/apache/spark/internal/config/package.scala

+++ b/core/src/main/scala/org/apache/spark/internal/config/package.scala

@@ -432,7 +432,11 @@ package object config {

"external shuffle service, this feature can only be worked when

external shuffle" +

"service is newer than Spark 2.2.")

.bytesConf(ByteUnit.BYTE)

- .createWithDefault(Long.MaxValue)

+ // fetch-to-mem is guaranteed to fail if the message is bigger than 2

GB, so we might

+ // as well use fetch-to-disk in that case. The message includes some

metadata in addition

+ // to the block data itself (in particular UploadBlock has a lot of

metadata), so we leave

+ // extra room.

+ .createWithDefault(Int.MaxValue - 512)

private[spark] val TASK_METRICS_TRACK_UPDATED_BLOCK_STATUSES =

ConfigBuilder("spark.taskMetrics.trackUpdatedBlockStatuses")

http://git-wip-us.apache.org/repos/asf/spark/blob/15fff790/docs/configuration.md

--

diff --git a/docs/configuration.md b/docs/configuration.md

index 0c7c447..60c0358 100644

--- a/docs/configuration.md

+++ b/docs/configuration.md

@@ -580,13 +580,15 @@ Apart from these, the following properties are also

available, and may be useful

spark.maxRemoteBlockSizeFetchToMem

- Long.MaxValue

+ Int.MaxValue - 512

The remote block will be fetched to disk when size of the block is above

this threshold in bytes.

-This is to avoid a giant request takes too much memory. We can enable this

config by setting

-a specific value(e.g. 200m). Note this configuration will affect both

shuffle fetch

+This is to avoid a giant request that takes too much memory. By default,

this is only enabled

+for blocks > 2GB, as those cannot be fetched directly into memory, no

matter what resources are

+available. But it can be turned down to a much lower value (eg. 200m) to

avoid using too much

+memory on smaller blocks as well. Note this configuration will affect both

shuffle fetch

and block manager remote block fetch. For users who enabled external

shuffle service,

-this feature can only be worked when external shuffle service is newer

than Spark 2.2.

+this feature can only be used when external shuffle service is newer than

Spark 2.2.

-


svn commit: r28327 - in /dev/spark/2.4.0-SNAPSHOT-2018_07_24_16_01-fc21f19-docs: ./ _site/ _site/api/ _site/api/R/ _site/api/java/ _site/api/java/lib/ _site/api/java/org/ _site/api/java/org/apache/ _s

Author: pwendell Date: Tue Jul 24 23:16:06 2018 New Revision: 28327 Log: Apache Spark 2.4.0-SNAPSHOT-2018_07_24_16_01-fc21f19 docs [This commit notification would consist of 1469 parts, which exceeds the limit of 50 ones, so it was shortened to the summary.]

spark git commit: [SPARK-24908][R][STYLE] removing spaces to make lintr happy

Repository: spark

Updated Branches:

refs/heads/master fc21f192a -> 3efdf3532

[SPARK-24908][R][STYLE] removing spaces to make lintr happy

## What changes were proposed in this pull request?

during my travails in porting spark builds to run on our centos worker, i

managed to recreate (as best i could) the centos environment on our new

ubuntu-testing machine.

while running my initial builds, lintr was crashing on some extraneous spaces

in test_basic.R (see:

https://amplab.cs.berkeley.edu/jenkins/job/spark-master-test-sbt-hadoop-2.6-ubuntu-test/862/console)

after removing those spaces, the ubuntu build happily passed the lintr tests.

## How was this patch tested?

i then tested this against a modified spark-master-test-sbt-hadoop-2.6 build

(see

https://amplab.cs.berkeley.edu/jenkins/view/RISELab%20Infra/job/testing-spark-master-test-with-updated-R-crap/4/),

which scp'ed a copy of test_basic.R in to the repo after the git clone.

everything seems to be working happily.

Author: shane knapp

Closes #21864 from shaneknapp/fixing-R-lint-spacing.

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/3efdf353

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/3efdf353

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/3efdf353

Branch: refs/heads/master

Commit: 3efdf35327be38115b04b08e9c8d0aa282a904ab

Parents: fc21f19

Author: shane knapp

Authored: Tue Jul 24 16:13:57 2018 -0700

Committer: DB Tsai

Committed: Tue Jul 24 16:13:57 2018 -0700

--

R/pkg/inst/tests/testthat/test_basic.R | 8

1 file changed, 4 insertions(+), 4 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/3efdf353/R/pkg/inst/tests/testthat/test_basic.R

--

diff --git a/R/pkg/inst/tests/testthat/test_basic.R

b/R/pkg/inst/tests/testthat/test_basic.R

index 243f5f0..80df3d8 100644

--- a/R/pkg/inst/tests/testthat/test_basic.R

+++ b/R/pkg/inst/tests/testthat/test_basic.R

@@ -18,9 +18,9 @@

context("basic tests for CRAN")

test_that("create DataFrame from list or data.frame", {

- tryCatch( checkJavaVersion(),

+ tryCatch(checkJavaVersion(),

error = function(e) { skip("error on Java check") },

-warning = function(e) { skip("warning on Java check") } )

+warning = function(e) { skip("warning on Java check") })

sparkR.session(master = sparkRTestMaster, enableHiveSupport = FALSE,

sparkConfig = sparkRTestConfig)

@@ -54,9 +54,9 @@ test_that("create DataFrame from list or data.frame", {

})

test_that("spark.glm and predict", {

- tryCatch( checkJavaVersion(),

+ tryCatch(checkJavaVersion(),

error = function(e) { skip("error on Java check") },

-warning = function(e) { skip("warning on Java check") } )

+warning = function(e) { skip("warning on Java check") })

sparkR.session(master = sparkRTestMaster, enableHiveSupport = FALSE,

sparkConfig = sparkRTestConfig)

spark git commit: [SPARK-24895] Remove spotbugs plugin

Repository: spark

Updated Branches:

refs/heads/master d4a277f0c -> fc21f192a

[SPARK-24895] Remove spotbugs plugin

## What changes were proposed in this pull request?

Spotbugs maven plugin was a recently added plugin before 2.4.0 snapshot

artifacts were broken. To ensure it does not affect the maven deploy plugin,

this change removes it.

## How was this patch tested?

A local build was run, but this patch will actually be tested by monitoring the

Apache repo artifacts and making sure metadata is correctly uploaded after this

job is run:

https://amplab.cs.berkeley.edu/jenkins/view/Spark%20Packaging/job/spark-master-maven-snapshots/

Author: Eric Chang

Closes #21865 from ericfchang/SPARK-24895.

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/fc21f192

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/fc21f192

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/fc21f192

Branch: refs/heads/master

Commit: fc21f192a302e48e5c321852e2a25639c5a182b5

Parents: d4a277f

Author: Eric Chang

Authored: Tue Jul 24 15:53:50 2018 -0700

Committer: Yin Huai

Committed: Tue Jul 24 15:53:50 2018 -0700

--

pom.xml | 22 --

1 file changed, 22 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/fc21f192/pom.xml

--

diff --git a/pom.xml b/pom.xml

index 81a53ee..d75db0f 100644

--- a/pom.xml

+++ b/pom.xml

@@ -2610,28 +2610,6 @@

-

-com.github.spotbugs

-spotbugs-maven-plugin

-3.1.3

-

-

${basedir}/target/scala-${scala.binary.version}/classes

-

${basedir}/target/scala-${scala.binary.version}/test-classes

- Max

- Low

- true

- FindPuzzlers

- true

-

-

-

-

- check

-

-compile

-

-

-

-

svn commit: r28323 - in /dev/spark/2.4.0-SNAPSHOT-2018_07_24_12_01-d4a277f-docs: ./ _site/ _site/api/ _site/api/R/ _site/api/java/ _site/api/java/lib/ _site/api/java/org/ _site/api/java/org/apache/ _s

Author: pwendell

Date: Tue Jul 24 19:16:08 2018

New Revision: 28323

Log: Apache Spark 2.4.0-SNAPSHOT-2018_07_24_12_01-d4a277f docs

[This commit notification would consist of 1469 parts, which exceeds the limit of 50 ones, so it was shortened to the summary.]

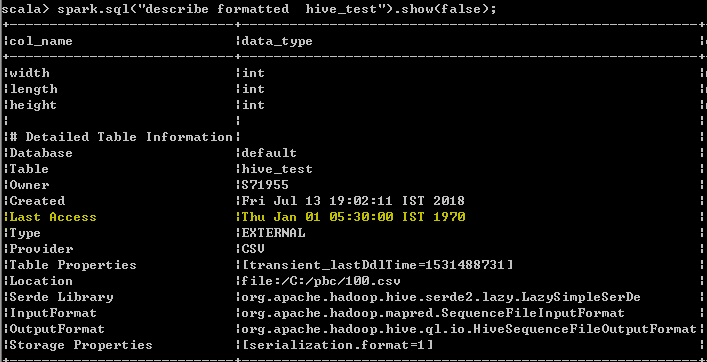

spark git commit: [SPARK-24812][SQL] Last Access Time in the table description is not valid

Repository: spark

Updated Branches:

refs/heads/master 9d27541a8 -> d4a277f0c

[SPARK-24812][SQL] Last Access Time in the table description is not valid

## What changes were proposed in this pull request?

Last Access Time is always displayed as the wrong date, Thu Jan 01 05:30:00 IST

1970, when a user runs the DESC FORMATTED table command.

In Hive it is displayed as "UNKNOWN", which makes more sense than displaying a

wrong date. This seems to be a limitation in Hive as of now, so it is better to

follow the Hive behavior until the limitation has been resolved in Hive.

spark client output

Hive client output

## How was this patch tested?

A unit test has been added to make sure that the wrong date "Thu Jan 01

05:30:00 IST 1970" is not added as the value of the Last Access property.

Author: s71955

Closes #21775 from sujith71955/master_hive.

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/d4a277f0

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/d4a277f0

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/d4a277f0

Branch: refs/heads/master

Commit: d4a277f0ce2d6e1832d87cae8faec38c5bc730f4

Parents: 9d27541

Author: s71955

Authored: Tue Jul 24 11:31:27 2018 -0700

Committer: Xiao Li

Committed: Tue Jul 24 11:31:27 2018 -0700

--

docs/sql-programming-guide.md | 1 +

.../apache/spark/sql/catalyst/catalog/interface.scala | 5 -

.../apache/spark/sql/hive/execution/HiveDDLSuite.scala | 13 +

3 files changed, 18 insertions(+), 1 deletion(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/d4a277f0/docs/sql-programming-guide.md

--

diff --git a/docs/sql-programming-guide.md b/docs/sql-programming-guide.md

index 4bab58a..e815e5b 100644

--- a/docs/sql-programming-guide.md

+++ b/docs/sql-programming-guide.md

@@ -1850,6 +1850,7 @@ working with timestamps in `pandas_udf`s to get the best

performance, see

## Upgrading From Spark SQL 2.3 to 2.4

+ - Since Spark 2.4, Spark will display table description column Last Access

value as UNKNOWN when the value was Jan 01 1970.

- Since Spark 2.4, Spark maximizes the usage of a vectorized ORC reader for

ORC files by default. To do that, `spark.sql.orc.impl` and

`spark.sql.orc.filterPushdown` change their default values to `native` and

`true` respectively.

- In PySpark, when Arrow optimization is enabled, previously `toPandas` just

failed when Arrow optimization is unable to be used whereas `createDataFrame`

from Pandas DataFrame allowed the fallback to non-optimization. Now, both

`toPandas` and `createDataFrame` from Pandas DataFrame allow the fallback by

default, which can be switched off by

`spark.sql.execution.arrow.fallback.enabled`.

- Since Spark 2.4, writing an empty dataframe to a directory launches at

least one write task, even if physically the dataframe has no partition. This

introduces a small behavior change that for self-describing file formats like

Parquet and Orc, Spark creates a metadata-only file in the target directory

when writing a 0-partition dataframe, so that schema inference can still work

if users read that directory later. The new behavior is more reasonable and

more consistent regarding writing empty dataframe.

http://git-wip-us.apache.org/repos/asf/spark/blob/d4a277f0/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/catalog/interface.scala

--

diff --git

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/catalog/interface.scala

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/catalog/interface.scala

index c6105c5..a4ead53 100644

---

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/catalog/interface.scala

+++

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/catalog/interface.scala

@@ -114,7 +114,10 @@ case class CatalogTablePartition(

map.put("Partition Parameters", s"{${parameters.map(p => p._1 + "=" +

p._2).mkString(", ")}}")

}

map.put("Created Time", new Date(createTime).toString)

-map.put("Last Access", new Date(lastAccessTime).toString)

+val lastAccess = {

+ if (-1 == lastAccessTime) "UNKNOWN" else new

Date(lastAccessTime).toString

+}

+map.put("Last Access", lastAccess)

stats.foreach(s => map.put("Partition Statistics", s.simpleString))

map

}
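
The change in this hunk can be tried out in isolation with a small sketch. The object `LastAccessSketch` and its `format` method are hypothetical names used only for illustration; the conditional itself mirrors the added lines above, which treat a `lastAccessTime` of -1 as unknown.

```scala
import java.util.Date

// Illustrative sketch only; LastAccessSketch is a hypothetical name, not part of the patch.
object LastAccessSketch {
  // Mirrors the patched logic: -1 means the access time is not known,
  // any other value is rendered as a java.util.Date string.
  def format(lastAccessTime: Long): String =
    if (lastAccessTime == -1L) "UNKNOWN" else new Date(lastAccessTime).toString
}
```

Calling `LastAccessSketch.format(-1L)` yields `UNKNOWN`, whereas any other value is still formatted in the JVM's default time zone.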

http://git-wip-us.apache.org/repos/asf/spark/blob/d4a277f0/sql/hive/src/test/scala/org/apache/spark/sql/hive/execution/HiveDDLSuite.scala

---

spark git commit: [SPARK-23325] Use InternalRow when reading with DataSourceV2.

Repository: spark

Updated Branches:

refs/heads/master 3d5c61e5f -> 9d27541a8

[SPARK-23325] Use InternalRow when reading with DataSourceV2.

## What changes were proposed in this pull request?

This updates the DataSourceV2 API to use InternalRow instead of Row for the

default case with no scan mix-ins.

Support for readers that produce Row is added through

SupportsDeprecatedScanRow, which matches the previous API. Readers that used

Row now implement this class and should be migrated to InternalRow.

Readers that previously implemented SupportsScanUnsafeRow have been migrated to

use no SupportsScan mix-ins and produce InternalRow.

## How was this patch tested?

This uses existing tests.

Author: Ryan Blue

Closes #21118 from rdblue/SPARK-23325-datasource-v2-internal-row.

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/9d27541a

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/9d27541a

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/9d27541a

Branch: refs/heads/master

Commit: 9d27541a856d95635386cbc98f2bb1f1f2f30c13

Parents: 3d5c61e

Author: Ryan Blue

Authored: Tue Jul 24 10:46:36 2018 -0700

Committer: Xiao Li

Committed: Tue Jul 24 10:46:36 2018 -0700

--

.../sql/kafka010/KafkaContinuousReader.scala| 16 ---

.../sql/kafka010/KafkaMicroBatchReader.scala| 21 -

.../kafka010/KafkaMicroBatchSourceSuite.scala | 2 +-

.../sql/sources/v2/reader/DataSourceReader.java | 6 +--

.../sources/v2/reader/InputPartitionReader.java | 7 +--

.../v2/reader/SupportsDeprecatedScanRow.java| 39 +

.../v2/reader/SupportsScanColumnarBatch.java| 4 +-

.../v2/reader/SupportsScanUnsafeRow.java| 46

.../datasources/v2/DataSourceRDD.scala | 1 -

.../datasources/v2/DataSourceV2ScanExec.scala | 17

.../datasources/v2/DataSourceV2Strategy.scala | 13 +++---

.../continuous/ContinuousDataSourceRDD.scala| 26 +--

.../continuous/ContinuousQueuedDataReader.scala | 8 ++--

.../continuous/ContinuousRateStreamSource.scala | 4 +-

.../spark/sql/execution/streaming/memory.scala | 16 +++

.../sources/ContinuousMemoryStream.scala| 7 +--

.../sources/RateStreamMicroBatchReader.scala| 4 +-

.../execution/streaming/sources/socket.scala| 7 +--

.../sources/v2/JavaAdvancedDataSourceV2.java| 4 +-

.../v2/JavaPartitionAwareDataSource.java| 4 +-

.../v2/JavaSchemaRequiredDataSource.java| 5 ++-

.../sql/sources/v2/JavaSimpleDataSourceV2.java | 5 ++-

.../sources/v2/JavaUnsafeRowDataSourceV2.java | 9 ++--

.../sources/RateStreamProviderSuite.scala | 6 +--

.../sql/sources/v2/DataSourceV2Suite.scala | 30 +++--

.../sources/v2/SimpleWritableDataSource.scala | 7 +--

.../sql/streaming/StreamingQuerySuite.scala | 6 +--

.../ContinuousQueuedDataReaderSuite.scala | 6 +--

.../sources/StreamingDataSourceV2Suite.scala| 7 +--

29 files changed, 168 insertions(+), 165 deletions(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/9d27541a/external/kafka-0-10-sql/src/main/scala/org/apache/spark/sql/kafka010/KafkaContinuousReader.scala

--

diff --git

a/external/kafka-0-10-sql/src/main/scala/org/apache/spark/sql/kafka010/KafkaContinuousReader.scala

b/external/kafka-0-10-sql/src/main/scala/org/apache/spark/sql/kafka010/KafkaContinuousReader.scala

index badaa69..48b91df 100644

---

a/external/kafka-0-10-sql/src/main/scala/org/apache/spark/sql/kafka010/KafkaContinuousReader.scala

+++

b/external/kafka-0-10-sql/src/main/scala/org/apache/spark/sql/kafka010/KafkaContinuousReader.scala

@@ -26,6 +26,7 @@ import org.apache.kafka.common.TopicPartition

import org.apache.spark.TaskContext

import org.apache.spark.internal.Logging

import org.apache.spark.sql.SparkSession

+import org.apache.spark.sql.catalyst.InternalRow

import org.apache.spark.sql.catalyst.expressions.UnsafeRow

import

org.apache.spark.sql.kafka010.KafkaSourceProvider.{INSTRUCTION_FOR_FAIL_ON_DATA_LOSS_FALSE,

INSTRUCTION_FOR_FAIL_ON_DATA_LOSS_TRUE}

import org.apache.spark.sql.sources.v2.reader._

@@ -53,7 +54,7 @@ class KafkaContinuousReader(

metadataPath: String,

initialOffsets: KafkaOffsetRangeLimit,

failOnDataLoss: Boolean)

- extends ContinuousReader with SupportsScanUnsafeRow with Logging {

+ extends ContinuousReader with Logging {

private lazy val session = SparkSession.getActiveSession.get

private lazy val sc = session.sparkContext

@@ -86,7 +87,7 @@ class KafkaContinuousReader(

KafkaSourceOffset(JsonUtils.partitionOffsets(json))

}

- override def planUnsafeInputPartitions(): ju.List[InputPartition[UnsafeRow]]

= {

+ override def planInputPartitions(): ju.List[InputPa

svn commit: r28322 - in /dev/spark/2.4.0-SNAPSHOT-2018_07_24_08_02-3d5c61e-docs: ./ _site/ _site/api/ _site/api/R/ _site/api/java/ _site/api/java/lib/ _site/api/java/org/ _site/api/java/org/apache/ _s

Author: pwendell

Date: Tue Jul 24 15:16:47 2018

New Revision: 28322

Log: Apache Spark 2.4.0-SNAPSHOT-2018_07_24_08_02-3d5c61e docs

[This commit notification would consist of 1469 parts, which exceeds the limit of 50 ones, so it was shortened to the summary.]

svn commit: r28315 - in /dev/spark/2.3.3-SNAPSHOT-2018_07_24_06_01-740a23d-docs: ./ _site/ _site/api/ _site/api/R/ _site/api/java/ _site/api/java/lib/ _site/api/java/org/ _site/api/java/org/apache/ _s

Author: pwendell

Date: Tue Jul 24 13:16:23 2018

New Revision: 28315

Log: Apache Spark 2.3.3-SNAPSHOT-2018_07_24_06_01-740a23d docs

[This commit notification would consist of 1443 parts, which exceeds the limit of 50 ones, so it was shortened to the summary.]

spark git commit: [SPARK-22499][FOLLOWUP][SQL] Reduce input string expressions for Least and Greatest to reduce time in its test

Repository: spark

Updated Branches:

refs/heads/branch-2.2 144426cff -> f339e2fd7

[SPARK-22499][FOLLOWUP][SQL] Reduce input string expressions for Least and

Greatest to reduce time in its test

## What changes were proposed in this pull request?

This is minor and trivial, but 2000 inputs look sufficient to reproduce and

test the issue in SPARK-22499.

## How was this patch tested?

Manually brought the change and tested.

Locally tested:

Before: 3m 21s 288ms

After: 1m 29s 134ms

Given the latest successful build took:

```

ArithmeticExpressionSuite:

- SPARK-22499: Least and greatest should not generate codes beyond 64KB (7

minutes, 49 seconds)

```

I expect it's going to save 4ish mins.
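
The "4ish mins" estimate can be reproduced with a little arithmetic. The sketch below assumes, purely for illustration, that the Jenkins run would shrink by the same ratio as the local run; the object name `TestTimeEstimate` is hypothetical, and the timings are the ones quoted above.

```scala
// Illustrative arithmetic only; TestTimeEstimate is a hypothetical name.
object TestTimeEstimate {
  // Local timings quoted in the commit message, in seconds.
  val beforeLocal: Double = 3 * 60 + 21.288 // 3m 21s 288ms
  val afterLocal: Double = 1 * 60 + 29.134  // 1m 29s 134ms

  // Time of the test in the latest successful build: 7 minutes, 49 seconds.
  val buildBefore: Double = 7 * 60 + 49

  // Assumption: the build speeds up by the same ratio as the local run.
  val ratio: Double = afterLocal / beforeLocal
  val estimatedSavingSeconds: Double = buildBefore * (1.0 - ratio)
  val estimatedSavingMinutes: Double = estimatedSavingSeconds / 60.0
}
```

Under that assumption the saving comes out a little above four minutes, which is consistent with the estimate in the message.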

Author: hyukjinkwon

Closes #21855 from HyukjinKwon/minor-fix-suite.

(cherry picked from commit 3d5c61e5fd24f07302e39b5d61294da79aa0c2f9)

Signed-off-by: hyukjinkwon

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/f339e2fd

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/f339e2fd

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/f339e2fd

Branch: refs/heads/branch-2.2

Commit: f339e2fd7f9bfa1c7326749ad604e194ca0c300c

Parents: 144426c

Author: hyukjinkwon

Authored: Tue Jul 24 19:51:09 2018 +0800

Committer: hyukjinkwon

Committed: Tue Jul 24 19:51:53 2018 +0800

--

.../spark/sql/catalyst/expressions/ArithmeticExpressionSuite.scala | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/f339e2fd/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/ArithmeticExpressionSuite.scala

--

diff --git

a/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/ArithmeticExpressionSuite.scala

b/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/ArithmeticExpressionSuite.scala

index ca66010..0676b94 100644

---

a/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/ArithmeticExpressionSuite.scala

+++

b/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/ArithmeticExpressionSuite.scala

@@ -329,7 +329,7 @@ class ArithmeticExpressionSuite extends SparkFunSuite with

ExpressionEvalHelper

}

test("SPARK-22499: Least and greatest should not generate codes beyond

64KB") {

-val N = 3000

+val N = 2000

val strings = (1 to N).map(x => "s" * x)

val inputsExpr = strings.map(Literal.create(_, StringType))
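
To see how much input the change from `N = 3000` to `N = 2000` removes, the string construction in the test can be run on its own. This is a sketch with a hypothetical object name, `LiteralSizeSketch`; it only counts characters and makes no claim that test time scales linearly with that count.

```scala
// Illustrative sketch only; LiteralSizeSketch is a hypothetical name.
object LiteralSizeSketch {
  // Same construction as in the test: strings of length 1, 2, ..., n.
  def strings(n: Int): Seq[String] = (1 to n).map(x => "s" * x)

  // Total number of characters across all generated strings, i.e. n * (n + 1) / 2.
  def totalChars(n: Int): Long = strings(n).map(_.length.toLong).sum
}
```

For `N = 3000` the literals contain 4,501,500 characters in total; for `N = 2000` they contain 2,001,000, which is less than half.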

spark git commit: [SPARK-22499][FOLLOWUP][SQL] Reduce input string expressions for Least and Greatest to reduce time in its test

Repository: spark

Updated Branches:

refs/heads/branch-2.3 f5bc94861 -> 740a23d7d

[SPARK-22499][FOLLOWUP][SQL] Reduce input string expressions for Least and

Greatest to reduce time in its test

## What changes were proposed in this pull request?

This is minor and trivial, but 2000 inputs look sufficient to reproduce and

test the issue in SPARK-22499.

## How was this patch tested?

Manually brought the change and tested.

Locally tested:

Before: 3m 21s 288ms

After: 1m 29s 134ms

Given the latest successful build took:

```

ArithmeticExpressionSuite:

- SPARK-22499: Least and greatest should not generate codes beyond 64KB (7

minutes, 49 seconds)

```

I expect it's going to save 4ish mins.

Author: hyukjinkwon

Closes #21855 from HyukjinKwon/minor-fix-suite.

(cherry picked from commit 3d5c61e5fd24f07302e39b5d61294da79aa0c2f9)

Signed-off-by: hyukjinkwon

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/740a23d7

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/740a23d7

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/740a23d7

Branch: refs/heads/branch-2.3

Commit: 740a23d7d13a57083e190fe85636de893480dab5

Parents: f5bc948

Author: hyukjinkwon

Authored: Tue Jul 24 19:51:09 2018 +0800

Committer: hyukjinkwon

Committed: Tue Jul 24 19:51:26 2018 +0800

--

.../spark/sql/catalyst/expressions/ArithmeticExpressionSuite.scala | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/740a23d7/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/ArithmeticExpressionSuite.scala

--

diff --git

a/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/ArithmeticExpressionSuite.scala

b/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/ArithmeticExpressionSuite.scala

index 6edb434..94d046a 100644

---

a/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/ArithmeticExpressionSuite.scala

+++

b/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/ArithmeticExpressionSuite.scala

@@ -337,7 +337,7 @@ class ArithmeticExpressionSuite extends SparkFunSuite with

ExpressionEvalHelper

}

test("SPARK-22499: Least and greatest should not generate codes beyond

64KB") {

-val N = 3000

+val N = 2000

val strings = (1 to N).map(x => "s" * x)

val inputsExpr = strings.map(Literal.create(_, StringType))

spark git commit: [SPARK-22499][FOLLOWUP][SQL] Reduce input string expressions for Least and Greatest to reduce time in its test

Repository: spark

Updated Branches:

refs/heads/master 13a67b070 -> 3d5c61e5f

[SPARK-22499][FOLLOWUP][SQL] Reduce input string expressions for Least and

Greatest to reduce time in its test

## What changes were proposed in this pull request?

This is minor and trivial, but 2000 inputs look sufficient to reproduce and

test the issue in SPARK-22499.

## How was this patch tested?

Manually brought the change and tested.

Locally tested:

Before: 3m 21s 288ms

After: 1m 29s 134ms

Given the latest successful build took:

```

ArithmeticExpressionSuite:

- SPARK-22499: Least and greatest should not generate codes beyond 64KB (7

minutes, 49 seconds)

```

I expect it's going to save 4ish mins.

Author: hyukjinkwon

Closes #21855 from HyukjinKwon/minor-fix-suite.

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/3d5c61e5

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/3d5c61e5

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/3d5c61e5

Branch: refs/heads/master

Commit: 3d5c61e5fd24f07302e39b5d61294da79aa0c2f9

Parents: 13a67b0

Author: hyukjinkwon

Authored: Tue Jul 24 19:51:09 2018 +0800

Committer: hyukjinkwon

Committed: Tue Jul 24 19:51:09 2018 +0800

--

.../spark/sql/catalyst/expressions/ArithmeticExpressionSuite.scala | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

--

http://git-wip-us.apache.org/repos/asf/spark/blob/3d5c61e5/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/ArithmeticExpressionSuite.scala

--

diff --git

a/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/ArithmeticExpressionSuite.scala

b/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/ArithmeticExpressionSuite.scala

index 0212176..9a752af 100644

---

a/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/ArithmeticExpressionSuite.scala

+++

b/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/ArithmeticExpressionSuite.scala

@@ -349,7 +349,7 @@ class ArithmeticExpressionSuite extends SparkFunSuite with

ExpressionEvalHelper

}

test("SPARK-22499: Least and greatest should not generate codes beyond

64KB") {

-val N = 3000

+val N = 2000

val strings = (1 to N).map(x => "s" * x)

val inputsExpr = strings.map(Literal.create(_, StringType))

svn commit: r28311 - in /dev/spark/2.4.0-SNAPSHOT-2018_07_24_00_02-13a67b0-docs: ./ _site/ _site/api/ _site/api/R/ _site/api/java/ _site/api/java/lib/ _site/api/java/org/ _site/api/java/org/apache/ _s

Author: pwendell

Date: Tue Jul 24 07:17:47 2018

New Revision: 28311

Log: Apache Spark 2.4.0-SNAPSHOT-2018_07_24_00_02-13a67b0 docs

[This commit notification would consist of 1469 parts, which exceeds the limit of 50 ones, so it was shortened to the summary.]