[GitHub] [spark-website] huaxingao commented on pull request #278: Add Huaxin Gao to committers.md

huaxingao commented on pull request #278: URL: https://github.com/apache/spark-website/pull/278#issuecomment-653306775 Thanks everyone! This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[GitHub] [spark-website] huaxingao closed pull request #278: Add Huaxin Gao to committers.md

huaxingao closed pull request #278: URL: https://github.com/apache/spark-website/pull/278

[spark-website] branch asf-site updated: Add Huaxin Gao to committers.md

This is an automated email from the ASF dual-hosted git repository.

huaxingao pushed a commit to branch asf-site in repository https://gitbox.apache.org/repos/asf/spark-website.git

The following commit(s) were added to refs/heads/asf-site by this push:
new 18d7e21 Add Huaxin Gao to committers.md
18d7e21 is described below

commit 18d7e2103f9713adc09d69b65ebd4a48107c88f0
Author: Huaxin Gao
AuthorDate: Thu Jul 2 19:38:42 2020 -0700

Add Huaxin Gao to committers.md

Author: Huaxin Gao

Closes #278 from huaxingao/asf-site.
---
 committers.md        | 1 +
 site/committers.html | 4
 2 files changed, 5 insertions(+)

diff --git a/committers.md b/committers.md
index 42b89d4..77e768d 100644
--- a/committers.md
+++ b/committers.md
@@ -26,6 +26,7 @@ navigation:
 |Erik Erlandson|Red Hat|
 |Robert Evans|NVIDIA|
 |Wenchen Fan|Databricks|
+|Huaxin Gao|IBM|
 |Joseph Gonzalez|UC Berkeley|
 |Thomas Graves|NVIDIA|
 |Stephen Haberman|LinkedIn|
diff --git a/site/committers.html b/site/committers.html
index 5299961..66de9a1 100644
--- a/site/committers.html
+++ b/site/committers.html
@@ -275,6 +275,10 @@
Databricks + Huaxin Gao + IBM + + Joseph Gonzalez UC Berkeley

[GitHub] [spark-website] HeartSaVioR commented on pull request #278: Add Huaxin Gao to committers.md

HeartSaVioR commented on pull request #278: URL: https://github.com/apache/spark-website/pull/278#issuecomment-653269669 She's been added to the roster: http://people.apache.org/committer-index.html Perhaps she hasn't set up ASF Gitbox yet?

[GitHub] [spark-website] viirya edited a comment on pull request #278: Add Huaxin Gao to committers.md

viirya edited a comment on pull request #278: URL: https://github.com/apache/spark-website/pull/278#issuecomment-653265728 @srowen, @huaxingao seems not to have been added to https://github.com/orgs/apache/teams/spark-committers/members yet?

[GitHub] [spark-website] viirya commented on pull request #278: Add Huaxin Gao to committers.md

viirya commented on pull request #278: URL: https://github.com/apache/spark-website/pull/278#issuecomment-653265728 @srowen @huaxingao seems not to have been added to https://github.com/orgs/apache/teams/spark-committers/members yet?

[GitHub] [spark-website] viirya commented on pull request #277: Add Jungtaek Lim to committers.md

viirya commented on pull request #277: URL: https://github.com/apache/spark-website/pull/277#issuecomment-653260609 Congrats!

[GitHub] [spark-website] huaxingao commented on pull request #277: Add Jungtaek Lim to committers.md

huaxingao commented on pull request #277: URL: https://github.com/apache/spark-website/pull/277#issuecomment-653256977 Congratulations!

[GitHub] [spark-website] huaxingao opened a new pull request #278: Add Huaxin Gao to committers.md

huaxingao opened a new pull request #278: URL: https://github.com/apache/spark-website/pull/278

[spark] branch branch-3.0 updated: [SPARK-32130][SQL][FOLLOWUP] Enable timestamps inference in JsonBenchmark

dongjoon pushed a commit to branch branch-3.0 in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.0 by this push:
new 31e1ea1 [SPARK-32130][SQL][FOLLOWUP] Enable timestamps inference in JsonBenchmark
31e1ea1 is described below

commit 31e1ea165f3cbb503b05452b448010e81474dcad
Author: Max Gekk
AuthorDate: Thu Jul 2 13:26:57 2020 -0700

[SPARK-32130][SQL][FOLLOWUP] Enable timestamps inference in JsonBenchmark

### What changes were proposed in this pull request?
Set the JSON option `inferTimestamp` to `true` for the cases that measure the performance of timestamp inference.

### Why are the changes needed?
PR https://github.com/apache/spark/pull/28966 disabled timestamp inference by default. As a consequence, some benchmarks no longer measure the performance of timestamp inference from JSON fields. This PR enables such inference explicitly.

### Does this PR introduce _any_ user-facing change?
No

### How was this patch tested?
By re-generating the results of `JsonBenchmark`.

Closes #28981 from MaxGekk/json-inferTimestamps-disable-by-default-followup.

Authored-by: Max Gekk
Signed-off-by: Dongjoon Hyun
(cherry picked from commit 42f01e314b4874236544cc8b94bef766269385ee)
Signed-off-by: Dongjoon Hyun
---
 .../benchmarks/JsonBenchmark-jdk11-results.txt     | 86 +++---
 sql/core/benchmarks/JsonBenchmark-results.txt      | 86 +++---
 .../execution/datasources/json/JsonBenchmark.scala |  4 +-
 3 files changed, 88 insertions(+), 88 deletions(-)

diff --git a/sql/core/benchmarks/JsonBenchmark-jdk11-results.txt b/sql/core/benchmarks/JsonBenchmark-jdk11-results.txt
index ff37084..2d506f0 100644
--- a/sql/core/benchmarks/JsonBenchmark-jdk11-results.txt
+++ b/sql/core/benchmarks/JsonBenchmark-jdk11-results.txt
@@ -7,106 +7,106 @@ OpenJDK 64-Bit Server VM 11.0.7+10-post-Ubuntu-2ubuntu218.04 on Linux 4.15.0-106
 Intel(R) Xeon(R) CPU E5-2670 v2 @ 2.50GHz
 JSON schema inferring:   Best Time(ms)  Avg Time(ms)  Stdev(ms)  Rate(M/s)  Per Row(ns)  Relative
-No encoding                      69219         69342        116        1.4        692.2      1.0X
-UTF-8 is set                    143950        143986         55        0.7       1439.5      0.5X
+No encoding                      73307         73400        141        1.4        733.1      1.0X
+UTF-8 is set                    143834        143925        152        0.7       1438.3      0.5X
 Preparing data for benchmarking ...
 OpenJDK 64-Bit Server VM 11.0.7+10-post-Ubuntu-2ubuntu218.04 on Linux 4.15.0-1063-aws
 Intel(R) Xeon(R) CPU E5-2670 v2 @ 2.50GHz
 count a short column:    Best Time(ms)  Avg Time(ms)  Stdev(ms)  Rate(M/s)  Per Row(ns)  Relative
-No encoding                      57828         57913        136        1.7        578.3      1.0X
-UTF-8 is set                     83649         83711         60        1.2        836.5      0.7X
+No encoding                      50894         51065        292        2.0        508.9      1.0X
+UTF-8 is set                     98462         99455       1173        1.0        984.6      0.5X
 Preparing data for benchmarking ...
 OpenJDK 64-Bit Server VM 11.0.7+10-post-Ubuntu-2ubuntu218.04 on Linux 4.15.0-1063-aws
 Intel(R) Xeon(R) CPU E5-2670 v2 @ 2.50GHz
 count a wide column:     Best Time(ms)  Avg Time(ms)  Stdev(ms)  Rate(M/s)  Per Row(ns)  Relative
-No encoding                      64560         65193       1023        0.2       6456.0      1.0X
-UTF-8 is set                    102925        103174        216        0.1      10292.5      0.6X
+No encoding                      64011         64969       1001        0.2       6401.1      1.0X
+UTF-8 is set                    102757        102984        311        0.1      10275.7      0.6X
 Preparing data for benchmarking ...
 OpenJDK 64-Bit Server VM 11.0.7+10-post-Ubuntu-2ubuntu218.04 on Linux 4.15.0-1063-aws
 Intel(R) Xeon(R) CPU E5-2670 v2 @ 2.50GHz
 select wide row:         Best Time(ms)  Avg Time(ms)  Stdev(ms)  Rate(M/s)  Per Row(ns)  Relative
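The derived columns of these JsonBenchmark result tables can be recomputed from Best Time(ms) alone. The sketch below assumes, as the published numbers are consistent with, that Per Row(ns) is Best Time divided by the row count, Rate(M/s) is the row count divided by Best Time, and Relative is the first case's Best Time divided by the current case's Best Time. The row counts (100 million for "JSON schema inferring", 10 million for "count a wide column") are back-computed from the table, not stated in the commit, and the helper names `derived_columns` and `fmt` are hypothetical.

```python
# Minimal sketch: recompute the derived columns of a JsonBenchmark result row.
# Assumption: row counts are back-computed from the published table
# (Best Time / Per Row); they are not stated in the commit itself.


def derived_columns(best_ms: float, num_rows: int, baseline_best_ms: float):
    """Return (Rate(M/s), Per Row(ns), Relative) for one benchmark case."""
    seconds = best_ms / 1000.0
    rate_m_per_s = num_rows / seconds / 1e6   # million rows per second
    per_row_ns = best_ms * 1e6 / num_rows     # nanoseconds spent per row
    relative = baseline_best_ms / best_ms     # >1.0X means faster than the first case
    return rate_m_per_s, per_row_ns, relative


def fmt(best_ms: float, num_rows: int, baseline_best_ms: float) -> str:
    """Format the three derived columns the way the results file prints them."""
    rate, per_row, rel = derived_columns(best_ms, num_rows, baseline_best_ms)
    return f"{rate:.1f} {per_row:.1f} {rel:.1f}X"


if __name__ == "__main__":
    # "JSON schema inferring", new results (assumed 100M rows).
    print(fmt(73307, 100_000_000, 73307))   # No encoding  -> 1.4 733.1 1.0X
    print(fmt(143834, 100_000_000, 73307))  # UTF-8 is set -> 0.7 1438.3 0.5X
    # "count a wide column", new results (assumed 10M rows).
    print(fmt(64011, 10_000_000, 64011))    # No encoding  -> 0.2 6401.1 1.0X
    print(fmt(102757, 10_000_000, 64011))   # UTF-8 is set -> 0.1 10275.7 0.6X
```

Running it reproduces the `+` rows of the diff, which is a quick way to spot rows where whitespace collapsed in the archive fused two columns (for example `0.71438.3` is really `0.7` and `1438.3`).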

[spark] branch master updated (0acad58 -> 42f01e3)

dongjoon pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git.

from 0acad58 [SPARK-32156][SPARK-31061][TESTS][SQL] Refactor two similar test cases from in HiveExternalCatalogSuite
add 42f01e3 [SPARK-32130][SQL][FOLLOWUP] Enable timestamps inference in JsonBenchmark

No new revisions were added by this update.

Summary of changes:
 .../benchmarks/JsonBenchmark-jdk11-results.txt     | 86 +++---
 sql/core/benchmarks/JsonBenchmark-results.txt      | 86 +++---
 .../execution/datasources/json/JsonBenchmark.scala |  4 +-
 3 files changed, 88 insertions(+), 88 deletions(-)

[spark] branch master updated: [SPARK-32130][SQL][FOLLOWUP] Enable timestamps inference in JsonBenchmark

dongjoon pushed a commit to branch master in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:
new 42f01e3 [SPARK-32130][SQL][FOLLOWUP] Enable timestamps inference in JsonBenchmark
42f01e3 is described below

commit 42f01e314b4874236544cc8b94bef766269385ee
Author: Max Gekk
AuthorDate: Thu Jul 2 13:26:57 2020 -0700

[SPARK-32130][SQL][FOLLOWUP] Enable timestamps inference in JsonBenchmark

### What changes were proposed in this pull request?
Set the JSON option `inferTimestamp` to `true` for the cases that measure the performance of timestamp inference.

### Why are the changes needed?
PR https://github.com/apache/spark/pull/28966 disabled timestamp inference by default. As a consequence, some benchmarks no longer measure the performance of timestamp inference from JSON fields. This PR enables such inference explicitly.

### Does this PR introduce _any_ user-facing change?
No

### How was this patch tested?
By re-generating the results of `JsonBenchmark`.

Closes #28981 from MaxGekk/json-inferTimestamps-disable-by-default-followup.

Authored-by: Max Gekk
Signed-off-by: Dongjoon Hyun
---
 .../benchmarks/JsonBenchmark-jdk11-results.txt     | 86 +++---
 sql/core/benchmarks/JsonBenchmark-results.txt      | 86 +++---
 .../execution/datasources/json/JsonBenchmark.scala |  4 +-
 3 files changed, 88 insertions(+), 88 deletions(-)

diff --git a/sql/core/benchmarks/JsonBenchmark-jdk11-results.txt b/sql/core/benchmarks/JsonBenchmark-jdk11-results.txt
index ff37084..2d506f0 100644
--- a/sql/core/benchmarks/JsonBenchmark-jdk11-results.txt
+++ b/sql/core/benchmarks/JsonBenchmark-jdk11-results.txt
@@ -7,106 +7,106 @@ OpenJDK 64-Bit Server VM 11.0.7+10-post-Ubuntu-2ubuntu218.04 on Linux 4.15.0-106
 Intel(R) Xeon(R) CPU E5-2670 v2 @ 2.50GHz
 JSON schema inferring:   Best Time(ms)  Avg Time(ms)  Stdev(ms)  Rate(M/s)  Per Row(ns)  Relative
-No encoding                      69219         69342        116        1.4        692.2      1.0X
-UTF-8 is set                    143950        143986         55        0.7       1439.5      0.5X
+No encoding                      73307         73400        141        1.4        733.1      1.0X
+UTF-8 is set                    143834        143925        152        0.7       1438.3      0.5X
 Preparing data for benchmarking ...
 OpenJDK 64-Bit Server VM 11.0.7+10-post-Ubuntu-2ubuntu218.04 on Linux 4.15.0-1063-aws
 Intel(R) Xeon(R) CPU E5-2670 v2 @ 2.50GHz
 count a short column:    Best Time(ms)  Avg Time(ms)  Stdev(ms)  Rate(M/s)  Per Row(ns)  Relative
-No encoding                      57828         57913        136        1.7        578.3      1.0X
-UTF-8 is set                     83649         83711         60        1.2        836.5      0.7X
+No encoding                      50894         51065        292        2.0        508.9      1.0X
+UTF-8 is set                     98462         99455       1173        1.0        984.6      0.5X
 Preparing data for benchmarking ...
 OpenJDK 64-Bit Server VM 11.0.7+10-post-Ubuntu-2ubuntu218.04 on Linux 4.15.0-1063-aws
 Intel(R) Xeon(R) CPU E5-2670 v2 @ 2.50GHz
 count a wide column:     Best Time(ms)  Avg Time(ms)  Stdev(ms)  Rate(M/s)  Per Row(ns)  Relative
-No encoding                      64560         65193       1023        0.2       6456.0      1.0X
-UTF-8 is set                    102925        103174        216        0.1      10292.5      0.6X
+No encoding                      64011         64969       1001        0.2       6401.1      1.0X
+UTF-8 is set                    102757        102984        311        0.1      10275.7      0.6X
 Preparing data for benchmarking ...
 OpenJDK 64-Bit Server VM 11.0.7+10-post-Ubuntu-2ubuntu218.04 on Linux 4.15.0-1063-aws
 Intel(R) Xeon(R) CPU E5-2670 v2 @ 2.50GHz
 select wide row:         Best Time(ms)  Avg Time(ms)  Stdev(ms)  Rate(M/s)  Per Row(ns)  Relative
-No encoding
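The commit message explains that once `inferTimestamp` defaults to `false`, the benchmark cases meant to time timestamp inference stop doing that work unless the option is set explicitly. The sketch below is a deliberately simplified, hypothetical model of per-field type inference that shows the effect; it is not Spark's implementation, which parses values using its configured timestamp format. The function name `infer_field_type` and the fixed ISO-8601-like pattern are assumptions made for illustration only.

```python
from datetime import datetime

# Hypothetical, simplified model of inferring the type of a JSON string field.
# Assumption: one fixed ISO-8601-like pattern stands in for Spark's
# configurable timestamp format.
TIMESTAMP_PATTERN = "%Y-%m-%dT%H:%M:%S"


def infer_field_type(values, infer_timestamp):
    """Infer 'timestamp' or 'string' for a column of JSON string values."""
    if not infer_timestamp or not values:
        # Inference disabled (or nothing to inspect): no parsing is attempted,
        # so a benchmark running in this mode measures none of that cost.
        return "string"
    for v in values:
        try:
            datetime.strptime(v, TIMESTAMP_PATTERN)
        except ValueError:
            # A single unparsable value makes the whole column a string.
            return "string"
    return "timestamp"


if __name__ == "__main__":
    column = ["2020-07-02T13:26:57", "2020-07-02T19:38:42"]
    print(infer_field_type(column, infer_timestamp=False))  # string
    print(infer_field_type(column, infer_timestamp=True))   # timestamp
    print(infer_field_type(column + ["not a date"], infer_timestamp=True))  # string
```

The key point the model captures is that the disabled path returns without parsing anything, which is why the benchmark has to opt back in to keep timing the parse attempts.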

[GitHub] [spark-website] MaxGekk commented on pull request #277: Add Jungtaek Lim to committers.md

MaxGekk commented on pull request #277: URL: https://github.com/apache/spark-website/pull/277#issuecomment-653139604 @HeartSaVioR Congratulations!

[spark] branch master updated (f082a79 -> 0acad58)

dongjoon pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git.

from f082a79 [SPARK-31100][SQL] Check namespace existens when setting namespace
add 0acad58 [SPARK-32156][SPARK-31061][TESTS][SQL] Refactor two similar test cases from in HiveExternalCatalogSuite

No new revisions were added by this update.

Summary of changes:
 .../spark/sql/hive/HiveExternalCatalogSuite.scala | 54 --
 1 file changed, 19 insertions(+), 35 deletions(-)

[spark] branch master updated: [SPARK-32156][SPARK-31061][TESTS][SQL] Refactor two similar test cases from in HiveExternalCatalogSuite

This is an automated email from the ASF dual-hosted git repository.

dongjoon pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 0acad58 [SPARK-32156][SPARK-31061][TESTS][SQL] Refactor two similar test cases from in HiveExternalCatalogSuite

0acad58 is described below

commit 0acad589e120cd777b25c03777a3cce4ef704422

Author: TJX2014

AuthorDate: Thu Jul 2 10:15:10 2020 -0700

[SPARK-32156][SPARK-31061][TESTS][SQL] Refactor two similar test cases from in HiveExternalCatalogSuite

### What changes were proposed in this pull request?

1. Merge two similar tests for SPARK-31061 and clean up the code.

2. Fix the table alter issue caused by a lost path.

### Why are the changes needed?

Because these two tests for SPARK-31061 are very similar, they can be merged.

Also, the first test case should alter `rawTable` (the table read back from the catalog) instead of `parquetTable`.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Unit test.

Closes #28980 from TJX2014/master-follow-merge-spark-31061-test-case.

Authored-by: TJX2014

Signed-off-by: Dongjoon Hyun

---

.../spark/sql/hive/HiveExternalCatalogSuite.scala | 54 --

1 file changed, 19 insertions(+), 35 deletions(-)

diff --git a/sql/hive/src/test/scala/org/apache/spark/sql/hive/HiveExternalCatalogSuite.scala b/sql/hive/src/test/scala/org/apache/spark/sql/hive/HiveExternalCatalogSuite.scala
index 473a93b..270595b 100644
--- a/sql/hive/src/test/scala/org/apache/spark/sql/hive/HiveExternalCatalogSuite.scala
+++ b/sql/hive/src/test/scala/org/apache/spark/sql/hive/HiveExternalCatalogSuite.scala
@@ -181,41 +181,25 @@ class HiveExternalCatalogSuite extends ExternalCatalogSuite {
       "INSERT overwrite directory \"fs://localhost/tmp\" select 1 as a"))
   }
 
-  test("SPARK-31061: alterTable should be able to change table provider") {
+  test("SPARK-31061: alterTable should be able to change table provider/hive") {
     val catalog = newBasicCatalog()
-    val parquetTable = CatalogTable(
-      identifier = TableIdentifier("parq_tbl", Some("db1")),
-      tableType = CatalogTableType.MANAGED,
-      storage = storageFormat.copy(locationUri = Some(new URI("file:/some/path"))),
-      schema = new StructType().add("col1", "int").add("col2", "string"),
-      provider = Some("parquet"))
-    catalog.createTable(parquetTable, ignoreIfExists = false)
-
-    val rawTable = externalCatalog.getTable("db1", "parq_tbl")
-    assert(rawTable.provider === Some("parquet"))
-
-    val fooTable = parquetTable.copy(provider = Some("foo"))
-    catalog.alterTable(fooTable)
-    val alteredTable = externalCatalog.getTable("db1", "parq_tbl")
-    assert(alteredTable.provider === Some("foo"))
-  }
-
-  test("SPARK-31061: alterTable should be able to change table provider from hive") {
-    val catalog = newBasicCatalog()
-    val hiveTable = CatalogTable(
-      identifier = TableIdentifier("parq_tbl", Some("db1")),
-      tableType = CatalogTableType.MANAGED,
-      storage = storageFormat,
-      schema = new StructType().add("col1", "int").add("col2", "string"),
-      provider = Some("hive"))
-    catalog.createTable(hiveTable, ignoreIfExists = false)
-
-    val rawTable = externalCatalog.getTable("db1", "parq_tbl")
-    assert(rawTable.provider === Some("hive"))
-
-    val fooTable = rawTable.copy(provider = Some("foo"))
-    catalog.alterTable(fooTable)
-    val alteredTable = externalCatalog.getTable("db1", "parq_tbl")
-    assert(alteredTable.provider === Some("foo"))
+    Seq("parquet", "hive").foreach( provider => {
+      val tableDDL = CatalogTable(
+        identifier = TableIdentifier("parq_tbl", Some("db1")),
+        tableType = CatalogTableType.MANAGED,
+        storage = storageFormat,
+        schema = new StructType().add("col1", "int"),
+        provider = Some(provider))
+      catalog.dropTable("db1", "parq_tbl", true, true)
+      catalog.createTable(tableDDL, ignoreIfExists = false)
+
+      val rawTable = externalCatalog.getTable("db1", "parq_tbl")
+      assert(rawTable.provider === Some(provider))
+
+      val fooTable = rawTable.copy(provider = Some("foo"))
+      catalog.alterTable(fooTable)
+      val alteredTable = externalCatalog.getTable("db1", "parq_tbl")
+      assert(alteredTable.provider === Some("foo"))
+    })
   }
 }
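The merged test follows a common pattern: run one test body in a loop over the parameter that used to differ (here, the provider) and reset state at the top of each iteration. Below is a minimal Python sketch of that shape. It assumes a hypothetical `InMemoryCatalog`/`TableMeta` pair, not Spark's `ExternalCatalog` or `CatalogTable`. The default-location behaviour is also an assumption, added only to show why copying the table read back from the catalog (`rawTable`) is safer than copying the original definition.

```python
from dataclasses import dataclass, replace
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class TableMeta:
    """Hypothetical, much-simplified stand-in for Spark's CatalogTable."""

    database: str
    name: str
    provider: Optional[str]
    location: Optional[str] = None


class InMemoryCatalog:
    """Hypothetical catalog used only to show the shape of the merged test."""

    def __init__(self) -> None:
        self._tables: Dict[Tuple[str, str], TableMeta] = {}

    def create_table(self, table: TableMeta, ignore_if_exists: bool = False) -> None:
        key = (table.database, table.name)
        if key in self._tables and not ignore_if_exists:
            raise ValueError(f"table {key} already exists")
        # Assumption: the catalog fills in a default location when none is given.
        if table.location is None:
            default_location = f"/warehouse/{table.database}/{table.name}"
            table = replace(table, location=default_location)
        self._tables[key] = table

    def drop_table(self, database: str, name: str, ignore_if_not_exists: bool = True) -> None:
        key = (database, name)
        if key not in self._tables and not ignore_if_not_exists:
            raise KeyError(key)
        self._tables.pop(key, None)

    def alter_table(self, table: TableMeta) -> None:
        self._tables[(table.database, table.name)] = table

    def get_table(self, database: str, name: str) -> TableMeta:
        return self._tables[(database, name)]


def check_provider_can_change(catalog: InMemoryCatalog) -> None:
    # One loop replaces two near-identical tests, like Seq("parquet", "hive").foreach.
    for provider in ("parquet", "hive"):
        ddl = TableMeta("db1", "parq_tbl", provider)
        # Start each iteration from a clean slate; a missing table is not an error.
        catalog.drop_table("db1", "parq_tbl", ignore_if_not_exists=True)
        catalog.create_table(ddl)

        raw = catalog.get_table("db1", "parq_tbl")
        assert raw.provider == provider

        # Alter a copy of the table read back from the catalog, not of `ddl`,
        # so fields the catalog filled in (here, the location) are kept.
        catalog.alter_table(replace(raw, provider="foo"))
        altered = catalog.get_table("db1", "parq_tbl")
        assert altered.provider == "foo"
        assert altered.location == raw.location


check_provider_can_change(InMemoryCatalog())
```

Had the sketch altered `replace(ddl, provider="foo")` instead, the stored location would revert to `None` and the last assertion would fail.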

[GitHub] [spark-website] gengliangwang commented on pull request #277: Add Jungtaek Lim to committers.md

gengliangwang commented on pull request #277: URL: https://github.com/apache/spark-website/pull/277#issuecomment-653124021 Congrats!

[GitHub] [spark-website] dongjoon-hyun commented on pull request #277: Add Jungtaek Lim to committers.md

dongjoon-hyun commented on pull request #277: URL: https://github.com/apache/spark-website/pull/277#issuecomment-653066964 Congrats! :)

[spark] branch master updated (f834156 -> f082a79)

This is an automated email from the ASF dual-hosted git repository. wenchen pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git. from f834156 [MINOR][TEST][SQL] Make in-limit.sql more robust add f082a79 [SPARK-31100][SQL] Check namespace existens when setting namespace No new revisions were added by this update. Summary of changes: .../sql/connector/catalog/CatalogManager.scala | 15 +++- .../connector/catalog/CatalogManagerSuite.scala| 16 + .../spark/sql/connector/DataSourceV2SQLSuite.scala | 28 ++ 3 files changed, 48 insertions(+), 11 deletions(-)
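This summary gives only the title of SPARK-31100. The Python sketch below illustrates what "check namespace existence when setting namespace" means in general. It uses hypothetical `NamespaceManager` and `NoSuchNamespaceError` names and is not Spark's `CatalogManager` code. The real change's behaviour and exception types may differ.

```python
from typing import Iterable, List, Tuple

Namespace = Tuple[str, ...]


class NoSuchNamespaceError(Exception):
    """Hypothetical error type for this sketch."""


class NamespaceManager:
    """Hypothetical sketch: validate a namespace before making it current."""

    def __init__(self, existing: Iterable[Namespace]) -> None:
        self._existing = set(existing)
        self._current: Namespace = ("default",)

    @property
    def current_namespace(self) -> Namespace:
        return self._current

    def set_current_namespace(self, namespace: List[str]) -> None:
        ns = tuple(namespace)
        # Check existence before switching; on failure the current
        # namespace is left unchanged.
        if ns not in self._existing:
            raise NoSuchNamespaceError(".".join(ns))
        self._current = ns


manager = NamespaceManager([("default",), ("db1",)])
manager.set_current_namespace(["db1"])
print(manager.current_namespace)  # ('db1',)
```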

[spark] branch branch-2.4 updated: [MINOR][TEST][SQL] Make in-limit.sql more robust

This is an automated email from the ASF dual-hosted git repository. gurwls223 pushed a commit to branch branch-2.4 in repository https://gitbox.apache.org/repos/asf/spark.git The following commit(s) were added to refs/heads/branch-2.4 by this push: new 2227a16 [MINOR][TEST][SQL] Make in-limit.sql more robust 2227a16 is described below commit 2227a166782797f0e47a5d850b9713829300a466 Author: Wenchen Fan AuthorDate: Thu Jul 2 21:04:26 2020 +0900 [MINOR][TEST][SQL] Make in-limit.sql more robust ### What changes were proposed in this pull request? For queries like `t1d in (SELECT t2d FROM t2 ORDER BY t2c LIMIT 2)`, the result can be non-deterministic, because the subquery may return different rows on different runs (its output is not sorted by `t2d`, and the plan involves a shuffle). This PR makes the test more robust by adding the output column as a secondary sort key. ### Why are the changes needed? To avoid a flaky test. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? N/A Closes #28976 from cloud-fan/small. Authored-by: Wenchen Fan Signed-off-by: HyukjinKwon (cherry picked from commit f83415629b18d628f72a32285f0afc24f29eaa1e) Signed-off-by: HyukjinKwon --- .../test/resources/sql-tests/inputs/subquery/in-subquery/in-limit.sql | 4 ++-- .../resources/sql-tests/results/subquery/in-subquery/in-limit.sql.out | 4 ++-- 2 files changed, 4 insertions(+), 4 deletions(-) diff --git a/sql/core/src/test/resources/sql-tests/inputs/subquery/in-subquery/in-limit.sql b/sql/core/src/test/resources/sql-tests/inputs/subquery/in-subquery/in-limit.sql index a40ee08..a3cab37 100644 --- a/sql/core/src/test/resources/sql-tests/inputs/subquery/in-subquery/in-limit.sql +++ b/sql/core/src/test/resources/sql-tests/inputs/subquery/in-subquery/in-limit.sql @@ -72,7 +72,7 @@ SELECT Count(DISTINCT( t1a )), FROM t1 WHERE t1d IN (SELECT t2d FROM t2 - ORDER BY t2c + ORDER BY t2c, t2d LIMIT 2) GROUP BY t1b ORDER BY t1b DESC NULLS FIRST @@ -93,7 +93,7 @@ SELECT Count(DISTINCT( t1a )), FROM t1 WHERE t1d NOT IN (SELECT t2d FROM t2 - ORDER BY t2b DESC nulls first + ORDER BY t2b DESC nulls first, t2d LIMIT 1) GROUP BY t1b ORDER BY t1b NULLS last diff --git a/sql/core/src/test/resources/sql-tests/results/subquery/in-subquery/in-limit.sql.out b/sql/core/src/test/resources/sql-tests/results/subquery/in-subquery/in-limit.sql.out index 71ca1f8..cde1577 100644 --- a/sql/core/src/test/resources/sql-tests/results/subquery/in-subquery/in-limit.sql.out +++ b/sql/core/src/test/resources/sql-tests/results/subquery/in-subquery/in-limit.sql.out @@ -103,7 +103,7 @@ SELECT Count(DISTINCT( t1a )), FROM t1 WHERE t1d IN (SELECT t2d FROM t2 - ORDER BY t2c + ORDER BY t2c, t2d LIMIT 2) GROUP BY t1b ORDER BY t1b DESC NULLS FIRST @@ -136,7 +136,7 @@ SELECT Count(DISTINCT( t1a )), FROM t1 WHERE t1d NOT IN (SELECT t2d FROM t2 - ORDER BY t2b DESC nulls first + ORDER BY t2b DESC nulls first, t2d LIMIT 1) GROUP BY t1b ORDER BY t1b NULLS last
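This is why the extra sort key helps. With `ORDER BY t2c LIMIT 2`, rows that tie on `t2c` may come back in any order, and after a shuffle that order can vary between runs, so `LIMIT` can keep different rows. The Python sketch below uses made-up `(t2c, t2d)` rows. Because `sorted` is stable, list order stands in for the arrival order a shuffle might produce. It models only the ordering logic, not Spark's execution.

```python
from operator import itemgetter
from typing import List, Tuple

Row = Tuple[int, int]  # (t2c, t2d); sample values are made up


def limited_t2d(rows: List[Row], n: int, tie_break: bool) -> List[int]:
    """Mimic SELECT t2d ... ORDER BY t2c [, t2d] LIMIT n."""
    # itemgetter(0, 1) sorts by (t2c, t2d); itemgetter(0) sorts by t2c only.
    key = itemgetter(0, 1) if tie_break else itemgetter(0)
    # sorted() is stable: without the tie-breaker, rows with equal t2c keep
    # their input order, which stands in for nondeterministic shuffle order.
    return [t2d for _, t2d in sorted(rows, key=key)[:n]]


run_a = [(1, 10), (2, 30), (2, 20)]
run_b = [(1, 10), (2, 20), (2, 30)]  # same rows, different arrival order

print(limited_t2d(run_a, 2, tie_break=False))  # [10, 30]
print(limited_t2d(run_b, 2, tie_break=False))  # [10, 20]
print(limited_t2d(run_a, 2, tie_break=True))   # [10, 20]
print(limited_t2d(run_b, 2, tie_break=True))   # [10, 20]
```

With the tie-breaker, both arrival orders yield the same subquery result, so the outer `IN` predicate sees the same set of values on every run.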

[spark] branch branch-3.0 updated: [MINOR][TEST][SQL] Make in-limit.sql more robust

This is an automated email from the ASF dual-hosted git repository. gurwls223 pushed a commit to branch branch-3.0 in repository https://gitbox.apache.org/repos/asf/spark.git The following commit(s) were added to refs/heads/branch-3.0 by this push: new 334b1e8 [MINOR][TEST][SQL] Make in-limit.sql more robust 334b1e8 is described below commit 334b1e8c88d6f10d5e4cde8fa400e34fb04faa39 Author: Wenchen Fan AuthorDate: Thu Jul 2 21:04:26 2020 +0900 [MINOR][TEST][SQL] Make in-limit.sql more robust ### What changes were proposed in this pull request? For queries like `t1d in (SELECT t2d FROM t2 ORDER BY t2c LIMIT 2)`, the result can be non-deterministic, because the subquery may return different rows on different runs (its output is not sorted by `t2d`, and the plan involves a shuffle). This PR makes the test more robust by adding the output column as a secondary sort key. ### Why are the changes needed? To avoid a flaky test. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? N/A Closes #28976 from cloud-fan/small. Authored-by: Wenchen Fan Signed-off-by: HyukjinKwon (cherry picked from commit f83415629b18d628f72a32285f0afc24f29eaa1e) Signed-off-by: HyukjinKwon --- .../test/resources/sql-tests/inputs/subquery/in-subquery/in-limit.sql | 4 ++-- .../resources/sql-tests/results/subquery/in-subquery/in-limit.sql.out | 4 ++-- 2 files changed, 4 insertions(+), 4 deletions(-) diff --git a/sql/core/src/test/resources/sql-tests/inputs/subquery/in-subquery/in-limit.sql b/sql/core/src/test/resources/sql-tests/inputs/subquery/in-subquery/in-limit.sql index 481b5e8..0a16f11 100644 --- a/sql/core/src/test/resources/sql-tests/inputs/subquery/in-subquery/in-limit.sql +++ b/sql/core/src/test/resources/sql-tests/inputs/subquery/in-subquery/in-limit.sql @@ -72,7 +72,7 @@ SELECT Count(DISTINCT( t1a )), FROM t1 WHERE t1d IN (SELECT t2d FROM t2 - ORDER BY t2c + ORDER BY t2c, t2d LIMIT 2) GROUP BY t1b ORDER BY t1b DESC NULLS FIRST @@ -93,7 +93,7 @@ SELECT Count(DISTINCT( t1a )), FROM t1 WHERE t1d NOT IN (SELECT t2d FROM t2 - ORDER BY t2b DESC nulls first + ORDER BY t2b DESC nulls first, t2d LIMIT 1) GROUP BY t1b ORDER BY t1b NULLS last diff --git a/sql/core/src/test/resources/sql-tests/results/subquery/in-subquery/in-limit.sql.out b/sql/core/src/test/resources/sql-tests/results/subquery/in-subquery/in-limit.sql.out index 1c33544..e24538b 100644 --- a/sql/core/src/test/resources/sql-tests/results/subquery/in-subquery/in-limit.sql.out +++ b/sql/core/src/test/resources/sql-tests/results/subquery/in-subquery/in-limit.sql.out @@ -103,7 +103,7 @@ SELECT Count(DISTINCT( t1a )), FROM t1 WHERE t1d IN (SELECT t2d FROM t2 - ORDER BY t2c + ORDER BY t2c, t2d LIMIT 2) GROUP BY t1b ORDER BY t1b DESC NULLS FIRST @@ -136,7 +136,7 @@ SELECT Count(DISTINCT( t1a )), FROM t1 WHERE t1d NOT IN (SELECT t2d FROM t2 - ORDER BY t2b DESC nulls first + ORDER BY t2b DESC nulls first, t2d LIMIT 1) GROUP BY t1b ORDER BY t1b NULLS last

[spark] branch master updated (45fe6b6 -> f834156)

This is an automated email from the ASF dual-hosted git repository. gurwls223 pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git. from 45fe6b6 [MINOR][DOCS] Pyspark getActiveSession docstring add f834156 [MINOR][TEST][SQL] Make in-limit.sql more robust No new revisions were added by this update. Summary of changes: .../test/resources/sql-tests/inputs/subquery/in-subquery/in-limit.sql | 4 ++-- .../resources/sql-tests/results/subquery/in-subquery/in-limit.sql.out | 4 ++-- 2 files changed, 4 insertions(+), 4 deletions(-)

[spark] branch branch-3.0 updated: [MINOR][DOCS] Pyspark getActiveSession docstring

This is an automated email from the ASF dual-hosted git repository.

gurwls223 pushed a commit to branch branch-3.0

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.0 by this push:

new 5361f76 [MINOR][DOCS] Pyspark getActiveSession docstring

5361f76 is described below

commit 5361f76d84de4986aa65a687bece94f4220edd94

Author: animenon

AuthorDate: Thu Jul 2 21:02:00 2020 +0900

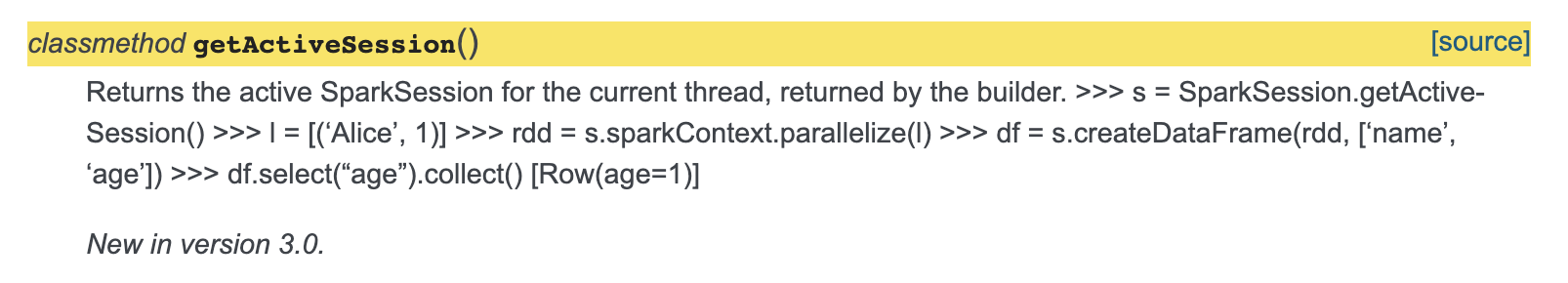

[MINOR][DOCS] Pyspark getActiveSession docstring

### What changes were proposed in this pull request?

Minor fix to the documentation of `getActiveSession`.

The sample code snippet was not formatted correctly; added a blank line to fix this.

Also documented the return value.

### Why are the changes needed?

The sample code is rendered as part of the description in the docs.

[Current Doc Link](http://spark.apache.org/docs/latest/api/python/pyspark.sql.html?highlight=getactivesession#pyspark.sql.SparkSession.getActiveSession)

### Does this PR introduce _any_ user-facing change?

Yes, the documentation of `getActiveSession` is fixed, and a description of the return value is added.

### How was this patch tested?

Adding a blank line between the description and the code appears to fix the issue.

Closes #28978 from animenon/docs_minor.

Authored-by: animenon

Signed-off-by: HyukjinKwon

(cherry picked from commit 45fe6b62a73540ff010317fc7518b007206707d6)

Signed-off-by: HyukjinKwon

---

python/pyspark/sql/session.py | 5 -

1 file changed, 4 insertions(+), 1 deletion(-)

diff --git a/python/pyspark/sql/session.py b/python/pyspark/sql/session.py
index 233f492..e9486a3 100644
--- a/python/pyspark/sql/session.py
+++ b/python/pyspark/sql/session.py
@@ -265,7 +265,10 @@ class SparkSession(SparkConversionMixin):
     @since(3.0)
     def getActiveSession(cls):
         """
-        Returns the active SparkSession for the current thread, returned by the builder.
+        Returns the active SparkSession for the current thread, returned by the builder
+
+        :return: :class:`SparkSession` if an active session exists for the current thread
+
         >>> s = SparkSession.getActiveSession()
         >>> l = [('Alice', 1)]
         >>> rdd = s.sparkContext.parallelize(l)
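The blank line matters because reStructuredText (as rendered by Sphinx) only treats `>>>` lines as a doctest block when a blank line separates them from the preceding paragraph. Without it, the examples are merged into the description text. Python's `doctest` module does not need the blank line. The sketch below mirrors the corrected docstring layout on a hypothetical `SessionRegistry` class (not PySpark's API) and runs its examples with the standard `doctest` module.

```python
import doctest


class SessionRegistry:
    """Hypothetical stand-in for a per-thread session registry (not PySpark)."""

    _active = None

    @classmethod
    def get_active_session(cls):
        """
        Returns the active session for the current thread, if any.

        :return: the active session if one exists for the current thread,
            otherwise None

        >>> SessionRegistry._active = "session-1"
        >>> SessionRegistry.get_active_session()
        'session-1'
        """
        return cls._active


def run_docstring_examples(func):
    """Run the >>> examples in func's docstring and return doctest's TestResults."""
    finder = doctest.DocTestFinder()
    runner = doctest.DocTestRunner(verbose=False)
    for test in finder.find(func, globs={"SessionRegistry": SessionRegistry}):
        runner.run(test)
    return runner.summarize(verbose=False)


results = run_docstring_examples(SessionRegistry.get_active_session)
print(results.failed, results.attempted)  # 0 2
```

The description, the `:return:` field and the examples are each separated by a blank line, which is the same layout the patch gives `getActiveSession`.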

[spark] branch branch-3.0 updated: [MINOR][TEST][SQL] Make in-limit.sql more robust

This is an automated email from the ASF dual-hosted git repository.

gurwls223 pushed a commit to branch branch-3.0
in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.0 by this push:
new 334b1e8 [MINOR][TEST][SQL] Make in-limit.sql more robust

334b1e8 is described below

commit 334b1e8c88d6f10d5e4cde8fa400e34fb04faa39
Author: Wenchen Fan
AuthorDate: Thu Jul 2 21:04:26 2020 +0900

[MINOR][TEST][SQL] Make in-limit.sql more robust

### What changes were proposed in this pull request?

For queries like `t1d in (SELECT t2d FROM t2 ORDER BY t2c LIMIT 2)`, the result can be non-deterministic because the subquery is not sorted by `t2d` and involves a shuffle. When several rows tie on `t2c`, the `t2d` values that survive the `LIMIT` can differ from run to run. This PR makes the test more robust by also sorting on the output column `t2d`.

### Why are the changes needed?

To avoid a flaky test.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

N/A

Closes #28976 from cloud-fan/small.

Authored-by: Wenchen Fan
Signed-off-by: HyukjinKwon
(cherry picked from commit f83415629b18d628f72a32285f0afc24f29eaa1e)
Signed-off-by: HyukjinKwon
---
.../test/resources/sql-tests/inputs/subquery/in-subquery/in-limit.sql | 4 ++--
.../resources/sql-tests/results/subquery/in-subquery/in-limit.sql.out | 4 ++--
2 files changed, 4 insertions(+), 4 deletions(-)

diff --git a/sql/core/src/test/resources/sql-tests/inputs/subquery/in-subquery/in-limit.sql b/sql/core/src/test/resources/sql-tests/inputs/subquery/in-subquery/in-limit.sql
index 481b5e8..0a16f11 100644
--- a/sql/core/src/test/resources/sql-tests/inputs/subquery/in-subquery/in-limit.sql
+++ b/sql/core/src/test/resources/sql-tests/inputs/subquery/in-subquery/in-limit.sql
@@ -72,7 +72,7 @@ SELECT Count(DISTINCT( t1a )),
 FROM t1
 WHERE t1d IN (SELECT t2d
 FROM t2
- ORDER BY t2c
+ ORDER BY t2c, t2d
 LIMIT 2)
 GROUP BY t1b
 ORDER BY t1b DESC NULLS FIRST
@@ -93,7 +93,7 @@ SELECT Count(DISTINCT( t1a )),
 FROM t1
 WHERE t1d NOT IN (SELECT t2d
 FROM t2
- ORDER BY t2b DESC nulls first
+ ORDER BY t2b DESC nulls first, t2d
 LIMIT 1)
 GROUP BY t1b
 ORDER BY t1b NULLS last
diff --git a/sql/core/src/test/resources/sql-tests/results/subquery/in-subquery/in-limit.sql.out b/sql/core/src/test/resources/sql-tests/results/subquery/in-subquery/in-limit.sql.out
index 1c33544..e24538b 100644
--- a/sql/core/src/test/resources/sql-tests/results/subquery/in-subquery/in-limit.sql.out
+++ b/sql/core/src/test/resources/sql-tests/results/subquery/in-subquery/in-limit.sql.out
@@ -103,7 +103,7 @@ SELECT Count(DISTINCT( t1a )),
 FROM t1
 WHERE t1d IN (SELECT t2d
 FROM t2
- ORDER BY t2c
+ ORDER BY t2c, t2d
 LIMIT 2)
 GROUP BY t1b
 ORDER BY t1b DESC NULLS FIRST
@@ -136,7 +136,7 @@ SELECT Count(DISTINCT( t1a )),
 FROM t1
 WHERE t1d NOT IN (SELECT t2d
 FROM t2
- ORDER BY t2b DESC nulls first
+ ORDER BY t2b DESC nulls first, t2d
 LIMIT 1)
 GROUP BY t1b
 ORDER BY t1b NULLS last
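The second hunk orders the `NOT IN` subquery by `t2b DESC nulls first, t2d` before `LIMIT 1`. The sort key below is a rough sketch of what that composite ordering means. It assumes a numeric `t2b` and uses hypothetical sample rows rather than the real test table. The key places NULLs first, then larger `t2b` values, and finally breaks any remaining ties on `t2d` in ascending order. Without that last component, two rows with a NULL `t2b` would tie, and either one could be the row kept by `LIMIT 1`.

```python
from typing import List, Optional, Tuple

Row = Tuple[Optional[int], str]  # hypothetical (t2b, t2d) pair; t2b assumed numeric


def desc_nulls_first_then_t2d(row: Row):
    """Sort key for ORDER BY t2b DESC NULLS FIRST, t2d (ascending)."""
    t2b, t2d = row
    if t2b is None:
        return (0, 0, t2d)  # NULL t2b sorts before every non-NULL t2b
    return (1, -t2b, t2d)   # then larger t2b first; t2d breaks remaining ties


def not_in_list(rows: List[Row], limit: int = 1) -> List[str]:
    # The t2d value(s) the subquery yields after ORDER BY ... LIMIT.
    return [t2d for _, t2d in sorted(rows, key=desc_nulls_first_then_t2d)[:limit]]


rows_a = [(None, "y"), (None, "x"), (5, "z")]
rows_b = list(reversed(rows_a))  # same rows, different arrival order
print(not_in_list(rows_a), not_in_list(rows_b))  # ['x'] ['x']
```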

[spark] branch master updated (45fe6b6 -> f834156)

This is an automated email from the ASF dual-hosted git repository.

gurwls223 pushed a change to branch master
in repository https://gitbox.apache.org/repos/asf/spark.git.

from 45fe6b6 [MINOR][DOCS] Pyspark getActiveSession docstring
add f834156 [MINOR][TEST][SQL] Make in-limit.sql more robust

No new revisions were added by this update.

Summary of changes:
.../test/resources/sql-tests/inputs/subquery/in-subquery/in-limit.sql | 4 ++--
.../resources/sql-tests/results/subquery/in-subquery/in-limit.sql.out | 4 ++--
2 files changed, 4 insertions(+), 4 deletions(-)

[spark] branch branch-3.0 updated: [MINOR][DOCS] Pyspark getActiveSession docstring

This is an automated email from the ASF dual-hosted git repository.

gurwls223 pushed a commit to branch branch-3.0

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.0 by this push:

new 5361f76 [MINOR][DOCS] Pyspark getActiveSession docstring

5361f76 is described below

commit 5361f76d84de4986aa65a687bece94f4220edd94

Author: animenon

AuthorDate: Thu Jul 2 21:02:00 2020 +0900

[MINOR][DOCS] Pyspark getActiveSession docstring

### What changes were proposed in this pull request?

Minor fix to the documentation of `getActiveSession`.

The sample code snippet was not rendered correctly, so a blank line was added to fix this.

The return value is now documented as well.

### Why are the changes needed?

The sample code was being rendered as part of the description in the docs.

[Current Doc Link](http://spark.apache.org/docs/latest/api/python/pyspark.sql.html?highlight=getactivesession#pyspark.sql.SparkSession.getActiveSession)

### Does this PR introduce _any_ user-facing change?

Yes. The documentation of `getActiveSession` is fixed, and a description of the return value is added.

### How was this patch tested?

Adding a blank line between the description and the code appears to fix the issue.

Closes #28978 from animenon/docs_minor.

Authored-by: animenon

Signed-off-by: HyukjinKwon

(cherry picked from commit 45fe6b62a73540ff010317fc7518b007206707d6)

Signed-off-by: HyukjinKwon

---

python/pyspark/sql/session.py | 5 ++++-

1 file changed, 4 insertions(+), 1 deletion(-)

diff --git a/python/pyspark/sql/session.py b/python/pyspark/sql/session.py

index 233f492..e9486a3 100644

--- a/python/pyspark/sql/session.py

+++ b/python/pyspark/sql/session.py

@@ -265,7 +265,10 @@ class SparkSession(SparkConversionMixin):

@since(3.0)

def getActiveSession(cls):

"""

-Returns the active SparkSession for the current thread, returned by the builder.

+Returns the active SparkSession for the current thread, returned by the builder

+

+:return: :class:`SparkSession` if an active session exists for the current thread

+

>>> s = SparkSession.getActiveSession()

>>> l = [('Alice', 1)]

>>> rdd = s.sparkContext.parallelize(l)
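The patched docstring describes a per-thread contract: `getActiveSession` returns the session that is active for the calling thread, if there is one. The sketch below illustrates that contract with a hypothetical `Session` class built on `threading.local`. It is not PySpark's actual implementation. Its docstring follows the layout the patch introduces: a one-line description, a blank line, a `:return:` field, another blank line, and only then the `>>>` examples. In reStructuredText, a doctest block must be separated from the preceding paragraph by a blank line; otherwise Sphinx folds it into the description.

```python
import threading
from typing import Optional


class Session:
    """Hypothetical stand-in for SparkSession (illustration only, not PySpark)."""

    # A threading.local instance keeps a separate `session` attribute per thread.
    _active = threading.local()

    def activate(self) -> "Session":
        # Mark this session as the active one for the calling thread only.
        Session._active.session = self
        return self

    @classmethod
    def get_active_session(cls) -> Optional["Session"]:
        """
        Returns the active Session for the current thread

        :return: :class:`Session` if an active session exists for the current thread

        >>> s = Session().activate()
        >>> Session.get_active_session() is s
        True
        """
        # Threads that never called activate() have no attribute, hence None.
        return getattr(cls._active, "session", None)


def active_in_new_thread() -> Optional[Session]:
    # Look up the active session from a freshly started thread.
    seen = []
    worker = threading.Thread(target=lambda: seen.append(Session.get_active_session()))
    worker.start()
    worker.join()
    return seen[0]


main_session = Session().activate()
print(Session.get_active_session() is main_session)  # True
print(active_in_new_thread())                        # None
```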

[spark] branch master updated (7fda184 -> 45fe6b6)

This is an automated email from the ASF dual-hosted git repository.

gurwls223 pushed a change to branch master
in repository https://gitbox.apache.org/repos/asf/spark.git.

from 7fda184 [SPARK-32121][SHUFFLE] Support Windows OS in ExecutorDiskUtils
add 45fe6b6 [MINOR][DOCS] Pyspark getActiveSession docstring

No new revisions were added by this update.

Summary of changes:
python/pyspark/sql/session.py | 5 ++++-
1 file changed, 4 insertions(+), 1 deletion(-)

[spark] branch branch-3.0 updated: [MINOR][DOCS] Pyspark getActiveSession docstring