(spark) branch master updated (ee2a87b4642c -> 8fa794b13195)

This is an automated email from the ASF dual-hosted git repository. sarutak pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git from ee2a87b4642c [SPARK-40876][SQL][TESTS][FOLLOW-UP] Remove invalid decimal test case when ANSI mode is on add 8fa794b13195 [SPARK-46627][SS][UI] Fix timeline tooltip content on streaming ui No new revisions were added by this update. Summary of changes: core/src/main/resources/org/apache/spark/ui/static/streaming-page.js | 2 +- 1 file changed, 1 insertion(+), 1 deletion(-) - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated: [SPARK-44490][WEBUI] Remove unused `TaskPagedTable` in StagePage

This is an automated email from the ASF dual-hosted git repository.

sarutak pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 546e39c5dab [SPARK-44490][WEBUI] Remove unused `TaskPagedTable` in

StagePage

546e39c5dab is described below

commit 546e39c5dabc243ab81b6238dc893d9993e0

Author: sychen

AuthorDate: Tue Aug 1 15:37:27 2023 +0900

[SPARK-44490][WEBUI] Remove unused `TaskPagedTable` in StagePage

### What changes were proposed in this pull request?

Remove `TaskPagedTable`

### Why are the changes needed?

In [SPARK-21809](https://issues.apache.org/jira/browse/SPARK-21809), we

introduced `stagespage-template.html` to show the running status of Stage.

`TaskPagedTable` is no longer effective, but there are still many PRs

updating related codes.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

local test

Closes #42085 from cxzl25/SPARK-44490.

Authored-by: sychen

Signed-off-by: Kousuke Saruta

---

.../scala/org/apache/spark/ui/jobs/StagePage.scala | 301 +

.../scala/org/apache/spark/ui/StagePageSuite.scala | 12 +-

2 files changed, 13 insertions(+), 300 deletions(-)

diff --git a/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

b/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

index 02aece6e50a..d50ccdadff5 100644

--- a/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

+++ b/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

@@ -17,17 +17,12 @@

package org.apache.spark.ui.jobs

-import java.net.URLEncoder

-import java.nio.charset.StandardCharsets.UTF_8

import java.util.Date

-import java.util.concurrent.TimeUnit

import javax.servlet.http.HttpServletRequest

-import scala.collection.mutable.{HashMap, HashSet}

+import scala.collection.mutable.HashSet

import scala.xml.{Node, Unparsed}

-import org.apache.commons.text.StringEscapeUtils

-

import org.apache.spark.internal.config.UI._

import org.apache.spark.scheduler.TaskLocality

import org.apache.spark.status._

@@ -209,32 +204,20 @@ private[ui] class StagePage(parent: StagesTab, store:

AppStatusStore) extends We

val dagViz = UIUtils.showDagVizForStage(stageId, stageGraph)

val currentTime = System.currentTimeMillis()

-val taskTable = try {

- val _taskTable = new TaskPagedTable(

-stageData,

-UIUtils.prependBaseUri(request, parent.basePath) +

- s"/stages/stage/?id=${stageId}&attempt=${stageAttemptId}",

-pageSize = taskPageSize,

-sortColumn = taskSortColumn,

-desc = taskSortDesc,

-store = parent.store

- )

- _taskTable

-} catch {

- case e @ (_ : IllegalArgumentException | _ : IndexOutOfBoundsException)

=>

-null

-}

val content =

summary ++

dagViz ++ ++

makeTimeline(

// Only show the tasks in the table

-Option(taskTable).map({ taskPagedTable =>

+() => {

val from = (eventTimelineTaskPage - 1) * eventTimelineTaskPageSize

- val to = taskPagedTable.dataSource.dataSize.min(

-eventTimelineTaskPage * eventTimelineTaskPageSize)

- taskPagedTable.dataSource.sliceData(from, to)}).getOrElse(Nil),

currentTime,

+ val dataSize = store.taskCount(stageData.stageId,

stageData.attemptId).toInt

+ val to = dataSize.min(eventTimelineTaskPage *

eventTimelineTaskPageSize)

+ val sliceData = store.taskList(stageData.stageId,

stageData.attemptId, from, to - from,

+indexName(taskSortColumn), !taskSortDesc)

+ sliceData

+}, currentTime,

eventTimelineTaskPage, eventTimelineTaskPageSize,

eventTimelineTotalPages, stageId,

stageAttemptId, totalTasks) ++

@@ -246,8 +229,8 @@ private[ui] class StagePage(parent: StagesTab, store:

AppStatusStore) extends We

}

- def makeTimeline(

- tasks: Seq[TaskData],

+ private def makeTimeline(

+ tasksFunc: () => Seq[TaskData],

currentTime: Long,

page: Int,

pageSize: Int,

@@ -258,6 +241,8 @@ private[ui] class StagePage(parent: StagesTab, store:

AppStatusStore) extends We

if (!TIMELINE_ENABLED) return Seq.empty[Node]

+val tasks = tasksFunc()

+

val executorsSet = new HashSet[(String, String)]

var minLaunchTime = Long.MaxValue

var maxFinishTime = Long.MinValue

@@ -453,268 +438,6 @@ private[ui] class StagePage(parent: StagesTab, store:

AppStatusStore) extends We

}

-private[ui] class TaskDataSource(

-stage: StageData,

-pageSize: Int,

-sortColumn: String,

-desc: Boolean,

-store: AppStatusStore) extends PagedDataSource[TaskData](pageSize) {

- import ApiHelper._

-

- // Keep an internal cache of executor log maps so that long task lists

ren

[spark] branch master updated: [MINOR][UI] Simplify columnDefs in stagepage.js

This is an automated email from the ASF dual-hosted git repository.

sarutak pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 46440a4a542 [MINOR][UI] Simplify columnDefs in stagepage.js

46440a4a542 is described below

commit 46440a4a542148bc05b8c0f80d1860e6380efdb6

Author: Kent Yao

AuthorDate: Sat Jul 22 17:12:07 2023 +0900

[MINOR][UI] Simplify columnDefs in stagepage.js

### What changes were proposed in this pull request?

Simplify `columnDefs` in stagepage.js

### Why are the changes needed?

Reduce hardcode in stagepage.js and potential inconsistency for hidden/show

in future changes.

### Does this PR introduce _any_ user-facing change?

no

### How was this patch tested?

Locally verified.

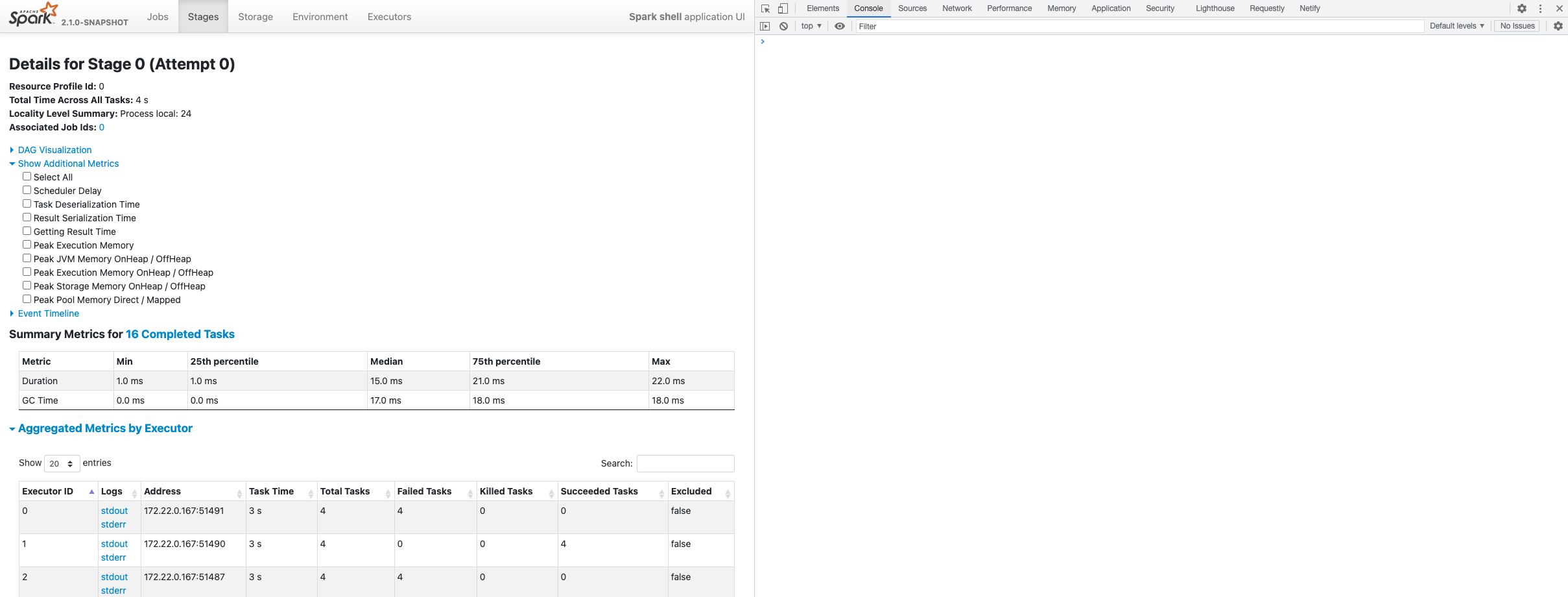

https://github.com/apache/spark/assets/8326978/3b3595a4-7825-47d5-8c28-30ec916321e6";>

Closes #42101 from yaooqinn/m.

Authored-by: Kent Yao

Signed-off-by: Kousuke Saruta

---

.../org/apache/spark/ui/static/stagepage.js| 35 ++

1 file changed, 9 insertions(+), 26 deletions(-)

diff --git a/core/src/main/resources/org/apache/spark/ui/static/stagepage.js

b/core/src/main/resources/org/apache/spark/ui/static/stagepage.js

index 50bf959d3aa..a8792593bf2 100644

--- a/core/src/main/resources/org/apache/spark/ui/static/stagepage.js

+++ b/core/src/main/resources/org/apache/spark/ui/static/stagepage.js

@@ -235,11 +235,7 @@ function

createDataTableForTaskSummaryMetricsTable(taskSummaryMetricsTable) {

}

],

"columnDefs": [

-{ "type": "duration", "targets": 1 },

-{ "type": "duration", "targets": 2 },

-{ "type": "duration", "targets": 3 },

-{ "type": "duration", "targets": 4 },

-{ "type": "duration", "targets": 5 }

+{ "type": "duration", "targets": [1, 2, 3, 4, 5] }

],

"paging": false,

"info": false,

@@ -592,22 +588,16 @@ $(document).ready(function () {

// The targets: $id represents column id which comes from

stagespage-template.html

// #summary-executor-table.If the relative position of the

columns in the table

// #summary-executor-table has changed,please be careful to

adjust the column index here

-// Input Size / Records

-{"type": "size", "targets": 9},

-// Output Size / Records

-{"type": "size", "targets": 10},

-// Shuffle Read Size / Records

-{"type": "size", "targets": 11},

-// Shuffle Write Size / Records

-{"type": "size", "targets": 12},

+// Input Size / Records - 9

+// Output Size / Records - 10

+// Shuffle Read Size / Records - 11

+// Shuffle Write Size / Records - 12

+{"type": "size", "targets": [9, 10, 11, 12]},

// Peak JVM Memory OnHeap / OffHeap

-{"visible": false, "targets": 15},

// Peak Execution Memory OnHeap / OffHeap

-{"visible": false, "targets": 16},

// Peak Storage Memory OnHeap / OffHeap

-{"visible": false, "targets": 17},

// Peak Pool Memory Direct / Mapped

-{"visible": false, "targets": 18}

+{"visible": false, "targets": executorOptionalColumns},

],

"deferRender": true,

"order": [[0, "asc"]],

@@ -1079,15 +1069,8 @@ $(document).ready(function () {

}

],

"columnDefs": [

-{ "visible": false, "targets": 11 },

-{ "visible": false, "targets": 12 },

-{ "visible": false, "targets": 13 },

-{ "visible": false, "targets": 14 },

-{ "visible": false, "targets": 15 },

-{ "visible": false, "targets": 16 },

-{ "visible": false, "targets": 17 },

-{ "visible": false, "targets": 18 },

-{ "visible": false, "targets": 21 }

+{ "visible": false, "targets": optionalColumns },

+{ "visible": false, "targets": 18 }, // accumulators

],

"deferRender": true

};

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated: [SPARK-44279][BUILD] Upgrade `optionator` to ^0.9.3

This is an automated email from the ASF dual-hosted git repository.

sarutak pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new d35fda69e49 [SPARK-44279][BUILD] Upgrade `optionator` to ^0.9.3

d35fda69e49 is described below

commit d35fda69e49b06cda316ecd664acb22cb8c12266

Author: Bjørn Jørgensen

AuthorDate: Fri Jul 14 03:26:56 2023 +0900

[SPARK-44279][BUILD] Upgrade `optionator` to ^0.9.3

### What changes were proposed in this pull request?

This PR proposes a change in the package.json file to update the resolution

for the `optionator` package to ^0.9.3.

I've added a resolutions field to package.json and specified the

`optionator` package version as ^0.9.3.

This will ensure that our project uses `optionator` version 0.9.3 or the

latest minor or patch version (due to the caret ^), regardless of any other

version that may be specified in the dependencies or nested dependencies of our

project.

### Why are the changes needed?

[CVE-2023-26115](https://nvd.nist.gov/vuln/detail/CVE-2023-26115)

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass GA

Closes #41955 from bjornjorgensen/word-wrap.

Authored-by: Bjørn Jørgensen

Signed-off-by: Kousuke Saruta

---

dev/package-lock.json | 774 ++

dev/package.json | 3 +

2 files changed, 350 insertions(+), 427 deletions(-)

diff --git a/dev/package-lock.json b/dev/package-lock.json

index 104a3fb7854..f676b9cec07 100644

--- a/dev/package-lock.json

+++ b/dev/package-lock.json

@@ -10,6 +10,15 @@

"minimatch": "^3.1.2"

}

},

+"node_modules/@aashutoshrathi/word-wrap": {

+ "version": "1.2.6",

+ "resolved":

"https://registry.npmjs.org/@aashutoshrathi/word-wrap/-/word-wrap-1.2.6.tgz";,

+ "integrity":

"sha512-1Yjs2SvM8TflER/OD3cOjhWWOZb58A2t7wpE2S9XfBYTiIl+XFhQG2bjy4Pu1I+EAlCNUzRDYDdFwFYUKvXcIA==",

+ "dev": true,

+ "engines": {

+"node": ">=0.10.0"

+ }

+},

"node_modules/@babel/code-frame": {

"version": "7.12.11",

"resolved":

"https://registry.npmjs.org/@babel/code-frame/-/code-frame-7.12.11.tgz";,

@@ -20,20 +29,38 @@

}

},

"node_modules/@babel/helper-validator-identifier": {

- "version": "7.14.0",

- "resolved":

"https://registry.npmjs.org/@babel/helper-validator-identifier/-/helper-validator-identifier-7.14.0.tgz";,

- "integrity":

"sha512-V3ts7zMSu5lfiwWDVWzRDGIN+lnCEUdaXgtVHJgLb1rGaA6jMrtB9EmE7L18foXJIE8Un/A/h6NJfGQp/e1J4A==",

- "dev": true

+ "version": "7.22.5",

+ "resolved":

"https://registry.npmjs.org/@babel/helper-validator-identifier/-/helper-validator-identifier-7.22.5.tgz";,

+ "integrity":

"sha512-aJXu+6lErq8ltp+JhkJUfk1MTGyuA4v7f3pA+BJ5HLfNC6nAQ0Cpi9uOquUj8Hehg0aUiHzWQbOVJGao6ztBAQ==",

+ "dev": true,

+ "engines": {

+"node": ">=6.9.0"

+ }

},

"node_modules/@babel/highlight": {

- "version": "7.14.0",

- "resolved":

"https://registry.npmjs.org/@babel/highlight/-/highlight-7.14.0.tgz";,

- "integrity":

"sha512-YSCOwxvTYEIMSGaBQb5kDDsCopDdiUGsqpatp3fOlI4+2HQSkTmEVWnVuySdAC5EWCqSWWTv0ib63RjR7dTBdg==",

+ "version": "7.22.5",

+ "resolved":

"https://registry.npmjs.org/@babel/highlight/-/highlight-7.22.5.tgz";,

+ "integrity":

"sha512-BSKlD1hgnedS5XRnGOljZawtag7H1yPfQp0tdNJCHoH6AZ+Pcm9VvkrK59/Yy593Ypg0zMxH2BxD1VPYUQ7UIw==",

"dev": true,

"dependencies": {

-"@babel/helper-validator-identifier": "^7.14.0",

+"@babel/helper-validator-identifier": "^7.22.5",

"chalk": "^2.0.0",

"js-tokens": "^4.0.0"

+ },

+ "engines": {

+"node": ">=6.9.0"

+ }

+},

+"node_modules/@babel/highlight/node_modules/ansi-styles": {

+ "version": "3.2.1",

+ "resolved":

"https://registry.npmjs.org/ansi-styles/-/ansi-styles-3.2.1.tgz";,

+ "integrity":

"sha512-VT0ZI6kZRdTh8YyJw3SMbYm/u+NqfsAxEpWO0Pf9sq8/e94WxxOpPKx9FR1FlyCtOVDNOQ+8ntlqFxiRc+r5qA==",

+ "dev": true,

+ "dependencies": {

+

[spark] branch master updated: [SPARK-41634][BUILD] Upgrade `minimatch` to 3.1.2

This is an automated email from the ASF dual-hosted git repository.

sarutak pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 4539260f4ac [SPARK-41634][BUILD] Upgrade `minimatch` to 3.1.2

4539260f4ac is described below

commit 4539260f4ac346f22ce1a47ca9e94e3181803490

Author: Bjørn

AuthorDate: Wed Dec 21 13:49:45 2022 +0900

[SPARK-41634][BUILD] Upgrade `minimatch` to 3.1.2

### What changes were proposed in this pull request?

Upgrade `minimatch` to 3.1.2

$ npm -v

9.1.2

$ npm install

added 118 packages, and audited 119 packages in 2s

15 packages are looking for funding

run `npm fund` for details

found 0 vulnerabilities

### Why are the changes needed?

[CVE-2022-3517](https://nvd.nist.gov/vuln/detail/CVE-2022-3517)

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass GA

Closes #39143 from bjornjorgensen/upgrade-minimatch.

Authored-by: Bjørn

Signed-off-by: Kousuke Saruta

---

dev/package-lock.json | 15 ---

dev/package.json | 3 ++-

2 files changed, 10 insertions(+), 8 deletions(-)

diff --git a/dev/package-lock.json b/dev/package-lock.json

index c2a61b389ac..104a3fb7854 100644

--- a/dev/package-lock.json

+++ b/dev/package-lock.json

@@ -6,7 +6,8 @@

"": {

"devDependencies": {

"ansi-regex": "^5.0.1",

-"eslint": "^7.25.0"

+"eslint": "^7.25.0",

+"minimatch": "^3.1.2"

}

},

"node_modules/@babel/code-frame": {

@@ -853,9 +854,9 @@

}

},

"node_modules/minimatch": {

- "version": "3.0.4",

- "resolved": "https://registry.npmjs.org/minimatch/-/minimatch-3.0.4.tgz";,

- "integrity":

"sha512-yJHVQEhyqPLUTgt9B83PXu6W3rx4MvvHvSUvToogpwoGDOUQ+yDrR0HRot+yOCdCO7u4hX3pWft6kWBBcqh0UA==",

+ "version": "3.1.2",

+ "resolved": "https://registry.npmjs.org/minimatch/-/minimatch-3.1.2.tgz";,

+ "integrity":

"sha512-J7p63hRiAjw1NDEww1W7i37+ByIrOWO5XQQAzZ3VOcL0PNybwpfmV/N05zFAzwQ9USyEcX6t3UO+K5aqBQOIHw==",

"dev": true,

"dependencies": {

"brace-expansion": "^1.1.7"

@@ -1931,9 +1932,9 @@

}

},

"minimatch": {

- "version": "3.0.4",

- "resolved": "https://registry.npmjs.org/minimatch/-/minimatch-3.0.4.tgz";,

- "integrity":

"sha512-yJHVQEhyqPLUTgt9B83PXu6W3rx4MvvHvSUvToogpwoGDOUQ+yDrR0HRot+yOCdCO7u4hX3pWft6kWBBcqh0UA==",

+ "version": "3.1.2",

+ "resolved": "https://registry.npmjs.org/minimatch/-/minimatch-3.1.2.tgz";,

+ "integrity":

"sha512-J7p63hRiAjw1NDEww1W7i37+ByIrOWO5XQQAzZ3VOcL0PNybwpfmV/N05zFAzwQ9USyEcX6t3UO+K5aqBQOIHw==",

"dev": true,

"requires": {

"brace-expansion": "^1.1.7"

diff --git a/dev/package.json b/dev/package.json

index f975bdde831..4e4a4bf1bca 100644

--- a/dev/package.json

+++ b/dev/package.json

@@ -1,6 +1,7 @@

{

"devDependencies": {

"eslint": "^7.25.0",

-"ansi-regex": "^5.0.1"

+"ansi-regex": "^5.0.1",

+"minimatch": "^3.1.2"

}

}

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated: [SPARK-41587][BUILD] Upgrade `org.scalatestplus:selenium-4-4` to `org.scalatestplus:selenium-4-7`

This is an automated email from the ASF dual-hosted git repository.

sarutak pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new ee2e582ff19 [SPARK-41587][BUILD] Upgrade

`org.scalatestplus:selenium-4-4` to `org.scalatestplus:selenium-4-7`

ee2e582ff19 is described below

commit ee2e582ff195fa11047545f43d1cb0ebd20a7091

Author: yangjie01

AuthorDate: Wed Dec 21 13:40:40 2022 +0900

[SPARK-41587][BUILD] Upgrade `org.scalatestplus:selenium-4-4` to

`org.scalatestplus:selenium-4-7`

### What changes were proposed in this pull request?

This pr aims upgrade `org.scalatestplus:selenium-4-4` to

`org.scalatestplus:selenium-4-7`:

- `org.scalatestplus:selenium-4-4` -> `org.scalatestplus:selenium-4-7`

- `selenium-java`: 4.4.0 -> 4.7.1

- `htmlunit-driver`: 3.64.0 -> 4.7.0

- `htmlunit` -> 2.64.0 -> 2.67.0

And all upgraded dependencies versions are matched.

### Why are the changes needed?

The release notes as follows:

-

https://github.com/scalatest/scalatestplus-selenium/releases/tag/release-3.2.14.0-for-selenium-4.7

### Does this PR introduce _any_ user-facing change?

No, just for test

### How was this patch tested?

- Pass Github Actions

- Manual test:

- ChromeUISeleniumSuite

```

build/sbt -Dguava.version=31.1-jre

-Dspark.test.webdriver.chrome.driver=/path/to/chromedriver

-Dtest.default.exclude.tags="" -Phive -Phive-thriftserver "core/testOnly

org.apache.spark.ui.ChromeUISeleniumSuite"

```

```

[info] ChromeUISeleniumSuite:

Starting ChromeDriver 108.0.5359.71

(1e0e3868ee06e91ad636a874420e3ca3ae3756ac-refs/branch-heads/5359{#1016}) on

port 13600

Only local connections are allowed.

Please see https://chromedriver.chromium.org/security-considerations for

suggestions on keeping ChromeDriver safe.

ChromeDriver was started successfully.

[info] - SPARK-31534: text for tooltip should be escaped (2 seconds, 702

milliseconds)

[info] - SPARK-31882: Link URL for Stage DAGs should not depend on paged

table. (824 milliseconds)

[info] - SPARK-31886: Color barrier execution mode RDD correctly (313

milliseconds)

[info] - Search text for paged tables should not be saved (1 second, 745

milliseconds)

[info] Run completed in 10 seconds, 266 milliseconds.

[info] Total number of tests run: 4

[info] Suites: completed 1, aborted 0

[info] Tests: succeeded 4, failed 0, canceled 0, ignored 0, pending 0

[info] All tests passed.

[success] Total time: 23 s, completed 2022-12-19 19:41:26

```

- RocksDBBackendChromeUIHistoryServerSuite

```

build/sbt -Dguava.version=31.1-jre

-Dspark.test.webdriver.chrome.driver=/path/to/chromedriver

-Dtest.default.exclude.tags="" -Phive -Phive-thriftserver "core/testOnly

org.apache.spark.deploy.history.RocksDBBackendChromeUIHistoryServerSuite"

```

```

[info] RocksDBBackendChromeUIHistoryServerSuite:

Starting ChromeDriver 108.0.5359.71

(1e0e3868ee06e91ad636a874420e3ca3ae3756ac-refs/branch-heads/5359{#1016}) on

port 2201

Only local connections are allowed.

Please see https://chromedriver.chromium.org/security-considerations for

suggestions on keeping ChromeDriver safe.

ChromeDriver was started successfully.

[info] - ajax rendered relative links are prefixed with uiRoot

(spark.ui.proxyBase) (2 seconds, 362 milliseconds)

[info] Run completed in 10 seconds, 254 milliseconds.

[info] Total number of tests run: 1

[info] Suites: completed 1, aborted 0

[info] Tests: succeeded 1, failed 0, canceled 0, ignored 0, pending 0

[info] All tests passed.

[success] Total time: 24 s, completed 2022-12-19 19:40:42

```

Closes #39129 from LuciferYang/selenium-47.

Authored-by: yangjie01

Signed-off-by: Kousuke Saruta

---

pom.xml | 10 +-

1 file changed, 5 insertions(+), 5 deletions(-)

diff --git a/pom.xml b/pom.xml

index 5ae26570e2d..f09207c660f 100644

--- a/pom.xml

+++ b/pom.xml

@@ -205,9 +205,9 @@

4.9.3

1.1

-4.4.0

-3.64.0

-2.64.0

+4.7.1

+4.7.0

+2.67.0

1.8

1.1.0

1.5.0

@@ -416,7 +416,7 @@

org.scalatestplus

- selenium-4-4_${scala.binary.version}

+ selenium-4-7_${scala.binary.version}

test

@@ -1144,7 +1144,7 @@

org.scalatestplus

-selenium-4-4_${scala.binary.version}

+selenium-4-7_${scala.binary.version}

3.2.14.0

test

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated (12e48527846 -> 40590e6d911)

This is an automated email from the ASF dual-hosted git repository. sarutak pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git from 12e48527846 [SPARK-40423][K8S][TESTS] Add explicit YuniKorn queue submission test coverage add 40590e6d911 [SPARK-40397][BUILD] Upgrade `org.scalatestplus:selenium` to 3.12.13 No new revisions were added by this update. Summary of changes: dev/deps/spark-deps-hadoop-2-hive-2.3 | 2 +- dev/deps/spark-deps-hadoop-3-hive-2.3 | 2 +- pom.xml | 18 +++--- 3 files changed, 13 insertions(+), 9 deletions(-) - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated: [MINOR][INFRA] Add ANTLR generated files to .gitignore

This is an automated email from the ASF dual-hosted git repository. sarutak pushed a commit to branch master in repository https://gitbox.apache.org/repos/asf/spark.git The following commit(s) were added to refs/heads/master by this push: new 46ccc22 [MINOR][INFRA] Add ANTLR generated files to .gitignore 46ccc22 is described below commit 46ccc22ee40c780f6ae4a9af4562fb1ad10ccd9f Author: Yuto Akutsu AuthorDate: Thu Mar 17 18:12:13 2022 +0900 [MINOR][INFRA] Add ANTLR generated files to .gitignore ### What changes were proposed in this pull request? Add git ignore entries for files created by ANTLR. ### Why are the changes needed? To avoid developers from accidentally adding those files when working on parser/lexer. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? To make sure those files are ignored by `git status` when they exist. Closes #35838 from yutoacts/minor_gitignore. Authored-by: Yuto Akutsu Signed-off-by: Kousuke Saruta --- .gitignore | 5 + 1 file changed, 5 insertions(+) diff --git a/.gitignore b/.gitignore index b758781..0e2f59f 100644 --- a/.gitignore +++ b/.gitignore @@ -117,3 +117,8 @@ spark-warehouse/ # For Node.js node_modules + +# For Antlr +sql/catalyst/gen/ +sql/catalyst/src/main/antlr4/org/apache/spark/sql/catalyst/parser/SqlBaseLexer.tokens +sql/catalyst/src/main/antlr4/org/apache/spark/sql/catalyst/parser/gen/ - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch branch-3.2 updated: [SPARK-38303][BUILD] Upgrade `ansi-regex` from 5.0.0 to 5.0.1 in /dev

This is an automated email from the ASF dual-hosted git repository.

sarutak pushed a commit to branch branch-3.2

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.2 by this push:

new 637a69f [SPARK-38303][BUILD] Upgrade `ansi-regex` from 5.0.0 to 5.0.1

in /dev

637a69f is described below

commit 637a69f349d01199db8af7331a22d2b9154cb50e

Author: bjornjorgensen

AuthorDate: Fri Feb 25 11:43:36 2022 +0900

[SPARK-38303][BUILD] Upgrade `ansi-regex` from 5.0.0 to 5.0.1 in /dev

### What changes were proposed in this pull request?

Upgrade ansi-regex from 5.0.0 to 5.0.1 in /dev

### Why are the changes needed?

[CVE-2021-3807](https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2021-3807)

[Releases notes at github](https://github.com/chalk/ansi-regex/releases)

By upgrading ansi-regex from 5.0.0 to 5.0.1 we will resolve this issue.

### Does this PR introduce _any_ user-facing change?

Some users use remote security scanners and this is one of the issues that

comes up. How this can do some damage with spark is highly uncertain. but let's

remove the uncertainty that any user may have.

### How was this patch tested?

All test must pass.

Closes #35628 from bjornjorgensen/ansi-regex-from-5.0.0-to-5.0.1.

Authored-by: bjornjorgensen

Signed-off-by: Kousuke Saruta

(cherry picked from commit 9758d55918dfec236e8ac9f1655a9ff0acd7156e)

Signed-off-by: Kousuke Saruta

---

dev/package-lock.json | 3189 ++---

dev/package.json |3 +-

2 files changed, 2229 insertions(+), 963 deletions(-)

diff --git a/dev/package-lock.json b/dev/package-lock.json

index a57f45b..c2a61b3 100644

--- a/dev/package-lock.json

+++ b/dev/package-lock.json

@@ -1,979 +1,2244 @@

{

-"requires": true,

-"lockfileVersion": 1,

-"dependencies": {

-"@babel/code-frame": {

-"version": "7.12.11",

-"resolved":

"https://registry.npmjs.org/@babel/code-frame/-/code-frame-7.12.11.tgz";,

-"integrity":

"sha512-Zt1yodBx1UcyiePMSkWnU4hPqhwq7hGi2nFL1LeA3EUl+q2LQx16MISgJ0+z7dnmgvP9QtIleuETGOiOH1RcIw==",

-"dev": true,

-"requires": {

-"@babel/highlight": "^7.10.4"

-}

-},

-"@babel/helper-validator-identifier": {

-"version": "7.14.0",

-"resolved":

"https://registry.npmjs.org/@babel/helper-validator-identifier/-/helper-validator-identifier-7.14.0.tgz";,

-"integrity":

"sha512-V3ts7zMSu5lfiwWDVWzRDGIN+lnCEUdaXgtVHJgLb1rGaA6jMrtB9EmE7L18foXJIE8Un/A/h6NJfGQp/e1J4A==",

-"dev": true

-},

-"@babel/highlight": {

-"version": "7.14.0",

-"resolved":

"https://registry.npmjs.org/@babel/highlight/-/highlight-7.14.0.tgz";,

-"integrity":

"sha512-YSCOwxvTYEIMSGaBQb5kDDsCopDdiUGsqpatp3fOlI4+2HQSkTmEVWnVuySdAC5EWCqSWWTv0ib63RjR7dTBdg==",

-"dev": true,

-"requires": {

-"@babel/helper-validator-identifier": "^7.14.0",

-"chalk": "^2.0.0",

-"js-tokens": "^4.0.0"

-},

-"dependencies": {

-"chalk": {

-"version": "2.4.2",

-"resolved":

"https://registry.npmjs.org/chalk/-/chalk-2.4.2.tgz";,

-"integrity":

"sha512-Mti+f9lpJNcwF4tWV8/OrTTtF1gZi+f8FqlyAdouralcFWFQWF2+NgCHShjkCb+IFBLq9buZwE1xckQU4peSuQ==",

-"dev": true,

-"requires": {

-"ansi-styles": "^3.2.1",

-"escape-string-regexp": "^1.0.5",

-"supports-color": "^5.3.0"

-}

-}

-}

-},

-"@eslint/eslintrc": {

-"version": "0.4.0",

-"resolved":

"https://registry.npmjs.org/@eslint/eslintrc/-/eslintrc-0.4.0.tgz";,

-"integrity":

"sha512-2ZPCc+uNbjV5ERJr+aKSPRwZgKd2z11x0EgLvb1PURmUrn9QNRXFqje0Ldq454PfAVyaJYyrDvvIKSFP4NnBog==",

-"dev": true,

-"requires": {

-"ajv": "^6.12.4",

-"debug": "^4.1.1",

-"espree&

[spark] branch master updated: [SPARK-38303][BUILD] Upgrade `ansi-regex` from 5.0.0 to 5.0.1 in /dev

This is an automated email from the ASF dual-hosted git repository.

sarutak pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 9758d55 [SPARK-38303][BUILD] Upgrade `ansi-regex` from 5.0.0 to 5.0.1

in /dev

9758d55 is described below

commit 9758d55918dfec236e8ac9f1655a9ff0acd7156e

Author: bjornjorgensen

AuthorDate: Fri Feb 25 11:43:36 2022 +0900

[SPARK-38303][BUILD] Upgrade `ansi-regex` from 5.0.0 to 5.0.1 in /dev

### What changes were proposed in this pull request?

Upgrade ansi-regex from 5.0.0 to 5.0.1 in /dev

### Why are the changes needed?

[CVE-2021-3807](https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2021-3807)

[Releases notes at github](https://github.com/chalk/ansi-regex/releases)

By upgrading ansi-regex from 5.0.0 to 5.0.1 we will resolve this issue.

### Does this PR introduce _any_ user-facing change?

Some users use remote security scanners and this is one of the issues that

comes up. How this can do some damage with spark is highly uncertain. but let's

remove the uncertainty that any user may have.

### How was this patch tested?

All test must pass.

Closes #35628 from bjornjorgensen/ansi-regex-from-5.0.0-to-5.0.1.

Authored-by: bjornjorgensen

Signed-off-by: Kousuke Saruta

---

dev/package-lock.json | 3189 ++---

dev/package.json |3 +-

2 files changed, 2229 insertions(+), 963 deletions(-)

diff --git a/dev/package-lock.json b/dev/package-lock.json

index a57f45b..c2a61b3 100644

--- a/dev/package-lock.json

+++ b/dev/package-lock.json

@@ -1,979 +1,2244 @@

{

-"requires": true,

-"lockfileVersion": 1,

-"dependencies": {

-"@babel/code-frame": {

-"version": "7.12.11",

-"resolved":

"https://registry.npmjs.org/@babel/code-frame/-/code-frame-7.12.11.tgz";,

-"integrity":

"sha512-Zt1yodBx1UcyiePMSkWnU4hPqhwq7hGi2nFL1LeA3EUl+q2LQx16MISgJ0+z7dnmgvP9QtIleuETGOiOH1RcIw==",

-"dev": true,

-"requires": {

-"@babel/highlight": "^7.10.4"

-}

-},

-"@babel/helper-validator-identifier": {

-"version": "7.14.0",

-"resolved":

"https://registry.npmjs.org/@babel/helper-validator-identifier/-/helper-validator-identifier-7.14.0.tgz";,

-"integrity":

"sha512-V3ts7zMSu5lfiwWDVWzRDGIN+lnCEUdaXgtVHJgLb1rGaA6jMrtB9EmE7L18foXJIE8Un/A/h6NJfGQp/e1J4A==",

-"dev": true

-},

-"@babel/highlight": {

-"version": "7.14.0",

-"resolved":

"https://registry.npmjs.org/@babel/highlight/-/highlight-7.14.0.tgz";,

-"integrity":

"sha512-YSCOwxvTYEIMSGaBQb5kDDsCopDdiUGsqpatp3fOlI4+2HQSkTmEVWnVuySdAC5EWCqSWWTv0ib63RjR7dTBdg==",

-"dev": true,

-"requires": {

-"@babel/helper-validator-identifier": "^7.14.0",

-"chalk": "^2.0.0",

-"js-tokens": "^4.0.0"

-},

-"dependencies": {

-"chalk": {

-"version": "2.4.2",

-"resolved":

"https://registry.npmjs.org/chalk/-/chalk-2.4.2.tgz";,

-"integrity":

"sha512-Mti+f9lpJNcwF4tWV8/OrTTtF1gZi+f8FqlyAdouralcFWFQWF2+NgCHShjkCb+IFBLq9buZwE1xckQU4peSuQ==",

-"dev": true,

-"requires": {

-"ansi-styles": "^3.2.1",

-"escape-string-regexp": "^1.0.5",

-"supports-color": "^5.3.0"

-}

-}

-}

-},

-"@eslint/eslintrc": {

-"version": "0.4.0",

-"resolved":

"https://registry.npmjs.org/@eslint/eslintrc/-/eslintrc-0.4.0.tgz";,

-"integrity":

"sha512-2ZPCc+uNbjV5ERJr+aKSPRwZgKd2z11x0EgLvb1PURmUrn9QNRXFqje0Ldq454PfAVyaJYyrDvvIKSFP4NnBog==",

-"dev": true,

-"requires": {

-"ajv": "^6.12.4",

-"debug": "^4.1.1",

-"espree": "^7.3.0",

-"

[spark] branch master updated (a103a49 -> 48b56c0)

This is an automated email from the ASF dual-hosted git repository. sarutak pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git. from a103a49 [SPARK-38279][TESTS][3.2] Pin MarkupSafe to 2.0.1 fix linter failure add 48b56c0 [SPARK-38278][PYTHON] Add SparkContext.addArchive in PySpark No new revisions were added by this update. Summary of changes: python/docs/source/reference/pyspark.rst | 1 + python/pyspark/context.py| 44 2 files changed, 45 insertions(+) - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch branch-3.2 updated (3dea6c4 -> 0dde12f)

This is an automated email from the ASF dual-hosted git repository. sarutak pushed a change to branch branch-3.2 in repository https://gitbox.apache.org/repos/asf/spark.git. from 3dea6c4 [SPARK-38211][SQL][DOCS] Add SQL migration guide on restoring loose upcast from string to other types add 0dde12f [SPARK-36808][BUILD][3.2] Upgrade Kafka to 2.8.1 No new revisions were added by this update. Summary of changes: pom.xml | 2 +- 1 file changed, 1 insertion(+), 1 deletion(-) - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch branch-3.2 updated: [SPARK-37934][BUILD][3.2] Upgrade Jetty version to 9.4.44

This is an automated email from the ASF dual-hosted git repository. sarutak pushed a commit to branch branch-3.2 in repository https://gitbox.apache.org/repos/asf/spark.git The following commit(s) were added to refs/heads/branch-3.2 by this push: new adba516 [SPARK-37934][BUILD][3.2] Upgrade Jetty version to 9.4.44 adba516 is described below commit adba5165a56bd4e7a71fcad77c568c0cbc2e7f97 Author: Jack Richard Buggins AuthorDate: Wed Feb 9 02:28:03 2022 +0900 [SPARK-37934][BUILD][3.2] Upgrade Jetty version to 9.4.44 ### What changes were proposed in this pull request? This pull request updates provides a minor update to the Jetty version from `9.4.43.v20210629` to `9.4.44.v20210927` which is required against branch-3.2 to fully resolve https://issues.apache.org/jira/browse/SPARK-37934 ### Why are the changes needed? As discussed in https://github.com/apache/spark/pull/35338, DoS vector is available even within a private or restricted network. The below result is the output of a twistlock scan, which also detects this vulnerability. ``` Source: https://github.com/eclipse/jetty.project/issues/6973 CVE: PRISMA-2021-0182 Sev.: medium Package Name: org.eclipse.jetty_jetty-server Package Ver.: 9.4.43.v20210629 Status: fixed in 9.4.44 Description: org.eclipse.jetty_jetty-server package versions before 9.4.44 are vulnerable to DoS (Denial of Service). Logback-access calls Request.getParameterNames() for request logging. That will force a request body read (if it hasn't been read before) per the servlet. This will now consume resources to read the request body content, which could easily be malicious (in size? in keys? etc), even though the application intentionally didn't read the request body. ``` ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? * Core local ``` $ build/sbt > project core > test ``` * CI Closes #35442 from JackBuggins/branch-3.2. Authored-by: Jack Richard Buggins Signed-off-by: Kousuke Saruta --- pom.xml | 2 +- 1 file changed, 1 insertion(+), 1 deletion(-) diff --git a/pom.xml b/pom.xml index bc3f925..8af3d6a 100644 --- a/pom.xml +++ b/pom.xml @@ -138,7 +138,7 @@ 10.14.2.0 1.12.2 1.6.13 -9.4.43.v20210629 +9.4.44.v20210927 4.0.3 0.10.0 2.5.0 - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated (3d736d9 -> 6115f58)

This is an automated email from the ASF dual-hosted git repository. sarutak pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git. from 3d736d9 [SPARK-37412][PYTHON][ML] Inline typehints for pyspark.ml.stat add 6115f58 [MINOR][SQL] Remove redundant array creation in UnsafeRow No new revisions were added by this update. Summary of changes: .../java/org/apache/spark/sql/catalyst/expressions/UnsafeRow.java | 4 ++-- 1 file changed, 2 insertions(+), 2 deletions(-) - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated: [SPARK-38021][BUILD] Upgrade dropwizard metrics from 4.2.2 to 4.2.7

This is an automated email from the ASF dual-hosted git repository. sarutak pushed a commit to branch master in repository https://gitbox.apache.org/repos/asf/spark.git The following commit(s) were added to refs/heads/master by this push: new a1b061d [SPARK-38021][BUILD] Upgrade dropwizard metrics from 4.2.2 to 4.2.7 a1b061d is described below commit a1b061d7fc5427138bfaa9fe68d2748f8bf3907c Author: yangjie01 AuthorDate: Tue Jan 25 20:57:16 2022 +0900 [SPARK-38021][BUILD] Upgrade dropwizard metrics from 4.2.2 to 4.2.7 ### What changes were proposed in this pull request? This pr upgrade dropwizard metrics from 4.2.2 to 4.2.7. ### Why are the changes needed? There are 5 versions after 4.2.2, the release notes as follows: - https://github.com/dropwizard/metrics/releases/tag/v4.2.3 - https://github.com/dropwizard/metrics/releases/tag/v4.2.4 - https://github.com/dropwizard/metrics/releases/tag/v4.2.5 - https://github.com/dropwizard/metrics/releases/tag/v4.2.6 - https://github.com/dropwizard/metrics/releases/tag/v4.2.7 And after 4.2.5, dropwizard metrics supports [build with JDK 17](https://github.com/dropwizard/metrics/pull/2180). ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Pass GA Closes #35317 from LuciferYang/upgrade-metrics. Authored-by: yangjie01 Signed-off-by: Kousuke Saruta --- dev/deps/spark-deps-hadoop-2-hive-2.3 | 10 +- dev/deps/spark-deps-hadoop-3-hive-2.3 | 10 +- pom.xml | 2 +- 3 files changed, 11 insertions(+), 11 deletions(-) diff --git a/dev/deps/spark-deps-hadoop-2-hive-2.3 b/dev/deps/spark-deps-hadoop-2-hive-2.3 index 5efdca9..8284237 100644 --- a/dev/deps/spark-deps-hadoop-2-hive-2.3 +++ b/dev/deps/spark-deps-hadoop-2-hive-2.3 @@ -195,11 +195,11 @@ logging-interceptor/3.12.12//logging-interceptor-3.12.12.jar lz4-java/1.8.0//lz4-java-1.8.0.jar macro-compat_2.12/1.1.1//macro-compat_2.12-1.1.1.jar mesos/1.4.3/shaded-protobuf/mesos-1.4.3-shaded-protobuf.jar -metrics-core/4.2.2//metrics-core-4.2.2.jar -metrics-graphite/4.2.2//metrics-graphite-4.2.2.jar -metrics-jmx/4.2.2//metrics-jmx-4.2.2.jar -metrics-json/4.2.2//metrics-json-4.2.2.jar -metrics-jvm/4.2.2//metrics-jvm-4.2.2.jar +metrics-core/4.2.7//metrics-core-4.2.7.jar +metrics-graphite/4.2.7//metrics-graphite-4.2.7.jar +metrics-jmx/4.2.7//metrics-jmx-4.2.7.jar +metrics-json/4.2.7//metrics-json-4.2.7.jar +metrics-jvm/4.2.7//metrics-jvm-4.2.7.jar minlog/1.3.0//minlog-1.3.0.jar netty-all/4.1.73.Final//netty-all-4.1.73.Final.jar netty-buffer/4.1.73.Final//netty-buffer-4.1.73.Final.jar diff --git a/dev/deps/spark-deps-hadoop-3-hive-2.3 b/dev/deps/spark-deps-hadoop-3-hive-2.3 index a79a71b..f169277 100644 --- a/dev/deps/spark-deps-hadoop-3-hive-2.3 +++ b/dev/deps/spark-deps-hadoop-3-hive-2.3 @@ -181,11 +181,11 @@ logging-interceptor/3.12.12//logging-interceptor-3.12.12.jar lz4-java/1.8.0//lz4-java-1.8.0.jar macro-compat_2.12/1.1.1//macro-compat_2.12-1.1.1.jar mesos/1.4.3/shaded-protobuf/mesos-1.4.3-shaded-protobuf.jar -metrics-core/4.2.2//metrics-core-4.2.2.jar -metrics-graphite/4.2.2//metrics-graphite-4.2.2.jar -metrics-jmx/4.2.2//metrics-jmx-4.2.2.jar -metrics-json/4.2.2//metrics-json-4.2.2.jar -metrics-jvm/4.2.2//metrics-jvm-4.2.2.jar +metrics-core/4.2.7//metrics-core-4.2.7.jar +metrics-graphite/4.2.7//metrics-graphite-4.2.7.jar +metrics-jmx/4.2.7//metrics-jmx-4.2.7.jar +metrics-json/4.2.7//metrics-json-4.2.7.jar +metrics-jvm/4.2.7//metrics-jvm-4.2.7.jar minlog/1.3.0//minlog-1.3.0.jar netty-all/4.1.73.Final//netty-all-4.1.73.Final.jar netty-buffer/4.1.73.Final//netty-buffer-4.1.73.Final.jar diff --git a/pom.xml b/pom.xml index 5bae4d2..09577f2 100644 --- a/pom.xml +++ b/pom.xml @@ -147,7 +147,7 @@ If you changes codahale.metrics.version, you also need to change the link to metrics.dropwizard.io in docs/monitoring.md. --> -4.2.2 +4.2.7 1.11.0 1.12.0 - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

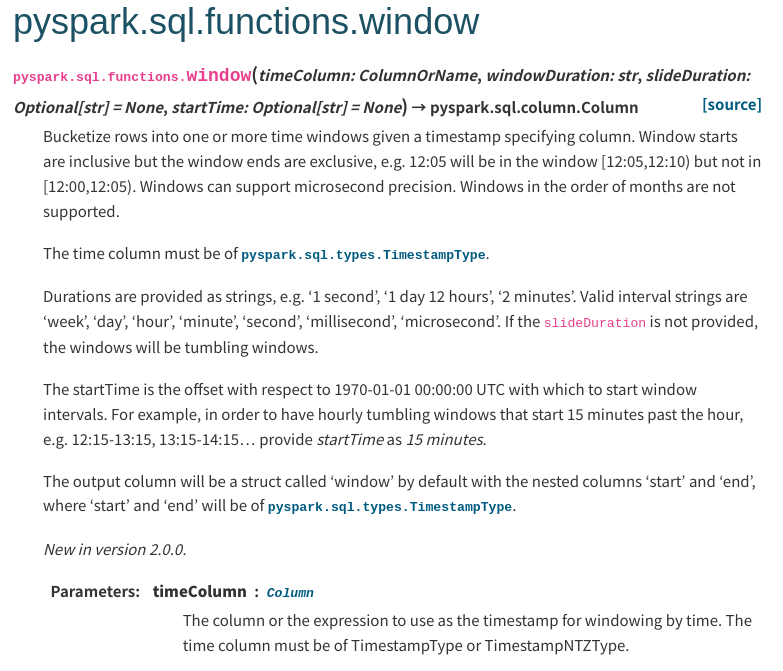

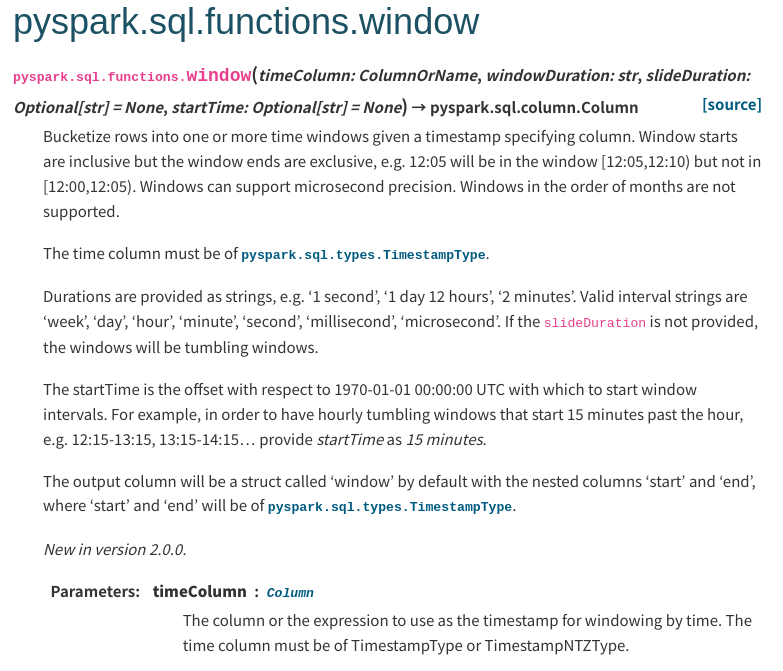

[spark] branch branch-3.2 updated: [SPARK-38017][SQL][DOCS] Fix the API doc for window to say it supports TimestampNTZType too as timeColumn

This is an automated email from the ASF dual-hosted git repository.

sarutak pushed a commit to branch branch-3.2

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.2 by this push:

new 263fe44 [SPARK-38017][SQL][DOCS] Fix the API doc for window to say it

supports TimestampNTZType too as timeColumn

263fe44 is described below

commit 263fe44f8a9738fc8d7dcfbcc1c0c10c942146e3

Author: Kousuke Saruta

AuthorDate: Tue Jan 25 20:44:06 2022 +0900

[SPARK-38017][SQL][DOCS] Fix the API doc for window to say it supports

TimestampNTZType too as timeColumn

### What changes were proposed in this pull request?

This PR fixes the API docs for `window` to say it supports

`TimestampNTZType` too as `timeColumn`.

### Why are the changes needed?

`window` function supports not only `TimestampType` but also

`TimestampNTZType`.

### Does this PR introduce _any_ user-facing change?

Yes, but I don't think this change affects existing users.

### How was this patch tested?

Built the docs with the following commands.

```

bundle install

SKIP_RDOC=1 SKIP_SQLDOC=1 bundle exec jekyll build

```

Then, confirmed the built doc.

Closes #35313 from sarutak/window-timestampntz-doc.

Authored-by: Kousuke Saruta

Signed-off-by: Kousuke Saruta

(cherry picked from commit 76f685d26dc1f0f4d92293cd370e58ee2fa68452)

Signed-off-by: Kousuke Saruta

---

python/pyspark/sql/functions.py | 2 +-

sql/core/src/main/scala/org/apache/spark/sql/functions.scala | 6 +++---

2 files changed, 4 insertions(+), 4 deletions(-)

diff --git a/python/pyspark/sql/functions.py b/python/pyspark/sql/functions.py

index c7bc581..acde817 100644

--- a/python/pyspark/sql/functions.py

+++ b/python/pyspark/sql/functions.py

@@ -2304,7 +2304,7 @@ def window(timeColumn, windowDuration,

slideDuration=None, startTime=None):

--

timeColumn : :class:`~pyspark.sql.Column`

The column or the expression to use as the timestamp for windowing by

time.

-The time column must be of TimestampType.

+The time column must be of TimestampType or TimestampNTZType.

windowDuration : str

A string specifying the width of the window, e.g. `10 minutes`,

`1 second`. Check `org.apache.spark.unsafe.types.CalendarInterval` for

diff --git a/sql/core/src/main/scala/org/apache/spark/sql/functions.scala

b/sql/core/src/main/scala/org/apache/spark/sql/functions.scala

index a4c77b2..f4801ee 100644

--- a/sql/core/src/main/scala/org/apache/spark/sql/functions.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/functions.scala

@@ -3517,7 +3517,7 @@ object functions {

* processing time.

*

* @param timeColumn The column or the expression to use as the timestamp

for windowing by time.

- * The time column must be of TimestampType.

+ * The time column must be of TimestampType or

TimestampNTZType.

* @param windowDuration A string specifying the width of the window, e.g.

`10 minutes`,

* `1 second`. Check

`org.apache.spark.unsafe.types.CalendarInterval` for

* valid duration identifiers. Note that the duration

is a fixed length of

@@ -3573,7 +3573,7 @@ object functions {

* processing time.

*

* @param timeColumn The column or the expression to use as the timestamp

for windowing by time.

- * The time column must be of TimestampType.

+ * The time column must be of TimestampType or

TimestampNTZType.

* @param windowDuration A string specifying the width of the window, e.g.

`10 minutes`,

* `1 second`. Check

`org.apache.spark.unsafe.types.CalendarInterval` for

* valid duration identifiers. Note that the duration

is a fixed length of

@@ -3618,7 +3618,7 @@ object functions {

* processing time.

*

* @param timeColumn The column or the expression to use as the timestamp

for windowing by time.

- * The time column must be of TimestampType.

+ * The time column must be of TimestampType or

TimestampNTZType.

* @param windowDuration A string specifying the width of the window, e.g.

`10 minutes`,

* `1 second`. Check

`org.apache.spark.unsafe.types.CalendarInterval` for

* valid duration identifiers.

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e

[spark] branch master updated: [SPARK-38017][SQL][DOCS] Fix the API doc for window to say it supports TimestampNTZType too as timeColumn

This is an automated email from the ASF dual-hosted git repository.

sarutak pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 76f685d [SPARK-38017][SQL][DOCS] Fix the API doc for window to say it

supports TimestampNTZType too as timeColumn

76f685d is described below

commit 76f685d26dc1f0f4d92293cd370e58ee2fa68452

Author: Kousuke Saruta

AuthorDate: Tue Jan 25 20:44:06 2022 +0900

[SPARK-38017][SQL][DOCS] Fix the API doc for window to say it supports

TimestampNTZType too as timeColumn

### What changes were proposed in this pull request?

This PR fixes the API docs for `window` to say it supports

`TimestampNTZType` too as `timeColumn`.

### Why are the changes needed?

`window` function supports not only `TimestampType` but also

`TimestampNTZType`.

### Does this PR introduce _any_ user-facing change?

Yes, but I don't think this change affects existing users.

### How was this patch tested?

Built the docs with the following commands.

```

bundle install

SKIP_RDOC=1 SKIP_SQLDOC=1 bundle exec jekyll build

```

Then, confirmed the built doc.

Closes #35313 from sarutak/window-timestampntz-doc.

Authored-by: Kousuke Saruta

Signed-off-by: Kousuke Saruta

---

python/pyspark/sql/functions.py | 2 +-

sql/core/src/main/scala/org/apache/spark/sql/functions.scala | 6 +++---

2 files changed, 4 insertions(+), 4 deletions(-)

diff --git a/python/pyspark/sql/functions.py b/python/pyspark/sql/functions.py

index bfee994..2dfaec8 100644

--- a/python/pyspark/sql/functions.py

+++ b/python/pyspark/sql/functions.py

@@ -2551,7 +2551,7 @@ def window(

--

timeColumn : :class:`~pyspark.sql.Column`

The column or the expression to use as the timestamp for windowing by

time.

-The time column must be of TimestampType.

+The time column must be of TimestampType or TimestampNTZType.

windowDuration : str

A string specifying the width of the window, e.g. `10 minutes`,

`1 second`. Check `org.apache.spark.unsafe.types.CalendarInterval` for

diff --git a/sql/core/src/main/scala/org/apache/spark/sql/functions.scala

b/sql/core/src/main/scala/org/apache/spark/sql/functions.scala

index f217dad..0db12a2 100644

--- a/sql/core/src/main/scala/org/apache/spark/sql/functions.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/functions.scala

@@ -3621,7 +3621,7 @@ object functions {

* processing time.

*

* @param timeColumn The column or the expression to use as the timestamp

for windowing by time.

- * The time column must be of TimestampType.

+ * The time column must be of TimestampType or

TimestampNTZType.

* @param windowDuration A string specifying the width of the window, e.g.

`10 minutes`,

* `1 second`. Check

`org.apache.spark.unsafe.types.CalendarInterval` for

* valid duration identifiers. Note that the duration

is a fixed length of

@@ -3677,7 +3677,7 @@ object functions {

* processing time.

*

* @param timeColumn The column or the expression to use as the timestamp

for windowing by time.

- * The time column must be of TimestampType.

+ * The time column must be of TimestampType or

TimestampNTZType.

* @param windowDuration A string specifying the width of the window, e.g.

`10 minutes`,

* `1 second`. Check

`org.apache.spark.unsafe.types.CalendarInterval` for

* valid duration identifiers. Note that the duration

is a fixed length of

@@ -3722,7 +3722,7 @@ object functions {

* processing time.

*

* @param timeColumn The column or the expression to use as the timestamp

for windowing by time.

- * The time column must be of TimestampType.

+ * The time column must be of TimestampType or

TimestampNTZType.

* @param windowDuration A string specifying the width of the window, e.g.

`10 minutes`,

* `1 second`. Check

`org.apache.spark.unsafe.types.CalendarInterval` for

* valid duration identifiers.

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

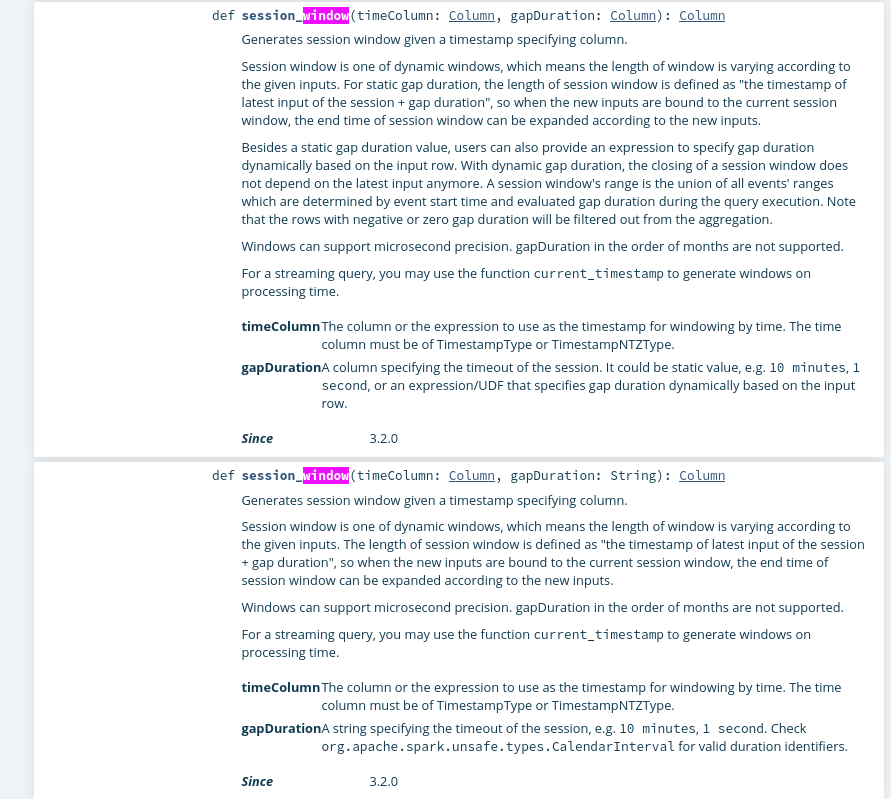

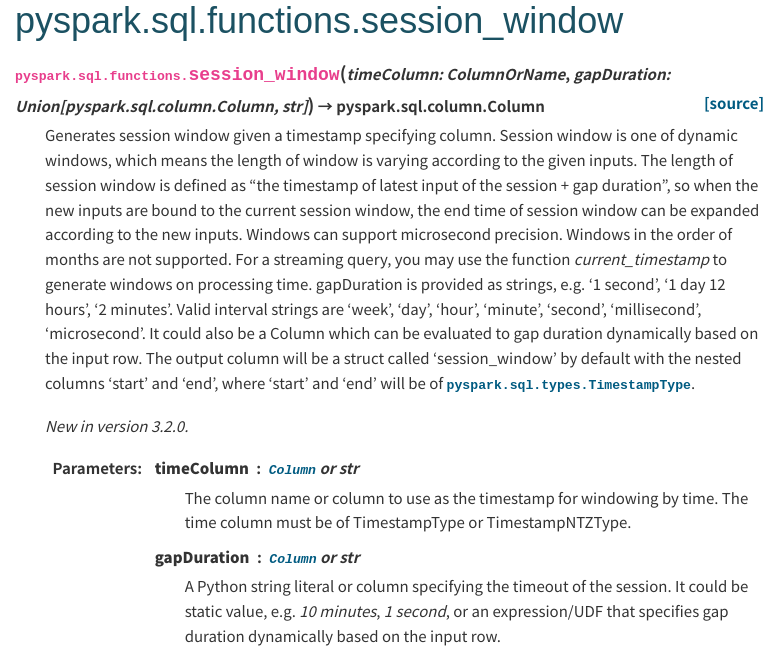

[spark] branch master updated: [SPARK-38016][SQL][DOCS] Fix the API doc for session_window to say it supports TimestampNTZType too as timeColumn

This is an automated email from the ASF dual-hosted git repository.

sarutak pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 48a440f [SPARK-38016][SQL][DOCS] Fix the API doc for session_window

to say it supports TimestampNTZType too as timeColumn

48a440f is described below

commit 48a440fe1fc334134f42a726cc6fb3d98802e0fd

Author: Kousuke Saruta

AuthorDate: Tue Jan 25 20:41:38 2022 +0900

[SPARK-38016][SQL][DOCS] Fix the API doc for session_window to say it

supports TimestampNTZType too as timeColumn

### What changes were proposed in this pull request?

This PR fixes the API docs for `session_window` to say it supports

`TimestampNTZType` too as `timeColumn`.

### Why are the changes needed?

As of Spark 3.3.0 (e858cd568a74123f7fd8fe4c3d2917a), `session_window`

supports not only `TimestampType` but also `TimestampNTZType`.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Built the docs with the following commands.

```

bundle install

SKIP_RDOC=1 SKIP_SQLDOC=1 bundle exec jekyll build

```

Then, confirmed the built doc.

Closes #35312 from sarutak/sessionwindow-timestampntz-doc.

Authored-by: Kousuke Saruta

Signed-off-by: Kousuke Saruta

---

python/pyspark/sql/functions.py | 2 +-

sql/core/src/main/scala/org/apache/spark/sql/functions.scala | 4 ++--

2 files changed, 3 insertions(+), 3 deletions(-)

diff --git a/python/pyspark/sql/functions.py b/python/pyspark/sql/functions.py

index e69c37d..bfee994 100644

--- a/python/pyspark/sql/functions.py

+++ b/python/pyspark/sql/functions.py

@@ -2623,7 +2623,7 @@ def session_window(timeColumn: "ColumnOrName",

gapDuration: Union[Column, str])

--

timeColumn : :class:`~pyspark.sql.Column` or str

The column name or column to use as the timestamp for windowing by

time.

-The time column must be of TimestampType.

+The time column must be of TimestampType or TimestampNTZType.

gapDuration : :class:`~pyspark.sql.Column` or str

A Python string literal or column specifying the timeout of the

session. It could be

static value, e.g. `10 minutes`, `1 second`, or an expression/UDF that

specifies gap

diff --git a/sql/core/src/main/scala/org/apache/spark/sql/functions.scala

b/sql/core/src/main/scala/org/apache/spark/sql/functions.scala

index ec28d8d..f217dad 100644

--- a/sql/core/src/main/scala/org/apache/spark/sql/functions.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/functions.scala

@@ -3750,7 +3750,7 @@ object functions {

* processing time.

*

* @param timeColumn The column or the expression to use as the timestamp

for windowing by time.

- * The time column must be of TimestampType.

+ * The time column must be of TimestampType or

TimestampNTZType.

* @param gapDuration A string specifying the timeout of the session, e.g.

`10 minutes`,

*`1 second`. Check

`org.apache.spark.unsafe.types.CalendarInterval` for

*valid duration identifiers.

@@ -3787,7 +3787,7 @@ object functions {

* processing time.

*

* @param timeColumn The column or the expression to use as the timestamp

for windowing by time.

- * The time column must be of TimestampType.

+ * The time column must be of TimestampType or

TimestampNTZType.

* @param gapDuration A column specifying the timeout of the session. It

could be static value,

*e.g. `10 minutes`, `1 second`, or an expression/UDF

that specifies gap

*duration dynamically based on the input row.

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch branch-3.0 updated: [SPARK-37860][UI] Fix taskindex in the stage page task event timeline

This is an automated email from the ASF dual-hosted git repository.

sarutak pushed a commit to branch branch-3.0

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.0 by this push:

new 755d11d [SPARK-37860][UI] Fix taskindex in the stage page task event

timeline

755d11d is described below

commit 755d11d0d1479f5441c6ead2cc6142bab45d6e16

Author: stczwd

AuthorDate: Tue Jan 11 15:23:12 2022 +0900

[SPARK-37860][UI] Fix taskindex in the stage page task event timeline

### What changes were proposed in this pull request?

This reverts commit 450b415028c3b00f3a002126cd11318d3932e28f.

### Why are the changes needed?

In #32888, shahidki31 change taskInfo.index to taskInfo.taskId. However, we

generally use `index.attempt` or `taskId` to distinguish tasks within a stage,

not `taskId.attempt`.

Thus #32888 was a wrong fix issue, we should revert it.

### Does this PR introduce _any_ user-facing change?

no

### How was this patch tested?

origin test suites

Closes #35160 from stczwd/SPARK-37860.

Authored-by: stczwd

Signed-off-by: Kousuke Saruta

(cherry picked from commit 3d2fde5242c8989688c176b8ed5eb0bff5e1f17f)

Signed-off-by: Kousuke Saruta

---

core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

b/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

index e9eb62e..ccaa70b 100644

--- a/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

+++ b/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

@@ -352,7 +352,7 @@ private[ui] class StagePage(parent: StagesTab, store:

AppStatusStore) extends We

|'content': '

+ |data-title="${s"Task " + index + " (attempt " + attempt +

")"}

|Status: ${taskInfo.status}

|Launch Time: ${UIUtils.formatDate(new Date(launchTime))}

|${

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch branch-3.1 updated: [SPARK-37860][UI] Fix taskindex in the stage page task event timeline

This is an automated email from the ASF dual-hosted git repository.

sarutak pushed a commit to branch branch-3.1

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.1 by this push:

new 830d5b6 [SPARK-37860][UI] Fix taskindex in the stage page task event

timeline

830d5b6 is described below

commit 830d5b650ce9ac00f2a64bbf3e7fe9d31b02e51d

Author: stczwd

AuthorDate: Tue Jan 11 15:23:12 2022 +0900

[SPARK-37860][UI] Fix taskindex in the stage page task event timeline

### What changes were proposed in this pull request?

This reverts commit 450b415028c3b00f3a002126cd11318d3932e28f.

### Why are the changes needed?

In #32888, shahidki31 change taskInfo.index to taskInfo.taskId. However, we

generally use `index.attempt` or `taskId` to distinguish tasks within a stage,

not `taskId.attempt`.

Thus #32888 was a wrong fix issue, we should revert it.

### Does this PR introduce _any_ user-facing change?

no

### How was this patch tested?

origin test suites

Closes #35160 from stczwd/SPARK-37860.

Authored-by: stczwd

Signed-off-by: Kousuke Saruta

(cherry picked from commit 3d2fde5242c8989688c176b8ed5eb0bff5e1f17f)

Signed-off-by: Kousuke Saruta

---

core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

b/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

index 459e09a..47ba951 100644

--- a/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

+++ b/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

@@ -355,7 +355,7 @@ private[ui] class StagePage(parent: StagesTab, store:

AppStatusStore) extends We

|'content': '

+ |data-title="${s"Task " + index + " (attempt " + attempt +

")"}

|Status: ${taskInfo.status}

|Launch Time: ${UIUtils.formatDate(new Date(launchTime))}

|${

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch branch-3.2 updated: [SPARK-37860][UI] Fix taskindex in the stage page task event timeline

This is an automated email from the ASF dual-hosted git repository.

sarutak pushed a commit to branch branch-3.2

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.2 by this push:

new db1023c [SPARK-37860][UI] Fix taskindex in the stage page task event

timeline

db1023c is described below

commit db1023c728c5e0bdcd4ef457cf5f7ba4f13cb79d

Author: stczwd

AuthorDate: Tue Jan 11 15:23:12 2022 +0900

[SPARK-37860][UI] Fix taskindex in the stage page task event timeline

### What changes were proposed in this pull request?

This reverts commit 450b415028c3b00f3a002126cd11318d3932e28f.

### Why are the changes needed?

In #32888, shahidki31 change taskInfo.index to taskInfo.taskId. However, we

generally use `index.attempt` or `taskId` to distinguish tasks within a stage,

not `taskId.attempt`.

Thus #32888 was a wrong fix issue, we should revert it.

### Does this PR introduce _any_ user-facing change?

no

### How was this patch tested?

origin test suites

Closes #35160 from stczwd/SPARK-37860.

Authored-by: stczwd

Signed-off-by: Kousuke Saruta

(cherry picked from commit 3d2fde5242c8989688c176b8ed5eb0bff5e1f17f)

Signed-off-by: Kousuke Saruta

---

core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

b/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

index 81dfe83..777a6b0 100644

--- a/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

+++ b/core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala

@@ -355,7 +355,7 @@ private[ui] class StagePage(parent: StagesTab, store:

AppStatusStore) extends We

|'content': '

+ |data-title="${s"Task " + index + " (attempt " + attempt +

")"}

|Status: ${taskInfo.status}

|Launch Time: ${UIUtils.formatDate(new Date(launchTime))}

|${

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated (7463564 -> 3d2fde5)

This is an automated email from the ASF dual-hosted git repository. sarutak pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git. from 7463564 [SPARK-37847][CORE][SHUFFLE] PushBlockStreamCallback#isStale should check null to avoid NPE add 3d2fde5 [SPARK-37860][UI] Fix taskindex in the stage page task event timeline No new revisions were added by this update. Summary of changes: core/src/main/scala/org/apache/spark/ui/jobs/StagePage.scala | 2 +- 1 file changed, 1 insertion(+), 1 deletion(-) - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated (98e1c77 -> 3b88bc8)

This is an automated email from the ASF dual-hosted git repository. sarutak pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git. from 98e1c77 [SPARK-37803][SQL] Add ORC read benchmarks for structs add 3b88bc8 [SPARK-37792][CORE] Fix the check of custom configuration in SparkShellLoggingFilter No new revisions were added by this update. Summary of changes: .../scala/org/apache/spark/internal/Logging.scala | 19 -- .../org/apache/spark/internal/LoggingSuite.scala | 23 +++--- .../scala/org/apache/spark/repl/ReplSuite.scala| 19 -- 3 files changed, 42 insertions(+), 19 deletions(-) - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch branch-3.2 updated: [SPARK-37302][BUILD][FOLLOWUP] Extract the versions of dependencies accurately

This is an automated email from the ASF dual-hosted git repository.

sarutak pushed a commit to branch branch-3.2

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.2 by this push:

new 0888622 [SPARK-37302][BUILD][FOLLOWUP] Extract the versions of

dependencies accurately

0888622 is described below

commit 08886223c6373cc7c7e132bfb58f1536e70286ef

Author: Kousuke Saruta

AuthorDate: Fri Dec 24 11:29:37 2021 +0900

[SPARK-37302][BUILD][FOLLOWUP] Extract the versions of dependencies

accurately

### What changes were proposed in this pull request?

This PR changes `dev/test-dependencies.sh` to extract the versions of

dependencies accurately.

In the current implementation, the versions are extracted like as follows.

```

GUAVA_VERSION=`build/mvn help:evaluate -Dexpression=guava.version -q

-DforceStdout`

```

But, if the output of the `mvn` command includes not only the version but

also other messages like warnings, a following command referring the version

will fail.

```

build/mvn dependency:get -Dartifact=com.google.guava:guava:${GUAVA_VERSION}

-q

...

[ERROR] Failed to execute goal

org.apache.maven.plugins:maven-dependency-plugin:3.1.1:get (default-cli) on

project spark-parent_2.12: Couldn't download artifact:

org.eclipse.aether.resolution.DependencyResolutionException:

com.google.guava:guava:jar:Falling was not found in

https://maven-central.storage-download.googleapis.com/maven2/ during a previous

attempt. This failure was cached in the local repository and resolution is not

reattempted until the update interval of gcs-maven-cent [...]

```

Actually, this causes the recent linter failure.

https://github.com/apache/spark/runs/4623297663?check_suite_focus=true

### Why are the changes needed?

To recover the CI.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Manually run `dev/test-dependencies.sh`.

Closes #35006 from sarutak/followup-SPARK-37302.

Authored-by: Kousuke Saruta

Signed-off-by: Kousuke Saruta

(cherry picked from commit dd0decff5f1e95cedd8fe83de7e4449be57cb31c)

Signed-off-by: Kousuke Saruta

---

dev/test-dependencies.sh | 4 ++--

1 file changed, 2 insertions(+), 2 deletions(-)

diff --git a/dev/test-dependencies.sh b/dev/test-dependencies.sh

index 363ba1a..39a11e7 100755

--- a/dev/test-dependencies.sh

+++ b/dev/test-dependencies.sh

@@ -48,9 +48,9 @@ OLD_VERSION=$($MVN -q \

--non-recursive \

org.codehaus.mojo:exec-maven-plugin:1.6.0:exec | grep -E

'[0-9]+\.[0-9]+\.[0-9]+')

# dependency:get for guava and jetty-io are workaround for SPARK-37302.

-GUAVA_VERSION=`build/mvn help:evaluate -Dexpression=guava.version -q

-DforceStdout`

+GUAVA_VERSION=$(build/mvn help:evaluate -Dexpression=guava.version -q

-DforceStdout | grep -E "^[0-9.]+$")

build/mvn dependency:get -Dartifact=com.google.guava:guava:${GUAVA_VERSION} -q

-JETTY_VERSION=`build/mvn help:evaluate -Dexpression=jetty.version -q

-DforceStdout`

+JETTY_VERSION=$(build/mvn help:evaluate -Dexpression=jetty.version -q

-DforceStdout | grep -E "^[0-9.]+v[0-9]+")

build/mvn dependency:get

-Dartifact=org.eclipse.jetty:jetty-io:${JETTY_VERSION} -q

if [ $? != 0 ]; then

echo -e "Error while getting version string from Maven:\n$OLD_VERSION"

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated: [SPARK-37302][BUILD][FOLLOWUP] Extract the versions of dependencies accurately

This is an automated email from the ASF dual-hosted git repository.

sarutak pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new dd0decf [SPARK-37302][BUILD][FOLLOWUP] Extract the versions of

dependencies accurately

dd0decf is described below

commit dd0decff5f1e95cedd8fe83de7e4449be57cb31c

Author: Kousuke Saruta

AuthorDate: Fri Dec 24 11:29:37 2021 +0900

[SPARK-37302][BUILD][FOLLOWUP] Extract the versions of dependencies

accurately

### What changes were proposed in this pull request?

This PR changes `dev/test-dependencies.sh` to extract the versions of

dependencies accurately.

In the current implementation, the versions are extracted like as follows.

```

GUAVA_VERSION=`build/mvn help:evaluate -Dexpression=guava.version -q

-DforceStdout`

```

But, if the output of the `mvn` command includes not only the version but

also other messages like warnings, a following command referring the version

will fail.

```

build/mvn dependency:get -Dartifact=com.google.guava:guava:${GUAVA_VERSION}

-q

...

[ERROR] Failed to execute goal

org.apache.maven.plugins:maven-dependency-plugin:3.1.1:get (default-cli) on

project spark-parent_2.12: Couldn't download artifact:

org.eclipse.aether.resolution.DependencyResolutionException:

com.google.guava:guava:jar:Falling was not found in

https://maven-central.storage-download.googleapis.com/maven2/ during a previous

attempt. This failure was cached in the local repository and resolution is not

reattempted until the update interval of gcs-maven-cent [...]

```

Actually, this causes the recent linter failure.

https://github.com/apache/spark/runs/4623297663?check_suite_focus=true

### Why are the changes needed?

To recover the CI.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Manually run `dev/test-dependencies.sh`.

Closes #35006 from sarutak/followup-SPARK-37302.

Authored-by: Kousuke Saruta

Signed-off-by: Kousuke Saruta

---

dev/test-dependencies.sh | 4 ++--

1 file changed, 2 insertions(+), 2 deletions(-)

diff --git a/dev/test-dependencies.sh b/dev/test-dependencies.sh

index cf05126..2268a26 100755

--- a/dev/test-dependencies.sh

+++ b/dev/test-dependencies.sh

@@ -50,9 +50,9 @@ OLD_VERSION=$($MVN -q \

--non-recursive \

org.codehaus.mojo:exec-maven-plugin:1.6.0:exec | grep -E

'[0-9]+\.[0-9]+\.[0-9]+')

# dependency:get for guava and jetty-io are workaround for SPARK-37302.

-GUAVA_VERSION=`build/mvn help:evaluate -Dexpression=guava.version -q

-DforceStdout`

+GUAVA_VERSION=$(build/mvn help:evaluate -Dexpression=guava.version -q

-DforceStdout | grep -E "^[0-9.]+$")

build/mvn dependency:get -Dartifact=com.google.guava:guava:${GUAVA_VERSION} -q

-JETTY_VERSION=`build/mvn help:evaluate -Dexpression=jetty.version -q

-DforceStdout`

+JETTY_VERSION=$(build/mvn help:evaluate -Dexpression=jetty.version -q

-DforceStdout | grep -E "^[0-9.]+v[0-9]+")

build/mvn dependency:get

-Dartifact=org.eclipse.jetty:jetty-io:${JETTY_VERSION} -q

if [ $? != 0 ]; then

echo -e "Error while getting version string from Maven:\n$OLD_VERSION"

-

To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org

For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated: [SPARK-37391][SQL] JdbcConnectionProvider tells if it modifies security context

This is an automated email from the ASF dual-hosted git repository.

sarutak pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 6cc4c90 [SPARK-37391][SQL] JdbcConnectionProvider tells if it

modifies security context

6cc4c90 is described below

commit 6cc4c90cbc09a7729f9c40f440fcdda83e3d8648

Author: Danny Guinther

AuthorDate: Fri Dec 24 10:07:16 2021 +0900

[SPARK-37391][SQL] JdbcConnectionProvider tells if it modifies security

context

Augments the JdbcConnectionProvider API such that a provider can indicate

that it will need to modify the global security configuration when establishing

a connection, and as such, if access to the global security configuration

should be synchronized to prevent races.

### What changes were proposed in this pull request?

As suggested by gaborgsomogyi

[here](https://github.com/apache/spark/pull/29024/files#r755788709), augments

the `JdbcConnectionProvider` API to include a `modifiesSecurityContext` method

that can be used by `ConnectionProvider` to determine when

`SecurityConfigurationLock.synchronized` is required to avoid race conditions

when establishing a JDBC connection.

### Why are the changes needed?

Provides a path forward for working around a significant bottleneck

introduced by synchronizing `SecurityConfigurationLock` every time a connection

is established. The synchronization isn't always needed and it should be at the

discretion of the `JdbcConnectionProvider` to determine when locking is

necessary. See [SPARK-37391](https://issues.apache.org/jira/browse/SPARK-37391)

or [this thread](https://github.com/apache/spark/pull/29024/files#r754441783).

### Does this PR introduce _any_ user-facing change?

Any existing implementations of `JdbcConnectionProvider` will need to add a

definition of `modifiesSecurityContext`. I'm also open to adding a default

implementation, but it seemed to me that requiring an explicit implementation

of the method was preferable.

A drop-in implementation that would continue the existing behavior is:

```scala

override def modifiesSecurityContext(

driver: Driver,

options: Map[String, String]

): Boolean = true

```

### How was this patch tested?

Unit tests, but I also plan to run a real workflow once I get the initial

thumbs up on this implementation.

Closes #34745 from tdg5/SPARK-37391-opt-in-security-configuration-sync.

Authored-by: Danny Guinther

Signed-off-by: Kousuke Saruta

---

.../sql/jdbc/ExampleJdbcConnectionProvider.scala | 5 ++

project/MimaExcludes.scala | 5 +-

.../jdbc/connection/BasicConnectionProvider.scala | 8

.../jdbc/connection/ConnectionProvider.scala | 22 +

.../spark/sql/jdbc/JdbcConnectionProvider.scala| 19 +++-

.../main/scala/org/apache/spark/sql/jdbc/README.md | 5 +-

.../jdbc/connection/ConnectionProviderSuite.scala | 55 ++

.../IntentionallyFaultyConnectionProvider.scala| 4 ++

8 files changed, 109 insertions(+), 14 deletions(-)

diff --git

a/examples/src/main/scala/org/apache/spark/examples/sql/jdbc/ExampleJdbcConnectionProvider.scala

b/examples/src/main/scala/org/apache/spark/examples/sql/jdbc/ExampleJdbcConnectionProvider.scala

index 6d275d4..c63467d 100644

---

a/examples/src/main/scala/org/apache/spark/examples/sql/jdbc/ExampleJdbcConnectionProvider.scala

+++

b/examples/src/main/scala/org/apache/spark/examples/sql/jdbc/ExampleJdbcConnectionProvider.scala

@@ -30,4 +30,9 @@ class ExampleJdbcConnectionProvider extends

JdbcConnectionProvider with Logging

override def canHandle(driver: Driver, options: Map[String, String]):

Boolean = false

override def getConnection(driver: Driver, options: Map[String, String]):

Connection = null

+

+ override def modifiesSecurityContext(

+driver: Driver,

+options: Map[String, String]

+ ): Boolean = false

}

diff --git a/project/MimaExcludes.scala b/project/MimaExcludes.scala

index 75fa001..6cf639f 100644

--- a/project/MimaExcludes.scala

+++ b/project/MimaExcludes.scala

@@ -40,7 +40,10 @@ object MimaExcludes {

// The followings are necessary for Scala 2.13.

ProblemFilters.exclude[DirectMissingMethodProblem]("org.apache.spark.executor.CoarseGrainedExecutorBackend#Arguments.*"),

ProblemFilters.exclude[IncompatibleResultTypeProblem]("org.apache.spark.executor.CoarseGrainedExecutorBackend#Arguments.*"),

-

ProblemFilters.exclude[MissingTypesProblem]("org.apache.spark.executor.CoarseGrainedExecutorBackend$Arguments$")

+

ProblemFilters.exclude[MissingTypesProblem]("org.apache.spark.executor.CoarseGrainedExecutorBackend$Arguments$"),

+

+// [SPARK-37391][SQL] JdbcConnectionProvider tells if it modifies security

context

+

P

[spark] branch master updated (ae8940c -> d270d40)

This is an automated email from the ASF dual-hosted git repository. sarutak pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/spark.git. from ae8940c [SPARK-37310][SQL] Migrate ALTER NAMESPACE ... SET PROPERTIES to use V2 command by default add d270d40 [SPARK-37635][SQL] SHOW TBLPROPERTIES should print the fully qualified table name No new revisions were added by this update. Summary of changes: .../spark/sql/execution/datasources/v2/DataSourceV2Strategy.scala | 2 +- .../spark/sql/execution/datasources/v2/ShowTablePropertiesExec.scala | 3 ++- .../src/test/resources/sql-tests/results/show-tblproperties.sql.out| 2 +- 3 files changed, 4 insertions(+), 3 deletions(-) - To unsubscribe, e-mail: commits-unsubscr...@spark.apache.org For additional commands, e-mail: commits-h...@spark.apache.org

[spark] branch master updated (6a59fba -> ae8940c)