[GitHub] [incubator-tvm] aniketchopade opened a new issue #6635: onnx model compilation - TVMError: Check failed: n.defined():

aniketchopade opened a new issue #6635:

URL: https://github.com/apache/incubator-tvm/issues/6635

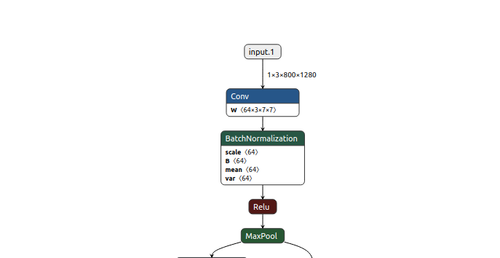

I am trying to compile the model with Relay and get the error below.

[](https://freeimage.host/i/2kfgKx)

```
onnx_model = onnx.load('model.onnx')
mod, params = relay.frontend.from_onnx(onnx_model, {"input.1": [1,3,800,1280]})
```

```
>>> mod, params = relay.frontend.from_onnx(onnx_model, {"input.1": [1,3,800,1280]})
WARNING:root:Attribute momentum is ignored in relay.sym.batch_norm
... (the warning above appears 53 times in a row)
Traceback (most recent call last):
  File "", line 1, in
  File "/home/aniket/tvm/python/tvm/relay/frontend/onnx.py", line 2470, in from_onnx
    mod, params = g.from_onnx(graph, opset, freeze_params)
  File "/home/aniket/tvm/python/tvm/relay/frontend/onnx.py", line 2278, in from_onnx
    op = self._convert_operator(op_name, inputs, attr, opset)
  File "/home/aniket/tvm/python/tvm/relay/frontend/onnx.py", line 2385, in _convert_operator
    sym = convert_map[op_name](inputs, attrs, self._params)
  File "/home/aniket/tvm/python/tvm/relay/frontend/onnx.py", line 1858, in _impl_v11
    scale_shape = infer_shape(scale)
  File "/home/aniket/tvm/python/tvm/relay/frontend/common.py", line 500, in infer_shape
    out_type = infer_type(inputs, mo
```

[incubator-tvm] branch master updated: bump dockerfile (#6632)

This is an automated email from the ASF dual-hosted git repository.

jroesch pushed a commit to branch master
in repository https://gitbox.apache.org/repos/asf/incubator-tvm.git

The following commit(s) were added to refs/heads/master by this push:
new feb041d bump dockerfile (#6632)
feb041d is described below

commit feb041d08c7658ee262430e6660435630e636f90
Author: Thierry Moreau
AuthorDate: Mon Oct 5 21:55:46 2020 -0700

bump dockerfile (#6632)
---
Jenkinsfile | 2 +-
1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/Jenkinsfile b/Jenkinsfile
index 9e3b0f5..b44aa2a 100644
--- a/Jenkinsfile
+++ b/Jenkinsfile
@@ -46,7 +46,7 @@
 // NOTE: these lines are scanned by docker/dev_common.sh. Please update the regex as needed. -->
 ci_lint = "tlcpack/ci-lint:v0.62"
 ci_gpu = "tlcpack/ci-gpu:v0.64"
-ci_cpu = "tlcpack/ci-cpu:v0.65"
+ci_cpu = "tlcpack/ci-cpu:v0.66"
 ci_wasm = "tlcpack/ci-wasm:v0.60"
 ci_i386 = "tlcpack/ci-i386:v0.52"
 // <--- End of regex-scanned config.
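The NOTE in the hunk above says these assignments are scanned by a regex in docker/dev_common.sh. That regex is not shown in this email, so the following Python sketch uses an assumed pattern (`IMAGE_LINE`) and a hypothetical helper (`read_ci_images`) purely to illustrate how `ci_<name> = "<repo>:<tag>"` lines could be read back and how the bump to v0.66 would show up.

```python
import re

# Assumed pattern for lines such as: ci_cpu = "tlcpack/ci-cpu:v0.66"
# (the real regex lives in docker/dev_common.sh and may differ).
IMAGE_LINE = re.compile(r'^\s*(ci_\w+)\s*=\s*"([^":]+):([^"]+)"\s*$')


def read_ci_images(jenkinsfile_text):
    """Return {variable: (repository, tag)} for every ci_* image line."""
    images = {}
    for line in jenkinsfile_text.splitlines():
        match = IMAGE_LINE.match(line)
        if match:
            name, repo, tag = match.groups()
            images[name] = (repo, tag)
    return images


# The image lines as they read after this commit.
AFTER_BUMP = '''
ci_lint = "tlcpack/ci-lint:v0.62"
ci_gpu = "tlcpack/ci-gpu:v0.64"
ci_cpu = "tlcpack/ci-cpu:v0.66"
ci_wasm = "tlcpack/ci-wasm:v0.60"
ci_i386 = "tlcpack/ci-i386:v0.52"
'''

images = read_ci_images(AFTER_BUMP)
print(images["ci_cpu"])
```

Keeping every image on one line in a fixed `name = "repo:tag"` shape is what makes this kind of line-based scan workable, which is presumably why the NOTE asks for the regex to be updated whenever the format changes.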

[GitHub] [incubator-tvm] jroesch merged pull request #6632: [CI] Update ci-cpu to the latest

jroesch merged pull request #6632: URL: https://github.com/apache/incubator-tvm/pull/6632 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [incubator-tvm] Hecmay opened a new issue #6634: [Anosr][Bug] ValueError: arch(sm_xy) is not passed, and we cannot detect it from env

Hecmay opened a new issue #6634:

URL: https://github.com/apache/incubator-tvm/issues/6634

## Environment

* Ubuntu 18.04.5 LTS

* CUDA Version: 11.0.

* GPU: RTX 2080 Ti. Compute Capability: 7.5

## Description

I created a simple MatMul tensor expression in C++ and want to use Ansor to search for the optimal schedule for it. Here is the skeleton of the program that I am using. `args` is an array holding the input and output tensors of the MatMul op.

```c++
// Create DAG and search task
const auto& dag = tvm::auto_scheduler::ComputeDAG(args);
auto task = SearchTask(dag, "test", Target("cuda"), Target("llvm"),
                       Optional());

// Create tuning options and search policy
auto options = TuningOptions(num_measure_trials, early_stopping,
                             num_measures_per_round, verbose, builder,
                             runner,
                             Optional>());
auto policy = SketchPolicy(task, cost_model, params, seed, verbose,
                           callbacks);

// Launch Ansor
std::pair> res = AutoSchedule(policy, options);
```

When running the program, `measure.py` complains that the GPU architecture cannot be found. The error message is as follows. I tried some of the solutions from https://discuss.tvm.apache.org/t/solved-compile-error-related-to-autotvm/804/11 but none of them worked in my case.

```shell
- [ Search ]
Generate Sketches #s: 1
Sample Initial Population #s: 744 fail_ct: 3352 Time elapsed: 0.39
GA Iter: 0 Max score: 0. Min score: 0. #Pop: 744 #M+: 0 #M-: 0
GA Iter: 5 Max score: 0. Min score: 0. #Pop: 2048 #M+: 1446 #M-: 89
GA Iter: 10 Max score: 0. Min score: 0. #Pop: 2048 #M+: 1573 #M-: 94
EvolutionarySearch #s: 128 Time elapsed: 3.50
- [ Measure ]
Get 10 programs for measure. (This may take a while)
.E.E.E.E.E.E.E.E.E.E
==
No: 1 GFLOPS: 0.00 / 0.00 results: MeasureResult(error_type:CompileHostError, error_msg:Traceback (most recent call last):
  File "/home/sx/dlcb/build/tvm/python/tvm/auto_scheduler/measure.py", line 516, in timed_func
    sch, args, target=task.target, target_host=task.target_host
  File "/home/sx/dlcb/build/tvm/python/tvm/driver/bu
...
et_arch)
  File "/home/sx/dlcb/build/tvm/python/tvm/contrib/nvcc.py", line 71, in compile_cuda
    raise ValueError("arch(sm_xy) is not passed, and we cannot detect it from env")
ValueError: arch(sm_xy) is not passed, and we cannot detect it from env
, all_cost:0.02, Tstamp:1601955085.48)
==
```

I was able to run the Ansor tutorial, so I guess my environment settings should be correct. I also tried specifying the architecture string (i.e. `sm_75` in my case). When I do so, Ansor throws the following error:

```shell
==
No: 4 GFLOPS: 0.00 / 0.00 results: MeasureResult(error_type:RuntimeDeviceError, error_msg:Traceback (most recent call last):
  File "/home/sx/dlcb/build/tvm/python/tvm/auto_scheduler/measure.py", line 672, in timed_func
    ndarray.empty(get_const_tuple(x.shape), x.dtype, ctx) for x in build_res.args
  File "/home/sx/dlcb/build/tvm/py
...
] (0) /home/sx/dlcb/build/tvm/build/libtvm.so(+0x1791844) [0x7fd1ecfb3844]
  File "/home/sx/dlcb/build/tvm/src/runtime/cuda/cuda_device_api.cc", line 115
CUDA: Check failed: e == cudaSuccess || e == cudaErrorCudartUnloading: initialization error
, all_cost:0.46, Tstamp:1601956508.32)
==
```
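The first error message suggests a two-step lookup: use the architecture that was passed in, otherwise try to detect it from a visible GPU, and fail if neither is available. The sketch below models that fallback in plain Python. `resolve_arch` and its `detected_compute_version` parameter are hypothetical names for illustration and are not TVM APIs; the mapping from compute capability 7.5 to `sm_75` follows the values reported in this issue.

```python
def resolve_arch(arch=None, detected_compute_version=None):
    """Pick an nvcc-style arch string such as "sm_75".

    arch: explicit value supplied by the caller, if any.
    detected_compute_version: e.g. "7.5" when a GPU is visible to the
        process, or None when no device can be queried (hypothetical input).
    """
    if arch is not None:
        return arch
    if detected_compute_version is not None:
        # "7.5" becomes "sm_75"
        return "sm_" + detected_compute_version.replace(".", "")
    raise ValueError("arch(sm_xy) is not passed, and we cannot detect it from env")


print(resolve_arch(detected_compute_version="7.5"))
print(resolve_arch(arch="sm_75"))
try:
    resolve_arch()
except ValueError as err:
    print("error:", err)
```

Under this model, the error in the first log corresponds to the last branch: the process that builds the kernel neither received an arch nor could see a GPU. Whether that is what happens in the C++ driven setup described here is an open question in the issue.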


[GitHub] [incubator-tvm] zhiics commented on issue #6629: Cuda NMS argsorts with invalid entries

zhiics commented on issue #6629:

URL: https://github.com/apache/incubator-tvm/issues/6629#issuecomment-704009824

cc @Laurawly

[GitHub] [incubator-tvm] caoqichun opened a new issue #6633: tvm.error:OpNotImplemented: The following operators are not supported for frontend ONNX: NonMaxSuppression

caoqichun opened a new issue #6633:

URL: https://github.com/apache/incubator-tvm/issues/6633

Thanks for participating in the TVM community! We use https://discuss.tvm.ai for any general usage questions and discussions. The issue tracker is used for actionable items such as feature proposals discussion, roadmaps, and bug tracking. You are always welcomed to post on the forum first :)

Issues that are inactive for a period of time may get closed. We adopt this policy so that we won't lose track of actionable issues that may fall at the bottom of the pile. Feel free to reopen a new one if you feel there is an additional problem that needs attention when an old one gets closed.

For bug reports, to help the developer act on the issues, please include a description of your environment, preferably a minimum script to reproduce the problem.

For feature proposals, list clear, small actionable items so we can track the progress of the change.

[GitHub] [incubator-tvm] jroesch commented on issue #6628: [Rust] Add MXNet to ci-cpu image to test Rust ResNet examples.

jroesch commented on issue #6628:

URL: https://github.com/apache/incubator-tvm/issues/6628#issuecomment-703952444

As part of this branch we need to add Rust to the GPU image so we can build the Rust docs in CI.

[incubator-tvm] branch master updated: Fix a bug with Alter Op Layout (#6626)

This is an automated email from the ASF dual-hosted git repository.

moreau pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-tvm.git

The following commit(s) were added to refs/heads/master by this push:

new 311eca4 Fix a bug with Alter Op Layout (#6626)

311eca4 is described below

commit 311eca49a696f137e8cac6f4b9ba485b80bda0ee

Author: Matthew Brookhart

AuthorDate: Mon Oct 5 17:54:21 2020 -0600

Fix a bug with Alter Op Layout (#6626)

* Regression test for a Scalar type issue in Alter Op Layout

* fix the regression test by avoiding the Scalar optimization if types aren't defined

---

src/relay/transforms/transform_layout.h | 2 +-

tests/python/relay/test_pass_alter_op_layout.py | 89 +

2 files changed, 90 insertions(+), 1 deletion(-)

diff --git a/src/relay/transforms/transform_layout.h b/src/relay/transforms/transform_layout.h
index 19632de..bf9bcb9 100644
--- a/src/relay/transforms/transform_layout.h
+++ b/src/relay/transforms/transform_layout.h
@@ -126,7 +126,7 @@ class TransformMemorizer : public ObjectRef {
     if (src_layout.ndim_primal() < dst_layout.ndim_primal()) {
       // If scalar, then no need of layout transformation as scalar can be broadcasted easily even
       // if the other operand has a transformed layout.
-      if (IsScalar(input_expr)) {
+      if (input_expr->checked_type_.defined() && IsScalar(input_expr)) {
         return raw;
       }
       int num_new_axis = dst_layout.ndim_primal() - src_layout.ndim_primal();
diff --git a/tests/python/relay/test_pass_alter_op_layout.py b/tests/python/relay/test_pass_alter_op_layout.py
index 7b242c4..4d50840 100644
--- a/tests/python/relay/test_pass_alter_op_layout.py
+++ b/tests/python/relay/test_pass_alter_op_layout.py
@@ -463,6 +463,95 @@ def test_alter_layout_scalar():
     assert tvm.ir.structural_equal(a, b), "Actual = \n" + str(a)
+def test_alter_layout_scalar_regression():
+    """regression test where scalar fails"""
+
+    def before():
+        x = relay.var("x", shape=(1, 56, 56, 64))
+        weight = relay.var("weight", shape=(3, 3, 64, 16))
+        bias = relay.var("bias", shape=(1, 1, 1, 16))
+        y = relay.nn.conv2d(
+            x,
+            weight,
+            channels=16,
+            kernel_size=(3, 3),
+            padding=(1, 1),
+            data_layout="NHWC",
+            kernel_layout="HWIO",
+        )
+        y = relay.add(y, bias)
+        mean = relay.mean(y, axis=3, exclude=True)
+        var = relay.variance(y, axis=3, exclude=True)
+        gamma = relay.var("gamma")
+        beta = relay.var("beta")
+        y = relay.nn.batch_norm(y, gamma, beta, mean, var, axis=3)
+        y = y[0]
+        y = relay.Function(analysis.free_vars(y), y)
+        return y
+
+    def alter_conv2d(attrs, inputs, tinfos, out_type):
+        data, weight = inputs
+        new_attrs = dict(attrs)
+        new_attrs["data_layout"] = "NCHW16c"
+        return relay.nn.conv2d(data, weight, **new_attrs)
+
+    def expected():
+        x = relay.var("x", shape=(1, 56, 56, 64))
+        weight = relay.var("weight", shape=(3, 3, 64, 16))
+        bias = relay.var("bias", shape=(1, 1, 1, 16))
+        x = relay.layout_transform(x, src_layout="NHWC", dst_layout="NCHW")
+        x = relay.layout_transform(x, src_layout="NCHW", dst_layout="NCHW16c")
+        weight = relay.layout_transform(weight, src_layout="HWIO", dst_layout="OIHW")
+        y = relay.nn.conv2d(
+            x, weight, channels=16, kernel_size=(3, 3), padding=(1, 1), data_layout="NCHW16c"
+        )
+        bias = relay.layout_transform(bias, src_layout="NHWC", dst_layout="NCHW")
+        bias = relay.layout_transform(bias, src_layout="NCHW", dst_layout="NCHW16c")
+        add = relay.add(y, bias)
+        y = relay.layout_transform(add, src_layout="NCHW16c", dst_layout="NCHW")
+        y = relay.layout_transform(y, src_layout="NCHW", dst_layout="NHWC")
+        mean = relay.mean(y, axis=3, exclude=True)
+        var = relay.variance(y, axis=3, exclude=True)
+        denom = relay.const(1.0) / relay.sqrt(var + relay.const(1e-05))
+        gamma = relay.var("gamma", shape=(16,))
+        denom = denom * gamma
+        denom_expand1 = relay.expand_dims(denom, axis=1, num_newaxis=2)
+        denom_expand2 = relay.expand_dims(denom_expand1, axis=0)
+        denom_nchwc16 = relay.layout_transform(
+            denom_expand2, src_layout="NCHW", dst_layout="NCHW16c"
+        )
+        out = add * denom_nchwc16
+        beta = relay.var("beta", shape=(16,))
+        numerator = (-mean) * denom + beta
+        numerator_expand1 = relay.expand_dims(numerator, axis=1, num_newaxis=2)
+        numerator_expand2 = relay.expand_dims(numerator_expand1, axis=0)
+        numerator_nchwc16 = relay.layout_transform(
+            numerator_expand2, src_layout="NCHW", dst_layout="NCHW16c"
+        )
+        out = out + numerator_nchwc16
+        out = relay.layo
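
The fix in transform_layout.h guards `IsScalar(input_expr)` with `input_expr->checked_type_.defined()`, so the scalar shortcut is only taken once type information exists. A minimal Python sketch of that guard follows. `Expr`, `is_scalar`, and `can_skip_layout_transform` are hypothetical stand-ins, not Relay classes or functions, and `is_scalar` is written to fail on an untyped node only to make the need for the guard visible.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Expr:
    """Hypothetical expression node; checked_shape is None before type inference."""
    name: str
    checked_shape: Optional[Tuple[int, ...]] = None


def is_scalar(expr):
    # Assumes the type is known and raises otherwise (illustrative behaviour).
    if expr.checked_shape is None:
        raise RuntimeError("type of %s is not defined" % expr.name)
    return len(expr.checked_shape) == 0


def can_skip_layout_transform(expr):
    # Guarded form: only ask is_scalar once the type is defined.
    return expr.checked_shape is not None and is_scalar(expr)


gamma_untyped = Expr("gamma")                  # like relay.var("gamma") with no shape
const_scalar = Expr("one", checked_shape=())   # a typed scalar
bias = Expr("bias", checked_shape=(1, 1, 1, 16))

print(can_skip_layout_transform(gamma_untyped))
print(can_skip_layout_transform(const_scalar))
print(can_skip_layout_transform(bias))
```

The untyped `gamma` mirrors the `relay.var("gamma")` in the regression test's `before()` function, which is declared without a shape.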

[GitHub] [incubator-tvm] tmoreau89 commented on pull request #6626: Fix a bug with Alter Op Layout

tmoreau89 commented on pull request #6626:

URL: https://github.com/apache/incubator-tvm/pull/6626#issuecomment-703949576

Thank you @mbrookhart @jwfromm the PR has been merged.

[GitHub] [incubator-tvm] tmoreau89 merged pull request #6626: Fix a bug with Alter Op Layout

tmoreau89 merged pull request #6626:

URL: https://github.com/apache/incubator-tvm/pull/6626

[incubator-tvm] branch master updated: [FIX, AUTOTVM] Print warning when all autotvm tasks fail with errors (#6612)

This is an automated email from the ASF dual-hosted git repository.

comaniac pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-tvm.git

The following commit(s) were added to refs/heads/master by this push:

new 86122d1 [FIX,AUTOTVM] Print warning when all autotvm tasks fail with errors (#6612)

86122d1 is described below

commit 86122d125d9fc8d08003bf6a3fffeeca490dc634

Author: Tristan Konolige

AuthorDate: Mon Oct 5 16:50:25 2020 -0700

[FIX,AUTOTVM] Print warning when all autotvm tasks fail with errors (#6612)

* [FIX,AUTOTVM] Print warning when all autotvm tasks fail with errors.

* formatting

* write errors to tempfile

* wording

* wording

* don't duplicate errors

* Ensure we have a string for an error

---

python/tvm/autotvm/tuner/tuner.py | 17 +

1 file changed, 17 insertions(+)

diff --git a/python/tvm/autotvm/tuner/tuner.py b/python/tvm/autotvm/tuner/tuner.py
index 9864ba0..b769d34 100644
--- a/python/tvm/autotvm/tuner/tuner.py
+++ b/python/tvm/autotvm/tuner/tuner.py
@@ -17,6 +17,7 @@
 # pylint: disable=unused-argument, no-self-use, invalid-name
 """Base class of tuner"""
 import logging
+import tempfile
 import numpy as np
@@ -121,6 +122,7 @@ class Tuner(object):
         GLOBAL_SCOPE.in_tuning = True
         i = error_ct = 0
+        errors = []
         while i < n_trial:
             if not self.has_next():
                 break
@@ -139,6 +141,11 @@ class Tuner(object):
                 else:
                     flops = 0
                     error_ct += 1
+                    error = res.costs[0]
+                    if isinstance(error, str):
+                        errors.append(error)
+                    else:
+                        errors.append(str(error))
                 if flops > self.best_flops:
                     self.best_flops = flops
@@ -174,6 +181,16 @@ class Tuner(object):
         else:
             logger.setLevel(old_level)
+        if error_ct == i:
+            _, f = tempfile.mkstemp(prefix="tvm_tuning_errors_", suffix=".log", text=True)
+            with open(f, "w") as file:
+                file.write("\n".join(errors))
+            logging.warning(
+                "Could not find any valid schedule for task %s. "
+                "A file containing the errors has been written to %s.",
+                self.task,
+                f,
+            )
         GLOBAL_SCOPE.in_tuning = False
         del measure_batch
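
The change above can be sketched in isolation: collect one error string per failed trial and, if every trial failed, dump them to a temporary file and log a warning that points to it. In the sketch below, `report_if_all_failed` and its parameters are hypothetical names, not part of the AutoTVM API. It differs from the diff in one detail: it wraps the descriptor returned by `tempfile.mkstemp` with `os.fdopen` instead of reopening the path, so the descriptor is closed together with the file.

```python
import logging
import os
import tempfile


def report_if_all_failed(task_name, trial_errors, n_trials):
    """Write errors to a temp file and warn when every trial failed.

    trial_errors: one entry per failed trial (str or any other object).
    Returns the log path, or None when at least one trial succeeded.
    """
    if len(trial_errors) != n_trials:
        return None
    # Make sure every entry is a string before joining.
    messages = [e if isinstance(e, str) else str(e) for e in trial_errors]
    fd, path = tempfile.mkstemp(prefix="tvm_tuning_errors_", suffix=".log", text=True)
    # os.fdopen takes ownership of the descriptor, so it is closed with the file.
    with os.fdopen(fd, "w") as log_file:
        log_file.write("\n".join(messages))
    logging.warning(
        "Could not find any valid schedule for task %s. "
        "A file containing the errors has been written to %s.",
        task_name,
        path,
    )
    return path


# Two trials, both failed: one error is already a string, one is an exception.
path = report_if_all_failed("demo_task", ["CompileHostError", ValueError("bad arch")], 2)
with open(path) as log_file:
    content = log_file.read()
os.remove(path)
print(content)
```

Returning the path keeps the helper easy to check from a caller or a test, while the warning text stays the same as in the commit.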

[GitHub] [incubator-tvm] comaniac merged pull request #6612: [FIX,AUTOTVM] Print warning when all autotvm tasks fail with errors

comaniac merged pull request #6612:

URL: https://github.com/apache/incubator-tvm/pull/6612

[GitHub] [incubator-tvm] comaniac commented on pull request #6612: [FIX,AUTOTVM] Print warning when all autotvm tasks fail with errors

comaniac commented on pull request #6612:

URL: https://github.com/apache/incubator-tvm/pull/6612#issuecomment-703948589

Thanks @tkonolige

[incubator-tvm] 01/01: add QEMU build to regression

This is an automated email from the ASF dual-hosted git repository.

moreau pushed a commit to branch ci-docker-staging

in repository https://gitbox.apache.org/repos/asf/incubator-tvm.git

commit 073bc6ea8d4a18258153cd01742fbbcd7b037968

Author: Andrew Reusch

AuthorDate: Mon Oct 5 15:55:02 2020 -0700

add QEMU build to regression

---

Jenkinsfile | 13 +

tests/scripts/task_config_build_qemu.sh | 31 +++

tests/scripts/task_python_microtvm.sh | 20

3 files changed, 64 insertions(+)

diff --git a/Jenkinsfile b/Jenkinsfile
index 9e3b0f5..7787da7 100644
--- a/Jenkinsfile
+++ b/Jenkinsfile
@@ -49,6 +49,7 @@ ci_gpu = "tlcpack/ci-gpu:v0.64"
 ci_cpu = "tlcpack/ci-cpu:v0.65"
 ci_wasm = "tlcpack/ci-wasm:v0.60"
 ci_i386 = "tlcpack/ci-i386:v0.52"
+ci_qemu = "tlcpack/ci-qemu:v0.01"
 // <--- End of regex-scanned config.
 // tvm libraries
@@ -210,6 +211,18 @@ stage('Build') {
         pack_lib('i386', tvm_multilib)
       }
     }
+  },
+  'BUILD: QEMU': {
+    node('CPU') {
+      ws(per_exec_ws("tvm/build-qemu")) {
+        init_git()
+        sh "${docker_run} ${ci_qemu} ./tests/scripts/task_config_build_qemu.sh"
+        make(ci_qemu, 'build', '-j2')
+        timeout(time: max_time, unit: 'MINUTES') {
+          sh "${docker_run} ${ci_qemu} ./tests/scripts/task_python_microtvm.sh"
+        }
+      }
+    }
   }
 }

diff --git a/tests/scripts/task_config_build_qemu.sh b/tests/scripts/task_config_build_qemu.sh

new file mode 100755

index 000..2cf491f

--- /dev/null

+++ b/tests/scripts/task_config_build_qemu.sh

@@ -0,0 +1,31 @@

+#!/bin/bash

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+

+set -e

+set -u

+

+mkdir -p build

+cd build

+cp ../cmake/config.cmake .

+

+echo set\(USE_SORT ON\) >> config.cmake

+echo set\(USE_MICRO ON\) >> config.cmake

+echo set\(USE_STANDALONE_CRT ON\) >> config.cmake

+echo set\(CMAKE_CXX_COMPILER g++\) >> config.cmake

+echo set\(CMAKE_CXX_FLAGS -Werror\) >> config.cmake

+echo set\(HIDE_PRIVATE_SYMBOLS ON\) >> config.cmake

diff --git a/tests/scripts/task_python_microtvm.sh b/tests/scripts/task_python_microtvm.sh

new file mode 100755

index 000..f5332ef

--- /dev/null

+++ b/tests/scripts/task_python_microtvm.sh

@@ -0,0 +1,20 @@

+#!/bin/bash

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+

+set -e

+set -u

[incubator-tvm] branch ci-docker-staging updated (d901d58 -> 073bc6e)

This is an automated email from the ASF dual-hosted git repository.

moreau pushed a change to branch ci-docker-staging
in repository https://gitbox.apache.org/repos/asf/incubator-tvm.git.

omit d901d58 Add ci_qemu to Jenkinsfile
omit f8c298b one last syntax error
omit c3fffe9 fix jenkins syntax hopefully
omit 3599e87 retrigger staging CI
omit 6a29e88 fix test_micro_artifact
omit e40f77f export SessionTerminatedError and update except after moving
omit a65a554 fix SETFL logic
omit 3d2ede3 fix typo
omit f58ec63 Fix paths for qemu/ dir
omit 8c65383 add qemu regression
omit 2ae251c more black format
omit bcd5b64 clang-format again
omit 9860e72 fixes related to pylint
omit 4d2fe58 fix compiler warning
omit a9538c7 kill utvm rpc_server bindings, which don't work anymore and fail pylint
omit 75a7b48 more pylint
omit 43efa91 black format
omit c291443 lint
omit 1f25b58 add asf header
omit e646f7c add zephyr exclusions to check_file_type
omit a18e26b remove logging
omit f1fdaaf re-comment serial until added
omit 053bca7 cleanup zephyr compiler
omit 818928a gitignore test debug files
omit 1160695 validate FD are in non-blocking mode
omit cf30739 don't double-open transport
omit f522d4f fix nonblocking piping on some linux kernels
omit 4dd93cc cleanup zephyr main
omit 3ff0fa1 fix typo
omit 7307c89 Add qemu build config
omit 3469cde add zephyr test against qemu
omit 1d1cb54 Simplify utvm rpc server API and ease handling of short packets.
omit 6550674 Add metadata-only artifacts
omit 709e963 black format
omit 18bc0b9 Introduce transport timeouts.
omit 3a4ce80 Split transport classes into transport package.
add fde8d43 [tvmc] unify all logs on a single logger 'TVMC' (#6577)
add cab4ce1 [COMMUNITY] vegaluisjose -> Committer (#6582)
add 22ad0dd Add rocblas_sgemm_strided_batched impl. (#6579)
add 27abfad disable stacked bidir test (#6585)
add c0a6bc3 [tvmc] Introduce 'tune' subcommand (part 3/4) (#6537)
add 0535fd1 [RUNTIME] NDArray CopyFrom/To Bytes always synchronize (#6586)
add 2cfbd09 [BYOC][ETHOSN] Fix tests for new module API (#6560)
add c549239 properly pass through command-line args in docker/bash.sh (#6599)
add e78aa61 dynamic conv2d for cuda (#6598)
add e31564e Allow datatypes besides fp32 in conv2d_transpose for cuda. (#6593)
add b2bdc9b add black-format to docker/lint.sh, suppport in-place format (#6601)
add 8348a44 [Parser] Fix parsing op string attributes (#6605)
add 8f64286 [docs] Missing documentation 'autodocsumm' (#6595)
add b553bb7 [Doc] add KEYS to downloads.apache.org (#6581)
add b754bec Add ci_qemu docker image (#6485)
add 5db80f0 [tvmc] Introduce 'run' subcommand (part 4/4) (#6578)
add 9f5b9da [tvmc][docs] Getting started tutorial for TVMC (#6597)
add 1ff5f39 [Bugfix] Simplify reduce expression in te.gradient (#6611)
add 52b776a [RELEASE] Bump version to 0.7.0 (#6614)
add 7e671cb [BUG_FIX] Fixes #6608: CHECK(data != nullptr) causes type checking to fail (#6610)
add f9abf56 Updated runtime to run under FreeBSD. (#6600)
add 72969b2 [Ansor] Support multiple output ops and fix Python API printing (#6584)
add 728b829 [RELEASE] Update NEWS.md for v0.7 (#6613)
add e892c95 [ETHOSN] Update to 20.08 version of the ethosn-driver. (#6606)
add a413458 [VERSION] Version for v0.8 cycle (#6615)
add 2658ebe Dynamic ONNX Importer (#6351)
add 21002cd Fix Strided Slice Infer Layout (#6621)
new 073bc6e add QEMU build to regression

This update added new revisions after undoing existing revisions. That is to say, some revisions that were in the old version of the branch are not in the new version. This situation occurs when a user --force pushes a change and generates a repository containing something like this:

 * -- * -- B -- O -- O -- O (d901d58)
            \
             N -- N -- N refs/heads/ci-docker-staging (073bc6e)

You should already have received notification emails for all of the O revisions, and so the following emails describe only the N revisions from the common base, B.

Any revisions marked "omit" are not gone; other references still refer to them. Any revisions marked "discard" are gone forever.

The 1 revisions listed above as "new" are entirely new to this repository and will be described in separate emails. The revisions listed as "add" were already present in the repository and have only been added to this reference.

Summary of changes:
CONTRIBUTORS.md| 1 +
Jenkinsfile| 23 +-
NEWS.md| 1338
conda/tvm-libs/meta.yaml | 2 +-
conda/tvm/meta.yaml

[GitHub] [incubator-tvm] tkonolige commented on pull request #6612: [FIX,AUTOTVM] Print warning when all autotvm tasks fail with errors

tkonolige commented on pull request #6612:

URL: https://github.com/apache/incubator-tvm/pull/6612#issuecomment-703937603

@comaniac CI is all green now.

[GitHub] [incubator-tvm] tqchen edited a comment on issue #6623: [RFC] Move to use main as default branch

tqchen edited a comment on issue #6623:

URL: https://github.com/apache/incubator-tvm/issues/6623#issuecomment-703171276

To be fully transparent about what it would take:

- create main branch from master
- setup branch protection
- New PRs and other activities will continue to work as normal
- For stale PRs that still need updates, reset the base to main (Thanks @szha for pointing it out)
- In github UI, click edit, branches, change from master to main

[GitHub] [incubator-tvm] tqchen edited a comment on issue #6623: [RFC] Move to use main as default branch

tqchen edited a comment on issue #6623:

URL: https://github.com/apache/incubator-tvm/issues/6623#issuecomment-703171276

To be fully transparent about what it would take:

- create main branch from master
- setup branch protection
- New PRs and other activities will continue to work as normal
- For stale PRs that still need updates, reset the base to main
- In github UI, click edit, branches, change from master to main

[GitHub] [incubator-tvm] mbrookhart commented on a change in pull request #6533: Scatter on Cuda

mbrookhart commented on a change in pull request #6533:

URL: https://github.com/apache/incubator-tvm/pull/6533#discussion_r499885677

##

File path: tests/python/relay/test_op_level3.py

##

@@ -903,8 +904,8 @@ def verify_scatter(dshape, ishape, axis=0):

indices_np = np.random.randint(-dshape[axis], dshape[axis] - 1,

ishape).astype("int64")

ref_res = ref_scatter(data_np, indices_np, updates_np, axis)

-# TODO(mbrookhart): expand testing when adding more backend schedules

-for target, ctx in [("llvm", tvm.cpu())]:

+

Review comment:

Hmm, that's a good idea. I'm not sure the dyn namespace is the right place though? Maybe just add a second test with dynamic shapes here? Or in test_any?


[GitHub] [incubator-tvm] tmoreau89 opened a new pull request #6632: [CI] Update ci-cpu to the latest

tmoreau89 opened a new pull request #6632: URL: https://github.com/apache/incubator-tvm/pull/6632

Specifically, making sure that some of the latest changes to TVM dockerfile are taken into account:

* Ethos n driver update: https://github.com/apache/incubator-tvm/pull/6606
* Vitis AI CI support: https://github.com/apache/incubator-tvm/pull/6342

@u99127 @leandron @jornt-xilinx

[GitHub] [incubator-tvm] hogepodge commented on issue #6623: [RFC] Move to use main as default branch

hogepodge commented on issue #6623: URL: https://github.com/apache/incubator-tvm/issues/6623#issuecomment-703897640

This change would be in accordance with the [Apache Code of Conduct](https://www.apache.org/foundation/policies/conduct.html) across several dimensions.

1. It creates a more open community.
2. It shows empathy for the diverse open source community.
3. It encourages collaboration.
4. It is being careful with the words we choose.
5. It aligns with the diversity statement.

[GitHub] [incubator-tvm] mbrookhart commented on a change in pull request #6533: Scatter on Cuda

mbrookhart commented on a change in pull request #6533:

URL: https://github.com/apache/incubator-tvm/pull/6533#discussion_r499881267

##

File path: python/tvm/topi/cuda/scatter.py

##

@@ -0,0 +1,444 @@

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+# pylint: disable=invalid-name, no-member, too-many-locals, too-many-arguments, too-many-statements, singleton-comparison, unused-argument

+"""Scatter operator """

+import tvm

+from tvm import te

+

+

+def ceil_div(a, b):

+return (a + b - 1) // b

+

+

+def gen_ir_1d(data, indices, updates, axis, out):

+"""Generate scatter ir for 1d inputs

+

+Parameters

+--

+data : tir.Tensor

+The input data to the operator.

+

+indices : tir.Tensor

+The index locations to update.

+

+updates : tir.Tensor

+The values to update.

+

+axis : int

+The axis to scatter on

+

+out : tir.Tensor

+The output tensor.

+

+Returns

+---

+ret : tir

+The computational ir.

+"""

+assert axis == 0

+n = data.shape[0]

+

+ib = tvm.tir.ir_builder.create()

+

+out_ptr = ib.buffer_ptr(out)

+data_ptr = ib.buffer_ptr(data)

+

+with ib.new_scope():

+bx = te.thread_axis("blockIdx.x")

+ib.scope_attr(bx, "thread_extent", n)

+out_ptr[bx] = data_ptr[bx]

+

+indices_ptr = ib.buffer_ptr(indices)

+updates_ptr = ib.buffer_ptr(updates)

+

+ni = indices.shape[0]

+

+with ib.new_scope():

+bx = te.thread_axis("blockIdx.x")

+ib.scope_attr(bx, "thread_extent", 1)

Review comment:

If I don't define at least one block in the scope, the generated CUDA code fails to compile.
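
For readers following the diff, here is a minimal pure-Python sketch of what a 1-D scatter is generally expected to compute. It is not the kernel from the PR: the quoted hunk stops before the update loop, so the update rule (`out[indices[i]] = updates[i]`), the wrap-around of negative indices (suggested by the test drawing indices from `-dshape[axis]` upward), and the helper name `scatter_1d_reference` are all assumptions made for illustration.

```python
def scatter_1d_reference(data, indices, updates):
    """Hypothetical reference for a 1-D scatter along axis 0.

    Assumed semantics (not confirmed by the quoted diff):
    start from a copy of `data`, then write updates[i] at indices[i],
    treating negative indices as counting from the end.
    """
    out = list(data)              # step 1: copy the input, like out_ptr[bx] = data_ptr[bx]
    n = len(data)
    for i in range(len(indices)): # step 2: apply the updates one after another
        idx = indices[i]
        if idx < 0:               # assumed wrap-around for negative indices
            idx += n
        out[idx] = updates[i]
    return out


result = scatter_1d_reference([0, 0, 0, 0, 0], [1, -1, 3], [10, 20, 30])
print(result)
```

The update loop here is sequential, so when an index repeats the last write wins. That may be why the second scope in the diff uses a `thread_extent` of 1, but the PR text quoted here does not say so.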


[incubator-tvm] branch ci-docker-staging updated (f8c298b -> d901d58)

This is an automated email from the ASF dual-hosted git repository. moreau pushed a change to branch ci-docker-staging in repository https://gitbox.apache.org/repos/asf/incubator-tvm.git. from f8c298b one last syntax error add d901d58 Add ci_qemu to Jenkinsfile No new revisions were added by this update. Summary of changes: Jenkinsfile | 1 + 1 file changed, 1 insertion(+)

[GitHub] [incubator-tvm] jroesch commented on issue #6622: [VOTE] Release Apache TVM (incubating) v0.7.0.rc0

jroesch commented on issue #6622: URL: https://github.com/apache/incubator-tvm/issues/6622#issuecomment-703882812

+1 (binding)

* Checked the code compiles
* Checked License and Notice
* Version

[GitHub] [incubator-tvm] alec-anyvision opened a new issue #6631: Race Condition in Cuda SSD Multibox

alec-anyvision opened a new issue #6631: URL: https://github.com/apache/incubator-tvm/issues/6631 I may be missing something about the IR, but there seems to be a race condition in the following line. There is no guarantee that `temp_valid_count[tid * num_anchors + k - 1]` will be computed before `tid == 0` reaches this point. However, in practice this seems very unlikely. https://github.com/apache/incubator-tvm/blob/21002cd094c34716b1e07a63ed76f53dadd60e23/python/tvm/topi/cuda/ssd/multibox.py#L234

[GitHub] [incubator-tvm] tmoreau89 edited a comment on issue #6622: [VOTE] Release Apache TVM (incubating) v0.7.0.rc0

tmoreau89 edited a comment on issue #6622: URL: https://github.com/apache/incubator-tvm/issues/6622#issuecomment-703874194

[GitHub] [incubator-tvm] tmoreau89 commented on issue #6622: [VOTE] Release Apache TVM (incubating) v0.7.0.rc0

tmoreau89 commented on issue #6622: URL: https://github.com/apache/incubator-tvm/issues/6622#issuecomment-703874194 +1 (binding):

[GitHub] [incubator-tvm] areusch opened a new pull request #6630: [µTVM] Avoid use of builtin math functions

areusch opened a new pull request #6630: URL: https://github.com/apache/incubator-tvm/pull/6630 Fixes `undefined reference to \`expf'` errors

[GitHub] [incubator-tvm] alec-anyvision opened a new issue #6629: Cuda NMS argsorts with invalid entries

alec-anyvision opened a new issue #6629: URL: https://github.com/apache/incubator-tvm/issues/6629 I believe the following two lines should have `valid_count=valid_count`, as in [the default implementation](https://github.com/apache/incubator-tvm/blob/21002cd094c34716b1e07a63ed76f53dadd60e23/python/tvm/topi/vision/nms.py#L558). I believe the `data` array is zeroed at initialization for cuda, so the issue only manifests if nms is run more than once, with the first run containing more valid boxes than the second run. https://github.com/apache/incubator-tvm/blob/21002cd094c34716b1e07a63ed76f53dadd60e23/python/tvm/topi/cuda/nms.py#L488 https://github.com/apache/incubator-tvm/blob/21002cd094c34716b1e07a63ed76f53dadd60e23/python/tvm/topi/cuda/nms.py#L492
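
The scenario described in this issue can be illustrated with a small pure-Python sketch. It does not use TVM: `argsort_descending` is a hypothetical helper, the score values are made up, and the assumption is simply that a sort restricted to the first `valid_count` entries ignores whatever an earlier run left behind in the rest of the buffer.

```python
def argsort_descending(scores, valid_count=None):
    """Hypothetical helper: indices of `scores` sorted by descending score.

    When `valid_count` is given, only the first `valid_count` entries are
    considered; anything after them is treated as stale and ignored.
    """
    n = len(scores) if valid_count is None else valid_count
    return sorted(range(n), key=lambda i: scores[i], reverse=True)


# Buffer after a first run with 4 valid boxes (illustrative values).
buffer = [0.9, 0.8, 0.7, 0.6]

# A second run has only 2 valid boxes and overwrites just the first 2 slots.
buffer[0], buffer[1] = 0.5, 0.4
valid_count = 2

without_count = argsort_descending(buffer)             # stale entries rank first
with_count = argsort_descending(buffer, valid_count)   # only valid entries

print(without_count, with_count)
```

In this sketch the unrestricted sort ranks the two stale scores (0.7 and 0.6) ahead of the valid ones, which matches the condition the issue describes: a first run with more valid boxes than the second.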

[GitHub] [incubator-tvm] jroesch commented on pull request #6563: [Rust] Improve NDArray, GraphRt, and Relay bindings

jroesch commented on pull request #6563: URL: https://github.com/apache/incubator-tvm/pull/6563#issuecomment-703849631 We need to update the Docker images to match MXNet; see #6628.

[GitHub] [incubator-tvm] jroesch opened a new issue #6628: [Rust] Add MXNet to ci-cpu image to test Rust ResNet examples.

jroesch opened a new issue #6628: URL: https://github.com/apache/incubator-tvm/issues/6628 @mwillsey and I updated the ResNet example to check the correctness of the model by using a pre-trained model instead of random weights. In order to turn this on in CI we need to update the image and then reissue a build with it turned back on.

[incubator-tvm] branch ci-docker-staging updated (c3fffe9 -> f8c298b)

This is an automated email from the ASF dual-hosted git repository. moreau pushed a change to branch ci-docker-staging in repository https://gitbox.apache.org/repos/asf/incubator-tvm.git. from c3fffe9 fix jenkins syntax hopefully add f8c298b one last syntax error No new revisions were added by this update. Summary of changes: Jenkinsfile | 2 +- 1 file changed, 1 insertion(+), 1 deletion(-)

[GitHub] [incubator-tvm] jwfromm commented on a change in pull request #6616: [Topi] Allow batch_matmul to broadcast along batch dimension.

jwfromm commented on a change in pull request #6616:

URL: https://github.com/apache/incubator-tvm/pull/6616#discussion_r499822421

##

File path: tests/python/frontend/onnx/test_forward.py

##

@@ -3628,7 +3628,6 @@ def verify_roi_align(
 test_clip_min_max_as_inputs()
 test_onehot()
 test_matmul()
-test_batch_matmul()

Review comment:

Just wanted to note that I removed this since `test_batch_matmul` is now run with `tvm.testing.parametrize`, which means it will cause an error when run using python instead of pytest.

[GitHub] [incubator-tvm] tqchen commented on pull request #6603: Add µTVM Zephyr support + QEMU regression test

tqchen commented on pull request #6603: URL: https://github.com/apache/incubator-tvm/pull/6603#issuecomment-703838421 cc @tmoreau89 , please sync push to ci-docker-staging to confirm if docker-staging passes

[GitHub] [incubator-tvm] tqchen commented on pull request #6603: Add µTVM Zephyr support + QEMU regression test

tqchen commented on pull request #6603: URL: https://github.com/apache/incubator-tvm/pull/6603#issuecomment-703837862 @jroesch can you help manage the PR?

[GitHub] [incubator-tvm] rkimball opened a new pull request #6627: Fix example code

rkimball opened a new pull request #6627: URL: https://github.com/apache/incubator-tvm/pull/6627 The example code in the comment for Object had errors

[GitHub] [incubator-tvm] mbrookhart opened a new pull request #6626: Fix a bug with Alter Op Layout

mbrookhart opened a new pull request #6626: URL: https://github.com/apache/incubator-tvm/pull/6626 We found a case where models imported from TensorFlow in NCHW layout fail in AlterOpLayout due to an odd combination of passes. This PR adds a regression test and short-circuits the Scalar optimization to fix the bug. Tagging @anijain2305 because you originally added the Scalar check in Alter Op Layout. You can see the bug if you remove my changes to that check; I don't see a cleaner fix at the moment. cc @jwfromm @tmoreau89

[GitHub] [incubator-tvm] giuseros commented on pull request #6445: Add dot product support for quantized convolution.

giuseros commented on pull request #6445: URL: https://github.com/apache/incubator-tvm/pull/6445#issuecomment-703764060 Hi @mbaret , Thank you for the careful review! @FrozenGene , @anijain2305 should we merge this in?

[GitHub] [incubator-tvm] tkonolige commented on a change in pull request #6580: Faster sparse_dense on GPUs

tkonolige commented on a change in pull request #6580:

URL: https://github.com/apache/incubator-tvm/pull/6580#discussion_r499745730

##

File path: python/tvm/tir/ir_builder.py

##

@@ -74,8 +75,23 @@ def asobject(self):
     def dtype(self):
         return self._content_type
+    def _linear_index(self, index):
+        if not isinstance(index, tuple):
+            return index
+        assert len(index) == len(self._shape), "Index size (%s) does not match shape size (%s)" % (
+            len(index),
+            len(self._shape),
+        )
+        dim_size = 1
+        lidx = 0
+        for dim, idx in zip(reversed(self._shape), reversed(index)):
+            lidx += idx * dim_size
+            dim_size *= dim
+        return lidx
+
     def __getitem__(self, index):
         t = DataType(self._content_type)
+        index = self._linear_index(index)

Review comment:

This allows you to use multidimensional indices with IRBuilder. Manually calculating the correct index into multidimensional arrays caused a couple of bugs when I was developing this code. Using multidimensional indices made everything cleaner and easier to debug.
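
The index computation in the diff above can be tried outside TVM. The sketch below lifts the same loop into a free function; the name `linear_index` and the explicit `shape` argument are illustrative stand-ins for the method and `self._shape` in the PR, and the example shape is made up.

```python
def linear_index(shape, index):
    """Row-major (last axis fastest) flattening of a multidimensional index.

    Mirrors the loop in the quoted diff: walk the dimensions from last to
    first, accumulating idx * stride, where the stride of an axis is the
    product of the sizes of all axes after it.
    """
    if not isinstance(index, tuple):
        return index  # already a flat index, pass it through unchanged
    assert len(index) == len(shape), "Index size (%s) does not match shape size (%s)" % (
        len(index),
        len(shape),
    )
    dim_size = 1
    lidx = 0
    for dim, idx in zip(reversed(shape), reversed(index)):
        lidx += idx * dim_size
        dim_size *= dim
    return lidx


# For shape (2, 3, 4) the strides are (12, 4, 1), so (1, 2, 3) -> 1*12 + 2*4 + 3*1.
print(linear_index((2, 3, 4), (1, 2, 3)))  # 23
```

Passing a plain integer returns it unchanged, so existing callers that already compute a flat index keep working.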

[GitHub] [incubator-tvm] zhiics commented on issue #6622: [VOTE] Release Apache TVM (incubating) v0.7.0.rc0

zhiics commented on issue #6622: URL: https://github.com/apache/incubator-tvm/issues/6622#issuecomment-703743085

+1 (binding)

- Checked the signature and hash
- The code compiles
- Checked LICENSE and NOTICE

[GitHub] [incubator-tvm] hogepodge commented on pull request #6625: [TVMC] fail gracefully in case no subcommand is provided

hogepodge commented on pull request #6625: URL: https://github.com/apache/incubator-tvm/pull/6625#issuecomment-703735736 lgtm, thanks!

[GitHub] [incubator-tvm] leandron opened a new pull request #6625: [TVMC] fail gracefully in case no subcommand is provided

leandron opened a new pull request #6625: URL: https://github.com/apache/incubator-tvm/pull/6625 This fixes an issue that occurs when the user calls `tvmc` with no arguments at all. Rather than showing an `AssertionError`, it now shows _usage_ and exits with code 1. @hogepodge reported it on Discuss. cc @comaniac @hogepodge
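
The behaviour described in this PR (print usage and exit with code 1 when no subcommand is given) can be sketched with Python's standard `argparse`. This is not the `tvmc` source: whether `tvmc` is built on `argparse` is an assumption here, and the program name `tool` and the subcommands `compile` and `run` are placeholders chosen for illustration.

```python
import argparse
import sys


def make_parser():
    """Build a parser with optional subcommands (all names are illustrative)."""
    parser = argparse.ArgumentParser(prog="tool", description="Example driver")
    subparsers = parser.add_subparsers(dest="subcommand")
    subparsers.add_parser("compile", help="placeholder subcommand")
    subparsers.add_parser("run", help="placeholder subcommand")
    return parser


def main(argv):
    """Return a process exit code instead of failing on a missing subcommand."""
    parser = make_parser()
    args = parser.parse_args(argv)
    if args.subcommand is None:
        # No subcommand given: show usage on stderr and signal failure.
        parser.print_usage(sys.stderr)
        return 1
    return 0


if __name__ == "__main__":
    sys.exit(main(sys.argv[1:]))
```

Returning the exit code from `main` rather than calling `sys.exit` inside it keeps the function easy to call from tests.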

[GitHub] [incubator-tvm] FrozenGene commented on issue #6622: [VOTE] Release Apache TVM (incubating) v0.7.0.rc0

FrozenGene commented on issue #6622: URL: https://github.com/apache/incubator-tvm/issues/6622#issuecomment-703569247

+1, I checked

- signature and hash
- LICENSE, DISCLAIMER and NOTICE
- Version
- Code compiles

[GitHub] [incubator-tvm] bgchun commented on issue #6622: [VOTE] Release Apache TVM (incubating) v0.7.0.rc0

bgchun commented on issue #6622: URL: https://github.com/apache/incubator-tvm/issues/6622#issuecomment-703514088

+1 (binding)

- I checked the signature and hash.
- DISCLAIMER, LICENSE, and NOTICE look good
- No unexpected binary files
- The code compiles and runs

Thanks.
-Gon

[GitHub] [incubator-tvm] m3at opened a new issue #6624: [Relay] Module mutated in-place

m3at opened a new issue #6624:

URL: https://github.com/apache/incubator-tvm/issues/6624

Following [discussion on the forum](https://discuss.tvm.apache.org/t/unable-to-build-relay-function-twice/7987), I'm opening this issue for what appears to be a bug when running `relay.build`, with potentially `nn.pad` being mutated in-place.

In practice the issue can be side-stepped by making a `deepcopy` of the module before building. This issue is to find which pass is potentially mutating the module in-place.

Steps to reproduce:

```sh

# Obtain the model

python3 -m pip install geffnet

wget "https://github.com/rwightman/gen-efficientnet-pytorch/blob/master/onnx_export.py"

python3 onnx_export.py ./b4.onnx --model="tf_efficientnet_b4_ns" --img-size=380

```

```python

import numpy as np

import onnx

import tvm

from tvm import relay

from tvm.contrib import graph_runtime

# Prepare parameters

input_shape = [1, 3, 380, 380]

example_input = np.random.randn(*input_shape).astype(np.float32)

target = "llvm -mcpu=core-avx2"

ctx = tvm.cpu(0)

# Get model from ONNX

onnx_model = onnx.load("./b4.onnx")

mod, params = relay.frontend.from_onnx(onnx_model, {"input0": input_shape})

# Build module

with tvm.transform.PassContext(opt_level=3):

    graph_module = relay.build(mod, target=target, target_host=target, params=params)

# Run, no issue

runtime_module = graph_runtime.GraphModule(graph_module['default'](ctx))

runtime_module.set_input(key="input0", value=tvm.nd.array(example_input))

runtime_module.run()

tvm_output = runtime_module.get_output(0).asnumpy()

# Build again, or use autotvm.task.extract_from_program

# Error (see below)

with tvm.transform.PassContext(opt_level=3):

    graph_module = relay.build(mod, target=target, target_host=target, params=params)

```

Relevant part of the error:

```

%45 = multiply(%43, %44);

%46 = nn.pad(%45, pad_width=[[0, 0], [0, 0], [0, 1], [0, 1], [0, 0]]) an

internal invariant was violated while typechecking your program [05:19:37]

../src/relay/op/nn/pad.cc:125: Check failed: data->shape.size() ==

param->pad_width.size(): There should be as many pad width pairs as shape

dimensions but the shape has 4 dimensions and there are 5 pad width pairs.

; ;

%47 = nn.conv2d(%46, meta[relay.Constant][38], strides=[2, 2], padding=[0,

0, 0, 0], groups=144, kernel_size=[3, 3]);

```
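
The `deepcopy` workaround mentioned earlier in this report can be illustrated without TVM. In the sketch below, `toy_build` is a made-up stand-in, not `relay.build`: it simply appends to its input to imitate a build step that edits the module in place. The pad-width values are borrowed from the error above only to make the toy resemble it.

```python
import copy


def toy_build(module):
    """Toy stand-in (NOT relay.build) for a build step that mutates its input."""
    module["pad_width"].append([0, 0])  # in-place edit, as the report suspects
    return list(module["pad_width"])


# A 4-D pad specification, similar in spirit to the one in the error message.
original = {"pad_width": [[0, 0], [0, 0], [0, 1], [0, 1]]}

# Building from deep copies leaves `original` untouched, so both builds agree.
first = toy_build(copy.deepcopy(original))
second = toy_build(copy.deepcopy(original))

print(len(original["pad_width"]), first == second)
```

In the reproduction script this would presumably mean passing `copy.deepcopy(mod)` to `relay.build` each time, as the report suggests. That hides the symptom but does not identify which pass performs the mutation.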
