[GitHub] [tvm] yzh119 edited a comment on pull request #10207: Support sub warp reduction for CUDA target.

yzh119 edited a comment on pull request #10207:

URL: https://github.com/apache/tvm/pull/10207#issuecomment-1034575574

There are some issues to be solved:

If in the following case:

```python

@T.prim_func

def reduce(a: T.handle, b: T.handle, n: T.int32) -> None:

A = T.match_buffer(a, [1024, 4, 8])

B = T.match_buffer(b, [1024, 4])

for i, j, k in T.grid(1024, 4, 8):

with T.block("reduce"):

vi, vj, vk = T.axis.remap("SSR", [i, j, k])

with T.init():

B[vi, vj] = 0.

B[vi, vj] = B[vi, vj] + A[vi, vj, vk]

```

we bind `j` to `threadIdx.y` and `k` to `threadIdx.x`, different `j`'s might

be mapped to the same warp, we need different masks for different `j` to

distinguish them.

Another thing worth noting is, we can only allow cross warp reduction by

shuffle-down, thus warp size might be a multiple of `blockDim.x` when

`blockDim.y * blockDim.z != 1`.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [tvm] yzh119 edited a comment on pull request #10207: Support sub warp reduction for CUDA target.

yzh119 edited a comment on pull request #10207:

URL: https://github.com/apache/tvm/pull/10207#issuecomment-1034575574

There are some issues to be solved:

If in the following case:

```python

@T.prim_func

def reduce(a: T.handle, b: T.handle, n: T.int32) -> None:

A = T.match_buffer(a, [1024, 4, 8])

B = T.match_buffer(b, [1024, 4])

for i, j, k in T.grid(1024, 4, 8):

with T.block("reduce"):

vi, vj, vk = T.axis.remap("SSR", [i, j, k])

with T.init():

B[vi, vj] = 0.

B[vi, vj] = B[vi, vj] + A[vi, vj, vk]

```

we bind j to `threadIdx.y` and k to `threadIdx.x`, different `j`'s might be

mapped to the same warp, we need different masks for different `j` to

distinguish them.

Another thing worth noting is, we can only allow cross warp reduction by

shuffle-down, thus warp size might be a multiple of `blockDim.x` when

`blockDim.y * blockDim.z != 1`.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [tvm] yzh119 commented on pull request #10207: Support sub warp reduction for CUDA target.

yzh119 commented on pull request #10207:

URL: https://github.com/apache/tvm/pull/10207#issuecomment-1034575574

There are some issues to be solved:

If in the following case:

```

@T.prim_func

def reduce(a: T.handle, b: T.handle, n: T.int32) -> None:

A = T.match_buffer(a, [1024, 4, 8])

B = T.match_buffer(b, [1024, 4])

for i, j, k in T.grid(1024, 4, 8):

with T.block("reduce"):

vi, vj, vk = T.axis.remap("SSR", [i, j, k])

with T.init():

B[vi, vj] = 0.

B[vi, vj] = B[vi, vj] + A[vi, vj, vk]

```

we bind j to `threadIdx.y` and k to `threadIdx.x`, different `j`'s might be

mapped to the same warp, we need different masks for different `j` to

distinguish them.

Another thing worth noting is, we can only allow cross warp reduction by

shuffle-down, thus warp size might be a multiple of `blockDim.x` when

`blockDim.y * blockDim.z != 1`.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [tvm] junrushao1994 commented on pull request #10207: Support sub warp reduction for CUDA target.

junrushao1994 commented on pull request #10207: URL: https://github.com/apache/tvm/pull/10207#issuecomment-1034562205 CC @MasterJH5574 I believe you are interested -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] yangulei commented on a change in pull request #10112: [TIR, Relay] improve bfloat16 support

yangulei commented on a change in pull request #10112:

URL: https://github.com/apache/tvm/pull/10112#discussion_r80719

##

File path: src/relay/op/nn/nn.cc

##

@@ -1177,7 +1177,8 @@ bool NLLLossRel(const Array& types, int num_inputs,

const Attrs& attrs,

<< ", weights shape = " <<

weights->shape);

return false;

}

- if (!(predictions->dtype == weights->dtype &&

predictions->dtype.is_float())) {

+ if (!(predictions->dtype == weights->dtype &&

+(predictions->dtype.is_float() || predictions->dtype.is_bfloat16( {

Review comment:

I prefer this way too, since they **are** all floating-point datatypes.

While there are some practical inconsistences so far, for example, if we let

`is_float() == true` for `bfloat16`, then we cannot distinguish `bfloat16` and

`float16` anymore as they both satisfy the condition `is_float() == true &&

bits() == 16`.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [tvm] prateek9623 commented on pull request #10097: [CMake] add support for find_package

prateek9623 commented on pull request #10097: URL: https://github.com/apache/tvm/pull/10097#issuecomment-1034554865 @tkonolige done with requested changes -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

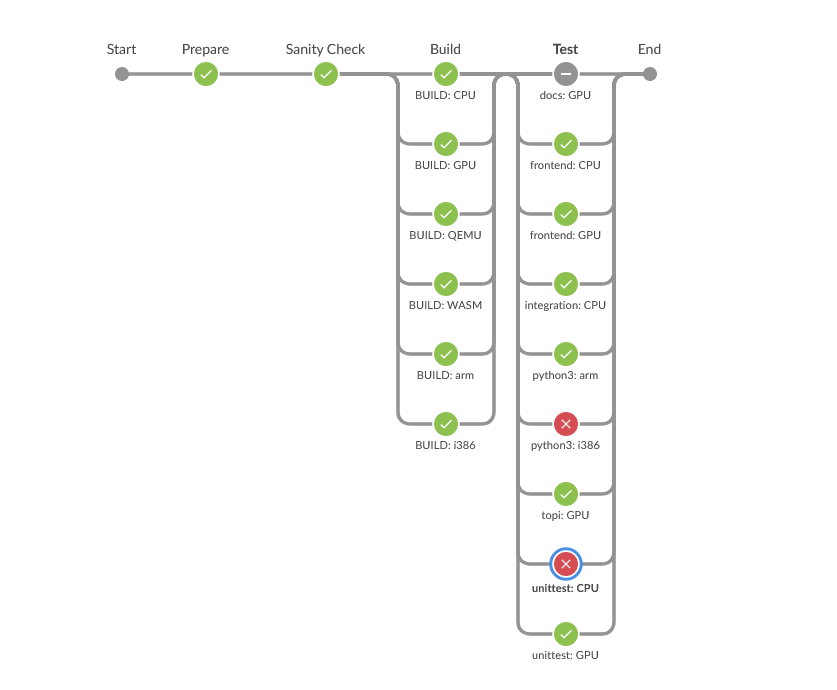

[GitHub] [tvm] masahi commented on pull request #10209: [BUG]fix batch matmul not set attrs_type_key, when using tvm.parse.parse_expr will raise error.

masahi commented on pull request #10209: URL: https://github.com/apache/tvm/pull/10209#issuecomment-1034548834 Seems you have hit an flaky error, please run another job (rebase and push, or empty commit) -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] yangulei commented on a change in pull request #10112: [TIR, Relay] improve bfloat16 support

yangulei commented on a change in pull request #10112:

URL: https://github.com/apache/tvm/pull/10112#discussion_r80719

##

File path: src/relay/op/nn/nn.cc

##

@@ -1177,7 +1177,8 @@ bool NLLLossRel(const Array& types, int num_inputs,

const Attrs& attrs,

<< ", weights shape = " <<

weights->shape);

return false;

}

- if (!(predictions->dtype == weights->dtype &&

predictions->dtype.is_float())) {

+ if (!(predictions->dtype == weights->dtype &&

+(predictions->dtype.is_float() || predictions->dtype.is_bfloat16( {

Review comment:

I prefer this way too, since they **are** all floating-point datatypes.

While there are some practice inconsistences so far, for example, if we let

`is_float() == true` for `bfloat16`, then we cannot distinguish `bfloat16` and

`float16` anymore as they both satisfy the condition `is_float() == true &&

bits() == 16`.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [tvm] yzh119 commented on pull request #10207: Support sub warp reduction for CUDA target.

yzh119 commented on pull request #10207:

URL: https://github.com/apache/tvm/pull/10207#issuecomment-1034535980

Sure, below is the measured time of the kernel:

```python

@T.prim_func

def reduce(a: T.handle, b: T.handle, n: T.int32) -> None:

A = T.match_buffer(a, [1048576, n])

B = T.match_buffer(b, [1048576])

for i, j in T.grid(1048576, n):

with T.block("reduce"):

vi, vj = T.axis.remap("SR", [i, j])

with T.init():

B[vi] = 0.

B[vi] = B[vi] + A[vi, vj]

```

and change n between 2,4,8,16,32.

| n | 2 | 4 | 8 | 16

| 32 |

|--||---||||

| shuffle-down time(ms) | 1.840511957804362 | 1.877586046854655 |

2.1820863087972007 | 2.2471348444620767 | 2.1001497904459634 |

| shared mem time(ms) | 1.7892122268676758 | 1.922925313313802 |

2.053538958231608 | 2.0630757013956704 | 2.1170775095621743 |

there are some variance across multiple runs.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [tvm] driazati commented on issue #10167: [Bug] Missing pytest during tvmc compile/tuning

driazati commented on issue #10167:

URL: https://github.com/apache/tvm/issues/10167#issuecomment-1034476313

Thanks for reporting! Can you share the script you used to generate this

error so we can reproduce it? This should be fixable since pytest isn't

actually used in that code path, so something like this

[here](https://github.com/apache/tvm/blob/main/python/tvm/testing/utils.py#L74)

would avoid the error and still give a nice message if pytest is actually used.

```python

class MissingModuleStub:

def __init__(self, name):

self.name = name

def __getattr__(self):

raise RuntimeError(f"Module '{self.name}' is not installed")

try:

import pytest

except ImportError:

pytest = MissingModuleStub("pytest")

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [tvm] MeJerry215 commented on pull request #10209: [BUG]fix batch matmul not set attrs_type_key, when using tvm.parse.parse_expr will raise error.

MeJerry215 commented on pull request #10209: URL: https://github.com/apache/tvm/pull/10209#issuecomment-1034475052 @yzhliu -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] MeJerry215 opened a new pull request #10209: [BUG]fix batch matmul not set attrs_type_key, when using tvm.parse.parse_expr will raise error.

MeJerry215 opened a new pull request #10209:

URL: https://github.com/apache/tvm/pull/10209

```

df_parsed = tvm.parser.parse_expr(

'''

fn (%p0527: Tensor[(16, 256, 256), float16], %p1361: Tensor[(16, 64,

256), float16]) -> Tensor[(16, 256, 64), float16] {

nn.batch_matmul(%p0527, %p1361, transpose_b=True) /* ty=Tensor[(16,

256, 64), float32] */

}

'''

)

```

the code above may fail, cause not set nn.batch_matmul attrs_type_key.

test_code:

```

def test_nn_batch_matmul():

df_parsed = tvm.parser.parse_expr(

'''

fn (%p0527: Tensor[(16, 256, 256), float16], %p1361: Tensor[(16, 64,

256), float16]) -> Tensor[(16, 256, 64), float16] {

nn.batch_matmul(%p0527, %p1361, transpose_b=True) /* ty=Tensor[(16,

256, 64), float32] */

}

''')

```

now the attr has been properly set.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [tvm] driazati opened a new pull request #10208: [ci] Check more events before pinging reviewers

driazati opened a new pull request #10208: URL: https://github.com/apache/tvm/pull/10208 This was missing some events before (reviews without comments, PR updated from a draft -> ready for review) so these were being ignored when finding the latest event. This PR adds them and restructures the code a bit to make it more clear what is happening for each PR. This addresses some of the issues from #9983 Thanks for contributing to TVM! Please refer to guideline https://tvm.apache.org/docs/contribute/ for useful information and tips. After the pull request is submitted, please request code reviews from [Reviewers](https://github.com/apache/incubator-tvm/blob/master/CONTRIBUTORS.md#reviewers) by @ them in the pull request thread. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] Hzfengsy commented on a change in pull request #10112: [TIR, Relay] improve bfloat16 support

Hzfengsy commented on a change in pull request #10112:

URL: https://github.com/apache/tvm/pull/10112#discussion_r803273135

##

File path: src/relay/op/nn/nn.cc

##

@@ -1177,7 +1177,8 @@ bool NLLLossRel(const Array& types, int num_inputs,

const Attrs& attrs,

<< ", weights shape = " <<

weights->shape);

return false;

}

- if (!(predictions->dtype == weights->dtype &&

predictions->dtype.is_float())) {

+ if (!(predictions->dtype == weights->dtype &&

+(predictions->dtype.is_float() || predictions->dtype.is_bfloat16( {

Review comment:

Shall we let `is_float()` be true for `bfloat16` exprs?

##

File path: src/relay/backend/utils.h

##

@@ -302,6 +302,8 @@ inline std::string DType2String(const tvm::DataType dtype) {

os << "int";

} else if (dtype.is_uint()) {

os << "uint";

+ } else if (dtype.is_bfloat16()) {

+os << "bfloat";

Review comment:

```suggestion

os << "bfloat16";

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [tvm] FranckQC edited a comment on pull request #9482: Implementation of Common Subexpression Elimination for TIR

FranckQC edited a comment on pull request #9482: URL: https://github.com/apache/tvm/pull/9482#issuecomment-1034443098 Sorry I forgot to answer to the other parts of your message @mbs-octoml . Many thanks for it by the way! > Thanks so much for the clear and complete comments, very much appreciate that. > Echo @Hzfengsy suggestion to switch to TVMScript for your tests -- perhaps just pretty printing what you already constructs would be a short cut. Yes I didn't know about TVMScript before. When writing the tests, I initially stared at some other test files and got inspiration from them. Unfortunately the ones I've been looking at might not have been the most up-to-date way of doing things, sorry for that! :-( I guess we can still improve the tests later on, but they still accomplish their main function for now, which is the most important I think, even though they are probably a little bit more verbose than they would be using TVMScript, where I could just directly write the expected TIR code instead of unfolding the TIR code that the CSE pass has produced. > The code duplication across PrimExpr & Stmnt in CommonSubexpressionElimintor is unfortunate but suspect removing that would only obscure things. Yes, I agree that this duplication is a little bit unfortunate. @masahi did pointed it out [here](https://github.com/apache/tvm/pull/9482#discussion_r790201064). I was also a little bit annoyed with it at the beginning. So I tried to factorize it out a few times, including an attempt described in my answer [here](https://github.com/apache/tvm/pull/9482#discussion_r797243979). But all my attempt ended up with something much too complicated for what we would gain. In fact, we just happen to want to do almost exactly the same treatment for an expression and for a statement from an algorithmic point of view, but from a data-type point of view, quite a things are still different type-wise. That's a pretty rare situation. In the end, I decided to not force things, and to leave it like that. And the positive point of having the VisitStmt() and VisitExpr() not factorized by some weird magic is that we can still easily customize the algorithm if at some point we need to, between expressions and statements (I can't think of any reason we would want that, but still! :) ) Many thanks again! -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] FranckQC edited a comment on pull request #9482: Implementation of Common Subexpression Elimination for TIR

FranckQC edited a comment on pull request #9482: URL: https://github.com/apache/tvm/pull/9482#issuecomment-1034443098 Sorry I forgot to answer to the other parts of your message @mbs-octoml . Many thanks for it by the way! > Thanks so much for the clear and complete comments, very much appreciate that. > Echo @Hzfengsy suggestion to switch to TVMScript for your tests -- perhaps just pretty printing what you already constructs would be a short cut. Yes I didn't know about TVMScript before. When writing the tests, I initially stared at some other test files and got inspiration from them. Unfortunately the ones I've been looking at might not have been the most up-to-date way of doing things, sorry for that! :-( I guess we can still improve the tests later on, but they still accomplish their main function for now, which is the most important I think, even though they are probably a little bit more verbose than they would be using TVMScript, where I could just directly write the expected TIR code instead of unfolding the TIR code that the CSE pass has produced. > The code duplication across PrimExpr & Stmnt in CommonSubexpressionElimintor is unfortunate but suspect removing that would only obscure things. Yes, I agree that this duplication is a little bit unfortunate. @masahi did pointed it out [here](https://github.com/apache/tvm/pull/9482#discussion_r790201064). I was also a little bit annoyed with it at the beginning. So I tried to factorize it out a few times, including an attempt described in my answer [here](https://github.com/apache/tvm/pull/9482#discussion_r797243979). But all my attempt ended up with something much too complicated for what we would gain. In fact, we just happen to want to do almost exactly the same treatment for Expr() and Stmt() from an algorithmic point of view, but from a data-type point of view, quite a things are still different type-wise. That's a pretty rare situation. In the end, I decided to not force things, and to leave it like that. And the positive point of having the VisitStmt() and VisitExpr() not factorized by some weird magic is that we can still easily customize the algorithm if at some point we need to, between expressions and statements (I can't think of any reason we would want that, but still! :) ) Many thanks again! -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] FranckQC commented on pull request #9482: Implementation of Common Subexpression Elimination for TIR

FranckQC commented on pull request #9482: URL: https://github.com/apache/tvm/pull/9482#issuecomment-1034443098 Sorry I forgot to answer to the other parts of your message @mbs-octoml . Many thanks for it by the way! > Thanks so much for the clear and complete comments, very much appreciate that. > Echo @Hzfengsy suggestion to switch to TVMScript for your tests -- perhaps just pretty printing what you already constructs would be a short cut. Yes I didn't know about TVMScript before. When writing the tests, I initially stared at some other test files and got inspiration from there. The ones I've been looking at might not have been the most up-to-date way of doing things, sorry for that! :-( I guess we can still improve the tests later on, but they still accomplish their main function for now, which is the most important I think, even though they are probably a little bit more verbose than they would be using TVMScript, where I could just directly write the expected TIR code instead of unfolding the TIR code that the CSE pass has produced. > The code duplication across PrimExpr & Stmnt in CommonSubexpressionElimintor is unfortunate but suspect removing that would only obscure things. Yes, I agree that this duplication is a little bit unfortunate. @masahi did pointed it out [here](https://github.com/apache/tvm/pull/9482#discussion_r790201064). I was also a little bit annoyed with it at the beginning. So I tried to factorize it out a few times, including an attempt described in my answer [here](https://github.com/apache/tvm/pull/9482#discussion_r797243979). But all my attempt ended up with something much too complicated for what we would gain. In fact, we just happen to want to do almost exactly the same treatment for Expr() and Stmt() from an algorithmic point of view, but from a data-type point of view, quite a things are still different type-wise. That's a pretty rare situation. In the end, I decided to not force things, and to leave it like that. And the positive point of having the VisitStmt() and VisitExpr() not factorized by some weird magic is that we can still easily customize the algorithm if at some point we need to, between expressions and statements (I can't think of any reason we would want that, but still! :) ) Many thanks again! -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] yangulei commented on a change in pull request #10112: [TIR, Relay] improve bfloat16 support

yangulei commented on a change in pull request #10112:

URL: https://github.com/apache/tvm/pull/10112#discussion_r803262987

##

File path: tests/python/relay/test_cpp_build_module.py

##

@@ -93,6 +93,35 @@ def test_fp16_build():

np.testing.assert_allclose(out.numpy(), X.numpy() + Y.numpy(), atol=1e-5,

rtol=1e-5)

+@tvm.testing.requires_llvm

+def test_bf16_build():

+data = relay.var("data", shape=(1, 3, 224, 224), dtype='float32')

+weight = relay.var("weight", shape=(64, 3, 7, 7), dtype='float32')

+bn_gamma = relay.var("gamma", shape=(64,), dtype='float32')

+bn_beta = relay.var("beta", shape=(64,), dtype='float32')

+bn_mean = relay.var("mean", shape=(64,), dtype='float32')

+bn_var = relay.var("var", shape=(64,), dtype='float32')

+params = {

+"weight": np.random.uniform(-1, 1, size=(64, 3, 7,

7)).astype('float32'),

+"gamma": np.random.uniform(-1, 1, size=(64, )).astype('float32'),

+"beta": np.random.uniform(-1, 1, size=(64, )).astype('float32'),

+"mean": np.random.uniform(-1, 1, size=(64, )).astype('float32'),

+"var": np.random.uniform(-1, 1, size=(64, )).astype('float32'),

+}

+conv_bf16 = relay.nn.conv2d(relay.cast(data, 'bfloat16'),

relay.cast(weight, 'bfloat16'),

+strides=(2, 2), padding=(3, 3, 3, 3),

channels=64, kernel_size=(7, 7), out_dtype='bfloat16')

+bn_bf16 = relay.nn.batch_norm(conv_bf16, relay.cast(bn_gamma, 'bfloat16'),

+ relay.cast(bn_beta, 'bfloat16'),

relay.cast(bn_mean, 'bfloat16'), relay.cast(bn_var, 'bfloat16'))

+relu_bf16 = relay.nn.relu(bn_bf16[0])

+maxpool_bf16 = relay.nn.max_pool2d(

+relu_bf16, pool_size=(2, 2), strides=(2, 2))

+avgpool_bf16 = relay.nn.avg_pool2d(

+maxpool_bf16, pool_size=(2, 2), strides=(2, 2))

+mod_bf16 = tvm.IRModule.from_expr(avgpool_bf16)

+with tvm.transform.PassContext(opt_level=3):

+relay.build(mod_bf16, target="llvm", params=params)

Review comment:

The errors I mentioned above are using FP32 results as reference, and

the bfloat16 results are casted to FP32 for comparisons.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [tvm] comaniac commented on a change in pull request #10112: [TIR, Relay] improve bfloat16 support

comaniac commented on a change in pull request #10112:

URL: https://github.com/apache/tvm/pull/10112#discussion_r803259544

##

File path: tests/python/relay/test_cpp_build_module.py

##

@@ -93,6 +93,35 @@ def test_fp16_build():

np.testing.assert_allclose(out.numpy(), X.numpy() + Y.numpy(), atol=1e-5,

rtol=1e-5)

+@tvm.testing.requires_llvm

+def test_bf16_build():

+data = relay.var("data", shape=(1, 3, 224, 224), dtype='float32')

+weight = relay.var("weight", shape=(64, 3, 7, 7), dtype='float32')

+bn_gamma = relay.var("gamma", shape=(64,), dtype='float32')

+bn_beta = relay.var("beta", shape=(64,), dtype='float32')

+bn_mean = relay.var("mean", shape=(64,), dtype='float32')

+bn_var = relay.var("var", shape=(64,), dtype='float32')

+params = {

+"weight": np.random.uniform(-1, 1, size=(64, 3, 7,

7)).astype('float32'),

+"gamma": np.random.uniform(-1, 1, size=(64, )).astype('float32'),

+"beta": np.random.uniform(-1, 1, size=(64, )).astype('float32'),

+"mean": np.random.uniform(-1, 1, size=(64, )).astype('float32'),

+"var": np.random.uniform(-1, 1, size=(64, )).astype('float32'),

+}

+conv_bf16 = relay.nn.conv2d(relay.cast(data, 'bfloat16'),

relay.cast(weight, 'bfloat16'),

+strides=(2, 2), padding=(3, 3, 3, 3),

channels=64, kernel_size=(7, 7), out_dtype='bfloat16')

+bn_bf16 = relay.nn.batch_norm(conv_bf16, relay.cast(bn_gamma, 'bfloat16'),

+ relay.cast(bn_beta, 'bfloat16'),

relay.cast(bn_mean, 'bfloat16'), relay.cast(bn_var, 'bfloat16'))

+relu_bf16 = relay.nn.relu(bn_bf16[0])

+maxpool_bf16 = relay.nn.max_pool2d(

+relu_bf16, pool_size=(2, 2), strides=(2, 2))

+avgpool_bf16 = relay.nn.avg_pool2d(

+maxpool_bf16, pool_size=(2, 2), strides=(2, 2))

+mod_bf16 = tvm.IRModule.from_expr(avgpool_bf16)

+with tvm.transform.PassContext(opt_level=3):

+relay.build(mod_bf16, target="llvm", params=params)

Review comment:

I see. Does that make sense if we calculate the a reference result using

FP32 and cast it to bfloat16 for comparison? This is the only way I could think

of, so I have no clue if that doesn't make sense.

@masahi @AndrewZhaoLuo do you have comments?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [tvm] yangulei commented on a change in pull request #10112: [TIR, Relay] improve bfloat16 support

yangulei commented on a change in pull request #10112:

URL: https://github.com/apache/tvm/pull/10112#discussion_r803256748

##

File path: tests/python/relay/test_cpp_build_module.py

##

@@ -93,6 +93,35 @@ def test_fp16_build():

np.testing.assert_allclose(out.numpy(), X.numpy() + Y.numpy(), atol=1e-5,

rtol=1e-5)

+@tvm.testing.requires_llvm

+def test_bf16_build():

+data = relay.var("data", shape=(1, 3, 224, 224), dtype='float32')

+weight = relay.var("weight", shape=(64, 3, 7, 7), dtype='float32')

+bn_gamma = relay.var("gamma", shape=(64,), dtype='float32')

+bn_beta = relay.var("beta", shape=(64,), dtype='float32')

+bn_mean = relay.var("mean", shape=(64,), dtype='float32')

+bn_var = relay.var("var", shape=(64,), dtype='float32')

+params = {

+"weight": np.random.uniform(-1, 1, size=(64, 3, 7,

7)).astype('float32'),

+"gamma": np.random.uniform(-1, 1, size=(64, )).astype('float32'),

+"beta": np.random.uniform(-1, 1, size=(64, )).astype('float32'),

+"mean": np.random.uniform(-1, 1, size=(64, )).astype('float32'),

+"var": np.random.uniform(-1, 1, size=(64, )).astype('float32'),

+}

+conv_bf16 = relay.nn.conv2d(relay.cast(data, 'bfloat16'),

relay.cast(weight, 'bfloat16'),

+strides=(2, 2), padding=(3, 3, 3, 3),

channels=64, kernel_size=(7, 7), out_dtype='bfloat16')

+bn_bf16 = relay.nn.batch_norm(conv_bf16, relay.cast(bn_gamma, 'bfloat16'),

+ relay.cast(bn_beta, 'bfloat16'),

relay.cast(bn_mean, 'bfloat16'), relay.cast(bn_var, 'bfloat16'))

+relu_bf16 = relay.nn.relu(bn_bf16[0])

+maxpool_bf16 = relay.nn.max_pool2d(

+relu_bf16, pool_size=(2, 2), strides=(2, 2))

+avgpool_bf16 = relay.nn.avg_pool2d(

+maxpool_bf16, pool_size=(2, 2), strides=(2, 2))

+mod_bf16 = tvm.IRModule.from_expr(avgpool_bf16)

+with tvm.transform.PassContext(opt_level=3):

+relay.build(mod_bf16, target="llvm", params=params)

Review comment:

Good point.

The correctness of simple bfloat16 `adding` has been checked at

https://github.com/apache/tvm/blob/14d0187ce9cefc41e33aa30b55c08a75a6711732/tests/python/unittest/test_target_codegen_llvm.py#L739

While, verifying the correctness of bfloat16 inference is much more complex

than fist thought.

We usually use relative error and absolute error to evaluate the accuracy,

but both of them could be large for a simple `multiply` with random inputs.

On the other hand, the MSE of the outputs (array with len=1000) of native

bfloat16 inference for ResNet\<18/34/50/101/152\>_v1b and VGG\<11/13/16/19\>

are about 0.5% to 1%, and less than 0.2% for BYOC-oneDNN inference. Also the

**_envelope curve_** of the bfloat16 and float32 outputs are close.

I couldn't find any metrics good enough to estimate the accuracy of bfloat16

inference. Any suggestions for this?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [tvm] Hzfengsy commented on pull request #10207: Support sub warp reduction for CUDA target.

Hzfengsy commented on pull request #10207: URL: https://github.com/apache/tvm/pull/10207#issuecomment-103443 Do you have any performance results? Also please add testcases -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] comaniac commented on a change in pull request #10112: [TIR, Relay] improve bfloat16 support

comaniac commented on a change in pull request #10112:

URL: https://github.com/apache/tvm/pull/10112#discussion_r803238389

##

File path: tests/python/relay/test_cpp_build_module.py

##

@@ -93,6 +93,35 @@ def test_fp16_build():

np.testing.assert_allclose(out.numpy(), X.numpy() + Y.numpy(), atol=1e-5,

rtol=1e-5)

+@tvm.testing.requires_llvm

+def test_bf16_build():

+data = relay.var("data", shape=(1, 3, 224, 224), dtype='float32')

+weight = relay.var("weight", shape=(64, 3, 7, 7), dtype='float32')

+bn_gamma = relay.var("gamma", shape=(64,), dtype='float32')

+bn_beta = relay.var("beta", shape=(64,), dtype='float32')

+bn_mean = relay.var("mean", shape=(64,), dtype='float32')

+bn_var = relay.var("var", shape=(64,), dtype='float32')

+params = {

+"weight": np.random.uniform(-1, 1, size=(64, 3, 7,

7)).astype('float32'),

+"gamma": np.random.uniform(-1, 1, size=(64, )).astype('float32'),

+"beta": np.random.uniform(-1, 1, size=(64, )).astype('float32'),

+"mean": np.random.uniform(-1, 1, size=(64, )).astype('float32'),

+"var": np.random.uniform(-1, 1, size=(64, )).astype('float32'),

+}

+conv_bf16 = relay.nn.conv2d(relay.cast(data, 'bfloat16'),

relay.cast(weight, 'bfloat16'),

+strides=(2, 2), padding=(3, 3, 3, 3),

channels=64, kernel_size=(7, 7), out_dtype='bfloat16')

+bn_bf16 = relay.nn.batch_norm(conv_bf16, relay.cast(bn_gamma, 'bfloat16'),

+ relay.cast(bn_beta, 'bfloat16'),

relay.cast(bn_mean, 'bfloat16'), relay.cast(bn_var, 'bfloat16'))

+relu_bf16 = relay.nn.relu(bn_bf16[0])

+maxpool_bf16 = relay.nn.max_pool2d(

+relu_bf16, pool_size=(2, 2), strides=(2, 2))

+avgpool_bf16 = relay.nn.avg_pool2d(

+maxpool_bf16, pool_size=(2, 2), strides=(2, 2))

+mod_bf16 = tvm.IRModule.from_expr(avgpool_bf16)

+with tvm.transform.PassContext(opt_level=3):

+relay.build(mod_bf16, target="llvm", params=params)

Review comment:

Could we also verify the output correctness?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [tvm] comaniac commented on a change in pull request #10179: [LIBXSMM] Add libxsmm to tvm ci

comaniac commented on a change in pull request #10179: URL: https://github.com/apache/tvm/pull/10179#discussion_r803227205 ## File path: docker/install/ubuntu_install_libxsmm.sh ## @@ -0,0 +1,32 @@ +#!/bin/bash +# Licensed to the Apache Software Foundation (ASF) under one +# or more contributor license agreements. See the NOTICE file +# distributed with this work for additional information +# regarding copyright ownership. The ASF licenses this file +# to you under the Apache License, Version 2.0 (the +# "License"); you may not use this file except in compliance +# with the License. You may obtain a copy of the License at +# +# http://www.apache.org/licenses/LICENSE-2.0 +# +# Unless required by applicable law or agreed to in writing, +# software distributed under the License is distributed on an +# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY +# KIND, either express or implied. See the License for the +# specific language governing permissions and limitations +# under the License. + +set -e +set -u +set -o pipefail + +pushd /usr/local/ +wget -q https://github.com/libxsmm/libxsmm/archive/refs/tags/1.17.tar.gz +tar -xzf 1.17.tar.gz +pushd ./libxsmm-1.17/ +make STATIC=0 -j10 Review comment: better to config the thread number in a more flexible way. ```suggestion make STATIC=0 -j$(($(nproc) - 1)) ``` -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[tvm] branch main updated (3b2780a -> 9f7f4c6)

This is an automated email from the ASF dual-hosted git repository. masahi pushed a change to branch main in repository https://gitbox.apache.org/repos/asf/tvm.git. from 3b2780a [microTVM][Tutorials] Add tutorials to run on ci_qemu (#10154) add 9f7f4c6 Disable tensorflow v2 behavior in all unit tests (#10204) No new revisions were added by this update. Summary of changes: tests/python/frontend/tensorflow/test_bn_dynamic.py | 2 ++ tests/python/frontend/tensorflow/test_forward.py| 2 ++ tests/python/frontend/tensorflow/test_no_op.py | 2 ++ 3 files changed, 6 insertions(+)

[GitHub] [tvm] masahi merged pull request #10204: Disable tensorflow v2 behavior in all unit tests

masahi merged pull request #10204: URL: https://github.com/apache/tvm/pull/10204 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] yangulei commented on pull request #9996: [TIR] add support for multi-blocking layout and their transformation

yangulei commented on pull request #9996: URL: https://github.com/apache/tvm/pull/9996#issuecomment-1034370222 Yes, the transformation from "NK" to "NK16n4k" is well supported, even without this PR. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] masahi commented on pull request #9996: [TIR] add support for multi-blocking layout and their transformation

masahi commented on pull request #9996: URL: https://github.com/apache/tvm/pull/9996#issuecomment-1034366873 Yeah I think that's possible. If I understand correctly, the point is to have 16 x 4 loop in the inner-most axis, so something like `(n // 16, k // 4, 16, 4)` should be ok as well. Does this answer your question? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] yzh119 opened a new pull request #10207: Support sub warp reduction for CUDA target.

yzh119 opened a new pull request #10207:

URL: https://github.com/apache/tvm/pull/10207

Previously the `LowerThreadAllReduce` pass will only emit code that uses

`shfl_down` when reduce extent equals warp size, when reduce extent is less

than warp size, the codegen fall back to emit code that uses shared memory,

which is not efficient. Consider CUDA supports sub warp reduction by specifying

the mask, we can still use shuffle-down approach for reduction by changing the

mask.

Example code:

```python

import tvm

import numpy as np

from tvm.script import tir as T

@T.prim_func

def reduce(a: T.handle, b: T.handle) -> None:

A = T.match_buffer(a, [1024, 11])

B = T.match_buffer(b, [1024])

for i, j in T.grid(1024, 11):

with T.block("reduce"):

vi, vj = T.axis.remap("SR", [i, j])

with T.init():

B[vi] = 0.

B[vi] = B[vi] + A[vi, vj]

sch = tvm.tir.Schedule(reduce)

blk = sch.get_block("reduce")

i, j = sch.get_loops(blk)

sch.bind(i, "blockIdx.x")

sch.bind(j, "threadIdx.x")

f = tvm.build(sch.mod["main"], target="cuda")

print(f.imported_modules[0].get_source())

```

Emitted code before this PR:

```cuda

extern "C" __global__ void __launch_bounds__(11)

default_function_kernel0(float* __restrict__ A, float* __restrict__ B) {

__shared__ float red_buf0[11];

__syncthreads();

((volatile float*)red_buf0)[(((int)threadIdx.x))] = A[(int)blockIdx.x)

* 11) + ((int)threadIdx.x)))];

__syncthreads();

if (((int)threadIdx.x) < 3) {

((volatile float*)red_buf0)[(((int)threadIdx.x))] = (((volatile

float*)red_buf0)[(((int)threadIdx.x))] + ((volatile

float*)red_buf0)[int)threadIdx.x) + 8))]);

}

__syncthreads();

if (((int)threadIdx.x) < 4) {

float w_4_0 = (((volatile float*)red_buf0)[(((int)threadIdx.x))] +

((volatile float*)red_buf0)[int)threadIdx.x) + 4))]);

((volatile float*)red_buf0)[(((int)threadIdx.x))] = w_4_0;

float w_2_0 = (((volatile float*)red_buf0)[(((int)threadIdx.x))] +

((volatile float*)red_buf0)[int)threadIdx.x) + 2))]);

((volatile float*)red_buf0)[(((int)threadIdx.x))] = w_2_0;

float w_1_0 = (((volatile float*)red_buf0)[(((int)threadIdx.x))] +

((volatile float*)red_buf0)[int)threadIdx.x) + 1))]);

((volatile float*)red_buf0)[(((int)threadIdx.x))] = w_1_0;

}

__syncthreads();

B[(((int)blockIdx.x))] = ((volatile float*)red_buf0)[(0)];

}

```

Emitted code after this PR:

```cuda

extern "C" __global__ void __launch_bounds__(11)

default_function_kernel0(float* __restrict__ A, float* __restrict__ B) {

float red_buf0[1];

uint mask[1];

float t0[1];

red_buf0[(0)] = A[(int)blockIdx.x) * 11) + ((int)threadIdx.x)))];

mask[(0)] = (__activemask() & (uint)2047);

t0[(0)] = __shfl_down_sync(mask[(0)], red_buf0[(0)], 8, 32);

red_buf0[(0)] = (red_buf0[(0)] + t0[(0)]);

t0[(0)] = __shfl_down_sync(mask[(0)], red_buf0[(0)], 4, 32);

red_buf0[(0)] = (red_buf0[(0)] + t0[(0)]);

t0[(0)] = __shfl_down_sync(mask[(0)], red_buf0[(0)], 2, 32);

red_buf0[(0)] = (red_buf0[(0)] + t0[(0)]);

t0[(0)] = __shfl_down_sync(mask[(0)], red_buf0[(0)], 1, 32);

red_buf0[(0)] = (red_buf0[(0)] + t0[(0)]);

red_buf0[(0)] = __shfl_sync(mask[(0)], red_buf0[(0)], 0, 32);

B[(((int)blockIdx.x))] = red_buf0[(0)];

}

```

# Future work

CUDA 11 supports [cooperative group

reduction](https://developer.nvidia.com/blog/cuda-11-features-revealed/) which

we can directly use.

cc @vinx13 @junrushao1994

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [tvm] yangulei commented on pull request #9996: [TIR] add support for multi-blocking layout and their transformation

yangulei commented on pull request #9996: URL: https://github.com/apache/tvm/pull/9996#issuecomment-1034364727 @masahi The workaround we talked about has been removed in https://github.com/apache/tvm/pull/9996/commits/1c7ef996d9d9c3fe72d717eb847d6dc25c44366d. The transformation you mentioned doesn't seems like _blocking_ only. Could this test written in a way including the transformation like "NK" to "NK16n4k"? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] FranckQC edited a comment on pull request #9482: Implementation of Common Subexpression Elimination for TIR

FranckQC edited a comment on pull request #9482: URL: https://github.com/apache/tvm/pull/9482#issuecomment-1034315546 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] alanmacd opened a new issue #10206: [microTVM][zephyr] Add support for nRF5340 DK v2

alanmacd opened a new issue #10206: URL: https://github.com/apache/tvm/issues/10206 There are two versions of the nRF5340 development kit, 1.0.0 and 2.0.0. The 1.0.0 DK exposes three COM ports, where COM2 is used to communicate with the device while running tests. The 2.0.0 DK only has two COM ports as can be seen here: ``` $ nrfjprog --com 1050063518/dev/ttyACM0VCOM0 1050063518/dev/ttyACM1VCOM1 ``` [microtvm_api_server.py always uses VCOM2](https://github.com/apache/tvm/blob/3b2780a4141b5654439c43dfc7c1409f9cb1858c/apps/microtvm/zephyr/template_project/microtvm_api_server.py#L628) which doesn't exist on the 2.0.0 DK. To accommodate this, the USB product ID (PID) can be used to differentiate between the 1.0.0 and 2.0.0 DKs. The PID for 1.0.0 is 1055, while the PID for the 2.0.0 DK is reported as 1051 via lsusb: ``` $ lsusb Bus 005 Device 006: ID 1366:1051 SEGGER J-Link ``` Here are more details from Nordic Semiconductor's site: [nRF5340 DK v2.0.0 COM ports](https://developer.nordicsemi.com/nRF_Connect_SDK/doc/latest/nrf/ug_nrf5340.html#nrf5340-dk-v2-0-0-com-ports) When connected to a computer, the nRF5340 DK v2.0.0 emulates two virtual COM ports. In the default configuration, they are set up as follows: - The first COM port outputs the log from the network core (if available). - The second COM port outputs the log from the application core. [nRF5340 DK v1.0.0 COM ports](https://developer.nordicsemi.com/nRF_Connect_SDK/doc/latest/nrf/ug_nrf5340.html#nrf5340-dk-v1-0-0-com-ports) When connected to a computer, the nRF5340 DK v1.0.0 emulates three virtual COM ports. In the default configuration, they are set up as follows: - The first COM port outputs the log from the network core (if available). - The second (middle) COM port is routed to the P24 connector of the nRF5340 DK. - The third (last) COM port outputs the log from the application core. ### Expected behavior Tests pass while successfully connection to device via VCOM2 ### Actual behavior Tests fail due to inability to connect to device as VCOM2 does not exits. ### Environment Linux Ubuntu 20.04 with nRF5340 v2.0.0 development kit ### Steps to reproduce `pytest test_zephyr.py --zephyr-board=nrf5340dk_nrf5340_cpuapp` -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

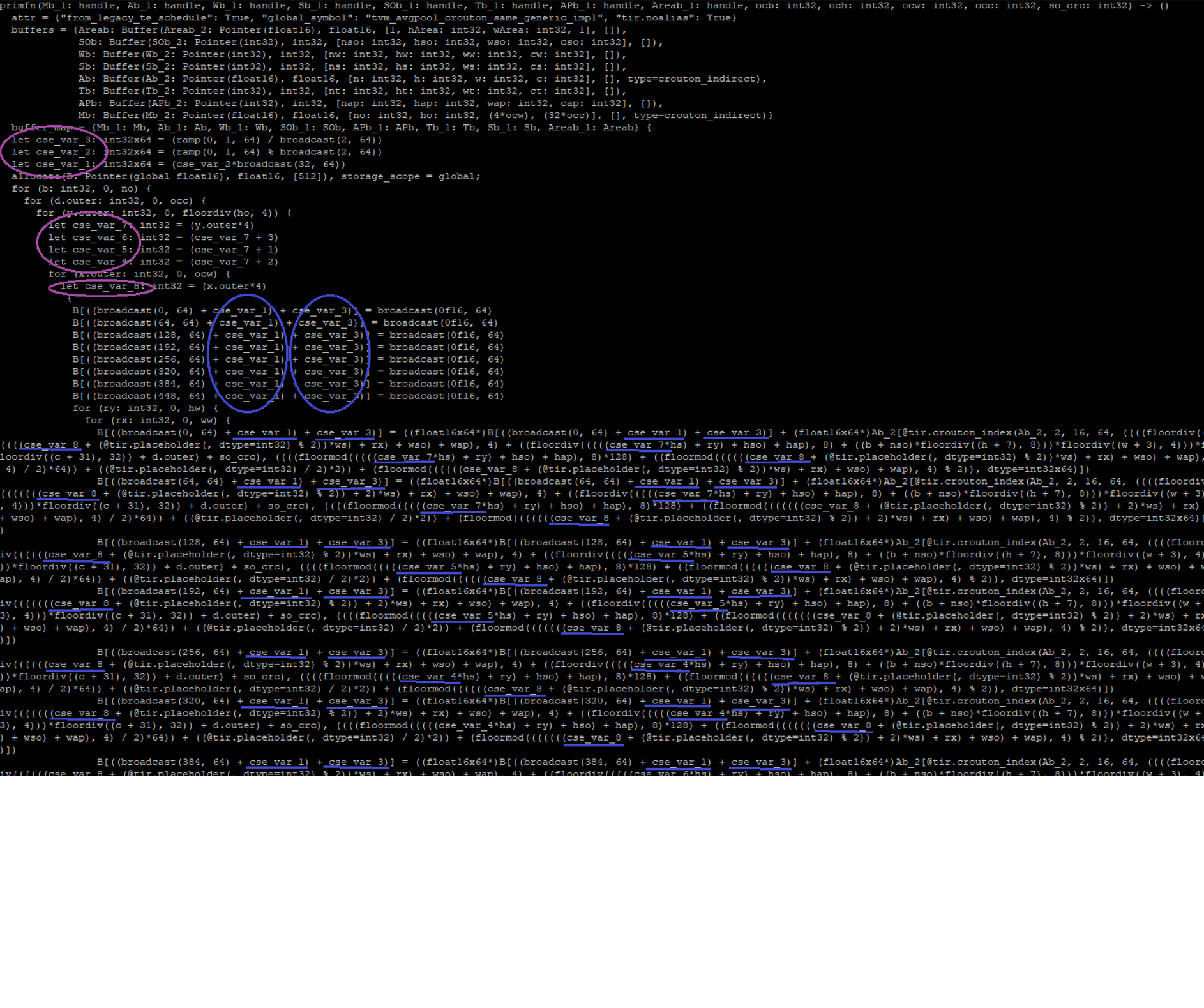

[GitHub] [tvm] FranckQC commented on pull request #9482: Implementation of Common Subexpression Elimination for TIR

FranckQC commented on pull request #9482: URL: https://github.com/apache/tvm/pull/9482#issuecomment-1034315546 > Thanks so much for the clear and complete comments, very much appreciate that. > > Echo @Hzfengsy suggestion to switch to TVMScript for your tests -- perhaps just pretty printing what you already constructs would be a short cut. > > The code duplication across PrimExpr & Stmnt in CommonSubexpressionElimintor is unfortunate but suspect removing that would only obscure things. > > In your experience is this mostly firing on the affine index sub-expressions, or do you see cse over actual data sub-expressions? Thank you so much for the compliment, I really appreciate it. It makes me happy to know that the code is easy to read! If I recall well I saw quite a lot of indices (mostly from loop unrolling), just like what @wrongtest had here https://github.com/apache/tvm/pull/9482#issuecomment-972463175. Also some indices due to lowering of memory accesses, for instance: C[x,y] = C[x,y] + A[x,k] * B[y,k] which can lowered (2D to 1D) to: C[x*128+y] = C[x*128+y] + A[x*128+k]*B[y*128+k] which gives the opportunity to create first: cse_var_1 = x*128+y and then in cascade: cse_var_2 = x*128 And I also recall a lot of random commoning, like:  I'll post more if I can find my notes where I had more interesting commonings performed in test files. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm-rfcs] vinx13 commented on a change in pull request #39: [RFC][TIR] Layout transformations on buffer access

vinx13 commented on a change in pull request #39: URL: https://github.com/apache/tvm-rfcs/pull/39#discussion_r803178694 ## File path: rfcs/0039-buffer-physical-layout.md ## @@ -0,0 +1,784 @@ +- Feature Name: Buffer Physical Layout +- Authors: Eric Lunderberg (@Lunderberg), Wuwei Lin (@vinx13) +- Start Date: 2021-10-05 +- RFC PR: [apache/tvm-rfcs#0039](https://github.com/apache/tvm-rfcs/pull/0039) +- GitHub Issue: Not Yet Written + +# Summary +[summary]: #summary + +This RFC introduces layout transformations that can be applied to a +buffer during the lowering process. These transformations will be +part of the schedule, allowing the same compute definition to be used +across multiple different layouts. These transformations can produce +either flat memory buffers or multi-dimensional memory buffers to be +exposed to the low-level code generators. + +# Motivation +[motivation]: #motivation + +Currently, TVM assumes that all buffers can be treated as flat memory. +That is, while a rank-N tensor requires N values to describe its shape +and N indices to identify a particular value within it, the underlying +buffer allocated by the low-level codegen has a single value defining +the size, and access into that buffer is done using a single index. +This assumptions holds for most cases, such as a CPU accessing RAM, +but doesn't hold in all cases. For example, texture memory on a GPU +requires two indices to access. These are currently handled on a +case-by-case basis, such as using `tvm::tir::builtin::texture2d_store` +in a `CallNode`. + +In addition, computations that are semantically identical (e.g. 2-d +convolution) require independent compute definitions and schedules +(e.g. `conv2d_nchw` and `conv2d_hwcn`) based on the format of the data +accepted as input. + +This RFC introduces a mechanism to specify transformations to be +applied to the layout of buffers in memory, along with a unified +method of presenting multiple indices to the low-level code +generators. This will allow for target-specific handling of non-flat +memory, and will allow for code re-use across compute definitions that +differ only in memory layout. + +# Guide-level explanation +[guide-level-explanation]: #guide-level-explanation + +A buffer is represented by a `tvm::tir::Buffer` object, and has some +shape associated with it. This shape is initially defined from the +buffer's shape in the compute definition. Buffers can either be +allocated within a `tvm::tir::PrimFunc` using a `tvm::tir::Allocate` +node, or can be passed in as parameters to a `PrimFunc`. Buffer +access is done using `tvm::tir::BufferLoad` and +`tvm::tir::BufferStore` for reads and writes, respectively. + +When a TIR graph is passed into the low-level code generator +`tvm::codegen::Build`, the rank of each buffer must be supported by +the target code generator. Typically, this will mean generating a +single index representing access into flat memory. Some code +generators may attach alternative semantics for `rank>1` +buffers (e.g. rank-2 buffers to represent texture memory on OpenCL). +A low-level code generator should check the rank of the buffers it is +acting on, and give a diagnostic error for unsupported rank. + +To define the layout transformation in a TE schedule, use the +`transform_layout` method of a schedule, as shown below. The +arguments to `transform_layout` is a function that accepts a list of +`tvm.tir.Var` representing a logical index, and outputs a list of +`tvm.tir.PrimExpr` giving a corresponding physical index. If +`transform_layout` isn't called, then no additional layout +transformations are applied. + +For example, below defines the reordering from NHWC logical layout to +NCHWc physical layout. + +```python +# Compute definition, written in terms of NHWC logical axes +B = te.compute(A.shape, lambda n,h,w,c: A[n,h,w,c]) +s = te.create_schedule(B.op) + +def nhwc_to_nchwc(logical_axes): +n,h,w,c = logical_axes +return [n, c//4, h, w, c%4] + +B_nchwc = s[B].transform_layout(nhwc_to_nchwc) + +# Compute definition that would produce an equivalent physical layout +B_equivalent = te.compute( +[A.shape[0], A.shape[3]//4, A.shape[1], A.shape[2], 4], +lambda n, c_outer, h, w, c_inner: A[n, h, w, 4*c_outer+c_inner], +) +``` + +By default, after all explicitly specified layout transformations are +applied, all axes are flattened to a single axis by following a +row-major traversal. This produces a 1-d buffer, which corresponds to +flat memory. To produce `rank>1` buffers in the physical layout, +insert `te.AXIS_SEPARATOR` into the axis list return by the physical +layout function. These define groups of axes, where each group is +combined into a single physical axis. + +```python +B = te.compute(shape=(M,N,P,Q), ...) +s = te.create_schedule(B.op) + +# Default, produces a 1-d allocation with shape (M*N*P*Q,) +s[B].transform_layout(lambda m,n,p,q: [m,n,p,q]) + +# One separator, produces a 2-d allocation with sha

[GitHub] [tvm] FranckQC commented on pull request #9482: Implementation of Common Subexpression Elimination for TIR

FranckQC commented on pull request #9482: URL: https://github.com/apache/tvm/pull/9482#issuecomment-1034303962 > > Overall looks good to me and we should merge as is, @masahi do you mind adding some follow up issues to track the TODOs generated during review? > > Sure! In addition to [#9482 (comment)](https://github.com/apache/tvm/pull/9482#discussion_r790200526), I think we can refactor our `Substitute` function to be a special case of the subsutiuter with a predicate added in this PR. I would be very happy to look into that as soon as this is merged. Hopefully the current run of the CI should be the last one! -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] mehrdadh commented on pull request #10205: [Hexagon] Follow up fixes on PR #9631

mehrdadh commented on pull request #10205: URL: https://github.com/apache/tvm/pull/10205#issuecomment-1034281564 cc @kparzysz-quic -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] mehrdadh opened a new pull request #10205: [Hexagon] Follow up fixes on PR #9631

mehrdadh opened a new pull request #10205: URL: https://github.com/apache/tvm/pull/10205 Follow up fixes on hexagon RPC server. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[tvm] branch main updated (5e4e239 -> 3b2780a)

This is an automated email from the ASF dual-hosted git repository. areusch pushed a change to branch main in repository https://gitbox.apache.org/repos/asf/tvm.git. from 5e4e239 [QNN] Lookup operations for hard to implement operators (#10053) add 3b2780a [microTVM][Tutorials] Add tutorials to run on ci_qemu (#10154) No new revisions were added by this update. Summary of changes: .../how_to/work_with_microtvm/micro_autotune.py| 125 - tests/python/unittest/test_crt.py | 2 +- tests/scripts/task_python_microtvm.sh | 10 ++ 3 files changed, 82 insertions(+), 55 deletions(-)

[GitHub] [tvm] areusch merged pull request #10154: [microTVM][Tutorials] Add tutorials to run on ci_qemu

areusch merged pull request #10154: URL: https://github.com/apache/tvm/pull/10154 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] masahi commented on pull request #9482: Implementation of Common Subexpression Elimination for TIR

masahi commented on pull request #9482: URL: https://github.com/apache/tvm/pull/9482#issuecomment-1034251858 > Overall looks good to me and we should merge as is, @masahi do you mind adding some follow up issues to track the TODOs generated during review? Sure! In addition to https://github.com/apache/tvm/pull/9482#discussion_r790200526, I think we can refactor our `Substitute` function to be a special case of the subsutiuter with a predicate added in this PR. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] driazati closed pull request #10197: [ci] Invoke tensorflow tests individually

driazati closed pull request #10197: URL: https://github.com/apache/tvm/pull/10197 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[tvm] branch node-info-test created (now ea764b3)

This is an automated email from the ASF dual-hosted git repository. mousius pushed a change to branch node-info-test in repository https://gitbox.apache.org/repos/asf/tvm.git. at ea764b3 address comments No new revisions were added by this update.

[GitHub] [tvm] masahi commented on pull request #9482: Implementation of Common Subexpression Elimination for TIR

masahi commented on pull request #9482: URL: https://github.com/apache/tvm/pull/9482#issuecomment-1034147763 Please have a look again @Hzfengsy @wrongtest I'm merging this this week unless there are other comments @tqchen @junrushao1994 @vinx13 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] driazati opened a new pull request #10204: Disable tensorflow v2 behavior in all unit tests

driazati opened a new pull request #10204: URL: https://github.com/apache/tvm/pull/10204 This should resolve the issue posted here https://discuss.tvm.apache.org/t/tensorflow-2-0-test-failures-while-running-the-tensor-flow-frontend-test-forward-py-function/11322 based on [this comment](https://discuss.tvm.apache.org/t/tensorflow-2-0-test-failures-while-running-the-tensor-flow-frontend-test-forward-py-function/11322/3?u=driazati). Thanks for contributing to TVM! Please refer to guideline https://tvm.apache.org/docs/contribute/ for useful information and tips. After the pull request is submitted, please request code reviews from [Reviewers](https://github.com/apache/incubator-tvm/blob/master/CONTRIBUTORS.md#reviewers) by @ them in the pull request thread. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] driazati commented on pull request #10056: [ci] Add auto-updating `last-successful` branch

driazati commented on pull request #10056: URL: https://github.com/apache/tvm/pull/10056#issuecomment-1034131918 Sorry about the overactive bot everyone, it's still missing some checks to look at before sending out these pings -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] github-actions[bot] commented on pull request #10057: Add @slow decorator to run tests on `main`

github-actions[bot] commented on pull request #10057: URL: https://github.com/apache/tvm/pull/10057#issuecomment-1034131159 It has been a while since this PR was updated, @Mousius @driazati @areusch please leave a review or address the outstanding comments -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] github-actions[bot] commented on pull request #9931: Add action to label mergeable PRs

github-actions[bot] commented on pull request #9931: URL: https://github.com/apache/tvm/pull/9931#issuecomment-1034131180 It has been a while since this PR was updated, @areusch please leave a review or address the outstanding comments -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] github-actions[bot] commented on pull request #10056: [ci] Add auto-updating `last-successful` branch

github-actions[bot] commented on pull request #10056: URL: https://github.com/apache/tvm/pull/10056#issuecomment-1034131149 It has been a while since this PR was updated, @leandron @mbrookhart @Mousius @AndrewZhaoLuo @driazati @areusch please leave a review or address the outstanding comments -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] Lunderberg commented on pull request #9727: [TE][TIR] Implement layout transformations, non-flat memory buffers

Lunderberg commented on pull request #9727: URL: https://github.com/apache/tvm/pull/9727#issuecomment-1034114792 Rebased onto main, resolving merge conflict. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[tvm] branch main updated (1571112 -> 5e4e239)

This is an automated email from the ASF dual-hosted git repository. andrewzhaoluo pushed a change to branch main in repository https://gitbox.apache.org/repos/asf/tvm.git. from 1571112 [USMP] Register hill climb algorithm (#10182) add 5e4e239 [QNN] Lookup operations for hard to implement operators (#10053) No new revisions were added by this update. Summary of changes: python/tvm/relay/qnn/op/__init__.py| 4 +- python/tvm/relay/qnn/op/canonicalizations.py | 160 ++ python/tvm/relay/qnn/op/legalizations.py | 20 +- python/tvm/relay/qnn/op/op.py | 25 ++- .../transform/fake_quantization_to_integer.py | 4 + src/relay/op/tensor/transform.cc | 3 +- src/relay/qnn/op/rsqrt.cc | 42 +--- tests/python/relay/qnn/test_canonicalizations.py | 231 + tests/python/relay/test_op_level3.py | 9 +- tests/python/relay/test_op_qnn_rsqrt.py| 4 +- .../test_pass_fake_quantization_to_integer.py | 11 +- tests/python/topi/python/test_topi_transform.py| 17 +- 12 files changed, 461 insertions(+), 69 deletions(-) create mode 100644 python/tvm/relay/qnn/op/canonicalizations.py create mode 100644 tests/python/relay/qnn/test_canonicalizations.py

[GitHub] [tvm] AndrewZhaoLuo merged pull request #10053: [QNN] Lookup operations for hard to implement operators

AndrewZhaoLuo merged pull request #10053: URL: https://github.com/apache/tvm/pull/10053 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] driazati commented on a change in pull request #10195: [ci] Add more details when showing node info

driazati commented on a change in pull request #10195:

URL: https://github.com/apache/tvm/pull/10195#discussion_r802971996

##

File path: Jenkinsfile

##

@@ -93,10 +93,8 @@ def per_exec_ws(folder) {

def init_git() {

// Add more info about job node

sh (

-script: """

- echo "INFO: NODE_NAME=${NODE_NAME} EXECUTOR_NUMBER=${EXECUTOR_NUMBER}"

- """,

- label: 'Show executor node info',

+script: './task/scripts/task_show_node_info.sh',

Review comment:

definitely agree on the re-org, I'll file a follow up to move everything

to a new structure in one go

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[tvm] branch main updated (69403f1 -> 1571112)

This is an automated email from the ASF dual-hosted git repository. manupa pushed a change to branch main in repository https://gitbox.apache.org/repos/asf/tvm.git. from 69403f1 [OpenCL] Fix vthread_extent for warp size 1 case (#10199) add 1571112 [USMP] Register hill climb algorithm (#10182) No new revisions were added by this update. Summary of changes: include/tvm/tir/usmp/algorithms.h | 11 +++ src/tir/usmp/unified_static_memory_planner.cc | 5 +++-- tests/python/relay/aot/test_crt_aot_usmp.py | 1 + 3 files changed, 15 insertions(+), 2 deletions(-)

[GitHub] [tvm] manupa-arm commented on pull request #10182: [USMP] Register hill climb algorithm

manupa-arm commented on pull request #10182: URL: https://github.com/apache/tvm/pull/10182#issuecomment-1034026501 Thanks @PhilippvK ! -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] manupa-arm merged pull request #10182: [USMP] Register hill climb algorithm

manupa-arm merged pull request #10182: URL: https://github.com/apache/tvm/pull/10182 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] electriclilies commented on a change in pull request #9848: [Relay] [Virtual Device] Store function result virtual device in virtual_device_ field

electriclilies commented on a change in pull request #9848:

URL: https://github.com/apache/tvm/pull/9848#discussion_r802921017

##

File path: src/parser/parser.cc

##

@@ -1130,16 +1146,25 @@ class Parser {

ret_type = ParseType();

}

+ ObjectRef virtual_device;

+ if (WhenMatch(TokenType::kComma)) {

+virtual_device = ParseVirtualDevice();

Review comment:

OK, can do that

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [tvm] tkonolige commented on a change in pull request #10097: [CMake] add support for find_package

tkonolige commented on a change in pull request #10097:

URL: https://github.com/apache/tvm/pull/10097#discussion_r802913037

##

File path: CMakeLists.txt

##

@@ -620,33 +611,54 @@ endif()

add_custom_target(runtime DEPENDS tvm_runtime)

# Installation rules

-install(TARGETS tvm DESTINATION lib${LIB_SUFFIX})

-install(TARGETS tvm_runtime DESTINATION lib${LIB_SUFFIX})

+install(TARGETS tvm EXPORT ${PROJECT_NAME}Targets DESTINATION lib${LIB_SUFFIX})

+install(TARGETS tvm_runtime EXPORT ${PROJECT_NAME}Targets DESTINATION

lib${LIB_SUFFIX})

if (INSTALL_DEV)

install(

-DIRECTORY "include/." DESTINATION "include"

+DIRECTORY "include/" DESTINATION "include"

FILES_MATCHING

PATTERN "*.h"

)

install(

-DIRECTORY "3rdparty/dlpack/include/." DESTINATION "include"

+DIRECTORY "3rdparty/dlpack/include/" DESTINATION "include"

FILES_MATCHING

PATTERN "*.h"

)

install(

-DIRECTORY "3rdparty/dmlc-core/include/." DESTINATION "include"

+DIRECTORY "3rdparty/dmlc-core/include/" DESTINATION "include"

FILES_MATCHING

PATTERN "*.h"

)

else(INSTALL_DEV)

install(

-DIRECTORY "include/tvm/runtime/." DESTINATION "include/tvm/runtime"

+DIRECTORY "include/tvm/runtime/" DESTINATION "include/tvm/runtime"

FILES_MATCHING

PATTERN "*.h"

)

endif(INSTALL_DEV)

+include(GNUInstallDirs)

+include(CMakePackageConfigHelpers)

+set(PROJECT_CONFIG_CONTENT "@PACKAGE_INIT@\n")

+string(APPEND PROJECT_CONFIG_CONTENT

+ "include(\"\${CMAKE_CURRENT_LIST_DIR}/${PROJECT_NAME}Targets.cmake\")")

+file(WRITE "${CMAKE_CURRENT_BINARY_DIR}/PROJECT_CONFIG_FILE"

${PROJECT_CONFIG_CONTENT})

+

+install(EXPORT ${PROJECT_NAME}Targets

+ NAMESPACE ${PROJECT_NAME}::

+ DESTINATION ${CMAKE_INSTALL_LIBDIR}/cmake/${PROJECT_NAME})

+

+# Create config for find_package()

+configure_package_config_file(

+ "${CMAKE_CURRENT_BINARY_DIR}/PROJECT_CONFIG_FILE" ${PROJECT_NAME}Config.cmake

Review comment:

How about `temp_config_file.cmake` then? Because it is all caps, people

might be confused that a variable substitution was forgotten. This makes the

use case clearer.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [tvm] tkonolige commented on a change in pull request #10097: [CMake] add support for find_package

tkonolige commented on a change in pull request #10097:

URL: https://github.com/apache/tvm/pull/10097#discussion_r802913037

##

File path: CMakeLists.txt

##

@@ -620,33 +611,54 @@ endif()

add_custom_target(runtime DEPENDS tvm_runtime)

# Installation rules

-install(TARGETS tvm DESTINATION lib${LIB_SUFFIX})

-install(TARGETS tvm_runtime DESTINATION lib${LIB_SUFFIX})

+install(TARGETS tvm EXPORT ${PROJECT_NAME}Targets DESTINATION lib${LIB_SUFFIX})

+install(TARGETS tvm_runtime EXPORT ${PROJECT_NAME}Targets DESTINATION

lib${LIB_SUFFIX})

if (INSTALL_DEV)

install(

-DIRECTORY "include/." DESTINATION "include"

+DIRECTORY "include/" DESTINATION "include"

FILES_MATCHING

PATTERN "*.h"

)

install(

-DIRECTORY "3rdparty/dlpack/include/." DESTINATION "include"

+DIRECTORY "3rdparty/dlpack/include/" DESTINATION "include"

FILES_MATCHING

PATTERN "*.h"

)

install(

-DIRECTORY "3rdparty/dmlc-core/include/." DESTINATION "include"

+DIRECTORY "3rdparty/dmlc-core/include/" DESTINATION "include"

FILES_MATCHING

PATTERN "*.h"

)

else(INSTALL_DEV)

install(

-DIRECTORY "include/tvm/runtime/." DESTINATION "include/tvm/runtime"

+DIRECTORY "include/tvm/runtime/" DESTINATION "include/tvm/runtime"

FILES_MATCHING

PATTERN "*.h"

)

endif(INSTALL_DEV)

+include(GNUInstallDirs)

+include(CMakePackageConfigHelpers)

+set(PROJECT_CONFIG_CONTENT "@PACKAGE_INIT@\n")

+string(APPEND PROJECT_CONFIG_CONTENT

+ "include(\"\${CMAKE_CURRENT_LIST_DIR}/${PROJECT_NAME}Targets.cmake\")")

+file(WRITE "${CMAKE_CURRENT_BINARY_DIR}/PROJECT_CONFIG_FILE"

${PROJECT_CONFIG_CONTENT})

+

+install(EXPORT ${PROJECT_NAME}Targets

+ NAMESPACE ${PROJECT_NAME}::

+ DESTINATION ${CMAKE_INSTALL_LIBDIR}/cmake/${PROJECT_NAME})

+

+# Create config for find_package()

+configure_package_config_file(

+ "${CMAKE_CURRENT_BINARY_DIR}/PROJECT_CONFIG_FILE" ${PROJECT_NAME}Config.cmake

Review comment:

How about `temp_config_file.cmake` then?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[tvm] branch main updated: [OpenCL] Fix vthread_extent for warp size 1 case (#10199)

This is an automated email from the ASF dual-hosted git repository. zhaowu pushed a commit to branch main in repository https://gitbox.apache.org/repos/asf/tvm.git The following commit(s) were added to refs/heads/main by this push: new 69403f1 [OpenCL] Fix vthread_extent for warp size 1 case (#10199) 69403f1 is described below commit 69403f19db5fbb64a3e8c74c76d8b0fde124c123 Author: Masahiro Masuda AuthorDate: Thu Feb 10 02:22:36 2022 +0900 [OpenCL] Fix vthread_extent for warp size 1 case (#10199) --- src/auto_scheduler/search_task.cc | 2 +- 1 file changed, 1 insertion(+), 1 deletion(-) diff --git a/src/auto_scheduler/search_task.cc b/src/auto_scheduler/search_task.cc index cc18de2..2623400 100755 --- a/src/auto_scheduler/search_task.cc +++ b/src/auto_scheduler/search_task.cc @@ -127,7 +127,7 @@ HardwareParams HardwareParamsNode::GetDefaultHardwareParams(const Target& target << "Warp size 1 is not recommended for OpenCL devices. Tuning might crash or stuck"; } - int max_vthread_extent = warp_size / 4; + int max_vthread_extent = std::max(1, warp_size / 4); return HardwareParams(-1, 16, 64, max_shared_memory_per_block, max_local_memory_per_block, max_threads_per_block, max_vthread_extent, warp_size); }

[GitHub] [tvm] FrozenGene merged pull request #10199: [Ansor] OpenCL follow-up

FrozenGene merged pull request #10199: URL: https://github.com/apache/tvm/pull/10199 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: commits-unsubscr...@tvm.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [tvm] mbs-octoml commented on a change in pull request #9848: [Relay] [Virtual Device] Store function result virtual device in virtual_device_ field

mbs-octoml commented on a change in pull request #9848:

URL: https://github.com/apache/tvm/pull/9848#discussion_r802907310

##

File path: src/parser/parser.cc

##

@@ -1130,16 +1146,25 @@ class Parser {

ret_type = ParseType();

}

+ ObjectRef virtual_device;

+ if (WhenMatch(TokenType::kComma)) {

+virtual_device = ParseVirtualDevice();

Review comment:

Ideal would be if we could parse the attributes as usual, then 'promote'