luyaor opened a new issue #7200: URL: https://github.com/apache/tvm/issues/7200

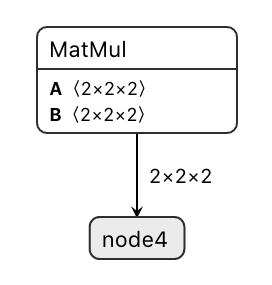

## Description

When compiling the following model with `opt_level=2`, TVM crashes. With `opt_level=3`, it runs normally.

The model (with ONNX as the frontend) that triggers the error is as follows; see `bug.onnx` in bug0.zip.

## Error Log

```
Traceback (most recent call last):
  File "check.py", line 19, in <module>
    tvm_graph, tvm_lib, tvm_params = relay.build_module.build(mod, target, params=params)
  File "/Users/luyaor/Documents/tvm/python/tvm/relay/build_module.py", line 275, in build
    graph_json, mod, params = bld_mod.build(mod, target, target_host, params)
  File "/Users/luyaor/Documents/tvm/python/tvm/relay/build_module.py", line 138, in build
    self._build(mod, target, target_host)
  File "/Users/luyaor/Documents/tvm/python/tvm/_ffi/_ctypes/packed_func.py", line 237, in __call__
    raise get_last_ffi_error()
tvm._ffi.base.TVMError: Traceback (most recent call last):
  [bt] (8) 9 libtvm.dylib 0x00000001112c2a02 tvm::relay::StorageAllocator::Plan(tvm::relay::Function const&) + 354
  [bt] (7) 8 libtvm.dylib 0x00000001112c68ca tvm::relay::StorageAllocaBaseVisitor::Run(tvm::relay::Function const&) + 154
  [bt] (6) 7 libtvm.dylib 0x00000001112c44c7 tvm::relay::StorageAllocaBaseVisitor::GetToken(tvm::RelayExpr const&) + 23
  [bt] (5) 6 libtvm.dylib 0x0000000111359b58 tvm::relay::ExprVisitor::VisitExpr(tvm::RelayExpr const&) + 344
  [bt] (4) 5 libtvm.dylib 0x00000001110f1fad tvm::relay::ExprFunctor<void (tvm::RelayExpr const&)>::VisitExpr(tvm::RelayExpr const&) + 173
  [bt] (3) 4 libtvm.dylib 0x00000001110f22a0 tvm::NodeFunctor<void (tvm::runtime::ObjectRef const&, tvm::relay::ExprFunctor<void (tvm::RelayExpr const&)>*)>::operator()(tvm::runtime::ObjectRef const&, tvm::relay::ExprFunctor<void (tvm::RelayExpr const&)>*) const + 288
  [bt] (2) 3 libtvm.dylib 0x00000001112c425b tvm::relay::StorageAllocator::CreateToken(tvm::RelayExprNode const*, bool) + 1179
  [bt] (1) 2 libtvm.dylib 0x00000001112c6093 tvm::relay::StorageAllocator::GetMemorySize(tvm::relay::StorageToken*) + 451
  [bt] (0) 1 libtvm.dylib 0x00000001105dac6f dmlc::LogMessageFatal::~LogMessageFatal() + 111
  File "/Users/luyaor/Documents/tvm/src/relay/backend/graph_plan_memory.cc", line 292
TVMError:
---------------------------------------------------------------
An internal invariant was violated during the execution of TVM.
Please read TVM's error reporting guidelines.
More details can be found here: https://discuss.tvm.ai/t/error-reporting/7793.
---------------------------------------------------------------
  Check failed: pval != nullptr == false: Cannot allocate memory symbolic tensor shape [?, ?, ?]
```

## How to reproduce

### Environment

Python3, with tvm, onnx

tvm version: c31e338d5f98a8e8c97286c5b93b20caee8be602 Wed Dec 9 14:52:58 2020 +0900

Conda environment reference: see environment.yml

1. Download [bug0.zip](https://github.com/apache/tvm/files/5764374/bug0.zip)
2. Run `python check.py`.

----------------------------------------------------------------
This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the
URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:
us...@infra.apache.org
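
For readers unfamiliar with the failing check in the error log, the message suggests that the memory planner needs every tensor dimension to be a known constant before it can compute a buffer size. The snippet below is a simplified Python analogue of that idea, not TVM's actual code. The function name `get_memory_size`, the `dtype_bits` parameter, and the use of `None` for a symbolic dimension (printed as `?` in the log) are assumptions made for illustration.

```python
from typing import Optional, Sequence


def get_memory_size(shape: Sequence[Optional[int]], dtype_bits: int = 32) -> int:
    """Return the byte size of a tensor whose shape is fully static.

    Hypothetical stand-in for the check reported in the log:
    `None` represents a symbolic (unknown) dimension.
    """
    size = 1
    for dim in shape:
        if dim is None:
            # A symbolic dimension has no constant value, so no size can be computed.
            shown = ", ".join("?" if d is None else str(d) for d in shape)
            raise ValueError(
                f"Cannot allocate memory symbolic tensor shape [{shown}]"
            )
        size *= dim
    # Round the element width up to whole bytes.
    return size * ((dtype_bits + 7) // 8)
```

With a static shape such as `[2, 3, 4]` and 32-bit elements the function returns a byte count, while a shape such as `[None, None, None]` raises an error that mirrors the one in the log. Under this reading, the crash would mean that a tensor with unresolved dimensions reaches memory planning at `opt_level=2`.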