[jira] [Work logged] (HADOOP-17981) Support etag-assisted renames in FileOutputCommitter

[

https://issues.apache.org/jira/browse/HADOOP-17981?focusedWorklogId=672966&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-672966

]

ASF GitHub Bot logged work on HADOOP-17981:

---

Author: ASF GitHub Bot

Created on: 02/Nov/21 04:56

Start Date: 02/Nov/21 04:56

Worklog Time Spent: 10m

Work Description: sidseth commented on a change in pull request #3597:

URL: https://github.com/apache/hadoop/pull/3597#discussion_r740720279

##

File path:

hadoop-mapreduce-project/hadoop-mapreduce-client/hadoop-mapreduce-client-core/src/main/java/org/apache/hadoop/mapreduce/lib/output/FileOutputCommitter.java

##

@@ -497,6 +535,302 @@ private void mergePaths(FileSystem fs, final FileStatus from,
     }
   }

+  @VisibleForTesting
+  public int getMoveThreads() {
+    return moveThreads;
+  }
+
+  public boolean isParallelMoveEnabled() {
+    // Only available for algo v1
+    return (moveThreads > 1 && algorithmVersion == 1);
+  }
+
+  void validateParallelMove() throws IOException {
+    if (!isParallelMoveEnabled()) {
+      throw new IOException("Parallel file move is not enabled. "
+          + "moveThreads=" + moveThreads
+          + ", algo=" + algorithmVersion);
+    }
+  }
+
+  void validateThreadPool(ExecutorService pool, BlockingQueue> futures)
+      throws IOException {
+    boolean threadPoolEnabled = isParallelMoveEnabled();
+    if (!threadPoolEnabled || pool == null || futures == null) {
+      String errorMsg = "Thread pool is not configured correctly. "
+          + "threadPoolEnabled: " + threadPoolEnabled
+          + ", pool: " + pool
+          + ", futures: " + futures;
+      LOG.error(errorMsg);
+      throw new IOException(errorMsg);
+    }
+  }
+
+  /**
+   * Get executor service for moving files for v1 algorithm.
+   * @return executor service
+   * @throws IOException on error
+   */
+  private ExecutorService createExecutorService() throws IOException {
+    // intentional validation
+    validateParallelMove();
+
+    ExecutorService pool = new ThreadPoolExecutor(moveThreads, moveThreads,
+        0L, TimeUnit.MILLISECONDS,
+        new LinkedBlockingQueue<>(),
+        new ThreadFactoryBuilder()
+            .setDaemon(true)
+            .setNameFormat("FileCommitter-v1-move-thread-%d")
+            .build(),
+        new ThreadPoolExecutor.CallerRunsPolicy()
+    );
+    LOG.info("Size of move thread pool: {}, pool: {}", moveThreads, pool);
+    return pool;
+  }
+
+  private void parallelCommitJobInternal(JobContext context) throws IOException {
+    // validate to be on safer side.
+    validateParallelMove();
+
+    if (hasOutputPath()) {
+      Path finalOutput = getOutputPath();
+      FileSystem fs = finalOutput.getFileSystem(context.getConfiguration());
+      // created resilient commit helper bonded to the destination FS/path
+      resilientCommitHelper = new ResilientCommitByRenameHelper(fs);

Review comment:

This mechanism becomes very FileSystem-specific; right now only Azure implements it.

Other users of rename will not see the benefits without changing interfaces, which in turn requires shimming etc.

Would it be better for the AzureFileSystem rename itself to add a config parameter that looks up the src etag (at the cost of a performance hit, in exchange for consistency), so that downstream components and any other users of the rename operation can benefit from this change without having to change interfaces? Also, if the performance penalty is a big problem, Abfs could create very short-lived caches for FileStatus objects, and handle errors on discrepancies with the cached copy.

Essentially: don't force usage of the new interface to get the benefits.
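To make the short-lived cache idea concrete, here is a minimal sketch in plain Java. It is not ABFS code: the class name `ShortLivedEtagCache`, the string-keyed paths, the injected clock, and the `loader` function (standing in for a remote status lookup) are all assumptions made for illustration.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;
import java.util.function.LongSupplier;

/** Hypothetical short-lived cache of per-path etags; not part of Hadoop or ABFS. */
final class ShortLivedEtagCache {

  /** One cached etag and the time at which it stops being trusted. */
  private static final class Entry {
    final String etag;
    final long expiresAtMillis;

    Entry(String etag, long expiresAtMillis) {
      this.etag = etag;
      this.expiresAtMillis = expiresAtMillis;
    }
  }

  private final Map<String, Entry> entries = new ConcurrentHashMap<>();
  private final long ttlMillis;
  private final LongSupplier clock; // injected so expiry can be tested

  ShortLivedEtagCache(long ttlMillis, LongSupplier clock) {
    this.ttlMillis = ttlMillis;
    this.clock = clock;
  }

  /** Returns the cached etag if it is still fresh, otherwise loads and caches it. */
  String get(String path, Function<String, String> loader) {
    long now = clock.getAsLong();
    Entry cached = entries.get(path);
    if (cached != null && now < cached.expiresAtMillis) {
      return cached.etag;
    }
    // The loader stands in for the remote status lookup (assumption).
    String etag = loader.apply(path);
    entries.put(path, new Entry(etag, now + ttlMillis));
    return etag;
  }

  /** Drops an entry, for example after a rename or a detected mismatch. */
  void invalidate(String path) {
    entries.remove(path);
  }
}
```

A caller would invalidate the entry whenever the store reports that the cached etag no longer matches, which is the "handle errors on discrepancies" part of the suggestion.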

Side note: the fs.getStatus within ResilientCommitByRenameHelper will impose a performance penalty on FileSystems where this new functionality is not supported (an extra getFileStatus on src).
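One way to avoid the extra lookup described in the side note is to decide once, at construction time, whether the filesystem supports the etag path, and to skip the status call otherwise. The sketch below assumes hypothetical interfaces (`SimpleFs`, `EtagAwareFs`) and a hypothetical helper (`RenameHelperSketch`); none of them are Hadoop APIs and this is not how ResilientCommitByRenameHelper is written.

```java
/** Hypothetical minimal filesystem view; not a Hadoop interface. */
interface SimpleFs {
  boolean rename(String src, String dst);

  /** Costs one remote call on a real store (assumption). */
  String etagOf(String path);
}

/** Hypothetical marker for stores that can use an etag during rename. */
interface EtagAwareFs extends SimpleFs {
  boolean renameWithEtag(String src, String dst, String etag);
}

/** Sketch of a commit helper that only pays for the lookup when it is useful. */
final class RenameHelperSketch {
  private final SimpleFs fs;
  private final boolean etagAware; // decided once, at construction

  RenameHelperSketch(SimpleFs fs) {
    this.fs = fs;
    this.etagAware = fs instanceof EtagAwareFs;
  }

  boolean commit(String src, String dst) {
    if (etagAware) {
      EtagAwareFs aware = (EtagAwareFs) fs;
      // Only etag-aware stores pay for the status lookup.
      return aware.renameWithEtag(src, dst, aware.etagOf(src));
    }
    // Plain stores go straight to rename, with no lookup on src.
    return fs.rename(src, dst);
  }
}
```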

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

Issue Time Tracking

---

Worklog Id: (was: 672966)

Time Spent: 2h (was: 1h 50m)

> Support etag-assisted renames in FileOutputCommitter

>

>

> Key: HADOOP-17981

> URL: https://issues.apache.org/jira/browse/HADOOP-17981

> Project: Hadoop Common

> Issue Type: New Feature

> Components: fs, fs/azure

>Affects Versions: 3.4.0

>Reporter: Steve Loughran

>Assignee: Steve Loughran

>Priority: Major

> Labels: pull-request-available

>

[GitHub] [hadoop] tomscut commented on pull request #3538: HDFS-16266. Add remote port information to HDFS audit log

tomscut commented on pull request #3538:

URL: https://github.com/apache/hadoop/pull/3538#issuecomment-957045765

Hi @aajisaka, could you please review this again. Thanks a lot.

[jira] [Work logged] (HADOOP-17981) Support etag-assisted renames in FileOutputCommitter

[

https://issues.apache.org/jira/browse/HADOOP-17981?focusedWorklogId=672918&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-672918

]

ASF GitHub Bot logged work on HADOOP-17981:

---

Author: ASF GitHub Bot

Created on: 02/Nov/21 00:19

Start Date: 02/Nov/21 00:19

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3597:

URL: https://github.com/apache/hadoop/pull/3597#issuecomment-956975430

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 41s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 1s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 6 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 12m 49s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 21m 18s | | trunk passed |
| +1 :green_heart: | compile | 21m 38s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 19m 0s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 3m 40s | | trunk passed |
| +1 :green_heart: | mvnsite | 4m 26s | | trunk passed |
| +1 :green_heart: | javadoc | 3m 20s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 3m 52s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 6m 10s | | trunk passed |
| +1 :green_heart: | shadedclient | 20m 27s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 28s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 2m 31s | | the patch passed |
| +1 :green_heart: | compile | 21m 2s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 21m 2s | | the patch passed |
| +1 :green_heart: | compile | 19m 7s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 19m 7s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| -0 :warning: | checkstyle | 3m 37s | [/results-checkstyle-root.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3597/5/artifact/out/results-checkstyle-root.txt) | root: The patch generated 17 new + 99 unchanged - 0 fixed = 116 total (was 99) |
| +1 :green_heart: | mvnsite | 4m 21s | | the patch passed |
| +1 :green_heart: | javadoc | 3m 20s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 3m 49s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 6m 51s | | the patch passed |
| +1 :green_heart: | shadedclient | 20m 36s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 17m 21s | | hadoop-common in the patch passed. |
| +1 :green_heart: | unit | 5m 57s | | hadoop-mapreduce-client-core in the patch passed. |
| +1 :green_heart: | unit | 135m 38s | | hadoop-mapreduce-client-jobclient in the patch passed. |
| +1 :green_heart: | unit | 2m 41s | | hadoop-azure in the patch passed. |
| +1 :green_heart: | asflicense | 1m 12s | | The patch does not generate ASF License warnings. |
| | | 370m 13s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3597/5/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3597 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux 80640d7e2e23 4.15.0-112-generic #113-Ubuntu SMP Thu Jul 9 23:41:39 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 712c7cbc0dad45bf31378ec38935dc6141faff2e |
| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3597/5/testReport/ |
| Max. process+thread count | 1573 (vs. ulimit of 5500) |
| modules | C: hadoop-common-project/hadoop-common hadoop-mapreduce-project/hadoop-mapreduce-client/

[jira] [Commented] (HADOOP-17006) Fix the CosCrendentials Provider in core-site.xml for unit tests.

[ https://issues.apache.org/jira/browse/HADOOP-17006?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17436977#comment-17436977 ]

Yang Yu commented on HADOOP-17006:

---

[~csun] Thank you for your reminder. I will complete this task as soon as possible.

> Fix the CosCrendentials Provider in core-site.xml for unit tests.
>
> Key: HADOOP-17006
> URL: https://issues.apache.org/jira/browse/HADOOP-17006
> Project: Hadoop Common
> Issue Type: Sub-task
> Components: fs/cos
> Reporter: Yang Yu
> Assignee: Yang Yu
> Priority: Blocker
>
> Fix the CosCredentials Provider classpath in core-site.xml for unit tests.

--

This message was sent by Atlassian Jira (v8.3.4#803005)

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[jira] [Comment Edited] (HADOOP-17702) HADOOP-17065 breaks compatibility between 3.3.0 and 3.3.1

[

https://issues.apache.org/jira/browse/HADOOP-17702?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17436967#comment-17436967

]

Chao Sun edited comment on HADOOP-17702 at 11/1/21, 6:30 PM:

-

Hi [~ste...@apache.org] [~weichiu], is this still a blocker for 3.3.2?

was (Author: csun):

Hi, is this still a blocker for 3.3.2?

> HADOOP-17065 breaks compatibility between 3.3.0 and 3.3.1

> -

>

> Key: HADOOP-17702

> URL: https://issues.apache.org/jira/browse/HADOOP-17702

> Project: Hadoop Common

> Issue Type: Bug

> Components: fs/azure

>Affects Versions: 3.3.1

>Reporter: Wei-Chiu Chuang

>Priority: Blocker

>

> AzureBlobFileSystemStore is a Public, Evolving class, by contract can't break
> compatibility between maintenance releases.
> HADOOP-17065 changed its constructor signature from
> {noformat}
> public AzureBlobFileSystemStore(URI uri, boolean isSecureScheme,
>     Configuration configuration,
>     AbfsCounters abfsCounters) throws IOException {
> {noformat}
> to
> {noformat}
> public AzureBlobFileSystemStore(URI uri, boolean isSecureScheme,
>     Configuration configuration,
>     AbfsCounters abfsCounters) throws IOException {
> {noformat}
> between 3.3.0 and 3.3.1.

> cc: [~mehakmeetSingh], [~tmarquardt]


[jira] [Commented] (HADOOP-17006) Fix the CosCrendentials Provider in core-site.xml for unit tests.

[ https://issues.apache.org/jira/browse/HADOOP-17006?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17436971#comment-17436971 ]

Chao Sun commented on HADOOP-17006:

---

[~yuyang733] [~weichiu] What's the progress on this one? Is it still a blocker? I'm working on the 3.3.2 release right now.

[jira] [Commented] (HADOOP-7370) Optimize pread on ChecksumFileSystem

[ https://issues.apache.org/jira/browse/HADOOP-7370?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17436969#comment-17436969 ]

Chao Sun commented on HADOOP-7370:

---

[~ste...@apache.org] What's the progress on this? Is it still a blocker for 3.3.2? I'm working on the release right now.

> Optimize pread on ChecksumFileSystem
>
> Key: HADOOP-7370
> URL: https://issues.apache.org/jira/browse/HADOOP-7370
> Project: Hadoop Common
> Issue Type: Improvement
> Components: fs
> Reporter: Todd Lipcon
> Assignee: Todd Lipcon
> Priority: Blocker
> Attachments: checksumfs-pread-0.20.txt
>
> Currently the implementation of positional read in ChecksumFileSystem is
> very inefficient - it actually re-opens the underlying file and checksum
> file, then seeks and uses normal read. Instead, it can push down positional
> read directly to the underlying FS and verify checksum.
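The idea in the issue description, a positional read pushed down to the underlying store followed by checksum verification, can be sketched with JDK classes alone. This is not the ChecksumFileSystem implementation: the class name, the 512-byte chunk size, the use of CRC32, and keeping the checksums in an in-memory `long[]` rather than a side file are all assumptions made for illustration.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.util.zip.CRC32;

/** Hypothetical sketch of a checksummed positional read; not Hadoop code. */
final class ChecksummedPreadSketch {
  static final int CHUNK = 512; // assumed bytes covered by one checksum

  /** Computes one CRC32 per CHUNK-sized slice of the data. */
  static long[] checksums(byte[] data) {
    int chunks = (data.length + CHUNK - 1) / CHUNK;
    long[] sums = new long[chunks];
    for (int i = 0; i < chunks; i++) {
      int off = i * CHUNK;
      CRC32 crc = new CRC32();
      crc.update(data, off, Math.min(CHUNK, data.length - off));
      sums[i] = crc.getValue();
    }
    return sums;
  }

  /** Reads [pos, pos + len) without moving the channel position, verifying each chunk touched. */
  static byte[] pread(FileChannel ch, long[] sums, long pos, int len) throws IOException {
    long fileSize = ch.size();
    if (pos < 0 || len < 0 || pos + len > fileSize) {
      throw new IllegalArgumentException("range outside file");
    }
    if (len == 0) {
      return new byte[0];
    }
    // Widen the range to chunk boundaries so whole chunks can be verified.
    long start = (pos / CHUNK) * CHUNK;
    long end = Math.min(fileSize, ((pos + len + CHUNK - 1) / CHUNK) * CHUNK);
    ByteBuffer buf = ByteBuffer.allocate((int) (end - start));
    while (buf.hasRemaining()) {
      // Positional read: the channel's own position is left untouched.
      int n = ch.read(buf, start + buf.position());
      if (n < 0) {
        throw new IOException("unexpected end of file");
      }
    }
    byte[] aligned = buf.array();
    for (int off = 0; off < aligned.length; off += CHUNK) {
      CRC32 crc = new CRC32();
      crc.update(aligned, off, Math.min(CHUNK, aligned.length - off));
      int chunkIndex = (int) ((start + off) / CHUNK);
      if (crc.getValue() != sums[chunkIndex]) {
        throw new IOException("checksum mismatch in chunk " + chunkIndex);
      }
    }
    byte[] out = new byte[len];
    System.arraycopy(aligned, (int) (pos - start), out, 0, len);
    return out;
  }
}
```

The point of the sketch is that nothing is re-opened and no seek is issued: the read is served by a single positional call over the chunk-aligned range, and verification happens on the bytes already in memory.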

[jira] [Commented] (HADOOP-17702) HADOOP-17065 breaks compatibility between 3.3.0 and 3.3.1

[

https://issues.apache.org/jira/browse/HADOOP-17702?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17436967#comment-17436967

]

Chao Sun commented on HADOOP-17702:

---

Hi, is this still a blocker for 3.3.2?

[jira] [Work logged] (HADOOP-15566) Support OpenTelemetry

[ https://issues.apache.org/jira/browse/HADOOP-15566?focusedWorklogId=672783&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-672783 ]

ASF GitHub Bot logged work on HADOOP-15566:

---

Author: ASF GitHub Bot

Created on: 01/Nov/21 18:14

Start Date: 01/Nov/21 18:14

Worklog Time Spent: 10m

Work Description: steveloughran commented on pull request #3445:

URL: https://github.com/apache/hadoop/pull/3445#issuecomment-956467506

@ArkenKiran i'm delegating review and test to @ndimiduk for now

Issue Time Tracking

---

Worklog Id: (was: 672783)

Time Spent: 5h 40m (was: 5.5h)

> Support OpenTelemetry
>
> Key: HADOOP-15566
> URL: https://issues.apache.org/jira/browse/HADOOP-15566
> Project: Hadoop Common
> Issue Type: New Feature
> Components: metrics, tracing
> Affects Versions: 3.1.0
> Reporter: Todd Lipcon
> Assignee: Siyao Meng
> Priority: Major
> Labels: pull-request-available, security
> Attachments: HADOOP-15566-WIP.1.patch, HADOOP-15566.000.WIP.patch, OpenTelemetry Support Scope Doc v2.pdf, OpenTracing Support Scope Doc.pdf, Screen Shot 2018-06-29 at 11.59.16 AM.png, ss-trace-s3a.png
> Time Spent: 5h 40m
> Remaining Estimate: 0h
>
> The HTrace incubator project has voted to retire itself and won't be making
> further releases. The Hadoop project currently has various hooks with HTrace.
> It seems in some cases (eg HDFS-13702) these hooks have had measurable
> performance overhead. Given these two factors, I think we should consider
> removing the HTrace integration. If there is someone willing to do the work,
> replacing it with OpenTracing might be a better choice since there is an
> active community.

[GitHub] [hadoop] steveloughran commented on pull request #3445: HADOOP-15566 Opentelemtery changes using java agent

steveloughran commented on pull request #3445:

URL: https://github.com/apache/hadoop/pull/3445#issuecomment-956467506

@ArkenKiran i'm delegating review and test to @ndimiduk for now

[jira] [Work logged] (HADOOP-17981) Support etag-assisted renames in FileOutputCommitter

[

https://issues.apache.org/jira/browse/HADOOP-17981?focusedWorklogId=672782&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-672782

]

ASF GitHub Bot logged work on HADOOP-17981:

---

Author: ASF GitHub Bot

Created on: 01/Nov/21 18:12

Start Date: 01/Nov/21 18:12

Worklog Time Spent: 10m

Work Description: hadoop-yetus removed a comment on pull request #3597:

URL: https://github.com/apache/hadoop/pull/3597#issuecomment-955112045

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 1m 30s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 1s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 6 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 13m 27s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 30m 36s | | trunk passed |
| +1 :green_heart: | compile | 33m 57s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 28m 42s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 4m 57s | | trunk passed |
| +1 :green_heart: | mvnsite | 5m 25s | | trunk passed |
| +1 :green_heart: | javadoc | 3m 42s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 4m 36s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 7m 38s | | trunk passed |
| +1 :green_heart: | shadedclient | 25m 35s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 28s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 2m 46s | | the patch passed |
| +1 :green_heart: | compile | 26m 9s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 26m 9s | | the patch passed |
| +1 :green_heart: | compile | 22m 14s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 22m 14s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| -0 :warning: | checkstyle | 3m 59s | [/results-checkstyle-root.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3597/4/artifact/out/results-checkstyle-root.txt) | root: The patch generated 19 new + 99 unchanged - 0 fixed = 118 total (was 99) |
| +1 :green_heart: | mvnsite | 4m 9s | | the patch passed |
| +1 :green_heart: | javadoc | 2m 56s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 3m 23s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 7m 21s | | the patch passed |
| +1 :green_heart: | shadedclient | 23m 49s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 19m 57s | | hadoop-common in the patch passed. |
| +1 :green_heart: | unit | 6m 19s | | hadoop-mapreduce-client-core in the patch passed. |
| +1 :green_heart: | unit | 143m 58s | | hadoop-mapreduce-client-jobclient in the patch passed. |
| +1 :green_heart: | unit | 2m 38s | | hadoop-azure in the patch passed. |
| +1 :green_heart: | asflicense | 0m 57s | | The patch does not generate ASF License warnings. |
| | | 434m 32s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3597/4/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3597 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux 6ba9a731eb8d 4.15.0-143-generic #147-Ubuntu SMP Wed Apr 14 16:10:11 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / f4950b3d0416b764433d18d24e8b19b09b663cc4 |
| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Multi-JDK ve

[GitHub] [hadoop] hadoop-yetus removed a comment on pull request #3597: HADOOP-17981 Support etag-assisted renames in FileOutputCommitter

hadoop-yetus removed a comment on pull request #3597:

URL: https://github.com/apache/hadoop/pull/3597#issuecomment-955112045

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |

|:----:|----------:|--------:|:--------:|:-------:|

| +0 :ok: | reexec | 1m 30s | | Docker mode activated. |

_ Prechecks _ |

| +1 :green_heart: | dupname | 0m 1s | | No case conflicting files

found. |

| +0 :ok: | codespell | 0m 0s | | codespell was not available. |

| +1 :green_heart: | @author | 0m 0s | | The patch does not contain

any @author tags. |

| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to

include 6 new or modified test files. |

_ trunk Compile Tests _ |

| +0 :ok: | mvndep | 13m 27s | | Maven dependency ordering for branch |

| +1 :green_heart: | mvninstall | 30m 36s | | trunk passed |

| +1 :green_heart: | compile | 33m 57s | | trunk passed with JDK

Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | compile | 28m 42s | | trunk passed with JDK

Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | checkstyle | 4m 57s | | trunk passed |

| +1 :green_heart: | mvnsite | 5m 25s | | trunk passed |

| +1 :green_heart: | javadoc | 3m 42s | | trunk passed with JDK

Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | javadoc | 4m 36s | | trunk passed with JDK

Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | spotbugs | 7m 38s | | trunk passed |

| +1 :green_heart: | shadedclient | 25m 35s | | branch has no errors

when building and testing our client artifacts. |

_ Patch Compile Tests _ |

| +0 :ok: | mvndep | 0m 28s | | Maven dependency ordering for patch |

| +1 :green_heart: | mvninstall | 2m 46s | | the patch passed |

| +1 :green_heart: | compile | 26m 9s | | the patch passed with JDK

Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | javac | 26m 9s | | the patch passed |

| +1 :green_heart: | compile | 22m 14s | | the patch passed with JDK

Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | javac | 22m 14s | | the patch passed |

| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks

issues. |

| -0 :warning: | checkstyle | 3m 59s |

[/results-checkstyle-root.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3597/4/artifact/out/results-checkstyle-root.txt)

| root: The patch generated 19 new + 99 unchanged - 0 fixed = 118 total (was

99) |

| +1 :green_heart: | mvnsite | 4m 9s | | the patch passed |

| +1 :green_heart: | javadoc | 2m 56s | | the patch passed with JDK

Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | javadoc | 3m 23s | | the patch passed with JDK

Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | spotbugs | 7m 21s | | the patch passed |

| +1 :green_heart: | shadedclient | 23m 49s | | patch has no errors

when building and testing our client artifacts. |

_ Other Tests _ |

| +1 :green_heart: | unit | 19m 57s | | hadoop-common in the patch

passed. |

| +1 :green_heart: | unit | 6m 19s | | hadoop-mapreduce-client-core in

the patch passed. |

| +1 :green_heart: | unit | 143m 58s | |

hadoop-mapreduce-client-jobclient in the patch passed. |

| +1 :green_heart: | unit | 2m 38s | | hadoop-azure in the patch

passed. |

| +1 :green_heart: | asflicense | 0m 57s | | The patch does not

generate ASF License warnings. |

| | | 434m 32s | | |

| Subsystem | Report/Notes |

|--:|:-|

| Docker | ClientAPI=1.41 ServerAPI=1.41 base:

https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3597/4/artifact/out/Dockerfile

|

| GITHUB PR | https://github.com/apache/hadoop/pull/3597 |

| Optional Tests | dupname asflicense compile javac javadoc mvninstall

mvnsite unit shadedclient spotbugs checkstyle codespell |

| uname | Linux 6ba9a731eb8d 4.15.0-143-generic #147-Ubuntu SMP Wed Apr 14

16:10:11 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |

| Build tool | maven |

| Personality | dev-support/bin/hadoop.sh |

| git revision | trunk / f4950b3d0416b764433d18d24e8b19b09b663cc4 |

| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| Multi-JDK versions |

/usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04

/usr/lib/jvm/java-8-openjdk-amd64:Private

Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| Test Results |

https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3597/4/testReport/ |

| Max. process+thread count | 3143 (vs. ulimit of 5500) |

| modules | C: hadoop-common-project/hadoop-common

hadoop-mapreduce-project/hadoop-mapreduc

[jira] [Updated] (HADOOP-17939) Support building on Apple Silicon

[

https://issues.apache.org/jira/browse/HADOOP-17939?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Akira Ajisaka updated HADOOP-17939:

---

Fix Version/s: 3.4.0

> Support building on Apple Silicon

> --

>

> Key: HADOOP-17939

> URL: https://issues.apache.org/jira/browse/HADOOP-17939

> Project: Hadoop Common

> Issue Type: Improvement

> Components: build, common

>Affects Versions: 3.4.0

>Reporter: Dongjoon Hyun

>Assignee: Dongjoon Hyun

>Priority: Major

> Labels: pull-request-available

> Fix For: 3.4.0, 3.3.2

>

> Time Spent: 1h

> Remaining Estimate: 0h

>

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[jira] [Commented] (HADOOP-17985) Disable JIRA plugin for YETUS on Hadoop

[

https://issues.apache.org/jira/browse/HADOOP-17985?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17436937#comment-17436937

]

Akira Ajisaka commented on HADOOP-17985:

bq. Regardless, I think we can cherry-pick this fix to the other branches since

the JIRA plugin isn't used.

Agreed. Would you open a backport PR?

> Disable JIRA plugin for YETUS on Hadoop

> ---

>

> Key: HADOOP-17985

> URL: https://issues.apache.org/jira/browse/HADOOP-17985

> Project: Hadoop Common

> Issue Type: Bug

> Components: build

>Affects Versions: 3.4.0

>Reporter: Gautham Banasandra

>Assignee: Gautham Banasandra

>Priority: Critical

> Labels: pull-request-available

> Fix For: 3.4.0

>

> Time Spent: 1h

> Remaining Estimate: 0h

>

> I’ve been noticing an issue with Jenkins CI where a file jira-json goes

> missing all of a sudden – jenkins / hadoop-multibranch / PR-3588 / #2

> (apache.org)

> {code}

> [2021-10-27T17:52:58.787Z] Processing:

> https://github.com/apache/hadoop/pull/3588

> [2021-10-27T17:52:58.787Z] GITHUB PR #3588 is being downloaded from

> [2021-10-27T17:52:58.787Z]

> https://api.github.com/repos/apache/hadoop/pulls/3588

> [2021-10-27T17:52:58.787Z] JSON data at Wed Oct 27 17:52:55 UTC 2021

> [2021-10-27T17:52:58.787Z] Patch data at Wed Oct 27 17:52:56 UTC 2021

> [2021-10-27T17:52:58.787Z] Diff data at Wed Oct 27 17:52:56 UTC 2021

> [2021-10-27T17:52:59.814Z] awk: cannot open

> /home/jenkins/jenkins-home/workspace/hadoop-multibranch_PR-3588/centos-7/out/jira-json

> (No such file or directory)

> [2021-10-27T17:52:59.814Z] ERROR: https://github.com/apache/hadoop/pull/3588

> issue status is not matched with "Patch Available".

> [2021-10-27T17:52:59.814Z]

> {code}

> This causes the pipeline run to fail. I’ve seen this in my multiple attempts

> to re-run the CI on my PR –

> # After 45 minutes – [jenkins / hadoop-multibranch / PR-3588 / #1

> (apache.org)|https://ci-hadoop.apache.org/blue/organizations/jenkins/hadoop-multibranch/detail/PR-3588/1/pipeline/]

> # After 1 minute – [jenkins / hadoop-multibranch / PR-3588 / #2

> (apache.org)|https://ci-hadoop.apache.org/blue/organizations/jenkins/hadoop-multibranch/detail/PR-3588/2/pipeline/]

> # After 17 minutes – [jenkins / hadoop-multibranch / PR-3588 / #3

> (apache.org)|https://ci-hadoop.apache.org/blue/organizations/jenkins/hadoop-multibranch/detail/PR-3588/3/pipeline/]

> The hadoop-multibranch pipeline doesn't use ASF JIRA, thus, we're disabling

> the *jira* plugin to fix this issue.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] jianghuazhu commented on pull request #3602: HDFS-16291.Make the comment of INode#ReclaimContext more standardized.

jianghuazhu commented on pull request #3602:

URL: https://github.com/apache/hadoop/pull/3602#issuecomment-956373649

Thank you @tomscut for your comments and reviews.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: common-issues-h...@hadoop.apache.org

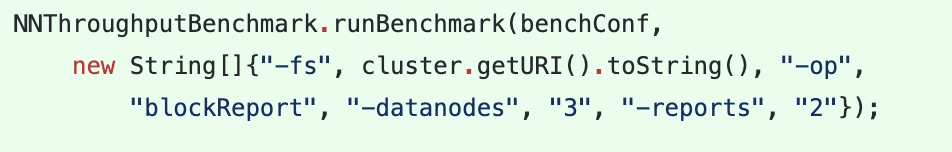

[GitHub] [hadoop] aajisaka commented on pull request #3544: HDFS-16269.[Fix] Improve NNThroughputBenchmark#blockReport operation.

aajisaka commented on pull request #3544:

URL: https://github.com/apache/hadoop/pull/3544#issuecomment-956355572

Merged. Thank you @jianghuazhu @ferhui @jojochuang

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] aajisaka merged pull request #3544: HDFS-16269.[Fix] Improve NNThroughputBenchmark#blockReport operation.

aajisaka merged pull request #3544:

URL: https://github.com/apache/hadoop/pull/3544

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] hadoop-yetus commented on pull request #3544: HDFS-16269.[Fix] Improve NNThroughputBenchmark#blockReport operation.

hadoop-yetus commented on pull request #3544:

URL: https://github.com/apache/hadoop/pull/3544#issuecomment-956229769

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |

|:----:|----------:|--------:|:--------:|:-------:|

| +0 :ok: | reexec | 1m 3s | | Docker mode activated. |

_ Prechecks _ |

| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files

found. |

| +0 :ok: | codespell | 0m 1s | | codespell was not available. |

| +1 :green_heart: | @author | 0m 0s | | The patch does not contain

any @author tags. |

| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to

include 2 new or modified test files. |

_ trunk Compile Tests _ |

| +1 :green_heart: | mvninstall | 37m 15s | | trunk passed |

| +1 :green_heart: | compile | 1m 33s | | trunk passed with JDK

Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | compile | 1m 25s | | trunk passed with JDK

Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | checkstyle | 1m 4s | | trunk passed |

| +1 :green_heart: | mvnsite | 1m 31s | | trunk passed |

| +1 :green_heart: | javadoc | 1m 5s | | trunk passed with JDK

Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | javadoc | 1m 33s | | trunk passed with JDK

Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | spotbugs | 3m 35s | | trunk passed |

| +1 :green_heart: | shadedclient | 25m 35s | | branch has no errors

when building and testing our client artifacts. |

_ Patch Compile Tests _ |

| +1 :green_heart: | mvninstall | 1m 19s | | the patch passed |

| +1 :green_heart: | compile | 1m 28s | | the patch passed with JDK

Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | javac | 1m 28s | | the patch passed |

| +1 :green_heart: | compile | 1m 16s | | the patch passed with JDK

Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | javac | 1m 16s | | the patch passed |

| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks

issues. |

| +1 :green_heart: | checkstyle | 0m 56s | | the patch passed |

| +1 :green_heart: | mvnsite | 1m 24s | | the patch passed |

| +1 :green_heart: | javadoc | 0m 55s | | the patch passed with JDK

Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | javadoc | 1m 25s | | the patch passed with JDK

Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | spotbugs | 3m 39s | | the patch passed |

| +1 :green_heart: | shadedclient | 25m 32s | | patch has no errors

when building and testing our client artifacts. |

_ Other Tests _ |

| -1 :x: | unit | 376m 41s |

[/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3544/6/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt)

| hadoop-hdfs in the patch passed. |

| +1 :green_heart: | asflicense | 0m 45s | | The patch does not

generate ASF License warnings. |

| | | 487m 51s | | |

| Reason | Tests |

|---:|:--|

| Failed junit tests |

hadoop.fs.viewfs.TestViewFileSystemOverloadSchemeHdfsFileSystemContract |

| Subsystem | Report/Notes |

|--:|:-|

| Docker | ClientAPI=1.41 ServerAPI=1.41 base:

https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3544/6/artifact/out/Dockerfile

|

| GITHUB PR | https://github.com/apache/hadoop/pull/3544 |

| Optional Tests | dupname asflicense compile javac javadoc mvninstall

mvnsite unit shadedclient spotbugs checkstyle codespell |

| uname | Linux 55004c7946a0 4.15.0-143-generic #147-Ubuntu SMP Wed Apr 14

16:10:11 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |

| Build tool | maven |

| Personality | dev-support/bin/hadoop.sh |

| git revision | trunk / bfedca87dc856dec491c81af77c3cc2eb58b1537 |

| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| Multi-JDK versions |

/usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04

/usr/lib/jvm/java-8-openjdk-amd64:Private

Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| Test Results |

https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3544/6/testReport/ |

| Max. process+thread count | 2103 (vs. ulimit of 5500) |

| modules | C: hadoop-hdfs-project/hadoop-hdfs U:

hadoop-hdfs-project/hadoop-hdfs |

| Console output |

https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3544/6/console |

| versions | git=2.25.1 maven=3.6.3 spotbugs=4.2.2 |

| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.

--

This is an automated message from the Apache Git Service.

[GitHub] [hadoop] sodonnel commented on a change in pull request #3593: HDFS-16286. Add a debug tool to verify the correctness of erasure coding on file

sodonnel commented on a change in pull request #3593:

URL: https://github.com/apache/hadoop/pull/3593#discussion_r740188593

##

File path:

hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/tools/DebugAdmin.java

##

@@ -387,6 +414,211 @@ int run(List<String> args) throws IOException {

}

}

+ /**

+ * The command for verifying the correctness of erasure coding on an erasure

coded file.

+ */

+ private class VerifyECCommand extends DebugCommand {

+private DFSClient client;

+private int dataBlkNum;

+private int parityBlkNum;

+private int cellSize;

+private boolean useDNHostname;

+private CachingStrategy cachingStrategy;

+private int stripedReadBufferSize;

+private CompletionService readService;

+private RawErasureDecoder decoder;

+private BlockReader[] blockReaders;

+

+

+VerifyECCommand() {

+ super("verifyEC",

+ "verifyEC -file <file>",

+ " Verify HDFS erasure coding on all block groups of the file.");

+}

+

+int run(List<String> args) throws IOException {

+ if (args.size() < 2) {

+System.out.println(usageText);

+System.out.println(helpText + System.lineSeparator());

+return 1;

+ }

+ String file = StringUtils.popOptionWithArgument("-file", args);

+ Path path = new Path(file);

+ DistributedFileSystem dfs = AdminHelper.getDFS(getConf());

+ this.client = dfs.getClient();

+

+ FileStatus fileStatus;

+ try {

+fileStatus = dfs.getFileStatus(path);

+ } catch (FileNotFoundException e) {

+System.err.println("File " + file + " does not exist.");

+return 1;

+ }

+

+ if (!fileStatus.isFile()) {

+System.err.println("File " + file + " is not a regular file.");

+return 1;

+ }

+ if (!dfs.isFileClosed(path)) {

+System.err.println("File " + file + " is not closed.");

+return 1;

+ }

+ this.useDNHostname =

getConf().getBoolean(DFSConfigKeys.DFS_DATANODE_USE_DN_HOSTNAME,

+ DFSConfigKeys.DFS_DATANODE_USE_DN_HOSTNAME_DEFAULT);

+ this.cachingStrategy = CachingStrategy.newDefaultStrategy();

+ this.stripedReadBufferSize = getConf().getInt(

+ DFSConfigKeys.DFS_DN_EC_RECONSTRUCTION_STRIPED_READ_BUFFER_SIZE_KEY,

+

DFSConfigKeys.DFS_DN_EC_RECONSTRUCTION_STRIPED_READ_BUFFER_SIZE_DEFAULT);

+

+ LocatedBlocks locatedBlocks = client.getLocatedBlocks(file, 0,

fileStatus.getLen());

+ if (locatedBlocks.getErasureCodingPolicy() == null) {

+System.err.println("File " + file + " is not erasure coded.");

+return 1;

+ }

+ ErasureCodingPolicy ecPolicy = locatedBlocks.getErasureCodingPolicy();

+ this.dataBlkNum = ecPolicy.getNumDataUnits();

+ this.parityBlkNum = ecPolicy.getNumParityUnits();

+ this.cellSize = ecPolicy.getCellSize();

+ this.decoder = CodecUtil.createRawDecoder(getConf(),

ecPolicy.getCodecName(),

+ new ErasureCoderOptions(

+ ecPolicy.getNumDataUnits(), ecPolicy.getNumParityUnits()));

+ int blockNum = dataBlkNum + parityBlkNum;

+ this.readService = new ExecutorCompletionService<>(

+ DFSUtilClient.getThreadPoolExecutor(blockNum, blockNum, 60,

+ new LinkedBlockingQueue<>(), "read-", false));

+ this.blockReaders = new BlockReader[dataBlkNum + parityBlkNum];

+

+ for (LocatedBlock locatedBlock : locatedBlocks.getLocatedBlocks()) {

+System.out.println("Checking EC block group: blk_" +

locatedBlock.getBlock().getBlockId());

+LocatedStripedBlock blockGroup = (LocatedStripedBlock) locatedBlock;

+

+try {

+ verifyBlockGroup(blockGroup);

+ System.out.println("Status: OK");

+} catch (Exception e) {

+ System.err.println("Status: ERROR, message: " + e.getMessage());

+ return 1;

+} finally {

+ closeBlockReaders();

+}

+ }

+ System.out.println("\nAll EC block group status: OK");

+ return 0;

+}

+

+private void verifyBlockGroup(LocatedStripedBlock blockGroup) throws

Exception {

+ final LocatedBlock[] indexedBlocks =

StripedBlockUtil.parseStripedBlockGroup(blockGroup,

+ cellSize, dataBlkNum, parityBlkNum);

+

+ int blockNumExpected = Math.min(dataBlkNum,

+ (int) ((blockGroup.getBlockSize() - 1) / cellSize + 1)) +

parityBlkNum;

+ if (blockGroup.getBlockIndices().length < blockNumExpected) {

+throw new Exception("Block group is under-erasure-coded.");

+ }

+

+ long maxBlockLen = 0L;

+ DataChecksum checksum = null;

+ for (int i = 0; i < dataBlkNum + parityBlkNum; i++) {

+LocatedBlock block = indexedBlocks[i];

+if (block == null) {

+ blockReaders[i] = null;

+ continue;

+}

+if (block.getBlockSize() > maxBlockLen) {

+ maxBlockLen = block.getBlockSize();

+}

+BlockReader blockReader = createBlockReader(block
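The `blockNumExpected` check in the quoted `verifyBlockGroup` hunk can be easier to follow with concrete numbers. The following is only a sketch of that arithmetic, not code from the patch: the class name `EcBlockCountSketch`, the method name, the 1 MiB cell size and the 6+3 layout are all assumptions chosen for illustration.

```java
/** Illustrative only: reproduces the arithmetic quoted in the diff, outside HDFS. */
final class EcBlockCountSketch {

  /**
   * Expected number of internal blocks in a block group: one per data cell
   * actually needed (capped at dataBlkNum), plus every parity block.
   */
  static int expectedBlockCount(long blockGroupSize, int cellSize,
      int dataBlkNum, int parityBlkNum) {
    // Ceiling division: cells needed to hold blockGroupSize bytes.
    int cellsNeeded = (int) ((blockGroupSize - 1) / cellSize + 1);
    return Math.min(dataBlkNum, cellsNeeded) + parityBlkNum;
  }

  public static void main(String[] args) {
    int cell = 1024 * 1024; // assumed 1 MiB cell size
    // Assumed layout: 6 data units, 3 parity units.
    System.out.println(expectedBlockCount(1, cell, 6, 3));             // 1 data + 3 parity = 4
    System.out.println(expectedBlockCount(2L * cell + 1, cell, 6, 3)); // 3 data + 3 parity = 6
    System.out.println(expectedBlockCount(100L * cell, cell, 6, 3));   // capped: 6 data + 3 parity = 9
  }
}
```

A block group that reports fewer block indices than this count is what the quoted code rejects as "under-erasure-coded".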

[GitHub] [hadoop] sodonnel commented on a change in pull request #3593: HDFS-16286. Add a debug tool to verify the correctness of erasure coding on file

sodonnel commented on a change in pull request #3593:

URL: https://github.com/apache/hadoop/pull/3593#discussion_r740187297

##

File path:

hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/tools/DebugAdmin.java

##

@@ -387,6 +414,211 @@ int run(List<String> args) throws IOException {

}

}

+ /**

+ * The command for verifying the correctness of erasure coding on an erasure

coded file.

+ */

+ private class VerifyECCommand extends DebugCommand {

+private DFSClient client;

+private int dataBlkNum;

+private int parityBlkNum;

+private int cellSize;

+private boolean useDNHostname;

+private CachingStrategy cachingStrategy;

+private int stripedReadBufferSize;

+private CompletionService readService;

+private RawErasureDecoder decoder;

+private BlockReader[] blockReaders;

+

+

+VerifyECCommand() {

+ super("verifyEC",

+ "verifyEC -file <file>",

+ " Verify HDFS erasure coding on all block groups of the file.");

+}

+

+int run(List<String> args) throws IOException {

+ if (args.size() < 2) {

+System.out.println(usageText);

+System.out.println(helpText + System.lineSeparator());

+return 1;

+ }

+ String file = StringUtils.popOptionWithArgument("-file", args);

+ Path path = new Path(file);

+ DistributedFileSystem dfs = AdminHelper.getDFS(getConf());

+ this.client = dfs.getClient();

+

+ FileStatus fileStatus;

+ try {

+fileStatus = dfs.getFileStatus(path);

+ } catch (FileNotFoundException e) {

+System.err.println("File " + file + " does not exist.");

+return 1;

+ }

+

+ if (!fileStatus.isFile()) {

+System.err.println("File " + file + " is not a regular file.");

+return 1;

+ }

+ if (!dfs.isFileClosed(path)) {

+System.err.println("File " + file + " is not closed.");

+return 1;

+ }

+ this.useDNHostname =

getConf().getBoolean(DFSConfigKeys.DFS_DATANODE_USE_DN_HOSTNAME,

+ DFSConfigKeys.DFS_DATANODE_USE_DN_HOSTNAME_DEFAULT);

+ this.cachingStrategy = CachingStrategy.newDefaultStrategy();

+ this.stripedReadBufferSize = getConf().getInt(

+ DFSConfigKeys.DFS_DN_EC_RECONSTRUCTION_STRIPED_READ_BUFFER_SIZE_KEY,

+

DFSConfigKeys.DFS_DN_EC_RECONSTRUCTION_STRIPED_READ_BUFFER_SIZE_DEFAULT);

+

+ LocatedBlocks locatedBlocks = client.getLocatedBlocks(file, 0,

fileStatus.getLen());

+ if (locatedBlocks.getErasureCodingPolicy() == null) {

+System.err.println("File " + file + " is not erasure coded.");

+return 1;

+ }

+ ErasureCodingPolicy ecPolicy = locatedBlocks.getErasureCodingPolicy();

+ this.dataBlkNum = ecPolicy.getNumDataUnits();

+ this.parityBlkNum = ecPolicy.getNumParityUnits();

+ this.cellSize = ecPolicy.getCellSize();

+ this.decoder = CodecUtil.createRawDecoder(getConf(),

ecPolicy.getCodecName(),

+ new ErasureCoderOptions(

+ ecPolicy.getNumDataUnits(), ecPolicy.getNumParityUnits()));

+ int blockNum = dataBlkNum + parityBlkNum;

+ this.readService = new ExecutorCompletionService<>(

+ DFSUtilClient.getThreadPoolExecutor(blockNum, blockNum, 60,

+ new LinkedBlockingQueue<>(), "read-", false));

+ this.blockReaders = new BlockReader[dataBlkNum + parityBlkNum];

+

+ for (LocatedBlock locatedBlock : locatedBlocks.getLocatedBlocks()) {

+System.out.println("Checking EC block group: blk_" +

locatedBlock.getBlock().getBlockId());

+LocatedStripedBlock blockGroup = (LocatedStripedBlock) locatedBlock;

+

+try {

+ verifyBlockGroup(blockGroup);

+ System.out.println("Status: OK");

+} catch (Exception e) {

+ System.err.println("Status: ERROR, message: " + e.getMessage());

+ return 1;

+} finally {

+ closeBlockReaders();

+}

+ }

+ System.out.println("\nAll EC block group status: OK");

+ return 0;

+}

+

+private void verifyBlockGroup(LocatedStripedBlock blockGroup) throws

Exception {

+ final LocatedBlock[] indexedBlocks =

StripedBlockUtil.parseStripedBlockGroup(blockGroup,

+ cellSize, dataBlkNum, parityBlkNum);

+

+ int blockNumExpected = Math.min(dataBlkNum,

+ (int) ((blockGroup.getBlockSize() - 1) / cellSize + 1)) +

parityBlkNum;

+ if (blockGroup.getBlockIndices().length < blockNumExpected) {

+throw new Exception("Block group is under-erasure-coded.");

+ }

+

+ long maxBlockLen = 0L;

+ DataChecksum checksum = null;

+ for (int i = 0; i < dataBlkNum + parityBlkNum; i++) {

+LocatedBlock block = indexedBlocks[i];

+if (block == null) {

+ blockReaders[i] = null;

+ continue;

+}

+if (block.getBlockSize() > maxBlockLen) {

+ maxBlockLen = block.getBlockSize();

+}

+BlockReader blockReader = createBlockReader(block

[jira] [Work logged] (HADOOP-17981) Support etag-assisted renames in FileOutputCommitter

[

https://issues.apache.org/jira/browse/HADOOP-17981?focusedWorklogId=672604&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-672604

]

ASF GitHub Bot logged work on HADOOP-17981:

---

Author: ASF GitHub Bot

Created on: 01/Nov/21 10:25

Start Date: 01/Nov/21 10:25

Worklog Time Spent: 10m

Work Description: hadoop-yetus removed a comment on pull request #3597:

URL: https://github.com/apache/hadoop/pull/3597#issuecomment-954717074

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |

|:----:|----------:|--------:|:--------:|:-------:|

| +0 :ok: | reexec | 0m 44s | | Docker mode activated. |

_ Prechecks _ |

| +1 :green_heart: | dupname | 0m 1s | | No case conflicting files

found. |

| +0 :ok: | codespell | 0m 0s | | codespell was not available. |

| +1 :green_heart: | @author | 0m 0s | | The patch does not contain

any @author tags. |

| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to

include 3 new or modified test files. |

_ trunk Compile Tests _ |

| +0 :ok: | mvndep | 11m 30s | | Maven dependency ordering for branch |

| +1 :green_heart: | mvninstall | 22m 10s | | trunk passed |

| +1 :green_heart: | compile | 22m 34s | | trunk passed with JDK

Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | compile | 19m 34s | | trunk passed with JDK

Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | checkstyle | 3m 36s | | trunk passed |

| +1 :green_heart: | mvnsite | 4m 23s | | trunk passed |

| +1 :green_heart: | javadoc | 3m 22s | | trunk passed with JDK

Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | javadoc | 3m 47s | | trunk passed with JDK

Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | spotbugs | 6m 11s | | trunk passed |

| +1 :green_heart: | shadedclient | 20m 22s | | branch has no errors

when building and testing our client artifacts. |

_ Patch Compile Tests _ |

| +0 :ok: | mvndep | 0m 29s | | Maven dependency ordering for patch |

| -1 :x: | mvninstall | 0m 24s |

[/patch-mvninstall-hadoop-mapreduce-project_hadoop-mapreduce-client_hadoop-mapreduce-client-core.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3597/3/artifact/out/patch-mvninstall-hadoop-mapreduce-project_hadoop-mapreduce-client_hadoop-mapreduce-client-core.txt)

| hadoop-mapreduce-client-core in the patch failed. |

| -1 :x: | mvninstall | 0m 26s |

[/patch-mvninstall-hadoop-mapreduce-project_hadoop-mapreduce-client_hadoop-mapreduce-client-jobclient.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3597/3/artifact/out/patch-mvninstall-hadoop-mapreduce-project_hadoop-mapreduce-client_hadoop-mapreduce-client-jobclient.txt)

| hadoop-mapreduce-client-jobclient in the patch failed. |

| -1 :x: | compile | 10m 14s |

[/patch-compile-root-jdkUbuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3597/3/artifact/out/patch-compile-root-jdkUbuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04.txt)

| root in the patch failed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04. |

| -1 :x: | javac | 10m 14s |

[/patch-compile-root-jdkUbuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3597/3/artifact/out/patch-compile-root-jdkUbuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04.txt)

| root in the patch failed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04. |

| -1 :x: | compile | 9m 10s |

[/patch-compile-root-jdkPrivateBuild-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3597/3/artifact/out/patch-compile-root-jdkPrivateBuild-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10.txt)

| root in the patch failed with JDK Private

Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10. |

| -1 :x: | javac | 9m 10s |

[/patch-compile-root-jdkPrivateBuild-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3597/3/artifact/out/patch-compile-root-jdkPrivateBuild-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10.txt)

| root in the patch failed with JDK Private

Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10. |

| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks

issues. |

| -0 :warning: | checkstyle | 3m 15s |

[/results-checkstyle-root.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3597/3/artifact/out/results-checkstyle-root.txt)

| root: The patch generated 16 new + 40 unchanged - 0 fixed = 56 total (was

40) |

| -1 :x: | mvnsite | 0m 31s |

[/patch-mvnsite-hadoop-mapreduce-project_hadoop-mapreduce-client_hadoop-mapreduce-client-core.txt]

[jira] [Work logged] (HADOOP-17981) Support etag-assisted renames in FileOutputCommitter

[

https://issues.apache.org/jira/browse/HADOOP-17981?focusedWorklogId=672603&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-672603

]

ASF GitHub Bot logged work on HADOOP-17981:

---

Author: ASF GitHub Bot

Created on: 01/Nov/21 10:25

Start Date: 01/Nov/21 10:25

Worklog Time Spent: 10m

Work Description: hadoop-yetus removed a comment on pull request #3597:

URL: https://github.com/apache/hadoop/pull/3597#issuecomment-954195372

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

Issue Time Tracking

---

Worklog Id: (was: 672603)

Time Spent: 1h 20m (was: 1h 10m)

> Support etag-assisted renames in FileOutputCommitter

>

>

> Key: HADOOP-17981

> URL: https://issues.apache.org/jira/browse/HADOOP-17981

> Project: Hadoop Common

> Issue Type: New Feature

> Components: fs, fs/azure

>Affects Versions: 3.4.0

>Reporter: Steve Loughran

>Assignee: Steve Loughran

>Priority: Major

> Labels: pull-request-available

> Time Spent: 1h 20m

> Remaining Estimate: 0h

>

> To deal with some throttling/retry issues in object stores,

> pass the FileStatus entries retrieved during listing

> into a private interface ResilientCommitByRename which filesystems

> may implement to use extra attributes in the listing (etag, version)

> to constrain and validate the operation.

> Although targeting azure, GCS and others could use. no point in S3A as they

> shouldn't use this committer.

> # And we are not going to do any changes to FileSystem as there are explicit

> guarantees of public use and stability.

> I am not going to make that change as the hive thing that will suddenly start

> expecting it to work forever.

> # I'm not planning to merge this in, as the manifest committer is going to

> include this and more (MAPREDUCE-7341)

> However, I do need to get this in on a branch, so am doing this work on trunk

> for dev & test and for others to review

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: common-issues-h...@hadoop.apache.org
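The issue description above says a filesystem may use listing-time attributes such as the etag to "constrain and validate" a rename. One way that idea could work is sketched below against a toy in-memory store. This is an assumption-laden illustration, not the `ResilientCommitByRename` interface or any Hadoop/ABFS API: the class `EtagRenameSketch`, its methods, and the recovery rule (treat a missing source as success when the destination already carries the listed etag) are all hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical in-memory store keyed by path; not a Hadoop API. */
final class EtagRenameSketch {
  private final Map<String, String> etags = new HashMap<>(); // path -> etag

  void put(String path, String etag) {
    etags.put(path, etag);
  }

  String etagOf(String path) {
    return etags.get(path);
  }

  /**
   * Rename source to dest using the etag captured when the source was listed.
   * Returns true if the rename happened now, false if it was recognised as
   * already completed (for example by an earlier attempt that was retried).
   */
  boolean renameWithEtag(String source, String dest, String listedEtag) {
    String current = etags.get(source);
    if (current == null) {
      // Source is gone: assume success only if dest holds the object we listed.
      if (listedEtag.equals(etags.get(dest))) {
        return false;
      }
      throw new IllegalStateException("Source missing, destination etag mismatch: " + source);
    }
    if (!current.equals(listedEtag)) {
      // Constrain the operation: refuse to move an object that changed since listing.
      throw new IllegalStateException("Source changed since listing: " + source);
    }
    etags.remove(source);
    etags.put(dest, current);
    return true;
  }
}
```

In this sketch a second call with the same arguments returns `false` instead of failing, which is the kind of retry tolerance the description appears to be aiming for; how the real patch handles this case is not shown in this thread.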

[GitHub] [hadoop] hadoop-yetus removed a comment on pull request #3597: HADOOP-17981 Support etag-assisted renames in FileOutputCommitter

hadoop-yetus removed a comment on pull request #3597:

URL: https://github.com/apache/hadoop/pull/3597#issuecomment-954717074

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 44s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 1s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 3 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 11m 30s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 22m 10s | | trunk passed |
| +1 :green_heart: | compile | 22m 34s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 19m 34s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 3m 36s | | trunk passed |
| +1 :green_heart: | mvnsite | 4m 23s | | trunk passed |
| +1 :green_heart: | javadoc | 3m 22s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 3m 47s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 6m 11s | | trunk passed |
| +1 :green_heart: | shadedclient | 20m 22s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 29s | | Maven dependency ordering for patch |
| -1 :x: | mvninstall | 0m 24s | [/patch-mvninstall-hadoop-mapreduce-project_hadoop-mapreduce-client_hadoop-mapreduce-client-core.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3597/3/artifact/out/patch-mvninstall-hadoop-mapreduce-project_hadoop-mapreduce-client_hadoop-mapreduce-client-core.txt) | hadoop-mapreduce-client-core in the patch failed. |
| -1 :x: | mvninstall | 0m 26s | [/patch-mvninstall-hadoop-mapreduce-project_hadoop-mapreduce-client_hadoop-mapreduce-client-jobclient.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3597/3/artifact/out/patch-mvninstall-hadoop-mapreduce-project_hadoop-mapreduce-client_hadoop-mapreduce-client-jobclient.txt) | hadoop-mapreduce-client-jobclient in the patch failed. |
| -1 :x: | compile | 10m 14s | [/patch-compile-root-jdkUbuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3597/3/artifact/out/patch-compile-root-jdkUbuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04.txt) | root in the patch failed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04. |
| -1 :x: | javac | 10m 14s | [/patch-compile-root-jdkUbuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3597/3/artifact/out/patch-compile-root-jdkUbuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04.txt) | root in the patch failed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04. |
| -1 :x: | compile | 9m 10s | [/patch-compile-root-jdkPrivateBuild-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3597/3/artifact/out/patch-compile-root-jdkPrivateBuild-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10.txt) | root in the patch failed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10. |
| -1 :x: | javac | 9m 10s | [/patch-compile-root-jdkPrivateBuild-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3597/3/artifact/out/patch-compile-root-jdkPrivateBuild-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10.txt) | root in the patch failed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10. |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| -0 :warning: | checkstyle | 3m 15s | [/results-checkstyle-root.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3597/3/artifact/out/results-checkstyle-root.txt) | root: The patch generated 16 new + 40 unchanged - 0 fixed = 56 total (was 40) |
| -1 :x: | mvnsite | 0m 31s | [/patch-mvnsite-hadoop-mapreduce-project_hadoop-mapreduce-client_hadoop-mapreduce-client-core.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3597/3/artifact/out/patch-mvnsite-hadoop-mapreduce-project_hadoop-mapreduce-client_hadoop-mapreduce-client-core.txt) | hadoop-mapreduce-client-core in the patch failed. |
| -1 :x: | mvnsite | 0m 33s | [/patch-mvnsite-hadoop-mapreduce-project_hadoop-mapreduce-client_hadoop-mapreduce-client-jobclient.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/P

[GitHub] [hadoop] hadoop-yetus removed a comment on pull request #3597: HADOOP-17981 Support etag-assisted renames in FileOutputCommitter

hadoop-yetus removed a comment on pull request #3597:

URL: https://github.com/apache/hadoop/pull/3597#issuecomment-954195372

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[jira] [Work logged] (HADOOP-17981) Support etag-assisted renames in FileOutputCommitter

[

https://issues.apache.org/jira/browse/HADOOP-17981?focusedWorklogId=672602&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-672602

]

ASF GitHub Bot logged work on HADOOP-17981:

---

Author: ASF GitHub Bot

Created on: 01/Nov/21 10:24

Start Date: 01/Nov/21 10:24

Worklog Time Spent: 10m

Work Description: steveloughran commented on a change in pull request #3597:

URL: https://github.com/apache/hadoop/pull/3597#discussion_r740107063

##

File path:

hadoop-mapreduce-project/hadoop-mapreduce-client/hadoop-mapreduce-client-core/src/main/java/org/apache/hadoop/mapreduce/lib/output/committer/manifest/impl/StoreOperationsThroughFileSystem.java

##

@@ -0,0 +1,271 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hadoop.mapreduce.lib.output.committer.manifest.impl;

+

+import java.io.IOException;

+

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import org.apache.hadoop.fs.CommonPathCapabilities;

+import org.apache.hadoop.fs.FileStatus;

+import org.apache.hadoop.fs.FileSystem;

+import org.apache.hadoop.fs.Path;

+import org.apache.hadoop.fs.PathIOException;

+import org.apache.hadoop.fs.RemoteIterator;

+import org.apache.hadoop.fs.Trash;

+import org.apache.hadoop.fs.impl.ResilientCommitByRename;

+import org.apache.hadoop.mapreduce.lib.output.committer.manifest.files.AbstractManifestData;

+import org.apache.hadoop.mapreduce.lib.output.committer.manifest.files.FileOrDirEntry;

+import org.apache.hadoop.mapreduce.lib.output.committer.manifest.files.TaskManifest;

+import org.apache.hadoop.util.JsonSerialization;

+

+import static org.apache.hadoop.fs.CommonConfigurationKeysPublic.FS_TRASH_INTERVAL_KEY;

+

+/**

+ * Implement task and job operations through the filesystem API.

+ */

+public class StoreOperationsThroughFileSystem extends StoreOperations {

Review comment:

I messed up that commit by cherry-picking from the manifest committer

branch (which I needed to do), but missed that git adds these as files. I have

removed them.


Issue Time Tracking

---

Worklog Id: (was: 672602)

Time Spent: 1h 10m (was: 1h)

> Support etag-assisted renames in FileOutputCommitter

>

>

> Key: HADOOP-17981

> URL: https://issues.apache.org/jira/browse/HADOOP-17981

> Project: Hadoop Common

> Issue Type: New Feature

> Components: fs, fs/azure

> Affects Versions: 3.4.0

> Reporter: Steve Loughran

> Assignee: Steve Loughran

> Priority: Major