[jira] [Commented] (HADOOP-17374) AliyunOSS: support ListObjectsV2

[ https://issues.apache.org/jira/browse/HADOOP-17374?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17438502#comment-17438502 ]

wujinhu commented on HADOOP-17374:
----------------------------------

Thanks [~cheersyang], please also merge this change to branch-3.1, branch-3.2, and branch-3.3.

> AliyunOSS: support ListObjectsV2
> --------------------------------
>
> Key: HADOOP-17374
> URL: https://issues.apache.org/jira/browse/HADOOP-17374
> Project: Hadoop Common
> Issue Type: Sub-task
> Components: fs/oss
> Affects Versions: 2.9.2, 3.0.3, 3.3.0, 3.2.1, 3.1.4, 2.10.1
> Reporter: wujinhu
> Assignee: wujinhu
> Priority: Major
> Labels: pull-request-available
> Time Spent: 10m
> Remaining Estimate: 0h
>
> OSS supports ListObjectsV2 (https://help.aliyun.com/document_detail/187544.html?spm=a2c4g.11186623.6.1589.e0623d9fE1b64S)
> to optimize listing of versioned buckets. We should support this feature in
> the AliyunOSS module.

--
This message was sent by Atlassian Jira (v8.3.4#803005)

---------------------------------------------------------------------
To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org
For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[jira] [Updated] (HADOOP-17374) AliyunOSS: support ListObjectsV2

[ https://issues.apache.org/jira/browse/HADOOP-17374?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

wujinhu updated HADOOP-17374:
----------------------------
Attachment: (was: HADOOP-17374.001.patch)

> AliyunOSS: support ListObjectsV2
> --------------------------------
>
> Key: HADOOP-17374
> URL: https://issues.apache.org/jira/browse/HADOOP-17374
> Project: Hadoop Common
> Issue Type: Sub-task
> Components: fs/oss
> Affects Versions: 2.9.2, 3.0.3, 3.3.0, 3.2.1, 3.1.4, 2.10.1
> Reporter: wujinhu
> Assignee: wujinhu
> Priority: Major
> Labels: pull-request-available
> Time Spent: 10m
> Remaining Estimate: 0h
>
> OSS supports ListObjectsV2 (https://help.aliyun.com/document_detail/187544.html?spm=a2c4g.11186623.6.1589.e0623d9fE1b64S)
> to optimize listing of versioned buckets. We should support this feature in
> the AliyunOSS module.

--
This message was sent by Atlassian Jira (v8.3.4#803005)

---------------------------------------------------------------------
To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org
For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] hadoop-yetus commented on pull request #3613: HDFS-16296. RouterRpcFairnessPolicyController add rejected permits for each nameservice

hadoop-yetus commented on pull request #3613:

URL: https://github.com/apache/hadoop/pull/3613#issuecomment-960483188

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 1m 15s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 2 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 33m 0s | | trunk passed |
| +1 :green_heart: | compile | 0m 47s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 0m 41s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 0m 28s | | trunk passed |
| +1 :green_heart: | mvnsite | 0m 43s | | trunk passed |
| +1 :green_heart: | javadoc | 0m 45s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 0m 52s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 1m 20s | | trunk passed |
| +1 :green_heart: | shadedclient | 20m 21s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 0m 33s | | the patch passed |
| +1 :green_heart: | compile | 0m 33s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 0m 33s | | the patch passed |
| +1 :green_heart: | compile | 0m 31s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 0m 31s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 0m 17s | | the patch passed |
| +1 :green_heart: | mvnsite | 0m 33s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 33s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 0m 49s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 1m 19s | | the patch passed |
| +1 :green_heart: | shadedclient | 20m 5s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| -1 :x: | unit | 34m 16s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs-rbf.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3613/4/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs-rbf.txt) | hadoop-hdfs-rbf in the patch passed. |
| +1 :green_heart: | asflicense | 0m 38s | | The patch does not generate ASF License warnings. |
| | | 121m 55s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.rbfbalance.TestRouterDistCpProcedure |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3613/4/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3613 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux 6b97e564ae90 4.15.0-112-generic #113-Ubuntu SMP Thu Jul 9 23:41:39 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 9df5178b099d7039f1848b9e526e6c66b6c2a8bd |
| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3613/4/testReport/ |
| Max. process+thread count | 2706 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project/hadoop-hdfs-rbf U: hadoop-hdfs-project/hadoop-hdfs-rbf |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3613/4/console |
| versions | git=2.25.1 maven=3.6.3 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.

--

This is an automated message from the Apache Git Service.


[jira] [Commented] (HADOOP-17374) AliyunOSS: support ListObjectsV2

[ https://issues.apache.org/jira/browse/HADOOP-17374?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17438479#comment-17438479 ]

Weiwei Yang commented on HADOOP-17374:
--------------------------------------

Looks good, I have merged the PR to trunk. Which other branches need this change?

> AliyunOSS: support ListObjectsV2
> --------------------------------
>
> Key: HADOOP-17374
> URL: https://issues.apache.org/jira/browse/HADOOP-17374
> Project: Hadoop Common
> Issue Type: Sub-task
> Components: fs/oss
> Affects Versions: 2.9.2, 3.0.3, 3.3.0, 3.2.1, 3.1.4, 2.10.1
> Reporter: wujinhu
> Assignee: wujinhu
> Priority: Major
> Labels: pull-request-available
> Attachments: HADOOP-17374.001.patch
>
> Time Spent: 10m
> Remaining Estimate: 0h
>
> OSS supports ListObjectsV2 (https://help.aliyun.com/document_detail/187544.html?spm=a2c4g.11186623.6.1589.e0623d9fE1b64S)
> to optimize listing of versioned buckets. We should support this feature in
> the AliyunOSS module.

--
This message was sent by Atlassian Jira (v8.3.4#803005)

---------------------------------------------------------------------
To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org
For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[jira] [Work logged] (HADOOP-17374) AliyunOSS: support ListObjectsV2

[ https://issues.apache.org/jira/browse/HADOOP-17374?focusedWorklogId=676204&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-676204 ] ASF GitHub Bot logged work on HADOOP-17374: --- Author: ASF GitHub Bot Created on: 04/Nov/21 04:48 Start Date: 04/Nov/21 04:48 Worklog Time Spent: 10m Work Description: yangwwei merged pull request #3587: URL: https://github.com/apache/hadoop/pull/3587 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 676204) Remaining Estimate: 0h Time Spent: 10m > AliyunOSS: support ListObjectsV2 > > > Key: HADOOP-17374 > URL: https://issues.apache.org/jira/browse/HADOOP-17374 > Project: Hadoop Common > Issue Type: Sub-task > Components: fs/oss >Affects Versions: 2.9.2, 3.0.3, 3.3.0, 3.2.1, 3.1.4, 2.10.1 >Reporter: wujinhu >Assignee: wujinhu >Priority: Major > Labels: pull-request-available > Attachments: HADOOP-17374.001.patch > > Time Spent: 10m > Remaining Estimate: 0h > > OSS supports > ListObjectsV2([https://help.aliyun.com/document_detail/187544.html?spm=a2c4g.11186623.6.1589.e0623d9fE1b64S)] > to optimize versioning bucket list. We should support this feature in > AliyunOSS module. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] yangwwei merged pull request #3587: HADOOP-17374. support listObjectV2

yangwwei merged pull request #3587: URL: https://github.com/apache/hadoop/pull/3587 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] ferhui commented on pull request #3613: HDFS-16296. RouterRpcFairnessPolicyController add rejected permits for each nameservice

ferhui commented on pull request #3613: URL: https://github.com/apache/hadoop/pull/3613#issuecomment-960449761 @symious Thanks for contribution. @goiri Thanks for review. Will merge tomorrow if no other comments. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] ferhui commented on pull request #3602: HDFS-16291.Make the comment of INode#ReclaimContext more standardized.

ferhui commented on pull request #3602: URL: https://github.com/apache/hadoop/pull/3602#issuecomment-960369289 @jianghuazhu Thanks for contribution. @virajjasani @tomscut Thanks for review! Merged -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] tomscut commented on pull request #3538: HDFS-16266. Add remote port information to HDFS audit log

tomscut commented on pull request #3538: URL: https://github.com/apache/hadoop/pull/3538#issuecomment-960359159 Thanks @tasanuma for the merge. Thanks all for your reviews and suggestions. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] ferhui merged pull request #3602: HDFS-16291.Make the comment of INode#ReclaimContext more standardized.

ferhui merged pull request #3602: URL: https://github.com/apache/hadoop/pull/3602 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[jira] [Work logged] (HADOOP-17981) Support etag-assisted renames in FileOutputCommitter

[ https://issues.apache.org/jira/browse/HADOOP-17981?focusedWorklogId=676132&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-676132 ] ASF GitHub Bot logged work on HADOOP-17981: --- Author: ASF GitHub Bot Created on: 04/Nov/21 02:01 Start Date: 04/Nov/21 02:01 Worklog Time Spent: 10m Work Description: hadoop-yetus removed a comment on pull request #3597: URL: https://github.com/apache/hadoop/pull/3597#issuecomment-956975430 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 676132) Time Spent: 8h 20m (was: 8h 10m) > Support etag-assisted renames in FileOutputCommitter > > > Key: HADOOP-17981 > URL: https://issues.apache.org/jira/browse/HADOOP-17981 > Project: Hadoop Common > Issue Type: New Feature > Components: fs, fs/azure >Affects Versions: 3.4.0 >Reporter: Steve Loughran >Assignee: Steve Loughran >Priority: Major > Labels: pull-request-available > Time Spent: 8h 20m > Remaining Estimate: 0h > > To deal with some throttling/retry issues in object stores, > pass the FileStatus entries retrieved during listing > into a private interface ResilientCommitByRename which filesystems > may implement to use extra attributes in the listing (etag, version) > to constrain and validate the operation. > Although targeting azure, GCS and others could use. no point in S3A as they > shouldn't use this committer. > # And we are not going to do any changes to FileSystem as there are explicit > guarantees of public use and stability. > I am not going to make that change as the hive thing that will suddenly start > expecting it to work forever. 
> # I'm not planning to merge this in, as the manifest committer is going to > include this and more (MAPREDUCE-7341) > However, I do need to get this in on a branch, so am doing this work on trunk > for dev & test and for others to review -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] hadoop-yetus removed a comment on pull request #3597: HADOOP-17981 Support etag-assisted renames in FileOutputCommitter

hadoop-yetus removed a comment on pull request #3597: URL: https://github.com/apache/hadoop/pull/3597#issuecomment-956975430 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org
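
The HADOOP-17981 description quoted above proposes passing the FileStatus entries from a listing into the rename so that attributes such as the etag can constrain and validate the operation. A minimal Java sketch of that idea follows. It is an illustration only: `EtagRenameSketch`, `ListedEntry`, `commitByRename`, and the in-memory map are all hypothetical names invented here, and nothing in it reflects the actual `ResilientCommitByRename` interface or any Hadoop FileSystem API.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical sketch; none of these names are Hadoop APIs. */
final class EtagRenameSketch {

  /** A listing entry that remembers the etag seen when the directory was listed. */
  static final class ListedEntry {
    final String path;
    final String etag;

    ListedEntry(String path, String etag) {
      this.path = path;
      this.etag = etag;
    }
  }

  /** Stand-in for an object store: path -> current etag. */
  private final Map<String, String> store = new HashMap<>();

  void put(String path, String etag) {
    store.put(path, etag);
  }

  boolean exists(String path) {
    return store.containsKey(path);
  }

  /**
   * Rename source to dest only if the source still carries the etag
   * recorded at listing time; otherwise refuse and leave the store untouched.
   * No extra lookup request is modelled: the etag comes from the listing.
   */
  boolean commitByRename(ListedEntry source, String dest) {
    String current = store.get(source.path);
    if (current == null || !current.equals(source.etag)) {
      return false; // source vanished or changed since the listing
    }
    store.remove(source.path);
    store.put(dest, current);
    return true;
  }

  public static void main(String[] args) {
    EtagRenameSketch fs = new EtagRenameSketch();
    fs.put("/job/_temporary/part-0000", "etag-1");
    ListedEntry listed = new ListedEntry("/job/_temporary/part-0000", "etag-1");
    System.out.println(fs.commitByRename(listed, "/job/part-0000"));
  }
}
```

In this sketch the caller reuses what the listing already returned, which is the trade-off raised in the review thread: validating against the listed etag avoids a separate status request before each rename.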

[GitHub] [hadoop] sodonnel merged pull request #3593: HDFS-16286. Add a debug tool to verify the correctness of erasure coding on file

sodonnel merged pull request #3593: URL: https://github.com/apache/hadoop/pull/3593 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] tasanuma commented on pull request #3538: HDFS-16266. Add remote port information to HDFS audit log

tasanuma commented on pull request #3538: URL: https://github.com/apache/hadoop/pull/3538#issuecomment-960354847 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] ferhui commented on pull request #3613: HDFS-16296. RouterRpcFairnessPolicyController add rejected permits for each nameservice

ferhui commented on pull request #3613: URL: https://github.com/apache/hadoop/pull/3613#issuecomment-958680152 @symious Thanks for contribution, it looks good. Let's wait for the CI reports. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[jira] [Work logged] (HADOOP-17981) Support etag-assisted renames in FileOutputCommitter

[ https://issues.apache.org/jira/browse/HADOOP-17981?focusedWorklogId=676063&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-676063 ] ASF GitHub Bot logged work on HADOOP-17981: --- Author: ASF GitHub Bot Created on: 04/Nov/21 01:55 Start Date: 04/Nov/21 01:55 Worklog Time Spent: 10m Work Description: sidseth commented on pull request #3597: URL: https://github.com/apache/hadoop/pull/3597#issuecomment-958658416 > > This mechanism becomes very FileSystem specific. Implemented by Azure right now. > > I agree, which is why the API is restricted for its uses to mr-client-core only. as abfs is the only one which needs it for correctness under load, And I'm not worried about that specifity. Can I point to how much of the hadoop fs api are hdfs-only -and they are public. > > > Other users of rename will not see the benefits without changing interfaces, which in turn requires shimming etc. > > Please don't try and use this particular interface in Hive. > Was referring to any potential usage - including Hive. > > Would it be better for AzureFileSystem rename itself to add a config parameter which can lookup the src etag (at the cost of a performance hit for consistency), so that downstream components / any users of the rename operation can benefit from this change without having to change interfaces. > > We are going straight from a listing (1 request/500 entries) to that rename. doing a HEAD first cuts the throughtput in half. so no. > In the scenario where this is encountered. Would not be the default behaviour, and limits the change to Abfs. Could also have the less consistent version which is not etag based, and responds only on failures. Again - limited to Abfs. > > Also, if the performance penalty is a big problem - Abfs could create very short-lived caches for FileStatus objects, and handle errors on discrepancies with the cached copy. > > Possible but convoluted. > Agree. Quite convoluted. 
Tossing in potential options - to avoid a new public API. > > Essentially - don't force usage of the new interface to get the benefits. > > I understand the interests of the hive team, but this fix is not the place to do a better API. > > Briefly cacheing the source FS entries is something to consider though. Not this week. > > What I could do with is some help getting #2735 in, then we can start on a public rename() builder API which will take a file status, as openFile does. > This particular change would be FSImpl agnostic, and potentially remove the need for the new interface here? > > Side note: The fs.getStatus within ResilientCommitByRenameHelper for FileSystems where this new functionality is not supported will lead to a performance penalty for the other FileSystems (performing a getFileStatus on src). > > There is an option to say "i know it is not there"; this skips the check. the committer passes this option down because it issues a delete call first. > EOD - this ends up being a new API (almost on the FileSystem), which is used by the committer first; then someone discovers it and decides to make use of it. > FWIW the manifest committer will make that pre-rename commit optional, saving that IO request. I am curious as to how well that will work I went executed on well formed tables. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. 
To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 676063) Time Spent: 8h 10m (was: 8h) > Support etag-assisted renames in FileOutputCommitter > > > Key: HADOOP-17981 > URL: https://issues.apache.org/jira/browse/HADOOP-17981 > Project: Hadoop Common > Issue Type: New Feature > Components: fs, fs/azure >Affects Versions: 3.4.0 >Reporter: Steve Loughran >Assignee: Steve Loughran >Priority: Major > Labels: pull-request-available > Time Spent: 8h 10m > Remaining Estimate: 0h > > To deal with some throttling/retry issues in object stores, > pass the FileStatus entries retrieved during listing > into a private interface ResilientCommitByRename which filesystems > may implement to use extra attributes in the listing (etag, version) > to constrain and validate the operation. > Although targeting azure, GCS and others could use. no point in S3A as they > shouldn't use this committer. > # And we are not going to do any changes to FileSystem as

[jira] [Work logged] (HADOOP-17981) Support etag-assisted renames in FileOutputCommitter

[ https://issues.apache.org/jira/browse/HADOOP-17981?focusedWorklogId=676062&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-676062 ] ASF GitHub Bot logged work on HADOOP-17981: --- Author: ASF GitHub Bot Created on: 04/Nov/21 01:55 Start Date: 04/Nov/21 01:55 Worklog Time Spent: 10m Work Description: steveloughran closed pull request #3597: URL: https://github.com/apache/hadoop/pull/3597 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 676062) Time Spent: 8h (was: 7h 50m) > Support etag-assisted renames in FileOutputCommitter > > > Key: HADOOP-17981 > URL: https://issues.apache.org/jira/browse/HADOOP-17981 > Project: Hadoop Common > Issue Type: New Feature > Components: fs, fs/azure >Affects Versions: 3.4.0 >Reporter: Steve Loughran >Assignee: Steve Loughran >Priority: Major > Labels: pull-request-available > Time Spent: 8h > Remaining Estimate: 0h > > To deal with some throttling/retry issues in object stores, > pass the FileStatus entries retrieved during listing > into a private interface ResilientCommitByRename which filesystems > may implement to use extra attributes in the listing (etag, version) > to constrain and validate the operation. > Although targeting azure, GCS and others could use. no point in S3A as they > shouldn't use this committer. > # And we are not going to do any changes to FileSystem as there are explicit > guarantees of public use and stability. > I am not going to make that change as the hive thing that will suddenly start > expecting it to work forever. 
> # I'm not planning to merge this in, as the manifest committer is going to > include this and more (MAPREDUCE-7341) > However, I do need to get this in on a branch, so am doing this work on trunk > for dev & test and for others to review -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] haiyang1987 commented on pull request #3596: HDFS-16287. Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

haiyang1987 commented on pull request #3596: URL: https://github.com/apache/hadoop/pull/3596#issuecomment-958731868 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

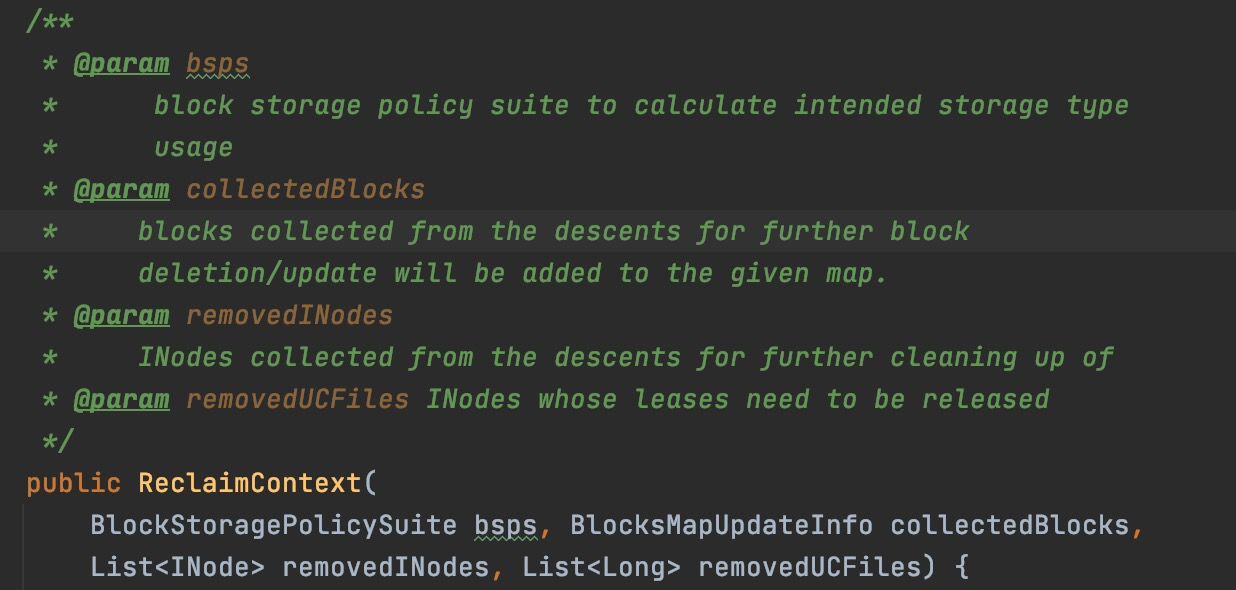

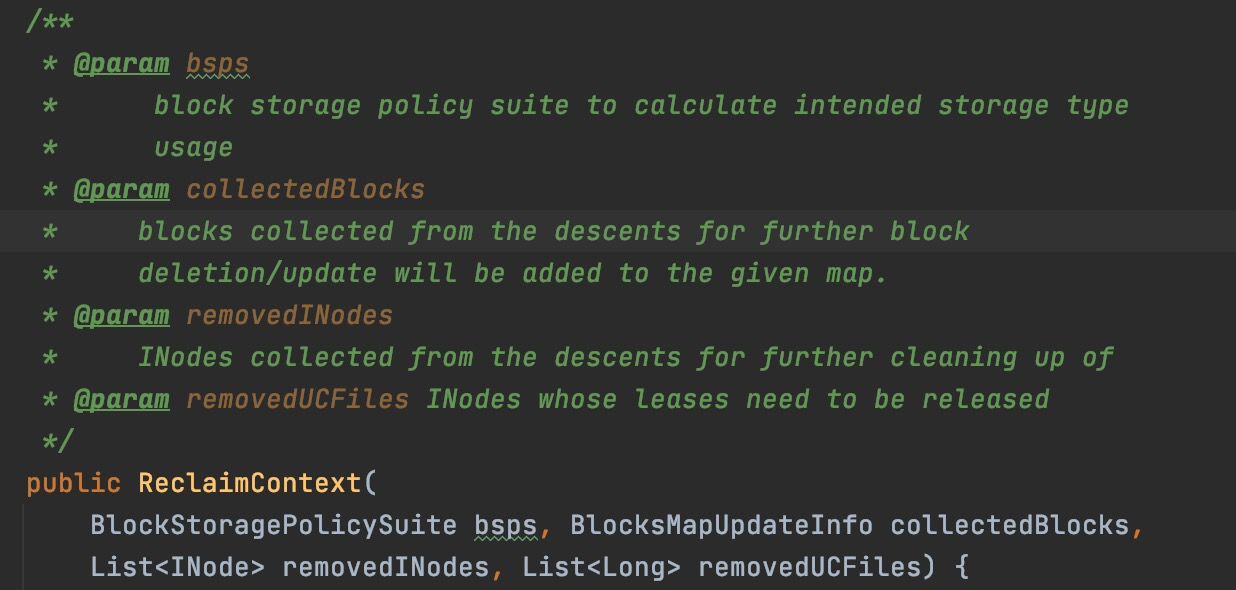

[GitHub] [hadoop] jianghuazhu commented on a change in pull request #3602: HDFS-16291.Make the comment of INode#ReclaimContext more standardized.

jianghuazhu commented on a change in pull request #3602:

URL: https://github.com/apache/hadoop/pull/3602#discussion_r741662220

##

File path:

hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/namenode/INode.java

##

@@ -993,15 +993,13 @@ public long getNsDelta() {

private final QuotaDelta quotaDelta;

/**

- * @param bsps

- * block storage policy suite to calculate intended storage type

Review comment:

Thanks @ferhui for the comment and review.

I will update it later.

The new style will look like this:

[GitHub] [hadoop] sidseth commented on pull request #3597: HADOOP-17981 Support etag-assisted renames in FileOutputCommitter

sidseth commented on pull request #3597: URL: https://github.com/apache/hadoop/pull/3597#issuecomment-958658416 > > This mechanism becomes very FileSystem specific. Implemented by Azure right now. > > I agree, which is why the API is restricted for its uses to mr-client-core only, as abfs is the only one which needs it for correctness under load, and I'm not worried about that specificity. Can I point to how much of the hadoop fs api is hdfs-only, and it is public. > > > Other users of rename will not see the benefits without changing interfaces, which in turn requires shimming etc. > > Please don't try and use this particular interface in Hive. > Was referring to any potential usage - including Hive. > > Would it be better for AzureFileSystem rename itself to add a config parameter which can look up the src etag (at the cost of a performance hit for consistency), so that downstream components / any users of the rename operation can benefit from this change without having to change interfaces. > > We are going straight from a listing (1 request/500 entries) to that rename. Doing a HEAD first cuts the throughput in half. So no. > In the scenario where this is encountered. Would not be the default behaviour, and limits the change to Abfs. Could also have the less consistent version which is not etag based, and responds only on failures. Again - limited to Abfs. > > Also, if the performance penalty is a big problem - Abfs could create very short-lived caches for FileStatus objects, and handle errors on discrepancies with the cached copy. > > Possible but convoluted. > Agree. Quite convoluted. Tossing in potential options - to avoid a new public API. > > Essentially - don't force usage of the new interface to get the benefits. > > I understand the interests of the hive team, but this fix is not the place to do a better API. > > Briefly caching the source FS entries is something to consider though. Not this week.
> > What I could do with is some help getting #2735 in, then we can start on a public rename() builder API which will take a file status, as openFile does. > This particular change would be FSImpl agnostic, and potentially remove the need for the new interface here? > > Side note: The fs.getStatus within ResilientCommitByRenameHelper for FileSystems where this new functionality is not supported will lead to a performance penalty for the other FileSystems (performing a getFileStatus on src). > > There is an option to say "i know it is not there"; this skips the check. The committer passes this option down because it issues a delete call first. > EOD - this ends up being a new API (almost on the FileSystem), which is used by the committer first; then someone discovers it and decides to make use of it. > FWIW the manifest committer will make that pre-rename commit optional, saving that IO request. I am curious as to how well that will work when executed on well formed tables. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] steveloughran closed pull request #3597: HADOOP-17981 Support etag-assisted renames in FileOutputCommitter

steveloughran closed pull request #3597: URL: https://github.com/apache/hadoop/pull/3597 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: common-issues-h...@hadoop.apache.org

[GitHub] [hadoop] tomscut commented on a change in pull request #3596: HDFS-16287. Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

tomscut commented on a change in pull request #3596:

URL: https://github.com/apache/hadoop/pull/3596#discussion_r742015162

##

File path:

hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/blockmanagement/DatanodeManager.java

##

@@ -511,7 +505,16 @@ private boolean isInactive(DatanodeInfo datanode) {

private boolean isSlowNode(String dnUuid) {

return avoidSlowDataNodesForRead && slowNodesUuidSet.contains(dnUuid);

}

-

+

+ public void setAvoidSlowDataNodesForReadEnabled(boolean enable) {

Review comment:

Thanks @haiyang1987 for your comment. I think the current logic is fine.

I mean that we call `startSlowPeerCollector` only when `excludeSlowNodesEnabled` is set to `true`, and `stopSlowPeerCollector` when `excludeSlowNodesEnabled` is set to `false`. There is no extra overhead. What do you think?
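A minimal sketch of the start/stop behaviour described in this comment, under stated assumptions: `SlowPeerToggleSketch`, the executor-based collector, and `isCollectorRunning` are hypothetical names for illustration, not the actual `DatanodeManager` code. Only `excludeSlowNodesEnabled`, `startSlowPeerCollector`, and `stopSlowPeerCollector` come from the comment.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

/** Hypothetical stand-in for the toggle logic; not the real DatanodeManager. */
class SlowPeerToggleSketch {
  private ScheduledExecutorService collector; // null while the collector is stopped
  private boolean excludeSlowNodesEnabled;

  /** Starts the collector on false -> true and stops it on true -> false. */
  synchronized void setExcludeSlowNodesEnabled(boolean enable) {
    if (enable == excludeSlowNodesEnabled) {
      return; // no state change, so nothing to start or stop
    }
    excludeSlowNodesEnabled = enable;
    if (enable) {
      startSlowPeerCollector();
    } else {
      stopSlowPeerCollector();
    }
  }

  private void startSlowPeerCollector() {
    collector = Executors.newSingleThreadScheduledExecutor(r -> {
      Thread t = new Thread(r, "slow-peer-collector");
      t.setDaemon(true); // do not keep the JVM alive for this sketch
      return t;
    });
    // Placeholder task: a real collector would refresh the slow-node set here.
    collector.scheduleWithFixedDelay(() -> { }, 0, 1, TimeUnit.SECONDS);
  }

  private void stopSlowPeerCollector() {
    collector.shutdownNow();
    collector = null;
  }

  synchronized boolean isCollectorRunning() {
    return collector != null;
  }
}
```

With this shape the collector thread exists only while the flag is `true`, which is the "no extra overhead" point made in the comment.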


[GitHub] [hadoop] hadoop-yetus commented on pull request #3602: HDFS-16291.Make the comment of INode#ReclaimContext more standardized.

hadoop-yetus commented on pull request #3602:

URL: https://github.com/apache/hadoop/pull/3602#issuecomment-959335456

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 56s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 2s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| -1 :x: | test4tests | 0m 0s | | The patch doesn't appear to include any new or modified tests. Please justify why no new tests are needed for this patch. Also please list what manual steps were performed to verify this patch. |
|||| _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 35m 13s | | trunk passed |
| +1 :green_heart: | compile | 1m 22s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 1m 15s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 1m 2s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 22s | | trunk passed |
| +1 :green_heart: | javadoc | 0m 57s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 26s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 15s | | trunk passed |
| +1 :green_heart: | shadedclient | 25m 3s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 15s | | the patch passed |
| +1 :green_heart: | compile | 1m 17s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 1m 17s | | the patch passed |
| +1 :green_heart: | compile | 1m 10s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 1m 10s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 0m 54s | | the patch passed |
| +1 :green_heart: | mvnsite | 1m 14s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 47s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 17s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 19s | | the patch passed |
| +1 :green_heart: | shadedclient | 26m 58s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| -1 :x: | unit | 348m 51s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3602/2/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 39s | | The patch does not generate ASF License warnings. |
| | | 457m 26s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.TestHDFSFileSystemContract |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3602/2/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3602 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux 5d5d26c42b34 4.15.0-147-generic #151-Ubuntu SMP Fri Jun 18 19:21:19 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 673f55d0883ee7bf09e70202f14d4e334adc3cc5 |
| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3602/2/testReport/ |
| Max. process+thread count | 1906 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project/hadoop-hdfs U: hadoop-hdfs-project/hadoop-hdfs |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3602/2/console |
| versions | git=2.25.1 maven=3.6.3 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was aut

[GitHub] [hadoop] goiri commented on a change in pull request #3553: HDFS-16273. RBF: RouterRpcFairnessPolicyController add availableHandl…

goiri commented on a change in pull request #3553:

URL: https://github.com/apache/hadoop/pull/3553#discussion_r742198185

##

File path:

hadoop-hdfs-project/hadoop-hdfs-rbf/src/main/java/org/apache/hadoop/hdfs/server/federation/fairness/NoRouterRpcFairnessPolicyController.java

##

@@ -46,4 +46,9 @@ public void releasePermit(String nsId) {

public void shutdown() {

// Nothing for now.

}

+

+ @Override

+ public String getAvailableHandlerOnPerNs(){

+return "N/A";

Review comment:

Should we test for this?

##

File path:

hadoop-hdfs-project/hadoop-hdfs-rbf/src/main/java/org/apache/hadoop/hdfs/server/federation/fairness/AbstractRouterRpcFairnessPolicyController.java

##

@@ -75,4 +77,17 @@ protected void insertNameServiceWithPermits(String nsId, int maxPermits) {

protected int getAvailablePermits(String nsId) {

return this.permits.get(nsId).availablePermits();

}

+

+ @Override

+ public String getAvailableHandlerOnPerNs() {

+JSONObject json = new JSONObject();

+for (Map.Entry entry : permits.entrySet()) {

+ try {

+json.put(entry.getKey(), entry.getValue().availablePermits());

Review comment:

Let's extract `entry.getKey()` and `entry.getValue()` into local variables with descriptive names.
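A minimal sketch of what this suggestion could look like, under stated assumptions: `PermitReportSketch`, `availableHandlersPerNs`, and the local names `nsId` and `availablePermits` are hypothetical, the permits map is assumed to hold `java.util.concurrent.Semaphore` values, and a plain `StringBuilder` stands in for the `JSONObject` used in the patch so the example needs no extra library.

```java
import java.util.Map;
import java.util.TreeMap;
import java.util.concurrent.Semaphore;

/** Hypothetical illustration of naming the entry key and value. */
class PermitReportSketch {

  /** Renders the available permits per nameservice as a small JSON-like string. */
  static String availableHandlersPerNs(Map<String, Semaphore> permits) {
    StringBuilder json = new StringBuilder("{");
    for (Map.Entry<String, Semaphore> entry : permits.entrySet()) {
      // Named locals instead of inline entry.getKey() / entry.getValue().
      String nsId = entry.getKey();
      int availablePermits = entry.getValue().availablePermits();
      if (json.length() > 1) {
        json.append(',');
      }
      // Note: nsId is not escaped; acceptable for a sketch, not for real JSON output.
      json.append('"').append(nsId).append("\":").append(availablePermits);
    }
    return json.append('}').toString();
  }

  public static void main(String[] args) {
    Map<String, Semaphore> permits = new TreeMap<>();
    permits.put("ns0", new Semaphore(3));
    permits.put("ns1", new Semaphore(5));
    System.out.println(availableHandlersPerNs(permits)); // prints {"ns0":3,"ns1":5}
  }
}
```

The named locals make the loop body read as "nameservice id maps to available permits" without the reader having to recall what the map's key and value types mean.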


[GitHub] [hadoop] haiyang1987 commented on a change in pull request #3596: HDFS-16287. Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

haiyang1987 commented on a change in pull request #3596:

URL: https://github.com/apache/hadoop/pull/3596#discussion_r741696525

##

File path:

hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/blockmanagement/DatanodeManager.java

##

@@ -260,17 +257,14 @@

final Timer timer = new Timer();

this.slowPeerTracker = dataNodePeerStatsEnabled ?

new SlowPeerTracker(conf, timer) : null;

-this.excludeSlowNodesEnabled = conf.getBoolean(

-DFS_NAMENODE_BLOCKPLACEMENTPOLICY_EXCLUDE_SLOW_NODES_ENABLED_KEY,

-DFS_NAMENODE_BLOCKPLACEMENTPOLICY_EXCLUDE_SLOW_NODES_ENABLED_DEFAULT);

this.maxSlowPeerReportNodes = conf.getInt(

DFSConfigKeys.DFS_NAMENODE_MAX_SLOWPEER_COLLECT_NODES_KEY,

DFSConfigKeys.DFS_NAMENODE_MAX_SLOWPEER_COLLECT_NODES_DEFAULT);

this.slowPeerCollectionInterval = conf.getTimeDuration(

DFSConfigKeys.DFS_NAMENODE_SLOWPEER_COLLECT_INTERVAL_KEY,

DFSConfigKeys.DFS_NAMENODE_SLOWPEER_COLLECT_INTERVAL_DEFAULT,

TimeUnit.MILLISECONDS);

-if (slowPeerTracker != null && excludeSlowNodesEnabled) {

Review comment:

@tomscut Thank you for your review.

1. Currently the parameters `dataNodePeerStatsEnabled` and `excludeSlowNodesEnabled` decide whether the SlowPeerCollector thread is started, but this does not take the `avoidSlowDataNodesForRead` logic into account.

2. So consider two phases:

a. First, start the SlowPeerCollector thread.

b. Second, control whether reads/writes avoid slow datanodes through dynamic parameters.
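A minimal sketch of the two-phase idea above, under stated assumptions: `TwoPhaseSlowNodeSketch`, `recordSlowNode`, `excludeSlowNodesForWrite`, and the method names are hypothetical, and the collector is reduced to a method that fills the set. Only `slowNodesUuidSet` and `avoidSlowDataNodesForRead` appear in the quoted diff.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical illustration: collection is always on, avoidance is switchable. */
class TwoPhaseSlowNodeSketch {
  // Phase a: the set is filled by the collector regardless of the switches below.
  private final Set<String> slowNodesUuidSet = ConcurrentHashMap.newKeySet();

  // Phase b: dynamic switches, flipped at runtime without touching the collector.
  private volatile boolean avoidSlowDataNodesForRead;
  private volatile boolean excludeSlowNodesForWrite;

  /** Stand-in for what the collector thread would do on each run. */
  void recordSlowNode(String dnUuid) {
    slowNodesUuidSet.add(dnUuid);
  }

  void setAvoidSlowDataNodesForReadEnabled(boolean enable) {
    avoidSlowDataNodesForRead = enable;
  }

  void setExcludeSlowNodesForWriteEnabled(boolean enable) {
    excludeSlowNodesForWrite = enable;
  }

  /** A node is skipped for reads only if the read switch is on and it is slow. */
  boolean isSlowNodeForRead(String dnUuid) {
    return avoidSlowDataNodesForRead && slowNodesUuidSet.contains(dnUuid);
  }

  /** A node is skipped for writes only if the write switch is on and it is slow. */
  boolean isSlowNodeForWrite(String dnUuid) {
    return excludeSlowNodesForWrite && slowNodesUuidSet.contains(dnUuid);
  }
}
```

The trade-off against starting and stopping the collector on each toggle is that the set is already populated when a switch is turned on, at the cost of collecting even while both switches are off.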

##

File path:

hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/blockmanagement/DatanodeManager.java

##

@@ -511,7 +505,16 @@ private boolean isInactive(DatanodeInfo datanode) {

private boolean isSlowNode(String dnUuid) {

return avoidSlowDataNodesForRead && slowNodesUuidSet.contains(dnUuid);

}

-

+

+ public void setAvoidSlowDataNodesForReadEnabled(boolean enable) {

Review comment:

Since `slowNodesUuidSet` is only generated once the SlowPeerCollector thread has started, isn't it logical to check whether the dnUuid exists in `slowNodesUuidSet`?


[jira] [Work logged] (HADOOP-17124) Support LZO using aircompressor

[ https://issues.apache.org/jira/browse/HADOOP-17124?focusedWorklogId=675999&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-675999 ] ASF GitHub Bot logged work on HADOOP-17124: --- Author: ASF GitHub Bot Created on: 04/Nov/21 01:49 Start Date: 04/Nov/21 01:49 Worklog Time Spent: 10m Work Description: hadoop-yetus commented on pull request #3612: URL: https://github.com/apache/hadoop/pull/3612#issuecomment-958607089 Issue Time Tracking --- Worklog Id: (was: 675999) Time Spent: 6h 40m (was: 6.5h) > Support LZO using aircompressor > --- > > Key: HADOOP-17124 > URL: https://issues.apache.org/jira/browse/HADOOP-17124 > Project: Hadoop Common > Issue Type: New Feature > Components: common > Affects Versions: 3.3.0 > Reporter: DB Tsai > Priority: Major > Labels: pull-request-available > Time Spent: 6h 40m > Remaining Estimate: 0h > > The LZO codec was removed in HADOOP-4874 because the original LZO binding is GPL-licensed, which is problematic. However, much legacy data is still compressed with the LZO codec, and companies often put a vendor's GPL LZO codec on the classpath, which might cause GPL contamination. > Presto and ORC-77 use [aircompressor|https://github.com/airlift/aircompressor] (Apache V2 licensed) to compress and decompress LZO data. Hadoop can add back LZO support using aircompressor without GPL violation.

[GitHub] [hadoop] cndaimin commented on a change in pull request #3593: HDFS-16286. Add a debug tool to verify the correctness of erasure coding on file

cndaimin commented on a change in pull request #3593:

URL: https://github.com/apache/hadoop/pull/3593#discussion_r741582271

##

File path:

hadoop-hdfs-project/hadoop-hdfs/src/test/java/org/apache/hadoop/hdfs/tools/TestDebugAdmin.java

##

@@ -166,8 +179,91 @@ public void testComputeMetaCommand() throws Exception {

@Test(timeout = 6)

public void testRecoverLeaseforFileNotFound() throws Exception {

+cluster = new MiniDFSCluster.Builder(conf).numDataNodes(1).build();

+cluster.waitActive();

assertTrue(runCmd(new String[] {

"recoverLease", "-path", "/foo", "-retries", "2" }).contains(

"Giving up on recoverLease for /foo after 1 try"));

}

+

+ @Test(timeout = 6)

+ public void testVerifyECCommand() throws Exception {

+final ErasureCodingPolicy ecPolicy = SystemErasureCodingPolicies.getByID(

+SystemErasureCodingPolicies.RS_3_2_POLICY_ID);

+cluster = DFSTestUtil.setupCluster(conf, 6, 5, 0);

+cluster.waitActive();

+DistributedFileSystem fs = cluster.getFileSystem();

+

+assertEquals("ret: 1, verifyEC -file Verify HDFS erasure coding on

" +

+"all block groups of the file.", runCmd(new String[]{"verifyEC"}));

+

+assertEquals("ret: 1, File /bar does not exist.",

+runCmd(new String[]{"verifyEC", "-file", "/bar"}));

+

+fs.create(new Path("/bar")).close();

+assertEquals("ret: 1, File /bar is not erasure coded.",

+runCmd(new String[]{"verifyEC", "-file", "/bar"}));

+

+

+final Path ecDir = new Path("/ec");

+fs.mkdir(ecDir, FsPermission.getDirDefault());

+fs.enableErasureCodingPolicy(ecPolicy.getName());

+fs.setErasureCodingPolicy(ecDir, ecPolicy.getName());

+

+assertEquals("ret: 1, File /ec is not a regular file.",

+runCmd(new String[]{"verifyEC", "-file", "/ec"}));

+

+fs.create(new Path(ecDir, "foo"));

+assertEquals("ret: 1, File /ec/foo is not closed.",

+runCmd(new String[]{"verifyEC", "-file", "/ec/foo"}));

+

+final short repl = 1;

+final long k = 1024;

+final long m = k * k;

+final long seed = 0x1234567L;

+DFSTestUtil.createFile(fs, new Path(ecDir, "foo_65535"), 65535, repl,

seed);

+assertTrue(runCmd(new String[]{"verifyEC", "-file", "/ec/foo_65535"})

+.contains("All EC block group status: OK"));

+DFSTestUtil.createFile(fs, new Path(ecDir, "foo_256k"), 256 * k, repl,

seed);

+assertTrue(runCmd(new String[]{"verifyEC", "-file", "/ec/foo_256k"})

+.contains("All EC block group status: OK"));

+DFSTestUtil.createFile(fs, new Path(ecDir, "foo_1m"), m, repl, seed);

+assertTrue(runCmd(new String[]{"verifyEC", "-file", "/ec/foo_1m"})

+.contains("All EC block group status: OK"));

+DFSTestUtil.createFile(fs, new Path(ecDir, "foo_2m"), 2 * m, repl, seed);

+assertTrue(runCmd(new String[]{"verifyEC", "-file", "/ec/foo_2m"})

+.contains("All EC block group status: OK"));

+DFSTestUtil.createFile(fs, new Path(ecDir, "foo_3m"), 3 * m, repl, seed);

+assertTrue(runCmd(new String[]{"verifyEC", "-file", "/ec/foo_3m"})

+.contains("All EC block group status: OK"));

+DFSTestUtil.createFile(fs, new Path(ecDir, "foo_5m"), 5 * m, repl, seed);

+assertTrue(runCmd(new String[]{"verifyEC", "-file", "/ec/foo_5m"})

+.contains("All EC block group status: OK"));

+

Review comment:

Thanks, that's good advice. Updated.


[GitHub] [hadoop] hadoop-yetus commented on pull request #3612: WIP. HADOOP-17124. Support LZO Codec using aircompressor

hadoop-yetus commented on pull request #3612: URL: https://github.com/apache/hadoop/pull/3612#issuecomment-958607089

[jira] [Work logged] (HADOOP-17124) Support LZO using aircompressor

[ https://issues.apache.org/jira/browse/HADOOP-17124?focusedWorklogId=675927&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-675927 ] ASF GitHub Bot logged work on HADOOP-17124: --- Author: ASF GitHub Bot Created on: 04/Nov/21 01:41 Start Date: 04/Nov/21 01:41 Worklog Time Spent: 10m Work Description: sunchao commented on pull request #3612: URL: https://github.com/apache/hadoop/pull/3612#issuecomment-959727355 Issue Time Tracking --- Worklog Id: (was: 675927) Time Spent: 6.5h (was: 6h 20m)

[GitHub] [hadoop] tasanuma merged pull request #3538: HDFS-16266. Add remote port information to HDFS audit log

tasanuma merged pull request #3538: URL: https://github.com/apache/hadoop/pull/3538

[GitHub] [hadoop] haiyang1987 closed pull request #3596: HDFS-16287. Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

haiyang1987 closed pull request #3596: URL: https://github.com/apache/hadoop/pull/3596

[GitHub] [hadoop] hadoop-yetus commented on pull request #3593: HDFS-16286. Add a debug tool to verify the correctness of erasure coding on file

hadoop-yetus commented on pull request #3593: URL: https://github.com/apache/hadoop/pull/3593#issuecomment-958791127

[jira] [Work logged] (HADOOP-17981) Support etag-assisted renames in FileOutputCommitter

[ https://issues.apache.org/jira/browse/HADOOP-17981?focusedWorklogId=675938&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-675938 ] ASF GitHub Bot logged work on HADOOP-17981: --- Author: ASF GitHub Bot Created on: 04/Nov/21 01:42 Start Date: 04/Nov/21 01:42 Worklog Time Spent: 10m Work Description: steveloughran commented on pull request #3597: URL: https://github.com/apache/hadoop/pull/3597#issuecomment-959193924 > EOD - this ends up being a new API (almost on the FileSystem), which is used by the committer first; then someone discovers it and decides to make use of it. yes, and both hive and hbase are known to do that, often ending up forcing hdfs-only apis to get pulled up without concern for the other stores (see git history of FileSystem there). a builder rename would be a big job with 1. rename/3 public with tests and performant object store impls 2. default builder to delegate to that with same semantics 3. add options for etag etc on a store by store or cross store basis
Issue Time Tracking --- Worklog Id: (was: 675938) Time Spent: 7h 50m (was: 7h 40m) > Support etag-assisted renames in FileOutputCommitter > > > Key: HADOOP-17981 > URL: https://issues.apache.org/jira/browse/HADOOP-17981 > Project: Hadoop Common > Issue Type: New Feature > Components: fs, fs/azure > Affects Versions: 3.4.0 > Reporter: Steve Loughran > Assignee: Steve Loughran > Priority: Major > Labels: pull-request-available > Time Spent: 7h 50m > Remaining Estimate: 0h > > To deal with some throttling/retry issues in object stores, pass the FileStatus entries retrieved during listing into a private interface ResilientCommitByRename which filesystems may implement to use extra attributes in the listing (etag, version) to constrain and validate the operation. > Although targeting azure, GCS and others could use it. No point in S3A as they shouldn't use this committer. > # And we are not going to do any changes to FileSystem as there are explicit guarantees of public use and stability. > I am not going to make that change as the hive thing will suddenly start expecting it to work forever. > # I'm not planning to merge this in, as the manifest committer is going to include this and more (MAPREDUCE-7341) > However, I do need to get this in on a branch, so am doing this work on trunk for dev & test and for others to review

[GitHub] [hadoop] steveloughran commented on pull request #3597: HADOOP-17981 Support etag-assisted renames in FileOutputCommitter

steveloughran commented on pull request #3597:

URL: https://github.com/apache/hadoop/pull/3597#issuecomment-959193924

> EOD - this ends up being a new API (almost on the FileSystem), which is used by the committer first; then someone discovers it and decides to make use of it.

yes, and both hive and hbase are known to do that, often ending up forcing hdfs-only apis to get pulled up without concern for the other stores (see git history of FileSystem there).

a builder rename would be a big job with

1. rename/3 public with tests and performant object store impls

2. default builder to delegate to that with same semantics

3. add options for etag etc on a store by store or cross store basis

[GitHub] [hadoop] sunchao commented on pull request #3612: WIP. HADOOP-17124. Support LZO Codec using aircompressor

sunchao commented on pull request #3612: URL: https://github.com/apache/hadoop/pull/3612#issuecomment-959727355

[jira] [Work logged] (HADOOP-17124) Support LZO using aircompressor

[ https://issues.apache.org/jira/browse/HADOOP-17124?focusedWorklogId=675901&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-675901 ] ASF GitHub Bot logged work on HADOOP-17124: --- Author: ASF GitHub Bot Created on: 04/Nov/21 01:39 Start Date: 04/Nov/21 01:39 Worklog Time Spent: 10m Work Description: viirya commented on pull request #3612: URL: https://github.com/apache/hadoop/pull/3612#issuecomment-958606536 Issue Time Tracking --- Worklog Id: (was: 675901) Time Spent: 6h 20m (was: 6h 10m)

[GitHub] [hadoop-site] GauthamBanasandra commented on pull request #28: Add Gautham Banasandra to Hadoop committers' list

GauthamBanasandra commented on pull request #28: URL: https://github.com/apache/hadoop-site/pull/28#issuecomment-959811956 @aajisaka could you please review this PR?

[GitHub] [hadoop] viirya commented on pull request #3612: WIP. HADOOP-17124. Support LZO Codec using aircompressor

viirya commented on pull request #3612: URL: https://github.com/apache/hadoop/pull/3612#issuecomment-958606536

[jira] [Work logged] (HADOOP-17124) Support LZO using aircompressor

[ https://issues.apache.org/jira/browse/HADOOP-17124?focusedWorklogId=675889&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-675889 ] ASF GitHub Bot logged work on HADOOP-17124: --- Author: ASF GitHub Bot Created on: 04/Nov/21 01:38 Start Date: 04/Nov/21 01:38 Worklog Time Spent: 10m Work Description: viirya edited a comment on pull request #3612: URL: https://github.com/apache/hadoop/pull/3612#issuecomment-959725363 Issue Time Tracking --- Worklog Id: (was: 675889) Time Spent: 6h 10m (was: 6h)

[GitHub] [hadoop] viirya edited a comment on pull request #3612: WIP. HADOOP-17124. Support LZO Codec using aircompressor

viirya edited a comment on pull request #3612: URL: https://github.com/apache/hadoop/pull/3612#issuecomment-959725363

[GitHub] [hadoop] hadoop-yetus commented on pull request #3596: HDFS-16287. Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

hadoop-yetus commented on pull request #3596:

URL: https://github.com/apache/hadoop/pull/3596#issuecomment-959651079

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 1m 24s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 1s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 2 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 36m 58s | | trunk passed |
| +1 :green_heart: | compile | 1m 35s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 1m 22s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 1m 4s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 33s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 3s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 28s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 41s | | trunk passed |
| +1 :green_heart: | shadedclient | 25m 39s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 27s | | the patch passed |
| +1 :green_heart: | compile | 1m 28s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 1m 28s | | the patch passed |
| +1 :green_heart: | compile | 1m 18s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 1m 18s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 0m 57s | | the patch passed |
| +1 :green_heart: | mvnsite | 1m 25s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 54s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 24s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 44s | | the patch passed |
| +1 :green_heart: | shadedclient | 25m 53s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| -1 :x: | unit | 374m 11s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3596/4/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 43s | | The patch does not generate ASF License warnings. |
| | | 486m 5s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.TestHDFSFileSystemContract |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3596/4/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3596 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux 1fb2ee0e949b 4.15.0-143-generic #147-Ubuntu SMP Wed Apr 14 16:10:11 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 2ec82e1c420789afb326f4ebb451522a8a4e2358 |
| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3596/4/testReport/ |
| Max. process+thread count | 2022 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project/hadoop-hdfs U: hadoop-hdfs-project/hadoop-hdfs |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3596/4/console |
| versions | git=2.25.1 maven=3.6.3 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.


[GitHub] [hadoop] goiri commented on a change in pull request #3613: HDFS-16296. RouterRpcFairnessPolicyController add rejected permits for each nameservice

goiri commented on a change in pull request #3613:

URL: https://github.com/apache/hadoop/pull/3613#discussion_r742196019

##
File path: hadoop-hdfs-project/hadoop-hdfs-rbf/src/test/java/org/apache/hadoop/hdfs/server/federation/fairness/TestRouterHandlersFairness.java
##
@@ -208,4 +212,15 @@ private void invokeConcurrent(ClientProtocol routerProto, String clientName)
     routerProto.renewLease(clientName);
   }

+  private int getTotalRejectedPermits(RouterContext routerContext) {
+    int totalRejectedPermits = 0;
+    for (String ns : cluster.getNameservices()) {
+      totalRejectedPermits += routerContext.getRouter().getRpcServer()

Review comment:

We may want to extract:

routerContext.getRouter().getRpcServer().getRPCClient()
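
The extraction suggested above could look roughly like the following sketch. Only the call chain `getRouter().getRpcServer().getRPCClient()` comes from the review comment; the four interfaces, the `rejectedPermitsFor` accessor, and the `contextFor` helper are hypothetical stand-ins so that the example compiles on its own, and they are not the actual Hadoop types.

```java
import java.util.List;
import java.util.Map;

// Hypothetical stand-ins for the real Hadoop classes (assumption, not the Hadoop API).
interface RpcClient { int rejectedPermitsFor(String ns); }
interface RpcServer { RpcClient getRPCClient(); }
interface Router { RpcServer getRpcServer(); }
interface RouterContext { Router getRouter(); }

final class RejectedPermitsSketch {
  // Sums the rejected permits over all nameservices.
  static int getTotalRejectedPermits(RouterContext routerContext,
      List<String> nameservices) {
    // Extracted once, instead of repeating the chain on every loop iteration.
    RpcClient rpcClient =
        routerContext.getRouter().getRpcServer().getRPCClient();
    int totalRejectedPermits = 0;
    for (String ns : nameservices) {
      totalRejectedPermits += rpcClient.rejectedPermitsFor(ns);
    }
    return totalRejectedPermits;
  }

  // Builds a fake context backed by a map of nameservice -> rejected permits.
  static RouterContext contextFor(Map<String, Integer> rejected) {
    RpcClient client = ns -> rejected.getOrDefault(ns, 0);
    RpcServer server = () -> client;
    Router router = () -> server;
    return () -> router;
  }
}
```

Hoisting the client into a local variable keeps the loop body on one line and makes it obvious that the same client is queried for every nameservice.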


[GitHub] [hadoop] goiri commented on a change in pull request #3595: HDFS-16283: RBF: improve renewLease() to call only a specific NameNod…

goiri commented on a change in pull request #3595:
URL: https://github.com/apache/hadoop/pull/3595#discussion_r742199623

##
File path: hadoop-hdfs-project/hadoop-hdfs-client/src/main/java/org/apache/hadoop/hdfs/protocol/ClientProtocol.java
##
@@ -765,6 +765,14 @@ BatchedDirectoryListing getBatchedListing(
   @Idempotent
   void renewLease(String clientName) throws IOException;

+  /**
+   * The functionality is the same as renewLease(clientName). This is to support
+   * router based FileSystem to renewLease against a specific target FileSystem instead
+   * of all the target FileSystems in each call.
+   */
+  @Idempotent
+  void renewLease(String clientName, String nsId) throws IOException;

Review comment:
That's a good point. ClientProtocol shouldn't care about subclusters. The whole abstraction is based on paths and that would make more sense.
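
To illustrate the path-based alternative mentioned in the review comment on pull request #3595, here is a minimal sketch in which the caller passes paths and the router side maps them to nameservices. Everything in it is an assumption made for illustration: the `PathLeaseSketch` class, its mount table, the `resolve` helper, and the `renewLease(clientName, paths)` signature are hypothetical and are not taken from the pull request or from `ClientProtocol`.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

final class PathLeaseSketch {
  // Hypothetical mount table: mount point -> nameservice id.
  private final Map<String, String> mounts = new TreeMap<>();
  // Records which (client, nameservice) pairs were renewed, for inspection.
  final List<String> renewed = new ArrayList<>();

  PathLeaseSketch(Map<String, String> mountTable) {
    mounts.putAll(mountTable);
  }

  // Longest-prefix match of a path against the mount points; null if none match.
  String resolve(String path) {
    String best = null;
    for (String mount : mounts.keySet()) {
      boolean matches = path.equals(mount) || mount.equals("/")
          || path.startsWith(mount + "/");
      if (matches && (best == null || mount.length() > best.length())) {
        best = mount;
      }
    }
    return best == null ? null : mounts.get(best);
  }

  // The caller names paths only; the nameservice ids stay internal to the router.
  void renewLease(String clientName, List<String> paths) {
    Set<String> targets = new LinkedHashSet<>();
    for (String path : paths) {
      String ns = resolve(path);
      if (ns != null) {
        targets.add(ns);
      }
    }
    // One renewal per distinct nameservice, not one per path.
    for (String ns : targets) {
      renewed.add(clientName + "@" + ns);
    }
  }
}
```

With this shape the client-facing call never mentions a subcluster, and two open files under the same mount point still trigger a single renewal.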

[GitHub] [hadoop-site] aajisaka commented on pull request #28: Add Gautham Banasandra to Hadoop committers' list

aajisaka commented on pull request #28:
URL: https://github.com/apache/hadoop-site/pull/28#issuecomment-959830761

Would you run `hugo` to generate the html files and add them to the commit?

[GitHub] [hadoop] jianghuazhu commented on pull request #3602: HDFS-16291.Make the comment of INode#ReclaimContext more standardized.

jianghuazhu commented on pull request #3602:
URL: https://github.com/apache/hadoop/pull/3602#issuecomment-958751029

Thank you very much. @ferhui @virajjasani

[jira] [Work logged] (HADOOP-17981) Support etag-assisted renames in FileOutputCommitter

[ https://issues.apache.org/jira/browse/HADOOP-17981?focusedWorklogId=675761&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-675761 ]

ASF GitHub Bot logged work on HADOOP-17981:

---

Author: ASF GitHub Bot

Created on: 04/Nov/21 01:25

Start Date: 04/Nov/21 01:25

Worklog Time Spent: 10m

Work Description: hadoop-yetus removed a comment on pull request #3611:

URL: https://github.com/apache/hadoop/pull/3611#issuecomment-958583977

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 1m 3s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +0 :ok: | markdownlint | 0m 0s | | markdownlint was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 4 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 12m 46s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 29m 49s | | trunk passed |