[jira] [Commented] (HDFS-15982) Deleted data on the Web UI must be saved to the trash

[ https://issues.apache.org/jira/browse/HDFS-15982?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17324175#comment-17324175 ] Viraj Jasani commented on HDFS-15982: - FYI [~ayushtkn] [~weichiu] > Deleted data on the Web UI must be saved to the trash > -- > > Key: HDFS-15982 > URL: https://issues.apache.org/jira/browse/HDFS-15982 > Project: Hadoop HDFS > Issue Type: New Feature > Components: hdfs >Reporter: Bhavik Patel >Priority: Major > > If we delete the data from the Web UI then it should be first moved to > configured/default Trash directory and after the trash interval time, it > should be removed. currently, data directly removed from the system[This > behavior should be the same as CLI cmd] > > This can be helpful when the user accidentally deletes data from the Web UI. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-15988) Stabilise HDFS Pre-Commit

[

https://issues.apache.org/jira/browse/HDFS-15988?focusedWorklogId=584582&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-584582

]

ASF GitHub Bot logged work on HDFS-15988:

-

Author: ASF GitHub Bot

Created on: 17/Apr/21 02:37

Start Date: 17/Apr/21 02:37

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #2860:

URL: https://github.com/apache/hadoop/pull/2860#issuecomment-821753013

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |

|::|--:|:|::|:---:|

| +0 :ok: | reexec | 0m 38s | | Docker mode activated. |

_ Prechecks _ |

| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files

found. |

| +0 :ok: | codespell | 0m 0s | | codespell was not available. |

| +0 :ok: | shelldocs | 0m 0s | | Shelldocs was not available. |

| +1 :green_heart: | @author | 0m 0s | | The patch does not contain

any @author tags. |

| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to

include 6 new or modified test files. |

_ trunk Compile Tests _ |

| +0 :ok: | mvndep | 15m 50s | | Maven dependency ordering for branch |

| +1 :green_heart: | mvninstall | 20m 26s | | trunk passed |

| +1 :green_heart: | compile | 4m 55s | | trunk passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | compile | 4m 32s | | trunk passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | checkstyle | 1m 21s | | trunk passed |

| +1 :green_heart: | mvnsite | 3m 15s | | trunk passed |

| +1 :green_heart: | javadoc | 2m 30s | | trunk passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javadoc | 3m 18s | | trunk passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | spotbugs | 6m 59s | | trunk passed |

| +1 :green_heart: | shadedclient | 14m 33s | | branch has no errors

when building and testing our client artifacts. |

_ Patch Compile Tests _ |

| +0 :ok: | mvndep | 0m 34s | | Maven dependency ordering for patch |

| +1 :green_heart: | mvninstall | 2m 36s | | the patch passed |

| +1 :green_heart: | compile | 4m 45s | | the patch passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javac | 4m 45s | | the patch passed |

| +1 :green_heart: | compile | 4m 28s | | the patch passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | javac | 4m 28s | | the patch passed |

| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks

issues. |

| -0 :warning: | checkstyle | 1m 10s |

[/results-checkstyle-hadoop-hdfs-project.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2860/15/artifact/out/results-checkstyle-hadoop-hdfs-project.txt)

| hadoop-hdfs-project: The patch generated 1 new + 177 unchanged - 1 fixed =

178 total (was 178) |

| +1 :green_heart: | hadolint | 0m 2s | | No new issues. |

| +1 :green_heart: | mvnsite | 2m 43s | | the patch passed |

| +1 :green_heart: | shellcheck | 0m 2s | | No new issues. |

| +1 :green_heart: | javadoc | 2m 0s | | the patch passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javadoc | 2m 45s | | the patch passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | spotbugs | 7m 2s | | the patch passed |

| +1 :green_heart: | shadedclient | 14m 18s | | patch has no errors

when building and testing our client artifacts. |

_ Other Tests _ |

| +1 :green_heart: | unit | 2m 25s | | hadoop-hdfs-client in the patch

passed. |

| -1 :x: | unit | 229m 5s |

[/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2860/15/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt)

| hadoop-hdfs in the patch passed. |

| +1 :green_heart: | unit | 18m 41s | | hadoop-hdfs-rbf in the patch

passed. |

| +1 :green_heart: | asflicense | 0m 54s | | The patch does not

generate ASF License warnings. |

| | | 374m 14s | | |

| Reason | Tests |

|---:|:--|

| Failed junit tests |

hadoop.hdfs.qjournal.server.TestJournalNodeRespectsBindHostKeys |

| Subsystem | Report/Notes |

|--:|:-|

| Docker | ClientAPI=1.41 ServerAPI=1.41 base:

https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2860/15/artifact/out/Dockerfile

|

| GITHUB PR | https://github.com/apache/hadoop/pull/2860 |

| Optional Tests | dupname asflicense mvnsite un

[jira] [Work logged] (HDFS-15980) Fix tests for HDFS-15754 Create packet metrics for DataNode

[

https://issues.apache.org/jira/browse/HDFS-15980?focusedWorklogId=584579&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-584579

]

ASF GitHub Bot logged work on HDFS-15980:

-

Author: ASF GitHub Bot

Created on: 17/Apr/21 01:21

Start Date: 17/Apr/21 01:21

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #2921:

URL: https://github.com/apache/hadoop/pull/2921#issuecomment-821743561

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |

|::|--:|:|::|:---:|

| +0 :ok: | reexec | 0m 57s | | Docker mode activated. |

_ Prechecks _ |

| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files

found. |

| +0 :ok: | codespell | 0m 0s | | codespell was not available. |

| +1 :green_heart: | @author | 0m 0s | | The patch does not contain

any @author tags. |

| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to

include 1 new or modified test files. |

_ trunk Compile Tests _ |

| +1 :green_heart: | mvninstall | 33m 52s | | trunk passed |

| +1 :green_heart: | compile | 1m 20s | | trunk passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | compile | 1m 16s | | trunk passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | checkstyle | 1m 1s | | trunk passed |

| +1 :green_heart: | mvnsite | 1m 22s | | trunk passed |

| +1 :green_heart: | javadoc | 0m 52s | | trunk passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javadoc | 1m 24s | | trunk passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | spotbugs | 3m 6s | | trunk passed |

| +1 :green_heart: | shadedclient | 16m 7s | | branch has no errors

when building and testing our client artifacts. |

_ Patch Compile Tests _ |

| +1 :green_heart: | mvninstall | 1m 10s | | the patch passed |

| +1 :green_heart: | compile | 1m 12s | | the patch passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javac | 1m 12s | | the patch passed |

| +1 :green_heart: | compile | 1m 4s | | the patch passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | javac | 1m 4s | | the patch passed |

| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks

issues. |

| -0 :warning: | checkstyle | 0m 52s |

[/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2921/1/artifact/out/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt)

| hadoop-hdfs-project/hadoop-hdfs: The patch generated 6 new + 13 unchanged -

0 fixed = 19 total (was 13) |

| +1 :green_heart: | mvnsite | 1m 11s | | the patch passed |

| +1 :green_heart: | javadoc | 0m 44s | | the patch passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javadoc | 1m 14s | | the patch passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | spotbugs | 3m 4s | | the patch passed |

| +1 :green_heart: | shadedclient | 15m 57s | | patch has no errors

when building and testing our client artifacts. |

_ Other Tests _ |

| -1 :x: | unit | 390m 24s |

[/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2921/1/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt)

| hadoop-hdfs in the patch passed. |

| +1 :green_heart: | asflicense | 0m 44s | | The patch does not

generate ASF License warnings. |

| | | 476m 50s | | |

| Reason | Tests |

|---:|:--|

| Failed junit tests | hadoop.hdfs.server.namenode.TestDecommissioningStatus

|

| | hadoop.hdfs.server.namenode.TestAddOverReplicatedStripedBlocks |

| | hadoop.hdfs.TestSnapshotCommands |

| | hadoop.hdfs.TestPersistBlocks |

| | hadoop.hdfs.TestDFSShell |

| | hadoop.hdfs.server.datanode.fsdataset.impl.TestFsVolumeList |

| | hadoop.hdfs.TestFileConcurrentReader |

| | hadoop.hdfs.TestStateAlignmentContextWithHA |

| | hadoop.hdfs.server.namenode.ha.TestSeveralNameNodes |

| |

hadoop.hdfs.server.namenode.TestDecommissioningStatusWithBackoffMonitor |

| | hadoop.hdfs.server.namenode.ha.TestEditLogTailer |

| | hadoop.hdfs.server.datanode.TestBlockScanner |

| | hadoop.hdfs.server.namenode.snapshot.TestNestedSnapshots |

| | hadoop.hdfs.qjournal.server.TestJournalNodeRespectsBindHostKeys |

| | hadoop.hdfs.server.datanode.TestDirectoryScanner |

| | hadoop.hdfs.TestHDFSFileS

[jira] [Commented] (HDFS-15980) Fix tests for HDFS-15754 Create packet metrics for DataNode

[

https://issues.apache.org/jira/browse/HDFS-15980?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17324142#comment-17324142

]

Hadoop QA commented on HDFS-15980:

--

| (x) *{color:red}-1 overall{color}* |

\\

\\

|| Vote || Subsystem || Runtime || Logfile || Comment ||

| {color:blue}0{color} | {color:blue} reexec {color} | {color:blue} 0m

57s{color} | | {color:blue} Docker mode activated. {color} |

|| || || || {color:brown} Prechecks {color} || ||

| {color:green}+1{color} | {color:green} dupname {color} | {color:green} 0m

0s{color} | | {color:green} No case conflicting files found. {color} |

| {color:blue}0{color} | {color:blue} codespell {color} | {color:blue} 0m

0s{color} | | {color:blue} codespell was not available. {color} |

| {color:green}+1{color} | {color:green} @author {color} | {color:green} 0m

0s{color} | | {color:green} The patch does not contain any @author tags.

{color} |

| {color:green}+1{color} | {color:green} test4tests {color} | {color:green} 0m

0s{color} | | {color:green} The patch appears to include 1 new or modified

test files. {color} |

|| || || || {color:brown} trunk Compile Tests {color} || ||

| {color:green}+1{color} | {color:green} mvninstall {color} | {color:green} 33m

52s{color} | | {color:green} trunk passed {color} |

| {color:green}+1{color} | {color:green} compile {color} | {color:green} 1m

20s{color} | | {color:green} trunk passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 {color} |

| {color:green}+1{color} | {color:green} compile {color} | {color:green} 1m

16s{color} | | {color:green} trunk passed with JDK Private

Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 {color} |

| {color:green}+1{color} | {color:green} checkstyle {color} | {color:green} 1m

1s{color} | | {color:green} trunk passed {color} |

| {color:green}+1{color} | {color:green} mvnsite {color} | {color:green} 1m

22s{color} | | {color:green} trunk passed {color} |

| {color:green}+1{color} | {color:green} javadoc {color} | {color:green} 0m

52s{color} | | {color:green} trunk passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 {color} |

| {color:green}+1{color} | {color:green} javadoc {color} | {color:green} 1m

24s{color} | | {color:green} trunk passed with JDK Private

Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 {color} |

| {color:green}+1{color} | {color:green} spotbugs {color} | {color:green} 3m

6s{color} | | {color:green} trunk passed {color} |

| {color:green}+1{color} | {color:green} shadedclient {color} | {color:green}

16m 7s{color} | | {color:green} branch has no errors when building and

testing our client artifacts. {color} |

|| || || || {color:brown} Patch Compile Tests {color} || ||

| {color:green}+1{color} | {color:green} mvninstall {color} | {color:green} 1m

10s{color} | | {color:green} the patch passed {color} |

| {color:green}+1{color} | {color:green} compile {color} | {color:green} 1m

12s{color} | | {color:green} the patch passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 {color} |

| {color:green}+1{color} | {color:green} javac {color} | {color:green} 1m

12s{color} | | {color:green} the patch passed {color} |

| {color:green}+1{color} | {color:green} compile {color} | {color:green} 1m

4s{color} | | {color:green} the patch passed with JDK Private

Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 {color} |

| {color:green}+1{color} | {color:green} javac {color} | {color:green} 1m

4s{color} | | {color:green} the patch passed {color} |

| {color:green}+1{color} | {color:green} blanks {color} | {color:green} 0m

0s{color} | | {color:green} The patch has no blanks issues. {color} |

| {color:orange}-0{color} | {color:orange} checkstyle {color} | {color:orange}

0m 52s{color} |

[/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt|https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2921/1/artifact/out/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt]

| {color:orange} hadoop-hdfs-project/hadoop-hdfs: The patch generated 6 new +

13 unchanged - 0 fixed = 19 total (was 13) {color} |

| {color:green}+1{color} | {color:green} mvnsite {color} | {color:green} 1m

11s{color} | | {color:green} the patch passed {color} |

| {color:green}+1{color} | {color:green} javadoc {color} | {color:green} 0m

44s{color} | | {color:green} the patch passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 {color} |

| {color:green}+1{color} | {color:green} javadoc {color} | {color:green} 1m

14s{color} | | {color:green} the patch passed with JDK Private

Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 {color} |

| {color:green}+1{color} | {color:green} spotbugs {color} | {color:green} 3m

4s{color} | | {color:green} the patch passed {color} |

| {color:green}+1{color} | {color:green} shadedclient {color} | {color:green}

15m 57s{color} | | {color:green} patch has no errors when building and testing

our client artifacts. {color} |

|| || || || {color:brown} Oth

[jira] [Commented] (HDFS-15878) Flaky test TestRouterWebHDFSContractCreate>AbstractContractCreateTest#testSyncable in Trunk

[ https://issues.apache.org/jira/browse/HDFS-15878?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17324127#comment-17324127 ] Fengnan Li commented on HDFS-15878: --- [~inigoiri] How can we verify the tests are fixed? Is there some jenkins job I can monitor on? > Flaky test > TestRouterWebHDFSContractCreate>AbstractContractCreateTest#testSyncable in > Trunk > --- > > Key: HDFS-15878 > URL: https://issues.apache.org/jira/browse/HDFS-15878 > Project: Hadoop HDFS > Issue Type: Sub-task > Components: hdfs, rbf >Reporter: Renukaprasad C >Assignee: Fengnan Li >Priority: Major > > ERROR] Tests run: 16, Failures: 0, Errors: 1, Skipped: 2, Time elapsed: > 24.627 s <<< FAILURE! - in > org.apache.hadoop.fs.contract.router.web.TestRouterWebHDFSContractCreate > [ERROR] > testSyncable(org.apache.hadoop.fs.contract.router.web.TestRouterWebHDFSContractCreate) > Time elapsed: 0.222 s <<< ERROR! > java.io.FileNotFoundException: File /test/testSyncable not found. > at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) > at > sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) > at > sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) > at java.lang.reflect.Constructor.newInstance(Constructor.java:423) > at > org.apache.hadoop.ipc.RemoteException.instantiateException(RemoteException.java:121) > at > org.apache.hadoop.ipc.RemoteException.unwrapRemoteException(RemoteException.java:110) > at > org.apache.hadoop.hdfs.web.WebHdfsFileSystem.toIOException(WebHdfsFileSystem.java:576) > at > org.apache.hadoop.hdfs.web.WebHdfsFileSystem.access$900(WebHdfsFileSystem.java:146) > at > org.apache.hadoop.hdfs.web.WebHdfsFileSystem$AbstractRunner.shouldRetry(WebHdfsFileSystem.java:892) > at > org.apache.hadoop.hdfs.web.WebHdfsFileSystem$AbstractRunner.runWithRetry(WebHdfsFileSystem.java:858) > at > org.apache.hadoop.hdfs.web.WebHdfsFileSystem$AbstractRunner.access$100(WebHdfsFileSystem.java:652) > at > org.apache.hadoop.hdfs.web.WebHdfsFileSystem$AbstractRunner$1.run(WebHdfsFileSystem.java:690) > at java.security.AccessController.doPrivileged(Native Method) > at javax.security.auth.Subject.doAs(Subject.java:422) > at > org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1899) > at > org.apache.hadoop.hdfs.web.WebHdfsFileSystem$AbstractRunner.run(WebHdfsFileSystem.java:686) > at > org.apache.hadoop.hdfs.web.WebHdfsFileSystem$ReadRunner.getRedirectedUrl(WebHdfsFileSystem.java:2307) > at > org.apache.hadoop.hdfs.web.WebHdfsFileSystem$ReadRunner.(WebHdfsFileSystem.java:2296) > at > org.apache.hadoop.hdfs.web.WebHdfsFileSystem$WebHdfsInputStream.(WebHdfsFileSystem.java:2176) > at > org.apache.hadoop.hdfs.web.WebHdfsFileSystem.open(WebHdfsFileSystem.java:1610) > at org.apache.hadoop.fs.FileSystem.open(FileSystem.java:975) > at > org.apache.hadoop.fs.contract.AbstractContractCreateTest.validateSyncableSemantics(AbstractContractCreateTest.java:556) > at > org.apache.hadoop.fs.contract.AbstractContractCreateTest.testSyncable(AbstractContractCreateTest.java:459) > at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) > at > sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) > at > sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) > at java.lang.reflect.Method.invoke(Method.java:498) > at > org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:50) > at > org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12) > at > org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:47) > at > org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17) > at > org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26) > at > org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27) > at org.junit.rules.TestWatcher$1.evaluate(TestWatcher.java:55) > at > org.junit.internal.runners.statements.FailOnTimeout$CallableStatement.call(FailOnTimeout.java:298) > at > org.junit.internal.runners.statements.FailOnTimeout$CallableStatement.call(FailOnTimeout.java:292) > at java.util.concurrent.FutureTask.run(FutureTask.java:266) > at java.lang.Thread.run(Thread.java:748) > Caused by: > org.apache.hadoop.ipc.RemoteException(java.io.FileNotFoundException): File > /test/testSyncable not found. > at > org.apache.

[jira] [Work logged] (HDFS-15970) Print network topology on the web

[

https://issues.apache.org/jira/browse/HDFS-15970?focusedWorklogId=584533&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-584533

]

ASF GitHub Bot logged work on HDFS-15970:

-

Author: ASF GitHub Bot

Created on: 16/Apr/21 22:16

Start Date: 16/Apr/21 22:16

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #2896:

URL: https://github.com/apache/hadoop/pull/2896#issuecomment-821608597

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |

|::|--:|:|::|:---:|

| +0 :ok: | reexec | 0m 41s | | Docker mode activated. |

_ Prechecks _ |

| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files

found. |

| +0 :ok: | codespell | 0m 1s | | codespell was not available. |

| +1 :green_heart: | @author | 0m 0s | | The patch does not contain

any @author tags. |

| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to

include 1 new or modified test files. |

_ trunk Compile Tests _ |

| +1 :green_heart: | mvninstall | 36m 46s | | trunk passed |

| +1 :green_heart: | compile | 1m 30s | | trunk passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | compile | 1m 15s | | trunk passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | checkstyle | 1m 6s | | trunk passed |

| +1 :green_heart: | mvnsite | 1m 26s | | trunk passed |

| +1 :green_heart: | javadoc | 0m 56s | | trunk passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javadoc | 1m 36s | | trunk passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | spotbugs | 3m 30s | | trunk passed |

| +1 :green_heart: | shadedclient | 17m 51s | | branch has no errors

when building and testing our client artifacts. |

_ Patch Compile Tests _ |

| +1 :green_heart: | mvninstall | 1m 22s | | the patch passed |

| +1 :green_heart: | compile | 1m 18s | | the patch passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javac | 1m 18s | | the patch passed |

| +1 :green_heart: | compile | 1m 15s | | the patch passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | javac | 1m 15s | | the patch passed |

| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks

issues. |

| -0 :warning: | checkstyle | 1m 1s |

[/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2896/5/artifact/out/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt)

| hadoop-hdfs-project/hadoop-hdfs: The patch generated 54 new + 5 unchanged -

1 fixed = 59 total (was 6) |

| +1 :green_heart: | mvnsite | 1m 23s | | the patch passed |

| +1 :green_heart: | javadoc | 0m 49s | | the patch passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javadoc | 1m 21s | | the patch passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | spotbugs | 3m 34s | | the patch passed |

| +1 :green_heart: | shadedclient | 17m 43s | | patch has no errors

when building and testing our client artifacts. |

_ Other Tests _ |

| -1 :x: | unit | 239m 47s |

[/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2896/5/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt)

| hadoop-hdfs in the patch passed. |

| +1 :green_heart: | asflicense | 0m 46s | | The patch does not

generate ASF License warnings. |

| | | 334m 20s | | |

| Reason | Tests |

|---:|:--|

| Failed junit tests |

hadoop.hdfs.server.diskbalancer.command.TestDiskBalancerCommand |

| | hadoop.hdfs.server.namenode.snapshot.TestNestedSnapshots |

| | hadoop.hdfs.qjournal.server.TestJournalNodeRespectsBindHostKeys |

| | hadoop.hdfs.server.datanode.TestDirectoryScanner |

| Subsystem | Report/Notes |

|--:|:-|

| Docker | ClientAPI=1.41 ServerAPI=1.41 base:

https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2896/5/artifact/out/Dockerfile

|

| GITHUB PR | https://github.com/apache/hadoop/pull/2896 |

| Optional Tests | dupname asflicense compile javac javadoc mvninstall

mvnsite unit shadedclient spotbugs checkstyle codespell |

| uname | Linux c516aeb7f3b1 4.15.0-136-generic #140-Ubuntu SMP Thu Jan 28

05:20:47 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |

| Build tool | maven |

| Personality | dev-support/bin/hadoop.sh |

| git revision | trunk / 4fc4bfa78aa50d0

[jira] [Work logged] (HDFS-15987) Improve oiv tool to parse fsimage file in parallel with delimited format

[

https://issues.apache.org/jira/browse/HDFS-15987?focusedWorklogId=584511&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-584511

]

ASF GitHub Bot logged work on HDFS-15987:

-

Author: ASF GitHub Bot

Created on: 16/Apr/21 20:54

Start Date: 16/Apr/21 20:54

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #2918:

URL: https://github.com/apache/hadoop/pull/2918#issuecomment-821559317

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |

|::|--:|:|::|:---:|

| +0 :ok: | reexec | 21m 32s | | Docker mode activated. |

_ Prechecks _ |

| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files

found. |

| +0 :ok: | codespell | 0m 1s | | codespell was not available. |

| +1 :green_heart: | @author | 0m 0s | | The patch does not contain

any @author tags. |

| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to

include 2 new or modified test files. |

_ trunk Compile Tests _ |

| +1 :green_heart: | mvninstall | 36m 53s | | trunk passed |

| +1 :green_heart: | compile | 1m 31s | | trunk passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | compile | 1m 26s | | trunk passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | checkstyle | 1m 9s | | trunk passed |

| +1 :green_heart: | mvnsite | 1m 35s | | trunk passed |

| +1 :green_heart: | javadoc | 1m 4s | | trunk passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javadoc | 1m 28s | | trunk passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | spotbugs | 3m 37s | | trunk passed |

| +1 :green_heart: | shadedclient | 20m 9s | | branch has no errors

when building and testing our client artifacts. |

_ Patch Compile Tests _ |

| +1 :green_heart: | mvninstall | 1m 11s | | the patch passed |

| +1 :green_heart: | compile | 1m 16s | | the patch passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javac | 1m 16s | | the patch passed |

| +1 :green_heart: | compile | 1m 5s | | the patch passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | javac | 1m 5s | | the patch passed |

| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks

issues. |

| -0 :warning: | checkstyle | 0m 56s |

[/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2918/2/artifact/out/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt)

| hadoop-hdfs-project/hadoop-hdfs: The patch generated 13 new + 50 unchanged

- 0 fixed = 63 total (was 50) |

| +1 :green_heart: | mvnsite | 1m 15s | | the patch passed |

| +1 :green_heart: | javadoc | 0m 46s | | the patch passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javadoc | 1m 16s | | the patch passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| -1 :x: | spotbugs | 3m 21s |

[/new-spotbugs-hadoop-hdfs-project_hadoop-hdfs.html](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2918/2/artifact/out/new-spotbugs-hadoop-hdfs-project_hadoop-hdfs.html)

| hadoop-hdfs-project/hadoop-hdfs generated 2 new + 0 unchanged - 0 fixed = 2

total (was 0) |

| +1 :green_heart: | shadedclient | 19m 6s | | patch has no errors

when building and testing our client artifacts. |

_ Other Tests _ |

| -1 :x: | unit | 323m 21s |

[/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2918/2/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt)

| hadoop-hdfs in the patch passed. |

| +1 :green_heart: | asflicense | 0m 36s | | The patch does not

generate ASF License warnings. |

| | | 441m 33s | | |

| Reason | Tests |

|---:|:--|

| SpotBugs | module:hadoop-hdfs-project/hadoop-hdfs |

| | Found reliance on default encoding in

org.apache.hadoop.hdfs.tools.offlineImageViewer.PBImageTextWriter.outputInParallel(Configuration,

FsImageProto$FileSummary, ArrayList):in

org.apache.hadoop.hdfs.tools.offlineImageViewer.PBImageTextWriter.outputInParallel(Configuration,

FsImageProto$FileSummary, ArrayList): new java.io.PrintStream(String) At

PBImageTextWriter.java:[line 788] |

| | Exceptional return value of java.io.File.delete() ignored in

org.apache.hadoop.hdfs.tools.offlineImageViewer.PBImageTextWriter.mergeFiles(String[],

String) At PBImageTextWriter.java:ignored in

org.apache.hadoop.hdfs.tools.offlineImageV

[jira] [Work logged] (HDFS-15988) Stabilise HDFS Pre-Commit

[

https://issues.apache.org/jira/browse/HDFS-15988?focusedWorklogId=584510&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-584510

]

ASF GitHub Bot logged work on HDFS-15988:

-

Author: ASF GitHub Bot

Created on: 16/Apr/21 20:52

Start Date: 16/Apr/21 20:52

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #2860:

URL: https://github.com/apache/hadoop/pull/2860#issuecomment-821556485

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |

|::|--:|:|::|:---:|

| +0 :ok: | reexec | 0m 41s | | Docker mode activated. |

_ Prechecks _ |

| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files

found. |

| +0 :ok: | codespell | 0m 0s | | codespell was not available. |

| +0 :ok: | shelldocs | 0m 0s | | Shelldocs was not available. |

| +1 :green_heart: | @author | 0m 0s | | The patch does not contain

any @author tags. |

| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to

include 6 new or modified test files. |

_ trunk Compile Tests _ |

| +0 :ok: | mvndep | 15m 34s | | Maven dependency ordering for branch |

| +1 :green_heart: | mvninstall | 20m 52s | | trunk passed |

| +1 :green_heart: | compile | 4m 56s | | trunk passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | compile | 4m 33s | | trunk passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | checkstyle | 1m 19s | | trunk passed |

| +1 :green_heart: | mvnsite | 3m 10s | | trunk passed |

| +1 :green_heart: | javadoc | 2m 29s | | trunk passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javadoc | 3m 16s | | trunk passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | spotbugs | 6m 58s | | trunk passed |

| +1 :green_heart: | shadedclient | 14m 26s | | branch has no errors

when building and testing our client artifacts. |

_ Patch Compile Tests _ |

| +0 :ok: | mvndep | 0m 32s | | Maven dependency ordering for patch |

| +1 :green_heart: | mvninstall | 2m 39s | | the patch passed |

| +1 :green_heart: | compile | 4m 42s | | the patch passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javac | 4m 42s | | the patch passed |

| +1 :green_heart: | compile | 4m 28s | | the patch passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | javac | 4m 28s | | the patch passed |

| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks

issues. |

| -0 :warning: | checkstyle | 1m 10s |

[/results-checkstyle-hadoop-hdfs-project.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2860/14/artifact/out/results-checkstyle-hadoop-hdfs-project.txt)

| hadoop-hdfs-project: The patch generated 1 new + 177 unchanged - 1 fixed =

178 total (was 178) |

| +1 :green_heart: | hadolint | 0m 2s | | No new issues. |

| +1 :green_heart: | mvnsite | 2m 46s | | the patch passed |

| +1 :green_heart: | shellcheck | 0m 0s | | No new issues. |

| +1 :green_heart: | javadoc | 2m 0s | | the patch passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javadoc | 2m 48s | | the patch passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | spotbugs | 7m 0s | | the patch passed |

| +1 :green_heart: | shadedclient | 14m 7s | | patch has no errors

when building and testing our client artifacts. |

_ Other Tests _ |

| +1 :green_heart: | unit | 2m 25s | | hadoop-hdfs-client in the patch

passed. |

| -1 :x: | unit | 238m 28s |

[/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2860/14/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt)

| hadoop-hdfs in the patch passed. |

| +1 :green_heart: | unit | 18m 54s | | hadoop-hdfs-rbf in the patch

passed. |

| +1 :green_heart: | asflicense | 0m 49s | | The patch does not

generate ASF License warnings. |

| | | 383m 44s | | |

| Reason | Tests |

|---:|:--|

| Failed junit tests | hadoop.hdfs.server.balancer.TestBalancer |

| | hadoop.hdfs.server.namenode.TestAddOverReplicatedStripedBlocks |

| | hadoop.hdfs.qjournal.server.TestJournalNodeRespectsBindHostKeys |

| | hadoop.hdfs.server.blockmanagement.TestUnderReplicatedBlocks |

| | hadoop.hdfs.TestReconstructStripedFileWithRandomECPolicy |

| | hadoop.hdfs.server.blockmanagement.TestBlockTokenWithDFS

[jira] [Comment Edited] (HDFS-15982) Deleted data on the Web UI must be saved to the trash

[ https://issues.apache.org/jira/browse/HDFS-15982?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17324072#comment-17324072 ] Viraj Jasani edited comment on HDFS-15982 at 4/16/21, 8:47 PM: --- +1 to this improvement. Given the importance, does it make sense to keep "moving file to .Trash" logic as part of FileSystem's Delete implementation rather than keeping at individual client interface (shell, webhdfs etc)? was (Author: vjasani): +1 to this improvement. Does it make sense to keep "moving file to .Trash" logic as part of FileSystem's Delete implementation rather than keeping at individual client interface (shell, webhdfs etc)? > Deleted data on the Web UI must be saved to the trash > -- > > Key: HDFS-15982 > URL: https://issues.apache.org/jira/browse/HDFS-15982 > Project: Hadoop HDFS > Issue Type: New Feature > Components: hdfs >Reporter: Bhavik Patel >Priority: Major > > If we delete the data from the Web UI then it should be first moved to > configured/default Trash directory and after the trash interval time, it > should be removed. currently, data directly removed from the system[This > behavior should be the same as CLI cmd] > > This can be helpful when the user accidentally deletes data from the Web UI. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Commented] (HDFS-15982) Deleted data on the Web UI must be saved to the trash

[ https://issues.apache.org/jira/browse/HDFS-15982?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17324072#comment-17324072 ] Viraj Jasani commented on HDFS-15982: - +1 to this improvement. Does it make sense to keep "moving file to .Trash" logic as part of FileSystem's Delete implementation rather than keeping at individual client interface (shell, webhdfs etc)? > Deleted data on the Web UI must be saved to the trash > -- > > Key: HDFS-15982 > URL: https://issues.apache.org/jira/browse/HDFS-15982 > Project: Hadoop HDFS > Issue Type: New Feature > Components: hdfs >Reporter: Bhavik Patel >Priority: Major > > If we delete the data from the Web UI then it should be first moved to > configured/default Trash directory and after the trash interval time, it > should be removed. currently, data directly removed from the system[This > behavior should be the same as CLI cmd] > > This can be helpful when the user accidentally deletes data from the Web UI. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-15988) Stabilise HDFS Pre-Commit

[ https://issues.apache.org/jira/browse/HDFS-15988?focusedWorklogId=584487&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-584487 ] ASF GitHub Bot logged work on HDFS-15988: - Author: ASF GitHub Bot Created on: 16/Apr/21 20:24 Start Date: 16/Apr/21 20:24 Worklog Time Spent: 10m Work Description: hadoop-yetus commented on pull request #2860: URL: https://github.com/apache/hadoop/pull/2860#issuecomment-821541081 (!) A patch to the testing environment has been detected. Re-executing against the patched versions to perform further tests. The console is at https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2860/15/console in case of problems. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 584487) Time Spent: 20m (was: 10m) > Stabilise HDFS Pre-Commit > - > > Key: HDFS-15988 > URL: https://issues.apache.org/jira/browse/HDFS-15988 > Project: Hadoop HDFS > Issue Type: Bug >Reporter: Ayush Saxena >Assignee: Ayush Saxena >Priority: Major > Labels: pull-request-available > Time Spent: 20m > Remaining Estimate: 0h > > Fix couple of unit-tests: > TestRouterRpc > TestRouterRpcMultiDest > TestNestedSnapshots > TestPersistBlocks > TestDirectoryScanner > * Increase Maven OPTS, Remove timeouts from couple of tests and Add a retry > flaky test option in the build, So, as to make the build little stable -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-15979) Move within EZ fails and cannot remove nested EZs

[

https://issues.apache.org/jira/browse/HDFS-15979?focusedWorklogId=584405&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-584405

]

ASF GitHub Bot logged work on HDFS-15979:

-

Author: ASF GitHub Bot

Created on: 16/Apr/21 17:55

Start Date: 16/Apr/21 17:55

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #2919:

URL: https://github.com/apache/hadoop/pull/2919#issuecomment-821354712

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |

|::|--:|:|::|:---:|

| +0 :ok: | reexec | 0m 36s | | Docker mode activated. |

_ Prechecks _ |

| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files

found. |

| +0 :ok: | codespell | 0m 1s | | codespell was not available. |

| +1 :green_heart: | @author | 0m 0s | | The patch does not contain

any @author tags. |

| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to

include 2 new or modified test files. |

_ trunk Compile Tests _ |

| +1 :green_heart: | mvninstall | 35m 19s | | trunk passed |

| +1 :green_heart: | compile | 1m 24s | | trunk passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | compile | 1m 19s | | trunk passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | checkstyle | 1m 7s | | trunk passed |

| +1 :green_heart: | mvnsite | 1m 25s | | trunk passed |

| +1 :green_heart: | javadoc | 0m 55s | | trunk passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javadoc | 1m 32s | | trunk passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | spotbugs | 3m 30s | | trunk passed |

| +1 :green_heart: | shadedclient | 19m 26s | | branch has no errors

when building and testing our client artifacts. |

_ Patch Compile Tests _ |

| +1 :green_heart: | mvninstall | 1m 17s | | the patch passed |

| +1 :green_heart: | compile | 1m 26s | | the patch passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javac | 1m 26s | | the patch passed |

| +1 :green_heart: | compile | 1m 12s | | the patch passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | javac | 1m 12s | | the patch passed |

| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks

issues. |

| +1 :green_heart: | checkstyle | 0m 58s | | the patch passed |

| +1 :green_heart: | mvnsite | 1m 18s | | the patch passed |

| +1 :green_heart: | xml | 0m 1s | | The patch has no ill-formed XML

file. |

| +1 :green_heart: | javadoc | 0m 53s | | the patch passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javadoc | 1m 22s | | the patch passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | spotbugs | 3m 28s | | the patch passed |

| +1 :green_heart: | shadedclient | 18m 13s | | patch has no errors

when building and testing our client artifacts. |

_ Other Tests _ |

| -1 :x: | unit | 243m 41s |

[/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2919/1/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt)

| hadoop-hdfs in the patch passed. |

| +1 :green_heart: | asflicense | 0m 44s | | The patch does not

generate ASF License warnings. |

| | | 338m 35s | | |

| Reason | Tests |

|---:|:--|

| Failed junit tests | hadoop.hdfs.server.datanode.TestDirectoryScanner |

| | hadoop.hdfs.qjournal.server.TestJournalNodeRespectsBindHostKeys |

| | hadoop.hdfs.server.namenode.snapshot.TestNestedSnapshots |

| | hadoop.hdfs.TestFileCreation |

| Subsystem | Report/Notes |

|--:|:-|

| Docker | ClientAPI=1.41 ServerAPI=1.41 base:

https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2919/1/artifact/out/Dockerfile

|

| GITHUB PR | https://github.com/apache/hadoop/pull/2919 |

| Optional Tests | dupname asflicense compile javac javadoc mvninstall

mvnsite unit shadedclient spotbugs checkstyle codespell xml |

| uname | Linux 58fe044e51b6 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6

11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |

| Build tool | maven |

| Personality | dev-support/bin/hadoop.sh |

| git revision | trunk / 687a324ecbe2a53006aeff1185ec106311555b8e |

| Default Java | Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| Multi-JDK versions |

/usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04

/usr/lib/jvm/java-

[jira] [Updated] (HDFS-15980) Fix tests for HDFS-15754 Create packet metrics for DataNode

[ https://issues.apache.org/jira/browse/HDFS-15980?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Zheng Shao updated HDFS-15980: -- Fix Version/s: 3.4.0 Affects Version/s: 3.4.0 Status: Patch Available (was: In Progress) https://github.com/apache/hadoop/pull/2921 > Fix tests for HDFS-15754 Create packet metrics for DataNode > --- > > Key: HDFS-15980 > URL: https://issues.apache.org/jira/browse/HDFS-15980 > Project: Hadoop HDFS > Issue Type: Bug > Components: datanode >Affects Versions: 3.4.0 >Reporter: Zheng Shao >Assignee: Zheng Shao >Priority: Trivial > Labels: pull-request-available > Fix For: 3.4.0 > > Time Spent: 10m > Remaining Estimate: 0h > > HDFS-15754 introduces 4 new metrics in DataNodeMetrics. However the test > associated with the patch has some bugs. This issue is to fix those bugs in > the tests. > Please note that the non-test code of HDFS-15754 worked fine without any bugs. > -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Updated] (HDFS-15980) Fix tests for HDFS-15754 Create packet metrics for DataNode

[ https://issues.apache.org/jira/browse/HDFS-15980?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated HDFS-15980: -- Labels: pull-request-available (was: ) > Fix tests for HDFS-15754 Create packet metrics for DataNode > --- > > Key: HDFS-15980 > URL: https://issues.apache.org/jira/browse/HDFS-15980 > Project: Hadoop HDFS > Issue Type: Bug > Components: datanode >Reporter: Zheng Shao >Assignee: Zheng Shao >Priority: Trivial > Labels: pull-request-available > Time Spent: 10m > Remaining Estimate: 0h > > HDFS-15754 introduces 4 new metrics in DataNodeMetrics. However the test > associated with the patch has some bugs. This issue is to fix those bugs in > the tests. > Please note that the non-test code of HDFS-15754 worked fine without any bugs. > -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-15980) Fix tests for HDFS-15754 Create packet metrics for DataNode

[ https://issues.apache.org/jira/browse/HDFS-15980?focusedWorklogId=584393&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-584393 ] ASF GitHub Bot logged work on HDFS-15980: - Author: ASF GitHub Bot Created on: 16/Apr/21 17:23 Start Date: 16/Apr/21 17:23 Worklog Time Spent: 10m Work Description: uzshao opened a new pull request #2921: URL: https://github.com/apache/hadoop/pull/2921 See https://issues.apache.org/jira/browse/HDFS-15980 HDFS-15754 introduces 4 new metrics in DataNodeMetrics. However the test associated with the patch has some bugs. This issue is to fix those bugs in the tests. Please note that the non-test code of HDFS-15754 worked fine without any bugs. There are 3 issues: 1. The metric names in the tests were incorrect; 2. The tests only checked the metrics of DataNode 0, but one of the metrics, PacketsSlowWriteToMirror, is only updated on 2 of the 3 DataNodes, so this created an indeterministic failure. 3. The metric PacketsSlowWriteToOsCache was not updated in the test due to the fact that the size of the file was smaller than BlockReceiver.CACHE_DROP_LAG_BYTES All of them are fixed in this patch. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 584393) Remaining Estimate: 0h Time Spent: 10m > Fix tests for HDFS-15754 Create packet metrics for DataNode > --- > > Key: HDFS-15980 > URL: https://issues.apache.org/jira/browse/HDFS-15980 > Project: Hadoop HDFS > Issue Type: Bug > Components: datanode >Reporter: Zheng Shao >Assignee: Zheng Shao >Priority: Trivial > Time Spent: 10m > Remaining Estimate: 0h > > HDFS-15754 introduces 4 new metrics in DataNodeMetrics. However the test > associated with the patch has some bugs. This issue is to fix those bugs in > the tests. > Please note that the non-test code of HDFS-15754 worked fine without any bugs. > -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Reopened] (HDFS-15971) Make mkstemp cross platform

[ https://issues.apache.org/jira/browse/HDFS-15971?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Eric Badger reopened HDFS-15971: > Make mkstemp cross platform > --- > > Key: HDFS-15971 > URL: https://issues.apache.org/jira/browse/HDFS-15971 > Project: Hadoop HDFS > Issue Type: Improvement > Components: libhdfs++ >Affects Versions: 3.4.0 >Reporter: Gautham Banasandra >Assignee: Gautham Banasandra >Priority: Major > Labels: pull-request-available > Fix For: 3.4.0 > > Time Spent: 1h > Remaining Estimate: 0h > > mkstemp isn't available in Visual C++. Need to make it cross platform. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Updated] (HDFS-15971) Make mkstemp cross platform

[ https://issues.apache.org/jira/browse/HDFS-15971?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Eric Badger updated HDFS-15971: --- Fix Version/s: (was: 3.4.0) I've reverted this from trunk > Make mkstemp cross platform > --- > > Key: HDFS-15971 > URL: https://issues.apache.org/jira/browse/HDFS-15971 > Project: Hadoop HDFS > Issue Type: Improvement > Components: libhdfs++ >Affects Versions: 3.4.0 >Reporter: Gautham Banasandra >Assignee: Gautham Banasandra >Priority: Major > Labels: pull-request-available > Time Spent: 1h > Remaining Estimate: 0h > > mkstemp isn't available in Visual C++. Need to make it cross platform. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work started] (HDFS-15980) Fix tests for HDFS-15754 Create packet metrics for DataNode

[ https://issues.apache.org/jira/browse/HDFS-15980?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Work on HDFS-15980 started by Zheng Shao. - > Fix tests for HDFS-15754 Create packet metrics for DataNode > --- > > Key: HDFS-15980 > URL: https://issues.apache.org/jira/browse/HDFS-15980 > Project: Hadoop HDFS > Issue Type: Bug > Components: datanode >Reporter: Zheng Shao >Assignee: Zheng Shao >Priority: Trivial > > HDFS-15754 introduces 4 new metrics in DataNodeMetrics. However the test > associated with the patch has some bugs. This issue is to fix those bugs in > the tests. > Please note that the non-test code of HDFS-15754 worked fine without any bugs. > -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Commented] (HDFS-15971) Make mkstemp cross platform

[ https://issues.apache.org/jira/browse/HDFS-15971?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17323953#comment-17323953 ] Eric Badger commented on HDFS-15971: Yea, I think reverting would be best until we can figure out how to fix it on RHEL. I'll revert it. I'm not familiar with the code that was modified, but I'm happy to test any patches on RHEL to make sure that they work on that environment before we merge again. > Make mkstemp cross platform > --- > > Key: HDFS-15971 > URL: https://issues.apache.org/jira/browse/HDFS-15971 > Project: Hadoop HDFS > Issue Type: Improvement > Components: libhdfs++ >Affects Versions: 3.4.0 >Reporter: Gautham Banasandra >Assignee: Gautham Banasandra >Priority: Major > Labels: pull-request-available > Fix For: 3.4.0 > > Time Spent: 1h > Remaining Estimate: 0h > > mkstemp isn't available in Visual C++. Need to make it cross platform. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Comment Edited] (HDFS-15971) Make mkstemp cross platform

[ https://issues.apache.org/jira/browse/HDFS-15971?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17323948#comment-17323948 ] Gautham Banasandra edited comment on HDFS-15971 at 4/16/21, 5:08 PM: - Sorry about the inconvenience [~ebadger]. It's quite strange as to why the CMake in RHEL 7.6 isn't able to find x_platform_obj_c_api. Since, this runs without any issues on Ubuntu Focal. [~elgoiri] I've started looking into this. But if it's blocking folks on RHEL 7, please feel free to revert this PR. was (Author: gautham): Sorry about the inconvenience [~ebadger]. It's quite strange as to why the CMake in RHEL 7.6 isn't able to find x_platform_obj_c_api. Since, this runs without any issues on Ubuntu Focal. [~elgoiri] I'll fix this over the weekend. But if it's blocking folks on RHEL 7, please feel free to revert this. > Make mkstemp cross platform > --- > > Key: HDFS-15971 > URL: https://issues.apache.org/jira/browse/HDFS-15971 > Project: Hadoop HDFS > Issue Type: Improvement > Components: libhdfs++ >Affects Versions: 3.4.0 >Reporter: Gautham Banasandra >Assignee: Gautham Banasandra >Priority: Major > Labels: pull-request-available > Fix For: 3.4.0 > > Time Spent: 1h > Remaining Estimate: 0h > > mkstemp isn't available in Visual C++. Need to make it cross platform. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-15975) Use LongAdder instead of AtomicLong

[

https://issues.apache.org/jira/browse/HDFS-15975?focusedWorklogId=584372&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-584372

]

ASF GitHub Bot logged work on HDFS-15975:

-

Author: ASF GitHub Bot

Created on: 16/Apr/21 17:05

Start Date: 16/Apr/21 17:05

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #2907:

URL: https://github.com/apache/hadoop/pull/2907#issuecomment-821314734

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |

|::|--:|:|::|:---:|

| +0 :ok: | reexec | 0m 37s | | Docker mode activated. |

_ Prechecks _ |

| +1 :green_heart: | dupname | 0m 1s | | No case conflicting files

found. |

| +0 :ok: | codespell | 0m 0s | | codespell was not available. |

| +1 :green_heart: | @author | 0m 0s | | The patch does not contain

any @author tags. |

| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to

include 1 new or modified test files. |

_ trunk Compile Tests _ |

| +0 :ok: | mvndep | 15m 36s | | Maven dependency ordering for branch |

| +1 :green_heart: | mvninstall | 20m 16s | | trunk passed |

| +1 :green_heart: | compile | 20m 46s | | trunk passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | compile | 18m 23s | | trunk passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | checkstyle | 3m 45s | | trunk passed |

| +1 :green_heart: | mvnsite | 4m 14s | | trunk passed |

| +1 :green_heart: | javadoc | 3m 2s | | trunk passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javadoc | 4m 7s | | trunk passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | spotbugs | 8m 15s | | trunk passed |

| +1 :green_heart: | shadedclient | 16m 26s | | branch has no errors

when building and testing our client artifacts. |

_ Patch Compile Tests _ |

| +0 :ok: | mvndep | 0m 26s | | Maven dependency ordering for patch |

| +1 :green_heart: | mvninstall | 2m 51s | | the patch passed |

| +1 :green_heart: | compile | 20m 12s | | the patch passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javac | 20m 12s | | the patch passed |

| +1 :green_heart: | compile | 18m 14s | | the patch passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | javac | 18m 14s | | the patch passed |

| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks

issues. |

| -0 :warning: | checkstyle | 3m 46s |

[/results-checkstyle-root.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2907/4/artifact/out/results-checkstyle-root.txt)

| root: The patch generated 5 new + 242 unchanged - 5 fixed = 247 total (was

247) |

| +1 :green_heart: | mvnsite | 4m 14s | | the patch passed |

| +1 :green_heart: | javadoc | 2m 58s | | the patch passed with JDK

Ubuntu-11.0.10+9-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javadoc | 3m 59s | | the patch passed with JDK

Private Build-1.8.0_282-8u282-b08-0ubuntu1~20.04-b08 |

| +1 :green_heart: | spotbugs | 8m 56s | | the patch passed |

| +1 :green_heart: | shadedclient | 17m 39s | | patch has no errors

when building and testing our client artifacts. |

_ Other Tests _ |

| +1 :green_heart: | unit | 17m 33s | | hadoop-common in the patch

passed. |

| +1 :green_heart: | unit | 2m 31s | | hadoop-hdfs-client in the patch

passed. |

| -1 :x: | unit | 242m 3s |

[/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2907/4/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt)

| hadoop-hdfs in the patch passed. |

| +1 :green_heart: | asflicense | 0m 59s | | The patch does not

generate ASF License warnings. |

| | | 461m 25s | | |

| Reason | Tests |

|---:|:--|

| Failed junit tests | hadoop.hdfs.server.datanode.TestDirectoryScanner |

| | hadoop.hdfs.qjournal.server.TestJournalNodeSync |

| | hadoop.hdfs.qjournal.server.TestJournalNodeRespectsBindHostKeys |

| | hadoop.hdfs.server.namenode.snapshot.TestNestedSnapshots |

| | hadoop.hdfs.TestViewDistributedFileSystem |

| Subsystem | Report/Notes |

|--:|:-|

| Docker | ClientAPI=1.41 ServerAPI=1.41 base:

https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-2907/4/artifact/out/Dockerfile

|

| GITHUB PR | https://github.com/apache/hadoop/pull/2907 |

| Optional Tests | dupname asflicense compile javac javadoc mvninstall

mvnsi

[jira] [Commented] (HDFS-15971) Make mkstemp cross platform

[ https://issues.apache.org/jira/browse/HDFS-15971?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17323948#comment-17323948 ] Gautham Banasandra commented on HDFS-15971: --- Sorry about the inconvenience [~ebadger]. It's quite strange as to why the CMake in RHEL 7.6 isn't able to find x_platform_obj_c_api. Since, this runs without any issues on Ubuntu Focal. [~elgoiri] I'll fix this over the weekend. But if it's blocking folks on RHEL 7, please feel free to revert this. > Make mkstemp cross platform > --- > > Key: HDFS-15971 > URL: https://issues.apache.org/jira/browse/HDFS-15971 > Project: Hadoop HDFS > Issue Type: Improvement > Components: libhdfs++ >Affects Versions: 3.4.0 >Reporter: Gautham Banasandra >Assignee: Gautham Banasandra >Priority: Major > Labels: pull-request-available > Fix For: 3.4.0 > > Time Spent: 1h > Remaining Estimate: 0h > > mkstemp isn't available in Visual C++. Need to make it cross platform. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Commented] (HDFS-15985) Incorrect sorting will cause failure to load an FsImage file

[

https://issues.apache.org/jira/browse/HDFS-15985?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17323944#comment-17323944

]

Xiaoqiao He commented on HDFS-15985:

I am also concerned that how to catch the exception here. which version

(with/without this patch) to checkpoint and generate fsimage and which

version(with/without this patch) to load it? While new version fsimage

format(after patch) could not been parsed by old version(without patch).

> Incorrect sorting will cause failure to load an FsImage file

>

>

> Key: HDFS-15985

> URL: https://issues.apache.org/jira/browse/HDFS-15985

> Project: Hadoop HDFS

> Issue Type: Sub-task

>Reporter: JiangHua Zhu

>Assignee: JiangHua Zhu

>Priority: Major

> Labels: pull-request-available

> Time Spent: 20m

> Remaining Estimate: 0h

>

> After we have introduced HDFS-14617 or HDFS-14771, when loading an fsimage

> file, the following error will pop up:

> 2021-04-15 17:21:17,868 [293072]-INFO [main:FSImage@784]-Planning to load

> image:

> FSImageFile(file=//hadoop/hdfs/namenode/current/fsimage_0,

> cpktTxId=0)

> 2021-04-15 17:25:53,288 [568492]-INFO

> [main:FSImageFormatPBINode$Loader@229]-Loading 725097952 INodes.

> 2021-04-15 17:25:53,289 [568493]-ERROR [main:FSImage@730]-Failed to load

> image from

> FSImageFile(file=//hadoop/hdfs/namenode/current/fsimage_0,

> cpktTxId=0)

> java.lang.IllegalStateException: GLOBAL: serial number 3 does not exist

> at

> org.apache.hadoop.hdfs.server.namenode.SerialNumberMap.get(SerialNumberMap.java:85)

> at

> org.apache.hadoop.hdfs.server.namenode.SerialNumberManager.getString(SerialNumberManager.java:121)

> at

> org.apache.hadoop.hdfs.server.namenode.SerialNumberManager.getString(SerialNumberManager.java:125)

> at

> org.apache.hadoop.hdfs.server.namenode.INodeWithAdditionalFields$PermissionStatusFormat.toPermissionStatus(INodeWithAdditionalFields.java:86)

> at

> org.apache.hadoop.hdfs.server.namenode.FSImageFormatPBINode$Loader.loadPermission(FSImageFormatPBINode.java:93)

> at

> org.apache.hadoop.hdfs.server.namenode.FSImageFormatPBINode$Loader.loadINodeFile(FSImageFormatPBINode.java:303)

> at

> org.apache.hadoop.hdfs.server.namenode.FSImageFormatPBINode$Loader.loadINode(FSImageFormatPBINode.java:280)

> at

> org.apache.hadoop.hdfs.server.namenode.FSImageFormatPBINode$Loader.loadINodeSection(FSImageFormatPBINode.java:237)

> at

> org.apache.hadoop.hdfs.server.namenode.FSImageFormatProtobuf$Loader.loadInternal(FSImageFormatProtobuf.java:237)

> at

> org.apache.hadoop.hdfs.server.namenode.FSImageFormatProtobuf$Loader.load(FSImageFormatProtobuf.java:176)

> at

> org.apache.hadoop.hdfs.server.namenode.FSImageFormat$LoaderDelegator.load(FSImageFormat.java:226)

> at

> org.apache.hadoop.hdfs.server.namenode.FSImage.loadFSImage(FSImage.java:937)

> It was found that this anomaly was related to sorting, as follows:

> ArrayList sections = Lists.newArrayList(summary

> .getSectionsList());

> Collections.sort(sections, new Comparator() {

> @Override

> public int compare(FileSummary.Section s1, FileSummary.Section s2) {

> SectionName n1 = SectionName.fromString(s1.getName());

> SectionName n2 = SectionName.fromString(s2.getName());

> if (n1 == null) {

> return n2 == null? 0: -1;

> } else if (n2 == null) {

> return -1;

> } else {

> return n1.ordinal()-n2.ordinal();

> }

> }

> });

> When n1 != null and n2 == null, this will cause sorting errors.

> When loading Sections, the correct order of loading Sections:

> NS_INFO -> STRING_TABLE -> INODE

> If the sorting is incorrect, the loading order is as follows:

> INDOE -> NS_INFO -> STRING_TABLE

> Because when loading INODE, you need to rely on STRING_TABLE.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

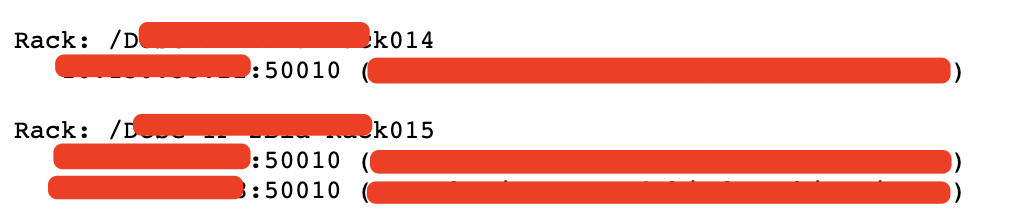

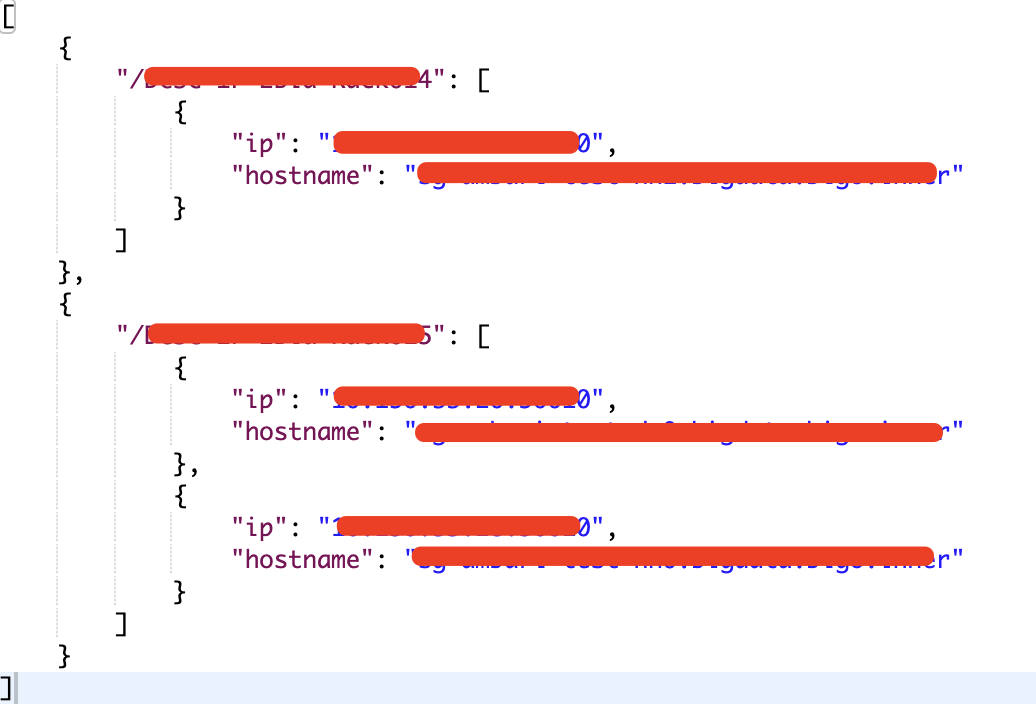

[jira] [Work logged] (HDFS-15970) Print network topology on the web

[

https://issues.apache.org/jira/browse/HDFS-15970?focusedWorklogId=584366&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-584366

]

ASF GitHub Bot logged work on HDFS-15970:

-

Author: ASF GitHub Bot

Created on: 16/Apr/21 16:53

Start Date: 16/Apr/21 16:53

Worklog Time Spent: 10m

Work Description: tomscut commented on a change in pull request #2896:

URL: https://github.com/apache/hadoop/pull/2896#discussion_r614993153

##

File path:

hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/namenode/NetworkTopologyServlet.java

##

@@ -0,0 +1,115 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.hadoop.hdfs.server.namenode;

+

+import org.apache.hadoop.classification.InterfaceAudience;

+import org.apache.hadoop.hdfs.server.blockmanagement.BlockManager;

+import org.apache.hadoop.net.NetUtils;

+import org.apache.hadoop.net.Node;

+import org.apache.hadoop.net.NodeBase;

+import org.apache.hadoop.util.StringUtils;

+

+import javax.servlet.ServletContext;

+import javax.servlet.http.HttpServletRequest;

+import javax.servlet.http.HttpServletResponse;

+import java.io.IOException;

+import java.io.PrintStream;

+import java.util.ArrayList;

+import java.util.Collections;

+import java.util.HashMap;

+import java.util.List;

+import java.util.TreeSet;

+

+/**

+ * A servlet to print out the network topology.

+ */

+@InterfaceAudience.Private

+public class NetworkTopologyServlet extends DfsServlet {

+

+ public static final String PATH_SPEC = "/topology";

+

+ @Override

+ public void doGet(HttpServletRequest request, HttpServletResponse response)

Review comment:

> Right now we are exposing this as plain text in the Web UI when

clicking.

> We could have something with some more format like a JSON that can be

shown nicely in the Web UI.

Thanks @goiri for your advice. I changed the code to support json format. By

default, the output is still in text format. When the request

header(Accept:application/json) is set, the results are displayed in JSON

format, just like conf api (http://namenode:port/conf).

Text format:

curl http://namenode:port/topology

Json format:

curl -H 'Accept:application/json' http://namenode:port/topology

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

Issue Time Tracking

---

Worklog Id: (was: 584366)

Time Spent: 2.5h (was: 2h 20m)

> Print network topology on the web

> -

>

> Key: HDFS-15970

> URL: https://issues.apache.org/jira/browse/HDFS-15970

> Project: Hadoop HDFS

> Issue Type: Wish

>Reporter: tomscut

>Assignee: tomscut

>Priority: Minor

> Labels: pull-request-available

> Attachments: hdfs-topology-json.jpg, hdfs-topology.jpg, hdfs-web.jpg

>

> Time Spent: 2.5h

> Remaining Estimate: 0h

>

> In order to query the network topology information conveniently, we can print

> it on the web.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Updated] (HDFS-15970) Print network topology on the web

[ https://issues.apache.org/jira/browse/HDFS-15970?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] tomscut updated HDFS-15970: --- Attachment: hdfs-topology-json.jpg > Print network topology on the web > - > > Key: HDFS-15970 > URL: https://issues.apache.org/jira/browse/HDFS-15970 > Project: Hadoop HDFS > Issue Type: Wish >Reporter: tomscut >Assignee: tomscut >Priority: Minor > Labels: pull-request-available > Attachments: hdfs-topology-json.jpg, hdfs-topology.jpg, hdfs-web.jpg > > Time Spent: 2h 20m > Remaining Estimate: 0h > > In order to query the network topology information conveniently, we can print > it on the web. -- This message was sent by Atlassian Jira (v8.3.4#803005) - To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Updated] (HDFS-15963) Unreleased volume references cause an infinite loop

[

https://issues.apache.org/jira/browse/HDFS-15963?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Xiaoqiao He updated HDFS-15963:

---

Fix Version/s: 3.4.0

Hadoop Flags: Reviewed

Resolution: Fixed

Status: Resolved (was: Patch Available)

Committed to trunk. Thanks [~zhangshuyan] for your report and contribution!

Thanks [~weichiu] for your reviews.

> Unreleased volume references cause an infinite loop

> ---

>

> Key: HDFS-15963

> URL: https://issues.apache.org/jira/browse/HDFS-15963

> Project: Hadoop HDFS

> Issue Type: Bug

> Components: datanode

>Reporter: Shuyan Zhang

>Assignee: Shuyan Zhang

>Priority: Major

> Labels: pull-request-available

> Fix For: 3.4.0

>

> Attachments: HDFS-15963.001.patch, HDFS-15963.002.patch,

> HDFS-15963.003.patch

>

> Time Spent: 4h

> Remaining Estimate: 0h

>

> When BlockSender throws an exception because the meta-data cannot be found,

> the volume reference obtained by the thread is not released, which causes the

> thread trying to remove the volume to wait and fall into an infinite loop.

> {code:java}

> boolean checkVolumesRemoved() {

> Iterator it = volumesBeingRemoved.iterator();

> while (it.hasNext()) {

> FsVolumeImpl volume = it.next();

> if (!volume.checkClosed()) {

> return false;

> }

> it.remove();

> }

> return true;

> }

> boolean checkClosed() {

> // always be true.

> if (this.reference.getReferenceCount() > 0) {

> FsDatasetImpl.LOG.debug("The reference count for {} is {}, wait to be 0.",

> this, reference.getReferenceCount());

> return false;

> }

> return true;

> }

> {code}

> At the same time, because the thread has been holding checkDirsLock when

> removing the volume, other threads trying to acquire the same lock will be

> permanently blocked.

> Similar problems also occur in RamDiskAsyncLazyPersistService and

> FsDatasetAsyncDiskService.

> This patch releases the three previously unreleased volume references.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-15963) Unreleased volume references cause an infinite loop

[

https://issues.apache.org/jira/browse/HDFS-15963?focusedWorklogId=584335&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-584335

]

ASF GitHub Bot logged work on HDFS-15963:

-

Author: ASF GitHub Bot

Created on: 16/Apr/21 16:11

Start Date: 16/Apr/21 16:11

Worklog Time Spent: 10m

Work Description: Hexiaoqiao commented on pull request #2889:

URL: https://github.com/apache/hadoop/pull/2889#issuecomment-821284155

Committed to trunk. Thanks @zhangshuyan0 for your works! Thanks @jojochuang

for your reviews!

Will backport to other active branches for a while.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

Issue Time Tracking

---

Worklog Id: (was: 584335)

Time Spent: 4h (was: 3h 50m)

> Unreleased volume references cause an infinite loop

> ---

>

> Key: HDFS-15963

> URL: https://issues.apache.org/jira/browse/HDFS-15963

> Project: Hadoop HDFS

> Issue Type: Bug

> Components: datanode

>Reporter: Shuyan Zhang

>Assignee: Shuyan Zhang

>Priority: Major

> Labels: pull-request-available

> Attachments: HDFS-15963.001.patch, HDFS-15963.002.patch,

> HDFS-15963.003.patch

>

> Time Spent: 4h

> Remaining Estimate: 0h

>

> When BlockSender throws an exception because the meta-data cannot be found,

> the volume reference obtained by the thread is not released, which causes the

> thread trying to remove the volume to wait and fall into an infinite loop.

> {code:java}

> boolean checkVolumesRemoved() {

> Iterator it = volumesBeingRemoved.iterator();

> while (it.hasNext()) {

> FsVolumeImpl volume = it.next();

> if (!volume.checkClosed()) {

> return false;

> }

> it.remove();

> }

> return true;

> }

> boolean checkClosed() {

> // always be true.

> if (this.reference.getReferenceCount() > 0) {

> FsDatasetImpl.LOG.debug("The reference count for {} is {}, wait to be 0.",

> this, reference.getReferenceCount());

> return false;

> }

> return true;

> }

> {code}

> At the same time, because the thread has been holding checkDirsLock when

> removing the volume, other threads trying to acquire the same lock will be

> permanently blocked.

> Similar problems also occur in RamDiskAsyncLazyPersistService and

> FsDatasetAsyncDiskService.

> This patch releases the three previously unreleased volume references.

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-15963) Unreleased volume references cause an infinite loop

[

https://issues.apache.org/jira/browse/HDFS-15963?focusedWorklogId=584332&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-584332

]

ASF GitHub Bot logged work on HDFS-15963:

-

Author: ASF GitHub Bot

Created on: 16/Apr/21 16:08

Start Date: 16/Apr/21 16:08

Worklog Time Spent: 10m

Work Description: Hexiaoqiao merged pull request #2889:

URL: https://github.com/apache/hadoop/pull/2889

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

Issue Time Tracking

---

Worklog Id: (was: 584332)

Time Spent: 3h 50m (was: 3h 40m)

> Unreleased volume references cause an infinite loop

> ---

>

> Key: HDFS-15963

> URL: https://issues.apache.org/jira/browse/HDFS-15963

> Project: Hadoop HDFS

> Issue Type: Bug

> Components: datanode

>Reporter: Shuyan Zhang

>Assignee: Shuyan Zhang

>Priority: Major

> Labels: pull-request-available

> Attachments: HDFS-15963.001.patch, HDFS-15963.002.patch,

> HDFS-15963.003.patch

>

> Time Spent: 3h 50m

> Remaining Estimate: 0h

>

> When BlockSender throws an exception because the meta-data cannot be found,

> the volume reference obtained by the thread is not released, which causes the

> thread trying to remove the volume to wait and fall into an infinite loop.

> {code:java}

> boolean checkVolumesRemoved() {