[jira] [Work logged] (HDFS-16266) Add remote port information to HDFS audit log

[ https://issues.apache.org/jira/browse/HDFS-16266?focusedWorklogId=672941=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-672941 ]

ASF GitHub Bot logged work on HDFS-16266:
-

Author: ASF GitHub Bot
Created on: 02/Nov/21 02:38
Start Date: 02/Nov/21 02:38
Worklog Time Spent: 10m
Work Description: tomscut commented on pull request #3538:
URL: https://github.com/apache/hadoop/pull/3538#issuecomment-957045765

Hi @aajisaka, could you please review this again? Thanks a lot.

--
This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org
For queries about this service, please contact Infrastructure at: us...@infra.apache.org

Issue Time Tracking
---

Worklog Id: (was: 672941)
Time Spent: 7h (was: 6h 50m)

> Add remote port information to HDFS audit log
> -
>
> Key: HDFS-16266
> URL: https://issues.apache.org/jira/browse/HDFS-16266
> Project: Hadoop HDFS
> Issue Type: Improvement
> Reporter: tomscut
> Assignee: tomscut
> Priority: Major
> Labels: pull-request-available
> Time Spent: 7h
> Remaining Estimate: 0h
>
> In our production environment, we occasionally encounter a problem where a user submits an abnormal computation job that causes a sudden flood of requests. The NameNode's queueTime and processingTime then rise sharply, and a large backlog of tasks builds up.
> We usually locate and kill the specific Spark, Flink, or MapReduce jobs based on metrics and audit logs. Currently, the audit logs record the IP and UGI but not the port, so it is sometimes difficult to locate the specific process. Therefore, I propose adding port information to the audit log so that we can easily trace the upstream process.
> Some projects, such as HBase and Alluxio, already include port information in their audit logs. I think HDFS audit logs should include it as well.

--
This message was sent by Atlassian Jira
(v8.3.4#803005)

-
To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org
For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org
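To illustrate how a port field could help trace the upstream process, here is a minimal sketch that counts audit entries per client endpoint and reports the busiest one. It assumes, purely for illustration, that the port is appended to the `ip=` field (for example `ip=/10.0.0.5:40002`); the field layout actually chosen for HDFS-16266 may differ, and `AuditPortCounter` is a hypothetical helper, not part of Hadoop. The sample lines are invented and only loosely modeled on the HDFS audit format.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class AuditPortCounter {
  // Assumed (hypothetical) layout: "ip=/<host>:<port>". IPv6 hosts are not handled.
  private static final Pattern IP_PORT = Pattern.compile("\\bip=/([^\\s:]+):(\\d+)");

  /** Counts audit entries per "host:port" client endpoint; lines without a match are skipped. */
  static Map<String, Integer> countByEndpoint(List<String> lines) {
    Map<String, Integer> counts = new HashMap<>();
    for (String line : lines) {
      Matcher m = IP_PORT.matcher(line);
      if (m.find()) {
        counts.merge(m.group(1) + ":" + m.group(2), 1, Integer::sum);
      }
    }
    return counts;
  }

  /** Returns the endpoint with the most entries, or null if no line matched. */
  static String busiestEndpoint(List<String> lines) {
    String best = null;
    int bestCount = 0;
    for (Map.Entry<String, Integer> e : countByEndpoint(lines).entrySet()) {
      if (e.getValue() > bestCount) {
        best = e.getKey();
        bestCount = e.getValue();
      }
    }
    return best;
  }

  public static void main(String[] args) {
    List<String> sample = List.of(
        "allowed=true\tugi=alice (auth:SIMPLE)\tip=/10.0.0.5:40001\tcmd=getfileinfo\tsrc=/a",
        "allowed=true\tugi=alice (auth:SIMPLE)\tip=/10.0.0.5:40002\tcmd=listStatus\tsrc=/b",
        "allowed=true\tugi=alice (auth:SIMPLE)\tip=/10.0.0.5:40002\tcmd=getfileinfo\tsrc=/c");
    System.out.println(busiestEndpoint(sample)); // 10.0.0.5:40002
  }
}
```

With only IP and UGI, all three entries above would collapse into one client; the port separates the two connections. On the client host, the port can then be mapped to its owning process, for example with `ss -tnp`.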

[jira] [Resolved] (HDFS-14240) blockReport test in NNThroughputBenchmark throws ArrayIndexOutOfBoundsException

[ https://issues.apache.org/jira/browse/HDFS-14240?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Akira Ajisaka resolved HDFS-14240.
--
Assignee: (was: Ranith Sardar)
Resolution: Duplicate

Closing as duplicate.

> blockReport test in NNThroughputBenchmark throws ArrayIndexOutOfBoundsException
> ---
>
> Key: HDFS-14240
> URL: https://issues.apache.org/jira/browse/HDFS-14240
> Project: Hadoop HDFS
> Issue Type: Bug
> Reporter: Shen Yinjie
> Priority: Major
> Attachments: screenshot-1.png
>
>
> When I run a blockReport test with NNThroughputBenchmark, BlockReportStats.addBlocks() throws ArrayIndexOutOfBoundsException.
> Digging into the code:
> {code:java}
> for (DatanodeInfo dnInfo : loc.getLocations()) {
>   int dnIdx = dnInfo.getXferPort() - 1;
>   datanodes[dnIdx].addBlock(loc.getBlock().getLocalBlock());
> }
> {code}
>
> The problem is here: the length of the datanodes array is determined by the "-datanodes" or "-threads" argument, but dnIdx is derived from dnInfo.getXferPort(), which is a random port, so the index can fall far outside the array.
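The analysis above can be illustrated with a small, self-contained sketch. `FakeDatanode`, `naiveIndex`, and `buildAddrToIndex` are hypothetical names, not NNThroughputBenchmark code, and the address-to-slot map is only one possible way to avoid the out-of-bounds index, not the fix adopted upstream. The `- 1` arithmetic appears to assume that transfer ports are numbered 1..N, which does not hold once ports are arbitrary.

```java
import java.util.HashMap;
import java.util.Map;

public class DatanodeIndexDemo {
  /** Hypothetical stand-in for DatanodeInfo: just a host and a transfer port. */
  static final class FakeDatanode {
    final String host;
    final int xferPort;

    FakeDatanode(String host, int xferPort) {
      this.host = host;
      this.xferPort = xferPort;
    }

    String xferAddr() {
      return host + ":" + xferPort;
    }
  }

  /** The arithmetic from the report: only a valid index if ports are numbered 1..N. */
  static int naiveIndex(FakeDatanode dn) {
    return dn.xferPort - 1;
  }

  /** Hypothetical alternative: record each datanode's array slot up front. */
  static Map<String, Integer> buildAddrToIndex(FakeDatanode[] datanodes) {
    Map<String, Integer> addrToIndex = new HashMap<>();
    for (int i = 0; i < datanodes.length; i++) {
      addrToIndex.put(datanodes[i].xferAddr(), i);
    }
    return addrToIndex;
  }

  public static void main(String[] args) {
    // Three datanodes, as if started with "-datanodes 3", listening on arbitrary ports.
    FakeDatanode[] datanodes = {
        new FakeDatanode("127.0.0.1", 41234),
        new FakeDatanode("127.0.0.1", 50010),
        new FakeDatanode("127.0.0.1", 38765)};
    int[] blocksPerNode = new int[datanodes.length];
    FakeDatanode located = datanodes[1]; // a replica location returned for a block

    int naive = naiveIndex(located); // 50009, far outside [0, 3)
    System.out.println("naive index " + naive + " in bounds: "
        + (naive >= 0 && naive < datanodes.length));

    int slot = buildAddrToIndex(datanodes).get(located.xferAddr());
    blocksPerNode[slot]++; // stays within bounds
    System.out.println("slot " + slot + " now has " + blocksPerNode[slot] + " block(s)");
  }
}
```

Keying the map by the full transfer address rather than the port alone keeps the lookup correct even if two simulated datanodes on different hosts share a port number.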


[jira] [Comment Edited] (HDFS-16292) The DFS Input Stream is waiting to be read

[ https://issues.apache.org/jira/browse/HDFS-16292?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17437082#comment-17437082 ]

tomscut edited comment on HDFS-16292 at 11/2/21, 12:57 AM:
---

Hi [~weichiu], do you mean this issue, HDFS-10223?

was (Author: tomscut):
Hi [~weichiu], do you mean this issue [HDFS-10223|https://issues.apache.org/jira/browse/HDFS-10223]?

> The DFS Input Stream is waiting to be read
> --
>
> Key: HDFS-16292
> URL: https://issues.apache.org/jira/browse/HDFS-16292
> Project: Hadoop HDFS
> Issue Type: Improvement
> Components: datanode
> Affects Versions: 2.5.2
> Reporter: Hualong Zhang
> Priority: Minor
> Attachments: HDFS-16292.path, image-2021-11-01-18-36-54-329.png, image-2021-11-02-08-54-27-273.png
>
>
> The input stream keeps waiting. The problem seems to be that BlockReaderPeer#peer does not set a ReadTimeout or WriteTimeout. We can solve this problem by setting the timeouts in BlockReaderFactory#nextTcpPeer.
> Jstack as follows:
> !image-2021-11-01-18-36-54-329.png!
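The effect of a missing read timeout can be sketched with plain `java.net.Socket`, used here only as a stand-in: Hadoop's peer and `SocketInputStream` classes are not involved, and `ReadTimeoutDemo`/`readTimesOut` are hypothetical names. A local server accepts the connection but never writes; with a positive `setSoTimeout` value the blocked `read()` fails with `SocketTimeoutException`, whereas a timeout of 0 would block indefinitely, much like the hang described in the report.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.net.SocketTimeoutException;

public class ReadTimeoutDemo {
  /**
   * Opens a loopback connection to a server that never sends any data and
   * performs a single blocking read with the given timeout in milliseconds.
   * Returns true if the read gave up with SocketTimeoutException.
   */
  static boolean readTimesOut(int timeoutMs) {
    InetAddress loopback = InetAddress.getLoopbackAddress();
    try (ServerSocket server = new ServerSocket(0, 1, loopback);
         Socket client = new Socket(loopback, server.getLocalPort());
         Socket silentPeer = server.accept()) {
      // setSoTimeout(0) would mean "wait forever"; a positive value bounds the read.
      client.setSoTimeout(timeoutMs);
      InputStream in = client.getInputStream();
      try {
        in.read(); // the peer never writes, so this can only time out
        return false;
      } catch (SocketTimeoutException e) {
        return true;
      }
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }
  }

  public static void main(String[] args) {
    System.out.println("read timed out: " + readTimesOut(200));
  }
}
```

In the proposed fix the equivalent step would be applying the configured read and write timeouts to the peer right after it is created, so a stalled DataNode turns into a retryable error instead of a stuck reader thread.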

[jira] [Comment Edited] (HDFS-16292) The DFS Input Stream is waiting to be read

[ https://issues.apache.org/jira/browse/HDFS-16292?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17437084#comment-17437084 ]

tomscut edited comment on HDFS-16292 at 11/2/21, 12:57 AM:
---

Coincidentally, our cluster also hit this problem yesterday, even though we run version 3.1.0, which already includes HDFS-10223.

Client stack:

!image-2021-11-02-08-54-27-273.png|width=607,height=341!

{code:java}
"Executor task launch worker for task 2690" #47 daemon prio=5 os_prio=0 tid=0x7f3730286800 nid=0x1abc4 runnable [0x7f37109ed000]
   java.lang.Thread.State: RUNNABLE
	at sun.nio.ch.EPollArrayWrapper.epollWait(Native Method)
	at sun.nio.ch.EPollArrayWrapper.poll(EPollArrayWrapper.java:269)
	at sun.nio.ch.EPollSelectorImpl.doSelect(EPollSelectorImpl.java:79)
	at sun.nio.ch.SelectorImpl.lockAndDoSelect(SelectorImpl.java:86)
	- locked <0x0006cb9cf3a0> (a sun.nio.ch.Util$2)
	- locked <0x0006cb9cf390> (a java.util.Collections$UnmodifiableSet)
	- locked <0x0006cb9cf168> (a sun.nio.ch.EPollSelectorImpl)
	at sun.nio.ch.SelectorImpl.select(SelectorImpl.java:97)
	at org.apache.hadoop.net.SocketIOWithTimeout$SelectorPool.select(SocketIOWithTimeout.java:335)
	at org.apache.hadoop.net.SocketIOWithTimeout.doIO(SocketIOWithTimeout.java:157)
	at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:161)
	at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:131)
	at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:118)
	at java.io.FilterInputStream.read(FilterInputStream.java:83)
	at org.apache.hadoop.hdfs.protocolPB.PBHelperClient.vintPrefixed(PBHelperClient.java:547)
	at org.apache.hadoop.hdfs.client.impl.BlockReaderRemote.newBlockReader(BlockReaderRemote.java:407)
	at org.apache.hadoop.hdfs.client.impl.BlockReaderFactory.getRemoteBlockReader(BlockReaderFactory.java:853)
	at org.apache.hadoop.hdfs.client.impl.BlockReaderFactory.getRemoteBlockReaderFromTcp(BlockReaderFactory.java:749)
	at org.apache.hadoop.hdfs.client.impl.BlockReaderFactory.build(BlockReaderFactory.java:379)
	at org.apache.hadoop.hdfs.DFSInputStream.getBlockReader(DFSInputStream.java:669)
	at org.apache.hadoop.hdfs.DFSInputStream.actualGetFromOneDataNode(DFSInputStream.java:1117)
	at org.apache.hadoop.hdfs.DFSInputStream.fetchBlockByteRange(DFSInputStream.java:1069)
	at org.apache.hadoop.hdfs.DFSInputStream.pread(DFSInputStream.java:1501)
	at org.apache.hadoop.hdfs.DFSInputStream.read(DFSInputStream.java:1465)
	at org.apache.hadoop.fs.FSInputStream.readFully(FSInputStream.java:121)
	at org.apache.hadoop.fs.FSDataInputStream.readFully(FSDataInputStream.java:111)
	at org.apache.orc.impl.RecordReaderUtils.readDiskRanges(RecordReaderUtils.java:566)
	at org.apache.orc.impl.RecordReaderUtils$DefaultDataReader.readRowIndex(RecordReaderUtils.java:219)
	at org.apache.orc.impl.RecordReaderImpl.readRowIndex(RecordReaderImpl.java:1419)
	at org.apache.orc.impl.RecordReaderImpl.readRowIndex(RecordReaderImpl.java:1402)
	at org.apache.orc.impl.RecordReaderImpl.pickRowGroups(RecordReaderImpl.java:1056)
	at org.apache.orc.impl.RecordReaderImpl.readStripe(RecordReaderImpl.java:1087)
	at org.apache.orc.impl.RecordReaderImpl.advanceStripe(RecordReaderImpl.java:1254)
	at org.apache.orc.impl.RecordReaderImpl.advanceToNextRow(RecordReaderImpl.java:1289)
	at org.apache.orc.impl.RecordReaderImpl.nextBatch(RecordReaderImpl.java:1325)
	at org.apache.spark.sql.execution.datasources.orc.OrcColumnarBatchReader.nextBatch(OrcColumnarBatchReader.java:196)
	at org.apache.spark.sql.execution.datasources.orc.OrcColumnarBatchReader.nextKeyValue(OrcColumnarBatchReader.java:99)
	at org.apache.spark.sql.execution.datasources.RecordReaderIterator.hasNext(RecordReaderIterator.scala:39)
	at org.apache.spark.sql.execution.datasources.FileScanRDD$$anon$1$$anon$2.getNext(FileScanRDD.scala:145)
	at org.apache.spark.util.NextIterator.hasNext(NextIterator.scala:73)
	at org.apache.spark.sql.execution.datasources.FileScanRDD$$anon$1.hasNext(FileScanRDD.scala:93)
	at org.apache.spark.sql.execution.FileSourceScanExec$$anon$1.hasNext(DataSourceScanExec.scala:492)
	at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage5.columnartorow_nextBatch_0$(Unknown Source)
	at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage5.agg_doAggregateWithKeys_0$(Unknown Source)
	at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage5.processNext(Unknown Source)
	at org.apache.spark.sql.execution.BufferedRowIterator.hasNext(BufferedRowIterator.java:43)
	at org.apache.spark.sql.execution.WholeStageCodegenExec$$anon$1.hasNext(WholeStageCodegenExec.scala:729)

[jira] [Comment Edited] (HDFS-16292) The DFS Input Stream is waiting to be read

[ https://issues.apache.org/jira/browse/HDFS-16292?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17437084#comment-17437084 ]

tomscut edited comment on HDFS-16292 at 11/2/21, 12:56 AM:
---

Coincidentally, our cluster also hit this problem yesterday, even though we run version 3.1.0 (in which [HDFS-10223|https://issues.apache.org/jira/browse/HDFS-10223] has already been merged).


[jira] [Comment Edited] (HDFS-16292) The DFS Input Stream is waiting to be read

[ https://issues.apache.org/jira/browse/HDFS-16292?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17437084#comment-17437084 ]

tomscut edited comment on HDFS-16292 at 11/2/21, 12:54 AM:
---

Coincidentally, our cluster also encountered this problem yesterday, but our version is 3.1.0.


[jira] [Comment Edited] (HDFS-16292) The DFS Input Stream is waiting to be read

[ https://issues.apache.org/jira/browse/HDFS-16292?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17437084#comment-17437084 ]

tomscut edited comment on HDFS-16292 at 11/2/21, 12:53 AM:
---

Coincidentally, our cluster also encountered this problem yesterday, but our version is 3.1.0.


[jira] [Comment Edited] (HDFS-16292) The DFS Input Stream is waiting to be read

[ https://issues.apache.org/jira/browse/HDFS-16292?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17437084#comment-17437084 ]

tomscut edited comment on HDFS-16292 at 11/2/21, 12:52 AM:
---

Coincidentally, our cluster also encountered this problem yesterday, but our version is 3.1.0.


[jira] [Commented] (HDFS-16292) The DFS Input Stream is waiting to be read

[ https://issues.apache.org/jira/browse/HDFS-16292?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17437084#comment-17437084 ]

tomscut commented on HDFS-16292:

Coincidentally, our cluster also encountered this problem yesterday, but our version is 3.1.0.

Client stack:

{code:java}

"Executor task launch worker for task 2690" #47 daemon prio=5 os_prio=0

tid=0x7f3730286800 nid=0x1abc4 runnable [0x7f37109ed000]
   java.lang.Thread.State: RUNNABLE
	at sun.nio.ch.EPollArrayWrapper.epollWait(Native Method)
	at sun.nio.ch.EPollArrayWrapper.poll(EPollArrayWrapper.java:269)
	at sun.nio.ch.EPollSelectorImpl.doSelect(EPollSelectorImpl.java:79)
	at sun.nio.ch.SelectorImpl.lockAndDoSelect(SelectorImpl.java:86)
	- locked <0x0006cb9cf3a0> (a sun.nio.ch.Util$2)
	- locked <0x0006cb9cf390> (a java.util.Collections$UnmodifiableSet)
	- locked <0x0006cb9cf168> (a sun.nio.ch.EPollSelectorImpl)
	at sun.nio.ch.SelectorImpl.select(SelectorImpl.java:97)
	at org.apache.hadoop.net.SocketIOWithTimeout$SelectorPool.select(SocketIOWithTimeout.java:335)
	at org.apache.hadoop.net.SocketIOWithTimeout.doIO(SocketIOWithTimeout.java:157)
	at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:161)
	at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:131)
	at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:118)
	at java.io.FilterInputStream.read(FilterInputStream.java:83)
	at org.apache.hadoop.hdfs.protocolPB.PBHelperClient.vintPrefixed(PBHelperClient.java:547)
	at org.apache.hadoop.hdfs.client.impl.BlockReaderRemote.newBlockReader(BlockReaderRemote.java:407)
	at org.apache.hadoop.hdfs.client.impl.BlockReaderFactory.getRemoteBlockReader(BlockReaderFactory.java:853)
	at org.apache.hadoop.hdfs.client.impl.BlockReaderFactory.getRemoteBlockReaderFromTcp(BlockReaderFactory.java:749)
	at org.apache.hadoop.hdfs.client.impl.BlockReaderFactory.build(BlockReaderFactory.java:379)
	at org.apache.hadoop.hdfs.DFSInputStream.getBlockReader(DFSInputStream.java:669)
	at org.apache.hadoop.hdfs.DFSInputStream.actualGetFromOneDataNode(DFSInputStream.java:1117)
	at org.apache.hadoop.hdfs.DFSInputStream.fetchBlockByteRange(DFSInputStream.java:1069)
	at org.apache.hadoop.hdfs.DFSInputStream.pread(DFSInputStream.java:1501)
	at org.apache.hadoop.hdfs.DFSInputStream.read(DFSInputStream.java:1465)
	at org.apache.hadoop.fs.FSInputStream.readFully(FSInputStream.java:121)
	at org.apache.hadoop.fs.FSDataInputStream.readFully(FSDataInputStream.java:111)
	at org.apache.orc.impl.RecordReaderUtils.readDiskRanges(RecordReaderUtils.java:566)
	at org.apache.orc.impl.RecordReaderUtils$DefaultDataReader.readRowIndex(RecordReaderUtils.java:219)
	at org.apache.orc.impl.RecordReaderImpl.readRowIndex(RecordReaderImpl.java:1419)
	at org.apache.orc.impl.RecordReaderImpl.readRowIndex(RecordReaderImpl.java:1402)
	at org.apache.orc.impl.RecordReaderImpl.pickRowGroups(RecordReaderImpl.java:1056)
	at org.apache.orc.impl.RecordReaderImpl.readStripe(RecordReaderImpl.java:1087)
	at org.apache.orc.impl.RecordReaderImpl.advanceStripe(RecordReaderImpl.java:1254)
	at org.apache.orc.impl.RecordReaderImpl.advanceToNextRow(RecordReaderImpl.java:1289)
	at org.apache.orc.impl.RecordReaderImpl.nextBatch(RecordReaderImpl.java:1325)
	at org.apache.spark.sql.execution.datasources.orc.OrcColumnarBatchReader.nextBatch(OrcColumnarBatchReader.java:196)
	at org.apache.spark.sql.execution.datasources.orc.OrcColumnarBatchReader.nextKeyValue(OrcColumnarBatchReader.java:99)
	at org.apache.spark.sql.execution.datasources.RecordReaderIterator.hasNext(RecordReaderIterator.scala:39)
	at org.apache.spark.sql.execution.datasources.FileScanRDD$$anon$1$$anon$2.getNext(FileScanRDD.scala:145)
	at org.apache.spark.util.NextIterator.hasNext(NextIterator.scala:73)
	at org.apache.spark.sql.execution.datasources.FileScanRDD$$anon$1.hasNext(FileScanRDD.scala:93)
	at org.apache.spark.sql.execution.FileSourceScanExec$$anon$1.hasNext(DataSourceScanExec.scala:492)
	at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage5.columnartorow_nextBatch_0$(Unknown Source)
	at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage5.agg_doAggregateWithKeys_0$(Unknown Source)
	at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage5.processNext(Unknown Source)
	at org.apache.spark.sql.execution.BufferedRowIterator.hasNext(BufferedRowIterator.java:43)
	at org.apache.spark.sql.execution.WholeStageCodegenExec$$anon$1.hasNext(WholeStageCodegenExec.scala:729)
	at scala.collection.Iterator$$anon$10.hasNext(Iterator.scala:458)
	at org.apache.spark.shuffle.sort.UnsafeShuffleWriter.write(UnsafeShuffleWriter.java:181)
	at

[jira] [Commented] (HDFS-16292) The DFS Input Stream is waiting to be read

[ https://issues.apache.org/jira/browse/HDFS-16292?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17437082#comment-17437082 ]

tomscut commented on HDFS-16292:

Hi [~weichiu], do you mean this issue: [HDFS-10223|https://issues.apache.org/jira/browse/HDFS-10223]?

> The DFS Input Stream is waiting to be read
> --
>
> Key: HDFS-16292
> URL: https://issues.apache.org/jira/browse/HDFS-16292
> Project: Hadoop HDFS
> Issue Type: Improvement
> Components: datanode
> Affects Versions: 2.5.2
> Reporter: Hualong Zhang
> Priority: Minor
> Attachments: HDFS-16292.path, image-2021-11-01-18-36-54-329.png
>
>
> The input stream has been waiting. The problem seems to be that
> BlockReaderPeer#peer does not set ReadTimeout and WriteTimeout. We can solve
> this problem by setting the timeout in BlockReaderFactory#nextTcpPeer.
> Jstack as follows:
> !image-2021-11-01-18-36-54-329.png!

--
This message was sent by Atlassian Jira
(v8.3.4#803005)

-
To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org
For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org
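HDFS-16292 attributes the hang to BlockReaderPeer#peer having no read or write timeout. The sketch below illustrates only the underlying socket behaviour, using plain java.net sockets rather than Hadoop's peer classes; ReadTimeoutDemo and readTimesOut are hypothetical names. A local "server" accepts the connection but never sends anything, standing in for an unresponsive datanode. With the JDK default SO_TIMEOUT of 0 the client's read() would block forever; after setSoTimeout the same read fails with SocketTimeoutException once the interval elapses.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.net.SocketTimeoutException;

public class ReadTimeoutDemo {

    /**
     * Connects to a local server that accepts but never writes, then reads.
     * Returns true if the read gave up with SocketTimeoutException.
     */
    static boolean readTimesOut(int readTimeoutMs) {
        try (ServerSocket server = new ServerSocket(0, 1, InetAddress.getLoopbackAddress());
             Socket client = new Socket(server.getInetAddress(), server.getLocalPort());
             Socket silentPeer = server.accept()) {
            // SO_TIMEOUT defaults to 0 (wait forever); without this call the
            // read below would block indefinitely, like a hung block reader.
            client.setSoTimeout(readTimeoutMs);
            try {
                client.getInputStream().read();
                return false; // data or EOF arrived (not expected here)
            } catch (SocketTimeoutException e) {
                return true;
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("read timed out: " + readTimesOut(200));
    }
}
```

In the HDFS client the equivalent would be applying a timeout to the peer when it is created, as the reporter suggests for BlockReaderFactory#nextTcpPeer; how that is wired up there is not shown in this sketch.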

[jira] [Commented] (HDFS-6994) libhdfs3 - A native C/C++ HDFS client

[ https://issues.apache.org/jira/browse/HDFS-6994?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17437043#comment-17437043 ]

Clay B. commented on HDFS-6994:
---

Hi [~wangzw], I updated this JIRA's description to have a working URL to libhdfs3 for those looking. Please revert my changes if that is undesirable.

> libhdfs3 - A native C/C++ HDFS client
> -
>
> Key: HDFS-6994
> URL: https://issues.apache.org/jira/browse/HDFS-6994
> Project: Hadoop HDFS
> Issue Type: New Feature
> Components: hdfs-client
> Reporter: Zhanwei Wang
> Assignee: Zhanwei Wang
> Priority: Major
> Attachments: HDFS-6994-rpc-8.patch, HDFS-6994.patch
>
>
> Hi All
> I just got the permission to open source libhdfs3, which is a native C/C++
> HDFS client based on Hadoop RPC protocol and HDFS Data Transfer Protocol.
> libhdfs3 provide the libhdfs style C interface and a C++ interface. Support
> both HADOOP RPC version 8 and 9. Support Namenode HA and Kerberos
> authentication.
> libhdfs3 is currently used by Apache HAWQ at:
> https://github.com/apache/hawq/tree/master/depends/libhdfs3
> I'd like to integrate libhdfs3 into HDFS source code to benefit others.
> The libhdfs3 code originally from Pivotal was available on github at:
> https://github.com/Pivotal-Data-Attic/pivotalrd-libhdfs3
> http://pivotal-data-attic.github.io/pivotalrd-libhdfs3/

[jira] [Updated] (HDFS-6994) libhdfs3 - A native C/C++ HDFS client

[ https://issues.apache.org/jira/browse/HDFS-6994?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Clay B. updated HDFS-6994:
--
Description:
Hi All
I just got the permission to open source libhdfs3, which is a native C/C++ HDFS client based on Hadoop RPC protocol and HDFS Data Transfer Protocol.
libhdfs3 provide the libhdfs style C interface and a C++ interface. Support both HADOOP RPC version 8 and 9. Support Namenode HA and Kerberos authentication.
libhdfs3 is currently used by Apache HAWQ at:
https://github.com/apache/hawq/tree/master/depends/libhdfs3
I'd like to integrate libhdfs3 into HDFS source code to benefit others.
The libhdfs3 code originally from Pivotal was available on github at:
https://github.com/Pivotal-Data-Attic/pivotalrd-libhdfs3
http://pivotal-data-attic.github.io/pivotalrd-libhdfs3/

was:
Hi All
I just got the permission to open source libhdfs3, which is a native C/C++ HDFS client based on Hadoop RPC protocol and HDFS Data Transfer Protocol.
libhdfs3 provide the libhdfs style C interface and a C++ interface. Support both HADOOP RPC version 8 and 9. Support Namenode HA and Kerberos authentication.
libhdfs3 is currently used by HAWQ of Pivotal
I'd like to integrate libhdfs3 into HDFS source code to benefit others.
You can find libhdfs3 code from github
https://github.com/Pivotal-Data-Attic/pivotalrd-libhdfs3
http://pivotal-data-attic.github.io/pivotalrd-libhdfs3/

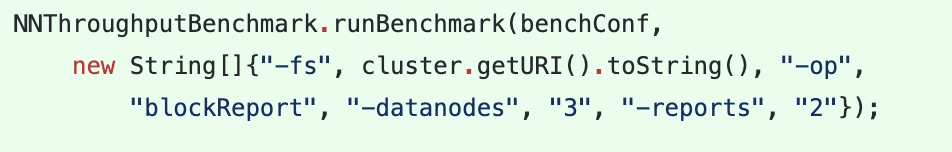

[jira] [Updated] (HDFS-16269) [Fix] Improve NNThroughputBenchmark#blockReport operation

[

https://issues.apache.org/jira/browse/HDFS-16269?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Akira Ajisaka updated HDFS-16269:

-

Fix Version/s: 3.3.2

Backported to branch-3.3.

> [Fix] Improve NNThroughputBenchmark#blockReport operation

> -

>

> Key: HDFS-16269

> URL: https://issues.apache.org/jira/browse/HDFS-16269

> Project: Hadoop HDFS

> Issue Type: Bug

> Components: benchmarks, namenode

>Affects Versions: 2.9.2

>Reporter: JiangHua Zhu

>Assignee: JiangHua Zhu

>Priority: Major

> Labels: pull-request-available

> Fix For: 3.4.0, 3.3.2

>

> Time Spent: 5h

> Remaining Estimate: 0h

>

> When using NNThroughputBenchmark to verify blockReport, the benchmark fails
> with the exception below.
> Command used:
> ./bin/hadoop org.apache.hadoop.hdfs.server.namenode.NNThroughputBenchmark -fs -op blockReport -datanodes 3 -reports 1
> The exception:
> 21/10/12 14:35:18 INFO namenode.NNThroughputBenchmark: Starting benchmark: blockReport
> 21/10/12 14:35:19 INFO namenode.NNThroughputBenchmark: Creating 10 files with 10 blocks each.
> 21/10/12 14:35:19 ERROR namenode.NNThroughputBenchmark:
> java.lang.ArrayIndexOutOfBoundsException: 50009
>     at org.apache.hadoop.hdfs.server.namenode.NNThroughputBenchmark$BlockReportStats.addBlocks(NNThroughputBenchmark.java:1161)
>     at org.apache.hadoop.hdfs.server.namenode.NNThroughputBenchmark$BlockReportStats.generateInputs(NNThroughputBenchmark.java:1143)
>     at org.apache.hadoop.hdfs.server.namenode.NNThroughputBenchmark$OperationStatsBase.benchmark(NNThroughputBenchmark.java:257)
>     at org.apache.hadoop.hdfs.server.namenode.NNThroughputBenchmark.run(NNThroughputBenchmark.java:1528)
>     at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)
>     at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:90)
>     at org.apache.hadoop.hdfs.server.namenode.NNThroughputBenchmark.runBenchmark(NNThroughputBenchmark.java:1430)
>     at org.apache.hadoop.hdfs.server.namenode.NNThroughputBenchmark.main(NNThroughputBenchmark.java:1550)
> Exception in thread "main" java.lang.ArrayIndexOutOfBoundsException: 50009
>     at org.apache.hadoop.hdfs.server.namenode.NNThroughputBenchmark$BlockReportStats.addBlocks(NNThroughputBenchmark.java:1161)
>     at org.apache.hadoop.hdfs.server.namenode.NNThroughputBenchmark$BlockReportStats.generateInputs(NNThroughputBenchmark.java:1143)
>     at org.apache.hadoop.hdfs.server.namenode.NNThroughputBenchmark$OperationStatsBase.benchmark(NNThroughputBenchmark.java:257)
>     at org.apache.hadoop.hdfs.server.namenode.NNThroughputBenchmark.run(NNThroughputBenchmark.java:1528)
>     at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)
>     at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:90)
>     at org.apache.hadoop.hdfs.server.namenode.NNThroughputBenchmark.runBenchmark(NNThroughputBenchmark.java:1430)
>     at org.apache.hadoop.hdfs.server.namenode.NNThroughputBenchmark.main(NNThroughputBenchmark.java:1550)
> After checking the code, I found that the problem is here:
> private ExtendedBlock addBlocks(String fileName, String clientName)
>     throws IOException {
>   for (DatanodeInfo dnInfo : loc.getLocations()) {
>     int dnIdx = dnInfo.getXferPort() - 1;
>     datanodes[dnIdx].addBlock(loc.getBlock().getLocalBlock());
>   }
> }
> This shows that dnInfo.getXferPort() returns a port number, which should not
> be used as an array index.
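The ArrayIndexOutOfBoundsException: 50009 fits this diagnosis: port - 1 = 50009 means getXferPort() returned 50010, so a three-element datanode array was indexed with a port number. One way to avoid the arithmetic, sketched here under stated assumptions, is to build an explicit map from each simulated datanode's transfer address ("host:port") to its array index and look the index up. DatanodeIndexLookup and the 127.0.0.1:5001x addresses are hypothetical, and this is not necessarily how the fix committed for HDFS-16269 works.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class DatanodeIndexLookup {
    // Hypothetical: transfer address ("host:port") -> index into the datanodes array.
    private final Map<String, Integer> indexByXferAddr = new HashMap<>();

    /** xferAddrs.get(i) is assumed to be the transfer address of datanodes[i]. */
    public DatanodeIndexLookup(List<String> xferAddrs) {
        for (int i = 0; i < xferAddrs.size(); i++) {
            Integer previous = indexByXferAddr.put(xferAddrs.get(i), i);
            if (previous != null) {
                throw new IllegalArgumentException("Duplicate address: " + xferAddrs.get(i));
            }
        }
    }

    /** Returns the array index for a transfer address, failing loudly if it is unknown. */
    public int indexOf(String xferAddr) {
        Integer idx = indexByXferAddr.get(xferAddr);
        if (idx == null) {
            throw new IllegalArgumentException("Unknown datanode: " + xferAddr);
        }
        return idx;
    }

    public static void main(String[] args) {
        List<String> addrs = Arrays.asList("127.0.0.1:50010", "127.0.0.1:50011", "127.0.0.1:50012");
        DatanodeIndexLookup lookup = new DatanodeIndexLookup(addrs);
        int port = 50010;
        // The buggy arithmetic: a port number used as an array index.
        System.out.println("port - 1 = " + (port - 1) + ", array length = " + addrs.size());
        // The lookup yields a valid index instead.
        System.out.println("index = " + lookup.indexOf("127.0.0.1:" + port));
    }
}
```

Failing with a clear message on an unknown address is a deliberate choice: a silent fallback index would hide the kind of mismatch that caused this bug.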


[jira] [Work logged] (HDFS-16291) Make the comment of INode#ReclaimContext more standardized

[ https://issues.apache.org/jira/browse/HDFS-16291?focusedWorklogId=672744=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-672744 ]

ASF GitHub Bot logged work on HDFS-16291:
-
Author: ASF GitHub Bot
Created on: 01/Nov/21 16:15
Start Date: 01/Nov/21 16:15
Worklog Time Spent: 10m
Work Description: jianghuazhu commented on pull request #3602:
URL: https://github.com/apache/hadoop/pull/3602#issuecomment-956373649

Thank you @tomscut for your comments and reviews.

--
This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For queries about this service, please contact Infrastructure at:
us...@infra.apache.org

Issue Time Tracking
---
Worklog Id: (was: 672744)
Time Spent: 40m (was: 0.5h)

> Make the comment of INode#ReclaimContext more standardized
> --
>
> Key: HDFS-16291
> URL: https://issues.apache.org/jira/browse/HDFS-16291
> Project: Hadoop HDFS
> Issue Type: Improvement
> Components: documentation, namenode
> Affects Versions: 3.4.0
> Reporter: JiangHua Zhu
> Assignee: JiangHua Zhu
> Priority: Minor
> Labels: pull-request-available
> Attachments: image-2021-10-31-20-25-08-379.png
>
> Time Spent: 40m
> Remaining Estimate: 0h
>
> In the INode#ReclaimContext class, some comments are not standardized enough.
> E.g.:
> !image-2021-10-31-20-25-08-379.png!
> We should make these comments more standardized, which will make them more readable.

[jira] [Comment Edited] (HDFS-15413) DFSStripedInputStream throws exception when datanodes close idle connections

[

https://issues.apache.org/jira/browse/HDFS-15413?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17436908#comment-17436908

]

Jeff Kubina edited comment on HDFS-15413 at 11/1/21, 4:11 PM:

--

Is this issue being worked or was it resolved already?

was (Author: jmkubin):

Is this issue being worked to was it resolved already?

> DFSStripedInputStream throws exception when datanodes close idle connections

>

>

> Key: HDFS-15413

> URL: https://issues.apache.org/jira/browse/HDFS-15413

> Project: Hadoop HDFS

> Issue Type: Bug

> Components: ec, erasure-coding, hdfs-client

>Affects Versions: 3.1.3

> Environment: - Hadoop 3.1.3

> - erasure coding with ISA-L and RS-3-2-1024k scheme

> - running in kubernetes

> - dfs.client.socket-timeout = 1

> - dfs.datanode.socket.write.timeout = 1

>Reporter: Andrey Elenskiy

>Priority: Critical

> Attachments: out.log

>

>

> We've run into an issue with compactions failing in HBase when erasure coding
> is enabled on a table directory. After digging further, I was able to narrow
> it down to the seek + read logic and to reproduce the issue with the HDFS
> client alone:

> {code:java}
> import org.apache.hadoop.conf.Configuration;
> import org.apache.hadoop.fs.FSDataInputStream;
> import org.apache.hadoop.fs.FileSystem;
> import org.apache.hadoop.fs.Path;
>
> public class ReaderRaw {
>   public static void main(final String[] args) throws Exception {
>     Path p = new Path(args[0]);
>     int bufLen = Integer.parseInt(args[1]);
>     int sleepDuration = Integer.parseInt(args[2]);
>     int countBeforeSleep = Integer.parseInt(args[3]);
>     int countAfterSleep = Integer.parseInt(args[4]);
>     Configuration conf = new Configuration();
>     byte[] buf = new byte[bufLen];
>     long readTotal = 0;
>     int count = 0;
>     // try-with-resources closes the stream even when a read fails.
>     try (FSDataInputStream istream = FileSystem.get(conf).open(p)) {
>       while (true) {
>         // Re-seek before each buffer-sized read (the seek + read pattern).
>         istream.seek(readTotal);
>         int bytesRemaining = bufLen;
>         int bufOffset = 0;
>         while (bytesRemaining > 0) {
>           // Fill only the unread tail of the buffer.
>           int nread = istream.read(buf, bufOffset, bytesRemaining);
>           if (nread < 0) {
>             throw new Exception("nread is less than zero");
>           }
>           readTotal += nread;
>           bufOffset += nread;
>           bytesRemaining -= nread;
>         }
>         count++;
>         if (count == countBeforeSleep) {
>           // Stay idle longer than dfs.client.socket-timeout so the datanodes drop the connection.
>           System.out.println("sleeping for " + sleepDuration + " milliseconds");
>           Thread.sleep(sleepDuration);
>           System.out.println("resuming");
>         }
>         if (count == countBeforeSleep + countAfterSleep) {
>           System.out.println("done");
>           break;
>         }
>       }
>     } catch (Exception e) {
>       System.out.println("exception on read " + count + " read total " + readTotal);
>       throw e;
>     }
>   }
> }
> {code}

> The issue appears to be that datanodes close the EC client's connection if it
> doesn't fetch the next packet for longer than dfs.client.socket-timeout. The
> EC client doesn't retry; instead it assumes that those datanodes went away,
> resulting in a "missing blocks" exception.

> I was able to consistently reproduce with the following arguments:

> {noformat}

> bufLen = 100 (just below 1MB which is the size of the stripe)

> sleepDuration = (dfs.client.socket-timeout + 1) * 1000 (in our case 11000)

> countBeforeSleep = 1

> countAfterSleep = 7

> {noformat}

> I've attached the entire log output of running the snippet above against an
> erasure-coded file with the RS-3-2-1024k policy. Here are the datanode logs
> showing the client being disconnected:

> datanode 1:

> {noformat}

> 2020-06-15 19:06:20,697 INFO datanode.DataNode: Likely the client has stopped reading, disconnecting it (datanode-v11-0-hadoop.hadoop:9866:DataXceiver error processing READ_BLOCK operation src: /10.128.23.40:53748 dst: /10.128.14.46:9866); java.net.SocketTimeoutException: 1 millis timeout while waiting for channel to be ready for write. ch : java.nio.channels.SocketChannel[connected local=/10.128.14.46:9866 remote=/10.128.23.40:53748]

> {noformat}

> datanode 2:

> {noformat}

> 2020-06-15 19:06:20,341 INFO datanode.DataNode: Likely the client has stopped reading, disconnecting it (datanode-v11-1-hadoop.hadoop:9866:DataXceiver error processing READ_BLOCK operation src: /10.128.23.40:48772 dst: /10.128.9.42:9866); java.net.SocketTimeoutException: 1 millis timeout while waiting for channel to be ready for write. ch :

>
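The HDFS-15413 report says the erasure-coding client does not retry when a datanode closes an idle connection and instead treats that datanode as gone. A minimal sketch of the missing behaviour, assuming a failed read can be handled by reconnecting and retrying at the same offset a bounded number of times: RetryingReader, Source and SourceFactory are hypothetical stand-ins, not DFSStripedInputStream internals, and a real fix would also have to tell a dropped idle connection apart from a datanode that is actually dead.

```java
import java.io.IOException;

public class RetryingReader {

    /** Hypothetical positional source; stands in for one datanode block reader. */
    public interface Source {
        int read(long position, byte[] buf, int off, int len) throws IOException;
    }

    /** Hypothetical factory that opens a fresh connection to the same datanode. */
    public interface SourceFactory {
        Source open() throws IOException;
    }

    private final SourceFactory factory;
    private final int maxRetries;
    private Source current;

    public RetryingReader(SourceFactory factory, int maxRetries) throws IOException {
        this.factory = factory;
        this.maxRetries = maxRetries;
        this.current = factory.open();
    }

    /** Reads at the given offset, reconnecting and retrying up to maxRetries times. */
    public int read(long position, byte[] buf, int off, int len) throws IOException {
        for (int attempt = 0; ; attempt++) {
            try {
                return current.read(position, buf, off, len);
            } catch (IOException e) {
                if (attempt >= maxRetries) {
                    throw e; // give up: the caller may now treat this source as lost
                }
                // Assume the connection was closed as idle: reconnect, then retry the same offset.
                current = factory.open();
            }
        }
    }

    public static void main(String[] args) throws IOException {
        int[] opens = {0};
        // The first connection fails on read (simulating an idle-closed socket);
        // every later connection returns a single byte.
        SourceFactory factory = () -> {
            int openIndex = ++opens[0];
            return (pos, b, off, len) -> {
                if (openIndex == 1) {
                    throw new IOException("connection closed by datanode");
                }
                b[off] = 42;
                return 1;
            };
        };
        RetryingReader reader = new RetryingReader(factory, 1);
        byte[] buf = new byte[1];
        int bytesRead = reader.read(0L, buf, 0, 1);
        System.out.println(bytesRead + " byte(s), value " + buf[0] + ", opens=" + opens[0]);
    }
}
```

Bounding the retries keeps a genuinely failed datanode from stalling the read indefinitely; once the budget is spent the original exception still propagates, so existing "missing blocks" handling would still apply.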

[jira] [Resolved] (HDFS-16269) [Fix] Improve NNThroughputBenchmark#blockReport operation

[

https://issues.apache.org/jira/browse/HDFS-16269?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Akira Ajisaka resolved HDFS-16269.

--

Fix Version/s: 3.4.0

Resolution: Fixed

Committed to trunk. Thank you [~jianghuazhu] for your contribution.

> [Fix] Improve NNThroughputBenchmark#blockReport operation

> -

>

> Key: HDFS-16269

> URL: https://issues.apache.org/jira/browse/HDFS-16269

> Project: Hadoop HDFS

> Issue Type: Bug

> Components: benchmarks, namenode

>Affects Versions: 2.9.2

>Reporter: JiangHua Zhu

>Assignee: JiangHua Zhu

>Priority: Major

> Labels: pull-request-available

> Fix For: 3.4.0

>

> Time Spent: 5h

> Remaining Estimate: 0h

>


[jira] [Work logged] (HDFS-16269) [Fix] Improve NNThroughputBenchmark#blockReport operation

[

https://issues.apache.org/jira/browse/HDFS-16269?focusedWorklogId=672733=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-672733

]

ASF GitHub Bot logged work on HDFS-16269:

-

Author: ASF GitHub Bot

Created on: 01/Nov/21 15:56

Start Date: 01/Nov/21 15:56

Worklog Time Spent: 10m

Work Description: aajisaka merged pull request #3544:

URL: https://github.com/apache/hadoop/pull/3544


Issue Time Tracking

---

Worklog Id: (was: 672733)

Time Spent: 4h 50m (was: 4h 40m)

> [Fix] Improve NNThroughputBenchmark#blockReport operation

> -

>

> Key: HDFS-16269

> URL: https://issues.apache.org/jira/browse/HDFS-16269

> Project: Hadoop HDFS

> Issue Type: Bug

> Components: benchmarks, namenode

>Affects Versions: 2.9.2

>Reporter: JiangHua Zhu

>Assignee: JiangHua Zhu

>Priority: Major

> Labels: pull-request-available

> Time Spent: 4h 50m

> Remaining Estimate: 0h

>


[jira] [Work logged] (HDFS-16269) [Fix] Improve NNThroughputBenchmark#blockReport operation

[

https://issues.apache.org/jira/browse/HDFS-16269?focusedWorklogId=672735=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-672735

]

ASF GitHub Bot logged work on HDFS-16269:

-

Author: ASF GitHub Bot

Created on: 01/Nov/21 15:56

Start Date: 01/Nov/21 15:56

Worklog Time Spent: 10m

Work Description: aajisaka commented on pull request #3544:

URL: https://github.com/apache/hadoop/pull/3544#issuecomment-956355572

Merged. Thank you @jianghuazhu @ferhui @jojochuang


Issue Time Tracking

---

Worklog Id: (was: 672735)

Time Spent: 5h (was: 4h 50m)

> [Fix] Improve NNThroughputBenchmark#blockReport operation

> -

>

> Key: HDFS-16269

> URL: https://issues.apache.org/jira/browse/HDFS-16269

> Project: Hadoop HDFS

> Issue Type: Bug

> Components: benchmarks, namenode

>Affects Versions: 2.9.2

>Reporter: JiangHua Zhu

>Assignee: JiangHua Zhu

>Priority: Major

> Labels: pull-request-available

> Time Spent: 5h

> Remaining Estimate: 0h

>


[jira] [Commented] (HDFS-15413) DFSStripedInputStream throws exception when datanodes close idle connections

[

https://issues.apache.org/jira/browse/HDFS-15413?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17436908#comment-17436908

]

Jeff Kubina commented on HDFS-15413:

Is this issue being worked to was it resolved already?

> DFSStripedInputStream throws exception when datanodes close idle connections

>

>

> Key: HDFS-15413

> URL: https://issues.apache.org/jira/browse/HDFS-15413

> Project: Hadoop HDFS

> Issue Type: Bug

> Components: ec, erasure-coding, hdfs-client

>Affects Versions: 3.1.3

> Environment: - Hadoop 3.1.3

> - erasure coding with ISA-L and RS-3-2-1024k scheme

> - running in kubernetes

> - dfs.client.socket-timeout = 1

> - dfs.datanode.socket.write.timeout = 1

>Reporter: Andrey Elenskiy

>Priority: Critical

> Attachments: out.log

>

>

> We've run into an issue with compactions failing in HBase when erasure coding

> is enabled on a table directory. After digging further I was able to narrow

> it down to a seek + read logic and able to reproduce the issue with hdfs

> client only:

> {code:java}
> import org.apache.hadoop.conf.Configuration;
> import org.apache.hadoop.fs.FSDataInputStream;
> import org.apache.hadoop.fs.FileSystem;
> import org.apache.hadoop.fs.Path;
>
> public class ReaderRaw {
>   public static void main(final String[] args) throws Exception {
>     Path p = new Path(args[0]);
>     int bufLen = Integer.parseInt(args[1]);           // bytes to read per cycle
>     int sleepDuration = Integer.parseInt(args[2]);    // idle pause in milliseconds
>     int countBeforeSleep = Integer.parseInt(args[3]); // cycles before the pause
>     int countAfterSleep = Integer.parseInt(args[4]);  // cycles after the pause
>     Configuration conf = new Configuration();
>     FSDataInputStream istream = FileSystem.get(conf).open(p);
>     byte[] buf = new byte[bufLen];
>     int readTotal = 0;
>     int count = 0;
>     try {
>       while (true) {
>         // Seek explicitly before every cycle: this is the seek + read pattern under test.
>         istream.seek(readTotal);
>         int bytesRemaining = bufLen;
>         int bufOffset = 0;
>         // A single read() may return fewer bytes than requested, so loop until the buffer is full.
>         while (bytesRemaining > 0) {
>           int nread = istream.read(buf, bufOffset, bytesRemaining);
>           if (nread < 0) {
>             throw new Exception("nread is less than zero");
>           }
>           readTotal += nread;
>           bufOffset += nread;
>           bytesRemaining -= nread;
>         }
>         count++;
>         if (count == countBeforeSleep) {
>           // Stay idle past the socket timeout so the DataNodes close the connections.
>           System.out.println("sleeping for " + sleepDuration + " milliseconds");
>           Thread.sleep(sleepDuration);
>           System.out.println("resuming");
>         }
>         if (count == countBeforeSleep + countAfterSleep) {
>           System.out.println("done");
>           break;
>         }
>       }
>     } catch (Exception e) {
>       System.out.println("exception on read " + count + " read total " + readTotal);
>       throw e;
>     } finally {
>       istream.close();
>     }
>   }
> }
> {code}

> The issue appears to be that DataNodes close the EC client's connection if the client doesn't fetch the next packet within dfs.client.socket-timeout. The EC client doesn't retry. Instead, it assumes that those DataNodes went away, which results in a "missing blocks" exception.
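To make the missing retry concrete, here is a minimal sketch of a reconnect-and-resume read. It is not HDFS client code. `RetryingReader`, `Source` and `openAt` are hypothetical names, and a plain `InputStream` stands in for a DataNode connection. When a read fails with an `IOException`, the reader reopens the stream at the last successfully read offset and tries again, up to `maxRetries` times.

```java
import java.io.IOException;
import java.io.InputStream;

/**
 * Hypothetical sketch (not the HDFS client): reconnects and resumes from the
 * last good offset when the underlying stream fails, for example because the
 * server closed an idle connection.
 */
final class RetryingReader {
    /** Hypothetical factory that opens a new stream positioned at {@code pos}. */
    interface Source {
        InputStream openAt(long pos) throws IOException;
    }

    private final Source source;
    private final int maxRetries;
    private InputStream in;
    private long pos; // bytes successfully read so far

    RetryingReader(Source source, int maxRetries) throws IOException {
        if (maxRetries < 0) {
            throw new IllegalArgumentException("maxRetries must be >= 0");
        }
        this.source = source;
        this.maxRetries = maxRetries;
        this.in = source.openAt(0);
    }

    /** Reads up to {@code len} bytes, reconnecting at the current offset on failure. */
    int read(byte[] buf, int off, int len) throws IOException {
        IOException last = null;
        for (int attempt = 0; attempt <= maxRetries; attempt++) {
            try {
                int n = in.read(buf, off, len);
                if (n > 0) {
                    pos += n;
                }
                return n;
            } catch (IOException e) {
                last = e;
                closeQuietly(in);
                if (attempt == maxRetries) {
                    break; // out of retries: report the last failure
                }
                in = source.openAt(pos); // resume where the last successful read ended
            }
        }
        throw last;
    }

    long position() {
        return pos;
    }

    private static void closeQuietly(InputStream s) {
        try {
            s.close();
        } catch (IOException ignored) {
            // The connection is already broken; nothing useful to do here.
        }
    }
}
```

The retry budget is bounded so that a DataNode that really is gone still surfaces as an error rather than looping forever.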

> I was able to reproduce it consistently with the following arguments:

> {noformat}

> bufLen = 100 (just below 1MB which is the size of the stripe)

> sleepDuration = (dfs.client.socket-timeout + 1) * 1000 (in our case 11000)

> countBeforeSleep = 1

> countAfterSleep = 7

> {noformat}
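To see which part of an erasure-coded file each read cycle touches, it helps to map a file offset onto the striped layout. Under the RS-3-2-1024k policy each cell is 1024 KiB, and each stripe carries three data cells. The two parity cells do not correspond to any logical file offset. The sketch below assumes that standard round-robin cell layout. `StripeMath` and `locate` are hypothetical names, not HDFS APIs.

```java
/**
 * Hypothetical helper assuming the standard striped layout: the file's
 * logical bytes are cut into cells of cellSize bytes that are assigned
 * round-robin to dataUnits data blocks; parity cells add no logical offsets.
 */
final class StripeMath {
    /** Where a logical file offset lands. */
    static final class Location {
        final long stripe;       // stripe (row of cells) counted from the start of the file
        final int dataBlock;     // data block within the stripe, 0..dataUnits-1
        final long offsetInCell; // byte offset inside that cell

        Location(long stripe, int dataBlock, long offsetInCell) {
            this.stripe = stripe;
            this.dataBlock = dataBlock;
            this.offsetInCell = offsetInCell;
        }

        @Override
        public String toString() {
            return "stripe=" + stripe + " dataBlock=" + dataBlock + " offsetInCell=" + offsetInCell;
        }
    }

    static Location locate(long fileOffset, int dataUnits, long cellSize) {
        if (fileOffset < 0 || dataUnits <= 0 || cellSize <= 0) {
            throw new IllegalArgumentException("invalid offset or layout parameters");
        }
        long cell = fileOffset / cellSize; // global index of the data cell
        return new Location(cell / dataUnits, (int) (cell % dataUnits), fileOffset % cellSize);
    }
}
```

For example, `locate(3L * 1024 * 1024, 3, 1024 * 1024)` falls at the start of stripe 1 in data block 0. Each step of one cell moves the read to the next DataNode in the group.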

> I've attached the full log output from running the snippet above against an erasure-coded file with the RS-3-2-1024k policy. Here are the DataNode logs showing the client being disconnected:

> datanode 1:

> {noformat}

> 2020-06-15 19:06:20,697 INFO datanode.DataNode: Likely the client has stopped reading, disconnecting it (datanode-v11-0-hadoop.hadoop:9866:DataXceiver error processing READ_BLOCK operation src: /10.128.23.40:53748 dst: /10.128.14.46:9866); java.net.SocketTimeoutException: 1 millis timeout while waiting for channel to be ready for write. ch : java.nio.channels.SocketChannel[connected local=/10.128.14.46:9866 remote=/10.128.23.40:53748]

> {noformat}

> datanode 2:

> {noformat}

> 2020-06-15 19:06:20,341 INFO datanode.DataNode: Likely the client has stopped reading, disconnecting it (datanode-v11-1-hadoop.hadoop:9866:DataXceiver error processing READ_BLOCK operation src: /10.128.23.40:48772 dst: /10.128.9.42:9866); java.net.SocketTimeoutException: 1 millis timeout while waiting for channel to be ready for write. ch : java.nio.channels.SocketChannel[connected local=/10.128.9.42:9866 remote=/10.128.23.40:48772]

> {noformat}

> datanode 3:

> {noformat}

> 2020-06-15 19:06:20,467 INFO

[jira] [Updated] (HDFS-16293) Client sleeps and holds 'dataQueue' when DataNodes are congested

[ https://issues.apache.org/jira/browse/HDFS-16293?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Yuanxin Zhu updated HDFS-16293:
---
Summary: Client sleeps and holds 'dataQueue' when DataNodes are congested (was: Client sleep and hold 'dataQueue' when DataNodes are congested)

> Client sleeps and holds 'dataQueue' when DataNodes are congested
>
> Key: HDFS-16293
> URL: https://issues.apache.org/jira/browse/HDFS-16293
> Project: Hadoop HDFS
> Issue Type: Bug
> Components: hdfs-client
> Affects Versions: 3.2.2
> Reporter: Yuanxin Zhu
> Priority: Major
> Original Estimate: 24h
> Remaining Estimate: 24h
>
> When I enable ECN and run TeraSort for testing, the DataNodes become congested (HDFS-8008). After repeatedly receiving ACKs that signal congestion, the client sleeps but does not release 'dataQueue'. The ResponseProcessor thread needs 'dataQueue' to execute 'ackQueue.getFirst()', so it waits for the client to release 'dataQueue'. In effect, the ResponseProcessor thread also sleeps, which delays ACKs. MapReduce tasks can be delayed by tens of minutes or even hours.
> The DataStreamer thread could first execute 'one = dataQueue.getFirst()', release 'dataQueue', and then decide whether to execute 'backOffIfNecessary()' based on 'one.isHeartbeatPacket()'.
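The proposed change follows a common rule: never sleep while holding a lock that another thread needs. Below is a simplified, hypothetical model of that idea. `StreamerSketch`, `Packet` and `nextPacket` are illustrative names, and this is not the real DataStreamer. Following the issue text, it also assumes that back-off applies only to non-heartbeat packets. The head packet is read under the `dataQueue` lock, the lock is released, and only then does the thread back off. A ResponseProcessor-like thread can therefore lock `dataQueue` while the streamer sleeps.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/**
 * Hypothetical, simplified model of the change proposed in HDFS-16293.
 * Names mirror the issue text, but this is not the real DataStreamer.
 */
final class StreamerSketch {
    /** Hypothetical stand-in for an HDFS packet. */
    static final class Packet {
        private final boolean heartbeat;

        Packet(boolean heartbeat) {
            this.heartbeat = heartbeat;
        }

        boolean isHeartbeatPacket() {
            return heartbeat;
        }
    }

    final Deque<Packet> dataQueue = new ArrayDeque<>();
    private final long backoffMillis;

    StreamerSketch(long backoffMillis) {
        this.backoffMillis = backoffMillis;
    }

    /** Hypothetical congestion back-off; always called without holding dataQueue. */
    void backOffIfNecessary() throws InterruptedException {
        if (backoffMillis > 0) {
            Thread.sleep(backoffMillis);
        }
    }

    /** Returns the head packet (or null if the queue is empty), backing off outside the lock. */
    Packet nextPacket() throws InterruptedException {
        Packet one;
        synchronized (dataQueue) {
            one = dataQueue.peekFirst();
        }
        // dataQueue is no longer locked here, so another thread can acquire it while we sleep.
        if (one != null && !one.isHeartbeatPacket()) {
            backOffIfNecessary();
        }
        return one;
    }
}
```

The sketch peeks at the head instead of removing it. It relies on the assumption that only the streamer thread removes packets from the head, so the packet it saw is still first after it wakes up.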

[jira] [Updated] (HDFS-16293) Client sleep and hold 'dataQueue' when DataNodes are congested

[ https://issues.apache.org/jira/browse/HDFS-16293?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Yuanxin Zhu updated HDFS-16293:
---
Summary: Client sleep and hold 'dataQueue' when DataNodes are congested (was: Client sleep and hold 'dataqueue' when datanode are condensed)

[jira] [Updated] (HDFS-16293) Client sleep and hold 'dataqueue' when datanode are condensed

[ https://issues.apache.org/jira/browse/HDFS-16293?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Yuanxin Zhu updated HDFS-16293:
---
Description:
When I open the ECN and use Terasort for testing, DataNodes are congested(HDFS-8008). The client enters the sleep state after receiving the ACK for many times, but does not release the 'dataQueue'. The ResponseProcessor thread needs the 'dataQueue' to execute 'ackQueue.getFirst()', so the ResponseProcessor will wait for the client to release the 'dataQueue', which is equivalent to that the ResponseProcessor thread also enters sleep, resulting in ACK delay.MapReduce tasks can be delayed by tens of minutes or even hours. The DataStreamer thread can first execute 'one = dataQueue. getFirst()', release 'dataQueue', and then judge whether to execute 'backOffIfNecessary()' according to 'one.isHeartbeatPacket()'

was:
When I open the ECN and use Terasort for testing, datanodes are congested([HDFS-8008|https://issues.apache.org/jira/browse/HDFS-8008]). The client enters the sleep state after receiving the ACK for many times, but does not release the 'dataqueue'. The ResponseProcessor thread needs the 'dataqueue' to execute 'ackqueue. getfirst()', so the ResponseProcessor will wait for the client to release the 'dataqueue', which is equivalent to that the ResponseProcessor thread also enters sleep, resulting in ack delay.MapReduce tasks can be delayed by tens of minutes or even hours

[jira] [Work logged] (HDFS-16269) [Fix] Improve NNThroughputBenchmark#blockReport operation

[ https://issues.apache.org/jira/browse/HDFS-16269?focusedWorklogId=672657=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-672657 ]

ASF GitHub Bot logged work on HDFS-16269:

-

Author: ASF GitHub Bot

Created on: 01/Nov/21 13:22

Start Date: 01/Nov/21 13:22

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3544:

URL: https://github.com/apache/hadoop/pull/3544#issuecomment-956229769

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|