[jira] [Commented] (HDFS-17030) Limit wait time for getHAServiceState in ObserverReaderProxy

[

https://issues.apache.org/jira/browse/HDFS-17030?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17727822#comment-17727822

]

ASF GitHub Bot commented on HDFS-17030:

---

hadoop-yetus commented on PR #5700:

URL: https://github.com/apache/hadoop/pull/5700#issuecomment-1569577944

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 33s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 1s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +0 :ok: | detsecrets | 0m 0s | | detect-secrets was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 16m 43s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 20m 0s | | trunk passed |
| +1 :green_heart: | compile | 5m 18s | | trunk passed with JDK Ubuntu-11.0.19+7-post-Ubuntu-0ubuntu120.04.1 |
| +1 :green_heart: | compile | 5m 3s | | trunk passed with JDK Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| +1 :green_heart: | checkstyle | 1m 18s | | trunk passed |
| +1 :green_heart: | mvnsite | 2m 13s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 51s | | trunk passed with JDK Ubuntu-11.0.19+7-post-Ubuntu-0ubuntu120.04.1 |
| +1 :green_heart: | javadoc | 2m 11s | | trunk passed with JDK Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| +1 :green_heart: | spotbugs | 5m 44s | | trunk passed |
| +1 :green_heart: | shadedclient | 21m 59s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 29s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 1m 48s | | the patch passed |
| +1 :green_heart: | compile | 5m 5s | | the patch passed with JDK Ubuntu-11.0.19+7-post-Ubuntu-0ubuntu120.04.1 |
| +1 :green_heart: | javac | 5m 5s | | the patch passed |
| +1 :green_heart: | compile | 4m 59s | | the patch passed with JDK Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| +1 :green_heart: | javac | 4m 59s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 1m 4s | | the patch passed |
| +1 :green_heart: | mvnsite | 1m 57s | | the patch passed |
| +1 :green_heart: | javadoc | 1m 27s | | the patch passed with JDK Ubuntu-11.0.19+7-post-Ubuntu-0ubuntu120.04.1 |
| +1 :green_heart: | javadoc | 1m 57s | | the patch passed with JDK Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| +1 :green_heart: | spotbugs | 5m 33s | | the patch passed |
| +1 :green_heart: | shadedclient | 22m 22s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| +1 :green_heart: | unit | 2m 24s | | hadoop-hdfs-client in the patch passed. |
| -1 :x: | unit | 209m 22s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-5700/4/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 2m 59s | | The patch does not generate ASF License warnings. |
| | | 344m 12s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.server.namenode.ha.TestObserverNode |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.43 ServerAPI=1.43 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-5700/4/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/5700 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell detsecrets |
| uname | Linux 2664664269b2 4.15.0-206-generic #217-Ubuntu SMP Fri Feb 3 19:10:13 UTC 2023 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 0953457a06c957f9a30d937a300b3eed14160efe |
| Default Java | Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.19+7-post-Ubuntu-0ubuntu120.04.1 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20

[jira] [Commented] (HDFS-17026) RBF: NamenodeHeartbeatService should update JMX report with configurable frequency

[

https://issues.apache.org/jira/browse/HDFS-17026?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17727820#comment-17727820

]

ASF GitHub Bot commented on HDFS-17026:

---

Hexiaoqiao commented on PR #5691:

URL: https://github.com/apache/hadoop/pull/5691#issuecomment-1569570682

Committed to trunk. Thanks @hchaverri for your contribution! And thanks to all the reviewers (too many to mention)!

> RBF: NamenodeHeartbeatService should update JMX report with configurable

> frequency

> --

>

> Key: HDFS-17026

> URL: https://issues.apache.org/jira/browse/HDFS-17026

> Project: Hadoop HDFS

> Issue Type: Improvement

> Components: rbf

>Reporter: Hector Sandoval Chaverri

>Assignee: Hector Sandoval Chaverri

>Priority: Major

> Labels: pull-request-available

> Attachments: HDFS-17026-branch-3.3.patch

>

>

> The NamenodeHeartbeatService currently calls each Namenode's JMX endpoint
> every time it wakes up (every 5 seconds by default).
> In a cluster with 40 routers, we have observed service degradation on some
> of the Namenodes, since the JMX request obtains Datanode status and blocks
> other RPC requests. However, the JMX report data does not appear to be used
> on any critical path in the routers.
> We should make the NamenodeHeartbeatService update the JMX reports at a
> lower frequency than the Namenode states, or allow disabling the reports
> completely.
> The class describes the JMX request as optional even though there is no
> way to turn it off:

> {noformat}

> // Read the stats from JMX (optional)

> updateJMXParameters(webAddress, report);{noformat}
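The request amounts to decoupling two refresh rates inside the heartbeat loop: the Namenode HA state stays on every wake-up, while the expensive JMX fetch runs only when its own interval has elapsed. A minimal sketch, assuming a hypothetical `JmxReportScheduler` helper whose interval is in milliseconds and where a non-positive interval disables the JMX fetch entirely; these names and semantics are illustrative only and are not the configuration key or code committed for HDFS-17026.

```java
import java.util.concurrent.TimeUnit;

/**
 * Hypothetical helper (not Hadoop code): decides whether a heartbeat
 * iteration should also refresh the expensive JMX report.
 */
public final class JmxReportScheduler {
  private final long jmxIntervalMs;   // <= 0 means "never fetch JMX" (assumed semantics)
  private long lastJmxUpdateMs = -1;  // -1 means "not fetched yet"

  public JmxReportScheduler(long jmxIntervalMs) {
    this.jmxIntervalMs = jmxIntervalMs;
  }

  /** Returns true if the JMX report is due at nowMs, and records the update time. */
  public synchronized boolean shouldUpdateJmx(long nowMs) {
    if (jmxIntervalMs <= 0) {
      return false;                   // JMX reports disabled
    }
    if (lastJmxUpdateMs < 0 || nowMs - lastJmxUpdateMs >= jmxIntervalMs) {
      lastJmxUpdateMs = nowMs;
      return true;
    }
    return false;
  }

  public static void main(String[] args) {
    // Heartbeat every 5 s (the default mentioned above); JMX every 60 s (assumed value).
    JmxReportScheduler scheduler =
        new JmxReportScheduler(TimeUnit.SECONDS.toMillis(60));
    int jmxCalls = 0;
    for (long t = 0; t < TimeUnit.MINUTES.toMillis(5); t += 5_000) {
      // The Namenode HA state would still be fetched on every iteration here.
      if (scheduler.shouldUpdateJmx(t)) {
        jmxCalls++;                   // stands in for updateJMXParameters(webAddress, report)
      }
    }
    // 60 heartbeats in 5 minutes, but only 5 JMX fetches.
    System.out.println("JMX calls in 5 minutes: " + jmxCalls);
  }
}
```

With 40 routers, cutting the JMX fetch from every heartbeat to once a minute reduces that load on each Namenode by roughly 12x in this example, while the HA state the routers depend on keeps its original freshness.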

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Resolved] (HDFS-17026) RBF: NamenodeHeartbeatService should update JMX report with configurable frequency

[

https://issues.apache.org/jira/browse/HDFS-17026?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Xiaoqiao He resolved HDFS-17026.

Fix Version/s: 3.4.0

Hadoop Flags: Reviewed

Resolution: Fixed

> RBF: NamenodeHeartbeatService should update JMX report with configurable

> frequency

> --

>

> Key: HDFS-17026

> URL: https://issues.apache.org/jira/browse/HDFS-17026

> Project: Hadoop HDFS

> Issue Type: Improvement

> Components: rbf

>Reporter: Hector Sandoval Chaverri

>Assignee: Hector Sandoval Chaverri

>Priority: Major

> Labels: pull-request-available

> Fix For: 3.4.0

>

> Attachments: HDFS-17026-branch-3.3.patch

>


[jira] [Commented] (HDFS-17026) RBF: NamenodeHeartbeatService should update JMX report with configurable frequency

[

https://issues.apache.org/jira/browse/HDFS-17026?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17727817#comment-17727817

]

ASF GitHub Bot commented on HDFS-17026:

---

Hexiaoqiao merged PR #5691:

URL: https://github.com/apache/hadoop/pull/5691

> RBF: NamenodeHeartbeatService should update JMX report with configurable

> frequency

> --

>

> Key: HDFS-17026

> URL: https://issues.apache.org/jira/browse/HDFS-17026

> Project: Hadoop HDFS

> Issue Type: Improvement

> Components: rbf

>Reporter: Hector Sandoval Chaverri

>Assignee: Hector Sandoval Chaverri

>Priority: Major

> Labels: pull-request-available

> Attachments: HDFS-17026-branch-3.3.patch


[jira] [Commented] (HDFS-17030) Limit wait time for getHAServiceState in ObserverReaderProxy

[

https://issues.apache.org/jira/browse/HDFS-17030?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17727808#comment-17727808

]

ASF GitHub Bot commented on HDFS-17030:

---

hadoop-yetus commented on PR #5700:

URL: https://github.com/apache/hadoop/pull/5700#issuecomment-1569553809

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 48s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 1s | | codespell was not available. |
| +0 :ok: | detsecrets | 0m 1s | | detect-secrets was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 16m 1s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 22m 45s | | trunk passed |
| +1 :green_heart: | compile | 5m 45s | | trunk passed with JDK Ubuntu-11.0.19+7-post-Ubuntu-0ubuntu120.04.1 |
| +1 :green_heart: | compile | 5m 34s | | trunk passed with JDK Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| +1 :green_heart: | checkstyle | 1m 19s | | trunk passed |
| +1 :green_heart: | mvnsite | 2m 11s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 46s | | trunk passed with JDK Ubuntu-11.0.19+7-post-Ubuntu-0ubuntu120.04.1 |
| +1 :green_heart: | javadoc | 2m 7s | | trunk passed with JDK Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| +1 :green_heart: | spotbugs | 6m 5s | | trunk passed |
| +1 :green_heart: | shadedclient | 25m 33s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 24s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 1m 53s | | the patch passed |
| +1 :green_heart: | compile | 5m 42s | | the patch passed with JDK Ubuntu-11.0.19+7-post-Ubuntu-0ubuntu120.04.1 |
| +1 :green_heart: | javac | 5m 42s | | the patch passed |
| +1 :green_heart: | compile | 5m 27s | | the patch passed with JDK Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| +1 :green_heart: | javac | 5m 27s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 1m 8s | | the patch passed |
| +1 :green_heart: | mvnsite | 1m 57s | | the patch passed |
| +1 :green_heart: | javadoc | 1m 28s | | the patch passed with JDK Ubuntu-11.0.19+7-post-Ubuntu-0ubuntu120.04.1 |
| +1 :green_heart: | javadoc | 1m 58s | | the patch passed with JDK Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| +1 :green_heart: | spotbugs | 5m 58s | | the patch passed |
| +1 :green_heart: | shadedclient | 25m 50s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| +1 :green_heart: | unit | 2m 17s | | hadoop-hdfs-client in the patch passed. |
| -1 :x: | unit | 222m 37s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-5700/3/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 42s | | The patch does not generate ASF License warnings. |
| | | 366m 25s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.TestRollingUpgrade |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.43 ServerAPI=1.43 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-5700/3/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/5700 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell detsecrets |
| uname | Linux 55df75039f53 4.15.0-206-generic #217-Ubuntu SMP Fri Feb 3 19:10:13 UTC 2023 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / d18b36a0f1881fd236d2e276e6c1e678925cf89c |
| Default Java | Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.19+7-post-Ubuntu-0ubuntu120.04.1 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| T

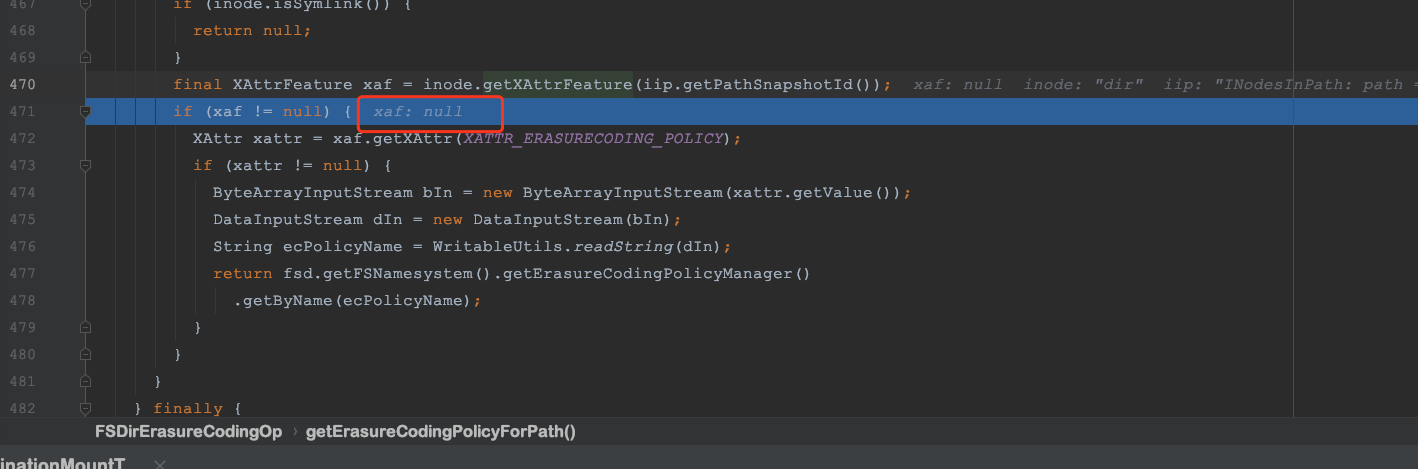

[jira] [Commented] (HDFS-17029) Support getECPolices API in WebHDFS

[

https://issues.apache.org/jira/browse/HDFS-17029?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17727804#comment-17727804

]

ASF GitHub Bot commented on HDFS-17029:

---

slfan1989 commented on code in PR #5698:

URL: https://github.com/apache/hadoop/pull/5698#discussion_r1211125291

##

hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/web/JsonUtil.java:

##

@@ -741,4 +741,27 @@ public static Map toJsonMap(FsStatus status) {
     m.put("remaining", status.getRemaining());
     return m;
   }
+
+  public static Map toJsonMap(ErasureCodingPolicyInfo ecPolicyInfo) {
+    if (ecPolicyInfo == null) {
+      return null;
+    }
+    Map m = new HashMap<>();
+    m.put("policy", ecPolicyInfo.getPolicy());
+    m.put("state", ecPolicyInfo.getState());
+    return m;
+  }
+
+  public static String toJsonString(ErasureCodingPolicyInfo[] ecPolicyInfos) {
+    final Map erasureCodingPolicies = new TreeMap<>();

Review Comment:

Why TreeMap?
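One plausible answer to the reviewer's question is iteration order: `HashMap` makes no ordering guarantee, while `TreeMap` iterates its keys in ascending order, so any JSON built by walking the map comes out in a deterministic, sorted key order. The thread does not say whether that is the author's reason. The sketch below only illustrates the difference; `PolicyInfo` is a hypothetical stand-in for `ErasureCodingPolicyInfo`, the states are made-up sample data, and keying by policy name is an assumption (the patch's actual keys are not visible in this hunk).

```java
import java.util.Map;
import java.util.TreeMap;

public final class SortedPolicyMap {
  /** Hypothetical stand-in for ErasureCodingPolicyInfo: a policy name and its state. */
  static final class PolicyInfo {
    final String name;
    final String state;

    PolicyInfo(String name, String state) {
      this.name = name;
      this.state = state;
    }
  }

  /** Collects name -> state; a TreeMap iterates keys in ascending String order. */
  static Map<String, String> statesByName(PolicyInfo[] infos) {
    Map<String, String> byName = new TreeMap<>();
    for (PolicyInfo info : infos) {
      if (info != null) {             // skip null entries
        byName.put(info.name, info.state);
      }
    }
    return byName;
  }

  public static void main(String[] args) {
    // Sample data in deliberately unsorted order; the states are made up.
    PolicyInfo[] infos = {
        new PolicyInfo("XOR-2-1-1024k", "DISABLED"),
        new PolicyInfo("RS-6-3-1024k", "ENABLED"),
        new PolicyInfo("RS-10-4-1024k", "DISABLED"),
    };
    // Prints [RS-10-4-1024k, RS-6-3-1024k, XOR-2-1-1024k]:
    // String order is lexicographic, so "RS-10..." sorts before "RS-6...".
    System.out.println(statesByName(infos).keySet());
  }
}
```

The cost is O(log n) insertion instead of amortized O(1), which is negligible for the handful of EC policies a cluster defines; if only a stable (insertion) order were needed, a `LinkedHashMap` would serve as well.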

> Support getECPolices API in WebHDFS

> ---

>

> Key: HDFS-17029

> URL: https://issues.apache.org/jira/browse/HDFS-17029

> Project: Hadoop HDFS

> Issue Type: Improvement

> Components: webhdfs

>Affects Versions: 3.4.0

>Reporter: Hualong Zhang

>Assignee: Hualong Zhang

>Priority: Major

> Labels: pull-request-available

> Attachments: image-2023-05-29-23-55-09-224.png

>

>

> WebHDFS should support getEcPolicies:

> !image-2023-05-29-23-55-09-224.png|width=817,height=234!


[jira] [Commented] (HDFS-16946) RBF: top real owners metrics can't been parsed json string

[ https://issues.apache.org/jira/browse/HDFS-16946?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17727803#comment-17727803 ]

ASF GitHub Bot commented on HDFS-16946:

---

NishthaShah commented on PR #5696:

URL: https://github.com/apache/hadoop/pull/5696#issuecomment-1569533718

@goiri Thanks a lot for reviewing the PR. I have addressed the review comments; could you please take another look?

> RBF: top real owners metrics can't been parsed json string
> --
>
> Key: HDFS-16946
> URL: https://issues.apache.org/jira/browse/HDFS-16946
> Project: Hadoop HDFS
> Issue Type: Bug
> Components: rbf
> Affects Versions: 3.4.0
> Reporter: Max Xie
> Assignee: Nishtha Shah
> Priority: Minor
> Labels: pull-request-available
> Attachments: image-2023-03-09-22-24-39-833.png
>
>
> HDFS-15447 added top real owners metrics for delegation tokens, but these
> metrics cannot be parsed as a JSON string: the
> RBFMetrics#getTopTokenRealOwners method just returns
> `org.apache.hadoop.metrics2.util.Metrics2Util$NameValuePair@1`
> !image-2023-03-09-22-24-39-833.png!
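The reported value is what `Object.toString()` produces (`ClassName@hash`) for a class that does not override it, so string-converting the list of pairs yields text no JSON parser can read. A minimal sketch of the fix direction, emitting each pair as a JSON object; `NameValue` is a hypothetical stand-in for `Metrics2Util.NameValuePair`, and the hand-written serializer is an assumption for illustration (the actual change in PR #5696 may rely on a JSON library instead).

```java
import java.util.ArrayList;
import java.util.List;

public final class TopOwnersJson {
  /** Hypothetical stand-in for Metrics2Util.NameValuePair: an owner name and a count. */
  static final class NameValue {
    final String name;
    final long value;

    NameValue(String name, long value) {
      this.name = name;
      this.value = value;
    }
  }

  /** Wraps s in double quotes, escaping quotes, backslashes and control characters. */
  static String quote(String s) {
    StringBuilder sb = new StringBuilder("\"");
    for (char c : s.toCharArray()) {
      switch (c) {
        case '"': sb.append("\\\""); break;
        case '\\': sb.append("\\\\"); break;
        default:
          if (c < 0x20) {
            sb.append(String.format("\\u%04x", (int) c));
          } else {
            sb.append(c);
          }
      }
    }
    return sb.append('"').toString();
  }

  /** Serializes the pairs as a JSON array of {"name":..., "value":...} objects. */
  static String toJson(List<NameValue> pairs) {
    StringBuilder sb = new StringBuilder("[");
    for (int i = 0; i < pairs.size(); i++) {
      if (i > 0) {
        sb.append(',');
      }
      NameValue p = pairs.get(i);
      sb.append("{\"name\":").append(quote(p.name))
          .append(",\"value\":").append(p.value).append('}');
    }
    return sb.append(']').toString();
  }

  public static void main(String[] args) {
    List<NameValue> top = new ArrayList<>();
    top.add(new NameValue("alice", 3));
    top.add(new NameValue("bob", 1));
    // top.toString() would print each element as TopOwnersJson$NameValue@<hash>,
    // the same unparsable shape as the metric in the report.
    System.out.println(toJson(top));  // [{"name":"alice","value":3},{"name":"bob","value":1}]
  }
}
```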

[jira] [Commented] (HDFS-17029) Support getECPolices API in WebHDFS

[ https://issues.apache.org/jira/browse/HDFS-17029?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17727766#comment-17727766 ]

ASF GitHub Bot commented on HDFS-17029:

---

zhtttylz commented on PR #5698:

URL: https://github.com/apache/hadoop/pull/5698#issuecomment-1569437949

@ayushtkn @slfan1989 Would you be so kind as to review my pull request, please? Thank you very much!

> Support getECPolices API in WebHDFS
> ---
>
> Key: HDFS-17029
> URL: https://issues.apache.org/jira/browse/HDFS-17029
> Project: Hadoop HDFS
> Issue Type: Improvement
> Components: webhdfs
> Affects Versions: 3.4.0
> Reporter: Hualong Zhang
> Assignee: Hualong Zhang
> Priority: Major
> Labels: pull-request-available
> Attachments: image-2023-05-29-23-55-09-224.png
>
>
> WebHDFS should support getEcPolicies:
> !image-2023-05-29-23-55-09-224.png|width=817,height=234!

[jira] [Commented] (HDFS-17027) RBF: Add supports for observer.auto-msync-period when using routers

[

https://issues.apache.org/jira/browse/HDFS-17027?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17727751#comment-17727751

]

ASF GitHub Bot commented on HDFS-17027:

---

hadoop-yetus commented on PR #5693:

URL: https://github.com/apache/hadoop/pull/5693#issuecomment-1569361537

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 54s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +0 :ok: | detsecrets | 0m 0s | | detect-secrets was not available. |
| +0 :ok: | xmllint | 0m 0s | | xmllint was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 18m 49s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 19m 39s | | trunk passed |
| +1 :green_heart: | compile | 6m 28s | | trunk passed with JDK Ubuntu-11.0.19+7-post-Ubuntu-0ubuntu120.04.1 |
| +1 :green_heart: | compile | 5m 46s | | trunk passed with JDK Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| +1 :green_heart: | checkstyle | 1m 21s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 39s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 23s | | trunk passed with JDK Ubuntu-11.0.19+7-post-Ubuntu-0ubuntu120.04.1 |
| +1 :green_heart: | javadoc | 1m 5s | | trunk passed with JDK Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| +1 :green_heart: | spotbugs | 4m 10s | | trunk passed |
| +1 :green_heart: | shadedclient | 20m 39s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 49s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 1m 18s | | the patch passed |
| +1 :green_heart: | compile | 6m 31s | | the patch passed with JDK Ubuntu-11.0.19+7-post-Ubuntu-0ubuntu120.04.1 |
| +1 :green_heart: | javac | 6m 31s | | the patch passed |
| +1 :green_heart: | compile | 5m 40s | | the patch passed with JDK Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| +1 :green_heart: | javac | 5m 40s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 1m 9s | | the patch passed |
| +1 :green_heart: | mvnsite | 1m 25s | | the patch passed |
| +1 :green_heart: | javadoc | 1m 1s | | the patch passed with JDK Ubuntu-11.0.19+7-post-Ubuntu-0ubuntu120.04.1 |
| +1 :green_heart: | javadoc | 0m 56s | | the patch passed with JDK Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| +1 :green_heart: | spotbugs | 3m 58s | | the patch passed |
| +1 :green_heart: | shadedclient | 21m 16s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| +1 :green_heart: | unit | 2m 23s | | hadoop-hdfs-client in the patch passed. |
| +1 :green_heart: | unit | 23m 16s | | hadoop-hdfs-rbf in the patch passed. |
| +1 :green_heart: | asflicense | 0m 39s | | The patch does not generate ASF License warnings. |
| | | 155m 24s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.43 ServerAPI=1.43 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-5693/7/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/5693 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell detsecrets xmllint |
| uname | Linux fbfe1ac6ba4c 4.15.0-206-generic #217-Ubuntu SMP Fri Feb 3 19:10:13 UTC 2023 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / f0d0d0811c9b4206cfe4a723aa625d4638cf673b |
| Default Java | Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.19+7-post-Ubuntu-0ubuntu120.04.1 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-5693/7/testReport/ |
| Max. process+thread count | 2443 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project

[jira] [Commented] (HDFS-17027) RBF: Add supports for observer.auto-msync-period when using routers

[

https://issues.apache.org/jira/browse/HDFS-17027?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17727749#comment-17727749

]

ASF GitHub Bot commented on HDFS-17027:

---

hadoop-yetus commented on PR #5693:

URL: https://github.com/apache/hadoop/pull/5693#issuecomment-1569359136

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 1m 39s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +0 :ok: | detsecrets | 0m 0s | | detect-secrets was not available. |
| +0 :ok: | xmllint | 0m 0s | | xmllint was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 17m 0s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 19m 46s | | trunk passed |
| +1 :green_heart: | compile | 5m 26s | | trunk passed with JDK Ubuntu-11.0.19+7-post-Ubuntu-0ubuntu120.04.1 |
| +1 :green_heart: | compile | 6m 18s | | trunk passed with JDK Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| +1 :green_heart: | checkstyle | 1m 23s | | trunk passed |
| +1 :green_heart: | mvnsite | 2m 2s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 27s | | trunk passed with JDK Ubuntu-11.0.19+7-post-Ubuntu-0ubuntu120.04.1 |
| +1 :green_heart: | javadoc | 1m 5s | | trunk passed with JDK Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| +1 :green_heart: | spotbugs | 4m 2s | | trunk passed |
| +1 :green_heart: | shadedclient | 21m 6s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 56s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 1m 18s | | the patch passed |
| +1 :green_heart: | compile | 5m 38s | | the patch passed with JDK Ubuntu-11.0.19+7-post-Ubuntu-0ubuntu120.04.1 |
| +1 :green_heart: | javac | 5m 38s | | the patch passed |
| +1 :green_heart: | compile | 5m 58s | | the patch passed with JDK Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| +1 :green_heart: | javac | 5m 58s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 1m 14s | | the patch passed |
| +1 :green_heart: | mvnsite | 1m 50s | | the patch passed |
| +1 :green_heart: | javadoc | 1m 4s | | the patch passed with JDK Ubuntu-11.0.19+7-post-Ubuntu-0ubuntu120.04.1 |
| +1 :green_heart: | javadoc | 0m 56s | | the patch passed with JDK Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| +1 :green_heart: | spotbugs | 4m 8s | | the patch passed |
| +1 :green_heart: | shadedclient | 20m 58s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| +1 :green_heart: | unit | 2m 17s | | hadoop-hdfs-client in the patch passed. |
| +1 :green_heart: | unit | 23m 5s | | hadoop-hdfs-rbf in the patch passed. |
| +1 :green_heart: | asflicense | 0m 38s | | The patch does not generate ASF License warnings. |
| | | 154m 24s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.43 ServerAPI=1.43 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-5693/6/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/5693 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell detsecrets xmllint |
| uname | Linux e0525b983887 4.15.0-206-generic #217-Ubuntu SMP Fri Feb 3 19:10:13 UTC 2023 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / f0d0d0811c9b4206cfe4a723aa625d4638cf673b |
| Default Java | Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.19+7-post-Ubuntu-0ubuntu120.04.1 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-5693/6/testReport/ |
| Max. process+thread count | 2307 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project

[jira] [Commented] (HDFS-17030) Limit wait time for getHAServiceState in ObserverReaderProxy

[ https://issues.apache.org/jira/browse/HDFS-17030?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17727743#comment-17727743 ]

ASF GitHub Bot commented on HDFS-17030:

---

xinglin commented on PR #5700:

URL: https://github.com/apache/hadoop/pull/5700#issuecomment-1569342137

Hi @chliang71 @Hexiaoqiao @ZanderXu, can I get a review? Thanks,

> Limit wait time for getHAServiceState in ObserverReaderProxy
>
>
> Key: HDFS-17030
> URL: https://issues.apache.org/jira/browse/HDFS-17030
> Project: Hadoop HDFS
> Issue Type: Improvement
> Components: hdfs
> Affects Versions: 3.4.0
> Reporter: Xing Lin
> Assignee: Xing Lin
> Priority: Minor
> Labels: pull-request-available
>
> When NameNode HA is enabled and a standby NN is not responsive, we have
> observed that requests can take a long time to be served, even though there
> is a healthy observer or active NN.
> Basically, when a standby is down, the RPC client (re)tries to create a
> socket connection to that standby for _ipc.client.connect.timeout_ _*
> ipc.client.connect.max.retries.on.timeouts_ before giving up. When we take a
> heap dump of a standby, the NN still accepts the socket connection but does
> not send responses to these RPC requests, so the client only times out after
> _ipc.client.rpc-timeout.ms_. This adds significant latency. For clusters at
> LinkedIn, we set _ipc.client.rpc-timeout.ms_ to 120 seconds, so a request
> takes more than 2 minutes to complete when we take a heap dump of a standby.
> This has been causing user job failures.
> We could set _ipc.client.rpc-timeout.ms_ to a smaller value when sending
> getHAServiceState requests in ObserverReaderProxy (user RPC requests would
> still use the original value from the config). However, that would double the
> number of socket connections between clients and the NN, which is a
> deal-breaker.
> The proposal is to add a timeout on getHAServiceState() calls in
> ObserverReaderProxy, so that we wait only up to that timeout for an NN to
> respond with its HA state. Once the timeout passes, we move on to probe the
> next NN.
>
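The proposal bounds how long the client waits for each NameNode to report its HA state, instead of inheriting _ipc.client.rpc-timeout.ms_ (120 seconds in the reporter's clusters). A minimal sketch of that idea using `ExecutorService` and `Future.get(timeout, unit)`; the `HaStateProbe` interface, the `State` enum, and the `UNKNOWN` fallback are hypothetical stand-ins, not the proxy provider's actual code or the configuration key added by the patch.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

public final class BoundedHaProbe {
  /** Hypothetical HA states; UNKNOWN marks a NameNode that did not answer in time. */
  enum State { ACTIVE, STANDBY, OBSERVER, UNKNOWN }

  /** Hypothetical stand-in for a blocking getHAServiceState() RPC. */
  interface HaStateProbe {
    State getHAServiceState() throws Exception;
  }

  private final ExecutorService executor = Executors.newCachedThreadPool(r -> {
    Thread t = new Thread(r, "ha-state-probe");
    t.setDaemon(true);                // stuck probes must not keep the JVM alive
    return t;
  });
  private final long timeoutMs;

  BoundedHaProbe(long timeoutMs) {
    this.timeoutMs = timeoutMs;
  }

  /** Waits at most timeoutMs for the probe; returns UNKNOWN on timeout or failure. */
  State probe(HaStateProbe nn) {
    Callable<State> task = nn::getHAServiceState;
    Future<State> f = executor.submit(task);
    try {
      return f.get(timeoutMs, TimeUnit.MILLISECONDS);
    } catch (TimeoutException e) {
      f.cancel(true);                 // stop waiting and interrupt the probe thread
      return State.UNKNOWN;
    } catch (InterruptedException e) {
      Thread.currentThread().interrupt();
      return State.UNKNOWN;
    } catch (ExecutionException e) {
      return State.UNKNOWN;           // the RPC itself failed
    }
  }

  public static void main(String[] args) {
    BoundedHaProbe prober = new BoundedHaProbe(200);
    List<HaStateProbe> nameNodes = new ArrayList<>();
    nameNodes.add(() -> { Thread.sleep(10_000); return State.STANDBY; }); // hung standby
    nameNodes.add(() -> State.OBSERVER);
    nameNodes.add(() -> State.ACTIVE);
    for (HaStateProbe nn : nameNodes) {
      System.out.println(prober.probe(nn)); // UNKNOWN, then OBSERVER, then ACTIVE
    }
  }
}
```

With a 200 ms bound, the hung standby costs the caller about 200 ms instead of the full RPC timeout. The trade-off is that a slow but healthy NameNode may be reported as `UNKNOWN` and skipped for that round, so the bound should sit comfortably above normal HA-state response times.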

[jira] [Commented] (HDFS-17030) Limit wait time for getHAServiceState in ObserverReaderProxy

[ https://issues.apache.org/jira/browse/HDFS-17030?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17727731#comment-17727731 ]

ASF GitHub Bot commented on HDFS-17030:

---

xinglin commented on PR #5700:

URL: https://github.com/apache/hadoop/pull/5700#issuecomment-1569309426

The one unit test failure is a false alarm; the test passed locally:

```
~/p/h/t/hadoop-hdfs-project (HDFS-17030)> mvn test -Dtest="TestBlockStatsMXBean"
[INFO] ---
[INFO]  T E S T S
[INFO] ---
[INFO] Running org.apache.hadoop.hdfs.server.blockmanagement.TestBlockStatsMXBean
[INFO] Tests run: 4, Failures: 0, Errors: 0, Skipped: 0, Time elapsed: 25.687 s - in org.apache.hadoop.hdfs.server.blockmanagement.TestBlockStatsMXBean
[INFO]
[INFO] Results:
[INFO]
[INFO] Tests run: 4, Failures: 0, Errors: 0, Skipped: 0
```

> Limit wait time for getHAServiceState in ObserverReaderProxy

>

>

> Key: HDFS-17030

> URL: https://issues.apache.org/jira/browse/HDFS-17030

> Project: Hadoop HDFS

> Issue Type: Improvement

> Components: hdfs

> Affects Versions: 3.4.0

> Reporter: Xing Lin

> Assignee: Xing Lin

> Priority: Minor

> Labels: pull-request-available

>

> When NameNode HA is enabled and a standby NN is not responsive, we have observed that requests can take a long time to be served, even though we have a healthy observer or active NN.

> Basically, when a standby is down, the RPC client would (re)try to create a socket connection to that standby for _ipc.client.connect.timeout_ * _ipc.client.connect.max.retries.on.timeouts_ before giving up. When we take a heap dump at a standby, the NN still accepts the socket connection but won't send responses to these RPC requests, so we only time out after _ipc.client.rpc-timeout.ms_. This adds significant latency. For clusters at LinkedIn, we set _ipc.client.rpc-timeout.ms_ to 120 seconds, and thus a request takes more than 2 minutes to complete when we take a heap dump at a standby. This has been causing user job failures.

> We could set _ipc.client.rpc-timeout.ms_ to a smaller value when sending getHAServiceState requests in ObserverReaderProxy (user RPC requests would still use the original value from the config). However, that would double the number of socket connections between clients and the NN (which is a deal-breaker).

> The proposal is to add a timeout on getHAServiceState() calls in ObserverReaderProxy, so that we only wait up to that timeout for an NN to respond with its HA state. Once we pass that timeout, we will move on to probe the next NN.

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org
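The proposal above (wait at most a fixed timeout for each NN's HA state, then move on to the next NN) can be sketched with a `Future` and `Future.get(timeout, unit)`. This is a minimal sketch under stated assumptions, not the code in PR #5700: the `State` enum and the per-NameNode `Callable` are hypothetical stand-ins for `HAServiceState` and the `getHAServiceState()` RPC.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

final class BoundedHaProbe {
  /** Hypothetical stand-in for HAServiceProtocol.HAServiceState. */
  enum State { ACTIVE, STANDBY, OBSERVER, UNKNOWN }

  /**
   * Probes each NameNode in order, waiting at most timeoutMs for its answer.
   * A node that times out or fails is recorded as UNKNOWN and skipped.
   */
  static List<State> probeAll(List<Callable<State>> nameNodes, long timeoutMs)
      throws InterruptedException {
    ExecutorService pool = Executors.newCachedThreadPool();
    try {
      List<State> states = new ArrayList<>();
      for (Callable<State> nn : nameNodes) {
        Future<State> answer = pool.submit(nn);
        try {
          states.add(answer.get(timeoutMs, TimeUnit.MILLISECONDS));
        } catch (TimeoutException e) {
          answer.cancel(true); // stop waiting on the hung node, move to the next one
          states.add(State.UNKNOWN);
        } catch (ExecutionException e) {
          states.add(State.UNKNOWN); // the probe itself threw
        }
      }
      return states;
    } finally {
      pool.shutdownNow();
    }
  }

  public static void main(String[] args) throws InterruptedException {
    // A standby that accepts the connection but never answers (e.g. during a heap dump).
    Callable<State> hungStandby = () -> {
      Thread.sleep(60_000);
      return State.STANDBY;
    };
    Callable<State> observer = () -> State.OBSERVER;
    List<Callable<State>> nns = List.of(hungStandby, observer);
    System.out.println(probeAll(nns, 200)); // [UNKNOWN, OBSERVER]
  }
}
```

Probing sequentially bounds the cost of each hung NameNode at `timeoutMs` instead of the full `ipc.client.rpc-timeout.ms`. Note that `cancel(true)` only interrupts the local waiting thread; a real client would presumably still need to clean up the underlying RPC call.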

[jira] [Commented] (HDFS-17030) Limit wait time for getHAServiceState in ObserverReaderProxy

[

https://issues.apache.org/jira/browse/HDFS-17030?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17727726#comment-17727726

]

ASF GitHub Bot commented on HDFS-17030:

---

hadoop-yetus commented on PR #5700:

URL: https://github.com/apache/hadoop/pull/5700#issuecomment-1569249401

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 50s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 1s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +0 :ok: | detsecrets | 0m 0s | | detect-secrets was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 17m 17s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 22m 41s | | trunk passed |
| +1 :green_heart: | compile | 5m 55s | | trunk passed with JDK Ubuntu-11.0.19+7-post-Ubuntu-0ubuntu120.04.1 |
| +1 :green_heart: | compile | 5m 35s | | trunk passed with JDK Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| +1 :green_heart: | checkstyle | 1m 20s | | trunk passed |
| +1 :green_heart: | mvnsite | 2m 11s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 46s | | trunk passed with JDK Ubuntu-11.0.19+7-post-Ubuntu-0ubuntu120.04.1 |
| +1 :green_heart: | javadoc | 2m 9s | | trunk passed with JDK Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| +1 :green_heart: | spotbugs | 6m 3s | | trunk passed |
| +1 :green_heart: | shadedclient | 25m 40s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 24s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 1m 58s | | the patch passed |
| +1 :green_heart: | compile | 5m 42s | | the patch passed with JDK Ubuntu-11.0.19+7-post-Ubuntu-0ubuntu120.04.1 |
| +1 :green_heart: | javac | 5m 42s | | the patch passed |
| +1 :green_heart: | compile | 5m 31s | | the patch passed with JDK Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| +1 :green_heart: | javac | 5m 31s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| -0 :warning: | checkstyle | 1m 10s | [/results-checkstyle-hadoop-hdfs-project.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-5700/2/artifact/out/results-checkstyle-hadoop-hdfs-project.txt) | hadoop-hdfs-project: The patch generated 1 new + 1 unchanged - 0 fixed = 2 total (was 1) |
| +1 :green_heart: | mvnsite | 1m 57s | | the patch passed |
| +1 :green_heart: | javadoc | 1m 27s | | the patch passed with JDK Ubuntu-11.0.19+7-post-Ubuntu-0ubuntu120.04.1 |
| +1 :green_heart: | javadoc | 1m 54s | | the patch passed with JDK Private Build-1.8.0_362-8u372-ga~us1-0ubuntu1~20.04-b09 |
| +1 :green_heart: | spotbugs | 5m 55s | | the patch passed |
| +1 :green_heart: | shadedclient | 25m 43s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| +1 :green_heart: | unit | 2m 18s | | hadoop-hdfs-client in the patch passed. |
| -1 :x: | unit | 226m 48s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-5700/2/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 42s | | The patch does not generate ASF License warnings. |
| | | 372m 3s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.server.datanode.TestDirectoryScanner |
| | hadoop.hdfs.server.namenode.ha.TestObserverNode |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.43 ServerAPI=1.43 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-5700/2/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/5700 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell detsecrets |
| uname | Linux 4b4b9c75eec9 4.15.0-206-generic #217-Ubuntu SMP Fri Feb 3 19:10:13 UTC 2023 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / ccf6278c9af52

[jira] [Commented] (HDFS-17027) RBF: Add supports for observer.auto-msync-period when using routers

[

https://issues.apache.org/jira/browse/HDFS-17027?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17727725#comment-17727725

]

ASF GitHub Bot commented on HDFS-17027:

---

simbadzina commented on code in PR #5693:

URL: https://github.com/apache/hadoop/pull/5693#discussion_r1210904336

##

hadoop-hdfs-project/hadoop-hdfs-client/src/main/java/org/apache/hadoop/hdfs/server/namenode/ha/RouterObserverReadProxyProvider.java:

##

@@ -0,0 +1,228 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.hadoop.hdfs.server.namenode.ha;

+

+import java.io.IOException;

+import java.lang.reflect.InvocationTargetException;

+import java.lang.reflect.Method;

+import java.lang.reflect.Proxy;

+import java.net.URI;

+import java.util.concurrent.TimeUnit;

+import org.apache.hadoop.classification.VisibleForTesting;

+import org.apache.hadoop.conf.Configuration;

+import org.apache.hadoop.hdfs.ClientGSIContext;

+import org.apache.hadoop.hdfs.protocol.ClientProtocol;

+import org.apache.hadoop.ipc.AlignmentContext;

+import org.apache.hadoop.ipc.Client;

+import org.apache.hadoop.ipc.RPC;

+import org.apache.hadoop.ipc.RpcInvocationHandler;

+import org.apache.hadoop.util.Time;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import static org.apache.hadoop.hdfs.server.namenode.ha.ObserverReadProxyProvider.AUTO_MSYNC_PERIOD_KEY_PREFIX;

+import static org.apache.hadoop.hdfs.server.namenode.ha.ObserverReadProxyProvider.AUTO_MSYNC_PERIOD_DEFAULT;

+

+/**

+ * A {@link org.apache.hadoop.io.retry.FailoverProxyProvider} implementation

+ * to support automatic msync-ing when using routers.

+ *

+ * This constructs a wrapper proxy around an internal one, and

+ * injects msync calls when necessary via the InvocationHandler.

+ */

+public class RouterObserverReadProxyProvider<T> extends AbstractNNFailoverProxyProvider<T> {

+ @VisibleForTesting

+ static final Logger LOG = LoggerFactory.getLogger(ObserverReadProxyProvider.class);

+

+ /** Client-side context for syncing with the NameNode server side. */

+ private final AlignmentContext alignmentContext;

+

+ /** The inner proxy provider used for active/standby failover. */

+ private final AbstractNNFailoverProxyProvider<T> innerProxy;

+

+ /** The proxy which redirects the internal one. */

+ private final ProxyInfo<T> wrapperProxy;

+

+ /**

+ * Whether reading from observer is enabled. If this is false, this proxy

+ * will not call msync.

+ */

+ private final boolean observerReadEnabled;

+

+ /**

+ * This adjusts how frequently this proxy provider should auto-msync to the

+ * Active NameNode, automatically performing an msync() call to the active

+ * to fetch the current transaction ID before submitting read requests to

+ * observer nodes. See HDFS-14211 for more description of this feature.

+ * If this is below 0, never auto-msync. If this is 0, perform an msync on

+ * every read operation. If this is above 0, perform an msync after this many

+ * ms have elapsed since the last msync.

+ */

+ private final long autoMsyncPeriodMs;

+

+ /**

+ * The time, in millisecond epoch, that the last msync operation was

+ * performed. This includes any implicit msync (any operation which is

+ * serviced by the Active NameNode).

+ */

+ private volatile long lastMsyncTimeMs = -1;

+

+ public RouterObserverReadProxyProvider(Configuration conf, URI uri, Class<T> xface,

+ HAProxyFactory<T> factory) {

+this(conf, uri, xface, factory, new IPFailoverProxyProvider<>(conf, uri, xface, factory));

+ }

+

+ @SuppressWarnings("unchecked")

+ public RouterObserverReadProxyProvider(Configuration conf, URI uri, Class<T> xface,

+ HAProxyFactory<T> factory, AbstractNNFailoverProxyProvider<T> failoverProxy) {

+super(conf, uri, xface, factory);

+this.alignmentContext = new ClientGSIContext();

+factory.setAlignmentContext(alignmentContext);

+this.innerProxy = failoverProxy;

+

+String proxyInfoString = "RouterObserverReadProxyProvider for " + innerProxy.getProxy();

+

+T wrappedProxy = (T) Proxy.newProxyInstance(

+RouterObserverReadInvocationHandler.class.getClassLoader(),

+new Class[
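The javadoc above describes a wrapper proxy whose InvocationHandler injects msync() before reads according to `autoMsyncPeriodMs` (below 0: never; 0: before every read; above 0: once the period has elapsed since the last msync). The following is a minimal, self-contained sketch of that pattern with `java.lang.reflect.Proxy`. The `NameService` interface and its two methods are hypothetical stand-ins for `ClientProtocol`, and this is not the PR's `RouterObserverReadInvocationHandler`.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.List;

final class MsyncInjectingProxy {
  /** Hypothetical, cut-down stand-in for ClientProtocol. */
  interface NameService {
    String getFileInfo(String path);
    void msync();
  }

  /** Period semantics from the javadoc: < 0 never, 0 before every read, > 0 once elapsed. */
  static boolean shouldMsync(long periodMs, long lastMsyncMs, long nowMs) {
    if (periodMs < 0) {
      return false;
    }
    if (periodMs == 0) {
      return true;
    }
    return lastMsyncMs < 0 || nowMs - lastMsyncMs > periodMs;
  }

  /** Wraps inner in a dynamic proxy that calls msync() before reads when one is due. */
  static NameService wrap(NameService inner, long periodMs) {
    InvocationHandler handler = new InvocationHandler() {
      private long lastMsyncMs = -1;

      @Override
      public synchronized Object invoke(Object proxy, Method method, Object[] args)
          throws Throwable {
        boolean isMsync = method.getName().equals("msync");
        boolean isObjectMethod = method.getDeclaringClass() == Object.class;
        long now = System.currentTimeMillis();
        if (!isMsync && !isObjectMethod && shouldMsync(periodMs, lastMsyncMs, now)) {
          inner.msync();
          lastMsyncMs = now;
        }
        try {
          Object result = method.invoke(inner, args);
          if (isMsync) {
            lastMsyncMs = now; // an explicit msync also resets the clock
          }
          return result;
        } catch (InvocationTargetException e) {
          throw e.getCause(); // rethrow the real failure, not the reflection wrapper
        }
      }
    };
    return (NameService) Proxy.newProxyInstance(
        NameService.class.getClassLoader(), new Class<?>[] {NameService.class}, handler);
  }

  public static void main(String[] args) {
    List<String> calls = new ArrayList<>();
    NameService backend = new NameService() {
      @Override public String getFileInfo(String path) { calls.add("read " + path); return path; }
      @Override public void msync() { calls.add("msync"); }
    };
    NameService client = wrap(backend, 60_000);
    client.getFileInfo("/a");
    client.getFileInfo("/b");
    System.out.println(calls); // [msync, read /a, read /b]
  }
}
```

With a 60 s period only the first read pays for an msync; with a period of 0 every read is preceded by one.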

[jira] [Commented] (HDFS-17026) RBF: NamenodeHeartbeatService should update JMX report with configurable frequency

[

https://issues.apache.org/jira/browse/HDFS-17026?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17727712#comment-17727712

]

ASF GitHub Bot commented on HDFS-17026:

---

hchaverri commented on PR #5691:

URL: https://github.com/apache/hadoop/pull/5691#issuecomment-1569141987

@Hexiaoqiao @virajjasani @mkuchenbecker we have a few approvals already. Are

you ok with closing this PR?

> RBF: NamenodeHeartbeatService should update JMX report with configurable

> frequency

> --

>

> Key: HDFS-17026

> URL: https://issues.apache.org/jira/browse/HDFS-17026

> Project: Hadoop HDFS

> Issue Type: Improvement

> Components: rbf

>Reporter: Hector Sandoval Chaverri

>Assignee: Hector Sandoval Chaverri

>Priority: Major

> Labels: pull-request-available

> Attachments: HDFS-17026-branch-3.3.patch

>

>

> The NamenodeHeartbeatService currently calls each Namenode's JMX

> endpoint every time it wakes up (every 5 seconds by default).

> In a cluster with 40 routers, we have observed service degradation on some of

> the Namenodes, since the JMX request obtains Datanode status and blocks

> other RPC requests. However, JMX report data doesn't seem to be used for

> critical paths on the routers.

> We should make the NamenodeHeartbeatService configurable so it updates the JMX

> reports at a lower frequency than the Namenode states, or disables the

> reports completely.

> The class labels the JMX request as optional even though there is no

> implementation to turn it off:

> {noformat}

> // Read the stats from JMX (optional)

> updateJMXParameters(webAddress, report);{noformat}
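One way to read the proposal: keep refreshing the Namenode state on every heartbeat, but fetch the JMX report only when a separate, configurable period has elapsed, with a negative value disabling it. The sketch below is a hypothetical illustration of that throttle; the class and the `jmxPeriodMs` knob are invented for the example and are not the configuration key or code from PR #5691.

```java
final class JmxReportThrottle {
  /** Hypothetical setting: < 0 disables JMX reports, 0 fetches on every heartbeat. */
  private final long jmxPeriodMs;
  private boolean fetchedOnce = false;
  private long lastFetchMs;

  JmxReportThrottle(long jmxPeriodMs) {
    this.jmxPeriodMs = jmxPeriodMs;
  }

  /** Called on every heartbeat; returns true when the JMX report should be refreshed too. */
  boolean shouldFetchJmx(long nowMs) {
    if (jmxPeriodMs < 0) {
      return false;
    }
    if (!fetchedOnce || nowMs - lastFetchMs >= jmxPeriodMs) {
      fetchedOnce = true;
      lastFetchMs = nowMs;
      return true;
    }
    return false;
  }

  /** Counts JMX fetches over a run of heartbeats spaced heartbeatMs apart. */
  static int countFetches(long jmxPeriodMs, long heartbeatMs, long durationMs) {
    JmxReportThrottle throttle = new JmxReportThrottle(jmxPeriodMs);
    int fetches = 0;
    for (long t = 0; t <= durationMs; t += heartbeatMs) {
      // The Namenode HA state would still be refreshed on every iteration here.
      if (throttle.shouldFetchJmx(t)) {
        fetches++;
      }
    }
    return fetches;
  }

  public static void main(String[] args) {
    // 5 s heartbeats over one minute = 13 heartbeats.
    System.out.println(countFetches(0, 5_000, 60_000));      // 13: current behaviour
    System.out.println(countFetches(30_000, 5_000, 60_000)); // 3: at t = 0, 30 s, 60 s
    System.out.println(countFetches(-1, 5_000, 60_000));     // 0: JMX disabled
  }
}
```

With 40 routers, moving from a 5 s to a 30 s JMX period would cut the JMX load on each Namenode by roughly the same factor, while HA state still refreshes every heartbeat.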

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Assigned] (HDFS-17026) RBF: NamenodeHeartbeatService should update JMX report with configurable frequency

[

https://issues.apache.org/jira/browse/HDFS-17026?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Íñigo Goiri reassigned HDFS-17026:

--

Assignee: Hector Sandoval Chaverri

> RBF: NamenodeHeartbeatService should update JMX report with configurable

> frequency

> --

>

> Key: HDFS-17026

> URL: https://issues.apache.org/jira/browse/HDFS-17026

> Project: Hadoop HDFS

> Issue Type: Improvement

> Components: rbf

>Reporter: Hector Sandoval Chaverri

>Assignee: Hector Sandoval Chaverri

>Priority: Major

> Labels: pull-request-available

> Attachments: HDFS-17026-branch-3.3.patch

>

>

> The NamenodeHeartbeatService currently calls each Namenode's JMX

> endpoint every time it wakes up (every 5 seconds by default).

> In a cluster with 40 routers, we have observed service degradation on some of

> the Namenodes, since the JMX request obtains Datanode status and blocks

> other RPC requests. However, JMX report data doesn't seem to be used for

> critical paths on the routers.

> We should make the NamenodeHeartbeatService configurable so it updates the JMX

> reports at a lower frequency than the Namenode states, or disables the

> reports completely.

> The class labels the JMX request as optional even though there is no

> implementation to turn it off:

> {noformat}

> // Read the stats from JMX (optional)

> updateJMXParameters(webAddress, report);{noformat}

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Commented] (HDFS-17027) RBF: Add supports for observer.auto-msync-period when using routers

[

https://issues.apache.org/jira/browse/HDFS-17027?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17727694#comment-17727694

]

ASF GitHub Bot commented on HDFS-17027:

---

hchaverri commented on code in PR #5693:

URL: https://github.com/apache/hadoop/pull/5693#discussion_r1210770190

##

hadoop-hdfs-project/hadoop-hdfs-client/src/main/java/org/apache/hadoop/hdfs/server/namenode/ha/RouterObserverReadProxyProvider.java:

##

@@ -0,0 +1,228 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.hadoop.hdfs.server.namenode.ha;

+

+import java.io.IOException;

+import java.lang.reflect.InvocationTargetException;

+import java.lang.reflect.Method;

+import java.lang.reflect.Proxy;

+import java.net.URI;

+import java.util.concurrent.TimeUnit;

+import org.apache.hadoop.classification.VisibleForTesting;

+import org.apache.hadoop.conf.Configuration;

+import org.apache.hadoop.hdfs.ClientGSIContext;

+import org.apache.hadoop.hdfs.protocol.ClientProtocol;

+import org.apache.hadoop.ipc.AlignmentContext;

+import org.apache.hadoop.ipc.Client;

+import org.apache.hadoop.ipc.RPC;

+import org.apache.hadoop.ipc.RpcInvocationHandler;

+import org.apache.hadoop.util.Time;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import static org.apache.hadoop.hdfs.server.namenode.ha.ObserverReadProxyProvider.AUTO_MSYNC_PERIOD_KEY_PREFIX;

+import static org.apache.hadoop.hdfs.server.namenode.ha.ObserverReadProxyProvider.AUTO_MSYNC_PERIOD_DEFAULT;

+

+/**

+ * A {@link org.apache.hadoop.io.retry.FailoverProxyProvider} implementation

+ * to support automatic msync-ing when using routers.

+ *

+ * This constructs a wrapper proxy around an internal one, and

+ * injects msync calls when necessary via the InvocationHandler.

+ */

+public class RouterObserverReadProxyProvider<T> extends AbstractNNFailoverProxyProvider<T> {

+ @VisibleForTesting

+ static final Logger LOG = LoggerFactory.getLogger(ObserverReadProxyProvider.class);

+

+ /** Client-side context for syncing with the NameNode server side. */

+ private final AlignmentContext alignmentContext;

+

+ /** The inner proxy provider used for active/standby failover. */

+ private final AbstractNNFailoverProxyProvider<T> innerProxy;

+

+ /** The proxy which redirects the internal one. */

+ private final ProxyInfo<T> wrapperProxy;

+

+ /**

+ * Whether reading from observer is enabled. If this is false, this proxy

+ * will not call msync.

+ */

+ private final boolean observerReadEnabled;

+

+ /**

+ * This adjusts how frequently this proxy provider should auto-msync to the

+ * Active NameNode, automatically performing an msync() call to the active

+ * to fetch the current transaction ID before submitting read requests to

+ * observer nodes. See HDFS-14211 for more description of this feature.

+ * If this is below 0, never auto-msync. If this is 0, perform an msync on

+ * every read operation. If this is above 0, perform an msync after this many

+ * ms have elapsed since the last msync.

+ */

+ private final long autoMsyncPeriodMs;

+

+ /**

+ * The time, in millisecond epoch, that the last msync operation was

+ * performed. This includes any implicit msync (any operation which is

+ * serviced by the Active NameNode).

+ */

+ private volatile long lastMsyncTimeMs = -1;

+

+ public RouterObserverReadProxyProvider(Configuration conf, URI uri, Class<T> xface,

+ HAProxyFactory<T> factory) {

+this(conf, uri, xface, factory, new IPFailoverProxyProvider<>(conf, uri, xface, factory));

+ }

+

+ @SuppressWarnings("unchecked")

+ public RouterObserverReadProxyProvider(Configuration conf, URI uri, Class<T> xface,

+ HAProxyFactory<T> factory, AbstractNNFailoverProxyProvider<T> failoverProxy) {

+super(conf, uri, xface, factory);

+this.alignmentContext = new ClientGSIContext();

+factory.setAlignmentContext(alignmentContext);

+this.innerProxy = failoverProxy;

+

+String proxyInfoString = "RouterObserverReadProxyProvider for " + innerProxy.getProxy();

+

+T wrappedProxy = (T) Proxy.newProxyInstance(

+RouterObserverReadInvocationHandler.class.getClassLoader(),

+new Class[]

[jira] [Commented] (HDFS-16959) RBF: State store cache loading metrics

[ https://issues.apache.org/jira/browse/HDFS-16959?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17727679#comment-17727679 ]

ASF GitHub Bot commented on HDFS-16959:

---

MayankSinghParmar commented on PR #5497:

URL: https://github.com/apache/hadoop/pull/5497#issuecomment-1568984267

I want to implement a new cache replacement algorithm in HDFS, but I don't know which module to use and modify. Can anyone please help me find the modules and functions that I need to override to add my own cache replacement algorithm to HDFS?

> RBF: State store cache loading metrics

> --

>

> Key: HDFS-16959

> URL: https://issues.apache.org/jira/browse/HDFS-16959

> Project: Hadoop HDFS

> Issue Type: Improvement

> Reporter: Viraj Jasani

> Assignee: Viraj Jasani

> Priority: Major

> Labels: pull-request-available

> Fix For: 3.4.0

>

>

> With an increasing number of state store records (like mount points), it would be good to be able to get cache loading metrics, such as the average time for a cache load during refresh, the number of times the cache is loaded, etc.

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org
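The metrics the issue asks for (how many times the cache is loaded and the average load time) boil down to a counter plus a running total. A minimal plain-Java sketch follows; `CacheLoadMetrics` and its methods are hypothetical, and Hadoop itself would more likely expose such values through its metrics2 library (for example a `MutableRate`, which tracks an operation count and an average time).

```java
import java.util.function.Supplier;

final class CacheLoadMetrics {
  private long loads;
  private long totalLoadMs;

  /** Records one cache load that took elapsedMs. */
  synchronized void record(long elapsedMs) {
    loads++;
    totalLoadMs += elapsedMs;
  }

  synchronized long loadCount() {
    return loads;
  }

  /** Average load time in ms, or 0 if nothing has been loaded yet. */
  synchronized double averageLoadMs() {
    return loads == 0 ? 0.0 : (double) totalLoadMs / loads;
  }

  /** Runs a cache refresh and records how long it took, even if it throws. */
  <T> T timedLoad(Supplier<T> loader) {
    long start = System.nanoTime();
    try {
      return loader.get();
    } finally {
      record((System.nanoTime() - start) / 1_000_000);
    }
  }

  public static void main(String[] args) {
    CacheLoadMetrics metrics = new CacheLoadMetrics();
    metrics.record(40);
    metrics.record(60);
    int mountPoints = metrics.timedLoad(() -> 3); // stand-in for reloading state store records
    System.out.println(metrics.loadCount()); // 3
    System.out.println(mountPoints);         // 3
  }
}
```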

[jira] [Commented] (HDFS-16959) RBF: State store cache loading metrics

[ https://issues.apache.org/jira/browse/HDFS-16959?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17727675#comment-17727675 ]

ASF GitHub Bot commented on HDFS-16959:

---

MayankSinghParmar commented on PR #5497:

URL: https://github.com/apache/hadoop/pull/5497#issuecomment-1568977449

I want to implement a new cache replacement algorithm in HDFS, but I don't know which module to use and modify. Can anyone please help me find the modules and functions that I need to override to add my own cache replacement algorithm to HDFS?

> RBF: State store cache loading metrics

> --

>

> Key: HDFS-16959

> URL: https://issues.apache.org/jira/browse/HDFS-16959

> Project: Hadoop HDFS

> Issue Type: Improvement

> Reporter: Viraj Jasani

> Assignee: Viraj Jasani

> Priority: Major

> Labels: pull-request-available

> Fix For: 3.4.0

>

>

> With an increasing number of state store records (like mount points), it would be good to be able to get cache loading metrics, such as the average time for a cache load during refresh, the number of times the cache is loaded, etc.

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Commented] (HDFS-17000) Potential infinite loop in TestDFSStripedOutputStreamUpdatePipeline.testDFSStripedOutputStreamUpdatePipeline

[

https://issues.apache.org/jira/browse/HDFS-17000?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17727669#comment-17727669

]

ASF GitHub Bot commented on HDFS-17000:

---

MayankSinghParmar commented on PR #5699:

URL: https://github.com/apache/hadoop/pull/5699#issuecomment-1568968901

I want to implement a new cache replacement algorithm in HDFS, but I don't

know which module to use and modify. Can anyone please help me find

the modules and functions that I need to override to add my own cache

replacement algorithm to HDFS?

> Potential infinite loop in

> TestDFSStripedOutputStreamUpdatePipeline.testDFSStripedOutputStreamUpdatePipeline

>

>

> Key: HDFS-17000

> URL: https://issues.apache.org/jira/browse/HDFS-17000

> Project: Hadoop HDFS

> Issue Type: Bug

>Reporter: Marcono1234

>Priority: Major

> Labels: pull-request-available

>

> The method

> {{TestDFSStripedOutputStreamUpdatePipeline.testDFSStripedOutputStreamUpdatePipeline}}

> contains the following line:

> {code}

> for (int i = 0; i < Long.MAX_VALUE; i++) {

> {code}

> [GitHub source

> link|https://github.com/apache/hadoop/blob/4ee92efb73a90ae7f909e96de242d216ad6878b2/hadoop-hdfs-project/hadoop-hdfs/src/test/java/org/apache/hadoop/hdfs/TestDFSStripedOutputStreamUpdatePipeline.java#L48]

> Because {{i}} is an {{int}} the condition {{i < Long.MAX_VALUE}} will always

> be true and {{i}} will simply overflow.
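The overflow is easy to reproduce without running the loop: comparing an `int` against `Long.MAX_VALUE` widens the `int` to `long`, and since no `int` value can reach `Long.MAX_VALUE`, the condition is always true, while `i++` past `Integer.MAX_VALUE` silently wraps to `Integer.MIN_VALUE`. A small illustration (the helper names are hypothetical):

```java
final class IntLoopOverflow {
  /** The comparison from the test: i is widened to long, so it is true for every int. */
  static boolean loopCondition(int i) {
    return i < Long.MAX_VALUE;
  }

  /** What i++ does at the top of the int range: it wraps to Integer.MIN_VALUE. */
  static int increment(int i) {
    return i + 1;
  }

  public static void main(String[] args) {
    int i = Integer.MAX_VALUE;
    System.out.println(loopCondition(i)); // true
    i = increment(i);
    System.out.println(i);                // -2147483648
    System.out.println(loopCondition(i)); // true, so the loop condition never ends the loop
  }
}
```

If the test is meant to loop until something inside the body breaks out, `while (true)` with an explicit `break` (or a bounded `int` count) states that intent without relying on overflow; which fix the PR actually chose is not shown here.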

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Updated] (HDFS-17030) Limit wait time for getHAServiceState in ObserverReaderProxy

[ https://issues.apache.org/jira/browse/HDFS-17030?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Xing Lin updated HDFS-17030: Description: When namenode HA is enabled and a standby NN is not responsible, we have observed it would take a long time to serve a request, even though we have a healthy observer or active NN. Basically, when a standby is down, the RPC client would (re)try to create socket connection to that standby for _ipc.client.connect.timeout_ _* ipc.client.connect.max.retries.on.timeouts_ before giving up. When we take a heap dump at a standby, the NN still accepts the socket connection but it won't send responses to these RPC requests and we would timeout after _ipc.client.rpc-timeout.ms._ This adds a significantly latency. For clusters at Linkedin, we set _ipc.client.rpc-timeout.ms_ to 120 seconds and thus a request takes more than 2 mins to complete when we take a heap dump at a standby. This has been causing user job failures. We could set _ipc.client.rpc-timeout.ms to_ a smaller value when sending getHAServiceState requests in ObserverReaderProxy (for user rpc requests, we still use the original value from the config). However, that would double the socket connection between clients and the NN (which is a deal-breaker). The proposal is to add a timeout on getHAServiceState() calls in ObserverReaderProxy and we will only wait for the timeout for an NN to respond its HA state. Once we pass that timeout, we will move on to the next NN. was: When namenode HA is enabled and a standby NN is not responsible, we have observed it would take a long time to serve a request, even though we have a healthy observer or active NN. Basically, when a standby is down, the RPC client would (re)try to create socket connection to that standby for _ipc.client.connect.timeout_ _* ipc.client.connect.max.retries.on.timeouts_ before giving up. 
When we take a heap dump at a standby, the NN still accepts the socket connection but does not send responses to these RPC requests, so the client only times out after _ipc.client.rpc-timeout.ms_. This adds significant latency. For clusters at LinkedIn, we set _ipc.client.rpc-timeout.ms_ to 120 seconds, so a request takes more than 2 minutes to complete when we take a heap dump at a standby. This has been causing user job failures.

We could set _ipc.client.rpc-timeout.ms_ to a smaller value when sending getHAServiceState requests in ObserverReaderProxy (user RPC requests would still use the original value from the config). However, that would double the number of socket connections between clients and the NN.

The proposal is to add a timeout on getHAServiceState() calls in ObserverReaderProxy, so that we only wait up to that timeout for an NN to respond with its HA state. Once the timeout has passed, we will move on to the next NN.

> Limit wait time for getHAServiceState in ObserverReaderProxy
>
> Key: HDFS-17030
> URL: https://issues.apache.org/jira/browse/HDFS-17030
> Project: Hadoop HDFS
> Issue Type: Improvement
> Components: hdfs
> Affects Versions: 3.4.0
> Reporter: Xing Lin
> Assignee: Xing Lin
> Priority: Minor
> Labels: pull-request-available
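The delays described in the issue come down to simple arithmetic. The Java sketch below models them under stated assumptions: the 120 s rpc timeout is the LinkedIn value quoted above, while the 20 s connect timeout, the retry count of 45 and the 2 s probe bound are assumed example values. `ProbeLatency` and its methods are hypothetical, not Hadoop code, and they follow the issue's simplified "connect timeout * retries" description rather than the exact retry loop of Hadoop's IPC client.

```java
import java.time.Duration;

/**
 * Hypothetical back-of-the-envelope model of the delays described in HDFS-17030.
 * Follows the issue's simplified description; not taken from Hadoop's IPC client.
 */
class ProbeLatency {

    /** Standby is down: the client keeps retrying the connect (issue's formula). */
    static Duration connectRetryCost(Duration connectTimeout, int maxRetriesOnTimeouts) {
        return connectTimeout.multipliedBy(maxRetriesOnTimeouts);
    }

    /** Standby accepts the socket (e.g. during a heap dump) but never replies. */
    static Duration silentServerCost(Duration rpcTimeout) {
        return rpcTimeout;
    }

    /** With a bounded getHAServiceState() wait, a probe costs at most probeTimeout. */
    static Duration boundedCost(Duration unboundedCost, Duration probeTimeout) {
        return unboundedCost.compareTo(probeTimeout) <= 0 ? unboundedCost : probeTimeout;
    }

    public static void main(String[] args) {
        Duration rpcTimeout = Duration.ofSeconds(120);    // LinkedIn value from the issue
        Duration connectTimeout = Duration.ofSeconds(20); // assumed example value
        int maxRetriesOnTimeouts = 45;                    // assumed example value
        Duration probeTimeout = Duration.ofSeconds(2);    // hypothetical probe bound

        Duration down = connectRetryCost(connectTimeout, maxRetriesOnTimeouts);
        Duration silent = silentServerCost(rpcTimeout);
        System.out.println("standby down:          " + down.getSeconds() + " s");
        System.out.println("standby silent:        " + silent.getSeconds() + " s");
        System.out.println("silent, bounded probe: "
                + boundedCost(silent, probeTimeout).getSeconds() + " s");
    }
}
```

The point of the model is that both unbounded costs scale with client-wide settings shared by user RPCs, whereas the bounded probe cost depends only on a separate, probe-specific timeout.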

[jira] [Updated] (HDFS-17030) Limit wait time for getHAServiceState in ObserverReaderProxy

[ https://issues.apache.org/jira/browse/HDFS-17030?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Xing Lin updated HDFS-17030:

Description:

When NameNode HA is enabled and a standby NN is unresponsive, we have observed that requests can take a long time to be served, even though a healthy observer or active NN is available.

Basically, when a standby is down, the RPC client (re)tries to create a socket connection to that standby for _ipc.client.connect.timeout_ * _ipc.client.connect.max.retries.on.timeouts_ before giving up.

When we take a heap dump at a standby, the NN still accepts the socket connection but does not send responses to these RPC requests, so the client only times out after _ipc.client.rpc-timeout.ms_. This adds significant latency. For clusters at LinkedIn, we set _ipc.client.rpc-timeout.ms_ to 120 seconds, so a request takes more than 2 minutes to complete when we take a heap dump at a standby. This has been causing user job failures.

We could set _ipc.client.rpc-timeout.ms_ to a smaller value when sending getHAServiceState requests in ObserverReaderProxy (user RPC requests would still use the original value from the config). However, that would double the number of socket connections between clients and the NN (which is a deal-breaker).

The proposal is to add a timeout on getHAServiceState() calls in ObserverReaderProxy, so that we only wait up to that timeout for an NN to respond with its HA state. Once the timeout has passed, we will move on to probe the next NN.

was:

When NameNode HA is enabled and a standby NN is unresponsive, we have observed that requests can take a long time to be served, even though a healthy observer or active NN is available.

Basically, when a standby is down, the RPC client (re)tries to create a socket connection to that standby for _ipc.client.connect.timeout_ * _ipc.client.connect.max.retries.on.timeouts_ before giving up.
When we take a heap dump at a standby, the NN still accepts the socket connection but does not send responses to these RPC requests, so the client only times out after _ipc.client.rpc-timeout.ms_. This adds significant latency. For clusters at LinkedIn, we set _ipc.client.rpc-timeout.ms_ to 120 seconds, so a request takes more than 2 minutes to complete when we take a heap dump at a standby. This has been causing user job failures.

We could set _ipc.client.rpc-timeout.ms_ to a smaller value when sending getHAServiceState requests in ObserverReaderProxy (user RPC requests would still use the original value from the config). However, that would double the number of socket connections between clients and the NN (which is a deal-breaker).

The proposal is to add a timeout on getHAServiceState() calls in ObserverReaderProxy, so that we only wait up to that timeout for an NN to respond with its HA state. Once the timeout has passed, we will move on to the next NN.

> Limit wait time for getHAServiceState in ObserverReaderProxy
>
> Key: HDFS-17030
> URL: https://issues.apache.org/jira/browse/HDFS-17030
> Project: Hadoop HDFS
> Issue Type: Improvement
> Components: hdfs
> Affects Versions: 3.4.0
> Reporter: Xing Lin
> Assignee: Xing Lin
> Priority: Minor
> Labels: pull-request-available
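One way the proposed bounded wait could be realized is sketched below in Java: each getHAServiceState() probe runs on an executor, and the caller waits on `Future.get(timeout, unit)` only up to a fixed bound, treating a timeout as "state unknown" and moving on to probe the next NN. This is a minimal sketch under assumptions, not the HDFS-17030 patch: `BoundedHAProbe`, `NameNodeProbe` and the `HAState` enum are hypothetical stand-ins (HAState only loosely mirrors Hadoop's HA service states).

```java
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

/** Hypothetical sketch: bound the wait on each NN's HA-state probe. */
class BoundedHAProbe {

    /** Hypothetical stand-in for an NN's HA service state. */
    enum HAState { ACTIVE, STANDBY, OBSERVER, UNKNOWN }

    /** Hypothetical stand-in for one NN's getHAServiceState() RPC. */
    @FunctionalInterface
    interface NameNodeProbe {
        HAState getHAServiceState() throws Exception;
    }

    // Daemon threads so that a probe stuck on a silent NN never blocks JVM exit.
    private final ExecutorService executor = Executors.newCachedThreadPool(r -> {
        Thread t = new Thread(r, "ha-state-probe");
        t.setDaemon(true);
        return t;
    });
    private final long timeoutMs;

    BoundedHAProbe(long timeoutMs) {
        this.timeoutMs = timeoutMs;
    }

    /** Waits at most timeoutMs for one NN; a timeout or failure yields UNKNOWN. */
    HAState probe(NameNodeProbe nn) {
        Callable<HAState> call = nn::getHAServiceState;
        Future<HAState> future = executor.submit(call);
        try {
            return future.get(timeoutMs, TimeUnit.MILLISECONDS);
        } catch (TimeoutException e) {
            future.cancel(true); // stop waiting on this NN and interrupt its probe thread
            return HAState.UNKNOWN;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the caller's interrupt status
            return HAState.UNKNOWN;
        } catch (ExecutionException e) {
            return HAState.UNKNOWN; // the probe itself threw
        }
    }

    /** Index of the first NN reporting OBSERVER, or -1; slow NNs are skipped. */
    int firstObserver(List<NameNodeProbe> nns) {
        for (int i = 0; i < nns.size(); i++) {
            if (probe(nns.get(i)) == HAState.OBSERVER) {
                return i;
            }
        }
        return -1; // no observer answered in time; a caller would fall back to the active NN
    }
}
```

With this shape, a standby that accepts the connection but never answers costs at most `timeoutMs` per probe instead of the full _ipc.client.rpc-timeout.ms_, and no extra connection settings are needed. Note that `cancel(true)` only interrupts the probe thread; whether an in-flight RPC actually aborts on interrupt depends on the RPC client.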

[jira] [Updated] (HDFS-17030) Limit wait time for getHAServiceState in ObserverReaderProxy