[jira] [Work logged] (HDFS-16158) Discover datanodes with unbalanced volume usage by the standard deviation

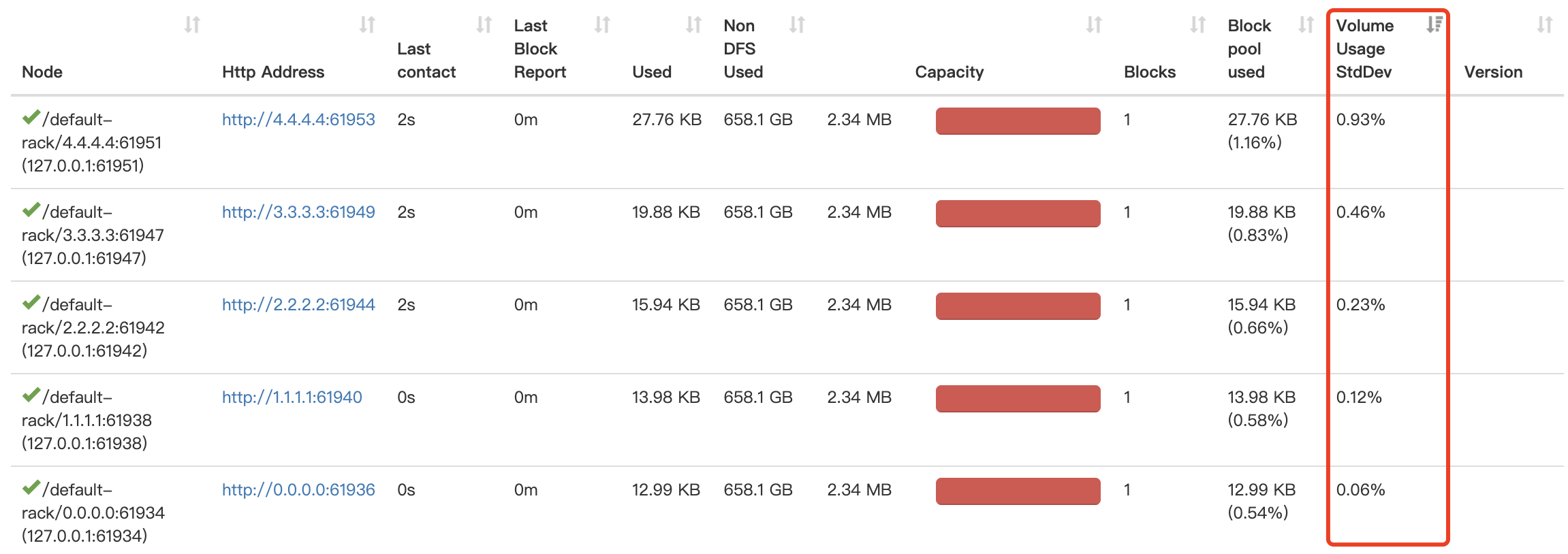

[ https://issues.apache.org/jira/browse/HDFS-16158?focusedWorklogId=645126&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-645126 ]

ASF GitHub Bot logged work on HDFS-16158:

Author: ASF GitHub Bot
Created on: 01/Sep/21 09:39
Start Date: 01/Sep/21 09:39
Worklog Time Spent: 10m
Work Description: tomscut commented on pull request #3288:
URL: https://github.com/apache/hadoop/pull/3288#issuecomment-909761516

Hi @jojochuang, this PR has a lot of changes, which can make rolling updates difficult. I re-implemented this feature and will submit another PR later. Thank you for your review.

--
This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the
URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For queries about this service, please contact Infrastructure at:
us...@infra.apache.org

Issue Time Tracking
---
Worklog Id: (was: 645126)
Time Spent: 2h 10m (was: 2h)

> Discover datanodes with unbalanced volume usage by the standard deviation
> --
>
> Key: HDFS-16158
> URL: https://issues.apache.org/jira/browse/HDFS-16158
> Project: Hadoop HDFS
> Issue Type: New Feature
> Reporter: tomscut
> Assignee: tomscut
> Priority: Major
> Labels: pull-request-available
> Attachments: image-2021-08-11-10-14-58-430.png
>
> Time Spent: 2h 10m
> Remaining Estimate: 0h
>
> Discover datanodes with unbalanced volume usage by the standard deviation
> In some scenarios, datanode disk usage can become unbalanced:
> 1. A damaged disk is repaired and brought back online.
> 2. Disks are added to some datanodes.
> 3. Some disks are damaged, resulting in slow data writing.
> 4. A custom volume choosing policy is used.
> In the case of unbalanced disk usage, a sudden increase in datanode write
> traffic may result in busy disk I/O with low volume usage, resulting in
> decreased throughput across datanodes.
> In this case, we need to find these nodes in time to do diskBalance, or other
> processing. Based on the volume usage of each datanode, we can calculate the
> standard deviation of the volume usage. The more unbalanced the volumes, the
> higher the standard deviation.
> To prevent the namenode from being too busy, we can calculate the standard
> deviation on the datanode side, transmit it to the namenode through heartbeat,
> and display the result on the namenode web UI. We can then sort directly on
> the web UI to find the nodes whose volume usage is unbalanced.

--
This message was sent by Atlassian Jira
(v8.3.4#803005)

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org
For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org
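The final step of the issue description, sorting datanodes by the standard deviation they report, could be sketched roughly as below. This is only an illustration: `UnbalancedNodeRanking`, `NodeUsage` and `mostUnbalancedFirst` are hypothetical names, not classes or methods from HDFS or from the pull request, and the real feature sorts in the namenode web UI rather than in code like this.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Hypothetical illustration; these types are not HDFS classes. */
public final class UnbalancedNodeRanking {

  /** Minimal stand-in for the per-datanode value carried by a heartbeat. */
  public static final class NodeUsage {
    public final String hostname;
    public final float volumeUsageStdDev;

    public NodeUsage(String hostname, float volumeUsageStdDev) {
      this.hostname = hostname;
      this.volumeUsageStdDev = volumeUsageStdDev;
    }
  }

  private UnbalancedNodeRanking() {
  }

  /** Returns a sorted copy with the most unbalanced datanodes first. */
  public static List<NodeUsage> mostUnbalancedFirst(List<NodeUsage> nodes) {
    List<NodeUsage> sorted = new ArrayList<>(nodes);
    // Higher standard deviation means more uneven volume usage.
    sorted.sort(Comparator
        .comparingDouble((NodeUsage n) -> n.volumeUsageStdDev)
        .reversed());
    return sorted;
  }
}
```

The input list is left untouched, so a caller could keep the original heartbeat order alongside the ranked view.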

[jira] [Work logged] (HDFS-16158) Discover datanodes with unbalanced volume usage by the standard deviation

[ https://issues.apache.org/jira/browse/HDFS-16158?focusedWorklogId=645096&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-645096 ]

ASF GitHub Bot logged work on HDFS-16158:

Author: ASF GitHub Bot
Created on: 01/Sep/21 09:36
Start Date: 01/Sep/21 09:36
Worklog Time Spent: 10m
Work Description: tomscut closed pull request #3288:
URL: https://github.com/apache/hadoop/pull/3288

Issue Time Tracking
---
Worklog Id: (was: 645096)
Time Spent: 2h (was: 1h 50m)

[jira] [Work logged] (HDFS-16158) Discover datanodes with unbalanced volume usage by the standard deviation

[ https://issues.apache.org/jira/browse/HDFS-16158?focusedWorklogId=644691&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-644691 ]

ASF GitHub Bot logged work on HDFS-16158:

Author: ASF GitHub Bot
Created on: 01/Sep/21 00:38
Start Date: 01/Sep/21 00:38
Worklog Time Spent: 10m
Work Description: tomscut closed pull request #3288:
URL: https://github.com/apache/hadoop/pull/3288

Issue Time Tracking
---
Worklog Id: (was: 644691)
Time Spent: 1h 50m (was: 1h 40m)

[jira] [Work logged] (HDFS-16158) Discover datanodes with unbalanced volume usage by the standard deviation

[ https://issues.apache.org/jira/browse/HDFS-16158?focusedWorklogId=644690&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-644690 ]

ASF GitHub Bot logged work on HDFS-16158:

Author: ASF GitHub Bot
Created on: 01/Sep/21 00:37
Start Date: 01/Sep/21 00:37
Worklog Time Spent: 10m
Work Description: tomscut commented on pull request #3288:
URL: https://github.com/apache/hadoop/pull/3288#issuecomment-909761516

Hi @jojochuang, this PR has a lot of changes, which can make rolling updates difficult. I re-implemented this feature and will submit another PR later. Thank you for your review.

Issue Time Tracking
---
Worklog Id: (was: 644690)
Time Spent: 1h 40m (was: 1.5h)

[jira] [Work logged] (HDFS-16158) Discover datanodes with unbalanced volume usage by the standard deviation

[ https://issues.apache.org/jira/browse/HDFS-16158?focusedWorklogId=638618&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-638618 ]

ASF GitHub Bot logged work on HDFS-16158:

Author: ASF GitHub Bot
Created on: 17/Aug/21 11:38
Start Date: 17/Aug/21 11:38
Worklog Time Spent: 10m
Work Description: tomscut commented on pull request #3288:
URL: https://github.com/apache/hadoop/pull/3288#issuecomment-900221653

Thanks @jojochuang for your review. I will fix these problems ASAP.

Issue Time Tracking
---
Worklog Id: (was: 638618)
Time Spent: 1.5h (was: 1h 20m)

[jira] [Work logged] (HDFS-16158) Discover datanodes with unbalanced volume usage by the standard deviation

[ https://issues.apache.org/jira/browse/HDFS-16158?focusedWorklogId=638617&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-638617 ]

ASF GitHub Bot logged work on HDFS-16158:

Author: ASF GitHub Bot
Created on: 17/Aug/21 11:29
Start Date: 17/Aug/21 11:29
Worklog Time Spent: 10m
Work Description: jojochuang commented on a change in pull request #3288:
URL: https://github.com/apache/hadoop/pull/3288#discussion_r690277334

##########
File path: hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/datanode/fsdataset/impl/FsDatasetImpl.java
##########
@@ -3184,6 +3191,26 @@ public void shutdownBlockPool(String bpid) {
     return info;
   }
 
+  @Override
+  public float getVolumeUsageStdDev() {
+    Collection<VolumeInfo> volumeInfos = getVolumeInfo();
+    ArrayList<Float> usages = new ArrayList<>();
+    float totalDfsUsed = 0;
+    float dev = 0;
+    for (VolumeInfo v : volumeInfos) {
+      usages.add(v.volumeUsagePercent);
+      totalDfsUsed += v.volumeUsagePercent;
+    }
+
+    totalDfsUsed /= volumeInfos.size();
+    Collections.sort(usages);
+    for (Float usage : usages) {
+      dev += (usage - totalDfsUsed) * (usage - totalDfsUsed);
+    }
+    dev = (float) Math.sqrt(dev / usages.size());

Review comment:
Can we add a check to ensure usages.size() never returns 0?
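A minimal sketch of the kind of guard the reviewer is asking for, assuming the usages are available as a list of percentages. `VolumeUsageStats` and `stdDev` are hypothetical names and this is not the code from the pull request. With `float` arithmetic, dividing zero by zero does not throw in Java; it yields `NaN`, which would then be reported as the node's deviation, so the sketch returns 0 when no volumes are present.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

/** Hypothetical helper, not part of the pull request under review. */
public final class VolumeUsageStats {

  private VolumeUsageStats() {
  }

  /**
   * Population standard deviation of per-volume usage percentages.
   * Returns 0 for a null or empty list instead of producing NaN.
   */
  public static float stdDev(List<Float> usages) {
    if (usages == null || usages.isEmpty()) {
      return 0f; // no volumes reported, so there is nothing to compare
    }
    double mean = 0;
    for (float usage : usages) {
      mean += usage;
    }
    mean /= usages.size();

    double sumOfSquares = 0;
    for (float usage : usages) {
      double diff = usage - mean;
      sumOfSquares += diff * diff;
    }
    return (float) Math.sqrt(sumOfSquares / usages.size());
  }

  public static void main(String[] args) {
    // Unbalanced volumes give a large deviation, balanced ones a small one.
    System.out.println(stdDev(Arrays.asList(10f, 50f, 90f)));
    System.out.println(stdDev(Arrays.asList(48f, 50f, 52f)));
    System.out.println(stdDev(Collections.<Float>emptyList()));
  }
}
```

For volumes at 10%, 50% and 90% the mean is 50 and the variance is 3200 / 3, so the deviation is about 32.66; for 48%, 50% and 52% it is about 1.63. Sorting the usages first, as the diff does, is not needed for this calculation.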

##########
File path: hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/datanode/fsdataset/impl/FsDatasetImpl.java
##########
@@ -675,6 +675,10 @@ public long getDfsUsed() throws IOException {
     return volumes.getDfsUsed();
   }
 
+  public long setDfsUsed() throws IOException {

Review comment:
This API doesn't appear to be used.

##########
File path: hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/namenode/FSNamesystem.java
##########
@@ -6515,15 +6518,16 @@ public String getLiveNodes() {
           .put("nonDfsUsedSpace", node.getNonDfsUsed())
           .put("capacity", node.getCapacity())
           .put("numBlocks", node.numBlocks())
-          .put("version", node.getSoftwareVersion())
+          .put("version", "")

Review comment:
Why is this removed?


Issue Time Tracking
---
Worklog Id: (was: 638617)
Time Spent: 1h 20m (was: 1h 10m)


[jira] [Work logged] (HDFS-16158) Discover datanodes with unbalanced volume usage by the standard deviation

[ https://issues.apache.org/jira/browse/HDFS-16158?focusedWorklogId=638024&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-638024 ]

ASF GitHub Bot logged work on HDFS-16158:

Author: ASF GitHub Bot
Created on: 16/Aug/21 02:37
Start Date: 16/Aug/21 02:37
Worklog Time Spent: 10m
Work Description: tomscut commented on pull request #3288:
URL: https://github.com/apache/hadoop/pull/3288#issuecomment-899173264

Hi @aajisaka, could you please help review the code? Thanks a lot.

Issue Time Tracking
---
Worklog Id: (was: 638024)
Time Spent: 1h 10m (was: 1h)

[jira] [Work logged] (HDFS-16158) Discover datanodes with unbalanced volume usage by the standard deviation

[ https://issues.apache.org/jira/browse/HDFS-16158?focusedWorklogId=637200&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-637200 ]

ASF GitHub Bot logged work on HDFS-16158:

Author: ASF GitHub Bot
Created on: 12/Aug/21 00:52
Start Date: 12/Aug/21 00:52
Worklog Time Spent: 10m
Work Description: tomscut commented on pull request #3288:
URL: https://github.com/apache/hadoop/pull/3288#issuecomment-897262834

These failed unit tests work fine locally.

Hi @tasanuma @jojochuang @Hexiaoqiao @ayushtkn, could you please help review the code? Thanks.

Issue Time Tracking
---
Worklog Id: (was: 637200)
Time Spent: 1h (was: 50m)

[jira] [Work logged] (HDFS-16158) Discover datanodes with unbalanced volume usage by the standard deviation

[ https://issues.apache.org/jira/browse/HDFS-16158?focusedWorklogId=637048&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-637048 ]

ASF GitHub Bot logged work on HDFS-16158:

Author: ASF GitHub Bot
Created on: 11/Aug/21 18:29
Start Date: 11/Aug/21 18:29
Worklog Time Spent: 10m
Work Description: hadoop-yetus commented on pull request #3288:
URL: https://github.com/apache/hadoop/pull/3288#issuecomment-897054535

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 53s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 1s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +0 :ok: | buf | 0m 0s | | buf was not available. |
| +0 :ok: | jshint | 0m 0s | | jshint was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 27 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 33m 2s | | trunk passed |
| +1 :green_heart: | compile | 1m 23s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 1m 14s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 1m 3s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 21s | | trunk passed |
| +1 :green_heart: | javadoc | 0m 58s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 29s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 15s | | trunk passed |
| +1 :green_heart: | shadedclient | 19m 8s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 14s | | the patch passed |
| +1 :green_heart: | compile | 1m 17s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | cc | 1m 17s | | the patch passed |
| +1 :green_heart: | javac | 1m 17s | | the patch passed |
| +1 :green_heart: | compile | 1m 10s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | cc | 1m 10s | | the patch passed |
| +1 :green_heart: | javac | 1m 10s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| -0 :warning: | checkstyle | 0m 58s | [/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3288/4/artifact/out/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs-project/hadoop-hdfs: The patch generated 9 new + 869 unchanged - 5 fixed = 878 total (was 874) |
| +1 :green_heart: | mvnsite | 1m 17s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 48s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 23s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 19s | | the patch passed |
| +1 :green_heart: | shadedclient | 18m 50s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| -1 :x: | unit | 329m 12s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3288/4/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 37s | | The patch does not generate ASF License warnings. |
| | | 421m 22s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.server.mover.TestMover |
| | hadoop.hdfs.server.namenode.ha.TestEditLogTailer |
| | hadoop.hdfs.server.namenode.TestDecommissioningStatusWithBackoffMonitor |
| | hadoop.hdfs.server.namenode.TestDecommissioningStatus |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3288/4/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3288 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell c

[jira] [Work logged] (HDFS-16158) Discover datanodes with unbalanced volume usage by the standard deviation

[ https://issues.apache.org/jira/browse/HDFS-16158?focusedWorklogId=636863&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-636863 ]

ASF GitHub Bot logged work on HDFS-16158:

Author: ASF GitHub Bot
Created on: 11/Aug/21 11:23
Start Date: 11/Aug/21 11:23
Worklog Time Spent: 10m
Work Description: hadoop-yetus commented on pull request #3288:
URL: https://github.com/apache/hadoop/pull/3288#issuecomment-896744785

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |

|::|--:|:|::|:---:|

| +0 :ok: | reexec | 1m 6s | | Docker mode activated. |

_ Prechecks _ |

| +1 :green_heart: | dupname | 0m 1s | | No case conflicting files

found. |

| +0 :ok: | codespell | 0m 0s | | codespell was not available. |

| +0 :ok: | buf | 0m 1s | | buf was not available. |

| +0 :ok: | jshint | 0m 1s | | jshint was not available. |

| +1 :green_heart: | @author | 0m 0s | | The patch does not contain

any @author tags. |

| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to

include 27 new or modified test files. |

_ trunk Compile Tests _ |

| +1 :green_heart: | mvninstall | 35m 25s | | trunk passed |

| +1 :green_heart: | compile | 1m 22s | | trunk passed with JDK

Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | compile | 1m 14s | | trunk passed with JDK

Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | checkstyle | 1m 3s | | trunk passed |

| +1 :green_heart: | mvnsite | 1m 22s | | trunk passed |

| +1 :green_heart: | javadoc | 0m 55s | | trunk passed with JDK

Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | javadoc | 1m 28s | | trunk passed with JDK

Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | spotbugs | 3m 18s | | trunk passed |

| +1 :green_heart: | shadedclient | 18m 44s | | branch has no errors

when building and testing our client artifacts. |

_ Patch Compile Tests _ |

| +1 :green_heart: | mvninstall | 1m 15s | | the patch passed |

| +1 :green_heart: | compile | 1m 18s | | the patch passed with JDK

Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | cc | 1m 18s | | the patch passed |

| +1 :green_heart: | javac | 1m 18s | | the patch passed |

| +1 :green_heart: | compile | 1m 7s | | the patch passed with JDK

Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | cc | 1m 7s | | the patch passed |

| +1 :green_heart: | javac | 1m 7s | | the patch passed |

| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks

issues. |

| -0 :warning: | checkstyle | 0m 57s |

[/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3288/2/artifact/out/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt)

| hadoop-hdfs-project/hadoop-hdfs: The patch generated 22 new + 869 unchanged

- 5 fixed = 891 total (was 874) |

| +1 :green_heart: | mvnsite | 1m 19s | | the patch passed |

| +1 :green_heart: | javadoc | 0m 50s | | the patch passed with JDK

Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | javadoc | 1m 23s | | the patch passed with JDK

Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | spotbugs | 3m 30s | | the patch passed |

| +1 :green_heart: | shadedclient | 19m 44s | | patch has no errors

when building and testing our client artifacts. |

_ Other Tests _ |

| -1 :x: | unit | 523m 39s |

[/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3288/2/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt)

| hadoop-hdfs in the patch passed. |

| +1 :green_heart: | asflicense | 0m 44s | | The patch does not

generate ASF License warnings. |

| | | 619m 19s | | |

| Reason | Tests |

|---:|:--|

| Failed junit tests | hadoop.hdfs.server.balancer.TestBalancerService |

| | hadoop.hdfs.server.balancer.TestBalancerWithNodeGroup |

| | hadoop.hdfs.server.datanode.TestBPOfferService |

| | hadoop.hdfs.TestRead |

| | hadoop.hdfs.TestSetrepIncreasing |

| | hadoop.hdfs.server.datanode.TestDataNodeInitStorage |

| | hadoop.hdfs.server.namenode.ha.TestEditLogTailer |

| | hadoop.hdfs.server.datanode.TestDataNodeVolumeFailure |

| | hadoop.hdfs.server.namenode.TestDecommissioningStatusWithBackoffMonitor |

| | hadoop.hdfs.server.namenode.TestDecommissioningStatus |

| | hadoop.hdfs.server.balancer.TestBalancerWithEncryptedTransfe

[jira] [Work logged] (HDFS-16158) Discover datanodes with unbalanced volume usage by the standard deviation

[ https://issues.apache.org/jira/browse/HDFS-16158?focusedWorklogId=636847&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-636847 ]

ASF GitHub Bot logged work on HDFS-16158:

-

Author: ASF GitHub Bot

Created on: 11/Aug/21 10:16

Start Date: 11/Aug/21 10:16

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3288:

URL: https://github.com/apache/hadoop/pull/3288#issuecomment-896698116

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |

|:----:|----------:|--------:|:--------:|:-------:|

| +0 :ok: | reexec | 0m 55s | | Docker mode activated. |

_ Prechecks _ |

| +1 :green_heart: | dupname | 0m 1s | | No case conflicting files found. |

| +0 :ok: | codespell | 0m 0s | | codespell was not available. |

| +0 :ok: | buf | 0m 0s | | buf was not available. |

| +0 :ok: | jshint | 0m 0s | | jshint was not available. |

| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |

| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 27 new or modified test files. |

_ trunk Compile Tests _ |

| +1 :green_heart: | mvninstall | 35m 49s | | trunk passed |

| +1 :green_heart: | compile | 1m 40s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | compile | 1m 30s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | checkstyle | 1m 13s | | trunk passed |

| +1 :green_heart: | mvnsite | 1m 38s | | trunk passed |

| +1 :green_heart: | javadoc | 1m 7s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | javadoc | 1m 38s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | spotbugs | 3m 44s | | trunk passed |

| +1 :green_heart: | shadedclient | 21m 21s | | branch has no errors when building and testing our client artifacts. |

_ Patch Compile Tests _ |

| +1 :green_heart: | mvninstall | 1m 21s | | the patch passed |

| +1 :green_heart: | compile | 1m 23s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | cc | 1m 23s | | the patch passed |

| +1 :green_heart: | javac | 1m 23s | | the patch passed |

| +1 :green_heart: | compile | 1m 13s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | cc | 1m 13s | | the patch passed |

| +1 :green_heart: | javac | 1m 13s | | the patch passed |

| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |

| -0 :warning: | checkstyle | 0m 56s | [/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3288/3/artifact/out/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs-project/hadoop-hdfs: The patch generated 13 new + 869 unchanged - 5 fixed = 882 total (was 874) |

| +1 :green_heart: | mvnsite | 1m 20s | | the patch passed |

| +1 :green_heart: | javadoc | 0m 56s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | javadoc | 1m 25s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | spotbugs | 3m 43s | | the patch passed |

| +1 :green_heart: | shadedclient | 20m 52s | | patch has no errors when building and testing our client artifacts. |

_ Other Tests _ |

| -1 :x: | unit | 380m 24s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3288/3/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |

| +1 :green_heart: | asflicense | 0m 46s | | The patch does not generate ASF License warnings. |

| | | 482m 8s | | |

| Reason | Tests |

|---:|:--|

| Failed junit tests | hadoop.hdfs.server.namenode.ha.TestEditLogTailer |

| | hadoop.hdfs.server.namenode.TestDecommissioningStatusWithBackoffMonitor |

| | hadoop.hdfs.server.namenode.TestDecommissioningStatus |

| Subsystem | Report/Notes |

|--:|:-|

| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3288/3/artifact/out/Dockerfile |

| GITHUB PR | https://github.com/apache/hadoop/pull/3288 |

| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell cc buflint bufcompat jshint |

| uname | Li

[jira] [Work logged] (HDFS-16158) Discover datanodes with unbalanced volume usage by the standard deviation

[ https://issues.apache.org/jira/browse/HDFS-16158?focusedWorklogId=636699&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-636699 ]

ASF GitHub Bot logged work on HDFS-16158:

-

Author: ASF GitHub Bot

Created on: 11/Aug/21 01:25

Start Date: 11/Aug/21 01:25

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3288:

URL: https://github.com/apache/hadoop/pull/3288#issuecomment-896425423

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |

|:----:|----------:|--------:|:--------:|:-------:|

| +0 :ok: | reexec | 0m 51s | | Docker mode activated. |

_ Prechecks _ |

| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |

| +0 :ok: | codespell | 0m 1s | | codespell was not available. |

| +0 :ok: | buf | 0m 1s | | buf was not available. |

| +0 :ok: | jshint | 0m 1s | | jshint was not available. |

| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |

| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 27 new or modified test files. |

_ trunk Compile Tests _ |

| +1 :green_heart: | mvninstall | 32m 57s | | trunk passed |

| +1 :green_heart: | compile | 1m 23s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | compile | 1m 14s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | checkstyle | 1m 2s | | trunk passed |

| +1 :green_heart: | mvnsite | 1m 21s | | trunk passed |

| +1 :green_heart: | javadoc | 0m 55s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | javadoc | 1m 25s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | spotbugs | 3m 23s | | trunk passed |

| +1 :green_heart: | shadedclient | 18m 49s | | branch has no errors when building and testing our client artifacts. |

_ Patch Compile Tests _ |

| +1 :green_heart: | mvninstall | 1m 14s | | the patch passed |

| +1 :green_heart: | compile | 1m 18s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | cc | 1m 18s | | the patch passed |

| -1 :x: | javac | 1m 18s | [/results-compile-javac-hadoop-hdfs-project_hadoop-hdfs-jdkUbuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3288/1/artifact/out/results-compile-javac-hadoop-hdfs-project_hadoop-hdfs-jdkUbuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04.txt) | hadoop-hdfs-project_hadoop-hdfs-jdkUbuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 generated 1 new + 468 unchanged - 1 fixed = 469 total (was 469) |

| +1 :green_heart: | compile | 1m 12s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | cc | 1m 12s | | the patch passed |

| -1 :x: | javac | 1m 12s | [/results-compile-javac-hadoop-hdfs-project_hadoop-hdfs-jdkPrivateBuild-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3288/1/artifact/out/results-compile-javac-hadoop-hdfs-project_hadoop-hdfs-jdkPrivateBuild-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10.txt) | hadoop-hdfs-project_hadoop-hdfs-jdkPrivateBuild-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 generated 1 new + 452 unchanged - 1 fixed = 453 total (was 453) |

| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |

| -0 :warning: | checkstyle | 0m 56s | [/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3288/1/artifact/out/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs-project/hadoop-hdfs: The patch generated 22 new + 869 unchanged - 5 fixed = 891 total (was 874) |

| +1 :green_heart: | mvnsite | 1m 16s | | the patch passed |

| +1 :green_heart: | javadoc | 0m 48s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | javadoc | 1m 20s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | spotbugs | 3m 20s | | the patch passed |

| +1 :green_heart: | shadedclient | 18m 41s | | patch has no errors when building and testing our client artifacts. |

_ Other Tests _ |

| -1 :x: | unit | 508m 30s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3288/1/artifact/out/patch-unit-had

[jira] [Work logged] (HDFS-16158) Discover datanodes with unbalanced volume usage by the standard deviation

[ https://issues.apache.org/jira/browse/HDFS-16158?focusedWorklogId=636487&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-636487 ]

ASF GitHub Bot logged work on HDFS-16158:

Author: ASF GitHub Bot
Created on: 10/Aug/21 15:25
Start Date: 10/Aug/21 15:25
Worklog Time Spent: 10m
Work Description: tomscut opened a new pull request #3288:
URL: https://github.com/apache/hadoop/pull/3288

JIRA: [HDFS-16158](https://issues.apache.org/jira/browse/HDFS-16158)

Discover datanodes with unbalanced volume usage by the standard deviation

Several scenarios can leave a datanode with unbalanced disk usage:
1. A damaged disk is repaired and brought back online.
2. Disks are added to some datanodes.
3. Some disks are damaged, resulting in slow data writing.
4. A custom volume choosing policy is in use.

When disk usage is unbalanced, a sudden increase in datanode write traffic may make I/O busy on the disks with low volume usage, which decreases throughput across datanodes.

In this case, we need to find these nodes in time to run diskBalance or take other action. Based on the volume usage of each datanode, we can calculate the standard deviation of the volume usage. The more unbalanced the volumes, the higher the standard deviation.

To keep the namenode from becoming too busy, we can calculate the standard deviation on the datanode side, transmit it to the namenode through the heartbeat, and display the result on the namenode Web UI. We can then sort on that value in the Web UI to find the nodes whose volume usage is unbalanced.

-- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org
For queries about this service, please contact Infrastructure at: us...@infra.apache.org

Issue Time Tracking
---
Worklog Id: (was: 636487)
Remaining Estimate: 0h
Time Spent: 10m

> Discover datanodes with unbalanced volume usage by the standard deviation
>
> Key: HDFS-16158
> URL: https://issues.apache.org/jira/browse/HDFS-16158
> Project: Hadoop HDFS
> Issue Type: New Feature
> Reporter: tomscut
> Assignee: tomscut
> Priority: Major
> Attachments: namenode-web.jpg
>
> Time Spent: 10m
> Remaining Estimate: 0h
>
> Discover datanodes with unbalanced volume usage by the standard deviation
> Several scenarios can leave a datanode with unbalanced disk usage:
> 1. A damaged disk is repaired and brought back online.
> 2. Disks are added to some datanodes.
> 3. Some disks are damaged, resulting in slow data writing.
> 4. A custom volume choosing policy is in use.
> When disk usage is unbalanced, a sudden increase in datanode write
> traffic may make I/O busy on the disks with low volume usage, which
> decreases throughput across datanodes.
> In this case, we need to find these nodes in time to run diskBalance or take
> other action. Based on the volume usage of each datanode, we can calculate the
> standard deviation of the volume usage. The more unbalanced the volumes, the
> higher the standard deviation.
> To keep the namenode from becoming too busy, we can calculate the standard
> deviation on the datanode side, transmit it to the namenode through the
> heartbeat, and display the result on the namenode Web UI. We can then sort on
> that value in the Web UI to find the nodes whose volume usage is unbalanced.

-- This message was sent by Atlassian Jira (v8.3.4#803005)
-
To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org
For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org
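
The issue description proposes computing the standard deviation of per-volume usage on the datanode side. As a rough illustration only, and not the code from the pull request, the calculation could look like the sketch below. The class name `VolumeUsageStdDev`, the method `usageStdDev`, the choice of the population (rather than sample) standard deviation, and the decision to skip volumes with non-positive capacity are all assumptions made for this example.

```java
public final class VolumeUsageStdDev {
  private VolumeUsageStdDev() {}

  /**
   * Hypothetical helper: population standard deviation of per-volume
   * usage ratios (dfsUsed / capacity). Volumes with a non-positive
   * capacity are skipped; with no usable volumes the result is 0.
   */
  public static double usageStdDev(long[] dfsUsed, long[] capacity) {
    if (dfsUsed.length != capacity.length) {
      throw new IllegalArgumentException("array lengths differ");
    }
    double[] ratios = new double[dfsUsed.length];
    int n = 0;
    double sum = 0.0;
    for (int i = 0; i < dfsUsed.length; i++) {
      if (capacity[i] <= 0) {
        continue; // assumption: ignore volumes that report no capacity
      }
      ratios[n] = (double) dfsUsed[i] / capacity[i];
      sum += ratios[n];
      n++;
    }
    if (n == 0) {
      return 0.0;
    }
    double mean = sum / n;
    double squaredDiffs = 0.0;
    for (int i = 0; i < n; i++) {
      double d = ratios[i] - mean;
      squaredDiffs += d * d;
    }
    return Math.sqrt(squaredDiffs / n);
  }

  public static void main(String[] args) {
    // Evenly used volumes: the deviation is zero.
    System.out.println(usageStdDev(new long[] {50, 50, 50},
        new long[] {100, 100, 100}));
    // One nearly full and one nearly empty volume: a much larger value.
    System.out.println(usageStdDev(new long[] {90, 10},
        new long[] {100, 100}));
  }
}
```

Working on usage ratios rather than raw bytes keeps volumes of different sizes comparable, and a single `double` per datanode is cheap to carry in a heartbeat and to sort on in a Web UI, which matches the motivation given in the description.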