[jira] [Work logged] (HDFS-16270) Improve NNThroughputBenchmark#printUsage() related to block size

[ https://issues.apache.org/jira/browse/HDFS-16270?focusedWorklogId=669879&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-669879 ]

ASF GitHub Bot logged work on HDFS-16270:

Author: ASF GitHub Bot
Created on: 26/Oct/21 02:23
Start Date: 26/Oct/21 02:23
Worklog Time Spent: 10m
Work Description: jianghuazhu closed pull request #3547:
URL: https://github.com/apache/hadoop/pull/3547

--
This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org
For queries about this service, please contact Infrastructure at: us...@infra.apache.org

Issue Time Tracking
Worklog Id: (was: 669879)
Time Spent: 1h 20m (was: 1h 10m)

> Improve NNThroughputBenchmark#printUsage() related to block size
>
> Key: HDFS-16270
> URL: https://issues.apache.org/jira/browse/HDFS-16270
> Project: Hadoop HDFS
> Issue Type: Improvement
> Components: benchmarks, namenode
> Reporter: JiangHua Zhu
> Assignee: JiangHua Zhu
> Priority: Major
> Labels: pull-request-available
> Time Spent: 1h 20m
> Remaining Estimate: 0h
>
> When NNThroughputBenchmark is used incorrectly, it prints a prompt message.
> E.g.:
> '
> If connecting to a remote NameNode with -fs option,
> dfs.namenode.fs-limits.min-block-size should be set to 16.
> 21/10/13 11:55:32 INFO util.ExitUtil: Exiting with status -1: ExitException
> '
> This mechanism itself is fine.
> However, even when 'dfs.blocksize' has already been set before execution,
> for example:
> conf.setInt(DFSConfigKeys.DFS_BLOCK_SIZE_KEY, 16);
> we still get the above prompt, which is wrong.
> The hint should also be corrected: it should refer to 'dfs.blocksize' rather than
> 'dfs.namenode.fs-limits.min-block-size', because the NNThroughputBenchmark
> constructor has already set 'dfs.namenode.fs-limits.min-block-size' to 0.

--
This message was sent by Atlassian Jira
(v8.3.4#803005)

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org
For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org
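The reporter's argument depends on which configuration holds the minimum. Here is a minimal sketch of the client-side view. It uses a plain `Map` as a stand-in for `org.apache.hadoop.conf.Configuration`, and the class `LocalBlockSizeModel` and its methods are hypothetical, not Hadoop code. The property names are the real HDFS keys. The defaults are the stock `hdfs-default.xml` values: 128 MiB for `dfs.blocksize` and 1 MiB for `dfs.namenode.fs-limits.min-block-size`. `benchmarkConf()` only mirrors the issue's statement that the constructor pre-sets the minimum to 0.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical model (not Hadoop code) of the benchmark's own configuration.
final class LocalBlockSizeModel {
  // Real HDFS property names, as quoted in the issue.
  static final String BLOCK_SIZE_KEY = "dfs.blocksize";
  static final String MIN_BLOCK_SIZE_KEY = "dfs.namenode.fs-limits.min-block-size";
  // Stock hdfs-default.xml values: 128 MiB block size, 1 MiB minimum.
  static final long DEFAULT_BLOCK_SIZE = 128L * 1024 * 1024;
  static final long DEFAULT_MIN_BLOCK_SIZE = 1024L * 1024;

  private LocalBlockSizeModel() {}

  // Stand-in for the benchmark constructor: per the issue, it sets the
  // minimum block size to 0 in its own configuration.
  static Map<String, Long> benchmarkConf() {
    Map<String, Long> conf = new HashMap<>();
    conf.put(MIN_BLOCK_SIZE_KEY, 0L);
    return conf;
  }

  // True if this configuration's block size meets this configuration's minimum.
  static boolean blockSizeAllowed(Map<String, Long> conf) {
    long blockSize = conf.getOrDefault(BLOCK_SIZE_KEY, DEFAULT_BLOCK_SIZE);
    long minBlockSize = conf.getOrDefault(MIN_BLOCK_SIZE_KEY, DEFAULT_MIN_BLOCK_SIZE);
    return blockSize >= minBlockSize;
  }

  public static void main(String[] args) {
    Map<String, Long> conf = benchmarkConf();
    // Analogue of conf.setInt(DFSConfigKeys.DFS_BLOCK_SIZE_KEY, 16) from the issue.
    conf.put(BLOCK_SIZE_KEY, 16L);
    System.out.println("local check passes: " + blockSizeAllowed(conf));
  }
}
```

Running `main` prints `local check passes: true`. The minimum is zeroed in the benchmark's own configuration, so a 16-byte block size cannot fail a local check. That is why the reporter reads the min-block-size hint as misleading.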

[jira] [Work logged] (HDFS-16270) Improve NNThroughputBenchmark#printUsage() related to block size

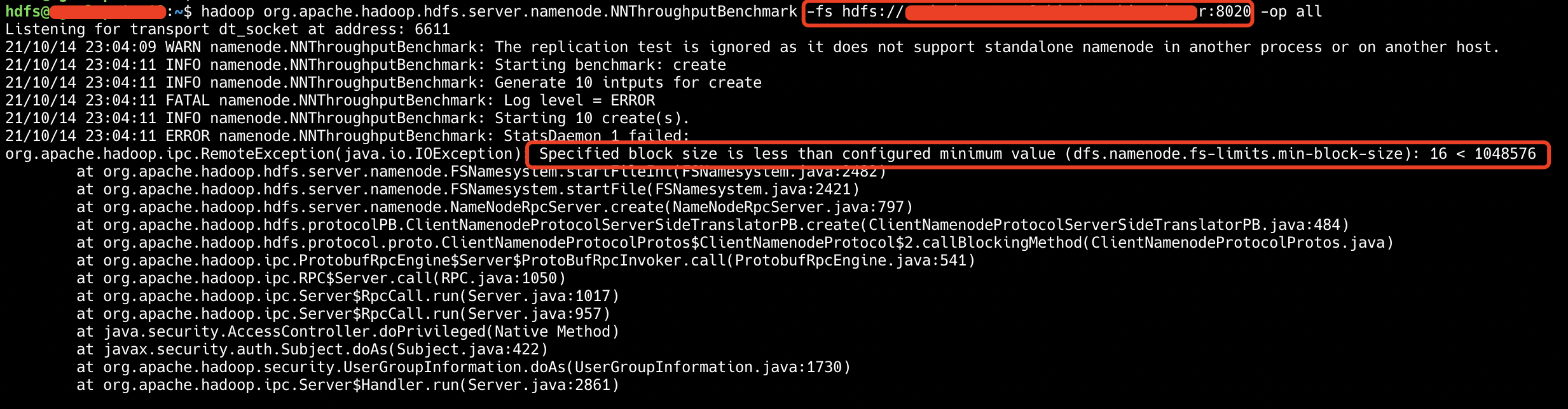

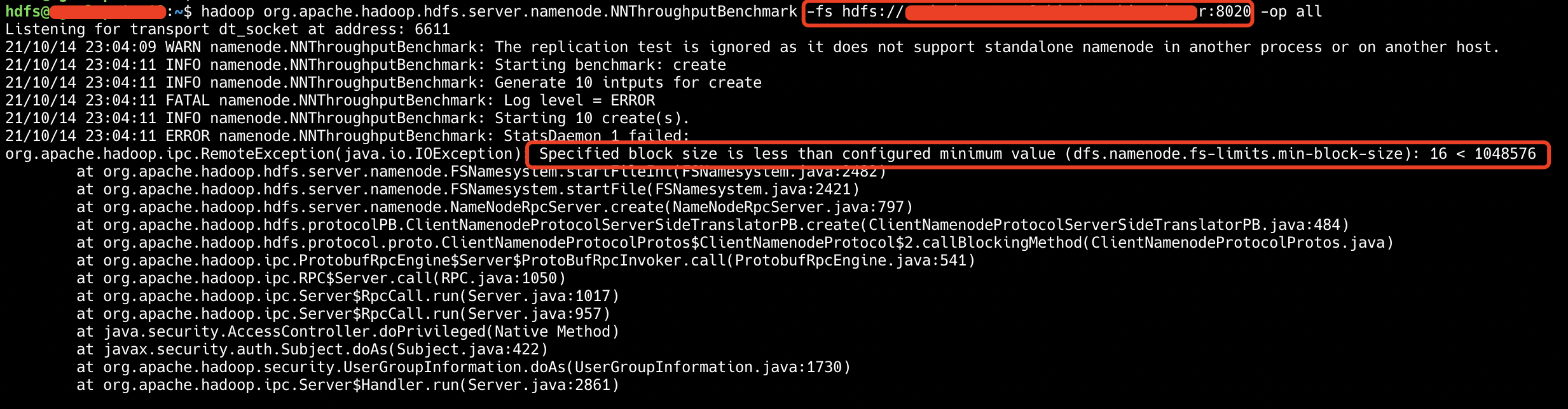

[ https://issues.apache.org/jira/browse/HDFS-16270?focusedWorklogId=665721&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-665721 ]

ASF GitHub Bot logged work on HDFS-16270:

Author: ASF GitHub Bot
Created on: 14/Oct/21 15:18
Start Date: 14/Oct/21 15:18
Worklog Time Spent: 10m
Work Description: tomscut removed a comment on pull request #3547:
URL: https://github.com/apache/hadoop/pull/3547#issuecomment-943459371

Issue Time Tracking
Worklog Id: (was: 665721)
Time Spent: 1h 10m (was: 1h)

[jira] [Work logged] (HDFS-16270) Improve NNThroughputBenchmark#printUsage() related to block size

[ https://issues.apache.org/jira/browse/HDFS-16270?focusedWorklogId=665719&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-665719 ]

ASF GitHub Bot logged work on HDFS-16270:

Author: ASF GitHub Bot
Created on: 14/Oct/21 15:17
Start Date: 14/Oct/21 15:17
Worklog Time Spent: 10m
Work Description: tomscut commented on pull request #3547:
URL: https://github.com/apache/hadoop/pull/3547#issuecomment-943459371

> In the results of Jenkins, some tests failed, such as: TestHDFSFileSystemContract, TestLeaseRecovery, TestFileTruncate, TestBlockTokenWithDFSStriped, TestViewDistributedFileSystemWithMountLinks. After analysis, they do not seem to be related to the code I submitted.
>
> @tomscut @prasad-acit, would you like to spend some time helping review this PR? Thank you very much.

Hi @jianghuazhu, sorry for the late reply. IMO, there's nothing wrong with this hint. When you specify a remote NameNode with `-fs` and do not set `dfs.namenode.fs-limits.min-block-size=16` there (the benchmark's default `BLOCK_SIZE` is 16), the remote NameNode uses the default value of `dfs.namenode.fs-limits.min-block-size`, which is 1024*1024 = 1048576, and this causes the following exception.

Issue Time Tracking
Worklog Id: (was: 665719)
Time Spent: 1h (was: 50m)
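tomscut's counterpoint is that with `-fs`, the check that matters runs on the remote NameNode. It compares against that NameNode's own `dfs.namenode.fs-limits.min-block-size`. Here is a minimal sketch of that comparison. It assumes the NameNode simply rejects a create request whose block size is below its configured minimum. `RemoteMinBlockSizeCheck` and `accepts` are hypothetical names. The constants are the values quoted in the comment: a default minimum of 1024*1024 and a benchmark `BLOCK_SIZE` of 16.

```java
// Hypothetical model (not Hadoop code) of the remote NameNode's minimum-block-size rule.
final class RemoteMinBlockSizeCheck {
  // Default of dfs.namenode.fs-limits.min-block-size quoted in the comment: 1024 * 1024 bytes.
  static final long DEFAULT_MIN_BLOCK_SIZE = 1024L * 1024;
  // Block size the benchmark requests by default, per the comment (BLOCK_SIZE = 16).
  static final long BENCHMARK_BLOCK_SIZE = 16L;

  private RemoteMinBlockSizeCheck() {}

  // Assumed rule: a file may be created only if its block size is at least
  // the NameNode's configured minimum.
  static boolean accepts(long requestedBlockSize, long nameNodeMinBlockSize) {
    return requestedBlockSize >= nameNodeMinBlockSize;
  }

  public static void main(String[] args) {
    System.out.println("default minimum = " + DEFAULT_MIN_BLOCK_SIZE);
    // Remote NameNode left at its default: the benchmark's 16-byte blocks are rejected.
    System.out.println("accepted with default minimum: "
        + accepts(BENCHMARK_BLOCK_SIZE, DEFAULT_MIN_BLOCK_SIZE));
    // Remote NameNode configured with dfs.namenode.fs-limits.min-block-size=16.
    System.out.println("accepted with minimum 16: "
        + accepts(BENCHMARK_BLOCK_SIZE, 16L));
  }
}
```

The program prints `default minimum = 1048576`, then `false` for the default minimum and `true` once the remote minimum is 16. In this model, the outcome depends only on the remote NameNode's minimum and the requested block size. The client's own zeroed minimum never enters the comparison.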

[jira] [Work logged] (HDFS-16270) Improve NNThroughputBenchmark#printUsage() related to block size

[ https://issues.apache.org/jira/browse/HDFS-16270?focusedWorklogId=665490&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-665490 ]

ASF GitHub Bot logged work on HDFS-16270:

Author: ASF GitHub Bot
Created on: 14/Oct/21 02:17
Start Date: 14/Oct/21 02:17
Worklog Time Spent: 10m
Work Description: jianghuazhu commented on pull request #3547:
URL: https://github.com/apache/hadoop/pull/3547#issuecomment-942885036

In the results of Jenkins, some tests failed, such as: TestHDFSFileSystemContract, TestLeaseRecovery, TestFileTruncate, TestBlockTokenWithDFSStriped, TestViewDistributedFileSystemWithMountLinks. After analysis, they do not seem to be related to the code I submitted.
@tomscut @prasad-acit, would you like to spend some time helping review this PR? Thank you very much.

Issue Time Tracking
Worklog Id: (was: 665490)
Time Spent: 50m (was: 40m)

[jira] [Work logged] (HDFS-16270) Improve NNThroughputBenchmark#printUsage() related to block size

[ https://issues.apache.org/jira/browse/HDFS-16270?focusedWorklogId=665131&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-665131 ]

ASF GitHub Bot logged work on HDFS-16270:

Author: ASF GitHub Bot
Created on: 13/Oct/21 18:47
Start Date: 13/Oct/21 18:47
Worklog Time Spent: 10m
Work Description: hadoop-yetus commented on pull request #3547:
URL: https://github.com/apache/hadoop/pull/3547#issuecomment-942349833

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:-------:|:-------:|
| +0 :ok: | reexec | 0m 58s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 1s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 34m 16s | | trunk passed |
| +1 :green_heart: | compile | 1m 24s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 1m 15s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 1m 1s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 20s | | trunk passed |
| +1 :green_heart: | javadoc | 0m 56s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 26s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 14s | | trunk passed |
| +1 :green_heart: | shadedclient | 24m 12s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 14s | | the patch passed |
| +1 :green_heart: | compile | 1m 17s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 1m 17s | | the patch passed |
| +1 :green_heart: | compile | 1m 8s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 1m 8s | | the patch passed |
| +1 :green_heart: | blanks | 0m 1s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 0m 53s | | the patch passed |
| +1 :green_heart: | mvnsite | 1m 16s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 47s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 18s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 20s | | the patch passed |
| +1 :green_heart: | shadedclient | 23m 54s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| -1 :x: | unit | 383m 26s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3547/1/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 54s | | The patch does not generate ASF License warnings. |
| | | 487m 4s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.TestHDFSFileSystemContract |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3547/1/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3547 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux b9993f782838 4.15.0-147-generic #151-Ubuntu SMP Fri Jun 18 19:21:19 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 4b3a9d7e04fd7e7d55e1af93e07691b379e8d8c7 |
| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3547/1/testReport/ |
| Max. process+thread count | 2009 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project/hadoop-hdf

[jira] [Work logged] (HDFS-16270) Improve NNThroughputBenchmark#printUsage() related to block size

[ https://issues.apache.org/jira/browse/HDFS-16270?focusedWorklogId=664971&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-664971 ]

ASF GitHub Bot logged work on HDFS-16270:

Author: ASF GitHub Bot
Created on: 13/Oct/21 18:32
Start Date: 13/Oct/21 18:32
Worklog Time Spent: 10m
Work Description: jianghuazhu opened a new pull request #3547:
URL: https://github.com/apache/hadoop/pull/3547

### Description of PR
When NNThroughputBenchmark#printUsage() is executed, the relevant configuration is verified first, so that the correct prompt is printed.

### How was this patch tested?
The change only affects the printed information, so it needs little testing.

Issue Time Tracking
Worklog Id: (was: 664971)
Time Spent: 0.5h (was: 20m)
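The PR description says the configuration is verified before a prompt is printed. Here is a minimal sketch of that idea. It is not the patch itself, since PR #3547 was closed according to the 26/Oct/21 worklog, and `UsageHintSketch`, `usageHint`, and the hint wording are hypothetical. In remote mode the sketch points at the remote NameNode's minimum, following tomscut's reading. In local mode it names `dfs.blocksize` only when the block size is actually below the local minimum, following the reporter's.

```java
import java.util.Optional;

// Hypothetical hint selection for a printUsage()-style method (not the PR's code).
final class UsageHintSketch {
  static final String BLOCK_SIZE_KEY = "dfs.blocksize";
  static final String MIN_BLOCK_SIZE_KEY = "dfs.namenode.fs-limits.min-block-size";

  private UsageHintSketch() {}

  // Inspect the configuration first, then name the property that actually needs
  // changing. An empty Optional means no block-size hint is needed.
  static Optional<String> usageHint(boolean remoteNameNode, long blockSize, long localMinBlockSize) {
    if (remoteNameNode) {
      // The remote NameNode enforces its own minimum, which this client's
      // configuration does not control.
      return Optional.of("If connecting to a remote NameNode with -fs option, "
          + MIN_BLOCK_SIZE_KEY + " on that NameNode should be at most " + blockSize + ".");
    }
    if (blockSize < localMinBlockSize) {
      // Local run with a real conflict: the block size is what the user controls.
      return Optional.of(BLOCK_SIZE_KEY + " (" + blockSize + ") should be at least "
          + MIN_BLOCK_SIZE_KEY + " (" + localMinBlockSize + ").");
    }
    return Optional.empty();
  }

  public static void main(String[] args) {
    // Local run where the benchmark has zeroed the minimum: 16 needs no hint.
    System.out.println(usageHint(false, 16, 0).orElse("(no hint)"));
    // Remote run: the hint targets the remote NameNode's setting.
    System.out.println(usageHint(true, 16, 0).orElse("(no hint)"));
  }
}
```

The design choice here is simply to branch on where the enforcing minimum lives before choosing which key to name. A real fix would also have to decide how much it can learn about the remote side from the client.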

[jira] [Work logged] (HDFS-16270) Improve NNThroughputBenchmark#printUsage() related to block size

[ https://issues.apache.org/jira/browse/HDFS-16270?focusedWorklogId=664656&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-664656 ]

ASF GitHub Bot logged work on HDFS-16270:

Author: ASF GitHub Bot
Created on: 13/Oct/21 14:13
Start Date: 13/Oct/21 14:13
Worklog Time Spent: 10m
Work Description: hadoop-yetus commented on pull request #3547:
URL: https://github.com/apache/hadoop/pull/3547#issuecomment-942349833


[jira] [Work logged] (HDFS-16270) Improve NNThroughputBenchmark#printUsage() related to block size

[ https://issues.apache.org/jira/browse/HDFS-16270?focusedWorklogId=664469&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-664469 ]

ASF GitHub Bot logged work on HDFS-16270:

Author: ASF GitHub Bot
Created on: 13/Oct/21 06:04
Start Date: 13/Oct/21 06:04
Worklog Time Spent: 10m
Work Description: jianghuazhu opened a new pull request #3547:
URL: https://github.com/apache/hadoop/pull/3547

Issue Time Tracking
Worklog Id: (was: 664469)
Remaining Estimate: 0h
Time Spent: 10m