[jira] [Work logged] (BEAM-3072) Improve error handling at staging time time for DataflowRunner

[ https://issues.apache.org/jira/browse/BEAM-3072?focusedWorklogId=220422&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-220422 ] ASF GitHub Bot logged work on BEAM-3072: Author: ASF GitHub Bot Created on: 29/Mar/19 07:22 Start Date: 29/Mar/19 07:22 Worklog Time Spent: 10m Work Description: NikeNano commented on issue #8158: [BEAM-3072] updates to that the error handling and collected the fail… URL: https://github.com/apache/beam/pull/8158#issuecomment-477895867 @aaltay after thinking about it a bit more, could you elaborate on how "hat errors are captured as expected" and i will add some tests for it. I have set it up so that errors ar captured both if the command is not present compare to default "No such file or director" [1]. If the command is valid but the arguments are incorrect an error is rased giving the command stating that the arguments are wrong. @pabloem I have resolved the error in the build concerning the formatting. [1] https://stackoverflow.com/questions/24306205/file-not-found-error-when-launching-a-subprocess-containing-piped-commands This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 220422) Time Spent: 2.5h (was: 2h 20m) > Improve error handling at staging time time for DataflowRunner > -- > > Key: BEAM-3072 > URL: https://issues.apache.org/jira/browse/BEAM-3072 > Project: Beam > Issue Type: Bug > Components: sdk-py-core >Reporter: Ahmet Altay >Assignee: niklas Hansson >Priority: Minor > Labels: starter, triaged > Time Spent: 2.5h > Remaining Estimate: 0h > > dependency.py calls out to external process to collect dependencies: > https://github.com/apache/beam/blob/de7cc05cc67d1aa6331cddc17c2e02ed0efbe37d/sdks/python/apache_beam/runners/dataflow/internal/dependency.py#L263 > If these calls fails, the error is not clear. The error only tells what > failed but does not show the actual error message, and is not helpful for > users. > As a general fix processes.py should have general better output collection > from failed processes. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Work logged] (BEAM-3072) Improve error handling at staging time time for DataflowRunner

[ https://issues.apache.org/jira/browse/BEAM-3072?focusedWorklogId=220423&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-220423 ] ASF GitHub Bot logged work on BEAM-3072: Author: ASF GitHub Bot Created on: 29/Mar/19 07:23 Start Date: 29/Mar/19 07:23 Worklog Time Spent: 10m Work Description: NikeNano commented on issue #8158: [BEAM-3072] updates to that the error handling and collected the fail… URL: https://github.com/apache/beam/pull/8158#issuecomment-477895867 @aaltay after thinking about it a bit more, could you elaborate on "errors are captured as expected" and I will add some tests for it. I have set it up so that errors are captured both if the command is not present and raise a more meaningful error, compare to default "No such file or director" [1]. If the command is valid but the arguments are incorrect an error is rased giving the command stating that the arguments are wrong. @pabloem I have resolved the error in the build concerning the formatting. [1] https://stackoverflow.com/questions/24306205/file-not-found-error-when-launching-a-subprocess-containing-piped-commands This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 220423) Time Spent: 2h 40m (was: 2.5h) > Improve error handling at staging time time for DataflowRunner > -- > > Key: BEAM-3072 > URL: https://issues.apache.org/jira/browse/BEAM-3072 > Project: Beam > Issue Type: Bug > Components: sdk-py-core >Reporter: Ahmet Altay >Assignee: niklas Hansson >Priority: Minor > Labels: starter, triaged > Time Spent: 2h 40m > Remaining Estimate: 0h > > dependency.py calls out to external process to collect dependencies: > https://github.com/apache/beam/blob/de7cc05cc67d1aa6331cddc17c2e02ed0efbe37d/sdks/python/apache_beam/runners/dataflow/internal/dependency.py#L263 > If these calls fails, the error is not clear. The error only tells what > failed but does not show the actual error message, and is not helpful for > users. > As a general fix processes.py should have general better output collection > from failed processes. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Closed] (BEAM-6703) Support Java 11 in Jenkins

[ https://issues.apache.org/jira/browse/BEAM-6703?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Michal Walenia closed BEAM-6703. Resolution: Done Fix Version/s: Not applicable > Support Java 11 in Jenkins > -- > > Key: BEAM-6703 > URL: https://issues.apache.org/jira/browse/BEAM-6703 > Project: Beam > Issue Type: Sub-task > Components: runner-dataflow, runner-direct >Reporter: Michal Walenia >Assignee: Michal Walenia >Priority: Minor > Fix For: Not applicable > > Time Spent: 15h 40m > Remaining Estimate: 0h > > In this issue I'll create a Jenkins job that compiles Dataflow and Direct > runners with tests using Java 8 and runs Validates Runner suites with Java 11 > Runtime. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Created] (BEAM-6936) Add a Jenkins job running Java examples on Java 11 Dataflow

Michal Walenia created BEAM-6936: Summary: Add a Jenkins job running Java examples on Java 11 Dataflow Key: BEAM-6936 URL: https://issues.apache.org/jira/browse/BEAM-6936 Project: Beam Issue Type: Sub-task Components: examples-java, testing Reporter: Michal Walenia Assignee: Michal Walenia -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Commented] (BEAM-6910) Beam does not consider BigQuery's processing location when getting query results

[

https://issues.apache.org/jira/browse/BEAM-6910?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16804669#comment-16804669

]

niklas Hansson commented on BEAM-6910:

--

As far as I understand this issue is solved in BEAM-6909. I will leave this

task. Let me know if there is anything that needs to be done.

> Beam does not consider BigQuery's processing location when getting query

> results

>

>

> Key: BEAM-6910

> URL: https://issues.apache.org/jira/browse/BEAM-6910

> Project: Beam

> Issue Type: Bug

> Components: dependencies, runner-dataflow, sdk-py-core

>Affects Versions: 2.11.0

> Environment: Python

>Reporter: Graham Polley

>Assignee: niklas Hansson

>Priority: Major

>

> When using the BigQuery source with a SQL query in a pipeline, the

> "processing location" is not taken into consideration and the pipeline fails.

> For example, consider the following which uses {{BigQuerySource}} to read

> from BigQuery using some SQL. The BigQuery dataset and tables are located in

> {{australia-southeast1}}. The query is submitted successfully ([Beam works

> out the processing location by examining the first table referenced in the

> query and sets it

> accordingly|https://github.com/apache/beam/blob/master/sdks/python/apache_beam/io/gcp/bigquery_tools.py#L221]),

> but when Beam attempts to poll for the job status after it has been

> submitted, it fails because it doesn't set the {{location}} to be

> {{australia-southeast1}}, which is required by BigQuery:

>

> {code:java}

> p | 'read' >> beam.io.Read(beam.io.BigQuerySource(use_standard_sql=True,

> query='SELECT * from

> `a_project_id.dataset_in_australia.table_in_australia`'){code}

>

> {code:java}

> HttpNotFoundError: HttpError accessing

> :

> response: <{'status': '404', 'content-length': '328', 'x-xss-protection':

> '1; mode=block', 'x-content-type-options': 'nosniff', 'transfer-encoding':

> 'chunked', 'vary': 'Origin, X-Origin, Referer', 'server': 'ESF',

> '-content-encoding': 'gzip', 'cache-control': 'private', 'date': 'Tue, 26 Mar

> 2019 03:11:32 GMT', 'x-frame-options': 'SAMEORIGIN', 'alt-svc': 'quic=":443";

> ma=2592000; v="46,44,43,39"', 'content-type': 'application/json;

> charset=UTF-8'}>, content <{

> "error": {

> "code": 404,

> "message": "Not found: Job a_project_id:5ad9cc803baa432290b6cd0203f556d9",

> "errors": [

> {

> "message": "Not found: Job

> a_project_id:5ad9cc803baa432290b6cd0203f556d9",

> "domain": "global",

> "reason": "notFound"

> }

> ],

> "status": "NOT_FOUND"

> }

> }

> {code}

>

> The problem can be seen/found here:

> [https://github.com/apache/beam/blob/v2.11.0/sdks/python/apache_beam/io/gcp/bigquery_tools.py#L571]

> [https://github.com/apache/beam/blob/master/sdks/python/apache_beam/io/gcp/bigquery_tools.py#L357]

> The location of the job (in this case {{australia-southeast1}}) needs to

> set/inferred (or exposed via the API), otherwise its fails.

> For reference, Airflow had the same bug/problem:

> [https://github.com/apache/airflow/pull/4695]

>

>

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Work logged] (BEAM-5995) Create Jenkins jobs to run the load tests

[ https://issues.apache.org/jira/browse/BEAM-5995?focusedWorklogId=220431&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-220431 ] ASF GitHub Bot logged work on BEAM-5995: Author: ASF GitHub Bot Created on: 29/Mar/19 08:30 Start Date: 29/Mar/19 08:30 Worklog Time Spent: 10m Work Description: kkucharc commented on issue #8151: [BEAM-5995] add Jenkins job with GBK Python load tests URL: https://github.com/apache/beam/pull/8151#issuecomment-477912806 run seed job This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 220431) Time Spent: 16.5h (was: 16h 20m) > Create Jenkins jobs to run the load tests > - > > Key: BEAM-5995 > URL: https://issues.apache.org/jira/browse/BEAM-5995 > Project: Beam > Issue Type: Sub-task > Components: testing >Reporter: Kasia Kucharczyk >Assignee: Kasia Kucharczyk >Priority: Major > Labels: triaged > Time Spent: 16.5h > Remaining Estimate: 0h > > (/) Add SMOKE test > Add GBK load tests. > Add CoGBK load tests. > Add Pardo load tests. > Add SideInput tests. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Work logged] (BEAM-5995) Create Jenkins jobs to run the load tests

[ https://issues.apache.org/jira/browse/BEAM-5995?focusedWorklogId=220432&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-220432 ] ASF GitHub Bot logged work on BEAM-5995: Author: ASF GitHub Bot Created on: 29/Mar/19 08:30 Start Date: 29/Mar/19 08:30 Worklog Time Spent: 10m Work Description: kkucharc commented on issue #8151: [BEAM-5995] add Jenkins job with GBK Python load tests URL: https://github.com/apache/beam/pull/8151#issuecomment-477676995 run seed job This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 220432) Time Spent: 16h 40m (was: 16.5h) > Create Jenkins jobs to run the load tests > - > > Key: BEAM-5995 > URL: https://issues.apache.org/jira/browse/BEAM-5995 > Project: Beam > Issue Type: Sub-task > Components: testing >Reporter: Kasia Kucharczyk >Assignee: Kasia Kucharczyk >Priority: Major > Labels: triaged > Time Spent: 16h 40m > Remaining Estimate: 0h > > (/) Add SMOKE test > Add GBK load tests. > Add CoGBK load tests. > Add Pardo load tests. > Add SideInput tests. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Work logged] (BEAM-6922) Remove LGPL test library dependency in cassandraio-test

[ https://issues.apache.org/jira/browse/BEAM-6922?focusedWorklogId=220433&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-220433 ] ASF GitHub Bot logged work on BEAM-6922: Author: ASF GitHub Bot Created on: 29/Mar/19 08:35 Start Date: 29/Mar/19 08:35 Worklog Time Spent: 10m Work Description: echauchot commented on issue #8160: [BEAM-6922] do not deliver cassandra-io test jar and test-sources jar URL: https://github.com/apache/beam/pull/8160#issuecomment-477914123 @apilloud as you which. Let's see with the upcoming Achilles release maybe this week end This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 220433) Time Spent: 40m (was: 0.5h) > Remove LGPL test library dependency in cassandraio-test > --- > > Key: BEAM-6922 > URL: https://issues.apache.org/jira/browse/BEAM-6922 > Project: Beam > Issue Type: Bug > Components: io-java-cassandra >Reporter: Etienne Chauchot >Assignee: Etienne Chauchot >Priority: Blocker > Fix For: 2.12.0 > > Time Spent: 40m > Remaining Estimate: 0h > > cassandra-io tests use cassandra-unit test library that has LGPLV3 ASF > category X licence , we cannot deliver test jars that depend on LGPL licence. > A similar discussion at > https://issues.apache.org/jira/browse/LEGAL-153?focusedCommentId=13548819 -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Work logged] (BEAM-5995) Create Jenkins jobs to run the load tests

[ https://issues.apache.org/jira/browse/BEAM-5995?focusedWorklogId=220436&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-220436 ] ASF GitHub Bot logged work on BEAM-5995: Author: ASF GitHub Bot Created on: 29/Mar/19 08:54 Start Date: 29/Mar/19 08:54 Worklog Time Spent: 10m Work Description: kkucharc commented on issue #8151: [BEAM-5995] add Jenkins job with GBK Python load tests URL: https://github.com/apache/beam/pull/8151#issuecomment-477919548 Run Java Load Tests Smoke This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 220436) Time Spent: 17h (was: 16h 50m) > Create Jenkins jobs to run the load tests > - > > Key: BEAM-5995 > URL: https://issues.apache.org/jira/browse/BEAM-5995 > Project: Beam > Issue Type: Sub-task > Components: testing >Reporter: Kasia Kucharczyk >Assignee: Kasia Kucharczyk >Priority: Major > Labels: triaged > Time Spent: 17h > Remaining Estimate: 0h > > (/) Add SMOKE test > Add GBK load tests. > Add CoGBK load tests. > Add Pardo load tests. > Add SideInput tests. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Work logged] (BEAM-5995) Create Jenkins jobs to run the load tests

[ https://issues.apache.org/jira/browse/BEAM-5995?focusedWorklogId=220435&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-220435 ] ASF GitHub Bot logged work on BEAM-5995: Author: ASF GitHub Bot Created on: 29/Mar/19 08:54 Start Date: 29/Mar/19 08:54 Worklog Time Spent: 10m Work Description: kkucharc commented on issue #8151: [BEAM-5995] add Jenkins job with GBK Python load tests URL: https://github.com/apache/beam/pull/8151#issuecomment-477919504 Run Python Load Tests Smoke This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 220435) Time Spent: 16h 50m (was: 16h 40m) > Create Jenkins jobs to run the load tests > - > > Key: BEAM-5995 > URL: https://issues.apache.org/jira/browse/BEAM-5995 > Project: Beam > Issue Type: Sub-task > Components: testing >Reporter: Kasia Kucharczyk >Assignee: Kasia Kucharczyk >Priority: Major > Labels: triaged > Time Spent: 16h 50m > Remaining Estimate: 0h > > (/) Add SMOKE test > Add GBK load tests. > Add CoGBK load tests. > Add Pardo load tests. > Add SideInput tests. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Work logged] (BEAM-5995) Create Jenkins jobs to run the load tests

[ https://issues.apache.org/jira/browse/BEAM-5995?focusedWorklogId=220440&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-220440 ] ASF GitHub Bot logged work on BEAM-5995: Author: ASF GitHub Bot Created on: 29/Mar/19 08:55 Start Date: 29/Mar/19 08:55 Worklog Time Spent: 10m Work Description: kkucharc commented on issue #8151: [BEAM-5995] add Jenkins job with GBK Python load tests URL: https://github.com/apache/beam/pull/8151#issuecomment-477161668 Run Java Load Tests Smoke This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 220440) Time Spent: 17.5h (was: 17h 20m) > Create Jenkins jobs to run the load tests > - > > Key: BEAM-5995 > URL: https://issues.apache.org/jira/browse/BEAM-5995 > Project: Beam > Issue Type: Sub-task > Components: testing >Reporter: Kasia Kucharczyk >Assignee: Kasia Kucharczyk >Priority: Major > Labels: triaged > Time Spent: 17.5h > Remaining Estimate: 0h > > (/) Add SMOKE test > Add GBK load tests. > Add CoGBK load tests. > Add Pardo load tests. > Add SideInput tests. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Work logged] (BEAM-5995) Create Jenkins jobs to run the load tests

[ https://issues.apache.org/jira/browse/BEAM-5995?focusedWorklogId=220438&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-220438 ] ASF GitHub Bot logged work on BEAM-5995: Author: ASF GitHub Bot Created on: 29/Mar/19 08:55 Start Date: 29/Mar/19 08:55 Worklog Time Spent: 10m Work Description: kkucharc commented on issue #8151: [BEAM-5995] add Jenkins job with GBK Python load tests URL: https://github.com/apache/beam/pull/8151#issuecomment-477919844 Run Load Tests Java GBK Dataflow Batch This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 220438) Time Spent: 17h 10m (was: 17h) > Create Jenkins jobs to run the load tests > - > > Key: BEAM-5995 > URL: https://issues.apache.org/jira/browse/BEAM-5995 > Project: Beam > Issue Type: Sub-task > Components: testing >Reporter: Kasia Kucharczyk >Assignee: Kasia Kucharczyk >Priority: Major > Labels: triaged > Time Spent: 17h 10m > Remaining Estimate: 0h > > (/) Add SMOKE test > Add GBK load tests. > Add CoGBK load tests. > Add Pardo load tests. > Add SideInput tests. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Work logged] (BEAM-5995) Create Jenkins jobs to run the load tests

[ https://issues.apache.org/jira/browse/BEAM-5995?focusedWorklogId=220441&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-220441 ] ASF GitHub Bot logged work on BEAM-5995: Author: ASF GitHub Bot Created on: 29/Mar/19 08:55 Start Date: 29/Mar/19 08:55 Worklog Time Spent: 10m Work Description: kkucharc commented on issue #8151: [BEAM-5995] add Jenkins job with GBK Python load tests URL: https://github.com/apache/beam/pull/8151#issuecomment-477164083 Run Python Load Tests Smoke This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 220441) Time Spent: 17h 40m (was: 17.5h) > Create Jenkins jobs to run the load tests > - > > Key: BEAM-5995 > URL: https://issues.apache.org/jira/browse/BEAM-5995 > Project: Beam > Issue Type: Sub-task > Components: testing >Reporter: Kasia Kucharczyk >Assignee: Kasia Kucharczyk >Priority: Major > Labels: triaged > Time Spent: 17h 40m > Remaining Estimate: 0h > > (/) Add SMOKE test > Add GBK load tests. > Add CoGBK load tests. > Add Pardo load tests. > Add SideInput tests. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Work logged] (BEAM-5995) Create Jenkins jobs to run the load tests

[ https://issues.apache.org/jira/browse/BEAM-5995?focusedWorklogId=220439&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-220439 ] ASF GitHub Bot logged work on BEAM-5995: Author: ASF GitHub Bot Created on: 29/Mar/19 08:55 Start Date: 29/Mar/19 08:55 Worklog Time Spent: 10m Work Description: kkucharc commented on issue #8151: [BEAM-5995] add Jenkins job with GBK Python load tests URL: https://github.com/apache/beam/pull/8151#issuecomment-477919896 Run Python Load Tests GBK Dataflow Batch This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 220439) Time Spent: 17h 20m (was: 17h 10m) > Create Jenkins jobs to run the load tests > - > > Key: BEAM-5995 > URL: https://issues.apache.org/jira/browse/BEAM-5995 > Project: Beam > Issue Type: Sub-task > Components: testing >Reporter: Kasia Kucharczyk >Assignee: Kasia Kucharczyk >Priority: Major > Labels: triaged > Time Spent: 17h 20m > Remaining Estimate: 0h > > (/) Add SMOKE test > Add GBK load tests. > Add CoGBK load tests. > Add Pardo load tests. > Add SideInput tests. -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Work logged] (BEAM-1893) Add IO module for Couchbase

[

https://issues.apache.org/jira/browse/BEAM-1893?focusedWorklogId=220465&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-220465

]

ASF GitHub Bot logged work on BEAM-1893:

Author: ASF GitHub Bot

Created on: 29/Mar/19 09:57

Start Date: 29/Mar/19 09:57

Worklog Time Spent: 10m

Work Description: aromanenko-dev commented on issue #8152:

[DoNotMerge][BEAM-1893] Implementation of CouchbaseIO

URL: https://github.com/apache/beam/pull/8152#issuecomment-477938700

@iemejia This mappings are on low level and this API is not supposed to be

exposed to users, as @EdgarLGB said. So, I think we need to follow an approach

based on splitting by number of records and offset, and implement it using

`ParDo`

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

Issue Time Tracking

---

Worklog Id: (was: 220465)

Time Spent: 2h 20m (was: 2h 10m)

> Add IO module for Couchbase

> ---

>

> Key: BEAM-1893

> URL: https://issues.apache.org/jira/browse/BEAM-1893

> Project: Beam

> Issue Type: New Feature

> Components: io-ideas

>Reporter: Xu Mingmin

>Assignee: LI Guobao

>Priority: Major

> Labels: Couchbase, IO, features, triaged

> Time Spent: 2h 20m

> Remaining Estimate: 0h

>

> Create a {{CouchbaseIO}} for Couchbase database.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Work logged] (BEAM-1893) Add IO module for Couchbase

[

https://issues.apache.org/jira/browse/BEAM-1893?focusedWorklogId=220467&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-220467

]

ASF GitHub Bot logged work on BEAM-1893:

Author: ASF GitHub Bot

Created on: 29/Mar/19 09:57

Start Date: 29/Mar/19 09:57

Worklog Time Spent: 10m

Work Description: aromanenko-dev commented on issue #8152:

[DoNotMerge][BEAM-1893] Implementation of CouchbaseIO

URL: https://github.com/apache/beam/pull/8152#issuecomment-477938700

@iemejia These mappings are on low level and this API is not supposed to be

exposed to users, as @EdgarLGB said. So, I think we need to follow an approach

based on splitting by number of records and offset, and implement it using

`ParDo`

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

Issue Time Tracking

---

Worklog Id: (was: 220467)

Time Spent: 2.5h (was: 2h 20m)

> Add IO module for Couchbase

> ---

>

> Key: BEAM-1893

> URL: https://issues.apache.org/jira/browse/BEAM-1893

> Project: Beam

> Issue Type: New Feature

> Components: io-ideas

>Reporter: Xu Mingmin

>Assignee: LI Guobao

>Priority: Major

> Labels: Couchbase, IO, features, triaged

> Time Spent: 2.5h

> Remaining Estimate: 0h

>

> Create a {{CouchbaseIO}} for Couchbase database.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Work logged] (BEAM-6929) Session Windows with lateness cause NullPointerException in Flink Runner

[

https://issues.apache.org/jira/browse/BEAM-6929?focusedWorklogId=220470&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-220470

]

ASF GitHub Bot logged work on BEAM-6929:

Author: ASF GitHub Bot

Created on: 29/Mar/19 10:08

Start Date: 29/Mar/19 10:08

Worklog Time Spent: 10m

Work Description: mxm commented on issue #8162: [BEAM-6929] Prevent

NullPointerException in Flink's CombiningState

URL: https://github.com/apache/beam/pull/8162#issuecomment-477942111

>Is it a release blocker though? This bug has existed presumably for many

releases.

I'd say yes because it is reported as a blocker for session windows by a

user on the mailing list. I've verified that it prevents merging session

windows when late data arrives.

The bug in Spark's state internals was easy to fix. It assumed that

accumulators are always modified in place, which does not have to be the case

(though it usually is for performance reasons).

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

Issue Time Tracking

---

Worklog Id: (was: 220470)

Time Spent: 50m (was: 40m)

> Session Windows with lateness cause NullPointerException in Flink Runner

>

>

> Key: BEAM-6929

> URL: https://issues.apache.org/jira/browse/BEAM-6929

> Project: Beam

> Issue Type: Bug

> Components: runner-flink

>Reporter: Maximilian Michels

>Assignee: Maximilian Michels

>Priority: Major

> Fix For: 2.12.0

>

> Time Spent: 50m

> Remaining Estimate: 0h

>

> Reported on the mailing list:

> {noformat}

> I am using Beam 2.11.0, Runner - beam-runners-flink-1.7, Flink Cluster -

> 1.7.2.

> I have this flow in my pipeline:

> KafkaSource(withCreateTime()) --> ApplyWindow(SessionWindow with

> gapDuration=1 Minute, lateness=3 Minutes, AccumulatingFiredPanes, default

> trigger) --> BeamSQL(GroupBy query) --> Window.remerge() --> Enrichment

> --> KafkaSink

> I am generating data in such a way that the first two records belong to two

> different sessions. And, generating the third record before the first session

> expires with the timestamp for the third record in such a way that the two

> sessions will be merged to become a single session.

> For Example, These are the sample input and output obtained when I ran the

> same pipeline in DirectRunner.

> Sample Input:

> {"Col1":{"string":"str1"}, "Timestamp":{"string":"2019-03-27 15-27-44"}}

> {"Col1":{"string":"str1"}, "Timestamp":{"string":"2019-03-27 15-28-51"}}

> {"Col1":{"string":"str1"}, "Timestamp":{"string":"2019-03-27 15-28-26"}}

> Sample Output:

> {"Col1":{"string":"str1"},"NumberOfRecords":{"long":1},"WST":{"string":"2019-03-27

> 15-27-44"},"WET":{"string":"2019-03-27 15-28-44"}}

> {"Col1":{"string":"str1"},"NumberOfRecords":{"long":1},"WST":{"string":"2019-03-27

> 15-28-51"},"WET":{"string":"2019-03-27 15-29-51"}}

> {"Col1":{"string":"str1"},"NumberOfRecords":{"long":3},"WST":{"string":"2019-03-27

> 15-27-44"},"WET":{"string":"2019-03-27 15-29-51"}}

> Where "NumberOfRecords" is the count, "WST" is the Avro field Name which

> indicates the window start time for the session window. Similarly "WET"

> indicates the window End time of the session window. I am getting "WST" and

> "WET" after remerging and applying ParDo(Enrichment stage of the pipeline).

> The program ran successfully in DirectRunner. But, in FlinkRunner, I am

> getting this exception when the third record arrives:

> 2019-03-27 15:31:00,442 INFO

> org.apache.flink.runtime.executiongraph.ExecutionGraph- Source:

> DfleKafkaSource/KafkaIO.Read/Read(KafkaUnboundedSource) -> Flat Map ->

> DfleKafkaSource/ParDo(ConvertKafkaRecordtoRow)/ParMultiDo(ConvertKafkaRecordtoRow)

> -> (Window.Into()/Window.Assign.out ->

> DfleSql/SqlTransform/BeamIOSourceRel_4/Convert.ConvertTransform/ParDo(Anonymous)/ParMultiDo(Anonymous)

> ->

> DfleSql/SqlTransform/BeamAggregationRel_45/Group.CombineFieldsByFields/Group.ByFields/Group

> by fields/ParMultiDo(Anonymous) -> ToKeyedWorkItem,

> DfleKafkaSink/ParDo(RowToGenericRecordConverter)/ParMultiDo(RowToGenericRecordConverter)

> -> DfleKafkaSink/KafkaIO.KafkaValueWrite/Kafka values with default

> key/Map/ParMultiDo(Anonymous) ->

> DfleKafkaSink/KafkaIO.KafkaValueWrite/KafkaIO.Write/Kafka

> ProducerRecord/Map/ParMultiDo(Anonymous) ->

> DfleKafkaSink/KafkaIO.KafkaValueWrite/KafkaIO.Write/KafkaIO.WriteRecords/ParDo(KafkaWriter)/ParMultiDo(KafkaWriter))

> (1/1) (d00be62e110c

[jira] [Updated] (BEAM-6929) Session Windows with lateness cause NullPointerException in Flink Runner

[

https://issues.apache.org/jira/browse/BEAM-6929?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Maximilian Michels updated BEAM-6929:

-

Priority: Critical (was: Major)

> Session Windows with lateness cause NullPointerException in Flink Runner

>

>

> Key: BEAM-6929

> URL: https://issues.apache.org/jira/browse/BEAM-6929

> Project: Beam

> Issue Type: Bug

> Components: runner-flink

>Reporter: Maximilian Michels

>Assignee: Maximilian Michels

>Priority: Critical

> Fix For: 2.12.0

>

> Time Spent: 1h

> Remaining Estimate: 0h

>

> Reported on the mailing list:

> {noformat}

> I am using Beam 2.11.0, Runner - beam-runners-flink-1.7, Flink Cluster -

> 1.7.2.

> I have this flow in my pipeline:

> KafkaSource(withCreateTime()) --> ApplyWindow(SessionWindow with

> gapDuration=1 Minute, lateness=3 Minutes, AccumulatingFiredPanes, default

> trigger) --> BeamSQL(GroupBy query) --> Window.remerge() --> Enrichment

> --> KafkaSink

> I am generating data in such a way that the first two records belong to two

> different sessions. And, generating the third record before the first session

> expires with the timestamp for the third record in such a way that the two

> sessions will be merged to become a single session.

> For Example, These are the sample input and output obtained when I ran the

> same pipeline in DirectRunner.

> Sample Input:

> {"Col1":{"string":"str1"}, "Timestamp":{"string":"2019-03-27 15-27-44"}}

> {"Col1":{"string":"str1"}, "Timestamp":{"string":"2019-03-27 15-28-51"}}

> {"Col1":{"string":"str1"}, "Timestamp":{"string":"2019-03-27 15-28-26"}}

> Sample Output:

> {"Col1":{"string":"str1"},"NumberOfRecords":{"long":1},"WST":{"string":"2019-03-27

> 15-27-44"},"WET":{"string":"2019-03-27 15-28-44"}}

> {"Col1":{"string":"str1"},"NumberOfRecords":{"long":1},"WST":{"string":"2019-03-27

> 15-28-51"},"WET":{"string":"2019-03-27 15-29-51"}}

> {"Col1":{"string":"str1"},"NumberOfRecords":{"long":3},"WST":{"string":"2019-03-27

> 15-27-44"},"WET":{"string":"2019-03-27 15-29-51"}}

> Where "NumberOfRecords" is the count, "WST" is the Avro field Name which

> indicates the window start time for the session window. Similarly "WET"

> indicates the window End time of the session window. I am getting "WST" and

> "WET" after remerging and applying ParDo(Enrichment stage of the pipeline).

> The program ran successfully in DirectRunner. But, in FlinkRunner, I am

> getting this exception when the third record arrives:

> 2019-03-27 15:31:00,442 INFO

> org.apache.flink.runtime.executiongraph.ExecutionGraph- Source:

> DfleKafkaSource/KafkaIO.Read/Read(KafkaUnboundedSource) -> Flat Map ->

> DfleKafkaSource/ParDo(ConvertKafkaRecordtoRow)/ParMultiDo(ConvertKafkaRecordtoRow)

> -> (Window.Into()/Window.Assign.out ->

> DfleSql/SqlTransform/BeamIOSourceRel_4/Convert.ConvertTransform/ParDo(Anonymous)/ParMultiDo(Anonymous)

> ->

> DfleSql/SqlTransform/BeamAggregationRel_45/Group.CombineFieldsByFields/Group.ByFields/Group

> by fields/ParMultiDo(Anonymous) -> ToKeyedWorkItem,

> DfleKafkaSink/ParDo(RowToGenericRecordConverter)/ParMultiDo(RowToGenericRecordConverter)

> -> DfleKafkaSink/KafkaIO.KafkaValueWrite/Kafka values with default

> key/Map/ParMultiDo(Anonymous) ->

> DfleKafkaSink/KafkaIO.KafkaValueWrite/KafkaIO.Write/Kafka

> ProducerRecord/Map/ParMultiDo(Anonymous) ->

> DfleKafkaSink/KafkaIO.KafkaValueWrite/KafkaIO.Write/KafkaIO.WriteRecords/ParDo(KafkaWriter)/ParMultiDo(KafkaWriter))

> (1/1) (d00be62e110cef00d9d772042f4b87a9) switched from DEPLOYING to RUNNING.

> 2019-03-27 15:33:25,427 INFO

> org.apache.flink.runtime.executiongraph.ExecutionGraph-

> DfleSql/SqlTransform/BeamAggregationRel_45/Group.CombineFieldsByFields/Group.ByFields/GroupByKey

> ->

> DfleSql/SqlTransform/BeamAggregationRel_45/Group.CombineFieldsByFields/Combine.GroupedValues/ParDo(Anonymous)/ParMultiDo(Anonymous)

> ->

> DfleSql/SqlTransform/BeamAggregationRel_45/mergeRecord/ParMultiDo(Anonymous)

> -> DfleSql/SqlTransform/BeamCalcRel_46/ParDo(Calc)/ParMultiDo(Calc) ->

> DfleSql/Window.Remerge/Identity/Map/ParMultiDo(Anonymous) ->

> DfleSql/ParDo(EnrichRecordWithWindowTimeInfo)/ParMultiDo(EnrichRecordWithWindowTimeInfo)

> ->

> DfleKafkaSink2/ParDo(RowToGenericRecordConverter)/ParMultiDo(RowToGenericRecordConverter)

> -> DfleKafkaSink2/KafkaIO.KafkaValueWrite/Kafka values with default

> key/Map/ParMultiDo(Anonymous) ->

> DfleKafkaSink2/KafkaIO.KafkaValueWrite/KafkaIO.Write/Kafka

> ProducerRecord/Map/ParMultiDo(Anonymous) ->

> DfleKafkaSink2/KafkaIO.KafkaValueWrite/KafkaIO.Write/KafkaIO.WriteRecords/ParDo(KafkaWriter)/ParMultiDo(KafkaWriter)

> (1/1) (d95af17b7457443c13bd327b46b282e6) switched from RUNNING to FAILED.

> org.apache.beam.sdk.util.UserCode

[jira] [Work logged] (BEAM-6929) Session Windows with lateness cause NullPointerException in Flink Runner

[

https://issues.apache.org/jira/browse/BEAM-6929?focusedWorklogId=220472&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-220472

]

ASF GitHub Bot logged work on BEAM-6929:

Author: ASF GitHub Bot

Created on: 29/Mar/19 10:11

Start Date: 29/Mar/19 10:11

Worklog Time Spent: 10m

Work Description: mxm commented on pull request #8162: [BEAM-6929]

Prevent NullPointerException in Flink's CombiningState

URL: https://github.com/apache/beam/pull/8162#discussion_r270347525

##

File path:

runners/spark/src/main/java/org/apache/beam/runners/spark/stateful/SparkStateInternals.java

##

@@ -297,8 +297,7 @@ public OutputT read() {

@Override

public void add(InputT input) {

- AccumT accum = getAccum();

- combineFn.addInput(accum, input);

+ AccumT accum = combineFn.addInput(getAccum(), input);

Review comment:

CC @iemejia FYI

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

Issue Time Tracking

---

Worklog Id: (was: 220472)

Time Spent: 1h (was: 50m)

> Session Windows with lateness cause NullPointerException in Flink Runner

>

>

> Key: BEAM-6929

> URL: https://issues.apache.org/jira/browse/BEAM-6929

> Project: Beam

> Issue Type: Bug

> Components: runner-flink

>Reporter: Maximilian Michels

>Assignee: Maximilian Michels

>Priority: Major

> Fix For: 2.12.0

>

> Time Spent: 1h

> Remaining Estimate: 0h

>

> Reported on the mailing list:

> {noformat}

> I am using Beam 2.11.0, Runner - beam-runners-flink-1.7, Flink Cluster -

> 1.7.2.

> I have this flow in my pipeline:

> KafkaSource(withCreateTime()) --> ApplyWindow(SessionWindow with

> gapDuration=1 Minute, lateness=3 Minutes, AccumulatingFiredPanes, default

> trigger) --> BeamSQL(GroupBy query) --> Window.remerge() --> Enrichment

> --> KafkaSink

> I am generating data in such a way that the first two records belong to two

> different sessions. And, generating the third record before the first session

> expires with the timestamp for the third record in such a way that the two

> sessions will be merged to become a single session.

> For Example, These are the sample input and output obtained when I ran the

> same pipeline in DirectRunner.

> Sample Input:

> {"Col1":{"string":"str1"}, "Timestamp":{"string":"2019-03-27 15-27-44"}}

> {"Col1":{"string":"str1"}, "Timestamp":{"string":"2019-03-27 15-28-51"}}

> {"Col1":{"string":"str1"}, "Timestamp":{"string":"2019-03-27 15-28-26"}}

> Sample Output:

> {"Col1":{"string":"str1"},"NumberOfRecords":{"long":1},"WST":{"string":"2019-03-27

> 15-27-44"},"WET":{"string":"2019-03-27 15-28-44"}}

> {"Col1":{"string":"str1"},"NumberOfRecords":{"long":1},"WST":{"string":"2019-03-27

> 15-28-51"},"WET":{"string":"2019-03-27 15-29-51"}}

> {"Col1":{"string":"str1"},"NumberOfRecords":{"long":3},"WST":{"string":"2019-03-27

> 15-27-44"},"WET":{"string":"2019-03-27 15-29-51"}}

> Where "NumberOfRecords" is the count, "WST" is the Avro field Name which

> indicates the window start time for the session window. Similarly "WET"

> indicates the window End time of the session window. I am getting "WST" and

> "WET" after remerging and applying ParDo(Enrichment stage of the pipeline).

> The program ran successfully in DirectRunner. But, in FlinkRunner, I am

> getting this exception when the third record arrives:

> 2019-03-27 15:31:00,442 INFO

> org.apache.flink.runtime.executiongraph.ExecutionGraph- Source:

> DfleKafkaSource/KafkaIO.Read/Read(KafkaUnboundedSource) -> Flat Map ->

> DfleKafkaSource/ParDo(ConvertKafkaRecordtoRow)/ParMultiDo(ConvertKafkaRecordtoRow)

> -> (Window.Into()/Window.Assign.out ->

> DfleSql/SqlTransform/BeamIOSourceRel_4/Convert.ConvertTransform/ParDo(Anonymous)/ParMultiDo(Anonymous)

> ->

> DfleSql/SqlTransform/BeamAggregationRel_45/Group.CombineFieldsByFields/Group.ByFields/Group

> by fields/ParMultiDo(Anonymous) -> ToKeyedWorkItem,

> DfleKafkaSink/ParDo(RowToGenericRecordConverter)/ParMultiDo(RowToGenericRecordConverter)

> -> DfleKafkaSink/KafkaIO.KafkaValueWrite/Kafka values with default

> key/Map/ParMultiDo(Anonymous) ->

> DfleKafkaSink/KafkaIO.KafkaValueWrite/KafkaIO.Write/Kafka

> ProducerRecord/Map/ParMultiDo(Anonymous) ->

> DfleKafkaSink/KafkaIO.KafkaValueWrite/KafkaIO.Write/KafkaIO.WriteRecords/ParDo(KafkaWriter)/ParMultiDo(KafkaWriter))

> (1/1) (d00be62e110cef00d9d772042f4b87a9) switched from DEPLOYING to RUNNING.

> 2019-03-27

[jira] [Commented] (BEAM-3312) Add IO convenient "with" methods

[

https://issues.apache.org/jira/browse/BEAM-3312?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16804770#comment-16804770

]

Ismaël Mejía commented on BEAM-3312:

We need to add the methods to the `ConnectionConfiguration` object not to the

Read/Write transforms, this is simpler and keeps the nice builder style, only

difference is that the connection building stays in one place so it is less

error-prone.

> Add IO convenient "with" methods

>

>

> Key: BEAM-3312

> URL: https://issues.apache.org/jira/browse/BEAM-3312

> Project: Beam

> Issue Type: Improvement

> Components: io-java-mqtt

>Reporter: Jean-Baptiste Onofré

>Assignee: Jean-Baptiste Onofré

>Priority: Major

> Labels: triaged

>

> Now, for instance, {{MqttIO}} requires a {{ConnectionConfiguration}} object

> to pass the URL and topic name. It means, the user has to do something like:

> {code}

> MqttIO.read()

> .withConnectionConfiguration(MqttIO.ConnectionConfiguration.create("tcp://localhost:1883",

> "CAR"))

> {code}

> It's pretty verbose and long. I think it makes sense to provide convenient

> "direct" method allowing to do:

> {code}

> MqttIO.read().withUrl().withTopic()

> {code}

> or even:

> {code}

> MqttIO.read().withConnection("url", "topic")

> {code}

> The same apply for some other IOs (JMS, ...).

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Reopened] (BEAM-3604) MqttIOTest testReadNoClientId failure timeout

[

https://issues.apache.org/jira/browse/BEAM-3604?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Ismaël Mejía reopened BEAM-3604:

The test was disabled but it has not been fixed yet.

> MqttIOTest testReadNoClientId failure timeout

> -

>

> Key: BEAM-3604

> URL: https://issues.apache.org/jira/browse/BEAM-3604

> Project: Beam

> Issue Type: Bug

> Components: io-java-mqtt

>Reporter: Kenneth Knowles

>Assignee: Ismaël Mejía

>Priority: Critical

> Labels: flake

> Fix For: Not applicable

>

> Time Spent: 1h 10m

> Remaining Estimate: 0h

>

> I've seen failures a bit today. Here is one:

> [https://builds.apache.org/job/beam_PreCommit_Java_GradleBuild/1758/testReport/junit/org.apache.beam.sdk.io.mqtt/MqttIOTest/testReadNoClientId/]

> Filing all flakes as "Critical" priority so we can sickbay or fix.

> Since that build will get GC'd, here is the Standard Error. It looks like

> from that perspective everything went as planned, but perhaps the test has a

> race condition or something?

> {code}

> Feb 01, 2018 11:28:01 PM org.apache.beam.sdk.io.mqtt.MqttIOTest startBroker

> INFO: Finding free network port

> Feb 01, 2018 11:28:01 PM org.apache.beam.sdk.io.mqtt.MqttIOTest startBroker

> INFO: Starting ActiveMQ brokerService on 57986

> Feb 01, 2018 11:28:03 PM org.apache.activemq.broker.BrokerService

> doStartPersistenceAdapter

> INFO: Using Persistence Adapter: MemoryPersistenceAdapter

> Feb 01, 2018 11:28:04 PM org.apache.activemq.broker.BrokerService

> doStartBroker

> INFO: Apache ActiveMQ 5.13.1 (localhost,

> ID:115.98.154.104.bc.googleusercontent.com-38646-1517527683931-0:1) is

> starting

> Feb 01, 2018 11:28:04 PM

> org.apache.activemq.transport.TransportServerThreadSupport doStart

> INFO: Listening for connections at: mqtt://localhost:57986

> Feb 01, 2018 11:28:04 PM org.apache.activemq.broker.TransportConnector start

> INFO: Connector mqtt://localhost:57986 started

> Feb 01, 2018 11:28:04 PM org.apache.activemq.broker.BrokerService

> doStartBroker

> INFO: Apache ActiveMQ 5.13.1 (localhost,

> ID:115.98.154.104.bc.googleusercontent.com-38646-1517527683931-0:1) started

> Feb 01, 2018 11:28:04 PM org.apache.activemq.broker.BrokerService

> doStartBroker

> INFO: For help or more information please see: http://activemq.apache.org

> Feb 01, 2018 11:28:26 PM org.apache.activemq.broker.BrokerService stop

> INFO: Apache ActiveMQ 5.13.1 (localhost,

> ID:115.98.154.104.bc.googleusercontent.com-38646-1517527683931-0:1) is

> shutting down

> Feb 01, 2018 11:28:26 PM org.apache.activemq.broker.TransportConnector stop

> INFO: Connector mqtt://localhost:57986 stopped

> Feb 01, 2018 11:28:26 PM org.apache.activemq.broker.BrokerService stop

> INFO: Apache ActiveMQ 5.13.1 (localhost,

> ID:115.98.154.104.bc.googleusercontent.com-38646-1517527683931-0:1) uptime

> 24.039 seconds

> Feb 01, 2018 11:28:26 PM org.apache.activemq.broker.BrokerService stop

> INFO: Apache ActiveMQ 5.13.1 (localhost,

> ID:115.98.154.104.bc.googleusercontent.com-38646-1517527683931-0:1) is

> shutdown

> Feb 01, 2018 11:28:26 PM org.apache.beam.sdk.io.mqtt.MqttIOTest startBroker

> INFO: Finding free network port

> Feb 01, 2018 11:28:26 PM org.apache.beam.sdk.io.mqtt.MqttIOTest startBroker

> INFO: Starting ActiveMQ brokerService on 46799

> Feb 01, 2018 11:28:26 PM org.apache.activemq.broker.BrokerService

> doStartPersistenceAdapter

> INFO: Using Persistence Adapter: MemoryPersistenceAdapter

> Feb 01, 2018 11:28:26 PM org.apache.activemq.broker.BrokerService

> doStartBroker

> INFO: Apache ActiveMQ 5.13.1 (localhost,

> ID:115.98.154.104.bc.googleusercontent.com-38646-1517527683931-0:2) is

> starting

> Feb 01, 2018 11:28:26 PM

> org.apache.activemq.transport.TransportServerThreadSupport doStart

> INFO: Listening for connections at: mqtt://localhost:46799

> Feb 01, 2018 11:28:26 PM org.apache.activemq.broker.TransportConnector start

> INFO: Connector mqtt://localhost:46799 started

> Feb 01, 2018 11:28:26 PM org.apache.activemq.broker.BrokerService

> doStartBroker

> INFO: Apache ActiveMQ 5.13.1 (localhost,

> ID:115.98.154.104.bc.googleusercontent.com-38646-1517527683931-0:2) started

> Feb 01, 2018 11:28:26 PM org.apache.activemq.broker.BrokerService

> doStartBroker

> INFO: For help or more information please see: http://activemq.apache.org

> Feb 01, 2018 11:28:28 PM org.apache.beam.sdk.io.mqtt.MqttIOTest

> lambda$testRead$1

> INFO: Waiting pipeline connected to the MQTT broker before sending messages

> ...

> Feb 01, 2018 11:28:35 PM org.apache.activemq.broker.BrokerService stop

> INFO: Apache ActiveMQ 5.13.1 (localhost,

> ID:115.98.154.104.bc.googleusercontent.com-38646-1517527683931-0:2) is

> shutting down

> Feb 01, 2018 11:28:35 PM org.apache.activemq.broker.TransportConnector stop

> INFO: Connector mqtt://local

[jira] [Work logged] (BEAM-2939) Fn API streaming SDF support

[

https://issues.apache.org/jira/browse/BEAM-2939?focusedWorklogId=220479&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-220479

]

ASF GitHub Bot logged work on BEAM-2939:

Author: ASF GitHub Bot

Created on: 29/Mar/19 10:25

Start Date: 29/Mar/19 10:25

Worklog Time Spent: 10m

Work Description: robertwb commented on pull request #8088: [BEAM-2939]

SDF sizing in FnAPI and Python SDK/runner.

URL: https://github.com/apache/beam/pull/8088#discussion_r270352238

##

File path: model/pipeline/src/main/proto/beam_runner_api.proto

##

@@ -301,8 +301,15 @@ message StandardPTransforms {

// Like PROCESS_KEYED_ELEMENTS, but without the unique key - just elements

// and restrictions.

-// Input: KV(element, restriction); output: DoFn's output.

+// Input: KV(KV(element, restriction), weight); output: DoFn's output.

PROCESS_ELEMENTS = 3 [(beam_urn) =

"beam:transform:sdf_process_elements:v1"];

Review comment:

Incomplete find-and-replace. Fixed.

I updated the URNs as per our discussion. "with weights" was ambiguous

(producing vs. consuming) so I went with "split_and_size" and

"process_weighted."

https://github.com/apache/beam/pull/8166 for the double coder.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

Issue Time Tracking

---

Worklog Id: (was: 220479)

Time Spent: 14h 10m (was: 14h)

> Fn API streaming SDF support

>

>

> Key: BEAM-2939

> URL: https://issues.apache.org/jira/browse/BEAM-2939

> Project: Beam

> Issue Type: Improvement

> Components: beam-model

>Reporter: Henning Rohde

>Assignee: Luke Cwik

>Priority: Major

> Labels: portability, triaged

> Time Spent: 14h 10m

> Remaining Estimate: 0h

>

> The Fn API should support streaming SDF. Detailed design TBD.

> Once design is ready, expand subtasks similarly to BEAM-2822.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Assigned] (BEAM-3312) Add IO convenient "with" methods

[

https://issues.apache.org/jira/browse/BEAM-3312?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

LI Guobao reassigned BEAM-3312:

---

Assignee: LI Guobao (was: Jean-Baptiste Onofré)

> Add IO convenient "with" methods

>

>

> Key: BEAM-3312

> URL: https://issues.apache.org/jira/browse/BEAM-3312

> Project: Beam

> Issue Type: Improvement

> Components: io-java-mqtt

>Reporter: Jean-Baptiste Onofré

>Assignee: LI Guobao

>Priority: Major

> Labels: triaged

>

> Now, for instance, {{MqttIO}} requires a {{ConnectionConfiguration}} object

> to pass the URL and topic name. It means, the user has to do something like:

> {code}

> MqttIO.read()

> .withConnectionConfiguration(MqttIO.ConnectionConfiguration.create("tcp://localhost:1883",

> "CAR"))

> {code}

> It's pretty verbose and long. I think it makes sense to provide convenient

> "direct" method allowing to do:

> {code}

> MqttIO.read().withUrl().withTopic()

> {code}

> or even:

> {code}

> MqttIO.read().withConnection("url", "topic")

> {code}

> The same apply for some other IOs (JMS, ...).

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Updated] (BEAM-3312) Add convenient "with" to MqttIO.ConnectionConfiguration

[

https://issues.apache.org/jira/browse/BEAM-3312?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Ismaël Mejía updated BEAM-3312:

---

Summary: Add convenient "with" to MqttIO.ConnectionConfiguration (was: Add

IO convenient "with" methods)

> Add convenient "with" to MqttIO.ConnectionConfiguration

> ---

>

> Key: BEAM-3312

> URL: https://issues.apache.org/jira/browse/BEAM-3312

> Project: Beam

> Issue Type: Improvement

> Components: io-java-mqtt

>Reporter: Jean-Baptiste Onofré

>Assignee: LI Guobao

>Priority: Major

> Labels: triaged

>

> Now, for instance, {{MqttIO}} requires a {{ConnectionConfiguration}} object

> to pass the URL and topic name. It means, the user has to do something like:

> {code}

> MqttIO.read()

> .withConnectionConfiguration(MqttIO.ConnectionConfiguration.create("tcp://localhost:1883",

> "CAR"))

> {code}

> It's pretty verbose and long. I think it makes sense to provide convenient

> "direct" method allowing to do:

> {code}

> MqttIO.read().withUrl().withTopic()

> {code}

> or even:

> {code}

> MqttIO.read().withConnection("url", "topic")

> {code}

> The same apply for some other IOs (JMS, ...).

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (BEAM-3312) Add convenient "with" to MqttIO.ConnectionConfiguration

[

https://issues.apache.org/jira/browse/BEAM-3312?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16804794#comment-16804794

]

LI Guobao commented on BEAM-3312:

-

So let us just extend the `ConnectionConfiguration` currently and probably we

might just delegate the connection construction to the users.

> Add convenient "with" to MqttIO.ConnectionConfiguration

> ---

>

> Key: BEAM-3312

> URL: https://issues.apache.org/jira/browse/BEAM-3312

> Project: Beam

> Issue Type: Improvement

> Components: io-java-mqtt

>Reporter: Jean-Baptiste Onofré

>Assignee: LI Guobao

>Priority: Major

> Labels: triaged

>

> Now, for instance, {{MqttIO}} requires a {{ConnectionConfiguration}} object

> to pass the URL and topic name. It means, the user has to do something like:

> {code}

> MqttIO.read()

> .withConnectionConfiguration(MqttIO.ConnectionConfiguration.create("tcp://localhost:1883",

> "CAR"))

> {code}

> It's pretty verbose and long. I think it makes sense to provide convenient

> "direct" method allowing to do:

> {code}

> MqttIO.read().withUrl().withTopic()

> {code}

> or even:

> {code}

> MqttIO.read().withConnection("url", "topic")

> {code}

> The same apply for some other IOs (JMS, ...).

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Work logged] (BEAM-6929) Session Windows with lateness cause NullPointerException in Flink Runner

[

https://issues.apache.org/jira/browse/BEAM-6929?focusedWorklogId=220487&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-220487

]

ASF GitHub Bot logged work on BEAM-6929:

Author: ASF GitHub Bot

Created on: 29/Mar/19 11:13

Start Date: 29/Mar/19 11:13

Worklog Time Spent: 10m

Work Description: mxm commented on issue #8162: [BEAM-6929] Prevent

NullPointerException in Flink's CombiningState

URL: https://github.com/apache/beam/pull/8162#issuecomment-477960940

Run Java PreCommit

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

Issue Time Tracking

---

Worklog Id: (was: 220487)

Time Spent: 1h 10m (was: 1h)

> Session Windows with lateness cause NullPointerException in Flink Runner

>

>

> Key: BEAM-6929

> URL: https://issues.apache.org/jira/browse/BEAM-6929

> Project: Beam

> Issue Type: Bug

> Components: runner-flink

>Reporter: Maximilian Michels

>Assignee: Maximilian Michels

>Priority: Critical

> Fix For: 2.12.0

>

> Time Spent: 1h 10m

> Remaining Estimate: 0h

>

> Reported on the mailing list:

> {noformat}

> I am using Beam 2.11.0, Runner - beam-runners-flink-1.7, Flink Cluster -

> 1.7.2.

> I have this flow in my pipeline:

> KafkaSource(withCreateTime()) --> ApplyWindow(SessionWindow with

> gapDuration=1 Minute, lateness=3 Minutes, AccumulatingFiredPanes, default

> trigger) --> BeamSQL(GroupBy query) --> Window.remerge() --> Enrichment

> --> KafkaSink

> I am generating data in such a way that the first two records belong to two

> different sessions. And, generating the third record before the first session

> expires with the timestamp for the third record in such a way that the two

> sessions will be merged to become a single session.

> For Example, These are the sample input and output obtained when I ran the

> same pipeline in DirectRunner.

> Sample Input:

> {"Col1":{"string":"str1"}, "Timestamp":{"string":"2019-03-27 15-27-44"}}

> {"Col1":{"string":"str1"}, "Timestamp":{"string":"2019-03-27 15-28-51"}}

> {"Col1":{"string":"str1"}, "Timestamp":{"string":"2019-03-27 15-28-26"}}

> Sample Output:

> {"Col1":{"string":"str1"},"NumberOfRecords":{"long":1},"WST":{"string":"2019-03-27

> 15-27-44"},"WET":{"string":"2019-03-27 15-28-44"}}

> {"Col1":{"string":"str1"},"NumberOfRecords":{"long":1},"WST":{"string":"2019-03-27

> 15-28-51"},"WET":{"string":"2019-03-27 15-29-51"}}

> {"Col1":{"string":"str1"},"NumberOfRecords":{"long":3},"WST":{"string":"2019-03-27

> 15-27-44"},"WET":{"string":"2019-03-27 15-29-51"}}

> Where "NumberOfRecords" is the count, "WST" is the Avro field Name which

> indicates the window start time for the session window. Similarly "WET"

> indicates the window End time of the session window. I am getting "WST" and

> "WET" after remerging and applying ParDo(Enrichment stage of the pipeline).

> The program ran successfully in DirectRunner. But, in FlinkRunner, I am

> getting this exception when the third record arrives:

> 2019-03-27 15:31:00,442 INFO

> org.apache.flink.runtime.executiongraph.ExecutionGraph- Source:

> DfleKafkaSource/KafkaIO.Read/Read(KafkaUnboundedSource) -> Flat Map ->

> DfleKafkaSource/ParDo(ConvertKafkaRecordtoRow)/ParMultiDo(ConvertKafkaRecordtoRow)

> -> (Window.Into()/Window.Assign.out ->

> DfleSql/SqlTransform/BeamIOSourceRel_4/Convert.ConvertTransform/ParDo(Anonymous)/ParMultiDo(Anonymous)

> ->

> DfleSql/SqlTransform/BeamAggregationRel_45/Group.CombineFieldsByFields/Group.ByFields/Group

> by fields/ParMultiDo(Anonymous) -> ToKeyedWorkItem,

> DfleKafkaSink/ParDo(RowToGenericRecordConverter)/ParMultiDo(RowToGenericRecordConverter)

> -> DfleKafkaSink/KafkaIO.KafkaValueWrite/Kafka values with default

> key/Map/ParMultiDo(Anonymous) ->

> DfleKafkaSink/KafkaIO.KafkaValueWrite/KafkaIO.Write/Kafka

> ProducerRecord/Map/ParMultiDo(Anonymous) ->

> DfleKafkaSink/KafkaIO.KafkaValueWrite/KafkaIO.Write/KafkaIO.WriteRecords/ParDo(KafkaWriter)/ParMultiDo(KafkaWriter))

> (1/1) (d00be62e110cef00d9d772042f4b87a9) switched from DEPLOYING to RUNNING.

> 2019-03-27 15:33:25,427 INFO

> org.apache.flink.runtime.executiongraph.ExecutionGraph-

> DfleSql/SqlTransform/BeamAggregationRel_45/Group.CombineFieldsByFields/Group.ByFields/GroupByKey

> ->

> DfleSql/SqlTransform/BeamAggregationRel_45/Group.CombineFieldsByFields/Combine.GroupedValues/ParDo(Anonymous)/ParMultiDo(Anonymous)

> ->

> DfleSql/SqlTransform/BeamAggregationRel_4

[jira] [Work logged] (BEAM-3312) Add convenient "with" to MqttIO.ConnectionConfiguration

[ https://issues.apache.org/jira/browse/BEAM-3312?focusedWorklogId=220491&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-220491 ] ASF GitHub Bot logged work on BEAM-3312: Author: ASF GitHub Bot Created on: 29/Mar/19 11:22 Start Date: 29/Mar/19 11:22 Worklog Time Spent: 10m Work Description: EdgarLGB commented on pull request #8167: [BEAM-3312] Improve the builder to MqttIO connection URL: https://github.com/apache/beam/pull/8167 Hi all, Here is the PR to add the builder methods allowing to configure the MqttIO connection. The idea is to stick to the builder style instead of putting the arguments directly in the constructor. Thanks in advance for the review. (R @iemejia ) Regards, Guobao Post-Commit Tests Status (on master branch) Lang | SDK | Apex | Dataflow | Flink | Gearpump | Samza | Spark --- | --- | --- | --- | --- | --- | --- | --- Go | [](https://builds.apache.org/job/beam_PostCommit_Go/lastCompletedBuild/) | --- | --- | --- | --- | --- | --- Java | [](https://builds.apache.org/job/beam_PostCommit_Java/lastCompletedBuild/) | [](https://builds.apache.org/job/beam_PostCommit_Java_ValidatesRunner_Apex/lastCompletedBuild/) | [](https://builds.apache.org/job/beam_PostCommit_Java_ValidatesRunner_Dataflow/lastCompletedBuild/) | [](https://builds.apache.org/job/beam_PostCommit_Java_ValidatesRunner_Flink/lastCompletedBuild/)[](https://builds.apache.org/job/beam_PostCommit_Java_PVR_Flink_Batch/lastCompletedBuild/)[](https://builds.apache.org/job/beam_PostCommit_Java_PVR_Flink_Streaming/lastCompletedBuild/) | [](https://builds.apache.org/job/beam_PostCommit_Java_ValidatesRunner_Gearpump/lastCompletedBuild/) | [](https://builds.apache.org/job/beam_PostCommit_Java_ValidatesRunner_Samza/lastCompletedBuild/) | [](https://builds.apache.org/job/beam_PostCommit_Java_ValidatesRunner_Spark/lastCompletedBuild/) Python | [](https://builds.apache.org/job/beam_PostCommit_Python_Verify/lastCompletedBuild/)[](https://builds.apache.org/job/beam_PostCommit_Python3_Verify/lastCompletedBuild/) | --- | [](https://builds.apache.org/job/beam_PostCommit_Py_VR_Dataflow/lastCompletedBuild/) [](https://builds.apache.org/job/beam_PostCommit_Py_ValCont/lastCompletedBuild/) | [](https://builds.apache.org/job/beam_PreCommit_Python_PVR_Flink_Cron/lastCompletedBuild/) | --- | --- | --- Pre-Commit Tests Status (on master branch) --- |Java | Python | Go | Website --- | --- | --- | --- | --- Non-portable | [](https://builds.apache.org/job/beam_PreCommit_Java_Cron/lastCompletedBuild/) | [](https://builds.apache.org/job/beam_PreCommit_Python_Cron/lastCompletedBuild/) | [ Streaming FlinkTransformOverrides are not applied without explicit streaming mode

Maximilian Michels created BEAM-6937:

Summary: Streaming FlinkTransformOverrides are not applied without

explicit streaming mode

Key: BEAM-6937

URL: https://issues.apache.org/jira/browse/BEAM-6937

Project: Beam

Issue Type: Bug

Components: runner-flink

Reporter: Maximilian Michels

Assignee: Maximilian Michels

Fix For: 2.12.0

When streaming is set to false the streaming mode will be switched to true if

the pipeline contains unbounded sources. There is a regression which prevents

PipelineOverrides to be applied correctly in this case.

As reported on the mailing list:

{noformat}

I just upgraded to Flink 1.7.2 from 1.6.2 with my dev cluster and from Beam

2.10 to 2.11 and I am seeing this error when starting my pipelines:

org.apache.flink.client.program.ProgramInvocationException: The main method

caused an error.

at

org.apache.flink.client.program.PackagedProgram.callMainMethod(PackagedProgram.java:546)

at

org.apache.flink.client.program.PackagedProgram.invokeInteractiveModeForExecution(PackagedProgram.java:421)

at

org.apache.flink.client.program.ClusterClient.run(ClusterClient.java:427)

at

org.apache.flink.client.cli.CliFrontend.executeProgram(CliFrontend.java:813)

at

org.apache.flink.client.cli.CliFrontend.runProgram(CliFrontend.java:287)

at org.apache.flink.client.cli.CliFrontend.run(CliFrontend.java:213)

at

org.apache.flink.client.cli.CliFrontend.parseParameters(CliFrontend.java:1050)

at

org.apache.flink.client.cli.CliFrontend.lambda$main$11(CliFrontend.java:1126)

at

org.apache.flink.runtime.security.NoOpSecurityContext.runSecured(NoOpSecurityContext.java:30)

at org.apache.flink.client.cli.CliFrontend.main(CliFrontend.java:1126)

Caused by: java.lang.UnsupportedOperationException: The transform

beam:transform:create_view:v1 is currently not supported.

at

org.apache.beam.runners.flink.FlinkStreamingPipelineTranslator.visitPrimitiveTransform(FlinkStreamingPipelineTranslator.java:113)

at

org.apache.beam.sdk.runners.TransformHierarchy$Node.visit(TransformHierarchy.java:665)

at

org.apache.beam.sdk.runners.TransformHierarchy$Node.visit(TransformHierarchy.java:657)

at

org.apache.beam.sdk.runners.TransformHierarchy$Node.visit(TransformHierarchy.java:657)

at

org.apache.beam.sdk.runners.TransformHierarchy$Node.visit(TransformHierarchy.java:657)

at

org.apache.beam.sdk.runners.TransformHierarchy$Node.visit(TransformHierarchy.java:657)

at

org.apache.beam.sdk.runners.TransformHierarchy$Node.visit(TransformHierarchy.java:657)

at

org.apache.beam.sdk.runners.TransformHierarchy$Node.access$600(TransformHierarchy.java:317)

at

org.apache.beam.sdk.runners.TransformHierarchy.visit(TransformHierarchy.java:251)

at org.apache.beam.sdk.Pipeline.traverseTopologically(Pipeline.java:458)

at

org.apache.beam.runners.flink.FlinkPipelineTranslator.translate(FlinkPipelineTranslator.java:38)

at

org.apache.beam.runners.flink.FlinkStreamingPipelineTranslator.translate(FlinkStreamingPipelineTranslator.java:68)

at

org.apache.beam.runners.flink.FlinkPipelineExecutionEnvironment.translate(FlinkPipelineExecutionEnvironment.java:111)

at org.apache.beam.runners.flink.FlinkRunner.run(FlinkRunner.java:108)

at org.apache.beam.sdk.Pipeline.run(Pipeline.java:313)

at org.apache.beam.sdk.Pipeline.run(Pipeline.java:299)

at ch.ricardo.di.beam.KafkaToBigQuery.main(KafkaToBigQuery.java:175)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at

sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at

sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at

org.apache.flink.client.program.PackagedProgram.callMainMethod(PackagedProgram.java:529)

... 9 more

{noformat}

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Work logged] (BEAM-6937) Streaming FlinkTransformOverrides are not applied without explicit streaming mode

[ https://issues.apache.org/jira/browse/BEAM-6937?focusedWorklogId=220511&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-220511 ] ASF GitHub Bot logged work on BEAM-6937: Author: ASF GitHub Bot Created on: 29/Mar/19 12:42 Start Date: 29/Mar/19 12:42 Worklog Time Spent: 10m Work Description: mxm commented on pull request #8168: [BEAM-6937] Apply FlinkTransformOverrides correctly with inferred streaming mode URL: https://github.com/apache/beam/pull/8168 When streaming is set to false the streaming mode will be switched to true if the pipeline contains unbounded sources. There is a regression which prevents PipelineOverrides to be applied correctly in this case. CC @tweise @angoenka Post-Commit Tests Status (on master branch) Lang | SDK | Apex | Dataflow | Flink | Gearpump | Samza | Spark --- | --- | --- | --- | --- | --- | --- | --- Go | [](https://builds.apache.org/job/beam_PostCommit_Go/lastCompletedBuild/) | --- | --- | --- | --- | --- | --- Java | [](https://builds.apache.org/job/beam_PostCommit_Java/lastCompletedBuild/) | [](https://builds.apache.org/job/beam_PostCommit_Java_ValidatesRunner_Apex/lastCompletedBuild/) | [](https://builds.apache.org/job/beam_PostCommit_Java_ValidatesRunner_Dataflow/lastCompletedBuild/) | [](https://builds.apache.org/job/beam_PostCommit_Java_ValidatesRunner_Flink/lastCompletedBuild/)[](https://builds.apache.org/job/beam_PostCommit_Java_PVR_Flink_Batch/lastCompletedBuild/)[](https://builds.apache.org/job/beam_PostCommit_Java_PVR_Flink_Streaming/lastCompletedBuild/) | [](https://builds.apache.org/job/beam_PostCommit_Java_ValidatesRunner_Gearpump/lastCompletedBuild/) | [](https://builds.apache.org/job/beam_PostCommit_Java_ValidatesRunner_Samza/lastCompletedBuild/) | [](https://builds.apache.org/job/beam_PostCommit_Java_ValidatesRunner_Spark/lastCompletedBuild/) Python | [](https://builds.apache.org/job/beam_PostCommit_Python_Verify/lastCompletedBuild/)[](https://builds.apache.org/job/beam_PostCommit_Python3_Verify/lastCompletedBuild/) | --- | [](https://builds.apache.org/job/beam_PostCommit_Py_VR_Dataflow/lastCompletedBuild/) [](https://builds.apache.org/job/beam_PostCommit_Py_ValCont/lastCompletedBuild/) | [](https://builds.apache.org/job/beam_PreCommit_Python_PVR_Flink_Cron/lastCompletedBuild/) | --- | --- | --- Pre-Commit Tests Status (on master branch) --- |Java | Python | Go | Website --- | --- | --- | --- | --- Non-portable | [](https://builds.apache.org/job/beam_PreCommit_Java_Cron/lastCompletedBuild/) | [](https://builds.apache.org/job/beam_PreCommit_Python_Cron/lastCompletedBuild/) | [](https://b

[jira] [Work started] (BEAM-3312) Add convenient "with" to MqttIO.ConnectionConfiguration

[

https://issues.apache.org/jira/browse/BEAM-3312?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Work on BEAM-3312 started by LI Guobao.

---

> Add convenient "with" to MqttIO.ConnectionConfiguration

> ---

>

> Key: BEAM-3312

> URL: https://issues.apache.org/jira/browse/BEAM-3312

> Project: Beam

> Issue Type: Improvement

> Components: io-java-mqtt

>Reporter: Jean-Baptiste Onofré

>Assignee: LI Guobao

>Priority: Major

> Labels: triaged

> Time Spent: 10m

> Remaining Estimate: 0h

>

> Now, for instance, {{MqttIO}} requires a {{ConnectionConfiguration}} object

> to pass the URL and topic name. It means, the user has to do something like:

> {code}

> MqttIO.read()

> .withConnectionConfiguration(MqttIO.ConnectionConfiguration.create("tcp://localhost:1883",

> "CAR"))

> {code}

> It's pretty verbose and long. I think it makes sense to provide convenient

> "direct" method allowing to do:

> {code}

> MqttIO.read().withUrl().withTopic()

> {code}

> or even:

> {code}

> MqttIO.read().withConnection("url", "topic")

> {code}

> The same apply for some other IOs (JMS, ...).

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Work logged] (BEAM-6876) User state cleanup in portable Flink runner

[

https://issues.apache.org/jira/browse/BEAM-6876?focusedWorklogId=220520&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-220520

]

ASF GitHub Bot logged work on BEAM-6876:

Author: ASF GitHub Bot

Created on: 29/Mar/19 13:20

Start Date: 29/Mar/19 13:20

Worklog Time Spent: 10m

Work Description: tweise commented on pull request #8118: [BEAM-6876]

Cleanup user state in portable Flink Runner

URL: https://github.com/apache/beam/pull/8118#discussion_r270404554

##

File path:

runners/flink/src/test/java/org/apache/beam/runners/flink/translation/wrappers/streaming/ExecutableStageDoFnOperatorTest.java

##

@@ -340,6 +359,101 @@ public void testStageBundleClosed() throws Exception {

verifyNoMoreInteractions(bundle);

}

+ @Test

+ @SuppressWarnings("unchecked")

+ public void testEnsureStateCleanupWithKeyedInput() throws Exception {

+TupleTag mainOutput = new TupleTag<>("main-output");

+DoFnOperator.MultiOutputOutputManagerFactory outputManagerFactory

=

+new DoFnOperator.MultiOutputOutputManagerFactory(mainOutput,

VarIntCoder.of());

+VarIntCoder keyCoder = VarIntCoder.of();

+ExecutableStageDoFnOperator operator =

+getOperator(mainOutput, Collections.emptyList(), outputManagerFactory,

keyCoder);

+

+KeyedOneInputStreamOperatorTestHarness,

WindowedValue>

+testHarness =

+new KeyedOneInputStreamOperatorTestHarness(

+operator, val -> val, new CoderTypeInformation<>(keyCoder));

+

+RemoteBundle bundle = Mockito.mock(RemoteBundle.class);

+when(bundle.getInputReceivers())

+.thenReturn(

+ImmutableMap.>builder()

+.put("input", Mockito.mock(FnDataReceiver.class))

+.build());

+when(stageBundleFactory.getBundle(any(), any(), any())).thenReturn(bundle);

+

+testHarness.open();

+

+Object doFnRunner = Whitebox.getInternalState(operator, "doFnRunner");

+assertThat(doFnRunner, instanceOf(DoFnRunnerWithMetricsUpdate.class));

+

+// There should be a StatefulDoFnRunner installed which takes care of

clearing state

+Object statefulDoFnRunner = Whitebox.getInternalState(doFnRunner,

"delegate");

+assertThat(statefulDoFnRunner, instanceOf(StatefulDoFnRunner.class));

+ }

+

+ @Test

+ public void testEnsureStateCleanupWithKeyedInputCleanupTimer() throws

Exception {

+InMemoryTimerInternals inMemoryTimerInternals = new

InMemoryTimerInternals();

+Consumer keyConsumer = Mockito.mock(Consumer.class);

+KeyedStateBackend keyedStateBackend =

Mockito.mock(KeyedStateBackend.class);

+Lock stateBackendLock = Mockito.mock(Lock.class);

+StringUtf8Coder keyCoder = StringUtf8Coder.of();

+GlobalWindow window = GlobalWindow.INSTANCE;

+GlobalWindow.Coder windowCoder = GlobalWindow.Coder.INSTANCE;

+

+// Test that cleanup timer is set correctly

+ExecutableStageDoFnOperator.CleanupTimer cleanupTimer =

+new ExecutableStageDoFnOperator.CleanupTimer<>(

+inMemoryTimerInternals,

+stateBackendLock,

+WindowingStrategy.globalDefault(),

+keyCoder,

+windowCoder,

+keyConsumer,

+keyedStateBackend);

+cleanupTimer.setForWindow(KV.of("key", "string"), window);

+

+Mockito.verify(stateBackendLock).lock();

+ByteBuffer key = ByteBuffer.wrap(CoderUtils.encodeToByteArray(keyCoder,

"key"));

+Mockito.verify(keyConsumer).accept(key);

+Mockito.verify(keyedStateBackend).setCurrentKey(key);

+assertThat(

+inMemoryTimerInternals.getNextTimer(TimeDomain.EVENT_TIME),

+is(window.maxTimestamp().plus(1)));

+Mockito.verify(stateBackendLock).unlock();

+ }

+

+ @Test

+ public void testEnsureStateCleanupWithKeyedInputStateCleaner() throws

Exception {

+GlobalWindow.Coder windowCoder = GlobalWindow.Coder.INSTANCE;

+InMemoryStateInternals stateInternals =

InMemoryStateInternals.forKey("key");

+List userStateNames = ImmutableList.of("state1", "state2");

+ImmutableList.Builder> bagStateBuilder =

ImmutableList.builder();

+for (String userStateName : userStateNames) {

+ BagState state =

+ stateInternals.state(

+ StateNamespaces.window(windowCoder, GlobalWindow.INSTANCE),

+ StateTags.bag(userStateName, StringUtf8Coder.of()));

+ bagStateBuilder.add(state);

+ state.add("this should be cleaned");

+}

+ImmutableList> bagStates = bagStateBuilder.build();

+

+// Test that state is cleanup up correctly

Review comment:

```suggestion

// Test that state is cleaned up correctly

```

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about

[jira] [Work logged] (BEAM-6876) User state cleanup in portable Flink runner

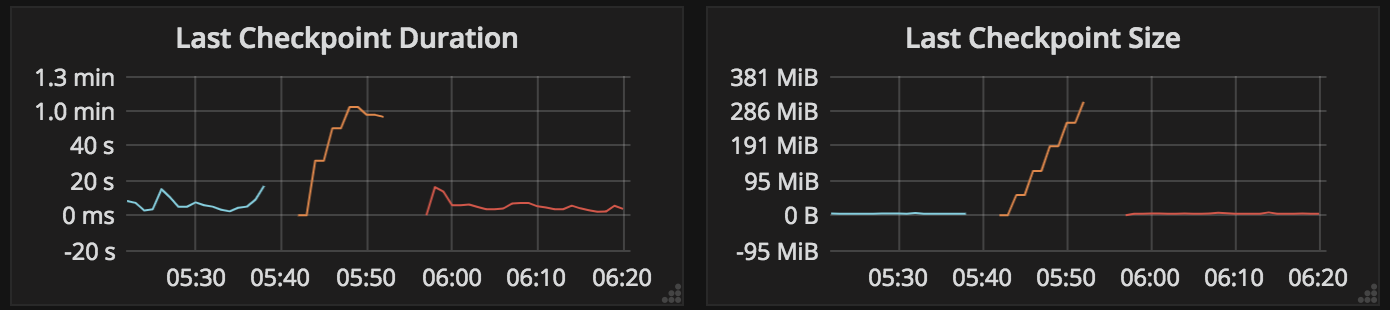

[ https://issues.apache.org/jira/browse/BEAM-6876?focusedWorklogId=220521&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-220521 ] ASF GitHub Bot logged work on BEAM-6876: Author: ASF GitHub Bot Created on: 29/Mar/19 13:24 Start Date: 29/Mar/19 13:24 Worklog Time Spent: 10m Work Description: tweise commented on issue #8118: [BEAM-6876] Cleanup user state in portable Flink Runner URL: https://github.com/apache/beam/pull/8118#issuecomment-477996082 The effect of cleanup is clearly visible when monitoring the checkpoint size. Without the fix, checkpoint size is climbing fast:  This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org Issue Time Tracking --- Worklog Id: (was: 220521) Time Spent: 2h 40m (was: 2.5h) > User state cleanup in portable Flink runner > --- > > Key: BEAM-6876 > URL: https://issues.apache.org/jira/browse/BEAM-6876 > Project: Beam > Issue Type: Bug > Components: runner-flink >Affects Versions: 2.11.0 >Reporter: Thomas Weise >Assignee: Maximilian Michels >Priority: Major > Labels: portability-flink, triaged > Time Spent: 2h 40m > Remaining Estimate: 0h > > State is currently not being cleaned up by the runner. > [https://lists.apache.org/thread.html/86f0809fbfa3da873051287b9ff249d6dd5a896b45409db1e484cf38@%3Cdev.beam.apache.org%3E] > -- This message was sent by Atlassian JIRA (v7.6.3#76005)

[jira] [Work logged] (BEAM-6876) User state cleanup in portable Flink runner