[jira] [Commented] (DRILL-6353) Upgrade Parquet MR dependencies

[

https://issues.apache.org/jira/browse/DRILL-6353?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16506827#comment-16506827

]

ASF GitHub Bot commented on DRILL-6353:

---

vrozov commented on a change in pull request #1259: DRILL-6353: Upgrade Parquet MR dependencies

URL: https://github.com/apache/drill/pull/1259#discussion_r194215694

##

File path:

exec/java-exec/src/test/java/org/apache/drill/exec/store/parquet/TestParquetMetadataCache.java

##

@@ -737,6 +738,7 @@ public void testBooleanPartitionPruning() throws Exception

{

}

}

+ @Ignore

Review comment:

And for `testDecimalPartitionPruning`, statistics for `MANAGER_ID` are not available either:

```

{

"encodingStats" : null,

"dictionaryPageOffset" : 0,

"valueCount" : 107,

"totalSize" : 168,

"totalUncompressedSize" : 363,

"statistics" : {

"max" : null,

"min" : null,

"maxBytes" : null,

"minBytes" : null,

"empty" : true,

"numNulls" : -1,

"numNullsSet" : false

},

"firstDataPageOffset" : 5550,

"type" : "FIXED_LEN_BYTE_ARRAY",

"path" : [ "MANAGER_ID" ],

"codec" : "SNAPPY",

"primitiveType" : {

"name" : "MANAGER_ID",

"repetition" : "OPTIONAL",

"originalType" : "DECIMAL",

"id" : null,

"primitive" : true,

"primitiveTypeName" : "FIXED_LEN_BYTE_ARRAY",

"decimalMetadata" : {

"precision" : 6,

"scale" : 0

},

"typeLength" : 3

},

"encodings" : [ "PLAIN", "BIT_PACKED", "RLE" ],

"startingPos" : 5550

}

```
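A simplified model of why empty statistics like these block pruning follows. It assumes, based on our understanding of the metadata-cache pruning logic, that a column qualifies as a partition column only when every row group reports usable min/max statistics and holds a single value (min equal to max). `ColumnChunkStats` and `isPartitionColumn` are hypothetical names, not Drill classes; the fields mirror the `statistics` object in the dump above.

```java
import java.util.List;
import java.util.Objects;

public class PartitionColumnCheck {

  /** Hypothetical mirror of the per-row-group "statistics" object in the dump above. */
  record ColumnChunkStats(Object min, Object max, boolean empty, long numNulls, boolean numNullsSet) {}

  /**
   * True only if every row group has usable min/max statistics and holds a
   * single value (min equals max). Empty statistics disqualify the column.
   */
  static boolean isPartitionColumn(List<ColumnChunkStats> rowGroups) {
    if (rowGroups.isEmpty()) {
      return false;
    }
    for (ColumnChunkStats stats : rowGroups) {
      if (stats.empty() || stats.min() == null || stats.max() == null) {
        return false; // no min/max: cannot prove the row group holds one value
      }
      if (!Objects.equals(stats.min(), stats.max())) {
        return false; // more than one distinct value in this row group
      }
    }
    return true;
  }

  public static void main(String[] args) {
    // Shaped like the MANAGER_ID dump: min/max null, empty = true, numNulls = -1.
    ColumnChunkStats missing = new ColumnChunkStats(null, null, true, -1, false);
    // A row group in which the column holds the single value 100.
    ColumnChunkStats single = new ColumnChunkStats(100, 100, false, 0, true);

    System.out.println(isPartitionColumn(List.of(single)));          // true
    System.out.println(isPartitionColumn(List.of(single, missing))); // false
  }
}
```

In this model a single row group without statistics is enough to disqualify the whole column, so there is nothing left for the planner to prune on.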

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

> Upgrade Parquet MR dependencies

> ---

>

> Key: DRILL-6353

> URL: https://issues.apache.org/jira/browse/DRILL-6353

> Project: Apache Drill

> Issue Type: Task

>Reporter: Vlad Rozov

>Assignee: Vlad Rozov

>Priority: Major

> Fix For: 1.14.0

>

> Attachments: alltypes_optional.json, fixedlenDecimal.json

>

>

> Upgrade from a custom build {{1.8.1-drill-r0}} to Apache release {{1.10.0}}.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (DRILL-6353) Upgrade Parquet MR dependencies

[

https://issues.apache.org/jira/browse/DRILL-6353?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16506824#comment-16506824

]

ASF GitHub Bot commented on DRILL-6353:

---

vrozov commented on a change in pull request #1259: DRILL-6353: Upgrade Parquet MR dependencies

URL: https://github.com/apache/drill/pull/1259#discussion_r194215287

##

File path:

exec/java-exec/src/test/java/org/apache/drill/exec/store/parquet/TestParquetMetadataCache.java

##

@@ -737,6 +738,7 @@ public void testBooleanPartitionPruning() throws Exception

{

}

}

+ @Ignore

Review comment:

The same applies to `testIntervalYearPartitionPruning`: statistics for `col_intrvl_yr` are also not available, for the same reason:

```

{

"encodingStats" : null,

"dictionaryPageOffset" : 0,

"valueCount" : 6,

"totalSize" : 81,

"totalUncompressedSize" : 91,

"statistics" : {

"max" : null,

"min" : null,

"maxBytes" : null,

"minBytes" : null,

"empty" : true,

"numNulls" : -1,

"numNullsSet" : false

},

"firstDataPageOffset" : 451,

"type" : "FIXED_LEN_BYTE_ARRAY",

"path" : [ "col_intrvl_yr" ],

"primitiveType" : {

"name" : "col_intrvl_yr",

"repetition" : "OPTIONAL",

"originalType" : "INTERVAL",

"id" : null,

"primitive" : true,

"primitiveTypeName" : "FIXED_LEN_BYTE_ARRAY",

"decimalMetadata" : null,

"typeLength" : 12

},

"codec" : "SNAPPY",

"encodings" : [ "RLE", "BIT_PACKED", "PLAIN" ],

"startingPos" : 451

}

```


--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Updated] (DRILL-6353) Upgrade Parquet MR dependencies

[

https://issues.apache.org/jira/browse/DRILL-6353?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Vlad Rozov updated DRILL-6353:

--

Attachment: fixedlenDecimal.json


--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (DRILL-6353) Upgrade Parquet MR dependencies

[

https://issues.apache.org/jira/browse/DRILL-6353?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16506822#comment-16506822

]

ASF GitHub Bot commented on DRILL-6353:

---

vrozov commented on a change in pull request #1259: DRILL-6353: Upgrade Parquet MR dependencies

URL: https://github.com/apache/drill/pull/1259#discussion_r194214952

##

File path:

exec/java-exec/src/test/java/org/apache/drill/exec/store/parquet/TestParquetMetadataCache.java

##

@@ -737,6 +738,7 @@ public void testBooleanPartitionPruning() throws Exception

{

}

}

+ @Ignore

Review comment:

I attached the output of `org.apache.parquet.format.converter.ParquetMetadataConverter` in debug mode to [DRILL-6353](https://issues.apache.org/jira/browse/DRILL-6353). As you can see, no statistics are available for `col_intrvl_day`:

```

{

"encodingStats" : null,

"dictionaryPageOffset" : 0,

"valueCount" : 6,

"totalSize" : 92,

"totalUncompressedSize" : 91,

"statistics" : {

"max" : null,

"min" : null,

"maxBytes" : null,

"minBytes" : null,

"empty" : true,

"numNulls" : -1,

"numNullsSet" : false

},

"firstDataPageOffset" : 532,

"type" : "FIXED_LEN_BYTE_ARRAY",

"path" : [ "col_intrvl_day" ],

"primitiveType" : {

"name" : "col_intrvl_day",

"repetition" : "OPTIONAL",

"originalType" : "INTERVAL",

"id" : null,

"primitive" : true,

"primitiveTypeName" : "FIXED_LEN_BYTE_ARRAY",

"decimalMetadata" : null,

"typeLength" : 12

},

"codec" : "SNAPPY",

"encodings" : [ "RLE", "BIT_PACKED", "PLAIN" ],

"startingPos" : 532

}

```

This is the result of the Parquet fix "Deprecate type-defined sort ordering for INTERVAL type" ([PARQUET-1064](https://issues.apache.org/jira/browse/PARQUET-1064)).
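The mechanism can be modeled in a few lines. The sketch assumes the rule from the Parquet format specification that INTERVAL has an undefined sort order, so min/max statistics for it are not kept and must be ignored when read. `SortOrder`, `OriginalType`, `sortOrderFor` and `readMinMax` are hypothetical names; this is not parquet-mr's `ParquetMetadataConverter` code, and it does not cover the other cases in which parquet-mr discards statistics.

```java
import java.util.Optional;

public class IntervalStatsModel {

  /** Hypothetical sort orders a reader can assign to a column. */
  enum SortOrder { SIGNED, UNSIGNED, UNDEFINED }

  /** Hypothetical subset of Parquet original (logical) types. */
  enum OriginalType { INT_32, UTF8, DECIMAL, INTERVAL }

  /** Assumed mapping: after PARQUET-1064, INTERVAL has no defined ordering. */
  static SortOrder sortOrderFor(OriginalType type) {
    switch (type) {
      case UTF8:
        return SortOrder.UNSIGNED; // byte-wise comparison
      case INTERVAL:
        return SortOrder.UNDEFINED;
      default:
        return SortOrder.SIGNED;
    }
  }

  /** Returns {min, max} only when the sort order is defined; otherwise the statistics are dropped. */
  static Optional<long[]> readMinMax(OriginalType type, long min, long max) {
    if (sortOrderFor(type) == SortOrder.UNDEFINED) {
      return Optional.empty(); // in this model, corresponds to "min": null, "max": null, "empty": true
    }
    return Optional.of(new long[] {min, max});
  }

  public static void main(String[] args) {
    System.out.println(readMinMax(OriginalType.INTERVAL, 1, 5).isPresent()); // false
    System.out.println(readMinMax(OriginalType.INT_32, 1, 5).isPresent());   // true
  }
}
```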


> Upgrade Parquet MR dependencies

> ---

>

> Key: DRILL-6353

> URL: https://issues.apache.org/jira/browse/DRILL-6353

> Project: Apache Drill

> Issue Type: Task

>Reporter: Vlad Rozov

>Assignee: Vlad Rozov

>Priority: Major

> Fix For: 1.14.0

>

> Attachments: alltypes_optional.json

>

>

> Upgrade from a custom build {{1.8.1-drill-r0}} to Apache release {{1.10.0}}.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Updated] (DRILL-6353) Upgrade Parquet MR dependencies

[

https://issues.apache.org/jira/browse/DRILL-6353?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Vlad Rozov updated DRILL-6353:

--

Attachment: alltypes_optional.json


--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Updated] (DRILL-6454) Native MapR DB plugin support for Hive MapR-DB json table

[ https://issues.apache.org/jira/browse/DRILL-6454?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Vitalii Diravka updated DRILL-6454:
---
Description:
Hive can create and query MapR-DB tables via maprdb-json-handler:
https://maprdocs.mapr.com/home/Hive/ConnectingToMapR-DB.html
The aim of this Jira is to implement a Drill native reader for Hive MapR-DB tables (similar to Parquet).
Design proposal:
- use JsonTableGroupScan instead of HiveScan;
- add a storage planning rule to convert HiveScan to MapRDBGroupScan;
- add a system/session option to enable this native reader;
- the native reader can be used only in Drill builds with the mapr profile (there is no reason to leverage it for the default profile).

was:
Hive can create and query MapR-DB tables via maprdb-json-handler:
https://maprdocs.mapr.com/home/Hive/ConnectingToMapR-DB.html
The aim of this Jira is to implement a Drill native reader for Hive MapR-DB tables (similar to Parquet).
Design proposal:
- implement new GroupScan operators for interpreting HiveScan as MapRDBGroupScan;
- add a storage planning rule to convert HiveScan to MapRDBGroupScan;
- add a system/session option to enable this native reader;
- create a new module for the rule and scan operators, which will be compiled and built only for the mapr profile (there is no reason to leverage it for the default profile).

> Native MapR DB plugin support for Hive MapR-DB json table
> -
>
> Key: DRILL-6454
> URL: https://issues.apache.org/jira/browse/DRILL-6454
> Project: Apache Drill
> Issue Type: New Feature
> Components: Storage - Hive, Storage - MapRDB
> Affects Versions: 1.13.0
> Reporter: Vitalii Diravka
> Assignee: Vitalii Diravka
> Priority: Major
> Fix For: 1.14.0
>
> Hive can create and query MapR-DB tables via maprdb-json-handler:
> https://maprdocs.mapr.com/home/Hive/ConnectingToMapR-DB.html
> The aim of this Jira is to implement a Drill native reader for Hive MapR-DB tables (similar to Parquet).
> Design proposal:
> - use JsonTableGroupScan instead of HiveScan;
> - add a storage planning rule to convert HiveScan to MapRDBGroupScan;
> - add a system/session option to enable this native reader;
> - the native reader can be used only in Drill builds with the mapr profile (there is no reason to leverage it for the default profile).

--
This message was sent by Atlassian JIRA
(v7.6.3#76005)
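The conversion in the DRILL-6454 design proposal can be sketched as an option-gated rewrite of a plan node. This is a plain-Java model under stated assumptions, not Drill's `StoragePluginOptimizerRule` or the Calcite rule API: `Scan`, `HiveScan`, `NativeJsonScan`, the option name `example.hive.maprdb_json.native_reader` and the `maprdb-json` handler tag are all hypothetical.

```java
import java.util.Map;

public class NativeReaderRuleSketch {

  /** Hypothetical session option; not a real Drill option name. */
  static final String NATIVE_READER_OPTION = "example.hive.maprdb_json.native_reader";

  /** Hypothetical tag identifying tables created through maprdb-json-handler. */
  static final String MAPRDB_JSON_HANDLER = "maprdb-json";

  /** Hypothetical minimal plan-node hierarchy. */
  interface Scan {}

  record HiveScan(String table, String storageHandler) implements Scan {}

  record NativeJsonScan(String table) implements Scan {}

  /**
   * If the option is on and the Hive table is backed by the MapR-DB JSON
   * handler, swap the Hive scan for a native JSON table scan; otherwise
   * return the original node unchanged.
   */
  static Scan apply(Scan scan, Map<String, Boolean> sessionOptions) {
    boolean enabled = sessionOptions.getOrDefault(NATIVE_READER_OPTION, Boolean.FALSE);
    if (enabled && scan instanceof HiveScan) {
      HiveScan hive = (HiveScan) scan;
      if (MAPRDB_JSON_HANDLER.equals(hive.storageHandler())) {
        return new NativeJsonScan(hive.table());
      }
    }
    return scan;
  }

  public static void main(String[] args) {
    Scan hive = new HiveScan("users", MAPRDB_JSON_HANDLER);
    System.out.println(apply(hive, Map.of()) instanceof NativeJsonScan);                           // false
    System.out.println(apply(hive, Map.of(NATIVE_READER_OPTION, true)) instanceof NativeJsonScan); // true
  }
}
```

Gating the rewrite on an option keeps the default plan unchanged unless a user opts in, which matches the proposal's intent of making the native reader optional.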

[jira] [Commented] (DRILL-6454) Native MapR DB plugin support for Hive MapR-DB json table

[ https://issues.apache.org/jira/browse/DRILL-6454?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16506769#comment-16506769 ]

ASF GitHub Bot commented on DRILL-6454:
---
vdiravka opened a new pull request #1314: DRILL-6454: Native MapR DB plugin support for Hive MapR-DB json table
URL: https://github.com/apache/drill/pull/1314

- A new `StoragePluginOptimizerRule` is added for reading MapR-DB JSON tables with the Drill native reader. At planning time, the rule allows replacing `HiveScan` with Drill's native `JsonTableGroupScan`.
- A new system/session option is added to enable the new rule.
- The common parts of `ConvertHiveMapRDBJsonScanToDrillMapRDBJsonScan` and `ConvertHiveParquetScanToDrillParquetScan` are factored out into `HiveUtilities`.
- A dependency on `drill-format-mapr` is added to `hive-core`.

Note:
1) The option name for the Parquet native reader is changed.
2) The changes in MapRDBFormatMatcher.java are refactoring only.

> Native MapR DB plugin support for Hive MapR-DB json table
> -
>
> Key: DRILL-6454
> URL: https://issues.apache.org/jira/browse/DRILL-6454
> Project: Apache Drill
> Issue Type: New Feature
> Components: Storage - Hive, Storage - MapRDB
> Affects Versions: 1.13.0
> Reporter: Vitalii Diravka
> Assignee: Vitalii Diravka
> Priority: Major
> Fix For: 1.14.0
>
> Hive can create and query MapR-DB tables via maprdb-json-handler:
> https://maprdocs.mapr.com/home/Hive/ConnectingToMapR-DB.html
> The aim of this Jira is to implement a Drill native reader for Hive MapR-DB tables (similar to Parquet).
> Design proposal:
> - implement new GroupScan operators for interpreting HiveScan as MapRDBGroupScan;
> - add a storage planning rule to convert HiveScan to MapRDBGroupScan;
> - add a system/session option to enable this native reader;
> - create a new module for the rule and scan operators, which will be compiled and built only for the mapr profile (there is no reason to leverage it for the default profile).

--
This message was sent by Atlassian JIRA
(v7.6.3#76005)

[jira] [Commented] (DRILL-4682) Allow full schema identifier in SELECT clause

[ https://issues.apache.org/jira/browse/DRILL-4682?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16506745#comment-16506745 ]

ASF GitHub Bot commented on DRILL-4682:
---
ilooner commented on issue #549: DRILL-4682: Allow full schema identifier in SELECT clause
URL: https://github.com/apache/drill/pull/549#issuecomment-395925806

@vdiravka Are you planning to complete this change?

> Allow full schema identifier in SELECT clause
> -
>
> Key: DRILL-4682
> URL: https://issues.apache.org/jira/browse/DRILL-4682
> Project: Apache Drill
> Issue Type: Improvement
> Components: SQL Parser
> Reporter: Andries Engelbrecht
> Assignee: Vitalii Diravka
> Priority: Major
>
> Currently, Drill requires aliases to identify columns in the SELECT clause when working with multiple tables/workspaces.
> Many BI/analytical and other tools use the full schema identifier in the SELECT clause by default when generating SQL statements for generic JDBC or ODBC sources. Not supporting this causes issues and slows the adoption of Drill as an execution engine within the larger analytical SQL community.
> Propose to support
> SELECT ... FROM
> ..
> Also see DRILL-3510 for double quote support as per ANSI_QUOTES
> SELECT ""."".""."" FROM
> ""."".""
> This is very common generic SQL generated by most tools when dealing with a generic SQL data source.

--
This message was sent by Atlassian JIRA
(v7.6.3#76005)
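One way to resolve a fully qualified column reference is to match the longest dotted prefix against the tables in the FROM clause and treat the remainder as the column. This is a hypothetical illustration, not Drill's parser or Calcite's validator; `resolve`, `Resolved` and the table paths (`dfs.tmp.employees`, `hive.sales.orders`) are made up, and quoting (ANSI_QUOTES) is ignored.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Optional;

public class QualifiedColumnResolver {

  /** Result of resolving an identifier: which FROM-clause table, and which column. */
  record Resolved(String table, String column) {}

  /**
   * Splits a dotted identifier and matches the longest prefix that names a
   * FROM-clause table; the remaining part is taken as the column name.
   */
  static Optional<Resolved> resolve(String identifier, List<String> fromTables) {
    String[] parts = identifier.split("\\.");
    for (int prefixLen = parts.length - 1; prefixLen >= 1; prefixLen--) {
      String candidate = String.join(".", Arrays.copyOfRange(parts, 0, prefixLen));
      if (fromTables.contains(candidate)) {
        String column = String.join(".", Arrays.copyOfRange(parts, prefixLen, parts.length));
        return Optional.of(new Resolved(candidate, column));
      }
    }
    return Optional.empty(); // no table prefix matched
  }

  public static void main(String[] args) {
    List<String> from = List.of("dfs.tmp.employees", "hive.sales.orders");
    System.out.println(resolve("dfs.tmp.employees.salary", from).get().column()); // salary
    System.out.println(resolve("hive.sales.orders.id", from).get().table());      // hive.sales.orders
    System.out.println(resolve("unknown.col", from).isPresent());                 // false
  }
}
```

Trying the longest prefix first resolves `a.b.c` to table `a.b` rather than `a` when both exist. A real implementation would also have to decide how such references interact with nested map fields, which use the same dotted syntax.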

[jira] [Commented] (DRILL-6340) Output Batch Control in Project using the RecordBatchSizer

[ https://issues.apache.org/jira/browse/DRILL-6340?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16506739#comment-16506739 ]

ASF GitHub Bot commented on DRILL-6340:
---
ilooner commented on issue #1302: DRILL-6340: Output Batch Control in Project using the RecordBatchSizer
URL: https://github.com/apache/drill/pull/1302#issuecomment-395924806

@bitblender I noticed Travis takes a little longer than usual to run with your changes. How long do the unit tests you added take to run? Also, please fix the conflicts.

> Output Batch Control in Project using the RecordBatchSizer
> --
>
> Key: DRILL-6340
> URL: https://issues.apache.org/jira/browse/DRILL-6340
> Project: Apache Drill
> Issue Type: Improvement
> Components: Execution - Relational Operators
> Reporter: Karthikeyan Manivannan
> Assignee: Karthikeyan Manivannan
> Priority: Major
> Fix For: 1.14.0
>
> This bug is for tracking the changes required to implement Output Batch Sizing in Project using the RecordBatchSizer. The challenge in doing this mainly lies in dealing with expressions that produce variable-length columns. The following doc talks about some of the design approaches for dealing with such variable-length columns.
> [https://docs.google.com/document/d/1h0WsQsen6xqqAyyYSrtiAniQpVZGmQNQqC1I2DJaxAA/edit?usp=sharing]

--
This message was sent by Atlassian JIRA
(v7.6.3#76005)
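The core arithmetic of output batch sizing can be sketched as: divide a per-batch memory budget by an estimated row width, then clamp. The sketch assumes the row width is the sum of fixed-width column sizes plus an average size for variable-length expression results; `outputRowCount`, `MAX_ROWS` and its 65,535-row cap are hypothetical and are not the `RecordBatchSizer` API.

```java
public class OutputBatchSizing {

  /** Assumed upper bound on rows per batch (hypothetical; not taken from Drill). */
  static final int MAX_ROWS = 65_535;

  /**
   * Estimates how many rows fit in the output budget.
   *
   * @param budgetBytes      memory allowed for one outgoing batch
   * @param fixedWidthBytes  bytes per row for fixed-width output columns
   * @param avgVarWidthBytes estimated average bytes per row for variable-width columns
   */
  static int outputRowCount(long budgetBytes, int fixedWidthBytes, int avgVarWidthBytes) {
    int rowWidth = Math.max(1, fixedWidthBytes + avgVarWidthBytes); // avoid division by zero
    long rows = budgetBytes / rowWidth;
    // Emit at least one row so progress is guaranteed, and never exceed the cap.
    return (int) Math.max(1, Math.min(rows, MAX_ROWS));
  }

  public static void main(String[] args) {
    // 16 MiB budget, 24 bytes of fixed-width columns, ~100 bytes of strings per row.
    System.out.println(outputRowCount(16L * 1024 * 1024, 24, 100)); // 65535
    // 1 MiB budget with the same row shape.
    System.out.println(outputRowCount(1024L * 1024, 24, 100));      // 8456
  }
}
```

The variable-width estimate is the hard part the issue mentions: an expression that produces a variable-length column has no fixed width, so its average size must be estimated before the output batch is allocated.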

[jira] [Commented] (DRILL-6340) Output Batch Control in Project using the RecordBatchSizer

[

https://issues.apache.org/jira/browse/DRILL-6340?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16506734#comment-16506734

]

ASF GitHub Bot commented on DRILL-6340:

---

ilooner commented on a change in pull request #1302: DRILL-6340: Output Batch Control in Project using the RecordBatchSizer

URL: https://github.com/apache/drill/pull/1302#discussion_r194206474

##

File path:

exec/java-exec/src/main/java/org/apache/drill/exec/physical/impl/project/ProjectRecordBatch.java

##

@@ -193,6 +209,13 @@ protected IterOutcome doWork() {

}

}

incomingRecordCount = incoming.getRecordCount();

+ memoryManager.update();

+ if (logger.isTraceEnabled()) {

+logger.trace("doWork():[1] memMgr RC " + memoryManager.getOutputRowCount()

Review comment:

Use `{}` placeholders instead of concatenating strings with `+`.
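Background for the suggestion: SLF4J substitutes `{}` placeholders only when the message will actually be logged, whereas `+` concatenation outside an `isTraceEnabled()` guard builds the string even when TRACE is off. With `{}`, the guard becomes optional for cheap arguments and the statements are easier to read. To stay runnable without SLF4J, the sketch uses a hypothetical JDK-only `MiniLogger` that imitates this behavior; with SLF4J itself, the start of the statement above would read `logger.trace("doWork():[1] memMgr RC {}", memoryManager.getOutputRowCount())` (the rest of the original message is cut off in the diff). The `incoming rc` part of the sample pattern and the numbers are illustrative.

```java
import java.util.ArrayList;
import java.util.List;

public class PlaceholderLogging {

  /** Hypothetical JDK-only stand-in for an SLF4J logger, with TRACE either on or off. */
  static final class MiniLogger {
    final boolean traceEnabled;
    final List<String> output = new ArrayList<>();
    int formatCalls = 0;

    MiniLogger(boolean traceEnabled) {
      this.traceEnabled = traceEnabled;
    }

    /** Like SLF4J's parameterized methods: format only if the level is enabled. */
    void trace(String pattern, Object... args) {
      if (!traceEnabled) {
        return;
      }
      formatCalls++;
      output.add(format(pattern, args));
    }

    /** Replaces each "{}" with the next argument, left to right. */
    static String format(String pattern, Object... args) {
      StringBuilder sb = new StringBuilder();
      int argIndex = 0;
      int from = 0;
      int at;
      while (argIndex < args.length && (at = pattern.indexOf("{}", from)) >= 0) {
        sb.append(pattern, from, at).append(args[argIndex++]);
        from = at + 2;
      }
      return sb.append(pattern.substring(from)).toString();
    }
  }

  public static void main(String[] args) {
    int outputRowCount = 4096; // illustrative values
    int incomingRecordCount = 10_000;

    MiniLogger off = new MiniLogger(false);
    off.trace("doWork():[1] memMgr RC {}, incoming rc {}", outputRowCount, incomingRecordCount);
    System.out.println(off.formatCalls); // 0

    MiniLogger on = new MiniLogger(true);
    on.trace("doWork():[1] memMgr RC {}, incoming rc {}", outputRowCount, incomingRecordCount);
    System.out.println(on.output.get(0)); // doWork():[1] memMgr RC 4096, incoming rc 10000
  }
}
```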


--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (DRILL-6340) Output Batch Control in Project using the RecordBatchSizer

[

https://issues.apache.org/jira/browse/DRILL-6340?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16506737#comment-16506737

]

ASF GitHub Bot commented on DRILL-6340:

---

ilooner commented on a change in pull request #1302: DRILL-6340: Output Batch Control in Project using the RecordBatchSizer

URL: https://github.com/apache/drill/pull/1302#discussion_r194206511

##

File path:

exec/java-exec/src/main/java/org/apache/drill/exec/physical/impl/project/ProjectRecordBatch.java

##

@@ -206,12 +229,24 @@ protected IterOutcome doWork() {

first = false;

container.zeroVectors();

-if (!doAlloc(incomingRecordCount)) {

+

+int maxOuputRecordCount = memoryManager.getOutputRowCount();

+if (logger.isTraceEnabled()) {

+ logger.trace("doWork():[2] memMgr RC " + memoryManager.getOutputRowCount()

Review comment:

Same as above.


--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (DRILL-6340) Output Batch Control in Project using the RecordBatchSizer

[

https://issues.apache.org/jira/browse/DRILL-6340?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16506738#comment-16506738

]

ASF GitHub Bot commented on DRILL-6340:

---

ilooner commented on a change in pull request #1302: DRILL-6340: Output Batch Control in Project using the RecordBatchSizer

URL: https://github.com/apache/drill/pull/1302#discussion_r194206523

##

File path:

exec/java-exec/src/main/java/org/apache/drill/exec/physical/impl/project/ProjectRecordBatch.java

##

@@ -206,12 +229,24 @@ protected IterOutcome doWork() {

first = false;

container.zeroVectors();

-if (!doAlloc(incomingRecordCount)) {

+

+int maxOuputRecordCount = memoryManager.getOutputRowCount();

+if (logger.isTraceEnabled()) {

+ logger.trace("doWork():[2] memMgr RC " + memoryManager.getOutputRowCount()

+ + ", incoming rc " + incomingRecordCount + " incoming " + incoming

+ + ", project " + this);

+}

+if (!doAlloc(maxOuputRecordCount)) {

outOfMemory = true;

return IterOutcome.OUT_OF_MEMORY;

}

+long projectStartTime = System.currentTimeMillis();

+final int outputRecords = projector.projectRecords(this.incoming,0, maxOuputRecordCount, 0);

+long projectEndTime = System.currentTimeMillis();

+if (logger.isTraceEnabled()) {

+ logger.trace("doWork(): projection: " + " records " + outputRecords + ", time " + (projectEndTime - projectStartTime) + " ms");

Review comment:

Use {}


--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (DRILL-6340) Output Batch Control in Project using the RecordBatchSizer

[

https://issues.apache.org/jira/browse/DRILL-6340?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16506735#comment-16506735

]

ASF GitHub Bot commented on DRILL-6340:

---

ilooner commented on a change in pull request #1302: DRILL-6340: Output Batch Control in Project using the RecordBatchSizer

URL: https://github.com/apache/drill/pull/1302#discussion_r194206567

##

File path:

exec/java-exec/src/main/java/org/apache/drill/exec/physical/impl/project/ProjectRecordBatch.java

##

@@ -497,30 +561,36 @@ private void setupNewSchemaFromInput(RecordBatch incomingBatch) throws SchemaCha

final boolean useSetSafe = !(vector instanceof FixedWidthVector);

final ValueVectorWriteExpression write = new ValueVectorWriteExpression(fid, expr, useSetSafe);

final HoldingContainer hc = cg.addExpr(write, ClassGenerator.BlkCreateMode.TRUE_IF_BOUND);

+memoryManager.addNewField(vector, write);

// We cannot do multiple transfers from the same vector. However we still need to instantiate the output vector.

if (expr instanceof ValueVectorReadExpression) {

final ValueVectorReadExpression vectorRead = (ValueVectorReadExpression) expr;

if (!vectorRead.hasReadPath()) {

final TypedFieldId id = vectorRead.getFieldId();

-final ValueVector vvIn = incomingBatch.getValueAccessorById(id.getIntermediateClass(), id.getFieldIds()).getValueVector();

+final ValueVector vvIn = incomingBatch.getValueAccessorById(id.getIntermediateClass(),

+id.getFieldIds()).getValueVector();

vvIn.makeTransferPair(vector);

}

}

-logger.debug("Added eval for project expression.");

}

}

try {

CodeGenerator codeGen = cg.getCodeGenerator();

codeGen.plainJavaCapable(true);

// Uncomment out this line to debug the generated code.

- // codeGen.saveCodeForDebugging(true);

+ //codeGen.saveCodeForDebugging(true);

this.projector = context.getImplementationClass(codeGen);

projector.setup(context, incomingBatch, this, transfers);

} catch (ClassTransformationException | IOException e) {

throw new SchemaChangeException("Failure while attempting to load generated class", e);

}

+

+long setupNewSchemaEndTime = System.currentTimeMillis();

+if (logger.isTraceEnabled()) {

+ logger.trace("setupNewSchemaFromInput: time " + (setupNewSchemaEndTime - setupNewSchemaStartTime) + " ms" + ", proj " + this + " incoming " + incomingBatch);

Review comment:

{}


--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (DRILL-6340) Output Batch Control in Project using the RecordBatchSizer

[

https://issues.apache.org/jira/browse/DRILL-6340?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16506736#comment-16506736

]

ASF GitHub Bot commented on DRILL-6340:

---

ilooner commented on a change in pull request #1302: DRILL-6340: Output Batch Control in Project using the RecordBatchSizer

URL: https://github.com/apache/drill/pull/1302#discussion_r194206547

##

File path:

exec/java-exec/src/main/java/org/apache/drill/exec/physical/impl/project/ProjectRecordBatch.java

##

@@ -230,18 +265,37 @@ protected IterOutcome doWork() {

container.buildSchema(SelectionVectorMode.NONE);

}

+memoryManager.updateOutgoingStats(outputRecords);

+if (logger.isDebugEnabled()) {

+ logger.debug("BATCH_STATS, outgoing: {}", new RecordBatchSizer(this));

+}

// Get the final outcome based on hasRemainder since that will determine if all the incoming records were

// consumed in current output batch or not

return getFinalOutcome(hasRemainder);

}

private void handleRemainder() {

final int remainingRecordCount = incoming.getRecordCount() - remainderIndex;

-if (!doAlloc(remainingRecordCount)) {

+assert this.memoryManager.incomingBatch == incoming;

+final int recordsToProcess = Math.min(remainingRecordCount, memoryManager.getOutputRowCount());

+

+if (!doAlloc(recordsToProcess)) {

outOfMemory = true;

return;

}

-final int projRecords = projector.projectRecords(remainderIndex, remainingRecordCount, 0);

+if (logger.isTraceEnabled()) {

+ logger.trace("handleRemainder: remaining RC " + remainingRecordCount + " toProcess " + recordsToProcess

Review comment:

{}
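The `handleRemainder` change caps each pass at the memory manager's row count, so a large remainder is drained over several output batches instead of one. Below is a minimal sketch of that loop, assuming a fixed limit per batch. `plan` and `maxRowsPerBatch` are hypothetical stand-ins (the latter for `memoryManager.getOutputRowCount()`), and the sketch ignores the case where projection emits fewer rows than requested.

```java
import java.util.ArrayList;
import java.util.List;

public class RemainderChunking {

  /**
   * Splits incomingRecordCount records into output batches of at most
   * maxRowsPerBatch rows, returning each batch's row count in order.
   * Mirrors recordsToProcess = Math.min(remaining, outputRowCount).
   */
  static List<Integer> plan(int incomingRecordCount, int maxRowsPerBatch) {
    if (maxRowsPerBatch <= 0) {
      throw new IllegalArgumentException("maxRowsPerBatch must be positive");
    }
    List<Integer> batches = new ArrayList<>();
    int remainderIndex = 0;
    while (remainderIndex < incomingRecordCount) {
      int remaining = incomingRecordCount - remainderIndex;
      int recordsToProcess = Math.min(remaining, maxRowsPerBatch);
      batches.add(recordsToProcess);
      remainderIndex += recordsToProcess; // next pass starts where this one stopped
    }
    return batches;
  }

  public static void main(String[] args) {
    System.out.println(plan(10_000, 4_096)); // [4096, 4096, 1808]
    System.out.println(plan(100, 4_096));    // [100]
  }
}
```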


--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

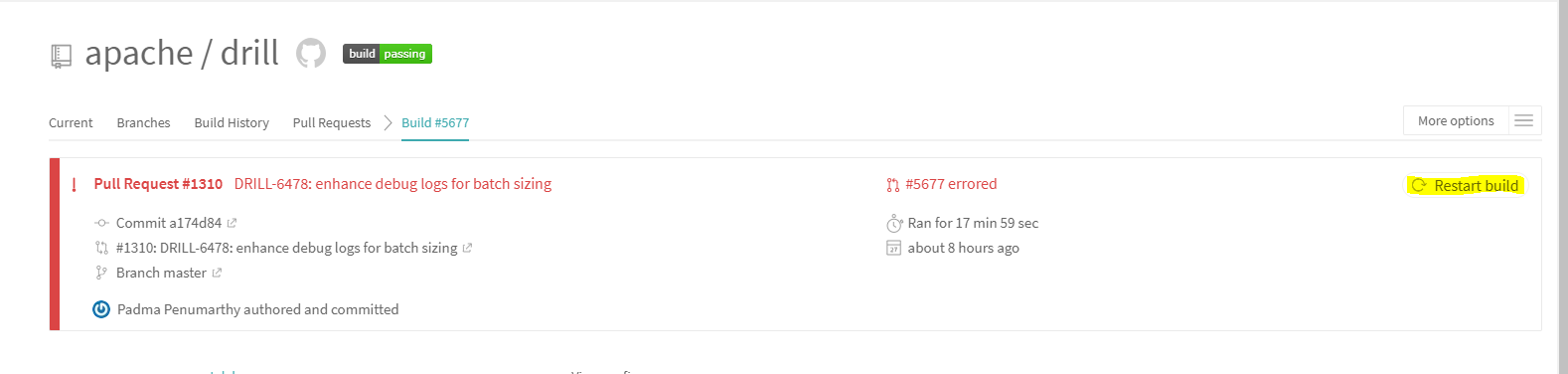

[jira] [Commented] (DRILL-6478) enhance debug logs for batch sizing

[

https://issues.apache.org/jira/browse/DRILL-6478?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16506700#comment-16506700

]

ASF GitHub Bot commented on DRILL-6478:

---

ppadma commented on a change in pull request #1310: DRILL-6478: enhance debug logs for batch sizing

URL: https://github.com/apache/drill/pull/1310#discussion_r194203192

##

File path:

exec/java-exec/src/main/java/org/apache/drill/exec/physical/impl/union/UnionAllRecordBatch.java

##

@@ -409,6 +426,22 @@ public void remove() {

public void close() {

super.close();

updateBatchMemoryManagerStats();

+

+logger.debug("BATCH_STATS, incoming aggregate left: batch count : {}, avg bytes : {}, avg row bytes : {}, record count : {}",

Review comment:

This does not have to be under the `isDebugEnabled` check.
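The guard can be dropped because, with `{}` placeholders, formatting is already skipped when DEBUG is off, provided the arguments are cheap to compute. Arguments are still evaluated before the call, though, so an expensive one, such as the `new RecordBatchSizer(this)` passed to `logger.debug` in the `ProjectRecordBatch` change, still benefits from an `isDebugEnabled()` check. The JDK-only sketch below shows the difference; `MiniLogger` and `ExpensiveStats` are hypothetical stand-ins, not SLF4J or Drill classes.

```java
public class DebugGuard {

  static int expensiveBuilds = 0;

  /** Hypothetical argument that is costly to build (think: measuring every vector in a batch). */
  static final class ExpensiveStats {
    ExpensiveStats() {
      expensiveBuilds++;
    }

    @Override
    public String toString() {
      return "stats";
    }
  }

  /** Hypothetical logger: defers formatting like SLF4J, but cannot stop argument evaluation. */
  static final class MiniLogger {
    final boolean debugEnabled;
    int messagesFormatted = 0;
    String lastMessage;

    MiniLogger(boolean debugEnabled) {
      this.debugEnabled = debugEnabled;
    }

    boolean isDebugEnabled() {
      return debugEnabled;
    }

    void debug(String pattern, Object arg) {
      if (debugEnabled) {
        messagesFormatted++;
        lastMessage = pattern.replace("{}", String.valueOf(arg));
      }
    }
  }

  public static void main(String[] args) {
    MiniLogger logger = new MiniLogger(false);

    // Unguarded: the argument is constructed even though DEBUG is off.
    logger.debug("BATCH_STATS, outgoing: {}", new ExpensiveStats());

    // Guarded: the argument is never constructed while DEBUG is off.
    if (logger.isDebugEnabled()) {
      logger.debug("BATCH_STATS, outgoing: {}", new ExpensiveStats());
    }

    System.out.println(expensiveBuilds);          // 1
    System.out.println(logger.messagesFormatted); // 0
  }
}
```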


> enhance debug logs for batch sizing

> ---

>

> Key: DRILL-6478

> URL: https://issues.apache.org/jira/browse/DRILL-6478

> Project: Apache Drill

> Issue Type: Bug

>Reporter: Padma Penumarthy

>Assignee: Padma Penumarthy

>Priority: Major

> Fix For: 1.14.0

>

>

> Fix some issues with debug logs so QA scripts work better. Also, added batch

> sizing logs for union all.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (DRILL-6478) enhance debug logs for batch sizing

[ https://issues.apache.org/jira/browse/DRILL-6478?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16506697#comment-16506697 ]

ASF GitHub Bot commented on DRILL-6478:
---
ppadma commented on issue #1310: DRILL-6478: enhance debug logs for batch sizing
URL: https://github.com/apache/drill/pull/1310#issuecomment-395919511

@sohami Addressed the review comments. Please take a look.

--
This message was sent by Atlassian JIRA
(v7.6.3#76005)

[jira] [Updated] (DRILL-4455) Depend on Apache Arrow for Vector and Memory

[ https://issues.apache.org/jira/browse/DRILL-4455?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Pritesh Maker updated DRILL-4455:
-
Fix Version/s: (was: 2.0.0)
Future

> Depend on Apache Arrow for Vector and Memory
>
>
> Key: DRILL-4455
> URL: https://issues.apache.org/jira/browse/DRILL-4455
> Project: Apache Drill
> Issue Type: Bug
>Reporter: Steven Phillips
>Assignee: Steven Phillips
>Priority: Major
> Fix For: Future
>
>
> The code for value vectors and memory has been split and contributed to the
> apache arrow repository. In order to help this project advance, Drill should
> depend on the arrow project instead of internal value vector code.
> This change will require recompiling any external code, such as UDFs and
> StoragePlugins. The changes will mainly just involve renaming the classes to
> the org.apache.arrow namespace.
--
This message was sent by Atlassian JIRA
(v7.6.3#76005)

[jira] [Updated] (DRILL-6474) Queries with ORDER BY and OFFSET (w/o LIMIT) do not return any rows

[

https://issues.apache.org/jira/browse/DRILL-6474?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Pritesh Maker updated DRILL-6474:

-

Reviewer: Hanumath Rao Maduri (was: Aman Sinha)

> Queries with ORDER BY and OFFSET (w/o LIMIT) do not return any rows

> ---

>

> Key: DRILL-6474

> URL: https://issues.apache.org/jira/browse/DRILL-6474

> Project: Apache Drill

> Issue Type: Bug

>Reporter: Timothy Farkas

>Assignee: Timothy Farkas

>Priority: Major

> Fix For: 1.14.0

>

>

> This is easily reproduced with the following test

> {code}

> final ClusterFixtureBuilder builder = new ClusterFixtureBuilder(baseDirTestWatcher);
> try (ClusterFixture clusterFixture = builder.build();
>      ClientFixture clientFixture = clusterFixture.clientFixture()) {
>   clientFixture.testBuilder()
>     .sqlQuery("select name_s10 from `mock`.`employees_10` order by name_s10 offset 100")
>     .expectsNumRecords(99900)
>     .build()
>     .run();
> }

> {code}

> That fails with

> java.lang.AssertionError:

> Expected :99900

> Actual :0

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)
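A minimal sketch of the semantics the test above expects. The expected count of 99900 implies 100,000 sorted input rows with 100 skipped. OFFSET without LIMIT should drop the first N rows, carry the remaining skip count across batch boundaries, and pass every later row through unchanged. The 4096-row batch size is an assumption, and `OffsetAcrossBatches`, `applyOffset`, and `makeBatches` are hypothetical names. This illustrates the expected behavior only, not Drill's Limit operator or its fix.

```java
import java.util.ArrayList;
import java.util.List;

public class OffsetAcrossBatches {
  /**
   * Skips the first {@code offset} rows of a batched stream (no LIMIT) and
   * returns every remaining row. The skip counter carries over between batches.
   */
  public static <T> List<T> applyOffset(List<List<T>> batches, int offset) {
    List<T> out = new ArrayList<>();
    int toSkip = offset; // rows still to be discarded
    for (List<T> batch : batches) {
      if (toSkip >= batch.size()) { // whole batch falls inside the offset
        toSkip -= batch.size();
        continue;
      }
      out.addAll(batch.subList(toSkip, batch.size())); // partial skip, then pass through
      toSkip = 0;
    }
    return out;
  }

  /** Builds integers 0..totalRows-1 split into batches of batchSize rows. */
  public static List<List<Integer>> makeBatches(int totalRows, int batchSize) {
    List<List<Integer>> batches = new ArrayList<>();
    for (int start = 0; start < totalRows; start += batchSize) {
      List<Integer> batch = new ArrayList<>();
      for (int i = start; i < Math.min(totalRows, start + batchSize); i++) {
        batch.add(i);
      }
      batches.add(batch);
    }
    return batches;
  }

  public static void main(String[] args) {
    List<Integer> rows = applyOffset(makeBatches(100_000, 4096), 100);
    System.out.println(rows.size()); // 99900
    System.out.println(rows.get(0)); // 100
  }
}
```

A result of 0 rows, as reported, is what this loop would produce if rows after the offset were never emitted once skipping finished.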

[jira] [Updated] (DRILL-6474) Queries with ORDER BY and OFFSET (w/o LIMIT) do not return any rows

[

https://issues.apache.org/jira/browse/DRILL-6474?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Pritesh Maker updated DRILL-6474:

-

Fix Version/s: 1.14.0

> Queries with ORDER BY and OFFSET (w/o LIMIT) do not return any rows

> ---

>

> Key: DRILL-6474

> URL: https://issues.apache.org/jira/browse/DRILL-6474

> Project: Apache Drill

> Issue Type: Bug

>Reporter: Timothy Farkas

>Assignee: Timothy Farkas

>Priority: Major

> Fix For: 1.14.0

>

>

> This is easily reproduced with the following test

> {code}

> final ClusterFixtureBuilder builder = new ClusterFixtureBuilder(baseDirTestWatcher);
> try (ClusterFixture clusterFixture = builder.build();
>      ClientFixture clientFixture = clusterFixture.clientFixture()) {
>   clientFixture.testBuilder()
>     .sqlQuery("select name_s10 from `mock`.`employees_10` order by name_s10 offset 100")
>     .expectsNumRecords(99900)
>     .build()
>     .run();
> }

> {code}

> That fails with

> java.lang.AssertionError:

> Expected :99900

> Actual :0

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Commented] (DRILL-4455) Depend on Apache Arrow for Vector and Memory

[ https://issues.apache.org/jira/browse/DRILL-4455?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16506675#comment-16506675 ]

ASF GitHub Bot commented on DRILL-4455:
---
ilooner commented on issue #398: DRILL-4455: Depend on Apache Arrow
URL: https://github.com/apache/drill/pull/398#issuecomment-395915947

@vrozov Since you are picking up Drillbuf design work, please advise on the plan for this PR.

This is an automated message from the Apache Git Service.
To respond to the message, please log on GitHub and use the
URL above to go to the specific comment.
For queries about this service, please contact Infrastructure at:
us...@infra.apache.org

> Depend on Apache Arrow for Vector and Memory
>
>
> Key: DRILL-4455
> URL: https://issues.apache.org/jira/browse/DRILL-4455
> Project: Apache Drill
> Issue Type: Bug
>Reporter: Steven Phillips
>Assignee: Steven Phillips
>Priority: Major
> Fix For: 2.0.0
>
>
> The code for value vectors and memory has been split and contributed to the
> apache arrow repository. In order to help this project advance, Drill should
> depend on the arrow project instead of internal value vector code.
> This change will require recompiling any external code, such as UDFs and
> StoragePlugins. The changes will mainly just involve renaming the classes to
> the org.apache.arrow namespace.
--
This message was sent by Atlassian JIRA
(v7.6.3#76005)

[jira] [Commented] (DRILL-4934) ServiceEngine does not use property useIP for DrillbitStartup

[

https://issues.apache.org/jira/browse/DRILL-4934?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16506658#comment-16506658

]

ASF GitHub Bot commented on DRILL-4934:

---

ilooner commented on issue #617: DRILL-4934 ServiceEngine does not use property

useIP for DrillbitStartup

URL: https://github.com/apache/drill/pull/617#issuecomment-395912267

@joeswingle It looks like useIp was removed from ServiceEngine in this PR

https://github.com/apache/drill/pull/578 . @parthchandra @sohami since you guys

looked at the PR, do you know why it was removed and if it would make sense to

add back?


> ServiceEngine does not use property useIP for DrillbitStartup

> -

>

> Key: DRILL-4934

> URL: https://issues.apache.org/jira/browse/DRILL-4934

> Project: Apache Drill

> Issue Type: Bug

> Components: Execution - RPC

>Affects Versions: 1.8.0

> Environment: All Environments.

>Reporter: Joe Swingle

>Priority: Minor

> Labels: easyfix

> Original Estimate: 1h

> Remaining Estimate: 1h

>

> Our environment is configured such that two networks cannot resolve machines
> by hostname, but can connect by IP address. This creates a problem when an
> ODBC/JDBC connection requests a Drillbit from the ZooKeeper quorum: the
> quorum returns the hostname of the running Drillbit. The quorum should be
> capable of returning the IP address. Changing the existing property
> _'drill.exec.rpc.use.ip'_ in *drill-override.conf* did not have the desired
> effect.
> Reviewing the code in org.apache.drill.exec.service.ServiceEngine.java shows that
> the boolean useIP is set to false and never read from the configuration.
> Simply adding the following code at line 76 resolved the issue:
> {code:java}
> useIP = context.getConfig().getBoolean(ExecConstants.USE_IP_ADDRESS);
> {code}
> With the above code, the Drillbit is registered in the quorum with its IP
> address, not the hostname.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)
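The reporter's fix reads the flag from configuration instead of leaving `useIP` hard-coded to false. Below is a hedged sketch of what that flag decides. It assumes the flag simply selects between the numeric address and the host name that gets registered in ZooKeeper. `AdvertisedAddress` and `advertisedAddress` are hypothetical names, not Drill's `ServiceEngine` API. The example uses `InetAddress.getByAddress(String, byte[])` so that it needs no DNS lookup.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;

public class AdvertisedAddress {
  /**
   * Returns the address a node would advertise, assuming a boolean setting in
   * the spirit of drill.exec.rpc.use.ip: the numeric IP when true, otherwise
   * the host name (which remote clients must be able to resolve).
   */
  public static String advertisedAddress(boolean useIp, InetAddress local) {
    return useIp ? local.getHostAddress() : local.getHostName();
  }

  public static void main(String[] args) throws UnknownHostException {
    // getByAddress(host, addr) builds an InetAddress without any DNS lookup.
    InetAddress local =
        InetAddress.getByAddress("drillbit1.example.com", new byte[] {10, 0, 0, 5});
    System.out.println(advertisedAddress(true, local));  // 10.0.0.5
    System.out.println(advertisedAddress(false, local)); // drillbit1.example.com
  }
}
```

In the environment described above, only the first form is usable across the two networks, because the second still requires the client to resolve the name.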

[jira] [Updated] (DRILL-143) Support CGROUPs resource management

[ https://issues.apache.org/jira/browse/DRILL-143?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Bridget Bevens updated DRILL-143:
-
Labels: doc-complete ready-to-commit (was: doc-impacting ready-to-commit)

> Support CGROUPs resource management
> ---
>
> Key: DRILL-143
> URL: https://issues.apache.org/jira/browse/DRILL-143
> Project: Apache Drill
> Issue Type: New Feature
>Reporter: Jacques Nadeau
>Assignee: Kunal Khatua
>Priority: Major
> Labels: doc-complete, ready-to-commit
> Fix For: 1.14.0
>
> Attachments: 253ce178-ddeb-e482-cd64-44ab7284ad1c.sys.drill
>
>
> For the purpose of playing nice on clusters that don't have YARN, we should
> write up configuration and scripts to allow users to run Drill next to
> existing workloads without sharing resources.
--
This message was sent by Atlassian JIRA
(v7.6.3#76005)

[jira] [Commented] (DRILL-4824) Null maps / lists and non-provided state support for JSON fields. Numeric types promotion.

[

https://issues.apache.org/jira/browse/DRILL-4824?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16506642#comment-16506642

]

ASF GitHub Bot commented on DRILL-4824:

---

ilooner closed pull request #580: DRILL-4824: JSON with complex nested data

produces incorrect output w…

URL: https://github.com/apache/drill/pull/580

This is a PR merged from a forked repository.

As GitHub hides the original diff on merge, it is displayed below for

the sake of provenance:

As this is a foreign pull request (from a fork), the diff is supplied

below (as it won't show otherwise due to GitHub magic):

diff --git a/exec/java-exec/src/test/java/org/apache/drill/exec/vector/complex/writer/TestJsonReader.java b/exec/java-exec/src/test/java/org/apache/drill/exec/vector/complex/writer/TestJsonReader.java
index 1168e37455..0487e06c94 100644
--- a/exec/java-exec/src/test/java/org/apache/drill/exec/vector/complex/writer/TestJsonReader.java
+++ b/exec/java-exec/src/test/java/org/apache/drill/exec/vector/complex/writer/TestJsonReader.java

@@ -91,9 +91,7 @@ public void testFieldSelectionBug() throws Exception {

.baselineColumns("col_1", "col_2")

.baselineValues(

mapOf(),

- mapOf(

- "inner_1", listOf(),

- "inner_3", mapOf()))

+ mapOf())

.baselineValues(

mapOf("inner_object_field_1", "2"),

mapOf(

@@ -104,8 +102,7 @@ public void testFieldSelectionBug() throws Exception {

mapOf(),

mapOf(

"inner_1", listOf("4", "5", "6"),

- "inner_2", "3",

- "inner_3", mapOf()))

+ "inner_2", "3"))

.go();

} finally {

test("alter session set `store.json.all_text_mode` = false");

@@ -128,7 +125,7 @@ public void testSplitAndTransferFailure() throws Exception {

.sqlQuery("select flatten(config) as flat from cp.`/store/json/null_list_v2.json`")

.ordered()

.baselineColumns("flat")

-.baselineValues(mapOf("repeated_varchar", listOf()))

+.baselineValues(mapOf())

.baselineValues(mapOf("repeated_varchar", listOf(testVal)))

.go();

diff --git a/exec/vector/src/main/java/org/apache/drill/exec/vector/complex/MapVector.java b/exec/vector/src/main/java/org/apache/drill/exec/vector/complex/MapVector.java
index e76e674d50..d998e6aad2 100644
--- a/exec/vector/src/main/java/org/apache/drill/exec/vector/complex/MapVector.java
+++ b/exec/vector/src/main/java/org/apache/drill/exec/vector/complex/MapVector.java

@@ -317,6 +317,12 @@ public Object getObject(int index) {

if (v != null && index < v.getAccessor().getValueCount()) {

Object value = v.getAccessor().getObject(index);

if (value != null) {

+if ((v.getAccessor().getObject(index) instanceof Map

+&& ((Map) v.getAccessor().getObject(index)).size() == 0)

+|| (v.getAccessor().getObject(index) instanceof List

+&& ((List) v.getAccessor().getObject(index)).size() == 0)) {

+ continue;

+}

vv.put(child, value);

}

}
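The hunk above calls `v.getAccessor().getObject(index)` again for each `instanceof` and `size()` check, even though the result is already held in `value`. The sketch below applies the same filter but inspects the fetched value only once. Plain `java.util.Map`/`List` values stand in for Drill's vector accessors (an assumption), and `SkipEmptyChildren`, `collect`, and `isEmptyContainer` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class SkipEmptyChildren {
  /** True for an empty Map or an empty List, the two cases the hunk skips. */
  public static boolean isEmptyContainer(Object value) {
    return (value instanceof Map && ((Map<?, ?>) value).isEmpty())
        || (value instanceof List && ((List<?>) value).isEmpty());
  }

  /**
   * Copies each non-null child value into a new map, leaving out empty maps
   * and lists. The value is fetched once per child and reused for every check.
   */
  public static Map<String, Object> collect(Map<String, Object> children) {
    Map<String, Object> result = new LinkedHashMap<>();
    for (Map.Entry<String, Object> child : children.entrySet()) {
      Object value = child.getValue(); // stands in for v.getAccessor().getObject(index)
      if (value == null || isEmptyContainer(value)) {
        continue;
      }
      result.put(child.getKey(), value);
    }
    return result;
  }

  public static void main(String[] args) {
    Map<String, Object> row = new LinkedHashMap<>();
    row.put("inner_1", new ArrayList<Object>());
    row.put("inner_2", "3");
    row.put("inner_3", new LinkedHashMap<String, Object>());
    System.out.println(collect(row)); // {inner_2=3}
  }
}
```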


> Null maps / lists and non-provided state support for JSON fields. Numeric

> types promotion.

> --

>

> Key: DRILL-4824

> URL: https://issues.apache.org/jira/browse/DRILL-4824

> Project: Apache Drill

> Issue Type: Improvement

> Components: Storage - JSON

>Affects Versions: 1.0.0

>Reporter: Roman Kulyk

>Assignee: Volodymyr Vysotskyi

>Priority: Major

>

> There is incorrect output in the case of a JSON file with complex nested data.

> _JSON:_

> {code:none|title=example.json|borderStyle=solid}

> {

> "Field1" : {

> }

> }

> {

> "Field1" : {

> "InnerField1": {"key1":"value1"},

> "InnerField2": {"key2":"value2"}

> }

> }

> {

> "Field1" : {

> "InnerField3" : ["value3", "value4"],

> "InnerField4" : ["value5", "value6"]

> }

> }

> {code}

> _Query:_

> {code:sql}

> select Field1 from dfs.`/tmp/example.json`

> {code}

> _Incorrect result:_

> {code:none}

> +---+

> | Field1 |

> +---+

> {"InnerField1":{},"InnerField2":{},"InnerField3":[],"InnerField4":[]}

> {"InnerField1":{"key1":"value1"},"InnerFie

[jira] [Commented] (DRILL-4824) Null maps / lists and non-provided state support for JSON fields. Numeric types promotion.

[

https://issues.apache.org/jira/browse/DRILL-4824?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16506641#comment-16506641

]

ASF GitHub Bot commented on DRILL-4824:

---

ilooner commented on issue #580: DRILL-4824: JSON with complex nested data

produces incorrect output w…

URL: https://github.com/apache/drill/pull/580#issuecomment-395908139

Thanks @paul-rogers, then it sounds like this PR should be closed.

@KulykRoman if you'd like to look into the larger issues around JSON handling

please look into the Jiras that Paul has mentioned. Or if you feel this PR has

been closed in error, please feel free to reopen and address Paul's comments.


> Null maps / lists and non-provided state support for JSON fields. Numeric

> types promotion.

> --

>

> Key: DRILL-4824

> URL: https://issues.apache.org/jira/browse/DRILL-4824

> Project: Apache Drill

> Issue Type: Improvement

> Components: Storage - JSON

>Affects Versions: 1.0.0

>Reporter: Roman Kulyk

>Assignee: Volodymyr Vysotskyi

>Priority: Major

>

> There is incorrect output in the case of a JSON file with complex nested data.

> _JSON:_

> {code:none|title=example.json|borderStyle=solid}

> {

> "Field1" : {

> }

> }

> {

> "Field1" : {

> "InnerField1": {"key1":"value1"},

> "InnerField2": {"key2":"value2"}

> }

> }

> {

> "Field1" : {

> "InnerField3" : ["value3", "value4"],

> "InnerField4" : ["value5", "value6"]

> }

> }

> {code}

> _Query:_

> {code:sql}

> select Field1 from dfs.`/tmp/example.json`

> {code}

> _Incorrect result:_

> {code:none}

> +---+

> | Field1 |

> +---+

> {"InnerField1":{},"InnerField2":{},"InnerField3":[],"InnerField4":[]}

> {"InnerField1":{"key1":"value1"},"InnerField2":{"key2":"value2"},"InnerField3":[],"InnerField4":[]}

> {"InnerField1":{},"InnerField2":{},"InnerField3":["value3","value4"],"InnerField4":["value5","value6"]}

> +--+

> {code}

> There is no need to output missing fields. In the case of a deeply nested
> structure, the user would get an unreadable result.

> _Correct result:_

> {code:none}

> +--+

> | Field1 |

> +--+

> |{}

> {"InnerField1":{"key1":"value1"},"InnerField2":{"key2":"value2"}}

> {"InnerField3":["value3","value4"],"InnerField4":["value5","value6"]}

> +--+

> {code}

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)
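The "correct result" in this issue amounts to dropping fields whose value is empty. Below is a hedged sketch of that transformation on plain Java maps. It assumes a row is represented as a `Map<String, Object>` with nested maps and lists, not as Drill's `MapVector`. It prunes recursively, so a nested map that becomes empty after pruning is dropped as well. The top-level value is kept as `{}`, which matches the first expected row. `PruneEmptyFields`, `prune`, and `mapOf` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class PruneEmptyFields {
  /**
   * Returns a copy of map without entries whose value is null, an empty list,
   * or a map that is empty after its own pruning. The map passed in is never
   * dropped itself, so an all-empty row comes back as {}.
   */
  public static Map<String, Object> prune(Map<String, Object> map) {
    Map<String, Object> out = new LinkedHashMap<>();
    for (Map.Entry<String, Object> entry : map.entrySet()) {
      Object value = entry.getValue();
      if (value instanceof Map) {
        @SuppressWarnings("unchecked")
        Map<String, Object> child = prune((Map<String, Object>) value);
        if (!child.isEmpty()) {
          out.put(entry.getKey(), child);
        }
      } else if (value instanceof List) {
        if (!((List<?>) value).isEmpty()) {
          out.put(entry.getKey(), value);
        }
      } else if (value != null) {
        out.put(entry.getKey(), value);
      }
    }
    return out;
  }

  // Hypothetical helper: builds an insertion-ordered map from key/value pairs.
  public static Map<String, Object> mapOf(Object... keysAndValues) {
    Map<String, Object> map = new LinkedHashMap<>();
    for (int i = 0; i + 1 < keysAndValues.length; i += 2) {
      map.put((String) keysAndValues[i], keysAndValues[i + 1]);
    }
    return map;
  }

  public static void main(String[] args) {
    List<Object> empty = new ArrayList<>();
    // Rows 1 and 3 of the incorrect result above.
    Map<String, Object> row1 = mapOf(
        "InnerField1", mapOf(), "InnerField2", mapOf(),
        "InnerField3", empty, "InnerField4", empty);
    Map<String, Object> row3 = mapOf(
        "InnerField1", mapOf(), "InnerField2", mapOf(),
        "InnerField3", Arrays.asList("value3", "value4"),
        "InnerField4", Arrays.asList("value5", "value6"));
    System.out.println(prune(row1)); // {}
    System.out.println(prune(row3)); // {InnerField3=[value3, value4], InnerField4=[value5, value6]}
  }
}
```

One design consequence: this approach cannot tell an explicitly empty `{}` or `[]` in the source JSON apart from a field that was never present, because both are removed.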

[jira] [Updated] (DRILL-6328) Consolidate developer docs in docs/ folder of drill repo.

[ https://issues.apache.org/jira/browse/DRILL-6328?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Pritesh Maker updated DRILL-6328:
-
Description:
*For documentation*
In [https://drill.apache.org/docs/developer-information/] we can add note about docs folder with useful information for developers (https://github.com/apache/drill/tree/master/docs/dev)

was:
*For documentation*
In https://drill.apache.org/docs/developer-information/ we can add note about docs folder with useful information for developers.

> Consolidate developer docs in docs/ folder of drill repo.
> -
>
> Key: DRILL-6328
> URL: https://issues.apache.org/jira/browse/DRILL-6328
> Project: Apache Drill
> Issue Type: Task
>Reporter: Timothy Farkas
>Assignee: Timothy Farkas
>Priority: Major
> Labels: doc-impacting, ready-to-commit
> Fix For: 1.14.0
>
>
> *For documentation*
> In [https://drill.apache.org/docs/developer-information/] we can add note
> about docs folder with useful information for developers
> (https://github.com/apache/drill/tree/master/docs/dev)
--
This message was sent by Atlassian JIRA
(v7.6.3#76005)

[jira] [Updated] (DRILL-5030) Drill SSL Docs have Bad Link to Oracle Website

[ https://issues.apache.org/jira/browse/DRILL-5030?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Pritesh Maker updated DRILL-5030:
-
Labels: (was: documentation)

> Drill SSL Docs have Bad Link to Oracle Website
> --
>
> Key: DRILL-5030
> URL: https://issues.apache.org/jira/browse/DRILL-5030
> Project: Apache Drill
> Issue Type: Bug
> Components: Documentation
>Affects Versions: 1.8.0
>Reporter: John Omernik
>Assignee: Bridget Bevens
>Priority: Major
> Fix For: 1.14.0
>
>
> When going to set up custom SSL certs on Drill, I found that the link to the
> Oracle website was broken on this page:
> https://drill.apache.org/docs/configuring-web-console-and-rest-api-security/
> at:
> As cluster administrator, you can set the following SSL configuration
> parameters in the conf/drill-override.conf file, as described in the Java
> product documentation:
> Obviously, fixing the link is one option; another would be to provide
> instructions for SSL certs directly in the Drill docs so we are not reliant
> on Oracle's website.
> Thanks!
--
This message was sent by Atlassian JIRA
(v7.6.3#76005)

[jira] [Updated] (DRILL-6182) Doc bug on parameter 'drill.exec.spill.fs'

[ https://issues.apache.org/jira/browse/DRILL-6182?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Pritesh Maker updated DRILL-6182:
-
Labels: (was: documentation)

> Doc bug on parameter 'drill.exec.spill.fs'
> --
>
> Key: DRILL-6182
> URL: https://issues.apache.org/jira/browse/DRILL-6182
> Project: Apache Drill
> Issue Type: Bug
> Components: Documentation
>Reporter: Satoshi Yamada
>Assignee: Bridget Bevens
>Priority: Trivial
> Fix For: 1.14.0
>
>
> Parameter 'drill.exe.spill.fs' should be 'drill.exec.spill.fs' (with "c"
> after exe).
> Observed in the documents below.
> [https://drill.apache.org/docs/start-up-options/]
> [https://drill.apache.org/docs/sort-based-and-hash-based-memory-constrained-operators/]
>
--
This message was sent by Atlassian JIRA
(v7.6.3#76005)

[jira] [Updated] (DRILL-6061) Doc Request: Global Query List showing queries from all Drill foreman nodes

[ https://issues.apache.org/jira/browse/DRILL-6061?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Pritesh Maker updated DRILL-6061:
-
Labels: (was: documentation)

> Doc Request: Global Query List showing queries from all Drill foreman nodes
> ---
>
> Key: DRILL-6061
> URL: https://issues.apache.org/jira/browse/DRILL-6061
> Project: Apache Drill
> Issue Type: Task
> Components: Documentation, Metadata, Web Server
>Affects Versions: 1.11.0
> Environment: MapR 5.2
>Reporter: Hari Sekhon
>Assignee: Bridget Bevens
>Priority: Major
> Fix For: 1.14.0
>
>
> Documentation Request to improve doc around Global Query List to show all
> queries executed across all Drill nodes in a cluster for better management
> and auditing.
> It wasn't obvious to be able to see all queries across all nodes in a Drill
> cluster. The Web UI on any given Drill node only shows the queries
> coordinated by that local node if acting as the foreman for the query, so if
> using ZooKeeper or a Load Balancer to distribute queries via different Drill
> nodes (eg.
> [https://github.com/HariSekhon/nagios-plugins/tree/master/haproxy|https://github.com/HariSekhon/nagios-plugins/tree/master/haproxy])
> then the query list will be spread across lots of different nodes with no
> global timeline of queries.
--
This message was sent by Atlassian JIRA
(v7.6.3#76005)

[jira] [Updated] (DRILL-6393) Radians should take an argument (x)

[

https://issues.apache.org/jira/browse/DRILL-6393?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Pritesh Maker updated DRILL-6393:

-

Labels: (was: documentaion)

> Radians should take an argument (x)

> ---

>

> Key: DRILL-6393

> URL: https://issues.apache.org/jira/browse/DRILL-6393

> Project: Apache Drill

> Issue Type: Bug

> Components: Documentation

>Affects Versions: 1.13.0

>Reporter: Robert Hou

>Assignee: Bridget Bevens

>Priority: Major

> Fix For: 1.14.0

>

>

> The radians function is missing an argument on this webpage:

>https://drill.apache.org/docs/math-and-trig/

> The table has this information:

> {noformat}

> RADIANS FLOAT8 Converts x degress to radians.

> {noformat}

> It should be:

> {noformat}

> RADIANS(x) FLOAT8 Converts x degrees to radians.

> {noformat}

> Also, degress is mis-spelled. It should be degrees.

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)
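For reference, the corrected entry describes the standard conversion radians = x · π / 180. Below is a minimal Java sketch of that formula. `Radians.radians` is a hypothetical name, not Drill's function implementation. The JDK's `Math.toRadians` performs the same conversion and can serve as a cross-check.

```java
public class Radians {
  /** Converts an angle of x degrees to radians: x * pi / 180. */
  public static double radians(double x) {
    return x * Math.PI / 180.0;
  }

  public static void main(String[] args) {
    System.out.println(radians(180.0));
    System.out.println(radians(90.0) + " vs Math.toRadians: " + Math.toRadians(90.0));
  }
}
```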

[jira] [Closed] (DRILL-4326) JDBC Storage Plugin for PostgreSQL does not work

[

https://issues.apache.org/jira/browse/DRILL-4326?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Bridget Bevens closed DRILL-4326.

-

Updated documentation with information provided in the JIRA.

> JDBC Storage Plugin for PostgreSQL does not work

>

>

> Key: DRILL-4326

> URL: https://issues.apache.org/jira/browse/DRILL-4326

> Project: Apache Drill

> Issue Type: Bug

> Components: Storage - JDBC

>Affects Versions: 1.3.0, 1.4.0, 1.5.0

> Environment: Mac OS X JDK 1.8 PostgreSQL 9.4.4 PostgreSQL JDBC jars

> (postgresql-9.2-1004-jdbc4.jar, postgresql-9.1-901-1.jdbc4.jar, )

>Reporter: Akon Dey

>Priority: Major

> Fix For: 1.5.0

>

>

> Queries with the JDBC Storage Plugin for PostgreSQL fail with DATA_READ ERROR.

> The JDBC Storage Plugin settings in use are:

> {code}

> {

> "type": "jdbc",

> "driver": "org.postgresql.Driver",

> "url": "jdbc:postgresql://127.0.0.1/test",

> "username": "akon",

> "password": null,

> "enabled": false

> }

> {code}

> Please refer to the following stack for further details:

> {noformat}

> Akons-MacBook-Pro:drill akon$

> ./distribution/target/apache-drill-1.5.0-SNAPSHOT/apache-drill-1.5.0-SNAPSHOT/bin/drill-embedded

> Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=512M;

> support was removed in 8.0

> Jan 29, 2016 9:17:18 AM org.glassfish.jersey.server.ApplicationHandler

> initialize

> INFO: Initiating Jersey application, version Jersey: 2.8 2014-04-29

> 01:25:26...

> apache drill 1.5.0-SNAPSHOT

> "a little sql for your nosql"

> 0: jdbc:drill:zk=local> !verbose

> verbose: on

> 0: jdbc:drill:zk=local> use pgdb;

> +---+---+

> | ok | summary |

> +---+---+

> | true | Default schema changed to [pgdb] |

> +---+---+

> 1 row selected (0.753 seconds)

> 0: jdbc:drill:zk=local> select * from ips;

> Error: DATA_READ ERROR: The JDBC storage plugin failed while trying setup the

> SQL query.

> sql SELECT *

> FROM "test"."ips"

> plugin pgdb

> Fragment 0:0

> [Error Id: 26ada06d-e08d-456a-9289-0dec2089b018 on 10.200.104.128:31010]

> (state=,code=0)

> java.sql.SQLException: DATA_READ ERROR: The JDBC storage plugin failed while

> trying setup the SQL query.

> sql SELECT *

> FROM "test"."ips"

> plugin pgdb

> Fragment 0:0

> [Error Id: 26ada06d-e08d-456a-9289-0dec2089b018 on 10.200.104.128:31010]

> at

> org.apache.drill.jdbc.impl.DrillCursor.nextRowInternally(DrillCursor.java:247)

> at

> org.apache.drill.jdbc.impl.DrillCursor.loadInitialSchema(DrillCursor.java:290)

> at

> org.apache.drill.jdbc.impl.DrillResultSetImpl.execute(DrillResultSetImpl.java:1923)

> at

> org.apache.drill.jdbc.impl.DrillResultSetImpl.execute(DrillResultSetImpl.java:73)

> at

> net.hydromatic.avatica.AvaticaConnection.executeQueryInternal(AvaticaConnection.java:404)

> at

> net.hydromatic.avatica.AvaticaStatement.executeQueryInternal(AvaticaStatement.java:351)

> at

> net.hydromatic.avatica.AvaticaStatement.executeInternal(AvaticaStatement.java:338)

> at

> net.hydromatic.avatica.AvaticaStatement.execute(AvaticaStatement.java:69)

> at

> org.apache.drill.jdbc.impl.DrillStatementImpl.execute(DrillStatementImpl.java:101)

> at sqlline.Commands.execute(Commands.java:841)

> at sqlline.Commands.sql(Commands.java:751)

> at sqlline.SqlLine.dispatch(SqlLine.java:746)

> at sqlline.SqlLine.begin(SqlLine.java:621)

> at sqlline.SqlLine.start(SqlLine.java:375)

> at sqlline.SqlLine.main(SqlLine.java:268)

> Caused by: org.apache.drill.common.exceptions.UserRemoteException: DATA_READ

> ERROR: The JDBC storage plugin failed while trying setup the SQL query.

> sql SELECT *

> FROM "test"."ips"

> plugin pgdb

> Fragment 0:0

> [Error Id: 26ada06d-e08d-456a-9289-0dec2089b018 on 10.200.104.128:31010]

> at

> org.apache.drill.exec.rpc.user.QueryResultHandler.resultArrived(QueryResultHandler.java:119)

> at

> org.apache.drill.exec.rpc.user.UserClient.handleReponse(UserClient.java:113)

> at

> org.apache.drill.exec.rpc.BasicClientWithConnection.handle(BasicClientWithConnection.java:46)

> at

> org.apache.drill.exec.rpc.BasicClientWithConnection.handle(BasicClientWithConnection.java:31)

> at org.apache.drill.exec.rpc.RpcBus.handle(RpcBus.java:67)

> at org.apache.drill.exec.rpc.RpcBus$RequestEvent.run(RpcBus.java:374)

> at

> org.apache.drill.common.SerializedExecutor$RunnableProcessor.run(SerializedExecutor.java:89)

> at

> org.apache.drill.exec.rpc.RpcBus$SameExecutor.execute(RpcBus.java:252)

> at

> org.apache.drill.common.SerializedExecutor.execute(SerializedExecutor.java:123)

> at

> org.apache.drill.exec.rpc.RpcBus$I

[jira] [Updated] (DRILL-4326) JDBC Storage Plugin for PostgreSQL does not work

[

https://issues.apache.org/jira/browse/DRILL-4326?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Bridget Bevens updated DRILL-4326:

--

Labels: (was: doc-impacting)

> JDBC Storage Plugin for PostgreSQL does not work

>

>

> Key: DRILL-4326

> URL: https://issues.apache.org/jira/browse/DRILL-4326

> Project: Apache Drill

> Issue Type: Bug

> Components: Storage - JDBC

>Affects Versions: 1.3.0, 1.4.0, 1.5.0

> Environment: Mac OS X JDK 1.8 PostgreSQL 9.4.4 PostgreSQL JDBC jars

> (postgresql-9.2-1004-jdbc4.jar, postgresql-9.1-901-1.jdbc4.jar, )

>Reporter: Akon Dey

>Priority: Major

> Fix For: 1.5.0

>

>

> Queries with the JDBC Storage Plugin for PostgreSQL fail with DATA_READ ERROR.

> The JDBC Storage Plugin settings in use are:

> {code}

> {

> "type": "jdbc",

> "driver": "org.postgresql.Driver",

> "url": "jdbc:postgresql://127.0.0.1/test",

> "username": "akon",

> "password": null,

> "enabled": false

> }

> {code}

> Please refer to the following stack for further details:

> {noformat}

> Akons-MacBook-Pro:drill akon$

> ./distribution/target/apache-drill-1.5.0-SNAPSHOT/apache-drill-1.5.0-SNAPSHOT/bin/drill-embedded

> Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=512M;

> support was removed in 8.0

> Jan 29, 2016 9:17:18 AM org.glassfish.jersey.server.ApplicationHandler

> initialize

> INFO: Initiating Jersey application, version Jersey: 2.8 2014-04-29

> 01:25:26...

> apache drill 1.5.0-SNAPSHOT

> "a little sql for your nosql"

> 0: jdbc:drill:zk=local> !verbose

> verbose: on

> 0: jdbc:drill:zk=local> use pgdb;

> +---+---+

> | ok | summary |

> +---+---+

> | true | Default schema changed to [pgdb] |

> +---+---+

> 1 row selected (0.753 seconds)

> 0: jdbc:drill:zk=local> select * from ips;

> Error: DATA_READ ERROR: The JDBC storage plugin failed while trying setup the

> SQL query.

> sql SELECT *

> FROM "test"."ips"

> plugin pgdb

> Fragment 0:0

> [Error Id: 26ada06d-e08d-456a-9289-0dec2089b018 on 10.200.104.128:31010]

> (state=,code=0)

> java.sql.SQLException: DATA_READ ERROR: The JDBC storage plugin failed while

> trying setup the SQL query.

> sql SELECT *

> FROM "test"."ips"

> plugin pgdb

> Fragment 0:0

> [Error Id: 26ada06d-e08d-456a-9289-0dec2089b018 on 10.200.104.128:31010]

> at

> org.apache.drill.jdbc.impl.DrillCursor.nextRowInternally(DrillCursor.java:247)

> at

> org.apache.drill.jdbc.impl.DrillCursor.loadInitialSchema(DrillCursor.java:290)

> at

> org.apache.drill.jdbc.impl.DrillResultSetImpl.execute(DrillResultSetImpl.java:1923)

> at

> org.apache.drill.jdbc.impl.DrillResultSetImpl.execute(DrillResultSetImpl.java:73)

> at

> net.hydromatic.avatica.AvaticaConnection.executeQueryInternal(AvaticaConnection.java:404)

> at

> net.hydromatic.avatica.AvaticaStatement.executeQueryInternal(AvaticaStatement.java:351)

> at

> net.hydromatic.avatica.AvaticaStatement.executeInternal(AvaticaStatement.java:338)

> at

> net.hydromatic.avatica.AvaticaStatement.execute(AvaticaStatement.java:69)

> at

> org.apache.drill.jdbc.impl.DrillStatementImpl.execute(DrillStatementImpl.java:101)

> at sqlline.Commands.execute(Commands.java:841)

> at sqlline.Commands.sql(Commands.java:751)

> at sqlline.SqlLine.dispatch(SqlLine.java:746)

> at sqlline.SqlLine.begin(SqlLine.java:621)

> at sqlline.SqlLine.start(SqlLine.java:375)

> at sqlline.SqlLine.main(SqlLine.java:268)

> Caused by: org.apache.drill.common.exceptions.UserRemoteException: DATA_READ

> ERROR: The JDBC storage plugin failed while trying setup the SQL query.

> sql SELECT *

> FROM "test"."ips"

> plugin pgdb

> Fragment 0:0

> [Error Id: 26ada06d-e08d-456a-9289-0dec2089b018 on 10.200.104.128:31010]

> at

> org.apache.drill.exec.rpc.user.QueryResultHandler.resultArrived(QueryResultHandler.java:119)

> at

> org.apache.drill.exec.rpc.user.UserClient.handleReponse(UserClient.java:113)

> at

> org.apache.drill.exec.rpc.BasicClientWithConnection.handle(BasicClientWithConnection.java:46)

> at

> org.apache.drill.exec.rpc.BasicClientWithConnection.handle(BasicClientWithConnection.java:31)

> at org.apache.drill.exec.rpc.RpcBus.handle(RpcBus.java:67)

> at org.apache.drill.exec.rpc.RpcBus$RequestEvent.run(RpcBus.java:374)

> at

> org.apache.drill.common.SerializedExecutor$RunnableProcessor.run(SerializedExecutor.java:89)

> at

> org.apache.drill.exec.rpc.RpcBus$SameExecutor.execute(RpcBus.java:252)

> at

> org.apache.drill.common.SerializedExecutor.execute(SerializedExecutor.java:123)

> at

> org.apache.drill.exec.rpc.RpcBus$InboundHandler.decode(RpcB

[jira] [Commented] (DRILL-4326) JDBC Storage Plugin for PostgreSQL does not work

[

https://issues.apache.org/jira/browse/DRILL-4326?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16506625#comment-16506625

]

Bridget Bevens commented on DRILL-4326:

---

Updated https://drill.apache.org/docs/rdbms-storage-plugin/ to include the

information provided in this JIRA. Content is at the bottom of the page.

Removing doc-impacting label. Please add the label again if there is any issue

with the update.

Thanks,

Bridget

> JDBC Storage Plugin for PostgreSQL does not work

>

>

> Key: DRILL-4326

> URL: https://issues.apache.org/jira/browse/DRILL-4326

> Project: Apache Drill

> Issue Type: Bug

> Components: Storage - JDBC

>Affects Versions: 1.3.0, 1.4.0, 1.5.0

> Environment: Mac OS X JDK 1.8 PostgreSQL 9.4.4 PostgreSQL JDBC jars

> (postgresql-9.2-1004-jdbc4.jar, postgresql-9.1-901-1.jdbc4.jar, )

>Reporter: Akon Dey

>Priority: Major

> Labels: doc-impacting

> Fix For: 1.5.0

>

>

> Queries with the JDBC Storage Plugin for PostgreSQL fail with DATA_READ ERROR.

> The JDBC Storage Plugin settings in use are:

> {code}

> {

> "type": "jdbc",

> "driver": "org.postgresql.Driver",

> "url": "jdbc:postgresql://127.0.0.1/test",

> "username": "akon",

> "password": null,

> "enabled": false

> }

> {code}

> Please refer to the following stack for further details:

> {noformat}

> Akons-MacBook-Pro:drill akon$

> ./distribution/target/apache-drill-1.5.0-SNAPSHOT/apache-drill-1.5.0-SNAPSHOT/bin/drill-embedded

> Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=512M;

> support was removed in 8.0

> Jan 29, 2016 9:17:18 AM org.glassfish.jersey.server.ApplicationHandler

> initialize

> INFO: Initiating Jersey application, version Jersey: 2.8 2014-04-29

> 01:25:26...

> apache drill 1.5.0-SNAPSHOT

> "a little sql for your nosql"

> 0: jdbc:drill:zk=local> !verbose

> verbose: on

> 0: jdbc:drill:zk=local> use pgdb;

> +---+---+

> | ok | summary |

> +---+---+

> | true | Default schema changed to [pgdb] |

> +---+---+

> 1 row selected (0.753 seconds)

> 0: jdbc:drill:zk=local> select * from ips;

> Error: DATA_READ ERROR: The JDBC storage plugin failed while trying setup the

> SQL query.

> sql SELECT *

> FROM "test"."ips"

> plugin pgdb

> Fragment 0:0

> [Error Id: 26ada06d-e08d-456a-9289-0dec2089b018 on 10.200.104.128:31010]

> (state=,code=0)

> java.sql.SQLException: DATA_READ ERROR: The JDBC storage plugin failed while

> trying setup the SQL query.

> sql SELECT *

> FROM "test"."ips"

> plugin pgdb

> Fragment 0:0

> [Error Id: 26ada06d-e08d-456a-9289-0dec2089b018 on 10.200.104.128:31010]

> at

> org.apache.drill.jdbc.impl.DrillCursor.nextRowInternally(DrillCursor.java:247)

> at

> org.apache.drill.jdbc.impl.DrillCursor.loadInitialSchema(DrillCursor.java:290)

> at

> org.apache.drill.jdbc.impl.DrillResultSetImpl.execute(DrillResultSetImpl.java:1923)

> at

> org.apache.drill.jdbc.impl.DrillResultSetImpl.execute(DrillResultSetImpl.java:73)

> at

> net.hydromatic.avatica.AvaticaConnection.executeQueryInternal(AvaticaConnection.java:404)

> at

> net.hydromatic.avatica.AvaticaStatement.executeQueryInternal(AvaticaStatement.java:351)

> at

> net.hydromatic.avatica.AvaticaStatement.executeInternal(AvaticaStatement.java:338)

> at

> net.hydromatic.avatica.AvaticaStatement.execute(AvaticaStatement.java:69)

> at

> org.apache.drill.jdbc.impl.DrillStatementImpl.execute(DrillStatementImpl.java:101)

> at sqlline.Commands.execute(Commands.java:841)

> at sqlline.Commands.sql(Commands.java:751)

> at sqlline.SqlLine.dispatch(SqlLine.java:746)

> at sqlline.SqlLine.begin(SqlLine.java:621)

> at sqlline.SqlLine.start(SqlLine.java:375)

> at sqlline.SqlLine.main(SqlLine.java:268)

> Caused by: org.apache.drill.common.exceptions.UserRemoteException: DATA_READ

> ERROR: The JDBC storage plugin failed while trying setup the SQL query.

> sql SELECT *

> FROM "test"."ips"

> plugin pgdb

> Fragment 0:0

> [Error Id: 26ada06d-e08d-456a-9289-0dec2089b018 on 10.200.104.128:31010]

> at

> org.apache.drill.exec.rpc.user.QueryResultHandler.resultArrived(QueryResultHandler.java:119)

> at

> org.apache.drill.exec.rpc.user.UserClient.handleReponse(UserClient.java:113)

> at

> org.apache.drill.exec.rpc.BasicClientWithConnection.handle(BasicClientWithConnection.java:46)

> at

> org.apache.drill.exec.rpc.BasicClientWithConnection.handle(BasicClientWithConnection.java:31)

> at org.apache.drill.exec.rpc.RpcBus.handle(RpcBus.java:67)

> at org.apache.drill.exec.rpc.RpcBus$RequestEvent.run(RpcBus.java:374)

> at

> org.apache.drill.commo
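The error above shows the statement Drill pushed down to PostgreSQL (`SELECT * FROM "test"."ips"`). One way to tell a plugin problem from a driver, credential, or schema problem is to run that same statement through plain JDBC with the settings from the plugin configuration. A minimal sketch, assuming a JDBC 4 PostgreSQL driver jar on the classpath (such drivers register themselves with `DriverManager`); the class `PushdownCheck` and method `runDirectly` are hypothetical and not part of Drill.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

class PushdownCheck {

    // Hypothetical helper: runs one SQL statement over plain JDBC and
    // reports either the number of rows read or the driver's error.
    static String runDirectly(String url, String user, String password, String sql) {
        // DriverManager omits a null user or password from the connection
        // properties, matching "password": null in the plugin config.
        try (Connection conn = DriverManager.getConnection(url, user, password);
             Statement stmt = conn.createStatement();
             ResultSet rs = stmt.executeQuery(sql)) {
            int rows = 0;
            while (rs.next()) {
                rows++;
            }
            return "OK: " + rows + " row(s)";
        } catch (SQLException e) {
            // SQLState and message point at the cause, e.g. a missing driver,
            // bad credentials, or an unknown schema/table.
            return "FAILED (SQLState " + e.getSQLState() + "): " + e.getMessage();
        }
    }

    public static void main(String[] args) {
        // URL, user and SQL copied from the bug report above.
        String url = "jdbc:postgresql://127.0.0.1/test";
        String sql = "SELECT *\nFROM \"test\".\"ips\"";
        System.out.println(runDirectly(url, "akon", null, sql));
    }
}
```

If this direct query fails the same way, the problem lies in how the table is qualified or in the database setup rather than in Drill's plugin wiring.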

[jira] [Commented] (DRILL-6005) Fix TestGracefulShutdown tests to skip check for loopback address usage in distributed mode

[

https://issues.apache.org/jira/browse/DRILL-6005?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=16506621#comment-16506621

]

Bridget Bevens commented on DRILL-6005:

---

I've added the new option to the table on this page:

https://drill.apache.org/docs/configuration-options-introduction/

It's the first option in the table. I will remove the doc-impacting label since the doc is now updated. Please add the label back if the content needs to be changed.

Thanks,

Bridget

> Fix TestGracefulShutdown tests to skip check for loopback address usage in

> distributed mode

> ---

>

> Key: DRILL-6005

> URL: https://issues.apache.org/jira/browse/DRILL-6005

> Project: Apache Drill

> Issue Type: Improvement

>Affects Versions: 1.12.0

>Reporter: Arina Ielchiieva

>Assignee: Venkata Jyothsna Donapati

>Priority: Minor

> Labels: ready-to-commit

> Fix For: 1.14.0

>

>

> After the DRILL-4286 changes, some of the newly added unit tests fail with:

> {noformat}

> Drillbit is disallowed to bind to loopback address in distributed mode.

> {noformat}

> List of failed tests:

> {noformat}

> Tests in error:

> TestGracefulShutdown.testOnlineEndPoints:96 » IllegalState Cluster fixture

> set...

> TestGracefulShutdown.testStateChange:130 » IllegalState Cluster fixture

> setup ...

> TestGracefulShutdown.testRestApi:167 » IllegalState Cluster fixture setup

> fail...

> TestGracefulShutdown.testRestApiShutdown:207 » IllegalState Cluster fixture

> se...

> {noformat}

> This can be fixed by editing the {{/etc/hosts}} file.

> Source: https://stackoverflow.com/questions/40506221/how-to-start-drillbit-locally-in-distributed-mode

> Though these changes are required in production, I don't think this check should be enforced when running unit tests.

> *For documentation*

> {{drill.exec.allow_loopback_address_binding}} -> Allows the Drillbit to bind to a loopback address in distributed mode. Intended for testing purposes only.
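As described, the new option only relaxes a startup check: in distributed mode a Drillbit refuses a loopback bind address unless `drill.exec.allow_loopback_address_binding` is enabled. On a developer machine this presumably trips because the host name resolves to a loopback address in `/etc/hosts`, which is why editing that file also works. The sketch below models that rule under those assumptions; the class `LoopbackGuard` and method `checkBindAddress` are hypothetical, and only the option name and the error message come from the issue.

```java
import java.net.InetAddress;

final class LoopbackGuard {

    // Hypothetical stand-in for the startup check described in DRILL-6005;
    // not Drill's actual implementation.
    static void checkBindAddress(InetAddress address, boolean distributedMode,
                                 boolean allowLoopbackBinding) {
        // Reject a loopback address only in distributed mode, and only when the
        // test-only option (drill.exec.allow_loopback_address_binding) is off.
        if (distributedMode && address.isLoopbackAddress() && !allowLoopbackBinding) {
            throw new IllegalStateException(
                "Drillbit is disallowed to bind to loopback address in distributed mode.");
        }
    }

    public static void main(String[] args) {
        InetAddress loopback = InetAddress.getLoopbackAddress();

        // Passes: the option is enabled, as a test setup might do.
        checkBindAddress(loopback, true, true);

        // Rejected: distributed mode with the option turned off.
        try {
            checkBindAddress(loopback, true, false);
        } catch (IllegalStateException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```

Keeping the check on by default and switching it off only through an explicit option matches the issue's intent: production clusters still fail fast on a misconfigured host, while unit tests can run on a single machine.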

--

This message was sent by Atlassian JIRA

(v7.6.3#76005)

[jira] [Updated] (DRILL-6005) Fix TestGracefulShutdown tests to skip check for loopback address usage in distributed mode

[

https://issues.apache.org/jira/browse/DRILL-6005?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Bridget Bevens updated DRILL-6005:

--

Labels: ready-to-commit (was: doc-impacting ready-to-commit)


[jira] [Updated] (DRILL-6474) Queries with ORDER BY and OFFSET (w/o LIMIT) do not return any rows

[